MTSA-CG: Mongolian Text Sentiment Analysis Based on ConvBERT and Graph Attention Network

Abstract

1. Introduction

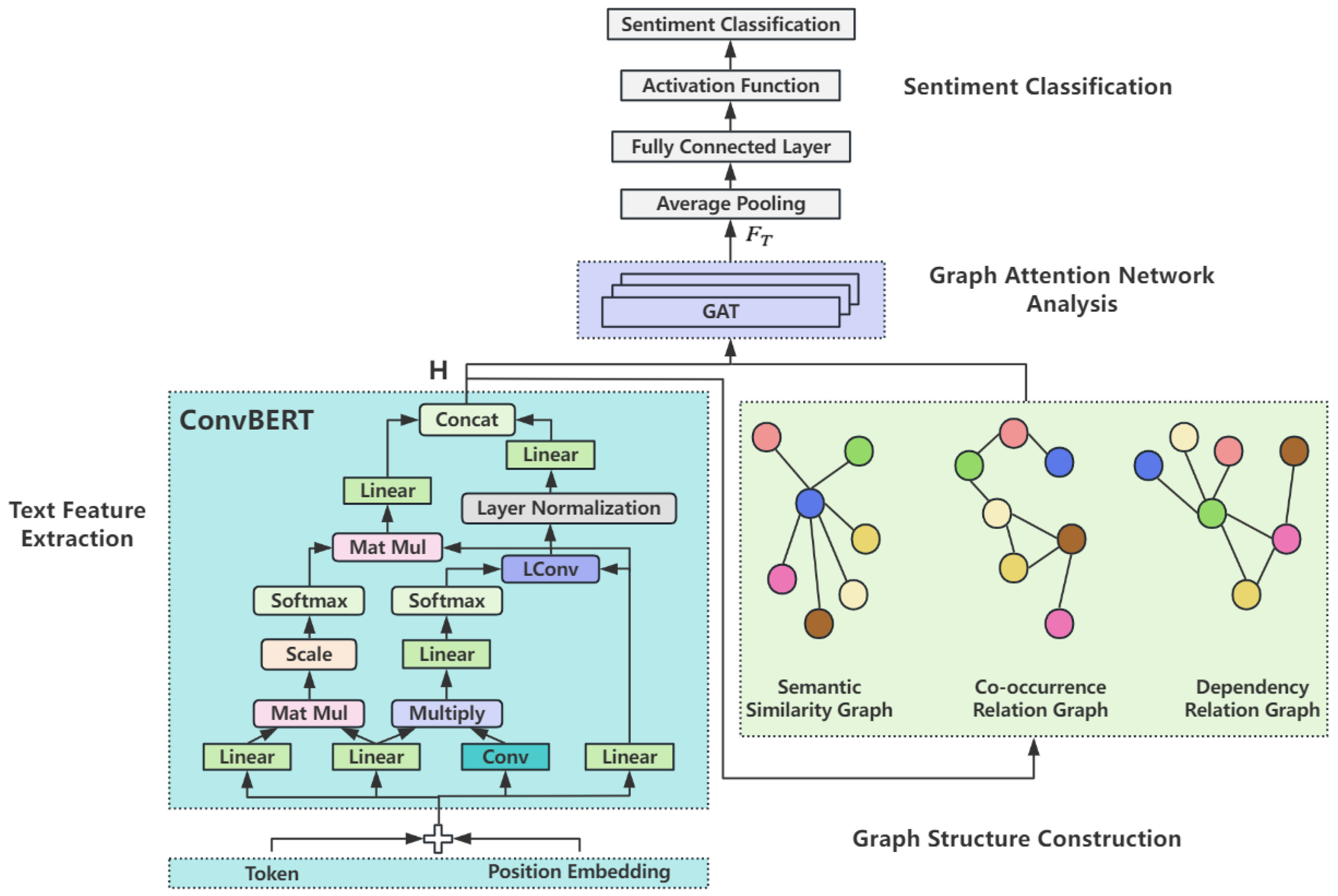

- A pre-trained ConvBERT model is employed to extract textual features from Mongolian text. Using token and positional embeddings as input, ConvBERT captures local textual features through its convolutional modules and further enriches the representation with multi-head self-attention and feedforward layers. This yields effective text representations even when training data are limited.

- A hierarchical multi-graph attention framework is proposed that incorporates three types of graph structure: co-occurrence, syntactic dependency, and semantic similarity. These graphs, together with the feature vectors produced by the ConvBERT model, are fed into the GAT to integrate global semantic information. Unlike existing multi-graph fusion approaches that simply concatenate or average graph representations, our method employs a novel adaptive weighting mechanism in which each graph type is processed by independent attention heads and its contribution is dynamically adjusted according to context-specific semantic relevance. This hierarchical architecture allows the model to emphasize the most informative graph structure for each instance being classified.

- The MTSA-CG model is introduced. Experimental results show that the proposed model effectively improves the accuracy of MTSA. Additionally, we provide comprehensive ablation studies demonstrating the contribution of each component and discuss the model’s applicability to other low-resource languages.
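The adaptive weighting idea in the second contribution can be illustrated with a minimal sketch. It assumes that each graph branch (CRG, DRG, SSG) has already produced a pooled vector and that relevance is scored by a dot product with a context vector, for example a pooled ConvBERT representation. `fuse_graph_views` and the dot-product scoring are hypothetical choices, not the exact MTSA-CG formulation.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def fuse_graph_views(views: Dict[str, Vector], context: Vector) -> Tuple[Dict[str, float], Vector]:
    """Weight each graph-specific vector by its (hypothetical) relevance to `context`.

    `views` maps a graph name such as "CRG", "DRG" or "SSG" to the pooled output
    of that graph's own attention branch.
    """
    names = list(views)
    weights = softmax([dot(views[n], context) for n in names])
    fused = [
        sum(w * views[n][i] for w, n in zip(weights, names))
        for i in range(len(context))
    ]
    return dict(zip(names, weights)), fused
```

Replacing the softmax weights with uniform weights recovers plain averaging, which is the kind of static fusion the adaptive mechanism is contrasted with.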

2. Related Work

3. Methods

3.1. Overview

3.2. Text Feature Extraction

3.3. Graph Structure Construction

3.3.1. Module Overview

Leakage-Safe Protocol

3.3.2. Co-Occurrence Relation Graph (CRG)

Leakage Control

3.3.3. Cross-Lingual Dependency Transformation (DRG)

Linguistic Motivation

Transformation Pipeline

- (1) Morphological Alignment: Chinese syntactic markers are mapped to Mongolian case markers using a rule-based conversion table derived from comparative grammatical studies.

- (2) Postposition Handling: Chinese prepositions are converted into Mongolian postpositions through 24 predefined mappings.

- (3) Verb Complex Transformation: Aspectual and modal auxiliaries in Chinese are mapped to Mongolian converbal and modal constructions.
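A minimal sketch of how the three stages might be chained over a dependency parse is shown below. The rule tables are tiny hypothetical excerpts (romanized, mostly without tones), the relation labels are illustrative, and word-order changes are not handled here; the sketch only shows the mechanics of absorbing function words as suffixes and renaming postpositions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Token:
    form: str
    head: int                      # 0-based index of the head token; -1 for the root
    deprel: str
    suffixes: List[str] = field(default_factory=list)


# Hypothetical excerpts of the rule tables; the full tables are not reproduced here.
CASE_MARKERS = {"ba": "accusative", "cong": "ablative"}      # stage (1)
POSTPOSITIONS = {"weile": "-yn tölöö"}                        # stage (2)
ASPECT_SUFFIXES = {"le": "perfective", "zhe": "durative"}     # stage (3)


def absorb_function_words(tokens: List[Token], table: Dict[str, str], deprels: Set[str]) -> List[Token]:
    """Turn matching function words into suffixes on their heads, then drop them."""
    drop = set()
    for i, tok in enumerate(tokens):
        if tok.form in table and tok.deprel in deprels and tok.head != -1:
            tokens[tok.head].suffixes.append(table[tok.form])
            drop.add(i)
    kept_idx = [i for i in range(len(tokens)) if i not in drop]
    new_index = {old: new for new, old in enumerate(kept_idx)}
    kept = [tokens[i] for i in kept_idx]
    for tok in kept:
        if tok.head != -1:
            tok.head = new_index[tok.head]  # KeyError if a dropped word had dependents
    return kept


def rename_postpositions(tokens: List[Token], table: Dict[str, str]) -> List[Token]:
    for tok in tokens:
        if tok.deprel == "case" and tok.form in table:
            tok.form = table[tok.form]
    return tokens


def transform(tokens: List[Token]) -> List[Token]:
    tokens = absorb_function_words(tokens, CASE_MARKERS, {"case"})   # (1) morphological alignment
    tokens = rename_postpositions(tokens, POSTPOSITIONS)              # (2) postposition handling
    return absorb_function_words(tokens, ASPECT_SUFFIXES, {"aux"})   # (3) verb complex
```

Keeping each stage as a separate pass over the parse makes it easy to enable or disable individual rule groups, which is convenient for ablations.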

4. Experiments and Evaluation

4.1. Dataset and Evaluation Index

4.1.1. Mongolian Sentiment Dataset

4.1.2. Mongolian-Chinese Parallel Corpus

- For each cross-validation fold, vocabulary statistics, co-occurrence counts, and bilingual vector alignments are computed exclusively from the training split.

- The parallel corpus is used only to derive transformation rules and general linguistic patterns, not to access test instance information.

- Dependency parsers and embedding models are trained or adapted only on training data.

- All hyperparameters and normalization constants are determined per-fold based on training data alone.
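The per-fold discipline above can be sketched as follows: vocabulary counts and windowed co-occurrence counts are computed from the training indices of each fold only. The seeded strided 5-fold split, the window size of 2, and the function names are assumptions for illustration; the minimum count of 3 mirrors the low-frequency threshold used in preprocessing.

```python
import random
from collections import Counter
from typing import List, Set, Tuple


def kfold_indices(n: int, k: int = 5, seed: int = 42) -> List[Tuple[List[int], List[int]]]:
    """Shuffle once with a fixed seed, then assign every k-th index to each test fold."""
    order = list(range(n))
    random.Random(seed).shuffle(order)
    folds = []
    for f in range(k):
        test = order[f::k]
        test_set = set(test)
        train = [i for i in order if i not in test_set]
        folds.append((train, test))
    return folds


def fold_statistics(docs: List[List[str]], train_idx: List[int],
                    min_count: int = 3, window: int = 2) -> Tuple[Set[str], Counter]:
    """Vocabulary and co-occurrence counts computed from the training split only."""
    freq = Counter(tok for i in train_idx for tok in docs[i])
    vocab = {tok for tok, c in freq.items() if c >= min_count}
    cooc: Counter = Counter()
    for i in train_idx:
        toks = [t if t in vocab else "<UNK>" for t in docs[i]]
        for a in range(len(toks)):
            # pair each token with the next `window` tokens to its right
            for b in range(a + 1, min(a + 1 + window, len(toks))):
                if toks[a] != toks[b]:
                    cooc[tuple(sorted((toks[a], toks[b])))] += 1
    return vocab, cooc
```

Test-split documents are then mapped onto the fold's own vocabulary, so words that occur only in held-out data become <UNK> instead of leaking into the graph statistics.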

4.2. Text Preprocessing

4.2.1. Preprocessing Pipeline

Step 1: Noise Removal (Pre-Parsing)

- Non-textual elements: URLs, email addresses, and HTML tags are removed using regular expressions.

- Special symbols: Emoticons and non-Mongolian script characters (e.g., Latin, Cyrillic) are removed, except punctuation marks, which are retained for sentence boundary detection.

- Duplicated whitespace: Consecutive spaces, tabs, and newlines are normalized to single spaces.
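Step 1 could be implemented with Python's `re` module roughly as below. This sketch assumes the corpus is written in traditional Mongolian script (Unicode block U+1800–U+18AF) and keeps only `.`, `,`, `?` and `!` as punctuation; the character ranges and patterns are illustrative rather than the authors' exact expressions, and digits are also dropped by this sketch.

```python
import re

HTML_RE = re.compile(r"<[^>]+>")
EMAIL_RE = re.compile(r"\S+@\S+\.\S+")
URL_RE = re.compile(r"(?:https?://|www\.)\S+")
# Assumption: traditional Mongolian script; any other non-space character
# (Latin, Cyrillic, digits, emoji, other symbols) is dropped.
DISALLOWED_RE = re.compile(r"[^\u1800-\u18AF\s.,?!]")
# Only spaces, tabs and newlines are collapsed; other Unicode spaces pass through.
SPACE_RE = re.compile(r"[ \t\r\n\f\v]+")


def remove_noise(text: str) -> str:
    text = HTML_RE.sub(" ", text)
    text = EMAIL_RE.sub(" ", text)   # before URLs so "user@www.x.org" is removed whole
    text = URL_RE.sub(" ", text)
    text = DISALLOWED_RE.sub("", text)
    return SPACE_RE.sub(" ", text).strip()
```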

Step 2: Tokenization

- Suffix boundaries: Mongolian is agglutinative, so we identify morpheme boundaries using a finite-state transducer trained on 10,000 manually segmented words.

- Multi-word expressions: Compound nouns and idiomatic phrases are treated as single tokens based on a lexicon of 500 common expressions.

- Punctuation: Sentence-final punctuation (period, question mark, exclamation mark) is separated as distinct tokens.
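The multi-word-expression and punctuation steps can be sketched as a greedy longest-match pass over whitespace tokens. The FST-based suffix segmentation is omitted, and joining the parts of an expression with an underscore is an arbitrary choice for this sketch.

```python
from typing import List, Set, Tuple

SENTENCE_FINAL = ".?!"


def split_final_punct(words: List[str]) -> List[str]:
    """Separate trailing sentence-final marks into their own tokens."""
    out: List[str] = []
    for w in words:
        core = w.rstrip(SENTENCE_FINAL)
        if core:
            out.append(core)
        out.extend(w[len(core):])  # each trailing mark becomes one token
    return out


def merge_mwes(tokens: List[str], lexicon: Set[Tuple[str, ...]], max_len: int = 3) -> List[str]:
    """Greedy longest-match merge of lexicon entries into single tokens."""
    out: List[str] = []
    i = 0
    while i < len(tokens):
        for n in range(min(max_len, len(tokens) - i), 1, -1):
            if tuple(tokens[i:i + n]) in lexicon:
                out.append("_".join(tokens[i:i + n]))
                i += n
                break
        else:  # no multi-word match starts at position i
            out.append(tokens[i])
            i += 1
    return out


def tokenize(text: str, lexicon: Set[Tuple[str, ...]]) -> List[str]:
    return merge_mwes(split_final_punct(text.split()), lexicon)
```

For example, with a hypothetical lexicon containing `("ulaan", "baatar")`, the input `ulaan baatar?` yields `["ulaan_baatar", "?"]`.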

Step 3: Dependency Parsing

- Chinese side: Stanford CoreNLP 4.5.0 with the Chinese dependency parser (trained on Chinese Treebank 9.0).

- Mongolian side (for baseline): A Mongolian dependency parser adapted from the Universal Dependencies (UD) 2.10 framework and trained on 5000 manually annotated Mongolian sentences.

Step 4: Graph-Level Filtering (Post-Parsing)

- Low-frequency node removal: Nodes (words) appearing fewer than 3 times in the training vocabulary are mapped to <UNK>; whenever a node is removed from the graph, its dependents are re-attached to its head, preserving tree connectivity.

- Stopword masking (optional): For the SSG only, function words (17 Mongolian postpositions and 8 conjunctions) can be masked by setting their similarity scores to zero while keeping them as nodes in the graph. This step is ablated in Section 4.6.

- Connectivity: All dependency graphs remain weakly connected trees (single root, no cycles).

- Size consistency: Node count matches tokenized sequence length.

- Edge validity: All edges connect valid node indices, i.e., both endpoints of every edge fall within the node range of the sentence.
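The re-attachment and well-formedness checks of Step 4 can be sketched over a head array, where `heads[i]` is the 0-based head of token i and -1 marks the root. The sketch assumes that flagged nodes are actually dropped from the graph and that the root is never among them; the function names are hypothetical.

```python
from collections import Counter
from typing import List, Sequence, Set


def low_frequency_nodes(tokens: Sequence[str], train_freq: Counter, min_count: int = 3) -> Set[int]:
    """Indices of tokens seen fewer than `min_count` times in the training split."""
    return {i for i, t in enumerate(tokens) if train_freq[t] < min_count}


def reattach(heads: List[int], removed: Set[int]) -> List[int]:
    """Drop `removed` nodes; each orphan climbs to its nearest surviving ancestor."""
    assert all(heads[i] != -1 for i in removed), "the root is never removed here"

    def surviving_head(i: int) -> int:
        h = heads[i]
        while h != -1 and h in removed:
            h = heads[h]
        return h

    keep = [i for i in range(len(heads)) if i not in removed]
    new_index = {old: new for new, old in enumerate(keep)}
    out = []
    for i in keep:
        h = surviving_head(i)
        out.append(-1 if h == -1 else new_index[h])
    return out


def is_valid_tree(heads: List[int], n_tokens: int) -> bool:
    if len(heads) != n_tokens:                                   # size consistency
        return False
    if any(h != -1 and not 0 <= h < n_tokens for h in heads):    # edge validity
        return False
    if heads.count(-1) != 1:                                     # single root
        return False
    for i in range(n_tokens):                                    # no cycles
        seen, h = set(), i
        while h != -1:
            if h in seen:
                return False
            seen.add(h)
            h = heads[h]
    return True  # one root and no cycles over n nodes imply a connected tree
```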

Preprocessing Tools and Versions

- Python 3.8.10;

- Stanford CoreNLP 4.5.0 (Chinese parser);

- Universal Dependencies 2.10 (Mongolian parser);

- Custom Mongolian tokenizer (released with code);

- NumPy 1.21.0, SciPy 1.7.3 (for graph operations).

Reproducibility

4.3. Evaluation Metrics

4.4. MTSA-CG Model Experiment

4.5. Contrast Experiment

4.6. Ablation Experiments

4.6.1. Component Ablation

- w/o GAT: Removes the Graph Attention Network; only ConvBERT features are fed into a two-layer MLP classifier (hidden size = 256).

- w/o CRG: Excludes the Co-occurrence Relation Graph, integrating only DRG and SSG features.

- w/o DRG: Removes the Dependency Relation Graph, utilizing only CRG and SSG for fusion.

- w/o SSG: Excludes the Semantic Similarity Graph, combining only CRG and DRG.

- w/o Cross-Graph Attention: Replaces the hierarchical attention fusion with a simple concatenation of graph representations.

4.6.2. Dependency Transformation Ablation

- (1) Chinese Parse Only: Use dependency trees directly from Chinese parallel sentences (no transformation).

- (2) Mongolian Parse Only: Apply a monolingual Mongolian dependency parser (LAS = 81.3%) without cross-lingual transfer.

- (3) Transformed (Proposed): Apply the full 15-rule transformation pipeline to Chinese parses.

- (4) Hybrid (Oracle): Combine transformed and Mongolian parses via confidence-weighted voting (upper bound).
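Per-token confidence-weighted voting, as in the Hybrid (Oracle) setting, might look like the sketch below. How the confidences are obtained is not specified here, so they are simply taken as inputs. Choosing arcs independently per token does not by itself guarantee a well-formed tree, so a validity check would still be needed afterwards.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Arc = Tuple[int, str]  # (head index, relation label); head -1 marks the root


def vote_arcs(candidates: List[List[Tuple[Arc, float]]]) -> List[Arc]:
    """For each token, keep the arc whose summed confidence across parses is highest."""
    chosen: List[Arc] = []
    for proposals in candidates:
        score: Dict[Arc, float] = defaultdict(float)
        for arc, confidence in proposals:
            score[arc] += confidence
        chosen.append(max(score, key=score.get))  # ties go to the first-seen arc
    return chosen
```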

4.6.3. Data Leakage Control Experiment

- Leakage-Safe (Proposed): CRG, SSG, and embeddings computed exclusively from the training split in each fold.

- Global Statistics (Leaked): Graphs and embeddings precomputed from the entire corpus (train + validation + test).

4.6.4. SSG Configuration Ablation

- (1) Surface Forms (default): Compute token-level similarity without lemmatization.

- (2) Lemmatized: Apply a rule-based stemmer for inflection normalization before computing similarity.

- (3) No Stopword Masking: Retain function words in the SSG with full similarity weights.

- (4) Threshold Variation: Evaluate the SSG under different similarity thresholds.
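The four SSG configurations differ only in how pairwise similarities are computed and filtered. A minimal sketch, assuming cosine similarity over precomputed word vectors and an optional lemmatizer callable (both hypothetical stand-ins for the paper's embeddings and rule-based stemmer), is:

```python
import math
from typing import Callable, Dict, FrozenSet, List, Optional, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(u, v)) / (nu * nv)


def build_ssg(tokens: List[str], emb: Dict[str, List[float]], threshold: float,
              stopwords: FrozenSet[str] = frozenset(),
              lemmatize: Optional[Callable[[str], str]] = None) -> Dict[Tuple[int, int], float]:
    """Weighted edges (i, j) between tokens whose similarity reaches `threshold`."""
    forms = [lemmatize(t) if lemmatize else t for t in tokens]  # (1) surface vs (2) lemmatized
    edges: Dict[Tuple[int, int], float] = {}
    for i in range(len(tokens)):
        for j in range(i + 1, len(tokens)):
            if tokens[i] in stopwords or tokens[j] in stopwords:
                continue  # (3) masking: weight treated as zero, nodes stay in the graph
            if forms[i] in emb and forms[j] in emb:
                s = cosine(emb[forms[i]], emb[forms[j]])
                if s >= threshold:  # (4) threshold
                    edges[(i, j)] = s
    return edges
```

Because masked tokens keep their node indices and merely lose their similarity edges, the node count still matches the token sequence length, in line with the size-consistency check in preprocessing.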

5. Error Analysis

5.1. Confusion Matrix Analysis

- Sad–Fear Confusion (23.9% combined): The two classes exhibit substantial overlap due to shared vocabulary and similar dependency structures characterized by stative verbs and negative polarity.

- Neutral Misclassification: The Neutral category shows a 19.1% total misclassification rate and is frequently confused with the emotional classes, suggesting that it encapsulates mixed or ambiguous sentiment.

- Anger → Disgust: A 4.8% error rate indicates lexical and syntactic overlap, such as shared use of exclamations and rejection-related terms.
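Rates of the kind quoted above can be read off a confusion matrix whose rows are true classes and whose columns are predictions. The sketch below assumes that a "combined" confusion between two classes is the sum of the two directional rates; the paper does not state this, so treat it as one plausible reading.

```python
from typing import List

Matrix = List[List[int]]  # rows = true class, columns = predicted class


def misclassification_rates(cm: Matrix) -> List[float]:
    """Per-class share of true instances predicted as any other class."""
    return [1 - row[i] / sum(row) for i, row in enumerate(cm)]


def directional_rate(cm: Matrix, true_idx: int, pred_idx: int) -> float:
    """Share of class `true_idx` instances predicted as `pred_idx`."""
    return cm[true_idx][pred_idx] / sum(cm[true_idx])


def pairwise_confusion(cm: Matrix, a: int, b: int) -> float:
    """Assumed reading of a 'combined' confusion: sum of both directional rates."""
    return directional_rate(cm, a, b) + directional_rate(cm, b, a)
```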

5.2. Linguistic Error Analysis

5.3. Implications for Future Improvement

- Enhanced Dependency Rules: Extend the current 15-rule transformation framework to cover negation scope (Rules 16–18), conditional mood (Rules 19–20), and clause embedding (Rules 21–23), ensuring accurate head attachment of modal and aspectual markers.

- Lexical Disambiguation: Incorporate context-aware word sense disambiguation for high-ambiguity terms using contextualized embeddings such as ELMo, or static embeddings such as fastText fine-tuned on in-domain text.

- Long-Range Dependency Modeling: For lengthy sentences (>30 words), employ hierarchical attention or additional transformer layers before graph construction to capture global semantic dependencies.

- Neutral Class Refinement: Redefine the Neutral category into subtypes (e.g., factual, mixed-sentiment, or ambiguous) or apply ordinal regression to represent sentiment intensity continuously.

- Cross-Lingual Alignment Enhancement: Improve bilingual embedding alignment using larger seed lexicons (increase from 5000 to 20,000 pairs) and domain-adaptive fine-tuning on sentiment-specific corpora.

6. Discussion

6.1. Positioning in the Era of Large Language Models

- Hybrid Architecture: LLMs could serve as feature extractors replacing ConvBERT, with their outputs fed into our hierarchical multi-graph attention framework. This would combine LLMs’ broad linguistic knowledge with our model’s structural reasoning capabilities.

- Prompt-Based Graph Construction: LLMs could be employed to automatically generate or refine graph structures (co-occurrence, dependency, similarity) through carefully designed prompts, improving graph quality without manual linguistic analysis.

6.2. Cross-Lingual Applicability

- Parallel Corpus Construction: Establishing a parallel corpus between the target language and a high-resource language (e.g., English or Chinese) for cross-lingual knowledge transfer.

- Morphological Analysis: Developing language-specific rules for morphological decomposition and syntactic transformation, potentially leveraging existing linguistic descriptions or universal dependency frameworks [41].

- Graph Construction Rules: Adapting co-occurrence, dependency, and similarity graph construction to accommodate the target language’s unique grammatical structures (e.g., case systems, agreement patterns, word order variations).

- Pre-trained Model Selection: Identifying or fine-tuning appropriate pre-trained models (multilingual BERT, XLM-R, or language-specific models if available) for initial feature extraction [42].

6.3. Limitations

- Dataset Scale and Diversity: The current study employs a dataset of 2100 samples across seven emotion categories. While this represents a substantial effort given Mongolian’s low-resource status, the limited scale may restrict the model’s generalization to broader domains, writing styles, and dialectal variations. The dataset primarily consists of standard written Mongolian, potentially underrepresenting colloquial expressions, code-switching phenomena, and regional linguistic variations prevalent in social media and informal communication.

- Cross-Lingual Dependency on Parallel Corpora: Our graph construction methodology relies on Mongolian-Chinese parallel corpora for cross-lingual knowledge transfer. The quality and coverage of these parallel resources directly impact the accuracy of dependency transformation and semantic mapping. For languages lacking high-quality parallel corpora, this approach may introduce systematic biases or errors. Furthermore, the assumption of structural alignability between language pairs may not hold universally, particularly for typologically distant languages.

- Computational Complexity: While more efficient than LLMs, the hierarchical multi-graph attention mechanism introduces non-trivial computational overhead compared to single-graph or graph-free models. The independent processing of three graph types (CRG, DRG, SSG) followed by cross-graph attention fusion increases training time and memory requirements. For real-time applications or extremely resource-constrained environments, this may pose practical deployment challenges.

- Rule-Based Transformation Brittleness: The dependency transformation from Chinese SVO to Mongolian SOV structures relies on manually crafted linguistic rules. While validated by expert linguists, these rules may fail to capture all nuances of complex syntactic phenomena (e.g., non-canonical word orders, ellipsis, pro-drop constructions). Edge cases and exceptions could lead to malformed dependency graphs, propagating errors to downstream sentiment classification.

- Static Graph Structures: Our current implementation constructs graph structures (co-occurrence, dependency, similarity) statically before model training. This pre-defined approach cannot adapt to context-specific variations or dynamically adjust graph topologies based on learned patterns. Recent research on dynamic graph neural networks suggests that adaptive graph construction during training could enhance model flexibility and performance.

- Limited Multimodal Integration: The model focuses exclusively on textual sentiment analysis. However, real-world Mongolian social media and communication increasingly incorporate multimodal elements (images, emojis, audio). Extending MTSA-CG to handle multimodal sentiment would require substantial architectural modifications and aligned multimodal datasets, which remain scarce for Mongolian.

- Evaluation Metric Limitations: While accuracy, precision, recall, and F1-score provide a standard evaluation, they may not fully capture the model’s performance on minority or ambiguous sentiment classes. Metrics such as the Matthews Correlation Coefficient (MCC), macro-averaged metrics, or confusion-matrix analysis would provide more nuanced insights, particularly for imbalanced emotion distributions.

- Generalization to Other NLP Tasks: The current study demonstrates MTSA-CG’s effectiveness for sentiment analysis. However, its applicability to other Mongolian NLP tasks (named entity recognition, machine translation, question answering) remains unexplored. The graph construction methodology and hierarchical attention mechanism may require task-specific adaptations, limiting immediate transferability.
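For reference, the multiclass MCC mentioned under Evaluation Metric Limitations (the generalization also used by scikit-learn's `matthews_corrcoef`) and macro-averaged F1 can both be computed directly from a confusion matrix, as in this dependency-free sketch. Rows are assumed to be true classes and columns predictions.

```python
import math
from typing import List

Matrix = List[List[int]]  # rows = true class, columns = predicted class


def multiclass_mcc(cm: Matrix) -> float:
    k = len(cm)
    s = sum(sum(row) for row in cm)                          # total samples
    c = sum(cm[i][i] for i in range(k))                      # correctly classified
    t = [sum(cm[i]) for i in range(k)]                       # true counts per class
    p = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predicted counts per class
    num = c * s - sum(pk * tk for pk, tk in zip(p, t))
    den = math.sqrt((s * s - sum(pk * pk for pk in p)) * (s * s - sum(tk * tk for tk in t)))
    return num / den if den else 0.0


def macro_f1(cm: Matrix) -> float:
    k = len(cm)
    scores = []
    for j in range(k):
        tp = cm[j][j]
        predicted = sum(cm[i][j] for i in range(k))
        actual = sum(cm[j])
        prec = tp / predicted if predicted else 0.0
        rec = tp / actual if actual else 0.0
        scores.append(2 * prec * rec / (prec + rec) if prec + rec else 0.0)
    return sum(scores) / k  # unweighted mean, so minority classes count equally
```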

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Dependency Transformation Rules

Appendix A.1. Rule 1: Subject-Object-Verb Reordering

- Linguistic Rationale: Chinese follows SVO word order, while Mongolian follows SOV. The verb must be repositioned to sentence-final position, and dependency arc directions are reversed to reflect head-final structure.

- Transformation: Chinese: nsubj(V, S), dobj(V, O) → Mongolian: nsubj(V, S), dobj(V, O) with arc direction V ← O

- Example:
  - Chinese: “I like this book”;
  - Structure: nsubj(like, I), dobj(like, book);
  - Mongolian: “I this book like”;
  - Structure: nsubj(like, I), dobj(like, book) with reversed arc like ← book.
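Rule 1 can be sketched for a simple clause by moving the root verb to the end and re-indexing heads; afterwards the object precedes its head, i.e., the arc is head-final. This assumes the root is the main verb and ignores embedded clauses, which other rules address; `verb_final` and `is_head_final` are hypothetical helpers.

```python
from typing import List, Tuple


def verb_final(forms: List[str], heads: List[int]) -> Tuple[List[str], List[int]]:
    """Move the root verb to the end of the clause and re-index heads (simple clauses only)."""
    v = heads.index(-1)                                  # the root is assumed to be the verb
    order = [i for i in range(len(forms)) if i != v] + [v]
    new_pos = {old: new for new, old in enumerate(order)}
    new_forms = [forms[i] for i in order]
    new_heads = [-1 if heads[i] == -1 else new_pos[heads[i]] for i in order]
    return new_forms, new_heads


def is_head_final(heads: List[int], dependent: int) -> bool:
    """True if the dependent's head appears to its right."""
    return heads[dependent] > dependent
```

For “I like this book” with heads `[1, -1, 3, 1]`, the sketch produces the order I, this, book, like with heads `[3, 2, 3, -1]`, so the arc from like to book now points leftward.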

Appendix A.2. Rule 2: Case Marker Mapping

- Linguistic Rationale: Chinese uses prepositions and word order to mark grammatical relations, while Mongolian uses postpositional case suffixes. We map Chinese function words to Mongolian case markers.

- Transformation:
  - Chinese: “ba” (disposal) → Mongolian: accusative suffix;
  - Chinese: “zài…lǐ” (in) → Mongolian: locative suffix;
  - Chinese: “cong” (from) → Mongolian: ablative suffix.

- Example:
  - Chinese: “He studies in the library”;
  - Structure: nmod:prep(studies, library), case(library, in);
  - Mongolian: “He library-locative studies”;
  - Structure: obl(studies, library-locative), case(library-locative, locative).

Appendix A.3. Rule 3: Attributive Modifier Inversion

- Linguistic Rationale: Chinese attributive modifiers precede the head noun (Adj + N), while Mongolian follows the same order but uses a genitive case for noun modifiers.

- Transformation: Chinese: amod(N, Adj) → Mongolian: amod(N, Adj) (order preserved, add genitive suffix if noun modifier)

- Example:
  - Chinese: “red flower”;
  - Structure: amod(flower, red), case(red, de);
  - Mongolian: “red flower”;
  - Structure: amod(flower, red).

Appendix A.4. Rule 4: Postposition Handling

- Linguistic Rationale: Chinese prepositions appear before their complement; Mongolian postpositions appear after. We invert the dependency direction and map to Mongolian postpositions.

- Transformation:
  - Chinese: “zài…shàng” (on) → Mongolian: “…deer-e” (on);
  - Chinese: “wèile” (for) → Mongolian: “…-yn tölöö” (for).

- Example:
  - Chinese: “The book is on the table”;
  - Structure: case(table, on);
  - Mongolian: “book table-on is”;
  - Structure: case(table, on).

Appendix A.5. Rule 5: Relative Clause Attachment

- Linguistic Rationale: Both languages use pre-nominal relative clauses, but Mongolian marks the relativized verb with a participial suffix (with distinct forms for past and future).

- Transformation: Chinese: acl:relcl(N, V_rel) → Mongolian: acl(N, V_rel) with participial marking on V_rel

- Example:
  - Chinese: “the movie I watched yesterday”;
  - Structure: acl:relcl(movie, watched), mark(watched, de);
  - Mongolian: “my yesterday watched-participial movie”;
  - Structure: acl(movie, watched-participial).

Appendix A.6. Rule 6: Coordination Transformation

- Linguistic Rationale: Chinese uses “he” (and) between conjuncts; Mongolian uses “bolon” or no overt marker with intonation.

- Transformation: Chinese: conj(X, Y), cc(X, he) → Mongolian: conj(X, Y), cc(X, bolon)

- Example:
  - Chinese: “apples and bananas”;
  - Structure: conj(apples, bananas), cc(apples, and);
  - Mongolian: “apples and bananas”;
  - Structure: conj(apples, bananas), cc(apples, and).

Appendix A.7. Rule 7: Verb Complement Clauses

- Linguistic Rationale: Chinese complement clauses follow the main verb; Mongolian converbal constructions precede the main verb.

- Transformation: Chinese: ccomp(V_main, V_comp) → Mongolian: ccomp(V_main, V_comp) with V_comp preceding V_main and taking converb suffix

- Example:
  - Chinese: “He says he is very busy”;
  - Structure: ccomp(says, busy), nsubj(busy, he);
  - Mongolian: “He very busy-converb says”;
  - Structure: ccomp(says, busy) with converb on busy.

Appendix A.8. Rule 8: Aspectual Auxiliary Transformation

- Linguistic Rationale: Chinese uses aspect particles such as “le” (perfective) and “zhe” (durative); Mongolian uses verb suffixes.

- Transformation:
  - Chinese: aux(V, le) → Mongolian: add perfective suffix to V;
  - Chinese: aux(V, zhe) → Mongolian: add durative suffix to V.

- Example:
  - Chinese: “I ate”;
  - Structure: aux(ate, le);
  - Mongolian: “I ate-perfective”;
  - Transformation: ate + perfective suffix → ate-perfective.

Appendix A.9. Rule 9: Adverbial Modifier Position

- Linguistic Rationale: Chinese adverbs typically precede verbs; Mongolian adverbs also precede verbs but may take case marking.

- Transformation: Chinese: advmod(V, Adv) → Mongolian: advmod(V, Adv) (position preserved)

- Example:
  - Chinese: “He runs quickly”;
  - Structure: advmod(runs, quickly);
  - Mongolian: “He quickly runs”;
  - Structure: advmod(runs, quickly).

Appendix A.10. Rule 10: Possessive Construction

- Linguistic Rationale: Chinese uses “de” between possessor and possessed; Mongolian uses a genitive case suffix.

- Transformation: Chinese: nmod:poss(N_possessed, N_possessor), case(N_possessor, de) → Mongolian: nmod:poss(N_possessed, N_possessor) with genitive suffix on N_possessor

- Example:
  - Chinese: “my book”;
  - Structure: nmod:poss(book, my), case(my, de);
  - Mongolian: “my-genitive book”;
  - Structure: nmod:poss(book, my-genitive).

Appendix A.11. Rule 11: Modal Verb Transformation

- Linguistic Rationale: Chinese modal verbs (e.g., can, should) precede the main verb; Mongolian uses modal particles or auxiliary verbs that follow the main verb, which takes a converb form.

- Transformation: Chinese: aux(V_main, Modal) → Mongolian: V_main in converb form + modal suffix (e.g., one expressing ability)

- Example:
  - Chinese: “He can come”;
  - Structure: aux(come, can);
  - Mongolian: “He come-converb can”;
  - Transformation: come → come-converb; can → modal suffix.

Appendix A.12. Rule 12: Comparative Construction

- Linguistic Rationale: Chinese uses “bi” (than) before the standard of comparison; Mongolian marks the standard with the ablative case.

- Transformation: Chinese: case(N_standard, bi) → Mongolian: obl(Adj, N_standard) with ablative suffix on N_standard

- Example:
  - Chinese: “He is taller than me”;
  - Structure: case(me, than), nsubj(tall, he);
  - Mongolian: “He me-ablative tall”;
  - Structure: obl(tall, me-ablative).

Appendix A.13. Rule 13: Question Particle Handling

- Linguistic Rationale: Chinese question particles such as “ma” appear sentence-finally; Mongolian likewise uses sentence-final question particles.

- Transformation: Chinese: discourse(V, ma) → Mongolian: discourse(V, question particle) (position maintained)

- Example:
  - Chinese: “Did you eat?”;
  - Structure: discourse(eat, ma);
  - Mongolian: “You ate question particle?”;
  - Structure: discourse(eat, question particle).

Appendix A.14. Rule 14: Passive Voice Transformation

- Linguistic Rationale: Chinese uses “bèi” to mark passive; Mongolian uses a suffix on the verb.

- Transformation: Chinese: nsubjpass(V, N), auxpass(V, bèi) → Mongolian: Add passive suffix to V, demote agent to oblique with ablative case

- Example:
  - Chinese: “The book was taken by him”;
  - Structure: nsubjpass(taken, book), auxpass(taken, by);
  - Mongolian: “Book him-ablative taken-passive”;
  - Structure: nsubjpass(taken-passive, book), obl(taken-passive, him-ablative).

Appendix A.15. Rule 15: Embedded Clause Reordering

- Linguistic Rationale: Chinese embedded clauses follow matrix verbs; Mongolian embedded clauses precede matrix verbs with nominalized verb forms.

- Transformation: Chinese: ccomp(V_matrix, V_embedded) → Mongolian: V_embedded with nominalizer precedes V_matrix

- Example:
  - Chinese: “I hope he comes”;
  - Structure: ccomp(hope, comes);
  - Mongolian: “I he come-nominalizer hope”;
  - Structure: ccomp(hope, come-nominalizer).

Appendix B. Precision Values for Table 2

| Emotion Category | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| Happy | 93.57 | 93.42 | 91.84 | 92.69 |
| Surprise | 90.24 | 90.13 | 89.76 | 90.00 |
| Anger | 86.72 | 86.38 | 85.11 | 85.90 |
| Disgust | 84.93 | 84.51 | 83.54 | 84.20 |
| Neutral | 82.45 | 82.18 | 80.87 | 81.65 |
| Sad | 79.38 | 78.92 | 77.26 | 78.30 |
| Fear | 78.16 | 77.65 | 75.49 | 76.80 |
| Macro Average | 85.92 | 84.74 | 83.98 | 84.79 |

Appendix C. Precision Values for Table 3

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| CNN | 85.76 | 83.45 | 80.21 | 84.93 |
| BERT | 88.40 | 86.72 | 85.31 | 89.61 |
| ConvBERT | 90.57 | 89.28 | 89.16 | 91.38 |
| KIG | 89.73 | 88.51 | 93.09 | 91.66 |
| MTG-XLNET | 91.58 | 90.63 | 92.01 | 92.47 |
| MTSA-CG (proposed) | 92.80 | 92.05 | 93.43 | 92.71 |

Appendix D. Component Ablation with Precision

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| w/o GAT | 81.61 ± 1.3 | 80.89 ± 1.4 | 80.42 ± 1.5 | 82.95 ± 1.2 |
| w/o CRG | 85.27 ± 1.1 | 84.12 ± 1.2 | 83.04 ± 1.3 | 83.76 ± 1.0 |
| w/o DRG | 84.87 ± 1.2 | 83.76 ± 1.3 | 83.02 ± 1.4 | 83.49 ± 1.1 |
| w/o SSG | 89.58 ± 0.9 | 87.45 ± 0.9 | 86.35 ± 1.0 | 88.21 ± 0.8 |
| w/o Cross-Attention | 90.15 ± 0.8 | 88.54 ± 1.0 | 88.72 ± 1.1 | 89.44 ± 0.9 |
| MTSA-CG (Full) | 92.80 ± 0.7 | 92.05 ± 0.7 | 93.43 ± 0.8 | 92.71 ± 0.6 |

Appendix E. 95% Confidence Intervals

| Emotion | Accuracy (95% CI) | Precision (95% CI) | Recall (95% CI) | F1-Score (95% CI) |
|---|---|---|---|---|
| Happy | 93.57 (92.8, 94.3) | 93.42 (92.6, 94.2) | 91.84 (91.0, 92.7) | 92.69 (91.9, 93.5) |
| Surprise | 90.24 (89.3, 91.2) | 90.13 (89.2, 91.1) | 89.76 (88.8, 90.7) | 90.00 (89.1, 90.9) |
| Anger | 86.72 (85.6, 87.9) | 86.38 (85.2, 87.6) | 85.11 (84.0, 86.2) | 85.90 (84.8, 87.0) |
| Disgust | 84.93 (83.7, 86.2) | 84.51 (83.3, 85.7) | 83.54 (82.3, 84.8) | 84.20 (83.0, 85.4) |
| Neutral | 82.45 (81.1, 83.8) | 82.18 (80.8, 83.6) | 80.87 (79.5, 82.3) | 81.65 (80.3, 83.0) |
| Sad | 79.38 (77.9, 80.9) | 78.92 (77.4, 80.5) | 77.26 (75.7, 78.8) | 78.30 (76.8, 79.8) |
| Fear | 78.16 (76.5, 79.8) | 77.65 (76.0, 79.3) | 75.49 (73.8, 77.2) | 76.80 (75.1, 78.5) |
| Macro | 85.92 (85.1, 86.7) | 84.74 (83.9, 85.6) | 83.98 (83.1, 84.9) | 84.79 (84.0, 85.6) |

| Model | Accuracy (95% CI) | Precision (95% CI) | Recall (95% CI) | F1-Score (95% CI) |
|---|---|---|---|---|
| CNN | 85.76 (84.5, 87.0) | 83.45 (82.1, 84.8) | 80.21 (78.8, 81.6) | 84.93 (83.6, 86.3) |
| BERT | 88.40 (87.3, 89.5) | 86.72 (85.5, 88.0) | 85.31 (84.0, 86.6) | 89.61 (88.4, 90.8) |
| ConvBERT | 90.57 (89.6, 91.5) | 89.28 (88.2, 90.4) | 89.16 (88.1, 90.2) | 91.38 (90.4, 92.4) |
| KIG | 89.73 (88.7, 90.8) | 88.51 (87.4, 89.6) | 93.09 (92.2, 94.0) | 91.66 (90.7, 92.6) |
| MTG-XLNET | 91.58 (90.7, 92.5) | 90.63 (89.7, 91.6) | 92.01 (91.1, 93.0) | 92.47 (91.5, 93.4) |
| MTSA-CG | 92.80 (92.0, 93.6) | 92.05 (91.2, 92.9) | 93.43 (92.6, 94.3) | 92.71 (91.9, 93.5) |
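The reproducibility checklist in Appendix G lists bootstrap CI estimation among the evaluation scripts. A percentile bootstrap over test instances can be sketched as below; the number of resamples, the seed, and accuracy as the default metric are assumptions, and passing a different `metric` callable applies the same resampling loop to precision, recall, or F1.

```python
import random
from typing import Callable, List, Sequence, Tuple


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def bootstrap_ci(y_true: Sequence[int], y_pred: Sequence[int],
                 metric: Callable[[Sequence[int], Sequence[int]], float] = accuracy,
                 n_resamples: int = 1000, alpha: float = 0.05,
                 seed: int = 0) -> Tuple[float, float, float]:
    """Point estimate plus percentile bootstrap interval, resampling instances with replacement."""
    rng = random.Random(seed)  # fixed seed so the interval is reproducible
    n = len(y_true)
    stats: List[float] = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        stats.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    stats.sort()
    lower = stats[int(alpha / 2 * n_resamples)]
    upper = stats[int((1 - alpha / 2) * n_resamples) - 1]
    return metric(y_true, y_pred), lower, upper
```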

Appendix F. Cross-Reference Resolution

- Section 4.6.3 (Data Leakage Control): Referenced in Section 4.1.2 line 354;

- Table 2: Referenced in Section 4.4 line 434;

- Table 3: Corrected reference in Section 4.5 line 455;

- Section 4.6.2 (Dependency Ablation): Correctly points to Section 4.1.2 line 215.

Appendix G. Reproducibility Checklist

- Preprocessing Scripts: Python code for tokenization, noise removal, and graph construction;

- Transformation Rules: Complete implementation of 15 rules with validation checks;

- Model Architecture: PyTorch 1.12.1 code for MTSA-CG with hyperparameters;

- Training Protocol: 5-fold CV with seed management and leakage-safe data splitting;

- Evaluation Metrics: Complete calculation scripts with bootstrap CI estimation;

- Sample Data: 100 anonymized instances per category (available upon request).

References

- Hung, L.P.; Alias, S. Beyond sentiment analysis: A review of recent trends in text based sentiment analysis and emotion detection. J. Adv. Comput. Intell. Intell. Inform. 2023, 27, 84–95.

- Rao, Y.; Lei, J.; Liu, W.; Li, Q.; Chen, M. Building emotional dictionary for sentiment analysis of online news. World Wide Web 2014, 17, 723–742.

- Gao, Y.; Su, P.; Zhao, H.; Qiu, M.; Liu, M. Research on sentiment dictionary based on sentiment analysis in news domain. In Proceedings of the 2021 7th IEEE Intl Conference on Big Data Security on Cloud (BigDataSecurity), IEEE Intl Conference on High Performance and Smart Computing (HPSC) and IEEE Intl Conference on Intelligent Data and Security (IDS), Virtual, 15–17 May 2021; pp. 117–122.

- Yeow, B.Z.; Chua, H.N. A depression diagnostic system using lexicon-based text sentiment analysis. Int. J. Percept. Cogn. Comput. 2022, 8, 29–39.

- Prusa, J.; Khoshgoftaar, T.M.; Dittman, D.J. Using ensemble learners to improve classifier performance on tweet sentiment data. In Proceedings of the 2015 IEEE International Conference on Information Reuse and Integration, San Francisco, CA, USA, 13–15 August 2015; pp. 252–257.

- Yang, S.; Chen, F. Research on multi-level classification of microblog emotion based on SVM multi-feature fusion. Data Anal. Knowl. Discov. 2017, 1, 73–79.

- Styawati, S.; Nurkholis, A.; Aldino, A.A.; Samsugi, S.; Suryati, E.; Cahyono, R.P. Sentiment analysis on online transportation reviews using Word2Vec text embedding model feature extraction and support vector machine (SVM) algorithm. In Proceedings of the 2021 International Seminar on Machine Learning, Optimization, and Data Science (ISMODE), Jakarta, Indonesia, 29–30 January 2022; pp. 163–167.

- Mustakim, N.; Das, A.; Sharif, O.; Hoque, M.M. DeepSen: A Deep Learning-based Framework for Sentiment Analysis from Multi-Domain Heterogeneous Data. In Proceedings of the 2022 25th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 17–19 December 2022; pp. 785–790.

- Shang, W.; Chai, J.; Cao, J.; Lei, X.; Zhu, H.; Fan, Y.; Ding, W. Aspect-level sentiment analysis based on aspect-sentence graph convolution network. Inf. Fusion 2024, 104, 102143.

- Khan, L.; Qazi, A.; Chang, H.-T.; Alhajlah, M.; Mahmood, A. Empowering Urdu sentiment analysis: An attention-based stacked CNN-Bi-LSTM DNN with multilingual BERT. Complex Intell. Syst. 2025, 11, 10.

- OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Anthropic. Activating AI Safety Level 3 Protections, Anthropic. 2025. Available online: https://www-cdn.anthropic.com/807c59454757214bfd37592d6e048079cd7a7728.pdf (accessed on 14 November 2025).

- Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. LLaMA 2: Open Foundation and Fine-Tuned Chat Models. arXiv 2023, arXiv:2307.09288.

- Liu, J.; Fu, B. Responsible Multilingual Large Language Models: A Survey of Development, Applications, and Societal Impact. arXiv 2024, arXiv:2410.17532.

- Xu, Y.; Hu, L.; Zhao, J.; Qiu, Z.; Xu, K.; Ye, Y.; Gu, H. A Survey on Multilingual Large Language Models: Corpora, Alignment, and Bias. arXiv 2024, arXiv:2404.00929.

- Joshi, P.; Santy, S.; Budhiraja, A.; Bali, K.; Choudhury, M. The State and Fate of Linguistic Diversity and Inclusion in the NLP World. In Proceedings of the ACL 2020, Online, 5–10 July 2020; pp. 6282–6293.

- Zhang, Y.; Qi, P.; Manning, C.D. Graph Convolution over Pruned Dependency Trees Improves Relation Extraction. In Proceedings of the EMNLP 2018, Brussels, Belgium, 31 October–4 November 2018; pp. 2205–2215.

- Liu, H.; Wu, Y.; Yang, Y. Analogical Inference for Multi-relational Embeddings. In Proceedings of the ICML 2017, Sydney, NSW, Australia, 6–11 August 2017; pp. 2168–2178.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. In Proceedings of the ICLR 2018, Vancouver, BC, Canada, 30 April–3 May 2018.

- Yao, L.; Mao, C.; Luo, Y. Graph Convolutional Networks for Text Classification. In Proceedings of the AAAI 2019, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 7370–7377.

- Dos Santos, C.; Gatti, M. Deep convolutional neural networks for sentiment analysis of short texts. In Proceedings of the COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, Dublin, Ireland, 23–29 August 2014; pp. 69–78.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1 (Long and Short Papers), pp. 4171–4186.

- Jiang, Z.-H.; Yu, W.; Zhou, D.; Chen, Y.; Feng, J.; Yan, S. ConvBERT: Improving BERT with Span-based Dynamic Convolution. Adv. Neural Inf. Process. Syst. 2020, 33, 12837–12848.

- Zhao, Y.; Mamat, M.; Aysa, A.; Ubul, K. Knowledge-fusion-based iterative graph structure learning framework for implicit sentiment identification. Sensors 2023, 23, 6257.

- Yang, Y.; He, R.-F. Multi-modal Sentiment Analysis of Mongolian Language based on Pre-trained Models and High-resolution Networks. In Proceedings of the 2024 International Conference on Asian Language Processing (IALP), Hohhot, China, 4–6 August 2024; pp. 291–296.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S.; et al. PaLM: Scaling Language Modeling with Pathways. arXiv 2022, arXiv:2204.02311.

- Wei, J.; Tay, Y.; Bommasani, R.; Raffel, C.; Zoph, B.; Borgeaud, S.; Yogatama, D.; Bosma, M.; Zhou, D.; Metzler, D.; et al. Emergent Abilities of Large Language Models. arXiv 2022, arXiv:2206.07682.

- Hedderich, M.A.; Lange, L.; Adel, H.; Strötgen, J.; Klakow, D. A Survey on Recent Approaches for Natural Language Processing in Low-Resource Scenarios. In Proceedings of the NAACL 2021, Online, 6–11 June 2021; pp. 2545–2568.

- Strubell, E.; Ganesh, A.; McCallum, A. Energy and Policy Considerations for Deep Learning in NLP. In Proceedings of the ACL 2019, Florence, Italy, 28 July–2 August 2019; pp. 3645–3650.

- Danilevsky, M.; Qian, K.; Aharonov, R.; Katsis, Y.; Kawas, B.; Sen, P. A Survey of the State of Explainable AI for Natural Language Processing. In Proceedings of the AACL 2020, Online, 5–10 July 2020; pp. 447–459.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

- Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2019, arXiv:1910.01108.

- Caruana, R. Multitask Learning. Mach. Learn. 1997, 28, 41–75.

- Liu, P.; Qiu, X.; Huang, X. Recurrent Neural Network for Text Classification with Multi-Task Learning. In Proceedings of the IJCAI 2016, New York, NY, USA, 9–15 July 2016; pp. 2873–2879.

- Tsarfaty, R.; Nivre, J.; Andersson, E. Cross-Framework Evaluation for Statistical Parsing. In Proceedings of the EACL 2012, Avignon, France, 23–27 April 2012; pp. 44–54.

- Çöltekin, Ç. A Freely Available Morphological Analyzer for Turkish. In Proceedings of the LREC 2010, Valletta, Malta, 17–23 May 2010; pp. 820–827.

- Nivre, J.; de Marneffe, M.-C.; Ginter, F.; Goldberg, Y.; Hajič, J.; Manning, C.D.; McDonald, R.; Petrov, S.; Pyysalo, S.; Silveira, N.; et al. Universal Dependencies v1: A Multilingual Treebank Collection. In Proceedings of the LREC 2016, Portorož, Slovenia, 23–28 May 2016; pp. 1659–1666.

- Kann, K.; Cho, K.; Bowman, S.R. Towards Realistic Practices In Low-Resource Natural Language Processing: The Development Set. In Proceedings of the EMNLP 2019, Hong Kong, China, 3–7 November 2019; pp. 3342–3349.

- Ruder, S.; Vulić, I.; Søgaard, A. A Survey of Cross-lingual Word Embedding Models. J. Artif. Intell. Res. 2019, 65, 569–631.

- Zeman, D.; Hajič, J.; Popel, M.; Potthast, M.; Straka, M.; Ginter, F.; Nivre, J.; Petrov, S. CoNLL 2018 Shared Task: Multilingual Parsing from Raw Text to Universal Dependencies. In Proceedings of the CoNLL 2018, Brussels, Belgium, 31 October–1 November 2018; pp. 1–21.

- Conneau, A.; Khandelwal, K.; Goyal, N.; Chaudhary, V.; Wenzek, G.; Guzmán, F.; Grave, E.; Ott, M.; Zettlemoyer, L.; Stoyanov, V. Unsupervised Cross-lingual Representation Learning at Scale. In Proceedings of the ACL 2020, Online, 5–10 July 2020; pp. 8440–8451.

- Artetxe, M.; Labaka, G.; Agirre, E. Learning principled bilingual mappings of word embeddings while preserving monolingual invariance. In Proceedings of the EMNLP 2016, Austin, TX, USA, 1–4 November 2016; pp. 2289–2294.

- Lample, G.; Conneau, A.; Ranzato, M.; Denoyer, L.; Jégou, H. Word translation without parallel data. In Proceedings of the ICLR 2018, Vancouver, BC, Canada, 30 April–3 May 2018.

| Category | Instances | Avg. Length (Words) | Token Count | Unique Tokens |
|---|---|---|---|---|
| Happy | 300 | 18.3 | 5490 | 1247 |
| Surprise | 300 | 16.7 | 5010 | 1189 |
| Anger | 300 | 21.5 | 6450 | 1356 |
| Disgust | 300 | 19.8 | 5940 | 1298 |
| Neutral | 300 | 22.1 | 6630 | 1412 |
| Sad | 300 | 20.4 | 6120 | 1323 |
| Fear | 300 | 17.9 | 5370 | 1205 |
| Total | 2100 | 19.5 | 41,010 | 3847 |
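
As a quick consistency check on the dataset table, each category's token count should equal its instance count times its average length, and the overall average length should equal total tokens divided by total instances. The short Python sketch below re-derives these figures from values copied out of the table; the function and variable names are illustrative only and are not part of the authors' pipeline.

```python
# (instances, average length in words, token count) per category, copied from the table above.
STATS = {
    "Happy": (300, 18.3, 5490),
    "Surprise": (300, 16.7, 5010),
    "Anger": (300, 21.5, 6450),
    "Disgust": (300, 19.8, 5940),
    "Neutral": (300, 22.1, 6630),
    "Sad": (300, 20.4, 6120),
    "Fear": (300, 17.9, 5370),
}


def token_count_mismatches(stats):
    """Return categories whose token count differs from instances x average length."""
    return [
        name
        for name, (n, avg_len, tokens) in stats.items()
        if round(n * avg_len) != tokens
    ]


total_instances = sum(n for n, _, _ in STATS.values())
total_tokens = sum(tokens for _, _, tokens in STATS.values())
overall_avg_len = total_tokens / total_instances

print(token_count_mismatches(STATS), total_instances, total_tokens, round(overall_avg_len, 1))
```

All seven categories satisfy the identity, and the recomputed totals (2100 instances, 41,010 tokens, about 19.5 words per instance) agree with the Total row.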

| Emotion Category | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| Happy | 93.57 | 91.84 | 92.69 |
| Surprise | 90.24 | 89.76 | 90.00 |
| Anger | 86.72 | 85.11 | 85.90 |
| Disgust | 84.93 | 83.54 | 84.20 |
| Neutral | 82.45 | 80.87 | 81.65 |
| Sad | 79.38 | 77.26 | 78.30 |
| Fear | 78.16 | 75.49 | 76.80 |
| Average | 85.92 | 83.98 | 84.79 |
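
The per-class F1 values agree with the standard identity F1 = 2PR/(P + R) when the table's first column is taken as precision P and its second column as recall R. The Python sketch below recomputes F1 from those two columns (values copied from the table) and reports the gap to the published F1; `f1_score` here is a hypothetical helper written for this check, not a library function.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given in percent."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (first column, Recall, reported F1) per class, copied from the table above.
PER_CLASS = {
    "Happy": (93.57, 91.84, 92.69),
    "Surprise": (90.24, 89.76, 90.00),
    "Anger": (86.72, 85.11, 85.90),
    "Disgust": (84.93, 83.54, 84.20),
    "Neutral": (82.45, 80.87, 81.65),
    "Sad": (79.38, 77.26, 78.30),
    "Fear": (78.16, 75.49, 76.80),
}

# Absolute gap between recomputed and reported F1, in percentage points.
gaps = {
    name: abs(f1_score(p, r) - reported)
    for name, (p, r, reported) in PER_CLASS.items()
}

for name, gap in gaps.items():
    print(f"{name:<8} gap = {gap:.3f} pp")
```

Every per-class gap stays below 0.05 points. The Average row is left out on purpose: a macro-averaged F1 is generally not the harmonic mean of macro-averaged precision and recall, so the identity need not hold there.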

| Model | Accuracy (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| CNN | 85.76 | 80.21 | 84.93 |
| BERT | 88.40 | 85.31 | 89.61 |
| ConvBERT | 90.57 | 89.16 | 91.38 |
| KIG | 89.73 | 93.09 | 91.66 |
| MTG-XLNET | 91.58 | 92.01 | 92.47 |
| MTSA-CG (proposed) | 92.80 | 93.43 | 92.71 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| w/o GAT | 81.61 ± 1.3 | 81.18 ± 1.4 | 80.42 ± 1.5 | 82.95 ± 1.2 |
| w/o CRG | 85.27 ± 1.1 | 84.51 ± 1.2 | 83.04 ± 1.3 | 83.76 ± 1.0 |
| w/o DRG | 84.87 ± 1.2 | 84.20 ± 1.3 | 83.02 ± 1.4 | 83.49 ± 1.1 |
| w/o SSG | 89.58 ± 0.9 | 87.93 ± 0.9 | 86.35 ± 1.0 | 88.21 ± 0.8 |
| w/o Cross-Attention | 90.15 ± 0.8 | 88.96 ± 1.0 | 88.72 ± 1.1 | 89.44 ± 0.9 |
| MTSA-CG (Full) | 92.80 ± 0.7 | 92.05 ± 0.7 | 93.43 ± 0.8 | 92.71 ± 0.6 |
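
One way to read the ablation table is as the F1 drop, in percentage points, caused by removing each component from the full model. The Python sketch below computes and ranks those drops from the mean F1 values copied from the table; it ignores the ± standard deviations, so it ranks mean effects only. Names such as `f1_drops` are illustrative.

```python
# Mean F1 (%) of the full model and of each ablated variant, copied from the table above.
FULL_F1 = 92.71
ABLATION_F1 = {
    "w/o GAT": 82.95,
    "w/o CRG": 83.76,
    "w/o DRG": 83.49,
    "w/o SSG": 88.21,
    "w/o Cross-Attention": 89.44,
}


def f1_drops(full: float, ablated: dict) -> dict:
    """Percentage-point F1 drop caused by removing each component."""
    return {name: round(full - score, 2) for name, score in ablated.items()}


drops = f1_drops(FULL_F1, ABLATION_F1)
# Components ordered from the largest to the smallest F1 drop.
ranking = sorted(drops, key=drops.get, reverse=True)

for name in ranking:
    print(f"{name:<20} -{drops[name]:.2f} pp")
```

Removing the GAT costs the most (9.76 points), followed by the dependency graph (9.22) and the co-occurrence graph (8.95), while removing the semantic similarity graph or cross-attention costs 4.50 and 3.27 points respectively.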

| Method | F1-Score (%) | LAS (%) | # Sentences ᵃ |
|---|---|---|---|
| Chinese Only (no transform) | 75.32 ± 2.1 | — | 105 (5%) |
| Mongolian Only | 88.14 ± 1.0 | 81.3 | 2100 (100%) |
| Transformed (Proposed) | 92.71 ± 0.6 | 89.7 ᵇ | 2100 (100%) |
| Hybrid Oracle | 94.23 ± 0.5 | 92.4 ᵇ | 2100 (100%) |
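
The LAS column reports the labeled attachment score, the standard dependency-parsing metric: the percentage of tokens whose predicted head and dependency label both match the gold tree, while the unlabeled attachment score (UAS) checks the head only. The Python sketch below assumes that gold and predicted analyses share one tokenization; the official CoNLL 2018 shared-task scorer additionally aligns words when tokenizations differ, which is omitted here. The toy sentence, labels, and `attachment_scores` helper are hypothetical.

```python
from typing import List, Tuple

# One (head index, dependency label) pair per token; head 0 denotes the root.
Arc = Tuple[int, str]


def attachment_scores(gold: List[Arc], pred: List[Arc]) -> Tuple[float, float]:
    """Return (UAS, LAS) in percent for two analyses of the same tokens."""
    if len(gold) != len(pred):
        raise ValueError("gold and predicted analyses must have the same length")
    if not gold:
        return 0.0, 0.0
    head_ok = sum(g[0] == p[0] for g, p in zip(gold, pred))  # head matches
    both_ok = sum(g == p for g, p in zip(gold, pred))  # head and label match
    n = len(gold)
    return 100.0 * head_ok / n, 100.0 * both_ok / n


# Toy three-token sentence: the third token has the right head but the wrong label.
gold = [(2, "nsubj"), (0, "root"), (2, "obj")]
pred = [(2, "nsubj"), (0, "root"), (2, "obl")]
uas, las = attachment_scores(gold, pred)
print(f"UAS = {uas:.2f}, LAS = {las:.2f}")
```

Because LAS also requires the correct label, it can never exceed UAS on the same analyses.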

| Setting | Accuracy (%) | F1-Score (%) | ΔF1 (pp) |
|---|---|---|---|
| Global Statistics (leaked) | 94.17 ± 0.6 | 94.52 ± 0.5 | +1.81 |
| Leakage-Safe (Proposed) | 92.80 ± 0.7 | 92.71 ± 0.6 | baseline |
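
The gap between the two rows presumably comes from where the graph statistics are estimated: the leaked setting computes them over the whole corpus, evaluation sentences included, while the leakage-safe setting fits them on the training split only. A minimal sketch, assuming sliding-window co-occurrence counting as used in graph-based text classifiers such as that of Yao et al.; the window size, the `cooccurrence_counts` helper, and the toy splits are hypothetical and not the authors' settings.

```python
from collections import Counter
from typing import Iterable, List


def cooccurrence_counts(docs: Iterable[List[str]], window: int = 3) -> Counter:
    """Count unordered pairs of distinct tokens at most `window - 1` positions apart."""
    counts: Counter = Counter()
    for tokens in docs:
        for i, left in enumerate(tokens):
            for right in tokens[i + 1 : i + window]:
                if left != right:
                    counts[tuple(sorted((left, right)))] += 1
    return counts


# Hypothetical toy splits standing in for tokenized Mongolian sentences.
train_docs = [["a", "b", "c"], ["b", "c", "d"]]
test_docs = [["c", "e"]]

# Leaky: statistics are estimated over every sentence, evaluation data included.
leaky = cooccurrence_counts(train_docs + test_docs)

# Leakage-safe: statistics are fit on the training split only, then frozen and
# reused when building graphs for validation and test sentences.
safe = cooccurrence_counts(train_docs)

# Pairs that only the leaky statistics know about come from the test split.
print(sorted(set(leaky) - set(safe)))
```

In the leaky variant, edges that exist only in test sentences shape the graph the model is evaluated on, which is one way an inflated score like the +1.81-point F1 in the table can arise.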

| Configuration | F1-Score (%) | Graph Density | Avg. Degree |
|---|---|---|---|
| Surface Forms (default) | 92.71 ± 0.6 | 0.087 | 8.3 |
| Lemmatized | 91.95 ± 0.7 | 0.092 | 8.9 |
| No Stopword Masking | 91.48 ± 0.8 | 0.114 | 11.2 |
| | 91.82 ± 0.7 | 0.135 | 13.1 |
| | 92.34 ± 0.6 | 0.061 | 5.8 |
| | 91.76 ± 0.8 | 0.043 | 4.1 |
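
To interpret the Graph Density and Avg. Degree columns, here is a minimal sketch assuming the standard definitions for an undirected simple graph with N nodes and M edges: density 2M/(N(N-1)) and average degree 2M/N. The table does not state which definitions or averaging scheme were used, so this only illustrates the two quantities; `graph_stats` and the toy graph are hypothetical.

```python
from typing import Iterable, Tuple


def graph_stats(num_nodes: int, edges: Iterable[Tuple[int, int]]) -> Tuple[float, float]:
    """Density and average degree of an undirected simple graph.

    Self-loops are dropped and (u, v) / (v, u) are treated as the same edge.
    """
    unique_edges = {tuple(sorted(e)) for e in edges if e[0] != e[1]}
    m = len(unique_edges)
    density = 2 * m / (num_nodes * (num_nodes - 1)) if num_nodes > 1 else 0.0
    avg_degree = 2 * m / num_nodes if num_nodes > 0 else 0.0
    return density, avg_degree


# Toy 4-node cycle plus a duplicated edge and a self-loop, both ignored.
density, avg_degree = graph_stats(4, [(0, 1), (1, 2), (2, 3), (3, 0), (1, 0), (2, 2)])
print(f"density = {density:.3f}, average degree = {avg_degree:.1f}")
```

Under these definitions the average degree equals the density times (N - 1), so for a fixed node set the two columns rise and fall together, as they do across the configurations in the table.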

| True / Pred. (%) | Happy | Surprise | Anger | Disgust | Neutral | Sad | Fear |
|---|---|---|---|---|---|---|---|
| Happy | 93.6 | 2.1 | 0.3 | 0.7 | 2.4 | 0.5 | 0.4 |
| Surprise | 3.2 | 89.8 | 1.1 | 0.6 | 3.5 | 0.9 | 0.9 |
| Anger | 0.4 | 1.3 | 85.1 | 4.8 | 4.2 | 2.7 | 1.5 |
| Disgust | 0.6 | 0.8 | 5.2 | 83.5 | 6.1 | 2.3 | 1.5 |
| Neutral | 2.8 | 3.1 | 4.5 | 5.9 | 80.9 | 1.6 | 1.2 |
| Sad | 0.7 | 1.2 | 3.1 | 2.5 | 4.8 | 77.3 | 10.4 |
| Fear | 0.5 | 1.5 | 2.3 | 2.1 | 4.6 | 13.5 | 75.5 |
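
Each row of the confusion matrix sums to 100, so the entries read as row-normalized percentages: the diagonal is the share of each true class predicted correctly (its recall), and the off-diagonal cells show where the errors go. The Python sketch below checks the row sums and lists the largest confusions, using values copied from the table; the helper names are illustrative.

```python
LABELS = ["Happy", "Surprise", "Anger", "Disgust", "Neutral", "Sad", "Fear"]
# Rows are true classes, columns are predicted classes; entries are percentages.
MATRIX = [
    [93.6, 2.1, 0.3, 0.7, 2.4, 0.5, 0.4],
    [3.2, 89.8, 1.1, 0.6, 3.5, 0.9, 0.9],
    [0.4, 1.3, 85.1, 4.8, 4.2, 2.7, 1.5],
    [0.6, 0.8, 5.2, 83.5, 6.1, 2.3, 1.5],
    [2.8, 3.1, 4.5, 5.9, 80.9, 1.6, 1.2],
    [0.7, 1.2, 3.1, 2.5, 4.8, 77.3, 10.4],
    [0.5, 1.5, 2.3, 2.1, 4.6, 13.5, 75.5],
]


def per_class_recall(matrix, labels):
    """Diagonal of a row-normalized confusion matrix: recall of each true class."""
    return {label: matrix[i][i] for i, label in enumerate(labels)}


def top_confusions(matrix, labels, k=3):
    """Largest off-diagonal cells as (percent, true label, predicted label)."""
    cells = [
        (matrix[i][j], labels[i], labels[j])
        for i in range(len(labels))
        for j in range(len(labels))
        if i != j
    ]
    return sorted(cells, reverse=True)[:k]


row_sums = [sum(row) for row in MATRIX]
recall = per_class_recall(MATRIX, LABELS)
worst = top_confusions(MATRIX, LABELS)
print(worst)
```

The two largest off-diagonal cells are Fear predicted as Sad (13.5%) and Sad predicted as Fear (10.4%), so a large share of the errors for these two lowest-recall classes goes to each other.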

| Factor | Sad Errors (n = 35) | Fear Errors (n = 38) | Neutral Errors (n = 42) |
|---|---|---|---|
| Case Marker Ambiguity | 8 (22.9%) | 6 (15.8%) | 5 (11.9%) |
| Verb Argument Mismatch | 12 (34.3%) | 15 (39.5%) | 9 (21.4%) |
| Negation Scope Error | 5 (14.3%) | 8 (21.1%) | 3 (7.1%) |
| Long Sentence (>30 words) | 6 (17.1%) | 5 (13.2%) | 12 (28.6%) |
| Clause Embedding | 9 (25.7%) | 11 (28.9%) | 8 (19.0%) |
| Modal Particle Subtlety | 7 (20.0%) | 9 (23.7%) | 6 (14.3%) |
| Code-Switching (MN–CN) | 2 (5.7%) | 1 (2.6%) | 8 (19.0%) |
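
Each percentage in the error-analysis table is the factor count divided by the number of analysed errors for that class (n = 35, 38, and 42). The Python sketch below re-derives the percentages from the counts copied from the table and sums the counts per class; the helper names are illustrative.

```python
# Number of analysed errors per class, and factor counts as (Sad, Fear, Neutral).
N_ERRORS = {"Sad": 35, "Fear": 38, "Neutral": 42}
FACTOR_COUNTS = {
    "Case Marker Ambiguity": (8, 6, 5),
    "Verb Argument Mismatch": (12, 15, 9),
    "Negation Scope Error": (5, 8, 3),
    "Long Sentence (>30 words)": (6, 5, 12),
    "Clause Embedding": (9, 11, 8),
    "Modal Particle Subtlety": (7, 9, 6),
    "Code-Switching (MN–CN)": (2, 1, 8),
}
CLASSES = list(N_ERRORS)


def factor_percentages(counts, n_errors):
    """Share of each class's analysed errors tagged with each factor, to one decimal."""
    classes = list(n_errors)
    return {
        factor: tuple(round(100 * c / n_errors[cls], 1) for c, cls in zip(row, classes))
        for factor, row in counts.items()
    }


pct = factor_percentages(FACTOR_COUNTS, N_ERRORS)

# Total factor tags per class; a total above n means the factors are not mutually exclusive.
tag_totals = {
    cls: sum(row[k] for row in FACTOR_COUNTS.values()) for k, cls in enumerate(CLASSES)
}
print(tag_totals)
```

The recomputed percentages match the table, and the per-class totals (49, 55, and 51 tags) exceed the corresponding n, which indicates that a single misclassified sentence can be attributed to more than one factor.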

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ren, Q.; Wang, Q.; Lu, Y.; Ji, Y.; Wu, N. MTSA-CG: Mongolian Text Sentiment Analysis Based on ConvBERT and Graph Attention Network. Electronics 2025, 14, 4581. https://doi.org/10.3390/electronics14234581

Ren Q, Wang Q, Lu Y, Ji Y, Wu N. MTSA-CG: Mongolian Text Sentiment Analysis Based on ConvBERT and Graph Attention Network. Electronics. 2025; 14(23):4581. https://doi.org/10.3390/electronics14234581

Chicago/Turabian Style: Ren, Qingdaoerji, Qihui Wang, Ying Lu, Yatu Ji, and Nier Wu. 2025. "MTSA-CG: Mongolian Text Sentiment Analysis Based on ConvBERT and Graph Attention Network" Electronics 14, no. 23: 4581. https://doi.org/10.3390/electronics14234581

APA Style: Ren, Q., Wang, Q., Lu, Y., Ji, Y., & Wu, N. (2025). MTSA-CG: Mongolian Text Sentiment Analysis Based on ConvBERT and Graph Attention Network. Electronics, 14(23), 4581. https://doi.org/10.3390/electronics14234581