1. Introduction

With the continuous advancement of industrial automation, cranes [1,2,3] are increasingly applied in construction, logistics, manufacturing, and other fields. The wire rope is a key load-bearing component of a crane, and its condition directly affects the operational safety and load-bearing capacity of the equipment. Therefore, wire rope fault detection remains an important research direction for ensuring the safe operation of lifting systems [4].

Current research mainly focuses on wire breakage [5], wear [6,7], and stress conditions [8]. For example, magnetic flux leakage, electromagnetic induction, or computer vision methods are used to identify wire breakage [9,10]. Infrared, ultrasonic, and eddy current techniques are applied to detect surface and internal wear or corrosion [11]. In addition, tension sensors and camera devices are used to monitor the stress posture of wire ropes [12]. The lifting ratio refers to the number of effective branches of the wire rope in a pulley block system, which directly affects the load-bearing capacity and operational stability of the equipment. A wire rope lifting ratio fault refers to a situation in which the actual working ratio of the wire rope during crane operation does not match the designed ratio or the ratio configured in the system. An incorrect ratio may cause excessive stress on a single rope strand, increasing the risk of breakage, and may also lead to crane overload, posing serious safety hazards. At the same time, an improper ratio accelerates the wear of both the wire rope and the pulley block, shortens equipment lifespan, and raises maintenance costs. Therefore, automated detection of wire rope lifting ratio faults is of great significance for improving the safety of lifting operations and the operational stability of equipment.

However, compared with conventional faults such as broken wires and wear, research on the detection of abnormal wire rope lifting ratios remains relatively limited, and existing approaches face significant limitations in practical applications [13,14]. Manual inspection is still commonly used on-site, where operators determine the lifting ratio by visually counting the number of wire rope loops. This method is highly subjective, inefficient, and heavily reliant on operator experience, making it unsuitable for continuous monitoring under complex working conditions. In addition, sensor and signal processing techniques are often applied for indirect estimation of the lifting ratio: encoders, strain gauges, or displacement sensors are installed to acquire signals such as drum rotation speed, tension, or rope length, which are then used in structural models to estimate the ratio [15]. These methods suffer from accumulated errors, strong dependence on accurate modeling, and high installation and maintenance complexity, which limit their effectiveness in scenarios involving multiple lifting ratio changes or irregular rope winding. In recent years, some studies have begun to explore computer vision techniques for detecting surface defects of wire ropes [6,7], and have extended these efforts to lifting ratio estimation by using edge detection or pulley counting to indirectly infer the number of rope loops. However, these approaches often rely on handcrafted rules or traditional detection networks and lack robustness in the presence of structural overlaps, shadow occlusions, and background interference. In the broader field of industrial visual inspection, researchers are addressing similar difficulties by introducing advanced Transformer architectures. For instance, the NN2ViT model proposed by Wahid et al. enhances global and local feature analysis for anomaly detection in industrial images by integrating neural networks with Vision Transformers [16]. Concurrently, research exploring the combination of frequency-domain information with Transformers, such as the frequency-domain nuances guided parallel transformer model, aims to improve the accuracy of industrial anomaly localization [17]. Therefore, how to leverage more powerful visual modeling capabilities to accurately extract spatial structural information of wire ropes under complex conditions remains a key challenge in achieving intelligent diagnosis of lifting ratio faults.

In recent years, cross-domain intelligent diagnostic methods have provided new ideas for wire rope fault monitoring. For example, the CFFsBD method [18] was originally applied to vibration signal analysis of rolling bearings. Its core idea is to perform blind deconvolution based on candidate fault frequencies (CFFs) to enhance weak periodic fault features. This method effectively addresses frequency shift issues caused by slip or measurement errors. Its innovation lies in identifying the frequencies most likely related to faults by deeply mining local time-frequency features, and using these frequencies as a basis for feature enhancement. Although CFFsBD targets vibration signals, its approach of robustly extracting key features from complex noisy data aligns closely with the challenge of extracting effective boundaries and structural features from wire rope images in complex backgrounds. Meanwhile, semi-supervised learning methods, such as variational autoencoders (VAE) [19], can leverage unlabeled data for effective learning under limited labeled samples and have been demonstrated to perform well in bearing fault diagnosis. Physics-informed neural networks (PINNs) [20] introduce physical laws into the neural network training process, which helps improve feature extraction effectiveness and model generalization capability. These cross-domain methods not only enrich monitoring techniques but also demonstrate that the concept of robust feature extraction from complex data is transferable across different industrial diagnostic tasks, providing theoretical and methodological support for lifting ratio detection and fault diagnosis of wire ropes. Regarding the application of visual models in complex industrial environments specifically, recent studies have demonstrated customized Transformer solutions tailored to specific challenges. For example, LyFormer significantly improves the detection accuracy of small, dense components in low-contrast, high-density X-ray images from SMT manufacturing by incorporating a context-aware transformer with progressive preprocessing [21]. This offers valuable insights for accurately distinguishing dense wire ropes in similarly complex scenarios. Furthermore, the potential of the Transformer architecture for cross-domain diagnosis is being further explored, as seen in research employing a variant Swin Transformer framework for multi-source heterogeneous information fusion to achieve intelligent cross-domain fault diagnosis [22].

In the task of wire rope lifting ratio fault detection, computer vision techniques have become an important approach for achieving automated recognition. The feature enhancement and robustness concepts provided by cross-domain methods inspire visual approaches for image analysis under complex operating conditions. Computer vision techniques have evolved from early handcrafted feature extraction methods to end-to-end learning frameworks centered on deep learning. Classical approaches such as SIFT [23] and HOG [24] extract local gradients and keypoints with strong geometric invariance, and are widely used in tasks like image matching and pedestrian detection. With the rise of convolutional neural networks (CNNs) [25], hierarchical spatial feature modeling became mainstream, significantly improving the performance of image classification, detection, and segmentation tasks [26]. Faster R-CNN [27] introduces a region proposal network (RPN) to enable efficient detection through shared features, while Mask R-CNN [28] further extends this framework to instance segmentation. The YOLO series [29,30,31] reformulates object detection as a regression problem and achieves extremely high inference speeds while maintaining accuracy, reaching up to 155 FPS. In the field of semantic segmentation, UNet [32] leverages skip connections and a symmetric architecture to enable efficient pixel-level prediction, making it suitable for scenarios with limited labeled data.

With the success of the Transformer [33] architecture in the field of natural language processing (NLP), researchers have introduced it into computer vision (CV) to overcome the limitations of CNNs in receptive field size and global context modeling. Vision Transformer (ViT) [34], proposed by Dosovitskiy et al., divides an image into fixed-size patches to enable global modeling. It achieves impressive results on large-scale datasets such as ImageNet-21k, but its performance on small datasets remains unstable, and it requires substantial computational resources, which limits its applicability in industrial scenarios [35]. To address these issues, Swin Transformer [36] introduces a local window-based attention mechanism that balances computational efficiency with cross-window information exchange, achieving state-of-the-art performance on benchmarks such as COCO and ADE20K. Pyramid Vision Transformer (PVT) [37] incorporates a pyramid structure to extract multi-scale features, making it a fundamental backbone for semantic segmentation and object detection.

Beyond foundational architecture research, Transformer-based models have seen widespread application across various downstream vision tasks, demonstrating excellent performance and generalization capabilities. In image segmentation, MaskFormer [38] reformulates semantic segmentation as a mask classification problem and unifies semantic and instance segmentation through a single mask representation, achieving 55.6 mIoU on the ADE20K dataset. TransUNet [39] combines the strengths of Transformer and UNet architectures with a dual-branch structure that enhances multi-scale feature extraction, showing outstanding performance in surface defect detection. MedFormer [40] introduces a hybrid axial attention mechanism and achieves 91.2% and 89.4% mIoU in colonoscopy image segmentation and skin lesion detection, respectively, effectively addressing fine-grained structure segmentation needs in medical imaging. In object detection, RT-DETR [41] is a real-time detection Transformer architecture that balances accuracy and inference speed through an end-to-end query mechanism and improved feature enhancement modules, without relying on candidate box generation or NMS post-processing. Its hardware-friendly design also provides good deployment potential for industrial inspection tasks.

SegFormer, proposed by NVIDIA [42], is an efficient and lightweight semantic segmentation framework that integrates a hierarchical Transformer encoder (MiT), which does not require positional encoding, with an all-MLP decoder. It has strong multi-scale modeling capability and good deployment performance. SegFormer avoids both the fixed input resolution limitation of ViT and the complex window-shifting design of Swin Transformer, while achieving advanced segmentation accuracy on several mainstream semantic segmentation datasets such as ADE20K and Cityscapes. It also demonstrates good cross-scene adaptability and real-time performance due to its simple architecture and hardware-friendly design.

The image environment addressed in this study presents distinct industrial characteristics, such as complex wire rope backgrounds, highly variable winding structures, and blurred target boundaries. Traditional CNN architectures, such as UNet, perform well in basic segmentation tasks but are limited by their local receptive fields, making it difficult to effectively model the spatial continuity and arrangement patterns of wire ropes across the entire pulley system. In contrast, object detection algorithms like YOLO and RT-DETR mainly focus on locating and identifying object bounding boxes in images. They excel at quickly detecting object categories and positions, but typically output rectangular bounding boxes rather than fine-grained pixel-level masks. This makes them inadequate for the detailed segmentation required by the complex shapes and blurred boundaries of wire ropes in this study. Moreover, wire ropes in industrial scenarios often exhibit highly entangled and densely interlaced geometric structures. Detection methods struggle to accurately distinguish overlapping or adjacent strands and cannot directly obtain shape information, which limits their ability to precisely determine structural integrity and defect boundaries in wire rope fault diagnosis. SegFormer, by contrast, is based on a self-attention mechanism that enables global context modeling. It accurately perceives the distribution and geometric features of wire ropes across pulleys, effectively improving structural consistency and boundary discrimination. In addition, the lightweight design of SegFormer aligns well with the practical requirements for inference efficiency and edge deployment in industrial environments. 
Therefore, considering segmentation performance, structural adaptability, and engineering deployment value, this paper selects SegFormer as the backbone network and further introduces a self-attention mechanism in the decoder stage to enhance semantic representation and diagnostic accuracy in segmentation results.

To address the limitations of traditional lifting ratio identification methods, such as low accuracy and poor adaptability, this paper proposes a lifting ratio detection method based on an enhanced SegFormer architecture, aiming to achieve automatic fault detection of wire rope lifting ratios under complex industrial conditions. The core innovation lies in a targeted and parsimonious enhancement to the SegFormer decoder, where a self-attention module is introduced to equip the decoder with global contextual reasoning capabilities. This design mitigates the spatial modeling limitations and overfitting risk inherent in the original pure-MLP decoder, enabling accurate extraction of the spatial distribution and path information of wire ropes from images. Based on the segmentation results, the number of effective wire rope segments between the drum and the hook can be automatically determined, thereby completing the fault diagnosis task for the reeving system. It should be noted that the focus of this work is primarily on the design and optimization of the semantic segmentation method for wire rope images, while the specific implementation of the lifting ratio calculation is not elaborated in detail. To validate the effectiveness of the proposed method, a comprehensive image dataset covering multiple lifting ratio scenarios has been constructed, and systematic comparative experiments have been conducted. Experimental results demonstrate that this minimal architectural intervention yields performance gains while preserving the low computational footprint of the original SegFormer, illustrating an effective customization of a modern foundation model for the specific and demanding task of high-precision, robust crane wire rope lifting ratio detection. The main contributions of this work are as follows:

The Transformer architecture is applied to the detection of the crane wire rope lifting ratio. A novel model is proposed by integrating a self-attention module into the original SegFormer framework.

A wire rope image dataset covering multiple lifting ratio states is constructed to provide a data basis for related research.

The accuracy and robustness of the proposed method are verified in multiple lifting ratio scenarios, which demonstrates its potential for application in practical engineering.

The rest of this work is organized as follows.

Section 2 introduces the SegFormer-based wire rope segmentation and lifting ratio identification method.

Section 3 presents the experimental results and analysis based on the constructed dataset.

Section 4 summarizes the research contributions of this paper and discusses potential directions for future work.

2. Methods

The objective of the crane wire rope lifting ratio fault diagnosis task is to accurately determine the configuration of the wire rope ratio from the image data. This involves recognizing the specific winding patterns and the number of wire ropes within the pulley block system. The task can be modeled as a semantic segmentation problem, where each pixel is classified as wire rope or background, followed by counting the rope segments in the resulting mask. Traditional edge-based or texture-based detection methods struggle with the complexities of wire rope images, which often include occlusion, overlap, and varying lighting conditions. Therefore, deep learning models with strong feature extraction capabilities, particularly those based on Transformer architectures, offer an effective solution.

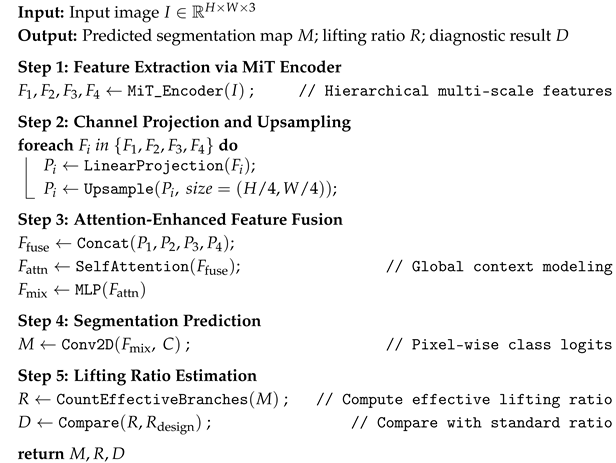

In this study, SegFormer is adopted as the backbone architecture, leveraging its efficient feature extraction and semantic segmentation capabilities to design a network adapted to the identification of the crane pulley ratio. SegFormer primarily consists of two components: a hierarchical Transformer encoder and a lightweight All-MLP decoder. The Mix Transformer (MiT) encoder extracts multi-scale global semantic information through a multi-stage structure, enhancing the model’s ability to understand complex scenes. The All-MLP decoder, with its lightweight design, integrates and restores multi-scale features to achieve efficient and accurate pixel-level classification. The synergy between these two components enables the model to maintain a low computational complexity while achieving superior performance in lifting ratio recognition. Subsequently, a self-attention module is integrated into the decoder of the original SegFormer architecture, enabling the model to fuse multi-scale features while enhancing its global modeling capability and spatial positional sensitivity. This design aims to improve boundary segmentation accuracy, structural coherence, and overall robustness. The overall block diagram of the method is shown in Figure 1.

2.1. Hierarchical Transformer Encoder

The encoder in this study adopts the Mix Transformer (MiT) architecture from SegFormer, which extracts multi-scale features from the input image through a hierarchical design. Unlike the standard Vision Transformer (ViT), which uses non-overlapping patch partitioning, MiT employs an overlapping patch embedding strategy, controlling the convolution kernel size $K$, stride $S$, and padding $P$ to enhance semantic continuity between adjacent regions. Specifically, different convolution parameters (e.g., $K = 7$, $S = 4$, $P = 3$ and $K = 3$, $S = 2$, $P = 1$) are used at different stages, effectively capturing local details such as edges and textures while maintaining the same feature map dimensions as non-overlapping partitioning.
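The effect of the overlapping patch embedding on feature map size can be checked with the standard convolution output formula. The sketch below is illustrative only: the per-stage `(kernel, stride, padding)` values are the ones reported for the original SegFormer and are assumed here rather than taken from this study's configuration, and the function names are hypothetical.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length along one axis for a standard 2D convolution."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) for the four encoder stages.
# Assumed values from the original SegFormer design, not from this paper.
STAGE_PARAMS = [(7, 4, 3), (3, 2, 1), (3, 2, 1), (3, 2, 1)]


def stage_resolutions(height: int, width: int):
    """Return the (H, W) of the feature map produced by each encoder stage."""
    sizes = []
    h, w = height, width
    for kernel, stride, padding in STAGE_PARAMS:
        h = conv_output_size(h, kernel, stride, padding)
        w = conv_output_size(w, kernel, stride, padding)
        sizes.append((h, w))
    return sizes


if __name__ == "__main__":
    # A 512 x 512 crop yields maps at 1/4, 1/8, 1/16 and 1/32 resolution.
    print(stage_resolutions(512, 512))
```

With these parameters the overlapping convolutions give the same resolutions (1/4, 1/8, 1/16, 1/32 of the input) that non-overlapping patches of stride 4, 2, 2, 2 would give, which is what allows the overlap to be added without changing downstream shapes.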

Each encoding stage consists of multiple Transformer blocks, each containing Multi-Head Self-Attention (MHSA) and a Feed-Forward Network (FFN). Departing from traditional Transformers, our model uses the Mix-FFN architecture proposed by SegFormer, which incorporates a 3 × 3 convolutional layer within the FFN. The zero-padding in this convolution provides implicit positional encoding, avoiding the performance degradation associated with explicit positional encoding when inference resolution varies. Furthermore, the Spatial Reduction Attention (SRA) mechanism is applied, performing spatial down-sampling on the Key and Value tensors to reduce the computational complexity of MHSA from $O(N^2)$ to $O(N^2/R)$, where $N$ is the number of tokens and $R$ is the reduction ratio, significantly improving the processing efficiency for high-resolution images.
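To give a sense of scale for the saving from spatial reduction, the following sketch counts the query-key score entries per attention head with and without reducing the Key/Value sequence. It is a back-of-the-envelope illustration, not the model code; the function name is hypothetical, and the reduction ratio of 64 for the first stage is an assumption taken from the original SegFormer design.

```python
def attention_score_entries(height: int, width: int, reduction: int = 1) -> int:
    """Number of query-key score entries for one head on an H x W feature map.

    Queries keep all N = H * W tokens; keys/values are assumed to be
    shortened to N / reduction tokens by spatial down-sampling.
    """
    n_queries = height * width
    n_keys = n_queries // reduction
    return n_queries * n_keys


if __name__ == "__main__":
    # First-stage feature map of a 512 x 512 crop (1/4 resolution).
    full = attention_score_entries(128, 128)           # no reduction: N^2
    reduced = attention_score_entries(128, 128, 64)    # assumed R = 64: N^2 / R
    print(full, reduced, full // reduced)
```

The score matrix shrinks by exactly the reduction ratio, which is why the down-sampling matters most in the early, high-resolution stages.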

These designs enable the MiT encoder to effectively learn multi-scale semantic structures while maintaining computational efficiency, providing strong semantic support for the downstream pulley ratio recognition task.

2.2. Lightweight MLP-Attention Decoder

Building upon the original SegFormer’s lightweight All-MLP decoder, this study introduces a lightweight self-attention module during the decoding stage to enhance global context modeling capability during multi-scale feature fusion.

The original SegFormer decoder integrates multi-scale features and generates semantic segmentation results through four key steps: channel unification, upsampling, feature fusion, and final prediction. While this design is computationally efficient, the pure MLP-based approach has limitations in spatial position awareness when handling targets with complex winding structures and blurred boundaries like wire ropes.

Our proposed improvement integrates a Parallel Self-Attention (PSA) mechanism during the feature fusion stage. Specifically, after upsampling features from various scales to $\frac{H}{4} \times \frac{W}{4}$ resolution and concatenating them, the self-attention module explicitly models global contextual relationships, enhancing semantic representation and spatial position awareness. This design significantly improves boundary delineation accuracy and structural continuity while maintaining the lightweight nature of SegFormer.

This enhancement is particularly suitable for segmenting wire rope images with blurred boundaries and complex structures in industrial scenarios, effectively improving geometric consistency and segmentation robustness. Importantly, the improved decoder still avoids reliance on conventional components such as convolution, skip connections, or positional encoding, maintaining low computational complexity and making it particularly suitable for real-time deployment in resource-constrained industrial environments.

2.3. Lifting Ratio Calculation and Fault Diagnosis

In the actual fault detection process, a camera deployed on the crane first captures images of the wire ropes and inputs them into the trained semantic segmentation model for processing. The segmentation categories include wire ropes and background. Then, the wire rope lifting ratio counting algorithm analyzes the mask based on the segmentation results to obtain the actual lifting ratio. Specifically, the algorithm calculates the ratio by identifying connected regions in the mask: it scans the image row by row to collect pixel values and counts the size of each connected region, filtering out regions with sizes not exceeding a threshold (set to 5 pixels in this study). Next, it counts the frequency of different connected regions appearing in each row and selects those whose occurrence is not lower than a threshold (set to 30 in this study). The maximum of these values is taken as the wire rope lifting ratio.

When the occurrence of the maximum value is close to the threshold (within 50% difference in this study), the algorithm determines an uncertain case and outputs the lifting ratio along with an uncertainty flag to prompt the user for further verification. Finally, the algorithm compares the calculated ratio with the task’s expected design ratio. If the two values do not match, a lifting ratio fault is determined. The above threshold parameters are verified through multiple experiments and reliably enable accurate determination of the wire rope lifting ratio under various imaging conditions, thus completing a closed-loop process from image segmentation results to fault decision. The pseudo code of the method is shown in Algorithm 1.

Algorithm 1: SegFormer+AM-Based Wire Rope Lifting Ratio Detection

[Pseudocode image: Electronics 14 04560 i001]
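The counting and decision steps described in this subsection can be sketched as follows. This is one possible reading of the text, not a reproduction of Algorithm 1: it assumes that a "connected region" in a row is a horizontal run of rope pixels, that runs of 5 pixels or fewer are discarded, that a rope count must appear in at least 30 rows to be accepted, and that "within 50%" means the row count is at most 1.5 times that threshold. All function and parameter names are hypothetical.

```python
from collections import Counter


def count_row_runs(row, min_width=5):
    """Count horizontal runs of rope pixels (non-zero) wider than min_width."""
    runs, width = 0, 0
    for pixel in list(row) + [0]:  # trailing 0 closes a run touching the edge
        if pixel:
            width += 1
        else:
            if width > min_width:
                runs += 1
            width = 0
    return runs


def estimate_lifting_ratio(mask, min_width=5, min_rows=30, margin=0.5):
    """Return (ratio, uncertain) for a binary mask given as rows of 0/1.

    ratio is the largest per-row run count seen in at least min_rows rows;
    uncertain is True when that row count is close to the threshold.
    """
    histogram = Counter(count_row_runs(row, min_width) for row in mask)
    candidates = {count: rows for count, rows in histogram.items()
                  if count > 0 and rows >= min_rows}
    if not candidates:
        return 0, True  # nothing reliable found: flag for manual checking
    ratio = max(candidates)
    uncertain = candidates[ratio] <= min_rows * (1 + margin)
    return ratio, uncertain


def diagnose(mask, expected_ratio):
    """Compare the estimated ratio with the design ratio and report a fault."""
    ratio, uncertain = estimate_lifting_ratio(mask)
    return {"ratio": ratio, "uncertain": uncertain,
            "fault": ratio != expected_ratio}


if __name__ == "__main__":
    # Synthetic mask: four ropes, each 8 px wide, visible in 60 rows.
    row = ([0] * 6 + [1] * 8) * 4 + [0] * 6
    mask = [row] * 60
    print(diagnose(mask, expected_ratio=4))
    print(diagnose(mask, expected_ratio=6))
```

Taking the maximum accepted count, rather than the most frequent one, favors rows in which all ropes are visible and separated; the row-count threshold is what keeps isolated noisy rows from inflating the result.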

2.4. Training Settings

Datasets: This study constructs a dataset using images collected from crane operation sites. The dataset contains a total of 1044 high-resolution images, of which 846 are used for training, 94 for validation, and 104 reserved as the test set. The images are annotated for semantic segmentation using the LabelMe tool, with the annotated category being the wire ropes mounted on the crane (excluding wire ropes on the drum). During annotation, boundaries are typically drawn close to the outer edges of the wire ropes. For densely arranged or occluded wire ropes, gaps are left at the boundaries to distinguish different ropes. Knots and accessories on the wire ropes are considered part of the rope. Annotation is performed independently by three annotators and subsequently checked and refined by one of them to ensure consistency and quality. Furthermore, to enhance the model’s generalization under varying conditions, data augmentation is applied to the original dataset. Specifically, several augmentation techniques are used, including random rotation (to simulate changes in viewpoint), horizontal and vertical flipping, random cropping, and random adjustment of brightness and contrast (to simulate illumination variations). These techniques aim to improve model robustness by exposing it to a wider range of scenarios.

Hyperparameter Selection and Implementation Details: The hyperparameters in our study are chosen based on established practices for Transformer-based models and empirical validation on our dataset. The model is trained on a server equipped with an RTX 4090 GPU. The batch size is set to 8, the weight decay to 0.05, and the backbone is initialized with the official SegFormer pre-trained MiT-B1 model, with an additional self-attention module introduced in the decoder. During training, several data augmentation strategies are applied, including random resizing (scaling ratio between 0.5 and 2.0), random cropping (crop size of 512 × 512 with a maximum crop ratio of 75%), random flipping, and photometric distortion (brightness delta of 32 and contrast range of [0.5, 1.5]). The decoder’s self-attention module is configured with 8 attention heads, consistent with the design of the SegFormer-B1 backbone’s encoder layers. The embedding dimensions throughout the network adhere to the standardized SegFormer-B1 (MiT-B1) specifications, where the encoder outputs multi-scale features with channels of [64, 128, 320, 512] across its four stages, and the decoder projects all features to a unified dimension of 256 before fusion. The AdamW optimizer is used with a base learning rate of $6 \times 10^{-5}$. A polynomial learning rate decay with linear warm-up is employed as the scheduler. Training is performed for 160,000 iterations on 846 training images. Model performance is evaluated in terms of mean Intersection over Union (mIoU), mean Pixel Accuracy (mPA), average Accuracy (aAcc), floating-point operations (FLOPs), and inference time, with metrics reported every 4000 iterations.
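The scheduler described above can be written as a small function. The base learning rate and the total iteration count are taken from the text; the warm-up length (1500 iterations), warm-up start ratio ($10^{-6}$), decay power (1.0), and zero minimum learning rate are not stated in this paper and are assumed from commonly used SegFormer training settings. The function name is hypothetical.

```python
def poly_lr_with_warmup(step, base_lr=6e-5, max_steps=160_000,
                        warmup_steps=1500, warmup_ratio=1e-6,
                        power=1.0, min_lr=0.0):
    """Learning rate at a given iteration.

    base_lr and max_steps follow the text; warmup_steps, warmup_ratio,
    power and min_lr are assumptions, not values reported by the authors.
    """
    progress = min(step, max_steps) / max_steps
    poly_lr = (base_lr - min_lr) * (1.0 - progress) ** power + min_lr
    if step < warmup_steps:
        # Linear warm-up: scale grows from warmup_ratio to 1 over warmup_steps.
        scale = warmup_ratio + (1.0 - warmup_ratio) * step / warmup_steps
        return poly_lr * scale
    return poly_lr


if __name__ == "__main__":
    for step in (0, 750, 1500, 80_000, 160_000):
        print(step, poly_lr_with_warmup(step))
```

Under these assumptions the rate rises almost to the base value within the first 1500 iterations and then decays linearly to zero at iteration 160,000.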

Comparison Methods: To validate the effectiveness of the proposed method, we selected six representative segmentation and detection networks as baseline models for comparison: the original SegFormer, the classic U-Net [32], DeepLabV3+ [43], Mask2Former [38], YOLOv11-seg [44], and RT-DETR [41]. All models were evaluated using the same dataset and experimental setup to ensure a fair performance comparison. The original SegFormer, as the foundational model for this study, combines a hierarchical Transformer encoder with a lightweight all-MLP decoder, offering strong multi-scale feature extraction capabilities and efficient inference performance. Choosing SegFormer as the baseline helps assess the performance improvements brought by introducing the self-attention mechanism in the decoder stage. U-Net is a classic convolutional neural network architecture widely used in medical image processing and industrial inspection tasks. Its symmetric encoder-decoder structure with skip connections effectively recovers spatial details. However, since feature extraction relies primarily on local convolutional operations and lacks global context modeling, it often encounters performance bottlenecks in tasks involving complex object structures or requiring fine boundary segmentation. DeepLabV3+ is a widely used semantic segmentation network that integrates Atrous Spatial Pyramid Pooling (ASPP) with an encoder-decoder structure. By aggregating multi-scale contextual information, it effectively enhances the characterization of complex scenes and object boundaries. Mask2Former is a Transformer-based unified segmentation framework that leverages mask attention to jointly model semantic, instance, and panoptic segmentation, effectively capturing both global context and fine-grained boundary details. YOLOv11-seg is the latest high-performance instance segmentation architecture in the YOLO series, capable of accurately capturing edge details and multi-scale object features. It is suitable for tasks that require detailed analysis, such as local texture and structural analysis of wire ropes. RT-DETR, a Transformer-based real-time object detector, represents the state of the art in end-to-end object detection, offering both high accuracy and fast inference.

3. Results and Discussion

This section presents the experimental setup and results to evaluate the performance and robustness of the proposed SegFormer-based crane wire rope lifting ratio fault diagnosis method. The section is divided into two subsections: Segmentation Results and Analysis and Robustness Analysis. In the first subsection, we compare the segmentation results of the proposed method with the baseline models and present various evaluation metrics, such as mIoU, mPA, and aAcc. The segmentation accuracy of the models is also visualized using heatmaps. The second subsection tests the robustness of the model by simulating real-world complex scenarios through operations such as noise injection, adjustments to brightness and contrast, and random rotations and cropping. These operations are applied to the test images to evaluate the model’s performance under challenging conditions.

3.1. Segmentation Results and Analysis

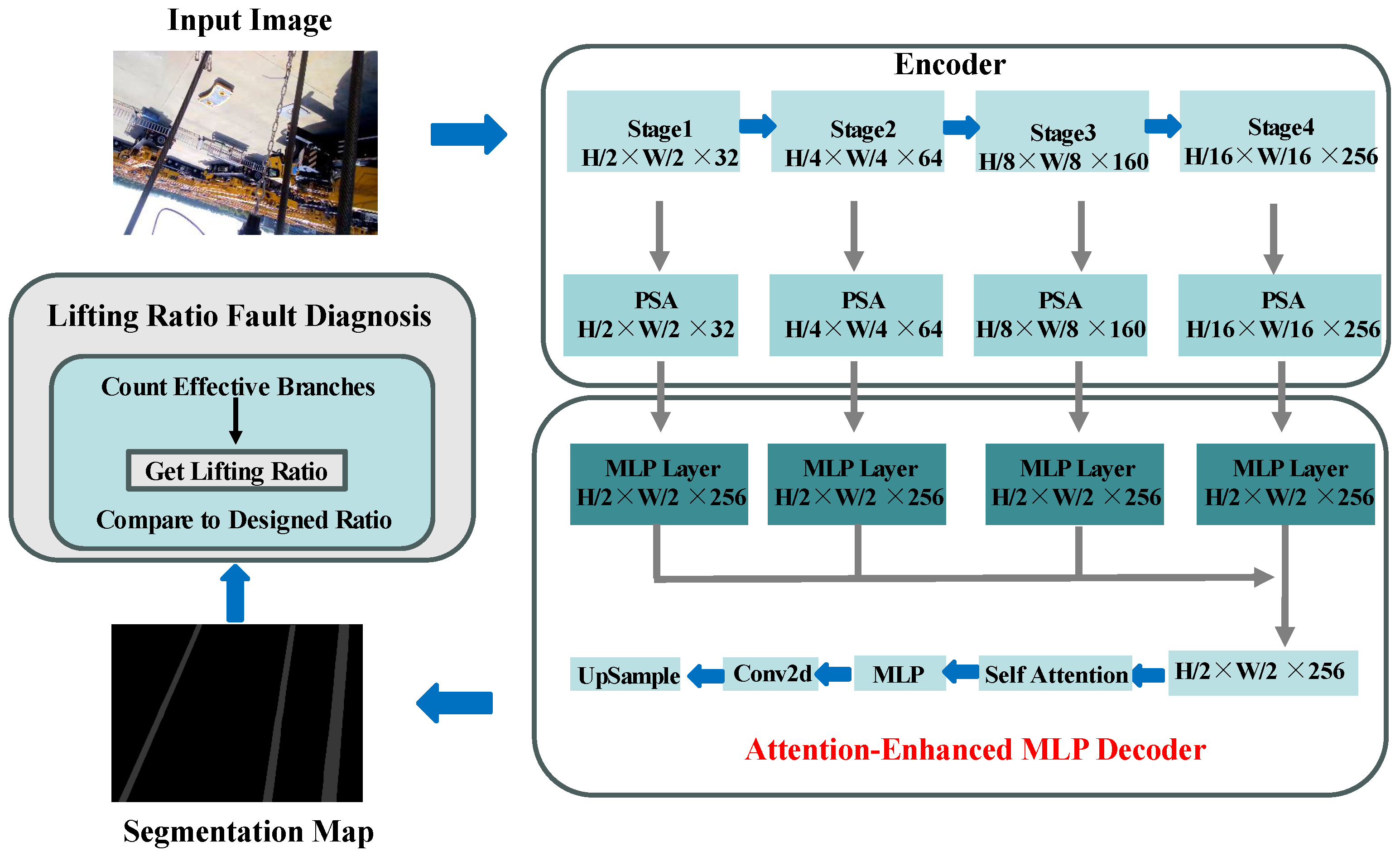

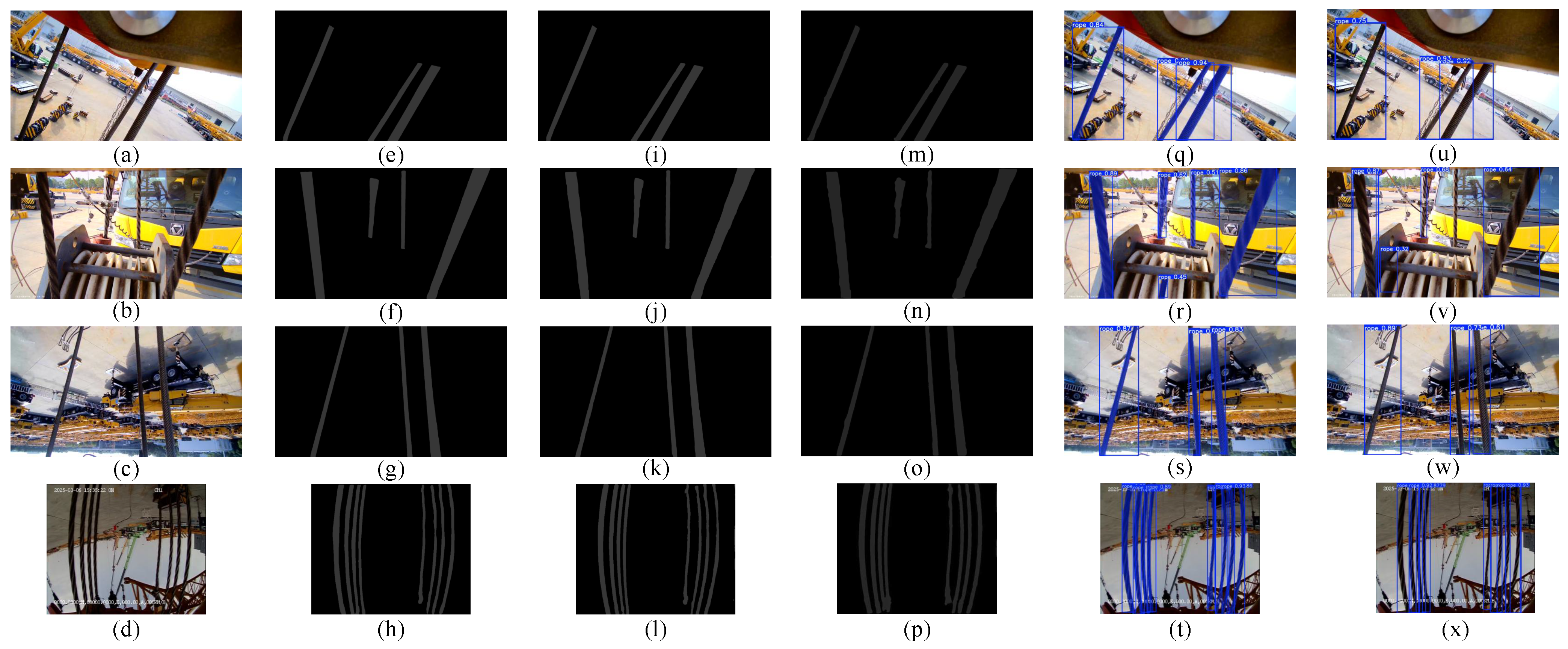

Figure 2 presents the training loss curve of the proposed SegFormer+AM method, which is provided to monitor the training behavior. As shown, the loss decreases rapidly in the initial stage and maintains a stable, low level thereafter. This profile indicates that the training procedure converges, with no evident sign of overfitting. Table 1 compares the performance of the evaluated models in the wire rope lifting ratio recognition task. The evaluation metrics include the following: Mean Intersection over Union (mIoU), which measures the overlap between the predicted and ground truth regions for each class and reflects the model’s ability to fit object boundaries, shapes, and sizes; Mean Pixel Accuracy (mPA), which assesses the average pixel-level classification accuracy across all classes; Average Accuracy (aAcc), representing the overall correctness of pixel-level predictions; Floating Point Operations (FLOPs), quantifying the computational complexity of the model by indicating the total number of floating-point operations executed; Predicted Time, indicating the time required for the model to generate predictions for a single image; Precision (P), which measures the proportion of correctly identified objects in the predictions; Mean Average Precision at IoU = 0.50 (mAP50), evaluating the average detection accuracy when the Intersection over Union (IoU) between the predicted and ground truth bounding boxes is ≥50%; and Mean Average Precision at IoU from 0.50 to 0.95 (mAP50-95), considering the average detection accuracy at 10 different IoU thresholds ranging from 0.50 to 0.95, providing a comprehensive view of the detection performance across various levels of localization strictness.
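The three pixel-level metrics can be computed from a confusion matrix, as in the sketch below. It follows the usual definitions (per-class IoU and per-class pixel accuracy averaged over classes, plus overall pixel accuracy) and is meant as an illustration rather than the evaluation code used in this study; the function names and the toy labels are hypothetical.

```python
def confusion_matrix(pred, truth, num_classes=2):
    """matrix[t][p] counts pixels of true class t predicted as class p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(pred, truth):
        matrix[t][p] += 1
    return matrix


def segmentation_metrics(matrix):
    """Return mIoU, mPA and aAcc from a square confusion matrix."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[c][c] for c in range(n))
    ious, accs = [], []
    for c in range(n):
        tp = matrix[c][c]
        gt = sum(matrix[c])                              # pixels truly in class c
        predicted = sum(matrix[r][c] for r in range(n))  # pixels predicted as c
        if gt == 0:
            continue  # skip classes absent from the ground truth
        ious.append(tp / (gt + predicted - tp))
        accs.append(tp / gt)
    return {
        "mIoU": sum(ious) / len(ious),
        "mPA": sum(accs) / len(accs),
        "aAcc": correct / total,
    }


if __name__ == "__main__":
    # Flattened toy labels: 0 = background, 1 = wire rope.
    truth = [0, 0, 0, 0, 1, 1, 1, 1]
    pred = [0, 0, 0, 1, 1, 1, 1, 0]
    print(segmentation_metrics(confusion_matrix(pred, truth)))
```

Because rope pixels occupy a small share of each image, aAcc is dominated by the background class, whereas mIoU and mPA weight the rope class equally with the background; this is why the rope IoU and mIoU are the more informative figures for this task.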

The accurate segmentation of wire ropes, as demonstrated by the high mIoU and visual results, is the critical first step toward reliable lifting ratio fault diagnosis. The practical impact of this capability lies in its direct application to prevent mechanical overloads. As established, an undetected low lifting ratio forces fewer rope segments to carry the intended load, leading to dangerous tensile overloading. Our method, by enabling precise counting of the effective rope segments, provides the necessary information to verify the actual load distribution against the crane’s design specifications. Identifying a discrepancy triggers a fault alert, allowing operators to halt the operation before an overload condition occurs. Therefore, the segmentation performance reported here directly translates into an enhanced capability to mitigate a key mechanical risk factor in crane operations.

To validate the effectiveness of the proposed method, we selected six representative semantic segmentation and object detection networks as baseline models for comparison: the original SegFormer [42], U-Net [32], DeepLabV3+ [43], Mask2Former [38], YOLOv11-seg [44], and RT-DETR [41]. This selection was made to ensure a comprehensive comparison across different architectural paradigms. In the experiments, SegFormer+AM (SegFormer with an integrated attention mechanism) achieved slight improvements over the original SegFormer on all accuracy metrics. Specifically, SegFormer+AM reached an mIoU of 93.31%, slightly higher than SegFormer’s 93.19%, while also showing marginal gains in mPA and aAcc, achieving 96.37% and 98.84%, respectively. In terms of computational complexity, SegFormer+AM recorded 50.73 G FLOPs, which is nearly identical to SegFormer’s 50.71 G, indicating that the introduction of the attention mechanism imposes minimal additional computational cost. Its inference time was 122.8 ms, slightly longer than SegFormer’s 121.5 ms, but still faster than U-Net’s 129.5 ms. By comparison, U-Net achieved a lower mIoU of 92.78%, while its computational cost reached 225.84 G FLOPs, resulting in lower computational efficiency. DeepLabV3+ demonstrated relatively stable performance across Rope IoU, mIoU, and aAcc, achieving 87.56%, 92.94%, and 98.51%, respectively. Its advantage mainly stems from the ASPP module, which provides multi-scale context modeling. Although its computational cost is lower at 35.69 G FLOPs, the inference time still reached 135.9 ms, making it slower than the lightweight SegFormer series. This indicates that DeepLabV3+ offers certain strengths in handling complex boundaries but still has limitations in inference efficiency. In contrast, Mask2Former achieved the best performance on nearly all semantic segmentation metrics, with a Rope IoU of 88.89%, mIoU of 94.30%, aAcc of 98.94%, and mPA of 97.62%, outperforming the other baseline methods. This demonstrates that its Transformer-based mask attention mechanism effectively integrates global context with local details, resulting in stronger recognition capability for fine-grained rope structures.
However, Mask2Former incurs much higher computational complexity and inference time than the other methods, with inference reaching 582.1 ms, which limits its use in real-time industrial inspection scenarios. Among the detection-based methods, YOLOv11-seg shows outstanding inference speed at only 29.3 ms, while also achieving a high mAP50 of 99.05%. However, its mAP50-95 was only 76.16%, indicating limited robustness for multi-scale fine-structure recognition. RT-DETR achieves moderate performance in both accuracy and speed, with an mAP50 of 90.02% and an inference time of 55.2 ms. Overall, although YOLOv11-seg has a significant advantage in real-time performance, its accuracy under stricter IoU thresholds is limited.

In terms of quantitative results, the proposed SegFormer+AM achieves consistent improvements in mIoU, mPA, and aAcc over the original SegFormer [42], while maintaining a nearly identical computational cost and inference time. This demonstrates that the introduced attention mechanism enhances performance without a significant computational burden. A critical advantage of our method is its superior trade-off between accuracy and efficiency, as summarized in Table 1. It shows clear advantages over the computationally intensive U-Net [32], achieving higher accuracy with less than a quarter of the FLOPs. While DeepLabV3+ [43] excels in boundary modeling, our model offers a better balance for efficient industrial deployment. Compared to the highly accurate Mask2Former [38], SegFormer+AM delivers performance sufficient for industrial tasks at a fraction of the computational cost and is over 4.5 times faster, making it far more suitable for real-time applications. Against detection-based methods, YOLOv11-seg [44] exhibits superior inference speed but lacks the fine-grained, pixel-level segmentation essential for accurately determining the wire rope’s spatial path, and RT-DETR [41] offers only a moderate balance of accuracy and speed. Therefore, the proposed enhancement provides a practical solution, elevating the segmentation performance of an efficient base architecture without the substantial penalties of larger, more complex models.
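The efficiency claims in this comparison can be checked with a few lines of arithmetic. The sketch below uses only the FLOPs and inference times quoted in the text; the variable names are illustrative.

```python
# FLOPs (in G) and inference times (in ms) as quoted in the text.
flops_g = {"SegFormer+AM": 50.73, "SegFormer": 50.71, "U-Net": 225.84}
time_ms = {"SegFormer+AM": 122.8, "SegFormer": 121.5, "Mask2Former": 582.1}

# Share of U-Net's FLOPs needed by SegFormer+AM (claim: less than a quarter).
flops_share_of_unet = flops_g["SegFormer+AM"] / flops_g["U-Net"]

# Speed-up over Mask2Former (claim: over 4.5 times faster).
speedup_vs_mask2former = time_ms["Mask2Former"] / time_ms["SegFormer+AM"]

# Relative FLOPs increase caused by the added attention module.
extra_flops_vs_segformer = flops_g["SegFormer+AM"] / flops_g["SegFormer"] - 1

print(f"FLOPs share of U-Net:    {flops_share_of_unet:.3f}")
print(f"Speed-up vs Mask2Former: {speedup_vs_mask2former:.2f}x")
print(f"Extra FLOPs vs SegFormer: {extra_flops_vs_segformer:.4%}")
```

The ratios come out at roughly 0.22 and 4.7, consistent with the statements above, and the FLOPs increase from the attention module stays well below 0.1%.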

To further evaluate the ability of SegFormer+AM to capture fine-grained structures, we analyzed its confusion matrix, as shown in Table 2. Expressed as shares of all test pixels, 90.47% were correctly classified as background and 8.37% were correctly classified as rope, which together give the overall pixel accuracy of 98.84%. The misclassification shares were low: 0.56% of all pixels were background misclassified as rope, and 0.59% were rope misclassified as background. These results indicate that the introduced attention mechanism effectively strengthens the model’s capacity for fine-grained structure recognition while maintaining robust background identification. Consequently, SegFormer+AM improves the precision and continuity of segmentation boundaries, enhancing fine-grained target recognition without a meaningful increase in computational overhead.
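To make the link between the confusion matrix and the reported metrics explicit, the sketch below recomputes aAcc, mIoU, and mPA from the four percentages quoted above. It reads them as shares of all pixels, which is an interpretation based on the fact that they sum to roughly 100%; the function name `segmentation_metrics` is illustrative.

```python
# Rows: ground-truth class, columns: predicted class.
# Entries are percentages of all pixels, in the order (background, rope).
CONF = [
    [90.47, 0.56],  # true background: predicted background / predicted rope
    [0.59, 8.37],   # true rope:       predicted background / predicted rope
]


def segmentation_metrics(conf):
    """Compute aAcc, mIoU, mPA and per-class IoU from a square confusion matrix."""
    n = len(conf)
    total = sum(sum(row) for row in conf)
    tp = [conf[i][i] for i in range(n)]
    gt = [sum(conf[i]) for i in range(n)]                          # pixels per true class
    pred = [sum(conf[r][i] for r in range(n)) for i in range(n)]   # pixels per predicted class
    iou = [tp[i] / (gt[i] + pred[i] - tp[i]) for i in range(n)]
    acc = [tp[i] / gt[i] for i in range(n)]
    return {
        "aAcc": sum(tp) / total,
        "mIoU": sum(iou) / n,
        "mPA": sum(acc) / n,
        "IoU": iou,
    }


m = segmentation_metrics(CONF)
print({k: v for k, v in m.items() if k != "IoU"})
```

The recomputed values agree with the reported 98.84% aAcc, 93.31% mIoU, and 96.37% mPA to within rounding of the table entries.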

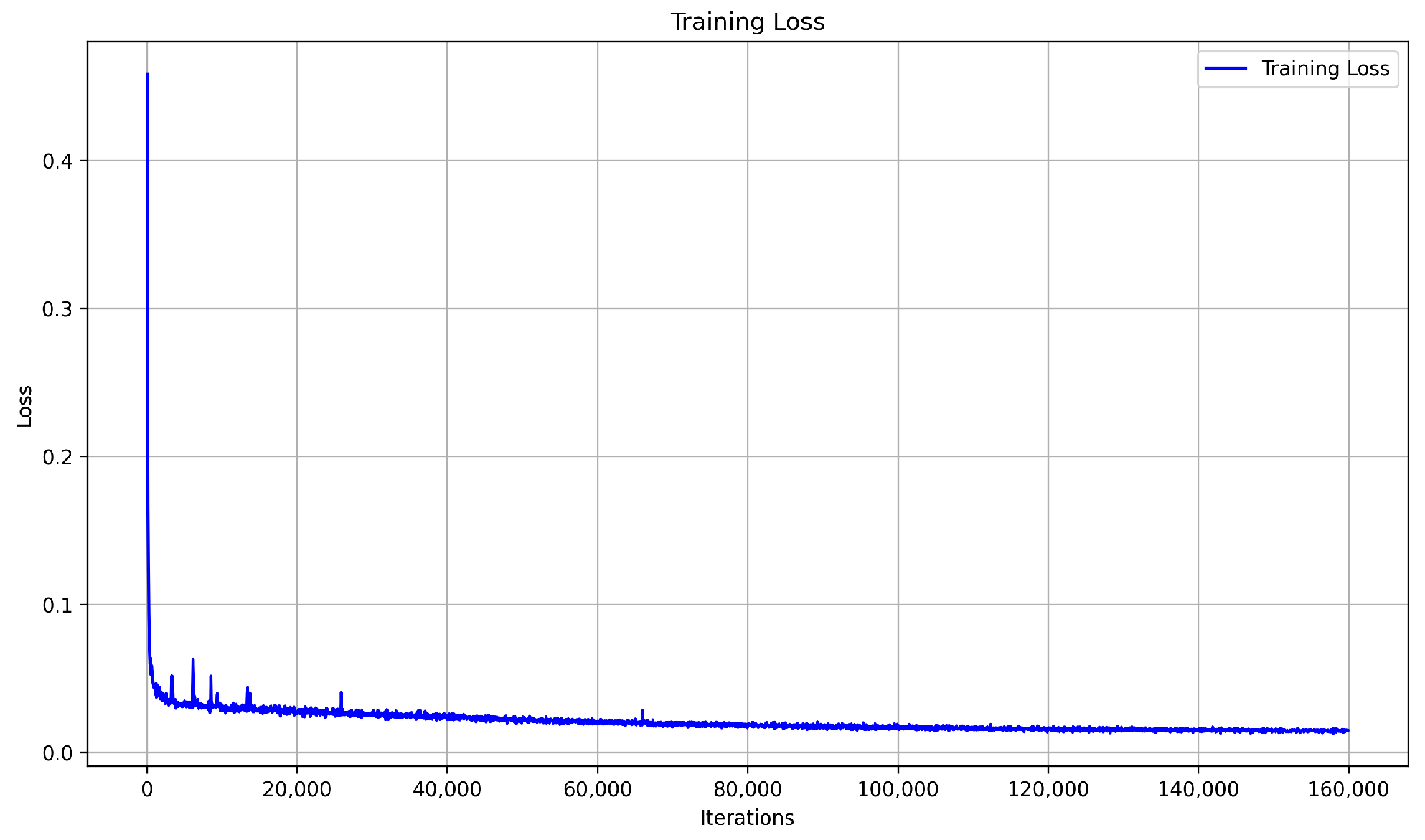

Furthermore, four specific scenarios are selected to compare the segmentation results of different methods, as shown in Figure 3. From the visual results, it is evident that SegFormer+AM (Figure 3e–h) achieves the clearest segmentation boundaries and the best continuity in key structural regions of the image, such as wire rope winding areas, pulley edges, and hook intersections. The predicted regions closely align with the actual physical structures. This demonstrates that, building on the multi-scale feature extraction of the original SegFormer [42] encoder, the introduced self-attention mechanism further enhances global modeling capabilities, enabling more precise differentiation of complex regions during the decoding stage. In contrast, the original SegFormer [42] (Figure 3i–l) delivers relatively accurate overall structure recognition but still suffers from local misjudgments in fine details, such as blurred wire rope edges and indistinct connections at winding points. This suggests that its pure MLP-based decoder lacks spatial context sensitivity, making it less effective in handling areas with adjacent boundaries or dense textures. U-Net [32] (Figure 3m–p) performs the worst, exhibiting broken structures, inaccurate boundaries, and severe background interference. While U-Net [32] still retains some advantages in low-level feature extraction, its convolution-based local receptive field limits its ability to capture global semantic relationships. This results in unstable performance, especially in cases with multiple windings, complex backgrounds, or similar lifting ratios. YOLOv11-seg [44] and RT-DETR [41], as integrated detection and segmentation methods, show good accuracy in locating wire ropes and can quickly identify their general positions. While suitable for fast detection, their segmentation precision remains inferior to our method for these slender targets.

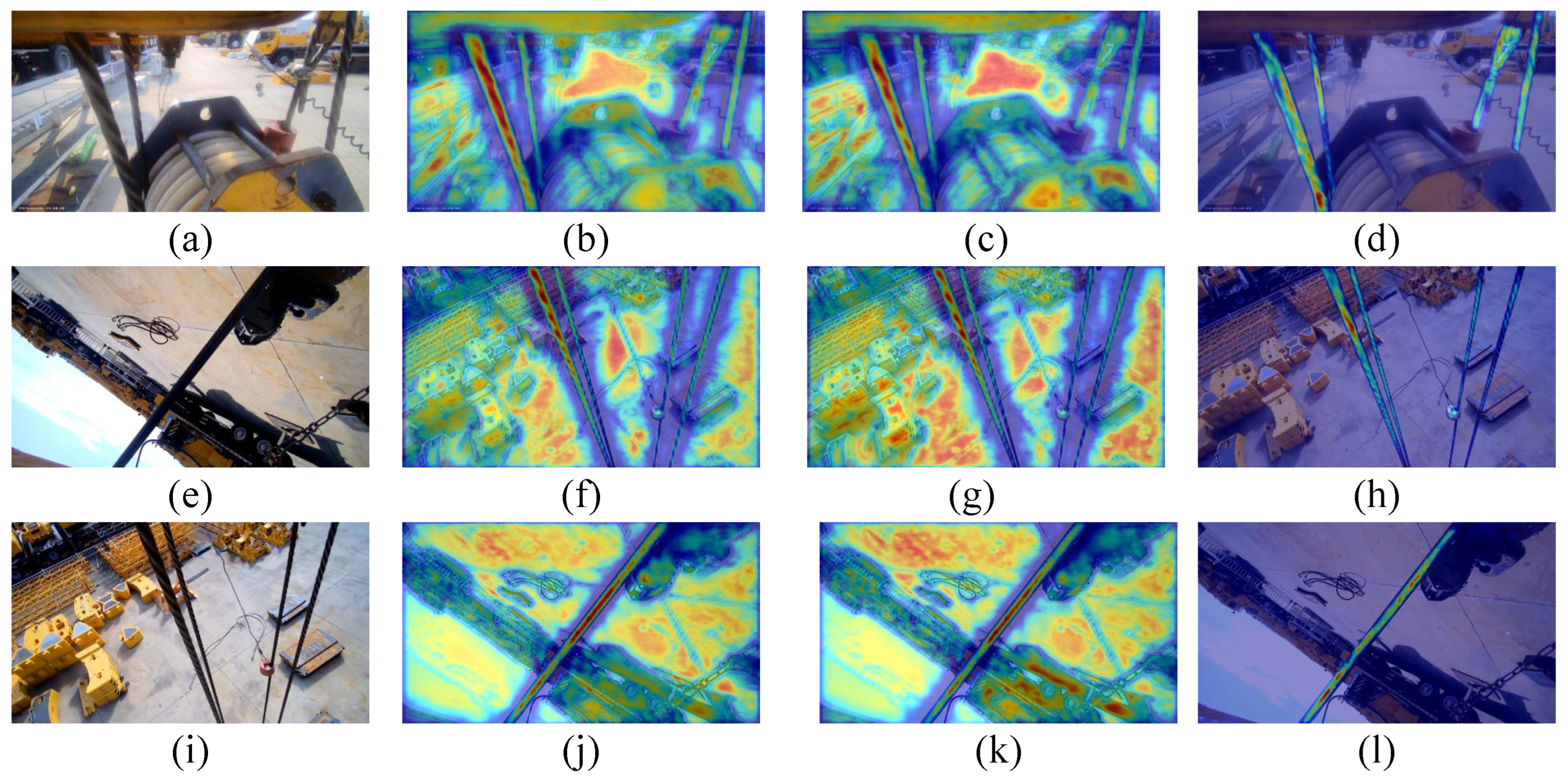

To provide analytical insights into the functional efficacy of the proposed attention mechanism, we employ Grad-CAM to generate visual heatmaps for SegFormer+AM, SegFormer [42], and U-Net [32], as shown in Figure 4, where the red-to-blue gradient reflects the strength of feature activation across different regions. The results reveal a clear progression: U-Net’s [32] responses are primarily confined to local wire rope areas, capturing salient features but demonstrating limited global context utilization. In contrast, SegFormer [42] exhibits stronger global context modeling, maintaining high activation on the ropes while integrating broader contextual information. Notably, SegFormer+AM, enhanced with the decoder self-attention mechanism, shows the most favorable characteristics: its activations are not only stronger and more precisely confined to the wire rope structures but also demonstrate enhanced continuity along the entire rope path. This indicates a refined ability to suppress irrelevant background features and capture long-range dependencies, supporting the conclusion that the added self-attention module improves feature discrimination, spatial coherence, and target localization, particularly in challenging scenarios with overlapping ropes or complex backgrounds.

3.2. Ablation Experiment

To further validate the effectiveness of the attention mechanism design in the proposed method, we conducted ablation experiments that systematically analyzed the impact of varying the number of decoder self-attention heads as well as the effects of inserting the self-attention mechanism in the encoder and decoder. The experimental results are presented in Table 3. Since the complete training process is computationally expensive, the number of iterations for this set of ablation experiments was uniformly set to 4000 to ensure comparability under a reasonable computational cost.

The results show that introducing a self-attention mechanism in either the encoder or the decoder can improve the segmentation performance, with the encoder-side insertion yielding a more significant improvement of 0.41% compared to the baseline model. This indicates that incorporating global modeling capability at the feature extraction stage enhances the ability to distinguish wire rope targets from complex backgrounds, thereby achieving more accurate segmentation boundaries. Meanwhile, we further investigated the effect of the number of decoder self-attention heads. The experiments demonstrate that multi-head attention improves the multi-scale modeling capability of features to some extent, with the best performance observed when the number of heads is set to eight. In this case, the model achieved an additional improvement of 0.12% over the encoder-only attention configuration. These results suggest that properly configuring the number of decoder self-attention heads can further enhance segmentation performance while keeping the model size and computational cost at a reasonable level.

Although the absolute performance gains observed in our ablation study may appear modest, it is important to consider the context. The baseline SegFormer model already represents a strong, high-performance benchmark. In such high-performance regimes, achieving any further consistent improvement is challenging and often signifies a meaningful enhancement. To rigorously evaluate our results, we conducted a statistical significance test comparing our model (Encoder + Decoder Attention) with the baseline. The results confirm that the observed improvements are statistically significant (p < 0.05), indicating that they are unlikely to be due to random chance. Furthermore, this enhancement is achieved with negligible computational overhead, as evidenced by the minimal increase in FLOPs (from 50.71 G to 50.73 G). This demonstrates that the self-attention mechanism introduced in the decoder is an effective modification, yielding a performance boost at almost no additional cost.
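The text does not state which significance test was used. As one possible way to run such a comparison, the sketch below applies an exact paired sign-flip permutation test to per-image IoU scores of two models. The scores are made-up placeholder values chosen only so that the example runs; they are not measurements from the paper, and the function name `paired_permutation_test` is hypothetical.

```python
from itertools import product


def paired_permutation_test(a, b):
    """Exact two-sided sign-flip permutation test on paired differences."""
    diffs = [x - y for x, y in zip(a, b)]
    observed = abs(sum(diffs))
    hits = total = 0
    # Under the null hypothesis each paired difference is equally likely
    # to have either sign, so enumerate every sign assignment.
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        stat = abs(sum(s * d for s, d in zip(signs, diffs)))
        if stat >= observed - 1e-12:
            hits += 1
    return hits / total


# Placeholder per-image IoU scores (NOT data from the paper).
baseline = [0.921, 0.935, 0.928, 0.940, 0.915, 0.931, 0.926, 0.938]
proposed = [0.924, 0.937, 0.930, 0.941, 0.919, 0.933, 0.929, 0.940]

p_value = paired_permutation_test(proposed, baseline)
print(p_value)
```

A paired test fits this setting because both models are evaluated on the same images, so small but consistent per-image gains can be detected even when the mean difference is modest.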

3.3. Robustness Analysis

In crane operational environments, images captured by cameras are often affected by factors such as crane arm movement, object occlusion, and lighting variations, posing significant challenges for wire rope segmentation. To verify the robustness of the proposed SegFormer+AM model in complex scenarios, two types of tests were conducted. First, data augmentation was applied to the test dataset during evaluation to simulate aleatoric uncertainty, such as variations in illumination and noise. Second, cross-scene testing was performed using out-of-distribution test datasets to examine the model’s performance under epistemic uncertainty.

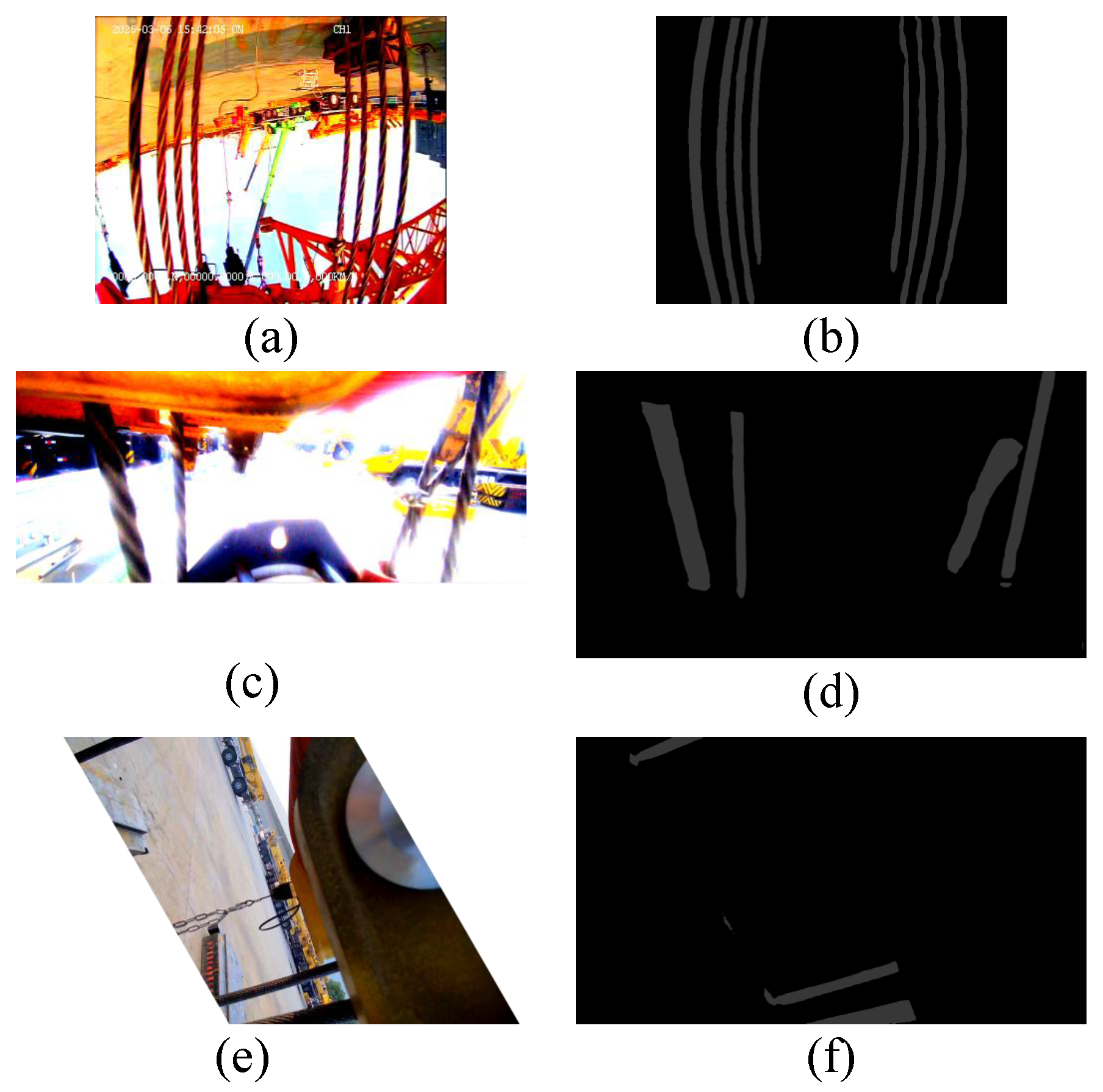

Three types of typical perturbations were introduced in the test data: (1) random noise injection to simulate sensor jitter or interference during image acquisition; (2) brightness and contrast adjustments to emulate visual differences caused by varying external illumination; and (3) random rotation and cropping to mimic changes in camera angles or partial field-of-view loss. As shown in Figure 5, even under these uncertain conditions, SegFormer+AM accurately identifies and segments the overall wire rope structure, maintaining high integrity and continuity in the segmentation results. This demonstrates the model’s strong robustness and generalization capability across different environmental disturbances. Further analysis indicates that noise injection and lighting variations slightly affect background regions, but the model still emphasizes wire rope areas while suppressing irrelevant information. Under random rotation and cropping, although some rope shapes undergo noticeable changes, the model continues to effectively capture and segment the target. Overall, the robustness of SegFormer+AM in complex dynamic environments highlights its potential for practical applications in crane wire rope condition monitoring and safety assurance.
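The three perturbation types can be sketched in a dependency-free form as follows. This is a minimal illustration on a grayscale image stored as nested lists, under stated assumptions: the noise is Gaussian, contrast is scaled around mid-gray, and rotation is restricted to multiples of 90° for simplicity. The paper does not give its exact augmentation parameters, so all function names and values here are hypothetical.

```python
import random


def clamp(v):
    """Round and clip a value to the valid 8-bit range."""
    return max(0, min(255, int(round(v))))


def add_noise(img, sigma, rng):
    """(1) Additive Gaussian noise with standard deviation sigma."""
    return [[clamp(p + rng.gauss(0, sigma)) for p in row] for row in img]


def adjust_brightness_contrast(img, alpha, beta):
    """(2) alpha scales contrast around mid-gray 128, beta shifts brightness."""
    return [[clamp(alpha * (p - 128) + 128 + beta) for p in row] for row in img]


def rotate90(img, k):
    """(3a) Rotate clockwise by k * 90 degrees."""
    for _ in range(k % 4):
        img = [list(row) for row in zip(*img[::-1])]
    return img


def random_crop(img, out_h, out_w, rng):
    """(3b) Crop a random out_h x out_w window."""
    top = rng.randint(0, len(img) - out_h)
    left = rng.randint(0, len(img[0]) - out_w)
    return [row[left:left + out_w] for row in img[top:top + out_h]]


rng = random.Random(0)
img = [[(x * 16 + y * 8) % 256 for x in range(8)] for y in range(6)]  # toy 6 x 8 image

noisy = add_noise(img, sigma=10.0, rng=rng)
relit = adjust_brightness_contrast(img, alpha=1.2, beta=-20)
view = random_crop(rotate90(img, rng.randint(0, 3)), 4, 4, rng)
```

When such perturbations are applied to a segmentation test set, geometric transforms (rotation, cropping) must be applied identically to the ground-truth masks, whereas photometric ones (noise, brightness, contrast) leave the masks unchanged.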

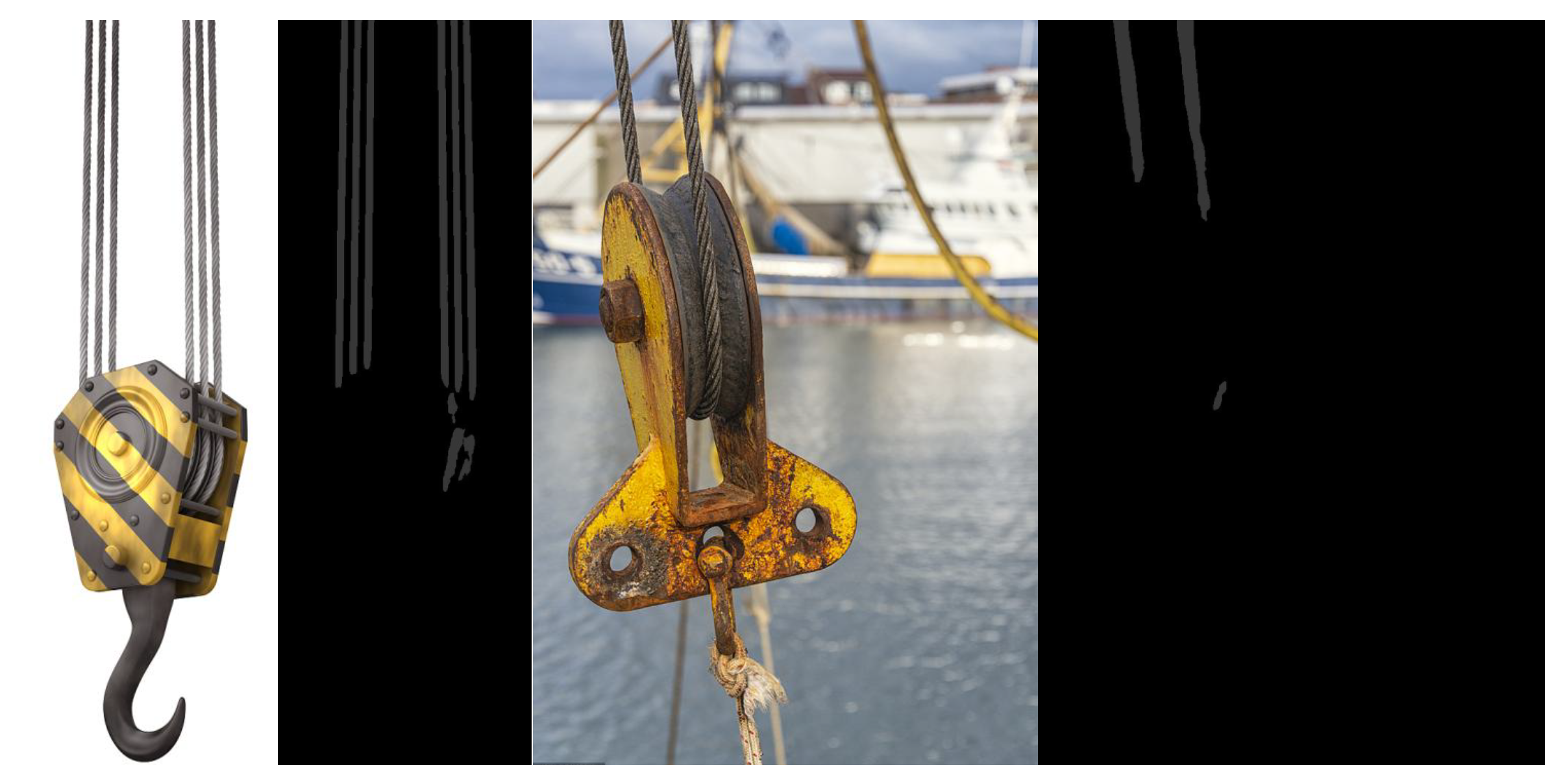

To further validate the generalization of the model in different deployment environments, we conduct cross-scene robustness tests. The test images are collected from different types of cranes (e.g., bridge and gantry cranes) and various work sites (indoor/outdoor with different lighting and background conditions), where the wire ropes exhibit significant variations in appearance, winding patterns, and background complexity. As shown in Figure 6, the trained SegFormer+AM model is directly applied to these images, which are not included in the training set. The results demonstrate that the model accurately segments the wire ropes, producing clear boundaries and maintaining structural integrity, even under complex backgrounds and diverse wire rope configurations. This cross-scene evaluation indicates that SegFormer+AM is not only resilient to artificially introduced perturbations but also generalizes well to real-world operating conditions, which supports its practical use in wire rope condition monitoring and safety assurance.

3.4. Uncertainty Study

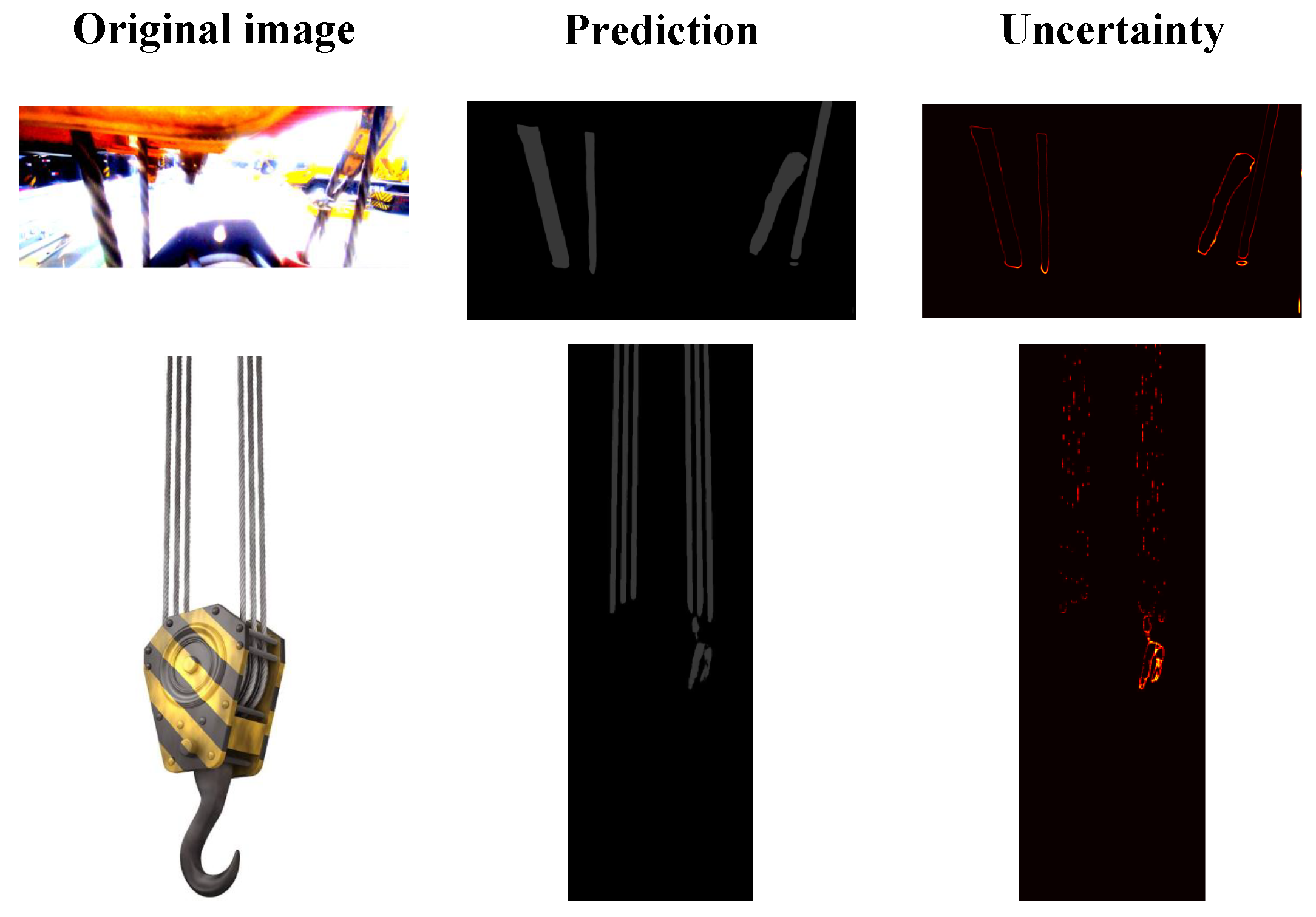

To quantify and visualize the predictive uncertainty of the model, we adopted the Monte Carlo Dropout method. During inference, this approach keeps the Dropout layers active, performs multiple stochastic forward passes, and aggregates the resulting predictions into uncertainty maps. The prediction results are shown in Figure 7, where the brightness of the color represents the level of uncertainty. As observed, the uncertainty is mainly concentrated around the edges of the wire ropes. This phenomenon may be attributed to the inherent ambiguity of rope boundaries and the variability of fine-grained features across different Dropout samples. Overall, the model is able to maintain satisfactory segmentation performance when faced with aleatoric and epistemic uncertainty. However, the uncertainty analysis also reveals that object boundaries remain regions of relatively high uncertainty, which provides clear directions for future improvement, such as leveraging ensemble learning, designing more refined loss functions, or incorporating edge detection algorithms to further enhance model performance.
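The sampling-and-aggregation logic of Monte Carlo Dropout can be sketched as below. The segmentation network is replaced by a toy per-pixel logistic model, so only the procedure (dropout kept active at inference, several stochastic passes, per-pixel mean and variance) corresponds to the method described above. The weights, features, number of passes, and the use of variance as the uncertainty score are assumptions for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def stochastic_forward(features, weights, bias, drop_p, rng):
    """One forward pass with dropout kept active on the input features."""
    keep = 1.0 - drop_p
    z = bias
    for f, w in zip(features, weights):
        if rng.random() < keep:      # this unit survives the current pass
            z += (f / keep) * w      # inverted-dropout rescaling
    return sigmoid(z)


def mc_dropout(features, weights, bias, rng, drop_p=0.5, passes=50):
    """Mean rope probability and its variance over repeated stochastic passes."""
    probs = [stochastic_forward(features, weights, bias, drop_p, rng)
             for _ in range(passes)]
    mean = sum(probs) / passes
    variance = sum((p - mean) ** 2 for p in probs) / passes
    return mean, variance


# Toy inputs (hypothetical): an interior rope pixel with consistent evidence
# and a boundary pixel whose features disagree with each other.
weights = [1.0, 1.0, 1.0, 1.0]
bias = 0.0
pixels = {
    "interior": [3.0, 3.0, 3.0, 3.0],
    "boundary": [3.0, -3.0, 3.0, -3.0],
}

rng = random.Random(0)
stats = {name: mc_dropout(f, weights, bias, rng) for name, f in pixels.items()}
for name, (mean, variance) in stats.items():
    print(name, round(mean, 3), round(variance, 3))
```

In this toy setting the boundary pixel shows the larger variance, mirroring the observation that uncertainty concentrates along rope edges.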

4. Conclusions

For the automatic detection of lifting ratio faults in wire ropes under complex industrial environments, this paper proposes a SegFormer-based method for wire rope lifting ratio detection. Existing CNN models have limited receptive fields and struggle to capture global structural information, while object detection methods typically output coarse boundaries that cannot meet the requirements of fine-grained segmentation. By introducing a self-attention module in the decoder of the SegFormer architecture, the model enhances its ability to capture global contextual information while maintaining spatial sensitivity, thereby improving wire rope segmentation accuracy under various crane configurations. To validate the effectiveness of the proposed method, we construct a wire rope image dataset covering multiple lifting ratio states and conduct extensive experiments. The results show that the method achieves 93.31% mIoU, 96.37% mPA, and 98.84% aAcc, outperforming baseline models including the original SegFormer, U-Net, YOLOv11-seg, and RT-DETR. Notably, the model demonstrates strong robustness in complex crane operating environments, consistently producing accurate segmentation even under noise, illumination changes, occlusion, and viewpoint shifts. This approach offers a promising solution for automated intelligent monitoring of lifting equipment, with potential applications in engineering safety, maintenance optimization, and visual inspection. At the same time, we note that under extreme low-light conditions, severe blur, or highly similar background textures, local mis-segmentation may occur. Future work will explore integrating multimodal information, such as depth or infrared data, to enhance feature representation, adopting semi-supervised learning to reduce dependence on large-scale annotations, and applying lightweight optimization to improve deployment performance on embedded platforms.
In addition, we will continue to collect crane images from industrial production and expand our dataset to support subsequent model training and performance testing.