Robust Direct Multi-Camera SLAM in Challenging Scenarios

Abstract

1. Introduction

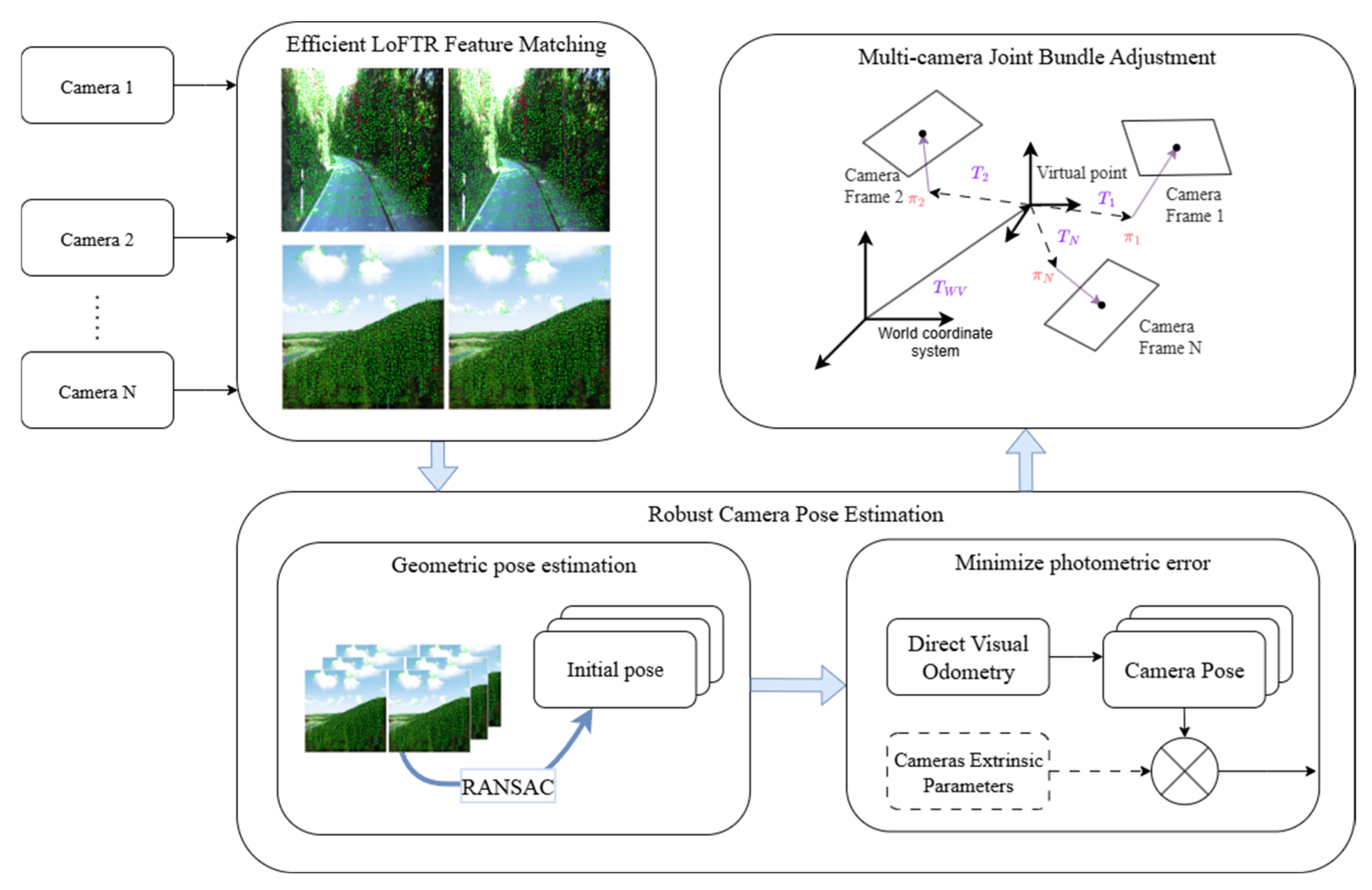

- A multi-camera visual SLAM framework based on direct methods is designed and implemented to maintain high localization accuracy and robustness in weakly textured and unstructured environments, where visual information is degraded.

- A robust visual front-end that combines deep learning with the direct method is proposed. It first uses the semi-dense matching of Efficient LoFTR to robustly estimate the initial inter-frame pose. This avoids the initialization failures that traditional direct methods suffer under non-smooth motion or weak texture, which arise because those methods rely on a constant-velocity motion model. The accurate initialization then guides the photometric error optimization of the direct method, yielding reliable and accurate camera pose estimates even in challenging scenarios.

- A multi-camera joint optimization back-end is designed in which the inter-frame photometric error constraints and the inter-camera rigid geometric consistency constraints are tightly coupled. By effectively exploiting redundant observations from multiple cameras, the system refines poses online and further improves overall localization accuracy.

- Extensive experiments on public datasets and a self-constructed simulation dataset demonstrate the superior robustness and localization accuracy of the proposed method.
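The front-end contribution states that Efficient LoFTR matches are used to estimate an initial inter-frame pose, but it does not say how. Because SVD and RANSAC appear in the abbreviation list, one plausible realization is sketched below under explicit assumptions. The matches are taken to be already lifted to 3D-3D correspondences (for example, using depths available in both frames), and a RANSAC loop around SVD-based (Kabsch) rigid alignment rejects mismatches. The functions `kabsch` and `ransac_rigid`, the 3-point minimal sample, the 0.05 inlier threshold and the synthetic data are illustrative choices, not the paper's implementation. A 2D-3D PnP formulation would be an equally reasonable alternative.

```python
import numpy as np

# Illustrative sketch only: function names, thresholds and the 3D-3D setting are assumptions.


def kabsch(src: np.ndarray, dst: np.ndarray):
    """Least-squares rigid transform (R, t) such that dst ≈ src @ R.T + t, solved with SVD."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)  # 3x3 cross-covariance of the centred point sets
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0  # avoid returning a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_d - R @ mu_s
    return R, t


def ransac_rigid(src, dst, iters=200, thresh=0.05, seed=0):
    """Hypothetical RANSAC wrapper: fit 3-point samples, keep the largest inlier set, refit."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), size=3, replace=False)
        R, t = kabsch(src[idx], dst[idx])
        inliers = np.linalg.norm(src @ R.T + t - dst, axis=1) < thresh
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 3:
        raise ValueError("too few inliers for a rigid fit")
    R, t = kabsch(src[best], dst[best])  # final least-squares fit on all inliers
    return R, t, best


# Synthetic check: 100 correspondences, the first 20 corrupted to mimic gross mismatches.
rng = np.random.default_rng(42)
src = rng.uniform(-5.0, 5.0, size=(100, 3))
a = np.deg2rad(10.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
t_true = np.array([0.5, -0.2, 1.0])
dst = src @ R_true.T + t_true
dst[:20] += rng.uniform(-2.0, 2.0, size=(20, 3))
R_est, t_est, inliers = ransac_rigid(src, dst)
```

In a pipeline of this kind, the recovered (R, t) would only seed the photometric optimization of the direct method rather than replace it, which matches the role the contribution assigns to the learned matches.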

2. Related Work

2.1. Multi-Camera Visual SLAM

2.2. Deep Feature Matching

3. Materials and Methods

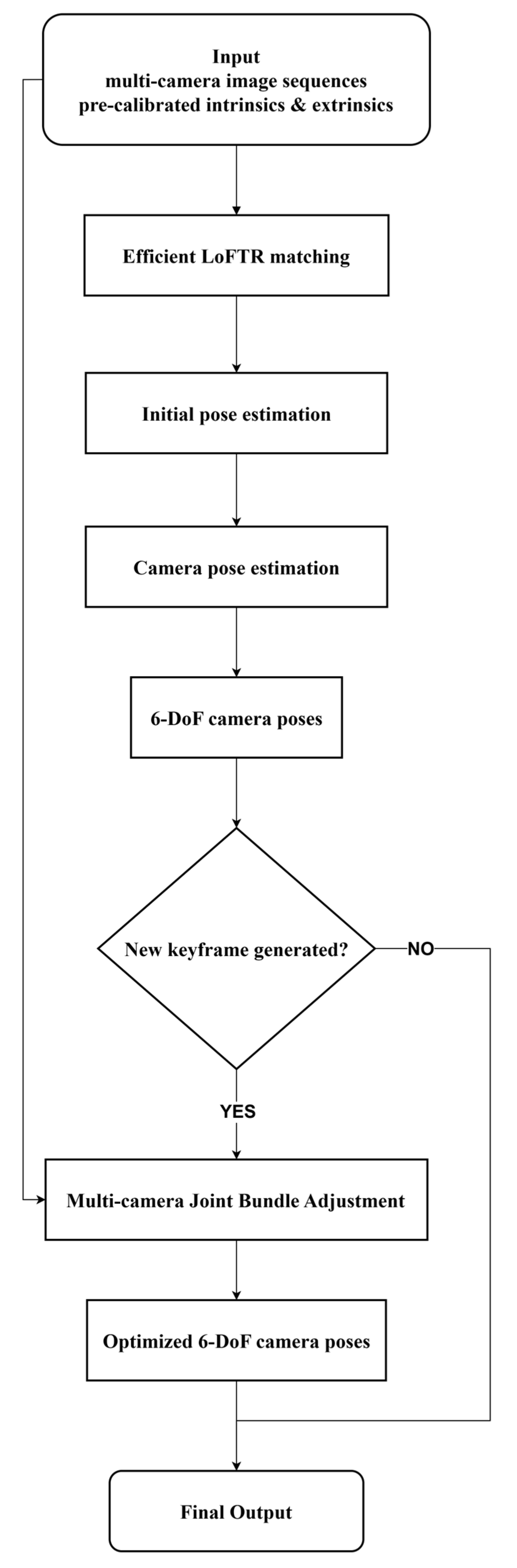

3.1. System Overview

3.2. Robust Camera Pose Estimation

3.3. Multi-Camera Joint Bundle Adjustment

4. Results and Discussion

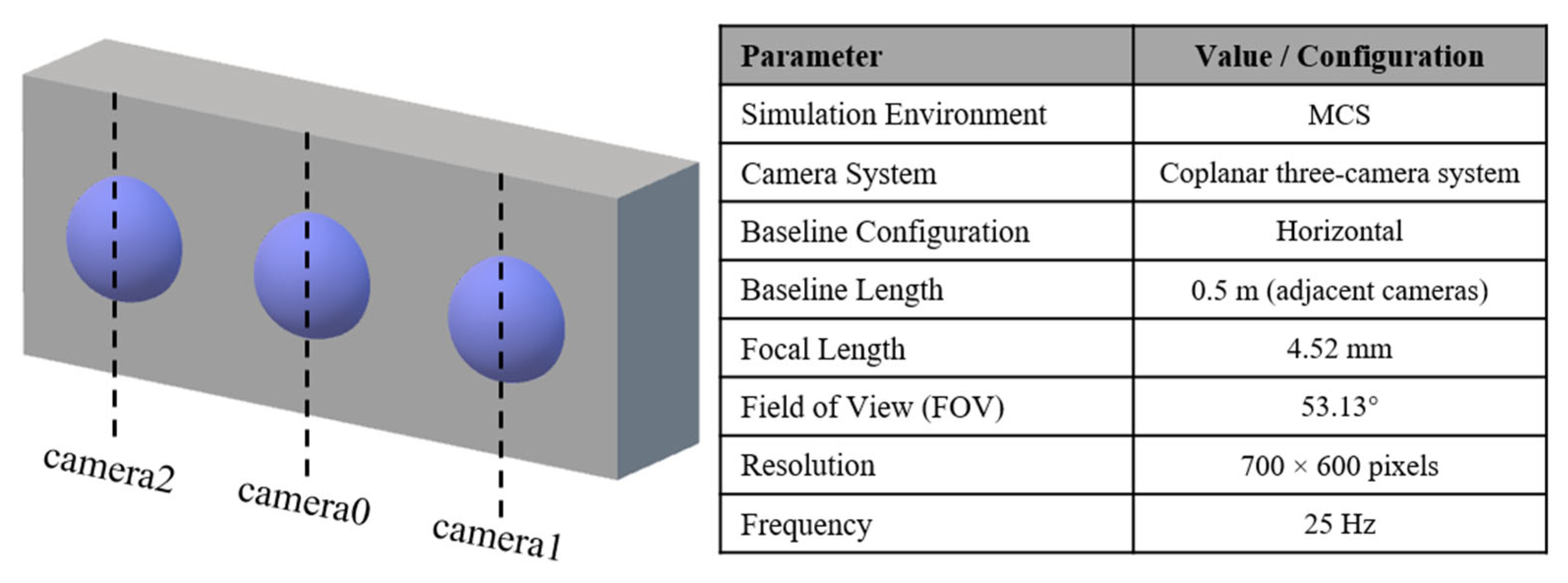

4.1. Datasets

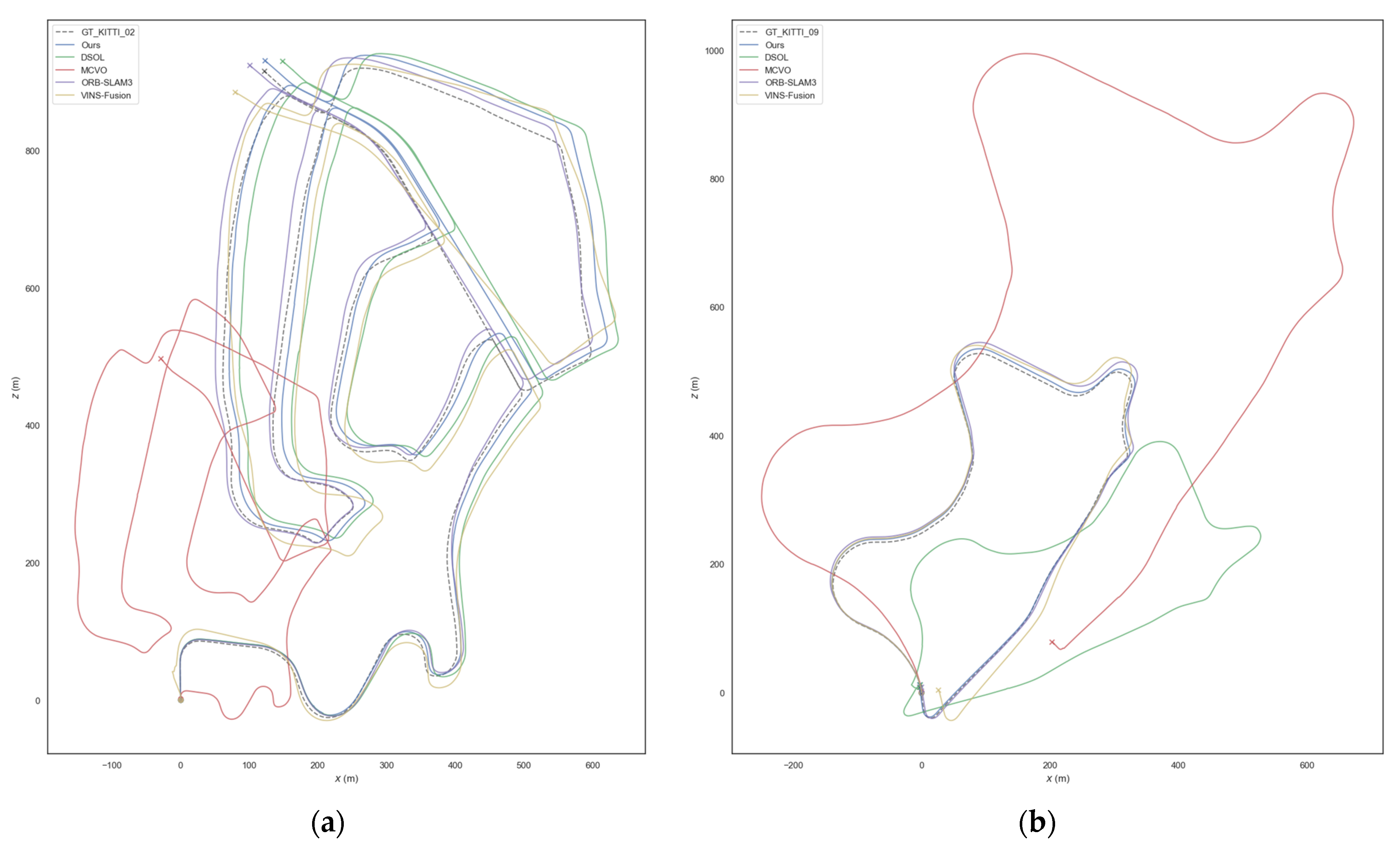

- KITTI Odometry


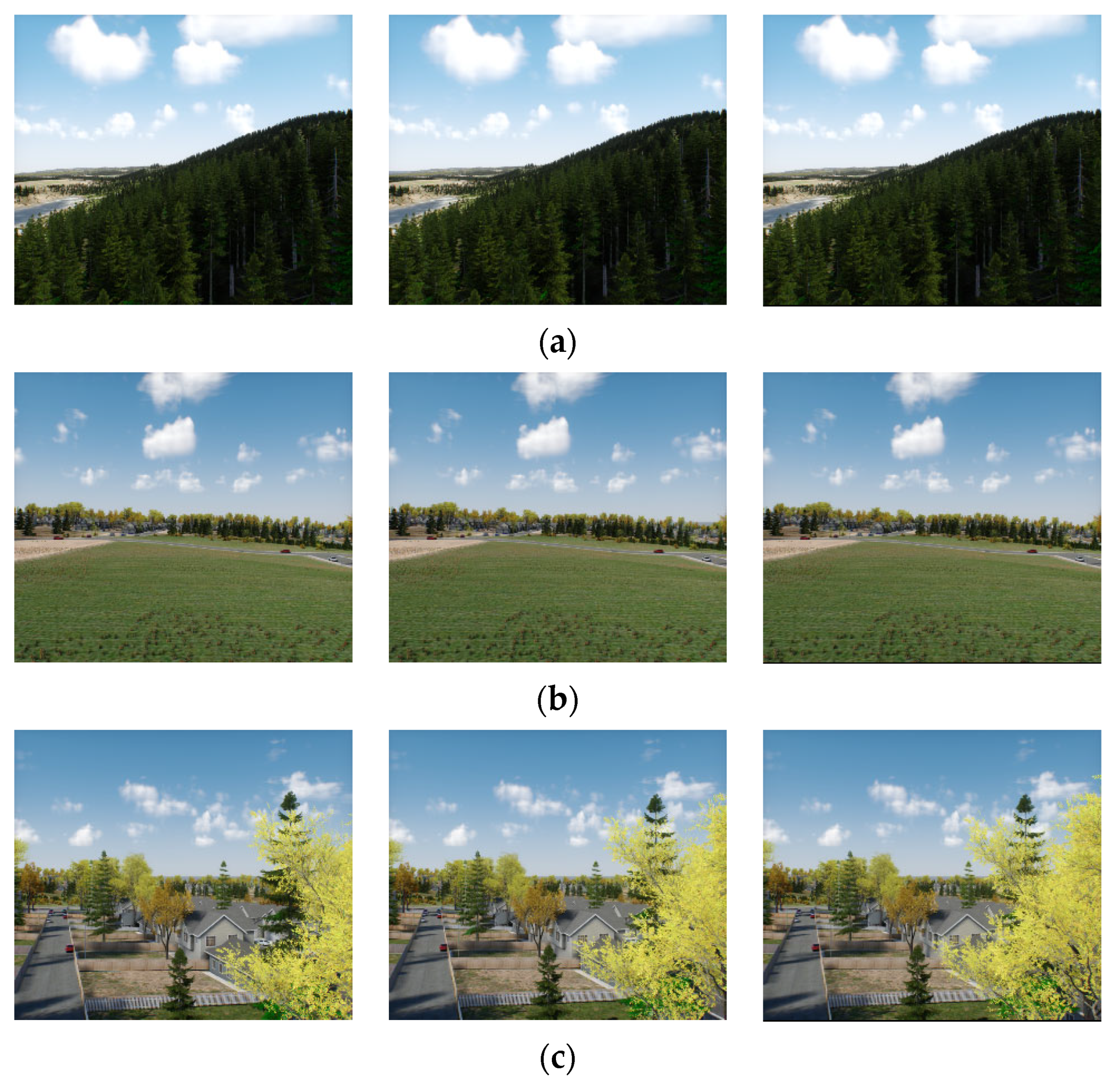

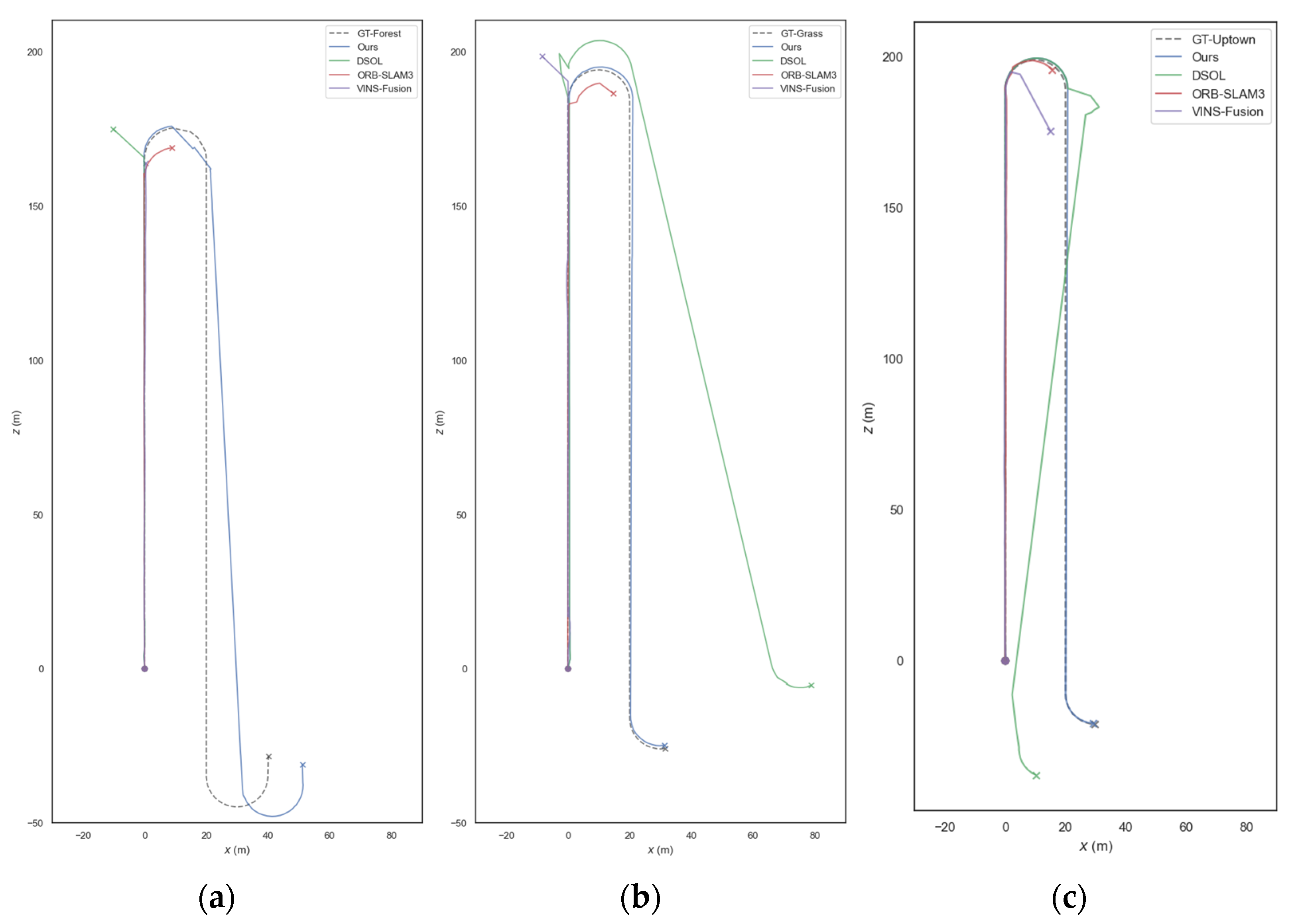

- MCSData

- Forest: dense vegetation coverage with large unstructured and visually similar regions;

- Grass: open areas with sparse low vegetation and limited ground texture features;

- Uptown: buildings, roads, and scattered vegetation, offering some structural features but also large homogeneous regions (e.g., walls and road surfaces).

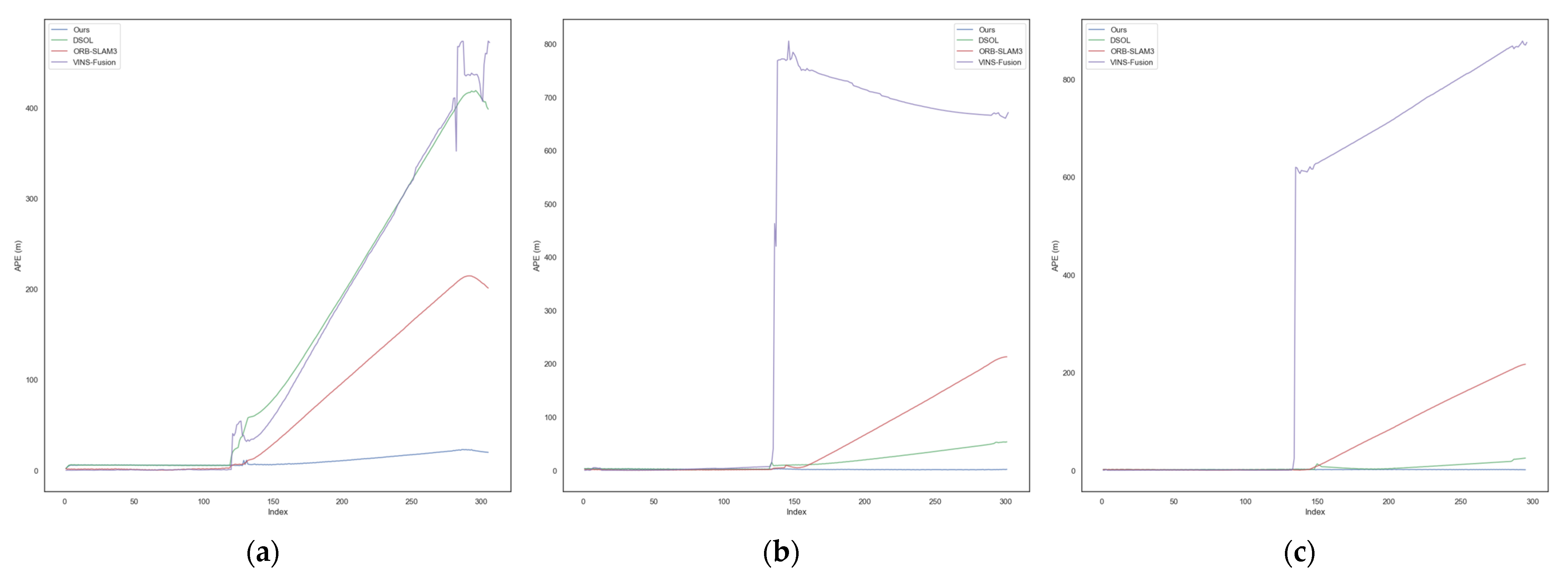

4.2. Comparative Experiments

4.3. Experimental Analysis and Discussion

4.3.1. Ablation Analysis

4.3.2. Runtime Performance

4.3.3. Analysis of Generalization Ability and Sim-to-Real Gap

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SLAM | Simultaneous Localization and Mapping |
| vSLAM | Visual Simultaneous Localization and Mapping |
| DSO | Direct Sparse Odometry |
| BA | Bundle Adjustment |
| ATE | Absolute Trajectory Error |
| SVD | Singular Value Decomposition |
| RANSAC | Random Sample Consensus |

References

- Pritchard, T.; Ijaz, S.; Clark, R.; Kocer, B. ForestVO: Enhancing Visual Odometry in Forest Environments through ForestGlue. IEEE Robot. Autom. Lett. 2025, 10, 5233–5240.

- Yu, H.; Wang, J.; He, Y.; Yang, W.; Xia, G.S. MCVO: A Generic Visual Odometry for Arbitrarily Arranged Multi-Cameras. arXiv 2024, arXiv:2412.03146.

- Tourani, A.; Bavle, H.; Sanchez-Lopez, J.; Voos, H. Visual SLAM: What Are the Current Trends and What to Expect? Sensors 2022, 22, 9297.

- Davison, A.; Cid, Y.; Kita, N. Real-Time 3D SLAM with Wide-Angle Vision. IFAC Proc. Vol. 2004, 37, 868–873.

- Ragab, M. Multiple Camera Pose Estimation. Ph.D. Thesis, The Chinese University of Hong Kong, Hong Kong, 2008.

- Harmat, A.; Trentini, M.; Sharf, I. Multi-Camera Tracking and Mapping for Unmanned Aerial Vehicles in Un-Structured Environments. J. Intell. Robot. Syst. 2015, 78, 291–317.

- Yang, A.; Cui, C.; Barsan, I.; Urtasun, R.; Wang, S. Asynchronous Multi-View SLAM. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021.

- Zhang, L.; Wisth, D.; Camurri, M.; Fallon, M. Balancing the Budget: Feature Selection and Tracking for Multi-Camera Visual-Inertial Odometry. IEEE Robot. Autom. Lett. 2021, 7, 1182–1189.

- Song, H.; Liu, C.; Dai, H. BundledSLAM: An Accurate Visual SLAM System Using Multiple Cameras. In Proceedings of the 2024 IEEE 7th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 15–17 March 2024.

- Mur-Artal, R.; Tardós, J. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Yang, Y.; Pan, M.; Tang, D.; Wang, T.; Yue, Y.; Liu, T.; Fu, M. MCOV-SLAM: A Multi-Camera Omnidirectional Visual SLAM System. IEEE/ASME Trans. Mechatron. 2024, 29, 3556–3567.

- Pan, M.; Li, J.; Zhang, Y.; Yang, Y.; Yue, Y. MCOO-SLAM: A Multi-Camera Omnidirectional Object SLAM System. arXiv 2025, arXiv:2506.15402.

- Mao, H.; Luo, J. PLY-SLAM: Semantic Visual SLAM Integrating Point–Line Features with YOLOv8-seg in Dynamic Scenes. Sensors 2025, 25, 3597.

- Engel, J.; Koltun, V.; Cremers, D. Direct Sparse Odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 611–625.

- Lu, S.; Zhi, Y.; Zhang, S.; He, R.; Bao, Z. Semi-Direct Monocular SLAM with Three Levels of Parallel Optimizations. IEEE Access 2021, 9, 86801–86810.

- Liu, W.; Zhou, W.; Liu, J.; Hu, P.; Cheng, J.; Han, J.; Lin, W. Modality-Aware Feature Matching: A Comprehensive Review of Single- and Cross-Modality Techniques. arXiv 2025, arXiv:2507.22791.

- Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-Free Local Feature Matching with Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Wang, Y.; He, X.; Peng, S.; Tan, D.; Zhou, X. Efficient LoFTR: Semi-Dense Local Feature Matching with Sparse-Like Speed. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024.

- Wang, Q.; Zhang, J.; Yang, K.; Peng, K.; Stiefelhagen, R. MatchFormer: Interleaving Attention in Transformers for Feature Matching. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022.

- He, X.; Sun, J.; Wang, Y.; Peng, S.; Huang, Q.; Bao, H.; Zhou, X. Detector-Free Structure from Motion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024.

- Qu, C.; Shivakumar, S.; Miller, I.; Taylor, C. DSOL: A Fast Direct Sparse Odometry Scheme. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022.

- Geiger, A.; Lenz, P.; Urtasun, R. Are We Ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- Qi, Q.; Wang, G.; Pan, Y.; Fan, H.; Li, B. MCS-Sim: A Photo-Realistic Simulator for Multi-Camera UAV Visual Perception Research. Drones 2025, 9, 656.

- Qin, T.; Cao, S.; Pan, J.; Shen, S. A General Optimization-Based Framework for Global Pose Estimation with Multiple Sensors. arXiv 2019, arXiv:1901.03642.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multi-Map SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A Benchmark for the Evaluation of RGB-D SLAM Systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura, Portugal, 7–12 October 2012.

| Method | KITTI_02 (5067.2 m) | KITTI_09 (1705.1 m) | MCS_Forest1 (434.2 m) | MCS_Grass1 (432.6 m) | MCS_Uptown1 (435.4 m) |
|---|---|---|---|---|---|
| VINS-Fusion | 0.76% | 1.09% | 48.03% | 121.09% | 126.83% |
| ORB-SLAM3 | 0.32% | 0.69% | 23.73% | 20.22% | 21.17% |
| DSOL | 0.63% | 0.81% a | 46.74% | 5.22% | 1.82% |
| MCVO | 1.88% a,s | 2.21% a,s | Failed | Failed | Failed |
| Ours | 0.51% | 0.73% | 2.69% | 0.44% | 0.34% |
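ATE appears in the abbreviation list, and the comparison table reports percentages next to each sequence's trajectory length, which suggests an error normalized by path length. A minimal sketch of such a metric is given below, assuming an SE(3) alignment (rotation plus translation, no scale) of estimated to ground-truth positions, ATE as the RMSE of the aligned position errors, and normalization by the ground-truth path length. The alignment type, the normalization, the function names and the synthetic helix trajectory are assumptions for illustration; the paper's exact evaluation protocol is not stated in this text.

```python
import numpy as np

# Illustrative sketch: SE(3) alignment, RMSE and length normalization are assumptions.


def align_se3(est: np.ndarray, gt: np.ndarray):
    """Rotation R and translation t minimizing ||est @ R.T + t - gt|| (SVD, no scale)."""
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    U, _, Vt = np.linalg.svd((est - mu_e).T @ (gt - mu_g))
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0  # keep a proper rotation
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, mu_g - R @ mu_e


def ate_rmse(est: np.ndarray, gt: np.ndarray) -> float:
    """Root-mean-square position error after alignment (meters if inputs are in meters)."""
    R, t = align_se3(est, gt)
    err = np.linalg.norm(est @ R.T + t - gt, axis=1)
    return float(np.sqrt(np.mean(err ** 2)))


def path_length(gt: np.ndarray) -> float:
    """Sum of distances between consecutive ground-truth positions."""
    return float(np.sum(np.linalg.norm(np.diff(gt, axis=0), axis=1)))


# Synthetic example: a helix, the same helix expressed in another frame, and a noisy copy.
theta = np.linspace(0.0, 2.0 * np.pi, 200)
gt = np.stack([10.0 * np.cos(theta), 10.0 * np.sin(theta), 0.1 * theta], axis=1)
c, s = np.cos(0.3), np.sin(0.3)
R0 = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
est_exact = gt @ R0.T + np.array([3.0, -1.0, 2.0])
est_noisy = est_exact + np.random.default_rng(0).normal(0.0, 0.2, size=gt.shape)

ate = ate_rmse(est_noisy, gt)
drift_pct = 100.0 * ate / path_length(gt)
print(f"ATE = {ate:.3f} m, {drift_pct:.2f}% of a {path_length(gt):.1f} m path")
```

The rigid alignment removes any difference in reference frame (the exact copy scores essentially zero), so only the genuine position error contributes to the reported value.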

| Method | MCS_Forest1 (434.2 m) | MCS_Grass1 (432.6 m) | MCS_Uptown1 (435.4 m) |
|---|---|---|---|
| DSOL | 5.70 m (39.54%) | 2.66 m (44.70%) | 1.51 m (50.34%) |
| ORB-SLAM3 | 2.18 m (42.48%) | 1.60 m (48.01%) | 0.89 m (48.65%) |
| VINS-Fusion | 0.49 m (39.22%) | 3.54 m (44.37%) | 2.17 m (45.27%) |

| Sequence | Ours (w/o LoFTR Init) | Ours (w/o Multi-view BA) | Ours (Full) |
|---|---|---|---|
| MCS_Forest1 | 107.53 m | 33.56 m | 11.69 m |
| MCS_Grass1 | 51.37 m | 2.24 m | 1.90 m |
| MCS_Uptown1 | 26.73 m | 1.73 m | 1.49 m |
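The "Ours (Full)" errors in the ablation table are given in meters, while the comparison table reports percentages alongside the trajectory lengths of the same MCS sequences. The short check below uses only numbers copied from those two tables and suggests that the percentages are the meter-valued error divided by the trajectory length. This reading is an inference from the numbers, not a definition stated in the text.

```python
# Numbers copied from the tables; the "error / length" reading is an inference.
lengths_m = {"MCS_Forest1": 434.2, "MCS_Grass1": 432.6, "MCS_Uptown1": 435.4}
full_err_m = {"MCS_Forest1": 11.69, "MCS_Grass1": 1.90, "MCS_Uptown1": 1.49}  # Ours (Full), ablation table
reported_pct = {"MCS_Forest1": 2.69, "MCS_Grass1": 0.44, "MCS_Uptown1": 0.34}  # Ours, comparison table

# Recompute each percentage as 100 * error / trajectory length.
recomputed_pct = {seq: 100.0 * full_err_m[seq] / lengths_m[seq] for seq in lengths_m}
for seq, pct in recomputed_pct.items():
    print(f"{seq}: recomputed {pct:.2f}% vs reported {reported_pct[seq]:.2f}%")
```

All three recomputed values round to the reported percentages (2.69%, 0.44%, 0.34%). Under this reading, the 0.51% reported on KITTI_02 would correspond to roughly 25.8 m over 5067.2 m.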

| Metric | Ours (w/o LoFTR Init) | Ours (w/o Multi-view BA) | Ours (Full) | ORB-SLAM3 |
|---|---|---|---|---|
| Time | 37.13 s | 52.34 s | 93.65 s | 31.14 s |
| RAM Usage | 1.2 GB | 2.5 GB | 2.5 GB | 0.5 GB |

| Module | Trigger Count | Total Time | Avg. Time per Trigger |
|---|---|---|---|
| LoFTR Init | 300 | 51.97 s | 173.2 ms |
| Tracking | 300 | 0.76 s | 2.5 ms |
| Multi-view BA | 62 | 22.42 s | 361.6 ms |
| Other Overheads | - | 18.50 s | - |
| Ours (Full) | - | 93.65 s | - |
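The per-trigger averages in the runtime breakdown follow from total time divided by trigger count, and the module times plus "Other Overheads" add up to the reported 93.65 s. The snippet below reproduces that arithmetic from the table's own numbers and also computes each module's share of the total runtime; the share is derived here and is not a column reported in the paper.

```python
# Values copied from the per-module runtime table: (trigger count, total seconds).
modules = {
    "LoFTR Init": (300, 51.97),
    "Tracking": (300, 0.76),
    "Multi-view BA": (62, 22.42),
}
other_s = 18.50  # "Other Overheads"
total_s = 93.65  # "Ours (Full)"

# Average cost per trigger in milliseconds, and share of the full runtime in percent.
avg_ms = {name: 1000.0 * sec / count for name, (count, sec) in modules.items()}
share_pct = {name: 100.0 * sec / total_s for name, (_, sec) in modules.items()}
summed_s = sum(sec for _, sec in modules.values()) + other_s

for name in modules:
    print(f"{name}: {avg_ms[name]:.1f} ms per trigger, {share_pct[name]:.1f}% of total")
print(f"sum of parts: {summed_s:.2f} s vs reported total {total_s:.2f} s")
```

By this arithmetic, Efficient LoFTR initialization accounts for about 55.5% of the full pipeline's runtime and multi-view BA for about 23.9%, while direct tracking costs only about 2.5 ms per trigger.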

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pan, Y.; Zhou, Y.; Qi, Q.; Wang, G.; Jiang, Y.; Fan, H.; He, J. Robust Direct Multi-Camera SLAM in Challenging Scenarios. Electronics 2025, 14, 4556. https://doi.org/10.3390/electronics14234556


Chicago/Turabian Style: Pan, Yonglei, Yueshang Zhou, Qiming Qi, Guoyan Wang, Yanwen Jiang, Hongqi Fan, and Jun He. 2025. "Robust Direct Multi-Camera SLAM in Challenging Scenarios" Electronics 14, no. 23: 4556. https://doi.org/10.3390/electronics14234556
