Campus Abnormal Behavior Detection with a Spatio-Temporal Fusion–Temporal Difference Network

Abstract

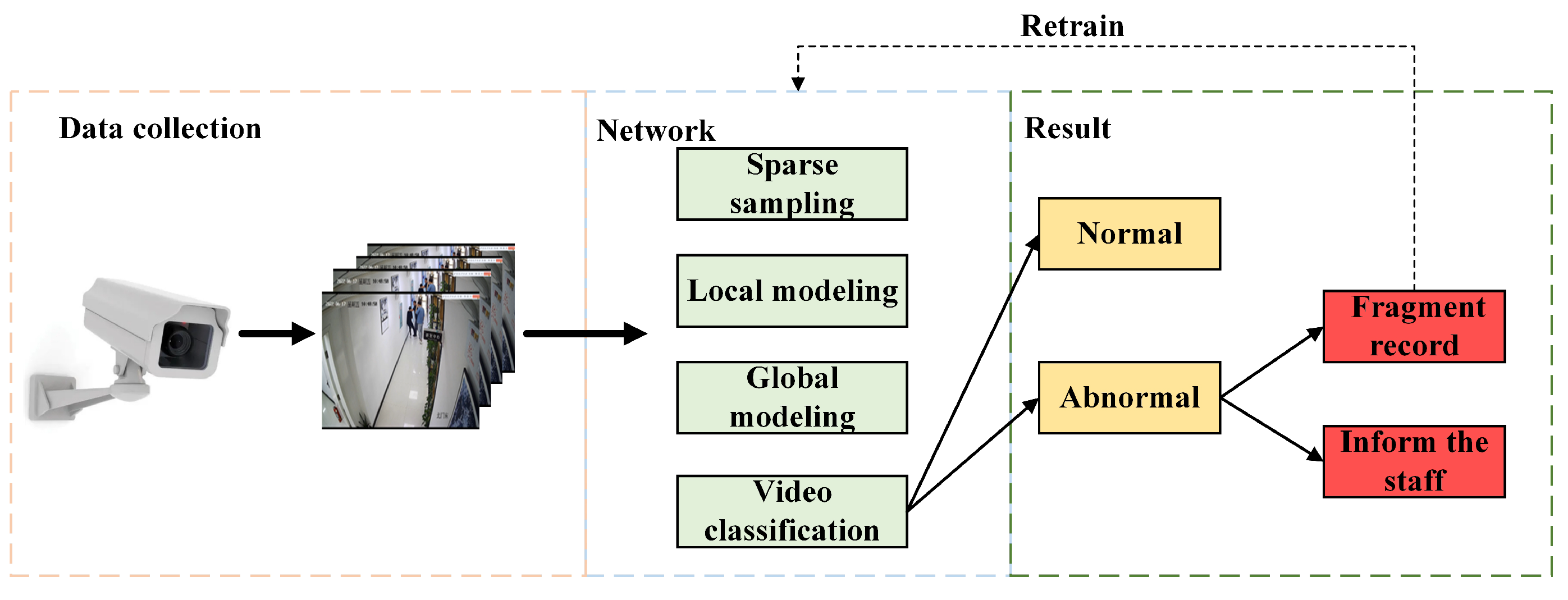

1. Introduction

2. Related Work

2.1. Conventional Anomaly Detection Techniques

2.2. Deep Learning-Based Anomaly Detection Methods

3. Contribution

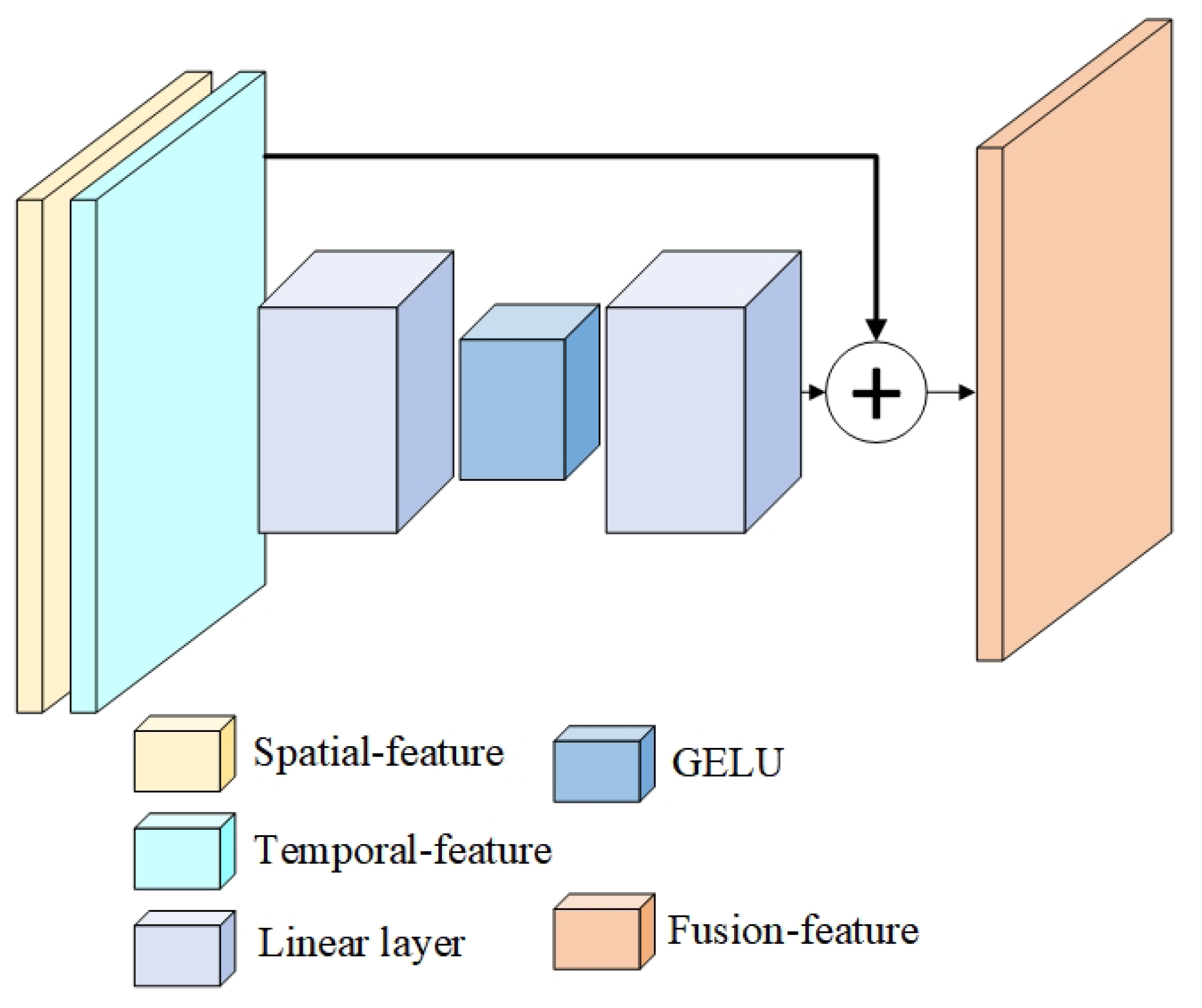

- A Novel Spatio-Temporal Fusion Module: We design a dedicated STF block to replace the rudimentary weighted averaging in standard TDNs. By employing a SENet-inspired bottleneck with GELU activation and residual connections, it enables dynamic, channel-wise refinement for a deeper integration of spatial and temporal features, thereby strengthening the representation of complex behaviors.

- Robust Learning under Long-Tailed Distributions: We replace cross-entropy with focal loss to address the natural class imbalance in surveillance data. Because focal loss down-weights well-classified examples, it shifts the learning focus towards hard and minority-class examples without resorting to artificial re-balancing, improving model robustness and generalization.

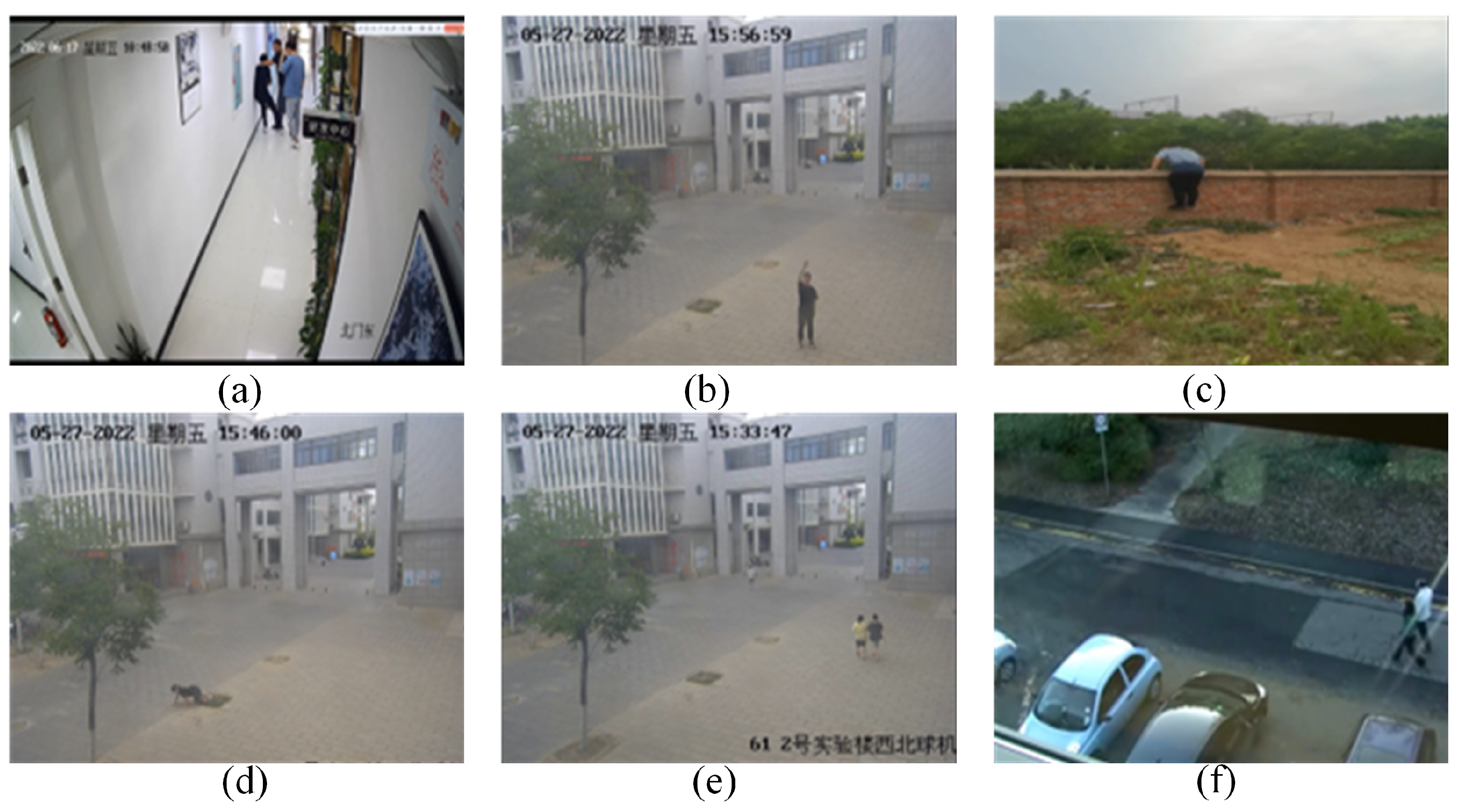

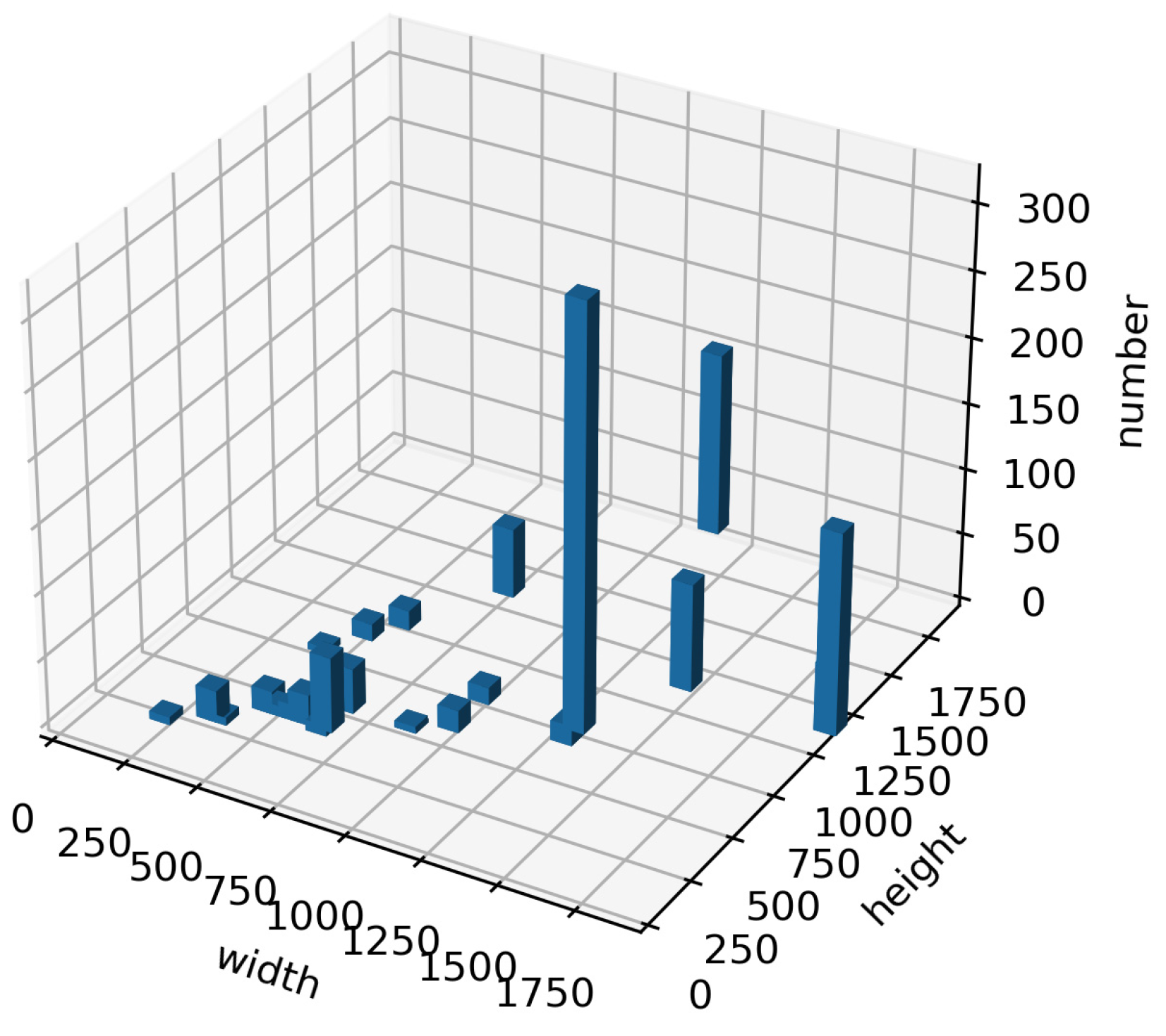

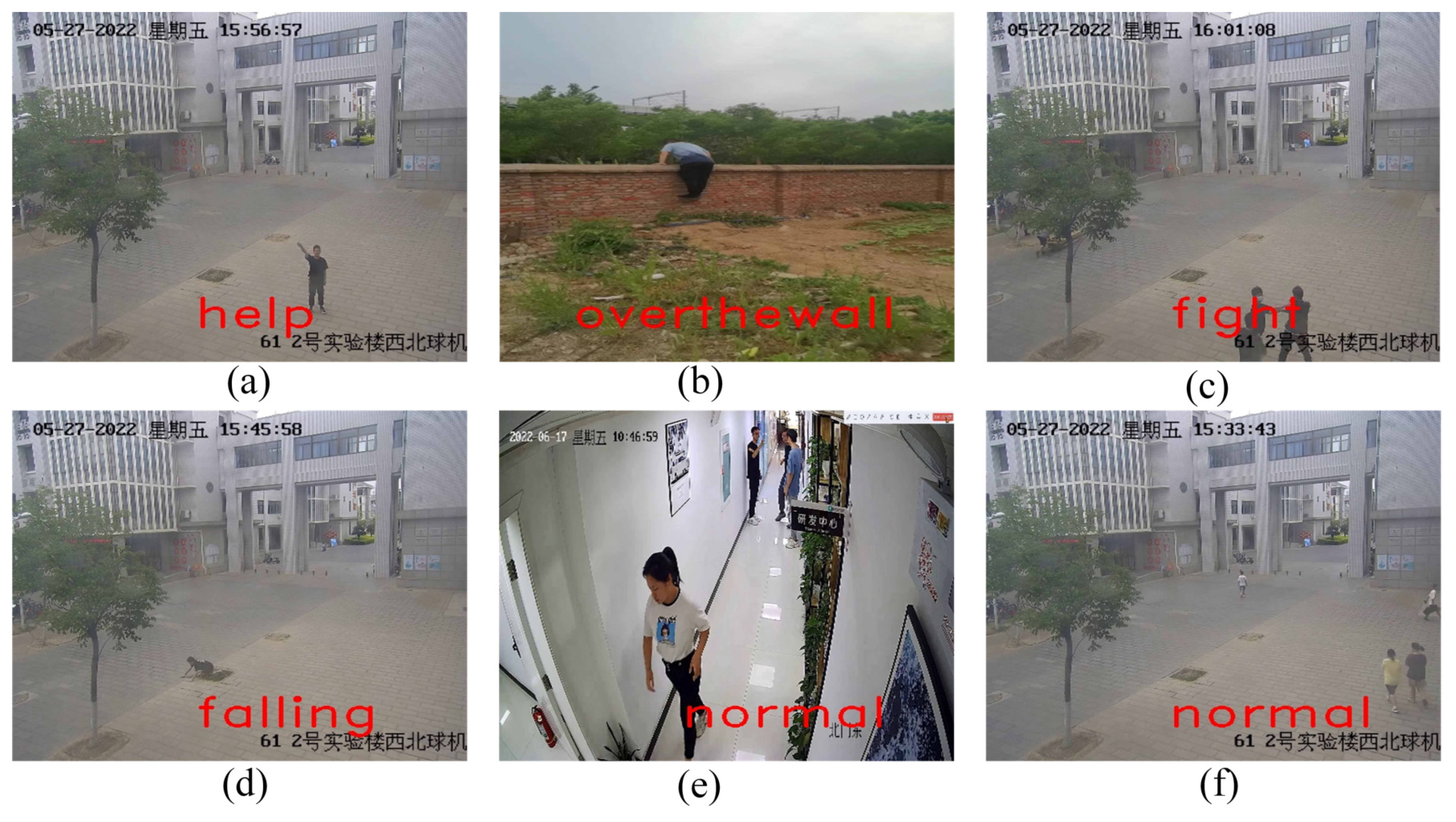

- A Realistic Benchmark for Fine-Grained Analysis: We construct the Violence5 dataset, a multi-category, naturally imbalanced collection of campus scenes. It emphasizes subtle anomalous behaviors and supports model evaluation under real-world long-tailed distributions.
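As a rough illustration of the first contribution, the sketch below shows one way an SENet-inspired bottleneck with GELU activation and a residual connection could refine fused features channel by channel. It is a minimal NumPy sketch under stated assumptions, not the authors' implementation: the function name `stf_gate`, the element-wise sum used to merge the two inputs, the reduction ratio, and the placement of the residual are all hypothetical.

```python
import math

import numpy as np


def gelu(x: np.ndarray) -> np.ndarray:
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    erf = np.vectorize(math.erf)
    return 0.5 * x * (1.0 + erf(x / math.sqrt(2.0)))


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def stf_gate(spatial, temporal, w_reduce, w_expand):
    """Hypothetical SE-style fusion of two (C, H, W) feature maps.

    w_reduce has shape (C // r, C) and w_expand has shape (C, C // r);
    r is an assumed reduction ratio, not a value taken from the paper.
    """
    fused = spatial + temporal                # assumed merge step
    squeeze = fused.mean(axis=(1, 2))         # global average pool -> (C,)
    hidden = gelu(w_reduce @ squeeze)         # bottleneck with GELU -> (C // r,)
    gate = sigmoid(w_expand @ hidden)         # per-channel weights in (0, 1)
    refined = fused * gate[:, None, None]     # channel-wise re-weighting
    return fused + refined                    # assumed residual connection


rng = np.random.default_rng(0)
channels, ratio = 8, 4
spatial = rng.standard_normal((channels, 4, 4))
temporal = rng.standard_normal((channels, 4, 4))
w_reduce = rng.standard_normal((channels // ratio, channels))
w_expand = rng.standard_normal((channels, channels // ratio))

out = stf_gate(spatial, temporal, w_reduce, w_expand)
print(out.shape)  # (8, 4, 4)
```

Unlike a fixed weighted average, the gate here depends on the input, so each channel is scaled differently for each clip.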

4. Methods

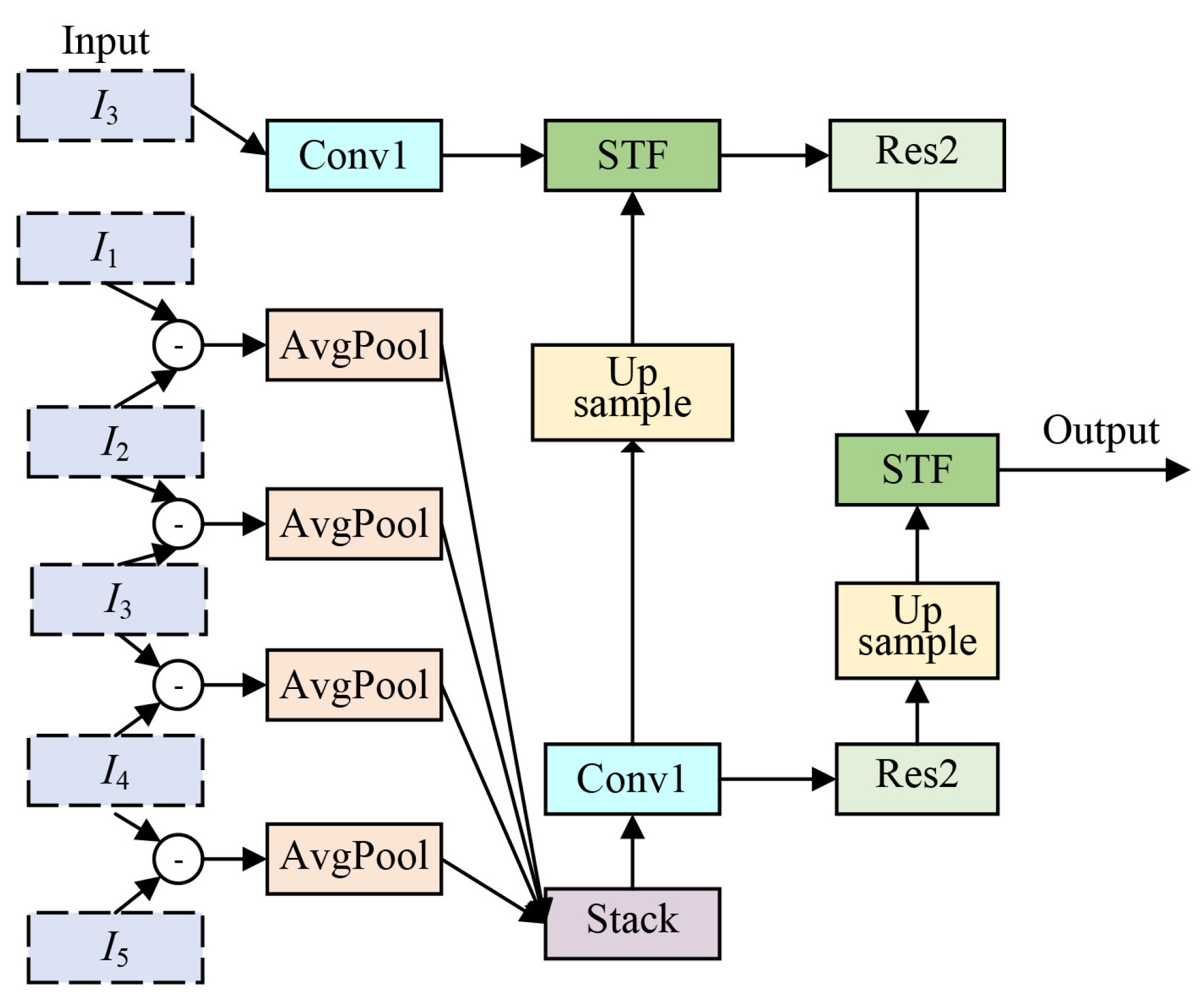

4.1. Improved TDN Network

- To address imbalanced data distribution, we replace the conventional cross-entropy loss function (CELF) with a multi-class focal loss function (MFLF), thereby improving the model’s capacity to learn from sparsely represented categories.

- We upgrade the local temporal modeling module (S-TDM) of the original TDN to a spatio-temporal differential fusion module (S-TDF).

The two modifications are complementary: the first acts through the design of the loss function and the second through the fusion of spatio-temporal features, and together they improve the system’s detection performance.
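To make the effect of swapping cross-entropy for focal loss concrete, the following pure-Python sketch compares the two losses on a single five-class sample. It uses the standard focal loss form FL(p_t) = -(1 - p_t)^gamma * log(p_t); the value gamma = 2.0, the omission of a class-weighting term, and the example logits are assumptions made for illustration, not settings reported here.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Standard cross-entropy for one sample: -log(p_t)."""
    return -math.log(softmax(logits)[target])


def focal_loss(logits, target, gamma=2.0):
    """Multi-class focal loss for one sample: -(1 - p_t)^gamma * log(p_t).

    gamma is an assumed focusing parameter; gamma = 0 recovers cross-entropy.
    """
    p_t = softmax(logits)[target]
    return -((1.0 - p_t) ** gamma) * math.log(p_t)


# Hypothetical logits for a five-class problem; the true class is index 0.
easy = [4.0, 0.0, 0.0, 0.0, 0.0]  # confident and correct
hard = [0.0, 1.0, 0.0, 0.0, 0.0]  # true class receives low probability

for name, logits in (("easy", easy), ("hard", hard)):
    ce = cross_entropy(logits, 0)
    fl = focal_loss(logits, 0)
    print(f"{name}: CE={ce:.4f}  focal={fl:.4f}  focal/CE={fl / ce:.4f}")
```

The focal/CE ratio equals (1 - p_t)^gamma, so the easy sample is suppressed far more strongly than the hard one, which is how the loss redirects gradient towards difficult and under-represented classes.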

4.1.1. S-TDF Module

4.1.2. L-TDM Module

4.2. Focal Loss Function

5. Experiment

5.1. Environment Setup and Dataset Construction

5.2. Experimental Configuration

5.3. Evaluation Metrics

5.4. Introduction to Experimental Methods

5.5. Experimental Comparison on the RWF-2000 Dataset

5.6. Experimental Comparison on the Violence5 Dataset

5.7. Ablation Experiments

5.8. Applications

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Fight | Normal | Over the Wall | Help | Falling |
|---|---|---|---|---|
| 319 | 274 | 261 | 126 | 118 |

| Model | Accuracy (%) | P (%) | R (%) | F1 (%) | Param (M) |
|---|---|---|---|---|---|
| TSN | 77.3 | 75.8 | 80.0 | 77.9 | 23.5 |
| TSM | 82.8 | 80.8 | 86.0 | 83.3 | 23.5 |
| H2CN | 80.5 | 82.1 | 78.0 | 80.0 | 23.7 |
| TDN | 84.3 | 87.0 | 80.5 | 83.6 | 24.0 |
| Ours | 87.5 | 89.9 | 84.5 | 87.1 | 24.2 |
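The column to the right of R (%) in the table above is consistent with the F1 score, the harmonic mean of precision and recall. The short check below recomputes it from the P and R values in each row; the helper name `f1_score` is introduced here for illustration. Small differences are to be expected if P and R were rounded before being reported.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2.0 * precision * recall / (precision + recall)


# (P, R, value reported in the column right of R), copied from the rows above.
rows = {
    "TSN": (75.8, 80.0, 77.9),
    "TSM": (80.8, 86.0, 83.3),
    "H2CN": (82.1, 78.0, 80.0),
    "TDN": (87.0, 80.5, 83.6),
    "Ours": (89.9, 84.5, 87.1),
}

for model, (p, r, reported) in rows.items():
    computed = f1_score(p, r)
    print(f"{model}: computed={computed:.2f}  reported={reported}")
```

Every recomputed value lands within 0.1 percentage points of the reported one.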

| Model | Accuracy (%) | P (%) | R (%) | F1 (%) | Param (M) |
|---|---|---|---|---|---|
| TSN | 91.5 | 91.0 | 91.2 | 91.1 | 23.5 |
| TSM | 91.5 | 91.5 | 90.8 | 91.0 | 23.5 |
| H2CN | 87.8 | 88.5 | 86.7 | 87.0 | 23.7 |
| TDN | 94.5 | 94.8 | 94.5 | 94.6 | 24.0 |
| Ours | 96.3 | 96.2 | 96.4 | 96.3 | 24.3 |

| Model | Accuracy (%) | P (%) | R (%) | F1 (%) |
|---|---|---|---|---|
| Original | 94.5 | 94.8 | 94.5 | 94.6 |
| STF | 95.4 | 95.2 | 94.8 | 94.9 |
| Focal loss | 95.4 | 95.7 | 95.3 | 95.5 |
| Focal loss + STF | 96.3 | 96.2 | 96.4 | 96.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wei, F.; Jiao, Y.; Wang, N.; Zheng, K.; Shi, G.; Yang, M.; Zhao, W. Campus Abnormal Behavior Detection with a Spatio-Temporal Fusion–Temporal Difference Network. Electronics 2025, 14, 4221. https://doi.org/10.3390/electronics14214221
