Coal Shearer Drum Detection in Underground Mines Based on DCS-YOLO

Abstract

1. Introduction

- (1)

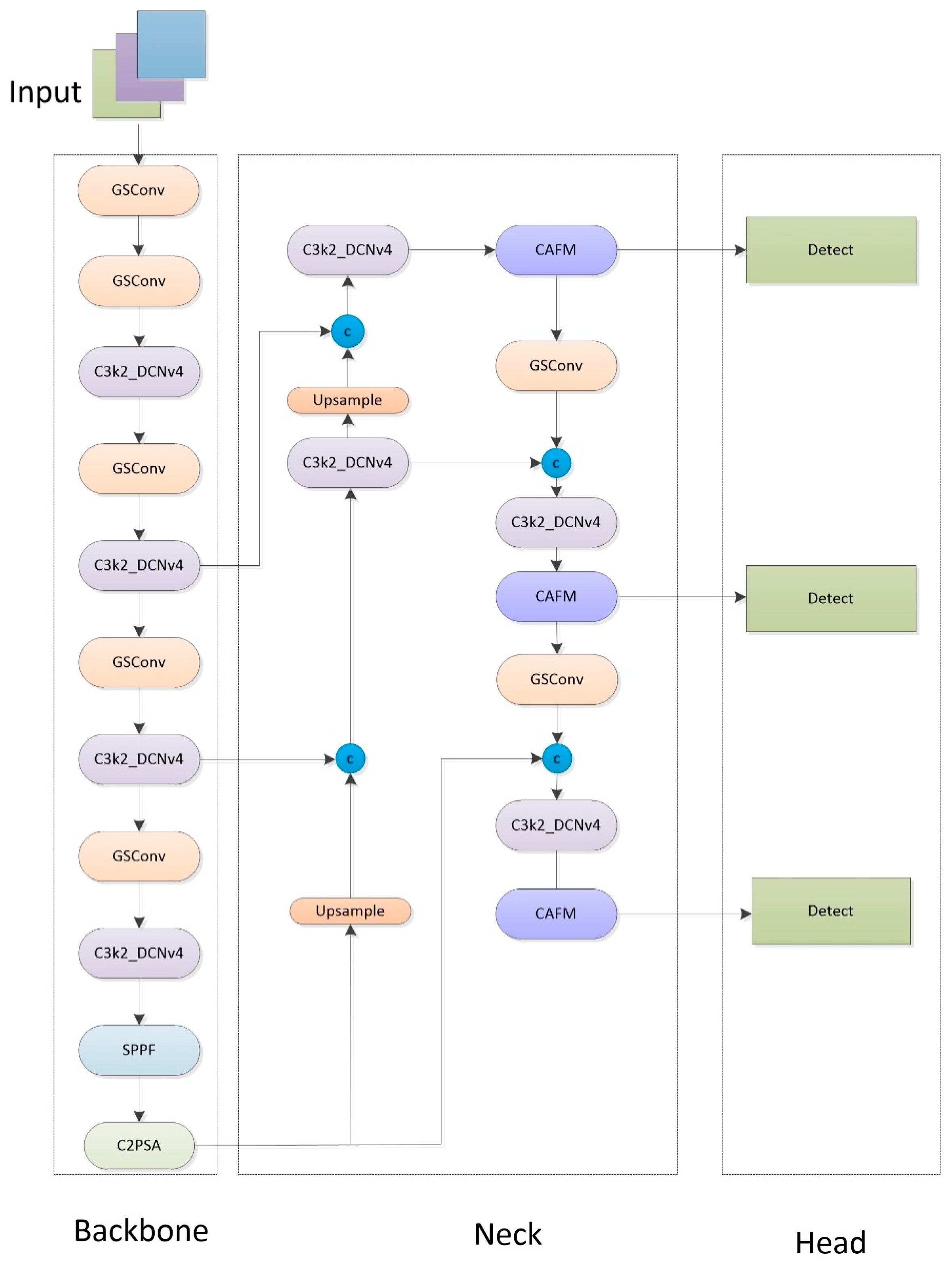

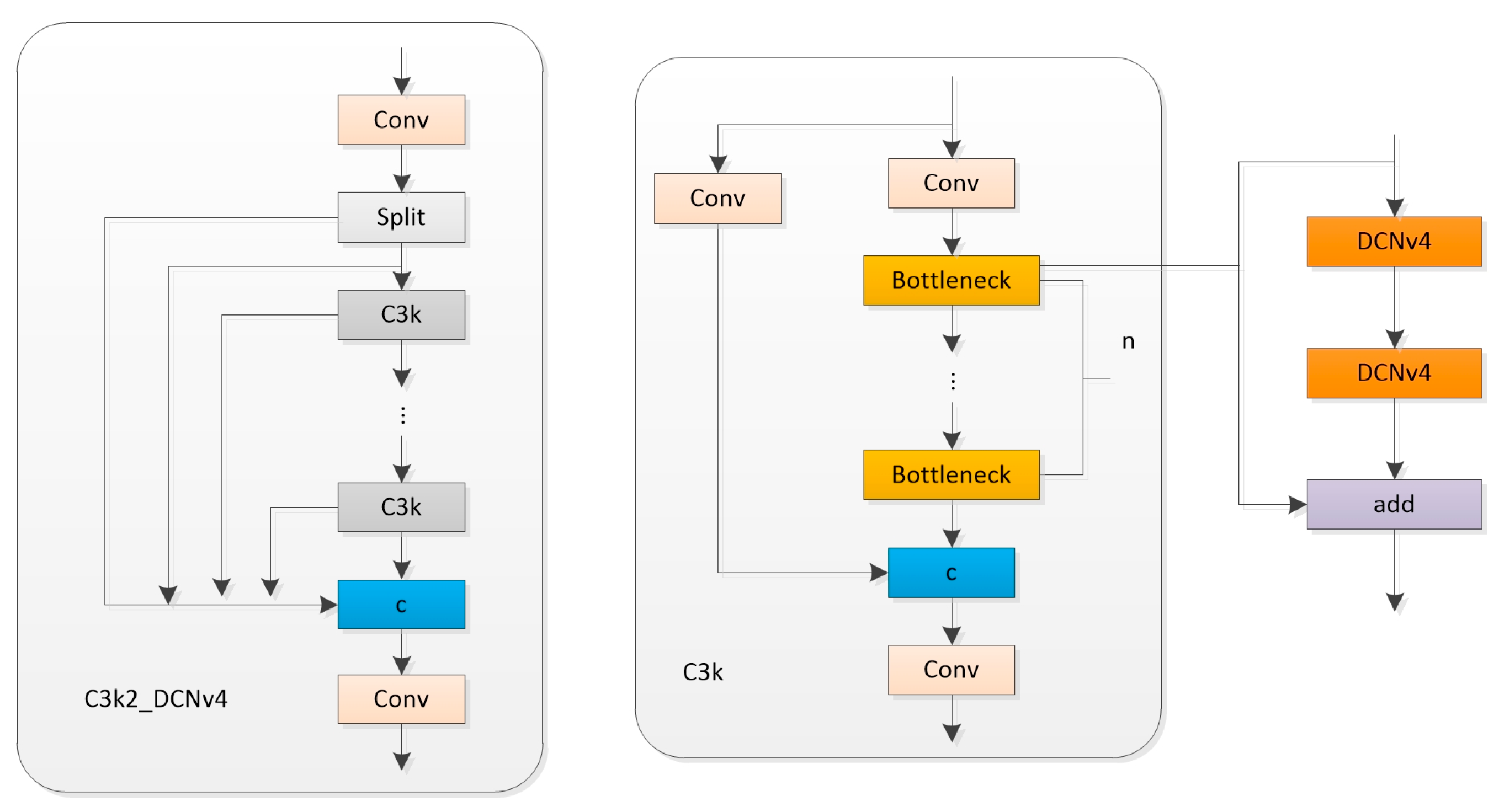

- We introduce the C3k2_DCNv4 module, which adaptively adjusts convolution sampling points to capture drum features under varying scales and non-rigid deformations, improving perception under occlusion.

- (2)

- We propose a lightweight convolution and attention fusion module (CAFM) that enhances multi-resolution feature representation under complex illumination and background interference while maintaining computational efficiency with GSConv.

- (3)

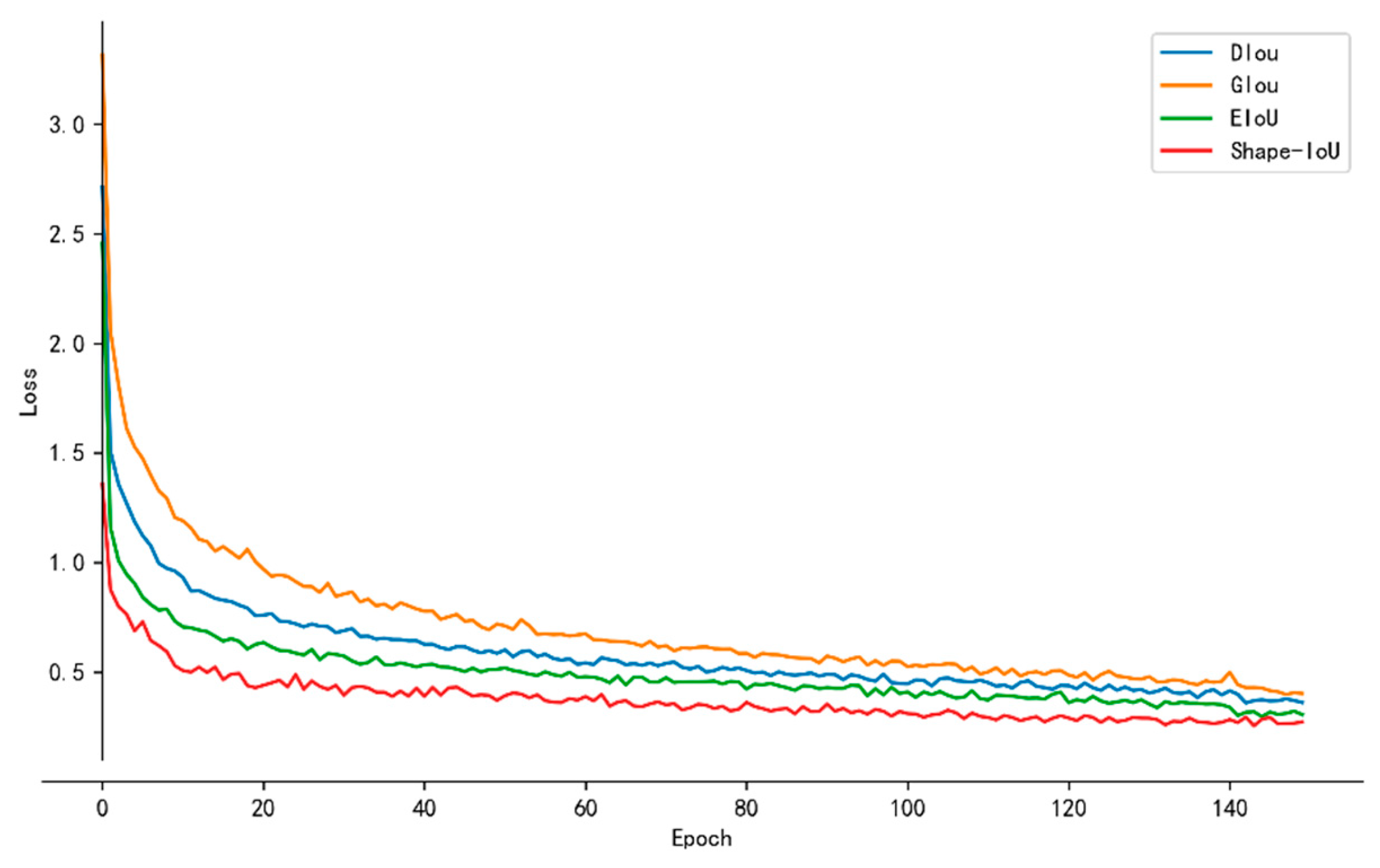

- We employ the Shape-IoU loss to precisely fit irregular drum boundaries by considering both position and shape similarity, improving localization accuracy in low-light and complex environments.
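Contribution (3) can be made concrete with a small sketch. The excerpt does not reproduce the loss equations, so the code below follows the Shape-IoU formulation as it is commonly stated (an IoU term, a centre-distance term weighted by the ground-truth box shape, and a width/height mismatch term). The function name `shape_iou_loss`, the box layout `(cx, cy, w, h)`, and the defaults `scale=0.0` and `theta=4.0` are assumptions for illustration, not values taken from this paper.

```python
import math


def shape_iou_loss(pred, gt, scale=0.0, theta=4.0, eps=1e-9):
    """Shape-IoU-style loss for two boxes given as (cx, cy, w, h).

    Assumed formulation: 1 - IoU + shape-weighted distance + 0.5 * shape cost.
    """
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt

    # Corner coordinates of both boxes.
    p_x1, p_y1, p_x2, p_y2 = px - pw / 2, py - ph / 2, px + pw / 2, py + ph / 2
    g_x1, g_y1, g_x2, g_y2 = gx - gw / 2, gy - gh / 2, gx + gw / 2, gy + gh / 2

    # Plain IoU.
    inter_w = max(0.0, min(p_x2, g_x2) - max(p_x1, g_x1))
    inter_h = max(0.0, min(p_y2, g_y2) - max(p_y1, g_y1))
    inter = inter_w * inter_h
    union = pw * ph + gw * gh - inter + eps
    iou = inter / union

    # Weights derived from the ground-truth box shape (both equal 1 when scale == 0).
    ww = 2 * gw ** scale / (gw ** scale + gh ** scale)
    hh = 2 * gh ** scale / (gw ** scale + gh ** scale)

    # Squared diagonal of the smallest box enclosing both boxes.
    enc_w = max(p_x2, g_x2) - min(p_x1, g_x1)
    enc_h = max(p_y2, g_y2) - min(p_y1, g_y1)
    c2 = enc_w ** 2 + enc_h ** 2 + eps

    # Centre distance, weighted per axis by the ground-truth shape.
    distance = hh * (px - gx) ** 2 / c2 + ww * (py - gy) ** 2 / c2

    # Relative width and height mismatch.
    omega_w = hh * abs(pw - gw) / max(pw, gw)
    omega_h = ww * abs(ph - gh) / max(ph, gh)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + distance + 0.5 * shape_cost


if __name__ == "__main__":
    print(shape_iou_loss((10, 10, 4, 4), (10, 10, 4, 4)))  # identical boxes
    print(shape_iou_loss((11, 10, 6, 3), (10, 10, 4, 4)))  # shifted, different shape
```

Under these assumptions the loss is close to zero for a perfect match and grows with both centre offset and aspect mismatch, which is the "position and shape similarity" behaviour the contribution describes.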

2. Materials and Methods

2.1. Dataset

2.2. Proposed Method

2.2.1. C3k2_DCNv4
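The contribution list describes this module as one that "adaptively adjusts convolution sampling points". The sketch below illustrates only that general idea: a generic modulated deformable 3×3 sampling step at a single location of a single-channel map, written in plain Python. It is not DCNv4 itself and not the C3k2 wrapper, neither of which is specified in this excerpt; the names `bilinear`, `deformable_point`, `offsets`, and `modulation` are hypothetical.

```python
import math


def bilinear(feat, y, x):
    """Bilinearly sample a 2D list `feat` at fractional (y, x); out-of-range taps count as 0."""
    h, w = len(feat), len(feat[0])
    y0, x0 = math.floor(y), math.floor(x)
    val = 0.0
    for yy, wy in ((y0, 1 - (y - y0)), (y0 + 1, y - y0)):
        for xx, wx in ((x0, 1 - (x - x0)), (x0 + 1, x - x0)):
            if 0 <= yy < h and 0 <= xx < w:
                val += wy * wx * feat[yy][xx]
    return val


def deformable_point(feat, cy, cx, weights, offsets, modulation):
    """Output at (cy, cx): sum_k w_k * m_k * feat(p0 + p_k + delta_p_k) over a 3x3 grid."""
    grid = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    out = 0.0
    for (dy, dx), w, (oy, ox), m in zip(grid, weights, offsets, modulation):
        out += w * m * bilinear(feat, cy + dy + oy, cx + dx + ox)
    return out


if __name__ == "__main__":
    feat = [[r * 4 + c for c in range(4)] for r in range(4)]  # simple ramp
    weights = [1 / 9] * 9
    ones = [1.0] * 9
    rigid = deformable_point(feat, 1, 1, weights, [(0.0, 0.0)] * 9, ones)
    shifted = deformable_point(feat, 1, 1, weights, [(0.0, 0.5)] * 9, ones)
    print(rigid, shifted)
```

With zero offsets the function reduces to an ordinary 3×3 weighted sum; in a trained network the offsets and modulation values would be predicted from the input features rather than fixed by hand as they are here.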

2.2.2. Convolution and Attention Fusion Module

2.2.3. Shape-IoU Loss

3. Experiments and Results

3.1. Experimental Environment and Parameter Settings

3.2. Evaluation Metrics

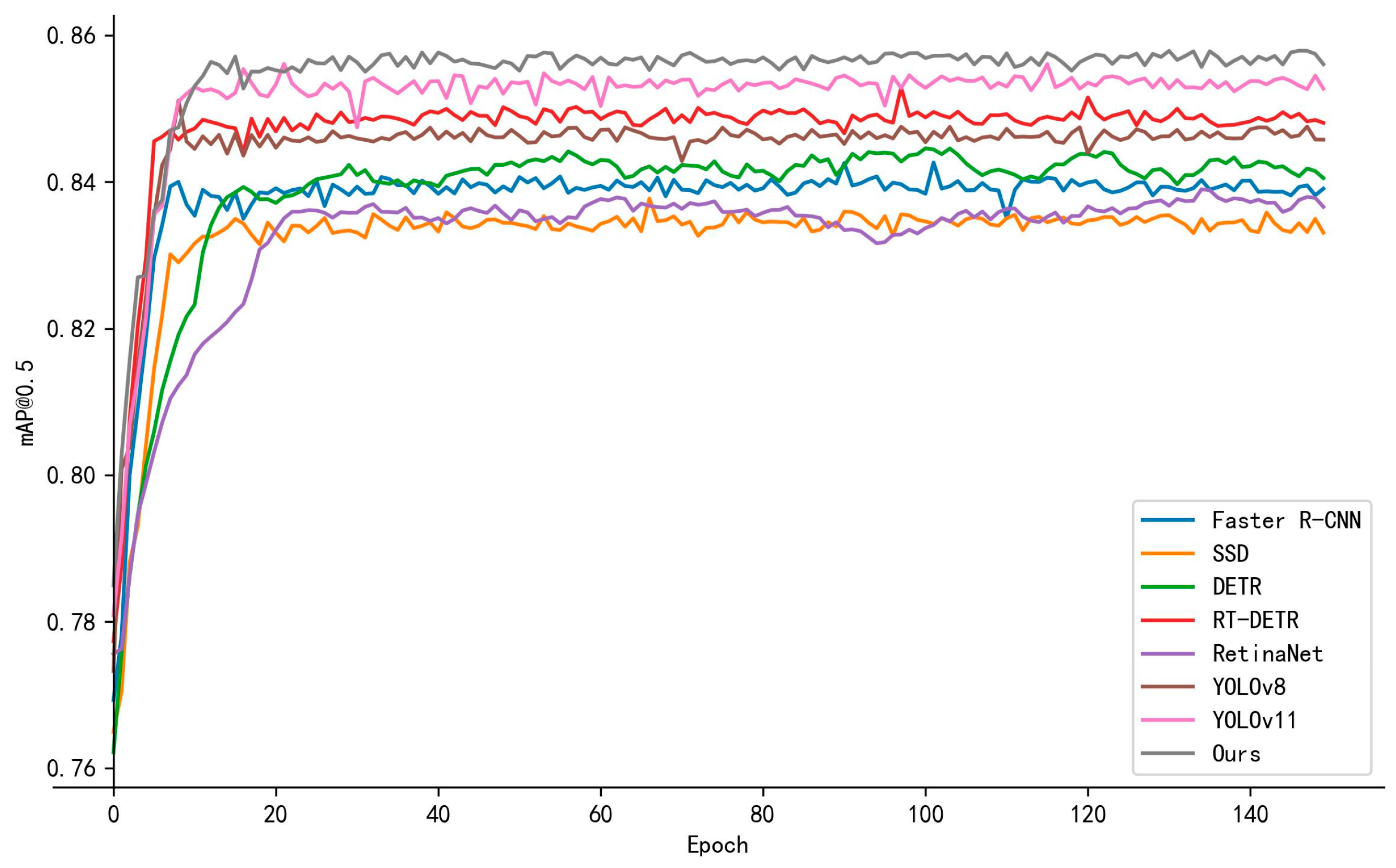

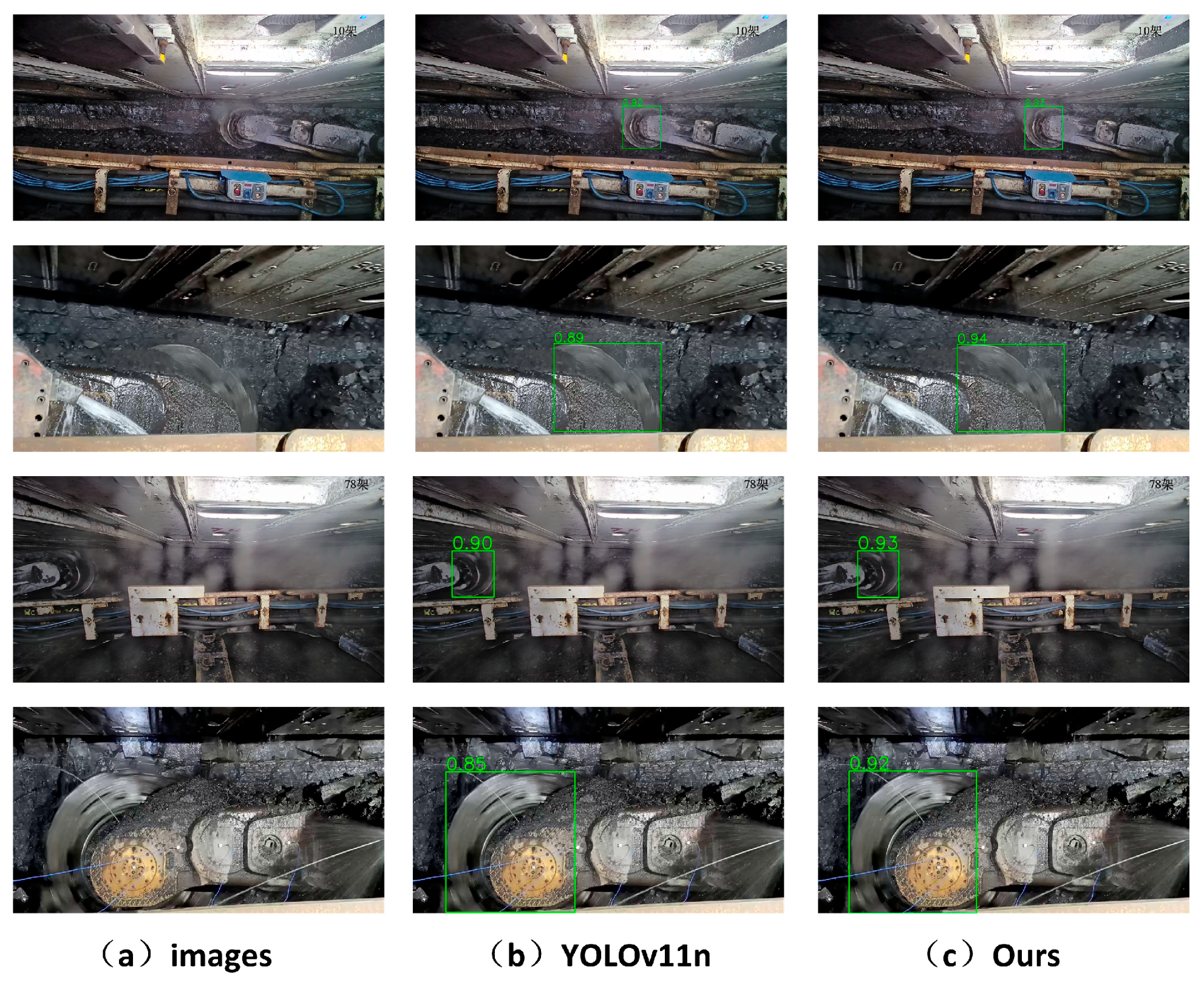
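The result tables report Pr, Re, mAP@0.5, and mAP@0.5:0.95. As a minimal sketch of how such numbers are usually obtained, the code below computes precision and recall from match counts and an all-point-interpolated average precision from confidence-ranked detections. The helper names and the input layout are assumptions; the paper's own evaluation code is not shown in this excerpt.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP), recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scored_hits, num_gt):
    """Area under the precision-recall curve (all-point interpolation).

    scored_hits: list of (confidence, is_true_positive) pairs, where a
    detection counts as a true positive if it matches an unmatched
    ground-truth box at the chosen IoU threshold (0.5 for mAP@0.5).
    """
    if num_gt == 0:
        return 0.0
    hits = sorted(scored_hits, key=lambda s: s[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in hits:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Replace each precision with the maximum precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


if __name__ == "__main__":
    print(precision_recall(tp=8, fp=2, fn=2))
    print(average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2))
```

mAP is the mean of this quantity over classes; mAP@0.5:0.95 additionally averages it over IoU thresholds from 0.5 to 0.95 in steps of 0.05.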

3.3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Configuration | Parameters |
|---|---|
| Deep learning framework | PyTorch 2.1.0 + Python 3.8.0 |
| Operating system | Windows 10 |
| GPU | NVIDIA GeForce RTX 3090 |
| CPU | Intel(R) Core(TM) i7-12700 @ 2.10 GHz |

| Loss | Pr (%) | Re (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|
| GIoU | 88.9 | 76.4 | 83.9 | 52.8 |
| DIoU | 89.4 | 76.6 | 84.1 | 53.1 |
| EIoU | 90.1 | 76.3 | 84.5 | 53.5 |
| Shape-IoU | 90.8 | 76.0 | 84.9 | 53.8 |

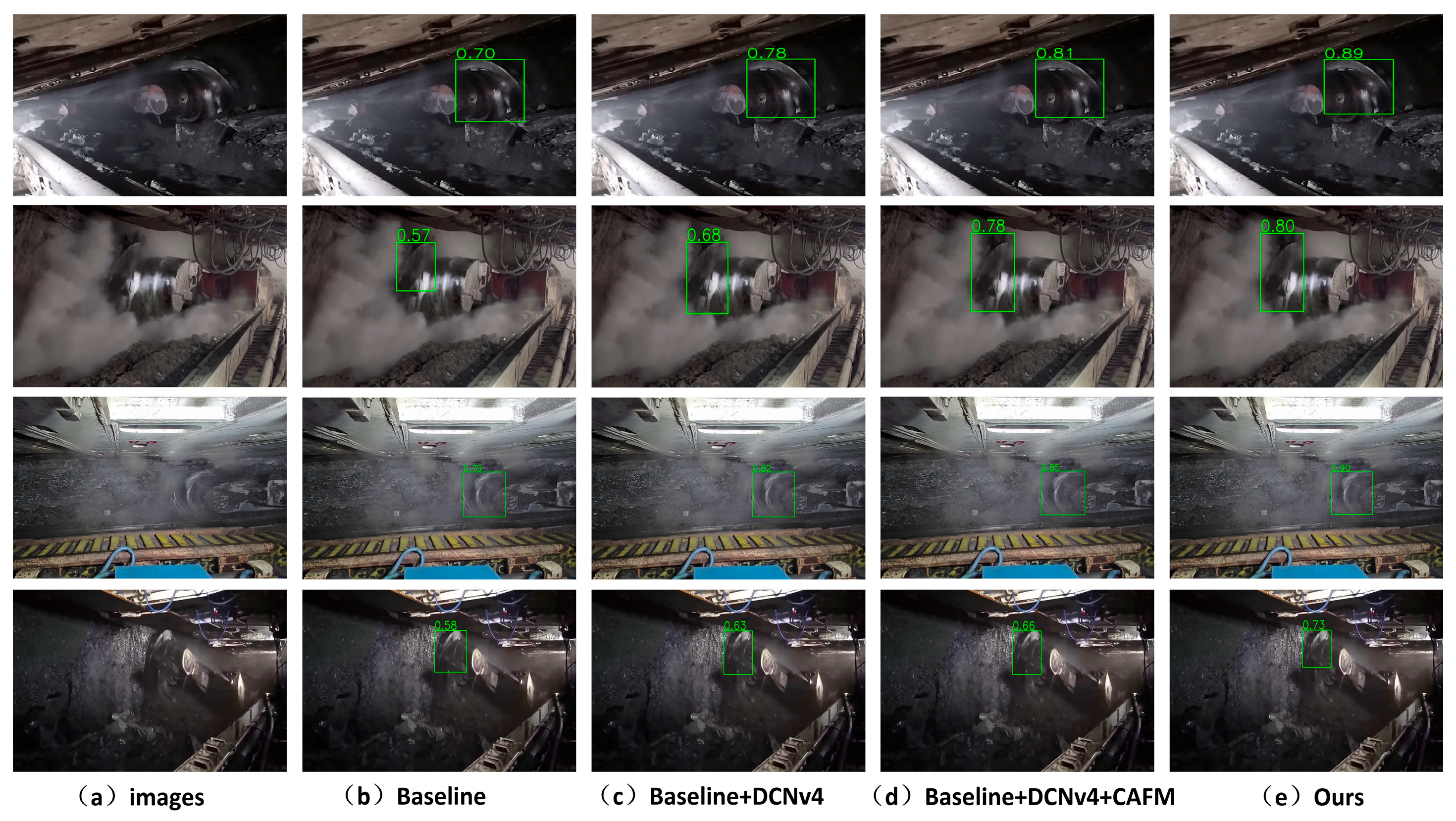

| Method | DCNv4 | CAFM | Shape-IoU | Pr (%) | Re (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|---|---|
| a | | | | 88.4 | 77.1 | 84.5 | 52.9 |
| b | ✓ | | | 88.7 | 77.3 | 84.8 | 53.6 |
| c | | ✓ | | 88.5 | 77.6 | 84.7 | 53.4 |
| d | | | ✓ | 90.8 | 76.0 | 84.9 | 53.8 |
| e | ✓ | ✓ | | 89.6 | 78.0 | 85.1 | 54.7 |
| f | ✓ | | ✓ | 90.3 | 77.8 | 85.2 | 55.1 |
| g | | ✓ | ✓ | 89.2 | 79.1 | 85.0 | 54.5 |
| h | ✓ | ✓ | ✓ | 91.3 | 80.3 | 85.6 | 56.2 |
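To read the ablation table more easily, the gains of each configuration over the baseline row a can be computed directly. The sketch below copies the four metric columns from the table above (Pr, Re, mAP@0.5, mAP@0.5:0.95, all in percent) and reports differences in percentage points; the dictionary layout and the function name `gains_over_baseline` are illustrative choices.

```python
# Metric columns copied from the ablation table: (Pr, Re, mAP@0.5, mAP@0.5:0.95), in %.
ABLATION = {
    "a": (88.4, 77.1, 84.5, 52.9),
    "b": (88.7, 77.3, 84.8, 53.6),
    "c": (88.5, 77.6, 84.7, 53.4),
    "d": (90.8, 76.0, 84.9, 53.8),
    "e": (89.6, 78.0, 85.1, 54.7),
    "f": (90.3, 77.8, 85.2, 55.1),
    "g": (89.2, 79.1, 85.0, 54.5),
    "h": (91.3, 80.3, 85.6, 56.2),
}


def gains_over_baseline(rows, baseline="a"):
    """Return per-row differences from the baseline row, in percentage points."""
    base = rows[baseline]
    return {
        name: tuple(round(v - b, 1) for v, b in zip(values, base))
        for name, values in rows.items()
        if name != baseline
    }


if __name__ == "__main__":
    for name, delta in gains_over_baseline(ABLATION).items():
        print(name, delta)
```

For example, row d trades 1.1 points of recall for 2.4 points of precision, while row h improves all four metrics over the baseline.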

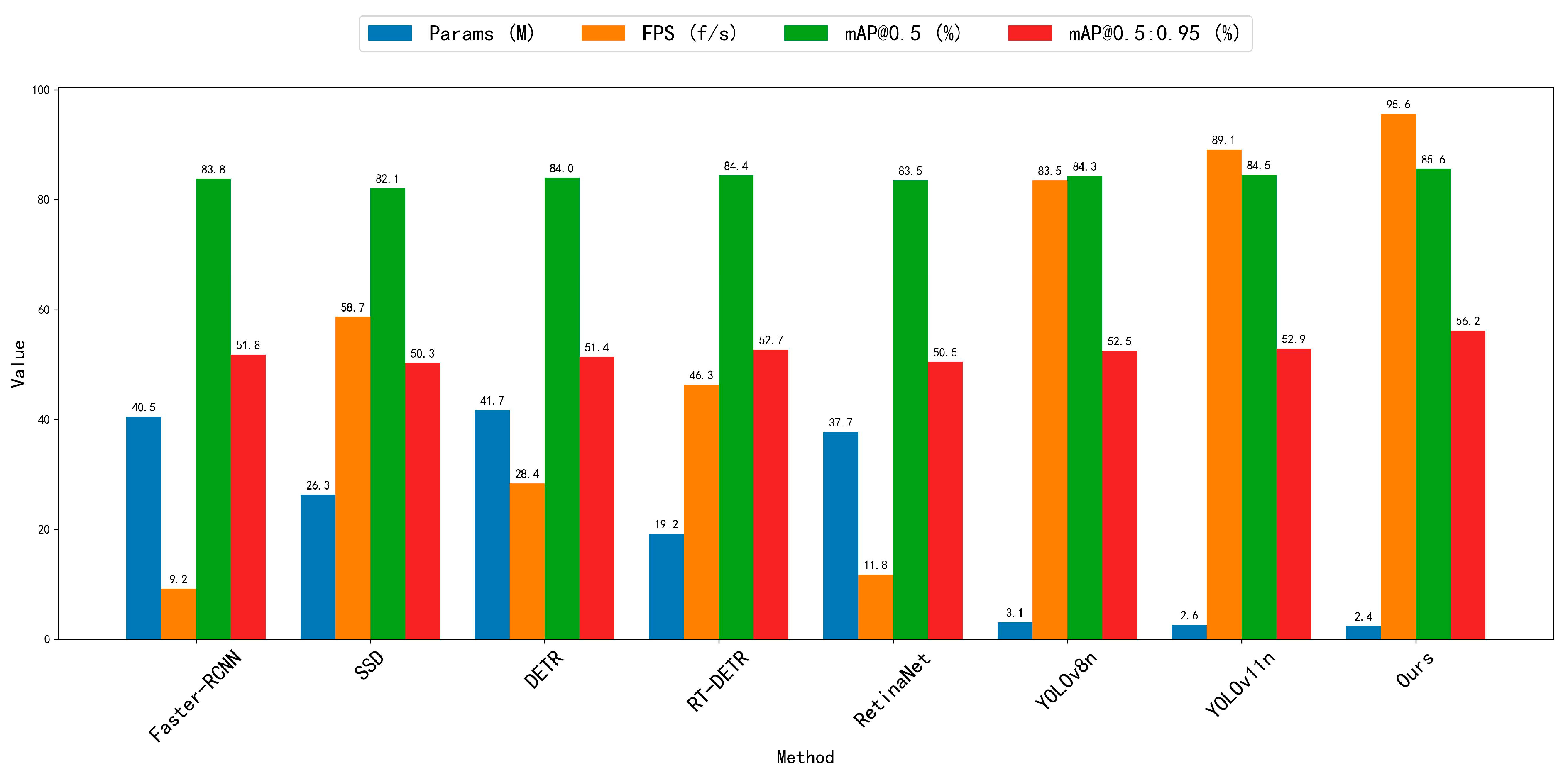

| Method | Params (M) | FLOPs (G) | FPS (f/s) | Pr (%) | Re (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|---|---|
| Faster-RCNN | 40.5 | 200.3 | 9.2 | 83.2 | 78.9 | 83.8 | 51.8 |
| SSD | 26.3 | 30.8 | 58.7 | 82.0 | 76.6 | 82.1 | 50.3 |
| DETR | 41.7 | 86.5 | 28.4 | 85.1 | 78.4 | 84.0 | 51.4 |
| RT-DETR | 19.2 | 56.3 | 46.3 | 90.9 | 75.6 | 84.4 | 52.7 |
| RetinaNet | 37.7 | 204.1 | 11.8 | 84.5 | 77.2 | 83.5 | 50.5 |
| YOLOv8n | 3.1 | 8.8 | 83.5 | 90.8 | 75.9 | 84.3 | 52.5 |
| YOLOv11n | 2.6 | 6.5 | 89.1 | 88.4 | 77.1 | 84.5 | 52.9 |
| Ours | 2.4 | 6.2 | 95.6 | 91.3 | 80.3 | 85.6 | 56.2 |
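The efficiency columns of the comparison table can be summarized as relative changes against a reference model. The sketch below uses the Params, FLOPs, and FPS values for YOLOv11n and the proposed model exactly as listed in the table; choosing YOLOv11n as the reference, and the helper name `relative_change`, are assumptions made for illustration.

```python
# (Params in M, FLOPs in G, FPS) copied from the comparison table.
EFFICIENCY = {
    "YOLOv8n": (3.1, 8.8, 83.5),
    "YOLOv11n": (2.6, 6.5, 89.1),
    "Ours": (2.4, 6.2, 95.6),
}


def relative_change(new, old):
    """Percentage change from `old` to `new` (negative means a reduction)."""
    return (new - old) / old * 100.0


def compare(rows, model, reference):
    """Relative change of each efficiency column of `model` versus `reference`."""
    labels = ("params", "flops", "fps")
    return {
        label: round(relative_change(m, r), 1)
        for label, m, r in zip(labels, rows[model], rows[reference])
    }


if __name__ == "__main__":
    print(compare(EFFICIENCY, "Ours", "YOLOv11n"))
    print(compare(EFFICIENCY, "Ours", "YOLOv8n"))
```

Negative values for `params` and `flops` indicate a smaller and cheaper model, and a positive value for `fps` indicates faster inference on the hardware listed in the environment table.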

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hu, T.; Qiu, J.; Zheng, L.; Yu, Z.; Liu, C. Coal Shearer Drum Detection in Underground Mines Based on DCS-YOLO. Electronics 2025, 14, 4132. https://doi.org/10.3390/electronics14204132
