4.2. Experimental Results

As shown in Table 4, in the comparative experiments with different embedding models, CompGCN [39], HittER [40], and SAttLE [41] each exhibit distinct roles in candidate entity scoring. Specifically, CompGCN [39] reduces model complexity by sharing relation embeddings and decomposing basis vectors, thereby ensuring computational efficiency and delivering stable performance. On both the FB15k-237 and CODEX-S datasets, the MCFR model with CompGCN [39] achieves better MRR and Hits@k metrics than traditional triple-based embedding models, providing a reliable baseline capability. In contrast, HittER [40] leverages a hierarchical Transformer architecture to capture multi-level semantic features of entities and relations in knowledge graphs, leading to stronger modeling ability compared to CompGCN [39]. On the FB15k-237 dataset, MCFR-HittER achieves an MRR of 0.501, outperforming MCFR-CompGCN at 0.462; similarly, on CODEX-S, MCFR-HittER reaches 0.528, exceeding the 0.519 achieved by MCFR-CompGCN.

Notably, SAttLE [41] demonstrates the most significant advantage among the three embedding models. By employing a self-attention mechanism, SAttLE [41] effectively captures fine-grained interaction patterns between entities and relations and achieves efficient feature representation in a low-dimensional embedding space, thereby substantially improving predictive performance. On the FB15k-237 dataset, MCFR-SAttLE achieves an MRR of 0.526, a 5.0% relative improvement over MCFR-HittER (0.501), with Hits@10 further increasing to 0.629. On the CODEX-S dataset, MCFR-SAttLE achieves an MRR of 0.565, also surpassing MCFR-HittER at 0.528, with Hits@10 improving to 0.742. These results indicate that SAttLE [41] not only outperforms CompGCN [39] and HittER [40] in overall accuracy but also demonstrates stronger generalization ability for long-tail entities. Overall, while CompGCN [39] provides an efficient structural modeling baseline and HittER [40] enhances hierarchical semantic representation, SAttLE [41] achieves a superior balance between efficiency and representational quality, making it the most advantageous embedding model for knowledge graph link prediction. In the following experiments, all reported results are based on the SAttLE [41] embedding model to consistently demonstrate the performance of the proposed MCFR framework.

Table 4 compares link prediction performance across several metrics on the FB15k-237 and CODEX-S datasets. The proposed MCFR model outperforms conventional triple-based methods, achieving notable improvements on both datasets. On the FB15k-237 dataset, the proposed model’s Mean Reciprocal Rank (MRR) surpasses that of established triple embedding methods such as RESCAL [14], TransE [13], DistMult [15], ComplEx [46], and RotatE [47]. Notably, the MRR of the proposed model exceeds that of the TransE model by 24.7%. This improvement is primarily due to the high expressive capability of SAttLE’s self-attention mechanism. In addition, MCFR’s semantic-enhanced reasoning and self-consistency scoring contribute to more precise modeling of entity-relation patterns. These advantages are particularly evident for complex and long-tail relations. As a result, the model demonstrates stronger entity-relation modeling capacity and significantly outperforms traditional approaches. These findings suggest that conventional triple-based embedding methods do not fully capture the intricate semantic information within knowledge graphs, whereas the proposed model achieves a substantial improvement in link prediction performance.
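For reference, MRR and Hits@k are both functions of the rank assigned to the correct entity for each test query. The following minimal Python sketch computes them from a list of 1-based ranks. The function names and the toy ranks are illustrative assumptions and are not taken from the evaluation code behind the reported results.

```python
def mean_reciprocal_rank(ranks):
    """Average of 1/rank over all test queries (ranks are 1-based)."""
    ranks = list(ranks)
    return sum(1.0 / r for r in ranks) / len(ranks)


def hits_at_k(ranks, k):
    """Fraction of test queries whose correct entity is ranked in the top k."""
    ranks = list(ranks)
    return sum(1 for r in ranks if r <= k) / len(ranks)


# Toy example (hypothetical ranks of the correct tail entity for five queries).
toy_ranks = [1, 2, 4, 10, 50]
print("MRR    :", round(mean_reciprocal_rank(toy_ranks), 3))
print("Hits@1 :", hits_at_k(toy_ranks, 1))
print("Hits@3 :", hits_at_k(toy_ranks, 3))
print("Hits@10:", hits_at_k(toy_ranks, 10))
```

Because MRR weights each query by the reciprocal of its rank, a gain in MRR is driven mostly by correct entities moving toward the top positions, whereas Hits@10 only records whether they enter the top ten.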

The MCFR model presented in this work demonstrates superior performance compared to text-based pre-training methods such as Pretrain-KGE [17] and KG-BERT [16], as evidenced by higher MRR and Hits@k metrics. Specifically, the MRR of the model on the FB15k-237 dataset is 0.526, an absolute gain of 0.194 (19.4 percentage points) over the 0.332 MRR achieved by Pretrain-KGE. Furthermore, the model’s enhanced performance on Hits@1, Hits@3, and Hits@10 metrics suggests its ability to more effectively leverage contextual information when reasoning about entities and relationships within knowledge graphs, thereby improving the overall accuracy of the reasoning process.

Path-based and GNN-based methods demonstrate strong capabilities in knowledge graph link prediction. For instance, NBFNet [52] achieves an MRR of 0.415 and Hits@10 of 0.599 on FB15k-237, and an MRR of 0.515 and Hits@10 of 0.659 on CODEX-S, indicating that path- and graph-based approaches can effectively capture graph structural information and perform multi-hop reasoning. However, our proposed MCFR models consistently outperform these methods. Specifically, MCFR-SAttLE achieves an MRR of 0.526 on FB15k-237, about 11 percentage points higher than NBFNet [52], with Hits@10 reaching 0.629; on CODEX-S, MRR rises to 0.565 and Hits@10 to 0.742.
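The gains quoted in this section can be read either as absolute differences in MRR or as relative improvements, and the two readings differ considerably. As a worked check, the short sketch below uses only FB15k-237 MRR values stated in the text; the helper names are illustrative.

```python
def absolute_gain(new, old):
    """Difference in the metric itself (0.01 corresponds to one percentage point)."""
    return new - old


def relative_gain(new, old):
    """Improvement expressed as a fraction of the baseline value."""
    return (new - old) / old


mcfr_sattle_mrr = 0.526  # FB15k-237 MRR reported for MCFR-SAttLE
baseline_mrr = {
    "MCFR-HittER": 0.501,
    "NBFNet": 0.415,
    "Pretrain-KGE": 0.332,
}

for name, mrr in baseline_mrr.items():
    abs_g = absolute_gain(mcfr_sattle_mrr, mrr)
    rel_g = relative_gain(mcfr_sattle_mrr, mrr)
    print(f"{name}: +{abs_g:.3f} absolute, +{100 * rel_g:.1f}% relative")
```

For these values the absolute gains are 0.025, 0.111, and 0.194, while the relative gains are roughly 5.0%, 26.7%, and 58.4%, so it matters which convention a quoted percentage follows.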

The MCFR model introduced in this study demonstrates substantial performance enhancements over the state-of-the-art LLM-based method, KICGPT [23], on the FB15k-237 dataset. Specifically, the model achieves an 11.4% increase in MRR, with Hits@1, Hits@3, and Hits@10 improving by 13.7%, 10.9%, and 7.5%, respectively. These results suggest that the proposed model more effectively harnesses the capabilities of LLMs, optimizing entity linking and reasoning processes. On the CODEX-S dataset, the model outperforms KICGPT [23] with a 5.2% higher MRR and increases in Hits@1, Hits@3, and Hits@10 by 1.1%, 3.2%, and 8.7%, respectively. Notably, the enhancement in Hits@10 underscores the model’s superior predictive performance for long-tail entities.

The experimental results demonstrate that the MCFR model proposed in this paper surpasses traditional triple-based, text-based, and LLM-based approaches. By fully leveraging the structural information within the knowledge graph and effectively enhancing the reasoning capabilities through a context augmentation strategy, the method is suitable for more complex link prediction tasks.

To further validate the effectiveness and scalability of the proposed MCFR framework, we additionally evaluate its performance on the larger and more diverse Wikidata5M dataset, which contains more complex and noisy relations compared to FB15k-237 and CODEX-S. As shown in Table 5, MCFR-SAttLE achieves an MRR of 0.492, substantially outperforming conventional triple-based methods such as TransE [13], DistMult [15], ComplEx [46], SimplE [56], and RotatE [47], as well as the text-enhanced KEPLER model [35]. These results indicate that MCFR’s structural reasoning capabilities generalize well to larger, noisier knowledge graphs.

4.3. Ablation Experiments

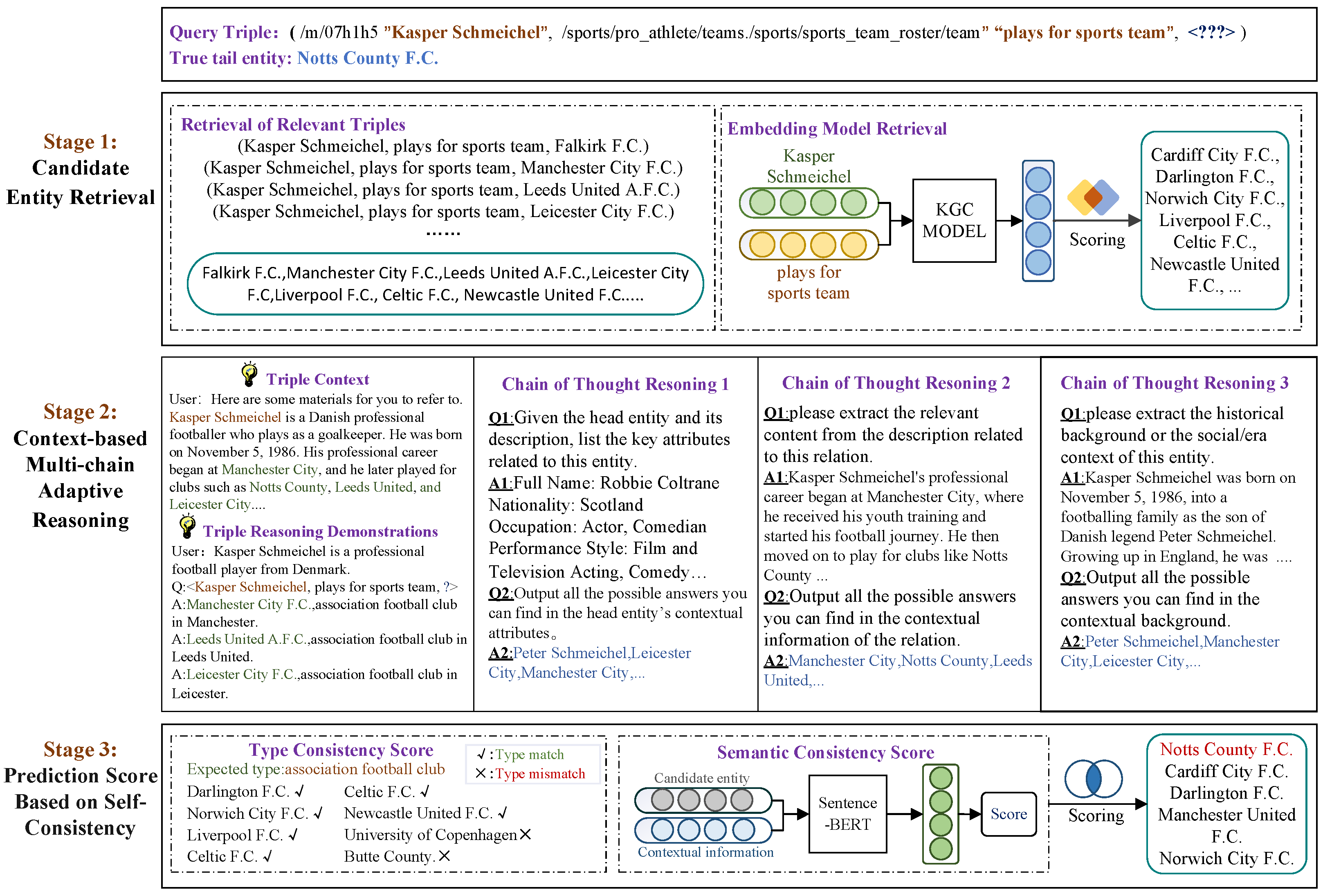

This section presents an ablation study to evaluate the contribution of each module to the MCFR model’s performance. The analysis includes the candidate entity extraction method, the context-based reasoning strategy, the scoring method for final candidate entities, and the effect of varying the number of candidate entities.

4.3.1. Influence of the Initial Candidate Entity Retrieval Method

Table 6 illustrates the use of two distinct candidate entity retrieval methods: one based on the embedding model and the other integrating relevant triples with the embedding model. These methods are compared to evaluate their performance in the link prediction task.

The proposed candidate entity retrieval method yields significant performance improvements across all evaluated metrics. On the FB15k-237 dataset, the method achieves an MRR of 0.526 and a Hits@10 score of 0.629. In comparison, the retrieval method based solely on the embedding model yields an MRR of 0.485 and a Hits@10 of 0.597. These results suggest that the candidate entity retrieval method, which integrates relevant triples and the embedding model, can more comprehensively retrieve candidate entities, thereby enhancing the overall predictive accuracy of the model.
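To illustrate why combining the two sources can retrieve candidates more comprehensively, the sketch below merges a candidate list derived from relevant triples with a ranked list from an embedding model. This is only one simple possibility: the precedence given to triple-derived candidates, the function name `merge_candidates`, and the entity identifiers are all assumptions of this sketch, not the integration procedure used by MCFR.

```python
def merge_candidates(triple_candidates, embedding_ranked, k):
    """Order-preserving, de-duplicated union of two candidate lists, cut to k.

    Assumption of this sketch: candidates found via relevant triples are
    placed ahead of the embedding model's ranked candidates.
    """
    merged = []
    seen = set()
    for entity in list(triple_candidates) + list(embedding_ranked):
        if entity not in seen:
            seen.add(entity)
            merged.append(entity)
    return merged[:k]


# Hypothetical example: "e3" only appears in related triples, so an
# embedding-only retrieval limited to its top 3 would miss it.
from_triples = ["e3", "e1"]
from_embedding = ["e1", "e2", "e4", "e5"]
print(merge_candidates(from_triples, from_embedding, k=3))
```

In this toy case the correct answer could be recovered only if it lies in the merged pool, which is the sense in which a broader retrieval step bounds the accuracy of the later reasoning stages.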

4.3.2. Influence of Context-Based Multi-Chain Fusion Reasoning

Table 7 presents the results of link prediction experiments conducted on three individual inference chains and a combined multi-chain inference strategy.

These experiments assess the impact of multi-chain inference on model performance. The results show that the individual chains perform at different levels and that combining them improves on each of them. For the FB15k-237 dataset, the MRR values for the individual inference chains are 0.436, 0.486, and 0.470, with the highest Hits@10 recorded at 0.591. In contrast, the multi-chain inference strategy, which integrates the three chains, raises the MRR to 0.526 and the Hits@10 to 0.629. This demonstrates that integrating multi-chain information significantly enhances the model’s capability to capture complex semantic structures and improves inference accuracy.

To further analyze the interaction among different reasoning chains, we conducted an ablation experiment by activating any two of the three chains.

As shown in Table 8, removing any chain leads to a noticeable performance drop compared to using all three chains. Specifically, on FB15k-237, removing the historical chain (i.e., using only the attribute–relation combination) causes a clear decline in MRR (0.526 → 0.436), while removing the relation chain (attribute–history) results in a moderate decrease. This suggests that the historical chain contributes most to enhancing contextual reasoning, while the attribute and relation chains provide complementary semantic features. A similar trend is observed on CODEX-S, demonstrating the necessity of multi-chain cooperation for robust reasoning.

4.3.3. Influence of Self-Consistency Prediction Score

To validate the self-consistency scoring mechanism, this paper compares it with type consistency and semantic consistency scoring. The experimental results are presented in Table 9.

The experimental results show that the model performs robustly once a scoring mechanism is introduced. Type consistency scoring primarily uses entity type information for screening; because it lacks sufficient contextual information, its improvement in prediction performance is limited. In contrast, semantic consistency scoring computes the embedding-based semantic similarity between candidate entities and triple descriptions, further enhancing inference accuracy, particularly on the CODEX-S dataset. Self-consistency scoring, when combined with the individual scoring approaches, improves the results further. On the FB15k-237 dataset, the MRR reaches 0.526 and Hits@10 attains 0.629; on the CODEX-S dataset, the MRR increases to 0.565 and Hits@10 reaches 0.742.

While the self-consistency prediction score has been shown to improve prediction reliability, it can also serve as a partial indicator of hallucinated triples, i.e., triples that appear plausible but are factually incorrect. By measuring the internal agreement among multiple prediction chains, inconsistent or low-confidence triples can be flagged. However, this mechanism cannot fully prevent fabricated relations, and inherent biases in LLMs (e.g., cultural or linguistic biases) may still affect prediction results. Addressing these risks in a comprehensive manner is left for future work, such as integrating external knowledge graph constraints or ensemble verification mechanisms.
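The idea of flagging predictions by internal agreement can be sketched as follows. The agreement measure used here (the fraction of chains whose candidate list contains an entity), the threshold, and all names are assumptions made for illustration; the actual self-consistency score of MCFR may be defined differently.

```python
from collections import Counter


def agreement_scores(chain_predictions):
    """Map each entity to the fraction of chains that proposed it."""
    num_chains = len(chain_predictions)
    counts = Counter()
    for candidates in chain_predictions:
        counts.update(set(candidates))  # count each entity once per chain
    return {entity: c / num_chains for entity, c in counts.items()}


def flag_low_agreement(chain_predictions, threshold=0.5):
    """Return entities proposed by fewer than `threshold` of the chains."""
    scores = agreement_scores(chain_predictions)
    return sorted(e for e, s in scores.items() if s < threshold)


# Hypothetical outputs of three reasoning chains for one query.
chains = [
    ["Paris", "Lyon"],
    ["Paris", "Nice"],
    ["Paris", "Lyon"],
]
print(agreement_scores(chains))
print(flag_low_agreement(chains))
```

An entity proposed by a single chain receives a low score and is flagged for closer inspection. As the paragraph above notes, agreement among chains does not guarantee factual correctness, since all chains can share the same bias.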

4.3.4. Influence of Different Numbers of Candidate Entities

Table 10 illustrates the impact of varying the number of candidate entities on the performance of the model. In the experimental setup, the number of candidate entities k is set to 10, 50, 100, and 200, respectively.

The experimental results demonstrate that the performance of the model improves as the number of candidate entities increases. On the FB15k-237 dataset, when k is increased from 10 to 50, the MRR improves from 0.361 to 0.526, Hits@1 from 0.268 to 0.464, and Hits@10 from 0.542 to 0.629, indicating a significant performance improvement. However, further increasing k leads to diminishing returns. When k is set to 100 and 200, the changes in MRR, Hits@1, Hits@3, and Hits@10 become relatively small, suggesting that the model performance tends to stabilize. A similar trend is observed on the CODEX-S dataset, where the MRR and Hits@k metrics gradually improve as k increases, but the gains narrow once k reaches 100 and 200.

Increasing the number of candidate entities can enhance performance, but it also increases the time and cost of LLM processing, and the improvement beyond 50 candidates is marginal. Consequently, k = 50 has been chosen, balancing model performance against excessive computational overhead and cost.

4.3.5. Sensitivity Analysis of Hyperparameters

To further assess the robustness of our method and ensure reproducibility, we conducted an ablation study on the two key hyperparameters that control the weighting of the components in the final scoring function. Recall that the predicted score for a candidate tail entity is computed as a weighted combination of two terms: the first hyperparameter balances the contribution from the fusion reasoning chains, and the second scales the self-consistency score. In our main experiments, both weights and the number of candidate entities per chain, k, are held fixed.

We varied the chain-fusion weight from 0.3 to 0.9 to observe its impact on the Hits@1, Hits@3, and MRR metrics on both the FB15k-237 and CODEX-S datasets. Similarly, we fixed the chain-fusion weight and varied the self-consistency weight from 0.1 to 0.7 to examine its influence.

As shown in Table 11, the performance is relatively stable for weight values between 0.5 and 0.7, indicating that the method is not overly sensitive to the exact hyperparameter selection. Extreme settings at either end of the tested range slightly degrade performance, as they under- or over-emphasize the chain scores relative to self-consistency.
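As an illustration only, a sensitivity sweep of this kind can be sketched under the assumption that the final score is a simple linear combination of a chain-fusion score and a self-consistency score. The linear form, the names `w_chain` and `w_sc`, the coupling `w_sc = 1 - w_chain`, and the candidate scores are all hypothetical choices for this sketch and should not be read as the scoring function of MCFR.

```python
def combined_score(chain_score, sc_score, w_chain, w_sc):
    """Assumed linear combination of the two score components."""
    return w_chain * chain_score + w_sc * sc_score


def rank_candidates(candidates, w_chain, w_sc):
    """Sort candidate names by combined score, best first."""
    return sorted(
        candidates,
        key=lambda name: combined_score(*candidates[name], w_chain, w_sc),
        reverse=True,
    )


# Hypothetical candidates: name -> (chain-fusion score, self-consistency score).
candidates = {
    "A": (0.9, 0.2),
    "B": (0.6, 0.8),
    "C": (0.3, 0.9),
}

# Sweep the chain weight over the range explored in the sensitivity analysis.
for w_chain in (0.3, 0.5, 0.7, 0.9):
    w_sc = 1.0 - w_chain  # coupling assumed for this sketch only
    print(w_chain, rank_candidates(candidates, w_chain, w_sc))
```

In this toy setting the top-ranked candidate changes only when the weighting shifts from one end of the range to the other, which is the kind of behavior a sensitivity table is meant to expose.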

Reproducibility Note: The candidate entity list for each chain is obtained directly from ChatGPT using the prompt templates provided in Appendix A. For clarity, we parse the LLM outputs by extracting the top-k entities mentioned explicitly in the output, ignoring additional commentary or explanations. The chosen value of k ensures a sufficiently diverse candidate pool for MI estimation and final scoring. By providing both the prompt templates and explicit parsing rules, all experiments can be fully reproduced.
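A parsing rule of this kind can be sketched as follows. The sketch assumes that the LLM returns its candidates as a numbered or bulleted list with one entity per line and treats every other line as commentary; this output format, the regular expression, and the function name `parse_top_k` are assumptions of this example rather than the exact rules used in the experiments.

```python
import re

# Matches list items such as "1. Paris", "2) Lyon", "- Nice", or "* Nice".
_LIST_ITEM = re.compile(r"^\s*(?:\d+[.)]|[-*])\s+(.*\S)\s*$")


def parse_top_k(llm_output, k):
    """Return up to k distinct entities from list-style lines, in order."""
    if k <= 0:
        return []
    entities = []
    for line in llm_output.splitlines():
        match = _LIST_ITEM.match(line)
        if match is None:
            continue  # skip commentary and explanations
        name = match.group(1)
        if name not in entities:
            entities.append(name)
        if len(entities) == k:
            break
    return entities


# Hypothetical LLM response.
response = """Here are the most likely candidates:
1. Paris
2. Lyon
- Nice
I hope this helps!"""
print(parse_top_k(response, 2))
```

Keeping the rule deterministic and independent of the free-text commentary means that the same stored LLM responses always yield the same candidate lists.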

In summary, this sensitivity analysis validates that our chosen values for the two scoring weights and for k represent a reasonable trade-off between chain contributions and self-consistency, and the results are robust across a moderate range of hyperparameter values.

4.3.6. Efficiency and Cost Analysis

To further evaluate the practical applicability of MCFR, we provide a quantitative analysis of its computational cost. Since our method calls the LLM for each candidate entity across multiple reasoning chains, the computational cost is non-negligible. We measure the efficiency from three aspects: runtime, total token consumption, and estimated monetary cost.

We record the total number of tokens consumed by ChatGPT for generating candidate entities for all test triples in FB15k-237, CODEX-S and Wikidata5M. The runtime is measured on a single GPU workstation with 32 GB memory. The estimated monetary cost is calculated based on the token usage and the prevailing API pricing.

As shown in Table 12, the token consumption and runtime scale linearly with the number of test triples and the number of candidate entities per chain. While MCFR requires multiple LLM calls, the actual cost remains moderate for academic-scale datasets. By adjusting the candidate pool size k, users can trade off between computational cost and predictive performance.
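The linear scaling can be made concrete with a back-of-the-envelope estimate. In the sketch below, the three chains follow the setup described above, while the tokens consumed per candidate and the price per 1,000 tokens are placeholder values chosen for illustration; they are not the measured figures from Table 12 or actual API prices.

```python
def estimate_llm_cost(num_triples, k, num_chains=3,
                      tokens_per_candidate=40, usd_per_1k_tokens=0.002):
    """Estimate total tokens and cost, assuming both grow linearly.

    tokens_per_candidate and usd_per_1k_tokens are hypothetical placeholders.
    """
    total_tokens = num_triples * num_chains * k * tokens_per_candidate
    cost_usd = total_tokens / 1000 * usd_per_1k_tokens
    return total_tokens, cost_usd


# Hypothetical workload: 1,000 test triples with k = 50 candidates per chain.
tokens, cost = estimate_llm_cost(num_triples=1000, k=50)
print(f"{tokens} tokens, about ${cost:.2f}")

# Doubling either the number of triples or k doubles the estimate.
print(estimate_llm_cost(num_triples=2000, k=50)[0] == 2 * tokens)
print(estimate_llm_cost(num_triples=1000, k=100)[0] == 2 * tokens)
```

Under this model, halving k halves the estimated cost, which makes the trade-off between the candidate pool size and predictive performance straightforward to budget.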

This analysis demonstrates that, despite the LLM calls for each candidate, MCFR maintains a reasonable balance between high predictive accuracy and computational cost, supporting its practical applicability in knowledge graph link prediction tasks.