DataSense: A Real-Time Sensor-Based Benchmark Dataset for Attack Analysis in IIoT with Multi-Objective Feature Selection

Abstract

1. Introduction

- Designed and implemented a realistic IIoT testbed incorporating diverse industrial sensors, IoT devices, and network infrastructure to replicate real-world industrial environments.

- Collected synchronized time-series sensor data and network traffic streams from all devices, producing a comprehensive dataset for in-depth security analysis.

- Executed and recorded 50 distinct attack types targeting network infrastructure, sensors, and devices, spanning multiple categories to capture diverse and realistic threat scenarios.

- Proposed and empirically validated an innovative multi-objective approach to feature selection for anomaly detection in IIoT environments, improving detection accuracy while reducing computational overhead by isolating the most relevant features from both sensor and network data streams.

- Developed a comprehensive real-time analysis benchmark integrating machine learning and deep learning methods for accurate detection and classification of cyberattacks in streaming data environments.

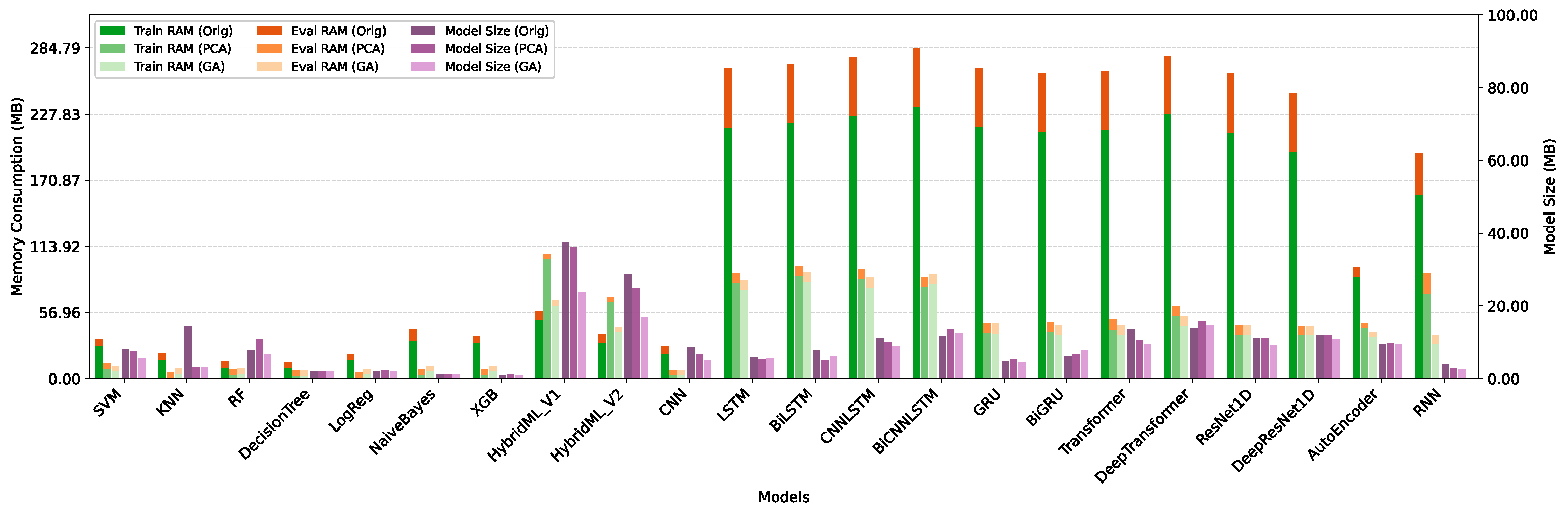

- Performed meticulous experiments to profile resource utilization of the proposed approaches under different operational scenarios, demonstrating the effectiveness of the feature selection method and comparing resource usage across multiple detection techniques.

2. Related Work

2.1. Intrusion and Anomaly Detection Datasets

2.2. Malware Detection Datasets

2.3. Industrial Systems

2.3.1. Water Treatment Systems

2.3.2. Predictive Maintenance and Fault Detection

2.3.3. Gas Systems

2.3.4. Environmental Monitoring

2.4. Time Series Analysis

2.5. Research Gap

3. The Proposed DataSense Dataset

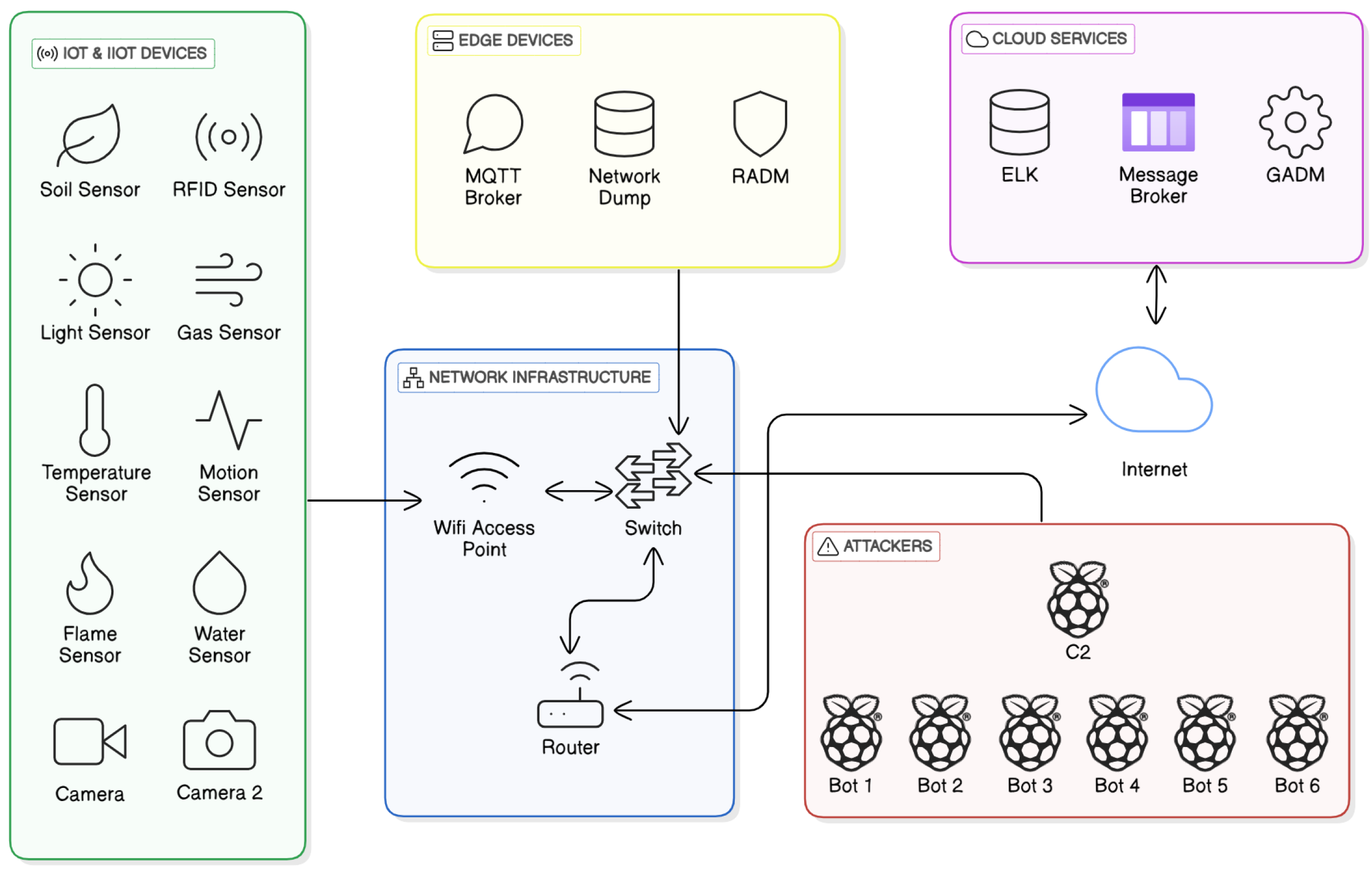

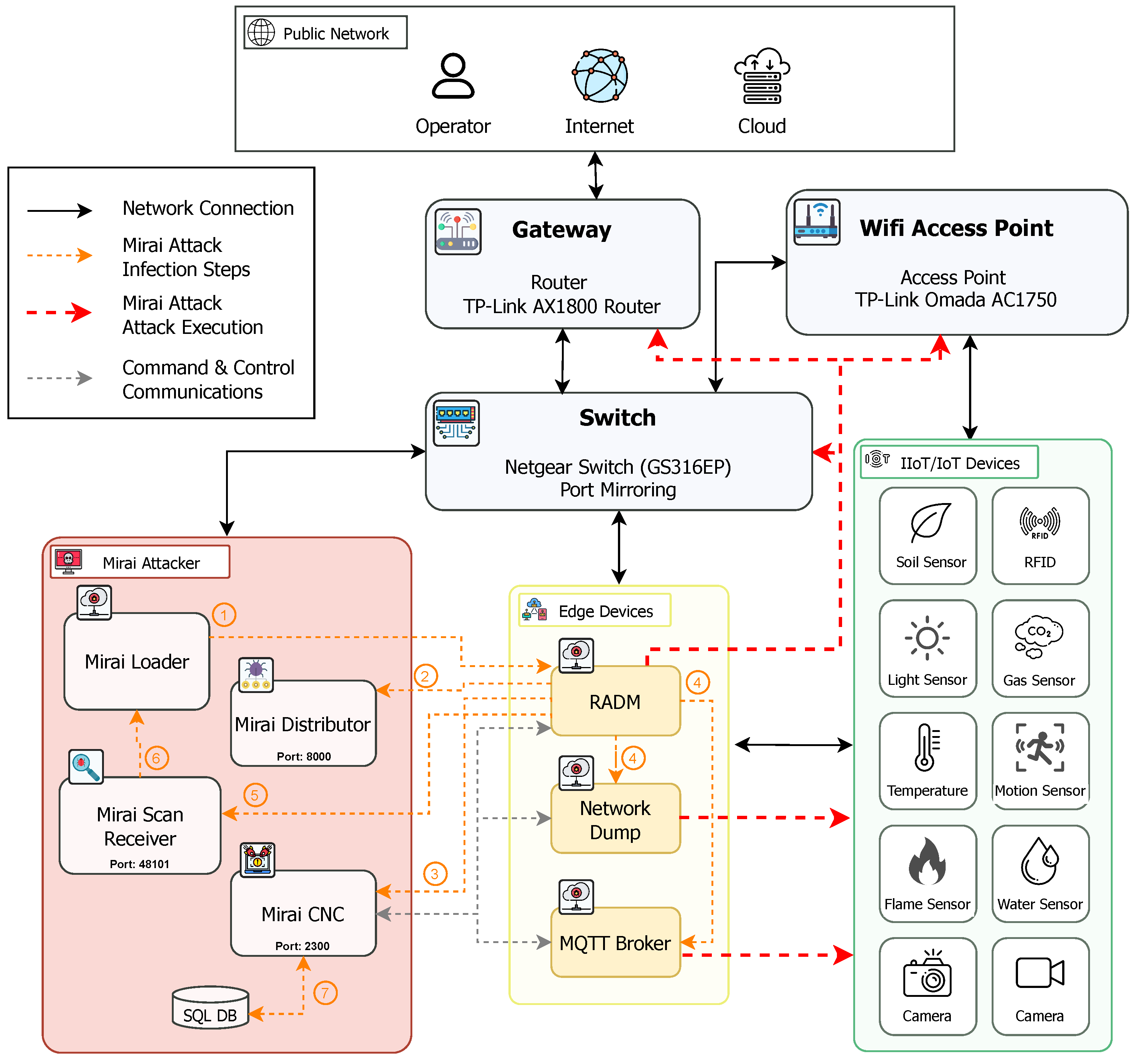

3.1. IoT and IIoT Lab

3.2. IIoT Testbed

3.2.1. IIoT and IoT Layer

3.2.2. Network Infrastructure

3.2.3. Edge Layer

3.2.4. Cloud Layer

3.2.5. Attacker Layer

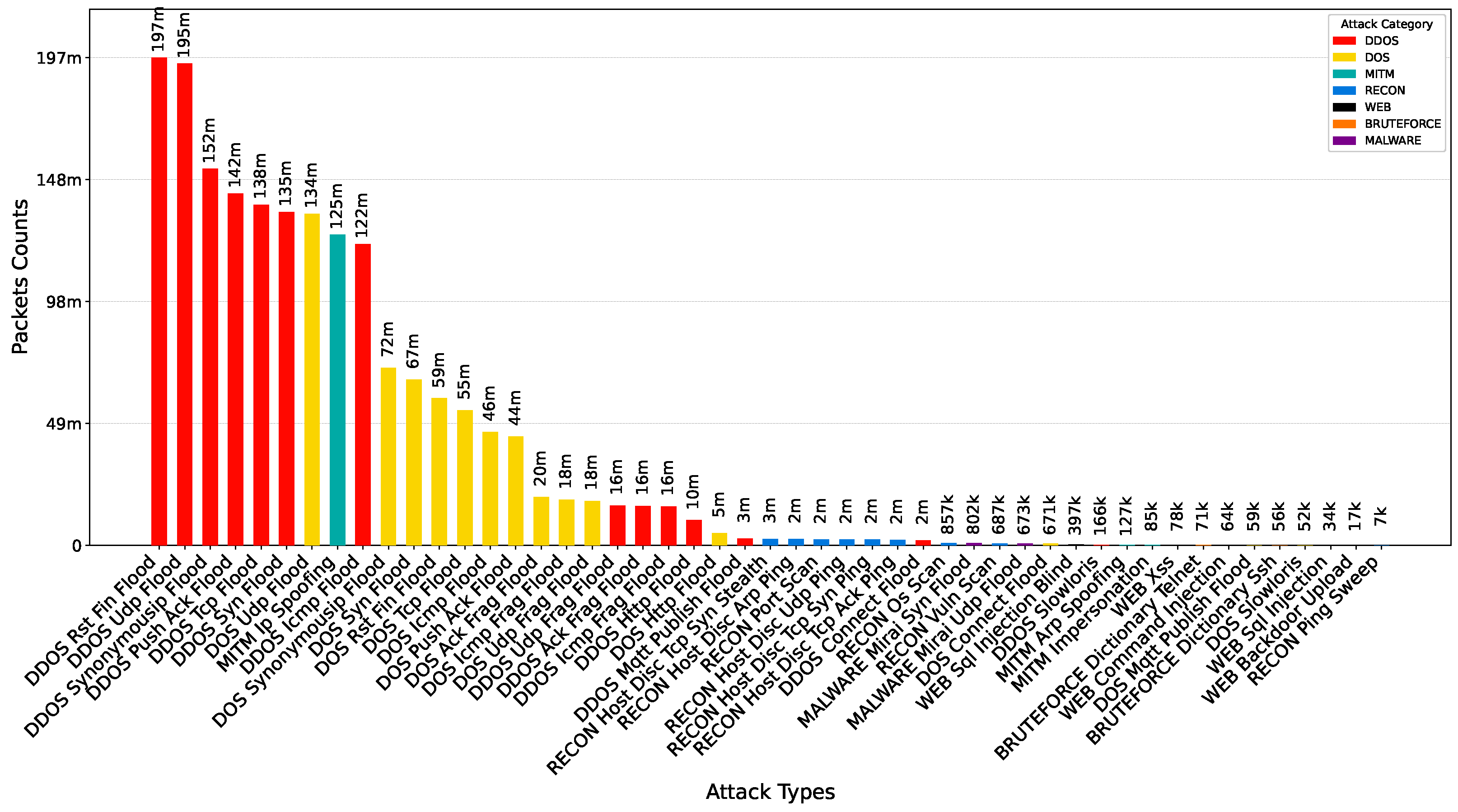

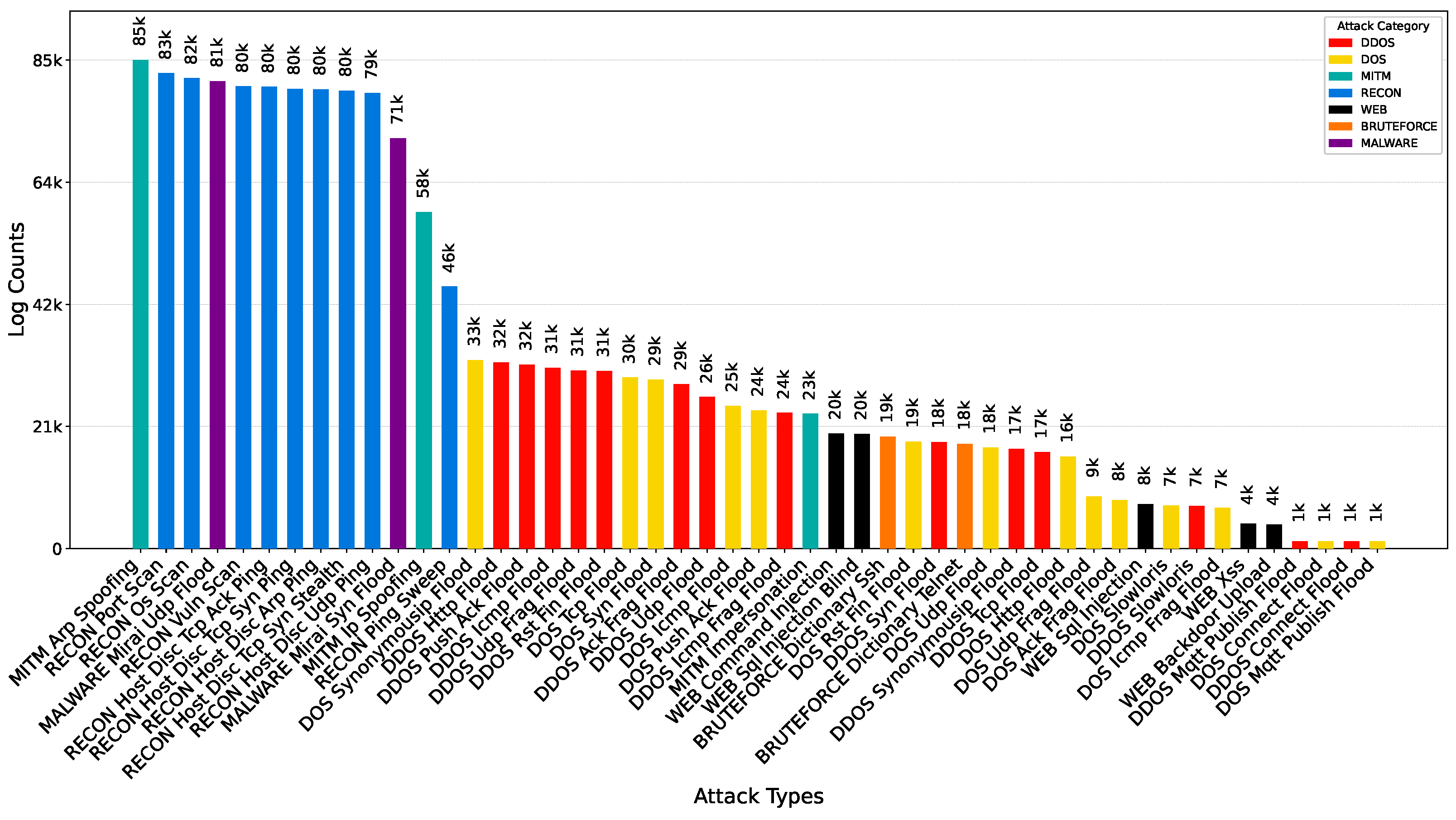

3.3. Data Collection

3.4. Benign Data Generation

3.5. Attack Data Generation

3.5.1. Execution of DoS and DDoS Attacks

- ACK Fragmentation: Uses a small number of maximum-sized packets to impair network performance. These fragmented packets often pass through network components such as routers and firewalls, which typically do not reassemble packets.

- Slowloris: An application-layer DoS attack that keeps many connections to the target web server open by sending partial HTTP requests, thereby exhausting the server’s resources.

- TCP/UDP/ICMP/HTTP Flood: Overwhelms the target device with excessive volumes of TCP, UDP, ICMP, or HTTP traffic to exhaust its processing capacity.

- RST-FIN Flood: Continuously sends TCP packets with the RST and FIN flags to degrade network performance by forcing connection terminations.

- PSH-ACK Flood: Targets server performance by sending floods of TCP packets with PSH and ACK flags, disrupting normal communication.

- UDP Fragmentation: A variation of UDP flooding that utilizes fragmented packets to consume more bandwidth with fewer packets.

- ICMP Fragmentation: Employs fragmented IP packets containing segments of ICMP messages to bypass network defenses and consume resources.

- TCP SYN Flood: A TCP-based attack that generates a high volume of SYN requests without completing the three-way handshake, causing the server to accumulate half-open connections.

- Synonymous IP Flood: This attack sends spoofed TCP-SYN packets in which the source and destination IP addresses are set to the target’s own address, causing the server to expend resources processing self-directed, invalid traffic.
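Several of the flood variants above differ mainly in the TCP flag combination they repeat (SYN, RST+FIN, PSH+ACK) or, for the Synonymous IP flood, in setting the source address equal to the destination. The minimal sketch below decodes the TCP flag byte and maps a packet to one of these signatures; it assumes packets have already been parsed into hypothetical `(src_ip, dst_ip, flags)` tuples, and the helper names are illustrative rather than part of the DataSense tooling.

```python
from collections import Counter

# TCP header flag bits as defined in the TCP specification.
TCP_FLAGS = {"FIN": 0x01, "SYN": 0x02, "RST": 0x04, "PSH": 0x08, "ACK": 0x10, "URG": 0x20}


def flag_names(flags: int) -> frozenset:
    """Decode the TCP flag byte into a set of flag names."""
    return frozenset(name for name, bit in TCP_FLAGS.items() if flags & bit)


def flag_signature(src_ip: str, dst_ip: str, flags: int) -> str:
    """Map one TCP packet to the flag pattern repeated by a flood variant.

    Hypothetical helper: a single packet is never evidence of an attack on its
    own; only the volume of a signature within a time window is informative.
    """
    names = flag_names(flags)
    if names == {"SYN"}:
        # Source == destination is the pattern of the Synonymous IP flood.
        return "syn-self" if src_ip == dst_ip else "syn"
    if {"RST", "FIN"} <= names:
        return "rst-fin"
    if names == {"PSH", "ACK"}:
        return "psh-ack"
    return "other"


def signature_counts(packets):
    """Count signatures over (src_ip, dst_ip, flags) tuples from one time window."""
    return Counter(flag_signature(s, d, f) for s, d, f in packets)
```

In practice, per-window counts of each signature would be compared against a benign baseline; a legitimate SYN-ACK (`0x12`), for example, falls into `"other"`.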

3.5.2. Execution of Reconnaissance Attacks

- Ping Sweep: In this technique, ICMP Echo Request packets are sent to a range of IP addresses to determine which hosts are active; devices that respond with ICMP Echo Reply packets are identified as online and reachable.

- OS Scan: Also referred to as operating system fingerprinting, this technique seeks to identify the specific operating system and its release version on a target device by analyzing its network responses and the behavior of open ports and services.

- Vulnerability Scan: This automated process probes devices and systems for known security weaknesses. It identifies exploitable flaws that could be leveraged in subsequent attacks, thereby supporting risk assessment and the prioritization of targets.

- Port Scan: This attack is employed to determine whether network ports on a target device are open, closed, or filtered. The attacker transmits a sequence of packets to multiple ports and analyzes the responses to identify available services and potential attack vectors.

- Host Discovery: This foundational step in many attacks involves identifying all active hosts within a network. Various techniques are employed to enumerate IP addresses of connected devices, providing a comprehensive view of the network environment.
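Ping sweeps and host discovery contact many distinct hosts from one source, while port scans contact many distinct ports on one host. The sketch below computes both fan-out counts for a single time window, which relates to the "Port Diversity" style of features mentioned in Section 4. It assumes hypothetical `(src_ip, dst_ip, dst_port)` flow tuples, and the thresholds are placeholders that would need tuning on benign traffic.

```python
from collections import defaultdict


def fan_out(flows):
    """Per-window fan-out statistics from (src_ip, dst_ip, dst_port) tuples.

    Returns two dicts:
      hosts_per_src[src]         -> distinct destination hosts (ping sweep / host discovery)
      ports_per_pair[(src, dst)] -> distinct destination ports (port scan)
    ICMP has no ports, so ICMP flows are assumed to carry a placeholder port of 0.
    """
    hosts = defaultdict(set)
    ports = defaultdict(set)
    for src, dst, dport in flows:
        hosts[src].add(dst)
        ports[(src, dst)].add(dport)
    hosts_per_src = {s: len(h) for s, h in hosts.items()}
    ports_per_pair = {k: len(p) for k, p in ports.items()}
    return hosts_per_src, ports_per_pair


def suspicious(flows, host_threshold=50, port_threshold=100):
    """Return (sweeping sources, scanned (src, dst) pairs); thresholds are hypothetical."""
    hosts_per_src, ports_per_pair = fan_out(flows)
    sweepers = sorted(s for s, n in hosts_per_src.items() if n >= host_threshold)
    scanners = sorted(k for k, n in ports_per_pair.items() if n >= port_threshold)
    return sweepers, scanners
```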

3.5.3. Execution of Web-Based Attacks

- SQL Injection: This attack exploits web application input fields to inject malicious SQL statements, aiming to gain unauthorized access to the underlying database, retrieve sensitive information, or execute arbitrary queries.

- Command Injection: Similar in concept to SQL injection, this attack targets system-level commands by injecting malicious input into web forms or parameters, aiming to execute unauthorized commands on the host operating system.

- Backdoor Malware: Involves the installation of malicious software on the target system to establish a covert entry point. This enables persistent unauthorized access for executing malicious operations or exfiltrating data.

- Uploading Attack: Exploits vulnerabilities in file upload mechanisms of web applications to upload harmful files (e.g., scripts or executables). Once uploaded, these files can be executed to compromise the host system or escalate privileges.

- Cross-Site Scripting (XSS): This attack enables adversaries to inject malicious client-side scripts into web pages viewed by other users. Such scripts can be used to steal session cookies, redirect user traffic, or alter web content to deceive users and harvest sensitive information.

- Browser Hijacking: These attacks alter web browser configurations, including the homepage, search engine, or bookmarks, in order to redirect users to malicious sites or inject unwanted advertisements. The primary objective is often financial gain or data theft.

3.5.4. Execution of Spoofing and Man-in-the-Middle (MitM) Attacks

- ARP Spoofing: This attack compromises Address Resolution Protocol (ARP) tables by transmitting forged ARP messages that bind the attacker’s MAC address to the IP address of a legitimate device. Consequently, network traffic intended for the legitimate device is rerouted to the attacker, enabling eavesdropping, data tampering, or service disruption.

- IP Spoofing: In this attack, the attacker forges the source IP address of packets to make them appear as if they originate from a trusted device. This enables the attacker to bypass IP-based access controls, inject malicious data into ongoing sessions, or initiate further attacks without revealing their true identity.

- Impersonation Attack: Leveraging information obtained during reconnaissance, the attacker mimics a legitimate device within the network. By forging identification attributes or communication patterns, the attacker sends data on behalf of the impersonated device, potentially misleading other systems or users and facilitating unauthorized data access or manipulation.
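ARP spoofing works by binding the attacker's MAC address to another device's IP address, so one simple defensive check is to watch for an IP address whose advertised MAC changes. The sketch below compares observed ARP replies against a static inventory such as the device table in this paper (the router and Attacker0 MAC addresses in the test are taken from that table). The function name and input format are hypothetical, and legitimate events such as DHCP reassignment or NIC replacement would also trigger it.

```python
def arp_binding_conflicts(arp_replies, trusted=None):
    """Flag ARP replies whose sender MAC contradicts an earlier IP-to-MAC binding.

    arp_replies: iterable of (sender_ip, sender_mac) from observed ARP replies.
    trusted:     optional static IP -> MAC inventory; untrusted IPs are learned
                 from the first reply seen for them.
    Returns a list of (ip, expected_mac, observed_mac) tuples.
    """
    bindings = {ip: mac.upper() for ip, mac in (trusted or {}).items()}
    conflicts = []
    for ip, mac in arp_replies:
        mac = mac.upper()
        # setdefault keeps the existing binding or records the first one seen.
        expected = bindings.setdefault(ip, mac)
        if expected != mac:
            conflicts.append((ip, expected, mac))
    return conflicts
```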

3.5.5. Execution of Brute-Force Attacks

- Telnet Brute-Force: Attempts to access devices via Telnet using a predefined list of common credentials.

- SSH Brute-Force: Targets SSH services by automating login attempts with a dictionary of username–password pairs.
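Both brute-force variants produce many failed logins from one source in a short period. Assuming failed-login events can be extracted (for example from Telnet or SSH authentication logs) as hypothetical `(src_ip, timestamp)` pairs with non-decreasing timestamps, a sliding-window counter is a minimal way to flag them; the window length and failure limit below are illustrative values only.

```python
from collections import defaultdict, deque


class BruteForceMonitor:
    """Sliding-window counter of failed logins per source IP (hypothetical helper)."""

    def __init__(self, window_s: float = 60.0, max_failures: int = 10):
        self.window_s = window_s
        self.max_failures = max_failures
        self._events = defaultdict(deque)  # src_ip -> timestamps of recent failures

    def record_failure(self, src_ip: str, ts: float) -> bool:
        """Record one failed login; return True if the source exceeds the limit."""
        q = self._events[src_ip]
        q.append(ts)
        # Drop failures that fall outside the window ending at ts.
        while q and ts - q[0] > self.window_s:
            q.popleft()
        return len(q) >= self.max_failures
```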

3.5.6. Execution of Malware (Mirai) Attacks

3.5.7. Mirai Malware Infection Phase

- Step 1: Credential Brute-Force via Telnet. The Mirai Loader targets a potential victim by initiating a Telnet connection and attempting to gain access through a brute-force attack on default or weak credentials. This exploits the fact that Telnet, an unencrypted remote-login protocol, is commonly left enabled on IoT devices.

- Step 2: Malware Deployment. Upon successful access, the Mirai Distributor transfers the Mirai executable to the compromised device using an HTTP connection. The executable is then loaded and executed on the device.

- Step 3: Establishing CNC Communication. The infected device now operates under the control of the Mirai malware. It connects to the Mirai CNC server, which issues commands to coordinate further malware propagation and manage the infected botnet. All communication with the CNC server is conducted over Telnet in encoded binary format.

- Step 4: Network Scanning for New Victims. The infected device initiates a scanning process targeting random IP addresses within the network, seeking additional vulnerable devices. It attempts to authenticate to these devices using the same brute-force credential attack over Telnet.

- Step 5: Reporting to Scan Receiver. When a new vulnerable device is discovered and successfully accessed, the infected device sends the target’s IP address, port, and credentials to the Mirai Scan Receiver.

- Step 6: Infection Propagation. The Mirai Scan Receiver relays the newly discovered victim information to the Mirai Loader. The infection cycle then restarts from Step 1, targeting the new device.

- Step 7: CNC Administration. A MySQL database is employed to support administrative operations of the Mirai CNC. It includes tables for user authentication to the CNC terminal, command execution logs, and IP whitelisting. This enables the botnet administrator to control access, review command history, and define IPs that are either excluded from or targeted for attacks.

3.5.8. Mirai Malware Attack Execution Phase

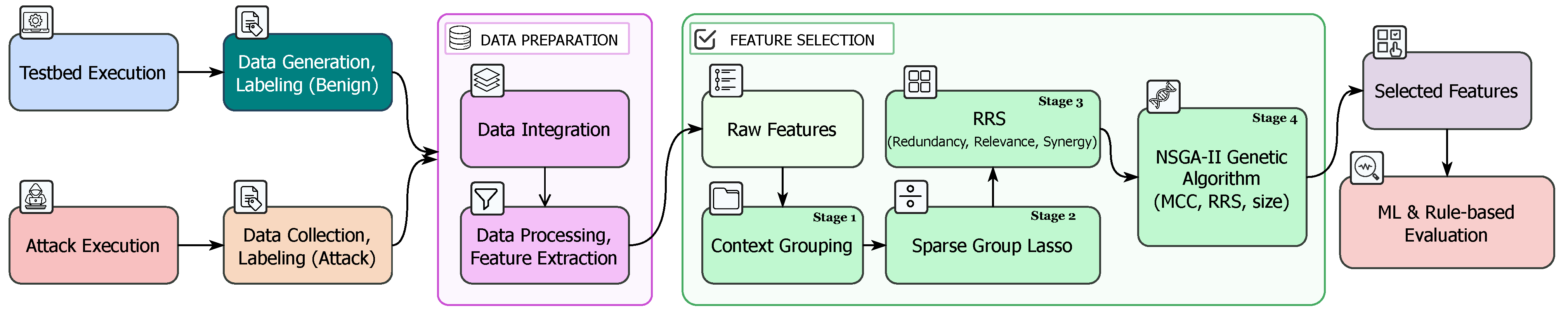

4. From Data Generation to Hierarchical Information-Driven Feature Selection

4.1. Proposed Hierarchical Multi-Stage Information-Driven Feature Selection Framework

- Dimensionality reduction: fewer input features shorten training time and reduce memory usage, which is crucial for resource-constrained IIoT devices, and help mitigate the curse of dimensionality that degrades distance-based methods.

- Noise filtering: grouping features based on domain knowledge helps suppress irrelevant or spurious correlations that can obscure meaningful patterns in the data.

- Interpretability: aggregating features into semantically meaningful categories (e.g., “Header Flags” or “Port Diversity”) improves transparency, enabling security practitioners to better understand and trust detection outcomes.

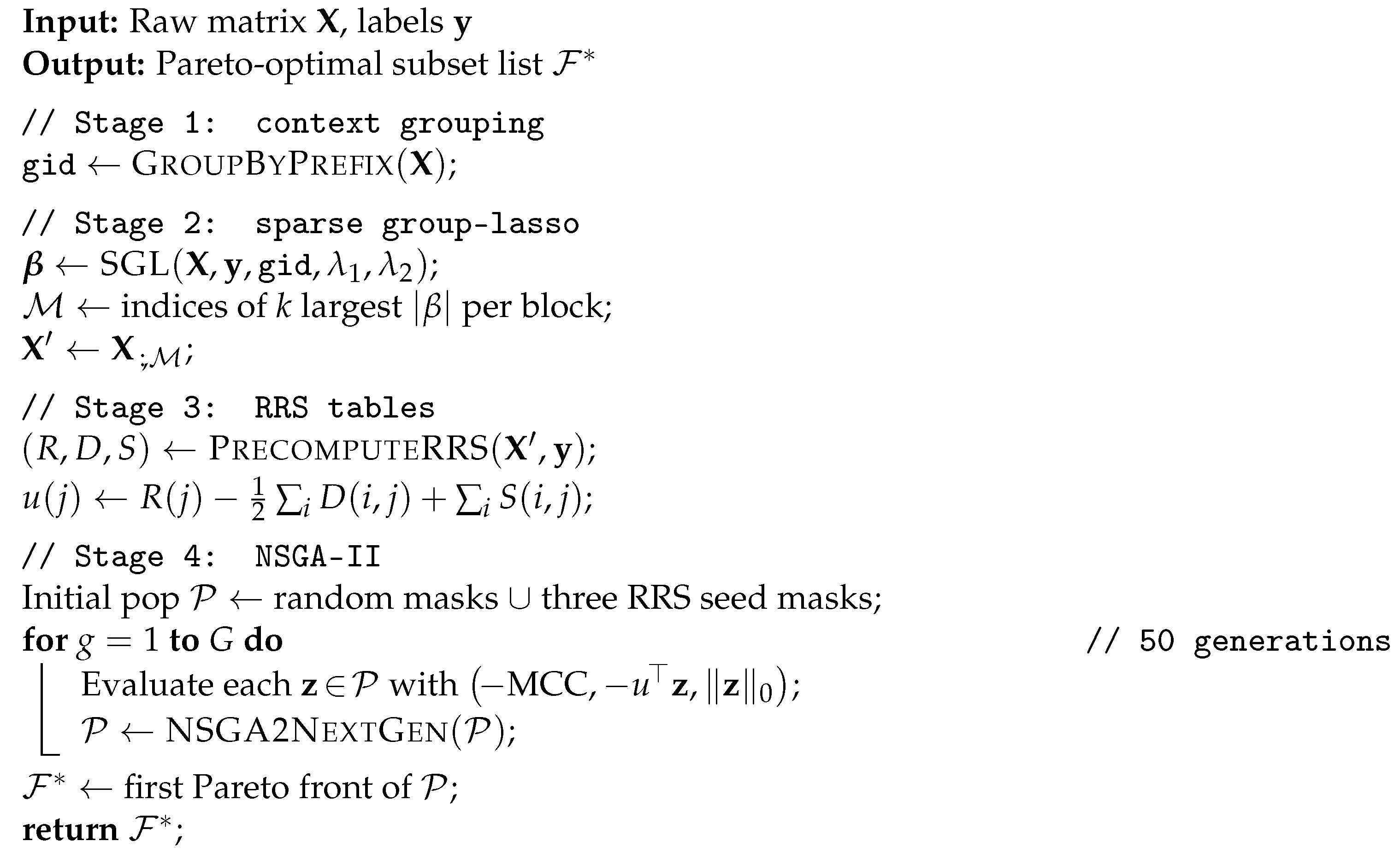

4.2. Overview of Hierarchical Information-Driven Feature Selection

- Stage 1: Context Grouping. Raw features are partitioned into semantic blocks (e.g., “Packet Size”, “Header Flags”) using rule-based name prefixes; the output is an index vector that assigns each feature to its block.

- Stage 4: NSGA-II Optimization [69]. Each candidate bit-string mask is scored with a three-component objective vector (MCC, RRS score, and subset size; Section 4.7.1), which NSGA-II optimizes via two-point crossover, RRS-biased mutation, and crowding-distance selection. The algorithm returns a Pareto set of non-dominated masks that trade off accuracy, information value, and subset size.
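Stage 4 relies on two standard NSGA-II building blocks: non-dominated sorting, which ranks candidate masks into Pareto fronts, and crowding distance, which prefers masks in sparsely populated regions of a front. The pure-Python sketch below implements both under a minimization convention. It does not reproduce the paper's two-point crossover or RRS-biased mutation, and the objective tuples in the test (negated MCC, negated RRS, subset size) are hypothetical values.

```python
from typing import Dict, List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto-dominates b when every objective is minimized."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_sort(objs: List[Sequence[float]]) -> List[List[int]]:
    """Fast non-dominated sorting: returns fronts as lists of solution indices."""
    n = len(objs)
    dominated = [[] for _ in range(n)]  # dominated[i]: solutions that i dominates
    counts = [0] * n                    # counts[i]: number of solutions dominating i
    fronts: List[List[int]] = [[]]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            if dominates(objs[i], objs[j]):
                dominated[i].append(j)
            elif dominates(objs[j], objs[i]):
                counts[i] += 1
        if counts[i] == 0:
            fronts[0].append(i)
    k = 0
    while fronts[k]:
        nxt = []
        for i in fronts[k]:
            for j in dominated[i]:
                counts[j] -= 1
                if counts[j] == 0:
                    nxt.append(j)
        k += 1
        fronts.append(nxt)
    return fronts[:-1]  # drop the trailing empty front


def crowding_distance(objs: List[Sequence[float]], front: List[int]) -> Dict[int, float]:
    """Crowding distance of each solution within one front (boundary points get inf)."""
    dist = {i: 0.0 for i in front}
    if len(front) <= 2:
        return {i: float("inf") for i in front}
    for k in range(len(objs[front[0]])):
        ordered = sorted(front, key=lambda i: objs[i][k])
        lo, hi = objs[ordered[0]][k], objs[ordered[-1]][k]
        dist[ordered[0]] = dist[ordered[-1]] = float("inf")
        if hi == lo:
            continue
        # Normalized gap between each interior point's neighbors along objective k.
        for a, i, b in zip(ordered, ordered[1:], ordered[2:]):
            dist[i] += (objs[b][k] - objs[a][k]) / (hi - lo)
    return dist
```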

Algorithm 1: Context-aware multi-stage feature selection.

4.3. Input: Raw Feature Matrix

- Network-centric metrics: including byte counts, TCP/IP flag combinations, packet inter-arrival times, directional flow counts, and sets of unique IP/MAC/port values.

- Sensor and log metrics: covering sensor message intervals, value statistics (mean, max, min, standard deviation), and categorical distributions (e.g., type counts).
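The context-grouping stage partitions these network-centric and sensor metrics into semantic blocks using rule-based name prefixes and emits an index vector. The sketch below shows one way such prefix rules could be applied; the feature names, prefixes, and block labels are hypothetical and are not the actual DataSense column names or rules.

```python
def group_features(names, rules, default="Other"):
    """Assign each feature to a semantic block by name prefix.

    names: feature column names.
    rules: ordered list of (prefix_tuple, block_label); the first match wins.
    Returns (block_labels, index_vector) where index_vector[j] is the block id of names[j].
    """
    labels, index = [], []
    for name in names:
        # str.startswith accepts a tuple of prefixes.
        label = next((blk for prefixes, blk in rules if name.startswith(prefixes)), default)
        if label not in labels:
            labels.append(label)
        index.append(labels.index(label))
    return labels, index


# Hypothetical prefix rules, for illustration only.
RULES = [
    (("tcp_flag_", "flag_"), "Header Flags"),
    (("pkt_len_", "bytes_"), "Packet Size"),
    (("iat_",), "Inter-Arrival Time"),
    (("uniq_src_port", "uniq_dst_port"), "Port Diversity"),
    (("sensor_",), "Sensor Statistics"),
]
```

The resulting index vector is the kind of group structure a group-sparse penalty can operate on, since it tells the penalty which columns belong together.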

4.4. Context Grouping

4.5. Sparse Group Lasso for Hierarchical Feature Selection

4.6. Relevance–Redundancy–Synergy (RRS) Scoring

4.6.1. Composite Score

4.6.2. Integration in Genetic Optimization

4.7. Multi-Objective Genetic Algorithm Guided by RRS

4.7.1. Objective Vector

- MCC is the Matthews correlation coefficient achieved by a lightweight classifier (logistic regression) trained on the candidate feature subset using a 50% stratified subsample;

- RRS is the pre-computed relevance–redundancy–synergy score from Equation (7);

- the subset-size term penalizes larger subsets to promote parsimony.
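The MCC component can be computed directly from the classifier's confusion-matrix counts. The sketch below implements the standard binary MCC formula and assembles an objective vector for one bit-string mask. This section does not state the exact size penalty or sign convention, so the fraction of retained features and the negation of MCC and RRS (so that all three components are minimized) are assumptions; `objective_vector` is a hypothetical helper, and the logistic-regression training step that would produce the confusion counts is omitted.

```python
import math


def mcc(tp: int, tn: int, fp: int, fn: int) -> float:
    """Binary Matthews correlation coefficient; 0.0 by convention when undefined."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


def objective_vector(mask, confusion, rrs_score):
    """Hypothetical (-MCC, -RRS, |S|/p) vector for a 0/1 feature mask.

    mask:      list of 0/1 flags, one per feature (p = len(mask)).
    confusion: (tp, tn, fp, fn) from the classifier trained on the masked features.
    rrs_score: pre-computed RRS score of the selected subset.
    """
    tp, tn, fp, fn = confusion
    size_penalty = sum(mask) / len(mask)  # assumed form: fraction of features kept
    return (-mcc(tp, tn, fp, fn), -rrs_score, size_penalty)
```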

4.7.2. NSGA-II Mechanics

4.7.3. Outcome

4.8. Experimental Results of the Proposed Feature Selection Method

4.8.1. Feature Selection Results for Binary Classification Target Variable

4.8.2. Feature Selection Results for Multi-Class Classification Target Variable

4.8.3. Discussion

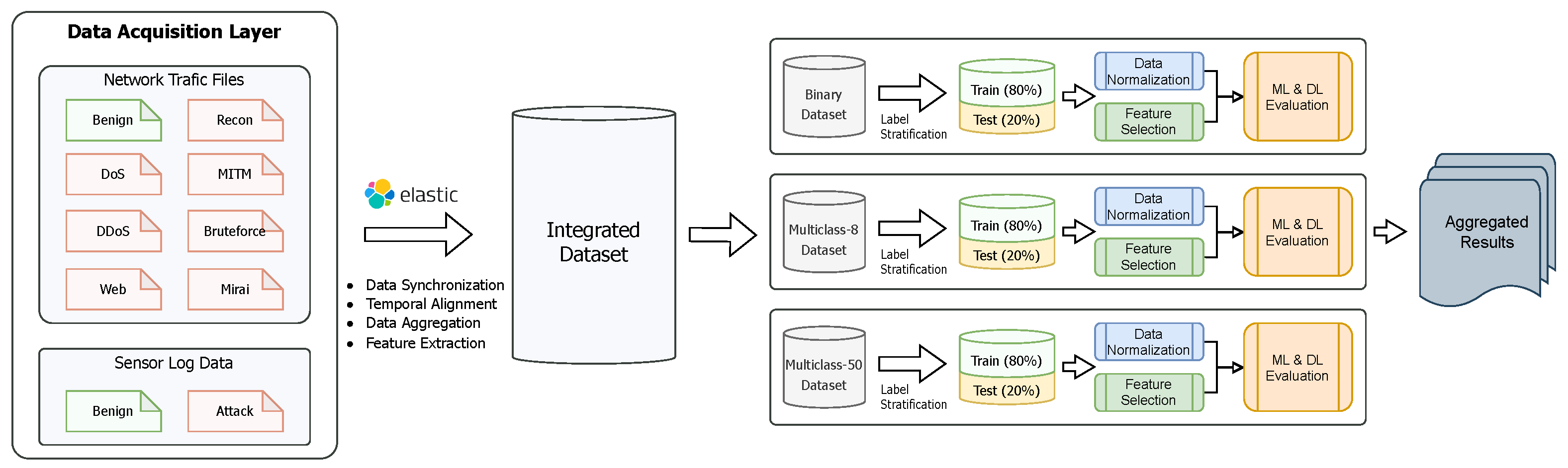

5. Experiments and Evaluations

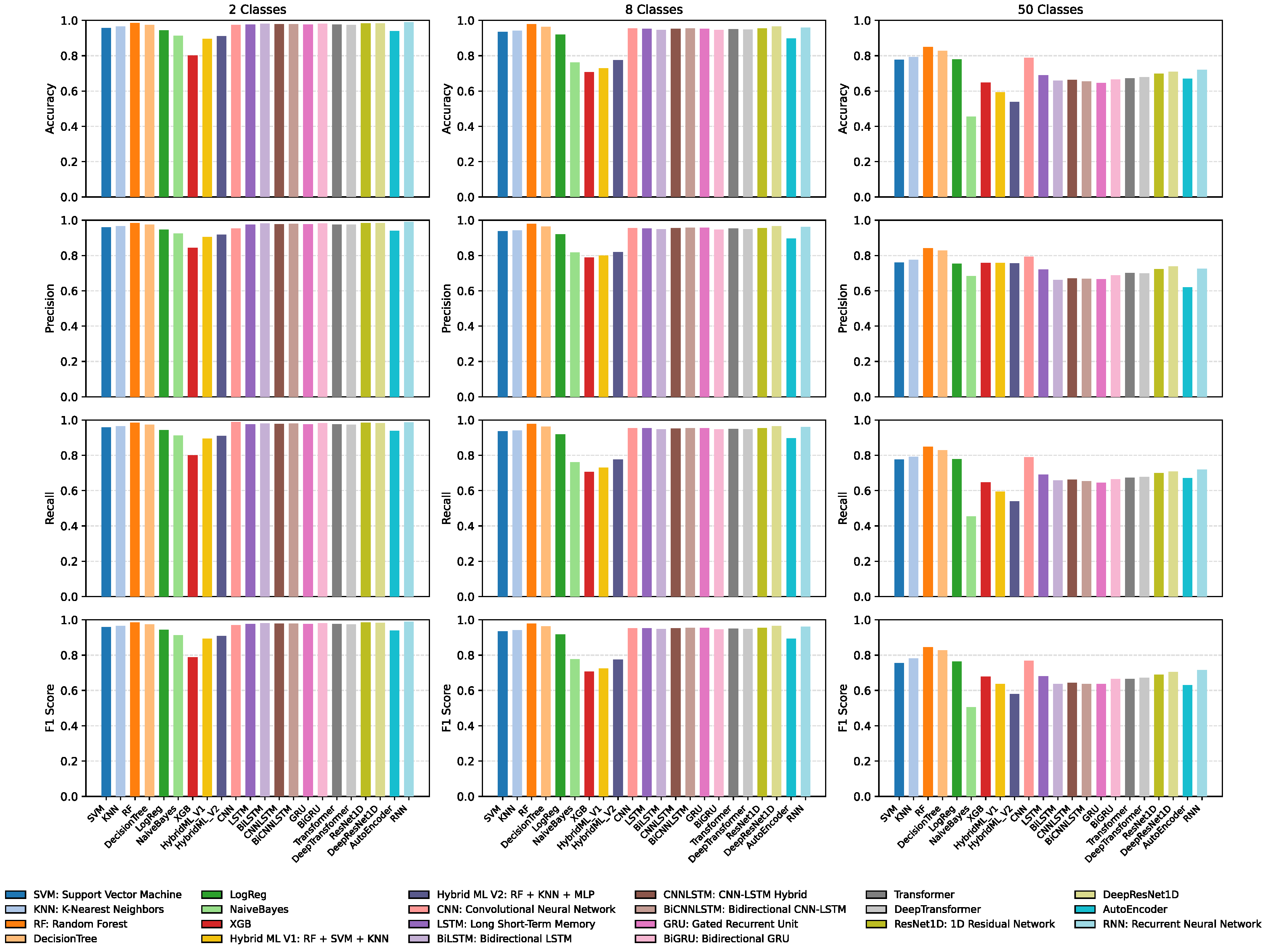

5.1. Performance Assessment of Machine Learning and Deep Learning Methods

- Accuracy: the ratio of correctly classified samples to the total number of samples:
$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$
where TP denotes the number of true positives, TN the number of true negatives, FP the number of false positives, and FN the number of false negatives.

- Precision: the proportion of true positive predictions among all predicted positive instances:
$$\mathrm{Precision} = \frac{TP}{TP + FP}.$$

- Recall (sensitivity): the proportion of actual positive instances correctly identified by the model:
$$\mathrm{Recall} = \frac{TP}{TP + FN}.$$

- F1-score: the harmonic mean of precision and recall, serving as a single measure that captures the trade-off between the two:
$$F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$
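These four metrics follow directly from the binary confusion-matrix counts. The minimal sketch below computes them, returning 0.0 for any ratio whose denominator is zero (a common convention, assumed here). For the multi-class target, the same function could be applied per class in a one-vs-rest fashion and averaged, although the averaging scheme used in the experiments is not specified in this section.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall, and F1 from binary confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

For example, with TP = 80, TN = 90, FP = 10, and FN = 20, accuracy is 170/200 = 0.85, recall is 0.8, and F1 equals 2TP / (2TP + FP + FN) = 160/190.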

Discussion

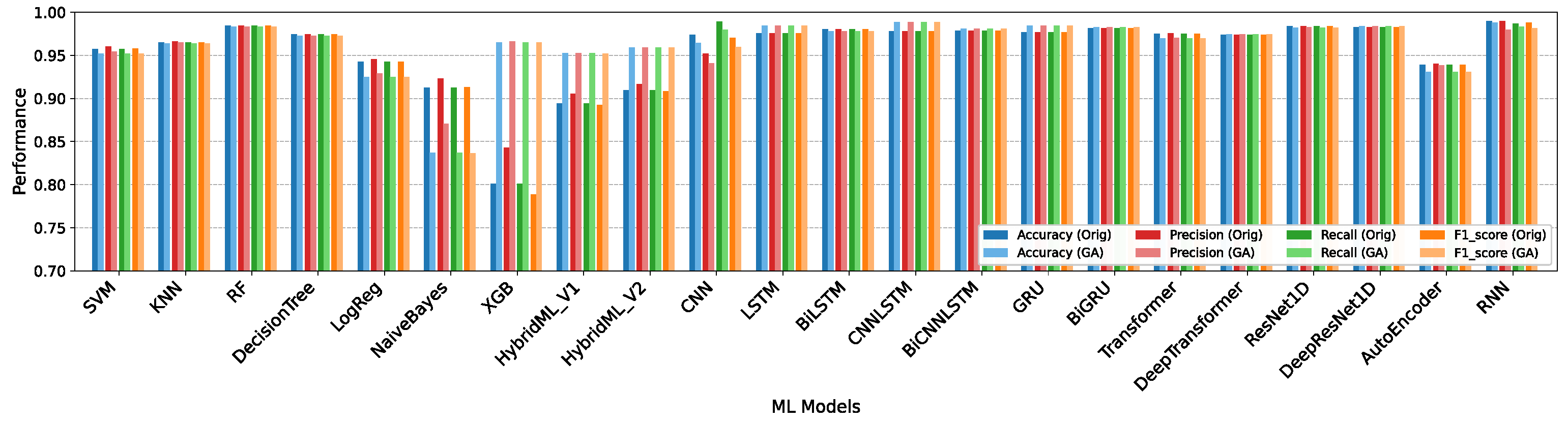

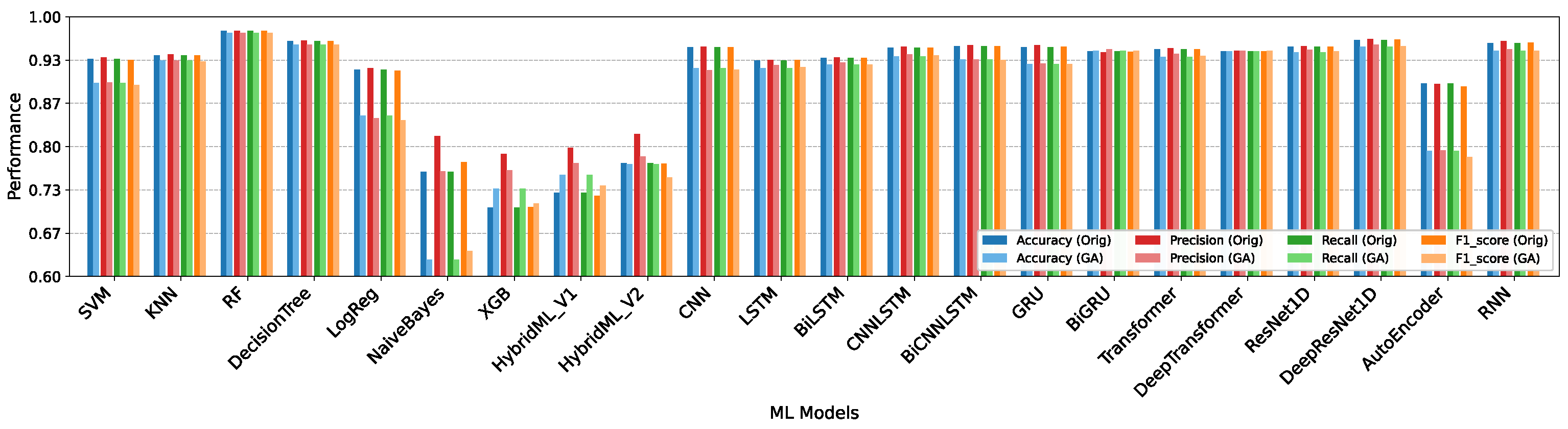

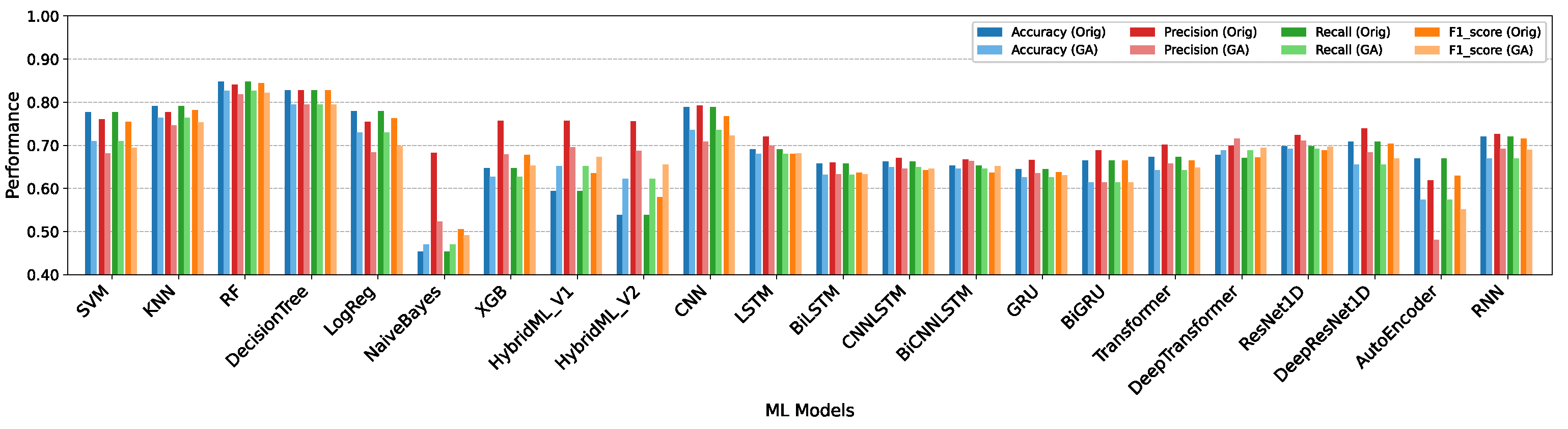

5.2. Performance Assessment of Feature Selection Method: Detection Performance Perspective

5.3. Comparison with Established Feature Selection Methods

Discussion

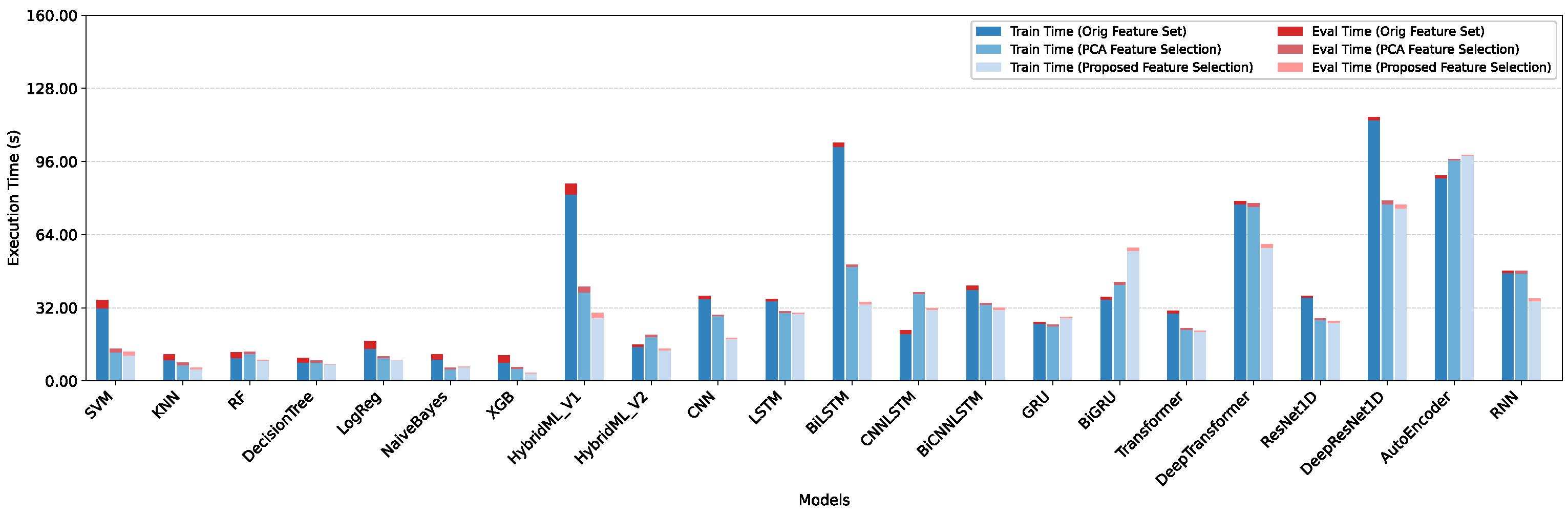

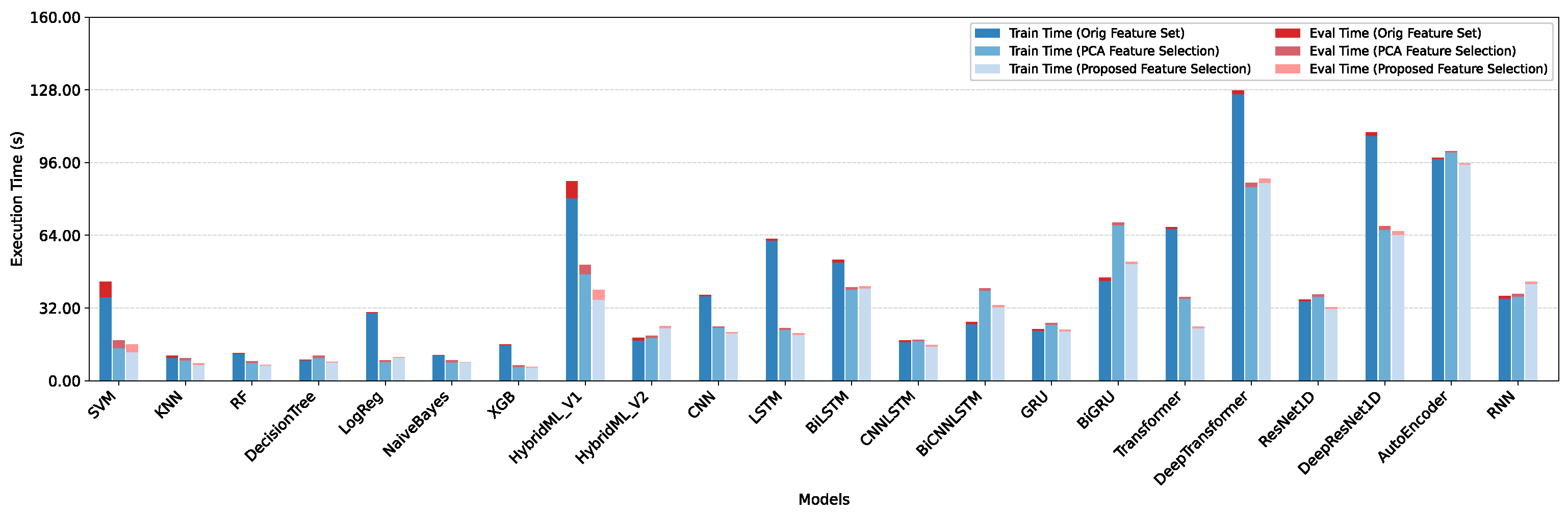

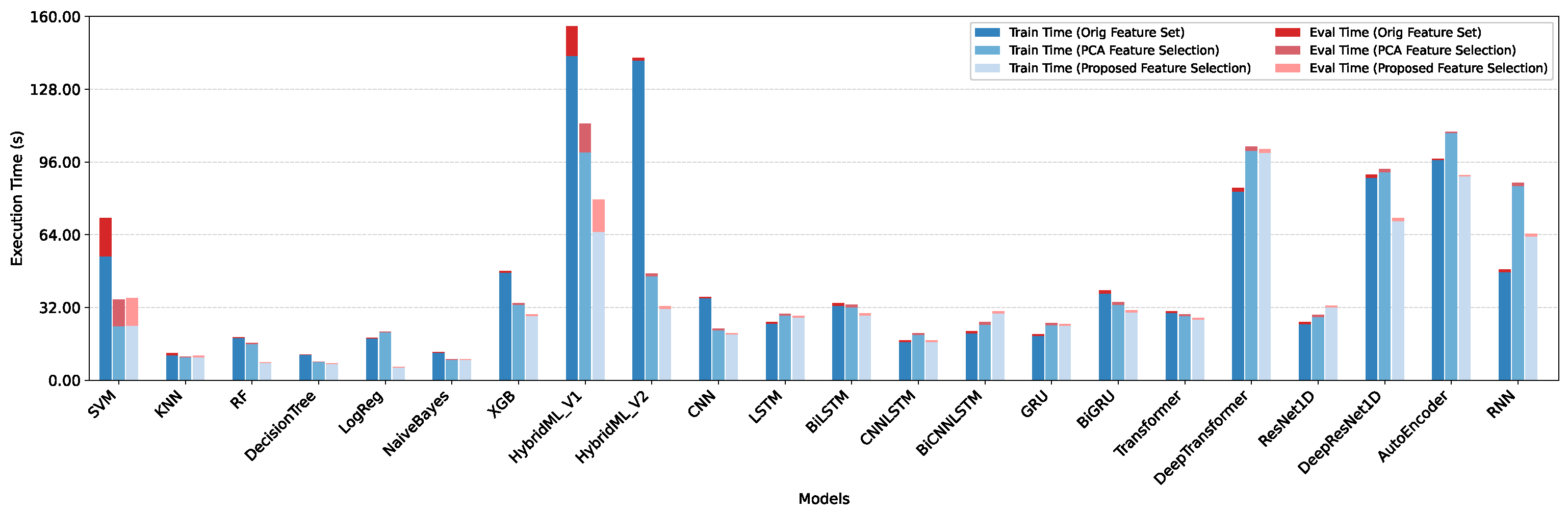

5.4. Performance Assessment of Feature Selection Method: Resource Efficiency Perspective

Discussion

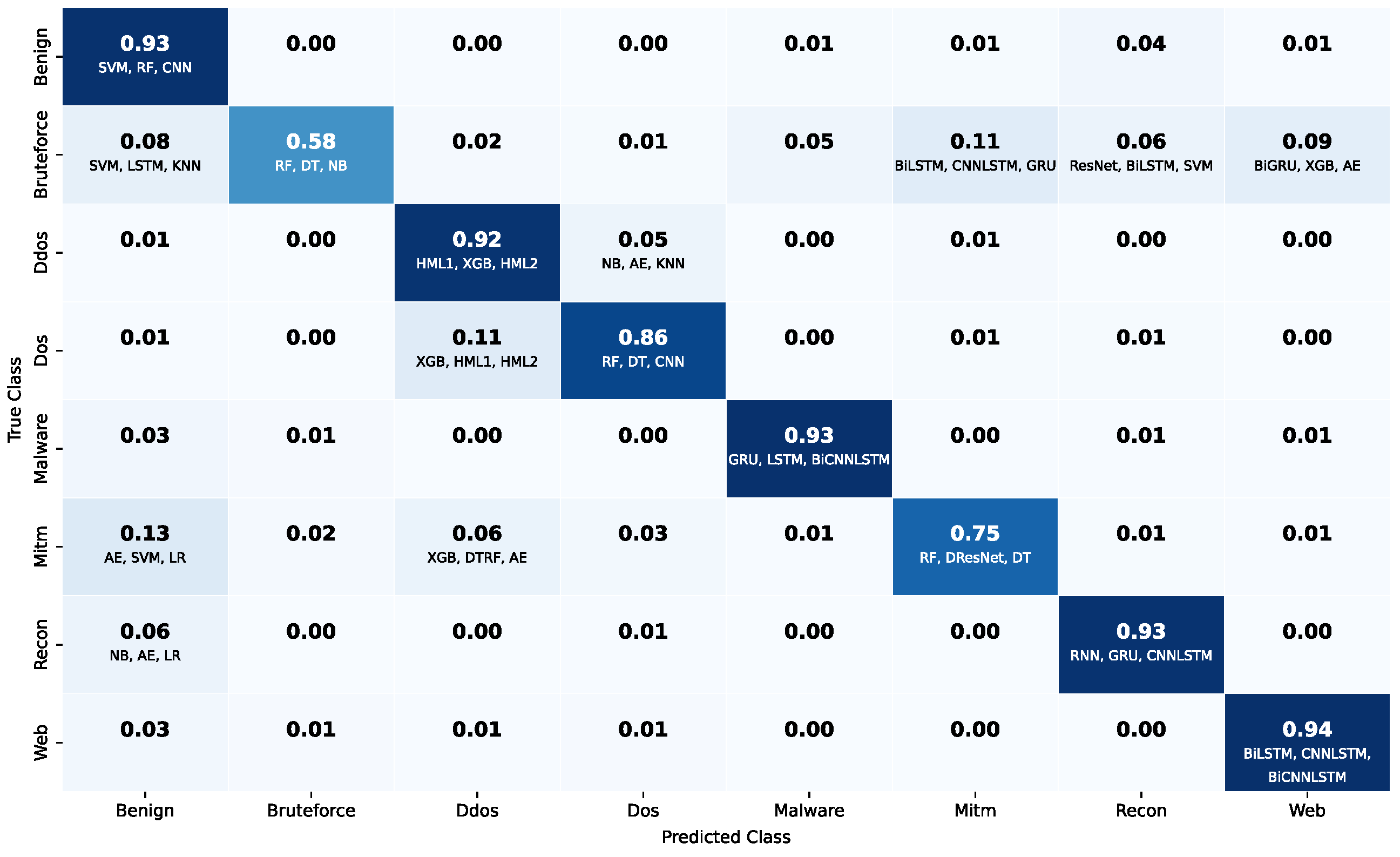

5.5. Aggregated Confusion Matrix Analysis Across Models

5.6. Quantitative Comparison and Ranking of Benchmark Datasets

5.6.1. Ranking Approach

5.6.2. Results and Discussion

6. Limitations and Future Directions

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Chataut, R.; Phoummalayvane, A.; Akl, R. Unleashing the Power of IoT: A Comprehensive Review of IoT Applications and Future Prospects in Healthcare, Agriculture, Smart Homes, Smart Cities, and Industry 4.0. Sensors 2023, 23, 7194. [Google Scholar] [CrossRef] [PubMed]

- Alotaibi, B. A Survey on Industrial Internet of Things Security: Requirements, Attacks, AI-Based Solutions, and Edge Computing Opportunities. Sensors 2023, 23, 7470. [Google Scholar] [CrossRef]

- Nuaimi, M.; Fourati, L.C.; Hamed, B.B. Intelligent approaches toward intrusion detection systems for Industrial Internet of Things: A systematic comprehensive review. J. Netw. Comput. Appl. 2023, 215, 103637. [Google Scholar] [CrossRef]

- Mengistu, T.M.; Kim, T.; Lin, J.W. A Survey on Heterogeneity Taxonomy, Security and Privacy Preservation in the Integration of IoT, Wireless Sensor Networks and Federated Learning. Sensors 2024, 24, 968. [Google Scholar] [CrossRef]

- Anjum, N.; Latif, Z.; Chen, H. Security and privacy of industrial big data: Motivation, opportunities, and challenges. J. Netw. Comput. Appl. 2025, 237, 104130. [Google Scholar] [CrossRef]

- Yang, X.; Tong, F.; Jiang, F.; Cheng, G. A Lightweight and Dynamic Open-Set Intrusion Detection for Industrial Internet of Things. IEEE Trans. Inf. Forensics Secur. 2025, 20, 2930–2943. [Google Scholar] [CrossRef]

- Savaglio, C.; Mazzei, P.; Fortino, G. Edge Intelligence for Industrial IoT: Opportunities and Limitations. Procedia Comput. Sci. 2024, 232, 397–405. [Google Scholar] [CrossRef]

- Andriulo, F.C.; Fiore, M.; Mongiello, M.; Traversa, E.; Zizzo, V. Edge Computing and Cloud Computing for Internet of Things: A Review. Informatics 2024, 11, 71. [Google Scholar] [CrossRef]

- Holdbrook, R.; Odeyomi, O.; Yi, S.; Roy, K. Network-Based Intrusion Detection for Industrial and Robotics Systems: A Comprehensive Survey. Electronics 2024, 13, 4440. [Google Scholar] [CrossRef]

- Kong, X.; Song, Z.; Ye, X.; Jiao, J.; Qi, H.; Liu, X. GRID: Graph-Based Robust Intrusion Detection Solution for Industrial IoT Networks. IEEE Internet Things J. 2025, 12, 26646–26659. [Google Scholar] [CrossRef]

- Zhang, S.; Xu, Y.; Xie, X. Universal Adversarial Perturbations Against Machine-Learning-Based Intrusion Detection Systems in Industrial Internet of Things. IEEE Internet Things J. 2025, 12, 1867–1889. [Google Scholar] [CrossRef]

- Ahmed, M.R.; Zhang, M. Context-Aware Intrusion Detection in Industrial Control Systems. In Proceedings of the 2024 Workshop on Re-Design Industrial Control Systems with Security, New York, NY, USA, 6 May 2024; RICSS: London, UK, 2024. [Google Scholar] [CrossRef]

- Sheng, C.; Zhou, W.; Han, Q.L.; Ma, W.; Zhu, X.; Wen, S.; Xiang, Y. Network Traffic Fingerprinting for IIoT Device Identification: A Survey. IEEE Trans. Ind. Inform. 2025, 21, 3541–3554. [Google Scholar] [CrossRef]

- Al-Hawawreh, M.; Sitnikova, E.; Aboutorab, N. X-IIoTID: A Connectivity-Agnostic and Device-Agnostic Intrusion Data Set for Industrial Internet of Things. IEEE Internet Things J. 2022, 9, 3962–3977. [Google Scholar] [CrossRef]

- Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization. In Proceedings of the International Conference on Information Systems Security and Privacy, Madeira, Portugal, 22–24 January 2018. [Google Scholar]

- Neto, E.C.P.; Dadkhah, S.; Ferreira, R.; Zohourian, A.; Lu, R.; Ghorbani, A.A. CICIoT2023: A Real-Time Dataset and Benchmark for Large-Scale Attacks in IoT Environment. Sensors 2023, 23, 5941. [Google Scholar] [CrossRef]

- Ferrag, M.A.; Friha, O.; Hamouda, D.; Maglaras, L.; Janicke, H. Edge-IIoTset: A New Comprehensive Realistic Cyber Security Dataset of IoT and IIoT Applications for Centralized and Federated Learning. IEEE Access 2022, 10, 40281–40306. [Google Scholar] [CrossRef]

- Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A. A Detailed Analysis of the KDD-CUP 99 Data Set. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009; Available online: https://ieeexplore.ieee.org/document/5356528 (accessed on 27 August 2024).

- Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set). In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015; pp. 1–6. [Google Scholar] [CrossRef]

- Alsaedi, A.; Moustafa, N.; Tari, Z.; Mahmood, A.; Anwar, A. TON_IoT Telemetry Dataset: A New Generation Dataset of IoT and IIoT for Data-Driven Intrusion Detection Systems. IEEE Access 2020, 8, 165130–165150. [Google Scholar] [CrossRef]

- Vaccari, I.; Chiola, G.; Aiello, M.; Mongelli, M.; Cambiaso, E. MQTTset, a New Dataset for Machine Learning Techniques on MQTT. Sensors 2020, 20, 6578. [Google Scholar] [CrossRef]

- Omotosho, A.; Qendah, Y.; Hammer, C. IDS-MA: Intrusion Detection System for IoT MQTT Attacks Using Centralized and Federated Learning. In Proceedings of the 2023 IEEE 47th Annual Computers, Software, and Applications Conference (COMPSAC), Torino, Italy, 26–30 June 2023; pp. 678–688. [Google Scholar] [CrossRef]

- Lippmann, R.; Haines, J.W.; Fried, D.J.; Korba, J.; Das, K. The 1999 DARPA Off-Line Intrusion Detection Evaluation. MIT Lincoln Laboratory, 2000. Available online: https://www.ll.mit.edu/r-d/datasets/1999-darpa-intrusion-detection-evaluation-dataset (accessed on 27 August 2024).

- Ghiasvand, E.; Ray, S.; Iqbal, S.; Dadkhah, S.; Ghorbani, A.A. CICAPT-IIOT: A provenance-based apt attack dataset for IIOT environment. arXiv 2024, arXiv:2407.11278. [Google Scholar]

- Zolanvari, M.; Teixeira, M.A.; Gupta, L.; Jain, R. WUSTL-IIOT-2021 Dataset for IIoT Cybersecurity Research; Washington University: St. Louis, MO, USA, 2021. [Google Scholar]

- Myneni, S.; Chowdhary, A.; Sabur, A.; Sengupta, S.; Agrawal, G.; Huang, D.; Kang, M. DAPT 2020—Constructing a Benchmark Dataset for Advanced Persistent Threats; Springer International Publishing: Cham, Switzerland, 2020; pp. 138–163. [Google Scholar] [CrossRef]

- Garcia, S.; Parmisano, A.; Erquiaga, M.J. IoT-23: A Labeled Dataset with Malicious and Benign IoT Network Traffic, 2020 [Dataset]. Available online: https://doi.org/10.5281/zenodo.4743746 (accessed on 17 November 2024).

- Meidan, Y.; Bohadana, M.; Mathov, Y.; Mirsky, Y.; Shabtai, A.; Breitenbacher, D.; Elovici, Y. N-BaIoT—Network-Based Detection of IoT Botnet Attacks Using Deep Autoencoders. IEEE Pervasive Comput. 2018, 17, 12–22. [Google Scholar] [CrossRef]

- Koroniotis, N.; Moustafa, N.; Sitnikova, E.; Turnbull, B. Towards the development of realistic botnet dataset in the Internet of Things for network forensic analytics: Bot-IoT dataset. Future Gener. Comput. Syst. 2019, 100, 779–796. [Google Scholar] [CrossRef]

- Mathur, A.P.; Tippenhauer, N.O. SWaT: A water treatment testbed for research and training on ICS security. In Proceedings of the 2016 International Workshop on Cyber-physical Systems for Smart Water Networks (CySWater), Vienna, Austria, 11 April 2016; pp. 31–36. [Google Scholar] [CrossRef]

- Ahmed, C.; Palleti, V.; Mathur, A. WADI: A water distribution testbed for research in the design of secure cyber physical systems. In Proceedings of the 3rd international workshop on cyber-physical systems for smart water networks, Pittsburgh, PA, USA, 21 April 2017; pp. 25–28. [Google Scholar] [CrossRef]

- C-MAPSS Aircraft Engine Simulator Data, 2008. Available online: https://data.nasa.gov/dataset/c-mapss-aircraft-engine-simulator-data (accessed on 27 August 2024).

- Su, Y.; Zhao, Y.; Niu, C.; Liu, R.; Sun, W.; Pei, D. Robust Anomaly Detection for Multivariate Time Series through Stochastic Recurrent Neural Network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, New York, NY, USA, 4–8 August 2019; pp. 2828–2837. [Google Scholar] [CrossRef]

- McCann, M.; Johnston, A. SECOM [Dataset]. UCI Machine Learning Repository, 2008. Available online: https://doi.org/10.24432/C54305 (accessed on 27 August 2024).

- Scania CV AB, APS Failure at Scania Trucks [Dataset], UCI Machine Learning Repository, 2016. Available online: https://archive.ics.uci.edu/ml/datasets/APS+Failure+at+Scania+Trucks (accessed on 27 August 2024).

- Purohit, H.; Tanabe, R.; Ichige, K.; Endo, T.; Nikaido, Y.; Suefusa, K.; Kawaguchi, Y. MIMII Dataset: Sound Dataset for Malfunctioning Industrial Machine Investigation and Inspection. arXiv 2019, arXiv:1909.09347. [Google Scholar] [CrossRef]

- Gas Sensor Array Drift Dataset. Available online: https://archive.ics.uci.edu/dataset/224/gas+sensor+array+drift+dataset (accessed on 27 August 2024).

- Intel Lab Data. 2004. Available online: https://kaggle.com/datasets/caesarlupum/iot-sensordata (accessed on 27 August 2024).

- Gao, J.; Giri, S.; Kara, E.C.; Bergés, M. PLAID: A public dataset of high-resoultion electrical appliance measurements for load identification research: Demo abstract. In Proceedings of the 1st ACM Conference on Embedded Systems for Energy-Efficient Buildings, New York, NY, USA, 5–6 November 2014; pp. 198–199. [Google Scholar] [CrossRef]

- Abdulaal, A.; Liu, Z.; Lancewicki, T. Practical Approach to Asynchronous Multivariate Time Series Anomaly Detection and Localization. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, New York, NY, USA, 14 August 2021; pp. 2485–2494. [Google Scholar] [CrossRef]

- Ranjan, C.; Reddy, M.; Mustonen, M.; Paynabar, K.; Pourak, K. Dataset: Rare event classification in Multivariate Time Series. arXiv 2019, arXiv:1809.10717. [Google Scholar] [CrossRef]

- Statlog (Shuttle) Dataset, UCI Machine Learning Repository, 1999. Available online: https://archive.ics.uci.edu/ml/datasets/Statlog+(Shuttle) (accessed on 27 August 2024).

- Chen, Y.; Keogh, E. ECG5000 Dataset [Dataset], UCR Time Series Classification Archive, 2015. Available online: https://www.cs.ucr.edu/~eamonn/time_series_data_2018/ (accessed on 27 August 2024).

- Eclipse Mosquitto. Available online: https://mosquitto.org/ (accessed on 26 August 2024).

- Filebeat. Available online: https://www.elastic.co/beats/filebeat (accessed on 26 August 2024).

- TShark. Available online: https://www.wireshark.org/docs/man-pages/tshark.html (accessed on 26 August 2024).

- Wireshark Foundation. Wireshark: Network Protocol Analyzer; Wireshark Foundation: Davis, CA, USA, 2024; Available online: https://www.wireshark.org (accessed on 26 August 2024).

- Linux, K. hping3: TCP/IP Packet Assembler and Analyzer. 2019. Available online: https://www.kali.org/tools/hping3 (accessed on 19 June 2023).

- Leeon123. Golang HTTP Flood Tool. 2020. Available online: https://github.com/Leeon123/golang-httpflood (accessed on 19 June 2023).

- Krylovsk. MQTT Benchmark: MQTT Broker Benchmarking Tool. 2023. Available online: https://github.com/krylovsk/mqtt-benchmark (accessed on 19 June 2023).

- Yaltirakli, G. Slowloris: Low Bandwidth DoS Tool. 2015. Available online: https://github.com/gkbrk/slowloris (accessed on 19 June 2023).

- EPC-MSU. UDP Flood Attack Script. 2023. Available online: https://github.com/EPC-MSU/udp-flood (accessed on 19 June 2023).

- Lyon, G. Nmap: Network Mapper. 2014. Available online: http://nmap.org/ (accessed on 22 June 2023).

- Linux, K. fping: Parallel Ping for Network Scanning. 2023. Available online: https://fping.org/ (accessed on 19 June 2023).

- Security, S. Vulscan: Vulnerability Scanning NSE Script for Nmap. 2023. Available online: https://github.com/scipag/vulscan (accessed on 19 June 2023).

- Makiraid. Remot3d: PHP Remote Shell Backdoor. 2023. Available online: https://github.com/makiraid/Remot3d (accessed on 19 June 2023).

- Payloadbox. Command Injection Payload List. 2023. Available online: https://github.com/payloadbox/command-injection-payload-list (accessed on 19 June 2023).

- Project, S. sqlmap: Automatic SQL Injection and Database Takeover Tool. 2023. Available online: https://github.com/sqlmapproject/sqlmap (accessed on 19 June 2023).

- Payloadbox. SQL Injection Payload List. 2023. Available online: https://github.com/payloadbox/sql-injection-payload-list (accessed on 19 June 2023).

- Payloadbox. XSS Payload List. 2023. Available online: https://github.com/payloadbox/xss-payload-list (accessed on 19 June 2023).

- S0md3v. XSStrike: Advanced XSS Detection Suite. 2023. Available online: https://github.com/s0md3v/XSStrike (accessed on 19 June 2023).

- Miessler, D. SecLists: Password Collections for Security Testing. 2023. Available online: https://github.com/danielmiessler/SecLists/blob/master/Passwords (accessed on 19 June 2023).

- Hauser, V. THC-Hydra: Network Logon Cracker. 2023. Available online: https://github.com/vanhauser-thc/thc-hydra (accessed on 19 June 2023).

- Ornaghi, A.; Valleri, M. Ettercap: Comprehensive Suite for MITM Attacks. 2005. Available online: https://www.ettercap-project.org/ (accessed on 19 June 2023).

- Gamblin, J. Mirai BotNet: Leaked Mirai Source Code for Research/IOC Development Purposes. Available online: https://github.com/jgamblin/Mirai-Source-Code (accessed on 1 June 2023).

- Meier, L.; van de Geer, S.; Bühlmann, P. The Group Lasso for Logistic Regression. J. R. Stat. Soc. Series B 2008, 70, 53–71. [Google Scholar] [CrossRef]

- Simon, N.; Friedman, J.; Hastie, T.; Tibshirani, R. A Sparse-Group Lasso. J. Comput. Graph. Stat. 2013, 22, 231–245. [Google Scholar] [CrossRef]

- Brown, G.; Pocock, A.; Zhao, M.J.; Luján, M. Conditional likelihood maximisation: A unifying framework for information theoretic feature selection. J. Mach. Learn. Res. 2012, 13, 27–66. [Google Scholar]

- Hamdani, T.M.; Won, J.-M.; Alimi, A.M.; Karray, F. Multi-objective Feature Selection with NSGA-II. In Proceedings of the EvoWorkshops (EvoCOP, EvoBIO, EvoWorkshops), Valencia, Spain, 11–13 April 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 240–250. [Google Scholar]

- Ding, C.; Peng, H. Minimum Redundancy Feature Selection from Microarray Gene Expression Data. J. Bioinform. Comput. Biol. 2005, 3, 185–205. [Google Scholar] [CrossRef]

- McGill, W.J. Multivariate Information Transmission. Psychometrika 1954, 19, 97–116. [Google Scholar] [CrossRef]

- Wollstadt, P.; Nili, H.; Lizier, J.T.; Vicente, R. A Rigorous Information-Theoretic Definition of Redundancy and Synergy in Feature Selection. J. Mach. Learn. Res. 2023, 24, 1–69. [Google Scholar]

| Device Name | Category | Role | MAC Address | IP |

|---|---|---|---|---|

| TP-Link Router | Network | Router | 28:87:BA:BD:C6:6C | 192.168.1.1 |

| Netgear Switch | Network | Switch | E0:46:EE:21:56:18 | 192.168.1.200 |

| IIoT AP | Network | AP | 30:DE:4B:E2:13:4E | 192.168.1.205 |

| MQTT Broker | Raspberry Pie | MQTT Broker | DC:A6:32:DC:28:46 | 192.168.1.193 |

| Edge Device | Raspberry Pie | Edge Device | DC:A6:32:DC:27:D4 | 192.168.1.195 |

| IIoT Laptop | Laptop | Capturer | E4:B9:7A:21:B2:F0 | 192.168.1.210 |

| Weather Sensor | Sensor | Sensor | 08:B6:1F:82:12:30 | 192.168.1.10 |

| Water Sensor | Sensor | Sensor | 08:B6:1F:84:66:78 | 192.168.1.11 |

| Soil Sensor | Sensor | Sensor | F0:08:D1:CE:CF:0C | 192.168.1.12 |

| Steam Sensor | Sensor | Sensor | 08:B6:1F:81:D2:CC | 192.168.1.13 |

| Gas Sensor | Sensor | Sensor | 08:B6:1F:83:25:98 | 192.168.1.14 |

| Sound Sensor | Sensor | Sensor | F0:08:D1:CE:CF:C8 | 192.168.1.15 |

| Vibration Sensor | Sensor | Sensor | 08:B6:1F:82:27:D0 | 192.168.1.16 |

| Ultrasonic Sensor | Sensor | Sensor | 08:B6:1F:82:EE:C4 | 192.168.1.17 |

| Light Sensor | Sensor | Sensor | 8C:AA:B5:8A:A9:B4 | 192.168.1.18 |

| Accelerometer Sensor | Sensor | Sensor | 08:B6:1F:82:EE:44 | 192.168.1.19 |

| Proximity Collision | Sensor | Sensor | 08:B6:1F:82:EF:30 | 192.168.1.20 |
| Motion Sensor | Sensor | Sensor | 08:B6:1F:82:1C:3C | 192.168.1.21 |
| RFID Sensor | Sensor | Sensor | 08:B6:1F:82:2B:1C | 192.168.1.22 |
| Flame Sensor | Sensor | Sensor | 08:B6:1F:82:EE:CC | 192.168.1.23 |
| Yi Camera | Camera | Camera | 7C:94:9F:84:71:7E | 192.168.1.50 |
| Blurams Camera | Camera | Camera | 14:C9:CF:45:3E:BA | 192.168.1.52 |
| Dekco Camera | Camera | Camera | 44:29:1E:5C:DE:12 | 192.168.1.53 |
| Liftmaster Camera | Camera | Camera | 20:50:E7:F0:0A:04 | 192.168.1.54 |
| Geeni Camera | Camera | Camera | DC:29:19:95:3A:79 | 192.168.1.55 |
| Wisenet Camera | Camera | Camera | 00:09:18:6D:73:B9 | 192.168.1.57 |
| Plug All Cameras | Smart Plug | Smart Plug | C4:DD:57:15:5C:2C | 192.168.1.80 |
| Plug Laptop | Smart Plug | Smart Plug | C4:DD:57:0D:F2:76 | 192.168.1.81 |
| Plug Mqtt | Smart Plug | Smart Plug | D4:A6:51:1F:F6:7C | 192.168.1.82 |
| Plug RFID | Smart Plug | Smart Plug | D4:A6:51:1D:C0:ED | 192.168.1.83 |
| Plug Edge | Smart Plug | Smart Plug | D4:A6:51:22:03:99 | 192.168.1.84 |
| Plug Motion | Smart Plug | Smart Plug | D4:A6:51:1D:74:3A | 192.168.1.85 |
| Plug Flame | Smart Plug | Smart Plug | D4:A6:51:20:91:F7 | 192.168.1.86 |
| Plug Proximity | Smart Plug | Smart Plug | D4:A6:51:20:0E:3F | 192.168.1.87 |
| Plug Vibration | Smart Plug | Smart Plug | D4:A6:51:79:68:75 | 192.168.1.88 |
| Plug Cameras1 Yi | Smart Plug | Smart Plug | 50:02:91:10:AC:D8 | 192.168.1.90 |
| Plug Cameras2 Geeni | Smart Plug | Smart Plug | 50:02:91:10:09:8F | 192.168.1.91 |
| Plug Cameras3 Dekco | Smart Plug | Smart Plug | 50:02:91:11:05:8C | 192.168.1.92 |
| Plug All Sensors | Smart Plug | Smart Plug | D4:A6:51:82:98:A8 | 192.168.1.93 |
| Attacker0 | Raspberry Pi | Attacker (C2) | E4:5F:01:55:90:C1 | 192.168.1.100 |
| Attacker1 | Raspberry Pi | Attacker (Bot) | DC:A6:32:C9:E6:F3 | 192.168.1.101 |
| Attacker2 | Raspberry Pi | Attacker (Bot) | DC:A6:32:C9:E5:A3 | 192.168.1.102 |
| Attacker3 | Raspberry Pi | Attacker (Bot) | DC:A6:32:C9:E4:C5 | 192.168.1.103 |
| Attacker4 | Raspberry Pi | Attacker (Bot) | DC:A6:32:C9:E5:01 | 192.168.1.104 |
| Attacker5 | Raspberry Pi | Attacker (Bot) | DC:A6:32:C9:E4:AA | 192.168.1.105 |
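Because every device in the testbed has a fixed IP and MAC address, captured traffic can be attributed to devices by address. The Python sketch below shows one way to do this with a handful of rows copied from the table above. The packet layout (a dict with a `src_ip` key) and the helper names `label_source` and `from_attacker` are hypothetical and are not part of the dataset's published schema.

```python
from typing import Dict

# Subset of the device inventory above (IP -> device name).
# The packet dict layout used below ("src_ip" key) is an assumption.
DEVICE_BY_IP: Dict[str, str] = {
    "192.168.1.20": "Proximity Collision",
    "192.168.1.50": "Yi Camera",
    "192.168.1.80": "Plug All Cameras",
    "192.168.1.100": "Attacker0",  # C2 node
    "192.168.1.101": "Attacker1",  # bots .101-.105
    "192.168.1.102": "Attacker2",
    "192.168.1.103": "Attacker3",
    "192.168.1.104": "Attacker4",
    "192.168.1.105": "Attacker5",
}

# Attacker Raspberry Pis: the C2 host plus five bots.
ATTACKER_IPS = {ip for ip, name in DEVICE_BY_IP.items() if name.startswith("Attacker")}


def label_source(packet: dict) -> str:
    """Return the device name for the packet's source IP, or 'unknown'."""
    return DEVICE_BY_IP.get(packet.get("src_ip", ""), "unknown")


def from_attacker(packet: dict) -> bool:
    """True if the packet's source IP belongs to one of the attacker nodes."""
    return packet.get("src_ip") in ATTACKER_IPS
```

Since the dataset also contains IP-spoofing and ARP-spoofing scenarios, a source-address lookup like this is only a first approximation for attributing traffic, not reliable ground truth.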

| Application | IIoT Sensor | Sensor Model |
|---|---|---|
| Sound | Big Sound Detector | KY-037 |
| | Small Sound Detector | KY-038 |
| Weather | Temperature & Humidity | DHT11 |
| | Linear temperature | LM35 |
| | Analog temperature | KY-013 |
| | Digital temperature | DS18B20 |
| | Atmospheric pressure | BMP-180 |
| Soil Moisture | Soil Moisture | YL-69 |
| Motion | PIR Motion Sensor | HC-SR501 |
| Vibration | Ceramic Vibration Sensor | SW-420 |
| Water | Water Level Sensor | YL-83 |
| Steam | Steam Sensor | KS0203 |
| RFID | RFID Sensor | RFID-RC522 |
| Accelerometer Gyroscope | Triaxial Digital Acceleration Tilt Sensor | ADXL345 |
| Proximity | ALS Infrared LED Optical Proximity | APDS-9930 |
| Collision | Collision (Crash Sensor) | KY-031 |
| Ultrasonic | Ultrasonic Sensor | HC-SR04 |
| Flame | Flame Detector | KY-026 |
| Light Gesture | Light & Gesture Detection Sensor | APDS-9960 |
| Gas | Analog gas detector | MQ-2 |
| | Analog alcohol detector | MQ-3 |

| Device | Hardware Model | OS | Description |
|---|---|---|---|
| IIoT Sensors | Arduino MKR WiFi 1010 | firmware | Arduino Board |
| | Arduino Ethernet | firmware | Arduino Board |
| Access Point | TP-Link Omada AC1750 | firmware | Gigabit Wireless AP |
| Switch | Netgear GS316EP Switch | firmware | 16-Port PoE Gigabit Ethernet Switch |
| Router | TP-Link AX1800 Router | firmware | Wi-Fi Smart Router |
| Edge Devices | Raspberry Pi 4 Model B (8 GB) | Kali | MQTT Broker |
| | Raspberry Pi 4 Model B (8 GB) | Kali | Web Server, Network Dump |
| Cloud | PowerEdge R530 Server | CentOS 9 | Elasticsearch, Message Broker |
| Attackers | 1 × Raspberry Pi 4 Model B (4 GB) | Kali | Attacker C2 |
| | 5 × Raspberry Pi 4 Model B (2 GB) | Raspbian | Attacker Bots |

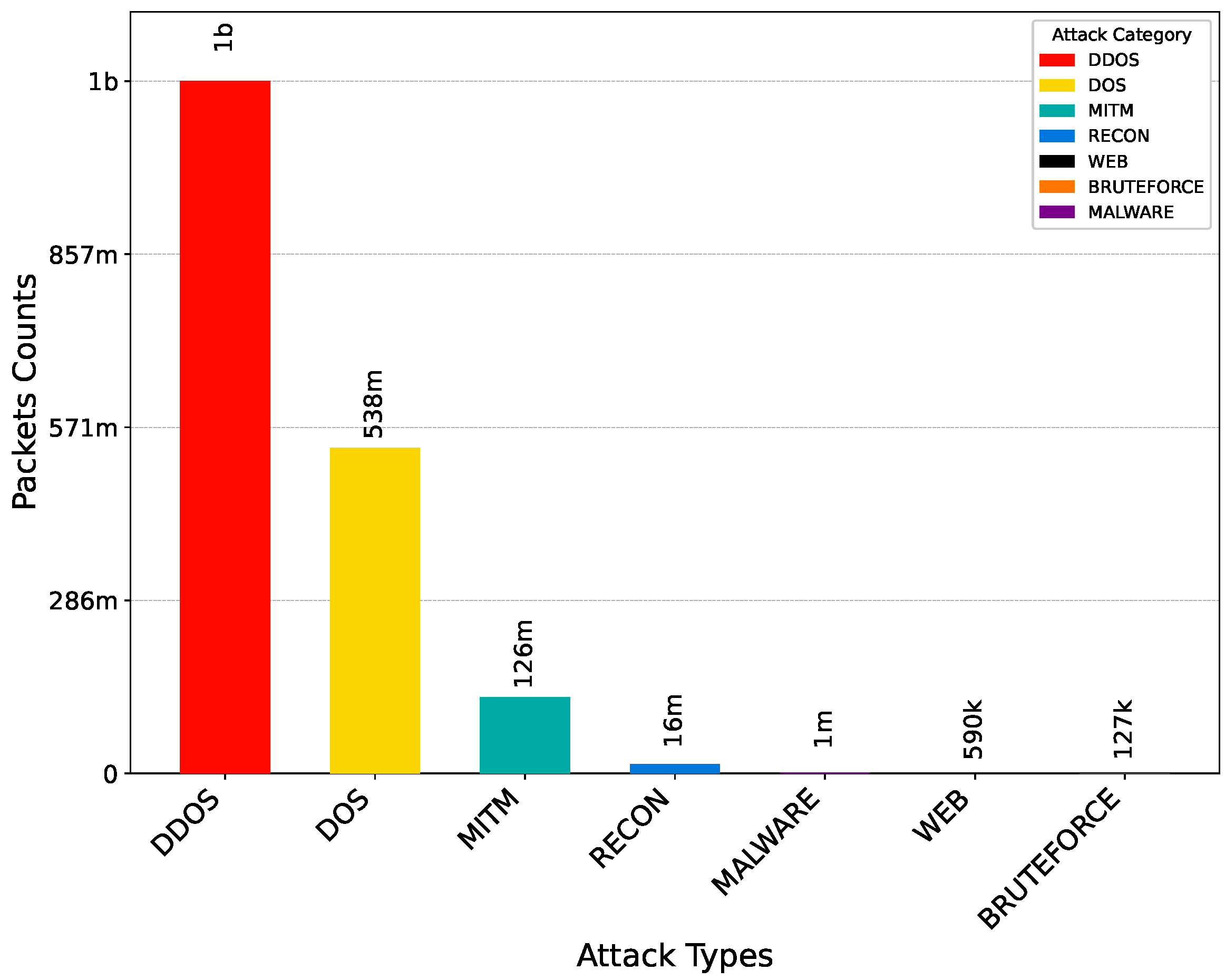

| Category | Benign/Attack Type | # Packets | # Logs | Tools |
|---|---|---|---|---|
| Benign | Benign Data | 259,212 | 72,554 | tshark [46]; filebeat [45] |
| DDoS | Ack Fragmentation Flood | 15,895,816 | 28,560 | hping3 [48] |
| | Connect Flood | 1,937,806 | 1241 | mqtt-connect-flood |
| | HTTP Flood | 10,128,404 | 32,359 | golang-httpflood [49] |
| | ICMP Flood | 121,646,915 | 31,422 | hping3 [48] |
| | ICMP Fragmentation Flood | 15,715,035 | 23,594 | hping3 [48] |
| | MQTT Publish Flood | 2,732,308 | 1249 | mqtt-benchmark [50] |
| | PSHACK Flood | 142,102,006 | 31,994 | hping3 [48] |
| | RSTFIN Flood | 196,936,383 | 30,866 | hping3 [48] |
| | Slowloris | 166,168 | 7444 | slowloris [51] |
| | TCP SYN Flood | 134,658,233 | 18,493 | hping3 [48] |
| | Synonymous IP Flood | 152,046,341 | 17,315 | hping3 [48] |
| | TCP Flood | 137,626,631 | 16,744 | hping3 [48] |
| | UDP Flood | 194,623,658 | 26,354 | hping3 [48]; udp-flood [52] |
| | UDP Fragmentation Flood | 15,973,062 | 30,941 | udp-flood [52] |
| | Total | 1,142,218,766 | 298,576 | |
| DoS | Ack Fragmentation Flood | 19,550,570 | 8414 | udp-flood [52] |
| | Connect Flood | 671,129 | 1248 | mqtt-connect-flood |
| | HTTP Flood | 4,899,179 | 16,021 | golang-httpflood [49] |
| | ICMP Flood | 45,789,955 | 24,824 | hping3 [48] |
| | ICMP Fragmentation Flood | 18,340,110 | 7127 | hping3 [48] |
| | MQTT Publish Flood | 58,739 | 1240 | mqtt-benchmark [50] |
| | PSHACK Flood | 43,897,855 | 24,015 | hping3 [48] |
| | RSTFIN Flood | 59,372,231 | 18,573 | hping3 [48] |
| | Slowloris | 51,603 | 7492 | slowloris [51] |
| | TCP SYN Flood | 66,872,061 | 29,405 | hping3 [48] |
| | Synonymous IP Flood | 71,659,415 | 32,791 | hping3 [48] |
| | TCP Flood | 54,515,535 | 29,738 | hping3 [48] |
| | UDP Flood | 133,945,490 | 17,594 | hping3 [48]; udp-flood [52] |
| | UDP Fragmentation Flood | 17,897,278 | 9071 | udp-flood [52] |
| | Total | 537,520,150 | 227,553 | |
| Recon | Host Discovery ARP Ping | 2,463,445 | 79,831 | nmap [53] |
| | Host Discovery TCP ACK Ping | 2,107,543 | 80,257 | nmap [53] |
| | Host Discovery TCP SYN Ping | 2,318,477 | 79,901 | nmap [53] |
| | Host Discovery TCP SYN Stealth | 2,600,465 | 79,580 | nmap [53] |
| | Host Discovery UDP Ping | 2,329,836 | 79,206 | nmap [53] |
| | OS Scan | 857,076 | 81,762 | nmap [53] |
| | Ping Sweep | 7474 | 45,556 | nmap; fping [54] |
| | Port Scan | 2,418,517 | 82,613 | nmap [53] |
| | Vulnerability Scan | 687,382 | 80,335 | nmap; vulscan [55] |
| | Total | 15,790,215 | 689,041 | |
| Web | Backdoor Upload | 16,606 | 4215 | Remot3d [56] |
| | Command Injection | 64,155 | 20,028 | payloadbox [57] |
| | SQL Injection | 33,727 | 7723 | sqlmap [58]; payloadbox [59] |
| | Blind SQL Injection | 397,486 | 19,907 | sqlmap [58]; payloadbox [59] |
| | Cross Site Scripting | 77,984 | 4369 | payloadbox [60]; XSStrike [61] |
| | Total | 589,958 | 56,242 | |
| Bruteforce | SSH Bruteforce | 55,793 | 19,470 | thc-hydra [62]; SecLists [63] |
| | Telnet Bruteforce | 71,025 | 18,205 | thc-hydra [62]; SecLists [63] |
| | Total | 126,818 | 37,675 | |
| MITM | ARP Spoofing | 126,759 | 84,927 | ettercap [64] |
| | Impersonation | 84,967 | 23,431 | mqtt-benchmark [50] |
| | IP Spoofing | 125,449,545 | 58,473 | hping3 [48] |
| | Total | 125,661,271 | 166,831 | |
| Mirai | Syn Flood | 801,628 | 71,308 | Mirai Source Code [65] |
| | UDP Flood | 672,801 | 81,226 | Mirai Source Code [65] |
| | Total | 1,474,429 | 152,534 | |
| All attacks | Total | 1,823,381,607 | 1,628,452 | |
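The final Total row can be cross-checked against the category subtotals. The short Python sketch below, a convenience check rather than part of the authors' tooling, sums the seven attack-category subtotals with and without the benign row. Only the attack-only sum reproduces the reported grand total of 1,823,381,607 packets and 1,628,452 logs, which indicates that the grand total counts attack traffic only.

```python
# Category subtotals (packets, logs) copied from the table above.
CATEGORY_TOTALS = {
    "DDoS": (1_142_218_766, 298_576),
    "DoS": (537_520_150, 227_553),
    "Recon": (15_790_215, 689_041),
    "Web": (589_958, 56_242),
    "Bruteforce": (126_818, 37_675),
    "MITM": (125_661_271, 166_831),
    "Mirai": (1_474_429, 152_534),
}
BENIGN = (259_212, 72_554)
REPORTED_GRAND_TOTAL = (1_823_381_607, 1_628_452)


def sum_totals(rows):
    """Sum a sequence of (packets, logs) pairs column-wise."""
    packets = sum(n_packets for n_packets, _ in rows)
    logs = sum(n_logs for _, n_logs in rows)
    return packets, logs


attacks_only = sum_totals(CATEGORY_TOTALS.values())
with_benign = sum_totals(list(CATEGORY_TOTALS.values()) + [BENIGN])

print("attack categories only:", attacks_only, attacks_only == REPORTED_GRAND_TOTAL)
print("including benign:      ", with_benign, with_benign == REPORTED_GRAND_TOTAL)
```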

| # | Feature | Description | Group |
|---|---|---|---|
| 1 | Message Interval | Time interval of messages in a time window | Log Data Rate |
| 2 | Messages Count | Total number of log messages in a time window | |
| 3 | Data Range Mean | Mean of value ranges across log entries | Log Data Stats |
| 4 | Data Range Maximum | Maximum value range across log entries | |
| 5 | Data Range Minimum | Minimum value range across log entries | |
| 6 | Data Range Std. Dev. | Std. deviation of value range across log entries | |
| 7 | Data Types Count | Number of distinct data types in log entries | |
| 8 | Data Types List | List of distinct data types in log entries | |
| 9 | Packets All Count | Total number of packets in a time window | Packet Traffic Rate |
| 10 | Packets Dst Count | Number of inbound packets in a time window | |
| 11 | Packets Src Count | Number of outbound packets in a time window | |
| 12 | Packet Interval | Time interval of packets in a time window | |
| 13 | Ports All Count | Number of all ports in a time window | Network Multiplexing |
| 14 | Ports Dst Count | Number of destination ports in a time window | |
| 15 | Ports Src Count | Number of source ports in a time window | |
| 16 | Ports All | List of all ports in a time window | |
| 17 | Ports Dst | List of destination ports in a time window | |
| 18 | Ports Src | List of source ports in a time window | |
| 19 | Protocols All Count | Number of unique protocols in a time window | |
| 20 | Protocols Dst Count | Number of destination protocols in a time window | |
| 21 | Protocols Src Count | Number of source protocols in a time window | |
| 22 | Protocols All | List of all protocols in a time window | |
| 23 | Protocols Dst | List of destination protocols in a time window | |
| 24 | Protocols Src | List of source protocols in a time window | |
| 25 | IPs All Count | Number of total IP addresses in a time window | Address Diversity |
| 26 | IPs Dst Count | Number of destination IP addresses in a time window | |
| 27 | IPs Src Count | Number of source IP addresses in a time window | |
| 28 | IPs All | List of all IP addresses in a time window | |
| 29 | IPs Dst | List of destination IP addresses in a time window | |
| 30 | IPs Src | List of source IP addresses in a time window | |
| 31 | MACs All Count | Number of all MAC addresses in a time window | |
| 32 | MACs Dst Count | Number of destination MAC addresses in a time window | |
| 33 | MACs Src Count | Number of source MAC addresses in a time window | |
| 34 | MACs All | List of all MAC addresses in a time window | |
| 35 | MACs Dst | List of destination MAC addresses in a time window | |
| 36 | MACs Src | List of source MAC addresses in a time window | |
| 37 | Fragmentation Score | Overall fragmentation score for a time window | Fragmentation |
| 38 | Fragmented Packets | Number of fragmented packets in a time window | |
| 39 | TCP ACK Flag Count | Number of TCP ACK flags in a time window | Header Flags |
| 40 | TCP FIN Flag Count | Number of TCP FIN flags in a time window | |
| 41 | TCP PSH Flag Count | Number of TCP PSH flags in a time window | |
| 42 | TCP RST Flag Count | Number of TCP RST flags in a time window | |
| 43 | TCP SYN Flag Count | Number of TCP SYN flags in a time window | |
| 44 | TCP URG Flag Count | Number of TCP URG flags in a time window | |
| 45 | TCP Flags Mean | Mean of TCP flag values in a time window | |
| 46 | TCP Flags Maximum | Maximum of TCP flag values in a time window | |
| 47 | TCP Flags Minimum | Minimum of TCP flag values in a time window | |
| 48 | TCP Flags Std. Dev. | Std. deviation of TCP flag values in a time window | |
| 49 | IP Flags Mean | Mean of IP flag values in a time window | |
| 50 | IP Flags Maximum | Maximum of IP flag values in a time window | |
| 51 | IP Flags Minimum | Minimum of IP flag values in a time window | |
| 52 | IP Flags Std. Dev. | Std. deviation of IP flag values in a time window | |
| 53 | Time Delta Mean | Mean inter-packet time delta in a time window | Timing Control |
| 54 | Time Delta Maximum | Maximum inter-packet time delta in a time window | |
| 55 | Time Delta Minimum | Minimum inter-packet time delta in a time window | |
| 56 | Time Delta Std. Dev. | Std. deviation of time deltas in a time window | |
| 57 | TTL Mean | Mean TTL value in a time window | |
| 58 | TTL Maximum | Maximum TTL value in a time window | |
| 59 | TTL Minimum | Minimum TTL value in a time window | |
| 60 | TTL Std. Dev. | Std. deviation of TTL values in a time window | |
| 61 | Window Size Mean | Mean TCP window size in a time window | |
| 62 | Window Size Maximum | Maximum TCP window size in a time window | |
| 63 | Window Size Minimum | Minimum TCP window size in a time window | |
| 64 | Window Size Std. Dev. | Std. deviation of window size in a time window | |
| 65 | Packet Size Mean | Mean packet size in a time window | Size Length |
| 66 | Packet Size Maximum | Maximum packet size in a time window | |
| 67 | Packet Size Minimum | Minimum packet size in a time window | |
| 68 | Packet Size Std. Dev. | Std. deviation of packet size in a time window | |
| 69 | Header Length Mean | Mean IP header length in a time window | |
| 70 | Header Length Maximum | Maximum IP header length in a time window | |
| 71 | Header Length Minimum | Minimum IP header length in a time window | |
| 72 | Header Length Std. Dev. | Std. deviation of header length in a time window | |
| 73 | IP Length Mean | Mean IP packet length in a time window | |
| 74 | IP Length Maximum | Maximum IP packet length in a time window | |
| 75 | IP Length Minimum | Minimum IP packet length in a time window | |
| 76 | IP Length Std. Dev. | Std. deviation of IP packet length in a time window | |
| 77 | MSS Mean | Mean maximum segment size (MSS) in a time window | |
| 78 | MSS Maximum | Maximum MSS value in a time window | |
| 79 | MSS Minimum | Minimum MSS value in a time window | |
| 80 | MSS Std. Dev. | Std. deviation of MSS values in a time window | |
| 81 | Payload Length Mean | Mean of payload lengths in a time window | |
| 82 | Payload Length Maximum | Maximum payload length in a time window | |
| 83 | Payload Length Minimum | Minimum payload length in a time window | |
| 84 | Payload Length Std. Dev. | Std. deviation of payload length in a time window | |
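Most features in the table above are counts, sets, or summary statistics computed over the packets that fall inside one time window. The Python sketch below computes a handful of them (#9, #13, #25, #43, #53–56, #65, #68) for non-overlapping windows. It assumes a hypothetical packet record (a dict with `ts`, `src_ip`, `dst_ip`, `src_port`, `dst_port`, `size`, and `tcp_flags` keys), tumbling windows of fixed width, and population standard deviation. None of these choices is taken from the paper, so treat it as an illustration of the idea rather than the authors' extractor.

```python
import math
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List


def tumbling_windows(packets: Iterable[dict], width: float) -> Dict[int, List[dict]]:
    """Group packets into non-overlapping windows of `width` seconds."""
    groups: Dict[int, List[dict]] = defaultdict(list)
    for p in packets:
        groups[math.floor(p["ts"] / width)].append(p)
    return dict(groups)


def window_features(packets: List[dict]) -> Dict[str, float]:
    """Compute a few of the table's features for one non-empty window.

    The packet dict layout is hypothetical, not the dataset's schema.
    """
    ts = sorted(p["ts"] for p in packets)
    # Inter-packet deltas; a single-packet window reports a zero delta.
    deltas = [b - a for a, b in zip(ts, ts[1:])] or [0.0]
    sizes = [p["size"] for p in packets]
    ips = {p["src_ip"] for p in packets} | {p["dst_ip"] for p in packets}
    ports = {p["src_port"] for p in packets} | {p["dst_port"] for p in packets}
    return {
        "packets_all_count": len(packets),                                    # 9
        "ports_all_count": len(ports),                                        # 13
        "ips_all_count": len(ips),                                            # 25
        "tcp_syn_flag_count": sum("SYN" in p["tcp_flags"] for p in packets),  # 43
        "time_delta_mean": statistics.fmean(deltas),                          # 53
        "time_delta_max": max(deltas),                                        # 54
        "time_delta_min": min(deltas),                                        # 55
        "time_delta_std": statistics.pstdev(deltas),                          # 56
        "packet_size_mean": statistics.fmean(sizes),                          # 65
        "packet_size_std": statistics.pstdev(sizes),                          # 68
    }
```

Tumbling windows keep every packet in exactly one window; a sliding-window variant would smooth the features at the cost of recomputing overlapping statistics.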

| # | Feature Name | Context Group |
|---|---|---|
| 1 | Messages Count | Log Data Rate |
| 2 | Data Types List | Log Data Stats |
| 3 | Fragmented Packets | Fragmentation |
| 4 | IP Flags Maximum | Header Flags |
| 5 | TCP PSH Flag Count | |
| 6 | IPs All Count | Address Diversity |
| 7 | IPs Dst | |
| 8 | MACs Src | |
| 9 | Packets All Count | Packet Traffic Rate |
| 10 | Ports All | Network Multiplexing |
| 11 | Time Delta Mean | Timing Control |
| 12 | TTL Mean | |

| # | Feature Name | Context Group |
|---|---|---|
| 1 | Messages Count | Log Data Rate |
| 2 | Data Range Mean | Log Data Stats |
| 3 | Data Types List | |
| 4 | Fragmented Packets | Fragmentation |
| 5 | Packet Interval | Packet Traffic Rate |
| 6 | Packets All Count | |
| 7 | IPs Dst | Address Diversity |
| 8 | IPs All Count | |
| 9 | MACs Src | |
| 10 | Packet Size Std. Dev. | Size Length |
| 11 | Ports All | Network Multiplexing |
| 12 | Protocols All Count | |
| 13 | Time Delta Mean | Timing Control |
| 14 | TTL Mean | |
| 15 | Window Size Mean | |
| 16 | IP Flags Maximum | Header Flags |
| 17 | TCP PSH Flag Count | |
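The two tables above list a 12-feature and a 17-feature subset. Choosing among subsets of different sizes is a two-objective trade-off: a detection score to maximize and a subset size (a rough proxy for computational cost) to minimize. A standard way to compare such candidates is Pareto dominance. The Python sketch below keeps only the non-dominated candidates. It is a generic illustration of multi-objective comparison, not the authors' selection procedure, and the `Candidate` type and its fields are hypothetical.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    name: str
    score: float     # detection metric to maximize (e.g., F1-score)
    n_features: int  # subset size to minimize


def dominates(a: Candidate, b: Candidate) -> bool:
    """a dominates b if it is no worse on both objectives and strictly better on one."""
    no_worse = a.score >= b.score and a.n_features <= b.n_features
    strictly_better = a.score > b.score or a.n_features < b.n_features
    return no_worse and strictly_better


def pareto_front(cands: List[Candidate]) -> List[Candidate]:
    """Return the non-dominated candidates, ordered by subset size."""
    front = [c for c in cands if not any(dominates(o, c) for o in cands)]
    return sorted(front, key=lambda c: c.n_features)
```

For instance, with invented candidates scoring (0.90, 60 features), (0.89, 20), (0.88, 10), (0.85, 20), and (0.80, 35), the front keeps the first three: the 0.85 and 0.80 candidates are both dominated by the (0.89, 20) one. A final pick from the front still requires a preference, such as the smallest subset within a tolerance of the best score.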

| Algorithm | Metric | 2-Class Orig | 2-Class PCA | 2-Class FS | 8-Class Orig | 8-Class PCA | 8-Class FS | 50-Class Orig | 50-Class PCA | 50-Class FS |
|---|---|---|---|---|---|---|---|---|---|---|
| SVM | Accuracy | 0.9576 | 0.9448 | 0.9519 | 0.9349 | 0.8989 | 0.8982 | 0.7770 | 0.7105 | 0.7099 |
| | Precision | 0.9603 | 0.9493 | 0.9544 | 0.9377 | 0.9026 | 0.8988 | 0.7599 | 0.6949 | 0.6815 |
| | Recall | 0.9576 | 0.9448 | 0.9519 | 0.9349 | 0.8989 | 0.8982 | 0.7770 | 0.7105 | 0.7099 |
| | F1-score | 0.9577 | 0.9450 | 0.9520 | 0.9335 | 0.8956 | 0.8948 | 0.7550 | 0.6845 | 0.6947 |
| KNN | Accuracy | 0.9649 | 0.9636 | 0.9638 | 0.9408 | 0.9333 | 0.9323 | 0.7911 | 0.7826 | 0.7639 |
| | Precision | 0.9663 | 0.9648 | 0.9651 | 0.9420 | 0.9344 | 0.9330 | 0.7763 | 0.7684 | 0.7463 |
| | Recall | 0.9649 | 0.9636 | 0.9638 | 0.9408 | 0.9333 | 0.9323 | 0.7911 | 0.7826 | 0.7639 |
| | F1-score | 0.9650 | 0.9637 | 0.9639 | 0.9402 | 0.9325 | 0.9315 | 0.7811 | 0.7730 | 0.7529 |
| Random Forest | Accuracy | 0.9843 | 0.9701 | 0.9831 | 0.9781 | 0.9435 | 0.9749 | 0.8482 | 0.8079 | 0.8269 |
| | Precision | 0.9845 | 0.9707 | 0.9833 | 0.9783 | 0.9441 | 0.9752 | 0.8409 | 0.7965 | 0.8183 |
| | Recall | 0.9843 | 0.9701 | 0.9831 | 0.9781 | 0.9435 | 0.9749 | 0.8482 | 0.8079 | 0.8269 |
| | F1-score | 0.9843 | 0.9701 | 0.9831 | 0.9780 | 0.9429 | 0.9748 | 0.8439 | 0.8007 | 0.8221 |
| Decision Tree | Accuracy | 0.9743 | 0.9510 | 0.9727 | 0.9628 | 0.9084 | 0.9572 | 0.8280 | 0.7640 | 0.7949 |
| | Precision | 0.9744 | 0.9510 | 0.9728 | 0.9630 | 0.9086 | 0.9572 | 0.8279 | 0.7621 | 0.7946 |
| | Recall | 0.9743 | 0.9510 | 0.9727 | 0.9628 | 0.9084 | 0.9572 | 0.8280 | 0.7640 | 0.7949 |
| | F1-score | 0.9743 | 0.9510 | 0.9727 | 0.9627 | 0.9083 | 0.9571 | 0.8277 | 0.7624 | 0.7944 |
| Logistic Reg. | Accuracy | 0.9424 | 0.9173 | 0.9246 | 0.9187 | 0.8084 | 0.8478 | 0.7792 | 0.7287 | 0.7299 |
| | Precision | 0.9455 | 0.9245 | 0.9289 | 0.9205 | 0.8066 | 0.8439 | 0.7539 | 0.6942 | 0.6840 |
| | Recall | 0.9424 | 0.9173 | 0.9246 | 0.9187 | 0.8084 | 0.8478 | 0.7792 | 0.7287 | 0.7299 |
| | F1-score | 0.9426 | 0.9176 | 0.9249 | 0.9172 | 0.8007 | 0.8409 | 0.7628 | 0.6968 | 0.6979 |
| Naïve Bayes | Accuracy | 0.9127 | 0.7423 | 0.8369 | 0.7614 | 0.6356 | 0.6257 | 0.4539 | 0.4693 | 0.4703 |
| | Precision | 0.9234 | 0.7665 | 0.8708 | 0.8163 | 0.7433 | 0.7618 | 0.6830 | 0.6268 | 0.5227 |
| | Recall | 0.9127 | 0.7423 | 0.8369 | 0.7614 | 0.6356 | 0.6257 | 0.4539 | 0.4693 | 0.4703 |
| | F1-score | 0.9130 | 0.7422 | 0.8365 | 0.7762 | 0.6496 | 0.6389 | 0.5059 | 0.5100 | 0.4916 |
| XGBoost | Accuracy | 0.8011 | 0.9576 | 0.9649 | 0.7065 | 0.7433 | 0.7353 | 0.6468 | 0.6090 | 0.6266 |
| | Precision | 0.8431 | 0.9586 | 0.9662 | 0.7886 | 0.7438 | 0.7637 | 0.7567 | 0.6854 | 0.6784 |
| | Recall | 0.8011 | 0.9577 | 0.9649 | 0.7065 | 0.7433 | 0.7353 | 0.6468 | 0.6190 | 0.6266 |
| | F1-score | 0.7886 | 0.9576 | 0.9650 | 0.7071 | 0.7426 | 0.7125 | 0.6778 | 0.6413 | 0.6535 |
| HybridML1 | Accuracy | 0.8943 | 0.9502 | 0.9524 | 0.7293 | 0.7444 | 0.7565 | 0.5938 | 0.6075 | 0.6514 |
| | Precision | 0.9052 | 0.9508 | 0.9526 | 0.7984 | 0.7448 | 0.7742 | 0.7572 | 0.6643 | 0.6956 |
| | Recall | 0.8943 | 0.9502 | 0.9524 | 0.7293 | 0.7444 | 0.7565 | 0.5938 | 0.6275 | 0.6514 |
| | F1-score | 0.8922 | 0.9503 | 0.9523 | 0.7244 | 0.7439 | 0.7399 | 0.6353 | 0.6493 | 0.6729 |
| HybridML2 | Accuracy | 0.9098 | 0.9579 | 0.9592 | 0.7749 | 0.7467 | 0.7729 | 0.5388 | 0.6097 | 0.6225 |
| | Precision | 0.9169 | 0.9585 | 0.9592 | 0.8193 | 0.7472 | 0.7846 | 0.7558 | 0.6780 | 0.6868 |
| | Recall | 0.9098 | 0.9569 | 0.9592 | 0.7749 | 0.7467 | 0.7729 | 0.5388 | 0.6197 | 0.6225 |
| | F1-score | 0.9085 | 0.9580 | 0.9592 | 0.7741 | 0.7462 | 0.7526 | 0.5798 | 0.6322 | 0.6554 |
| CNN | Accuracy | 0.9736 | 0.9570 | 0.9642 | 0.9533 | 0.9025 | 0.9209 | 0.7881 | 0.7295 | 0.7351 |
| | Precision | 0.9522 | 0.9251 | 0.9406 | 0.9543 | 0.8996 | 0.9176 | 0.7918 | 0.6986 | 0.7088 |
| | Recall | 0.9894 | 0.9813 | 0.9800 | 0.9533 | 0.9025 | 0.9209 | 0.7881 | 0.7295 | 0.7351 |
| | F1-score | 0.9704 | 0.9524 | 0.9599 | 0.9530 | 0.8989 | 0.9183 | 0.7675 | 0.7005 | 0.7228 |
| LSTM | Accuracy | 0.9757 | 0.9816 | 0.9845 | 0.9328 | 0.9251 | 0.9209 | 0.6901 | 0.6785 | 0.6798 |
| | Precision | 0.9757 | 0.9810 | 0.9845 | 0.9334 | 0.9267 | 0.9253 | 0.7199 | 0.7011 | 0.6985 |
| | Recall | 0.9757 | 0.9826 | 0.9845 | 0.9328 | 0.9251 | 0.9209 | 0.6901 | 0.6785 | 0.6798 |
| | F1-score | 0.9757 | 0.9816 | 0.9845 | 0.9338 | 0.9255 | 0.9222 | 0.6806 | 0.6710 | 0.6808 |
| BiLSTM | Accuracy | 0.9803 | 0.9795 | 0.9780 | 0.9363 | 0.9351 | 0.9260 | 0.6576 | 0.6453 | 0.6312 |
| | Precision | 0.9803 | 0.9793 | 0.9780 | 0.9377 | 0.9379 | 0.9293 | 0.6605 | 0.6611 | 0.6328 |
| | Recall | 0.9803 | 0.9785 | 0.9780 | 0.9363 | 0.9351 | 0.9260 | 0.6576 | 0.6453 | 0.6312 |
| | F1-score | 0.9803 | 0.9786 | 0.9780 | 0.9367 | 0.9360 | 0.9265 | 0.6365 | 0.6541 | 0.6326 |
| CNN-LSTM | Accuracy | 0.9803 | 0.9795 | 0.9780 | 0.9363 | 0.9351 | 0.9260 | 0.6576 | 0.6453 | 0.6312 |
| | Precision | 0.9803 | 0.9793 | 0.9780 | 0.9377 | 0.9379 | 0.9293 | 0.6605 | 0.6611 | 0.6328 |
| | Recall | 0.9803 | 0.9785 | 0.9780 | 0.9363 | 0.9351 | 0.9260 | 0.6576 | 0.6453 | 0.6312 |
| | F1-score | 0.9803 | 0.9786 | 0.9780 | 0.9367 | 0.9360 | 0.9265 | 0.6365 | 0.6541 | 0.6326 |
| BiCNN-LSTM | Accuracy | 0.9788 | 0.9808 | 0.9811 | 0.9545 | 0.9472 | 0.9340 | 0.6532 | 0.6434 | 0.6454 |
| | Precision | 0.9788 | 0.9801 | 0.9811 | 0.9563 | 0.9508 | 0.9342 | 0.6674 | 0.6567 | 0.6641 |
| | Recall | 0.9788 | 0.9807 | 0.9811 | 0.9545 | 0.9472 | 0.9340 | 0.6532 | 0.6434 | 0.6454 |
| | F1-score | 0.9788 | 0.9806 | 0.9811 | 0.9551 | 0.9483 | 0.9337 | 0.6358 | 0.6360 | 0.6518 |
| GRU | Accuracy | 0.9765 | 0.9874 | 0.9847 | 0.9530 | 0.9522 | 0.9274 | 0.6448 | 0.6356 | 0.6253 |
| | Precision | 0.9766 | 0.9874 | 0.9847 | 0.9566 | 0.9562 | 0.9283 | 0.6654 | 0.6505 | 0.6352 |
| | Recall | 0.9765 | 0.9874 | 0.9847 | 0.9530 | 0.9522 | 0.9274 | 0.6448 | 0.6356 | 0.6253 |
| | F1-score | 0.9765 | 0.9874 | 0.9847 | 0.9542 | 0.9535 | 0.9275 | 0.6370 | 0.6427 | 0.6301 |
| BiGRU | Accuracy | 0.9815 | 0.9839 | 0.9828 | 0.9467 | 0.9458 | 0.9474 | 0.6649 | 0.6379 | 0.6138 |
| | Precision | 0.9815 | 0.9840 | 0.9829 | 0.9456 | 0.9401 | 0.9501 | 0.6881 | 0.6667 | 0.6144 |
| | Recall | 0.9815 | 0.9839 | 0.9828 | 0.9467 | 0.9458 | 0.9474 | 0.6649 | 0.6479 | 0.6138 |
| | F1-score | 0.9815 | 0.9839 | 0.9828 | 0.9457 | 0.9473 | 0.9478 | 0.6643 | 0.6434 | 0.6141 |
| Transformer | Accuracy | 0.9753 | 0.9692 | 0.9700 | 0.9499 | 0.9319 | 0.9383 | 0.6727 | 0.6571 | 0.6419 |
| | Precision | 0.9754 | 0.9682 | 0.9701 | 0.9518 | 0.9368 | 0.9431 | 0.7010 | 0.6756 | 0.6573 |
| | Recall | 0.9753 | 0.9686 | 0.9700 | 0.9499 | 0.9319 | 0.9383 | 0.6727 | 0.6371 | 0.6419 |
| | F1-score | 0.9753 | 0.9684 | 0.9700 | 0.9503 | 0.9337 | 0.9397 | 0.6643 | 0.6470 | 0.6482 |
| DeepTransformer | Accuracy | 0.9736 | 0.9701 | 0.9746 | 0.9470 | 0.9409 | 0.9470 | 0.6779 | 0.6824 | 0.6888 |
| | Precision | 0.9737 | 0.9711 | 0.9746 | 0.9476 | 0.9466 | 0.9480 | 0.6989 | 0.7016 | 0.7153 |
| | Recall | 0.9736 | 0.9721 | 0.9746 | 0.9470 | 0.9409 | 0.9470 | 0.6709 | 0.6814 | 0.6888 |
| | F1-score | 0.9736 | 0.9716 | 0.9746 | 0.9467 | 0.9421 | 0.9473 | 0.6717 | 0.6926 | 0.6943 |
| ResNet1D | Accuracy | 0.9839 | 0.9807 | 0.9822 | 0.9539 | 0.9576 | 0.9455 | 0.6983 | 0.6952 | 0.6918 |
| | Precision | 0.9840 | 0.9807 | 0.9824 | 0.9549 | 0.9591 | 0.9492 | 0.7236 | 0.7264 | 0.7111 |
| | Recall | 0.9839 | 0.9807 | 0.9822 | 0.9539 | 0.9576 | 0.9455 | 0.6983 | 0.6952 | 0.6918 |
| | F1-score | 0.9839 | 0.9807 | 0.9822 | 0.9541 | 0.9577 | 0.9465 | 0.6884 | 0.6948 | 0.6964 |
| DeepResNet1D | Accuracy | 0.9828 | 0.9851 | 0.9839 | 0.9643 | 0.9453 | 0.9537 | 0.7079 | 0.6426 | 0.6549 |
| | Precision | 0.9829 | 0.9851 | 0.9840 | 0.9655 | 0.9459 | 0.9569 | 0.7386 | 0.6850 | 0.6836 |
| | Recall | 0.9828 | 0.9851 | 0.9839 | 0.9643 | 0.9453 | 0.9537 | 0.7079 | 0.6526 | 0.6549 |
| | F1-score | 0.9828 | 0.9851 | 0.9839 | 0.9646 | 0.9454 | 0.9546 | 0.7035 | 0.6622 | 0.6689 |
| AutoEncoder | Accuracy | 0.9389 | 0.9387 | 0.9305 | 0.8972 | 0.8651 | 0.7938 | 0.6698 | 0.6437 | 0.5742 |
| | Precision | 0.9404 | 0.9428 | 0.9387 | 0.8964 | 0.8634 | 0.7943 | 0.6189 | 0.5831 | 0.4810 |
| | Recall | 0.9389 | 0.9387 | 0.9305 | 0.8972 | 0.8651 | 0.7938 | 0.6698 | 0.6437 | 0.5742 |
| | F1-score | 0.9390 | 0.9389 | 0.9308 | 0.8922 | 0.8585 | 0.7844 | 0.6288 | 0.6034 | 0.5512 |
| RNN | Accuracy | 0.9897 | 0.9807 | 0.9878 | 0.9595 | 0.9461 | 0.9473 | 0.7201 | 0.6684 | 0.6697 |
| | Precision | 0.9895 | 0.9787 | 0.9798 | 0.9625 | 0.9467 | 0.9501 | 0.7256 | 0.6705 | 0.6923 |
| | Recall | 0.9870 | 0.9774 | 0.9830 | 0.9595 | 0.9461 | 0.9473 | 0.7201 | 0.6624 | 0.6697 |
| | F1-score | 0.9882 | 0.9780 | 0.9814 | 0.9605 | 0.9460 | 0.9475 | 0.7156 | 0.6641 | 0.6892 |
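In most row blocks of the table above, the Recall row repeats the Accuracy row exactly (SVM, KNN, and Random Forest match in all nine columns, for example). This is what one expects when per-class scores are averaged with weights proportional to class support $n_c$: weighted recall $= \sum_c \frac{n_c}{N}\cdot\frac{TP_c}{n_c} = \frac{\sum_c TP_c}{N}$, which is exactly accuracy. The section does not state the averaging scheme, so support weighting is an assumption here. The pure-Python sketch below computes accuracy and support-weighted precision, recall, and F1 from label lists and makes the identity visible. The function name `weighted_prf` is hypothetical.

```python
from collections import Counter
from typing import Sequence, Tuple


def weighted_prf(y_true: Sequence[str], y_pred: Sequence[str]) -> Tuple[float, float, float, float]:
    """Return (accuracy, precision, recall, F1), with per-class scores
    averaged using weights n_c / N (class support over total samples)."""
    n = len(y_true)
    support = Counter(y_true)                                  # n_c per true class
    predicted = Counter(y_pred)                                # predictions per class
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # TP_c per class
    precision = recall = f1 = 0.0
    for cls, n_c in support.items():
        p_c = tp[cls] / predicted[cls] if predicted[cls] else 0.0
        r_c = tp[cls] / n_c
        f_c = 2 * p_c * r_c / (p_c + r_c) if p_c + r_c else 0.0
        w = n_c / n
        precision += w * p_c
        recall += w * r_c  # sums to (sum of TP_c) / N, i.e., accuracy
        f1 += w * f_c
    accuracy = sum(tp.values()) / n
    return accuracy, precision, recall, f1
```

Weighted precision and F1 do not collapse the same way, because their per-class denominators are predicted counts rather than true-class supports. That is why those rows differ from Accuracy while Recall usually does not.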

| Dataset | Network + Sensor Data | Attack Diversity | # Attack Types | # Attack Categories | Device Diversity | No. Devices | ML/DL Eval. | Feature Sel. | Resource Util. | Rank |
|---|---|---|---|---|---|---|---|---|---|---|
| WUSTL-IIoT | - | - | 4 | 4 | - | 8 | - | - | - | 30.5 |
| IoT-23 | - | - | 10 | 2 | - | 4 | ✓ | - | - | 38.1 |
| MQTT-IoT-IDS | - | - | 8 | 4 | ✓ | 15 | ✓ | - | - | 46.6 |
| N-BaIoT | - | - | 10 | 2 | - | 9 | ✓ | ✓ | - | 51.8 |
| MQTTset | - | - | 5 | 5 | - | 10 | ✓ | ✓ | - | 53.7 |
| BoT-IoT | - | - | 10 | 4 | - | 7 | ✓ | ✓ | - | 53.9 |
| ToN_IoT | ✓ | - | 9 | 6 | - | 10 | ✓ | - | - | 62.4 |
| X-IIoTID | ✓ | ✓ | 18 | 9 | ✓ | 15 | ✓ | - | - | 70.0 |
| CICIoT2023 | - | ✓ | 33 | 7 | ✓ | 105 | ✓ | ✓ | - | 73.0 |
| Edge-IIoTset | ✓ | - | 14 | 4 | - | 10 | ✓ | ✓ | - | 74.4 |
| DataSense | ✓ | ✓ | 49 | 7 | ✓ | 40 | ✓ | ✓ | ✓ | 98.5 |

| Category | Attack | Edge-IIoTset | X-IIoTID | WUSTL-IIoT | IoT-23 | BoT-IoT | ToN_IoT | MQTTset | N-BaIoT | MQTT-IoT-IDS | DataSense |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Recon | Host Discovery-TCP Ack Ping | - | - | - | - | - | - | - | - | - | ✓ |
| | Host Discovery-TCP Syn Stealth | - | - | - | - | - | - | - | - | - | ✓ |
| | Host Discovery-ARP Ping | - | - | - | - | - | - | - | - | - | ✓ |
| | Host Discovery-UDP Ping | - | - | - | - | - | - | - | - | - | ✓ |
| | Host Discovery-TCP Syn Ping | - | ✓ | - | - | - | - | - | - | - | ✓ |
| | Port Scan | ✓ | ✓ | ✓ | - | ✓ | ✓ | - | ✓ | ✓ | ✓ |
| | Vulnerability Scan | ✓ | ✓ | - | - | - | - | - | ✓ | ✓ | ✓ |
| | Ping Sweep | - | - | - | - | - | - | - | - | - | ✓ |
| | OS Scan | ✓ | ✓ | - | - | ✓ | - | - | - | ✓ | ✓ |
| DoS | TCP Syn Flood | ✓ | - | ✓ | - | - | - | ✓ | ✓ | ✓ | ✓ |
| | Synonymous IP Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | Slowloris | - | - | - | - | - | - | - | - | - | ✓ |
| | UDP Fragmentation Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | RST FIN Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | ICMP Flood | ✓ | - | - | - | - | - | - | - | - | ✓ |
| | UDP Flood | ✓ | - | - | - | ✓ | - | - | ✓ | - | ✓ |
| | HTTP Flood | ✓ | - | - | - | ✓ | - | - | ✓ | - | ✓ |
| | Push Ack Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | Ack Fragmentation Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | ICMP Fragmentation Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | TCP Flood | - | - | - | - | ✓ | ✓ | - | ✓ | - | ✓ |
| | MQTT Connect Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | MQTT Publish Flood | - | - | - | - | - | - | ✓ | - | - | ✓ |
| DDoS | UDP Flood | ✓ | - | - | - | ✓ | - | - | ✓ | - | ✓ |
| | HTTP Flood | ✓ | - | - | - | ✓ | - | - | ✓ | - | ✓ |
| | Slowloris | - | - | - | - | - | - | - | - | - | ✓ |
| | Push Ack Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | TCP Flood | - | - | - | - | ✓ | ✓ | - | ✓ | - | ✓ |
| | Synonymous IP Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | UDP Fragmentation Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | Ack Fragmentation Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | TCP Syn Flood | ✓ | - | - | - | - | - | - | ✓ | ✓ | ✓ |
| | MQTT Publish Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | MQTT Connect Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | ICMP Fragmentation Flood | - | - | - | - | - | - | - | - | - | ✓ |
| | ICMP Flood | ✓ | - | - | - | - | - | - | - | - | ✓ |
| | RST FIN Flood | - | - | - | - | - | - | - | - | - | ✓ |
| MitM | IP Spoofing | - | - | - | - | - | - | - | - | - | ✓ |
| | ARP Spoofing | ✓ | ✓ | - | - | - | ✓ | - | - | ✓ | ✓ |
| | Impersonation | - | - | - | - | - | - | - | - | - | ✓ |
| BruteForce | Dictionary Telnet | - | - | - | - | - | - | ✓ | - | - | ✓ |
| | Dictionary SSH | - | ✓ | - | - | - | ✓ | - | - | ✓ | ✓ |
| Web | XSS | ✓ | - | - | - | - | ✓ | - | - | - | ✓ |
| | Command Injection | - | - | ✓ | - | - | ✓ | - | - | - | ✓ |
| | SQL Injection | ✓ | - | - | - | - | - | - | - | - | ✓ |
| | SQL Injection Blind | - | - | - | - | - | - | - | - | - | ✓ |
| | Backdoor Upload | ✓ | ✓ | ✓ | - | - | ✓ | - | - | - | ✓ |
| Malware | Mirai Syn Flood | ✓ | ✓ | - | ✓ | - | - | - | ✓ | - | ✓ |
| | Mirai UDP Flood | - | - | - | ✓ | - | - | - | ✓ | - | ✓ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Firouzi, A.; Dadkhah, S.; Maret, S.A.; Ghorbani, A.A. DataSense: A Real-Time Sensor-Based Benchmark Dataset for Attack Analysis in IIoT with Multi-Objective Feature Selection. Electronics 2025, 14, 4095. https://doi.org/10.3390/electronics14204095

Firouzi A, Dadkhah S, Maret SA, Ghorbani AA. DataSense: A Real-Time Sensor-Based Benchmark Dataset for Attack Analysis in IIoT with Multi-Objective Feature Selection. Electronics. 2025; 14(20):4095. https://doi.org/10.3390/electronics14204095

Chicago/Turabian Style: Firouzi, Amir, Sajjad Dadkhah, Sebin Abraham Maret, and Ali A. Ghorbani. 2025. "DataSense: A Real-Time Sensor-Based Benchmark Dataset for Attack Analysis in IIoT with Multi-Objective Feature Selection" Electronics 14, no. 20: 4095. https://doi.org/10.3390/electronics14204095

APA Style: Firouzi, A., Dadkhah, S., Maret, S. A., & Ghorbani, A. A. (2025). DataSense: A Real-Time Sensor-Based Benchmark Dataset for Attack Analysis in IIoT with Multi-Objective Feature Selection. Electronics, 14(20), 4095. https://doi.org/10.3390/electronics14204095