An Energy-Aware Generative AI Edge Inference Framework for Low-Power IoT Devices

Abstract

1. Introduction

2. Related Works

2.1. Application Scenarios and Challenges

2.2. Review of Mainstream Approaches

2.3. Closely Related Studies

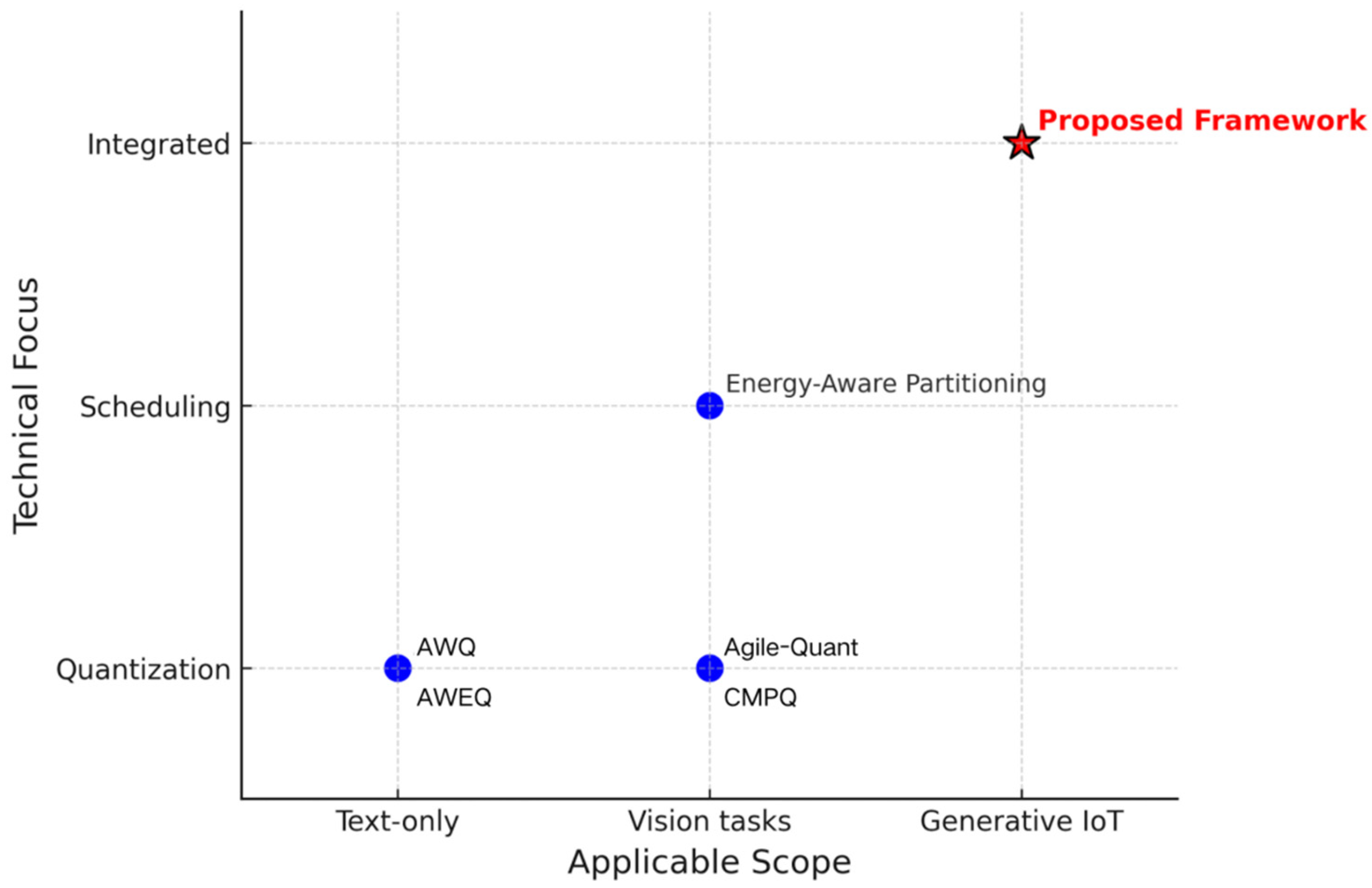

2.4. Summary

3. Methodology

3.1. Problem Formulation

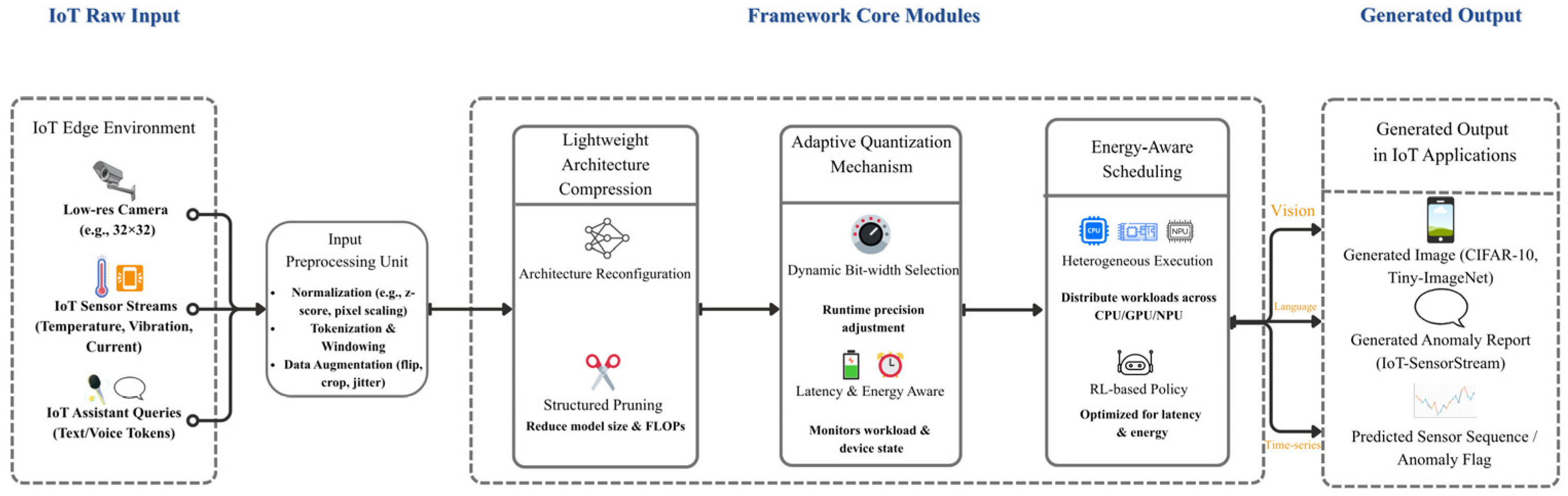

3.2. Overall Framework

3.3. Module Descriptions

3.3.1. Lightweight Architecture Compression

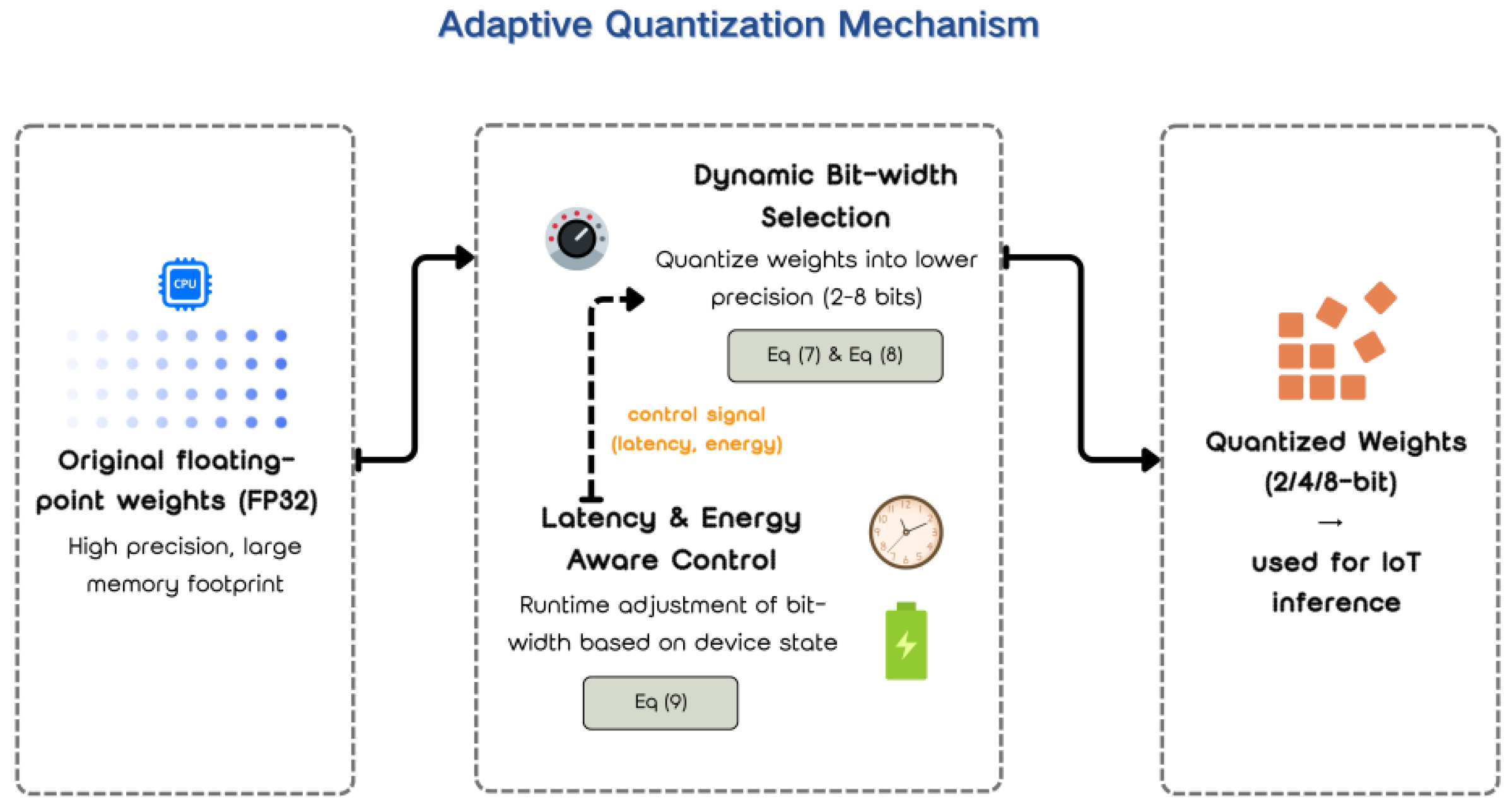

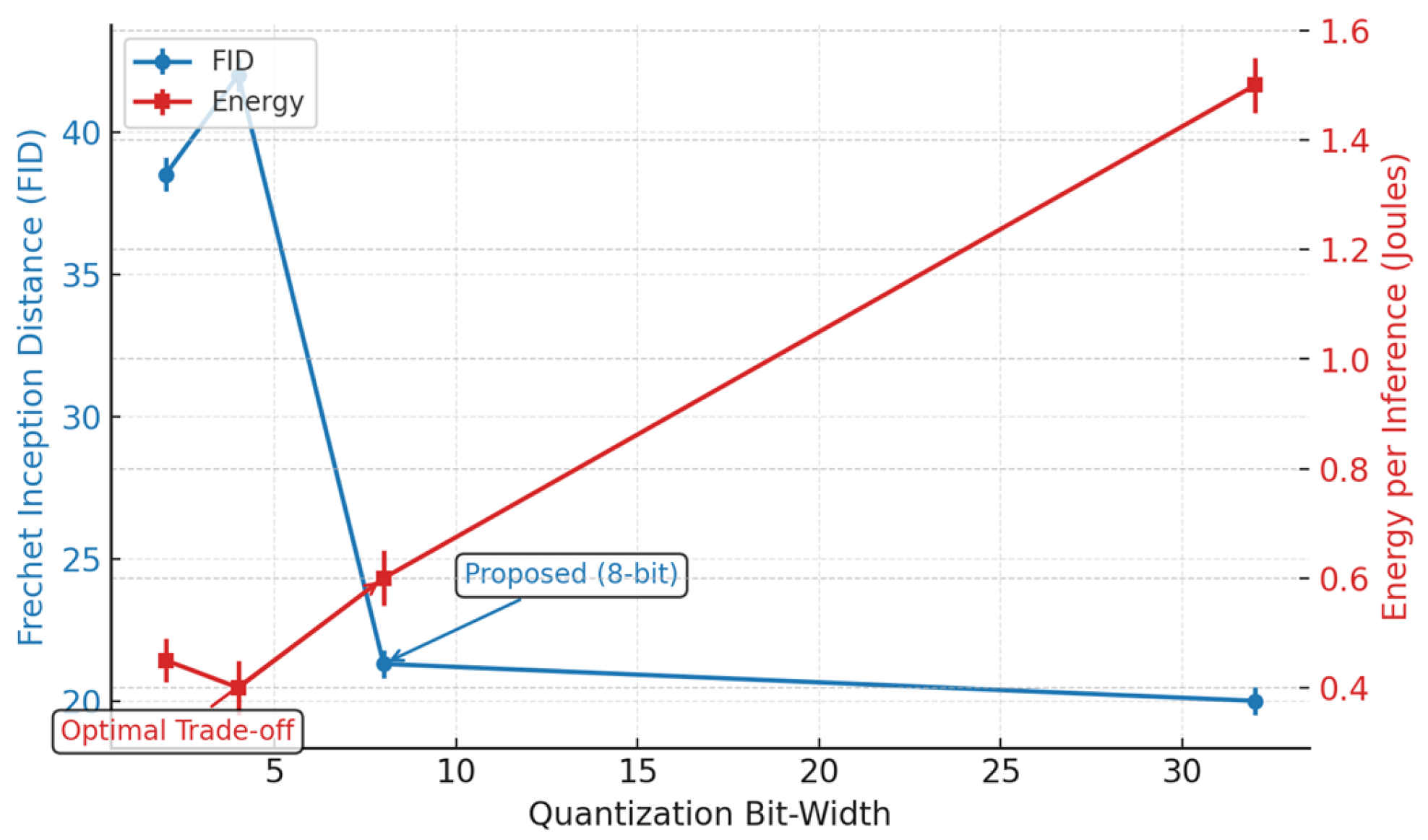

3.3.2. Adaptive Quantization Mechanism

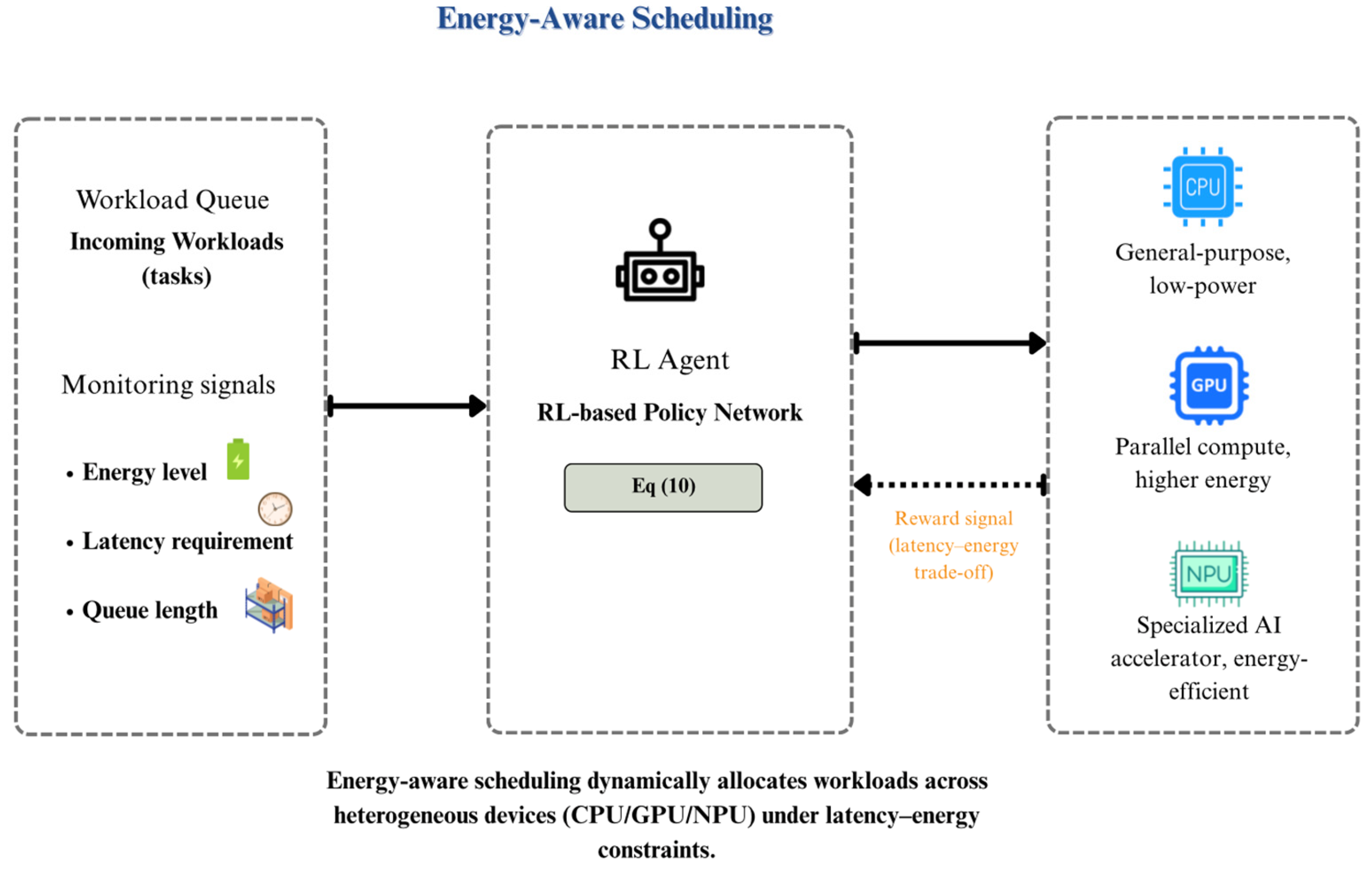

3.3.3. Energy-Aware Scheduling

3.3.4. Pseudocode

| Algorithm 1: Generative AI Edge Inference |
|---|
| Input: Data x, device constraints C |
| Output: Generated result y_hat |
| 1: θ ← Initialize model parameters |
| 2: θ_c ← Apply Lightweight Compression(θ) # one-time cost of O(Np), where Np is the number of parameters; applied during model setup. |
| 3: while inference do # runtime cost O(W), proportional to the number of weights; overhead is <5% of inference time. |
| 4: b ← AdaptiveQuantization(L_t, E_t, C) |
| 5: assign ← EnergyAwareScheduler(devices, b) # RL-based decision per task with complexity O(S), where S is the number of candidate devices; negligible (<1 ms) in practice. |
| 6: y_hat ← Inference(θ_c, x, assign, b) |
| 7: return y_hat |
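The control flow of Algorithm 1 can be sketched in a few lines of Python. This is only an illustration under loud assumptions: magnitude pruning stands in for Lightweight Compression, a threshold rule stands in for AdaptiveQuantization, and a greedy minimum-energy choice replaces the RL-based EnergyAwareScheduler. Every function name, field, threshold, and cost value below is hypothetical and not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    energy_per_bit: float  # hypothetical cost model: joules per bit of precision
    latency_ms: float      # hypothetical per-inference latency


@dataclass
class Constraints:
    max_latency_ms: float
    energy_budget_j: float


def lightweight_compression(theta, keep_ratio=0.5):
    """Stand-in for step 2: magnitude pruning that zeroes the smallest weights."""
    k = max(1, int(len(theta) * keep_ratio))
    threshold = sorted((abs(w) for w in theta), reverse=True)[k - 1]
    return [w if abs(w) >= threshold else 0.0 for w in theta]


def adaptive_quantization(latency_ms, energy_j, c):
    """Stand-in for step 4: choose bit-width b from current latency L_t and energy E_t."""
    if energy_j > c.energy_budget_j or latency_ms > c.max_latency_ms:
        return 4   # over budget: drop to the lowest precision
    if energy_j > 0.5 * c.energy_budget_j:
        return 8
    return 16


def energy_aware_scheduler(devices, b, c):
    """Stand-in for step 5: greedy O(S) scan over devices, not an RL policy."""
    feasible = [d for d in devices if d.latency_ms <= c.max_latency_ms] or devices
    return min(feasible, key=lambda d: d.energy_per_bit * b)


def quantize(w, b, w_max=1.0):
    """Uniform symmetric quantization of one weight to b bits."""
    levels = 2 ** (b - 1) - 1
    clipped = max(-w_max, min(w_max, w))
    return round(clipped / w_max * levels) / levels * w_max


def inference(theta_c, x, device, b):
    """Stand-in for step 6: a dot product using quantized weights."""
    y_hat = sum(quantize(w, b) * xi for w, xi in zip(theta_c, x))
    return y_hat, device.energy_per_bit * b


theta = [0.9, -0.05, 0.4, 0.01]
theta_c = lightweight_compression(theta)  # one-time setup cost
devices = [Device("edge-gpu", 0.02, 6.0), Device("cpu-only", 0.05, 12.0)]
c = Constraints(max_latency_ms=10.0, energy_budget_j=1.0)

b = adaptive_quantization(latency_ms=5.0, energy_j=0.7, c=c)
assign = energy_aware_scheduler(devices, b, c)
y_hat, energy_j = inference(theta_c, [1.0, 1.0, 1.0, 1.0], assign, b)
print(b, assign.name, round(y_hat, 3), round(energy_j, 3))
```

The point of the sketch is the ordering: compression runs once at setup, while the bit-width and device assignment are re-evaluated for each request inside the loop.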

3.4. Objective Function and Optimization

- Reconstruction Loss
- Adversarial Loss
- KL Divergence Loss
- Compression Regularization
- Quantization Error Loss
- Scheduling Cost
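The six named terms suggest a weighted multi-term objective. Since the equations themselves are not available here, the sketch below uses common textbook forms as stand-ins: mean squared error for reconstruction, the non-saturating generator loss for the adversarial term, the closed-form Gaussian KL divergence, an L1 penalty for compression, squared weight error for quantization, and a weighted latency-plus-energy sum for scheduling. These forms, the weights, and all function names are assumptions and may differ from the paper's definitions.

```python
import math


def reconstruction_loss(x, x_hat):
    """Mean squared error between input and reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def adversarial_loss(d_fake):
    """Non-saturating generator loss: -mean(log D(G(z)))."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


def kl_divergence(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)) for a diagonal Gaussian."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def compression_reg(theta):
    """L1 norm of the weights, which favors sparsity."""
    return sum(abs(w) for w in theta)


def quantization_error(theta, theta_q):
    """Squared distance between full-precision and quantized weights."""
    return sum((w - q) ** 2 for w, q in zip(theta, theta_q))


def scheduling_cost(latency_ms, energy_j, alpha=0.1, beta=1.0):
    """Weighted latency and energy of the selected device (alpha, beta hypothetical)."""
    return alpha * latency_ms + beta * energy_j


def total_loss(terms, weights):
    """Weighted sum over the named loss terms."""
    return sum(weights[name] * value for name, value in terms.items())


terms = {
    "rec": reconstruction_loss([1.0, 0.0], [0.5, 0.5]),
    "adv": adversarial_loss([0.5, 0.5]),
    "kl": kl_divergence([0.0, 0.0], [0.0, 0.0]),
    "comp": compression_reg([0.5, -0.25]),
    "quant": quantization_error([0.5, -0.25], [0.5, -0.5]),
    "sched": scheduling_cost(latency_ms=5.6, energy_j=0.6),
}
# Hypothetical trade-off coefficients, chosen only to make the example concrete.
weights = {"rec": 1.0, "adv": 0.1, "kl": 0.01, "comp": 0.001, "quant": 0.1, "sched": 0.05}
print(round(total_loss(terms, weights), 4))
```

Keeping each term as a separate function makes it straightforward to drop one at a time, which mirrors how an ablation over the three modules would be run.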

4. Experiment and Results

4.1. Experimental Setups

4.1.1. Datasets

4.1.2. Hardware Configuration

4.1.3. Evaluation Metrics

4.1.4. Training Protocol

4.2. Baselines

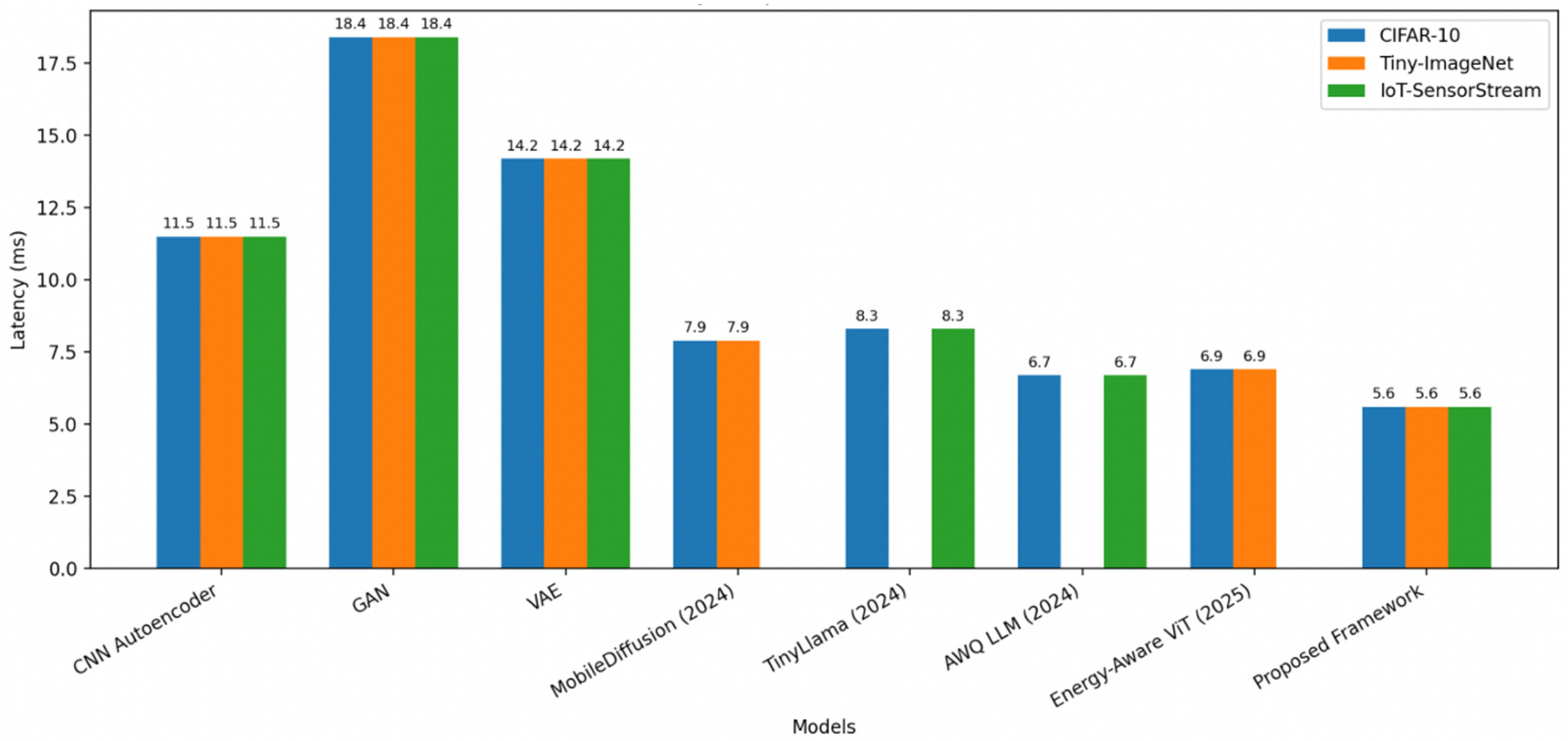

4.3. Quantitative Results

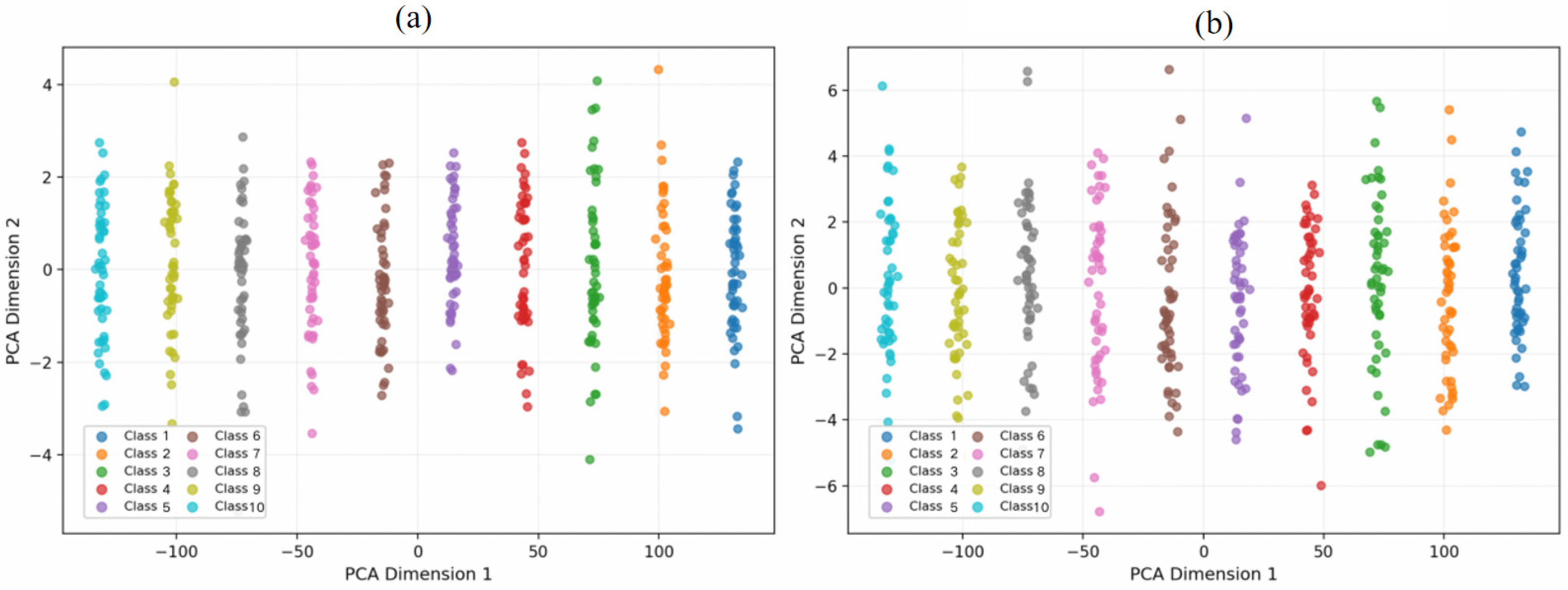

4.4. Qualitative Results

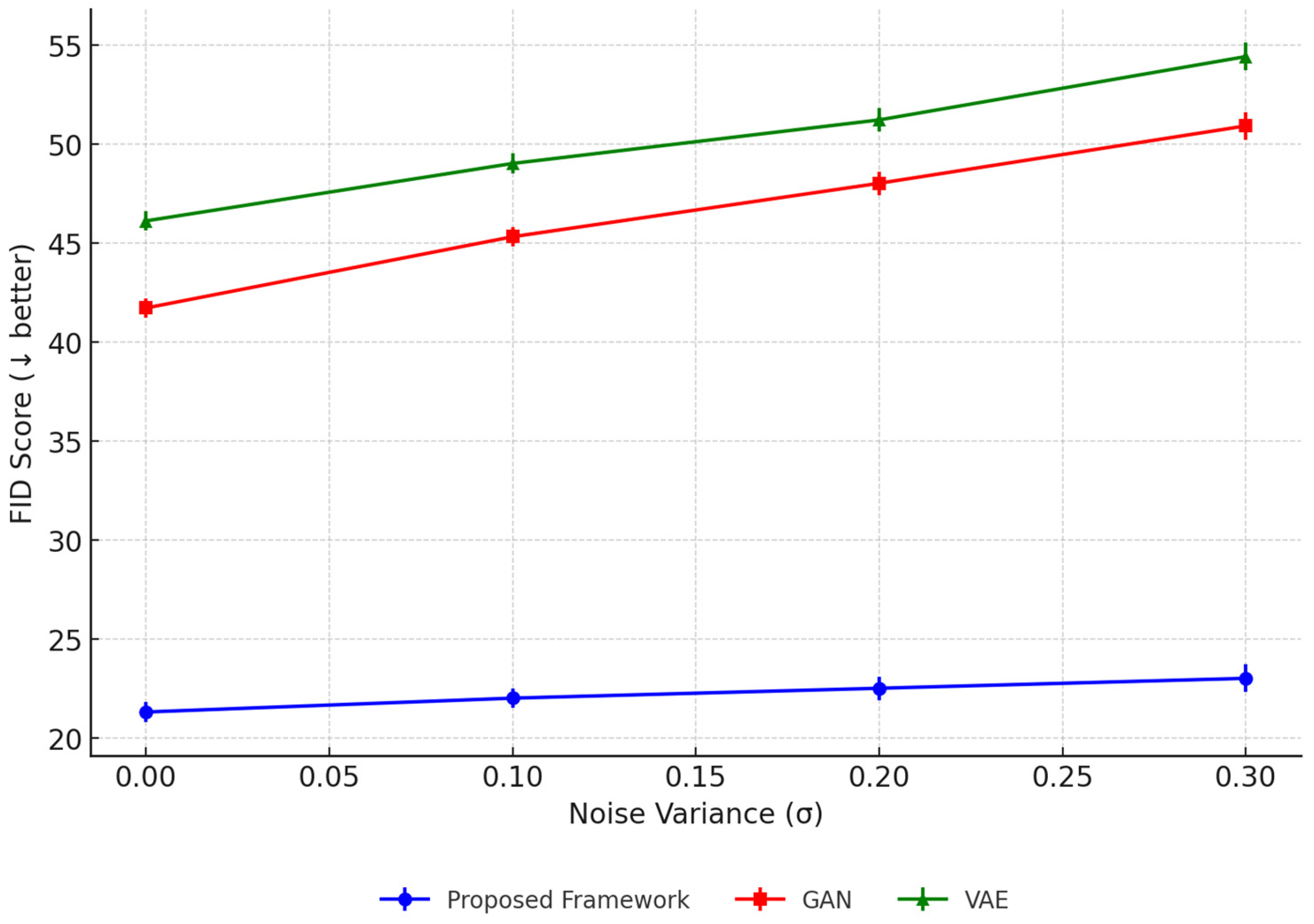

4.5. Robustness

4.6. Ablation Study

4.7. Summary of Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Symbol | Definition |
|---|---|
| x | Input sample (e.g., image pixels, token embeddings, or sensor readings) |
| | Generated output sequence or image |
| | Model-predicted generative output |
| | Training dataset |
| θ | Model parameters (weights and biases) |
| θ_c | Compressed model parameters after pruning and reconfiguration |
| C | Device constraints (energy, memory, latency) |
| | Energy consumption per inference (Joules) |
| | Instantaneous power at time t (Watts) |
| | Sampling interval for power measurement (seconds) |
| | Number of inference operations |
| | Memory usage (MB) |
| | Inference latency (milliseconds) |
| b | Quantization bit-width |
| | Quantization operator at bit-width b |
| | Regularization coefficient for compression |
| | Trade-off coefficients balancing fidelity, latency, and energy |
| | Scheduling policy (reinforcement learning agent) |
| | Device selected for execution |
| | Latency on device d |
| | Energy consumption on device d |
| FID | Fréchet Inception Distance (image fidelity metric) |
| BLEU | Bilingual Evaluation Understudy (text generation metric) |
| IS | Inception Score (image quality metric) |
| MSE | Mean Squared Error (time-series reconstruction metric) |
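The table defines energy per inference alongside instantaneous power, a sampling interval, and a count of inference operations. One conventional way to combine these quantities is to integrate the sampled power over the measurement window and divide by the number of inferences. The sketch below assumes exactly that relation (a rectangle-rule sum); the function name and the sample readings are hypothetical.

```python
def energy_per_inference(power_w, dt_s, n_inferences):
    """Rectangle-rule integral of sampled power (W), divided by the inference count."""
    if n_inferences <= 0:
        raise ValueError("n_inferences must be positive")
    total_joules = sum(power_w) * dt_s
    return total_joules / n_inferences


# Ten hypothetical power readings (W), sampled every 0.5 s while 5 inferences ran.
samples = [2.0, 2.5, 3.0, 3.0, 2.5, 2.0, 2.5, 3.0, 3.0, 2.5]
print(energy_per_inference(samples, dt_s=0.5, n_inferences=5))  # → 2.6
```

A shorter sampling interval reduces the integration error for bursty workloads, at the cost of more measurement overhead on the device.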

References

1. Ansere, J.A.; Kamal, M.; Khan, I.A.; Aman, M.N. Dynamic resource optimization for energy-efficient 6G-IoT ecosystems. Sensors 2023, 23, 4711.
2. Ahmad, I.; Nasim, F.; Khawaja, M.F.; Naqvi, S.A.A.; Khan, H. Enhancing IoT security and services based on generative artificial intelligence techniques: A systematic analysis based on emerging threats, challenges and future directions. Spectr. Eng. Sci. 2025, 3, 1–25.
3. Zhang, X.; Nie, J.; Huang, Y.; Xie, G.; Xiong, Z.; Liu, J.; Niyato, D.; Shen, X.S. Beyond the cloud: Edge inference for generative large language models in wireless networks. IEEE Trans. Wirel. Commun. 2024, 24, 643–658.
4. Li, J.; Qin, R.; Olaverri-Monreal, C.; Prodan, R.; Wang, F.Y. Logistics 5.0: From intelligent networks to sustainable ecosystems. IEEE Trans. Intell. Veh. 2023, 8, 3771–3774.
5. Li, X.; Bi, S. Optimal AI model splitting and resource allocation for device-edge co-inference in multi-user wireless sensing systems. IEEE Trans. Wirel. Commun. 2024, 23, 11094–11108.
6. He, J.; Lai, B.; Kang, J.; Du, H.; Nie, J.; Zhang, T.; Yuan, Y.; Zhang, W.; Niyato, D.; Jamalipour, A. Securing federated diffusion model with dynamic quantization for generative ai services in multiple-access artificial intelligence of things. IEEE Internet Things J. 2024, 11, 28064–28077.
7. Almudayni, Z.; Soh, B.; Samra, H.; Li, A. Energy inefficiency in IoT networks: Causes, impact, and a strategic framework for sustainable optimisation. Electronics 2025, 14, 159.
8. Mukhoti, J.; Kirsch, A.; Van Amersfoort, J.; Torr, P.H.; Gal, Y. Deep deterministic uncertainty: A new simple baseline. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 24384–24394.
9. Sai, S.; Kanadia, M.; Chamola, V. Empowering IoT with generative AI: Applications, case studies, and limitations. IEEE Internet Things Mag. 2024, 7, 38–43.
10. Nezami, Z.; Hafeez, M.; Djemame, K.; Zaidi, S.A.R.; Xu, J. Descriptor: Benchmark Dataset for Generative AI on Edge Devices (BeDGED). IEEE Data Descr. 2025, 2, 50–55.
11. Mohanty, R.K.; Sahoo, S.P.; Kabat, M.R.; Alhadidi, B. The Rise of Generative AI Language Models: Challenges and Opportunities for Wireless Body Area Networks. Gener. AI Curr. Trends Appl. 2024, 1177, 101–120.
12. Lin, J.; Tang, J.; Tang, H.; Yang, S.; Chen, W.; Wang, W.; Xiao, G.; Dang, X.; Gan, C.; Han, S. Awq: Activation-aware weight quantization for on-device llm compression and acceleration. Proc. Mach. Learn. Syst. 2024, 6, 87–100.
13. Zhao, X.; Xu, R.; Gao, Y.; Verma, V.; Stan, M.R.; Guo, X. Edge-mpq: Layer-wise mixed-precision quantization with tightly integrated versatile inference units for edge computing. IEEE Trans. Comput. 2024, 73, 2504–2519.
14. Li, B.; Wang, X.; Xu, H. Aweq: Post-training quantization with activation-weight equalization for large language models. arXiv 2023, arXiv:2311.01305.
15. Shen, X.; Dong, P.; Lu, L.; Kong, Z.; Li, Z.; Lin, M.; Wu, C.; Wang, Y. Agile-quant: Activation-guided quantization for faster inference of LLMs on the edge. Proc. AAAI Conf. Artif. Intell. 2024, 38, 18944–18951.
16. Katare, D.; Zhou, M.; Chen, Y.; Janssen, M.; Ding, A.Y. Energy-Aware Vision Model Partitioning for Edge AI. In Proceedings of the 40th ACM/SIGAPP Symposium on Applied Computing, New York, NY, USA, 31 March–4 April 2025; pp. 671–678.
17. Ahmad, W.; Gautam, G.; Alam, B.; Bhati, B.S. An Analytical Review and Performance Measures of State-of-Art Scheduling Algorithms in Heterogenous Computing Environment. Arch. Comput. Methods Eng. 2024, 31, 3091–3113.
18. Tundo, A.; Mobilio, M.; Ilager, S.; Brandić, I.; Bartocci, E.; Mariani, L. An energy-aware approach to design self-adaptive ai-based applications on the edge. In Proceedings of the 2023 38th IEEE/ACM International Conference on Automated Software Engineering (ASE), Luxembourg, 11–15 September 2023; pp. 281–293.
19. Różycki, R.; Solarska, D.A.; Waligóra, G. Energy-Aware Machine Learning Models—A Review of Recent Techniques and Perspectives. Energies 2025, 18, 2810.
20. Chen, Z.; Xie, B.; Li, J.; Shen, C. Channel-wise mixed-precision quantization for large language models. arXiv 2024, arXiv:2410.13056.
21. Huang, B.; Abtahi, A.; Aminifar, A. Energy-Aware Integrated Neural Architecture Search and Partitioning for Distributed Internet of Things (IoT). IEEE Trans. Circuits Syst. Artif. Intell. 2024, 1, 257–271.
22. Samikwa, E.; Di Maio, A.; Braun, T. Disnet: Distributed micro-split deep learning in heterogeneous dynamic iot. IEEE Internet Things J. 2023, 11, 6199–6216.
23. Vahidian, S.; Morafah, M.; Chen, C.; Shah, M.; Lin, B. Rethinking data heterogeneity in federated learning: Introducing a new notion and standard benchmarks. IEEE Trans. Artif. Intell. 2023, 5, 1386–1397.
24. Huang, B.; Huang, X.; Liu, X.; Ding, C.; Yin, Y.; Deng, S. Adaptive partitioning and efficient scheduling for distributed DNN training in heterogeneous IoT environment. Comput. Commun. 2024, 215, 169–179.
25. López Delgado, J.L.; López Ramos, J.A. A Comprehensive Survey on Generative AI Solutions in IoT Security. Electronics 2024, 13, 4965.
26. Zhao, Y.; Xu, Y.; Xiao, Z.; Jia, H.; Hou, T. Mobilediffusion: Instant text-to-image generation on mobile devices. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2024; pp. 225–242.
27. Islam, M.R.; Dhar, N.; Deng, B.; Nguyen, T.N.; He, S.; Suo, K. Characterizing and Understanding the Performance of Small Language Models on Edge Devices. In Proceedings of the 2024 IEEE International Performance, Computing, and Communications Conference (IPCCC), Orlando, FL, USA, 22–24 November 2024; pp. 1–10.
28. Ma, H.; Tao, Y.; Fang, Y.; Chen, P.; Li, Y. Multi-Carrier Initial-Condition-Index-aided DCSK Scheme: An Efficient Solution for Multipath Fading Channel. IEEE Trans. Veh. Technol. 2025, 74, 15743–15757.
29. Salem, M.A.; Kasem, H.M.; Abdelfatah, R.I.; El-Ganiny, M.Y.; Roshdy, R.A. A KLJN-Based Thermal Noise Modulation Scheme with Enhanced Reliability for Low-Power IoT Communication. IEEE Open J. Commun. Soc. 2025, 6, 6336–6351.
30. Zhang, P.; Zeng, G.; Wang, T.; Lu, W. Tinyllama: An open-source small language model. arXiv 2024, arXiv:2401.02385.

| Method | Technical Focus | Applicable Scope | Limitation |
|---|---|---|---|
| AWQ | Quantization | LLMs, text-only tasks | No scheduling, limited adaptability |
| CMPQ | Mixed precision | LLMs, vision tasks | Lacks runtime adaptation, no energy-awareness |
| AWEQ | Equalization + Quantization | LLM compression | Not designed for heterogeneous IoT deployment |
| Agile-Quant | Pruning + Quantization | Edge LLM acceleration | Lacks generative-task validation |
| Energy-Aware Partitioning | Scheduling | Vision Transformers | Domain-specific, no generative capability |
| Proposed Framework | Compression + Adaptive Quantization + Energy-Aware Scheduling | Generative AI on low-power IoT | Holistic solution; balances fidelity, energy, and adaptability |

| Dataset | Domain | Size | Task | Metric Used |
|---|---|---|---|---|
| CIFAR-10 | Vision | 60,000 images (32 × 32) | Image generation | FID, PSNR |
| Tiny-ImageNet | Vision | 100,000 images (64 × 64) | Conditional image generation | FID, IS |
| IoT-SensorStream | Multivariate time series | 50 M records | Sequence generation and anomaly detection | MSE, BLEU |

| Device | Type | Specification | Role |
|---|---|---|---|
| NVIDIA Jetson Nano | Edge GPU | ARM Cortex-A57, 128-core Maxwell GPU, 4 GB RAM | Edge inference |
| Raspberry Pi 4 | CPU-only | Quad-core Cortex-A72, 4 GB RAM | Lightweight edge testing |
| NVIDIA A100 | Server GPU | 80 GB HBM2e | Training and baseline evaluation |
| Qualcomm Snapdragon 8cx | Mobile NPU | Hexagon DSP, 8 GB RAM | Mobile inference |

| Category | Metric | Description |
|---|---|---|
| Fidelity | FID, BLEU, IS | Measures realism of generated images and text |
| Efficiency | Latency (ms), Energy (J) | Measures runtime performance and power usage |
| Robustness | Accuracy under noise, Task-switch success | Evaluates resilience to perturbations |
| Generalization | Cross-dataset FID, BLEU | Assesses transfer performance |

| Model | CIFAR-10 (FID) | Tiny-ImageNet (FID) | IoT-SensorStream (BLEU) | Latency (ms) | Energy (J) |
|---|---|---|---|---|---|
| CNN Autoencoder | 74.2 ± 1.1 | 95.1 ± 1.4 | 0.23 ± 0.02 | 11.5 ± 0.3 | 1.2 ± 0.05 |
| GAN | 41.7 ± 0.9 | 63.5 ± 1.0 | 0.32 ± 0.03 | 18.4 ± 0.6 | 2.8 ± 0.08 |
| VAE | 46.1 ± 1.2 | 70.2 ± 1.3 | 0.28 ± 0.02 | 14.2 ± 0.4 | 1.7 ± 0.05 |
| MobileDiffusion [26] | 28.9 ± 0.8 | 45.1 ± 0.9 | – | 7.9 ± 0.2 | 0.9 ± 0.03 |
| TinyLlama [30] | – | – | 0.37 ± 0.02 | 8.3 ± 0.3 | 1.1 ± 0.04 |
| AWQ LLM [12] | – | – | 0.36 ± 0.02 | 6.7 ± 0.2 | 0.7 ± 0.02 |
| Energy-Aware ViT [21] | 26.5 ± 0.7 | 40.7 ± 0.8 | – | 6.9 ± 0.2 | 0.8 ± 0.02 |
| Proposed Framework | 21.3 ± 0.6 | 34.8 ± 0.7 | 0.42 ± 0.02 | 5.6 ± 0.2 | 0.6 ± 0.02 |
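To make the size of the gaps in this table concrete, the snippet below computes the relative reduction achieved by the Proposed Framework against the two baselines that report all three of the selected columns. It uses only the mean values from the table and ignores the ± spreads. The CIFAR-10 column is read as FID (lower is better), which is an assumption based on the metric listed for that dataset; the helper name is hypothetical.

```python
# Mean values copied from the results table; the ± spreads are omitted.
results = {
    "MobileDiffusion": {"cifar10": 28.9, "latency_ms": 7.9, "energy_j": 0.9},
    "Energy-Aware ViT": {"cifar10": 26.5, "latency_ms": 6.9, "energy_j": 0.8},
    "Proposed Framework": {"cifar10": 21.3, "latency_ms": 5.6, "energy_j": 0.6},
}


def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` is lower than `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


proposed = results["Proposed Framework"]
gains = {
    name: {metric: relative_reduction(row[metric], proposed[metric]) for metric in row}
    for name, row in results.items()
    if name != "Proposed Framework"
}
for name, row in gains.items():
    print(name, {metric: round(value, 1) for metric, value in row.items()})
```

Against Energy-Aware ViT, for example, the energy figure drops from 0.8 J to 0.6 J, a 25% reduction in the mean.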

| Case | Ground Truth (Expert Annotation) | Proposed Framework Output | TinyLlama Output |
|---|---|---|---|
| 1 | abnormal vibration detected with high frequency during cycle phase | abnormal vibration detected with high frequency during cycle phase | abnormal vibration detected high |
| 2 | temperature anomaly observed in cooling unit during peak load | temperature anomaly detected in cooling unit under peak load | temperature anomaly in unit |
| 3 | irregular pressure drop in hydraulic system | irregular pressure drop observed in hydraulic system | irregular pressure detected |
| 4 | motor torque exceeded threshold during startup | motor torque exceeded safe threshold at startup phase | motor torque exceeded |
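The difference between the two output columns can be quantified with a simple overlap score. The sketch below implements a simplified BLEU-1 (clipped unigram precision multiplied by the standard brevity penalty), not the full multi-n-gram BLEU that the reported scores presumably use, and applies it to Cases 1 and 2. The function name is hypothetical.

```python
import math
from collections import Counter


def bleu1(reference, candidate):
    """Clipped unigram precision times the BLEU brevity penalty."""
    ref, cand = reference.split(), candidate.split()
    if not cand:
        return 0.0
    ref_counts = Counter(ref)
    clipped = sum(min(n, ref_counts[tok]) for tok, n in Counter(cand).items())
    precision = clipped / len(cand)
    # Candidates shorter than the reference are penalized exponentially.
    bp = 1.0 if len(cand) >= len(ref) else math.exp(1.0 - len(ref) / len(cand))
    return bp * precision


ref1 = "abnormal vibration detected with high frequency during cycle phase"
tiny1 = "abnormal vibration detected high"
ref2 = "temperature anomaly observed in cooling unit during peak load"
prop2 = "temperature anomaly detected in cooling unit under peak load"

print(round(bleu1(ref1, ref1), 3))   # identical output
print(round(bleu1(ref1, tiny1), 3))  # truncated output
print(round(bleu1(ref2, prop2), 3))  # paraphrased output
```

Every word of the truncated TinyLlama output in Case 1 appears in the reference, so its precision is perfect; the score falls only because of the brevity penalty, which is how a terse but correct summary ends up ranked below a full-length one.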

| Configuration | CIFAR-10 (FID) | IoT-SensorStream BLEU | Latency (ms) | Energy (J) |
|---|---|---|---|---|
| Full model | 21.3 | 0.42 | 5.6 | 0.6 |
| –Compression | 28.1 | 0.36 | 7.4 | 0.9 |
| –Quantization | 25.7 | 0.39 | 8.6 | 1.3 |
| –Scheduling | 26.5 | 0.38 | 9.3 | 1.1 |
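One way to read the ablation is to ask, for each metric, which removed module costs the most. The snippet below does this with the values from the table, treating BLEU as higher-is-better and the other columns as lower-is-better. Reading the CIFAR-10 column as FID is an assumption; the dictionary keys and helper name are hypothetical.

```python
full = {"fid": 21.3, "bleu": 0.42, "latency_ms": 5.6, "energy_j": 0.6}
ablations = {
    "compression": {"fid": 28.1, "bleu": 0.36, "latency_ms": 7.4, "energy_j": 0.9},
    "quantization": {"fid": 25.7, "bleu": 0.39, "latency_ms": 8.6, "energy_j": 1.3},
    "scheduling": {"fid": 26.5, "bleu": 0.38, "latency_ms": 9.3, "energy_j": 1.1},
}
HIGHER_IS_BETTER = {"bleu"}


def degradation(metric, value):
    """Positive number meaning 'worse than the full model' for either metric direction."""
    delta = value - full[metric]
    return -delta if metric in HIGHER_IS_BETTER else delta


# For each metric, the module whose removal degrades it the most.
most_harmful = {
    metric: max(ablations, key=lambda name: degradation(metric, ablations[name][metric]))
    for metric in full
}
print(most_harmful)
```

On these numbers, removing compression hurts both fidelity metrics the most, removing scheduling hurts latency the most, and removing quantization hurts energy the most, which suggests the three modules address different bottlenecks.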

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xie, Y.; Fang, Q. An Energy-Aware Generative AI Edge Inference Framework for Low-Power IoT Devices. Electronics 2025, 14, 4086. https://doi.org/10.3390/electronics14204086
