Edge Computing Task Offloading Algorithm Based on Distributed Multi-Agent Deep Reinforcement Learning

Abstract

1. Introduction

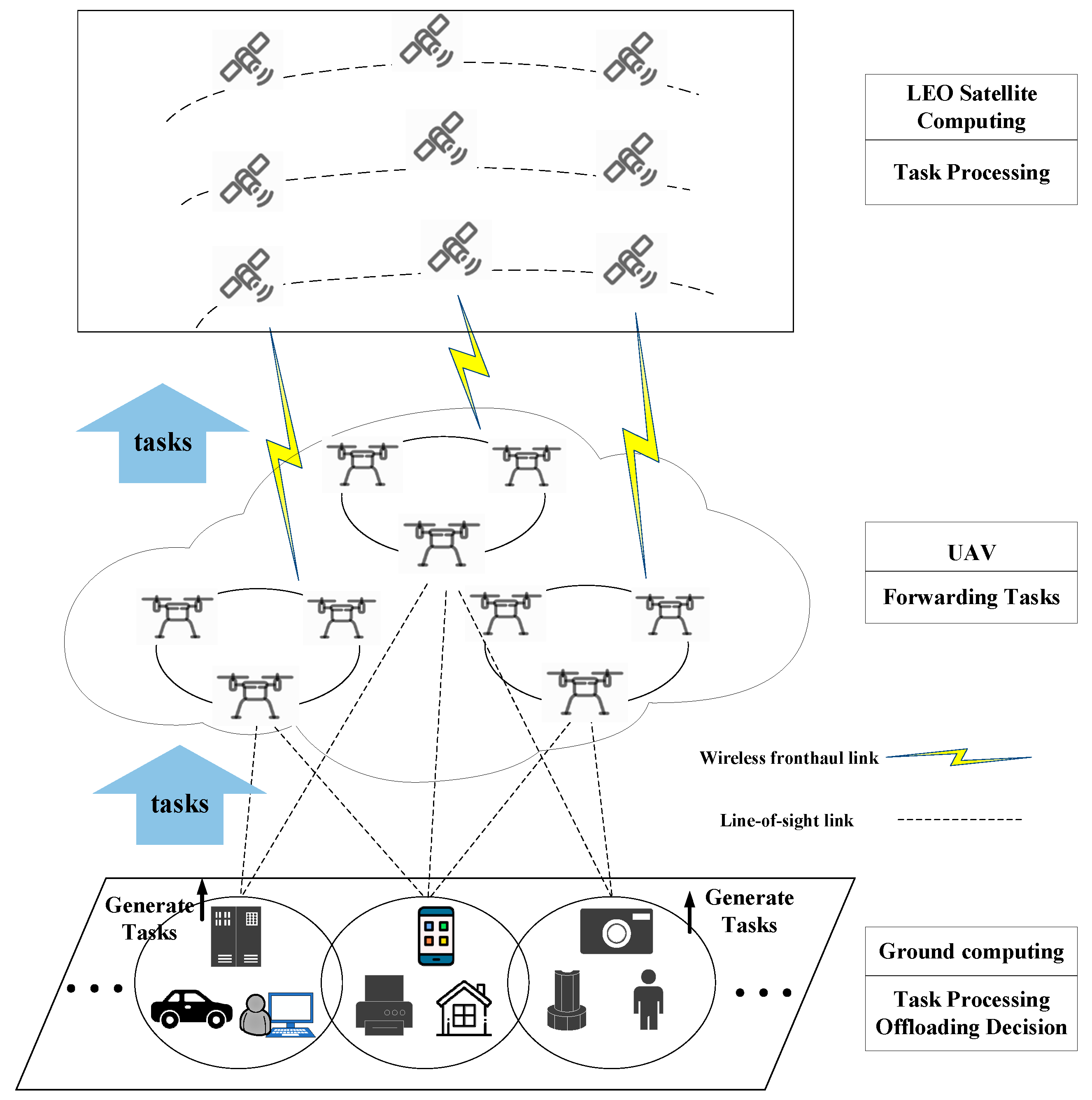

- A three-layer Ground–UAV–LEO satellite collaborative network architecture was developed, in which UAVs act as a relay layer that forwards tasks, enabling high-speed offloading of ground computing tasks to the LEO satellite network. In this architecture, each agent generates offloading decisions independently, without prior knowledge of the other agents, and global optimization is achieved through global reward feedback.

- The task offloading problem is decomposed into two sub-problems: offloading decisions and resource allocation. The offloading decision sub-problem is formulated as a Markov Decision Process (MDP) and solved with a distributed multi-agent deep Q-network (DMADQN), in which Q-value estimates are refined iteratively using an online network and a target network. The resource allocation sub-problem, which has a continuous action space, is solved by gradient descent. Together, these components increase the average task completion rate and reduce the average task processing latency.

- The effectiveness of the proposed algorithm was validated through simulation experiments. Compared with representative existing LEO satellite task offloading algorithms, the proposed algorithm increases the average transmission rate by 21.7%, increases the average task completion rate by at least 22.63%, and reduces the average task processing latency by at least 11.32%, providing an efficient solution for task offloading and resource allocation in LEO satellite–UAV collaborative networks.
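The gradient-descent step for the continuous resource allocation sub-problem can be illustrated on a toy problem. The sketch below is not the paper's formulation, whose objective and constraints are not reproduced in this excerpt. It assumes, hypothetically, that one satellite's computing capacity F is split among tasks to minimize the total computing latency Σ w_i / (x_i F), where w_i is a task's workload in CPU cycles and x_i its share. The shares are kept feasible through a softmax parameterization, and all function names are illustrative.

```python
import math


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def allocate_compute(workloads, lr=0.05, steps=5000):
    """Return the share of a common computing capacity given to each task.

    Hypothetical objective: minimize sum_i w_i / (x_i * F). F and the total
    workload only scale the objective, so they are factored out. The shares
    are x = softmax(z), which keeps every iterate feasible (x_i > 0,
    sum x_i = 1) while z is updated by plain gradient descent.
    """
    total_w = sum(workloads)
    v = [w / total_w for w in workloads]  # normalized workloads
    z = [0.0] * len(workloads)            # start from an equal split
    for _ in range(steps):
        x = softmax(z)
        g = [-vi / (xi * xi) for vi, xi in zip(v, x)]  # dL/dx_i
        g_bar = sum(gi * xi for gi, xi in zip(g, x))
        # Chain rule through the softmax: dL/dz_j = x_j * (g_j - g_bar)
        z = [zj - lr * xj * (gj - g_bar) for zj, xj, gj in zip(z, x, g)]
    return softmax(z)


def total_latency(workloads, shares, capacity):
    """Total computing latency (s); workloads in cycles, capacity in cycles/s."""
    return sum(w / (x * capacity) for w, x in zip(workloads, shares))
```

For workloads of 1e8, 4e8 and 9e8 cycles, `allocate_compute` approaches the shares 1/6, 1/3 and 1/2, i.e. proportional to √w_i, which is the closed-form optimum of this toy objective (the Lagrange condition w_i / x_i² = const). With F = 3e9 cycles/s, the total latency drops from 1.4 s for an equal split to 1.2 s. Having a closed form makes the toy easy to check; the paper's actual allocation problem may not have one.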

2. Related Work

2.1. LEO Satellite Network Architecture Supporting Edge Computing

2.2. Single-Agent DRL Algorithm for Satellite–Ground Collaborative Networks

2.3. MADRL Algorithm for Satellite–Ground Cooperative Networks

3. Model Description and Problem Analysis

3.1. Overall Model Description

3.2. Ground Computing Model

3.2.1. Ground Latency Analysis

3.2.2. Ground Energy Consumption Analysis

3.3. Low-Earth-Orbit Satellite Computing Model

3.3.1. LEO Satellite Latency Analysis

3.3.2. LEO Energy Consumption Analysis

3.4. Optimization Objectives

4. Distributed Multi-Agent Offloading Decision and Resource Allocation Solution

4.1. Decomposition of the Task Offloading Problem

4.2. Offloading Decision Process

4.2.1. Markov Model

- (1) State space

- (2) Action space

- (3) Reward function

- (1) User task module. Each task carries status information such as task generation, the transmission queue, the computing queue, and the satellite load. The user task module passes this status information to the training network. After training, a complete four-tuple interaction experience (state, action, reward, next state) is obtained. This four-tuple is passed both to the experience replay buffer module, where it is stored for sampling, and to the resource allocation module, where it guides resource allocation.

- (2) Experience replay buffer module. Experience gathered during training is stored in the replay buffer, from which the agent samples to improve learning efficiency, accelerate convergence, and suppress overfitting. Specifically, the agent stores each experience four-tuple in the experience replay buffer R, then draws a mini-batch of N samples from R and uses it to update the parameters of the online action execution and value evaluation networks.

- (3) Training network module. The action policy and the value function are approximated by two independent networks: the Action execution network, which executes decisions, and the Value evaluation network, which evaluates how good those decisions are. Each contains an online network and a target network, giving four networks in total; all share the same structure, with separate parameters for the online and target networks. The target network weights are periodically copied from the online network, but far less often than the online network itself is updated. This reduces the correlation between the target Q value (the learning target) and the current Q value estimated by the online network, which damps fluctuations during learning and mitigates training instability. After training, the selected actions are passed to the user task module to guide decision-making.

- (4) Resource allocation module. Resources are allocated according to the complete interaction history received from the user task module; the rewards of training samples whose communication has completed are then passed to the loss function module to compute the loss.

- (5) Loss function module. The error between the predicted value and the target value of the value evaluation network is computed to measure the accuracy of the policy, and the parameters of the training network are then updated by backpropagation.
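The interaction among modules (2), (3) and (5) follows the standard DQN training loop: store four-tuples, sample a mini-batch, build targets from the target network, minimize the squared error, and move the target network toward the online one. A minimal pure-Python sketch under stated assumptions: a small Q-table stands in for the neural networks, a single agent is trained, and the names (`ReplayBuffer`, `train_step`, and so on) are hypothetical. The defaults γ = 0.95, learning rate 0.01 and soft update factor τ = 0.001 come from the simulation parameters; the periodic copying described in module (3) corresponds to τ = 1 applied every few updates.

```python
import random
from collections import deque, namedtuple

# One experience four-tuple (state, action, reward, next state).
Transition = namedtuple("Transition", "state action reward next_state")


class ReplayBuffer:
    """Experience replay buffer R with a fixed capacity (oldest tuples evicted first)."""

    def __init__(self, capacity):
        self.memory = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state):
        self.memory.append(Transition(state, action, reward, next_state))

    def sample(self, batch_size, rng=random):
        # Uniform sampling without replacement mixes tuples from different time slots.
        return rng.sample(self.memory, batch_size)

    def __len__(self):
        return len(self.memory)


def td_targets(batch, q_target, gamma):
    """Targets y = r + gamma * max_a' Q_target(s', a') from the target network."""
    return [t.reward + gamma * max(q_target[t.next_state]) for t in batch]


def soft_update(q_online, q_target, tau):
    """theta_target <- tau * theta_online + (1 - tau) * theta_target (tau = 1 is a hard copy)."""
    for s, row in enumerate(q_online):
        for a, value in enumerate(row):
            q_target[s][a] = tau * value + (1.0 - tau) * q_target[s][a]


def train_step(q_online, q_target, buffer, batch_size, gamma=0.95, lr=0.01, tau=0.001):
    """One update of the tabular stand-in; returns the mean squared TD error."""
    batch = buffer.sample(batch_size)
    ys = td_targets(batch, q_target, gamma)
    errors = [y - q_online[t.state][t.action] for t, y in zip(batch, ys)]
    # Gradient descent on the mean of 0.5 * error^2 with respect to Q(s, a).
    for t, err in zip(batch, errors):
        q_online[t.state][t.action] += lr * err / len(batch)
    soft_update(q_online, q_target, tau)
    return sum(e * e for e in errors) / len(errors)
```

With the listed simulation settings, the buffer would be created as `ReplayBuffer(60_000)` and `train_step` called with `batch_size=256` once at least 256 tuples are stored.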

4.2.2. Decision Process Design

4.3. Resource Allocation Process

| Algorithm 1: DMADQN algorithm for offloading decision |
|---|
| Input: task status in time slot t; Output: offloading decision |
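The body of Algorithm 1 is not reproduced in this excerpt. The decentralized execution described in the contributions, where each agent decides without knowledge of the other agents and learning is driven by a shared global reward, can be sketched as one time-slot step. This is a hypothetical sketch: `q_fn` stands in for each agent's Q-network, `env_step` for the Ground–UAV–LEO environment, and exploration is ε-greedy, consistent with the exploration rate listed in the simulation parameters.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """Explore uniformly with probability epsilon, otherwise act greedily."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def run_time_slot(agents, local_obs, env_step, epsilon, rng):
    """One decentralized offloading step for all agents in a time slot.

    agents:   list of (q_fn, buffer) pairs; q_fn maps the agent's own local
              observation to a list of Q-values, buffer is a list of tuples.
    env_step: hypothetical callback that applies the joint action and returns
              (global_reward, next_local_obs).
    """
    # Each agent decides from its own observation; no information is exchanged.
    actions = [epsilon_greedy(q_fn(obs), epsilon, rng)
               for (q_fn, _), obs in zip(agents, local_obs)]
    global_reward, next_obs = env_step(actions)
    # The same global reward is stored in every agent's own experience.
    for (_, buffer), obs, act, nxt in zip(agents, local_obs, actions, next_obs):
        buffer.append((obs, act, global_reward, nxt))
    return actions, global_reward, next_obs
```

Because every agent receives the same reward, an agent can only raise its return by choosing actions that help the system as a whole, which is how the global reward feedback couples otherwise independent decisions.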

4.4. Algorithm Complexity Analysis

- (1) Time complexity

- (2) Space complexity

5. Simulation Analysis

5.1. Simulation Environment Settings

5.2. Simulation Results Analysis

5.2.1. Algorithm Convergence Analysis

5.2.2. Performance Comparison Analysis

- (1) Local Computing (LC): In each time slot, all arriving tasks are processed by the user equipment on the ground.

- (2) Deep Q-Network (DQN) [33]: This baseline performs resource allocation by discretizing the continuous action space. Its network architecture is identical to the value evaluation network in this paper, and it also uses an ε-greedy strategy for exploration.

- (3) Double Deep Q-Network (DDQN) [34]: Because the maximization operation in DQN tends to overestimate Q values, DDQN modifies the original DQN to reduce this overestimation. It improves learning accuracy by decoupling action selection from action evaluation when computing the target value.

- (4) Deep Deterministic Policy Gradient (DDPG) [35]: DDPG is a deep reinforcement learning (DRL) algorithm for continuous action spaces, used here to optimize dynamic task offloading decisions. It seeks to balance multiple objectives, including latency and energy consumption, while adapting to changing environments, but it converges relatively slowly.

- (5) Multi-Agent Deep Deterministic Policy Gradient (MADDPG) [36]: This algorithm extends DDPG to multiple agents and addresses value estimation bias in multi-agent environments. During execution, each agent generates continuous actions from its own local observations using an independent policy network, without relying on global information.
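The difference between the DQN and DDQN baselines lies only in how the target value is formed. A minimal sketch for a single non-terminal transition (function names are illustrative): DQN lets the target network both select and evaluate the next action, while Double DQN selects it with the online network and evaluates it with the target network.

```python
def dqn_target(reward, next_q_target, gamma):
    """DQN: the target network both selects and evaluates the next action."""
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward, next_q_online, next_q_target, gamma):
    """Double DQN: the online network selects a', the target network evaluates it."""
    best = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best]


# Toy next-state Q-values for two actions.
q_online_next = [1.0, 3.0]
q_target_next = [2.5, 2.0]
print(dqn_target(1.0, q_target_next, 0.95))
print(double_dqn_target(1.0, q_online_next, q_target_next, 0.95))
```

Here the DQN target is 1 + 0.95 × 2.5 = 3.375, while the Double DQN target is 1 + 0.95 × 2.0 = 2.9. Given the same target-network values, the Double DQN target can never exceed the DQN target, which is why it counteracts overestimation.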

5.3. Ablation Experiment

6. Conclusions and Outlook

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Zhu, A.; Wen, Y. Computing offloading strategy using improved genetic algorithm in mobile edge computing system. J. Grid Comput. 2021, 19, 38.

- [2] Cao, J.; Zhang, S.; Chen, Q.; Wang, H.; Wang, M.; Liu, N. Computing-aware routing for LEO satellite networks: A transmission and computation integration approach. IEEE Trans. Veh. Technol. 2023, 72, 16607–16623.

- [3] El-Emary, M.; Naboulsi, D.; Stanica, R. Energy Efficient and Resilient Task Offloading in UAV-Assisted MEC Systems. IEEE Open J. Veh. Technol. 2025, 6, 2236–2254.

- [4] Gao, X.; Liu, R.; Kaushik, A.; Zhang, H. Dynamic resource allocation for virtual network function placement in satellite edge clouds. IEEE Trans. Netw. Sci. Eng. 2022, 9, 2252–2265.

- [5] Hussein, M.K.; Mousa, M.H. Efficient task offloading for IoT-based applications in fog computing using ant colony optimization. IEEE Access 2020, 8, 37191–37201.

- [6] Tang, M.; Wong, V.W.S. Deep reinforcement learning for task offloading in mobile edge computing systems. IEEE Trans. Mob. Comput. 2020, 21, 1985–1997.

- [7] Seid, A.M.; Boateng, G.O.; Mareri, B.; Sun, G.; Jiang, W. Multi-agent DRL for task offloading and resource allocation in multi-UAV enabled IoT edge network. IEEE Trans. Netw. Serv. Manag. 2021, 18, 4531–4547.

- [8] Chai, F.; Zhang, Q.; Yao, H.; Xin, X.; Gao, R.; Guizani, M. Joint multi-task offloading and resource allocation for mobile edge computing systems in satellite IoT. IEEE Trans. Veh. Technol. 2023, 72, 7783–7795.

- [9] Qin, Z.; Yao, H.; Mai, T.; Wu, D.; Zhang, N.; Guo, S. Multi-agent reinforcement learning aided computation offloading in aerial computing for the internet-of-things. IEEE Trans. Serv. Comput. 2022, 16, 1976–1986.

- [10] Yao, S.; Wang, M.; Ren, J.; Xia, T.; Wang, W.; Xu, K.; Xu, M.; Zhang, H. Multi-Agent Reinforcement Learning for Task Offloading in Crowd-Edge Computing. IEEE Trans. Mob. Comput. 2025, 24, 9289–9302.

- [11] Xu, S.; Liu, J.; Tang, J.; Liu, X.; Li, Z. Multi objective reinforcement learning driven task offloading algorithm for satellite edge computing networks. Sci. Rep. 2025, 15, 24045.

- [12] Tang, Q.; Fei, Z.; Li, B.; Han, Z. Computation offloading in LEO satellite networks with hybrid cloud and edge computing. IEEE Internet Things J. 2021, 8, 9164–9176.

- [13] Sheng, M.; Zhou, D.; Bai, W.; Liu, J.; Li, H.; Shi, Y.; Li, J. Coverage enhancement for 6G satellite-terrestrial integrated networks: Performance metrics, constellation configuration and resource allocation. Sci. China Inf. Sci. 2023, 66, 130303.

- [14] Cheng, N.; Lyu, F.; Quan, W.; Zhou, C.; He, H.; Shi, W.; Shen, X. Space/aerial-assisted computing offloading for IoT applications: A learning-based approach. IEEE J. Sel. Areas Commun. 2019, 37, 1117–1129.

- [15] Cui, G.; Li, X.; Xu, L.; Wang, W. Latency and energy optimization for MEC enhanced SAT-IoT networks. IEEE Access 2020, 8, 55915–55926.

- [16] Yan, L.; Cao, S.; Gong, Y.; Han, H.; Wei, J.; Zhao, Y.; Yang, S. SatEC: A 5G satellite edge computing framework based on microservice architecture. Sensors 2019, 19, 831.

- [17] Xie, R.; Tang, Q.; Wang, Q.; Liu, X.; Yu, F.R.; Huang, T. Satellite-terrestrial integrated edge computing networks: Architecture, challenges, and open issues. IEEE Netw. 2020, 34, 224–231.

- [18] Zhou, Y.; Lei, L.; Zhao, X.; You, L.; Sun, Y.; Chatzinotas, S. Decomposition and meta-DRL based multi-objective optimization for asynchronous federated learning in 6G-satellite systems. IEEE J. Sel. Areas Commun. 2024, 42, 1115–1129.

- [19] Du, J.; Wang, J.; Sun, A.; Qu, J.; Zhang, J.; Wu, C.; Niyato, D. Joint optimization in blockchain- and MEC-enabled space–air–ground integrated networks. IEEE Internet Things J. 2024, 11, 31862–31877.

- [20] Zhang, P.; Zhang, Y.; Kumar, N.; Hsu, C.H. Deep reinforcement learning for latency-oriented IoT task scheduling in SAGIN. IEEE Trans. Wirel. Commun. 2020, 20, 911–925.

- [21] Lyu, Y.; Liu, Z.; Fan, R.; Zhan, C.; Hu, H.; An, J. Optimal computation offloading in collaborative LEO-IoT enabled MEC: A multiagent deep reinforcement learning approach. IEEE Trans. Green Commun. Netw. 2022, 7, 996–1011.

- [22] Lakew, D.S.; Tran, A.T.; Dao, N.N.; Cho, S. Intelligent self-optimization for task offloading in LEO-MEC-assisted energy-harvesting-UAV systems. IEEE Trans. Netw. Sci. Eng. 2024, 11, 5135–5148.

- [23] Zhang, H.; Liu, R.; Kaushik, A.; Gao, X. Satellite edge computing with collaborative computation offloading: An intelligent deep deterministic policy gradient approach. IEEE Internet Things J. 2023, 10, 9092–9107.

- [24] Jiao, T.; Feng, X.; Guo, C.; Wang, D.; Song, J. Multi-Agent Deep Reinforcement Learning for Efficient Computation Offloading in Mobile Edge Computing. Comput. Mater. Contin. 2023, 76, 3585.

- [25] Xu, S.; Liu, Q.; Gong, C.; Wen, X. Energy-Efficient Multi-Agent Deep Reinforcement Learning Task Offloading and Resource Allocation for UAV Edge Computing. Sensors 2025, 25, 3403.

- [26] Kim, M.; Lee, H.; Hwang, S.; Debbah, M.; Lee, I. Cooperative multi-agent deep reinforcement learning methods for UAV-aided mobile edge computing networks. IEEE Internet Things J. 2024, 11, 38040–38053.

- [27] Wang, Y.; Zhang, C.; Ge, T.; Pan, M. Computation offloading via multi-agent deep reinforcement learning in aerial hierarchical edge computing systems. IEEE Trans. Netw. Sci. Eng. 2024, 11, 5253–5266.

- [28] Zhou, J.; Liang, J.; Zhao, L.; Wan, S.; Cai, H.; Xiao, F. Latency-Energy Efficient Task Offloading in the Satellite Network-Assisted Edge Computing via Deep Reinforcement Learning. IEEE Trans. Mob. Comput. 2024, 24, 2644–2659.

- [29] Jia, M.; Zhang, L.; Wu, J.; Guo, Q.; Zhang, G.; Gu, X. Deep Multi-Agent Reinforcement Learning for Task Offloading and Resource Allocation in Satellite Edge Computing. IEEE Internet Things J. 2024, 12, 3832–3845.

- [30] She, H.; Yan, L.; Guo, Y. Efficient end–edge–cloud task offloading in 6G networks based on multiagent deep reinforcement learning. IEEE Internet Things J. 2024, 11, 20260–20270.

- [31] Zhang, H.; Tian, Z.; Zeng, L.; Lu, L.; Qiao, S.; Chen, S.; Liu, X. Distributed Multi-Agent Reinforcement Learning Approach for Multi-Server Multi-User Task Offloading. IEEE Internet Things J. 2025, 12, 37836–37852.

- [32] Zhang, S.; Cui, G.; Long, Y.; Wang, W. Joint computing and communication resource allocation for satellite communication networks with edge computing. China Commun. 2021, 18, 236–252.

- [33] Chiang, Y.; Hsu, C.H.; Chen, G.H.; Wei, H.Y. Deep Q-learning-based dynamic network slicing and task offloading in edge network. IEEE Trans. Netw. Serv. Manag. 2022, 20, 369–384.

- [34] Zhai, H.; Zhou, X.; Zhang, H.; Yuan, D. Latency minimization in hybrid edge computing networks: A DDQN-based task offloading approach. IEEE Trans. Veh. Technol. 2024, 73, 15098–15108.

- [35] Zhao, X.; Liu, M.; Li, M. Task offloading strategy and scheduling optimization for internet of vehicles based on deep reinforcement learning. Ad Hoc Netw. 2023, 147, 103193.

- [36] Alam, M.M.; Sangman, M. Joint trajectory control, frequency allocation, and routing for UAV swarm networks: A multi-agent deep reinforcement learning approach. IEEE Trans. Mob. Comput. 2024, 23, 11989–12005.

| Notation | Description |
|---|---|
| U/M/N | Number of user devices/UAVs/LEO satellites |
| T | Total number of time slots |
|  | Duration of each time slot |
|  | Computational tasks of the user equipment group in time slot t |
| // | Data size/computation density/maximum tolerable latency of the task |
| / | Remaining energy capacity on the ground/LEO satellite when processing tasks |
| / | Waiting latency of the task/time slot in which the task is fully executed or discarded |
| / | Ground/fronthaul transmission latency |
| / | Ground/LEO satellite computing latency |
| / | Ground/LEO satellite computing power |
| / | Ground/LEO satellite task processing latency |
| / | Ground/LEO satellite processing energy consumption |
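The notation above points to the usual MEC cost model. Sections 3.2 and 3.3 are not reproduced in this excerpt, so the formulas below are an assumption: the widely used model in which computing latency equals the required CPU cycles (data size × computation density) divided by the computing power, and computing energy equals κ f² times those cycles, with κ taken from the energy consumption coefficient in the simulation parameters. Function names are hypothetical.

```python
KAPPA = 1e-28  # energy consumption coefficient from the simulation parameters


def computing_latency(data_bits, cycles_per_bit, cpu_hz):
    """Seconds to process a task: required CPU cycles / computing power."""
    return data_bits * cycles_per_bit / cpu_hz


def computing_energy(data_bits, cycles_per_bit, cpu_hz, kappa=KAPPA):
    """Joules under the dynamic CPU power model: kappa * f^2 * cycles."""
    return kappa * cpu_hz ** 2 * data_bits * cycles_per_bit


def meets_deadline(latency_s, max_tolerable_s):
    """A task counts as completed only if it finishes within its maximum tolerable latency."""
    return latency_s <= max_tolerable_s
```

For a 1 Mbit task at 1000 cycles/bit on a 1 GHz processor, this model gives 1 s and 0.1 J. Doubling the computing power to 2 GHz halves the latency to 0.5 s but quadruples the energy to 0.4 J, the kind of latency–energy trade-off an offloading policy has to balance.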

| Parameter | Value |
|---|---|
| Poisson Distribution Parameter | 5 |
| Energy Consumption Coefficient | 10⁻²⁸ |
| Penalty Weight | 0.1 |
| Exploration Rate | From 1 to 0.01 |
| Experience Replay Buffer Size R | 60,000 |
| Mini-Batch Sample Size | 256 |
| Discount Factor | 0.95 |
| Initial Learning Rate | 0.01 |
| Soft Update Factor | 0.001 |
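The exploration rate is listed only by its endpoints. Linear annealing is one common way to move from 1 to 0.01; both the linear shape and the decay length `decay_steps` below are assumptions, not values taken from the paper.

```python
def epsilon_at(step, eps_start=1.0, eps_end=0.01, decay_steps=10_000):
    """Linearly anneal the exploration rate, then hold it at eps_end.

    decay_steps is a hypothetical value; only the endpoints are given.
    """
    if step >= decay_steps:
        return eps_end
    frac = step / decay_steps
    return eps_start + frac * (eps_end - eps_start)
```

Early in training almost every offloading decision is random (ε near 1); by the end of the schedule the agent acts greedily 99% of the time while still occasionally trying other actions.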

|  | Average Task Completion Rate (%) | Average Transmission Rate (Mbps) | Average Latency (ms) | Average Energy Consumption (J) |
|---|---|---|---|---|
| (a) | 78.196 | 13.963 | 65.638 | 0.769 |
| (b) | 85.635 | 10.446 | 69.459 | 0.864 |
| (c) | 89.946 | 10.856 | 56.566 | 0.894 |
| Proposed Method in This Paper | 95.452 | 7.563 | 51.366 | 0.616 |
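Relative differences between the proposed method and the variants labeled (a) to (c), which this excerpt does not define, can be recomputed directly from the table. A small sketch with hypothetical helper names; the transmission-rate column is carried along but not compared. These figures come from this table only and are not the comparisons against other algorithms quoted in the Introduction.

```python
# Values transcribed from the table above:
# (completion rate %, transmission rate Mbps, latency ms, energy J)
VARIANTS = {
    "(a)": (78.196, 13.963, 65.638, 0.769),
    "(b)": (85.635, 10.446, 69.459, 0.864),
    "(c)": (89.946, 10.856, 56.566, 0.894),
}
PROPOSED = (95.452, 7.563, 51.366, 0.616)


def relative_reduction(baseline, value):
    """Fractional decrease from baseline to value (positive = lower than baseline)."""
    return (baseline - value) / baseline


def summarize(variants=VARIANTS, proposed=PROPOSED):
    summary = {}
    for name, (completion, _rate, latency, energy) in variants.items():
        summary[name] = {
            # Completion rate is already a percentage, so report the gap in points.
            "completion_gain_pp": proposed[0] - completion,
            "latency_reduction": relative_reduction(latency, proposed[2]),
            "energy_reduction": relative_reduction(energy, proposed[3]),
        }
    return summary


for name, row in summarize().items():
    print(f"{name}: +{row['completion_gain_pp']:.2f} pp completion, "
          f"-{100 * row['latency_reduction']:.2f}% latency, "
          f"-{100 * row['energy_reduction']:.2f}% energy")
```

For example, against (c) the proposed method raises the completion rate by 5.506 percentage points, lowers the average latency by about 9.2%, and lowers the average energy consumption by about 31.1%.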

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, H.; Zhu, Z.; Li, Y.; Huang, W.; Wang, Z. Edge Computing Task Offloading Algorithm Based on Distributed Multi-Agent Deep Reinforcement Learning. Electronics 2025, 14, 4063. https://doi.org/10.3390/electronics14204063
