Facial and Speech-Based Emotion Recognition Using Sequential Pattern Mining

Abstract

1. Introduction

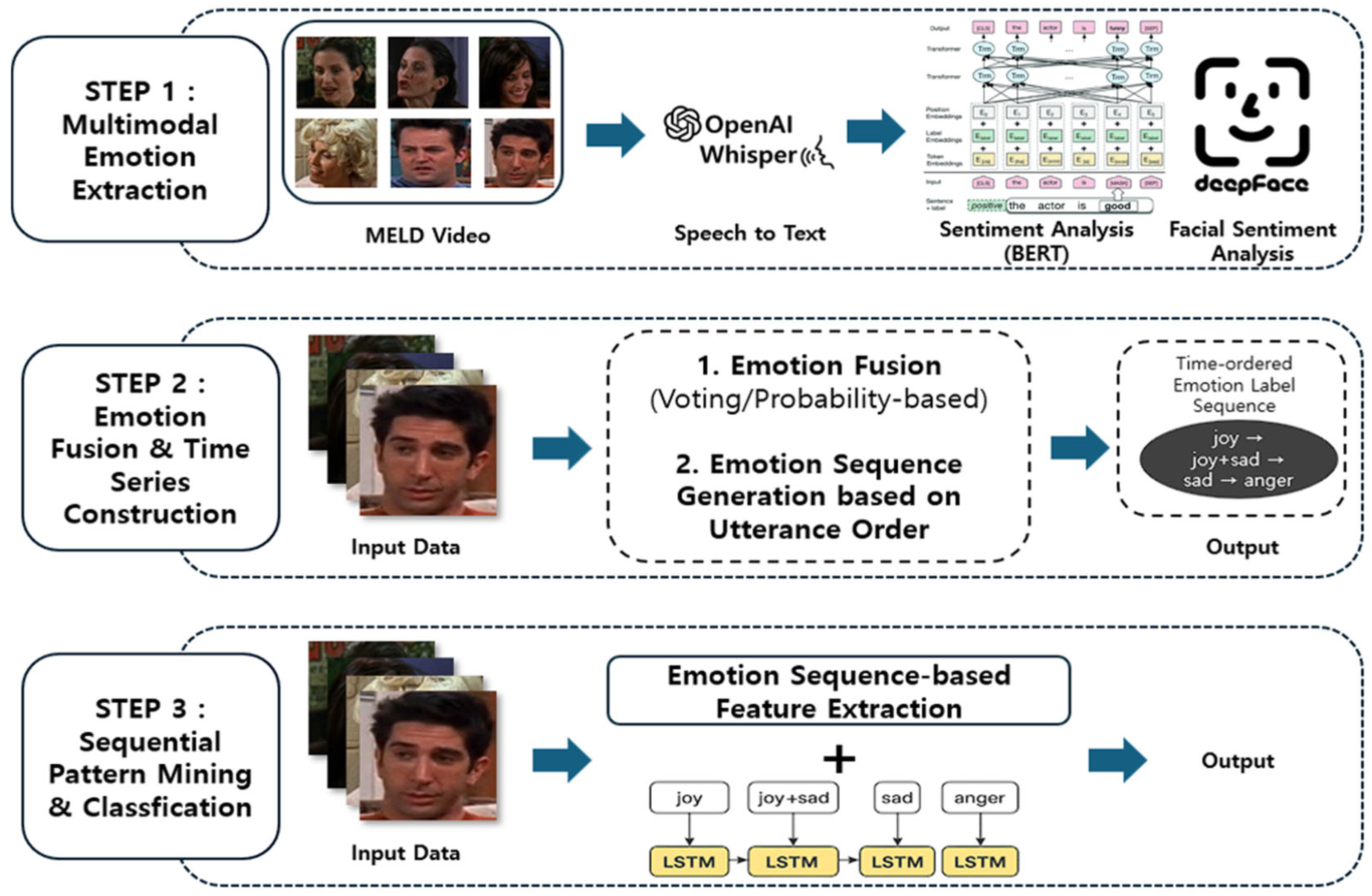

- Development of a multimodal emotion recognition framework: By integrating Whisper-based speech transcription, BERT-based text emotion analysis, and DeepFace-based facial emotion analysis, the proposed framework overcomes the limitations of unimodal approaches and achieves more accurate and reliable emotion recognition.

- Construction of emotion sequences and application of SPM: Emotion sequences are generated from MELD utterance sequences, and sequential pattern mining (SPM) techniques are applied to quantitatively analyze dynamic emotional characteristics such as transition patterns, emotion duration, and abrupt changes identified through change-point detection.

- Emotion flow visualization and empirical analysis: By visualizing emotion transition matrices and change-point detection results, the study offers intuitive insight into trends in affective shifts and sudden changes within conversations, providing design guidance for affective systems and interface improvements.

2. Related Work

2.1. Multimodal Emotion Recognition

2.2. Sequential Emotion Flow Analysis

3. Facial and Speech Emotion Sequence Modeling Using Sequential Pattern Mining

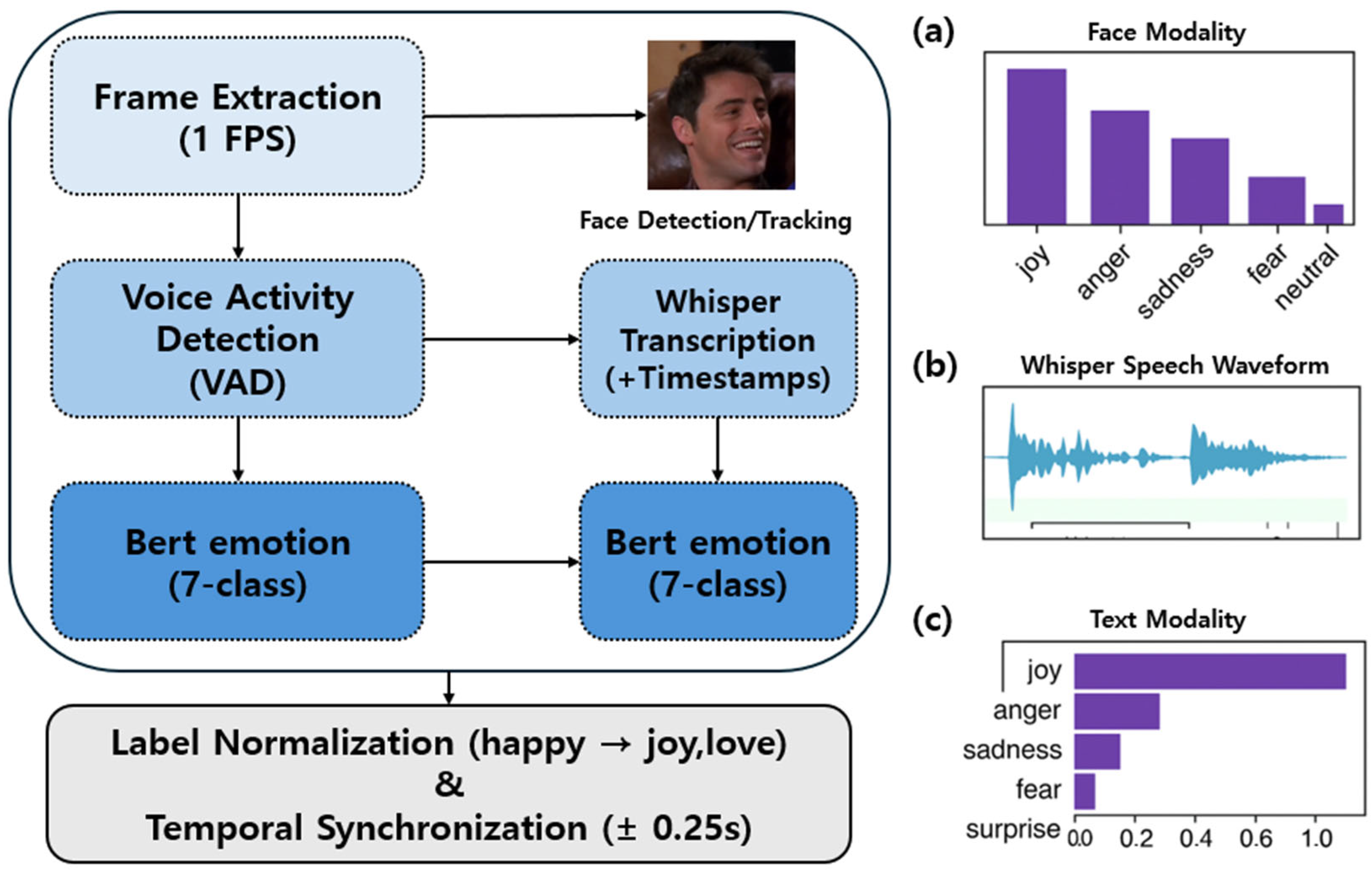

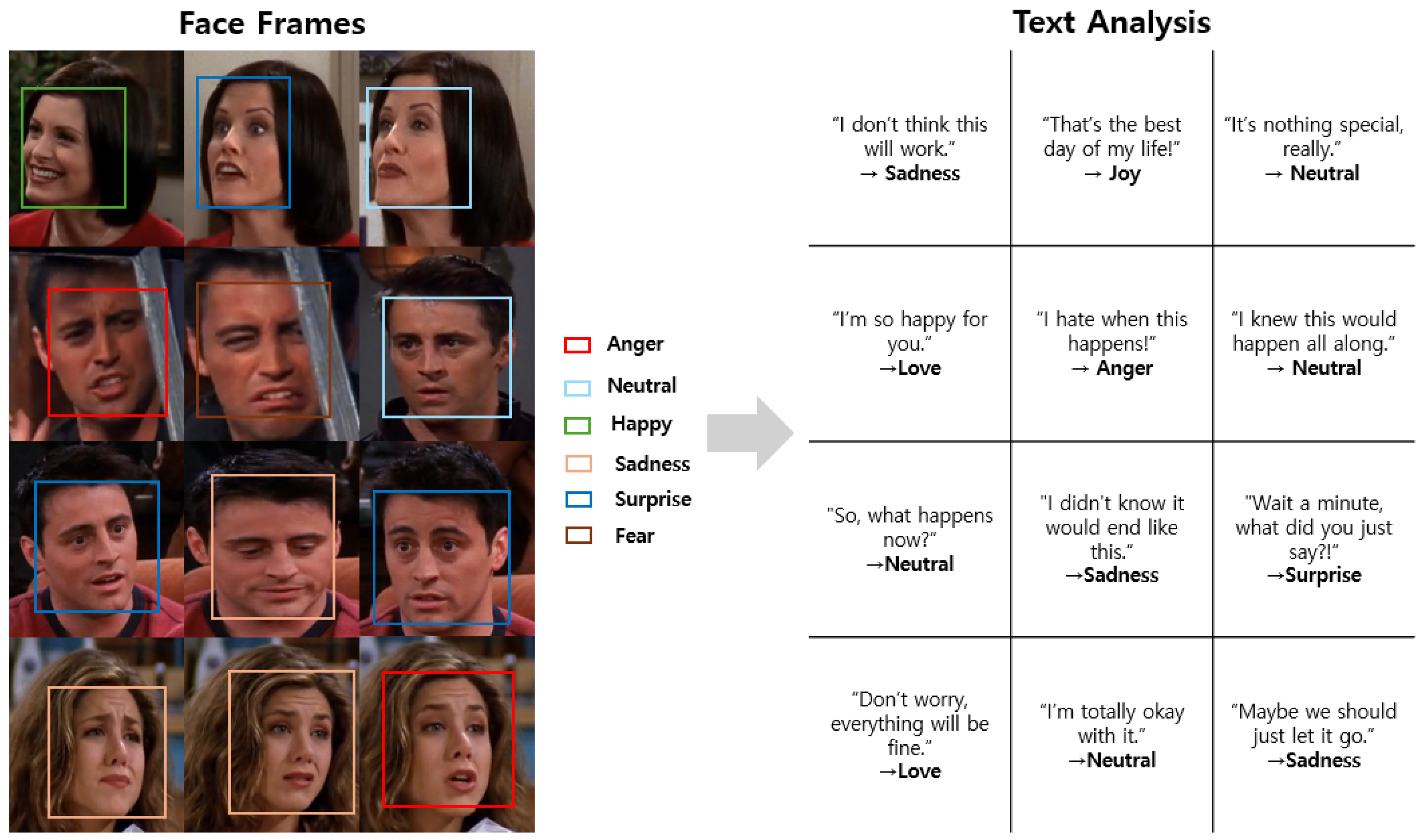

3.1. Multimodal Data Preprocessing and Emotion Extraction

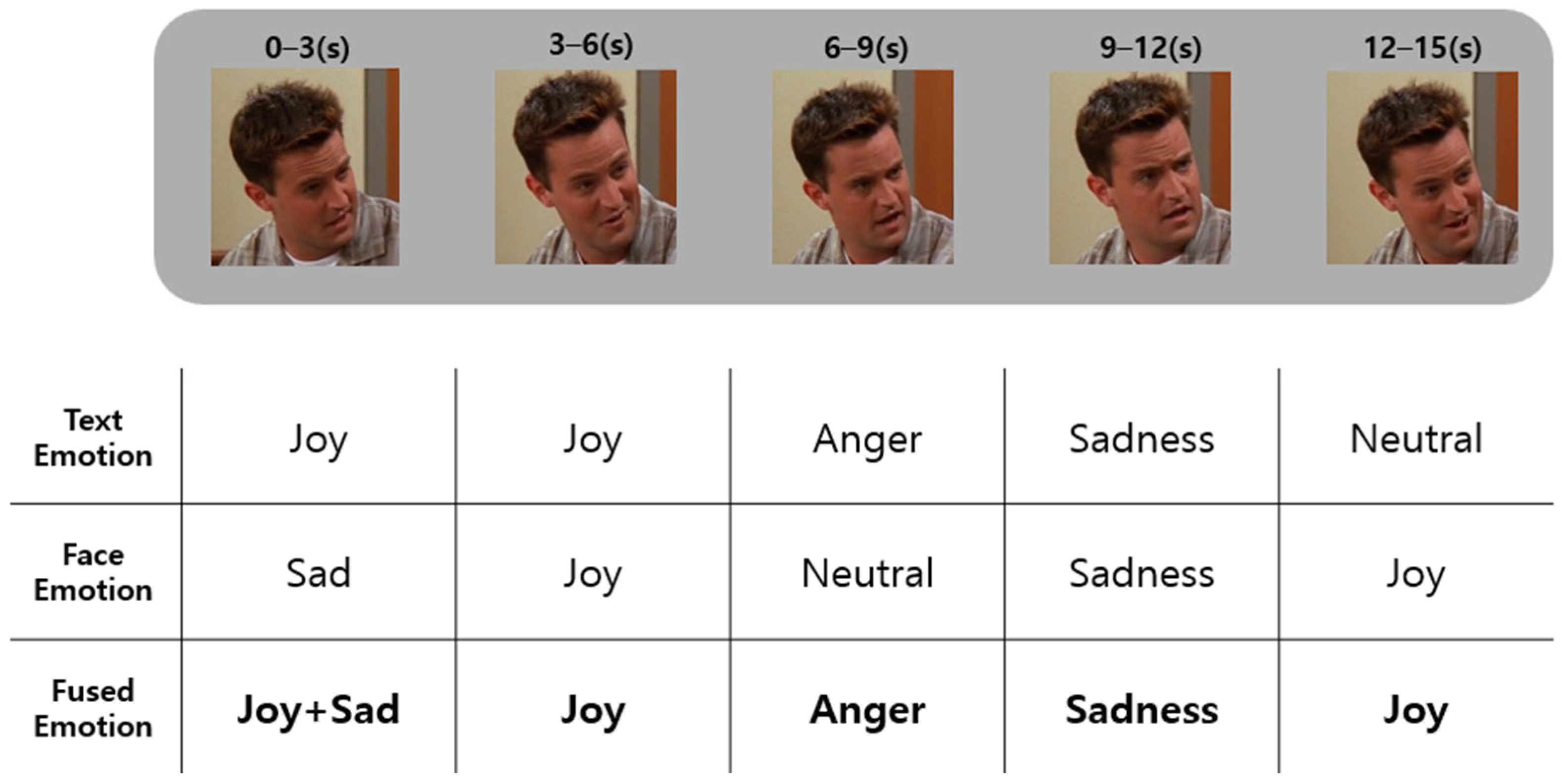

3.2. Emotion Fusion and Time Series Construction

- Dominant Emotion Selection: If the same emotion has the highest probability in both modalities, it is set as the final emotion.

- Probability-Based Weighted Voting: If the modalities indicate different emotions, the emotion with the higher softmax probability is selected. If the difference between the two probabilities is small (e.g., less than 0.15), both emotions are retained to represent a complex emotion (e.g., joy + sadness).

- Handling Uncertain Cases: If the confidence of all emotion predictions for a specific utterance is low (confidence <0.4), that segment is labeled as “uncertain” or “neutral.”
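The three fusion rules can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: it assumes each modality yields a dictionary mapping lowercase emotion names to softmax probabilities, interprets "low confidence for all predictions" as both top-1 probabilities falling below 0.4, checks that rule first, and returns "neutral" rather than "uncertain" in that case. The function name `fuse` and its parameter names are hypothetical.

```python
def fuse(text_probs, face_probs, tie_margin=0.15, min_conf=0.4):
    """Combine per-utterance softmax outputs of the text and face models.

    text_probs / face_probs: dicts such as {"joy": 0.6, "sadness": 0.4}.
    Returns a single label, or a compound label like "joy + sadness".
    """
    # Top-1 label and its probability for each modality
    t_label = max(text_probs, key=text_probs.get)
    f_label = max(face_probs, key=face_probs.get)
    t_conf, f_conf = text_probs[t_label], face_probs[f_label]

    # Uncertain case (assumed order): neither modality is confident enough
    if max(t_conf, f_conf) < min_conf:
        return "neutral"

    # Dominant emotion selection: both modalities agree
    if t_label == f_label:
        return t_label

    # Disagreement with similar confidence: keep both as a complex emotion
    if abs(t_conf - f_conf) < tie_margin:
        return f"{t_label} + {f_label}"

    # Otherwise keep the label of the more confident modality
    return t_label if t_conf > f_conf else f_label
```

For instance, with made-up probabilities `{"anger": 0.7, "neutral": 0.3}` from text and `{"neutral": 0.45, "anger": 0.35, "joy": 0.2}` from the face model, the function returns "anger", the same outcome as utterance 19 in the fusion example table.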

3.3. Emotion Sequence Mining and Classification

3.3.1. Emotion Transition Matrix

- Persistence Analysis: Measures how long a single emotion is maintained.

- Directionality: Identifies the primary transition paths between emotions (i.e., which emotions tend to lead to which other emotions).

- Predictive Modeling: Predicts the next emotional state based on past patterns.
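Persistence can be measured directly from an emotion sequence as the average length of runs of identical consecutive labels. The sketch below is one hedged way to compute it; the function name `mean_run_length` is hypothetical, and run lengths are counted in utterances rather than seconds.

```python
from itertools import groupby


def mean_run_length(sequence):
    """Average number of consecutive utterances each emotion persists for."""
    runs = {}
    # groupby splits the sequence into maximal runs of identical labels
    for emotion, group in groupby(sequence):
        runs.setdefault(emotion, []).append(sum(1 for _ in group))
    return {emotion: sum(lengths) / len(lengths) for emotion, lengths in runs.items()}
```

For example, the sequence joy, joy, anger, joy, joy, joy gives a mean run length of 2.5 for joy and 1.0 for anger.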

| Algorithm 1 Emotion Transition Matrix Calculation |
|---|
| 1: Input: An emotion sequence O = (o_1, o_2, …, o_T); a set of unique emotion states S = {s_1, s_2, …, s_N} |
| 2: Output: An emotion transition probability matrix P of size N × N |
| 3: ▷ Initialize a matrix to store transition counts |
| 4: Initialize an N × N matrix C with all elements set to 0 |
| 5: ▷ Iterate through the sequence to count observed transitions |
| 6: for t ← 1 to T − 1 do |
| 7: i ← indexOf(o_t, S) ▷ Index of the current emotion |
| 8: j ← indexOf(o_{t+1}, S) ▷ Index of the next emotion |
| 9: C[i][j] ← C[i][j] + 1 |
| 10: end for |
| 11: ▷ Normalize the count matrix row by row to obtain probabilities |
| 12: Initialize an N × N probability matrix P |
| 13: for i ← 1 to N do |
| 14: total_transitions ← Σ C[i][j] from j = 1 to N |
| 15: if total_transitions ≠ 0 then |
| 16: for j ← 1 to N do |
| 17: P[i][j] ← C[i][j] / total_transitions |
| 18: end for |
| 19: else |
| 20: for j ← 1 to N do |
| 21: P[i][j] ← 0 |
| 22: end for |
| 23: end if |
| 24: end for |
| 25: return P |
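Algorithm 1 can be sketched in plain Python as below, together with a hypothetical `predict_next` helper that illustrates the predictive-modeling use of the matrix by picking the most probable successor state. Both function names, and the choice to return `None` for a state with no observed outgoing transitions, are illustrative assumptions rather than details from the study.

```python
def transition_matrix(sequence, states):
    """Row-normalized first-order transition matrix (Algorithm 1)."""
    index = {s: k for k, s in enumerate(states)}
    n = len(states)
    counts = [[0] * n for _ in range(n)]
    # Count each observed transition o_t -> o_{t+1}
    for current, nxt in zip(sequence, sequence[1:]):
        counts[index[current]][index[nxt]] += 1
    probs = []
    for row in counts:
        total = sum(row)
        # Rows with no outgoing transitions stay all-zero, as in Algorithm 1
        probs.append([c / total for c in row] if total else [0.0] * n)
    return probs


def predict_next(matrix, states, current):
    """Most probable next state given the current one (hypothetical helper)."""
    row = matrix[states.index(current)]
    if not any(row):
        return None
    return states[max(range(len(row)), key=row.__getitem__)]
```

For the sequence joy, joy, anger, joy, neutral over the states [joy, anger, neutral], the joy row is [1/3, 1/3, 1/3], the anger row is [1, 0, 0], and the neutral row stays all-zero because no utterance follows the final neutral.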

3.3.2. Change-Point Detection

| Algorithm 2 CUSUM Change-Point Detection |
|---|
| 1: Input: A numeric sequence X = (x_1, x_2, …, x_T), threshold h, drift δ |
| 2: Output: A list of change-point indices C |
| 3: ▷ Initialization |
| 4: S ← 0 |
| 5: C ← [] |
| 6: μ ← mean(X) ▷ Mean of the sequence |
| 7: ▷ Iterate through the sequence to detect changes |
| 8: for t ← 1 to T do |
| 9: S ← max(0, S + x_t − μ − δ) |
| 10: if S > h then |
| 11: Append t to C |
| 12: S ← 0 ▷ Reset the cumulative sum after a change-point is detected |
| 13: end if |
| 14: end for |
| 15: return C |
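Algorithm 2 translates almost line for line into Python. The sketch below assumes the input is already a numeric series (how emotion labels are mapped to numbers is not specified in this section) and returns 1-based indices to match the pseudocode. As written, the statistic accumulates only deviations above μ + δ, so it flags upward shifts; detecting downward shifts would require a second, mirrored sum. The function name `cusum_change_points` is hypothetical.

```python
def cusum_change_points(x, h, drift):
    """One-sided (upper) CUSUM as in Algorithm 2; returns 1-based indices."""
    mu = sum(x) / len(x)  # global mean of the sequence
    s = 0.0
    change_points = []
    for t, value in enumerate(x, start=1):
        # Accumulate positive deviations beyond mean + drift
        s = max(0.0, s + value - mu - drift)
        if s > h:
            change_points.append(t)
            s = 0.0  # reset after a detection
    return change_points
```

For the toy series [0, 0, 0, 0, 5, 5, 5, 5] with h = 3 and δ = 0.5, the mean is 2.5, each post-shift value adds 2 to S, and the function returns [6, 8]. The reset after each detection is why one sustained shift can be reported more than once.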

3.3.3. Emotion Sequential Pattern Mining

3.3.4. Time Series Classification (LSTM)

- Emotion Label: The primary emotion label for the utterance, represented as a one-hot encoded vector (e.g., ‘joy’ as [1, 0, 0, 0, 0, 0, 0]).

- Change-Point Feature: A binary feature indicating an abrupt emotional shift. This value was set to ‘1’ if the CUSUM algorithm detected a change-point at utterance t, and ‘0’ otherwise. This allows the model to explicitly recognize moments of sharp transition.

- SPM Pattern Features: Features derived from the mined frequent sequential patterns. To generate these, we first determined the minimum support threshold (min_support) for the PrefixSpan algorithm empirically by evaluating a range of values on the validation set, selecting the one that yielded the most meaningful patterns. Then, for each dialogue, we created a multi-hot binary vector where each dimension corresponded to one of the top-k frequent patterns. A ‘1’ was marked in the vector if the dialogue’s emotion sequence contained that specific pattern. This dialogue-level SPM feature vector was then concatenated with the utterance-level features for every time step in that dialogue.
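The SPM feature construction described above can be sketched as follows, under stated assumptions: sequences are lists of the integer emotion indices from the class-index table, `min_support` is an absolute count of dialogues (the value tuned on the validation set is not reproduced here), patterns are capped at length 3, and "contains" means the pattern occurs as a possibly non-contiguous subsequence, which matches how PrefixSpan counts support. This is a compact PrefixSpan for single-item sequences, not the implementation the authors used, and all function names are hypothetical. The last helper concatenates the one-hot label, the CUSUM change-point flag, and the dialogue-level multi-hot vector for each utterance, mirroring the three features listed above.

```python
def prefixspan(db, min_support, max_len=3):
    """Mine frequent subsequences from sequences of single items (PrefixSpan)."""
    results = []

    def mine(prefix, projected):
        # projected holds (sequence index, start position) pairs: the suffix
        # of each sequence after the first occurrence of `prefix`
        support = {}
        for sid, start in projected:
            for item in set(db[sid][start:]):  # count each sequence once
                support[item] = support.get(item, 0) + 1
        for item in sorted(support):
            if support[item] < min_support:
                continue
            pattern = prefix + [item]
            results.append((tuple(pattern), support[item]))
            if len(pattern) < max_len:
                next_projected = []
                for sid, start in projected:
                    suffix = db[sid][start:]
                    if item in suffix:
                        next_projected.append((sid, start + suffix.index(item) + 1))
                mine(pattern, next_projected)

    mine([], [(sid, 0) for sid in range(len(db))])
    return results


def contains(sequence, pattern):
    """True if `pattern` occurs in `sequence` as a (possibly gapped) subsequence."""
    it = iter(sequence)
    return all(item in it for item in pattern)


def top_k_patterns(results, k, min_len=2):
    """The k most frequent patterns with at least `min_len` items."""
    ranked = sorted((r for r in results if len(r[0]) >= min_len), key=lambda r: -r[1])
    return [pattern for pattern, _ in ranked[:k]]


def multi_hot(sequence, patterns):
    """Dialogue-level binary vector: 1 if the dialogue contains the pattern."""
    return [1 if contains(sequence, p) else 0 for p in patterns]


def build_lstm_inputs(labels, change_flags, spm_vector, num_classes=7):
    """Per-utterance features: one-hot label + change-point flag + SPM vector."""
    rows = []
    for label, flag in zip(labels, change_flags):
        one_hot = [0] * num_classes
        one_hot[label] = 1
        rows.append(one_hot + [flag] + list(spm_vector))
    return rows
```

With a toy database of three dialogues, [[0, 0, 1], [0, 1, 2], [1, 2]], and `min_support=2`, the length-2 patterns found are (0, 1) and (1, 2), each supported by two dialogues.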

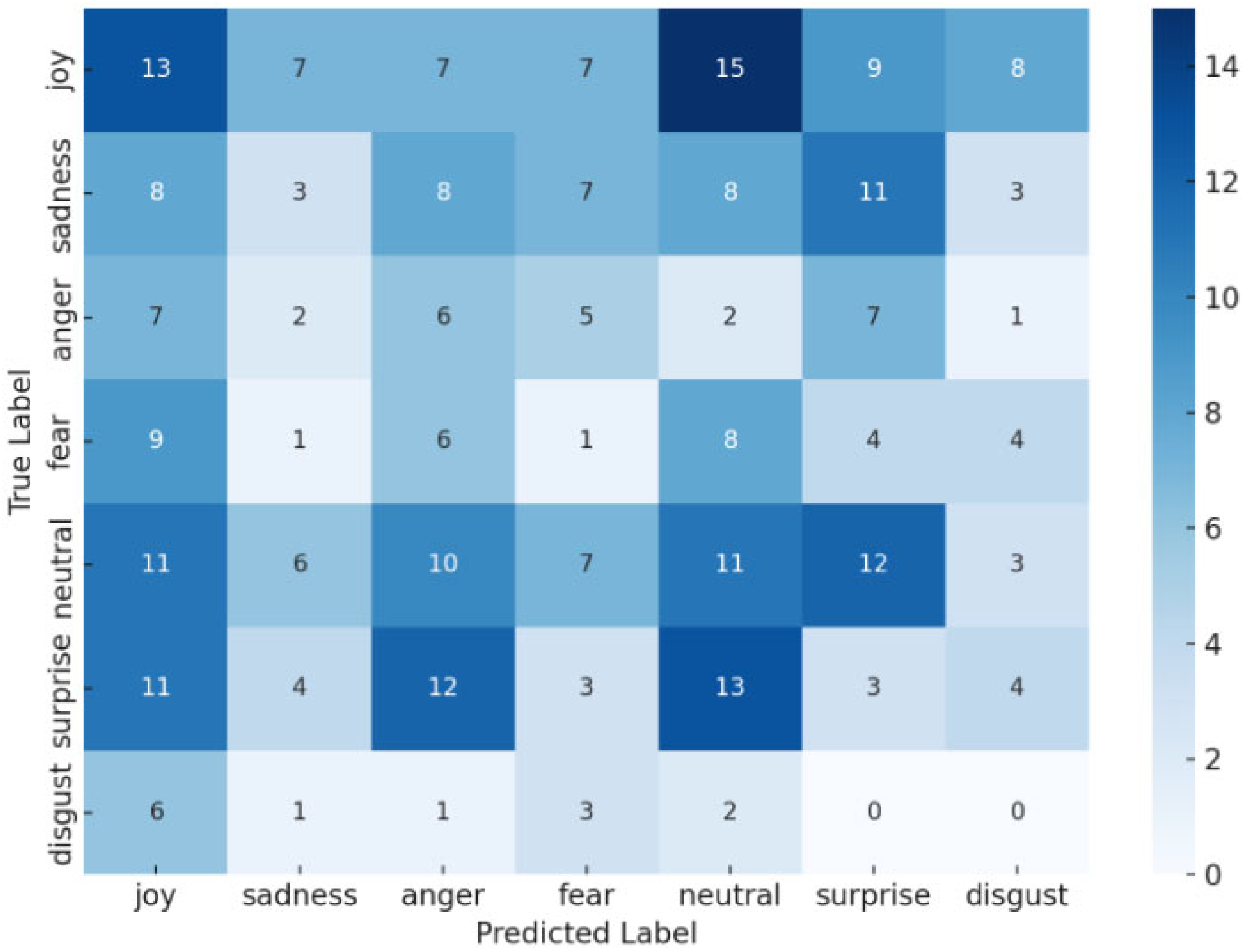

4. Results and Performance Evaluation

4.1. Results

4.2. Performance Evaluation

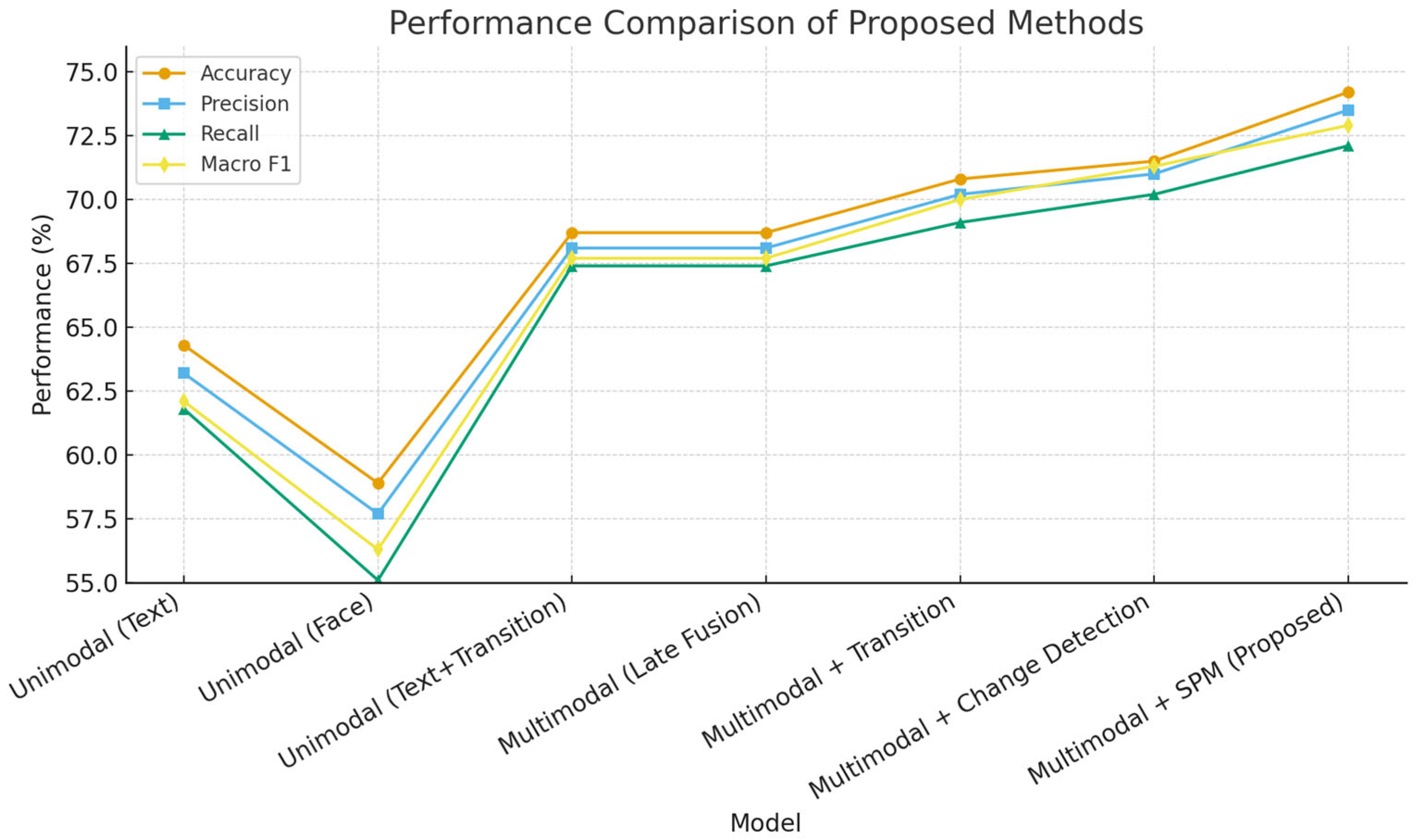

- Unimodal (Text): A unimodal model that recognizes emotions using only the BERT model based on text data transcribed by Whisper. As text data has been a primary modality in previous multimodal research, it serves as a useful performance baseline.

- Unimodal (Face): A unimodal model that performs emotion recognition using only the DeepFace-based facial expression recognition model, recognizing emotions from video frame data. This serves as a baseline for evaluating the standalone performance of the visual modality.

- Unimodal (Text) + Transition Matrix: To better isolate the individual contributions of multimodal fusion and temporal modeling, we introduced this baseline. It constructs an emotion transition matrix solely from the text modality and uses this temporal information as a feature for classification, allowing for a more direct comparison against our proposed multimodal temporal models.

- Multimodal (Late Fusion): A multimodal fusion model that performs final emotion prediction by combining the emotion results extracted from the text and facial modalities using a simple voting method. This is a basic multimodal approach without any additional time-series analysis techniques applied.

- Multimodal + Transition Matrix: A model that considers temporal features by applying an Emotion Transition Matrix to the results of the Late Fusion-based multimodal approach. This setup is for evaluating whether utilizing emotion transition characteristics improves model performance.

- Multimodal + Change Detection: An approach that aims to improve emotion prediction accuracy by adding CUSUM change-point detection to the multimodal emotion sequence, so that abrupt emotional changes are detected and reflected in the prediction.

- Multimodal + SPM (Proposed): The final model proposed in this study, which combines all of the methods (multimodal fusion, emotion transition matrix, change-point detection, emotion SPM, and LSTM-based time-series classification).

4.3. Computational Cost and Scalability for Real-Time Systems

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Category | Value |
|---|---|
| Total Dialogues | 1,433 |
| Total Utterances | 13,703 |
| Average Utterance Length | Approx. 9 s |
| Average Emotion Length | 2.7 s |
| Number of Emotion Classes | 7 |
| Frames per Video | Approx. 30–60 |

| Dialogue ID | Utterance ID | Text Emotion | Face Emotion | Fused Emotion | Timestamp (s) |
|---|---|---|---|---|---|
| 757 | 17 | Joy | Sadness | Joy + Sadness | 0–3 |
| 757 | 18 | Joy | Joy | Joy | 3–6 |
| 757 | 19 | Anger | Neutral | Anger | 6–9 |
| 757 | 20 | Sadness | Sadness | Sadness | 9–12 |
| 757 | 21 | Neutral | Joy | Joy | 12–15 |

| Emotion Class | Integer Index |
|---|---|
| Joy | 0 |
| Anger | 1 |
| Sadness | 2 |
| Fear | 3 |
| Surprise | 4 |
| Love | 5 |
| Neutral | 6 |

| Video ID | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| V001 | 0 | 0 | 1 | 1 | 0 | 0 | 0 |
| V002 | 1 | 1 | 1 | 0 | 0 | 0 | 0 |
| V003 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| V004 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| V005 | 1 | 1 | 1 | 0 | 0 | 0 | 0 |
| V006 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| … | … | … | … | … | … | … | … |
| V500 | 1 | 1 | 1 | 0 | 0 | 0 | 0 |

| Prefix | Subset of Sequential Patterns |
|---|---|
| <0> | <0, 0, 1>, <0, 1, 2>, <0, 0, 3>, <0, 2, 2>, <0, 3, 0>, <0, 0, 1, 0>, <0, 2, 4>, <0, 1, 1>, <0, 0, 0>, <0, 4, 2>, <0, 2, 5>, <0, 3, 1>, <0, 2, 0>, <0, 6, 6>, <0, 4, 0>, <0, 0, 6>, … |
| <1> | <1, 2, 2>, <1, 0, 1>, <1, 4, 4>, <1, 3, 3>, <1, 1, 0>, <1, 2, 5>, <1, 6, 1>, <1, 0, 0>, <1, 3, 1>, <1, 5, 0>, <1, 1, 2>, <1, 6, 3>, <1, 6, 0>, <1, 1, 6>, <1, 2, 1>, … |
| <2> | <2, 0, 3>, <2, 2, 0>, <2, 1, 6>, <2, 3, 0>, <2, 0, 0>, <2, 4, 2>, <2, 6, 3>, <2, 2, 1>, <2, 3, 3>, <2, 5, 0>, <2, 2, 4>, <2, 1, 0>, <2, 6, 1>, <2, 2, 6>, <2, 0, 2>, … |
| <3> | <3, 3, 2>, <3, 0, 0>, <3, 2, 5>, <3, 4, 0>, <3, 1, 3>, <3, 0, 2>, <3, 3, 1>, <3, 0, 4>, <3, 5, 2>, <3, 2, 3>, <3, 6, 0>, <3, 1, 2>, <3, 4, 6>, <3, 6, 2>, <3, 3, 0>, … |
| <4> | <4, 0, 3>, <4, 4, 3>, <4, 2, 1>, <4, 0, 0>, <4, 1, 4>, <4, 3, 2>, <4, 6, 1>, <4, 0, 2>, <4, 2, 4>, <4, 5, 3>, <4, 1, 0>, <4, 3, 5>, <4, 0, 6>, <4, 6, 0>, <4, 1, 2>, <4, 0, 1>, … |
| <5> | <5, 0, 6>, <5, 2, 3>, <5, 0, 0>, <5, 4, 1>, <5, 1, 2>, <5, 3, 0>, <5, 2, 5>, <5, 0, 3>, <5, 5, 1>, <5, 6, 2>, <5, 1, 1>, <5, 2, 0>, <5, 0, 1>, <5, 6, 0>, <5, 5, 0>, … |
| <6> | <6, 6, 2>, <6, 0, 1>, <6, 3, 4>, <6, 2, 6>, <6, 1, 1>, <6, 4, 0>, <6, 0, 2>, <6, 5, 3>, <6, 1, 5>, <6, 3, 0>, <6, 2, 2>, <6, 0, 0>, <6, 1, 0>, <6, 2, 0>, <6, 6, 0>, … |

| Model | Accuracy (%) | Precision (%) | Recall (%) | Macro F1 (%) |
|---|---|---|---|---|
| Unimodal (Text) | 64.3 | 63.2 | 61.8 | 62.1 |
| Unimodal (Face) | 58.9 | 57.7 | 55.1 | 56.3 |
| Unimodal (Text) + Transition Matrix | 68.7 | 68.1 | 67.4 | 67.7 |
| Multimodal (Late Fusion) | 68.7 | 68.1 | 67.4 | 67.7 |
| Multimodal + Transition Matrix | 70.8 | 70.2 | 69.1 | 70.2 |
| Multimodal + Change Detection | 71.5 | 71.0 | 70.2 | 71.3 |
| Multimodal + SPM (Ours) | 74.2 | 73.5 | 72.1 | 72.9 |

| Model | Macro F1 (%) |
|---|---|
| Multimodal (Fusion only) | 67.7 |
| Emotion Transition Matrix | 70.2 |
| Change-Point Detection | 71.3 |
| SPM Features | 72.3 |
| LSTM Classification (Full) | 72.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Song, Y.; Chung, K. Facial and Speech-Based Emotion Recognition Using Sequential Pattern Mining. Electronics 2025, 14, 4015. https://doi.org/10.3390/electronics14204015
