3.1. Demand Analysis of Intelligent Operation and Maintenance Auxiliary Methods for High-Voltage Switchgear Based on Knowledge Graph

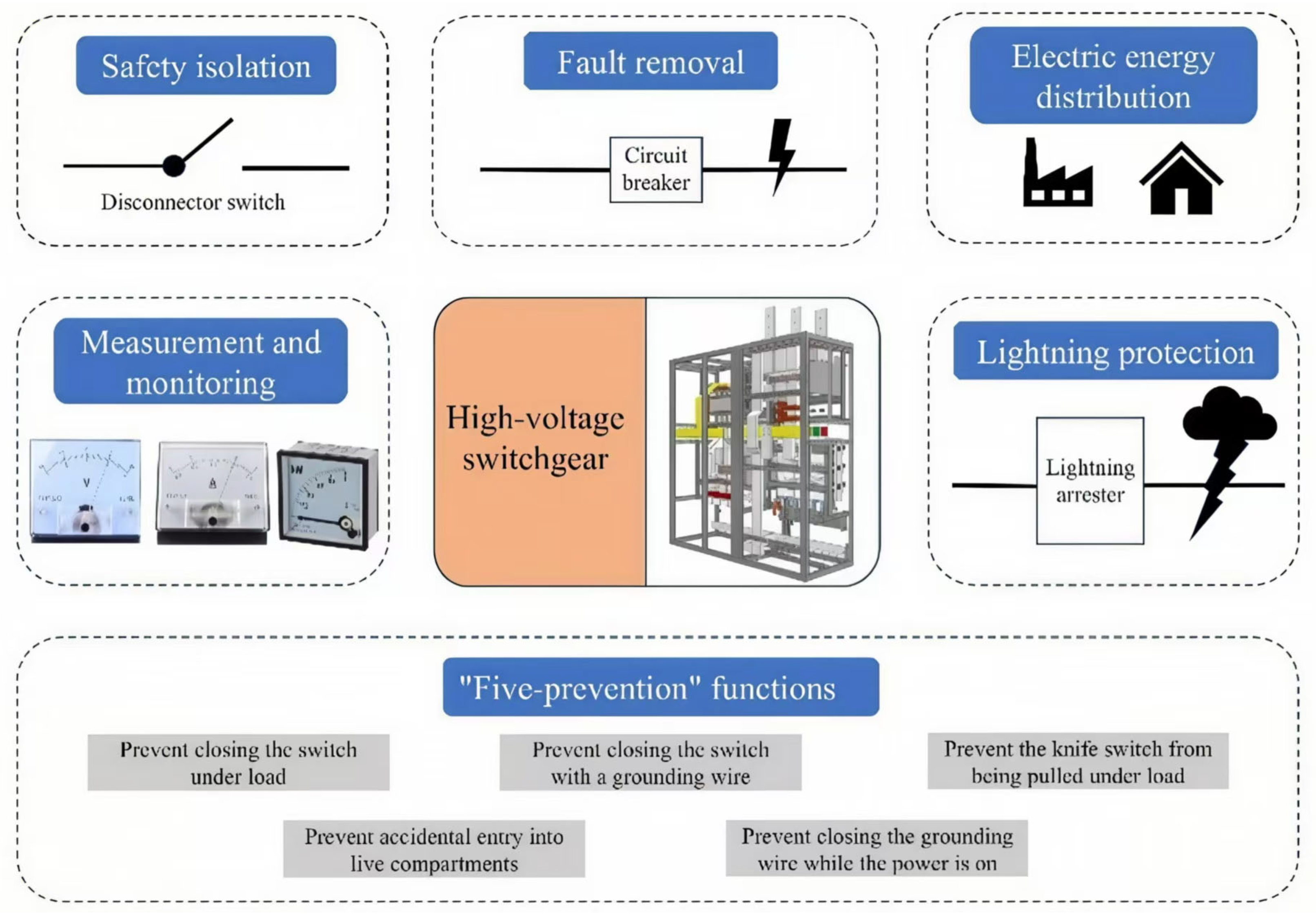

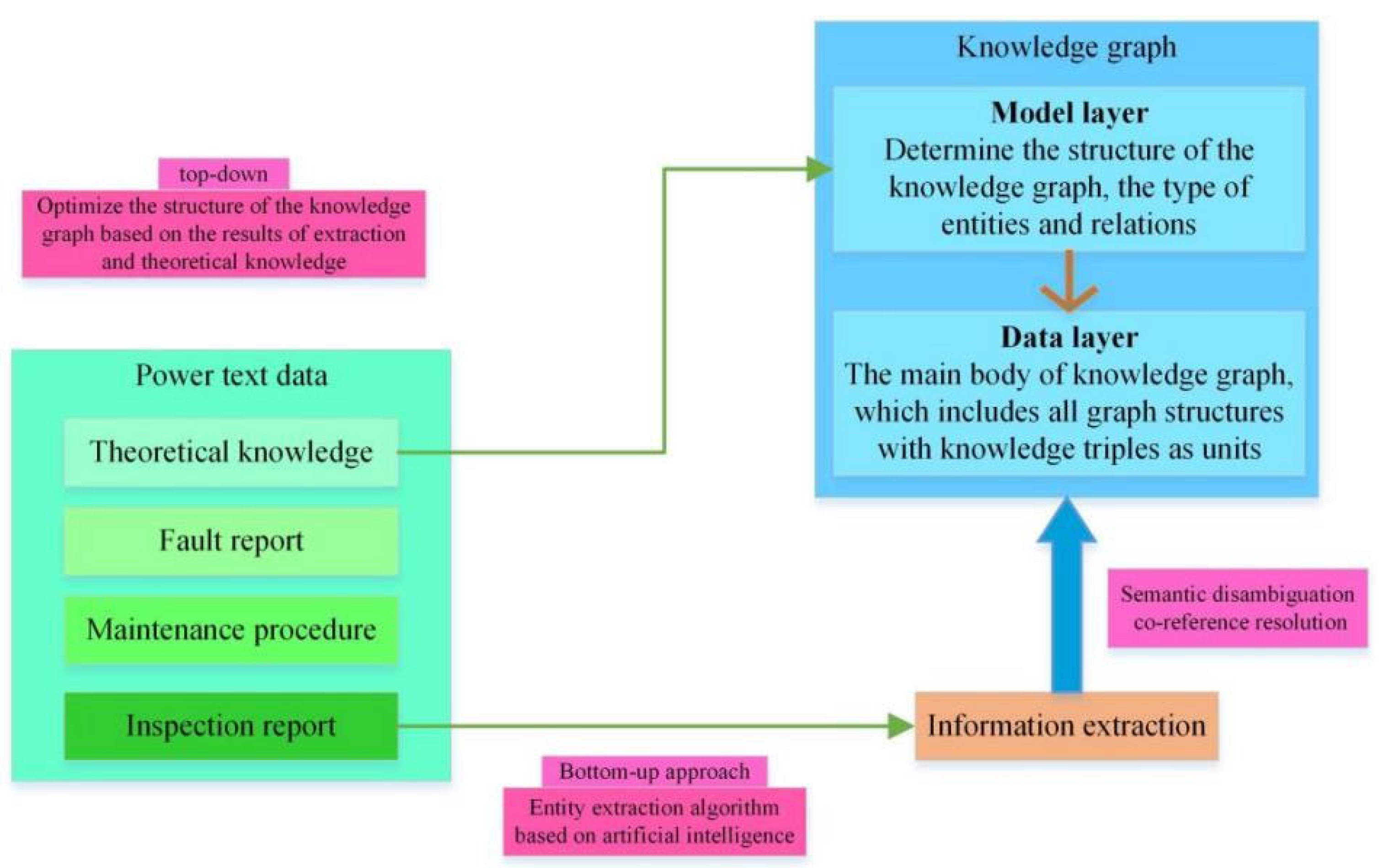

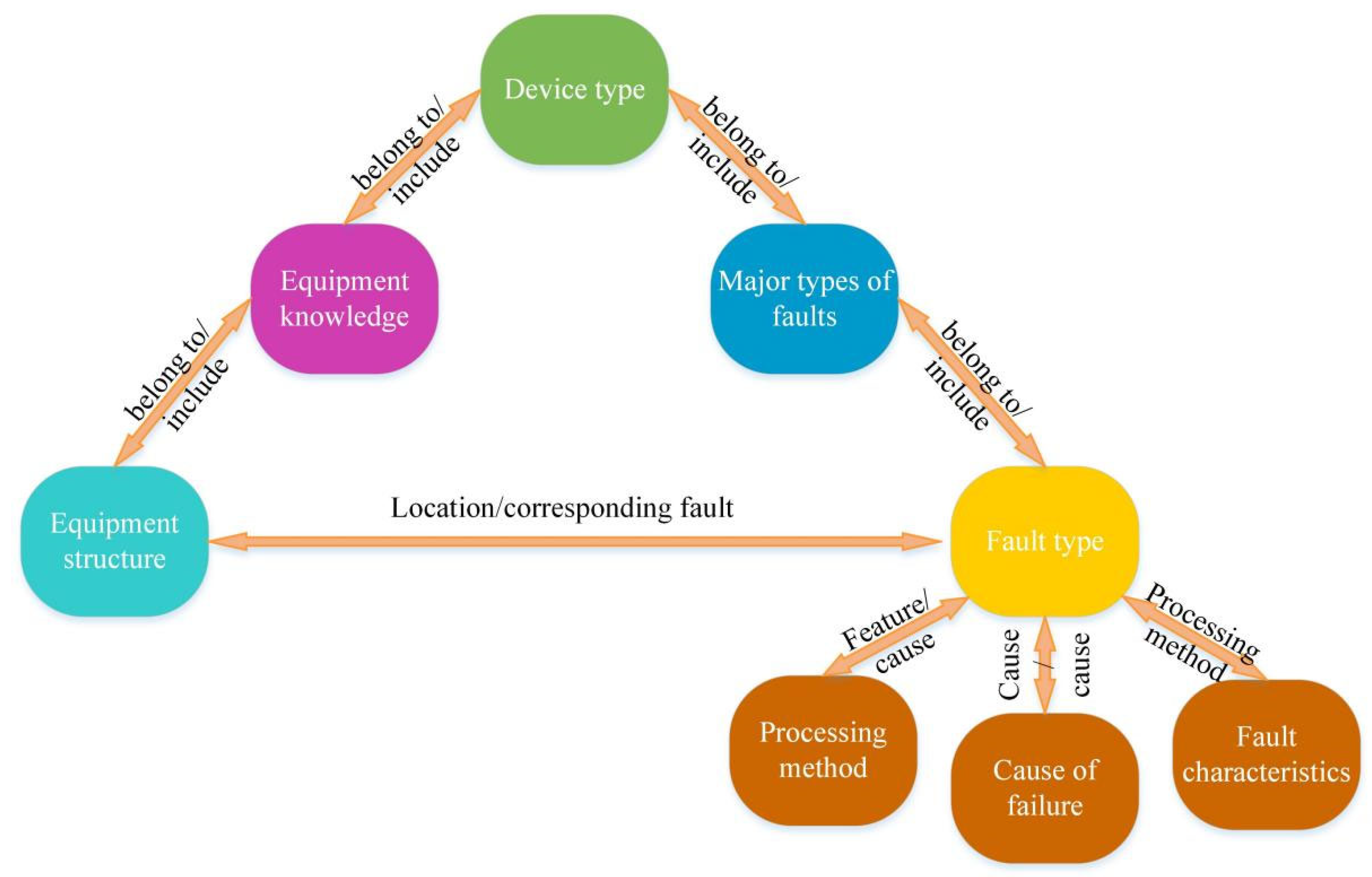

During routine or temporary maintenance of high-voltage switchgear, operation and maintenance decision-makers must formulate maintenance plans based on equipment monitoring signals, maintenance records, and fault information, decide whether to open the switchgear, choose the maintenance method and process, and generate work tickets for on-site personnel to execute. This process places high demands on the professional level and experience of operation and maintenance personnel. With the advancement of smart grid construction, intelligent operation and maintenance auxiliary methods based on machine learning can significantly improve diagnostic accuracy. At present, fault reasoning methods for power equipment fall into two categories: data-driven and knowledge-driven. Data-driven methods rely on real-time analysis of massive monitoring signals and can effectively monitor single fault characteristics; in complex scenarios, however, they still rely on manual decision-making to a certain extent [23]. Knowledge-driven methods rely on expert systems and fault samples and scale poorly. As a structured semantic knowledge base, the knowledge graph stores knowledge in a graph structure at its data layer, naturally captures entities and the relationships between them, and can cover most equipment fault types [24,25,26]. The knowledge graph has a clear structure, and adding or deleting a node affects only adjacent nodes without destroying the integrity of the overall network, so it scales well. It is therefore of practical significance to study intelligent operation and maintenance auxiliary methods for high-voltage switchgear based on a knowledge graph.

After extensive research, this paper notes that the knowledge recommendation field [27] shares key characteristics with the knowledge-graph-based fault reasoning task for power equipment. A recommendation system is defined as follows: given a user set U, an item set V, and a preference function f: U × V → R, where $R_{i,j} = f(U_i, V_j)$ denotes the preference of user $U_i$ for item $V_j$, the problem studied by the recommendation system is to find, for any user $U_i$, the item $V_k$ that the user prefers most, that is:

$$V_k = \arg\max_{V_j \in V} f(U_i, V_j) \tag{4}$$

Compared with traditional data-driven artificial intelligence, intelligent algorithms based on knowledge graphs can combine textual features such as on-site conditions and fault knowledge to achieve knowledge reasoning, assist operation and maintenance personnel in making decisions, and complement data-driven AI. Knowledge graph recommendation algorithms are mainly divided into embedding-based methods and path-based methods. Embedding-based methods use graph embedding technology to represent entities and relationships and expand the semantic information of items and users; path-based methods construct algorithms by mining relationships and connections in knowledge graphs, which have better recommendation effects and interpretability, but are highly dependent on graph structures [28]. Ripplenet [29,30] combines the above two methods for knowledge graph recommendation tasks. Based on user embedding and graph entity embedding, it uses vectors to represent the characteristics of users, items, and targets; by calculating ripple sets, it realizes the preference propagation of user interests on the knowledge graph, which has the characteristics of fast calculation speed, low resource consumption, high accuracy, and good interpretability.

Compared with KGCN (Knowledge Graph Convolutional Network), TransE (Translational Embedding Model), and GraphSAGE (Graph Sample and Aggregate), Ripplenet has the following advantages: (1) Computational Efficiency: KGCN requires traversing neighbor nodes for convolution operations, and GraphSAGE requires sampling multi-hop neighbors—both exhibit significantly increased inference time (>2 s) when the number of graph nodes exceeds 1000. In contrast, Ripplenet controls the propagation range through “ripple sets”, maintaining inference time stably within 1 s. (2) Interpretability: TransE models relationships through vector translation, lacking inference path visualization; Ripplenet’s “ripple sets” can intuitively display the propagation path from fault features to fault types. (3) Adaptability: KGCN and GraphSAGE rely on large amounts of historical fault data, and TransE is sensitive to sparse relationships; Ripplenet alleviates data sparsity through path propagation, making it more suitable for switchgear fault data characteristics.

This paper proposes an intelligent operation and maintenance auxiliary method for high-voltage switchgear based on a knowledge graph recommendation system. As formula (4) shows, the recommendation algorithm establishes a mapping among user $U_i$, item $V_j$, and the preference score $R_{i,j}$, based on the historical preference information of user $U_i$, the preference information of other users, and the similarity between items and between users. If user $U_i$ is defined as a fault occurring on power equipment, item V as a collection of fault information such as fault phenomenon, fault cause, and fault type, and R as the set of probabilities that each fault feature is associated with a given fault of the equipment, then formula (4) can be rewritten as follows:

Formula (5) applies the knowledge-graph-based recommendation algorithm to the intelligent operation and maintenance auxiliary task for power equipment. In the formula, $F_i$ is the i-th fault, $V_j$ is a feature of concern in the fault information, such as a certain fault type or a certain fault cause, and $r_{ij}$ is the correlation between $F_i$ and $V_j$. Once the correlation exceeds a certain threshold, $V_j$ is considered strongly correlated with $F_i$, that is, the i-th fault contains the fault feature $V_j$. This completes the transformation from the recommendation system to the power equipment fault reasoning system.
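The thresholding rule described above can be illustrated with a minimal sketch. The function name, the threshold of 0.5, and the "contact wear" score are assumptions made only for illustration; the other two scores are taken from the case analysis later in the paper, and the paper does not state the threshold it uses.

```python
from typing import Dict, List


def strongly_correlated_features(scores: Dict[str, float],
                                 threshold: float = 0.5) -> List[str]:
    """Return the features V_j whose correlation r_ij with fault F_i
    exceeds the threshold, sorted from highest to lowest correlation.

    The threshold value is an assumption; the paper only says
    "a certain threshold".
    """
    selected = [(name, r) for name, r in scores.items() if r > threshold]
    selected.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in selected]


# Correlation scores r_ij for a single fault F_i.
# "contact wear" is a hypothetical unrelated feature added for contrast.
scores = {
    "insulation aging": 0.92,
    "floating potential": 0.65,
    "contact wear": 0.08,
}

# Features treated as belonging to the fault.
print(strongly_correlated_features(scores, threshold=0.5))

# The single most strongly correlated feature, in the spirit of the
# recommendation-style arg max.
print(max(scores, key=scores.get))
```

Raising the threshold trades missed features for fewer spurious associations, so in practice it would be tuned on the validation set.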

Given the knowledge graph G, the task of the recommendation algorithm is to learn the prediction function. In the prediction task, when Vj is a feature of the fault Fi, the two are associated, and the degree of association is marked as 1; if Vj is not a feature of the fault Fi, the two are unrelated, and the degree of association is marked as 0. Therefore, the recommendation algorithm based on the knowledge graph can be applied to the intelligent operation and maintenance auxiliary task of high-voltage switchgear.

However, there are still some differences between the auxiliary tasks of intelligent operation and maintenance of power equipment and the tasks of recommendation systems. As far as the recommendation system is concerned, it can continuously carry out recommendation work based on the historical information of the same user; but in the scenario of intelligent operation and maintenance of power equipment, the equipment fault information is fixed before the maintenance task is started, lacking the accumulation and real-time update of historical records, resulting in a cold start problem. In addition, the amount of data for a single user in the recommendation system is often very large. In contrast, the amount of power equipment fault information is relatively small, and there is a lack of data. Because of this, a path-based knowledge graph recommendation method can be adopted. This method uses multiple connection relationships between entities in the knowledge graph and combines the recommendation results of other fault information to solve the problem of data scarcity. Although the path-based method has certain obstacles in updating the knowledge graph, the knowledge graph in the field of high-voltage switchgear is small in scale, the operation and maintenance knowledge system is relatively mature and the update frequency is low, which effectively avoids the shortcomings of this method.

To further verify that path-based propagation in Ripplenet compensates for the cold-start problem, we conducted additional experiments.

3.1.1. Data Preparation

Three datasets and a fault feature template library are used to simulate different cold-start scenarios:

| Dataset Type | Source and Description |
| --- | --- |
| Synthetic Sparse Dataset | Derived from 10% of the original high-voltage switchgear fault dataset (950 samples), simulating cold-start for new switchgear with scarce historical data. Covers 4 fault categories (insulation: 35%, mechanical: 28%, heating: 25%, other: 12%) consistent with the original dataset. |
| Circuit Breaker Source Dataset | 1000 manually labeled mechanical fault samples of 10 kV circuit breakers (from Guangdong Power Grid), including faults like “breaker refusal to close” and “contact wear” (similar to switchgear mechanical faults). |
| Switchgear Target Dataset | 200 new mechanical fault samples of high-voltage switchgear (no overlap with the original dataset), simulating cold-start for newly deployed switchgear. |
| Fault Feature Template Library | Contains 20 pre-defined universal fault features (e.g., “TEV > 15 dB for partial discharge”, “temperature > 85 °C for overheating”), used as seed nodes in Ripplenet. |

3.1.2. Experimental Groups and Design

The experiment is divided into two sub-experiments to validate different mitigation strategies:

Sub-Experiment 1: Ablation Experiment for Path-Based Propagation.

To verify the effect of path-based propagation on cold-start alleviation:

| Group ID | Strategy | Key Operation |
| --- | --- | --- |
| Group A | Ripplenet with path-based propagation (proposed method) | Enable multi-hop preference propagation (max hops = 3, consistent with optimal settings in Section 3.7). |
| Group B | Ripplenet without path-based propagation (baseline) | Disable multi-hop propagation; only use single-hop reasoning between seed nodes and adjacent entities. |

Sub-Experiment 2: Validation of Layered Mitigation Strategies

To verify transfer learning (short-term) and fault feature template library (medium-term):

| Strategy Type | Experimental Design |
| --- | --- |
| Transfer Learning | 1. Pre-train BERT-wwm on the circuit breaker source dataset; 2. Fine-tune BERT-wwm on the switchgear target dataset; 3. Train Ripplenet with the fine-tuned BERT-wwm (hyperparameters: batch size = 12, learning rate = 2 × 10⁻⁵, epochs = 15). |
| Fault Feature Template Library | Use the 20 universal features as seed nodes in Ripplenet (replacing 50% of the original seed nodes from the target dataset) and train Ripplenet on the switchgear target dataset. |
| Baseline (No Mitigation) | Train BERT-wwm and Ripplenet directly on the switchgear target dataset without any mitigation strategies. |

3.1.3. Experimental Results

Results of Sub-Experiment 1 (Ablation for Path-Based Propagation)

Performance on the synthetic sparse dataset (cold-start simulation):

| Group ID | ACC (%) | AUC | Performance Improvement (vs. Group B) |
| --- | --- | --- | --- |
| Group A | 82.3 | 0.85 | ACC: +13.6%, AUC: +0.14 |
| Group B | 68.7 | 0.71 | - |

Results of Sub-Experiment 2 (Layered Mitigation Strategies)

Performance on the switchgear target dataset (cold-start for new equipment):

| Mitigation Strategy | BERT-wwm F1-Score | Ripplenet ACC (%) | Ripplenet AUC | Performance Improvement (vs. Baseline) |
| --- | --- | --- | --- | --- |
| Baseline (No Mitigation) | 0.72 | 65.1 | 0.68 | - |
| Transfer Learning | 0.83 | 85.3 | 0.86 | ACC: +20.2%, AUC: +0.18, F1-Score: +0.11 |
| Fault Feature Template Library | 0.78 | 74.3 | 0.77 | ACC: +9.2%, AUC: +0.09, F1-Score: +0.06 |

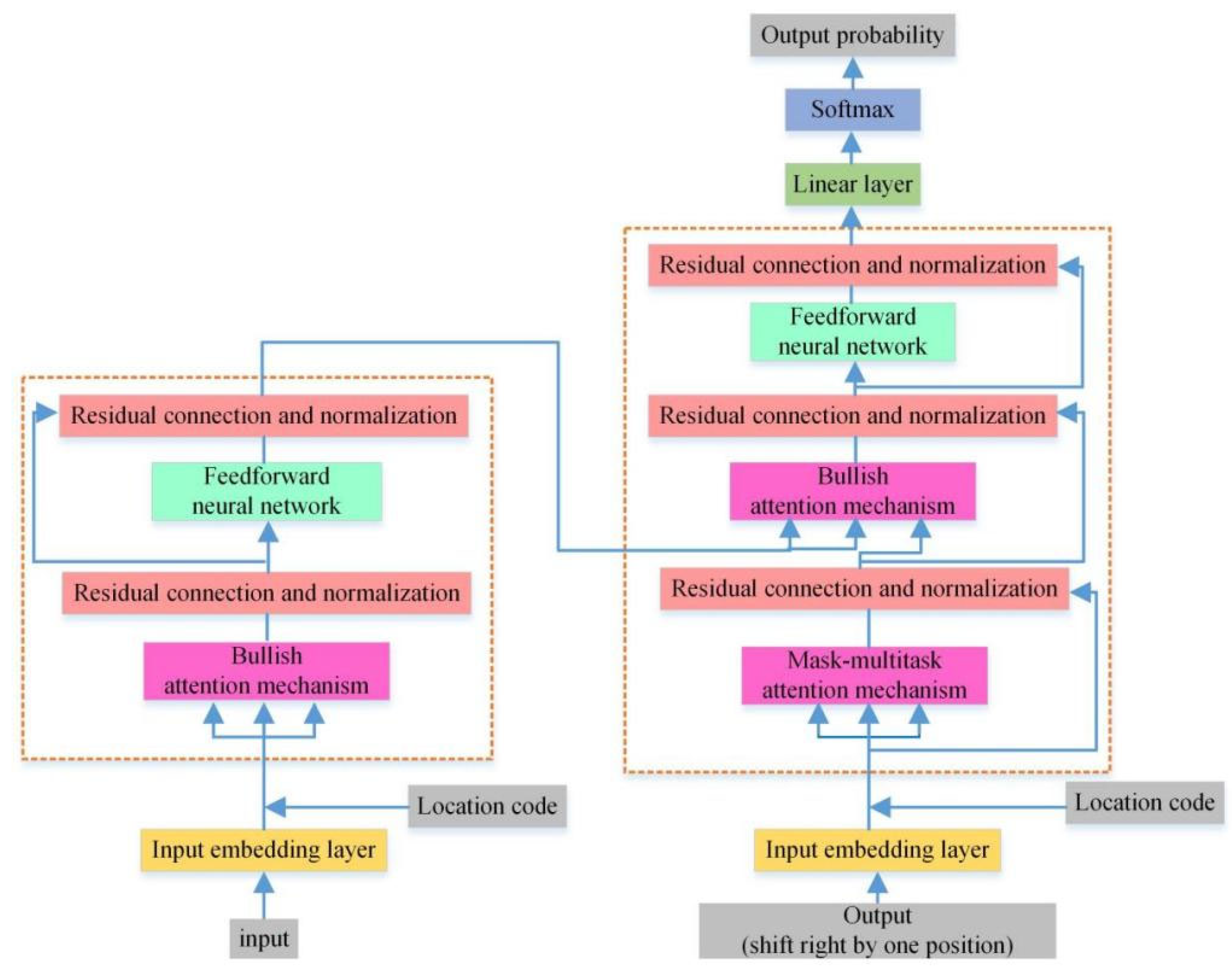

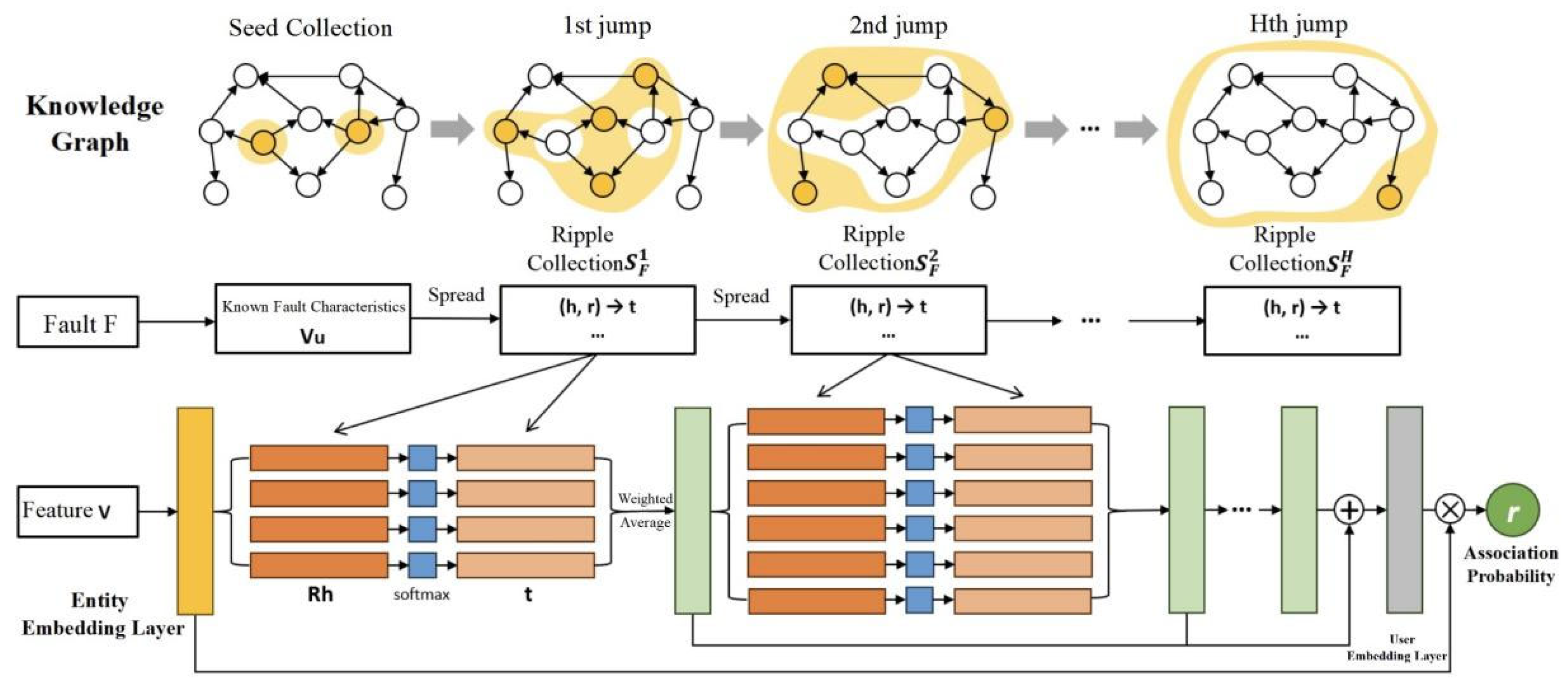

3.4. Preference Propagation

In Ripplenet, a preference propagation technique is used to measure the relationship between fault F and feature V. Feature V in Figure 4 can be any fault feature, such as the fault type, cause, or location. Given the embedding $\mathbf{v} \in \mathbb{R}^d$ of fault feature V and the first-hop ripple set $S_F^1$ of fault F, each triple $(h_i, r_i, t_i)$ in $S_F^1$ is assigned an association probability by comparing feature V with the head entity $h_i$ and the relation $r_i$ of the triple. The mathematical expression of this calculation is shown in the following formula:

$$p_i = \operatorname{softmax}\left(\mathbf{v}^{\mathrm{T}} \mathbf{R}_i \mathbf{h}_i\right) = \frac{\exp\left(\mathbf{v}^{\mathrm{T}} \mathbf{R}_i \mathbf{h}_i\right)}{\sum_{(h, r, t) \in S_F^1} \exp\left(\mathbf{v}^{\mathrm{T}} \mathbf{R} \mathbf{h}\right)} \tag{7}$$

where $\mathbf{R}_i \in \mathbb{R}^{d \times d}$ and $\mathbf{h}_i \in \mathbb{R}^d$ are the embeddings of relation $r_i$ and head entity $h_i$, respectively, and d is the dimension of the vector or matrix after entity embedding. The association probability $p_i$ can be regarded as the similarity between feature V and entity $h_i$ in the space $\mathbf{R}_i$ describing the relation.

After obtaining the association probabilities, the vector $\mathbf{o}_F^1$ is calculated as the sum of the tail entity embeddings weighted by the corresponding probabilities:

$$\mathbf{o}_F^1 = \sum_{(h_i, r_i, t_i) \in S_F^1} p_i \mathbf{t}_i \tag{8}$$

where $\mathbf{t}_i \in \mathbb{R}^d$ is the embedding vector of the tail entity $t_i$ of the ripple set triple. Vector $\mathbf{o}_F^1$ is called the first-order response of fault F to fault feature V. Based on Formulas (7) and (8), the calculation of fault-related features is transferred from the seed set $\mathcal{E}_F^0$ to the first-hop-related entity set $\mathcal{E}_F^1$ along the relationship connections in $S_F^1$. As k increases, this calculation continues to transfer from $\mathcal{E}_F^{k-1}$ to $\mathcal{E}_F^k$. This is the preference propagation in Ripplenet. In this process, the algorithm captures the responses of related entities to fault F in sequence.

When k = H, preference propagation ends, and the embedding of fault F with respect to feature V is calculated by combining all the above responses, as shown in the following formula:

$$\mathbf{F} = \mathbf{o}_F^1 + \mathbf{o}_F^2 + \cdots + \mathbf{o}_F^H \tag{9}$$

Finally, the inner product of the embedding of fault F output by the user embedding layer and the embedding of feature V output by the entity embedding layer is taken to obtain the predicted association probability:

$$\hat{r}_{FV} = \sigma\left(\mathbf{F}^{\mathrm{T}} \mathbf{v}\right) \tag{10}$$

In the formula, $\sigma$ is the sigmoid function, that is, $\sigma(x) = \dfrac{1}{1 + \exp(-x)}$.
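The preference propagation steps described in this section can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' implementation: it assumes each relation is embedded as a d × d matrix and each entity as a length-d vector, as in the published Ripplenet model, keeps the feature embedding fixed across hops, and uses hand-picked toy embeddings rather than trained ones. The helper names `dot`, `matvec`, `hop_response`, and `predict` are hypothetical.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def matvec(matrix, vector):
    """Matrix-vector product for a nested-list matrix."""
    return [dot(row, vector) for row in matrix]


def hop_response(v, triples):
    """One hop of preference propagation.

    v       : embedding of the fault feature V (length d)
    triples : list of (h, R, t) embeddings for one ripple set, where
              h and t are length-d vectors and R is a d x d matrix
    Returns (o, p): the response vector and the association probabilities.
    """
    logits = [dot(v, matvec(R, h)) for h, R, _ in triples]
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    p = [e / total for e in exps]
    # Probability-weighted sum of the tail embeddings.
    o = [sum(p_i * t[k] for p_i, (_, _, t) in zip(p, triples))
         for k in range(len(v))]
    return o, p


def predict(v, ripple_sets):
    """Sum the responses of all hops and apply the sigmoid."""
    fault = [0.0] * len(v)
    for triples in ripple_sets:
        o, _ = hop_response(v, triples)
        fault = [f + x for f, x in zip(fault, o)]
    return 1.0 / (1.0 + math.exp(-dot(fault, v)))


# Toy example with d = 2 and an identity relation matrix (assumed values).
identity = [[1.0, 0.0], [0.0, 1.0]]
v = [1.0, 0.0]
hop1 = [
    ([1.0, 0.0], identity, [2.0, 0.0]),   # head aligned with v
    ([-1.0, 0.0], identity, [0.0, 1.0]),  # head opposed to v
]
o1, p1 = hop_response(v, hop1)
print("probabilities:", p1)
print("first-order response:", o1)
print("predicted association:", predict(v, [hop1]))
```

The triple whose head entity aligns with the feature embedding receives the larger probability, so its tail dominates the response vector; this weighting is what makes the propagation path inspectable.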

3.6. Case Analysis

The environment used in this chapter is Windows 10 with CUDA 11.7. The CPU is an Intel(R) Core(TM) i5-10600KF @ 4.10 GHz, the machine has 16 GB of RAM, and the graphics card is an NVIDIA GeForce RTX 3070 with 8 GB of memory. The program is based on Python 3.9 and PyTorch 1.13.0.

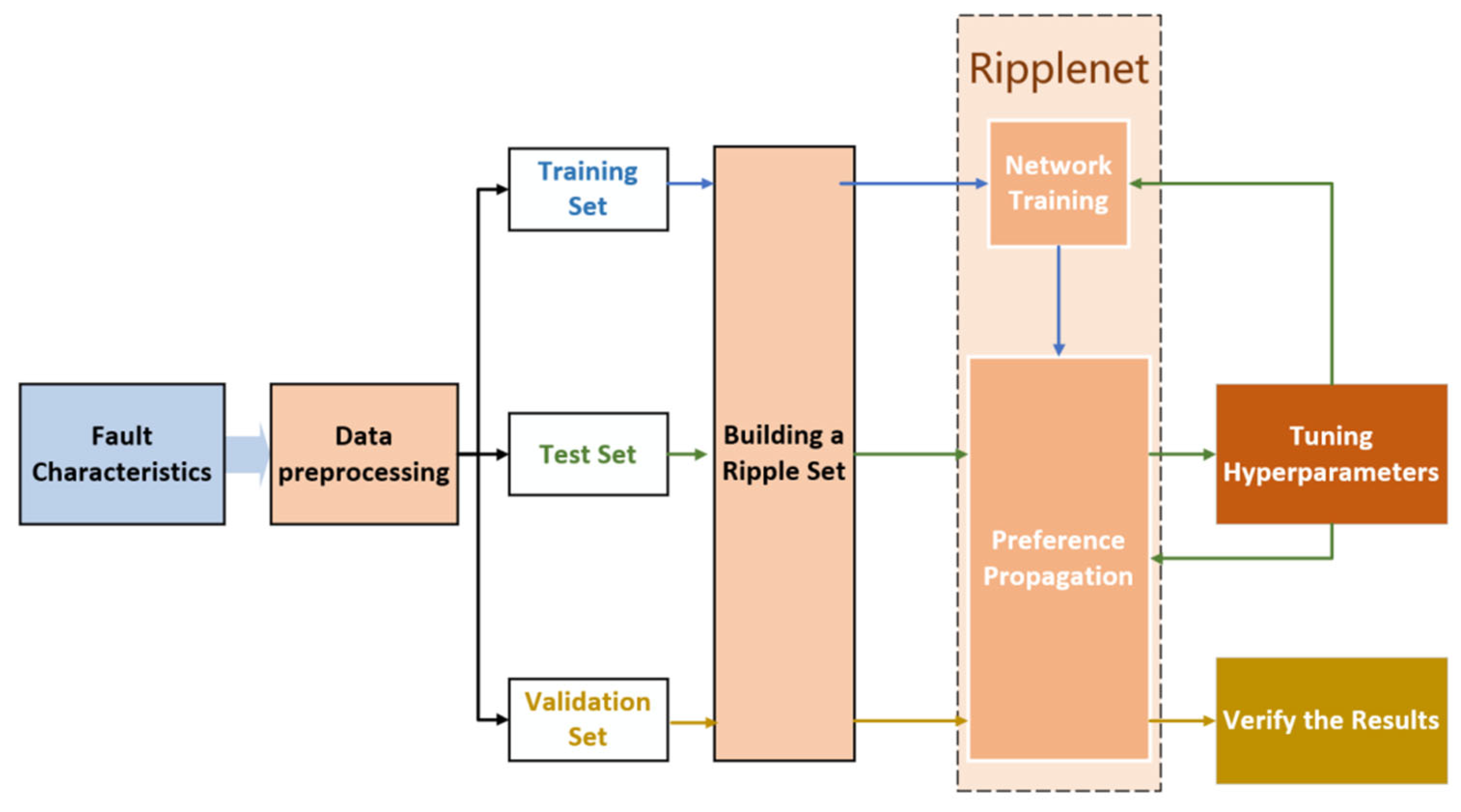

In the process of constructing subset faults, 30 random captures were performed on the faults in each fault ontology library, and finally a fault feature association dataset of 9500 groups and 328,474 rows was obtained. The dataset was randomly divided into a training set, a validation set, and a test set in a ratio of 6:2:2. The training set is used to train the network parameters, and the training set and validation set are used together to tune the network hyperparameters. The test set does not participate in this process and is used only for the final evaluation of the model.
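A 6:2:2 random split like the one described above can be sketched as follows. The function name, the fixed seed, and the choice to split at row level are assumptions for illustration; the paper does not say whether the split is made over rows or over fault groups.

```python
import random


def split_dataset(rows, parts=(6, 2, 2), seed=42):
    """Shuffle rows and split them into train/validation/test subsets.

    `parts` gives the integer ratio (6:2:2 here). Integer arithmetic is
    used for the boundaries, and any rounding remainder goes to the test set.
    """
    rows = list(rows)
    random.Random(seed).shuffle(rows)  # seeded for reproducibility
    total_parts = sum(parts)
    n_train = len(rows) * parts[0] // total_parts
    n_val = len(rows) * parts[1] // total_parts
    train = rows[:n_train]
    val = rows[n_train:n_train + n_val]
    test = rows[n_train + n_val:]
    return train, val, test


# Row indices stand in for the 328,474 fault feature association rows.
train, val, test = split_dataset(range(328474))
print(len(train), len(val), len(test))
```

Keeping the test subset out of every tuning step is what allows the final accuracy to be read as an estimate of performance on unseen faults.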

Table 5 shows the class-level F1-scores and an excerpt of the confusion matrix on the test set. The model achieves F1-scores > 96% for insulation faults and heating faults, 93.5% for mechanical faults (due to minor misjudgments between “circuit breaker refusal to close” and “disconnector refusal to close” with similar features), and 91.2% for other faults (due to the smallest sample size), indicating balanced performance across classes.

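Accuracy (ACC) is the ratio of correct judgments to the total number of judgments. With TP, TN, FP, and FN denoting the numbers of true positives, true negatives, false positives, and false negatives, respectively, the calculation formula is as follows:

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN}$$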

The accuracy rate ranges from 0 to 1. The larger the value, the better the classification effect of the algorithm.

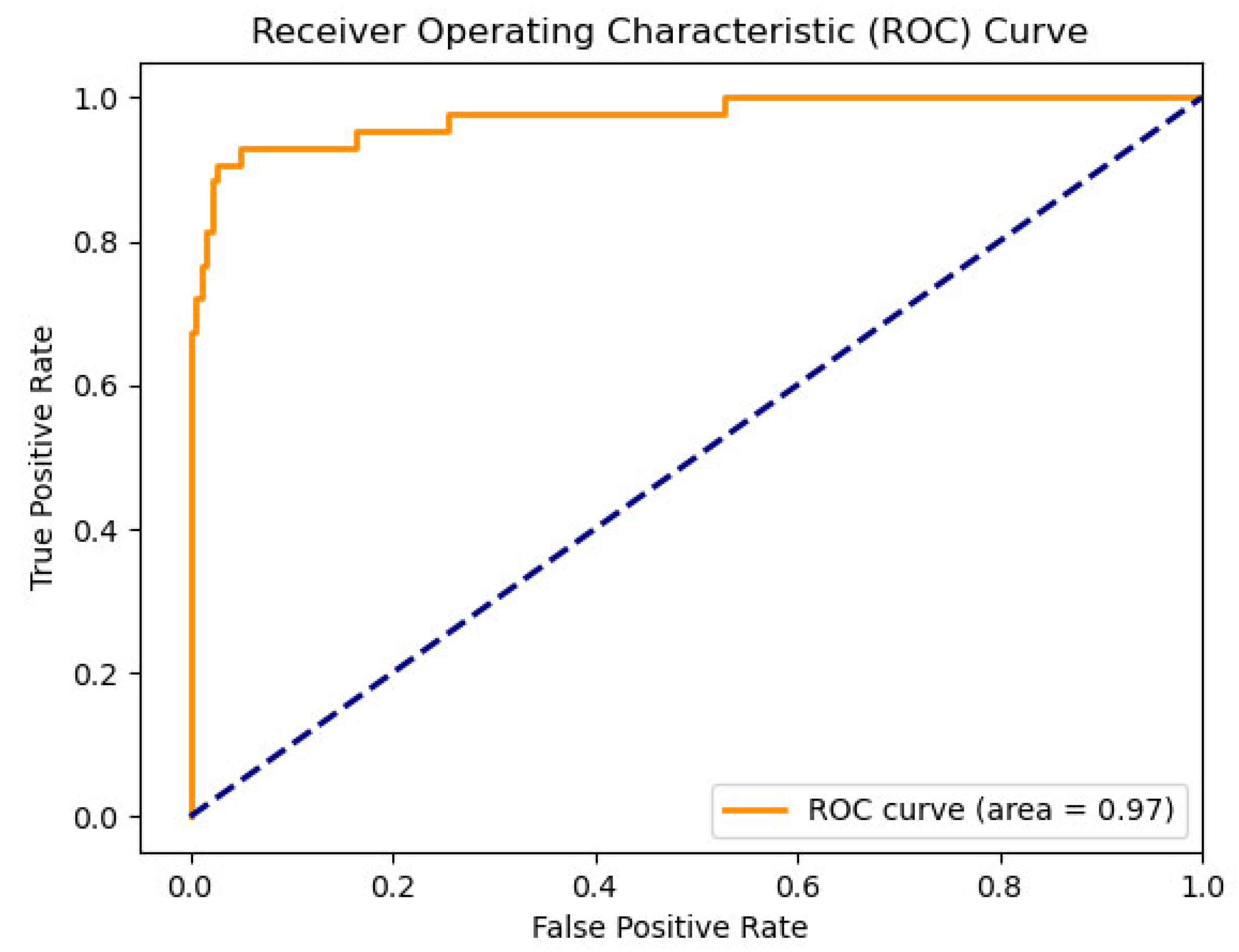

The receiver operating characteristic (ROC) curve plots the results obtained under different decision thresholds for a given model. Figure 7 shows the ROC curve produced by the model in the last round of training. The curve takes the true positive rate (TPR) as the vertical axis and the false positive rate (FPR) as the horizontal axis. With TP, FN, FP, and TN denoting the numbers of true positives, false negatives, false positives, and true negatives, the calculation formulas of the two are as follows:

$$\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN}$$

AUC stands for Area Under the ROC Curve. It indicates the model’s ability to rank positive samples ahead of negative samples and intuitively represents its ability to identify positive samples. The AUC value ranges from 0 to 1. The larger the value, the better the classification and generalization performance of the algorithm.
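The evaluation metrics used in this section can be computed with a short sketch such as the one below. It uses the standard definitions of ACC, TPR, and FPR and computes AUC directly from its ranking interpretation (the fraction of positive/negative pairs in which the positive sample scores higher). The labels and scores are made-up values for illustration, and the decision threshold of 0.5 is an assumption.

```python
def confusion_counts(y_true, y_score, threshold=0.5):
    """Count TP, FP, TN, FN for binary labels at a given score threshold."""
    tp = fp = tn = fn = 0
    for label, score in zip(y_true, y_score):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    return (tp + tn) / (tp + fp + tn + fn)


def true_positive_rate(tp, fn):
    return tp / (tp + fn)


def false_positive_rate(fp, tn):
    return fp / (fp + tn)


def auc(y_true, y_score):
    """AUC as the probability that a positive outranks a negative.

    Ties count as half a win. This pairwise form is O(n_pos * n_neg),
    which is fine for a sketch but slow for large datasets.
    """
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical association labels (1 = feature belongs to the fault)
# and predicted association probabilities.
y_true = [1, 1, 1, 0, 0, 0]
y_score = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]

tp, fp, tn, fn = confusion_counts(y_true, y_score)
print("ACC:", accuracy(tp, fp, tn, fn))
print("TPR:", true_positive_rate(tp, fn))
print("FPR:", false_positive_rate(fp, tn))
print("AUC:", auc(y_true, y_score))
```

Unlike ACC, the AUC value does not depend on the chosen threshold, which is why the two metrics are reported together.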

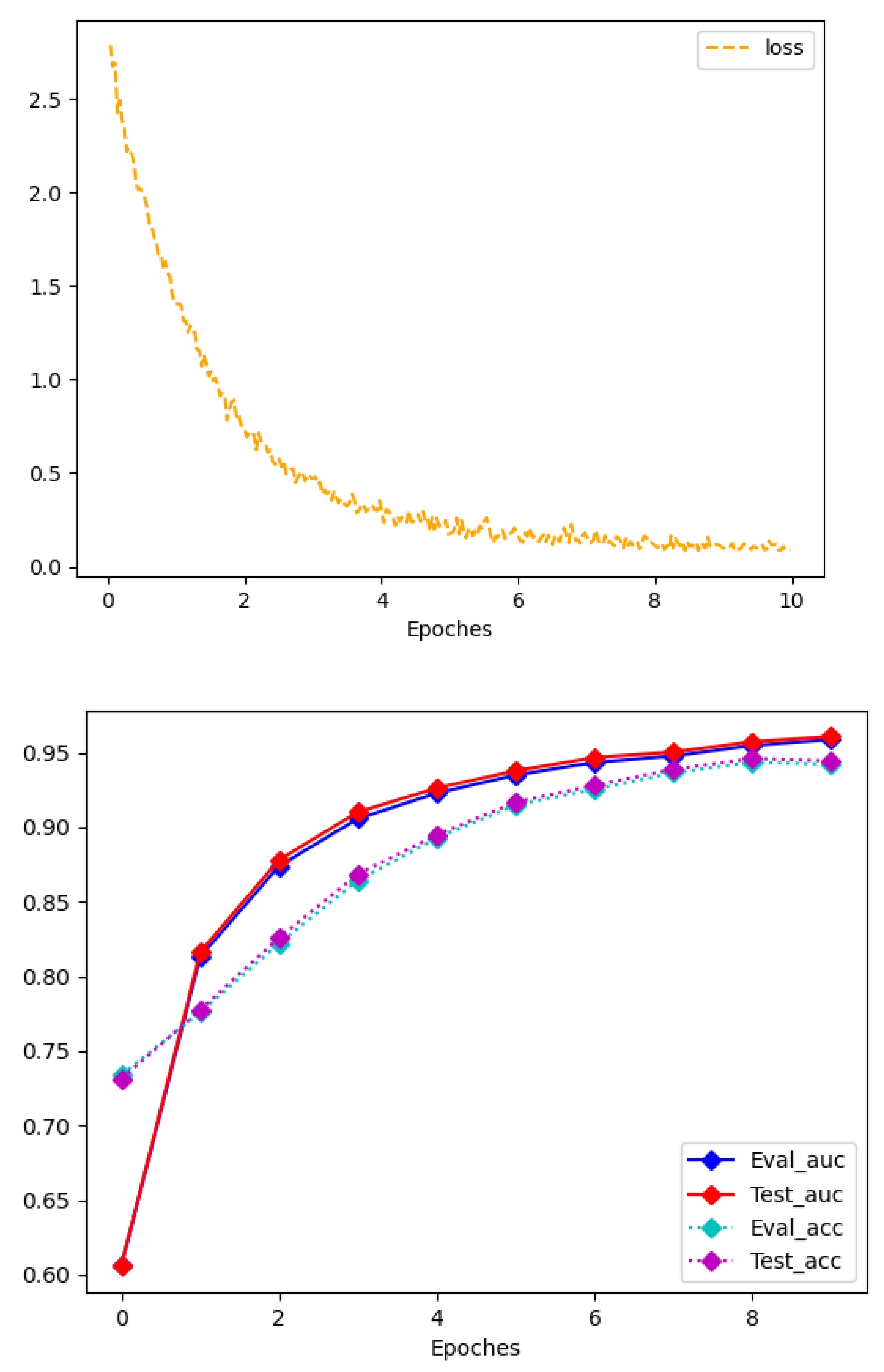

The relationship between the accuracy and AUC on the test set, the model training loss, and the number of training rounds (epochs) is shown in Figure 8. It can be seen that the accuracy and AUC of the Ripplenet-based intelligent operation and maintenance auxiliary method for high-voltage switchgear gradually increase with the number of training rounds, while the model training loss steadily decreases and converges around the 9th training round. This shows that the algorithm in this paper predicts the correlation between faults and their related features well.

In deep learning training, small changes in hyperparameters have an increasing impact on the results as training deepens. After screening, the hyperparameters selected in this paper are shown in Table 6. With this hyperparameter setting, the accuracy of the algorithm in this paper reaches 94.74% on the test set.

To verify the performance of the model, we further compared it with collaborative filtering (CF) and GNN-based methods (GCN, GAT). This paper adopts a user-based collaborative filtering algorithm, which finds other users with preferences similar to those of the target user and recommends items based on the behavior of those similar users. This method is widely used in practical recommendation systems, but it is not combined with knowledge graph technology. After training on the dataset constructed in this paper, the accuracy and AUC of the compared algorithms and of the algorithm used in this paper are shown in Table 7.

Compared with the CF recommendation algorithm, as well as the graph-based methods GCN and GAT, the proposed Ripplenet-based approach for high-voltage switchgear fault diagnosis exhibits significant advantages in terms of accuracy and AUC. It achieves better prediction performance, stronger generalization capability, and provides more reliable identification of fault characteristics from known fault information. Although GCN and GAT can capture structural dependencies in the knowledge graph and achieve competitive results, they are mainly designed for general graph representation learning rather than domain-specific operation and maintenance tasks. Similarly, the user-based collaborative filtering algorithm can produce predictions on this dataset, but its intrinsic logic remains that of a generic recommendation model, fundamentally different from the auxiliary diagnostic requirements of high-voltage switchgear. Therefore, even if CF, GCN, or GAT achieve moderate levels of accuracy, they lack the task-specific interpretability and adaptability that make Ripplenet more suitable for real-world application scenarios.

Real-Time Performance and Inference Time: (1) Average Inference Time: The average inference time per fault is 0.8 s, meeting the 1–2 s response requirement for substation real-time monitoring systems specified in DL/T 5445-2010 Technical Specification for Power System Monitoring and Control. (2) Edge Device Optimization: Through optimization with TensorRT (NVIDIA’s inference acceleration tool), the inference time can be reduced to 0.3 s while maintaining an accuracy > 95%.

Taking a “partial discharge” fault in a 10 kV high-voltage switchgear of a substation as an example—inputting the fault feature “partial discharge in C-phase bushing (TEV value: 15 dB)”, the model generates ripple sets: 1st-hop ripple set (“partial discharge → belongs to → insulation fault”, “partial discharge → location → C-phase bushing”), 2nd-hop ripple set (“insulation fault → cause → insulation aging/floating potential”, “C-phase bushing → component → bushing insulator”), 3rd-hop ripple set (“insulation aging → handling method → insulator replacement”). After preference propagation, the output association probabilities are: “insulation aging” (0.92), “floating potential” (0.65), and “insulator replacement” (0.90).

As described above, both the number of hops in the ripple set and the number of nodes randomly captured in each hop's ripple set affect the diagnostic performance of Ripplenet. The following sections therefore propose several improvements to the construction of the Ripplenet dataset and explore these settings to verify their effect and obtain the best performance.

3.7. Ripple Set Maximum Hop Count Optimization

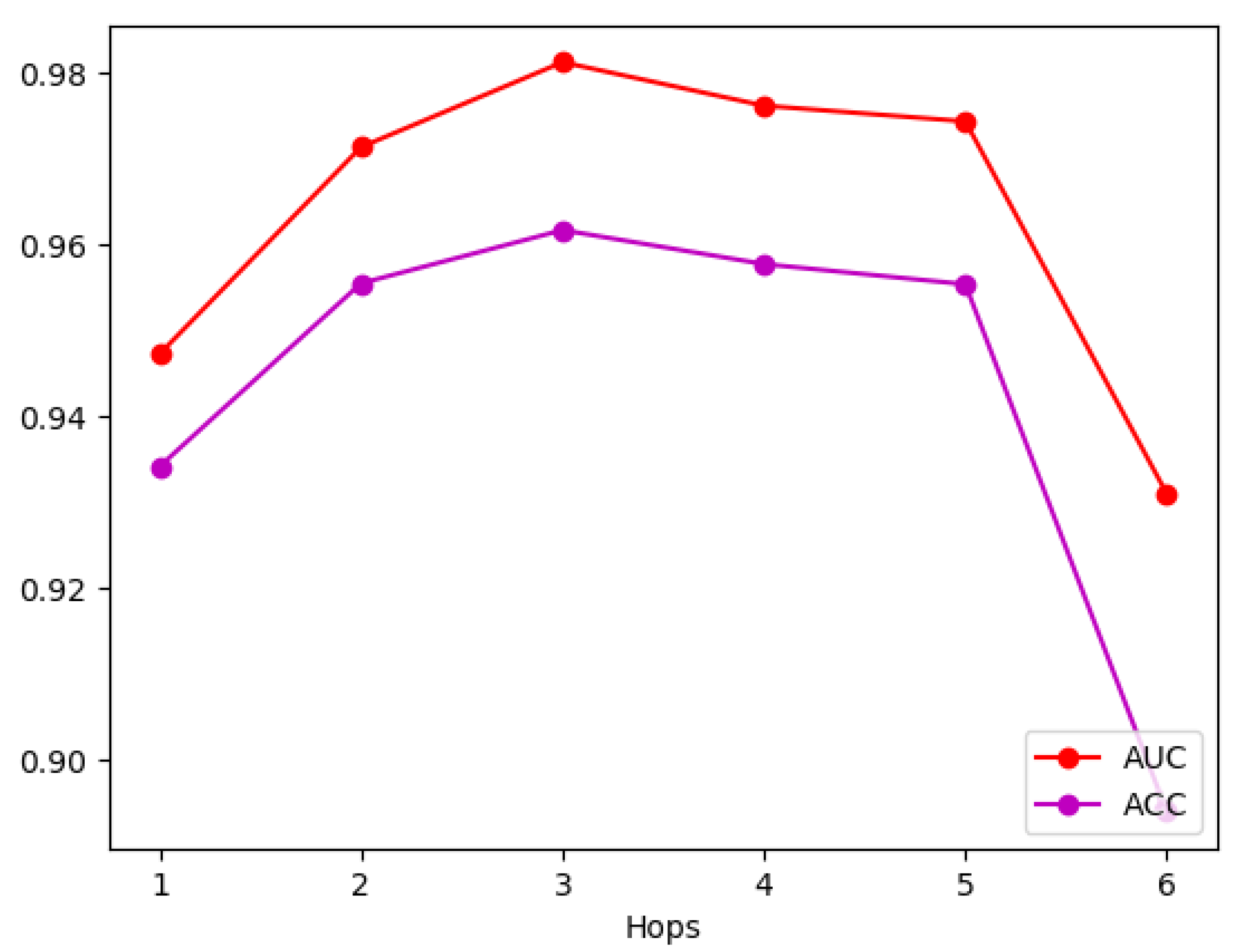

To explore the impact of the maximum hop count (Hops, H) on the diagnostic accuracy of Ripplenet, this paper trained the network with H set from 1 to 6 while keeping the other network parameters unchanged. Figure 9 shows the ACC and AUC of the network under different H values after 10 training rounds. As the maximum number of hops increases, the overall accuracy of the algorithm first rises and then falls. The best result is obtained when the number of hops equals 3, at which point the AUC is close to 98% and the ACC is close to 96%. As the number of hops increases further, the diagnostic performance of the network begins to decline; in particular, when Hops = 6, the diagnostic accuracy is below 90%.

The analysis shows that when Hops = 1 or 2, preference propagation starting from the known fault features reaches only a small part of the nodes in the graph, which is determined by the graph structure and the node types of the fault features. In this case, the “ripples” emitted by most seed-set nodes end before reaching the fault type node corresponding to the fault, so the diagnostic accuracy is insufficient. When Hops = 3, the “ripples” of most seed nodes just reach the fault type node, and owing to the “interference” effect produced during preference propagation, these third-hop nodes are not ignored despite their distance from the seed nodes. When Hops is greater than 3, most ripple sets of the seed nodes have crossed the fault type node and propagated to other fault feature nodes along the various relationships connected to it, which feeds redundant information into the network reasoning, so the reasoning accuracy begins to decline. This is especially true when Hops = 6: the number of hops is then exactly twice the optimal value, and the frontier of preference propagation has even returned from the fault type node to the seed-set nodes. It can be foreseen that as Hops continues to increase, the redundant information will grow further and the diagnostic accuracy will continue to decline. In summary, Hops = 3 is the best choice for the dataset constructed in this paper and the recommendation algorithm used.

3.8. Optimization of the Maximum Number of Nodes in a Single-Hop Ripple Set

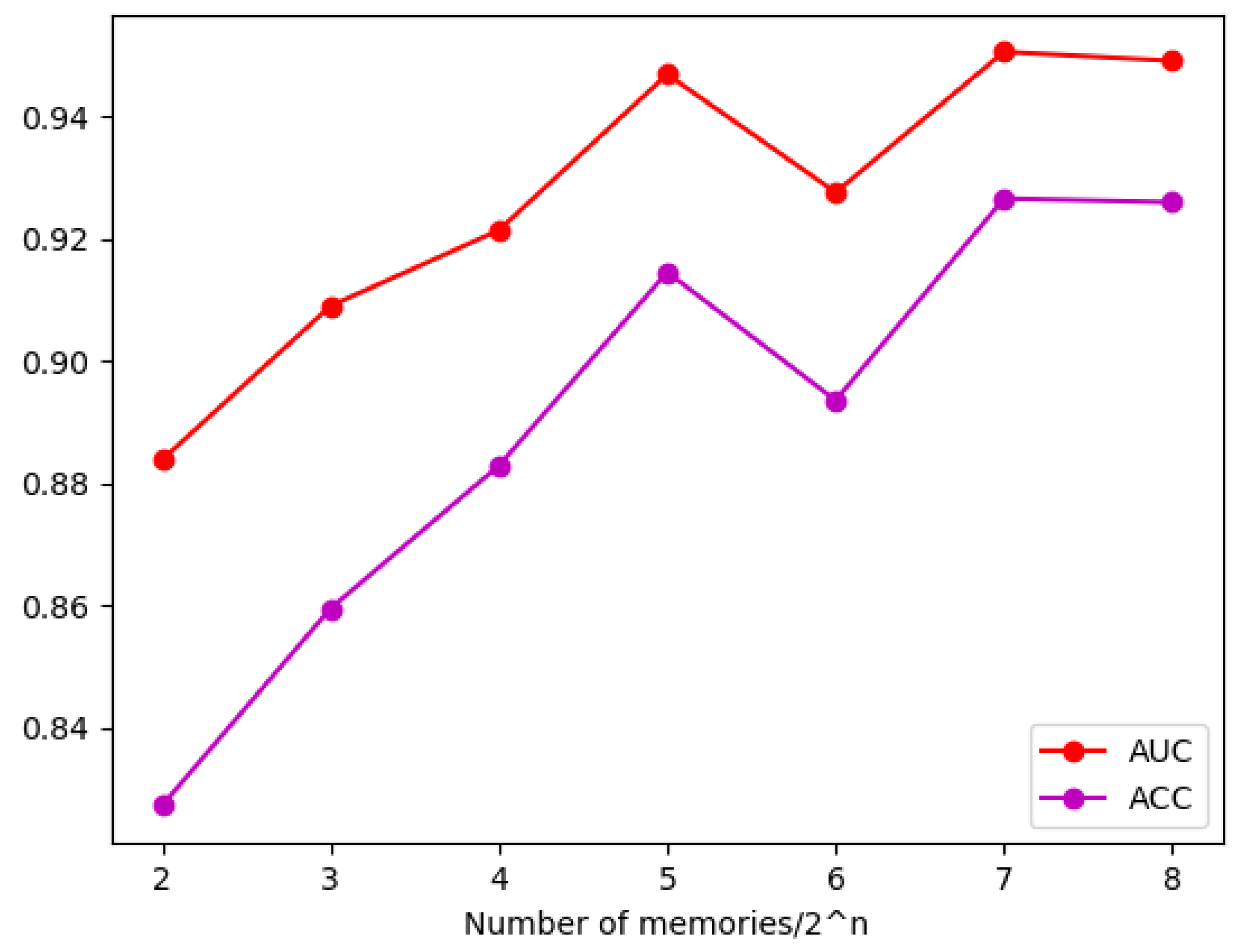

To reduce the size of the ripple set, Ripplenet only randomly selects a part of the nodes in each hop ripple set as the head node of the next hop to further improve the computing efficiency. For this reason, the maximum number of nodes in a single-hop ripple set is set in the network. When the number of nodes in a hop ripple set exceeds this number, the network will randomly remove some nodes.
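The hop-by-hop construction and size cap described above can be sketched as follows. This is a simplified illustration and not the authors' code: the function name and parameters are hypothetical, the cap is applied by sampling triples without replacement, and the small graph reuses the entities and relations from the partial discharge case in Section 3.6.

```python
import random
from collections import defaultdict


def build_ripple_sets(triples, seeds, max_hops=3, max_nodes=128, seed=0):
    """Build one ripple set (a list of triples) per hop.

    triples   : iterable of (head, relation, tail) strings
    seeds     : known fault features used as the starting nodes
    max_hops  : maximum hop count H
    max_nodes : cap on the size of each hop's ripple set; when a hop
                exceeds it, a random subset is kept
    """
    rng = random.Random(seed)
    by_head = defaultdict(list)
    for h, r, t in triples:
        by_head[h].append((h, r, t))

    ripple_sets = []
    frontier = list(dict.fromkeys(seeds))  # de-duplicate, keep order
    for _ in range(max_hops):
        hop = [tr for head in frontier for tr in by_head.get(head, [])]
        if len(hop) > max_nodes:
            hop = rng.sample(hop, max_nodes)  # random screening
        ripple_sets.append(hop)
        # Tails of this hop become the heads of the next hop.
        frontier = sorted({t for _, _, t in hop})
    return ripple_sets


# Triples taken from the partial discharge case analysis.
kg = [
    ("partial discharge", "belongs to", "insulation fault"),
    ("partial discharge", "location", "C-phase bushing"),
    ("insulation fault", "cause", "insulation aging"),
    ("insulation fault", "cause", "floating potential"),
    ("C-phase bushing", "component", "bushing insulator"),
    ("insulation aging", "handling method", "insulator replacement"),
]

sets = build_ripple_sets(kg, seeds=["partial discharge"], max_hops=3)
for k, hop in enumerate(sets, start=1):
    print(f"hop {k}: {len(hop)} triples")
```

With a small cap, whole branches of the graph can be dropped at an early hop and never recovered later, which is one plausible reason accuracy depends on this parameter.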

To explore this setting and optimize the reasoning performance of Ripplenet, this paper sets the maximum number of nodes retained in each ripple set to 4, 8, 16, 32, 64, 128, and 240 (the total number of nodes in the graph, which is equivalent to no node screening). To make the effect of this parameter more visible, the other hyperparameters remain unchanged and the maximum number of hops of the ripple set is set to Hops = 6. Figure 10 shows the ACC and AUC of the network under the different ripple set sizes after 10 training rounds. When the ripple set size is set to 64 and 128, the network performs well: the AUC of both networks is above 94%, and the ACC is about 91.2% and 92.1%, respectively. In terms of performance, a maximum of 128 ripple set nodes is the best choice.

In general, as the maximum number of nodes in the ripple set increases, the accuracy of the algorithm gradually increases toward an upper bound. However, when the maximum size of a single ripple set is set to 64, both the accuracy and AUC show a significant decrease. After repeatedly changing other hyperparameters and retraining the algorithm, the algorithm still shows varying degrees of performance degradation at this setting. Preliminary analysis suggests that this may be related to the structure of the knowledge graph and the internal structure of Ripplenet, but since the purpose of this paper is to find the best-performing parameters, this is not studied in depth.

3.9. Whether to Add Single Fault Features to the Dataset

In the data preprocessing phase, this paper adds negative correlation data for all non-related fault types to the dataset in order to improve the network’s sensitivity to fault types. To verify the effect of this improvement, this paper retrained the Ripplenet model on data without negative correlation labels for fault types, with all hyperparameters unchanged. The comparison of the AUC and ACC after training with those of the original model is shown in Table 8. The remaining parameters of the algorithm are set to their optimal values.
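The negative correlation labeling step can be illustrated with the following sketch. The function name, the fault identifiers `F1` and `F2`, and the row layout (fault, fault type, label) are assumptions made for illustration; the four fault categories are those used in the dataset description.

```python
def label_fault_types(fault_to_types, all_types, with_negatives=True):
    """Create (fault, fault_type, label) rows.

    A label of 1 marks a fault type that applies to the fault. When
    with_negatives is True, every other fault type is added with label 0,
    so the model also sees explicit "not associated" examples.
    """
    rows = []
    for fault, true_types in fault_to_types.items():
        for fault_type in all_types:
            label = 1 if fault_type in true_types else 0
            if label == 1 or with_negatives:
                rows.append((fault, fault_type, label))
    return rows


all_types = ["insulation", "mechanical", "heating", "other"]

# Hypothetical faults; F2 is a compound fault with two fault types.
fault_to_types = {
    "F1": {"insulation"},
    "F2": {"mechanical", "heating"},
}

with_neg = label_fault_types(fault_to_types, all_types, with_negatives=True)
without_neg = label_fault_types(fault_to_types, all_types, with_negatives=False)
print(len(with_neg), "rows with negative labels")
print(len(without_neg), "rows without negative labels")
```

The two variants correspond to the two training sets compared in this section: the first contains both positive and negative associations, while the second contains positives only.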

As shown in Table 8, training with negative correlation annotation gives better results than training without it. Analysis shows that, with negatively correlated fault nodes annotated, the algorithm maps fault types to other fault features more accurately and provides more accurate diagnosis results when judging the fault type of compound faults or of fault features that occur in multiple faults.

To further elaborate on the impact of negative association labeling beyond the overall ACC/AUC shown in Table 8, we quantified the changes in Precision (ability to avoid false positives) and Recall (ability to avoid false negatives) and analyzed the trade-off between them. The detailed results are shown in Table 9:

As shown in Table 9, negative association labeling raises Precision from 0.85 to 0.92 (+0.07) and Recall from 0.88 to 0.94 (+0.06). The slightly higher gain in Precision indicates that the model is more effective at reducing false positives; for example, the rate at which “mechanical jamming” (a mechanical fault) is misidentified as “insulation fault” dropped from 11.5% to 3.8%. Recall improves less, and the model misses 1.2% more edge cases such as “partial discharge with weak TEV signals”, but this trade-off is reasonable for power system O&M: avoiding unnecessary maintenance (caused by false positives) is more critical than completely eliminating rare missed diagnoses, which can be compensated by subsequent real-time monitoring.

Beyond the trade-off between Precision and Recall, negative association labeling also enhances the model’s ability to distinguish “similar but irrelevant fault features”, a detail not covered in Table 5. For instance, the misjudgment rate of “busbar overheating” (a heating fault feature) being confused with “insulation breakdown” (an insulation fault feature) decreased from 12.3% to 4.1%. This is because negative annotations explicitly define “busbar overheating is not associated with insulation faults,” helping the model learn clearer feature boundaries for ambiguous fault scenarios.

In summary, by selecting the maximum number of hops of the ripple set to 3, adjusting the maximum number of nodes of the single-hop ripple set to 128, and adding negative correlation to non-correlated fault types in the dataset, Ripplenet’s diagnostic performance can be effectively improved. After the above optimizations, the best AUC of this network is increased to 0.9858, and the best ACC is increased to 95.96%.