A Sketch-Based Cross-Modal Retrieval Model for Building Localization Without Satellite Signals

Abstract

1. Introduction

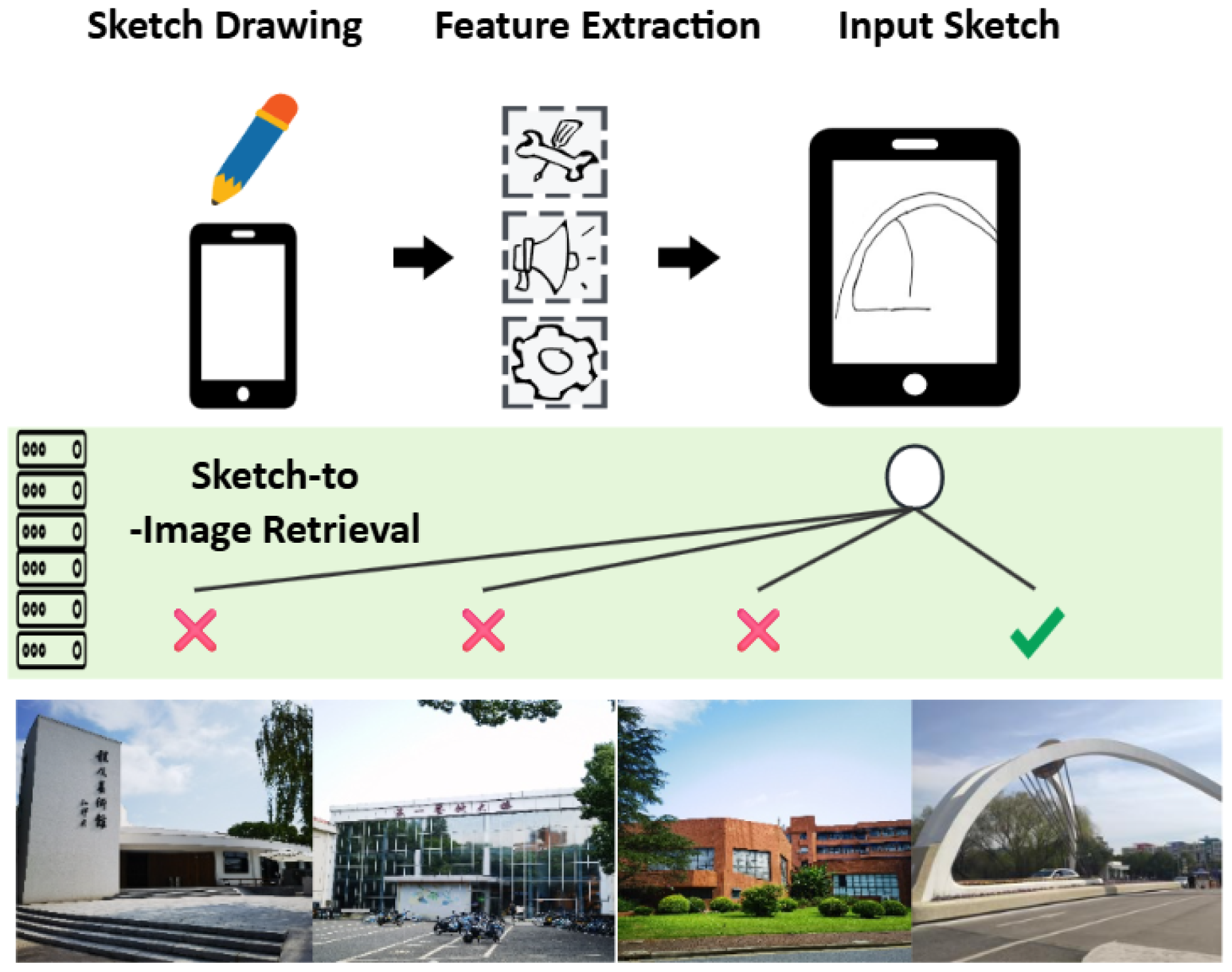

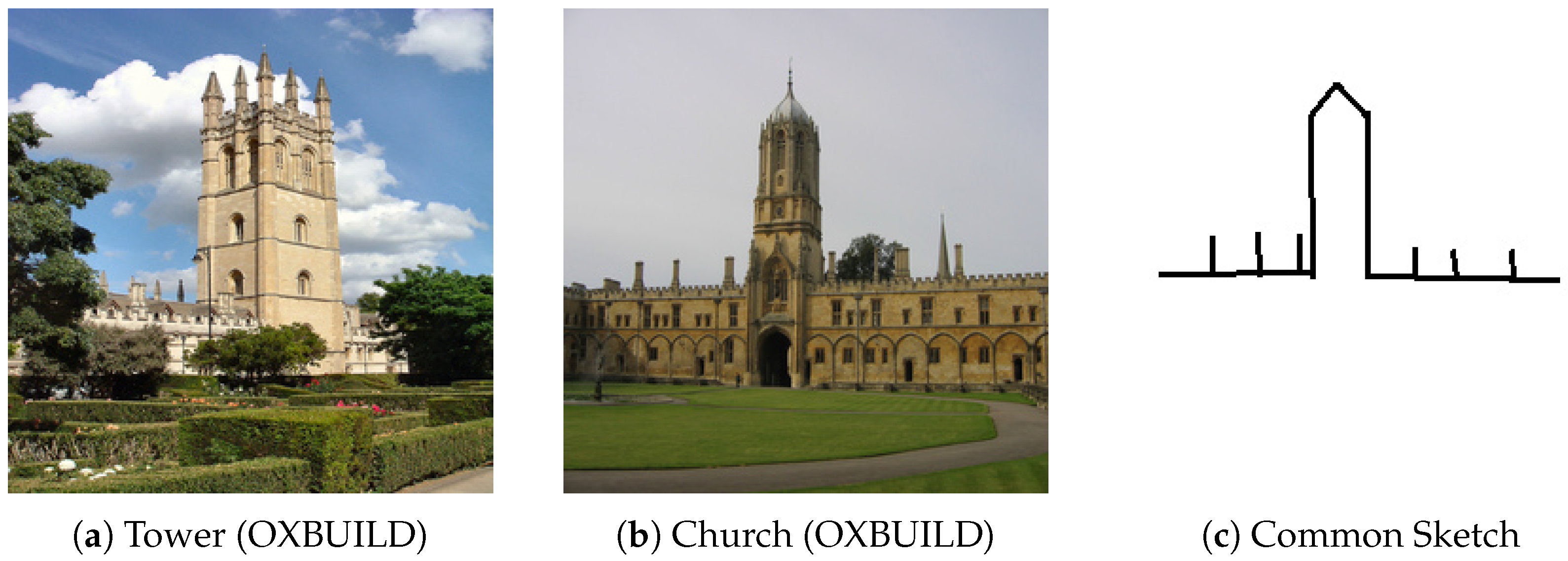

- A semantic localization method based on sketch retrieval is introduced for positioning and navigation without satellite signals. The method matches hand-drawn architectural sketches, produced by people with no drawing training, to real-world buildings. Its robustness and effectiveness are validated through retrieval accuracy experiments on multiple architectural datasets in Section 3.4.

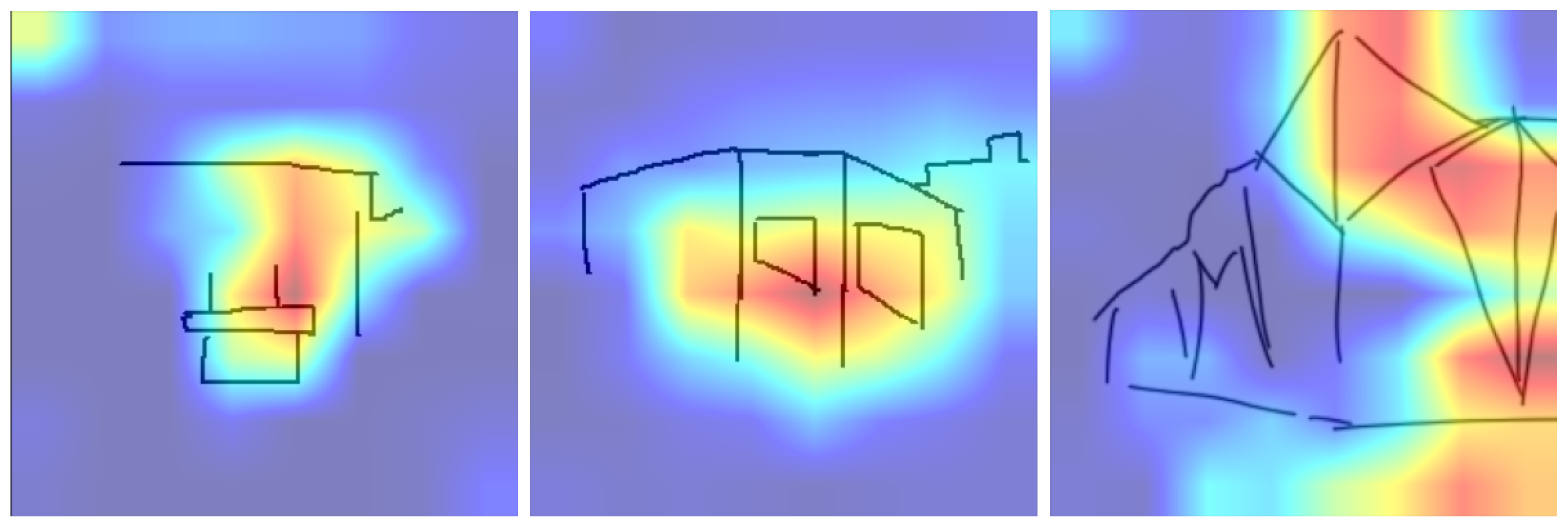

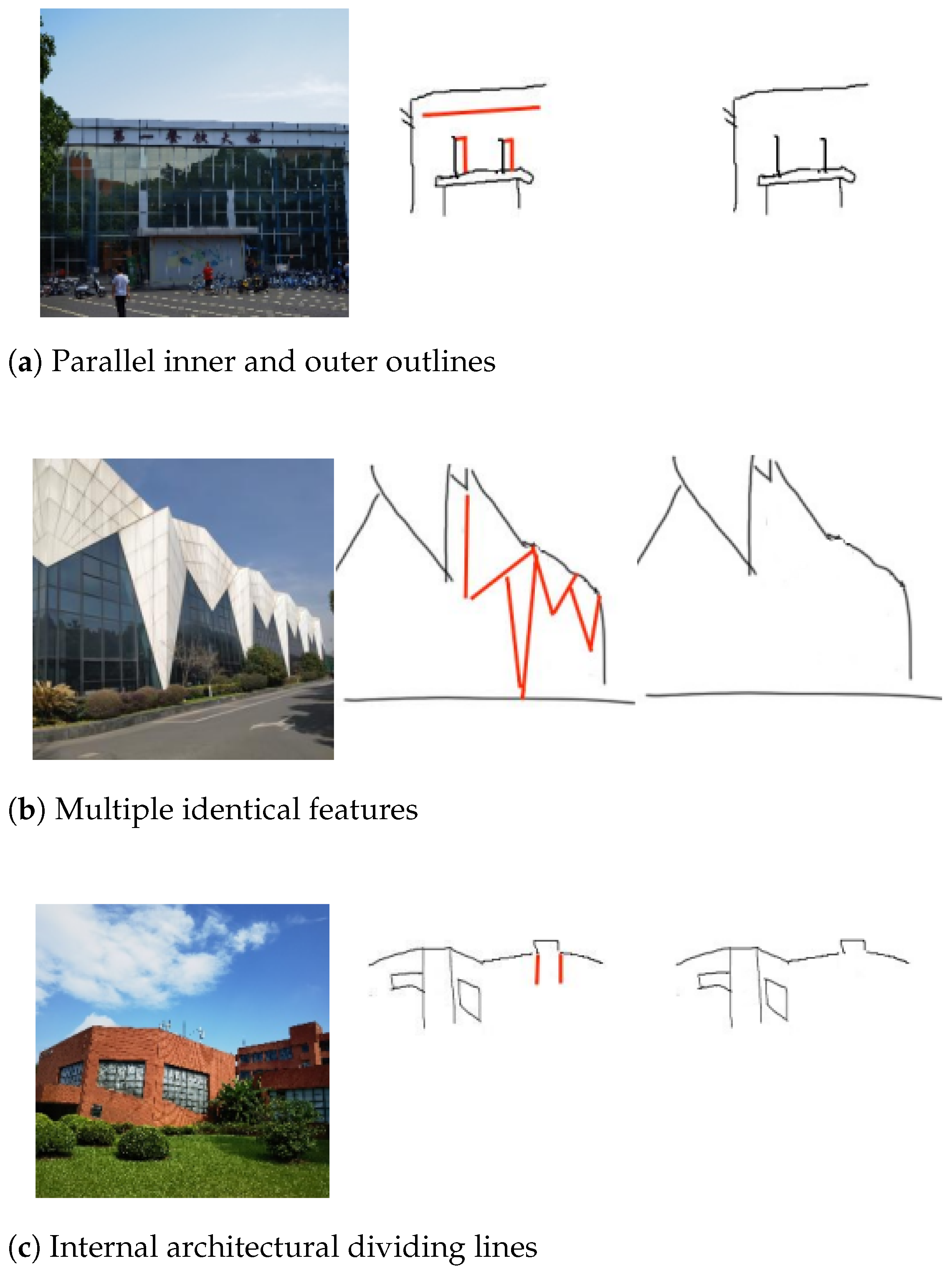

- To derive a set of universal sketching principles, sketch-original datasets covering various architectural styles were constructed and corresponding sketching experiments were carried out. The Grad-CAM method is used to visualize where the key lines lie, which allows the sketches to be simplified further. Three principles for retaining essential lines were then formulated from the distinctive line characteristics, and their impact on retrieval accuracy is demonstrated in the sketch simplification experiments of Section 3.4.

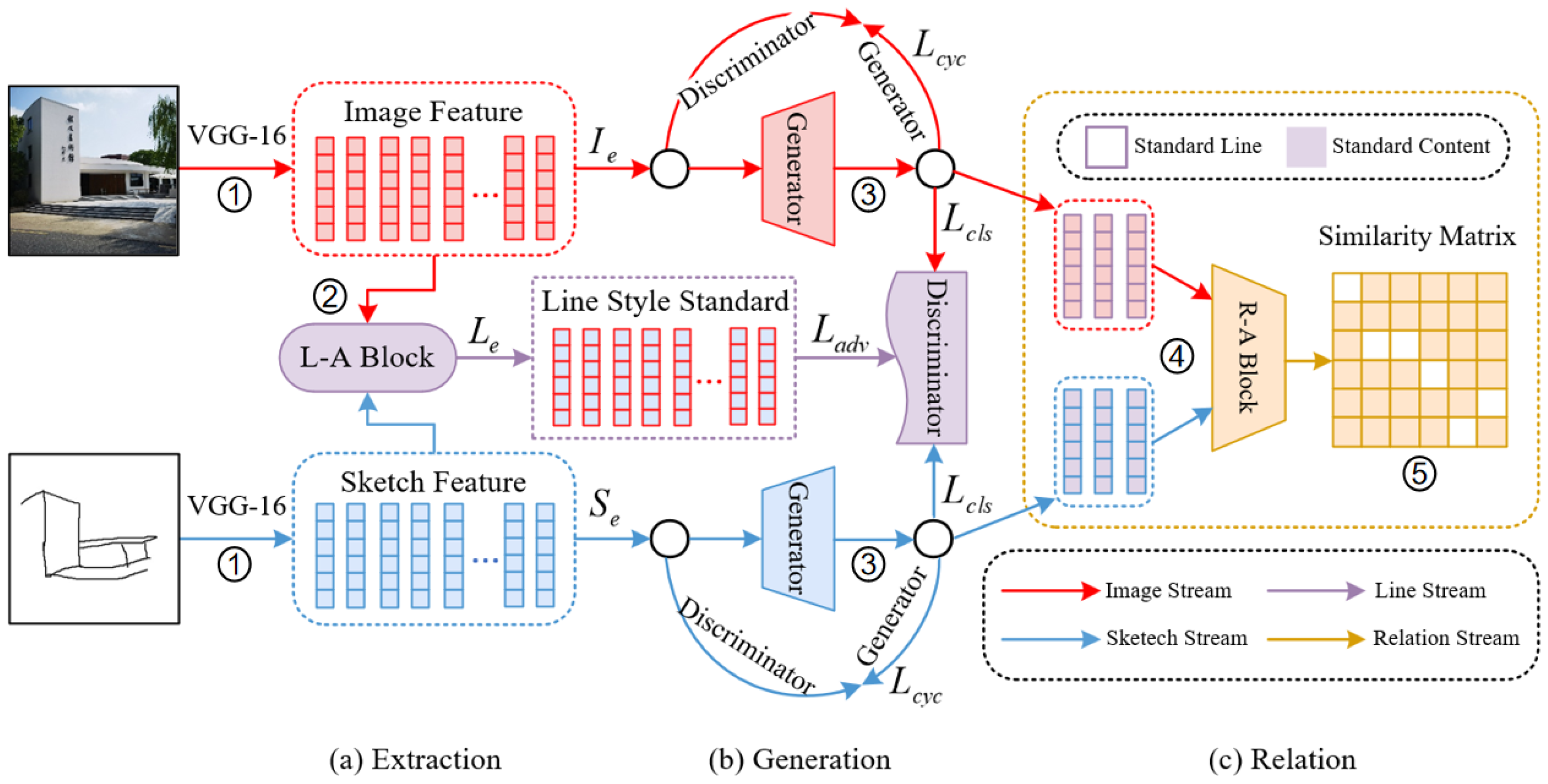

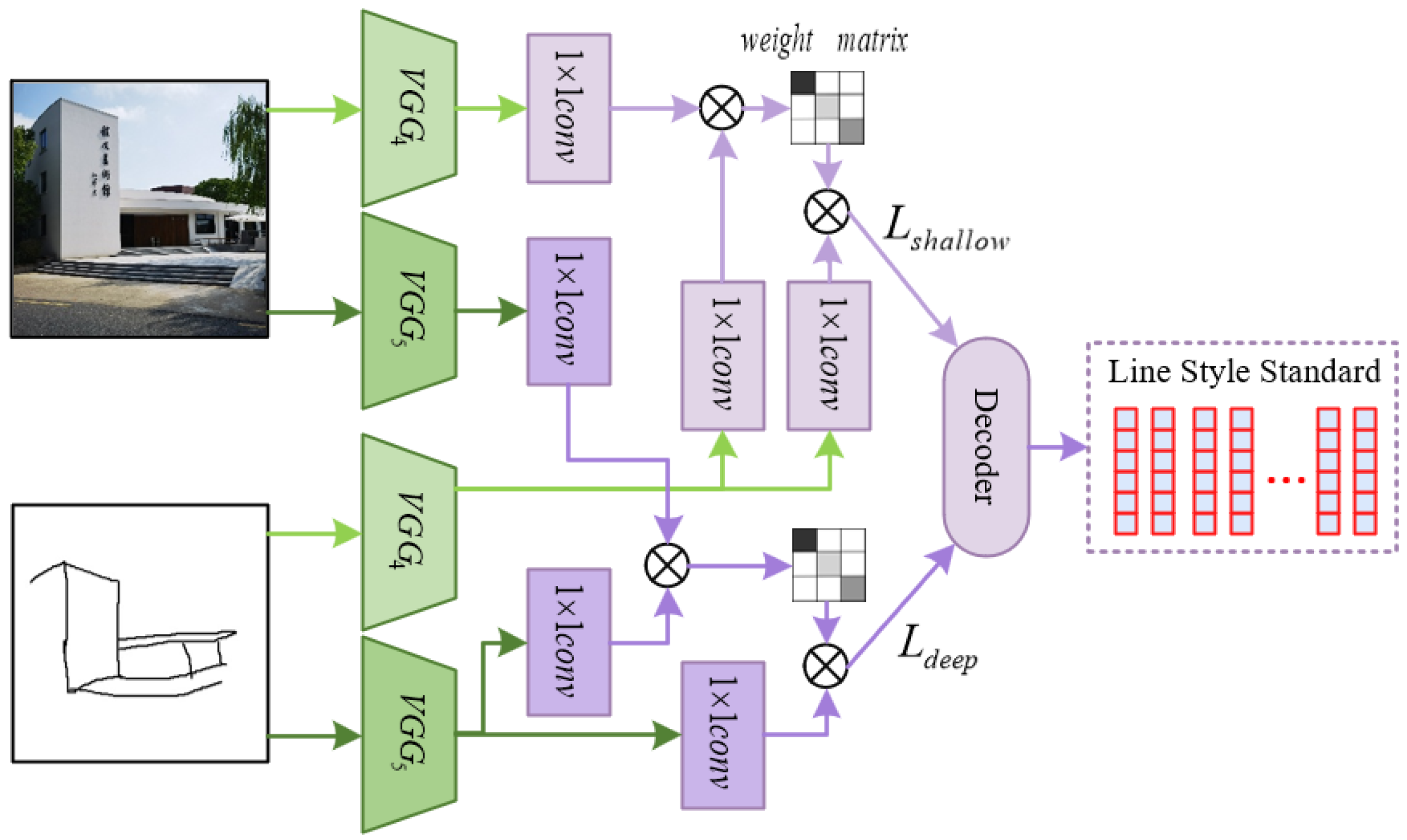

- To align sketches with their corresponding originals, a two-branch generative network that relies on line information is proposed. Two modules, the L-A Block and the R-A Block, address the problem of matching buildings of similar height. The former extracts the line features and content information of the building, while the latter associates this information more deeply by exploiting the properties of the association network. The effectiveness of these modules is confirmed by the ablation studies in Section 3.5.
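The retrieval step that these contributions build on can be illustrated with a minimal sketch. It assumes the sketch and the candidate building images have already been mapped into a shared embedding space by some encoder; the function name `rank_gallery`, the toy vectors, and the use of cosine similarity are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def rank_gallery(query_emb, gallery_embs):
    """Rank gallery items by cosine similarity to a query embedding.

    Hypothetical helper: the paper's actual matching function is not
    specified in this section, so cosine similarity is an assumption.
    """
    # Normalise to unit length so the dot product equals cosine similarity.
    q = query_emb / np.linalg.norm(query_emb)
    g = gallery_embs / np.linalg.norm(gallery_embs, axis=1, keepdims=True)
    scores = g @ q
    # Sort from most similar to least similar.
    order = np.argsort(-scores, kind="stable")
    return order, scores[order]

# Toy embeddings (made up for illustration only).
sketch_emb = np.array([1.0, 0.2, 0.0])
building_embs = np.array([
    [0.0, 1.0, 0.0],    # building 0
    [0.9, 0.3, 0.1],    # building 1, closest in direction to the sketch
    [-1.0, 0.0, 0.5],   # building 2
])

order, scores = rank_gallery(sketch_emb, building_embs)
print(order.tolist())
```

In a localization setting, the top-ranked building would then be looked up in a map to obtain a position, which is why the retrieval accuracy at small cut-offs matters most.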

2. Materials and Methods

2.1. Cycle-Consistent Generative Model

2.2. L-A Block and R-A Block

2.2.1. Line-Attention Block

2.2.2. Relation Block

2.3. Overall Cost Function

2.4. Algorithm Description

Algorithm 1 Training procedure of the SLIC model

Algorithm 2 Sketch-to-Image Retrieval with SLIC

3. Results

3.1. Simulation Setup

3.2. Datasets

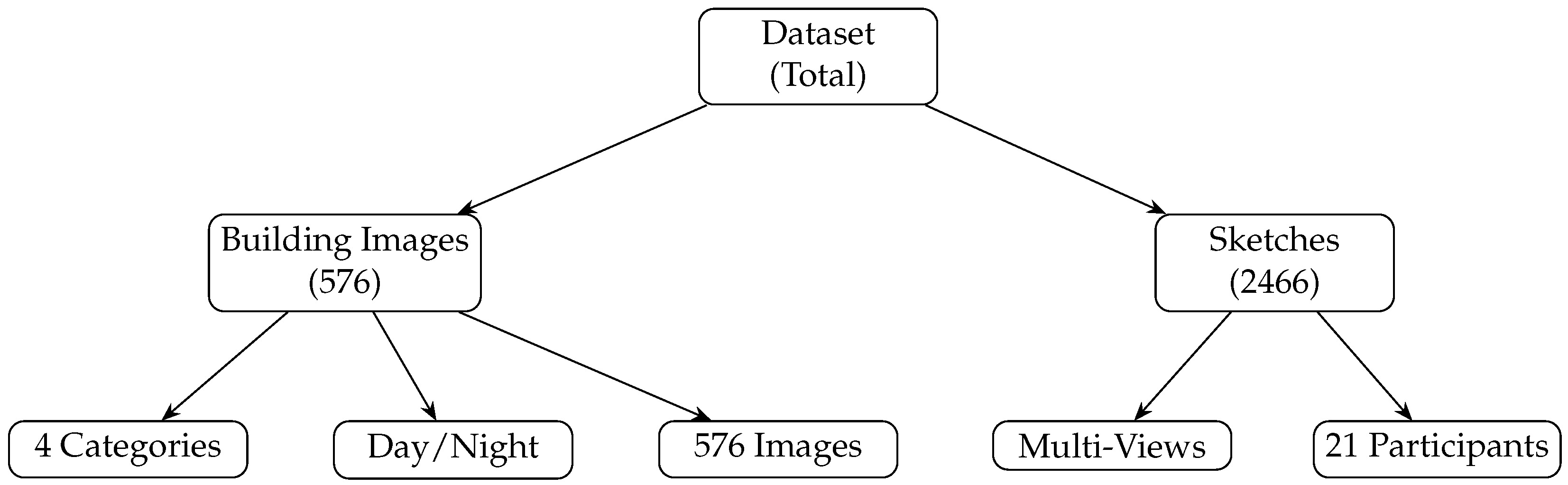

3.2.1. Sketch Dataset

3.2.2. Buildings Dataset

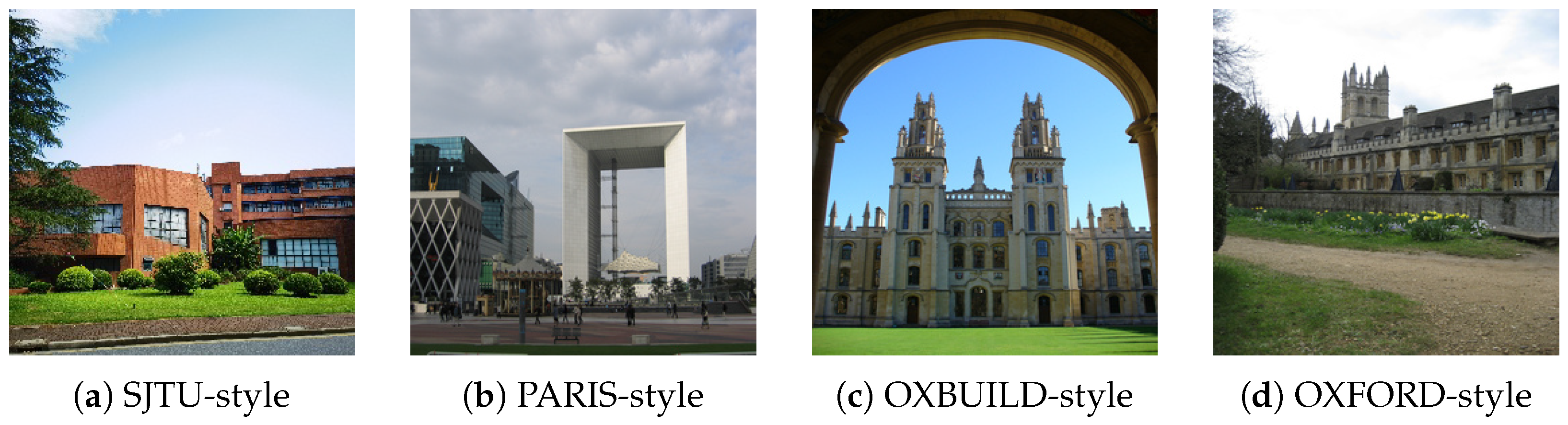

3.2.3. Night Dataset

3.3. Experiments on the Principles of Core Sketching

3.4. Experiments on Universality of Drawing Principles and Network Structures

3.5. Comparative Experiment and L-A Block Ablation Experiment

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ye, H.; Huang, H.; Liu, M. Monocular direct sparse localization in a prior 3d surfel map. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 8892–8898.

- Ji, X.; Liu, P.; Niu, H.; Chen, X.; Ying, R.; Wen, F. Object SLAM Based on Spatial Layout and Semantic Consistency. IEEE Trans. Instrum. Meas. 2023, 72, 2528812.

- Li, J.; Chu, J.; Zhang, R.; Hu, H.; Tong, K.; Li, J. Biomimetic navigation system using a polarization sensor and a binocular camera. J. Opt. Soc. Am. A 2022, 39, 847–854.

- Xu, S.; Dong, Y.; Wang, H.; Wang, S.; Zhang, Y.; He, B. Bifocal-Binocular Visual SLAM System for Repetitive Large-Scale Environments. IEEE Trans. Instrum. Meas. 2022, 71, 5018315.

- Zhang, H.; Jin, L.; Ye, C. An RGB-D camera based visual positioning system for assistive navigation by a robotic navigation aid. IEEE/CAA J. Autom. Sin. 2021, 8, 1389–1400.

- Xu, Z.; Zhan, X.; Chen, B.; Xiu, Y.; Yang, C.; Shimada, K. A real-time dynamic obstacle tracking and mapping system for UAV navigation and collision avoidance with an RGB-D camera. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 10645–10651.

- Wang, L.; Shen, Q. Visual inspection of welding zone by boundary-aware semantic segmentation algorithm. IEEE Trans. Instrum. Meas. 2020, 70, 5001309.

- Chen, X.; Liu, Y.; Achuthan, K. WODIS: Water obstacle detection network based on image segmentation for autonomous surface vehicles in maritime environments. IEEE Trans. Instrum. Meas. 2021, 70, 7503213.

- Zhang, Y.; Liu, Q.; Wang, Y.; Yu, G. CSI-based location-independent human activity recognition using feature fusion. IEEE Trans. Instrum. Meas. 2022, 71, 5503312.

- de Lucca Siqueira, F.; Plentz, P.D.M.; De Pieri, E.R. Semantic trajectory applied to the navigation of autonomous mobile robots. In Proceedings of the 2016 IEEE/ACS 13th International Conference of Computer Systems and Applications (AICCSA), Agadir, Morocco, 29 November–2 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–8.

- Aotani, Y.; Ienaga, T.; Machinaka, N.; Sadakuni, Y.; Yamazaki, R.; Hosoda, Y.; Sawahashi, R.; Kuroda, Y. Development of autonomous navigation system using 3D map with geometric and semantic information. J. Robot. Mechatronics 2017, 29, 639–648.

- Zender, H.; Mozos, O.M.; Jensfelt, P.; Kruijff, G.J.; Burgard, W. Conceptual spatial representations for indoor mobile robots. Robot. Auton. Syst. 2008, 56, 493–502.

- Crespo, J.; Barber, R.; Mozos, O. Relational model for robotic semantic navigation in indoor environments. J. Intell. Robot. Syst. 2017, 86, 617–639.

- Ruiz-Sarmiento, J.R.; Galindo, C.; Gonzalez-Jimenez, J. Building multiversal semantic maps for mobile robot operation. Knowl.-Based Syst. 2017, 119, 257–272.

- Drouilly, R.; Rives, P.; Morisset, B. Semantic representation for navigation in large-scale environments. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1106–1111.

- He, G.; Zhang, Q.; Zhuang, Y. Online semantic-assisted topological map building with LiDAR in large-scale outdoor environments: Toward robust place recognition. IEEE Trans. Instrum. Meas. 2022, 71, 8504412.

- Tripathi, A.; Dani, R.R.; Mishra, A.; Chakraborty, A. Sketch-Guided Object Localization in Natural Images. In Computer Vision – ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 532–547.

- Chen, J.; Fang, Y. Deep cross-modality adaptation via semantics preserving adversarial learning for sketch-based 3d shape retrieval. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 605–620.

- Yelamarthi, S.K.; Reddy, S.K.; Mishra, A.; Mittal, A. A zero-shot framework for sketch based image retrieval. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 300–317.

- Liu, L.; Shen, F.; Shen, Y.; Liu, X.; Shao, L. Deep sketch hashing: Fast free-hand sketch-based image retrieval. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2862–2871.

- Zhang, J.; Shen, F.; Liu, L.; Zhu, F.; Yu, M.; Shao, L.; Shen, H.T.; Van Gool, L. Generative domain-migration hashing for sketch-to-image retrieval. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 297–314.

- Song, J.; Yu, Q.; Song, Y.Z.; Xiang, T.; Hospedales, T.M. Deep spatial-semantic attention for fine-grained sketch-based image retrieval. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5551–5560.

- Yang, Z.; Zhu, X.; Qian, J.; Liu, P. Dark-aware network for fine-grained sketch-based image retrieval. IEEE Signal Process. Lett. 2020, 28, 264–268.

- Besbas, W.; Artemi, M.; Salman, R. Content based image retrieval (CBIR) of face sketch images using WHT transform domain. In Proceedings of the 2014 3rd International Conference on Informatics, Environment, Energy and Applications IPCBEE, Shanghai, China, 27–28 March 2014; Volume 66.

- Zhou, R.; Chen, L.; Zhang, L. Sketch-based image retrieval on a large scale database. In Proceedings of the 20th ACM International Conference on Multimedia, Nara, Japan, 29 October–2 November 2012; pp. 973–976.

- Gaidhani, P.A.; Bagal, S. Implementation of Sketch Based and Content Based Image Retrieval. Int. J. Mod. Trends Eng. Res. 2016, 3, hal-01336894.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Changpinyo, S.; Chao, W.L.; Gong, B.; Sha, F. Synthesized classifiers for zero-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5327–5336.

- Park, D.Y.; Lee, K.H. Arbitrary style transfer with style-attentional networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5880–5888.

- Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208.

- Rybski, P.E.; Roumeliotis, S.; Gini, M.; Papanikopoulos, N. Appearance-based mapping using minimalistic sensor models. Auton. Robot. 2008, 24, 229–246.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1, pp. 886–893.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Dutta, A.; Akata, Z. Semantically tied paired cycle consistency for zero-shot sketch-based image retrieval. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5089–5098.

- Lin, F.; Li, M.; Li, D.; Hospedales, T.; Song, Y.Z.; Qi, Y. Zero-shot everything sketch-based image retrieval, and in explainable style. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 23349–23358.

| Removed Stroke Type | mAP@all | mAP@3 |
|---|---|---|
| Full sketch (baseline) | 0.90 | 0.91 |
| Parallel inner/outer outlines | 0.87 | 0.88 |
| Multiple identical features | 0.85 | 0.86 |
| Internal dividing lines | 0.84 | 0.84 |

| Place | Scenario 1 | Scenario 2 | Scenario 3 | Scenario 4 | Scenario 5 | Scenario 6 | Scenario 7 | Scenario 8 | Scenario 9 | mAP@2 |
|---|---|---|---|---|---|---|---|---|---|---|
| SJTU | ✓ | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ | ✓ | 0.90 |
| PARIS | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 1.00 |
| OXFORD | ✓ | ✗ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 0.88 |
| OXBUILD | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ | ✓ | ✓ | ✓ | 0.87 |

| Method | mAP@1 | mAP@2 | mAP@3 |
|---|---|---|---|
| DAISY [31] | 0.61 | 0.59 | 0.57 |
| HOG [32] | 0.74 | 0.72 | 0.68 |
| RESNET [33] | 0.82 | 0.85 | 0.79 |
| VGG [34] | 0.85 | 0.83 | 0.80 |
| SEM-PCYC [35] | 0.85 | 0.83 | 0.81 |
| ZSE [36] | 0.87 | 0.84 | 0.82 |
| Ours | 0.90 | 0.90 | 0.91 |
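For readers unfamiliar with the mAP@k columns, the following is a minimal sketch of one common definition of mean average precision at cut-off k. The exact variant used in the paper is not stated in this section, so the normalization by `min(k, number of relevant items)`, the function names, and the toy building identifiers are all assumptions made for illustration.

```python
def average_precision_at_k(ranked_ids, relevant_ids, k):
    """AP@k for one query (one common definition; assumed, not the paper's)."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for i, item in enumerate(ranked_ids[:k], start=1):
        if item in relevant:
            hits += 1
            # Precision measured at the rank of each relevant hit.
            precision_sum += hits / i
    return precision_sum / min(k, len(relevant))

def mean_average_precision_at_k(rankings, ground_truth, k):
    """Average AP@k over all queries."""
    aps = [average_precision_at_k(r, g, k)
           for r, g in zip(rankings, ground_truth)]
    return sum(aps) / len(aps)

# Two hypothetical sketch queries with made-up building ids.
rankings = [["b1", "b7", "b3"], ["b4", "b2", "b9"]]
ground_truth = [["b1", "b3"], ["b2"]]

for k in (1, 2, 3):
    print(k, mean_average_precision_at_k(rankings, ground_truth, k))
```

Under this definition the score at a larger k can be lower than at a smaller k when a query has several relevant images, which is one possible reason a method's mAP@2 may fall below its mAP@1.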

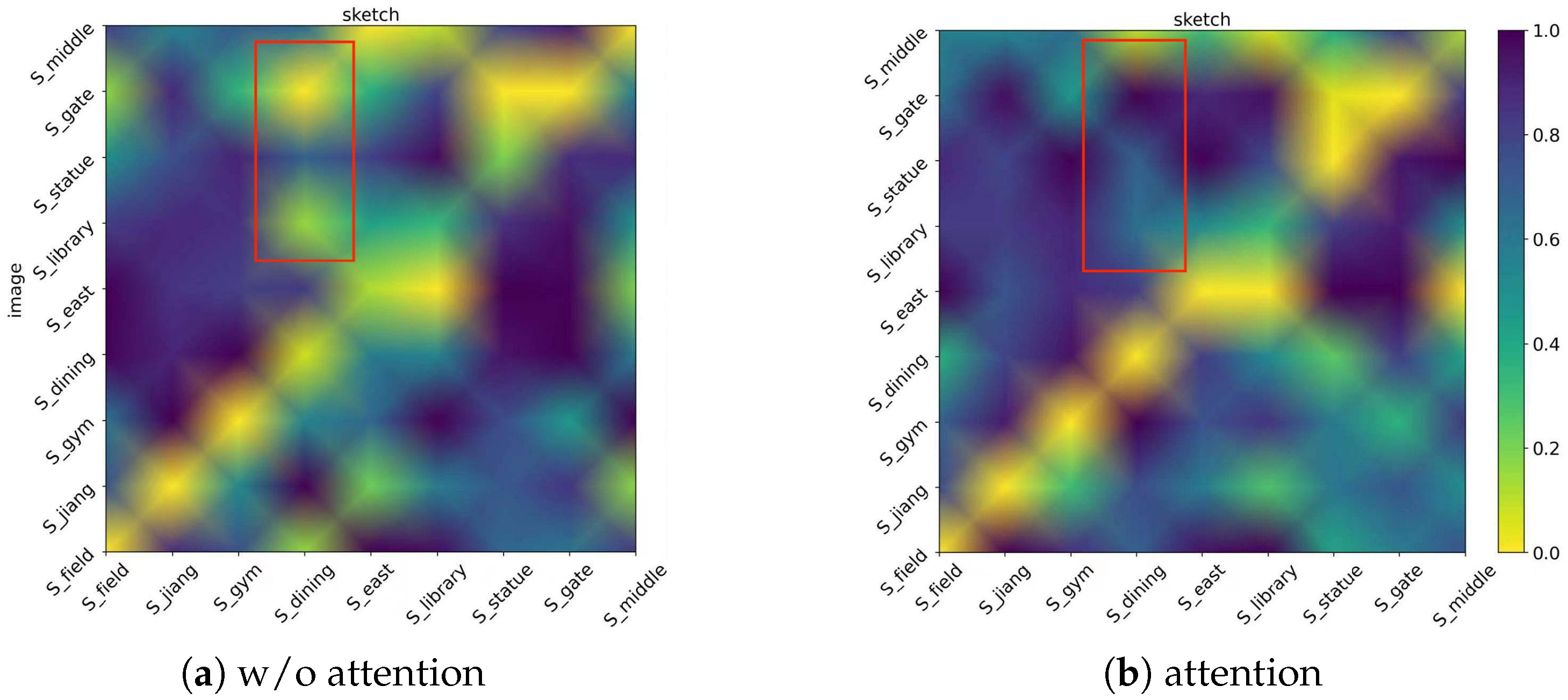

| Time | Network | mAP@1 | mAP@2 | mAP@3 | Drop@1 | Drop@2 | Drop@3 |
|---|---|---|---|---|---|---|---|
| day | attention | 0.90 | 0.90 | 0.91 | – | – | – |
| day | w/o attention | 0.85 | 0.82 | 0.88 | 5.6% | 8.9% | 3.3% |
| night | attention | 0.74 | 0.74 | 0.75 | – | – | – |
| night | w/o attention | 0.62 | 0.64 | 0.65 | 16.2% | 13.5% | 13.3% |
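The Drop columns are consistent with a relative decrease in mAP when the attention module is removed, that is, (with − without) / with. The short check below recomputes them from the mAP values in the table; the helper name `relative_drop_percent` is illustrative, and the relative-drop interpretation is inferred from the numbers rather than stated in this section.

```python
def relative_drop_percent(with_attention, without_attention):
    """Relative mAP decrease, in percent, when attention is removed."""
    return (with_attention - without_attention) / with_attention * 100.0

# mAP@1, mAP@2, mAP@3 copied from the ablation table.
results = {
    "day": {"attention": [0.90, 0.90, 0.91],
            "w/o attention": [0.85, 0.82, 0.88]},
    "night": {"attention": [0.74, 0.74, 0.75],
              "w/o attention": [0.62, 0.64, 0.65]},
}

drops = {
    time: [round(relative_drop_percent(a, b), 1)
           for a, b in zip(rows["attention"], rows["w/o attention"])]
    for time, rows in results.items()
}
print(drops)
```

The recomputed values match the table, and they show that removing attention costs noticeably more at night than during the day.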

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Du, H.; Fan, J.; Huang, Y.; Lin, L.; Qian, J. A Sketch-Based Cross-Modal Retrieval Model for Building Localization Without Satellite Signals. Electronics 2025, 14, 3936. https://doi.org/10.3390/electronics14193936
