1. Introduction

Recent advances in Generative Artificial Intelligence (GAI), including the advent of foundation models such as Large Language Models (LLMs), have been fundamentally transformative, demonstrating unprecedented performance across a wide range of tasks, including text generation, sentiment analysis, and question answering [1, 2]. While the generalist nature of LLMs and other GAI models has facilitated their broad applicability, it poses significant limitations in scenarios requiring nuanced, user-specific responses [3], such as in educational contexts like anti-phishing training [4]. One of the most critical efforts to prevent social harm done by these new technologies is effective training against social engineering, deepfakes of news, and other nefarious applications of GAI.

The complexity of social engineering attacks has significantly increased in recent months due in part to the advanced sophistication of GAI models [5]. These models can be used to quickly design new attacks from scratch using various methods, such as translating previously used databases of attacks or creating complex novel attacks leveraging images, video, text, and audio [6], in an attempt to increase the success of social engineering attempts. Despite the significant threat posed by GAI models such as LLMs in accelerating social engineering attacks [7, 8, 9], only 23% of companies polled by Proofpoint in 2024 had trained their employees on GAI safety [10].

One reason for this lack of adequate training regarding GAI-based phishing attacks is the high cost associated with traditional training methods such as in-person lecturing [11], and the time required to develop remote learning materials [12]. However, research has suggested that virtual social engineering training can be more effective than in-person training [11]. A recent approach to addressing this educational limitation is to leverage GAI models themselves to design educational materials while providing feedback to users [4]. In the context of social engineering training in identifying phishing emails, this approach has the benefit of allowing for a training platform that can simultaneously generate realistic phishing attempts. While LLM-supported training and education has the benefits of easy access and scalability, it has issues related to domain-specific knowledge and individualization of feedback in educational settings [13].

In this work we begin by presenting a dataset that augments the original dataset presented by Malloy et al. [4], which contains a set of messages sent between human students and an LLM teacher in an anti-phishing education platform. We augment this dataset with two embedding dictionaries: the first is a set of embeddings of the messages sent by LLM teachers and human students; the second is a set of embeddings of open responses that students provided to describe the method that they used to determine whether emails were phishing or not. This dataset includes embeddings formed by 10 different embedding models, spanning open and closed models and a range of embedding sizes.

After describing this presented dataset, we compare the 10 different embedding models in their correlation to human student learning outcomes. Next, we evaluate the usefulness of these embedding dictionaries by comparing the cosine similarity of the embeddings of messages sent by LLM teachers and students with the embeddings of the emails presented to students. These cosine similarity measurements are compared with several metrics of student learning performance, demographics, and other measures of the educational platform. We conclude this paper with a description of the results we present and a contextualization of these results with specific recommendations for improving LLM teaching methods.

2. Related Work

2.1. LLMs in Education

One example of a domain-specific application of LLM education is discussed in [14], which focuses on databases and information systems in higher education. Here, the authors find that issues such as bias and hallucinations can be mitigated in domain-specific educational applications through the use of an LLM-based chatbot, ‘MoodleBot’, a specialized system tailored for a single specific educational course. These results highlight the importance of domain-specific knowledge in the design and evaluation of LLM teaching platforms. Meanwhile, a more generalist educational LLM platform, called multiple choice question generator (MCQGen), is presented in [15]; it can be applied to a variety of domains through the integration of Retrieval Augmented Generation (RAG) and a human-in-the-loop process that ensures question validity.

Beyond applications of LLMs as merely educational tools is research into use cases of agentic LLMs that make decisions regarding student education. One recent survey by Chu et al. [16] of LLM applications in education focuses on the use of LLM agents, which extend the traditional use case of LLMs beyond a tool into a more independent model that makes decisions and impacts an environment [17]. This survey highlights the importance of mitigating hallucinations and ensuring fairness in educational outcomes. This insight guides an important focus of this work, which compares different potentially vulnerable subpopulations in the way that they converse with an LLM chatbot. Many examples exist in the literature of LLM bias demonstrating potential causes of unfairness, such as racial bias [18], gender bias [19], or age bias [20]. These biases become increasingly relevant in domain-specific applications of LLMs in education, as the ways in which biases interact with education become more complex than in other LLM applications [21].

2.2. LLM Personalization in Education

Personalization techniques have traditionally been extensively researched within information retrieval and recommendation systems but remain relatively underexplored in the context of LLMs [1]. Developing personalized and domain-specific educational LLMs involves leveraging user-specific data such as profiles, historical interactions, and preferences to tailor model outputs [22]. Effective personalization of LLMs is critical in domains such as conversational agents, education, healthcare, and content recommendation, where understanding individual preferences significantly enhances user satisfaction and engagement [22, 23].

Recent literature highlights various strategies for personalizing LLMs, broadly categorized into fine-tuning approaches, retrieval augmentation, and prompt engineering [2, 22, 23]. Fine-tuning methods adapt LLM parameters directly to user-specific contexts, showing significant performance improvements in subjective tasks like sentiment and emotion recognition [2]. Fine-tuned LLMs have been applied to educational domains, as in the Tailor-Mind model, which generates visualizations for use in educational contexts [24]. However, these approaches are resource-intensive and often impractical for real-time personalization across numerous users [25].

Retrieval augmentation, on the other hand, enhances personalization efficiency by dynamically incorporating external user-specific information at inference time without extensive model retraining [26]. Methods like LaMP utilize user profiles and historical data, selectively integrating relevant context through retrieval techniques [1]. More recently, frameworks such as OPEN-RAG have significantly improved reasoning capabilities within retrieval-augmented systems, especially when combined with open-source LLMs [23]. Prompt engineering and context injection represent lighter-weight approaches where user-specific information is embedded within the prompt or input context, guiding the LLM toward personalized responses [22, 27]. RAG has been applied to domain-specific educational contexts like computing education [28] through the use of small LLMs that incorporate RAG. Other recent approaches in LLM education with RAG seek to personalize pedagogical content by predicting user learning styles [29]. These methods, while efficient, are limited by context length constraints and impermanent personalization.

2.3. Automatic Phishing Detection

On the defensive side, research efforts are increasingly focused on countering these threats. The growing sophistication of LLM-generated phishing emails presents challenges for traditional phishing detection systems, many of which are no longer able to reliably identify such attacks. This issue has thus become a focal point in AI-driven cybersecurity research, which is particularly evident in the following two leading approaches.

Ref. [30] employed LLMs to rephrase phishing emails in order to augment existing phishing datasets, with the goal of improving the ability of detection systems to identify automatically generated phishing content. Their findings suggest that the detection of LLM-generated phishing emails often relies on different features and keywords than those used to identify traditional phishing emails.

LLM-generated phishing emails were also used in the approach of [31] to fine-tune various AI models, including BERT-, T5- and GPT-based architectures. Their results demonstrated a significant improvement in phishing detection performance across both human- and LLM-generated messages, compared to the baseline models.

2.4. LLM Generated Phishing Emails

Several studies have highlighted that generative AI can be leveraged to create highly convincing phishing emails, significantly reducing the human and financial resources typically required to create them [30, 31, 32, 33, 34, 35]. This development is driven in part by the increasing ability of LLMs to maintain syntactic and grammatical integrity while also embedding cultural knowledge into artificially generated messages [36]. Moreover, with the capacity to generate multimedia elements such as images and audio, GAI can enhance phishing emails by adding elements that further support social engineering attacks [32]. The collection of personal data for targeting specific individuals can also be facilitated through AI-based tools [35].

In [31], Bethany et al. evaluated the effectiveness of GPT-4-generated phishing emails and confirmed their persuasive power in controlled studies. A related study revealed that while human-crafted phishing emails still demonstrated a higher success rate among test subjects, they were also more frequently flagged as spam compared to those generated by GPT-3 models [33]. Targeted phishing attacks, commonly known as spear phishing, can also be rapidly and extensively generated by inexperienced actors using GAI, as demonstrated in [35] through experiments with a LLaMA-based model.

2.5. Anti-Phishing Education

Anti-phishing education seeks to train end-users to correctly identify phishing emails they receive in real life and react appropriately. This education is an important first step in cybersecurity, as user interaction with emails and other forms of social engineering is often the easiest means for cyberattackers to gain access to privileged information and services [37]. Part of the ease with which attackers can leverage emails is due to the high number of emails that users receive as a part of their daily work, which leads to a limited amount of attention being placed on each email [38]. Additionally, phishing emails are relatively rare to receive, as many filtering and spam detection methods prevent them from reaching users’ inboxes. For this reason, many users are relatively inexperienced with phishing emails and may incorrectly identify them [39]. Despite the prevalence of cybersecurity education and training in many workplaces, social engineering, including phishing emails, remains a common method of attack with a significant impact on security [40].

Part of the challenge of anti-phishing education is defining the qualities of a good education platform and determining how to evaluate both the platform and the ability of users to detect phishing emails in the real world. In their survey, Jampen et al. note the importance of anti-phishing education platforms that can equitably serve large and diverse populations in an inclusive manner [37]. This review compared ‘user-specific properties and their impact on susceptibility to phishing attacks’ to identify key features of users such as age, gender, email experience, confidence, and distrust. This is crucial, as cybersecurity preparedness is only as effective as its weakest link, meaning anti-phishing education platforms that only work for some populations are insufficient to appropriately address the dangers associated with phishing emails [41]. It is important for anti-phishing training platforms to serve populations as they vary across these features to ensure that the general population is safe and secure from attacks using phishing emails [42].

Another important area of research uses laboratory and online experiments with human participants engaged in a simulation of anti-phishing training to compare different approaches. This has the benefit of allowing for more theoretically justified comparisons, since traditional real-world anti-phishing education has high costs associated with it, making more direct comparisons difficult [40]. Some results within this area of research indicate that more detailed feedback, rather than only correct or incorrect information, significantly improves post-training accuracy in categorizing emails as either phishing or ham [43]. Additional studies indicate that personalizing LLM-generated detailed feedback to the individual user through prompt engineering can further improve the educational outcomes of these platforms [4, 44]. However, these previous approaches do not involve the training or fine-tuning of more domain-specific models, and rely on off-the-shelf black-box models accessed through API calls to generate responses.

3. Dataset

3.1. Original Dataset

The experimental methods used to gather the dataset analyzed in this work are described in [4], and the dataset is made available on OSF by the original authors at https://osf.io/wbg3r/ (accessed on 1 September 2025). Each of the 417 participants made a total of 60 judgments about whether the emails they were shown were safe or dangerous, across 8 different experiment conditions that varied the method of generating emails and the specifics of the LLM teacher prompting for educational feedback. These emails were drawn from a dataset of 1461 emails that were created using a variety of methods. Each of the four conditions we examine used educational example emails that were generated by a GPT-4 LLM. While the experimentation methods contained 8 different conditions, we are interested only in the four conditions that involved conversations between users and the GPT-4.1 LLM chatbot. In each of these conditions, the participants were given feedback on the accuracy of their categorization from an LLM that they could also converse with.

Between these four conditions, the only differences were the presentation of emails to participants and the prompting of the LLM for feedback. In the ‘base’ first condition, emails were selected randomly, and the LLM was prompted to provide feedback based on the information in the email and the decision of the student. In the second condition, emails were selected by an Instance-Based Learning (IBL) cognitive model in an attempt to give more challenging emails to the student, based on the past decisions they made. The third condition selected emails randomly but included information from the IBL cognitive model in the prompt to the LLM; specifically, this information was a prediction of which features of an email the current student may struggle with. Finally, the fourth condition combined the two previous ones, using the IBL cognitive model for both email selection and prompting.

In the original dataset, there are three sets of LLM embeddings of each email shown to participants, computed through the OpenAI API with three embedding models (‘text-embedding-3-large’, ‘text-embedding-3-small’, and ‘text-embedding-ada-002’) [45]. These embeddings were used alongside a cognitive model of human learning and decision making called Instance-Based Learning [46, 47, 48] to predict the training progress of users. However, the original paper [4] did not directly analyze the conversations between end users and the LLM chatbots, and did not create a database of chatbot conversation embeddings.

3.2. Proposed Dataset

In this work we introduce an embedding dictionary of these messages, available at https://osf.io/642zc/ (accessed on 1 September 2025), and evaluate the usefulness of this embedding dictionary in different use cases. We also include in the same dataset an embedding dictionary of the open-response replies that students gave at the end of the experiment to answer the question of how they determined whether emails were safe or dangerous. In the majority of our analysis, we combine the four conditions that included conversations with chatbots because the previously mentioned differences do not impact conversations between participants and the LLM chatbot.

One major limitation of the previous dataset is the exclusive use of closed-source models. While the embeddings themselves were included, the closed-source nature of the three embedding models used in [4] limits the reproducibility of the work and its accessibility to other researchers. In this work we employ the same three closed-source models as in the original work as well as seven new open-source models: qwen3-embedding-0.6B, https://huggingface.co/Qwen/Qwen3-Embedding-0.6B (accessed on 1 September 2025) [49]; qwen3-embedding-4B, https://huggingface.co/Qwen/Qwen3-Embedding-4B (accessed on 1 September 2025) [49]; qwen3-embedding-8B, https://huggingface.co/Qwen/Qwen3-Embedding-8B (accessed on 1 September 2025) [49]; all-MiniLM-L6-v2, https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2 (accessed on 1 September 2025); bge-large-en-v1.5, https://huggingface.co/BAAI/bge-large-en-v1.5 (accessed on 1 September 2025) [50]; embeddinggemma-300m, https://huggingface.co/google/embeddinggemma-300m (accessed on 1 September 2025) [51]; and granite-embedding-small-english-r2, https://huggingface.co/ibm-granite/granite-embedding-small-english-r2 (accessed on 1 September 2025) [52]. For all models that did not directly output embeddings, mean pooling was used to extract embeddings [53]. There were 3846 messages sent between chatbots and 146 different users during the anti-phishing training, resulting in 38,460 message embeddings across the 10 embedding models in our dataset. Additionally, we provide embeddings from the seven new open-source models for the emails in the original dataset, resulting in 5856 new email embeddings.
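As an illustration of the mean pooling step mentioned above, the following minimal sketch averages per-token vectors into a single embedding. It is written in plain Python for readability; the function name `mean_pool`, the use of an attention mask to skip padding tokens, and the toy vectors are assumptions made for this example and are not taken from the released code.

```python
from typing import List, Sequence


def mean_pool(token_embeddings: Sequence[Sequence[float]],
              attention_mask: Sequence[int]) -> List[float]:
    """Average token vectors, ignoring positions whose mask value is 0.

    Assumption: padded positions are marked with 0 in the mask, as is
    common for transformer encoders; the paper does not state this detail.
    """
    if len(token_embeddings) != len(attention_mask):
        raise ValueError("one mask entry is required per token")
    kept = [vec for vec, m in zip(token_embeddings, attention_mask) if m]
    if not kept:
        raise ValueError("the attention mask removes every token")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


# Toy example: three token vectors, the last one is padding.
tokens = [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]]
mask = [1, 1, 0]
pooled = mean_pool(tokens, mask)
print(pooled)
```

In practice the token vectors would come from the last hidden layer of the embedding model, and the pooled vector is what is stored as the message or email embedding.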

Our conversation analysis presented in the following section begins with a comparison of the ten embedding models contained in our proposed dataset along a single metric. After this, we perform a series of regressions that compare correlations of different metrics of performance with the cosine similarity between the embeddings of messages and emails. Finally, we perform a mediation analysis to give more strength to our conclusions and recommendations. After this analysis, we proceed to the Results and Discussion Sections.

4. Conversation Analysis

In this section we demonstrate the usefulness of the presented dataset of embeddings of messages exchanged between users and the teacher LLM in this anti-phishing education context. We begin by comparing the cosine similarity of the embeddings of messages sent by students and the LLM teacher with the embeddings of the emails that the student was viewing when the message was sent. This is an exploratory analysis that serves to examine whether cosine similarity is correlated with three different student performance metrics. An important aspect of this analysis is that it is purely correlational, meaning that we cannot determine causal relationships or the direction of correlational relationships. Our goal with this analysis is to explore potential methods of improving LLM education that can be further explored in future research. Code to generate all figures and statistical analysis in this section is available online at https://github.com/TailiaReganMalloy/PhishingConversations (accessed on 1 September 2025).
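The central quantity in this section is the cosine similarity between a message embedding and the embedding of the email on screen. A minimal sketch of that computation is given here for reference; the function name and the short toy vectors are illustrative assumptions, and real embeddings have hundreds or thousands of dimensions.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical message and email embeddings (toy values).
message_embedding = [1.0, 2.0, 3.0]
email_embedding = [2.0, 4.0, 6.0]
print(cosine_similarity(message_embedding, email_embedding))
```

Because the measure depends only on direction, two embeddings that point the same way score close to 1 regardless of their magnitude, and orthogonal embeddings score 0.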

4.1. Embedding Model Comparison

Before presenting our analysis of the correlations between cosine similarity and different attributes of student performance and demographics, we first seek to motivate our choice of cosine similarity as a metric. There are several simpler metrics that could be calculated between emails and messages without the need for embedding models, raising the question of the value of our proposed dataset. Examples include metrics of the lexical overlap between emails and messages, such as the Jaccard index [54], the proportion of common words between the message and the email, and ROUGE [55], a count of how many of the same short phrases (n-grams) appear in both texts. Additionally, it is important to control for attributes such as the message length [56], since longer messages may on average have higher similarities to emails because they are of a similar length.
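To make the lexical baselines concrete, the sketch below computes a word-level Jaccard index and an n-gram recall in the spirit of ROUGE-N. The tokenizer, the choice of recall against the email as the reference, and the default of bigrams are assumptions for illustration; the text does not specify which ROUGE variant or preprocessing was used.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into word tokens (assumed tokenizer)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def jaccard(message: str, email: str) -> float:
    """Shared unique words divided by all unique words in either text."""
    a, b = set(tokenize(message)), set(tokenize(email))
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def ngram_counts(tokens: List[str], n: int) -> Counter:
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(message: str, email: str, n: int = 2) -> float:
    """Fraction of the email's n-grams that also occur in the message."""
    reference = ngram_counts(tokenize(email), n)
    candidate = ngram_counts(tokenize(message), n)
    total = sum(reference.values())
    if total == 0:
        return 0.0
    overlap = sum((reference & candidate).values())  # clipped counts
    return overlap / total


email_text = "Verify your account now"
message_text = "Please verify your account"
print(jaccard(message_text, email_text))
print(rouge_n_recall(message_text, email_text, n=2))
```

Both scores reward literal word overlap only, so a message that paraphrases an email without reusing its wording would score low here even when an embedding-based similarity is high.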

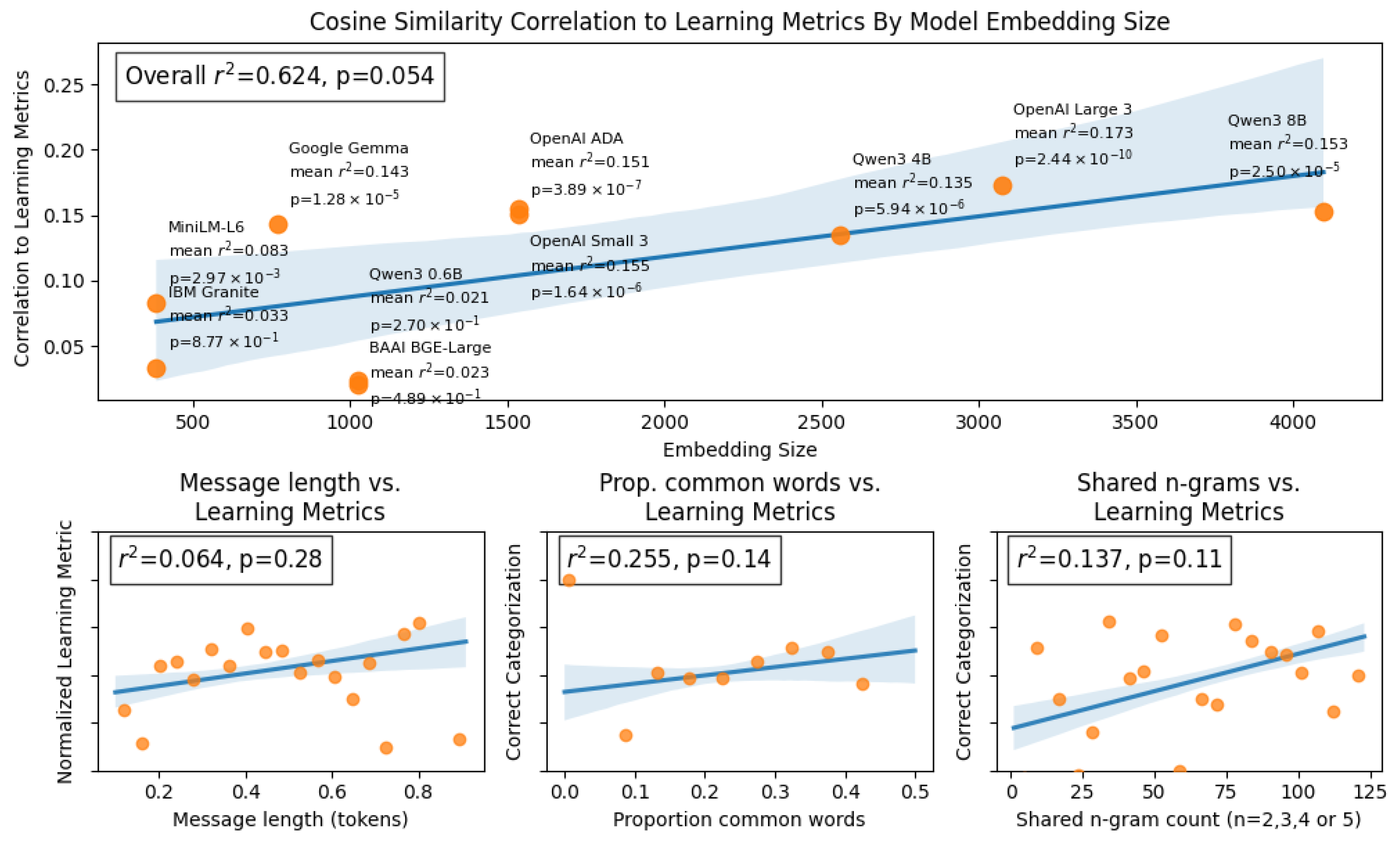

If the alternative metrics were just as strongly correlated with student performance as embedding cosine similarity, this would undermine our claim about the usefulness of the dataset we present. To address this, we begin by comparing correlations with three metrics of student performance: correct categorization, confidence, and reaction time. In Figure 1, we compare the correlation between these three learning metrics and the cosine similarity between the embeddings of messages sent as feedback and the embeddings of the emails students are observing. We report this average for 10 different embedding models. Additionally, we compare these correlations to the alternative metrics previously mentioned.

To determine which embedding model is ideal for our correlation analysis, we compare each of these embedding models in terms of the average correlation with our three metrics: correct categorization, confidence, and reaction time. This is shown in the top row of Figure 1, which has the embedding size of the models under comparison on the x-axis and the average Pearson correlation with those three metrics on the y-axis. Overall we see a significant trend that models with larger embedding sizes are typically better correlated with the learning metrics we are interested in. This result is promising for our analysis, as comparing the similarity of larger embeddings often captures semantic similarity better than smaller embeddings [57]. The highest correlation with learning metrics is observed when comparing the cosine similarity of emails and messages using embeddings from the OpenAI ‘text-embedding-3-large’ model, which has an embedding size of 3072. For this reason we use cosine similarity measured with ‘text-embedding-3-large’ embeddings in all following analyses.
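The model comparison described above can be sketched as follows: compute the Pearson correlation between the similarity values and each learning metric, then average the three coefficients. This is a simplified illustration under stated assumptions; the helper names are hypothetical, the numbers are made-up placeholders rather than results from the dataset, and whether signed or absolute coefficients were averaged is not stated in the text (signed values are used here).

```python
import math
from typing import Dict, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


def mean_metric_correlation(similarity: Sequence[float],
                            metrics: Dict[str, Sequence[float]]) -> float:
    """Average the signed correlation of similarity with each metric."""
    coefficients = [pearson_r(similarity, values) for values in metrics.values()]
    return sum(coefficients) / len(coefficients)


# Placeholder values for one hypothetical embedding model.
similarity = [0.1, 0.2, 0.3, 0.4]
metrics = {
    "correct": [0, 0, 1, 1],
    "confidence": [1, 2, 3, 4],
    "reaction_time": [4, 3, 2, 1],
}
score = mean_metric_correlation(similarity, metrics)
print(round(score, 4))
```

Repeating this for each embedding model and plotting the score against embedding size gives a comparison of the kind described for the top row of Figure 1.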

4.2. Regression Analyses

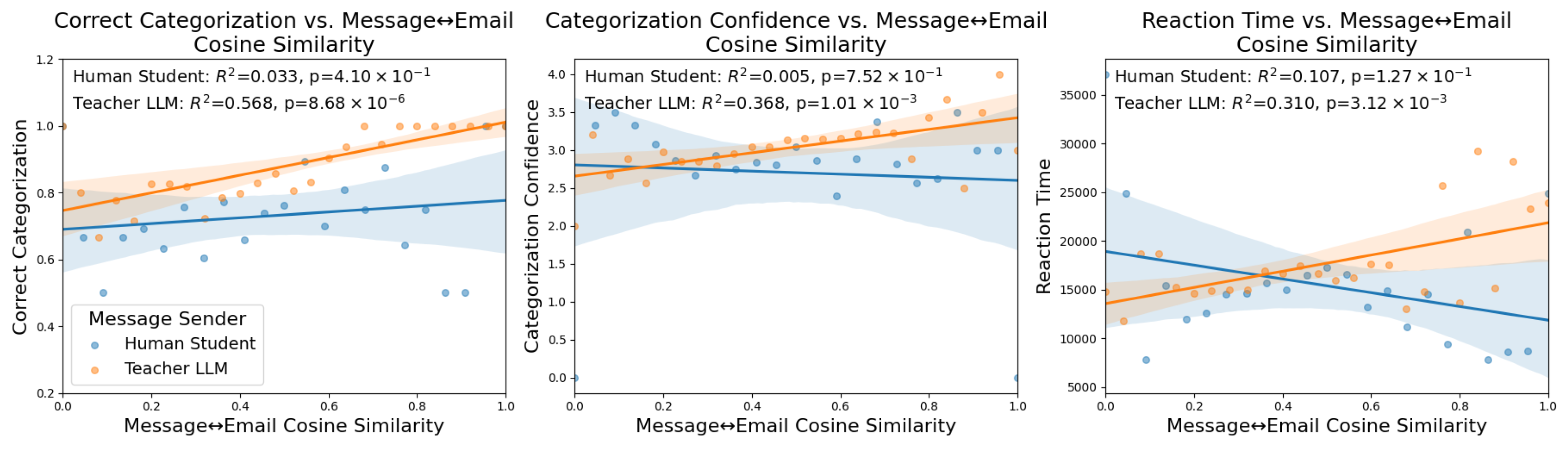

In all regression analyses in this section, we first bin the cosine similarities between message embeddings and email embeddings to the nearest 0.01, grouped by sender. Additionally, all message cosine similarity values on the x-axis are normalized to the range 0–1, again grouped by message sender. This is the source of the values on the x-axis of each plot. Then, we plot as a scatterplot the averages for the metric on the y-axis of all of the binned messages. For example, in the left column of Figure 2, the leftmost blue point represents the average correct categorization for all trials where messages were sent that had embeddings with a cosine similarity to email embeddings of 0.00. The significance of these regressions is based on Pearson correlation coefficients, with the correlation and p values shown at the top of each subplot. Finally, each variable comparison (e.g., correct categorization and message cosine similarity to email) has a t-Test run to compare the correlation in a different manner that does not use binned message cosine similarity values.
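The binning procedure can be sketched as below for the messages of a single sender. The sketch assumes that min-max normalization is applied before rounding to the nearest 0.01, which is consistent with the leftmost point sitting at 0.00 but is not spelled out in the text; the function names and toy values are hypothetical.

```python
from collections import defaultdict
from typing import List, Sequence, Tuple


def min_max_normalize(values: Sequence[float]) -> List[float]:
    """Rescale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot normalize a constant sequence")
    return [(v - lo) / (hi - lo) for v in values]


def bin_means(similarities: Sequence[float],
              metric: Sequence[float],
              width: float = 0.01) -> List[Tuple[float, float]]:
    """Return (bin value, mean metric) pairs for one sender's messages."""
    scaled = min_max_normalize(similarities)
    bins = defaultdict(list)
    for s, m in zip(scaled, metric):
        bins[round(s / width)].append(m)  # integer index of the nearest bin
    return [(round(k * width, 2), sum(v) / len(v)) for k, v in sorted(bins.items())]


# Toy data: cosine similarities of four messages and whether the
# corresponding categorization was correct (1) or not (0).
sims = [0.20, 0.201, 0.40, 0.60]
correct = [1, 0, 1, 1]
points = bin_means(sims, correct)
print(points)
```

Each returned pair corresponds to one scatterplot point, and a Pearson correlation computed over these points yields the coefficient reported for that subplot.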

The first of these metrics is the percent of correct categorization by the student, the second is their confidence in the categorization, and the last is the reaction time of the student. Ideally, the teacher LLM would be providing feedback that is easy to quickly understand and leads to high confidence and correct categorizations. These three metrics are compared to the cosine similarity of emails with respect to both student and teacher message embeddings, as shown in Figure 2.

4.3. Categorization Accuracy

The relationship between message cosine similarity and user categorization accuracy is shown in the left column of Figure 2. The analysis of student accuracy in categorization revealed that both the human student’s and the teacher LLM’s message cosine similarities to emails were positively associated with the likelihood of a correct categorization. The human student’s message–email cosine similarity showed a moderate positive correlation with correct categorization that is not robust when evaluated with ANOVA (Pearson Correlation: , ANOVA: , , ). The teacher LLM’s message similarity exhibited a strong positive association with correct outcomes, a relationship further corroborated by statistically significant results from ANOVA, though the effect size was small (Pearson Correlation: , ANOVA: , , ). These results indicate that student performance was higher when the messages sent by either them or their teacher were more closely related to the email that was being observed by the student. Furthermore, the results suggest that the LLM’s message similarity is a stronger predictor of correct categorization than the human student’s similarity, though the ANOVA effect sizes remain modest.
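For readers unfamiliar with the second test reported alongside each correlation, the following sketch computes a one-way ANOVA F statistic together with eta squared as an effect size. How observations were grouped and which effect-size statistic was reported are not stated in the text, so the grouping, the use of eta squared, and the toy numbers are all assumptions for illustration.

```python
from typing import Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Return (F statistic, eta squared) for a one-way ANOVA."""
    values = [v for g in groups for v in g]
    n, k = len(values), len(groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more samples than groups")
    grand_mean = sum(values) / n
    group_means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2
                    for g, m in zip(groups, group_means) for v in g)
    if ss_within == 0:
        raise ValueError("F is undefined when there is no within-group variance")
    f_stat = (ss_between / (k - 1)) / (ss_within / (n - k))
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, eta_squared


# Toy example: a metric observed in three hypothetical similarity groups.
f_stat, eta_sq = one_way_anova([[1, 2, 3], [2, 3, 4], [3, 4, 5]])
print(f_stat, eta_sq)
```

A large F with a small eta squared is the pattern described in this section: the group means differ reliably, yet group membership explains only a small share of the total variance.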

4.4. Categorization Confidence

The relationship between message cosine similarity and user confidence in their categorization is shown in the middle column of Figure 2. The analysis of students’ categorization confidence showed a divergent trend for the student and the teacher in relation to message similarity. This is a surprising result, since the previous analysis of categorization accuracy indicated that both student and teacher messages that were more related to the current email were associated with better performance. However, confidence is a separate dimension from accuracy, as low-confidence correct answers and high-confidence incorrect answers can change the relationship between message embedding similarities and this metric of student performance. The cosine similarity between a student’s message and the email content was negatively associated with the student’s confidence rating (Pearson Correlation: , ANOVA: , , ).

In other words, students who more closely echoed the email’s content in their own messages tended to report lower confidence in their categorization decisions, but this pattern was not consistently supported across groups, as indicated by the ANOVA result. By contrast, the teacher LLM’s message similarity showed a positive correlation with student confidence, which was also statistically significant in ANOVA (Pearson Correlation: , ANOVA: , , ). This indicates that when the teacher’s response closely matched the email content, students tended to feel slightly more confident about their categorizations, although the effect size was small.

4.5. Categorization Reaction Time

The relationship between message cosine similarity and reaction time is shown in the right column of Figure 2. The relationship between reaction time and message similarity differed markedly by role. There was no significant association between the human student’s message similarity and their reaction time, with neither the Pearson correlation nor ANOVA (Pearson Correlation: , ANOVA: , , ), indicating that how closely a student’s message mirrored the email content did not measurably influence how quickly they responded. In contrast, the teacher LLM’s message similarity was significantly associated with longer reaction times according to the Pearson correlation, although ANOVA showed only a small effect size (Pearson Correlation: , ANOVA: , , ).

Higher cosine similarity between the teacher’s message and the email corresponded to increased time taken by students to complete the categorization task; although the effect was not conventionally significant with ANOVA, it shows a trend. In practical terms, when the teacher’s response closely resembled the email text, students tended to require more time to finalize their categorization, whereas the student’s own content overlap had little to no observable effect on timing. These results are presented as correlational patterns (from the regression analysis) and do not imply causation, but they highlight that teacher-provided content overlap was linked to slower student responses while student-provided overlap was not.

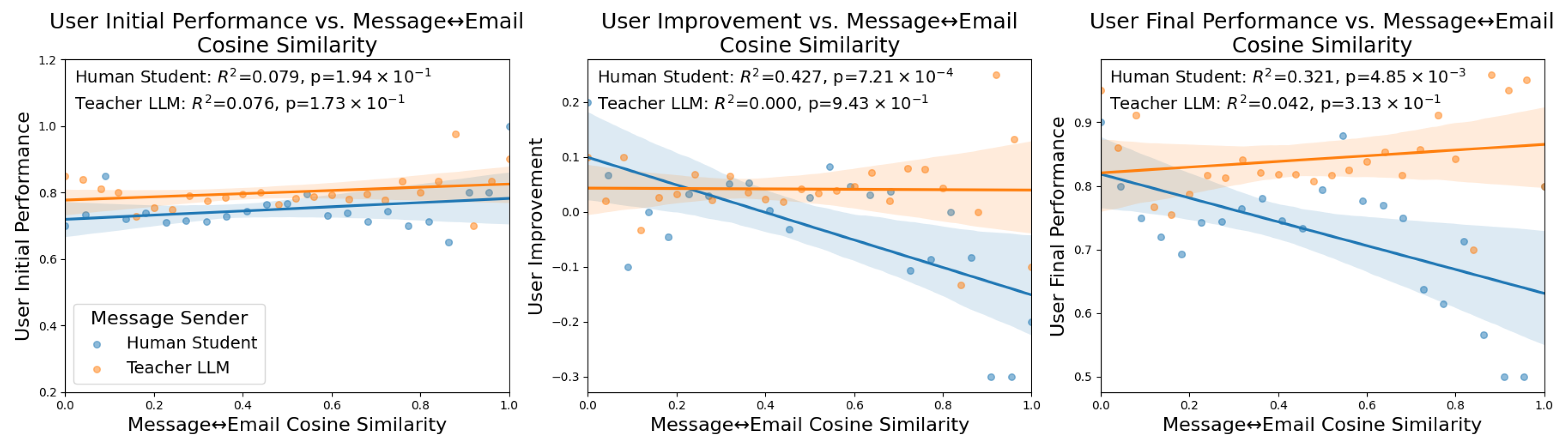

4.6. Student Learning Outcomes

The next analysis that we perform relates to the learning outcomes of the students, as well as their responses to the post-experiment questionnaire, which asked them whether they thought the emails that they observed were written by humans or an LLM. Note that in the three conditions we examine here, all of the emails were written and stylized with HTML and CSS code by a GPT-4.1 LLM, meaning that the correct perception of emails as AI-generated is 100 percent. The open-response question that is analyzed in the right column of Figure 3 is the student’s response to the question of how they made their decisions about whether an email was safe or dangerous.

4.6.1. User Initial Performance

The left column of Figure 3 compares the average message cosine similarity to the current email being observed by the student with the initial performance of the student. Here we see that neither the messages sent by human students nor those sent by the teacher LLM are strongly correlated with user initial performance. There is a slight positive trend for both regressions, where higher cosine similarity of student messages is associated with better initial performance (Pearson Correlation: , ANOVA: , , ), and similarly for teacher LLM similarity (Pearson Correlation: , ANOVA: , , ). However, both of these have low correlations with high p-values, and the ANOVA results show no significance and small effect sizes. This indicates no detectable relationship between the conversations of human students and LLM teachers and initial performance, at least when measured by message cosine similarity to emails. This makes intuitive sense, as the messages between students and the LLM teacher begin after this initial pre-training phase, when there is no feedback yet.

4.6.2. User Training Outcomes

The middle column of Figure 3 compares user improvement to our measure of message cosine similarity to emails. Here, we can see that only the messages sent by human students have cosine similarities to emails that are correlated with user improvement, as supported by the Pearson correlation. Interestingly, however, this is a negative trend, meaning that higher human message cosine similarity to emails is associated with lower average user improvement (Pearson Correlation: , ANOVA: , , ), though the ANOVA result is not statistically significant, indicating that the effect is not robust across groups. Meanwhile, this same comparison of teacher LLM messages shows no correlation at all (Pearson Correlation: , ANOVA: , , ). This goes against the intuition, established in the previous set of results, that conversations that focus on the content of emails are beneficial to student learning outcomes. However, we believe these results are not completely contradictory, as a human student sending messages about specific parts of emails, even including specific passages of the email, may indicate a high level of confusion about the categorization.

4.6.3. User Final Performance

The right column of

Figure 3 compares the user final performance to the message cosine similarity to emails. Similarly to the comparison to user improvement, here we see no correlation between the LLM teacher messages and user final performance (Pearson Correlation

, ANOVA:

,

,

), while the human student messages have a similar negative correlation (Pearson Correlation

, ANOVA:

,

,

). Both correlation measures support these outcomes. This supports the conclusions of the previous comparison of regressions, which suggested that participants who frequently make comments that reference specific parts of the emails they are shown may have worse training outcomes. Keeping these results in mind while observing the results of the regressions shown in

Figure 2 suggests that LLMs should seek to make their feedback specific and reference the emails that are being shown to participants, but steer human participants away from focusing too much on the specifics of the email in question in their own messages.

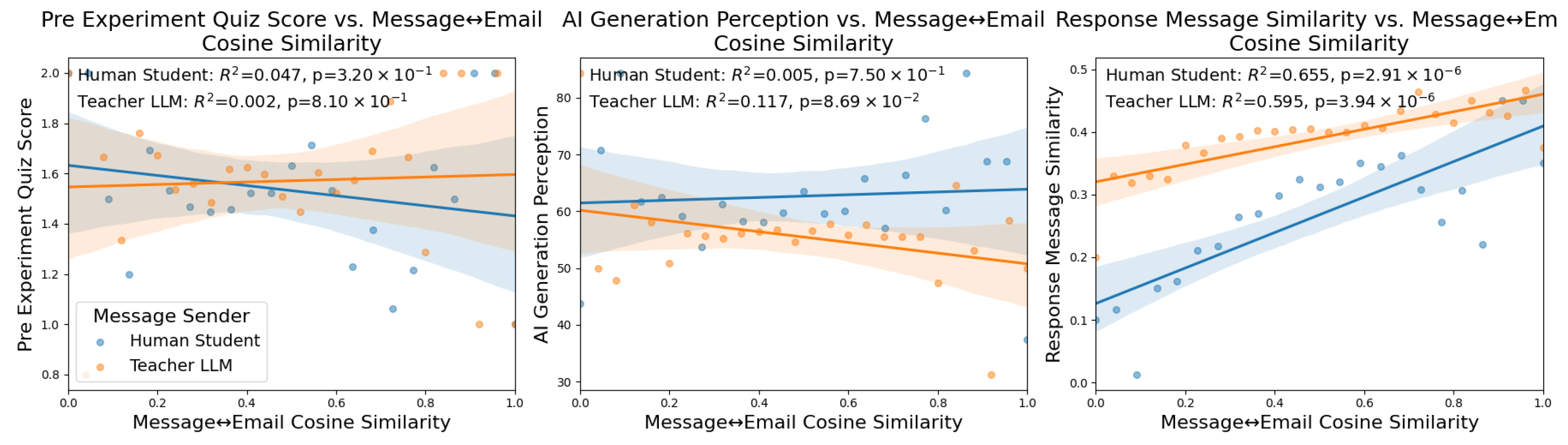

4.7. Student Quiz Responses

The next set of analyses compares the cosine similarity of messages and emails with the performance of students on the quizzes they completed before and after training.

4.7.1. Student Pre-Experiment Quiz

The left column of

Figure 4 compares the pre-experiment quiz score of students to the message cosine similarity between the emails and the messages sent by human students and LLM teachers. Here we see no correlation between the messages sent by either students (Pearson Correlation:

, ANOVA:

,

,

) or teachers (Pearson Correlation:

, ANOVA:

,

,

). As with the user initial performance, this makes intuitive sense since the base level of student ability should not have a direct impact on the way that students and teachers communicate relative to the email that the student is observing. One potential difference between these communications that is not directly measured in this analysis is the information within the email itself that may be focused on more or less in conversations depending on student initial ability.

4.7.2. Student Post-Experiment Quiz

The middle column of

Figure 4 compares participant perception of emails as being AI-generated with the similarity between the messages sent by human students and LLM teachers and the current email being observed. Here we see no correlation for messages sent by human students (Pearson Correlation:

, ANOVA:

,

,

) or for messages sent by the LLM teacher (Pearson Correlation

, ANOVA:

,

,

). There is a slight negative trend observable here, where a lower perception of emails as being AI-generated is slightly associated with a lower LLM teacher message cosine similarity. This is an interesting trend, as the true percentage of emails that are AI-generated is 100%; however, this trend is not statistically significant.

4.7.3. Student Post-Experiment Open Response

The right column of

Figure 4 compares the similarity between the current email being observed by a student and the open response messages that they gave to the question of how they made their decisions of whether emails were safe or dangerous. Here we see the strongest and most significant trend over all of the embedding similarity regressions we have performed. There is a strong positive trend for human student messages with both correlation measures (Pearson Correlation:

,

, ANOVA:

,

,

) and LLM teacher messages (Pearson Correlation

,

, ANOVA:

,

,

), where the more similar a message is to the email that the human student is observing, the more similar that message is to the open-response answer given at the end of the experiment. For the LLM teacher messages, this effect is less robust according to ANOVA.

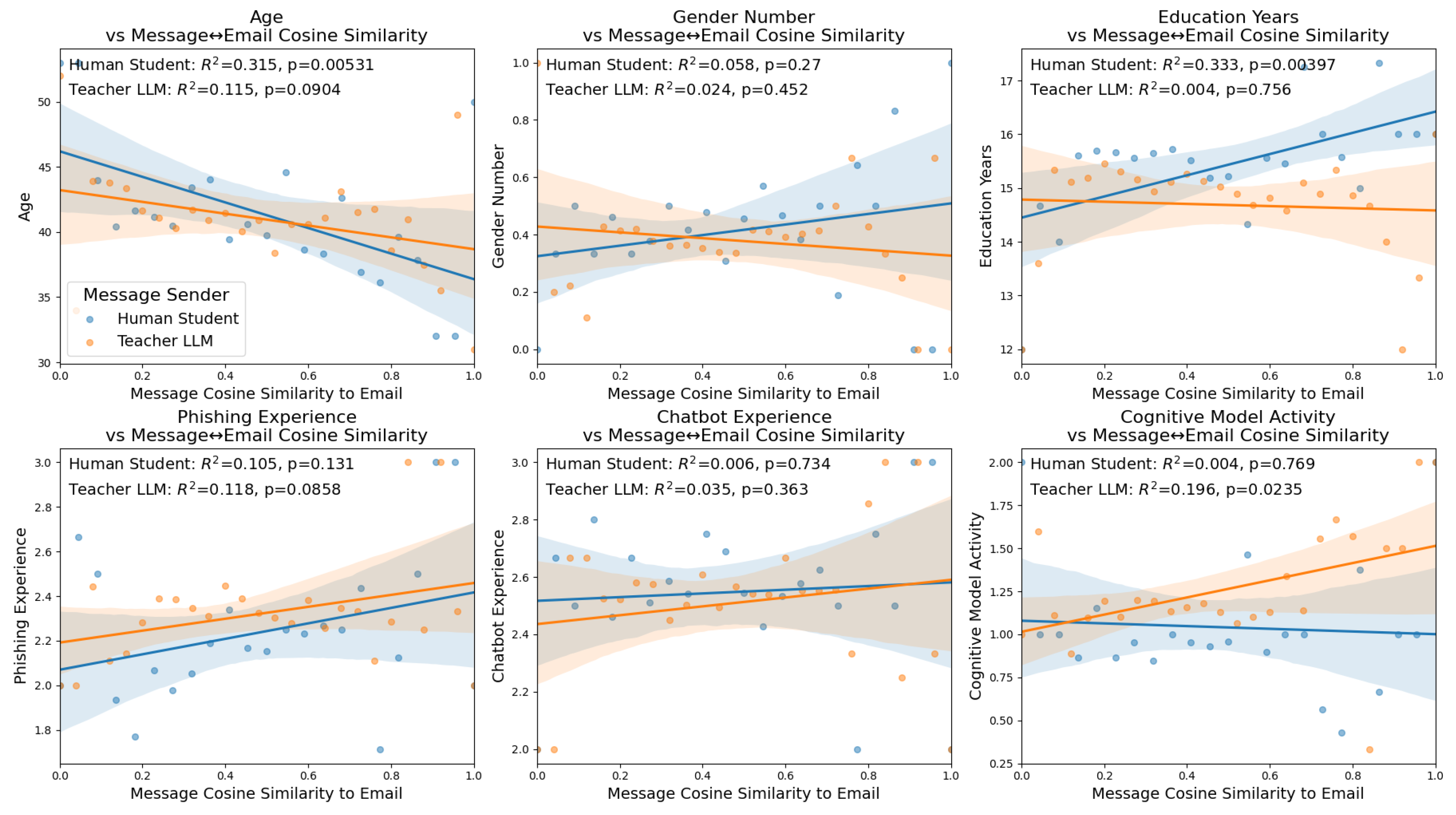

4.8. User Demographics

The final set of cosine similarity regressions we perform compares the similarity of messages sent by human students and LLM teachers and the different demographics measurements that were included in the original dataset.

4.8.1. Age

Comparing the age of participants with their conversations demonstrates a significant correlation for the messages sent by human students (Pearson Correlation: , ANOVA: , , ), and a non-significant but present trend for the messages sent by the teacher LLM (Pearson Correlation: , ANOVA: , , ). Both of these correlations trend negative, indicating that older participants send messages that are less similar to the emails they are currently observing. ANOVA indicates a small-to-moderate effect size for student message similarity across age groups, although it is not conventionally significant, since the effect is not consistently observed across all groups.

4.8.2. Gender

To perform a regression in the same format as the previous analyses, we arbitrarily assigned female to a value of 1 and male to a value of 0 (there were 0 non-binary students in this subset of the original dataset). This allowed for an analysis, shown in the top-middle of

Figure 5, which shows no correlation between the coded gender of students and the messages sent by either human students (Pearson Correlation:

, ANOVA:

,

,

) or by teacher LLMs (Pearson Correlation:

,

, ANOVA:

,

,

). This indicates that male and female students sent similar messages, and that the LLM replied with similar messages. While these results are not statistically significant, they do suggest that accounting for gender differences in how LLM teaching models give feedback to students is less of a priority compared to other subpopulations of students.
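Because the 0/1 assignment is arbitrary, it is worth confirming that it affects only the sign of the correlation and not its magnitude. The following sketch uses hypothetical similarity values and a hand-written `pearson_r` helper (an assumption for illustration, not the authors' code) to show that swapping the coding flips the sign of r and nothing else.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical message-email similarities for six students.
similarity = [0.42, 0.55, 0.38, 0.61, 0.47, 0.50]

# Coding A: female = 1, male = 0 (as in the text).
gender_a = [1, 0, 1, 0, 1, 0]
# Coding B: the reverse assignment.
gender_b = [1 - g for g in gender_a]

r_a = pearson_r(gender_a, similarity)
r_b = pearson_r(gender_b, similarity)

# The two codings give correlations of equal magnitude and opposite sign.
print(f"r (female=1): {r_a:.3f}, r (female=0): {r_b:.3f}")
```

With a binary predictor, this Pearson coefficient is the point-biserial correlation, so the choice of which group receives the value 1 determines only the direction in which the trend is reported.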

4.8.3. Education

Comparing the similarity of embeddings of messages sent between human students and LLM teachers demonstrates a correlation with the years of education that the student has received for messages sent by the human student (Pearson Correlation: , ANOVA: , , ) but not for the messages sent by the teacher LLM (Pearson Correlation: , ANOVA: , , ). The positive trend between the number of years of education and the human student message cosine similarity to emails indicates that students with more education send messages that more closely match the information contained in the emails they are observing. This effect is continuous but not significant with ANOVA, so it appears as a gradual trend rather than a stepwise jump between education categories. As with age, education level is another important grouping to account for when improving educational outcomes, meaning education level could be a target for future improvement in LLM teacher feedback.

4.8.4. Phishing Experience

The next analysis we performed compared the level of phishing experience of human students, as measured by the response that students gave to the number of times that they have received a phishing email. We again mapped this discrete categorization onto a value to perform a regression. When we compare this measure of experience to the cosine similarity of messages sent and emails, we see no significant correlation in either messages sent by human students (Pearson Correlation: , ANOVA: , , ) or the teacher LLM (Pearson Correlation: , ANOVA: , , ). While insignificant, both of these regressions demonstrate a slightly positive trend suggesting that more experienced users may be more likely to send messages related to the emails they are observing.

4.8.5. Chatbot Experience

Similar to phishing experience, chatbot experience was determined by mapping a multiple choice question onto values to allow for a regression. Interestingly, we see no correlation between email embeddings and the embeddings of messages sent by either human students (Pearson Correlation: , ANOVA: , , ) or teacher LLMs (Pearson Correlation: , ANOVA: , , ), with both regressions displaying near 0 trends and high p-values. This indicates that the conversations during training were equally likely to be related to the emails that were being observed by participants whether the student had little or a high amount of experience with LLM chatbots. Typically we would assume that participants would converse differently if they had more experience, but here it is important to note we are comparing one specific aspect of the messages, whether they are related to the email being observed, meaning other comparisons of these conversations may display a difference across chatbot experience level.

4.8.6. Cognitive Model Activity

The final regression that we performed looked at the ‘cognitive model activity’, which is a stand-in for the condition of the experiment. While not directly a demographic, this compares the messages sent by humans and the LLM based on the condition of the experiment. This metric was determined based on whether the IBL cognitive model used in the experiment performed no role (0), either determined the emails to send to participants or was used to prompt the LLM (1), or performed both of these tasks (2).

Comparing this measure of cognitive model activity which differed across experiment conditions demonstrates a positive and significant trend for messages sent by the LLM teacher, though the ANOVA shows no significant group-level effect (Pearson Correlation: , ANOVA: , , ). This indicates that LLM messages are more likely to align with emails when the cognitive model is more active, even if differences across groups are minimal. For human student messages, the Pearson correlation shows no significant relationship, but ANOVA indicates significant differences across conditions (Pearson Correlation: , ANOVA: , , ). This suggests that while overall message similarity is not linearly correlated with cognitive model activity, there are measurable differences in how students respond depending on the experimental condition.
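The divergence between the Pearson and ANOVA results for student messages can arise whenever group means differ in a non-monotonic way. The sketch below uses invented similarity values for the three condition codes (0, 1, 2) to illustrate this. The data and the helper names `pearson_r` and `one_way_anova` are hypothetical, and the example makes no claim about the actual group means in the dataset.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def one_way_anova(groups):
    """Return (F statistic, eta squared) for a list of groups of values."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - sum(g) / len(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_sq = ss_between / (ss_between + ss_within)
    return f_stat, eta_sq

# Hypothetical similarities per cognitive-model-activity code.
# The middle condition is high, the two outer conditions are equally low.
by_condition = {
    0: [0.2, 0.3, 0.4],
    1: [0.6, 0.7, 0.8],
    2: [0.2, 0.3, 0.4],
}

codes = [c for c, vals in by_condition.items() for _ in vals]
values = [v for vals in by_condition.values() for v in vals]

r = pearson_r(codes, values)
f_stat, eta_sq = one_way_anova(list(by_condition.values()))
print(f"Pearson r = {r:.3f}, F = {f_stat:.2f}, eta^2 = {eta_sq:.3f}")
```

In this toy case the linear correlation is essentially zero while the group-level effect is large, because a straight line cannot capture a pattern in which the middle condition differs from both extremes.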

4.9. Mediation Analysis

In addition to regression analyses, we are interested in the impact of each of the demographic variables (

Figure 3 and

Figure 4) on measures of student performance (

Figure 1 and

Figure 2). For brevity, we focus our analysis on the impact of demographic measures on correct categorization. Mediation analysis can be used to test whether the impact of a variable X on Y is at least partially explained by the effects of an intermediate variable M, called the ‘mediator’ [

58]. This analysis is commonly used in social psychology [

59], human–computer interaction [

60], and in LLM research such as investigations of potential gender biases in LLMs [

61]. This analysis was performed using the Pingouin Python package [

62]. All of the significant mediation effects reported in this section are summarized in

Table 1.
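To make the total, direct, and indirect effects concrete, the sketch below computes them by ordinary least squares on a small invented dataset. This is only an illustration of the standard product-of-coefficients decomposition, not the Pingouin implementation used in this work: the variable values are hypothetical, the helper names are assumptions, and significance testing of the indirect effect (commonly done by bootstrapping) is omitted.

```python
def centered_cross(xs, ys):
    """Sum of products of deviations from the mean for two sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys))

def mediation_effects(x, m, y):
    """Return (total, direct, indirect) effects of X on Y through mediator M.

    total    c  : slope of Y ~ X
    a           : slope of M ~ X
    direct   c' : coefficient of X in Y ~ X + M
    b           : coefficient of M in Y ~ X + M
    indirect    : a * b
    """
    sxx = centered_cross(x, x)
    smm = centered_cross(m, m)
    sxm = centered_cross(x, m)
    sxy = centered_cross(x, y)
    smy = centered_cross(m, y)

    total = sxy / sxx
    a = sxm / sxx

    # Closed-form two-predictor least squares (intercept handled by centering).
    det = sxx * smm - sxm ** 2
    direct = (sxy * smm - smy * sxm) / det
    b = (smy * sxx - sxy * sxm) / det
    return total, direct, a * b

# Hypothetical values: X a demographic, M message-email similarity,
# Y a categorization accuracy score.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
m = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0]
y = [1.0, 3.0, 2.0, 5.0, 4.0, 7.0]

total, direct, indirect = mediation_effects(x, m, y)
print(f"total = {total:.3f}, direct = {direct:.3f}, indirect = {indirect:.3f}")
```

For linear models fitted this way, the total effect equals the sum of the direct and indirect effects, which is why a significant indirect effect is read as part of the X to Y relationship passing through the mediator.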

4.9.1. Mediation of Student and Teacher Messages

Our first set of mediation analyses compared whether the impact of demographic variables on student correct categorization could be mediated by the cosine similarity of student or teacher message embeddings and email embeddings. Out of all of the demographic variables, three significant mediation effects were observed. The first was Age, which had a significant total effect (, , , ), a significant direct effect (, , , ), and a significant indirect effect (, , , ).

The next significant mediation effect was of the effect of AI generation perception on correct categorization. There was a significant total effect (, , , ), direct effect (, , , ) and indirect effect (, , , ).

The final significant mediation effect when using both student and teacher message similarities as a mediator is Response Message Similarity which had a significant total effect (, , , ), direct effect (, , , ), and indirect effect (, , , ).

4.9.2. Mediation of Teacher Messages Only

The first of the two significant mediation effects with respect to teacher message cosine similarity to emails is on Education Years which had a significant total effect (, , , ), direct effect (, , , ), and indirect effect (, , , ).

The second significant effect of teacher messages is that of Response Message Similarity, which had a significant total effect (, , , ), direct effect (, , , ), and indirect effect (, , , ).

5. Results

Before beginning our summary and interpretation of the above analysis, it is important to reiterate the reason for our analysis and the meaning of statistical significance in the context of correlational analysis. We initially sought to perform an exploratory analysis to compare potential areas of improvement in LLM teacher feedback generation. This is a broad question with many possible methods of improvement, necessitating the narrowing down of potential methods. While these results point to possible methods of improvement, they do not exclude the possibility that alternatives may also lead to improvements in LLM teacher feedback.

Across the 30 regressions that we performed, 12 reached statistical significance and 6 of the others showed meaningful trends. We additionally performed 25 mediation analyses which showed 5 significant mediation effects. Taken together, these results form a coherent picture of how email embedding cosine similarity to embeddings of messages sent by both students and teachers relates to student performance and learning. The following analyses summarize and synthesize the results of our message–email similarity analysis and make actionable recommendations for both real-world online training platforms and future studies of human learning using natural language feedback provided by LLMs.

For categorization accuracy, both student and teacher message–email similarity were positively correlated with student performance. The similarity between student messages and the emails showed a moderate effect that is not consistent across categories, while the same metric for the LLM teacher messages showed a strong effect that is also confirmed by ANOVA, suggesting that the LLM teacher messages that closely aligned with the observed email were most useful for guiding correct responses. This makes intuitive sense, since feedback that references the email a participant is currently observing would typically be more relevant than less email-related feedback. While this analysis is correlational, it does identify one area that future LLMs could be trained to optimize, by encouraging or preferring responses that are more closely related to the emails that students are currently categorizing.

Building on this, the confidence results gave important insights: students who echoed the content of the emails more closely actually felt less confident, whereas higher teacher message–email similarity was associated with higher confidence. This correlation suggests that a student who frequently asks questions or makes comments that directly reference parts of the emails in the training may need more experience and more varied feedback to achieve higher improvement levels. Taken together, these findings indicate that while alignment with the email improves accuracy, when it is the student providing the overlap, it may signal uncertainty, whereas teacher-provided overlap reassures students.

For LLM-supported learning platforms, this finding suggests potential avenues for development toward greater user engagement in the conversation with the LLM teacher during email categorization, which also extends the insights reported in [

4,

44]. Also, such a level of guidance cannot be provided in traditional in-person training [

11].

Taking reaction times into account as well, we found that teacher message–email similarity was significantly associated with longer response times in terms of Pearson Correlation, while student message–email similarity showed no significant effect. The ANOVA results suggested that neither variable exerted a statistically significant effect. While accuracy and confidence are clear objectives for improvement, and both increase with higher teacher message–email similarity, a preference for either higher or lower reaction time is less obvious. Taking these reaction time results together with the previous correlation analysis may indicate that while teacher message–email similarity may improve the important metrics of accuracy and confidence, this may come at the cost of a longer time requirement for students. In some educational scenarios this may be a trade-off, if student time is a significantly constrained resource. However, in other settings the improvement in accuracy and confidence correlated with teacher message–email similarity may be much more important, making the increased time requirement largely irrelevant.

When we turn from immediate task performance to learning outcomes, the results show a different pattern. Greater similarity between student message and the email text was negatively associated with both learning improvement and final performance, while teacher message–email similarity showed no significant relationship in either case. So high student message–email similarity predicts weaker learning, suggesting that anti-phishing training should encourage flexible strategies rather than focusing on specific examples. Taken together with the results on confidence and accuracy, this set of findings indicates that student message–email similarity is positively associated with immediate correctness but negatively associated with learning gains and final outcomes, while teacher message–email similarity is linked to immediate performance benefits without clear effects on longer-term improvement.

Students who closely echo phishing emails may rely too heavily on surface features, indicating that training should emphasize broader pattern recognition rather than simple repetition. Overall, over-reliance on specific email features may hinder broader learning and decision-making, highlighting the importance of teaching generalizable strategies for identifying phishing attempts. This becomes particularly important in the context of GAI-generated phishing emails, as these may be detected based on patterns beyond mere textual features [

31,

32,

33].

The strongest effects we observed came in the post-experiment open responses, where both student and teacher message–email similarities were strongly and positively related to the strategies that students reported using. This is an interesting result as the open-response questions ask the student to reply on their general strategy, rather than a specific email they observed. This indicates that it may be useful for LLM teachers to discuss the strategies that students use to determine if an email is safe during their feedback conversations with students.

Because these questions asked students to describe their general strategy rather than respond to a specific email, this suggests that anti-phishing training could benefit from emphasizing strategy development over rote memorization of individual examples [

43], building on what was previously outlined in this regard. GAI LLMs could support this by providing strategy-focused feedback and offering personalized prompts when students rely too heavily on surface cues, as well as guiding post-task reflection on the strategies applied. Together, these approaches could help address a common challenge in phishing defense: students’ tendency to over-rely on specific email features rather than developing robust, transferable detection strategies.

Our analysis of the LLM teacher and human student message–email similarity with respect to student demographics revealed important implications for improving diversity, equity, and inclusion in online training platforms. One of the most important aspects of equality in education is effective progress for students of all ages. This is especially important in anti-phishing education for the elderly, as they are one of the most susceptible subpopulations with regard to phishing attempts [

63]. These results indicate that older age groups may be less likely to have conversations about the specific emails they are observing in anti-phishing training. Taking this into account when providing natural language educational feedback could improve the learning outcomes of older individuals.

Finally, our analysis of mediation effects provided further support for the evidence that the types of messages that are sent between students and teachers, as well as the types of messages teachers send independently, can alter the impact of demographics on student performance. This was identified by the five mediation analyses with significant indirect effects, demonstrating that part of the impact of these demographic effects on correct categorization can be explained by how students and teachers communicate. This indicates that by improving the way that teacher LLMs communicate, such as by using the metrics we suggest in this section, there may be a similar improvement in the performance of students that reduces the biases related to specific subpopulations of students. This is a major target for improving LLM teacher quality, as it can potentially lead to more equitable outcomes in the application of LLM teachers, and reduce some of the concern over their widespread adoption.

6. Discussion

In this work we present a dataset of embeddings of messages sent between LLM teachers and human students in an online anti-phishing educational platform. The goal of this dataset is to support improving the quality of LLM teacher educational feedback in a way that can account for potential biases that exist within LLMs and that raise concerns regarding their widespread adoption. Our analysis revealed relationships between metrics of educational outcomes and the semantic alignment of educational feedback discussions, as measured by the cosine similarity of message embeddings and the educational email embeddings. In general, we found that when the LLM teacher’s feedback closely mirrored the content of the email under discussion, students performed better on the immediate task. We additionally found some correlations between these educational outcomes and the similarity of student messages to email examples, but overall the conclusions were more mixed compared to the analysis of teacher messages. Additionally, our mediation analysis provided further support that teacher message and email embedding similarity can serve as a mediator for the effect of several important demographics on student performance.

These results suggest that message–email similarity can be an important target for testing methods in training, fine-tuning, and prompting without the requirement of running additional tests with human subjects, which can be costly, or relying on simulated LLM students, which can have issues transferring to real-world student educational improvement. Moreover, these results have applications outside of describing targets for testing methods by detailing some of the most important subpopulations to focus on for improvement of the quality of LLM teacher responses in the context of anti-phishing training. Specifically, age, education, phishing experience, and experience with AI chatbots were identified as demographics in which certain subpopulations may be disproportionately negatively impacted by lower quality teacher LLMs. Our mediation analysis, as well as ANOVA and regression analyses, provided evidence that improving the quality of LLM teacher responses using the methods we suggest can have a positive impact on the educational outcomes of these subpopulations. Full mediation and ANOVA tables are presented in

Appendix A.

Another possible approach to incorporating the lessons learned from this work into the design of new LLM teaching models is to attempt to detect and address learner confusion over phishing emails proactively. The negative correlation of student message similarity with learning outcomes indicates that over-fixation on specific aspects of the email examples can be a real-time signal that LLM teachers can use to adjust their feedback. Whether done through chain-of-thought reasoning or other methods, leveraging the similarity of user messages to their emails can give insight into their learning and indicate a way to improve training by adjusting the teaching approach in response to these types of messages. In the dataset we present, we noted a correlation between teacher and student message similarity with respect to several metrics, which indicates that LLM teachers are often as narrowly focused as students. The degree of this specificity could be adjusted in response to student message similarity to emails, so that the LLM teacher avoids merely mirroring the specificity that user messages exhibit.
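One way such a real-time signal might be implemented is sketched below. It is a hypothetical illustration rather than a component of the platform studied here: the function name `flag_overfixation`, the threshold of 0.8, and the window of three messages are all assumptions chosen for the example, and suitable values would need to be determined empirically.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def flag_overfixation(email_vec, student_vecs, threshold=0.8, window=3):
    """Return True if recent student messages closely echo the email.

    The mean cosine similarity of the last `window` student message
    embeddings to the email embedding is compared against `threshold`.
    Both parameters are illustrative assumptions.
    """
    recent = student_vecs[-window:]
    if not recent:
        return False
    mean_sim = sum(cosine_similarity(email_vec, v) for v in recent) / len(recent)
    return mean_sim > threshold

# Hypothetical two-dimensional embeddings.
email = [1.0, 0.0]
echoing_student = [[1.0, 0.0], [1.0, 0.1]]
strategy_student = [[0.0, 1.0], [0.2, 1.0]]

print(flag_overfixation(email, echoing_student))   # messages mirror the email
print(flag_overfixation(email, strategy_student))  # messages diverge from it
```

When the flag is raised, a teaching model could respond by shifting its feedback toward general detection strategies instead of matching the student's level of specificity.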

In addition to the significant positive correlations we report, there are also interesting negative correlations that differ from expectations given the correlation of other demographics and educational metrics. Specifically, we found that students who frequently send messages that are more closely related to the emails being observed actually had worse overall performance and training improvement. This can be explained by several different causes, such as less knowledgeable students more often choosing to ask questions that make reference to specific aspects of the emails they are observing, rather than the topic they are learning more broadly. This type of effect may allow for a chain-of-thought reasoning LLM model to identify when students are sending messages of this type, and adjust the method of providing educational feedback based on this insight.

By implementing these recommendations, anti-phishing and other types of online training platforms that use LLMs can potentially produce more responsive educational tools rather than one-size-fits-all chatbots that could disproportionately negatively impact the educational quality of important subpopulations. However, there are limitations to this work that raise important areas for future research. As mentioned, we performed only regression and mediation analysis on the demographics and learning outcomes of the dataset we had available, together with our introduced embeddings of conversations. While this allowed us to make useful recommendations for future LLM teaching models, it is a limited view of the ways that LLMs can be improved. One useful area of future research that could leverage this same dataset or collect new data would be to compare the prompting of the LLMs with how they output educational feedback. LLM prompting was not a major focus of this research, as we chose to create embeddings of the messages themselves, but a similar approach using LLM prompts could also be used to draw conclusions about important targets of LLM teacher optimization.

Beyond the work we present here, there are many additional contexts to which LLM teaching feedback improvement can be applied. Educational settings are one high-risk application of LLMs, which requires significant research into improving response quality and ensuring a lack of bias. Part of the reason for this is that in many situations humans will be interacting directly with the LLM without a dedicated human teacher. Alternative settings may have lower risks associated with them, such as teaming settings where humans use LLMs in cybersecurity contexts for pair programming or as a tool for network analysis, threat detection, and a variety of other applications. Further research into how the results here can be applied to these settings can add to our understanding of how LLMs interact with humans.

Ethics Statement: The use of large language models (LLMs) in education carries significant ethical challenges. LLM outputs can be impacted by existing societal biases, such as those related to race, gender, or age. These biases have the potential to cause unequal learning experiences or reinforce harmful stereotypes. The dataset presented in this work, and the recommendations we give for future LLM teaching models, are intended to mitigate the issues associated with unequal learning outcomes through our analysis of the learning of specific subpopulations and how it can be improved. Training the LLMs used to converse with human participants, as well as the embedding models used to create the dataset we present, demands immense computational resources, contributing to carbon emissions and other environmental impacts. Moreover, many widely used LLMs are built on datasets that include text gathered without the creators’ knowledge or consent, raising serious questions about intellectual property rights, privacy, and the equitable sharing of benefits from such data. We attempted to mitigate these concerns through our analysis of different open- and closed-source embedding models in their effectiveness at relating embeddings to student learning outcomes. We additionally compared embedding models of different sizes to evaluate how smaller, less computationally intensive models fare in our applications.