1. Introduction

In recent years, the rapid development of social platforms has established them as a primary medium for expressing emotional opinions. Users now express their thoughts and emotions not only through text but also through other modalities, including images and videos. Early sentiment analysis relied primarily on text mining. However, sentiment data on contemporary social platforms frequently take multimodal forms, which makes it difficult to capture sentiment comprehensively through text-based mining alone. Single-modal sentiment analysis is often constrained by the limited information and feature sparsity of individual data sources, which hinders improvements in sentiment classification accuracy. In contrast, multimodal sentiment analysis can mitigate these limitations by integrating complementary features from different modalities and their latent inter-modal correlations, thereby producing more reliable emotion recognition. For example, the emotional tendency of a video can be inferred from textual, visual, and auditory cues. Nonetheless, multimodal approaches introduce additional technical challenges in processing and analyzing multi-source heterogeneous information [1]. As a result, multimodal sentiment analysis has garnered significant research attention: it not only offers more precise emotion identification but is also widely applicable in domains such as rumor detection [2], public opinion monitoring [3], and content recommendation [4].

A key objective of multimodal sentiment analysis is to reinforce sentiment cues that are consistent across modalities while exploiting the complementary differences among modalities to improve prediction accuracy [5]. As illustrated in Figure 1, when the image and the text convey the same emotion, the cross-modal association should be strengthened; conversely, when they diverge, the differences should be preserved so that the emotional signals can complement each other. Numerous studies have focused on optimizing interactive fusion techniques for heterogeneous modalities. For instance, Hu et al. [6] achieved fusion through feature concatenation; Zhu et al. [7] investigated image-text relationships using a gating mechanism; and Xiao et al. [8] employed bidirectional interaction networks to facilitate modality interactions. Shen et al. [9] addressed two key issues: clinical depression diagnosis lacks objective indicators and is prone to the subjectivity of doctors and patients, while existing EEG-based automatic depression diagnosis methods neither resolve the high individual variability of EEG signals nor estimate the uncertainty of their recognition results. They proposed UA-DAAN, which enhances the transferability of class-related features between domains and improves model robustness, accuracy, and reliability; their experimental results confirm its effectiveness in depression recognition. However, detail loss often occurs during the interaction and fusion of multimodal data. Most existing methods overlook this issue and neglect the significance of modality-specific local sentiment cues, which limits their ability to achieve effective emotional complementarity. In addition, they remain inadequate in addressing semantic misalignment and modality-specific noise. Together, these limitations constrain the emotion recognition capabilities of sentiment analysis models.

In summary, current multimodal sentiment analysis continues to face two core challenges. On the one hand, the significance of modality-specific local sentiment information for emotional complementarity is often overlooked. On the other hand, existing fusion strategies remain limited, which hampers semantic alignment and reduces robustness to noise. To address these issues, a novel method, the Multimodal Alignment and Hierarchical Fusion Network (MAHFNet), is proposed. First, an attention-guided alignment and interaction module is designed to model the semantic interaction between text and image via a cross-modal attention mechanism. Concurrently, a contrastive learning mechanism is introduced that encourages semantically consistent image-text pairs to cluster and inconsistent ones to separate. This design enhances the model’s ability to capture cross-modal emotional consistency and improves its robustness against noisy or conflicting information. Building on this foundation, an intra-modal emotion extraction module is employed to extract local emotional features from both the textual and visual modalities. These features capture fine-grained emotional cues, such as sentiment-laden words in text or expressive regions and color tones in images, which are often overlooked in cross-modal interaction modeling yet are critical for emotional understanding. Subsequently, the extracted local emotional features and the cross-modal interaction features are fed into a hierarchical gated fusion module. Through a cross-gating mechanism, the local emotional features are fused so as to suppress redundant or irrelevant information while emphasizing salient emotional cues. Finally, the fusion results and the cross-modal interaction features are further integrated via a multi-scale attention gating module, which aims to capture hierarchical dependencies between local and global emotional information. This integration ultimately enhances the model’s capacity to perceive and integrate emotions across multiple semantic levels.

The main contributions of this paper are summarized as follows:

A hierarchical fusion network with multimodal alignment is proposed, which constructs a dual-pathway structure for cross-modal alignment and modality-specific local emotion modeling. In addition, a hierarchical gated fusion mechanism is introduced to facilitate the multi-level integration of intra-modal local emotion features and shared cross-modal information, thereby enhancing emotion consistency modeling and multi-granularity emotional perception.

Two gated fusion submodules are designed, namely the Cross-Gated Fusion module (CGF) and the Multi-Scale Attention Gating module (MAG). The CGF dynamically adjusts the emotional contributions of the modalities, while the MAG integrates local and global emotion features to capture hierarchical semantic relationships in emotional expression.

Extensive experiments on three public multimodal sentiment analysis datasets demonstrate that the proposed model effectively captures both local and global emotional cues and achieves comprehensive fusion, thereby improving semantic alignment and robustness to noise. Experimental results indicate that MAHFNet outperforms existing baseline methods.

The remainder of this paper is organized as follows:

Section 2 comprehensively reviews pertinent existing literature;

Section 3 details the methodology employed;

Section 4 presents the experimental procedures and corresponding results;

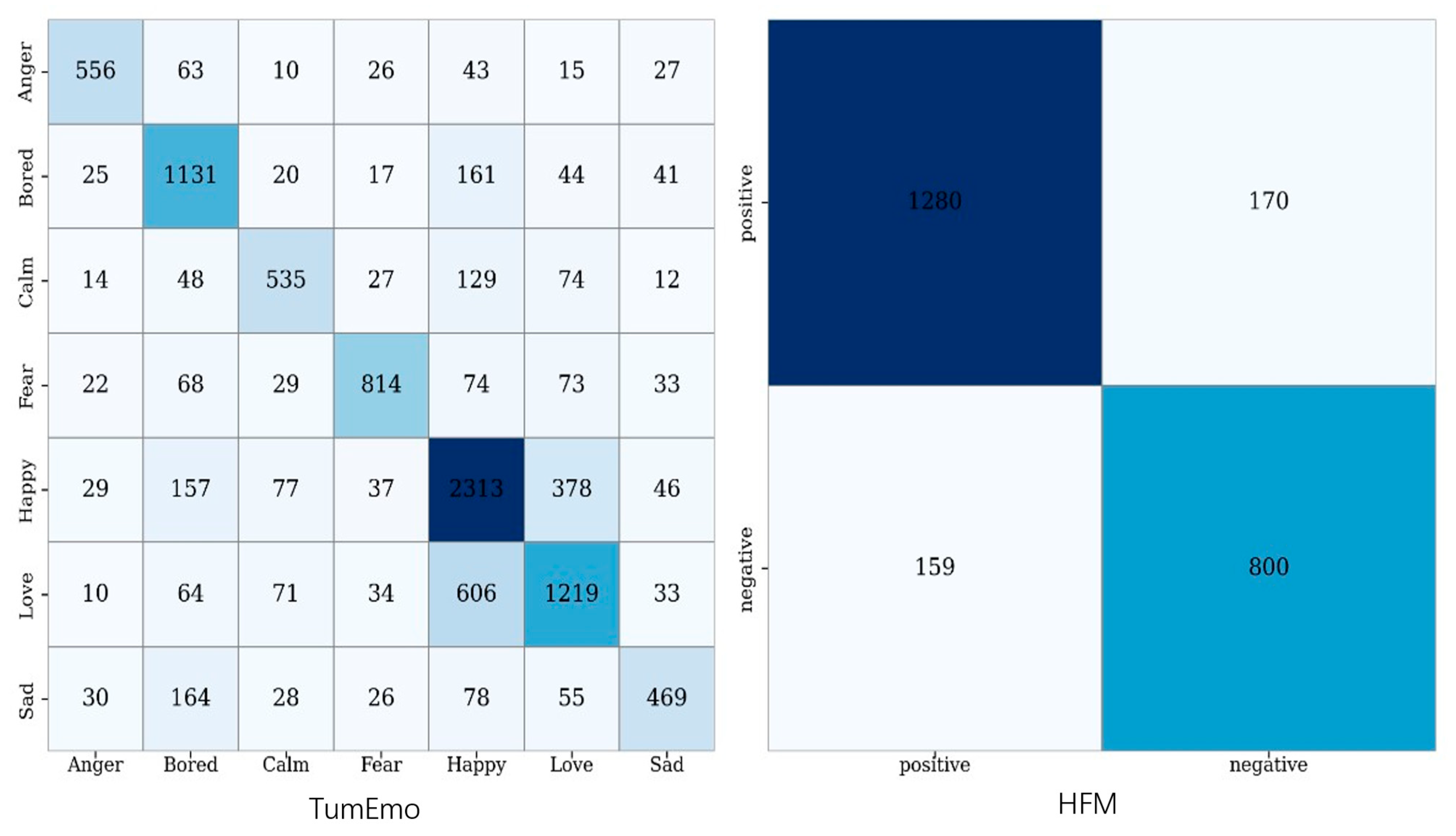

Section 5 evaluates and analyzes model performance based on the confusion matrix;

Section 6 summarizes the main contributions of this paper and draws conclusions, while also pointing out the future work to be carried out.

2. Related Work

Effectively modeling the relationships between modalities is a central challenge in multimodal sentiment analysis. However, many existing methods rely on simple approaches, such as direct feature concatenation or basic attention mechanisms, for cross-modal interaction; these fail to adequately capture complex inter-modal dependencies and often result in suboptimal performance. Consequently, increasing research effort has been devoted to developing more effective strategies for modality interaction and fusion. For example, Xu et al. [10] introduced the Multi-Interactive Memory Network (MIMN), which captures correlations between textual and visual modalities through multiple layers of interactive attention and contextual memory.

With the emergence of Transformers [11] and pre-trained models such as BERT [12] and ViT [13], multimodal sentiment analysis has advanced significantly. Hang et al. [14] introduced a text-centered fusion network with cross-modal attention (TeFNA). Wang et al. [15] introduced an end-to-end multimodal aspect-based sentiment analysis framework that includes an image-to-text conversion module, which transforms visual data into BERT-compatible implicit token sequences, and an aspect-oriented filtering module, which dynamically filters visual noise using a dual attention mechanism. Yang et al. [16] introduced a multi-grained fusion network with self-distillation (MGFN-SD), which addresses limitations such as coarse-grained semantic loss and the omission of image–aspect correlations through multi-granularity representation learning and a self-distillation mechanism. Kim et al. [17] introduced the AOBERT model for multimodal sentiment analysis. Yu et al. [18] presented a Hierarchical Interactive Multimodal Transformer (HIMT) for modeling deep modality interactions. Chen et al. [19] introduced a Hierarchical Cross-modal Transformer (HCT) that models interactions between text and images with a Transformer-based architecture. Le et al. [20] introduced a Transformer-based fusion model designed to unify modality representations via joint learning.

Numerous studies have also employed Graph Convolutional Networks (GCNs) for multimodal sentiment prediction, leveraging graph structures to model the nodes and edges within multimodal data. This approach is particularly well-suited to capturing complex relationships and structural dependencies among modalities. For instance, Lu et al. [21] proposed a heterogeneous graph neural network (Hete-GNNs) model that shares sentence sequence features through interactive aspect word encoding and constructs heterogeneous semantic graphs (integrating syntactic dependency trees, sentiment prior dictionaries, and part-of-speech tagging) to solve the problem of semantic confounding in multi-target sentiment analysis. Lu et al. [22] proposed the Coordinated Joint Translation Fusion (CJTF) framework, which enhances textual emotion representation using an emotional interaction graph. In their approach, cross-modal masked attention is used to accurately identify shared semantic features, while a translation-awareness mechanism reconstructs modality-specific representations. However, the performance of GCN-based models remains highly dependent on the quality of the input graph structure: inaccurate or incomplete connectivity among graph nodes can degrade overall model performance.

In addition, incorporating external knowledge has become a common strategy in multimodal sentiment analysis. Wang et al. [5] introduced the Multiple Attention-based Multimodal Sentiment Analysis (MAMSA) framework, in which sentiment attention derived from a sentiment matrix highlights emotion-relevant information in both the text and image modalities. Zhou et al. [23] used SenticNet to generate sentiment scores, which were integrated into the multimodal fusion process. Dong et al. [24] proposed knowledge-graph-based cross-modal feedback interactions, which dynamically enhance modality representations through a self-feedback feature masking mechanism and incorporate knowledge graph embeddings to supplement external semantic knowledge. Multi-channel information is then integrated through global feature fusion, significantly improving sentiment prediction accuracy in complex scenarios. However, approaches that rely on external knowledge typically introduce additional computational overhead and often yield limited practical gains.

Meanwhile, multi-level fusion and segment-level interaction are becoming increasingly important in multimodal sentiment analysis. Liu et al. [25] introduced a cascaded multichannel and hierarchical fusion (CMC-HF) model, which captures cross-modal interaction information through a hierarchical fusion framework. Yang et al. [26] employed stacked attention mechanisms to perform fusion via multiple interactions between text and image segments.

While existing work on multimodal sentiment analysis (MSA) has attempted to optimize cross-modal interaction through designs such as cross-attention mechanisms and multi-stage fusion, it still exhibits notable limitations in modeling complex emotional expressions. On the one hand, although MAMSA achieves late-stage fusion via cross-attention and residual structures, it focuses on single-scale feature correlation and does not fully account for the deep local-global interaction of emotional semantics, which leaves it insufficiently adaptable to multi-granularity emotional expressions. On the other hand, the BIT model relies on repeated concatenation operations for final feature fusion. This static feature stacking lacks awareness of dynamic cross-modal relationships, so it cannot adaptively adjust the emotional contribution weights of different modalities according to the heterogeneity of emotional expressions, and it is therefore prone to interference from redundant modal information.

To address these limitations, this study proposes MAHFNet. Specifically, building on a dual-path architecture that pairs cross-modal alignment with intra-modal local emotion modeling, a hierarchical gated fusion mechanism is constructed to achieve multi-level integration of intra-modal local emotional features and cross-modal shared information. To tackle the specific issues identified above, two modules are designed:

Cross-Gated Fusion (CGF) Module: By dynamically adjusting the emotional contribution weights of multiple modalities, it effectively filters modal redundancy and enhances complementary information, thereby addressing the insufficient modeling of dynamic cross-modal relationships.

Multi-Scale Attention Gating (MAG) Module: By integrating local and global emotional features, it establishes multi-granularity emotional semantic correlations, overcoming the limitation that single-scale attention cannot cover complex emotional expressions.

Table 1 further compares the proposed MAHFNet with MAMSA and BIT along the core design dimensions of their architectural components, highlighting the distinct positioning of this study.

3. Method

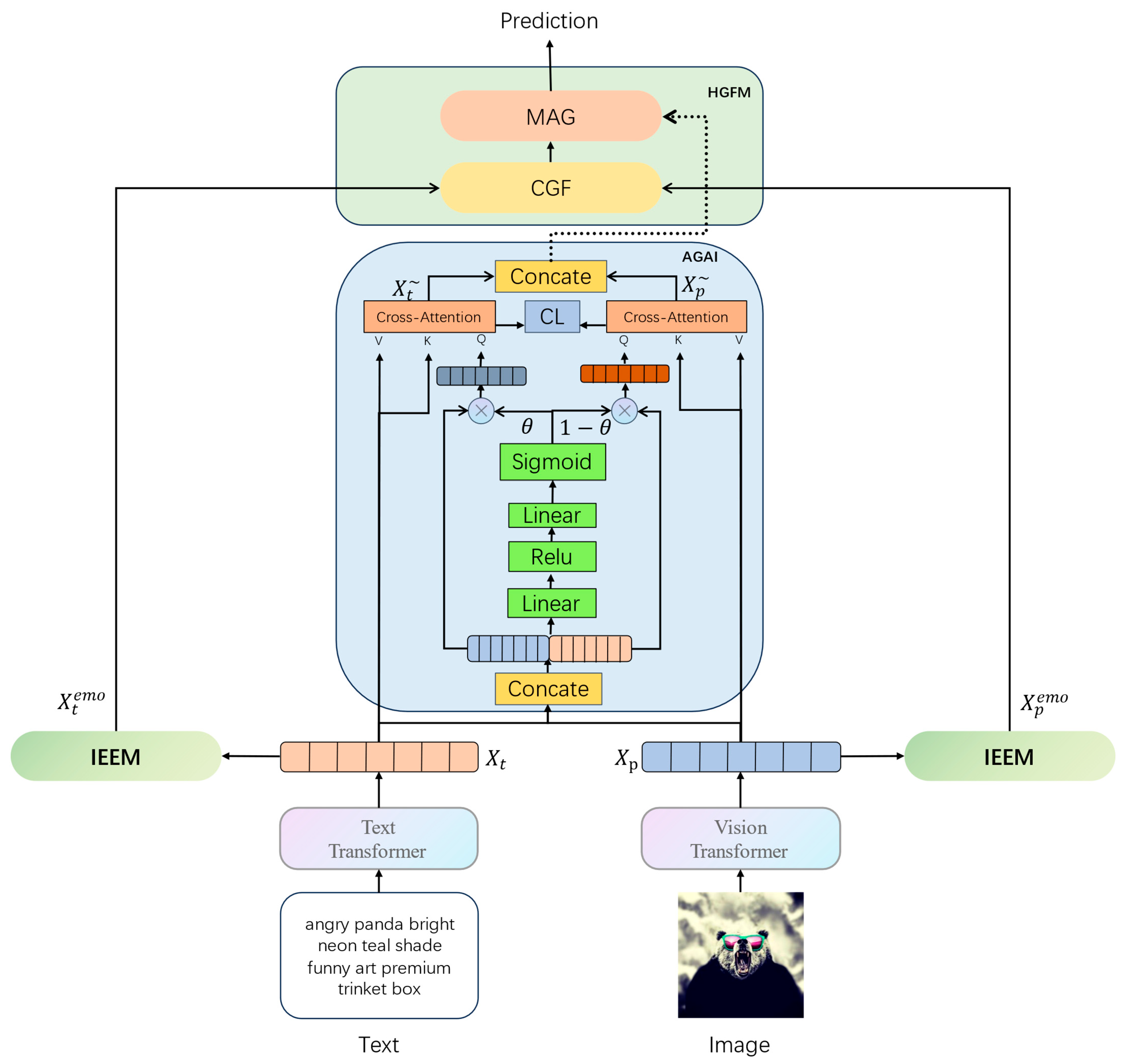

This section presents the overall architecture of the proposed MAHFNet. As shown in Figure 2, the model consists of three key components: (1) an Attention-Guided Alignment and Interaction module (AGAI), (2) an Intra-Modal Emotion Extraction Module (IEEM), and (3) a Hierarchical Gated Fusion Module (HGFM). Detailed configuration information can be found in Table 2.

The purpose of MSA is to infer sentiment from multimodal signals, here text (T) and image (P). MSA can generally be formulated as either a classification or a regression task; in this work it is treated as a classification task. The model therefore takes the text T and the image P as input and outputs a sentiment label.

3.1. Feature Extraction

For the text modality, a sentence consists of a sequence of words. The text Transformer branch of CLIP [27], which has been pre-trained on 400 million image-text pairs, is employed. To acquire word-level features, the final pooled representation is discarded and the token-level outputs are preserved. Each word is thereby encoded as a 512-dimensional feature vector, so the sentence is represented by a feature matrix with one row per word and feature dimension d = 512.

For the image modality, the Vision Transformer (ViT) architecture [13] is followed: each image is divided into a sequence of non-overlapping 16 × 16 pixel patches, which serve as visual tokens. To extract patch-level features, the vision branch of the CLIP model is adopted. As with the text modality, the final pooled token is discarded and the individual patch embeddings are retained. Each image patch is thus represented as a 512-dimensional feature vector, so the image is represented by a feature matrix of n = 196 patch embeddings with embedding dimension d = 512.
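To make the feature shapes concrete, the following minimal sketch counts ViT patches and returns the shapes of the two feature matrices. The 224 × 224 input resolution is an assumption (it is not stated in the text), chosen because 224 / 16 = 14 patches per side yields the stated n = 196. The helper names are hypothetical, and the sketch treats each word as one token, whereas CLIP's tokenizer actually operates on byte-pair-encoded subword tokens.

```python
def vit_patch_count(image_size: int, patch_size: int) -> int:
    """Number of non-overlapping square patches for a square input image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size  # patches along one side
    return per_side * per_side


def feature_shapes(num_words: int, image_size: int = 224,
                   patch_size: int = 16, d: int = 512):
    """Shapes of the token-level text and patch-level image feature matrices."""
    text_shape = (num_words, d)  # one d-dimensional vector per word
    image_shape = (vit_patch_count(image_size, patch_size), d)  # one per patch
    return text_shape, image_shape


print(vit_patch_count(224, 16))      # 196
print(feature_shapes(num_words=20))  # ((20, 512), (196, 512))
```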

3.2. Attention-Guided Alignment and Interaction Module (AGAI)

To capture semantic interactions between text and image while mitigating modality-specific noise and conflicts, an Attention-Guided Alignment and Interaction (AGAI) module is proposed. This module conducts fine-grained cross-modal interaction and contrastive alignment through a three-step process.

3.2.1. Dynamic Modality Weighting

Given the input textual and visual features, they are first concatenated and processed by a multi-layer perceptron followed by a sigmoid function to produce a gating coefficient whose values lie in (0, 1). The gate is then used to adaptively modulate the contribution of each modality.

This dynamic reweighting enables the network to selectively emphasize informative content from either modality according to its contextual relevance, which helps suppress noise introduced during feature interaction.
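The gating step can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' implementation: mean-pooling each modality before concatenation, the single ReLU hidden layer, and applying the gate as g to text and (1 − g) to image are all assumptions, since the original equation is not recoverable from the text. The function name and random weights are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def dynamic_modality_weighting(text, image, w1, b1, w2, b2):
    """Gate text (m, d) and image (n, d) features with a shared coefficient.

    Assumptions: both modalities are mean-pooled before concatenation, the MLP
    has one ReLU hidden layer, and the gate is applied as g to text and
    (1 - g) to image.
    """
    pooled = np.concatenate([text.mean(axis=0), image.mean(axis=0)])  # (2d,)
    hidden = np.maximum(0.0, pooled @ w1 + b1)  # ReLU hidden layer
    g = sigmoid(hidden @ w2 + b2)  # (d,), every entry in (0, 1)
    text_weighted = g * text  # broadcast over the m tokens
    image_weighted = (1.0 - g) * image  # broadcast over the n patches
    return text_weighted, image_weighted, g


rng = np.random.default_rng(0)
d, h = 8, 16
text = rng.normal(size=(5, d))
image = rng.normal(size=(196, d))
w1, b1 = rng.normal(size=(2 * d, h)) * 0.1, np.zeros(h)
w2, b2 = rng.normal(size=(h, d)) * 0.1, np.zeros(d)
t_w, i_w, g = dynamic_modality_weighting(text, image, w1, b1, w2, b2)
print(t_w.shape, i_w.shape, g.shape)  # (5, 8) (196, 8) (8,)
```

A complementary gate (g versus 1 − g) makes the two modalities compete per channel; an independent gate per modality would be an equally plausible reading of the text.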

3.2.2. Bidirectional Cross-Modal Attention

To model the semantic alignment between modalities, two cross-attention mechanisms are applied: the textual feature attends to the visual feature, and vice versa, with learnable linear projection matrices applied to the attention inputs.

The attended features are then concatenated and passed through a shared feed-forward projection layer to obtain the interaction feature, which represents the aligned and fused cross-modal information.
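A compact NumPy sketch of the bidirectional step is given below. It is hedged in several ways: it uses single-head scaled dot-product attention for brevity, it concatenates the two attended sequences along the token axis (the concatenation axis is not specified in the text), and the one-hidden-layer ReLU feed-forward layer is an assumption. All names and random weights are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(query_feats, context_feats, wq, wk, wv):
    """Single-head scaled dot-product attention from one modality to another."""
    q = query_feats @ wq
    k = context_feats @ wk
    v = context_feats @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (len(query), len(context))
    return softmax(scores, axis=-1) @ v  # (len(query), d)


def bidirectional_interaction(text, image, params):
    t2i = cross_attention(text, image, *params["t2i"])  # text attends to image
    i2t = cross_attention(image, text, *params["i2t"])  # image attends to text
    # Assumption: concatenate along the token axis, then apply a shared FFN.
    joint = np.concatenate([t2i, i2t], axis=0)
    w1, b1, w2, b2 = params["ffn"]
    fused = np.maximum(0.0, joint @ w1 + b1) @ w2 + b2
    return fused, t2i, i2t


rng = np.random.default_rng(0)
d = 8


def proj():
    """Random d x d matrix standing in for a learned projection."""
    return rng.normal(size=(d, d)) * 0.1


params = {
    "t2i": (proj(), proj(), proj()),
    "i2t": (proj(), proj(), proj()),
    "ffn": (proj(), np.zeros(d), proj(), np.zeros(d)),
}
text = rng.normal(size=(5, d))
image = rng.normal(size=(196, d))
fused, t2i, i2t = bidirectional_interaction(text, image, params)
print(fused.shape)  # (201, 8)
```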

3.2.3. Contrastive Representation Alignment

To enhance semantic consistency and improve cross-modal discriminative capability, a contrastive learning strategy is incorporated. The attended features from each modality are pooled and projected into a shared embedding space for contrastive alignment.

A symmetric InfoNCE-style contrastive loss is computed based on cosine similarity scaled by a temperature parameter τ. For each textual contrast feature, the positive is the visual contrast feature derived from the same sample; symmetrically, for each visual contrast feature, the positive is the textual contrast feature derived from the same sample.

The AGAI outputs the fused interaction feature, which is passed to the subsequent fusion module, and the contrastive loss, which is used to jointly optimize modality alignment during training.
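The following sketch shows one standard form of a symmetric InfoNCE loss, averaging the text-to-image and image-to-text cross-entropy terms. Treating the other samples in the batch as negatives and averaging the two directions with equal weight are assumptions consistent with "InfoNCE-style", not details confirmed by the text. The default τ = 0.07 mirrors the best value reported for TumEmo and HFM in the temperature sweep.

```python
import numpy as np


def l2_normalize(x, eps=1e-8):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def log_softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def symmetric_info_nce(text_emb, image_emb, tau=0.07):
    """Symmetric InfoNCE over a batch of paired contrast features.

    text_emb, image_emb: (B, k). Row i of both matrices comes from the same
    sample (the positive pair); the other B - 1 rows act as in-batch negatives.
    """
    t = l2_normalize(text_emb)
    v = l2_normalize(image_emb)
    logits = (t @ v.T) / tau  # cosine similarities scaled by temperature
    idx = np.arange(len(t))
    loss_t2v = -log_softmax(logits, axis=1)[idx, idx].mean()  # text -> image
    loss_v2t = -log_softmax(logits, axis=0)[idx, idx].mean()  # image -> text
    return 0.5 * (loss_t2v + loss_v2t)


rng = np.random.default_rng(0)
text_emb = rng.normal(size=(8, 16))
image_emb = text_emb + 0.05 * rng.normal(size=(8, 16))  # well-aligned pairs
aligned = symmetric_info_nce(text_emb, image_emb)
mismatched = symmetric_info_nce(text_emb, np.roll(image_emb, 1, axis=0))
print(aligned < mismatched)  # True
```

Rolling the image batch by one row breaks every positive pair, so the mismatched loss is much larger; this matches the stated goal of clustering consistent pairs and separating inconsistent ones.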

3.3. Intra-Modal Emotion Extraction Module (IEEM)

While cross-modal interaction captures the high-level alignment between modalities, the fine-grained emotional cues embedded in each modality are often overlooked or lost during inter-modal interaction. To address this, an intra-modal emotion extraction module is applied to refine the emotional representations within each modality independently.

Specifically, each modality is processed through a two-layer multi-head self-attention network, formed by stacking two attention layers, to model local contextual dependencies.

This design enables the model to focus on intra-modality sentiment-relevant elements. For textual inputs, emotional tokens such as adjectives, negations, and intensifiers are emphasized. For visual inputs, local visual patterns such as facial expressions or warm/cold color regions are highlighted.

The resulting textual and visual outputs serve as modality-enhanced emotional descriptors, which are fed into the subsequent fusion module.
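A minimal NumPy sketch of a two-layer multi-head self-attention stack, applied separately to each modality, is shown below. The residual connection and LayerNorm around each layer, the head count of 4, and the toy dimension d = 8 are assumptions for illustration; the text specifies only two stacked multi-head self-attention layers.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def layer_norm(x, eps=1e-5):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def multi_head_self_attention(x, wq, wk, wv, wo, num_heads):
    """Scaled dot-product self-attention over one modality's sequence x: (L, d)."""
    seq_len, d = x.shape
    if d % num_heads:
        raise ValueError("d must be divisible by num_heads")
    dh = d // num_heads

    def split(t):  # (L, d) -> (heads, L, dh)
        return t.reshape(seq_len, num_heads, dh).transpose(1, 0, 2)

    q, k, v = split(x @ wq), split(x @ wk), split(x @ wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(dh)  # (heads, L, L)
    heads = softmax(scores, axis=-1) @ v  # (heads, L, dh)
    merged = heads.transpose(1, 0, 2).reshape(seq_len, d)  # concatenate heads
    return merged @ wo


def intra_modal_encoder(x, layers, num_heads=4):
    """Stack of self-attention layers; residual + LayerNorm is an assumption."""
    for wq, wk, wv, wo in layers:
        x = layer_norm(x + multi_head_self_attention(x, wq, wk, wv, wo, num_heads))
    return x


rng = np.random.default_rng(0)
d = 8


def make_layers(num_layers=2):
    """Random stand-ins for the learned weights of a two-layer stack."""
    return [tuple(rng.normal(size=(d, d)) * 0.1 for _ in range(4))
            for _ in range(num_layers)]


text_e = intra_modal_encoder(rng.normal(size=(5, d)), make_layers())
image_e = intra_modal_encoder(rng.normal(size=(196, d)), make_layers())
print(text_e.shape, image_e.shape)  # (5, 8) (196, 8)
```

Each modality gets its own weights here, since the module refines emotional representations within each modality independently.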

3.4. Hierarchical Gated Fusion Module (HGFM)

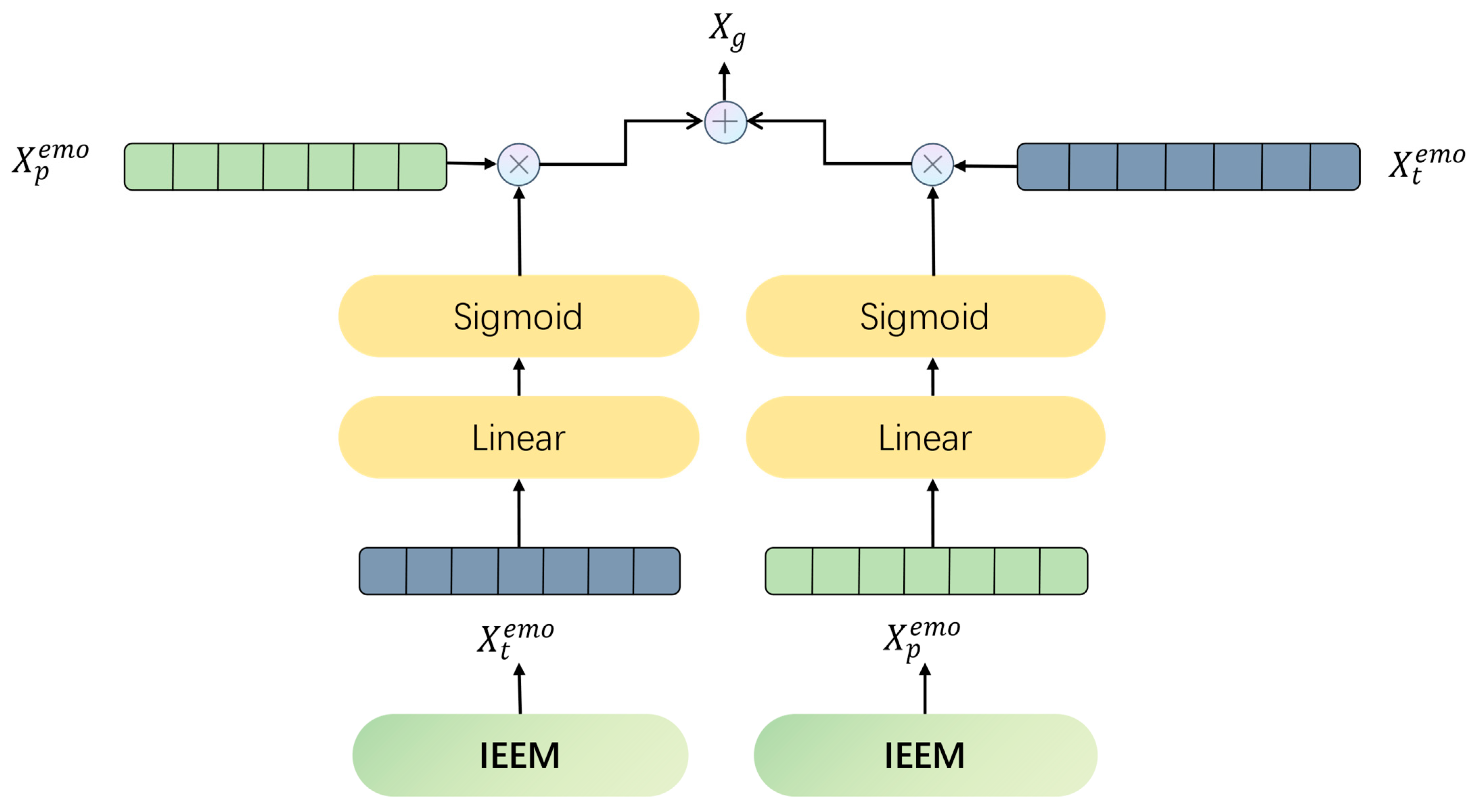

To integrate the extracted intra-modal emotion features with cross-modal interaction features, a Hierarchical Gated Fusion Module (HGFM) is proposed. This module is designed to suppress irrelevant or redundant signals and to model complementary dependencies between local and global sentiment cues. It comprises two sequential stages: Cross-Gated Fusion (CGF) and Multi-Scale Attention Gating (MAG).

3.4.1. Cross-Gated Fusion (CGF)

Given the textual and visual intra-modal emotion features, the CGF mechanism introduces mutual gating to regulate cross-modal influence: each modality is modulated by a gate computed from the other modality through learnable linear projections followed by the sigmoid function, and the gate is applied through element-wise multiplication.

This formulation enables each modality to attend to the most relevant features of the other modality while dynamically suppressing modality-specific noise. The structure of the module is shown in Figure 3.
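The mutual gating idea can be illustrated with the hedged NumPy sketch below. Because the text and image sequences have different lengths, the sketch assumes that each gate is computed from the other modality's mean-pooled features; it also assumes, hypothetically, that the two gated sequences are stacked along the token axis as the CGF output. Neither choice is confirmed by the text.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def cross_gated_fusion(text_e, image_e, w_t, b_t, w_p, b_p):
    """Mutual gating between intra-modal emotion features.

    text_e: (m, d), image_e: (n, d). Assumption: each gate is a sigmoid of a
    linear projection of the *other* modality's mean-pooled features, so that
    sequences of different lengths can gate each other.
    """
    gate_for_text = sigmoid(image_e.mean(axis=0) @ w_p + b_p)  # image -> text
    gate_for_image = sigmoid(text_e.mean(axis=0) @ w_t + b_t)  # text -> image
    text_g = text_e * gate_for_text  # element-wise, broadcast over tokens
    image_g = image_e * gate_for_image  # element-wise, broadcast over patches
    # Hypothetical output layout: stack both gated sequences along the token axis.
    return np.concatenate([text_g, image_g], axis=0)


rng = np.random.default_rng(0)
d = 8
text_e, image_e = rng.normal(size=(5, d)), rng.normal(size=(196, d))
fused = cross_gated_fusion(text_e, image_e,
                           rng.normal(size=(d, d)), np.zeros(d),
                           rng.normal(size=(d, d)), np.zeros(d))
print(fused.shape)  # (201, 8)
```

Since every gate entry lies in (0, 1), gating can only attenuate features, which is how the other modality suppresses channels it deems noisy.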

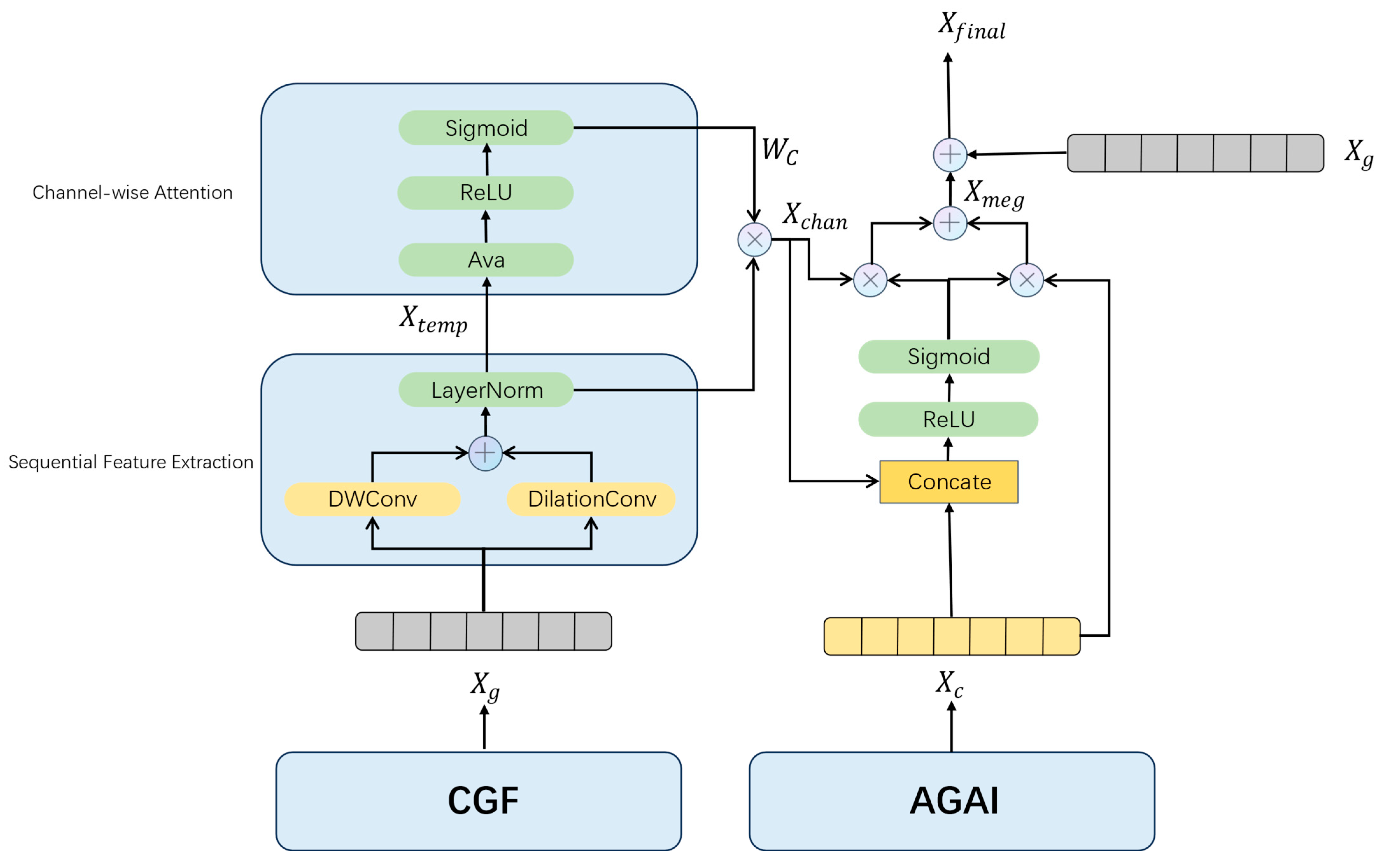

3.4.2. Multi-Scale Attention Gating (MAG)

To further integrate the cross-gated intra-modal features with the cross-modal interaction representation, a Multi-Scale Attention Gating (MAG) module is designed; its structure is shown in Figure 4. The module models both temporal dependencies and channel-wise emotional salience through convolutional and attention mechanisms, followed by adaptive fusion.

Sequential Feature Extraction: To enhance sequence-level emotion representations, a dedicated feature extraction block is introduced to model the ordered feature sequence. This block employs multi-scale convolutional operations to capture both local associations and broader contextual relationships within the input feature sequence. It comprises two complementary components: a depthwise separable convolution, which extracts fine-grained patterns and immediate sequential dependencies, and a dilated convolution, which expands the receptive field to integrate hierarchical relationships over longer temporal spans.

The depthwise separable convolution performs channel-wise filtering using a kernel size of 3 (with no dilation), effectively capturing local sequential patterns within each feature channel independently. In parallel, the dilated convolution is applied with a dilation rate of 2, enabling the network to capture extended contextual dependencies by enlarging the receptive field without increasing the parameter count. The outputs from both convolutional paths are aggregated via element-wise addition and passed through a Layer Normalization layer to stabilize training.

This synergistic design facilitates comprehensive modeling of both short-range feature interactions and long-range dependencies, which is essential for capturing subtle emotional cues embedded in sequential data structures.

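In this formulation, the input feature sequence is transposed so that both convolutions operate along the sequence dimension; the depthwise separable convolution uses a kernel size of 3 without dilation, and the dilated convolution uses a dilation rate of 2.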
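The two-branch block can be sketched in NumPy as follows. The depthwise separable branch (a per-channel kernel of size 3 followed by a pointwise channel-mixing projection) and the dilation rate of 2 follow the text; the dilated branch's kernel size of 3, its depthwise form, and the parameter-free LayerNorm are assumptions. Working directly on an (L, d) array plays the role of the transpose to a channels-first layout.

```python
import numpy as np


def depthwise_conv1d(x, kernel, dilation=1):
    """Per-channel 1D convolution with 'same' zero padding.

    x: (L, d) feature sequence; kernel: (k, d), one length-k filter per channel
    (k odd). Taps are spaced `dilation` steps apart along the sequence.
    """
    k, _ = kernel.shape
    pad = dilation * (k - 1) // 2
    xp = np.pad(x, ((pad, pad), (0, 0)))  # zero-pad along the sequence axis
    out = np.zeros_like(x, dtype=float)
    for j in range(k):
        start = j * dilation
        out += xp[start:start + x.shape[0]] * kernel[j]
    return out


def layer_norm(x, eps=1e-5):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def sequential_feature_extraction(x, dw_kernel, pw_weight, dil_kernel):
    """Sum of a depthwise separable branch and a dilated branch, then LayerNorm."""
    # Depthwise separable branch: depthwise filter (k = 3, no dilation)
    # followed by a pointwise (1 x 1) channel-mixing projection.
    local = depthwise_conv1d(x, dw_kernel, dilation=1) @ pw_weight
    # Dilated branch: dilation rate 2; kernel size 3 and depthwise form assumed.
    context = depthwise_conv1d(x, dil_kernel, dilation=2)
    return layer_norm(local + context)


rng = np.random.default_rng(0)
x = rng.normal(size=(10, 4))
out = sequential_feature_extraction(
    x, rng.normal(size=(3, 4)), rng.normal(size=(4, 4)), rng.normal(size=(3, 4)))
print(out.shape)  # (10, 4)
```

With dilation 2, a kernel of size 3 touches positions t − 2, t, and t + 2, so it covers a span of 5 steps with the same 3 weights per channel, which is the receptive-field gain without extra parameters described above.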

Channel-wise Attention: To identify which semantic channels (i.e., feature dimensions) are more emotion-relevant, a squeeze-and-excitation-style channel attention is applied, which computes one attention weight per channel from the feature sequence.

The refined feature after channel attention is obtained by rescaling each channel with its attention weight. This step amplifies the most sentiment-relevant dimensions before fusion.
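A minimal sketch of squeeze-and-excitation-style channel attention on an (L, d) sequence is given below. The squeeze (averaging over the sequence) and the ReLU-then-sigmoid bottleneck follow the usual squeeze-and-excitation design; the reduction ratio r = 4 and the random weights are hypothetical, since the exact equation is not recoverable from the text.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, b1, w2, b2):
    """Squeeze-and-excitation-style reweighting of the d feature channels.

    x: (L, d). Squeeze: average over the sequence axis. Excitation: a
    bottleneck of two linear layers (ReLU, then sigmoid) giving one weight per
    channel. The bottleneck width (w1.shape[1]) is an assumption.
    """
    z = x.mean(axis=0)  # (d,) channel descriptor
    s = sigmoid(np.maximum(0.0, z @ w1 + b1) @ w2 + b2)  # (d,), in (0, 1)
    return x * s, s  # rescale every channel by its weight


rng = np.random.default_rng(0)
d, r = 8, 4  # r: hypothetical reduction ratio
x = rng.normal(size=(10, d))
refined, s = channel_attention(x, rng.normal(size=(d, d // r)), np.zeros(d // r),
                               rng.normal(size=(d // r, d)), np.zeros(d))
print(refined.shape, s.shape)  # (10, 8) (8,)
```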

Adaptive Gated Fusion: Finally, the attended representation and the interaction feature are integrated via a learnable adaptive gating mechanism. A sigmoid gate is generated from the concatenation of both inputs and is used to weight the relative contribution of each path. To enhance residual consistency, the final multimodal fusion representation additionally incorporates a residual connection.

This fusion strategy enables the model to capture hierarchical emotional signals, spanning local temporal structure, global channel importance, and cross-modal alignment, in a flexible and learnable way.
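The gated fusion step can be illustrated with the sketch below, which assumes that both inputs have already been brought to the same shape (for example, pooled d-dimensional vectors). The convex combination g ⊙ a + (1 − g) ⊙ f is one natural reading of "weight the relative contribution of each path". The residual term, added from the attended path, is a hypothetical choice, because the text does not say which input the residual connection uses.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def adaptive_gated_fusion(attended, interaction, w_g, b_g):
    """Gate between the channel-attended path and the interaction path.

    attended, interaction: same-shaped features, e.g. pooled (d,) vectors
    (assumption). w_g: (2d, d), b_g: (d,).
    """
    gate_in = np.concatenate([attended, interaction], axis=-1)  # (..., 2d)
    g = sigmoid(gate_in @ w_g + b_g)  # (..., d), every entry in (0, 1)
    mixed = g * attended + (1.0 - g) * interaction  # per-channel convex mix
    return mixed + attended  # hypothetical residual connection


rng = np.random.default_rng(0)
d = 8
a, f = rng.normal(size=d), rng.normal(size=d)
out = adaptive_gated_fusion(a, f, rng.normal(size=(2 * d, d)) * 0.1, np.zeros(d))
print(out.shape)  # (8,)
```

Saturating the gate shows the two extremes: a gate near 1 keeps only the attended path (plus the residual), while a gate near 0 passes the interaction feature through.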

3.5. Classification and Final Objective

The final multimodal sentiment representation obtained from the hierarchical fusion module is passed through a linear classifier, whose weight matrix and bias are learnable classification parameters, to generate prediction logits over C categories, where C represents the number of categories.

We compute the standard cross-entropy loss $\mathcal{L}_{\mathrm{CE}}$ between the predicted logits and the ground-truth labels. Together with the contrastive loss $\mathcal{L}_{\mathrm{CL}}$ from the AGAI, the final objective function of the model is defined as

$$\mathcal{L} = \mathcal{L}_{\mathrm{CE}} + \lambda \, \mathcal{L}_{\mathrm{CL}},$$

where $\lambda$ is a weighting hyperparameter that balances the contribution of the contrastive alignment loss.
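The training objective, cross-entropy on the classifier logits plus a weighted contrastive term, can be sketched as follows. The function names are hypothetical. The sanity check uses an all-zero classifier, whose uniform prediction gives a cross-entropy of exactly log C; here C = 7 for a seven-class setting, and λ = 0.001 matches the coefficient reported for TumEmo.

```python
import numpy as np


def cross_entropy(logits, labels):
    """Mean softmax cross-entropy. logits: (B, C); labels: (B,) class indices."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


def total_objective(h, labels, w, b, contrastive_loss, lam):
    """Linear classifier on the fused representation, then CE + lam * CL."""
    logits = h @ w + b  # (B, C) prediction logits
    return cross_entropy(logits, labels) + lam * contrastive_loss


# Sanity check: an all-zero classifier predicts a uniform distribution, so the
# cross-entropy term equals log(C) with C = 7 classes.
h = np.ones((4, 16))
labels = np.array([0, 3, 6, 2])
loss = total_objective(h, labels, np.zeros((16, 7)), np.zeros(7),
                       contrastive_loss=2.0, lam=0.001)
print(np.isclose(loss, np.log(7) + 0.002))  # True
```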

4. Results

4.1. Dataset

Our model was evaluated on three publicly available multimodal sentiment analysis datasets collected from social media platforms: MVSA-S [28], TumEmo [26], and HFM [29]. The HFM dataset is sourced from Twitter, while TumEmo is derived from Tumblr and contains numerous image-text pairs; TumEmo is a weakly supervised sentiment analysis dataset. Notably, both HFM and TumEmo capture users’ sentiment expressions across a broad spectrum of topics, which closely reflects real-world social media sentiment analysis scenarios. The MVSA-S dataset is commonly used for multimodal sentiment analysis tasks. Each dataset was divided into training, validation, and test sets at an 8:1:1 ratio; details are provided in Table 3 and Table 4.
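The text states only the 8:1:1 ratio. Whether the split was random, stratified, or fixed by the dataset release is not specified, so the shuffled random split below, with a hypothetical helper and seed, is an assumption. Integer arithmetic avoids floating-point rounding in the split sizes, and any remainder goes to the test set.

```python
import random


def split_8_1_1(num_samples, seed=0):
    """Shuffle sample indices and split them 8:1:1 into train/val/test."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    n_train = num_samples * 8 // 10
    n_val = num_samples // 10
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


train, val, test = split_8_1_1(1000)
print(len(train), len(val), len(test))  # 800 100 100
```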

4.2. Parameter Setting and Evaluation Indicators

The models were trained with the Adam optimizer implemented in PyTorch (version 2.8.0). The batch size was set to 64 for both the HFM and TumEmo datasets, with an initial learning rate of 1 × 10⁻⁴. A step learning rate schedule with step size = 2 and gamma = 0.8 was applied to both HFM and TumEmo. The contrastive loss coefficients were set to 0.0001 for HFM and 0.001 for TumEmo. All experiments were conducted on NVIDIA RTX 3090 GPUs (Nvidia Corporation, Santa Clara, CA, USA). The detailed experimental parameters are shown in Table 5.
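The parameter names "step size" and "gamma" match those of PyTorch's `torch.optim.lr_scheduler.StepLR`, so the schedule was presumably StepLR stepped once per epoch; that is an assumption. Under it, the learning rate follows the closed form sketched below.

```python
def step_lr(epoch, base_lr=1e-4, step_size=2, gamma=0.8):
    """Learning rate in effect during `epoch` (0-based) under step decay.

    Closed form of a StepLR-style schedule: the rate is multiplied by `gamma`
    once every `step_size` epochs.
    """
    return base_lr * gamma ** (epoch // step_size)


for epoch in range(6):
    print(epoch, f"{step_lr(epoch):.2e}")
```

With these settings the rate stays at 1 × 10⁻⁴ for epochs 0 and 1, drops to 8 × 10⁻⁵ for epochs 2 and 3, and then to 6.4 × 10⁻⁵ for epochs 4 and 5.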

Accuracy (ACC) and F1 score are standard evaluation metrics for sentiment analysis. ACC measures the proportion of correctly classified instances in the total dataset. The F1 score provides a balanced assessment as the harmonic mean of precision and recall, which makes it particularly valuable in imbalanced classification scenarios. For both metrics, higher values indicate better performance.

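Let P denote the number of correctly predicted samples and N the total number of samples, and let TP, FP, and FN denote the numbers of true positives, false positives, and false negatives, respectively. Accuracy is the ratio of correctly predicted samples to the total number of samples. Precision is the proportion of true positives among all positive predictions, and recall is the proportion of correctly predicted positive instances among all actual positive instances. The F1 score is the harmonic mean of precision and recall, providing a balanced measure of both:

$$\mathrm{ACC} = \frac{P}{N}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$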
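For the multi-class setting, the text does not specify how per-class F1 scores are averaged (macro, weighted, or micro). The pure-Python sketch below assumes macro averaging and computes accuracy, precision, recall, and F1 from the counts defined above; the function name is hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1 (pure Python)."""
    n = len(y_true)
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    accuracy = correct / n  # P / N in the notation above
    classes = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for c in classes:  # one-vs-rest counts for class c
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    k = len(classes)
    return accuracy, sum(precisions) / k, sum(recalls) / k, sum(f1s) / k


acc, prec, rec, f1 = classification_metrics([0, 0, 1, 1], [0, 1, 1, 1])
print(acc)  # 0.75
```

In this toy example, class 0 has precision 1 and recall 0.5 (F1 = 2/3), and class 1 has precision 2/3 and recall 1 (F1 = 0.8), so the macro F1 is 11/15 ≈ 0.733.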

4.3. Baseline Model

In this study, baseline models are categorized into three groups: text-based models, image-based models, and multimodal models.

Text-based models: CNN [30] and BiLSTM [31] are classical neural architectures widely used in text classification tasks. TGNN [32] introduces graph structures at the text level to capture non-sequential semantic relationships. BiACNN [33] integrates CNN and BiLSTM with an attention mechanism to enhance sentiment representation in textual data.

Image-based models: ResNet [34] serves as a foundational visual backbone, applied through standard pre-training and fine-tuning procedures. OSDA [26] adopts a multi-view strategy to extract affective cues from images for sentiment classification.

Multimodal models: MultiSentiNet [10] is a deep semantic network designed for joint text-image sentiment analysis. HSAN [35] employs a hierarchical attention framework that leverages image captions to align multimodal features. MGNNS [36] introduces a multi-channel graph neural network with sentiment-aware representations for multimodal sentiment detection. CLMLF [37] uses a multi-layer Transformer architecture for feature fusion and incorporates contrastive learning to improve text-image alignment. CIGNN [38] enhances image representations through attribute-level encoding, constructs dual graph neural networks to capture dataset-level global features, and performs sentiment analysis on the fused representations. MAMSA [1] introduces an adaptive attention mechanism to dynamically balance the contributions of text and image features, and employs sentiment-guided hierarchical fusion to enhance multimodal representation learning. MPNAS [39] presents a unified pruning framework based on neural architecture search (NAS) with a two-stage process, coarse-grained NAS followed by fine-grained NAS guided by MSA characteristics, to address the limitations of existing pruning methods, and reports superior results on three datasets.

4.4. Comparative Experiments

To evaluate the effectiveness of the proposed model in multimodal sentiment recognition, experiments are conducted on three publicly available datasets, and its performance is compared against both unimodal and multimodal baseline models. The detailed results are presented in Table 6. All baseline results are taken from MPNAS [39] and MAMSA [1]. The experiments for our model were repeated three times with different random seeds, and the results are reported as “mean ± standard deviation”. The CLIP model is not fine-tuned. Bold values indicate the performance of the proposed MAHFNet model, while underlined values denote the best results among all baseline methods. On the TumEmo dataset, the model’s runtime is 4 h 50 min 49.70 s, and it has approximately 14.15 million trainable parameters.

4.4.1. Quantitative Analysis

As shown in Table 6, compared with TGNN, the proposed model achieves an improvement of 6.21% in both ACC and F1 on the TumEmo dataset. In comparison with DuIG, it achieves substantial gains of 23.64% and 24.25% on the same dataset. Relative to MAMSA, the proposed model improves by 2.55% and 2.63% on TumEmo. Compared with CIGNN, it yields improvements of 0.87% and 1.35% on the HFM dataset. On the MVSA-S dataset, the gains reach 0.03% in ACC and 1.26% in F1 score. These results indicate that the proposed model consistently achieves superior performance in both binary and fine-grained (seven-class) sentiment classification tasks.

4.4.2. Qualitative Analysis

Compared with unimodal models, the proposed model more effectively captures emotional information from multiple modalities by enabling enhanced and complementary interactions among them. As shown in Table 6, relying solely on images for sentiment prediction results in low accuracy. This is primarily because many images lack explicit emotional cues, and abundant non-emotional content can interfere with the model’s predictions, so the emotional signals are sparse. In contrast, our model achieves effective multimodal interaction while preserving fine-grained local features. Moreover, through the hierarchical gated fusion module, it captures the hierarchical dependencies between local and global emotional representations, thereby improving its ability to perceive and integrate sentiment information across different semantic levels.

4.5. Ablation Experiment

To further investigate the contribution of each component to the overall model performance, four groups of ablation experiments are conducted on the TumEmo, MVSA-S, and HFM datasets. The corresponding results are presented in Table 7.

To evaluate the contribution of each component, ablation experiments were conducted by successively removing the proposed modules: MAG, HGFM, IEEM, and AGAI. Specifically, -(MAG) refers to the removal of the MAG, which fuses the outputs of the CGF and the AGAI; without it, the output of the CGF is used directly as the final output. -(HGFM) refers to the removal of the Hierarchical Gated Fusion Module, in which case the feature representations are directly averaged for prediction. -(IEEM) denotes the additional removal of the Intra-Modal Emotion Extraction Module on top of removing the HGFM. -(AGAI) represents the further elimination of the Attention-Guided Alignment and Interaction Module, which is replaced by a simple cross-attention mechanism for multimodal feature fusion.

The results demonstrate that each proposed module contributes to the overall performance, validating the effectiveness and necessity of the modular design. When only the MAG is removed, the output of the CGF is used as the final output. The CGF directly fuses the textual and visual features through the gating mechanism, without attention-based interaction or multi-scale fusion; this leaves considerable noise in the output features and leads to a significant decline in performance. Upon removal of the HGFM, the model’s performance decreases, indicating that the hierarchical gated fusion mechanism effectively captures dependencies between local and global sentiment representations and thereby facilitates comprehensive multimodal fusion. When the IEEM is further removed, ACC and F1 decline by 0.52% and 0.56% on TumEmo and by 0.20% and 0.53% on HFM. This highlights the importance of extracting local features from individual modalities, which enables effective complementation of emotional information and mitigates the loss of fine-grained details during modality fusion. Finally, removing the AGAI causes a substantial performance drop, indicating that the cross-modal attention mechanism effectively achieves semantic interaction between text and image. Additionally, the integration of contrastive learning further enhances the model’s ability to aggregate semantically consistent image-text pairs.

4.6. Parametric Experiments

Appropriate hyperparameter settings can effectively improve model performance. In this section, the key hyperparameters examined are the learning rate, the batch size, the contrastive learning loss coefficient, and the contrastive temperature τ.
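The best value of each hyperparameter reported in Tables 8 to 11 can be collected into a per-dataset configuration, as in the sketch below. The `TrainConfig` class and its field names are hypothetical. Each value was selected in a separate sweep, so combining them assumes the hyperparameters interact only weakly; this section does not state whether the final model used exactly this combination.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical container for the hyperparameters studied in Section 4.6."""
    learning_rate: float
    batch_size: int
    contrastive_weight: float  # coefficient of the contrastive loss (Table 10)
    temperature: float         # contrastive temperature tau (Table 11)


# Best value per hyperparameter as reported in Tables 8 to 11.
BEST_CONFIGS: Dict[str, TrainConfig] = {
    "TumEmo": TrainConfig(learning_rate=1e-4, batch_size=64, contrastive_weight=1e-3, temperature=0.07),
    "HFM": TrainConfig(learning_rate=1e-4, batch_size=64, contrastive_weight=1e-4, temperature=0.07),
    "MVSA-S": TrainConfig(learning_rate=1e-4, batch_size=32, contrastive_weight=5e-4, temperature=0.4),
}

for name, cfg in BEST_CONFIGS.items():
    print(name, cfg)
```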

4.6.1. Learning Rate Experiments

Table 8 presents the results of the learning rate experiments; the optimal learning rate for all three datasets is 0.0001. When the learning rate is excessively large (e.g., 0.001), the model's performance drops sharply during training. As the learning rate decreases from this value, performance gradually improves and peaks at 0.0001; reducing the learning rate further causes performance to decline.

4.6.2. Batch Size Experiments

Table 9 presents the results of the batch size experiments. A batch size of 64 yields the best results on the TumEmo and HFM datasets, whereas a batch size of 32 yields the best results on the MVSA-S dataset.

4.6.3. Contrastive Learning Loss Experiments

Table 10 presents the effect of the contrastive learning loss coefficient. Contrastive learning plays a critical role in aligning textual and visual modality features by drawing semantically consistent cross-modal representations closer together in the embedding space. Setting the weight coefficient of the contrastive loss appropriately is essential for optimizing the learning process, enhancing the model's discriminative capability, and fully leveraging the cross-modal alignment module. As the results show, a coefficient of 0.001 yields the best performance on the TumEmo dataset, 0.0001 on the HFM dataset, and 0.0005 on the MVSA-S dataset.
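This section does not write out the training objective. Assuming the contrastive term is added to the sentiment classification loss, L = L_cls + λ·L_con, the sketch below shows how the coefficient λ enters. The function names and the illustrative logits and loss value are hypothetical; only the three coefficients come from Table 10.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one sample, computed with log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[label]


def total_loss(logits: Sequence[float], label: int,
               contrastive_loss: float, weight: float) -> float:
    """Assumed objective: L = L_cls + weight * L_con."""
    return cross_entropy(logits, label) + weight * contrastive_loss


logits = [2.0, 0.5, -1.0]  # illustrative classifier scores for three sentiment classes
l_con = 3.0                # illustrative contrastive loss value
for weight in (1e-4, 5e-4, 1e-3):  # best coefficients for HFM, MVSA-S, TumEmo
    print(weight, total_loss(logits, 0, l_con, weight))
```

Under this assumed form, and if both terms have comparable magnitudes, coefficients between 1e-4 and 1e-3 make the contrastive term a light regularizer on top of the classification loss rather than the dominant training signal.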

4.6.4. Contrastive Learning Temperature Sweep Experiments

Table 11 presents the effect of the temperature parameter τ in contrastive learning on model performance across the TumEmo, HFM, and MVSA-S datasets. As a critical factor in scaling similarity scores within the contrastive loss, τ regulates how tightly semantically consistent textual and visual features cluster in the embedding space, balancing the model's ability to discriminate between instances against its tolerance of semantic similarity among samples. Proper calibration of τ is essential for cross-modal alignment: too small a value may over-penalize useful semantic proximity, while too large a value can blur meaningful distinctions. A τ of 0.07 yields the best performance on TumEmo and HFM, effectively enhancing feature clustering on these datasets. In contrast, the MVSA-S dataset achieves optimal results with a higher τ of 0.4, suggesting that its more complex cross-modal semantic correlations benefit from a relaxed temperature that better captures nuanced alignments.
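The exact form of the contrastive loss is not given in this section. As an assumption, the sketch below uses the widely adopted symmetric InfoNCE formulation (used, for example, in CLIP), in which cosine similarities between normalized text and image embeddings are divided by τ before a softmax over the batch. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def l2_normalize(x: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(a * a for a in x))
    return [a / norm for a in x]


def logsumexp(row: Sequence[float]) -> float:
    m = max(row)
    return m + math.log(sum(math.exp(x - m) for x in row))


def info_nce(text: Sequence[Sequence[float]], image: Sequence[Sequence[float]],
             tau: float) -> float:
    """Symmetric InfoNCE over a batch; pair i is (text[i], image[i]).

    All other items in the batch act as negatives for each pair.
    """
    t = [l2_normalize(x) for x in text]
    v = [l2_normalize(x) for x in image]
    n = len(t)
    # Cosine similarities divided by the temperature tau.
    sim = [[sum(a * b for a, b in zip(t[i], v[j])) / tau for j in range(n)]
           for i in range(n)]
    # Text-to-image: row i should peak at column i.
    t2v = sum(logsumexp(sim[i]) - sim[i][i] for i in range(n)) / n
    # Image-to-text: column j should peak at row j.
    v2t = sum(logsumexp([sim[i][j] for i in range(n)]) - sim[j][j]
              for j in range(n)) / n
    return 0.5 * (t2v + v2t)


aligned_text = [[1.0, 0.0], [0.0, 1.0]]
aligned_image = [[1.0, 0.0], [0.0, 1.0]]
for tau in (0.07, 0.4):  # best temperatures for TumEmo/HFM and MVSA-S
    print(tau, info_nce(aligned_text, aligned_image, tau))
```

For perfectly aligned pairs, the loss shrinks as τ decreases, because dividing by a smaller τ sharpens the softmax toward the matched pair. When all embeddings are identical, the loss equals log n for any τ, since the pairs cannot be told apart. This reflects the trade-off described above: a small τ separates instances sharply, while a larger τ produces smoother, more tolerant similarity distributions.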

7. Conclusions

The proliferation of multimodal posts across social platforms has opened new avenues for sentiment analysis, yet existing studies often suffer from detail loss during cross-modal interaction and fusion, struggle with semantic alignment, and remain vulnerable to modal noise. To address these limitations and improve analytical precision, this study proposes the Multimodal Alignment and Hierarchical Fusion Network (MAHFNet) for sentiment analysis. Although MAHFNet integrates established mechanisms, including attention, gating, and contrastive learning, its novelty lies in how these components are combined to tackle cross-modal challenges in sentiment analysis. Specifically, MAHFNet first leverages contrastive learning and attention to achieve interaction and alignment of multimodal features; it then extracts local features from individual modalities to compensate for information loss during cross-modal integration; and finally it employs a hierarchical gated fusion strategy to combine global and local features, enhancing emotional representations for more accurate sentiment prediction. Beyond these technical contributions, ethical considerations in multimodal sentiment analysis also merit attention. Multimodal datasets often carry inherent biases, and models such as MAHFNet, if deployed without safeguards, may amplify these biases in applications such as automated content moderation or social listening. Addressing these biases is therefore essential to ensuring the model's positive and responsible real-world impact.

Experimental results on multiple public datasets demonstrate the effectiveness and robustness of MAHFNet. Despite these encouraging results, the model still struggles to recognize emotions in complex images or ambiguous expressions. Future work will explore incorporating descriptive text to mitigate emotion sparsity in visually intricate scenarios and integrating bias-mitigation strategies to enhance the model's fairness and ethical compliance.