1. Introduction

Temporal reasoning plays a crucial role in natural language processing: by comprehending event sequences, it facilitates the prediction of future trends and supports informed decision-making [1,2,3,4]. Its applications span various domains, including legal analysis, financial forecasting, medical decision-making, and crisis management [5,6,7,8]. One of the most challenging applications of temporal reasoning is future event prediction, which requires not only an understanding of current event characteristics but also the inference of plausible future scenarios from intricate temporal and causal relationships. In any given context, the goal of future event prediction is to forecast likely subsequent events based on historical events and their causal interconnections.

Previous studies have primarily focused on script-level event prediction, which aims to forecast subsequent events from individual sentences or short text segments [9,10]. Although these methods effectively capture local event coherence, they often fail to model the macro-level narrative structure of lengthy documents, where events can span multiple paragraphs and entail intricate temporal and causal connections [11]. Unlike script-level prediction, document-level future event prediction requires a deep understanding of long-range dependencies and global event interactions within documents. This entails a thorough comprehension of the temporal and causal relationships between events and the capacity to incorporate contextual details from multiple paragraphs or entire documents.

Large language models (LLMs) such as GPT-4, Llama 2, and GLM-4 have shown remarkable capabilities in temporal reasoning tasks [12,13], including temporal question answering, event prediction, and knowledge inference. This success stems from their extensive training on large datasets, which allows them to capture intricate text patterns. Particularly noteworthy is the effectiveness of LLMs enhanced with temporal knowledge graphs in comprehending structured temporal relationships. GenTKG [14] introduces a framework for temporal knowledge graph prediction that combines temporal logic rule-based retrieval with efficient instruction tuning. Similarly, LLM-DA [15] uses LLMs to extract temporal rules and improves the accuracy of temporal knowledge graph reasoning through adaptive rule adjustment. These studies highlight the substantial potential of integrating LLMs with structured knowledge representations for advanced temporal reasoning.

Despite recent progress, two primary challenges remain in document-level future event prediction. First, current methods mainly focus on script-level event prediction, performing temporal reasoning by analyzing event attributes and causal connections within sentences; the effectiveness of LLMs in document-level future event prediction therefore remains uncertain. This task requires the model to comprehend events dispersed across long texts and to engage in multi-hop reasoning. Second, while LLMs have exhibited promising capabilities in temporal knowledge graph reasoning, the effectiveness of event knowledge graphs in document-level future event prediction requires further investigation. In particular, a critical open question is whether a structured event knowledge graph improves prediction accuracy and provides evidence for interpretability. Given these gaps, we propose the following research questions to guide our study.

Q1: Can LLMs effectively predict future events by capturing the intricate evolution of document-level events, and how does their performance compare to that of traditional methods?

Q2: Can event knowledge graphs enhance the interpretability of future event prediction and effectively reduce event redundancy while preserving essential event features?

To address these challenges, this study proposes a document-level future event prediction method that integrates event knowledge graphs with LLM temporal reasoning. Specifically, TimeEchain, an instruction fine-tuning dataset for the emergency domain, is first constructed and used to validate the performance of LLMs on the task of document-level future event prediction.

The TimeEchain dataset encompasses a diverse range of event types, with an average document length of 2415 words. It contains 2058 event chains derived both from original event texts and from texts generated under LLM guidance. Event features such as trigger words, times, entities, and relationships are extracted to construct an event knowledge graph, with the aim of improving the accuracy of document-level future event prediction. Furthermore, a Prompt reasoning framework based on metacognitive theory (MPF) is proposed. MPF employs a structured reasoning process built on a four-stage cycle of task understanding, strategy planning, execution, and reflection, which enables interpretable document-level event prediction. Additionally, this study uses the LLaMA-Factory framework with LoRA to fine-tune Qwen2.5-7B and Qwen2.5-14B into two small language models, EChainQwen-7B and EChainQwen-14B. Experimental results show that, compared with other LLMs, EChainQwen has significant advantages in document-level future event prediction and interpretability.

The main contributions of this paper are as follows:

The instruction fine-tuning dataset TimeEchain was constructed to evaluate the ability of LLMs to predict future events at the document level. An event knowledge graph is extracted from the dataset and improves future event prediction performance.

The MPF framework for document-level future event prediction was proposed. It introduces a structured reasoning process with a four-stage cycle to achieve interpretable future event prediction, verifying the effectiveness of LLMs in document-level event reasoning.

The proposed MPF framework was compared experimentally with mainstream LLM reasoning frameworks on future event prediction. The results show that MPF achieves better prediction performance while requiring fewer tokens and offering higher reasoning efficiency.

3. Methodology

This study aims to evaluate and enhance the ability of LLMs to predict future events within lengthy and intricate textual contexts. To this end, Section 3 defines the future event prediction task for complex temporal reasoning and constructs a future event prediction dataset based on real-world emergency cases. It then introduces a future event prediction framework that integrates event knowledge graphs and metacognitive theory. Finally, a series of small language models is fine-tuned to approach the performance of higher-parameter models.

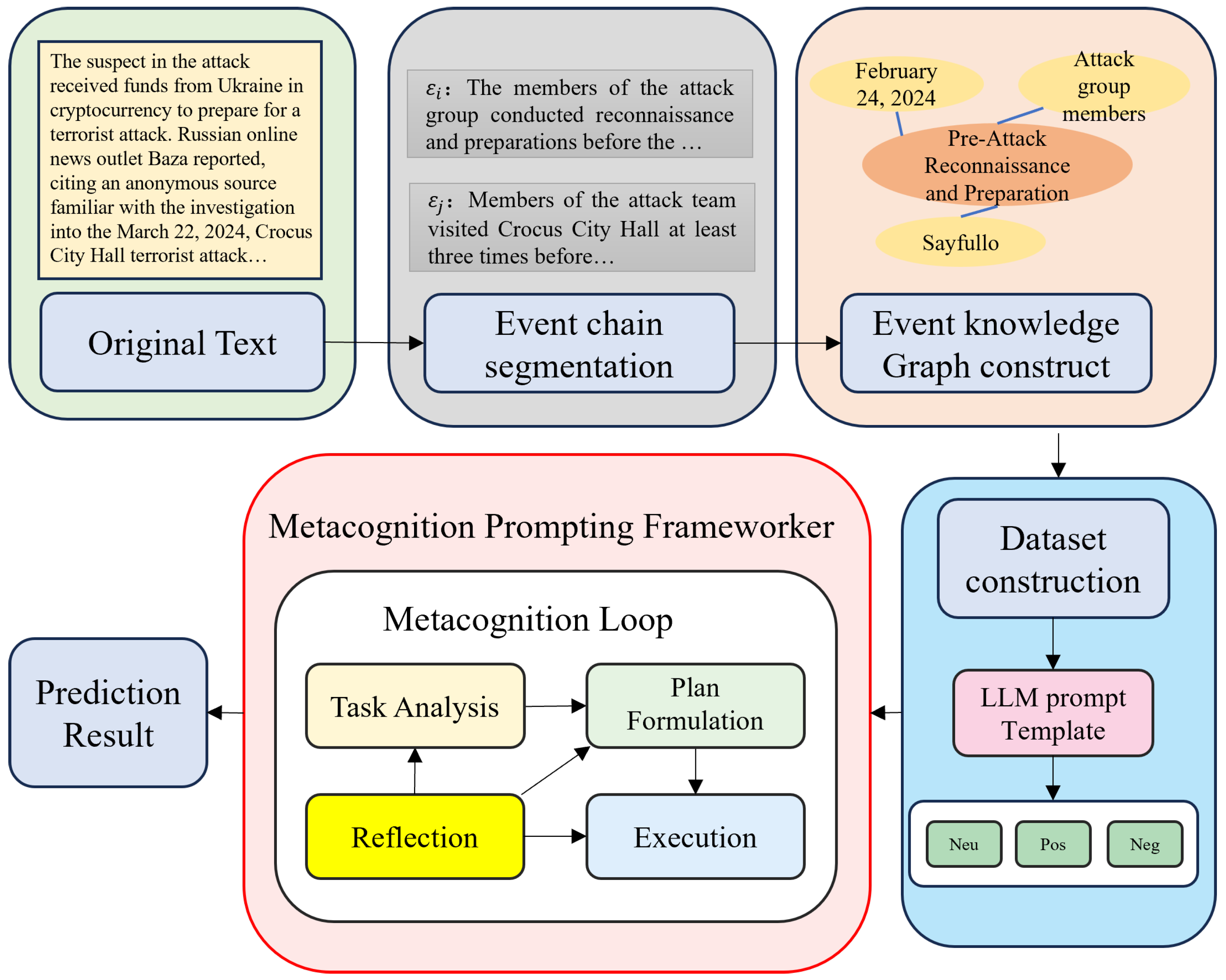

Figure 1 illustrates the overall methodology of the study.

3.1. Problem Definition

An interpretable future event prediction task is defined as follows. Given an input document $d$ containing a sequence of event chains, the $i$-th event chain is denoted as $\mathrm{EC}_i$. For an event chain pair $(\mathrm{EC}_i, \mathrm{EC}_j)$ in $d$, future event prediction estimates the likelihood of event occurrence based on the information in the pair. Prediction inference is performed with the LLM-based MPF framework, with a focus on providing explainable reasoning. The likelihood is categorized into three labels: positive, neutral, and negative. Each training example used to fine-tune the LLM in the experiments is a quadruple consisting of the input question $q$, the event chain pair $(\mathrm{EC}_i, \mathrm{EC}_j)$, the predicted answer $a$, and the inference process $r$. A training instance can therefore be represented as $x = (q, (\mathrm{EC}_i, \mathrm{EC}_j), a, r)$, where the inference process $r$ is used to fine-tune the LLM to generate inference steps and explanations.
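To make the quadruple concrete, the following is a minimal Python sketch of one training instance. The class names, field names, and the instruction/input/output record layout are illustrative assumptions, not the released TimeEchain format.

```python
from dataclasses import dataclass
from enum import Enum


class Label(str, Enum):
    """Three-way label for an event chain pair."""
    POSITIVE = "pos"
    NEUTRAL = "neu"
    NEGATIVE = "neg"


@dataclass
class EventChain:
    chain_id: str
    text: str  # the segmented event chain text


@dataclass
class TrainingInstance:
    question: str                        # input question q
    pair: tuple[EventChain, EventChain]  # event chain pair (EC_i, EC_j)
    answer: Label                        # predicted answer a
    reasoning: str                       # inference process r (supervision target)

    def to_sft_record(self) -> dict:
        """Flatten into an instruction/input/output record (hypothetical layout)."""
        chain_i, chain_j = self.pair
        return {
            "instruction": self.question,
            "input": f"Event chain i: {chain_i.text}\nEvent chain j: {chain_j.text}",
            # The reasoning precedes the answer so the model learns to explain first.
            "output": f"{self.reasoning}\nAnswer: {self.answer.value}",
        }
```

Placing the inference process before the label in the target text is one plausible way to train the model to produce explanations alongside its prediction; other orderings are possible.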

3.2. TimeEchain Dataset

In this paper, a document-level future event prediction dataset TimeEchain is constructed for the emergency event domain. TimeEchain exhibits a variety of data types, long-range dependencies, inter-paragraph inference, and structured annotations, presenting unique challenges for document-level event prediction.

Table 1 highlights the differences between TimeEchain and the existing datasets MCNC and DocSEP.

3.2.1. Data Collection and Preprocessing

The TimeEchain dataset contains textual data on 623 emergency events sourced from Wikipedia. The event texts were first preprocessed by removing URL links, reference marks, common stop words, redundant spaces, and newlines. This preprocessing standardizes the text and minimizes interference from irrelevant elements, producing a refined dataset suitable for analysis. In addition, the English source texts were translated into Chinese to facilitate annotation and filtering by the team; the paper and its presentation nevertheless remain in English. The TimeEchain corpus comprises 6 event categories: 255 accidents and disasters, 235 violent conflicts, 56 environmental pollution events, 48 safety and health events, 16 political events, and 13 other events. The average document length is 2415 words, and the average event chain length is 437. Details of the dataset are shown in Table 2.
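The preprocessing steps listed above can be sketched with regular expressions as follows. The stop-word list and the reference-mark pattern (Wikipedia-style `[3]` or `[citation needed]`) are assumptions made for illustration; the paper does not specify the exact rules it applied.

```python
import re

# Tiny illustrative stop-word list (assumption: the actual list is not specified).
STOP_WORDS = {"the", "a", "an", "of", "and", "or", "to", "in", "on", "at", "is", "was"}

URL_RE = re.compile(r"https?://\S+|www\.\S+")
# Wikipedia-style reference marks such as [1], [23], or [citation needed].
REF_RE = re.compile(r"\[(?:\d+|citation needed)\]")


def preprocess(text: str, drop_stop_words: bool = True) -> str:
    """Remove URLs, reference marks, stop words, and redundant whitespace."""
    text = URL_RE.sub(" ", text)
    text = REF_RE.sub("", text)
    tokens = text.split()  # split() with no argument also collapses spaces and newlines
    if drop_stop_words:
        tokens = [t for t in tokens if t.lower() not in STOP_WORDS]
    return " ".join(tokens)
```

For example, `preprocess("The flood struck Valencia[3] on 29 October.")` drops the reference mark and the stop words "The" and "on". Passing `drop_stop_words=False` keeps function words, which may matter if the cleaned text is later fed to an LLM.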

3.2.2. Event Knowledge Graph Construction

An event knowledge graph differs from a traditional knowledge graph, which typically represents facts about entities and their interrelations. Event knowledge graphs instead focus on dynamic representations, positioning events as central nodes and highlighting the temporal and causal links between them. Unlike the static facts in traditional knowledge graphs, event knowledge graphs capture the evolution of events, converting unstructured text into a coherent narrative that reflects how events progress. This structure is crucial for event reasoning: by explicitly representing temporal and causal dependencies, an event knowledge graph enhances a model’s capacity to comprehend the progression of complex events.

To facilitate event prediction by large language models when dealing with complex and lengthy texts, the incremental Prompting technique is employed. This technique enables LLMs to concentrate on pivotal event nodes, thereby refining event descriptions and minimizing token usage. Initially, original event documents are segmented into multiple event chains, guided by temporal and causal relationships among events. Each event chain is centered around specific time points and causal links, maintaining the temporal and causal coherence within the chain. This segmentation process yields a considerable quantity of event chains. The template for dividing event chains is delineated below.

Please reorganize the content of the following event knowledge graph text into an event chain with event timelines and causal relationships. Ensure compliance with the following rules: 1. Divide the event sets based on event stages, time spans, and text length. 2. Do not add content that does not exist in the original text and preserve all details. 3. Be careful not to include summarizing statements.

The construction of an event knowledge graph involves identifying and analyzing each sub-event within the input event chain. Each sub-event contains multiple key features, such as participants P, location L, time T, and trigger word C. Extracting these features identifies the core elements of each sub-event and establishes clear temporal and causal connections between sub-events, which ensures that the event knowledge graph accurately reflects the original event text. The algorithm for constructing the event knowledge graph is detailed in Algorithm 1.

Algorithm 1. The algorithm framework for constructing the event knowledge graph.

Input: Raw event document d

Output: Event knowledge graph G = (V, E)

1. Preprocess the data to obtain a cleaned version T = remove(d, url, citations, stop words…)
2. Parse the cleaned text T to identify and segment event chains, decomposing each event chain into a series of constituent sub-events e
3. for each event chain do
4.     for each sub-event e in the chain do
5.         Extract event knowledge from the text (Subject, Object, Time, and Trigger Word) to form event nodes V
6.         Determine the relationships between sub-events and create relational edges E
7.     end for
8. end for
9. return G
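Steps 3 to 9 of Algorithm 1 can be sketched in Python as below. The paper performs extraction with LLM Prompts; here `extract_features` and `classify_relation` are hypothetical callables standing in for those Prompts, and linking only consecutive sub-events within a chain is a simplifying assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class EventNode:
    node_id: str
    trigger: str
    subject: Optional[str] = None
    obj: Optional[str] = None
    time: Optional[str] = None


@dataclass
class EventKG:
    nodes: dict = field(default_factory=dict)  # V: node_id -> EventNode
    edges: list = field(default_factory=list)  # E: (source_id, target_id, relation)


def build_event_kg(
    event_chains: list,
    extract_features: Callable[[str], dict],
    classify_relation: Callable[[str, str], Optional[str]],
) -> EventKG:
    """Build G = (V, E) from chains already segmented into sub-event strings."""
    kg = EventKG()
    for c_idx, chain in enumerate(event_chains):
        prev_id, prev_text = None, None
        for e_idx, sub_event in enumerate(chain):
            feats = extract_features(sub_event)  # subject, object, time, trigger word
            node_id = f"c{c_idx}_e{e_idx}"
            kg.nodes[node_id] = EventNode(
                node_id,
                feats["trigger"],
                feats.get("subject"),
                feats.get("object"),
                feats.get("time"),
            )
            # This sketch only links consecutive sub-events within a chain.
            if prev_id is not None:
                relation = classify_relation(prev_text, sub_event)
                if relation is not None:
                    kg.edges.append((prev_id, node_id, relation))
            prev_id, prev_text = node_id, sub_event
    return kg
```

Keeping the extractor and relation classifier as injected functions makes it easy to swap a rule-based stub for an LLM call without touching the graph-building loop.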

The construction of the event knowledge graph enables the extraction of key events, event features, and the relationships between them from the original text. Based on this, a concise event description rich in structural information is generated.

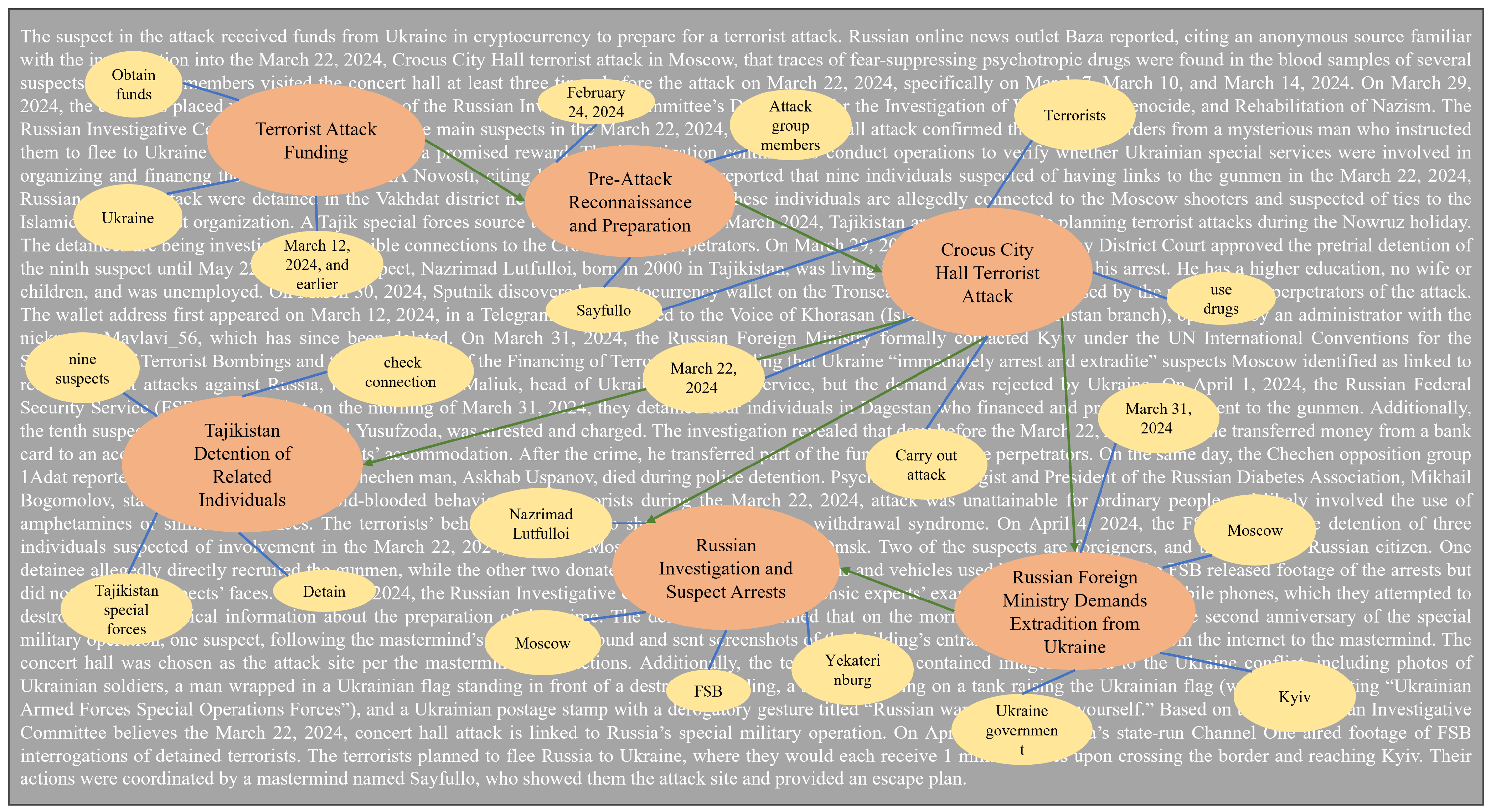

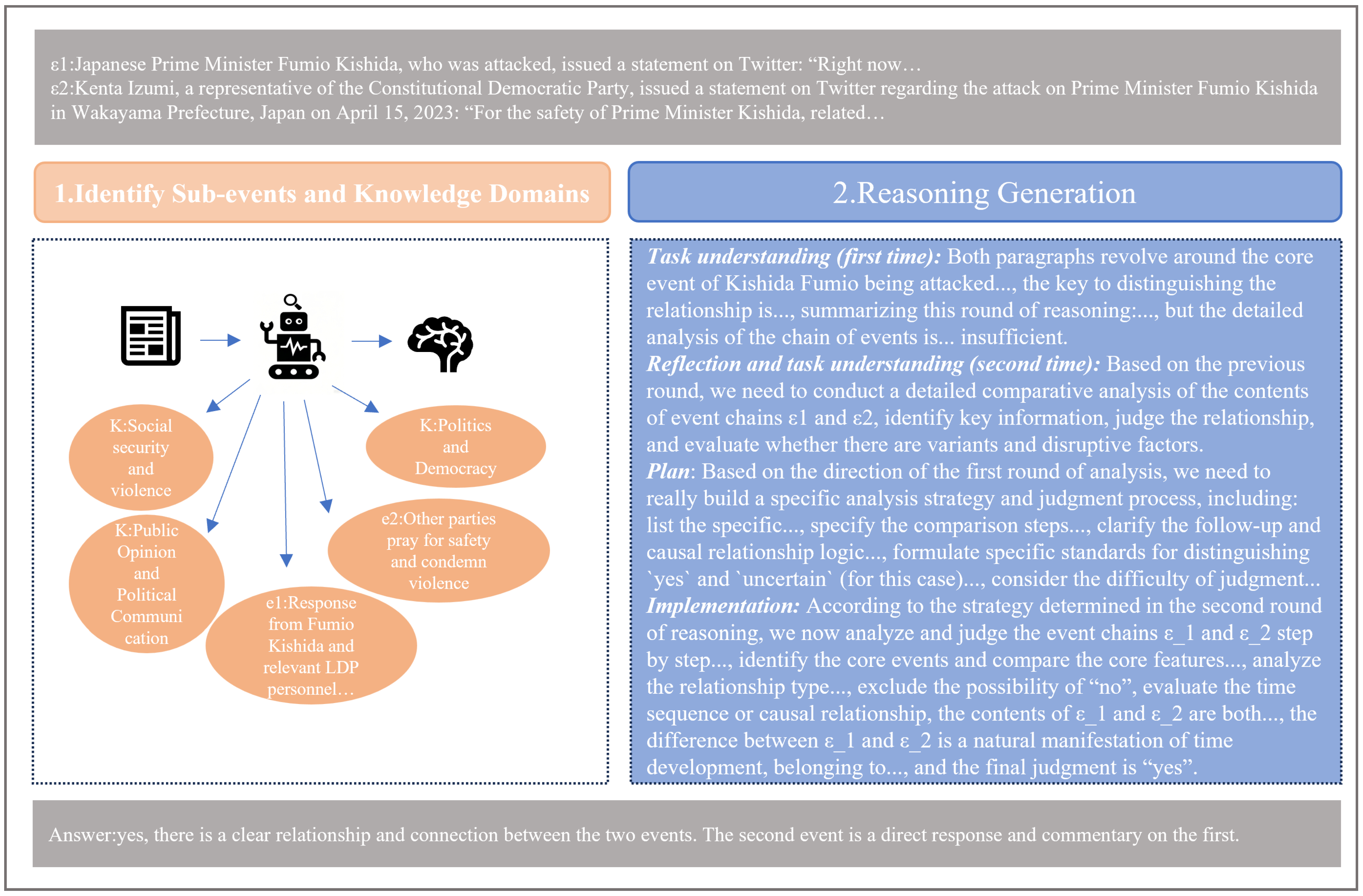

Figure 2 illustrates the process and results of event knowledge graph construction.

3.2.3. Data Sample Generation

To construct training samples, event documents are divided into multiple event chains according to the temporal and causal development relationships between events. Each event chain revolves around distinct time points and causal connections, preserving the integrity of its temporal and causal structure. This process generates a substantial number of positive sample pairs, which serve as training data for future event reasoning and prediction. Event chains are split with the same template given in Section 3.2.2.

In this study, negative sample pairs are generated by replacing events in positive sample pairs with events of different types. The aim is to break the original causal relationship so that the connection between the paired events no longer exists. Specifically, events from different temporal and background contexts are selected from the event chain instance repository to replace the original events, and LLMs are then used to confirm the lack of relevance between the newly paired events. For example, a natural disaster event may be paired with a violent conflict event; such negative samples are generated through techniques such as time replacement and background replacement, which ensure that the resulting event pairs cannot form a reasonable causal chain. Formally, a positive pair $(\mathrm{EC}_i, \mathrm{EC}_j)$ is turned into a negative pair $(\mathrm{EC}_i, \mathrm{EC}_k)$, where $\mathrm{EC}_k$ is drawn from a different event.

A large number of negative sample instances labeled neg can be obtained through simple rule-based replacement of existing positive sample instances. To ensure that the paired event chains in the generated negative samples are indeed unrelated, this study uses an LLM with the following Prompt to check the assumption that the two event sequences are independent of each other.

Figure 2.

The main events (orange), the event features (yellow), and their relationships are extracted from the document-level event text (white text); blue lines connect events to their features, and green arrows connect related events. The black text gives a brief description of the document.


Please determine whether event chain i and event chain j form a pair of event chains that have no relationship.
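The replacement rule for negative samples can be sketched as follows. The dictionary keys (`event_id`, `event_type`, `text`) and the rule that a candidate must differ in both source event and event type are assumptions for illustration; each generated pair would still go through the LLM relevance check above.

```python
import random


def make_negative_pairs(positive_pairs, chain_pool, rng=None):
    """Replace the second chain of each positive pair with a chain from another event.

    positive_pairs: list of (chain_i, chain_j) dicts with keys "event_id", "event_type", "text"
    chain_pool: repository of candidate chains with the same keys
    Returns (chain_i, replacement, "neg") triples that still need LLM confirmation.
    """
    rng = rng or random.Random(0)
    negatives = []
    for chain_i, _chain_j in positive_pairs:
        # Candidates must come from a different source event AND a different event type.
        candidates = [
            c for c in chain_pool
            if c["event_id"] != chain_i["event_id"]
            and c["event_type"] != chain_i["event_type"]
        ]
        if candidates:
            negatives.append((chain_i, rng.choice(candidates), "neg"))
    return negatives
```

Seeding the random generator keeps the sampled negatives reproducible across dataset rebuilds.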

For neutral samples, the differing events in an event chain pair act as interference factors that can arise during normal event development. This study therefore modifies certain event features and event relationships in the event chain, such as time, location, and participants, generating the neutral samples required for the experiment by weakening the certainty of the temporal or causal relationships in the chain. Unlike negative sample generation, which directly substitutes other events, neutral sample generation relies on the strong contextual understanding of LLMs to ensure that the generated event chain pairs remain logically coherent in natural language. The Prompt template for generating neutral samples is as follows.

Based on the input positive sample event chain pair, please generate a neutral sample by randomly replacing key event features in the event chain to alter the original event development trend, so that the model cannot clearly determine whether there is a relationship between event chain i and event chain j.
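One way to operationalize "randomly replacing key event features" is to sample which features the LLM should perturb and name them in the Prompt, as sketched below. Naming the features explicitly, the feature list, and the template wording are assumptions of this sketch; the resulting string would then be sent to an LLM, which is not shown.

```python
import random

# Assumed feature list, taken from the examples in the text (time, location, participants).
FEATURES = ["time", "location", "participants"]

NEUTRAL_TEMPLATE = (
    "Based on the input positive sample event chain pair, please generate a neutral "
    "sample by replacing the following key event features in event chain j: {features}. "
    "Alter the original event development trend so that the model cannot clearly "
    "determine whether there is a relationship between event chain i and event chain j.\n"
    "Event chain i: {chain_i}\nEvent chain j: {chain_j}"
)


def build_neutral_prompt(chain_i, chain_j, k=2, rng=None):
    """Randomly choose k features to perturb and fill the (hypothetical) template."""
    rng = rng or random.Random(0)
    chosen = rng.sample(FEATURES, k)
    prompt = NEUTRAL_TEMPLATE.format(
        features=", ".join(chosen), chain_i=chain_i, chain_j=chain_j
    )
    return prompt, chosen
```

Returning the chosen features alongside the Prompt makes it possible to log which perturbations produced each neutral sample for later quality checks.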

3.2.4. Data Validation and Quality Assessment

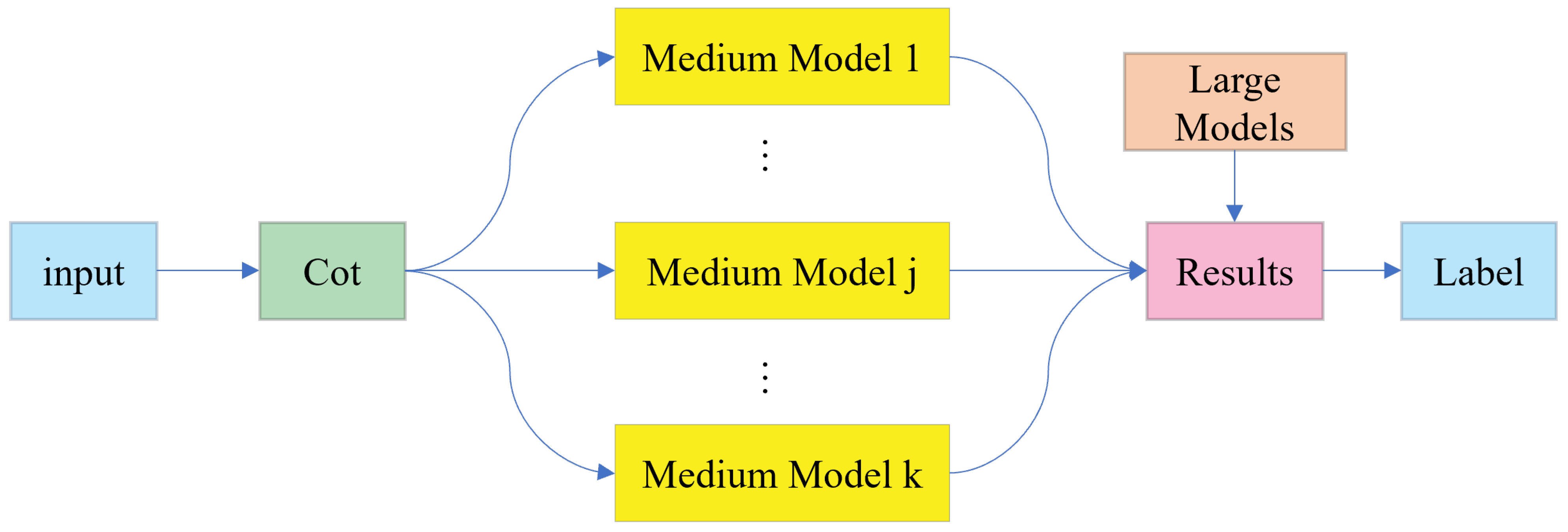

The TimeEchain dataset was rigorously validated to ensure data accuracy. A Prompt ensemble refinement method was used to verify dataset quality and to remove ambiguous or incorrectly labeled samples. Language models with moderate reasoning capabilities, such as Grok2, were first used for evaluation, and samples that received consistent judgments across multiple assessments were retained. Samples whose quality could not be judged directly were further verified with more advanced reasoning models, such as o1, Grok3, and Gemini 2.5 Pro. Figure 3 illustrates this data validation framework based on large language models.

In this framework, each event chain pair is fed into a structured process that checks, according to the Prompts, whether the pair matches its assigned label. Inference models 1 to k process the data independently and validate the label; the final judgment depends on the consistency of their results, and the label with the strongest agreement is adopted as the final answer. Applying this validation procedure to the 2058 extracted event chains yielded 358 positive, 400 negative, and 271 neutral samples, i.e., 1029 event chain pairs in total.
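The consistency rule can be sketched as a vote over the k model judgments. The unanimity threshold and the three outcomes (keep, drop, or escalate to stronger reasoning models) are assumptions that mirror the description above, not the exact rule used to build TimeEchain.

```python
from collections import Counter


def validate_label(gold_label, model_votes, min_agreement=1.0):
    """Decide whether to keep, drop, or escalate a sample from k model judgments.

    model_votes: labels ("pos"/"neu"/"neg") returned by inference models 1..k
    min_agreement: fraction of models that must agree (1.0 means unanimity; assumed)
    """
    counts = Counter(model_votes)
    top_label, top_count = counts.most_common(1)[0]
    agreement = counts[gold_label] / len(model_votes)
    if agreement >= min_agreement:
        return "keep"        # models consistently confirm the assigned label
    if top_label != gold_label and top_count / len(model_votes) >= min_agreement:
        return "drop"        # models consistently contradict the assigned label
    return "escalate"        # ambiguous: send to stronger reasoning models
```

Lowering `min_agreement` (for example to 2/3) turns the rule into a majority vote, which keeps more samples at the cost of more label noise.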

The dataset’s quality was further evaluated by employing two seasoned annotators to independently assess a sample of the data. The annotators scored the labels of each sample based on the following three criteria:

Accuracy. Evaluate whether the event chain pairs and their labels correctly reflect the data content and event relationships.

Completeness. Assess whether the event chain pairs provide the necessary background for future event prediction, enabling a well-founded judgment of the labels.

Reasoning difficulty. Evaluate the degree of uncertainty in judging the label of an event chain pair, i.e., how likely a model is to confuse the target label with another label even when the correct label can be identified.

The architecture of our data validation framework, which leverages multiple LLMs to ensure consistency, is depicted in Figure 3.

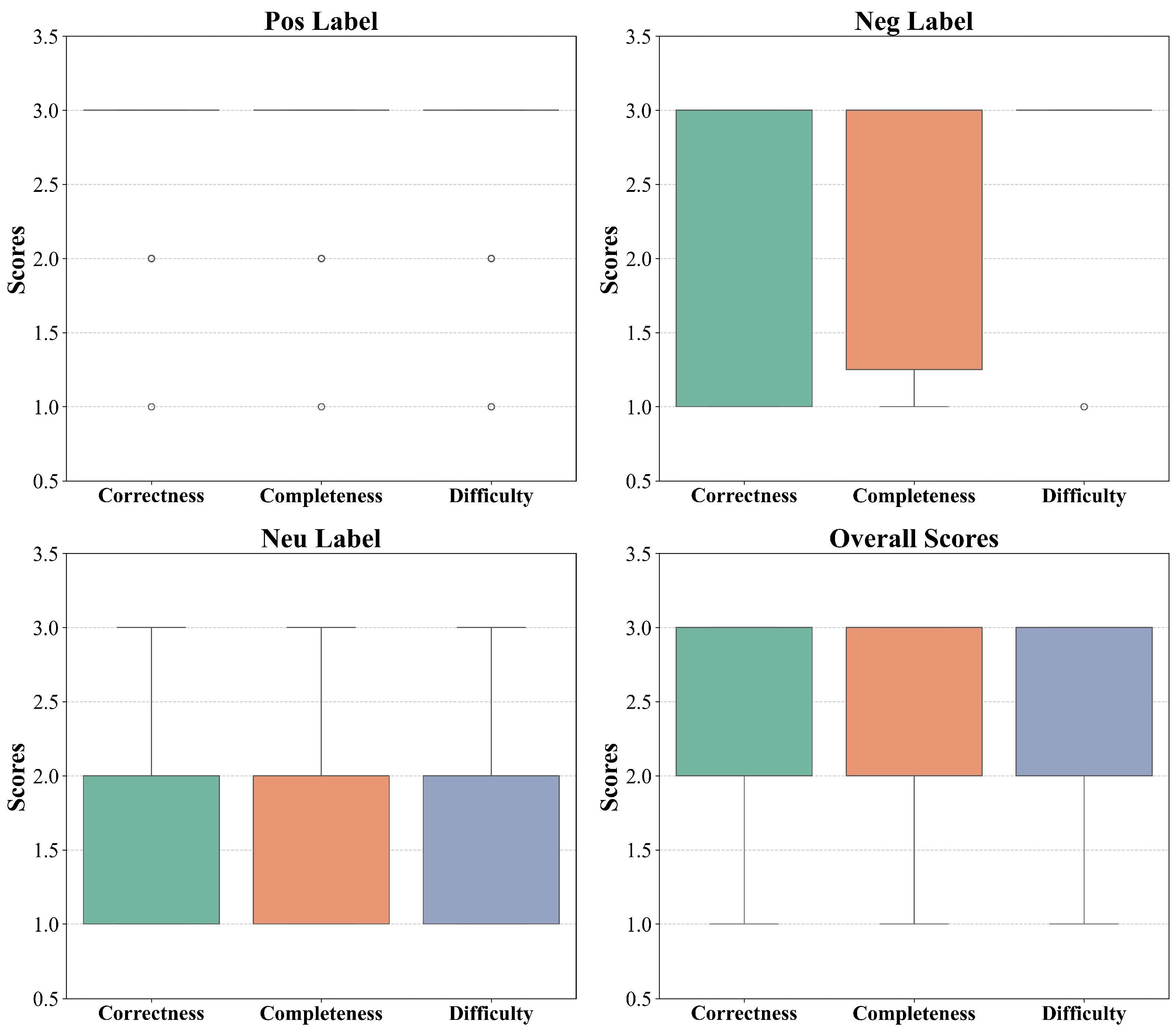

The statistical distribution of the evaluation results is shown in Figure 4. As Figure 4 shows, positive samples consistently received high scores across all criteria, indicating substantial agreement among evaluators. Negative samples also received relatively high scores but exhibited narrower score dispersion than positive samples. In contrast, neutral samples received more varied scores with lower evaluator consistency. The Cohen’s Kappa analysis in Table 3 corroborates these findings.

Table 3 reveals high Kappa values for positive and negative samples and notably lower values for neutral samples, indicating substantial disagreement among evaluators when judging neutral samples. Overall, the majority of samples scored highly across the three criteria, with particularly strong consensus among evaluators for positive and negative samples. Samples with low scores on the three criteria were excluded to maintain the dataset’s quality and standardization.
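For reference, Cohen’s Kappa for two annotators compares observed agreement $p_o$ with the agreement $p_e$ expected by chance from each annotator’s label frequencies, $\kappa = (p_o - p_e)/(1 - p_e)$. The sketch below is a plain unweighted implementation; whether the paper used a weighted variant for ordinal scores is not stated, and the scores in the usage example are made up.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters: (p_o - p_e) / (1 - p_e)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n  # observed agreement
    ca, cb = Counter(rater_a), Counter(rater_b)
    # Chance agreement from each rater's marginal label frequencies.
    p_e = sum(ca[k] * cb[k] for k in ca.keys() | cb.keys()) / (n * n)
    if p_e == 1:
        return 1.0  # degenerate case: both raters used one identical category throughout
    return (p_o - p_e) / (1 - p_e)
```

For hypothetical scores `[5, 5, 4, 4, 3, 3]` and `[5, 5, 4, 3, 3, 3]`, $p_o = 5/6$ and $p_e = 1/3$, giving $\kappa = 0.75$.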

3.3. Reasoning Framework Based on Metacognitive Theory

MPF integrates metacognitive theory into LLM reasoning tasks to enhance their predictive capabilities. Originating from Flavell’s work, metacognitive theory divides cognition into four key components: metacognitive knowledge, goals and tasks, metacognitive experiences, and strategies and actions. This study incorporates this metacognitive model into the reasoning tasks of LLMs. The proposed framework employs a four-stage cyclical reasoning process (task understanding, strategy planning, execution, and reflection) to improve the LLM’s capacity for document-level future event prediction. By identifying sub-events and leveraging relevant knowledge domains, this structured approach aims to enhance predictive capability. The reasoning framework is depicted in Figure 5.

3.3.1. Data Preprocessing

In the task analysis phase, we utilize the extensive prior knowledge of large language models to analyze the sub-events within the overall event chain and identify the relevant knowledge domains. The task analysis Prompt is structured as follows:

Data definition:

- pos: The two event chains in the pair originate from the same real-world event and have a clear temporal sequence or causal relationship.

- neg: There is no association between the two event chains in the pair. Their themes or backgrounds are completely different, and their core features do not overlap.

- neu: Event chain i is a variant of the subsequent events of event chain j. Some features are similar, while others differ significantly. The features are correlated, but there are obvious disturbing factors. Pay attention to distinguishing the disturbing factors from the characteristic factors that may arise during event development.

Event chain i:

Event chain j:

Task: Determine the relationship between event chain i and event chain j, and preliminarily identify the sub-events e occurring in event chain i and event chain j, as well as the relevant event knowledge domains.

3.3.2. Inference Generation

This stage guides the LLM to deeply understand the input data and task, plan a suitable reasoning strategy, execute the selected strategy, and reflect on the results of its execution.

Through step-by-step analytical reasoning, the temporal relationship of a given event chain pair within the same context can be obtained. To better achieve this goal, this paper seeks to foster the model’s active cognitive capacity, enabling it to analyze problems and weigh options prudently like a human expert, rather than passively following instructions or answering directly. As previously noted, merely instructing the model to “think, judge, and determine the temporal relationship of event chain i and event chain j” is insufficient. Our experiments support this claim by treating the comparison between the MPF framework and the CoT baseline as an ablation-style analysis: the CoT baseline is a strong linear reasoning approach that lacks the final metacognitive (reflection) stage, and the substantial performance gap between CoT and the complete MPF framework highlights the contribution of self-reflection. This study therefore encourages the model to reflect on its own reasoning results through an additional “rethink” Prompt.

The MPF framework first analyzes the input event chain data to accurately interpret its structure and meaning, which includes timestamps, descriptive text, and contextual event details. The Prompt template is outlined as follows:

Please distinguish the key points of data types based on the input event chain data, the definition of the data, and the task understanding.

Based on a comprehensive understanding of the task and data, the model must select appropriate reasoning strategies and formulate detailed execution plans. Determining the temporal relationships in event chains may rely on various methods, such as direct timestamp comparison, causal logic inference, or contextual event sequence analysis. This stage guides the model to select appropriate strategies and analyze key points according to the data characteristics, thereby providing a clear roadmap for subsequent reasoning. The Prompt template is as follows:

Select an appropriate strategy and plan how to determine the relationship between event chain i and event chain j.

Subsequently, the model systematically analyzes the data according to the predetermined plan, reasoning logically and making informed judgments to derive the final outcome.

According to the plan, gradually conduct analysis to judge the relationship between event chain pairs.

To ensure rigorous reasoning, the model reviews its analysis to check for omissions, incorrect assumptions, or logical loopholes. When it identifies deficiencies, the model engages in iterative reflection to refine its reasoning; to avoid infinite loops, the number of iterations is capped at a predefined limit. The Prompts in this phase are designed to stimulate the model’s reflective capabilities and improve judgment accuracy. The Prompt template is as follows:

Please determine whether there are any deficiencies in the previous round of analysis and whether further reasoning is required on this basis.
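Putting the stages together, the MPF loop can be sketched as one multi-turn conversation. The stage Prompts are the templates quoted in this section; chaining them as consecutive user turns, the hypothetical `call_llm(messages)` interface, the `is_done` stopping predicate, and the default reflection limit are all assumptions of this sketch.

```python
from typing import Callable

# Stage Prompts quoted from Section 3.3; how they are chained is assumed here.
STAGES = {
    "understand": "Please distinguish the key points of data types based on the input "
                  "event chain data, the definition of the data, and the task understanding.",
    "plan": "Select an appropriate strategy and plan how to determine the relationship "
            "between event chain i and event chain j.",
    "execute": "According to the plan, gradually conduct analysis to judge the "
               "relationship between event chain pairs.",
    "reflect": "Please determine whether there are any deficiencies in the previous round "
               "of analysis and whether further reasoning is required on this basis.",
}


def run_mpf(task_prompt: str, call_llm: Callable[[list], str],
            is_done: Callable[[str], bool], max_reflections: int = 2) -> list:
    """Run task analysis, understanding, planning, execution, then bounded reflection."""
    messages = []

    def ask(content: str) -> str:
        messages.append({"role": "user", "content": content})
        reply = call_llm(messages)  # hypothetical LLM client
        messages.append({"role": "assistant", "content": reply})
        return reply

    ask(task_prompt)  # task analysis Prompt (Section 3.3.1)
    for stage in ("understand", "plan", "execute"):
        ask(STAGES[stage])
    for _ in range(max_reflections):  # predefined limit avoids infinite loops
        if is_done(ask(STAGES["reflect"])):
            break
    return messages
```

Keeping all stages in one conversation lets the reflection step see the earlier plan and analysis, which is what the "rethink" Prompt needs in order to find deficiencies.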

3.3.3. Model Fine-Tuning Based on TimeEchain

For fine-tuning on TimeEchain, Grok3 is employed to generate inferences on the event chain data. The complete TimeEchain dataset was partitioned into training, validation, and test sets at a strict 8:1:1 ratio. The high-quality generated inference data were then used to fine-tune the Qwen2.5-7B and Qwen2.5-14B models, producing the EChainQwen-7B and EChainQwen-14B variants. Fine-tuning uses the LLaMA-Factory framework with LoRA (Low-Rank Adaptation), enabling the models to adapt to the event inference task on event chain pairs.
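An 8:1:1 partition can be sketched as a seeded shuffle followed by slicing. This is a plain random split under assumed settings (seed 42, remainder assigned to the test set); the paper does not say whether its split was stratified by label.

```python
import random


def split_811(samples, seed=42):
    """Shuffle, then split into train/validation/test at 8:1:1 (remainder goes to test)."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 8 // 10  # integer arithmetic avoids float rounding surprises
    n_val = len(items) // 10
    return (
        items[:n_train],
        items[n_train:n_train + n_val],
        items[n_train + n_val:],
    )
```

For example, 1029 items split into 823, 102, and 104. With three imbalanced labels, a stratified variant that applies the same split within each label would keep the class ratios equal across the three sets.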

The hyperparameters were set as follows: a learning rate of 0.00001, 5 training epochs, a batch size of 8, a LoRA rank of 8, a LoRA alpha of 32, a LoRA dropout of 0.05, and a maximum of 32,768 tokens. The model was trained on an NVIDIA H800 PCIe (NVIDIA Corporation, Santa Clara, CA, USA).
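As a worked illustration of what the LoRA settings imply, the standard LoRA formulation keeps a pretrained weight $W_0$ frozen and adds a trainable low-rank update scaled by $\alpha / r$. This assumes LLaMA-Factory applies the default scaling of the Hugging Face PEFT library (`lora_alpha / r`); the $4096 \times 4096$ matrix below is a hypothetical size chosen for arithmetic, not a Qwen2.5 dimension taken from the paper.

```latex
h = W_0 x + \frac{\alpha}{r} B A x,
\qquad W_0 \in \mathbb{R}^{d \times k},\;
B \in \mathbb{R}^{d \times r},\;
A \in \mathbb{R}^{r \times k}

\frac{\alpha}{r} = \frac{32}{8} = 4,
\qquad
\frac{r(d + k)}{dk}\bigg|_{d = k = 4096,\; r = 8}
= \frac{8 \cdot 8192}{4096^2}
= \frac{65{,}536}{16{,}777{,}216}
\approx 0.39\%
```

With rank 8, each adapted matrix of this hypothetical size trains under 0.4% of its original parameter count, which is why LoRA fine-tuning of 7B and 14B models fits on a single GPU.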

Specifically, the inference output generated by Grok3 contains logical relationships between events, causal inferences, and time-series information. These data are used as pseudo-labels and combined with the original dataset to enhance training. During fine-tuning, LoRA efficiently adjusts the model parameters to optimize performance on the future event prediction task. Experimental results indicate that Grok3’s inference output on the TimeEchain event chains markedly improves the training of smaller models: the comprehensive metrics of EChainQwen-14B and EChainQwen-7B on future event prediction tasks improve by approximately 7.58% and 7.64%, respectively.

4. Experiments

4.1. Benchmark Model

In this study, the following models were used for data construction, data validation, and inference prediction. The language models employed can be broadly classified into three categories: small, medium, and large. Small language models, such as Qwen2.5-7B and Qwen2.5-14B, have fewer parameters than the DeepSeek-V3 model. Medium language models, such as Grok2, offer moderate reasoning capabilities and are well suited to a range of data processing and analysis tasks. Large models include Grok3, OpenAI’s o1 and GPT-4.1, and Google’s Gemini 2.5 Pro, which their developers regard as flagship or cutting-edge models owing to their advanced reasoning capabilities for intricate applications.

Qwen2.5-7B and Qwen2.5-14B [61]: Language models with 7 billion and 14 billion parameters, respectively, developed by the Qwen team at Alibaba Cloud. They support multiple languages and handle extended contexts of up to 128K tokens. The models excel at following instructions, generating long texts, processing structured data, and producing structured outputs.

Grok2 [62]: Grok2 is a model with moderate reasoning ability launched by xAI, demonstrating excellent performance in data processing and analysis. It efficiently mines and interprets diverse data types, helping users extract valuable insights from large datasets.

Grok3 [63]: Grok3 is positioned as xAI’s latest and more powerful model, excelling in enterprise applications such as data extraction, coding, and text summarization, with comprehensive expertise across various professional domains.

o1 [64]: The latest and strongest model family from OpenAI, o1 is designed to spend more time thinking before responding. The o1 model series is trained with large-scale reinforcement learning to reason using chain of thought.

GPT-4.1 [65]: OpenAI’s flagship general-purpose model, released on 14 April 2025. It excels at solving complex tasks and is particularly well suited to cross-domain problem-solving.

Gemini 2.5 Pro [66]: Google’s state-of-the-art AI model engineered for sophisticated reasoning, programming, mathematics, and scientific applications. Endowed with robust cognitive capabilities, it delivers more accurate and contextually nuanced responses, enabling advanced reasoning and problem-solving across a wide range of academic and technical domains.

Mistral-small-3.1 [67]: Mistral AI’s highly efficient model designed for high-volume, low-latency tasks. It offers a strong balance between performance and cost, making it ideal for applications such as classification, summarization, and function calling that require rapid response times.

claude-3.5-haiku [68]: As part of Anthropic’s Claude 3.5 model family, Haiku is the fastest and most compact model, optimized for near-instant responsiveness. It is designed for simple queries, content moderation, and customer service applications where speed is paramount, while benefiting from the advanced reasoning and vision capabilities of the Claude 3.5 generation.

The aforementioned models were chosen to encompass a broad spectrum of performance and parameter sizes, facilitating a thorough assessment of their strengths and weaknesses.

4.2. Benchmark Method

Prompt [69]: The most basic zero-shot Prompting method. It directly issues a task instruction to the model and requests an immediate final judgment based on its existing knowledge, without guiding it through intermediate reasoning steps.

Determine the relationship between event chain i and event chain j?

CoT [70]: Chain-of-Thought (CoT) is a Prompting strategy that guides large language models to solve complex reasoning tasks. By adding instructions such as “Think step-by-step” to the Prompt, it encourages the model to output a coherent, step-by-step reasoning process before giving the final answer.

Please reason step-by-step and determine the relationship between event chain i and event chain j?

MPF: The Metacognitive Prompting Framework (MPF) is a structured reasoning method proposed in this study based on metacognitive theory. It does not rely on a single Prompt but guides the model to conduct deeper, human-expert-like thinking through a four-stage cyclical process that includes task understanding, strategy planning, execution, and reflection. For details, refer to Section 3.3.

4.3. Evaluation Indicators

This study used standardized metrics to assess the model’s performance in predicting future events, focusing on accuracy and on the precision, recall, and F1 score of each category. These metrics were calculated independently for each label type (neutral, negative, and positive) to provide a detailed evaluation of the model’s efficacy across categories.

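For each category c, the metrics are defined as follows, where $TP_c$, $FP_c$, and $FN_c$ denote the numbers of true positives, false positives, and false negatives for category c:

$$\mathrm{Precision}_c = \frac{TP_c}{TP_c + FP_c}, \quad \mathrm{Recall}_c = \frac{TP_c}{TP_c + FN_c}, \quad \mathrm{F1}_c = \frac{2 \cdot \mathrm{Precision}_c \cdot \mathrm{Recall}_c}{\mathrm{Precision}_c + \mathrm{Recall}_c}$$

To comprehensively evaluate the overall performance of the model on the classification task, this study further calculated overall indicators. Among them, Accuracy measures the overall proportion of correct predictions and is defined as follows:

$$\mathrm{Accuracy} = \frac{\text{number of correctly predicted samples}}{\text{total number of samples}}$$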

In this study, the overall precision, recall, and F1 scores are macro-averaged rather than computed by pooling all samples. The per-category metrics for Neu, Neg, and Pos are reported separately, without weighting or averaging. The three macro-averaged metrics, Macro-Precision, Macro-Recall, and Macro-F1, are obtained by computing each metric for every category and then taking the unweighted mean, $\text{Macro-}M = \frac{1}{|C|}\sum_{c \in C} M_c$, where $M_c$ is the value of metric $M$ for category $c$ and $C = \{\text{Neu}, \text{Neg}, \text{Pos}\}$. Because every category carries equal weight, this approach mitigates bias from category imbalance.
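The per-category and macro-averaged metrics described in this section can be computed with a few lines of plain Python. The sketch below is a minimal illustration, not the evaluation code used in this study; the function name `evaluate_predictions` and the convention of scoring a 0/0 ratio as 0.0 are assumptions.

```python
LABELS = ("Neu", "Neg", "Pos")


def evaluate_predictions(y_true, y_pred, labels=LABELS):
    """Return per-class precision/recall/F1, overall accuracy, and unweighted macro averages."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    per_class = {}
    for c in labels:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        # Convention (assumption): a 0/0 ratio is scored as 0.0.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[c] = {"precision": precision, "recall": recall, "f1": f1}
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Macro averages: unweighted mean over categories, so each class counts equally.
    macro = {m: sum(per_class[c][m] for c in labels) / len(labels)
             for m in ("precision", "recall", "f1")}
    return per_class, accuracy, macro


per_class, acc, macro = evaluate_predictions(
    ["Pos", "Pos", "Neg", "Neu"], ["Pos", "Neg", "Neg", "Pos"])
print(acc, round(macro["f1"], 4))
```

On this toy input the accuracy is 0.5, while the macro-F1 is only about 0.389 because the single neutral sample is missed entirely (Neu F1 = 0). Macro averaging is built to expose exactly this kind of weakness on a minority class. For reference, scikit-learn's `f1_score` with `average="macro"` computes the same unweighted mean of per-class F1 scores.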

4.4. Experimental Results

This section systematically examines the impact of different reasoning approaches, event knowledge graphs, and model architectures on future event prediction performance. The experimental results show that the proposed MPF framework outperforms the other methods in document-level future event prediction, and that incorporating event knowledge graphs notably improves the performance of simple prompting, chain-of-thought reasoning, and MPF reasoning. Furthermore, fine-tuning small language models with event chain knowledge substantially improves their prediction accuracy, allowing them to approach or even surpass untuned large language models on event prediction tasks.

4.4.1. The Effect of MPF Reasoning Framework on the Performance of Future Event Prediction

The study evaluated three inference methods, Prompt, CoT, and the proposed MPF, on the document-level future event prediction task using the Qwen-7B model. As shown in Table 4, performance improves steadily from one method to the next: Prompt performs worst overall, CoT improves on it, and MPF achieves the best precision, recall, and F1 score. Specifically, prediction accuracy increases from 0.6352 with Prompt to 0.6817 with CoT and further to 0.7362 with MPF, absolute gains of 10.10 and 5.45 percentage points over Prompt and CoT, respectively.
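Because "improvement" can mean either an absolute or a relative change, the short script below restates the accuracy gains reported above in both forms. It only re-derives numbers already given in the text; the helper name `gain` is an illustrative choice.

```python
# Accuracy of Qwen-7B under each inference method, as reported in Section 4.4.1 (Table 4).
accuracy = {"Prompt": 0.6352, "CoT": 0.6817, "MPF": 0.7362}


def gain(new: float, old: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    diff = new - old
    return diff * 100, diff / old * 100


for baseline in ("Prompt", "CoT"):
    abs_pp, rel_pct = gain(accuracy["MPF"], accuracy[baseline])
    print(f"MPF vs {baseline}: +{abs_pp:.2f} pp ({rel_pct:.2f}% relative)")
```

This prints +10.10 pp (15.90% relative) against Prompt and +5.45 pp (7.99% relative) against CoT.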

Table 4, in which the best value for each metric is shown in bold, also permits a per-category comparison. All three inference methods show limited predictive capability for the neutral category: even MPF, the most effective method, achieves an F1 score of only 0.4093 on neutral events, far below its scores of 0.7990 on positive and 0.8478 on negative samples. This suggests that distinguishing and accurately predicting neutral events remains a major challenge for existing methods.

4.4.2. The Effect of Event Knowledge Graph on the Performance of Future Event Prediction

This study assesses the impact of integrating an event knowledge graph into the three reasoning frameworks for future event prediction. As shown in Table 5, where * denotes a method augmented with the event knowledge graph, the knowledge graph consistently improves performance for Prompt, CoT, and MPF. Specifically, the overall F1 score increases from 0.6033 with Prompt* to 0.6854 with CoT*, and further to 0.7161 with MPF*.

Comparing Table 4 with Table 5 confirms these improvements: each method predicts more accurately once the knowledge graph is integrated. Notably, the overall F1 score of MPF rises from 0.6854 without the knowledge graph to 0.7161 with it.
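As a quick consistency check, averaging the three per-class F1 scores reported in Section 4.4.1 for MPF without the knowledge graph reproduces the 0.6854 baseline quoted above. This agrees with the overall F1 being the unweighted macro average defined in Section 4.3.

```python
# Per-class F1 scores of MPF without the knowledge graph (Table 4), as quoted in Section 4.4.1.
mpf_f1 = {"Neu": 0.4093, "Pos": 0.7990, "Neg": 0.8478}

# Macro-F1: unweighted mean of the per-class F1 scores (Section 4.3).
macro_f1 = sum(mpf_f1.values()) / len(mpf_f1)
print(f"{macro_f1:.4f}")  # 0.6854
```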

A closer analysis of the results in Table 5 reveals a key advantage of MPF* that goes beyond the overall metrics: it effectively mitigates the "positive prediction bias" of the baseline methods. Although Prompt* and CoT* achieve high F1 scores on the "Pos" category, this is largely attributable to their tendency to default to a positive prediction for ambiguous or uncertain samples, as evidenced by the near-perfect recall of 0.9972 that Prompt* obtains on positive examples. While this bias inflates the metric for a single category, it severely compromises the models' ability to identify neutral and negative samples, making them unreliable in a holistic evaluation. In contrast, MPF* shows markedly more balanced and robust predictive capability: rather than maximizing performance on one category, it improves recognition across all classes, particularly the more challenging ones. Specifically, MPF* raises the F1 score for the "Neu" category to 0.4928, a relative increase of over 20% compared with CoT*, and achieves the highest F1 score among the compared methods, 0.9010, on the "Neg" category. This balance across categories indicates that MPF* bases its judgments on evidence-based reasoning rather than on a default predictive bias. Consequently, although its recall on positive samples is slightly lower than that of the biased baselines, its substantial gains in the other categories point to a more reliable and generalizable reasoning process.
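The effect described above, where high recall on one class hides weak overall performance, can be reproduced on a tiny hypothetical example. The labels below are invented for illustration and are not drawn from TimeEchain. One predictor falls back to "Pos" whenever it is unsure, and the other spreads its errors more evenly.

```python
def f1_and_recall(y_true, y_pred, label):
    """F1 and recall for one class (0/0 ratios scored as 0.0)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return f1, recall


def macro_f1(y_true, y_pred, labels=("Neu", "Neg", "Pos")):
    """Unweighted mean of per-class F1 scores."""
    return sum(f1_and_recall(y_true, y_pred, c)[0] for c in labels) / len(labels)


# Hypothetical toy gold labels: 4 positive, 3 negative, 3 neutral samples.
gold = ["Pos"] * 4 + ["Neg"] * 3 + ["Neu"] * 3
# Biased predictor: falls back to "Pos" on uncertain cases (one negative, all neutrals).
biased = ["Pos"] * 4 + ["Neg", "Neg", "Pos"] + ["Pos"] * 3
# Balanced predictor: misses one positive but recovers two of the three neutrals.
balanced = ["Pos", "Pos", "Pos", "Neu"] + ["Neg"] * 3 + ["Neu", "Neu", "Pos"]

for name, pred in (("biased", biased), ("balanced", balanced)):
    _, pos_recall = f1_and_recall(gold, pred, "Pos")
    print(f"{name}: Pos recall = {pos_recall:.2f}, macro-F1 = {macro_f1(gold, pred):.3f}")
```

The biased predictor reaches perfect positive recall (1.00) but a macro-F1 of only 0.489, because its neutral F1 is zero. The balanced predictor has lower positive recall (0.75) but a macro-F1 of 0.806.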

4.4.3. The Effect of Model Fine-Tuning Based on TimeEchain

This study also evaluated the effect of model fine-tuning on future event prediction performance. Two models, EChainQwen-7B and EChainQwen-14B, were obtained by fine-tuning the smaller language models Qwen-7B and Qwen-14B, respectively, on event prediction knowledge derived from the Grok-3 model.
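The text does not specify how Grok-3's event prediction knowledge was packaged for fine-tuning. One common way to transfer knowledge from a teacher model to a student model is to keep only those teacher rationales whose final label matches the gold label and to write them as prompt/response pairs for supervised fine-tuning. The sketch below illustrates that idea under those assumptions. The `TeacherSample` schema, its field names, the response format, and the JSONL layout are all hypothetical and do not describe the TimeEchain format or the authors' pipeline.

```python
import json
from dataclasses import dataclass


@dataclass
class TeacherSample:
    """One event-chain pair with its gold label and a teacher model's output (hypothetical schema)."""
    chain_i: str
    chain_j: str
    label: str              # gold label: "positive", "negative", or "neutral"
    teacher_reasoning: str  # rationale generated by the teacher (e.g. Grok-3)
    teacher_label: str      # label the teacher predicted


def to_sft_records(samples):
    """Keep teacher outputs whose label matches the gold label; format them as prompt/response pairs."""
    records = []
    for s in samples:
        if s.teacher_label != s.label:
            continue  # discard rationales that end in a wrong answer
        prompt = (f"Event chain i: {s.chain_i}\nEvent chain j: {s.chain_j}\n"
                  "Determine the relationship between event chain i and event chain j?")
        response = f"{s.teacher_reasoning}\nAnswer: {s.label}"
        records.append({"prompt": prompt, "response": response})
    return records


def write_jsonl(records, path):
    """Write one JSON object per line, a common input format for fine-tuning scripts."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
```

Filtering on agreement with the gold label is a design choice meant to keep the student from imitating rationales that reach wrong conclusions. Whether the authors applied such a filter is not stated.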

Table 6 presents a comparative analysis of the models’ overall prediction performance before and after the fine-tuning process.

As Table 6 shows, fine-tuning yields substantial performance gains for the EChainQwen models; the symbol ↑ marks an improvement over the corresponding baseline model. The macro-averaged F1 of EChainQwen-7B reaches 0.7618, a 4.23% improvement over Qwen-2.5-7B, and the accuracy of EChainQwen-14B improves by 8.42%. Notably, these gains are not confined to accuracy: prediction also improves simultaneously for the neutral, positive, and negative categories.

4.4.4. Comprehensive Performance Comparison Among Various Language Models

Section 4.4.4 evaluates the performance of various language models in the task of predicting future events.

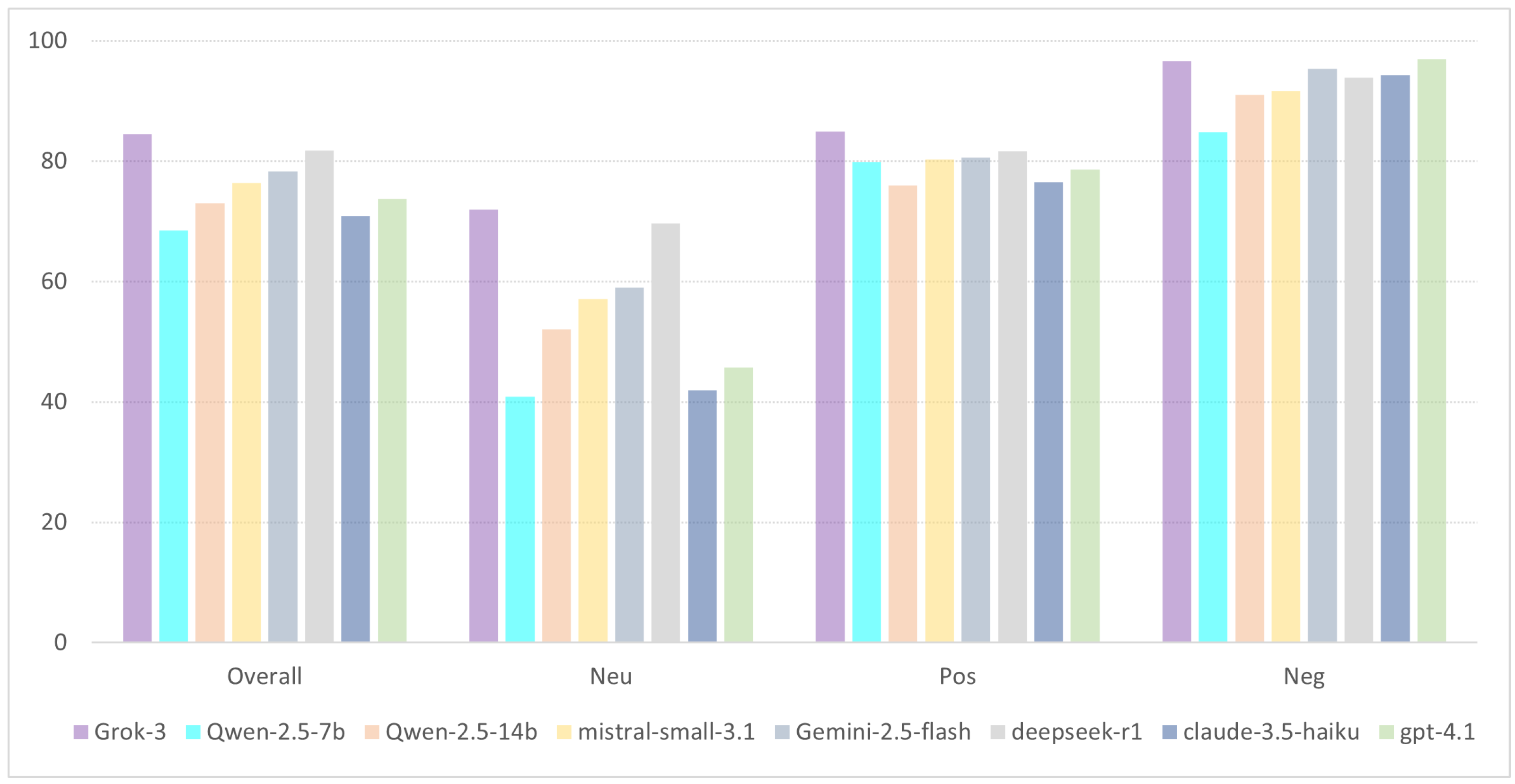

Table 7 presents the macro-average F1 scores and the per-category F1 scores for the neutral, positive, and negative classes across the various language models. The results reveal notable performance differences: larger models and those with more extensive pre-training generally perform better overall. For example, Qwen-7B, which has a relatively modest parameter count and is not fine-tuned, achieves an overall F1 score of 0.7161, whereas Qwen-14B, with twice as many parameters, raises the overall F1 score to around 0.73. Google's Gemini-2.5 attains an overall F1 score of nearly 0.79, and xAI's Grok-3 performs best, at approximately 0.85. GPT-4.1, in contrast, scores lower, at about 0.74.

Model performance also varies substantially across event categories: predictions are most accurate for negative events, whereas neutral events remain a considerable challenge. Models consistently achieve their highest F1 scores on negative events, with Grok-3 reaching 0.9665 in this category. This may stem from the higher frequency of negative events in the dataset or from their distinctive linguistic features, which make them easier for models to differentiate. By contrast, F1 scores for neutral events are markedly lower than for positive and negative events, likely because neutral events lack a pronounced emotional or outcome orientation. For instance, Qwen-7B achieves an F1 score of 0.4928 on neutral events, compared with 0.9010 on negative events. Even the top-performing Grok-3 reaches only 0.7202 in the neutral category, well below its 0.9665 in the negative category.

Figure 6 provides a visual comparison of these findings.

In the cross-model comparison, the small EChainQwen models are highly competitive with larger models. EChainQwen-14B ranks among the top open-source models with a macro-average F1 of 0.8057, substantially outperforming GPT-4.1, which has no task-specific training. This suggests that small and medium-sized language models enhanced with event chain knowledge can surpass larger general-purpose pre-trained models on specific reasoning tasks. However, some of the most advanced closed-source or semi-open-source models still lead on this task: Grok-3 from xAI achieves a macro-F1 of 0.8453, and DeepSeek-R1 scores 0.8176, slightly above EChainQwen-14B. These top models may benefit from broader domain knowledge absorbed during pre-training or from larger parameter scales. Nonetheless, by incorporating reasoning knowledge from a high-parameter model, EChainQwen-14B narrows the gap to these models to single-digit percentage points, highlighting the effectiveness of reasoning-based fine-tuning with the MPF framework. EChainQwen-7B likewise achieves a macro-average F1 score of 0.7618, surpassing baselines such as the general-purpose GPT-4.1, which emphasizes code and mathematics.
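The "single-digit percentage points" claim can be checked directly against the macro-F1 scores quoted in this subsection. The script below computes the gaps in percentage points and uses only the exact values given in the text; the approximate scores for GPT-4.1, Gemini-2.5, and Qwen-14B are left out.

```python
# Macro-F1 scores quoted in Section 4.4.4 (Table 7); approximate values are omitted.
macro_f1 = {
    "Grok-3": 0.8453,
    "DeepSeek-R1": 0.8176,
    "EChainQwen-14B": 0.8057,
    "EChainQwen-7B": 0.7618,
}


def gap_pp(leader: str, follower: str) -> float:
    """Macro-F1 gap between two models, in percentage points, rounded to two decimals."""
    return round((macro_f1[leader] - macro_f1[follower]) * 100, 2)


for top in ("Grok-3", "DeepSeek-R1"):
    print(f"{top} leads EChainQwen-14B by {gap_pp(top, 'EChainQwen-14B')} points")
```

EChainQwen-14B trails Grok-3 by 3.96 points and DeepSeek-R1 by 1.19 points, and it leads EChainQwen-7B by 4.39 points.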

4.5. Interpretability Evaluation

To assess the quality and reliability of the explanations produced by the MPF framework, a subjective evaluation based on a 5-point Likert scale was devised, on which evaluators rate each explanation from 1 (lowest quality) to 5 (highest quality). This allows subjective judgments of explanation quality to be quantified. Explanations were rated along the following three dimensions:

Logical Reasoning (L): Assesses the clarity and reasonableness of the reasoning process underlying the generated explanation. A high score indicates that the reasoning is coherent, rigorous, and consistent with common sense and the event context.

Explanation Correctness (E): Assesses whether the explanation is factually correct, that is, whether its statements about the events and the conclusion it supports are accurate. A high score indicates that the explanation is precise and free of factual errors.

Information Relevance (I): Assesses how effectively the explanation draws on key information from the events, including whether it integrates the actual event content into its reasoning and whether it contains "hallucinations." A high score indicates that the explanation is closely grounded in the event data and free of fabricated content.

To improve the objectivity of the results, a dual evaluation approach was adopted: GPT-4.1 scored the model outputs automatically, and several human evaluators independently assessed 50 samples from the test set, with their scores averaged to reduce individual bias. In addition, to establish a gold standard, we introduced a set of high-quality explanations written by human experts as an ideal reference, labeled "Human-written Benchmark" in Table 8; this benchmark represents the highest achievable score on each evaluation dimension. The MPF framework was compared with the baseline techniques on every dimension, and the results of the interpretability evaluation are shown in Table 8.
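The text states only that human ratings were averaged to reduce individual bias. The sketch below shows one way to do this, assuming ratings are first averaged within each evaluator and then across evaluators so that every evaluator carries equal weight. The data layout, evaluator names, and toy ratings are hypothetical; the paper reports only the resulting means in Table 8.

```python
from statistics import mean

DIMENSIONS = ("L", "E", "I")


def mean_scores(ratings):
    """Average 1-5 Likert ratings per dimension.

    `ratings` maps evaluator -> list of per-sample dicts such as {"L": 5, "E": 4, "I": 5}.
    Scores are averaged within each evaluator first, then across evaluators.
    """
    for per_sample in ratings.values():
        for r in per_sample:
            for d in DIMENSIONS:
                if not 1 <= r[d] <= 5:
                    raise ValueError(f"rating out of range: {r[d]}")
    return {
        d: mean(mean(r[d] for r in per_sample) for per_sample in ratings.values())
        for d in DIMENSIONS
    }


# Toy example with two hypothetical evaluators rating two samples each.
toy = {
    "rater_1": [{"L": 5, "E": 4, "I": 5}, {"L": 4, "E": 5, "I": 5}],
    "rater_2": [{"L": 5, "E": 5, "I": 4}, {"L": 4, "E": 4, "I": 5}],
}
print(mean_scores(toy))
```

When every evaluator rates the same number of samples, as with the 50 test samples here, averaging within evaluators first gives the same result as a single pooled mean. The two-step form only matters if some evaluators rate more samples than others.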


Table 8 shows that the MPF framework produces clearly better explanations than the baseline methods on every dimension. Under GPT-4.1 automated scoring, MPF achieves 4.85, 4.67, and 4.83 on dimensions L, E, and I, respectively, close to the score of 5 obtained by the human-written explanations, whereas Prompt and CoT score markedly lower, particularly on L and I. In the human evaluation, MPF scores 4.66, 4.7, and 4.93 on the same dimensions, close to the human-written benchmark (Oracle) scores of 4.9, 5, and 4.97. The baseline methods, by contrast, score considerably lower under human evaluation; for instance, Prompt scores 2.87 on L and CoT scores 3.4 on E. This indicates that automated assessment may overstate the effectiveness of the baselines and highlights the importance of human judgment in evaluating explanation quality. Overall, MPF generates logically coherent, factually precise, and highly relevant explanations of event data, demonstrating its robustness and effectiveness.

4.6. Case Study

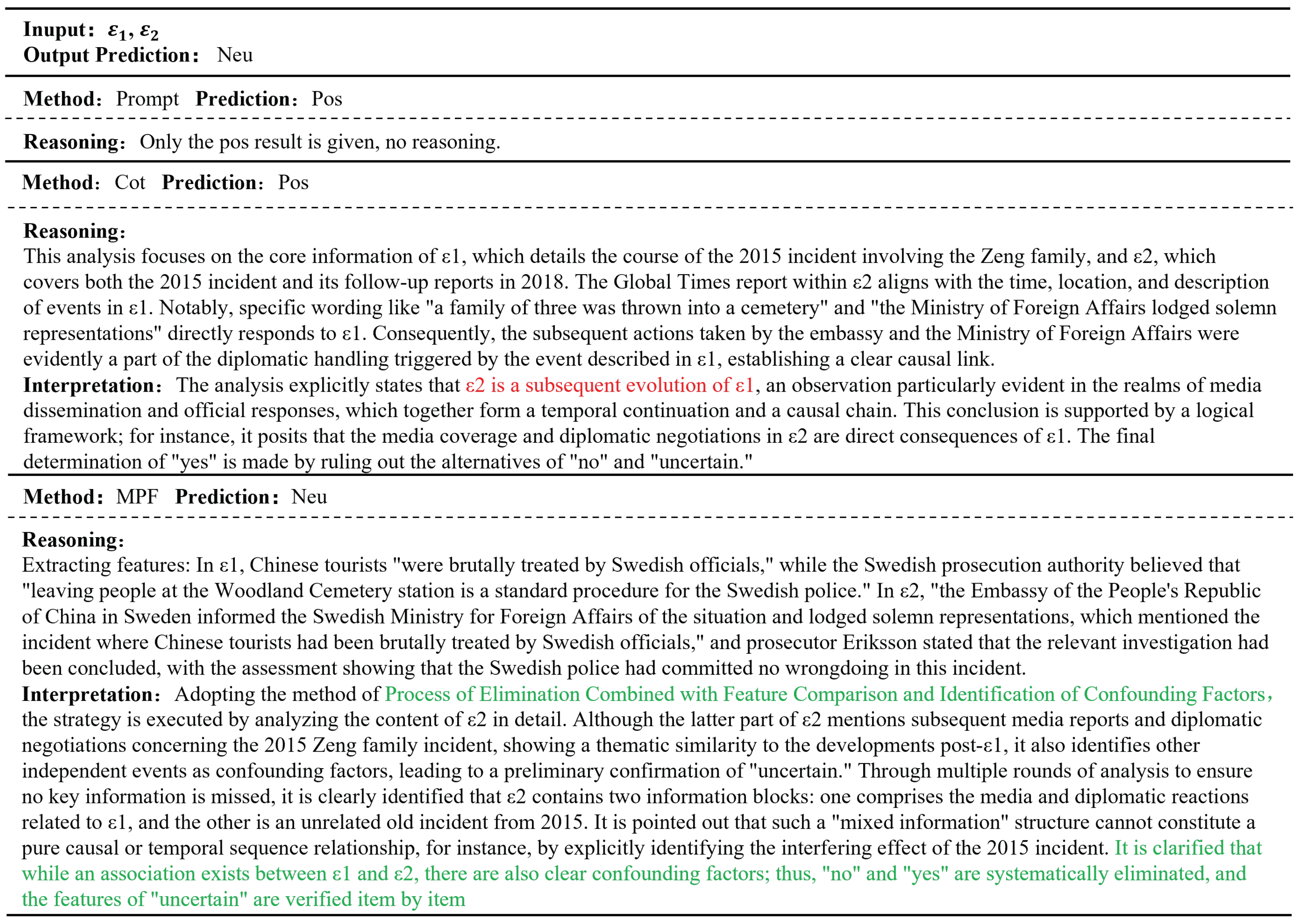

To validate the inference effectiveness and interpretability of the MPF framework, a specific case from the dataset was selected for analysis. This case involves the complex relationship between two event chains. One chain includes both direct subsequent developments and unrelated historical data, presenting a clear challenge for prediction. The analysis focuses on a multi-year timespan encompassing various event types, such as civil disputes, diplomatic developments, and media reactions. The relationship of the event chains in this case was classified as “neutral.”

The original text of the case contains 882 tokens. It describes a dispute between a Chinese tourist, Mr. Zeng, and a Swedish hostel in September 2018, which escalated into a conflict involving the police and prompted a diplomatic response from the Chinese Embassy in Sweden. The case comprises two interrelated event chains: the initial civil dispute over hostel check-in, and the subsequent reactions from various parties, including media coverage and diplomatic representations. Because the case spans several years, from 2015 to 2018, the event reasoning is more complex. Overall, the case illustrates how a localized incident evolved into a diplomatic issue between China and Sweden.

The primary challenge in this case is that the event chain describing the subsequent reactions is not a straightforward continuation of the chain describing the initial dispute. It also contains misleading historical information, so the relationship between the two event chains is classified as "neutral." This intertwining of past and present events places significant demands on the model's ability to identify genuine associations and filter out interfering information, reflecting the complexity of real-world event prediction. Comparing how each method judges the relationship between this pair of event chains demonstrates their predictive and interpretative effectiveness.

Section 4.6 evaluates the prediction results and reasoning mechanisms of three methods: Prompt, CoT, and MPF. It further examines the theoretical foundations and logical underpinnings of the reasoning outputs generated by each method, and discusses potential limitations of the respective reasoning frameworks.

Figure 7 provides a comparative overview of the reasoning processes and outcomes across the three methods.

Figure 7 reveals that the errors of the Prompt and CoT methods stem from their inability to process complex, mixed information. The Prompt method provides no reasoning process and simply outputs "positive," entirely ignoring the interfering 2015 event contained in the chain describing the subsequent reactions. The CoT method does outline a reasoning process and identifies the causal link between the subsequent media and diplomatic reports, but it fails to recognize the 2015 event as independent and irrelevant. Instead, it erroneously treats that entire chain as a direct continuation of the initial-dispute chain, leading to a flawed "positive" conclusion, as highlighted by the red text in Figure 7.

The success of MPF hinges on its self-reflection and cognitive strategies. Building on its task understanding and analysis, MPF applies an "elimination method combined with feature comparison and interference factor identification," as shown by the green text in Figure 7. This strategy isolates the irrelevant 2015 event from the chain describing the subsequent reactions. By recognizing this "information confounding," MPF determines that the relationship is neither purely "positive" nor "negative" and reaches the correct conclusion of "neutral."

5. Conclusions

This study proposes a document-level future event prediction method that integrates event knowledge graph feature representation with LLM temporal reasoning. First, a dataset named TimeEchain is developed for document-level future event prediction, using an LLM-based strategy to generate extensive event chains from the original text. Second, to better capture the intricate progression of document-level events, an event knowledge graph is constructed by using LLMs to extract key event features, including event type, time, location, trigger words, and causal relationships. Third, a reasoning framework based on metacognitive theory is proposed to guide LLMs through knowledge acquisition, task comprehension, strategy planning, strategy execution, and strategy reflection. The framework leverages the event knowledge graph to enhance the ability of LLMs to predict future events.

Extensive experiments were conducted using Accuracy, macro-average precision, macro-average recall, and macro-average F1 score as evaluation metrics. The results show that integrating the event knowledge graph substantially improves the performance of basic prompting, chain-of-thought reasoning, and MPF reasoning. The experiments also compare a range of LLMs on future event prediction and indicate that the proposed MPF framework markedly enhances their ability to predict future events. This approach holds promise for helping policymakers develop real-time event prediction systems, such as public opinion monitoring and early disaster warning, and for exploring paradigms of collaborative decision-making between humans and machines.

We acknowledge that the current study has certain limitations. First, the generalizability of the proposed model still needs to be validated on a broader range of datasets. Second, our data sources are restricted to text and do not extend to multimodal data. Third, the construction of the event graph relied heavily on LLMs; we did not quantitatively analyze potential error propagation, nor did we manually verify or filter the extracted graph.

In future work, we plan to address these issues by integrating dynamic temporal modeling with multimodal features from images, videos, audio, and structured data, with the specific aim of improving the model's reasoning in scenarios involving complex causal relationships and long sequences. We also plan to add mechanisms that block error propagation in event schema modeling, supported by manual verification and by verification with smaller models. Additionally, incorporating insights from cognitive science and causal inference theory could improve the model's interpretability, fairness, and robustness. These directions promise to increase the utility of LLMs for intricate temporal reasoning tasks.