Grasping in Shared Virtual Environments: Toward Realistic Human–Object Interaction Through Review-Based Modeling

Abstract

1. Introduction

- 1.

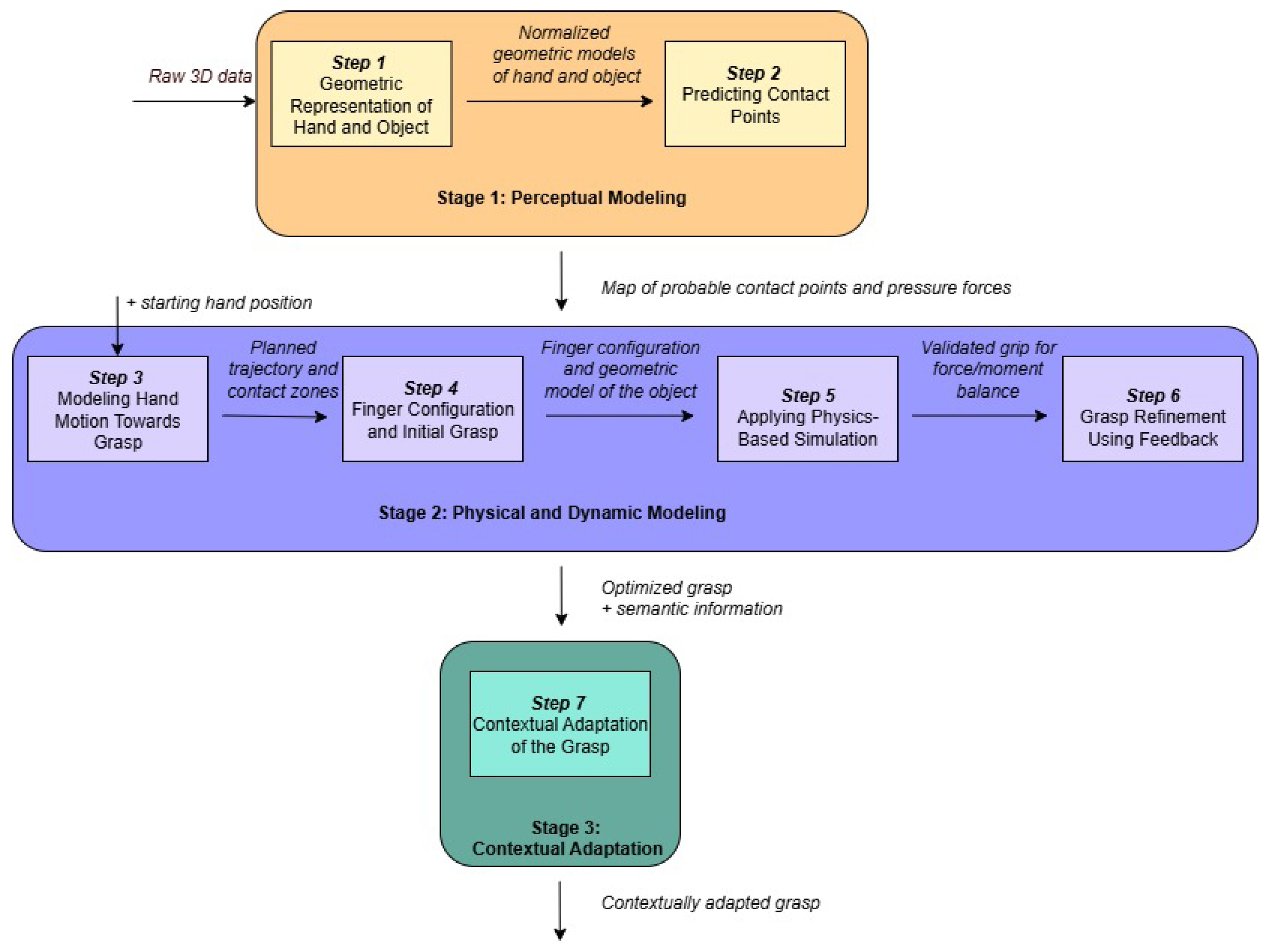

- A seven-step grasping framework: We propose a multi-layered grasping model that combines biomechanical, perceptual, physical, and contextual processes. Our framework differs from others because it combines the layers of object geometry and physics into a single sequence, making human–object interactions more realistic and adaptive.

- 2.

- Addressing technical issues: The proposed framework methodically addresses a number of important technical issues. We consider the latency and synchronization of multimodal feedback. We propose ways to address the computational complexity of real-time physics-based simulations. The model takes into account data limitations, such as data set bias and lack of diversity. We explore approaches to modeling deformable and composite objects that have not been extensively addressed in the majority of the current literature.

- 3.

- Real-World Effects: Our conceptual framework supports real-world applications in areas where safe and realistic interactions are crucial, by connecting grasping modeling to the Tactile Internet and low-latency communication over 6G.

2. Modeling of the Grasping Process

- Stage 1: Perceptual Modeling (Steps 1–2)—representation of the hand and the object and identification of contact candidates;

- Stage 2: Physical and Dynamic Modeling (Steps 3–6)—simulation of the movement, applying physics, and refining the grip through feedback;

- Stage 3: Contextual Adaptation (Step 7)—adjustment of the grip according to the scene, the capabilities of the object, or the goals of the task.
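
The grouping of the seven steps into three stages can be written down as a small lookup structure. The following is only an illustrative sketch: the names `Stage`, `Step`, `STEPS`, and `steps_in` are hypothetical and not part of the framework itself; the step titles are taken from the subsection headings 2.1 to 2.7.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    """The three stages of the grasping process (hypothetical naming)."""
    PERCEPTUAL = "Perceptual Modeling"
    PHYSICAL_DYNAMIC = "Physical and Dynamic Modeling"
    CONTEXTUAL = "Contextual Adaptation"


@dataclass(frozen=True)
class Step:
    number: int
    title: str
    stage: Stage


# Step titles follow the subsection headings 2.1-2.7.
STEPS = (
    Step(1, "Geometric Representation of Hand and Object", Stage.PERCEPTUAL),
    Step(2, "Predicting Contact Points", Stage.PERCEPTUAL),
    Step(3, "Modeling Hand Motion Towards Grasp", Stage.PHYSICAL_DYNAMIC),
    Step(4, "Finger Configuration and Initial Grasp", Stage.PHYSICAL_DYNAMIC),
    Step(5, "Applying Physics-Based Simulation", Stage.PHYSICAL_DYNAMIC),
    Step(6, "Grasp Refinement Using Feedback", Stage.PHYSICAL_DYNAMIC),
    Step(7, "Contextual Adaptation of the Grasp", Stage.CONTEXTUAL),
)


def steps_in(stage: Stage) -> list[int]:
    """Return the step numbers that belong to the given stage."""
    return [step.number for step in STEPS if step.stage is stage]
```

For example, `steps_in(Stage.PHYSICAL_DYNAMIC)` lists Steps 3 to 6, matching the Stage 2 description above.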

2.1. Step 1: Geometric Representation of Hand and Object

2.2. Step 2: Predicting Contact Points

2.3. Step 3: Modeling Hand Motion Towards Grasp

2.4. Step 4: Finger Configuration and Initial Grasp

2.5. Step 5: Applying Physics-Based Simulation

2.6. Step 6: Grasp Refinement Using Feedback

2.7. Step 7: Contextual Adaptation of the Grasp

2.8. Guidelines for Implementing Tactile Internet in Grasping Frameworks

3. Physics-Informed Modeling of Grasping

4. Types of Datasets and Their Focus

4.1. Real-World Datasets

4.2. Synthetic Datasets

4.3. Data Representation Approach

4.4. Object Material Types

5. Evaluation and Limitations

5.1. Evaluation

5.2. Limitations

5.2.1. Hardware Limitations

5.2.2. Data Limitations

5.2.3. Methodological Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADD | Average distance |
| AR | Augmented reality |
| CD | Chamfer distance |
| CVAE | Conditional variational autoencoder |
| CNN | Convolutional neural network |
| FEM | Finite element method |
| IoU | Intersection over union |
| IV | Intersection volume |
| MPJPE | Mean per-joint position error |
| MRGs | Manipulation relationship graphs |
| MoCap | Motion capture |
| MR | Mixed reality |
| RL | Reinforcement learning |
| SIV | Scene interaction vector |
| SD | Simulation displacement |
| SDFs | Signed distance functions |
| TI | Tactile Internet |
| UHM | Universal Hand Model |
| VAE | Variational autoencoder |
| VR | Virtual reality |

References

1. Sharma, S.K.; Woungang, I.; Anpalagan, A.; Chatzinotas, S. Toward tactile internet in beyond 5G era: Recent advances, current issues, and future directions. IEEE Access 2020, 8, 56948–56991.

2. Stefanidi, Z.; Margetis, G.; Ntoa, S.; Papagiannakis, G. Real-time adaptation of context-aware intelligent user interfaces, for enhanced situational awareness. IEEE Access 2022, 10, 23367–23393.

3. Lawson McLean, A.; Lawson McLean, A.C. Immersive simulations in surgical training: Analyzing the interplay between virtual and real-world environments. Simul. Gaming 2024, 55, 1103–1123.

4. Haynes, G.C.; Stager, D.; Stentz, A.; Vande Weghe, J.M.; Zajac, B.; Herman, H.; Kelly, A.; Meyhofer, E.; Anderson, D.; Bennington, D.; et al. Developing a robust disaster response robot: CHIMP and the robotics challenge. J. Field Robot. 2017, 34, 281–304.

5. Zhu, J.; Cherubini, A.; Dune, C.; Navarro-Alarcon, D.; Alambeigi, F.; Berenson, D.; Ficuciello, F.; Harada, K.; Kober, J.; Li, X.; et al. Challenges and outlook in robotic manipulation of deformable objects. IEEE Robot. Autom. Mag. 2022, 29, 67–77.

6. Berrezueta-Guzman, S.; Chen, W.; Wagner, S. A Therapeutic Role-Playing VR Game for Children with Intellectual Disabilities. arXiv 2025, arXiv:2507.19114.

7. Alverson, D.C.; Saiki Jr, S.M.; Kalishman, S.; Lindberg, M.; Mennin, S.; Mines, J.; Serna, L.; Summers, K.; Jacobs, J.; Lozanoff, S.; et al. Medical students learn over distance using virtual reality simulation. Simul. Healthc. 2008, 3, 10–15.

8. Saeed, S.; Khan, K.B.; Hassan, M.A.; Qayyum, A.; Salahuddin, S. Review on the role of virtual reality in reducing mental health diseases specifically stress, anxiety, and depression. arXiv 2024, arXiv:2407.18918.

9. Santos, J.; Wauters, T.; Volckaert, B.; De Turck, F. Towards low-latency service delivery in a continuum of virtual resources: State-of-the-art and research directions. IEEE Commun. Surv. Tutor. 2021, 23, 2557–2589.

10. Awais, M.; Ullah Khan, F.; Zafar, M.; Mudassar, M.; Zaigham Zaheer, M.; Mehmood Cheema, K.; Kamran, M.; Jung, W.S. Towards enabling haptic communications over 6G: Issues and challenges. Electronics 2023, 12, 2955.

11. Manoj, R.; Krishna, N.; TS, M.S. A Comprehensive Study on the Integration of Robotic Technology in Medical Applications considering Legal Frameworks & Ethical Concerns. Int. J. Health Technol. Innov. 2024, 3, 29–37.

12. Ahmad, A.; Migniot, C.; Dipanda, A. Tracking hands in interaction with objects: A review. In Proceedings of the 2017 13th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Jaipur, India, 4–7 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 360–369.

13. Si, W.; Wang, N.; Yang, C. A review on manipulation skill acquisition through teleoperation-based learning from demonstration. Cogn. Comput. Syst. 2021, 3, 1–16.

14. Tian, H.; Wang, C.; Manocha, D.; Zhang, X. Realtime hand-object interaction using learned grasp space for virtual environments. IEEE Trans. Vis. Comput. Graph. 2018, 25, 2623–2635.

15. Romero, J.; Tzionas, D.; Black, M.J. Embodied Hands: Modeling and Capturing Hands and Bodies Together. ACM Trans. Graph. (Proc. SIGGRAPH Asia) 2017, 36, 245.

16. Pavlakos, G.; Choutas, V.; Ghorbani, N.; Bolkart, T.; Osman, A.A.A.; Tzionas, D.; Black, M.J. Expressive Body Capture: 3D Hands, Face, and Body from a Single Image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2019, Long Beach, CA, USA, 15–20 June 2019; pp. 10975–10985.

17. Moon, G.; Xu, W.; Joshi, R.; Wu, C.; Shiratori, T. Authentic Hand Avatar from a Phone Scan via Universal Hand Model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2024, Seattle, WA, USA, 16–22 June 2024; pp. 2029–2038.

18. Wang, Y.K.; Xing, C.; Wei, Y.L.; Wu, X.M.; Zheng, W.S. Single-View Scene Point Cloud Human Grasp Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2024, Seattle, WA, USA, 16–22 June 2024; pp. 831–841.

19. Karunratanakul, K.; Yang, J.; Zhang, Y.; Black, M.J.; Muandet, K.; Tang, S. Grasping field: Learning implicit representations for human grasps. In Proceedings of the 2020 International Conference on 3D Vision (3DV), Virtual, 25–28 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 333–344.

20. Li, Z.; Sedlar, J.; Carpentier, J.; Laptev, I.; Mansard, N.; Sivic, J. Estimating 3d motion and forces of person-object interactions from monocular video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–20 June 2019; pp. 8640–8649.

21. Hampali, S.; Rad, M.; Oberweger, M.; Lepetit, V. Honnotate: A method for 3d annotation of hand and object poses. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020, Seattle, WA, USA, 13–19 June 2020; pp. 3196–3206.

22. Oprea, S.; Martinez-Gonzalez, P.; Garcia-Garcia, A.; Castro-Vargas, J.A.; Orts-Escolano, S.; Garcia-Rodriguez, J. A visually realistic grasping system for object manipulation and interaction in virtual reality environments. Comput. Graph. 2019, 83, 77–86.

23. Yang, L.; Zhan, X.; Li, K.; Xu, W.; Li, J.; Lu, C. Cpf: Learning a contact potential field to model the hand-object interaction. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 11097–11106.

24. Wang, C.; Zang, X.; Zhang, X.; Liu, Y.; Zhao, J. Parameter estimation and object gripping based on fingertip force/torque sensors. Measurement 2021, 179, 109479.

25. Huang, I.; Narang, Y.; Eppner, C.; Sundaralingam, B.; Macklin, M.; Bajcsy, R.; Hermans, T.; Fox, D. DefGraspSim: Physics-based simulation of grasp outcomes for 3D deformable objects. IEEE Robot. Autom. Lett. 2022, 7, 6274–6281.

26. Cha, J.; Kim, J.; Yoon, J.S.; Baek, S. Text2HOI: Text-guided 3D Motion Generation for Hand-Object Interaction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2024, Seattle, WA, USA, 16–22 June 2024; pp. 1577–1585.

27. Pokhariya, C.; Shah, I.N.; Xing, A.; Li, Z.; Chen, K.; Sharma, A.; Sridhar, S. MANUS: Markerless Grasp Capture using Articulated 3D Gaussians. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2024, Seattle, WA, USA, 16–22 June 2024; pp. 2197–2208.

28. Zhang, Q.; Li, Y.; Luo, Y.; Shou, W.; Foshey, M.; Yan, J.; Tenenbaum, J.B.; Matusik, W.; Torralba, A. Dynamic modeling of hand-object interactions via tactile sensing. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 2874–2881.

29. Watkins-Valls, D.; Varley, J.; Allen, P. Multi-modal geometric learning for grasping and manipulation. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 7339–7345.

30. Siddiqui, M.S.; Coppola, C.; Solak, G.; Jamone, L. Grasp stability prediction for a dexterous robotic hand combining depth vision and haptic bayesian exploration. Front. Robot. AI 2021, 8, 703869.

31. Zhang, Z.; Zhang, Z.; Wang, L.; Zhu, X.; Huang, H.; Cao, Q. Digital twin-enabled grasp outcomes assessment for unknown objects using visual-tactile fusion perception. Robot. Comput.-Integr. Manuf. 2023, 84, 102601.

32. Bhatnagar, B.L.; Xie, X.; Petrov, I.A.; Sminchisescu, C.; Theobalt, C.; Pons-Moll, G. Behave: Dataset and method for tracking human object interactions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 15935–15946.

33. Palleschi, A.; Angelini, F.; Gabellieri, C.; Pallottino, L.; Bicchi, A.; Garabini, M. Grasp It Like a Pro 2.0: A Data-Driven Approach Exploiting Basic Shape Decomposition and Human Data for Grasping Unknown Objects. IEEE Trans. Robot. 2023, 39, 4016–4036.

34. Chen, Z.; Chen, S.; Schmid, C.; Laptev, I. gsdf: Geometry-driven signed distance functions for 3d hand-object reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 12890–12900.

35. Chao, Y.W.; Yang, W.; Xiang, Y.; Molchanov, P.; Handa, A.; Tremblay, J.; Narang, Y.S.; Van Wyk, K.; Iqbal, U.; Birchfield, S.; et al. DexYCB: A benchmark for capturing hand grasping of objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2021; pp. 9044–9053.

36. Sajjan, S.; Moore, M.; Pan, M.; Nagaraja, G.; Lee, J.; Zeng, A.; Song, S. Clear grasp: 3d shape estimation of transparent objects for manipulation. In Proceedings of the 2020 IEEE international conference on robotics and automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 3634–3642.

37. Gao, R.; Chang, Y.Y.; Mall, S.; Fei-Fei, L.; Wu, J. Objectfolder: A dataset of objects with implicit visual, auditory, and tactile representations. arXiv 2021, arXiv:2109.07991.

38. Breyer, M.; Chung, J.J.; Ott, L.; Siegwart, R.; Nieto, J. Volumetric grasping network: Real-time 6 dof grasp detection in clutter. In Proceedings of the Conference on Robot Learning. PMLR 2021, London, UK, 8–11 November 2021; pp. 1602–1611.

39. Mayer, V.; Feng, Q.; Deng, J.; Shi, Y.; Chen, Z.; Knoll, A. FFHNet: Generating multi-fingered robotic grasps for unknown objects in real-time. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 762–769.

40. Ni, P.; Zhang, W.; Zhu, X.; Cao, Q. Pointnet++ grasping: Learning an end-to-end spatial grasp generation algorithm from sparse point clouds. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 3619–3625.

41. Li, H.; Lin, X.; Zhou, Y.; Li, X.; Huo, Y.; Chen, J.; Ye, Q. Contact2grasp: 3d grasp synthesis via hand-object contact constraint. arXiv 2022, arXiv:2210.09245.

42. Wang, S.; Liu, X.; Wang, C.C.; Liu, J. Physics-aware iterative learning and prediction of saliency map for bimanual grasp planning. Comput. Aided Geom. Des. 2024, 111, 102298.

43. Liu, Q.; Cui, Y.; Ye, Q.; Sun, Z.; Li, H.; Li, G.; Shao, L.; Chen, J. DexRepNet: Learning dexterous robotic grasping network with geometric and spatial hand-object representations. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 3153–3160.

44. Zhang, H.; Yang, D.; Wang, H.; Zhao, B.; Lan, X.; Ding, J.; Zheng, N. Regrad: A large-scale relational grasp dataset for safe and object-specific robotic grasping in clutter. IEEE Robot. Autom. Lett. 2022, 7, 2929–2936.

45. Wang, Z.; Chen, Y.; Liu, T.; Zhu, Y.; Liang, W.; Huang, S. Humanise: Language-conditioned human motion generation in 3d scenes. Adv. Neural Inf. Process. Syst. 2022, 35, 14959–14971.

46. Liu, S.; Jiang, H.; Xu, J.; Liu, S.; Wang, X. Semi-supervised 3d hand-object poses estimation with interactions in time. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2021, Nashville, TN, USA, 20–25 June 2021; pp. 14687–14697.

47. Chaudhari, B.S. Enabling Tactile Internet via 6G: Application Characteristics, Requirements, and Design Considerations. Future Internet 2025, 17, 122.

48. Xiang, H.; Wu, K.; Chen, J.; Yi, C.; Cai, J.; Niyato, D.; Shen, X. Edge computing empowered tactile Internet for human digital twin: Visions and case study. arXiv 2023, arXiv:2304.07454.

49. Lu, Y.; Kong, D.; Yang, G.; Wang, R.; Pang, G.; Luo, H.; Yang, H.; Xu, K. Machine learning-enabled tactile sensor design for dynamic touch decoding. Adv. Sci. 2023, 10, 2303949.

50. Turpin, D.; Wang, L.; Heiden, E.; Chen, Y.C.; Macklin, M.; Tsogkas, S.; Dickinson, S.; Garg, A. Grasp’d: Differentiable contact-rich grasp synthesis for multi-fingered hands. In Proceedings of the European Conference on Computer Vision 2022, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 201–221.

51. Huang, I.; Narang, Y.; Eppner, C.; Sundaralingam, B.; Macklin, M.; Hermans, T.; Fox, D. Defgraspsim: Simulation-based grasping of 3d deformable objects. arXiv 2021, arXiv:2107.05778.

52. Grady, P.; Tang, C.; Twigg, C.D.; Vo, M.; Brahmbhatt, S.; Kemp, C.C. Contactopt: Optimizing contact to improve grasps. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2021, Nashville, TN, USA, 20–25 June 2021; pp. 1471–1481.

53. Van der Merwe, M.; Lu, Q.; Sundaralingam, B.; Matak, M.; Hermans, T. Learning continuous 3d reconstructions for geometrically aware grasping. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 11516–11522.

54. Yang, L.; Li, K.; Zhan, X.; Lv, J.; Xu, W.; Li, J.; Lu, C. Artiboost: Boosting articulated 3d hand-object pose estimation via online exploration and synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 2750–2760.

55. Cao, Z.; Radosavovic, I.; Kanazawa, A.; Malik, J. Reconstructing hand-object interactions in the wild. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 12417–12426.

56. Coumans, E. Bullet physics simulation. In ACM SIGGRAPH 2015 Courses; Association for Computing Machinery: New York, NY, USA, 2015; p. 1.

57. Coumans, E.; Bai, Y. PyBullet Quickstart Guide. 2021. Available online: https://raw.githubusercontent.com/bulletphysics/bullet3/master/docs/pybullet_quickstartguide.pdf (accessed on 23 September 2025).

58. Koenig, N.; Howard, A. Design and use paradigms for gazebo, an open-source multi-robot simulator. In Proceedings of the 2004 IEEE/RSJ international conference on intelligent robots and systems (IROS)(IEEE Cat. No. 04CH37566), Sendai, Japan, 28 September–2 October 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 3, pp. 2149–2154.

59. Todorov, E.; Erez, T.; Tassa, Y. Mujoco: A physics engine for model-based control. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 5026–5033.

60. Lu, Q.; Van der Merwe, M.; Sundaralingam, B.; Hermans, T. Multifingered grasp planning via inference in deep neural networks: Outperforming sampling by learning differentiable models. IEEE Robot. Autom. Mag. 2020, 27, 55–65.

61. Li, K.; Wang, J.; Yang, L.; Lu, C.; Dai, B. Semgrasp: Semantic grasp generation via language aligned discretization. arXiv 2024, arXiv:2404.03590.

62. Liang, H.; Ma, X.; Li, S.; Görner, M.; Tang, S.; Fang, B.; Sun, F.; Zhang, J. Pointnetgpd: Detecting grasp configurations from point sets. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3629–3635.

63. Xu, Y.; Wan, W.; Zhang, J.; Liu, H.; Shan, Z.; Shen, H.; Wang, R.; Geng, H.; Weng, Y.; Chen, J.; et al. Unidexgrasp: Universal robotic dexterous grasping via learning diverse proposal generation and goal-conditioned policy. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 4737–4746.

64. Murali, A.; Liu, W.; Marino, K.; Chernova, S.; Gupta, A. Same object, different grasps: Data and semantic knowledge for task-oriented grasping. In Proceedings of the Conference on Robot Learning. PMLR 2021, London, UK, 8–11 November 2021; pp. 1540–1557.

65. Braun, J.; Christen, S.; Kocabas, M.; Aksan, E.; Hilliges, O. Physically plausible full-body hand-object interaction synthesis. In Proceedings of the 2024 International Conference on 3D Vision (3DV), Davos, Switzerland, 18–21 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 464–473.

66. Zhang, H.; Christen, S.; Fan, Z.; Zheng, L.; Hwangbo, J.; Song, J.; Hilliges, O. ArtiGrasp: Physically plausible synthesis of bi-manual dexterous grasping and articulation. In Proceedings of the 2024 International Conference on 3D Vision (3DV), Davos, Switzerland, 18–21 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 235–246.

67. Kasaei, H.; Kasaei, M. Mvgrasp: Real-time multi-view 3d object grasping in highly cluttered environments. Robot. Auton. Syst. 2023, 160, 104313.

68. Hasson, Y.; Varol, G.; Tzionas, D.; Kalevatykh, I.; Black, M.J.; Laptev, I.; Schmid, C. Learning joint reconstruction of hands and manipulated objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–20 June 2019; pp. 11807–11816.

69. Brahmbhatt, S.; Tang, C.; Twigg, C.D.; Kemp, C.C.; Hays, J. ContactPose: A dataset of grasps with object contact and hand pose. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XIII 16. Springer: Cham, Switzerland, 2020; pp. 361–378.

70. Taheri, O.; Ghorbani, N.; Black, M.J.; Tzionas, D. GRAB: A dataset of whole-body human grasping of objects. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part IV 16. Springer: Cham, Switzerland, 2020; pp. 581–600.

71. Yang, L.; Li, K.; Zhan, X.; Wu, F.; Xu, A.; Liu, L.; Lu, C. Oakink: A large-scale knowledge repository for understanding hand-object interaction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 20953–20962.

72. Taheri, O.; Choutas, V.; Black, M.J.; Tzionas, D. GOAL: Generating 4D whole-body motion for hand-object grasping. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 13263–13273.

73. Fan, Z.; Taheri, O.; Tzionas, D.; Kocabas, M.; Kaufmann, M.; Black, M.J.; Hilliges, O. ARCTIC: A dataset for dexterous bimanual hand-object manipulation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 12943–12954.

74. Tendulkar, P.; Surís, D.; Vondrick, C. Flex: Full-body grasping without full-body grasps. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 21179–21189.

75. Chang, X.; Sun, Y. Text2Grasp: Grasp synthesis by text prompts of object grasping parts. arXiv 2024, arXiv:2404.15189.

76. Farias, C.; Marti, N.; Stolkin, R.; Bekiroglu, Y. Simultaneous tactile exploration and grasp refinement for unknown objects. IEEE Robot. Autom. Lett. 2021, 6, 3349–3356.

77. Gao, M.; Ruan, N.; Shi, J.; Zhou, W. Deep neural network for 3D shape classification based on mesh feature. Sensors 2022, 22, 7040.

78. Jiang, H.; Liu, S.; Wang, J.; Wang, X. Hand-object contact consistency reasoning for human grasps generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 11107–11116.

79. Li, Y.; Schomaker, L.; Kasaei, S.H. Learning to grasp 3d objects using deep residual u-nets. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 781–787.

80. Fan, Z.; Parelli, M.; Kadoglou, M.E.; Chen, X.; Kocabas, M.; Black, M.J.; Hilliges, O. HOLD: Category-agnostic 3d reconstruction of interacting hands and objects from video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2024, Seattle, WA, USA, 16–22 June 2024; pp. 494–504.

81. Hao, Y.; Zhang, J.; Zhuo, T.; Wen, F.; Fan, H. Hand-Centric Motion Refinement for 3D Hand-Object Interaction via Hierarchical Spatial-Temporal Modeling. In Proceedings of the AAAI Conference on Artificial Intelligence 2024, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 2076–2084.

82. Petrov, I.A.; Marin, R.; Chibane, J.; Pons-Moll, G. Object pop-up: Can we infer 3d objects and their poses from human interactions alone? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 4726–4736.

83. Ye, Y.; Gupta, A.; Tulsiani, S. What’s in your hands? 3d reconstruction of generic objects in hands. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 3895–3905.

84. Zhou, K.; Bhatnagar, B.L.; Lenssen, J.E.; Pons-Moll, G. Toch: Spatio-temporal object-to-hand correspondence for motion refinement. In Proceedings of the European Conference on Computer Vision 2022, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 1–19.

85. Kiatos, M.; Malassiotis, S.; Sarantopoulos, I. A geometric approach for grasping unknown objects with multifingered hands. IEEE Trans. Robot. 2020, 37, 735–746.

86. Corona, E.; Pumarola, A.; Alenya, G.; Moreno-Noguer, F.; Rogez, G. Ganhand: Predicting human grasp affordances in multi-object scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020, Seattle, WA, USA, 13–19 June 2020; pp. 5031–5041.

87. Fang, H.S.; Wang, C.; Gou, M.; Lu, C. Graspnet-1billion: A large-scale benchmark for general object grasping. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020, Seattle, WA, USA, 13–19 June 2020; pp. 11444–11453.

88. Christoff, N.; Neshov, N.N.; Tonchev, K.; Manolova, A. Application of a 3D talking head as part of telecommunication AR, VR, MR system: Systematic review. Electronics 2023, 12, 4788.

89. Zapata-Impata, B.S.; Gil, P.; Pomares, J.; Torres, F. Fast geometry-based computation of grasping points on three-dimensional point clouds. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419831846.

90. Tekin, B.; Bogo, F.; Pollefeys, M. H+o: Unified egocentric recognition of 3d hand-object poses and interactions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–20 June 2019; pp. 4511–4520.

91. Delrieu, T.; Weistroffer, V.; Gazeau, J.P. Precise and realistic grasping and manipulation in virtual reality without force feedback. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Atlanta, GA, USA, 22–26 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 266–274.

92. Fan, H.; Zhuo, T.; Yu, X.; Yang, Y.; Kankanhalli, M. Understanding atomic hand-object interaction with human intention. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 275–285.

93. Le, T.T.; Le, T.S.; Chen, Y.R.; Vidal, J.; Lin, C.Y. 6D pose estimation with combined deep learning and 3D vision techniques for a fast and accurate object grasping. Robot. Auton. Syst. 2021, 141, 103775.

94. Baek, S.; Kim, K.I.; Kim, T.K. Weakly-supervised domain adaptation via gan and mesh model for estimating 3d hand poses interacting objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020, Seattle, WA, USA, 13–19 June 2020; pp. 6121–6131.

95. Stavrev, S. Reimagining Robots: The Future of Cybernetic Organisms with Energy-Efficient Designs. Big Data Cogn. Comput. 2025, 9, 104.

| Approach | Techniques | Applications |
|---|---|---|
| Geometric | Point clouds, 3D Gaussian, SDFs | Prediction of contact points and refinement of hand–object interactions [19,26,27]; applicability to precise positioning and shape adaptation; use of implicit surface modeling [19], contact by Gaussian approximation [27] and diffusion text-driven models [26]. |
| Physical interaction modeling | Optimal control, force/torque equilibrium, elastic energy | Evaluation and stabilization of contact points and grip forces [20,22,24]; dynamic correction via capsular triggers [22]; realistic poses via elastic energy optimization [23]; fine-tuning with control strategies [20]. |
| Tactile and sensor-based | Tactile data, haptic feedback, visual-tactile fusion | Tactile–visual grasp planning [28,29,30]; Bayesian optimization of secure configurations [30]; application to uncertain environments and transparent objects [28,36]. |
| Simulation and kinematic | Rigid-body dynamics, differentiable simulation, 3D pose tracking | Simulation of contact dynamics and sequences from approach to stabilization of the grip [35,50,51]; extraction of detailed kinematic data through simulations and multi-camera configurations [35,50]; application in realistic animation and testing of robotic grippers. |
| Machine learning | CNNs, transformers, VAEs, RL | Predicting poses and grip quality through deep learning [37,40,52]; improving touch-based grips through reinforcement learning [37]; generative approaches to modeling stable configurations and refining through physical constraints [14,53]. |
| Contextual | MRGs, contextual reasoning, language descriptions | Processing of task-specific constraints and scenes with high object density [44,46]; modeling dependencies between objects via manipulation relationship graphs [44]; contextual modules for task understanding and action prediction [45,46]. |
| Multidimensional | Co-attention, RGB-D and tactile fusion, 3D fitting | Integration of multidimensional data for more accurate modeling [31,32]; combining RGB-D and tactile information in manipulation tasks [32]; co-attention mechanisms for combining visual and tactile signals in grasp prediction [31]. |
| Human-centric learning | Human motion tracking, bimanual grasp, shape decomposition | Bimanual grip prediction [42]; structure extraction from human demonstrations for better grip quality [33]; motion tracking for adaptive learning [35]. |
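
The geometric row of the table lists signed distance functions (SDFs) as a tool for predicting contact points. As a minimal sketch of that idea, and not the method of any cited work, the code below uses an analytic sphere as a stand-in object and flags hand surface points whose signed distance is close to zero as contact candidates. The function names, the sphere object, and the 5 mm tolerance are all assumptions chosen for illustration.

```python
import numpy as np


def sphere_sdf(points: np.ndarray, center: np.ndarray, radius: float) -> np.ndarray:
    """Signed distance to a sphere: negative inside, zero on the surface."""
    return np.linalg.norm(points - center, axis=1) - radius


def contact_candidates(hand_points: np.ndarray, center: np.ndarray,
                       radius: float, tol: float = 0.005):
    """Return a contact mask and per-point penetration depth.

    hand_points: (N, 3) array of hand surface samples (assumed units: meters).
    tol: hypothetical distance threshold below which a point counts as touching.
    """
    d = sphere_sdf(hand_points, center, radius)
    contact = np.abs(d) <= tol              # points near the object surface
    penetration = np.where(d < 0, -d, 0.0)  # how far a point sits inside the object
    return contact, penetration


hand = np.array([[0.050, 0.0, 0.0],   # on the surface
                 [0.052, 0.0, 0.0],   # 2 mm outside
                 [0.100, 0.0, 0.0],   # far away
                 [0.040, 0.0, 0.0]])  # 10 mm inside
mask, depth = contact_candidates(hand, np.zeros(3), radius=0.05)
```

For a learned or mesh-based SDF, only `sphere_sdf` would need to be replaced; the thresholding logic stays the same.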

| Dataset | Type | Materials Represented | Data Representation | Preprocessing | Text-to-Motion |
|---|---|---|---|---|---|
| HO-3D [21] | Real | Ten objects (plastic, organic materials, metal, paper, etc.) | Three-dimensional hand/object poses; segmentation masks | Manual alignment of grasps and masks | No |
| ObMan [68] | Synthetic | Eight object categories from ShapeNet (bottles, bowls, cans, jars, knives, cellphones, cameras, remote controls) | MANO hand model; object meshes; point clouds | Contact map derivation; SDF computation | No |
| GRAB [70] | Real | Fifty-one objects (plastic, glass, organic materials, etc.) | SMPL-X models; 3D contact and motion data | Annotation filtering; ground-truth cleanup | Yes |
| DexYCB [35] | Real | YCB objects (wood, metal, plastic, cardboard, natural materials, etc.) | Six-dimensional hand/object poses; RGB-D images | Downsampling; normalization | No |
| OakInk [71] | Real | Ceramic, metal, etc. | Point clouds; labeled grasp vectors | Point segmentation | No |
| ContactPose [69] | Real | Glass, metal, plastic, etc. | Three-dimensional hand/object poses; high-resolution thermal-based contact maps; multi-view RGB-D images | Thermal data preprocessing; object surface extraction | No |
| MOW [55] | Real | Organic, plastic, metal, etc. | RGB images; 3D reconstructed hand–object models | Object segmentation; 3D mesh selection | No |
| HUMANISE [45] | Synthetic | Indoor objects; full-body interactions | Three-dimensional human motion sequences; point clouds | Alignment of motion and scenes; text description generation | Yes |
| Tactile Glove [28] | Real | Ceramic, plastic, metal | Tactile images; 3D positions; velocities | Tactile encoding via CNN; data embedding | No |

| Evaluation Category | Techniques | Advantages | Limitations |
|---|---|---|---|
| Quantitative metrics | MPJPE [26,80,81], Chamfer distance [17,80,82], F-Score [80,83], intersection volume [50,52], contact ratio [52,75], simulation displacement [18,78] | Objective and precise; widely used to assess pose and geometry accuracy; quantify joint-level accuracy (MPJPE) and shape fidelity (Chamfer distance). | Do not capture nuances of human perception or complex interactions; do not always correlate with subjective quality; difficult to compare across different object geometries. |
| Physical realism | Penetration depth [18,84], contact consistency [52,75], stability in simulation [18,78,84] | Ensures grasps are physically possible. Penetration depth highlights unrealistic overlaps. Stability in simulation tests interaction feasibility in robotic settings. | Simulations do not fully capture real-world physics. Variability in object surface and friction coefficients may affect accuracy. Computationally intensive for complex models. |
| Qualitative assessments | Visual inspection [72,85], perceptual studies (e.g., Likert scales, user feedback) [61,70,78] | Captures nuances of human perception; identifies naturalness and aesthetic aspects missed by quantitative metrics. Effective for end-user-focused evaluations. | Subjective and often biased. Requires large-scale user studies for statistical significance. Difficult to replicate consistently. |
| Comparative analysis | Baseline methods (e.g., PointNetGPD [40], HOMan [86]), ablation studies [55,63] | Provides context by benchmarking against existing approaches. Highlights the impact of individual model components. | Dependent on the choice of baselines. Does not always capture interdependencies between components. |
| Dataset-specific metrics | Diversity metrics [18,26], coverage of real-world interactions [35,36] | Ensures models handle a wide range of interaction scenarios, making them robust for real-world use. Diversity metrics capture variability across grasp styles and motions. | Dependent on dataset quality and diversity. Limited coverage may reduce generalizability. Metrics might overemphasize diversity while neglecting physical feasibility. |
| Real-world testing | Simulation-based validation [14,19], robotic experiments [30,87] | Tests the model in practical environments, validating its application readiness. Highlights failure modes not evident in simulations. | Expensive and resource-intensive. Real-world conditions like lighting, clutter, or material properties may introduce variability, making results less reproducible. |
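
To make the quantitative and physical-realism rows of the table above more concrete, the following minimal sketch computes MPJPE, a Chamfer distance, an F-score at a distance threshold, and a penetration depth. It is illustrative only and is not taken from any of the cited works. The function names are hypothetical, the Chamfer distance uses one common convention (sum of the two mean nearest-neighbor Euclidean distances; other papers use squared distances or different normalization), and the object is approximated by an analytic sphere rather than a mesh signed distance field.

```python
import math

def mpjpe(pred_joints, gt_joints):
    """Mean per-joint position error: mean Euclidean distance between matched joints."""
    if len(pred_joints) != len(gt_joints):
        raise ValueError("joint lists must have the same length")
    total = sum(math.dist(p, g) for p, g in zip(pred_joints, gt_joints))
    return total / len(gt_joints)

def _nearest(point, cloud):
    """Distance from one point to its nearest neighbor in a point cloud (brute force)."""
    return min(math.dist(point, q) for q in cloud)

def chamfer_distance(cloud_a, cloud_b):
    """Symmetric Chamfer distance (assumed convention: sum of both directional means)."""
    a_to_b = sum(_nearest(p, cloud_b) for p in cloud_a) / len(cloud_a)
    b_to_a = sum(_nearest(q, cloud_a) for q in cloud_b) / len(cloud_b)
    return a_to_b + b_to_a

def f_score(pred_cloud, gt_cloud, tau):
    """Harmonic mean of precision and recall at distance threshold tau."""
    precision = sum(_nearest(p, gt_cloud) <= tau for p in pred_cloud) / len(pred_cloud)
    recall = sum(_nearest(g, pred_cloud) <= tau for g in gt_cloud) / len(gt_cloud)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def sphere_penetration_depth(hand_points, center, radius):
    """Largest depth by which any hand point lies inside a sphere; 0.0 if none does.

    The sphere is a stand-in for an object signed distance field (an assumption).
    """
    deepest = max(radius - math.dist(p, center) for p in hand_points)
    return max(0.0, deepest)

if __name__ == "__main__":
    # Toy data in arbitrary units; real evaluations use dataset joints and meshes.
    gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    pred = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.0), (0.0, 1.2, 0.0)]
    print("MPJPE:", mpjpe(pred, gt))
    print("Chamfer:", chamfer_distance(pred, gt))
    print("F-score @ 0.15:", f_score(pred, gt, 0.15))
    print("Penetration:", sphere_penetration_depth(pred, (0.0, 0.0, 0.0), 0.5))
```

The brute-force nearest-neighbor search is quadratic in the number of points, which is acceptable for a sketch but would normally be replaced by a spatial index for dense meshes. Because MPJPE and Chamfer distance depend on the units and scale of the data, values from different datasets are not directly comparable without normalization.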

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Christoff, N.; Neshov, N.N.; Petkova, R.; Tonchev, K.; Manolova, A. Grasping in Shared Virtual Environments: Toward Realistic Human–Object Interaction Through Review-Based Modeling. Electronics 2025, 14, 3809. https://doi.org/10.3390/electronics14193809
