1. Introduction

Fuzzing is a popular and widely used bug-detection technique built on a simple but effective idea: generate many inputs and run the target program on them [1, 2, 3]. Fuzzing is now used in both academia and industry and has exposed numerous real-world bugs [1]. In academia, researchers have developed many solutions to improve the effectiveness and efficiency of fuzzing [4, 5, 6]. They have also put significant effort into applying fuzzing to different application scenarios [7, 8, 9]. In industry, Google has developed OSS-Fuzz, a continuous fuzzer for open source software [10]. These fuzzers have all demonstrated their effectiveness in bug discovery.

To improve the efficiency of fuzzing, many existing tools propose general-purpose strategies that can be applied across diverse target programs [5, 6, 11, 12, 13]. One common approach is to model fuzzing as a state-transition system and then apply classical optimization algorithms, such as Markov Chains [11, 14] or Multi-Armed Bandits [5, 15], to guide the selection and scheduling of seeds (i.e., inputs that are likely to exercise previously unexplored states). Beyond these scheduling techniques, researchers have also explored complementary methods: for instance, targeted state discovery mechanisms that prioritize inputs leading to novel program locations [16], and fine-grained byte-level scheduling strategies that dynamically adjust mutation rates according to each byte’s historical contribution to coverage [17, 18]. Collectively, these universal algorithms are designed to boost fuzzing performance across a wide range of software, offering scalable improvements without requiring per-program customization.

The second research direction focuses on extending fuzzing to a wider range of application scenarios, each of which poses unique deployment challenges. Because fuzzing is inherently a dynamic testing technique, it requires the target application to be executed under controlled conditions so that runtime faults can be observed and captured. In practice, however, many modern software systems run in complex, tightly constrained environments (such as containerized micro-services, GPU-accelerated deep learning frameworks, or privileged kernel modules), making it non-trivial to construct a suitable execution harness. Recent efforts have begun to tackle these obstacles. Researchers have successfully applied fuzzing to command-line programs [16], deep learning applications [19], and operating system kernels [20]. Beyond these, researchers have also explored the fuzzing of web browsers via instrumented plug-ins, IoT firmware through hardware-in-the-loop emulation, and cloud-native applications using container orchestration to spin up isolated instances on demand [7, 21, 22]. Collectively, these approaches demonstrate that, by carefully engineering the runtime environment through virtualization, sandboxing, or custom harnesses, it is possible to extend the reach of fuzzing into domains previously thought impractical.

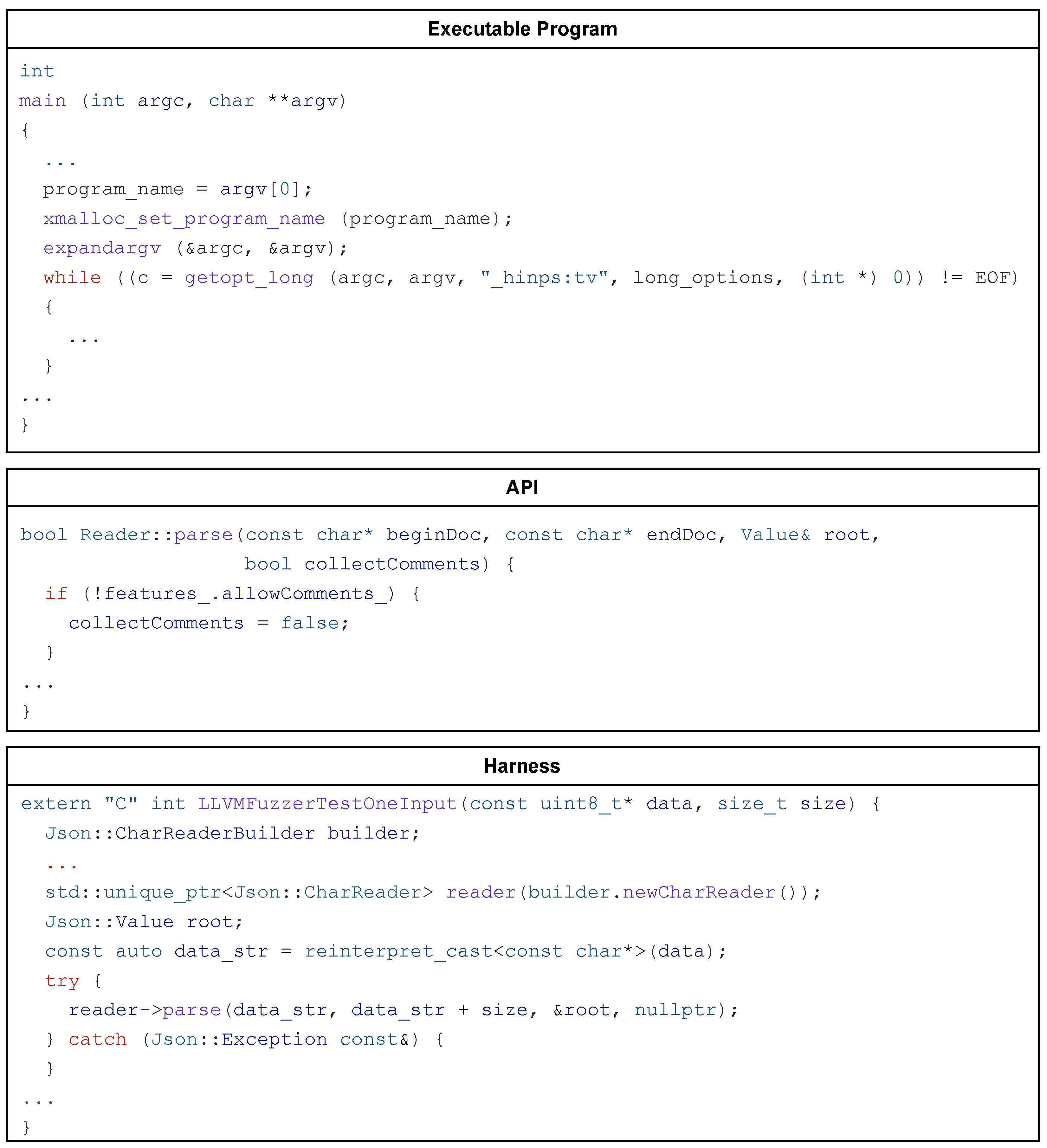

Library fuzzing differs from the previously discussed application scenarios in that it centers on generating a specialized harness, commonly referred to as a fuzz driver, that invokes the public APIs exposed by a library rather than testing an entire stand-alone executable [23, 24, 25, 26]. Conceptually, a fuzz driver functions like a main function in a C/C++ program: it initializes the library, iterates through API calls with crafted inputs, and captures any anomalous behavior or crashes. For example, Fudge [25] automatically synthesizes these drivers by mining the existing client code to discover realistic usage patterns, WildSync [24] automatically generates a harness by extracting usage patterns, and PromptFuzz [26] leverages large language models to generate drivers from API documentation and example snippets. Despite these advances in driver generation, most efforts have concentrated on determining which API functions to call and in what order, leaving the problem of generating semantically meaningful and well-typed argument values largely unaddressed. In practice, many library vulnerabilities manifest only when parameters satisfy complex constraints (such as specific buffer sizes, encoding schemes, or object lifecycles), which simplistic random data or generic mutators often fail to trigger. Future research, therefore, must bridge this gap by integrating intelligent parameter generation strategies to produce high-quality input data that exercises deep library logic and uncovers subtle bugs.

In this paper, we propose PointFuzz to test library APIs efficiently. In contrast to existing works that focus on generating fuzz drivers, PointFuzz focuses on improving the quality of the data passed to API parameters. Our key idea is to generate data according to the type of each data field rather than with a single universal method, i.e., we use a point-to-point method to fuzz APIs. We assume that the fuzz drivers have already been generated, either manually or by existing driver-generation methods. Specifically, we first develop a mutation strategy that collects feedback information, from which we identify which bytes contribute to new code coverage. We also design a dynamic weight-adjustment mechanism that favors the mutation strategies that have proven effective. To mutate an input, we first analyze its data type and then apply the mutation method designed for that type, which improves the efficiency of fuzzing.

In summary, this paper makes the following contributions.

- We propose point-to-point mutation strategies for different data types. This can significantly improve the efficiency of fuzzing.

- We also propose a new feedback mechanism for such mutation strategies. Such feedback can identify which bytes are related to new code coverage.

- We perform experiments to show the effectiveness of our work.

3. Methodology

As shown in Figure 2, our work first identifies the data types of API parameters. Primitive types are identified by matching type keywords, and complex data types are recognized recursively. Then, we apply mutation strategies that correspond to the identified data types. For primitive data types, we design mutation strategies according to each type. For complex data types, the mutation reflects the internal organization of the data type. Finally, our work is orthogonal to existing harness-generation works, and we use them to generate the harness.

3.1. Analyzing Data Structures

In this section, we analyze different data structures in C/C++, including primitive data types, such as int, char, bool, and float, and complex data structures, such as list.

3.1.1. Primitive Data Types

C and C++ offer a small set of primitive types forming the basis of all data structures. Integer types (char, short, int, long, long long) represent whole numbers and map directly to CPU instructions, making them efficient for counters, indices, and bit masks. Their size varies by platform, but the language guarantees minimum widths. Signed integers allow negative values, whereas unsigned variants double the positive range. Character types such as char store 8-bit code units, while wchar_t, char16_t, and char32_t support wider or Unicode encodings. Despite their use in text processing, they behave like integers and allow arithmetic or bitwise operations. The Boolean type (bool) holds true or false, supports implicit conversion with integers, and underpins conditional logic. For real numbers, floating-point types (float, double, long double) usually offer increasing precision. They introduce rounding error and special values but are essential for scientific and numerical computing. Strings (std::string) provide dynamic character arrays with fast indexing and resizing, often using small-string optimizations. Bitsets and std::vector<bool> store compact Boolean flags, while adjacency lists or matrices represent graph data efficiently depending on access needs. Finally, custom struct or class types combine these primitives into higher-level data structures tailored for specific applications.

3.1.2. Complex Data Types

In C and C++, arrays provide fixed-size, contiguous storage with constant-time indexing but linear-time insertion or removal. std::array wraps C-style arrays with container utilities and optional bounds checking (through its at() member), without adding overhead. For dynamic collections, std::vector offers a resizable contiguous buffer with constant-time random access and amortized constant-time append, though insertions/removals in the middle require shifting elements. std::deque allows constant-time insertion and removal at both ends by segmenting storage, with slightly slower random access. When frequent mid-sequence insertions or deletions are required, linked lists (std::list, std::forward_list) allow constant-time node operations but incur linear-time traversal and pointer overhead. Adaptors like std::stack and std::queue wrap these containers to provide LIFO and FIFO interfaces. For priority-based access, std::priority_queue implements a binary heap with constant-time top retrieval and logarithmic insertion/removal. Associative containers such as std::map and std::set are typically implemented as self-balancing trees that maintain sorted order with logarithmic operations, whereas std::unordered_map and std::unordered_set use hash tables for average constant-time lookups and updates.

3.1.3. Unknown Data Types

When a data type cannot be identified as either a primitive or a complex data type, we record it as an unknown data type.

3.2. Identifying Data Structures

3.2.1. Identifying Primitive Data Types

When analyzing an API’s function signature in C or C++, the declared parameter type typically provides the most direct indication of the underlying data structure. As shown in Figure 3, primitive types such as int, bool, or float denote simple scalar values, whereas pointer types such as char* or const char* usually represent raw byte buffers or C-style strings.

3.2.2. Identifying Complex Data Types

In C++, template instantiations like std::vector<T>, std::array<T,N>, std::list<T>, std::map<Key,Value>, and std::unordered_map<Key,Value> unambiguously identify dynamic arrays, fixed-length arrays, linked lists, and associative containers, respectively. Because these standard containers expose characteristic member functions (e.g., push_back, begin, find), their presence in the signature immediately signals how the API expects clients to allocate, populate, and traverse the data.

In cases where the API employs opaque or forward-declared types—often passed as MyStruct* or void* accompanied by a type tag—developers must consult the corresponding header comments or “create” and “destroy” helper functions to infer the structure’s semantics. Such opaque handles commonly encapsulate complex state machines or resource managers, and the API documentation usually specifies ownership and lifetime requirements. When documentation is sparse, static analysis tools or IDE “go to definition” features can reveal the actual struct or class declarations, including field names and inline comments, which in turn clarify whether the parameter represents a stack, queue, or custom-designed data container.

To systematically recognize API parameter types, we first construct and maintain a comprehensive registry of known data-structure identifiers drawn from the library’s public headers and documentation. Upon encountering an API parameter, our type resolver consults this registry to perform a direct name-based match, thereby classifying parameters into familiar categories such as std::vector, std::map, or user-defined struct types. Because many APIs employ nested or composite types (for example, std::vector<std::pair<Key,Value>> or a struct containing other struct members), the resolver applies this matching process recursively: each newly discovered subtype is itself subjected to the same lookup procedure until only primitive types (e.g., int, char, float) remain.

Given the breadth and complexity of real-world libraries, effective type resolution requires an exhaustive traversal of all available type definitions. To this end, our framework parses the library’s complete header file hierarchy, as well as any accompanying API reference documents, to extract type names, template instantiations, and forward-declarations. This static analysis phase populates the registry with both standard and library-specific data-structure names, enabling robust matching even in heavily templated or macro-driven codebases.
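The recursive, registry-based lookup described above can be illustrated with a minimal sketch. It is written in Python for brevity and is not the PointFuzz implementation: the registry contents, the `Point` and `Polyline` structs, and the function names `resolve` and `split_template_args` are all hypothetical. A real resolver would populate the registry from parsed headers rather than from hard-coded tables.

```python
# Hypothetical registry; a real one would be populated from the library headers.
PRIMITIVES = {"int", "char", "bool", "float", "double", "long", "short"}
CONTAINERS = {"std::vector", "std::list", "std::map", "std::pair",
              "std::unordered_map"}
# Illustrative user-defined structs, mapped to the types of their fields.
STRUCTS = {"Point": ["int", "int"],
           "Polyline": ["std::vector<Point>", "bool"]}


def split_template_args(args):
    """Split 'A, B<C, D>' at top-level commas only."""
    parts, depth, current = [], 0, ""
    for ch in args:
        if ch == "<":
            depth += 1
        elif ch == ">":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append(current.strip())
            current = ""
        else:
            current += ch
    if current.strip():
        parts.append(current.strip())
    return parts


def resolve(type_name):
    """Recursively classify a type until only primitives (or unknowns) remain."""
    type_name = type_name.strip()
    if type_name in PRIMITIVES:
        return ("primitive", type_name)
    if type_name in STRUCTS:
        return ("struct", type_name, [resolve(f) for f in STRUCTS[type_name]])
    if "<" in type_name and type_name.endswith(">"):
        head, args = type_name.split("<", 1)
        head = head.strip()
        if head in CONTAINERS:
            inner = split_template_args(args[:-1])  # drop the closing '>'
            return ("container", head, [resolve(a) for a in inner])
    # Anything the registry does not know falls through to the unknown category.
    return ("unknown", type_name)
```

For example, `resolve("std::vector<std::pair<int, float>>")` descends through the vector and the pair until it reaches `int` and `float`, while `resolve("void*")` is reported as unknown.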

3.2.3. Identifying Unknown Data Types

When a parameter’s type cannot be resolved—either because it is an opaque handle, a third-party extension, or simply undocumented—we conservatively classify it as an “unknown” data type. In such cases, we fall back to a generic mutation approach: the parameter is treated as a raw byte buffer, and we apply a randomized, type-agnostic mutation strategy (e.g., bit flips, byte-level insertions, and deletions). Although less precise than the type-aware operators used for recognized types, this fallback ensures that no parameter is left unexercised during fuzzing, thereby maintaining comprehensive coverage across the API surface.
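A minimal sketch of such a type-agnostic fallback is shown here, in Python for brevity. The three operators mirror the ones named in the text (bit flips, byte insertions, byte deletions); the function names and the uniform choice among operators are assumptions made for illustration.

```python
import random


def flip_bit(data, rng):
    """Flip one randomly chosen bit; empty input is returned unchanged."""
    if not data:
        return data
    out = bytearray(data)
    pos = rng.randrange(len(out) * 8)
    out[pos // 8] ^= 1 << (pos % 8)
    return bytes(out)


def insert_byte(data, rng):
    """Insert one random byte at a random position."""
    pos = rng.randrange(len(data) + 1)
    return data[:pos] + bytes([rng.randrange(256)]) + data[pos:]


def delete_byte(data, rng):
    """Delete one byte at a random position; empty input is returned unchanged."""
    if not data:
        return data
    pos = rng.randrange(len(data))
    return data[:pos] + data[pos + 1:]


GENERIC_MUTATORS = (flip_bit, insert_byte, delete_byte)


def mutate_unknown(data, rng):
    """Apply one randomly chosen generic operator to a raw byte buffer."""
    return rng.choice(GENERIC_MUTATORS)(data, rng)
```

Passing an explicit `random.Random` instance keeps the mutations reproducible for a given seed, which helps when a crashing input must be regenerated.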

3.3. Point-to-Point Mutation Strategy

3.3.1. The Overall Mutation Strategy

Our overall mutation strategy applies to all data types. As shown in Figure 4, the idea is to dynamically collect code-coverage information by tracing program execution paths in real time and to use these insights to guide subsequent mutations. Unlike traditional blind mutation approaches, our system can identify which mutations yield new code paths and therefore prioritize and further evolve these high-value inputs. Simultaneously, the system employs a gradient-feedback mechanism that assigns a score to each execution path, giving the mutation process a clear optimization direction. Although inspired by the gradient-descent algorithm in machine learning, this mechanism has been specifically adapted for the requirements of fuzz testing. The system also uses parallel fuzzing on multiple cores to improve efficiency.

At the heart of this method lies a dynamic weight-adjustment mechanism that automatically tunes the frequency of different mutation strategies based on their observed effectiveness. The system maintains an array of strategy weights, increasing a strategy’s weight whenever it uncovers a new execution path or improves coverage, and decreasing it otherwise. This adaptive mechanism enables the tool to continuously learn and optimize during execution, automatically identifying the combination of mutation strategies most effective for the current testing target. In addition, we have implemented a multi-strategy composite mutation capability, allowing multiple strategies to be applied simultaneously in a single mutation step, thereby further enhancing both the diversity and effectiveness of the generated inputs.
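The weight-adjustment idea can be sketched as follows, in Python for brevity. The text only states that a weight increases when a strategy finds new coverage and decreases otherwise; the multiplicative update, the `reward`, `penalty`, and `floor` values, and the class name `StrategyScheduler` are assumptions chosen for illustration.

```python
import random


class StrategyScheduler:
    """Keeps one weight per mutation strategy and samples proportionally."""

    def __init__(self, names, reward=1.5, penalty=0.9, floor=0.05):
        self.weights = {name: 1.0 for name in names}
        self.reward = reward    # assumed factor applied on new coverage
        self.penalty = penalty  # assumed factor applied otherwise
        self.floor = floor      # keeps every strategy selectable

    def pick(self, rng):
        """Choose a strategy with probability proportional to its weight."""
        names = list(self.weights)
        weights = [self.weights[n] for n in names]
        return rng.choices(names, weights=weights, k=1)[0]

    def update(self, name, found_new_coverage):
        """Raise the weight after a coverage gain, lower it otherwise."""
        factor = self.reward if found_new_coverage else self.penalty
        self.weights[name] = max(self.floor, self.weights[name] * factor)
```

The floor is a design choice in this sketch: without it, a strategy that is unproductive early in a campaign could decay to a weight so small that it is never retried, even if it would become useful later.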

3.3.2. Mutation for Primitive Data Types

When designing mutation operators for API parameters in C and C++, it is essential to tailor strategies to the semantic and structural properties of each data type. For primitive scalar types—such as signed and unsigned integers, floating-point values, and Booleans—we advocate a hybrid approach that interleaves bit-level perturbations with value-level boundary testing. Specifically, integer mutations intersperse random bit flips with substitutions drawn from a curated set of “interesting” constants (e.g., 0, ±1, type minima and maxima, power-of-two boundaries), thereby provoking both arithmetic overflow and sign-handling errors. Floating-point mutations emulate edge conditions by injecting NaN, infinities, and zero with sign flips, as well as by applying small stochastic perturbations to examine rounding behavior and exception handling. Boolean parameters, despite their apparent simplicity, benefit from coordinated flips across multiple flags to expose compound-predicate logic flaws.
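A minimal sketch of the scalar operators described above, in Python for brevity. The even split between boundary substitution and bit flips, the perturbation magnitude for floats, and all function names are assumptions; fixed-width C integers are emulated with explicit wrap-around.

```python
import math
import random


def interesting_values(bits, signed):
    """Boundary constants: 0, 1, -1 if signed, type limits, power-of-two edges."""
    lo = -(1 << (bits - 1)) if signed else 0
    hi = (1 << (bits - 1)) - 1 if signed else (1 << bits) - 1
    values = {0, 1, lo, hi}
    if signed:
        values.add(-1)
    for k in range(1, bits):
        for v in ((1 << k) - 1, 1 << k, (1 << k) + 1):
            if lo <= v <= hi:
                values.add(v)
    return sorted(values)


def wrap(value, bits, signed):
    """Reduce a Python int to the range of a fixed-width C integer."""
    value &= (1 << bits) - 1
    if signed and value >= 1 << (bits - 1):
        value -= 1 << bits
    return value


def mutate_int(value, bits, signed, rng):
    """Either substitute a boundary constant or flip one random bit."""
    if rng.random() < 0.5:
        return rng.choice(interesting_values(bits, signed))
    return wrap(value ^ (1 << rng.randrange(bits)), bits, signed)


FLOAT_EDGES = (math.nan, math.inf, -math.inf, 0.0, -0.0)


def mutate_float(value, rng):
    """Either inject a special value or apply a small relative perturbation."""
    if rng.random() < 0.5:
        return rng.choice(FLOAT_EDGES)
    return value * (1.0 + rng.uniform(-1e-6, 1e-6))
```

For an 8-bit signed parameter, `interesting_values(8, True)` spans the range from -128 to 127 and includes the values on either side of each power of two that fits in the type.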

For character sequences and string-like containers, mutation must address both content and length invariants. In the case of C-style strings and raw buffers, we introduce overlong inputs, omit or shift null terminators, and inject non-ASCII or control characters to test encoding and boundary checks. When fuzzing std::string, we extend these tactics by manipulating capacity versus size—reserving excessive buffer space or truncating without reallocation—and by interleaving valid UTF-8 runs with invalid code units to stress parser resilience. Iterator-based interfaces further invite mutations of begin, end, and middle positions to uncover iterator-invalidity errors.
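The C-string tactics above can be sketched on raw byte buffers, in Python for brevity. The function names and the repetition factor are assumptions; the byte pair `0xC3 0x28` is used as a standard example of an invalid UTF-8 sequence (a two-byte lead byte followed by a non-continuation byte).

```python
def overlong(buf, factor=64):
    """Repeat the payload to exceed likely fixed-size buffers; keep one terminator."""
    body = buf.rstrip(b"\x00") or b"A"
    return body * factor + b"\x00"


def drop_terminator(buf):
    """Remove trailing NUL bytes so the consumer must rely on an explicit length."""
    return buf.rstrip(b"\x00")


def shift_terminator(buf, pos):
    """Move the NUL terminator into the middle of the payload."""
    body = buf.rstrip(b"\x00")
    pos = max(0, min(pos, len(body)))
    return body[:pos] + b"\x00" + body[pos:]


def inject_invalid_utf8(buf, pos):
    """Insert an invalid UTF-8 sequence between otherwise valid runs."""
    body = buf.rstrip(b"\x00")
    pos = max(0, min(pos, len(body)))
    return body[:pos] + b"\xc3\x28" + body[pos:] + b"\x00"
```

A buffer without a terminator is only safe to pass to an API that also receives the length, so a harness using `drop_terminator` would normally allocate the buffer at its exact size under a memory sanitizer to make any over-read observable.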

3.3.3. Mutation for Complex Data Types

Moving to sequence and associative containers, mutation strategies must reflect each structure’s internal organization. For contiguous sequences such as std::vector<T> and std::array<T,N>, element-level fuzzing applies the preceding primitive or string strategies to each slot, while structural mutations (including rotations, shuffles, and splices of subranges) challenge assumptions about ordering and contiguous memory layouts. Deques (std::deque<T>) introduce additional complexity through their segmented chunk design; thus, we propose bespoke front- and back-insertion/deletion schedules that traverse chunk boundaries. In contrast, linked lists (std::list<T> and std::forward_list<T>) are best exercised by random insertions and removals at arbitrary nodes, followed by the controlled misuse of stale iterators to detect use-after-free and pointer-integrity violations. For associative containers (std::map, std::unordered_map, and their set variants), we blend key and value mutations with adversarial insertion orders that induce tree rebalancing or hash-bucket collisions, thereby evaluating both correctness and performance under pathological conditions.
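The structural mutations for contiguous sequences (rotations, shuffles, and splices) together with element-level mutation can be sketched as follows, in Python for brevity. Python lists stand in for std::vector contents, and the function names are hypothetical.

```python
import random


def rotate(seq, k):
    """Rotate the sequence left by k positions."""
    seq = list(seq)
    if not seq:
        return []
    k %= len(seq)
    return seq[k:] + seq[:k]


def shuffle(seq, rng):
    """Return a randomly reordered copy of the sequence."""
    out = list(seq)
    rng.shuffle(out)
    return out


def splice(seq, donor, rng):
    """Replace a random subrange of seq with a random subrange of donor."""
    seq, donor = list(seq), list(donor)
    i, j = sorted(rng.randrange(len(seq) + 1) for _ in range(2))
    a, b = sorted(rng.randrange(len(donor) + 1) for _ in range(2))
    return seq[:i] + donor[a:b] + seq[j:]


def mutate_elements(seq, element_mutator):
    """Apply a per-element (primitive or string) mutator to every slot."""
    return [element_mutator(x) for x in seq]
```

Because `splice` can change the length of the sequence, it also exercises code paths that depend on element count, which pure reordering mutations such as `rotate` and `shuffle` leave untouched.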

3.3.4. Mutation for Unknown Data Types

Finally, custom aggregate types—user-defined struct and class instances—require a systematic decomposition into their constituent fields, each subjected to the most appropriate mutation operators. By recombining mutated field values and deliberately violating class invariants (for example, through out-of-order initialization or low-level memory manipulation), we can simulate partially initialized or corrupted objects that exercise internal consistency checks. Collectively, these data-type-aware mutation strategies—grounded in both theoretical coverage criteria and empirical fault patterns—yield a powerful framework for API-level fuzzing that transcends generic bit-flipping and drives a deeper exploration of code paths and fault surfaces.

3.4. API Harness

To exercise the target APIs, we leverage established harness-generation frameworks, such as Fudge [25], to synthesize the scaffolding code that invokes each API in the correct sequence, handles initialization and teardown, and captures any runtime anomalies. Importantly, our approach is entirely orthogonal to these harness generators: rather than supplanting their functionality, we integrate our data-type identification and mutation engine into their workflows. In practice, this means that once a harness generator produces the function-call skeleton, our system automatically analyzes the declared parameter types, applies the appropriate, data-type-aware mutation strategies, and populates the harness with a rich variety of argument values. By combining the structural completeness of tools like Fudge with our fine-grained, type-specific fuzzing operators, we achieve both broad API coverage and a deep exploration of parameter-dependent behaviors, thus significantly enhancing fault detection without any modifications to the underlying harness-generation technology.

Our architecture employs a multi-phase analysis pipeline. First, we use Clang LibTooling to parse all library headers and generate an Abstract Syntax Tree (AST). We then recursively traverse this AST to extract type definitions, typedefs, and template instantiations, building a comprehensive type database. At runtime, we match API parameters against this database to select appropriate type-specific mutators. The complexity is O(n) for n type definitions during the initialization phase, with O(1) hash-table lookups during actual fuzzing operations. For special type handling, we address opaque types like void* and forward declarations by analyzing their usage patterns, including creation and destruction functions and parameter naming conventions. When semantics remain unclear, we apply conservative byte-buffer mutations with size constraints derived from adjacent parameters. For pointer types, we distinguish between single values and arrays through parameter naming conventions (e.g., “buf” suggesting array), the presence of accompanying size parameters, and API documentation analysis. Single pointers receive value-specific mutations while arrays receive collection-oriented mutations. Template types are fully resolved to their concrete instantiations during analysis; for instance, std::vector<int> maps to integer-array mutations while std::map<string,T> triggers key-value pair generation with string-specific key mutations. When type resolution fails, we implement an adaptive fallback strategy using byte-level mutations with feedback-driven refinement. This ensures robustness without sacrificing the substantial benefits achieved where type information is successfully extracted.
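The pointer heuristic described above (naming conventions plus an accompanying size parameter) can be sketched as follows, in Python for brevity. Only the “buf” hint comes from the text; the other name hints, the list of integer types, and the restriction to the immediately following parameter are assumptions made for this illustration, and documentation analysis is omitted.

```python
# "buf" is the hint named in the text; the remaining hints are assumptions.
ARRAY_HINTS = ("buf", "data", "array", "bytes")
SIZE_HINTS = ("len", "size", "count")
INTEGER_TYPES = {"size_t", "int", "unsigned", "unsigned int", "uint32_t"}


def classify_pointer(params, index):
    """Classify params[index], a (type, name) pair, as 'array' or 'single'."""
    ctype, name = params[index]
    if "*" not in ctype:
        raise ValueError("not a pointer parameter")
    lowered = name.lower()
    # Rule 1: the parameter name itself suggests a buffer.
    if any(hint in lowered for hint in ARRAY_HINTS):
        return "array"
    # Rule 2: the next parameter looks like an element count or byte length.
    if index + 1 < len(params):
        next_type, next_name = params[index + 1]
        is_integer = next_type.replace("const", "").strip() in INTEGER_TYPES
        if is_integer and any(h in next_name.lower() for h in SIZE_HINTS):
            return "array"
    return "single"
```

Under these assumptions, a signature such as `(const char* src, size_t src_len)` is classified as an array because of the trailing length parameter, while a lone `int* out_value` is treated as a pointer to a single value.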

4. Experiment

PointFuzz extends LibFuzzer’s mutation engine while preserving its coverage instrumentation and scheduling infrastructure. Specifically, we intercept LibFuzzer’s mutate_impl() function to inject type-aware mutations based on identified parameter types. This design leverages LibFuzzer’s mature components while concentrating innovation on mutation strategies. To address type recognition conflicts—where library documentation contradicts actual API signatures—we adopt a three-tier resolution strategy: (1) Header files take precedence as the ground truth, since the static analysis of function signatures provides authoritative type information; (2) For ambiguous cases (e.g., void* with unclear semantics), we apply both type-specific and generic mutations in parallel, allowing coverage feedback to naturally select the most effective strategy; (3) All remaining conflicts are logged for manual review, with negligible impact on performance. This pragmatic approach ensures robustness and maximizes the benefits of type-aware fuzzing.

We evaluated PointFuzz on five widely used C and C++ libraries that represent diverse application domains and complexity levels: jsoncpp for JSON parsing, libjpeg-turbo for image compression, libpng for PNG image processing, woff2 for web font compression, and zlib for general data compression. These libraries have been extensively tested in prior fuzzing research and provide robust baseline measurements for comparison. Specifically, they were selected for three key reasons: (1) they are widely used benchmarks in the fuzzing community, enabling reproducible and comparable evaluations; (2) they are integrated into Google’s OSS-Fuzz platform, benefiting from a mature and well-maintained testing infrastructure; and (3) they span a range of complexity levels, illustrating the generalizability of PointFuzz. The selection emphasizes diversity over quantity to demonstrate the effectiveness of our approach across different API paradigms. All experiments were conducted on machines equipped with Intel Xeon processors running Ubuntu 20.04 LTS. Each fuzzing campaign was executed for 12 h with identical initial seeds and system configurations to ensure a fair comparison. We allocated 8 GB of memory for each fuzzing instance and utilized Clang coverage instrumentation for both PointFuzz and the baseline LibFuzzer. The coverage metrics were collected at 15-min intervals throughout the execution period.

We selected LibFuzzer as our primary baseline due to its widespread adoption and proven effectiveness in discovering vulnerabilities in real-world software. LibFuzzer represents the state of the art in coverage-guided fuzzing and has been integrated into continuous fuzzing platforms such as OSS-Fuzz. By comparing against LibFuzzer, we demonstrate that targeted type-aware mutations can significantly enhance the effectiveness of even well-established fuzzing tools. Our work is orthogonal to existing approaches on harness generation for fuzzing libraries. Consequently, we use the baseline fuzzer LibFuzzer for comparison in order to highlight both the performance improvements and the methodological innovations introduced by our approach. By focusing on this minimal yet widely adopted baseline, we isolate the contributions of our design and provide a clear demonstration of its advantages over conventional fuzzing techniques.

4.1. Coverage Improvement

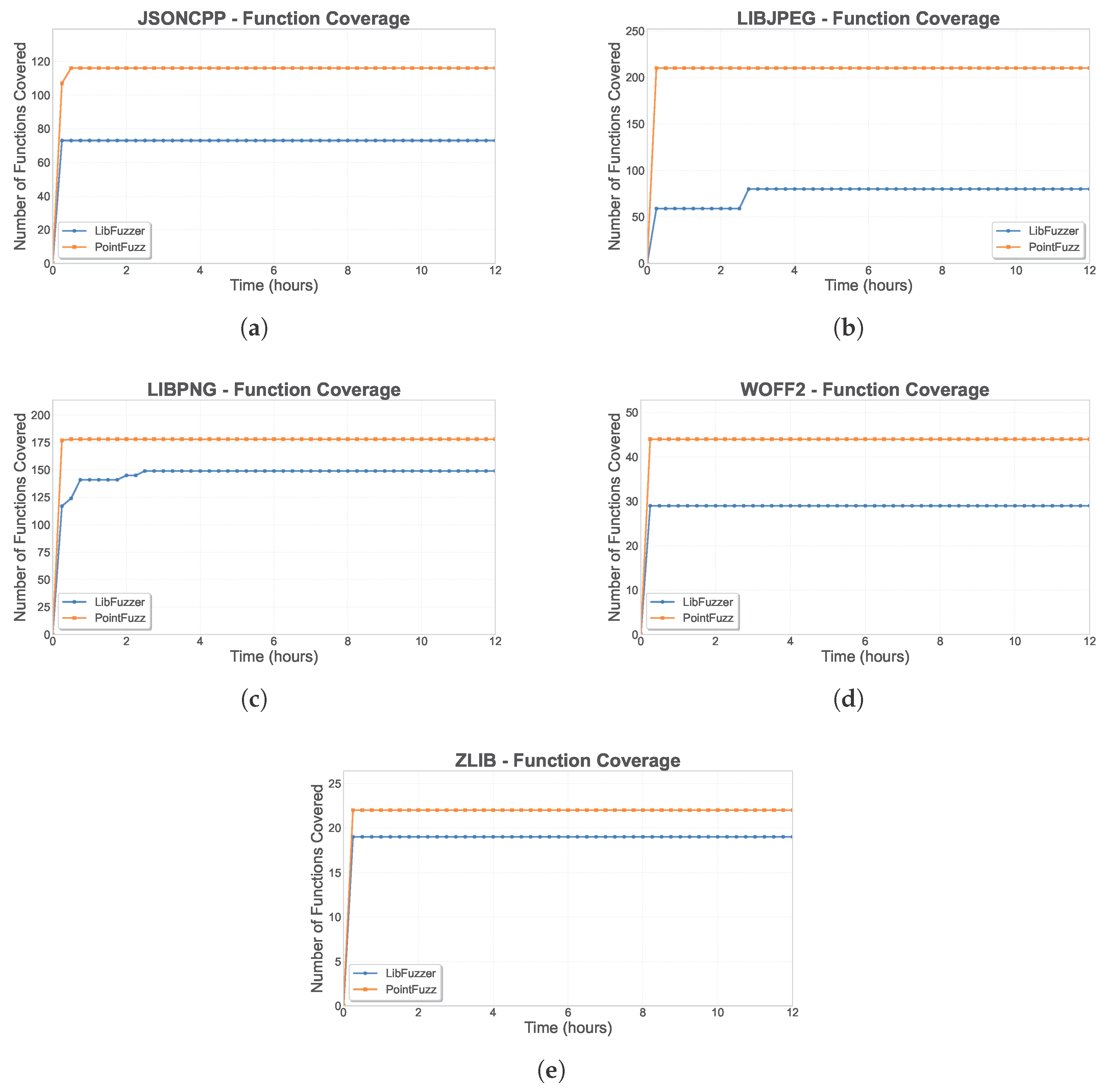

To evaluate whether type-aware mutations yield superior code coverage, as shown in Table 1, we compared PointFuzz against LibFuzzer across four coverage metrics. Figure 5 presents the final coverage percentages after 12 h of fuzzing for all projects.

For line coverage, the most commonly reported fuzzing metric (Table 1), PointFuzz demonstrates substantial improvements across all evaluated libraries. In jsoncpp, PointFuzz achieves 30.2% line coverage compared to LibFuzzer’s 12.5%, representing a 150% improvement. The most dramatic gain occurs in libjpeg-turbo where PointFuzz reaches 28.0% coverage versus 8.5% for LibFuzzer, a 228.4% increase. Even for well-tested libraries like zlib that start with high baseline coverage of 53.6%, PointFuzz achieves 56.1% coverage, demonstrating its ability to discover previously unexplored code paths.

The region coverage results further validate our approach. As shown in Figure 6, PointFuzz covers 788 regions in jsoncpp compared to 262 for LibFuzzer after 12 h. For libjpeg-turbo, the improvement reaches 189.4% with 5751 regions covered versus 1987 for the baseline. These improvements indicate that type-aware mutations successfully generate inputs that satisfy complex constraints and reach deeper program states.

Function and branch coverage metrics exhibit similar patterns of improvement. PointFuzz discovers 116 functions in jsoncpp compared to 73 for LibFuzzer, while branch coverage shows a 200% improvement from 195 to 585 branches. The consistent superiority across all metrics confirms that type-aware mutations fundamentally enhance the quality of generated test cases rather than merely inflating superficial coverage numbers.
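The improvement percentages quoted for the absolute counts can be reproduced with the usual relative-improvement formula, (PointFuzz − LibFuzzer) / LibFuzzer × 100. The short Python sketch below applies it to the region, function, and branch counts reported above; the function name is ours and is used only for illustration.

```python
def relative_improvement(baseline, improved):
    """Percentage gain of `improved` over `baseline`."""
    return (improved - baseline) / baseline * 100.0


# Counts after 12 h, taken from the text above.
print(round(relative_improvement(262, 788), 1))    # jsoncpp regions: 200.8
print(round(relative_improvement(1987, 5751), 1))  # libjpeg-turbo regions: 189.4
print(round(relative_improvement(73, 116), 1))     # jsoncpp functions: 58.9
print(round(relative_improvement(195, 585), 1))    # jsoncpp branches: 200.0
```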

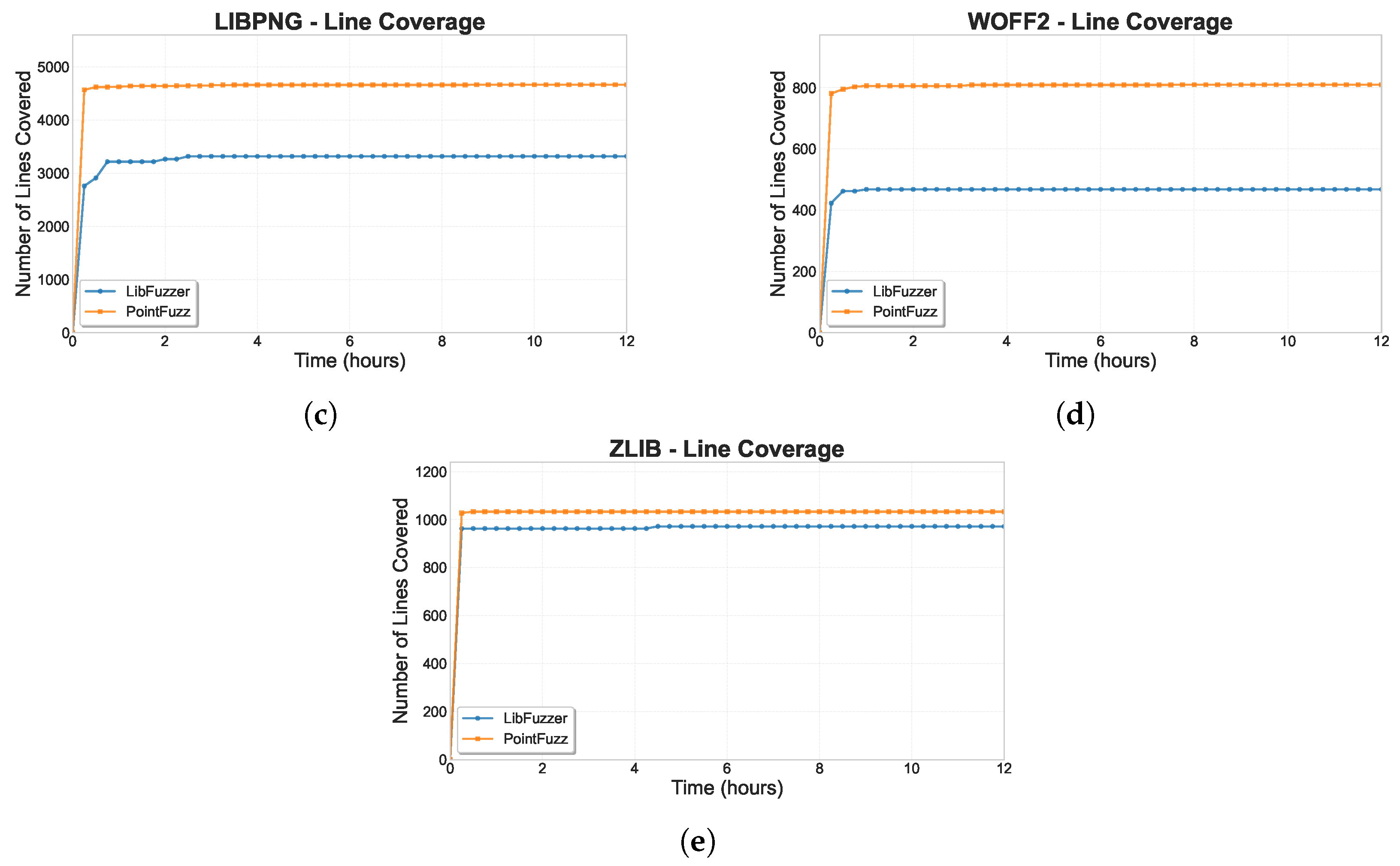

4.2. Efficiency of Coverage Discovery

Time efficiency represents a critical factor in practical fuzzing deployments. Figure 6, Figure 7, Figure 8 and Figure 9 illustrate the temporal progression of coverage discovery for each project. As shown in Figure 6, PointFuzz demonstrates substantial improvements in region coverage across all tested libraries. The function coverage results presented in Figure 7 further validate the effectiveness of our approach. The line coverage comparisons shown in Figure 8 represent the most commonly reported fuzzing metric. Branch coverage results in Figure 9 provide insights into PointFuzz’s ability to explore different execution paths through conditional statements. The analysis of these growth curves reveals that PointFuzz achieves remarkably rapid initial coverage acquisition.

For jsoncpp in Figure 8a, PointFuzz reaches LibFuzzer’s 12 h line coverage of 504 lines within approximately 18 min of execution, representing a 40× speedup. Similarly, in Figure 8b, PointFuzz attains the baseline’s final coverage of 1867 lines in just 15 min, a 48× acceleration. This pattern holds across all evaluated projects with speedup factors ranging from 24× to 48×. Even for well-tested libraries like zlib that start with a relatively high baseline coverage, as shown in Figure 6e, PointFuzz maintains a consistent advantage by covering 991 regions compared to 951 for LibFuzzer. The rapid initial growth stems from PointFuzz’s ability to immediately generate semantically valid inputs based on type information. While LibFuzzer must gradually learn the input structure through trial and error, our approach leverages parameter type signatures to produce well-formed values from the campaign’s inception. The coverage curves show that PointFuzz maintains steady growth throughout the entire 12 h period, whereas LibFuzzer typically plateaus after 4 to 6 h of execution.
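The speedup factors above follow from dividing the baseline campaign length by the time PointFuzz needs to reach the baseline's final coverage. A small Python check of that arithmetic, using only the durations reported in the text (the function and constant names are ours):

```python
CAMPAIGN_MINUTES = 12 * 60  # each campaign ran for 12 h


def speedup(baseline_minutes, pointfuzz_minutes):
    """How many times faster the same coverage level is reached."""
    return baseline_minutes / pointfuzz_minutes


print(speedup(CAMPAIGN_MINUTES, 18))  # Figure 8a (jsoncpp): 40.0
print(speedup(CAMPAIGN_MINUTES, 15))  # Figure 8b: 48.0
```

Because coverage was sampled at 15-min intervals, times such as 18 min are approximate, so the factors should be read as estimates rather than exact ratios.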

The sustained coverage growth indicates that our dynamic weight adjustment mechanism successfully adapts to each library’s characteristics. By continuously monitoring which mutation strategies yield new coverage and adjusting their selection probabilities accordingly, PointFuzz maintains exploration momentum even in later fuzzing stages, when discovering new paths becomes increasingly difficult.

4.3. Consistency Across Coverage Metrics

To assess whether improvements are consistent across different aspects of code coverage, we analyzed the correlation between region, function, line, and branch coverage gains. Figure 5 demonstrates that PointFuzz achieves improvements across all four metrics for every evaluated project.

For jsoncpp, the improvements are substantial on every metric: 200.8% for regions, 58.9% for functions, 150.0% for lines, and 200.0% for branches. The gain is smaller for functions than for the finer-grained metrics, but its direction is the same throughout, which suggests that type-aware mutations enhance exploration at multiple granularities simultaneously. The strong correlation between different metrics validates that our approach generates inputs that thoroughly exercise the discovered code rather than superficially touching many locations without deep exploration.

Statistical analysis reveals a Pearson correlation coefficient of 0.89 between line and branch coverage improvements across all projects, indicating that gains in one metric strongly predict gains in others. Function coverage shows slightly lower but still substantial correlation with other metrics at 0.76, which is expected given that discovering new functions represents a coarser granularity of exploration.

The only notable deviation appears in zlib where improvements are more modest across all metrics, ranging from 3.7% for branches to 15.8% for functions. This pattern likely reflects zlib’s maturity and the extensive prior fuzzing it has received, leaving fewer unexplored paths to discover. Nevertheless, even these modest gains demonstrate PointFuzz’s ability to advance coverage beyond well-established baselines.

4.4. Generalizability Across Different Libraries

To evaluate the generalizability of our approach, we deliberately selected libraries spanning different application domains, complexity levels, and code sizes. As shown in Figure 5, the experimental results demonstrate that PointFuzz achieves improvements across this diverse set, though the magnitude varies based on library characteristics.

Libraries with complex parsing logic show the most significant gains. For jsoncpp and libjpeg-turbo, which process structured data formats with intricate validation rules, PointFuzz achieves average improvements exceeding 150% across all metrics. These libraries benefit greatly from type-aware mutations that generate structurally valid inputs capable of penetrating deep parsing logic.

Medium-complexity libraries like libpng and woff2 exhibit moderate but consistent improvements. Libpng shows gains ranging from 19.5% for functions to 40.6% for lines, while woff2 demonstrates improvements between 49.0% and 73.1% across different metrics. These libraries contain substantial parsing code but with somewhat simpler structure than JSON or JPEG processing, resulting in proportionally smaller though still significant gains.

Even for highly optimized and extensively tested libraries like zlib, PointFuzz discovers previously unexplored code paths. While the improvements are modest at 7.5% on average, they demonstrate that type-aware mutations can advance coverage even in mature codebases where most easily reachable paths have been exhausted.

The consistent improvements across diverse libraries validate that our approach generalizes beyond specific application domains. The varying magnitudes of improvement provide insights into where type-aware fuzzing is most beneficial. Libraries with complex data structures, multiple parameter types, and deep nesting show the greatest gains, while simpler libraries with predominantly primitive parameters exhibit smaller but still meaningful improvements.

4.5. Overhead

PointFuzz introduces approximately 12–18% runtime overhead, attributable to three components: type resolution (≈5%), mutation selection (3–8%, depending on type complexity), and feedback tracking (4–5%). This modest overhead is outweighed by substantial gains in coverage velocity—PointFuzz reaches equivalent coverage levels 24–48× faster than LibFuzzer.
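The component figures above can be checked with simple arithmetic. The sketch below sums the per-component ranges and, under the assumption that overhead means extra time per execution, estimates the resulting relative throughput. Treating "≈5%" as exactly 5% and the throughput model itself are assumptions made for illustration.

```python
# Component overhead ranges as (low, high) percentages, taken from the text.
# Assumption: "≈5%" for type resolution is treated as exactly 5%.
components = {
    "type_resolution": (5.0, 5.0),
    "mutation_selection": (3.0, 8.0),
    "feedback_tracking": (4.0, 5.0),
}

total_low = sum(low for low, _ in components.values())
total_high = sum(high for _, high in components.values())
print(f"total overhead: {total_low:.0f}-{total_high:.0f}%")

# Assumption: overhead is extra time per execution, so executions per second
# drop by a factor of 1 / (1 + overhead).
throughput_best = 1.0 / (1.0 + total_low / 100.0)
throughput_worst = 1.0 / (1.0 + total_high / 100.0)
print(f"relative throughput: {throughput_worst:.2f}-{throughput_best:.2f}")
```

Under these assumptions the components add up to the reported 12–18% range, and the fuzzer retains roughly 85–89% of its baseline execution rate.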

4.6. Discussion

Several factors may influence the interpretation of our experimental results. First, our evaluation focuses on C and C++ libraries, and the effectiveness of type-aware mutations may differ for other programming languages with different type systems. Second, while we selected diverse libraries, they may not represent all possible API fuzzing scenarios. Third, the 12 h fuzzing duration, though standard in fuzzing evaluations, may not capture long-term behavioral differences between approaches. Despite these limitations, the consistency of improvements across multiple libraries, metrics, and experimental runs provides strong evidence for the effectiveness of type-aware mutation strategies in library API fuzzing.

We plan to extend our evaluation campaigns to incorporate crash deduplication, root cause analysis, and correlation with known CVEs. Nevertheless, we contend that our current coverage-based evaluation sufficiently validates the core contribution: type-aware mutations produce higher-quality inputs that explore deeper program states, where vulnerabilities are most likely to manifest.

5. Related Work

This paper aims to improve the efficiency of fuzzing library APIs. Related work includes energy assignment, mutator scheduling, byte-level mutation, and fuzz driver generation.

The first line of related research focuses on scheduling seeds to improve fuzzing efficiency, i.e., reducing the number of mutations needed to discover new code coverage [11, 14, 27]. Such fuzzers aim to design universal methods that work across different programs. For example, AFLFast models the fuzzing process as a Markov chain and prefers paths that have been exercised less often [11]. However, these fuzzers ignore the importance of data types. A second line of research schedules mutators, i.e., the mutation operators [6, 28]. This work optimizes the choice of mutators based on the observation that different mutators affect fuzzing efficiency differently, using algorithms such as Particle Swarm Optimization [6].
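The idea of preferring rarely exercised paths can be illustrated with a small sketch. This is a simplified inverse-frequency rule, not AFLFast's actual power schedule; the function `assign_energy`, the seed and path names, and the constants `base_energy` and `max_energy` are all hypothetical.

```python
from collections import Counter

def assign_energy(seed_paths, path_counts, base_energy=16, max_energy=1024):
    """Assign each seed a mutation budget that grows as its path gets rarer.

    seed_paths:  maps a seed name to the id of the path it exercises.
    path_counts: maps a path id to how often that path has been exercised.
    """
    most_hit = max(path_counts.values())
    energy = {}
    for seed, path in seed_paths.items():
        # Rarely exercised paths get a larger multiple of the base energy.
        scale = most_hit / path_counts[path]
        # Cap the budget so that one rare path cannot starve the queue.
        energy[seed] = min(max_energy, int(base_energy * scale))
    return energy

# Hypothetical queue state: p1 is hit often, p3 almost never.
seed_paths = {"seed_a": "p1", "seed_b": "p2", "seed_c": "p3"}
path_counts = Counter({"p1": 900, "p2": 90, "p3": 9})
energy = assign_energy(seed_paths, path_counts)
print(energy)
```

The cap reflects a common design choice in energy schedules: without an upper bound, a single low-frequency path could absorb nearly all of the fuzzing time.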

The research most closely related to our work is byte scheduling for fuzzing [29, 30, 31, 32, 33]. These approaches obtain or infer the relationships between input bytes and program path constraints so that mutation can focus on the relevant bytes, which can significantly improve fuzzing efficiency. For example, some use taint analysis to obtain these relationships [31]. Others generate or mutate inputs based on input specifications, producing inputs that conform to the expected format [32]. However, these works still apply universal algorithms regardless of data type. Our work is orthogonal to research on generating fuzz drivers, i.e., the harnesses that invoke library APIs [25, 26]. These works focus on generating valid and effective harnesses to test APIs and do not address the efficiency of generating values for API parameters.
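To illustrate what relating input bytes to path constraints means, the sketch below uses a simple mutation-based inference: it flips one byte at a time and records which branch outcomes change. This stands in for taint analysis and is not the method of any cited work; the toy target `target_path` and the helper `infer_relevant_bytes` are hypothetical.

```python
def target_path(data: bytes) -> tuple:
    """Toy target: returns its branch outcomes as a stand-in for a coverage trace."""
    return (
        len(data) >= 4 and data[0] == 0x89,       # branch 0: first magic byte
        len(data) >= 4 and data[1:4] == b"PNG",   # branch 1: format tag
    )

def infer_relevant_bytes(seed: bytes, run=target_path):
    """Return, per branch index, the byte offsets whose mutation flips that branch."""
    baseline = run(seed)
    relevant = {i: set() for i in range(len(baseline))}
    for offset in range(len(seed)):
        mutated = bytearray(seed)
        mutated[offset] ^= 0xFF  # flip every bit of a single byte
        outcome = run(bytes(mutated))
        for i, (before, after) in enumerate(zip(baseline, outcome)):
            if before != after:
                relevant[i].add(offset)
    return relevant

seed = b"\x89PNG\r\n\x1a\n"
relevant = infer_relevant_bytes(seed)
print(relevant)
```

A scheduler could then concentrate mutations on the offsets associated with an unsolved branch instead of mutating all bytes uniformly.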

Large language models (LLMs) have been applied to the field of software security [34, 35, 36]. They can also generate valid inputs for programs [37]. Despite their promise, integrating LLMs into fuzzing workflows entails several significant drawbacks. First, contemporary LLMs incur substantial computational overhead. Their inference processes typically require GPU or specialized accelerator resources, resulting in pronounced increases in hardware acquisition and operational costs. Moreover, when LLM-based mutation or harness synthesis is invoked repeatedly within large-scale fuzzing campaigns, potentially generating millions of inputs, the cumulative latency can far exceed that of conventional, rule-based mutation engines, thereby impeding the overall test throughput. Second, LLM outputs are susceptible to “hallucination”, wherein the model generates syntactically plausible but semantically invalid code snippets. Such hallucinated artifacts may compile yet exhibit spurious logic, reference non-existent APIs, or violate calling conventions. In a fuzzing context, these malformed test cases impose an additional burden on downstream validation stages, which require costly compilation checks and semantic filtering, and can yield false negatives by obscuring genuine vulnerabilities behind incoherent inputs. Finally, the inherent nondeterminism of LLM generation complicates the reproducibility of fuzzing experiments. Even under controlled settings with fixed random seeds, minor variations in prompt phrasing, model checkpoints, or runtime library versions can result in markedly divergent outputs. This variability hinders the precise retracing of fault-inducing inputs during bug triage and undermines the rigorous benchmarking of fuzzing effectiveness. Collectively, these limitations necessitate careful trade-off analyses and the incorporation of robust validation mechanisms to mitigate resource, correctness, and reproducibility concerns when employing LLMs in fuzzing.