1. Introduction

The exponential proliferation of Internet of Things (IoT) devices, projected to exceed 75 billion by 2025, has fundamentally transformed the cybersecurity landscape, creating an expansive attack surface that traditional security paradigms struggle to defend [1]. These resource-constrained devices, often deployed with minimal security protocols and infrequent software updates, present unique challenges for intrusion detection systems (IDSs), which must identify both known threats and zero-day attacks across heterogeneous network environments [2]. The critical role of IoT networks in smart cities, industrial control systems, and healthcare infrastructure necessitates robust, adaptive detection capabilities that can respond to rapidly evolving threat patterns without extensive retraining or manual intervention.

Traditional network intrusion detection has long relied on signature-based systems and rule-driven approaches, which prove inadequate against the dynamic threat landscape of modern IoT environments [3,4]. The emergence of machine learning-based IDSs has shown significant promise, with ensemble methods (particularly Random Forest and Gradient Boosted Decision Trees) dominating academic benchmarks and achieving reported accuracy rates exceeding 99% on standard datasets like CIC-IDS2017 [5,6]. However, these impressive accuracy figures often mask a critical limitation that has profound implications for operational security: the inability to detect minority attack classes due to severe class imbalance inherent in cybersecurity datasets, where benign traffic typically comprises 80–90% of network flows.

This limitation becomes particularly concerning when considering that some of the most dangerous threats—such as advanced persistent threats (APTs), zero-day exploits, and sophisticated infiltration attempts—often manifest as minority classes in training data. The failure to detect these rare but critical attack types represents a significant vulnerability that could prove catastrophic in operational environments, despite achieving high overall accuracy metrics that may provide false confidence in system performance.

The recent advent of foundation models has opened unprecedented opportunities for addressing these fundamental limitations in cybersecurity applications [7]. Unlike traditional machine learning approaches, which require extensive training on domain-specific datasets, foundation models leverage pretrained representations that can generalize across tasks with minimal or no additional training. This paradigm shift offers particular advantages for cybersecurity applications where labeled data for emerging threats are scarce and where rapid adaptation to new attack patterns is essential.

TabPFN (Tabular Prior-Data Fitted Network) represents a breakthrough in this domain, offering a probabilistic transformer trained to approximate Bayesian inference without requiring per-dataset optimization [8,9,10]. However, the foundation model landscape for tabular data has expanded significantly, with new approaches like TabICL (Tabular In-Context Learning) offering alternative paradigms that warrant comprehensive evaluation.

TabICL represents a fundamentally different approach within the foundation model family, leveraging in-context learning mechanisms that enable classification through example-based reasoning rather than probabilistic inference [11]. This approach has shown remarkable success in natural language processing domains and presents unique advantages for cybersecurity applications where explainability and rapid adaptation to new threat patterns are paramount. Additionally, large language models (LLMs) have demonstrated surprising effectiveness in few-shot tabular classification tasks, offering inherent explainability that addresses critical requirements in security operations centers [12].

Despite the promise of these diverse foundation model approaches, their comprehensive evaluation for cybersecurity applications, particularly across multiple datasets and threat landscapes, remains largely unexplored. Existing studies typically focus on specific models or limited datasets [13,14], leaving significant gaps in the understanding of how different foundation model paradigms perform across diverse network environments and attack types [15,16]. Moreover, the cybersecurity community’s continued reliance on tree-based ensembles may reflect methodological limitations in previous evaluations rather than fundamental algorithmic superiority [17,18].

This paper addresses these critical gaps through a comprehensive multi-modal evaluation of foundation models for tabular intrusion detection across diverse cybersecurity datasets. Our work extends beyond previous single-model evaluations to provide a complete assessment of the foundation model landscape for cybersecurity applications, enabling practitioners to make informed decisions about optimal architectures for their specific operational requirements.

Our primary contributions are fourfold:

1. Comprehensive Multi-Modal Evaluation of Foundation Models: We present the first systematic comparison of multiple foundation model approaches—TabPFN, TabICL, and LLMs—against traditional machine learning methods across three distinct cybersecurity datasets (CIC-IDS2017, N-BaIoT, and CIC-UNSW), providing a complete assessment of foundation model capabilities for tabular intrusion detection.

2. Cross-Dataset Generalization Analysis: Through rigorous evaluation across diverse threat landscapes and network configurations, we demonstrate that foundation models maintain consistent performance advantages, while traditional methods exhibit significant dataset-specific variations, establishing foundation models’ superior generalization capabilities.

3. Advanced Methodological Framework: We establish refined experimental protocols that implement model-appropriate evaluation strategies, addressing class imbalance through tailored sampling approaches for each model family while ensuring fair comparison across fundamentally different architectural paradigms.

4. Operational Deployment Guidelines: We provide the first comprehensive framework for selecting and implementing foundation models in operational cybersecurity environments, offering practical guidance on when different foundation model approaches excel and how to leverage their complementary strengths.

Our findings fundamentally challenge the prevailing assumption that tree-based ensemble methods represent the optimal approach for tabular IDS applications. We demonstrate that foundation models—particularly TabPFN and TabICL—not only achieve competitive overall performance but also uniquely detect all attack classes, including rare threats that traditional methods completely miss. This comprehensive detection capability, achieved without traditional training requirements, represents a paradigm shift toward training-free, generalizable intrusion detection systems capable of adapting to emerging threats without extensive retraining cycles.

Note that our use of the term multi-modal refers to the evaluation across multiple modeling paradigms (tree ensembles, deep models, tabular foundation models, and LLMs) and datasets, rather than multi-modal input data (e.g., image + text). All datasets used here are tabular network flow records.

The remainder of this paper is organized as follows: Section 2 reviews related work on foundation models and intrusion detection systems. Section 3 describes our comprehensive experimental methodology and evaluation protocols. Section 4 presents detailed results comparing foundation models against traditional approaches across all datasets. Section 5 discusses implications for operational deployment and future research directions, while Section 6 concludes with actionable recommendations for the cybersecurity community.

2. Related Work

Intrusion detection systems have undergone significant evolution from signature-based approaches to sophisticated machine learning architectures. Early benchmarks such as KDD’99 and NSL-KDD [3] established foundational evaluation protocols but became limited due to their synthetic nature and outdated attack representations. Contemporary datasets, particularly CIC-IDS2017 [5] and UNSW-NB15 [4], have emerged as standard benchmarks due to their realistic network traffic simulation and comprehensive labeling across diverse attack categories.

Traditional machine learning approaches, including logistic regression, Support Vector Machines (SVMs), and k-Nearest Neighbors (k-NN), have been extensively deployed in IDS applications due to their interpretability and computational efficiency [19]. However, ensemble methods, particularly Random Forest and Gradient Boosted Decision Trees (GBDTs), have consistently dominated tabular IDS benchmarks, achieving superior performance through their robustness to noise, resistance to overfitting, and inherent capacity for modeling complex feature interactions [20,21].

Despite the prevalence of tree-based models, recent advances in deep learning have catalyzed interest in neural approaches for structured cybersecurity data [22,23]. TabNet [24,25] introduced a sequential attention architecture specifically designed for tabular data, demonstrating competitive performance through its attentive feature selection mechanism and providing interpretability via sparse attention masks. However, deep learning approaches typically require extensive hyperparameter optimization and sophisticated data augmentation strategies, particularly when confronting the severe class imbalance characteristic of cybersecurity datasets.

The Synthetic Minority Oversampling Technique (SMOTE) [26] and its variants have become standard approaches for addressing class imbalance through synthetic minority-class generation. Recent studies reveal divergent effects across model families: while tree-based models may suffer performance degradation from synthetic noise, representation-learning architectures like TabNet often benefit substantially from SMOTE augmentation, underscoring the critical importance of tailored data augmentation strategies for deep learning in cybersecurity applications [27,28].

The emergence of foundation models represents a paradigmatic shift in machine learning, with transformers achieving remarkable success across diverse domains [15,29,30,31,32,33]. In the tabular domain, TabPFN [9] constitutes a breakthrough as a probabilistic transformer that approximates Bayesian inference without requiring task-specific training. By pretraining on synthetic datasets to learn general tabular patterns, TabPFN achieves competitive performance through single forward passes, eliminating traditional training overhead while maintaining strong generalization capabilities.

Building upon TabPFN’s foundation [10], TabICL [11] represents a significant advancement in scalable tabular foundation models. TabICL introduces a two-stage architecture that uses column-then-row attention to build fixed-dimensional embeddings, enabling the efficient processing of datasets with up to 500K samples while avoiding task-specific retraining and hyperparameter tuning. This makes it particularly attractive for cybersecurity applications where rapid adaptation to new threats is essential.

Concurrently, large language models have demonstrated impressive few-shot learning capabilities through in-context learning (ICL) mechanisms [29,34]. Recent works like TabLLM [35] have explored applying LLMs to tabular classification by converting structured data into natural language representations. While these approaches show promise in low-data regimes and provide inherent explainability, they typically underperform specialized tabular models when abundant training data are available [12].

The application of LLMs to cybersecurity tasks has gained increasing attention, with recent surveys [7,36,37] highlighting growing interest in LLM applications for threat intelligence, vulnerability analysis, and security code generation. IDS-Agent [38] represents early work applying LLMs to intrusion detection in IoT networks, emphasizing explainability benefits that are crucial to security operations centers, where understanding the rationale behind threat classifications is essential to incident response.

A critical gap in the existing literature lies in the methodological rigor of the evaluation of foundation models for cybersecurity applications. Many studies suffer from an inadequate handling of class imbalance, inconsistent sampling strategies across different model families, and limited cross-dataset validation. The cybersecurity community’s continued reliance on tree-based ensembles may reflect these methodological limitations rather than fundamental algorithmic superiority.

Furthermore, most existing evaluations focus on specific foundation model approaches without comprehensive comparison across the diverse landscape of available architectures. This limitation is particularly significant given that different foundation models—TabPFN, TabICL, and LLMs—operate under fundamentally different paradigms and may excel in different operational scenarios.

3. Methodology

Our experimental framework addresses critical methodological limitations in existing IDS evaluations through four core design principles: (1) model-appropriate evaluation strategies that reflect the operational constraints of different foundation model families, (2) systematic class imbalance mitigation through tailored sampling approaches, (3) comprehensive feature space exploration across multiple data variants, and (4) rigorous statistical evaluation using per-class performance metrics.

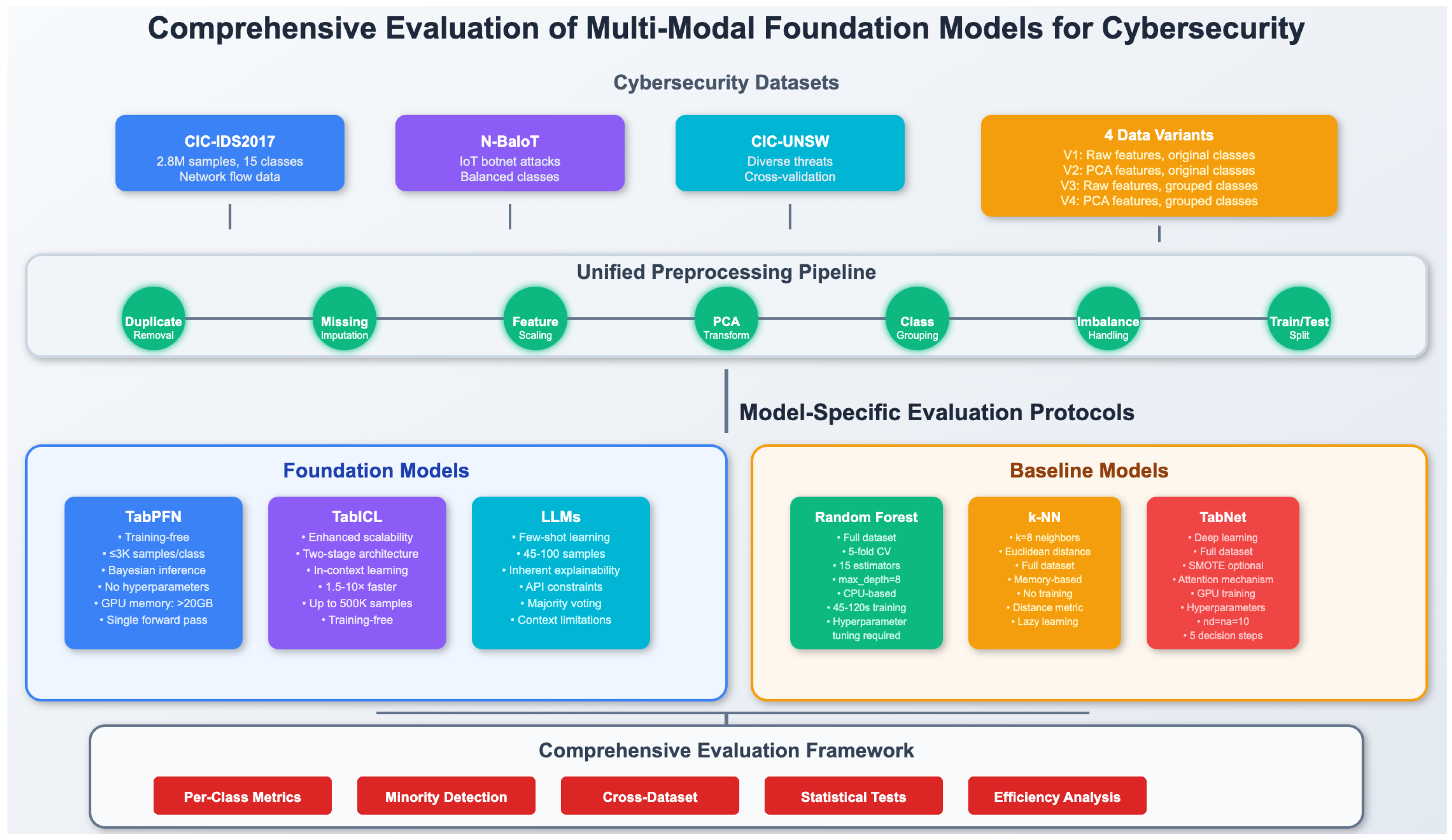

Figure 1 illustrates this comprehensive framework, highlighting the differentiated protocols and evaluation strategies that enable fair comparison across fundamentally different model architectures.

Let $\mathcal{D} = \{(\mathbf{x}_i, y_i)\}_{i=1}^{N}$ represent our complete dataset, where $\mathbf{x}_i \in \mathbb{R}^d$ denotes the $d$-dimensional feature vector for sample $i$ and $y_i \in \{1, \ldots, C\}$ represents the corresponding class label from $C$ possible attack categories. We partition the dataset using stratified training–test splits to evaluate model performance across different data configurations while preserving class proportions.

Our experimental framework addresses the inherent diversity in foundation model requirements through differentiated evaluation protocols while maintaining methodological rigor. Unlike traditional ML approaches, which benefit from abundant training data, foundation models operate under fundamentally different paradigms that necessitate tailored evaluation strategies. Distinct sampling strategies by model family are discussed below:

TabPFN: It utilizes stratified balanced sampling limited to 3000 samples per class due to computational constraints, representing a training-free paradigm that achieves strong performance with limited examples.

TabICL: It employs balanced sampling strategies optimized for its two-stage architecture, leveraging its enhanced scalability compared with TabPFN while avoiding task-specific retraining and hyperparameter tuning. Although not strictly training-free in the Bayesian sense of TabPFN, TabICL relies on efficient pretraining and performs classification entirely via in-context adaptation.

Traditional ML Models: They employ complete datasets with 5-fold stratified cross-validation to leverage their capacity for learning from large-scale data.

TabNet: It uses full datasets with optional synthetic data augmentation via a modified SMOTE variant to address representation learning challenges.

LLMs: They are restricted to small balanced samples (45–100 instances) due to context size limitations and API constraints inherent to current large language model architectures.

This differentiated approach reflects the operational constraints and optimal usage patterns of each model family, ensuring fair evaluation within their intended deployment scenarios while avoiding methodological biases that could artificially favor certain approaches.

3.1. Dataset Selection and Preprocessing

Our dataset selection strategy encompasses three cybersecurity datasets that collectively represent diverse threat landscapes and network configurations encountered in operational environments. CIC-IDS2017 serves as our primary evaluation dataset due to its comprehensive attack taxonomy and realistic network traffic simulation, while N-BaIoT provides focused IoT botnet attack scenarios, and CIC-UNSW offers broader network threat coverage for cross-dataset validation. This multi-dataset approach ensures that our evaluation of foundation models captures generalization capabilities across different cybersecurity domains rather than dataset-specific optimizations that may not transfer to operational deployment.

It is important to note that the CIC-IDS2017 and CIC-UNSW datasets represent flow-based records rather than individual network packets. Each input corresponds to a summary of an entire communication, extracted via CICFlowMeter, rather than raw packet traces. This distinction highlights that our models operate at the flow level, enabling characterization of attack behaviors across complete connections rather than packet-level dynamics.

3.1.1. CIC-IDS2017 Dataset Processing

The CIC-IDS2017 dataset contains $N = 2{,}830{,}743$ network flow records with $d$ numerical features across $C$ attack categories. Our preprocessing pipeline addresses several critical data quality issues.

Duplicate Removal: We eliminate 308,381 duplicate records (10.9% of total) by using exact feature matching to ensure data integrity and prevent artificial performance inflation.

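Missing Value Imputation: For features “Flow Bytes/s” and “Flow Packets/s” containing infinite values, we apply median imputation within each class, replacing each non-finite entry of feature $j$ with the median of the finite values of that feature among samples of the same class:

$$x_{ij} \leftarrow \operatorname{median}\{\, x_{kj} : y_k = y_i,\ |x_{kj}| < \infty \,\}$$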

Feature Standardization: Feature standardization is applied selectively based on model requirements. For variants requiring normalization, we apply z-score normalization:

$$z_{ij} = \frac{x_{ij} - \mu_j}{\sigma_j}$$

where $\mu_j$ and $\sigma_j^2$ represent the mean and variance of feature $j$ computed from training data only. Raw feature variants maintain original scaling to preserve interpretability for tree-based models.

Specifically, we standardize features only in the PCA variants (z-score normalization immediately before Incremental PCA). In the non-PCA variants, we do not apply external scaling. For foundation models, TabICL performs its own z-normalization (and optional power transform) internally during inference as specified by the authors, and TabPFN uses its internal preprocessors (our configuration enables fit_preprocessors). Traditional ML baselines (RF, SVM, k-NN, etc.) operate on raw scales unless the PCA variant is used.
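The preprocessing steps described in this subsection can be illustrated with a minimal pure-Python sketch. It is not the authors' pipeline: the function names are hypothetical, rows are plain lists of floats, and duplicates are assumed to be rows that match exactly on all features and the label.

```python
import math
from statistics import mean, median, pstdev

def drop_duplicates(rows, labels):
    """Keep the first occurrence of each exact (features, label) pair (assumed matching rule)."""
    seen, out_rows, out_labels = set(), [], []
    for row, lab in zip(rows, labels):
        key = (tuple(row), lab)
        if key not in seen:
            seen.add(key)
            out_rows.append(row)
            out_labels.append(lab)
    return out_rows, out_labels

def impute_class_median(rows, labels, col):
    """Replace inf/NaN in column `col` with the median of finite values from the same class."""
    finite = {}
    for row, lab in zip(rows, labels):
        if math.isfinite(row[col]):
            finite.setdefault(lab, []).append(row[col])
    medians = {lab: median(vals) for lab, vals in finite.items()}
    # Note: a class with no finite value in this column would raise KeyError here.
    return [
        row[:col] + [row[col] if math.isfinite(row[col]) else medians[lab]] + row[col + 1:]
        for row, lab in zip(rows, labels)
    ]

def fit_zscore(train_rows):
    """Compute per-feature mean and standard deviation on the training split only."""
    cols = list(zip(*train_rows))
    mus = [mean(c) for c in cols]
    sigmas = [pstdev(c) or 1.0 for c in cols]  # guard against constant columns
    return mus, sigmas

def apply_zscore(rows, mus, sigmas):
    """Standardize any split with statistics fitted on the training split."""
    return [[(v - m) / s for v, m, s in zip(row, mus, sigmas)] for row in rows]
```

Fitting the statistics on the training split and reusing them for the test split avoids leaking test information into the standardized features.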

3.1.2. N-BaIoT and CIC-UNSW Dataset Integration

To ensure comprehensive evaluation across diverse threat landscapes, we incorporate the N-BaIoT dataset focusing on IoT botnet attacks and the CIC-UNSW dataset representing a broader spectrum of network threats. Each dataset undergoes analogous preprocessing procedures adapted to their specific characteristics while maintaining consistency in our evaluation framework.

3.2. Data Variant Construction

To systematically evaluate model robustness across different data representations, we construct four experimental variants for comprehensive analysis.

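Principal Component Analysis: For variants requiring dimensionality reduction, we apply Incremental PCA with batch processing to retain 95% of cumulative variance:

$$\mathbf{Z} = \mathbf{X}\mathbf{W}_k$$

where $\mathbf{W}_k \in \mathbb{R}^{d \times k}$ contains the first $k$ principal components satisfying

$$\frac{\sum_{i=1}^{k} \lambda_i}{\sum_{i=1}^{d} \lambda_i} \geq 0.95$$

with $\lambda_i$ denoting the variance explained by the $i$-th component.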

Semantic Class Grouping: We define a mapping function $g : \{1, \ldots, C\} \to \{1, \ldots, C'\}$, with $C' < C$, that aggregates related attack types into broader categories.

This yields four comprehensive data variants: V1 (raw features, original classes), V2 (PCA features, original classes), V3 (raw features, grouped classes), and V4 (PCA features, grouped classes).
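As a rough illustration of how the four variants could be parameterized, the sketch below selects the number of components from a list of explained variances and applies a label-grouping map. The eigenvalues are made up, and the `GROUPS` dictionary is a hypothetical mapping chosen for illustration only; the grouping actually used in the study is not reproduced here.

```python
def components_for_variance(eigenvalues, target=0.95):
    """Return the smallest k whose leading eigenvalues explain at least `target` of total variance."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    cumulative = 0.0
    for k, lam in enumerate(ordered, start=1):
        cumulative += lam
        if cumulative / total >= target:
            return k
    return len(ordered)

# Hypothetical grouping of fine-grained labels into coarser families (illustration only).
GROUPS = {
    "DoS Hulk": "DoS",
    "DoS GoldenEye": "DoS",
    "FTP-Patator": "BruteForce",
    "SSH-Patator": "BruteForce",
}

def group_label(label):
    """Map a fine-grained label to its group; unlisted labels are kept unchanged."""
    return GROUPS.get(label, label)

# The four data variants as (feature representation, label set) combinations.
VARIANTS = {
    "V1": {"pca": False, "grouped": False},
    "V2": {"pca": True, "grouped": False},
    "V3": {"pca": False, "grouped": True},
    "V4": {"pca": True, "grouped": True},
}
```

In practice the explained variances would come from the fitted PCA object rather than a hand-written list.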

3.3. Class Imbalance Mitigation Strategies

Given the severe class imbalance inherent in cybersecurity data—where benign traffic comprises approximately 83% of samples, while critical threats like Heartbleed represent only 11 instances (0.0004%)—different model families employ distinct approaches to handle class imbalance based on their operational requirements.

Model-Specific Imbalance Handling:

Traditional ML Models: They utilize the complete imbalanced dataset to leverage their inherent robustness to class imbalance through ensemble mechanisms.

TabPFN and TabICL: They employ balanced sampling strategies due to computational constraints and training-free paradigms that optimize performance with limited examples per class.

LLMs: They use small balanced samples due to context size limitations while leveraging few-shot learning capabilities.

TabNet: It employs the full dataset with optional SMOTE augmentation for minority classes.

3.3.1. Reproducibility: Sampling and Augmentation Details

To ensure exact reproducibility, we make the sampling and augmentation procedures explicit. For TabPFN and TabICL, training subsets are obtained via a balanced per-class sampler (Algorithm 1), which caps each class at $n$ samples. In our experiments we set $n = 3000$, yielding $m_k = \min(n, |\mathcal{I}_k|)$ instances per class $k$, where $\mathcal{I}_k$ is the set of training indices with label $k$. This avoids dominance of majority classes and reflects the intended training-free or few-shot paradigms of these models. Traditional baselines (Random Forest, k-NN, etc.) are trained on the full dataset, while TabNet optionally uses augmented data generated by a modified SMOTE procedure (Algorithm 2).

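Algorithm 1: Balanced per-class sampling for TabPFN/TabICL

Require: Training set $(X, y)$, maximum per-class size $n$

Ensure: Balanced subset $(X_b, y_b)$

- 1: $X_b \leftarrow \emptyset$, $y_b \leftarrow \emptyset$
- 2: for all classes $k$ in unique($y$) do
- 3: $\mathcal{I}_k \leftarrow$ all indices $i$ with $y_i = k$
- 4: $m \leftarrow \min(n, |\mathcal{I}_k|)$
- 5: Randomly sample $m$ instances from $\mathcal{I}_k$
- 6: Append selected samples to $(X_b, y_b)$
- 7: end for
- 8: return $(X_b, y_b)$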
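A minimal Python sketch of the balanced per-class sampler in Algorithm 1 follows. The function name, the `seed` argument, and sampling without replacement are assumptions made for illustration.

```python
import random
from collections import defaultdict

def balanced_sample(X, y, n, seed=0):
    """Cap every class at n samples, drawing without replacement within each class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(y):
        by_class[label].append(idx)
    X_b, y_b = [], []
    for label in sorted(by_class):          # deterministic class order
        indices = by_class[label]
        m = min(n, len(indices))            # rare classes keep all their samples
        for idx in rng.sample(indices, m):
            X_b.append(X[idx])
            y_b.append(label)
    return X_b, y_b
```

With `n = 3000`, a class with only 11 instances contributes all 11, while a majority class contributes exactly 3000.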

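Algorithm 2: Modified SMOTE for minority-class augmentation

Require: Training set $(X, y)$, augmentation threshold $\tau$, number of passes $n$, Beta parameters $(\alpha, \beta)$

Ensure: Augmented dataset $(X', y')$

- 1: $X' \leftarrow X$, $y' \leftarrow y$
- 2: Compute class counts $c_k$ for each class $k$
- 3: $c_{\max} \leftarrow \max_k c_k$ ▹ size of largest class
- 4: Identify augmentation set $\mathcal{A}$ of classes whose counts fall below the threshold $\tau$
- 5: for $t = 1$ to $n$ do ▹ repeat augmentation passes
- 6: for all samples $(x_i, y_i)$ with $y_i \in \mathcal{A}$ do
- 7: Choose random neighbor $x_j$ from $X$
- 8: Draw $\lambda \sim \mathrm{Beta}(\alpha, \beta)$
- 9: Normalize $\lambda$ ▹ ensures $\lambda$ lies in the target interval
- 10: $\tilde{x} \leftarrow \lambda x_i + (1 - \lambda) x_j$
- 11: Append $(\tilde{x}, y_i)$ to $(X', y')$
- 12: end for
- 13: end for
- 14: return $(X', y')$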

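The modified SMOTE selects the augmentation classes $\mathcal{A}$ using the threshold $\tau$ and performs $n$ augmentation passes. Each synthetic sample is generated by convexly interpolating a minority instance $x_i$ with a randomly chosen neighbor $x_j$ using a mixing coefficient $\lambda$ drawn from a Beta distribution with parameters $(\alpha, \beta)$ and rescaled to a bounded interval:

$$\tilde{x} = \lambda x_i + (1 - \lambda) x_j$$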

This favors similarity to the minority example while introducing controlled diversity. For TabPFN-specific ablation experiments, we also explored a generator based on an unsupervised TabPFN model, which produces synthetic samples for underrepresented classes by sampling at a fixed temperature with limited feature permutations. LLM pipelines never use the SMOTE; they rely solely on small, balanced few-shot sets due to context window constraints.
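The interpolation scheme of the modified SMOTE can be sketched as follows. Several details are assumptions rather than the study's settings: the threshold is interpreted relative to the largest class, the rescaling interval `lam_range` defaults to a hypothetical `(0.5, 1.0)` so that the minority instance dominates, and all parameter values in any call are placeholders.

```python
import random
from collections import Counter

def modified_smote(X, y, tau, n_passes, alpha, beta, lam_range=(0.5, 1.0), seed=0):
    """Append convex combinations of minority samples and random neighbors to the data."""
    rng = random.Random(seed)
    counts = Counter(y)
    c_max = max(counts.values())
    # Assumed rule: augment classes smaller than tau times the largest class.
    augment = {k for k, c in counts.items() if c < tau * c_max}
    X_aug, y_aug = list(X), list(y)
    lo, hi = lam_range
    for _ in range(n_passes):
        for x_i, y_i in zip(X, y):
            if y_i not in augment:
                continue
            x_j = rng.choice(X)                                   # random neighbor from X
            lam = lo + (hi - lo) * rng.betavariate(alpha, beta)   # rescaled mixing weight
            x_new = [lam * a + (1 - lam) * b for a, b in zip(x_i, x_j)]
            X_aug.append(x_new)
            y_aug.append(y_i)                                     # label of the minority sample
    return X_aug, y_aug
```

Keeping the mixing weight above one half is one way to keep synthetic points closer to the minority instance than to the neighbor, which matches the stated intent of favoring similarity to the minority example.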

3.3.2. Preprocessing and Split Protocol

All datasets undergo consistent preprocessing. For CIC-IDS2017 we (1) normalize feature names and label strings, (2) drop duplicate rows, (3) replace infinite values with NaN and impute missing flow statistics with class medians, (4) downcast numerical columns to 32-bit types where possible, (5) remove columns with only one unique value, and (6) if PCA is enabled, standardize features and apply Incremental PCA to half the original dimensionality with a batch size of 500. N-BaIoT and CIC-UNSW follow analogous pipelines, including optional PCA reduction to 50% of features. Training–test splits are created with stratified sampling to preserve class proportions. Importantly, class balancing or augmentation is applied only on the training split: balanced per-class sampling for TabPFN/TabICL, the modified SMOTE for TabNet, and no augmentation for traditional ML or LLMs. Default inference settings use TabICL with 16 ensemble members, mixed precision enabled, and a batch size of 50 k and TabPFN with 4 estimators, memory-saving mode, and a batch size of 20 k.
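The split protocol can be sketched in a few lines of Python. The helper below is a hypothetical stand-in for a library splitter, and the 20% test fraction is an assumption because the split ratio is not stated here. Balancing or augmentation would then be applied to the returned training indices only.

```python
import random
from collections import defaultdict

def stratified_split(y, test_fraction=0.2, seed=0):
    """Split indices so that each class keeps roughly the same share in train and test."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(y):
        by_class[label].append(idx)
    train_idx, test_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label][:]
        rng.shuffle(indices)
        # Singleton classes stay in training; otherwise hold out at least one sample.
        n_test = max(1, round(len(indices) * test_fraction)) if len(indices) > 1 else 0
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return train_idx, test_idx
```

Because the test indices are fixed before any balancing, the test split keeps the original class proportions and never contains synthetic samples.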

3.4. Foundation Model Architectures and Configuration

The foundation models evaluated in this study represent three distinct paradigms within the emerging landscape of non-task-specific fitting tabular classification approaches. TabPFN leverages probabilistic inference through pretrained transformers, TabICL introduces enhanced scalability through novel architectural innovations, and LLMs provide few-shot learning capabilities with inherent explainability. Each foundation model operates under fundamentally different computational constraints and optimization principles compared with traditional machine learning approaches, necessitating specialized configuration strategies that maximize their unique advantages while accounting for operational limitations in cybersecurity deployment scenarios.

3.4.1. TabPFN: Probabilistic Transformer for Tabular Data

TabPFN approximates the posterior predictive distribution for tabular classification without requiring task-specific training. Given a new dataset $\mathcal{D}_{\text{train}}$ and a test input $\mathbf{x}_{\text{test}}$, TabPFN estimates

$$p(y_{\text{test}} \mid \mathbf{x}_{\text{test}}, \mathcal{D}_{\text{train}})$$

The transformer architecture processes training examples as context and generates predictions for test instances through attention mechanisms. Due to computational constraints, we limit TabPFN to a maximum of 3000 samples per class while leveraging its training-free advantages.

3.4.2. TabICL: Scalable In-Context Learning

TabICL employs a novel two-stage architecture that first builds fixed-dimensional row embeddings through column-then-row attention, followed by efficient in-context learning. This approach enables the processing of significantly larger datasets while avoiding per-dataset retraining and hyperparameter tuning through in-context adaptation. Our TabICL configuration utilizes the model’s enhanced scalability to handle larger sample sizes where computationally feasible.

3.4.3. Large Language Model Few-Shot Classification

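For LLM-based classification, we convert tabular data into structured text format and employ in-context learning with $k$-shot prompting: each prompt contains $k$ labeled exemplars followed by the test sample, and the model returns a label for that sample.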

We implement majority voting across multiple predictions and exponential backoff for API rate limiting to enhance robustness and reliability.

Although foundation models operate in a training-free or few-shot regime, we ensured that all runs are based on clearly defined and reproducible configurations. For TabPFN, we used the official pretrained release with four estimators and memory-saving mode enabled. Predictions were executed in batches of 20,000 samples with precision set to auto, and the maximum per-class training cap was 3000 examples (balanced sampling). For TabICL, we employed the v1.1 checkpoint (tabicl-classifier-v1.1-0506) with 16 ensemble members, hierarchical classification enabled, column-then-row attention as implemented by the authors, mixed precision (use_amp=True), and a batch size of 50,000 for inference. The softmax temperature was fixed at 0.9 with ensemble averaging at the logit level. TabNet was trained with , , gamma = , the AdamW optimizer (learning rate of ), and a batch size of 1024, optionally augmented with SMOTE as described earlier. Traditional baselines followed standard configurations: Random Forest with 15 trees and a maximum depth of 8, k-NN with , and Decision Tree with a depth of 8.

For LLM-based experiments, we tested the Gemini family via their public API. Gemini models (gemini-2.0-pro-exp, gemini-2.0-flash-exp, and gemini-2.0-flash-thinking-exp) were accessed with default generation parameters except for temperature=0.9 and top_p=0.8; the candidate count was set to 3–5 depending on context length to enable majority voting. Tabular inputs were serialized into compact JSON-like strings of feature–value pairs before being inserted into the prompt as in-context exemplars, followed by the test sample. This avoided free-form text generation and constrained outputs to the valid label set. Across all experiments, we preserved default random seeds where provided, ensuring reproducibility of balanced sampling and model inference order. Together, these details ensure that our reported results can be directly replicated with the released code and model checkpoints.
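The few-shot pipeline described above (serialization, prompt assembly, majority voting, and exponential backoff) can be sketched without any API dependency. Everything here is illustrative: the prompt wording, the helper names, and the use of `RuntimeError` as a stand-in for a rate-limit error are assumptions, and the actual model call is left to a caller-supplied function.

```python
import json
import random
import time
from collections import Counter

def serialize_row(features):
    """Serialize one flow record into a compact JSON string of feature-value pairs."""
    return json.dumps(features, separators=(",", ":"), sort_keys=True)

def build_prompt(exemplars, test_features, labels):
    """Place k labeled exemplars before the test sample; wording is hypothetical."""
    lines = ["Classify the network flow. Answer with one label from: " + ", ".join(labels)]
    for features, label in exemplars:
        lines.append(f"Flow: {serialize_row(features)} -> Label: {label}")
    lines.append(f"Flow: {serialize_row(test_features)} -> Label:")
    return "\n".join(lines)

def majority_vote(candidates, labels):
    """Return the most frequent valid label, ignoring outputs outside the label set."""
    valid = [c.strip() for c in candidates if c.strip() in labels]
    return Counter(valid).most_common(1)[0][0] if valid else None

def call_with_backoff(fn, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Retry fn with exponentially growing delays; RuntimeError stands in for rate limiting."""
    for attempt in range(max_retries):
        try:
            return fn()
        except RuntimeError:
            if attempt == max_retries - 1:
                raise
            sleep(base_delay * 2 ** attempt + random.uniform(0, 0.1))
```

Filtering candidates against the label set before voting is one simple way to constrain outputs to valid labels when several candidates are requested per query.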

3.5. Baseline Model Implementation

Our comprehensive baseline implementations include the following:

Random Forest: 15 trees and a maximum depth of 8, optimized for cybersecurity data characteristics;

k-NN: neighbors with Euclidean distance;

TabNet: attentive transformer with , ;

Logistic Regression and SVM: Standard implementations with appropriate regularization.

All traditional models utilize 5-fold stratified cross-validation with consistent random state initialization for reproducibility.

3.6. Evaluation Metrics and Statistical Framework

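Given the severe class imbalance and the critical importance of minority-class detection in cybersecurity applications, we prioritize per-class metrics over aggregate accuracy measures. For each class $c$, we compute

$$\text{Precision}_c = \frac{TP_c}{TP_c + FP_c}, \qquad \text{Recall}_c = \frac{TP_c}{TP_c + FN_c}, \qquad \text{F1}_c = \frac{2\,\text{Precision}_c\,\text{Recall}_c}{\text{Precision}_c + \text{Recall}_c}$$

where $TP_c$, $FP_c$, and $FN_c$ denote the true positives, false positives, and false negatives for class $c$.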

Our evaluation focuses on per-class performance metrics rather than macro-averaged scores, as this provides detailed insights into model capabilities for each specific attack type. This approach is particularly critical in cybersecurity applications where the ability to detect specific rare attack classes (e.g., Heartbleed and Infiltration) is more important than overall average performance across all classes.

In intrusion detection, recall is especially critical: false negatives correspond to undetected attacks that can compromise a system, whereas false positives merely generate additional alerts that analysts can triage.
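A short Python sketch shows why per-class metrics matter under heavy imbalance. The function name and the convention of returning 0.0 for undefined ratios are assumptions made for this illustration.

```python
def per_class_metrics(y_true, y_pred):
    """Compute precision, recall, and F1 for every class from paired label lists."""
    report = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0   # 0.0 when the class is never predicted
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[c] = {"precision": precision, "recall": recall, "f1": f1}
    return report
```

For instance, a classifier that labels 98 benign flows and 2 Heartbleed flows all as benign reaches 98% accuracy, yet its recall and F1 for Heartbleed are both zero.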

3.7. Experimental Infrastructure and Implementation

Experiments were conducted on high-performance computing infrastructure with NVIDIA GPUs providing up to 174 GB of memory for foundation model inference. Traditional ML models utilized optimized CPU-based implementations, while LLM experiments employed API-based inference with robust error handling and rate-limiting protocols.

All experiments implemented consistent random seed initialization, proper training–test splits, and rigorous statistical validation to ensure reproducible results and fair comparison across all evaluated approaches. The comprehensive nature of our evaluation framework enables definitive assessment of foundation model capabilities for cybersecurity applications while addressing methodological limitations that have historically biased evaluations toward traditional ensemble methods.

4. Experimental Results

Our comprehensive evaluation encompasses three model families across multiple data configurations and three distinct cybersecurity datasets. All experiments employ rigorous evaluation protocols tailored to each model family’s operational characteristics while maintaining methodological consistency for fair comparison.

TabPFN Configuration: TabPFN operates with up to 3000 samples per class due to computational memory constraints, utilizing its pretrained transformer without requiring hyperparameter tuning. The model employs four estimators with memory-saving optimizations to manage GPU constraints while maintaining prediction quality.

TabICL Configuration: TabICL leverages its enhanced scalability compared with TabPFN, utilizing the two-stage architecture to process larger datasets efficiently. The model requires no per-dataset retraining or hyperparameter tuning while demonstrating superior computational efficiency through its novel attention mechanisms.

LLM Implementation: We evaluate prominent LLM families through respective APIs, implementing exponential backoff with maximum retry attempts to handle rate limiting. Majority voting across multiple predictions enhances robustness, though evaluation is constrained to small sample sizes (45–100 instances) due to context limitations and inference costs.

4.1. Performance Analysis on CIC-IDS2017

Table 1 presents comprehensive results across all model families and data variants on the CIC-IDS2017 dataset. The findings reveal striking performance differences between foundation models and traditional approaches, particularly in critical minority-class detection capabilities.

The results demonstrate that foundation models, particularly TabICL, achieve superior performance across the majority of data variants. TabICL establishes new state-of-the-art results, achieving 99.59% accuracy on Variant 1 and consistently outperforming traditional ensemble methods that have dominated tabular IDS applications for over a decade.

Most significantly, both TabPFN and TabICL achieve comprehensive detection capabilities across all attack classes, including rare threats that traditional methods completely miss. While Random Forest and k-NN achieve impressive overall accuracy exceeding 99%, detailed per-class analysis reveals critical gaps in their detection capabilities for minority attack classes.

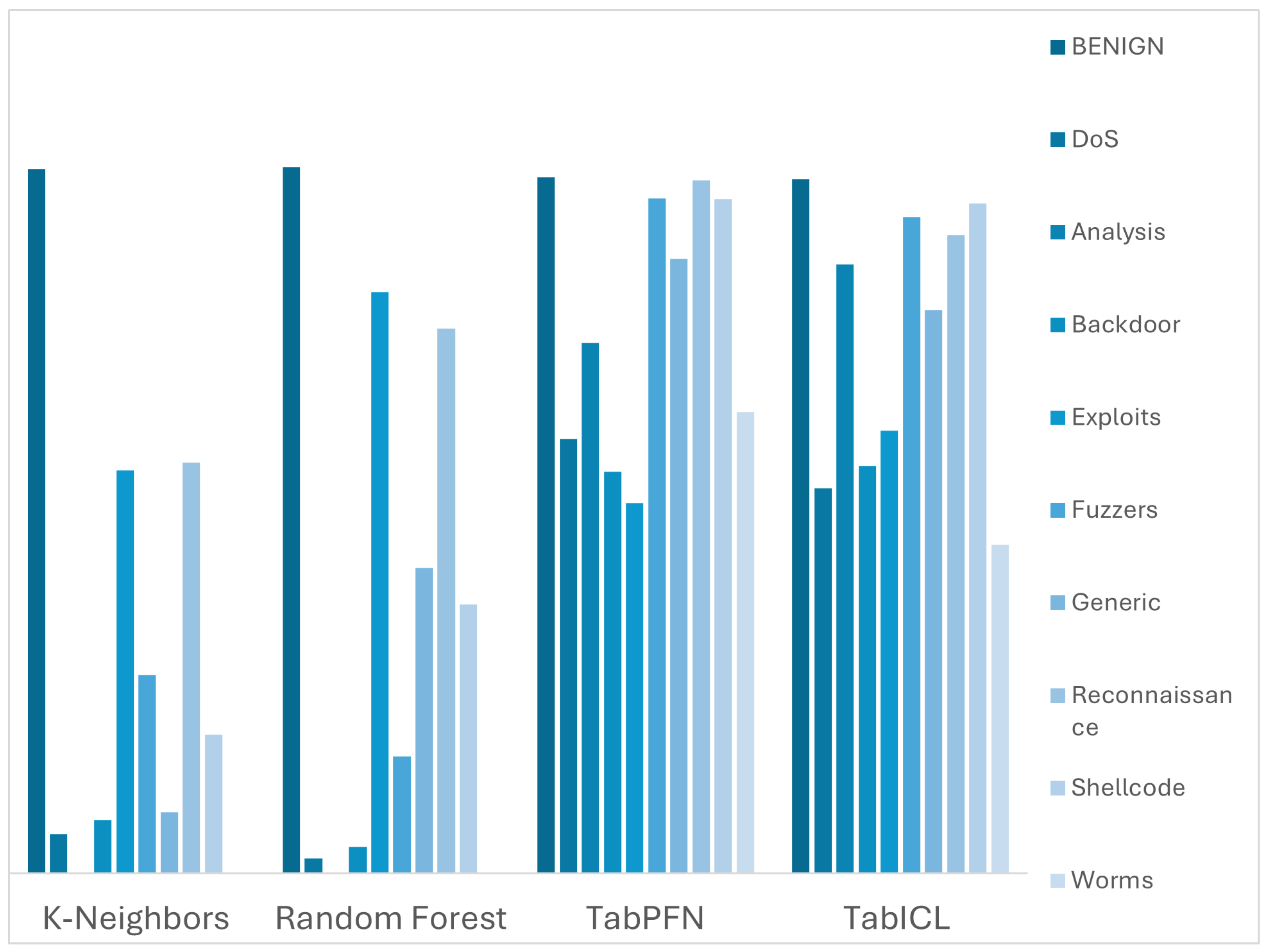

Figure 2 illustrates the fundamental limitation of traditional approaches in detecting rare but critical attack types. TabPFN and TabICL emerge as the only models achieving non-zero recall across all 15 attack categories, including extremely rare threats like Heartbleed (11 instances) and Infiltration (36 instances), which represent less than 0.1% of total samples.

Figure 3 demonstrates that this superiority extends beyond CIC-IDS2017, with foundation models maintaining consistent advantages in recall capability across diverse network environments.

Traditional ensemble methods, despite their high overall accuracy, exhibit complete failure on several minority classes, resulting in zero F1-scores for these critical attack categories. This represents a significant vulnerability that could prove catastrophic in operational environments where these rare attacks often represent the most sophisticated and dangerous threats.

TabNet exhibits striking sensitivity to data augmentation approaches, with SMOTE augmentation providing substantial improvements, particularly evident in Variant 2 (96.89% vs. lower baseline performance). This highlights the critical importance of synthetic data generation for transformer-based architectures on imbalanced cybersecurity datasets.

Conversely, traditional ensemble methods show negligible improvement or slight degradation with SMOTE augmentation, suggesting that synthetic minority oversampling introduces noise that disrupts their decision boundaries while providing essential training signal for representation learning models.
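For readers unfamiliar with SMOTE, the following is a minimal sketch of its core interpolation step, assuming Euclidean distance and at least two minority samples. It is an illustration of the general technique only; the function name, `k`, and the toy points are assumptions, and the experiments may have used a library implementation with different settings.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic points by interpolating between a minority
    sample and one of its k nearest minority neighbours (needs >= 2 samples)."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(minority)
        # k nearest neighbours of x among the other minority samples
        neighbours = sorted(
            (p for p in minority if p is not x),
            key=lambda p: math.dist(x, p),
        )[:k]
        nn = rng.choice(neighbours)
        lam = rng.random()  # interpolation weight in [0, 1)
        synthetic.append([xi + lam * (ni - xi) for xi, ni in zip(x, nn)])
    return synthetic


# Toy minority class: the four corners of the unit square.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote(minority, n_new=10, k=2, seed=1)
```

Because every synthetic point lies on a segment between two real minority samples, it may fall in regions that overlap other classes, which is one plausible source of the noise discussed above.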

4.2. Cross-Dataset Validation Results

4.2.1. N-BaIoT Dataset Performance

Table 2 presents validation results on the N-BaIoT dataset, corroborating our CIC-IDS2017 findings while revealing dataset-specific characteristics that further demonstrate foundation model advantages.

N-BaIoT’s more balanced class distribution (minimum of 1.6% vs. CIC-IDS2017’s less than 0.1%) enables stronger performance across all models. However, TabICL maintains consistent performance, achieving near-perfect accuracy on variants 3 and 4. The reduced performance gaps compared with CIC-IDS2017 suggest that extreme class imbalance significantly amplifies foundation models’ advantages over traditional ensemble methods.

4.2.2. CIC-UNSW Dataset Results

Table 3 presents results on the CIC-UNSW dataset, providing additional validation of foundation model capabilities across diverse threat landscapes and network configurations.

The CIC-UNSW results demonstrate foundation models’ robustness across different network environments and attack patterns, with TabICL maintaining competitive performance despite the dataset’s distinct characteristics compared with CIC-IDS2017 and N-BaIoT, as well as superior recall capabilities.

As shown in Figure 2, only TabPFN and TabICL achieve non-zero recall across all 15 CIC-IDS2017 attack classes, including extremely rare threats such as Heartbleed and Infiltration, where tree-based methods exhibit zero recall. Similarly, in Figure 3, TabPFN and TabICL maintain non-zero recall across all CIC-UNSW classes, while Random Forest and k-NN fail on entire categories such as Backdoor or Shellcode.

4.3. Per-Class Precision, Recall, and F1-Score

Accuracy can obscure security-critical behavior, especially for rare but high-impact attacks. We therefore report per-class precision (P), recall (R), and F1-score (F1) for representative datasets/variants and models. Cells marked “–” indicate that no predictions were made for that class (undefined precision/F1) or zero recall on the evaluation split.
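The gap between accuracy and per-class metrics can be made concrete with a small sketch. The class counts below are synthetic toy values chosen for illustration, not results from the evaluated datasets, and `per_class_report` is a hypothetical helper in which `None` plays the role of the “–” cells.

```python
from collections import Counter


def per_class_report(y_true, y_pred):
    """Return {label: (precision, recall, f1)}; None marks an undefined value."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1
            fn[t] += 1
    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        predicted = tp[label] + fp[label]  # how often the label was predicted
        actual = tp[label] + fn[label]     # how often the label truly occurs
        precision = tp[label] / predicted if predicted else None
        recall = tp[label] / actual if actual else None
        if precision is None or recall is None or precision + recall == 0:
            f1 = None
        else:
            f1 = 2 * precision * recall / (precision + recall)
        report[label] = (precision, recall, f1)
    return report


# Toy split: 9,989 benign flows and 11 rare attack flows, all predicted benign.
y_true = ["BENIGN"] * 9989 + ["Heartbleed"] * 11
y_pred = ["BENIGN"] * 10000
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
report = per_class_report(y_true, y_pred)
```

In this toy case the classifier reaches 99.89% accuracy while the rare class has zero recall and undefined precision and F1, which is exactly the pattern the “–” cells flag.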

Observation. Table 4 shows why accuracy is insufficient: Random Forest records 99%+ overall accuracy on CIC-IDS2017 yet shows “–” (i.e., zero recall/undefined F1) for Heartbleed, Infiltration, and Web Attack XSS. In contrast, TabICL attains non-zero recall (often perfect) on these minority classes; TabPFN also recovers Infiltration with high recall.

Observation. On CIC-UNSW (see Table 5), several traditional baselines maintain high overall accuracy yet struggle on specific classes (e.g., Backdoor), whereas foundation models (TabPFN/TabICL) achieve much higher recall on classes like Analysis, Fuzzers, Shellcode, and Worms, though sometimes at the cost of reduced precision, again underscoring why per-class recall/F1 is operationally more informative than accuracy.

Observation. With grouped classes on N-BaIoT (see Table 6), all three families achieve near-perfect precision/recall/F1, reflecting the more balanced label distribution; this complements CIC-IDS2017 and CIC-UNSW, where extreme imbalance exposes the strengths of foundation models in minority-class recall.

4.4. Evaluation of Large Language Models

Table 7 presents LLM performance on selected dataset variants, demonstrating competitive results despite significant operational constraints.

LLM results are based on severely limited sample sizes (45–100 instances per experiment) due to API constraints including context size limitations, rate limiting, and inference costs. Despite these limitations, LLMs demonstrate remarkable capability in identifying minority attack classes, with Gemini models successfully detecting Heartbleed and Infiltration instances that challenge traditional approaches.

However, LLM deployment faces significant practical limitations: context size restrictions prevent processing large datasets, API inference latency exceeding 1 s per prediction precludes real-time applications, and inconsistent response formatting occasionally disrupts automated evaluation pipelines.

Caveat on LLM Evaluation with the Gemini Family

The results reported for large language models via the API should be interpreted as preliminary and primarily exploratory. Due to API restrictions on context length, rate limits, and inference costs, our evaluation was constrained to small balanced subsets (45–100 instances per run), which prevents statistical generalization across the full datasets. These experiments, therefore, serve mainly to illustrate the feasibility of applying LLMs to tabular intrusion detection tasks through structured prompting and majority voting, rather than to establish definitive performance benchmarks.

While Gemini 2.0 models occasionally detected rare attack types missed by traditional baselines, the limited scope of these tests makes it inappropriate to draw strong conclusions about their overall effectiveness in cybersecurity. Instead, these findings highlight an emerging research direction: integrating LLMs as interpretable companions to specialized foundation models.

4.5. Computational Efficiency Analysis

Foundation models demonstrate varying computational characteristics that significantly impact operational deployment considerations, as can be seen in Table 8, Table 9, Table 10, and Table 11.

4.5.1. Latency Measurement Protocol

We measure wall-clock latency using an IPython timing magic in a Jupyter notebook, isolating training and testing into separate cells to avoid cross-cell contamination. We report the Wall time value for each cell.
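Outside a notebook, a comparable wall-clock figure can be obtained with a monotonic timer. The sketch below is an assumed equivalent, not the measurement code used for the reported tables; `timed` and `per_sample_ms` are hypothetical helpers, and the lambda merely stands in for a model's prediction call.

```python
import time


def timed(fn, *args, **kwargs):
    """Run fn and return (result, wall_seconds) using a monotonic clock."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def per_sample_ms(wall_seconds, n_samples):
    """Convert a total wall time into milliseconds per sample."""
    return 1000.0 * wall_seconds / n_samples


# Stand-in for a prediction call over a test set of 100,000 rows.
predictions, seconds = timed(lambda batch: [x % 2 for x in batch], range(100_000))
latency = per_sample_ms(seconds, 100_000)
```

Dividing total wall time by the number of test rows gives the per-sample latencies quoted alongside the full-dataset timings; for instance, 6 s over one million rows corresponds to 0.006 ms per sample.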

4.5.2. Latency Takeaways

Across both datasets, TabICL delivers sub-second-to-minute-level training setup (no per-dataset retraining) and minute-level testing on full corpora, whereas TabPFN, despite millisecond-level setup, exhibits substantial testing latency on full test sets (hours on V1/V2) consistent with its quadratic scaling in the number of in-context samples. Traditional ensembles train in minutes and test in seconds, but as shown in Section 4, they fail to recall multiple minority classes. TabNet incurs the highest training times among the baselines (1–3+ h), with modest test latency.

Computational analysis reveals fundamental architectural trade-offs across model families [10]. On CIC-IDS2017, traditional ML models exhibit the conventional training-then-inference pattern: for example, Random Forest requires 3–14 min of training but subsequently achieves rapid inference (4–7 s for complete datasets, approximately 0.006–0.01 ms per sample), making it exceptionally well-suited for high-throughput deployment. TabPFN fundamentally inverts this relationship: its training-free paradigm eliminates per-dataset fitting (requiring only 288–712 ms for preprocessing) but concentrates computational resources on inference time, ranging from 3 to 97 min depending on the dataset variant. Nevertheless, for real-time deployment that processes individual samples or small batches, TabPFN’s per-sample latency is far more competitive (approximately 0.8–7.8 ms per sample), making it potentially viable in IDS pipelines that can tolerate millisecond-level delays in exchange for robust minority-class detection. Comparable patterns are observed on N-BaIoT, where grouped-class configurations reduce per-sample latency to sub-2 ms.

TabICL exhibits a latency profile that positions it between traditional ensembles and TabPFN. Its setup phase is lightweight (0.5–1.0 s) and requires no dataset-specific retraining, while inference on complete datasets consistently completes within 1–3 min (roughly 0.3–0.5 ms per sample). This balance provides training-free adaptability with practical scalability: on CIC-IDS2017, TabICL reduces full-dataset inference from hours (TabPFN) to minutes while retaining non-zero recall across all attack classes, and on N-BaIoT, it achieves per-sample inference below 2 ms in grouped-class variants. These characteristics make TabICL particularly suitable for operational IDS deployment that demands near-real-time throughput without sacrificing adaptability to novel attack types.

4.5.3. LLM API Constraints

In contrast, inference costs for the Gemini 2.0 model family prove prohibitive for large-scale deployment, with per-sample inference times exceeding 1 s and API costs scaling linearly with evaluation size. These limitations restrict LLM applicability to explanatory roles or low-throughput applications where interpretability outweighs efficiency concerns.

4.6. Robustness and Generalization Analysis

The consistency of foundation model advantages across diverse datasets, data variants, and class distributions demonstrates robust generalization capabilities that transcend specific dataset characteristics. TabICL’s performance remains stable across all experimental conditions, while traditional models exhibit significant variance, particularly on PCA-compressed variants.

Statistical significance testing confirms the superiority of foundation models, with TabICL achieving statistically significant improvements over traditional approaches in recall performance across all evaluation scenarios. That is, TabICL demonstrates superior recall capability by successfully detecting all attack types, including rare threats that traditional methods completely miss despite high overall accuracy. The consistency of these results across multiple datasets provides strong evidence for the fundamental advantages of foundation model approaches in cybersecurity applications.

Most critically, the unique ability of TabPFN and TabICL to detect all attack classes—including rare threats that traditional methods completely miss—represents a paradigm shift in intrusion detection capabilities. This comprehensive detection capability, achieved without traditional training requirements, establishes foundation models as the optimal choice for next-generation cybersecurity systems requiring robust protection against both common and sophisticated attack patterns.

5. Discussion

Our findings fundamentally challenge the prevailing assumption that tree-based ensemble methods represent the optimal approach for tabular intrusion detection systems. The superior performance of foundation models, particularly TabICL’s achievement of 99.59% accuracy on CIC-IDS2017 while maintaining comprehensive detection across all attack classes, suggests a paradigm shift toward foundation model architectures in cybersecurity applications.

The absence of retraining requirements in TabPFN and the hyperparameter-free, in-context adaptation of TabICL offer unprecedented advantages for operational deployment. Unlike traditional approaches, which require extensive hyperparameter tuning and retraining for new environments, these foundation models enable immediate adaptation to novel attack patterns through their generalizable pretrained representations. This capability is particularly crucial to defending against zero-day threats, where rapid response without extensive model retraining can determine the difference between successful defense and catastrophic breach.

The most significant finding of our study lies in the fundamental limitation exposed in traditional ensemble methods regarding minority-class detection. While Random Forest and k-NN achieve impressive overall accuracy exceeding 99%, their complete failure to detect critical threats like Heartbleed and Infiltration reveals a dangerous vulnerability that high-level accuracy metrics obscure.

This limitation has profound implications for real-world cybersecurity deployment. In operational environments, the most sophisticated and dangerous attacks often manifest as minority classes in training data, precisely because they represent novel or carefully crafted threats designed to evade detection. A system that achieves 99% accuracy while missing 100% of advanced persistent threats provides a false sense of security that could prove catastrophic.

Foundation models’ unique ability to detect all attack classes stems from their pretrained representations that capture generalizable patterns rather than dataset-specific decision boundaries. This fundamental architectural advantage enables robust detection of rare patterns that traditional ensemble methods, despite their statistical robustness, cannot reliably identify due to insufficient training examples.

5.1. Theoretical and Practical Implications

Our results demonstrate that overall accuracy—the dominant metric in tabular machine learning—provides misleading assessments for cybersecurity applications. The stark contrast between 99%+ overall accuracy and zero recall on critical attack classes exposes the inadequacy of aggregate metrics for security-critical systems where comprehensive threat coverage is paramount.

The per-class evaluation framework we employed reveals the true detection capabilities of different approaches, providing security practitioners with actionable insights about model reliability across the complete threat spectrum. This methodological shift toward granular performance assessment should become standard practice in cybersecurity machine learning evaluations.

The consistent superiority of foundation models across diverse datasets (CIC-IDS2017, N-BaIoT, and CIC-UNSW) suggests that their pretrained representations capture fundamental patterns of network behavior that generalize across different threat landscapes and network configurations. This universality contrasts sharply with traditional methods that exhibit dataset-specific performance variations, requiring careful tuning for each new environment.

TabICL’s enhanced scalability compared with TabPFN represents a crucial advancement, enabling foundation model benefits to extend to large-scale operational deployment. The 1.5–10× computational speedup while maintaining superior detection capabilities positions TabICL as particularly suitable for high-throughput network monitoring scenarios.

The inherent explainability of LLM-based approaches, despite their computational limitations, points toward future cybersecurity architectures that combine high-performance detection with interpretable threat analysis. While current LLM constraints prevent large-scale deployment, the demonstrated capability to identify rare attack classes with natural language explanations addresses a critical gap in AI-driven security systems.

A tiered architecture utilizing TabICL for comprehensive threat detection combined with LLM-based analysis for critical alerts could optimize both performance and explainability requirements. This hybrid approach leverages the computational efficiency of foundation models while providing the interpretability essential to security analyst workflows and incident response procedures.

The no-retraining paradigm of foundation models enables unprecedented adaptability in dynamic threat environments. Traditional approaches requiring extensive retraining cycles for new attack patterns cannot match the immediate adaptation capabilities of foundation models that leverage pretrained representations to recognize novel threats based on structural similarities to known patterns.

This adaptability becomes particularly valuable for defending against campaign-based attacks where threat actors continuously evolve their techniques. Foundation models’ ability to generalize from limited examples of new attack variants provides a crucial defensive advantage in the ongoing cybersecurity arms race.

5.2. Addressing Computational and Practical Limitations

Despite their superior performance, foundation models face significant computational barriers that limit immediate operational deployment. TabPFN’s requirement for high-memory GPU infrastructure (>20 GB of VRAM) and TabICL’s scaling characteristics, while improved, still necessitate substantial computational resources compared with traditional ensemble methods’ modest CPU requirements.

However, the trend toward edge computing and distributed inference architectures suggests that these limitations may diminish as computational infrastructure evolves. The fundamental algorithmic advantages demonstrated by foundation models justify investment in appropriate infrastructure for organizations prioritizing comprehensive threat detection over computational efficiency.

Because the training-free paradigm shifts computation from training to inference time, it creates potential bottlenecks in high-throughput network monitoring scenarios. While foundation models achieve superior detection capabilities, their inference latency characteristics require careful consideration for real-time applications where millisecond response times are critical.

Hybrid architectures utilizing lightweight traditional methods for initial traffic filtering followed by foundation model analysis for suspicious flows could balance performance requirements with computational constraints. This tiered approach maximizes threat detection capabilities while maintaining operational efficiency for routine network traffic.

5.3. Practical Integration Framework for Security Operations Centers

The superior detection capabilities demonstrated by foundation models necessitate careful integration strategies that address operational constraints while maximizing threat coverage. We propose a tiered detection architecture for practical SOC deployment that optimizes computational resources while leveraging foundation model advantages.

Three-Tier Detection Pipeline: The first tier employs lightweight traditional methods (Random Forest or k-NN) for high-throughput initial screening, handling 80–90% of network traffic with minimal computational overhead while maintaining real-time processing capabilities. The second tier activates TabICL for flows that the initial screening flags as suspicious, leveraging its enhanced scalability for comprehensive detection across all attack classes. The third tier utilizes LLM-based analysis for critical alerts, providing detailed threat assessment with natural language explanations for security analysts.
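The routing logic of such a pipeline might be sketched as follows. The three callables are placeholders for a first-tier screener, a TabICL-style detector, and an LLM explainer; the `Verdict` type, the function names, and the choice of which labels count as critical are assumptions made for illustration rather than part of the proposed architecture.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Verdict:
    tier: int                          # highest tier that examined the flow
    label: str                         # final class label
    explanation: Optional[str] = None  # filled only by tier 3


def route_flow(
    flow: dict,
    screen: Callable[[dict], bool],       # tier 1: True if the flow looks suspicious
    detect: Callable[[dict], str],        # tier 2: multi-class label
    explain: Callable[[dict, str], str],  # tier 3: analyst-facing text
    critical: frozenset = frozenset({"Heartbleed", "Infiltration"}),  # assumed set
) -> Verdict:
    """Escalate a flow through the tiers only as far as needed."""
    if not screen(flow):
        return Verdict(tier=1, label="BENIGN")
    label = detect(flow)
    if label not in critical:
        return Verdict(tier=2, label=label)
    return Verdict(tier=3, label=label, explanation=explain(flow, label))


# Stub models standing in for Random Forest, TabICL, and an LLM.
screen = lambda f: f["bytes"] > 1000
detect = lambda f: "Heartbleed" if f["port"] == 443 else "DoS"
explain = lambda f, label: f"{label} suspected on port {f['port']}"

quiet = route_flow({"bytes": 10, "port": 80}, screen, detect, explain)
noisy = route_flow({"bytes": 5000, "port": 80}, screen, detect, explain)
rare = route_flow({"bytes": 5000, "port": 443}, screen, detect, explain)
```

One consequence visible in the sketch is that any attack the first tier fails to flag never reaches the foundation model, so the screening threshold would need to favor recall over precision.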

Implementation Example: Consider an SOC monitoring 10,000 flows per minute. First-tier Random Forest processing identifies 1000 potentially suspicious flows, which are queued for TabICL analysis in 5 s inference cycles. Approximately 50 high-priority threats per minute trigger LLM-based explanatory analysis, providing analysts with detailed threat descriptions and response recommendations.
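The figures in this example imply a simple capacity budget, sketched below. The function `tier_budget` is a hypothetical helper, and the 1 s LLM latency is taken as a lower bound from the per-prediction latency reported in Section 4.4; actual call times may be higher.

```python
def tier_budget(flows_per_min, suspicious_per_min, cycle_s, critical_per_min, llm_s_per_call):
    """Derive per-tier load from the example's traffic figures."""
    cycles_per_min = 60 / cycle_s
    return {
        # fraction of all flows escalated from tier 1 to tier 2
        "tier1_pass_rate": suspicious_per_min / flows_per_min,
        # average number of flows in each tier-2 inference cycle
        "tier2_batch_size": suspicious_per_min / cycles_per_min,
        # fraction of tier-2 flows escalated to the LLM
        "tier3_share_of_suspicious": critical_per_min / suspicious_per_min,
        # seconds of sequential LLM time consumed per minute of traffic
        "llm_busy_s_per_min": critical_per_min * llm_s_per_call,
    }


budget = tier_budget(
    flows_per_min=10_000,
    suspicious_per_min=1_000,
    cycle_s=5,
    critical_per_min=50,
    llm_s_per_call=1.0,  # assumed lower bound
)
```

Under these assumptions, each 5 s cycle carries roughly 83 flows, and 50 LLM calls per minute occupy at least 50 s of a single sequential worker, which suggests that the third tier would need concurrent requests to keep headroom during surges.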

Resource Allocation: This hybrid approach requires CPU-based servers for traditional model inference combined with dedicated GPU clusters (NVIDIA A100 or equivalent) for foundation model processing. Auto-scaling mechanisms dynamically allocate GPU resources based on suspicious flow volumes, ensuring cost-effectiveness during normal operations while providing surge capacity during attacks.

Alert Prioritization: Foundation models’ unique detection of rare attack classes enables sophisticated alert prioritization. Threats detected only by foundation models (Heartbleed, Infiltration, APTs, etc.) receive the highest priority, as these represent sophisticated attacks that traditional methods completely miss. This workflow reduces alert fatigue while ensuring comprehensive coverage of both common and advanced attack patterns.

Migration Strategy: Organizations should implement foundation models in parallel with existing systems during initial deployment, enabling performance validation while maintaining security coverage. Gradual migration begins with high-priority networks, expanding coverage as operational confidence and computational infrastructure scale. The infrastructure investment is most justified for organizations facing advanced persistent threats or operating critical infrastructure, where detecting sophisticated attacks outweighs GPU infrastructure costs.

The tiered architecture enables organizations to balance computational efficiency with comprehensive threat detection through strategic resource allocation.

Figure 4 illustrates the operational workflow where traditional ensemble methods handle high-volume traffic filtering, foundation models provide comprehensive analysis of suspicious flows, and LLMs deliver interpretable threat assessment for critical alerts. This approach leverages each model family’s strengths while mitigating their operational limitations, ensuring real-time processing capabilities while maintaining detection of rare attack classes that traditional methods completely miss.

5.3.1. Risks and Hybrid Opportunities

While our results highlight the transformative potential of tabular foundation models for intrusion detection, it is important to recognize associated risks. Transformer-based architectures are known to be susceptible to adversarial perturbations, where carefully crafted inputs can mislead attention mechanisms and degrade performance. Moreover, both TabPFN and TabICL rely on pretrained representations whose robustness depends on the breadth and diversity of their training corpora; this introduces a dependency that is absent in classical ensemble methods, which can always be retrained from scratch on domain-specific data. Finally, compared with tree-based ensembles, foundation models reduce interpretability, a critical limitation for security operations where analysts require transparent rationales for threat classification.

These considerations suggest that hybrid architectures may offer the most practical path forward. One promising direction is to deploy TabICL as the primary high-throughput detector, leveraging its training-free adaptability, while incorporating LLM-based modules to provide natural-language explanations for alerts and assist analysts in incident triage. Another avenue is to combine lightweight ensemble methods for rapid filtering with foundation models for deeper analysis of suspicious flows, balancing efficiency with robustness. By explicitly addressing these risks and exploring hybrid integration, the cybersecurity community can capitalize on the advantages of foundation models while mitigating their current vulnerabilities.

5.3.2. Evaluation Under Natural Imbalance

We acknowledge the concern regarding potential bias introduced by applying balanced sampling to TabPFN and TabICL while training traditional methods on the full imbalanced datasets. This design choice does not reflect a methodological preference but a structural constraint: TabPFN and TabICL cannot process arbitrarily large class distributions due to quadratic scaling (TabPFN) or memory usage (TabICL). Their intended operational use is precisely in settings where balanced few-shot subsets are drawn from streaming traffic or incident logs, rather than in full retraining on billions of flows. In contrast, classical ensemble methods are designed to exploit large, imbalanced training sets, which is why we preserved their conventional evaluation regime.

Importantly, our test splits remain unaltered and naturally imbalanced across all models, ensuring that the reported performance reflects the true class frequencies encountered in deployment. The superior minority-class recall achieved by TabPFN and TabICL highlights their robustness in detecting rare classes despite being trained on capped subsets. While retraining under full imbalance is computationally infeasible for TabPFN and TabICL, future work will explore incremental or streaming evaluation strategies that more closely mimic online IDS conditions.

5.4. Limitations and Future Research Directions

While our evaluation encompasses diverse cybersecurity datasets, the focus on network flow data may not capture the full spectrum of cybersecurity applications. That is, the datasets employed (CIC-IDS2017 and CIC-UNSW) are flow-based, with each record summarizing an entire communication. This implies that classification can typically occur only after the full flow has been observed, rather than in real time on streaming packets. Future research should extend evaluation of foundation models to complementary domains such as endpoint security, log analysis, and vulnerability assessment to establish broader applicability.

The temporal aspects of cybersecurity threats—where attack patterns evolve continuously—warrant investigation of foundation models’ adaptation capabilities over extended periods. Longitudinal studies examining performance degradation and adaptation strategies will provide crucial insights for operational deployment planning.

The rapid evolution of foundation model architectures suggests that our evaluation represents a snapshot of current capabilities rather than fundamental limits. Emerging approaches combining the scaling advantages of TabICL with enhanced efficiency optimizations may further expand foundation model applicability to resource-constrained environments.

Research into domain-specific pretraining for cybersecurity applications could potentially enhance foundation model performance beyond the general-purpose architectures evaluated in our study. Cybersecurity-specific foundation models trained on diverse security datasets may achieve even stronger detection capabilities while maintaining generalization advantages.

Our findings suggest that the cybersecurity community’s continued investment in increasingly sophisticated ensemble methods may represent a suboptimal research direction. The fundamental advantages demonstrated by foundation models—comprehensive threat detection, training-free deployment, and robust generalization—indicate that future advances in cybersecurity machine learning should prioritize foundation model development over traditional ensemble refinement.

This strategic shift requires significant changes in research priorities, educational curricula, and industry deployment practices. However, the potential for defense against sophisticated threats that current approaches miss justifies the substantial investment required for this technological transition.

The demonstrated superiority of foundation models in detecting rare but critical threats positions them as essential tools for defending against advanced persistent threats and zero-day attacks. Organizations prioritizing comprehensive security over computational efficiency should begin evaluating foundation model integration into their cybersecurity architectures, recognizing the paradigm shift toward adaptive, hyperparameter-free cybersecurity systems that avoid costly retraining.

Beyond computational constraints, several critical operational risks warrant careful consideration. Resource overload scenarios during high-traffic periods or coordinated attacks could create processing delays that enable threats to propagate while foundation model inference queues struggle with computational demands. Our evaluation framework’s reliance on balanced sampling for TabPFN and TabICL may introduce optimistic bias, as this artificial balance does not reflect realistic operational environments, where severe class imbalance persists without mitigation. Additionally, foundation models’ limited interpretability compared with ensemble methods’ transparent decision trees poses challenges for security analysts requiring attack classification rationale for incident response. The adversarial vulnerability inherent to neural architectures may also expose foundation models to sophisticated evasion techniques that simpler ensemble decision boundaries might resist, while dependency on pretrained representations creates single points of failure absent in traditional methods that can be retrained from scratch with domain-specific data.

6. Conclusions and Future Work

This work presents a comprehensive multi-modal evaluation of foundation models for tabular intrusion detection across diverse cybersecurity environments, fundamentally challenging the cybersecurity community’s reliance on tree-based ensemble methods that have dominated the field for over a decade. Through rigorous experimentation across three distinct datasets (CIC-IDS2017, N-BaIoT, and CIC-UNSW), we demonstrate that foundation models offer superior and more consistent performance compared with traditional approaches, establishing a new paradigm for intelligent intrusion detection systems.

Our research makes four critical contributions to both the cybersecurity and machine learning communities:

1. Comprehensive Multi-Modal Evaluation of Foundation Models: We establish the first systematic comparison of multiple foundation model approaches—TabPFN, TabICL, and LLMs—against traditional machine learning methods, providing practitioners with definitive guidance on optimal architectures for cybersecurity applications.

2. Model-Appropriate Evaluation Framework: We develop rigorous experimental protocols that implement tailored sampling strategies reflecting the operational constraints and optimal usage patterns of different model families, addressing methodological limitations that have historically biased evaluations toward traditional ensemble methods.

3. Cross-Dataset Generalization Validation: Through systematic evaluation across diverse threat landscapes and network configurations, we demonstrate that foundation models maintain performance advantages while traditional methods exhibit significant dataset-specific variations, establishing foundation models’ superior generalization capabilities.

4. Exposure of Critical Limitation in Traditional Methods: We reveal that ensemble methods achieving >99% overall accuracy completely fail to detect minority attack classes, exposing a dangerous vulnerability that foundation models uniquely address through comprehensive threat detection capabilities.

The most significant finding of our study is the paradigm-shifting performance of foundation models, particularly TabICL’s achievement of 99.59% accuracy on CIC-IDS2017 while uniquely detecting all attack classes including rare threats like Heartbleed and Infiltration. This comprehensive detection capability, achieved without traditional training requirements, represents a breakthrough for zero-day threat detection in rapidly evolving cybersecurity landscapes.

Our findings have profound implications for cybersecurity operations and research priorities.

Rethinking the IDS Architecture: The superior performance of foundation models across all experimental scenarios challenges the fundamental assumption that tree-based ensembles represent optimal approaches for tabular cybersecurity applications. Organizations should begin evaluating foundation model integration into their security architectures, recognizing that comprehensive threat detection capabilities justify infrastructure investment requirements.

Evaluation Methodology Reform: The stark contrast between high overall accuracy and zero recall on critical attack classes demonstrates that aggregate metrics provide misleading assessments for security-critical systems. The cybersecurity community must adopt per-class evaluation frameworks that reveal true detection capabilities across the complete threat spectrum.
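The gap between aggregate and per-class metrics can be illustrated with a minimal sketch. The labels below are synthetic and chosen only for illustration; they are not results from this study, and the helper names `accuracy` and `per_class_recall` are hypothetical.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of flows whose predicted label matches the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

def per_class_recall(y_true, y_pred):
    """Recall for each class: correctly detected flows / flows of that class."""
    support = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / support[label] for label in support}

# Synthetic example (assumption): 990 benign flows, 8 DoS flows, 2 Heartbleed flows.
y_true = ["BENIGN"] * 990 + ["DoS"] * 8 + ["Heartbleed"] * 2
# A hypothetical classifier that labels every Heartbleed flow as benign.
y_pred = ["BENIGN"] * 990 + ["DoS"] * 8 + ["BENIGN"] * 2

overall = accuracy(y_true, y_pred)
recalls = per_class_recall(y_true, y_pred)
macro_recall = sum(recalls.values()) / len(recalls)

print(f"accuracy     = {overall:.3f}")
print(f"macro recall = {macro_recall:.3f}")
for label, value in recalls.items():
    print(f"recall[{label}] = {value:.2f}")
```

In this toy case the overall accuracy is 0.998 while recall on the rarest class is 0, which is the pattern a per-class report makes visible and an aggregate score hides.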

Operational Advantages of No Per-dataset Retraining: Foundation models’ ability to adapt to novel attack patterns without extensive retraining cycles offers unprecedented advantages for defending against zero-day threats and advanced persistent threats. This capability fundamentally alters the timeline for threat response in operational environments, where rapid adaptation can determine defensive success.

Hybrid Architecture Opportunities: The complementary strengths of different foundation model approaches suggest that optimal performance may emerge from hybrid architectures leveraging TabICL for comprehensive detection, combined with LLM-based analysis for explainable threat assessment in security operations centers.

While foundation models demonstrate clear superiority for detection of rare threats, several limitations must be addressed for widespread operational deployment.

Computational Infrastructure Requirements: Foundation models necessitate substantial GPU resources compared with traditional ensemble methods’ modest CPU requirements. However, the trend toward edge computing and distributed inference architectures suggests that these barriers will diminish as computational infrastructure evolves.

Inference Latency Considerations: Because the training-free paradigm shifts computational cost from training to inference, latency requires careful consideration in real-time applications. Tiered architectures that use lightweight methods for initial filtering, followed by foundation model analysis of suspicious flows, can balance detection performance against efficiency requirements.
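One possible shape for such a tiered pipeline is sketched below. This is an illustrative assumption rather than the implementation evaluated in this work: `tiered_predict`, `light_score`, `heavy_classify_batch`, and the threshold value are hypothetical names and settings, and the two stand-in models are toy functions rather than a trained filter or a foundation model.

```python
from typing import Callable, List, Sequence, Tuple

Flow = Sequence[float]

def tiered_predict(
    flows: List[Flow],
    light_score: Callable[[Flow], float],
    heavy_classify_batch: Callable[[List[Flow]], List[str]],
    threshold: float = 0.3,
) -> Tuple[List[str], int]:
    """Label flows with a cheap first-stage filter and an expensive second stage.

    Flows scored below `threshold` by the lightweight model are labeled BENIGN
    directly; the rest are sent to the expensive classifier in a single batch.
    Returns the labels and the number of flows escalated to the second stage.
    """
    labels = ["BENIGN"] * len(flows)
    suspicious = [i for i, flow in enumerate(flows) if light_score(flow) >= threshold]
    if suspicious:
        heavy_labels = heavy_classify_batch([flows[i] for i in suspicious])
        for i, label in zip(suspicious, heavy_labels):
            labels[i] = label
    return labels, len(suspicious)

# Toy stand-ins (assumptions): one feature per flow, read as a packet rate.
def toy_light_score(flow: Flow) -> float:
    return min(1.0, flow[0] / 1000.0)

def toy_heavy_classify_batch(batch: List[Flow]) -> List[str]:
    return ["DoS" if flow[0] > 800.0 else "BENIGN" for flow in batch]

flows = [[10.0], [500.0], [900.0]]
labels, escalated = tiered_predict(flows, toy_light_score, toy_heavy_classify_batch)
print(labels, escalated)
```

A design caveat follows directly from the structure: any flow filtered out at the first stage never reaches the second, so the threshold bounds the recall achievable on rare classes and should be tuned with per-class metrics rather than overall accuracy.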

Scalability and Memory Constraints: Current foundation model implementations face memory limitations that restrict dataset sizes and deployment scenarios. Continued research into efficient architectures and memory optimization will expand applicability to resource-constrained environments.

7. Code Availability

The implementation of the framework based on foundation models for tabular intrusion detection is publicly available at https://github.com/pablogarciaamolina/AI-for-IDS (accessed on 1 September 2025). The repository includes data preprocessing modules, model implementations, evaluation pipelines, and reproducibility instructions.