Machine Learning and Neural Networks for Phishing Detection: A Systematic Review (2017–2024)

Abstract

1. Introduction

Definition 1. “Phishing is a crime employing both social engineering and technical subterfuge to steal consumers’ personal identity data and financial account credentials. Social engineering schemes prey on unwary victims by fooling them into believing they are dealing with a trusted, legitimate party, such as by using deceptive email addresses and messages, bogus web sites, and deceptive domain names. These are designed to lead consumers to counterfeit Web sites that trick recipients into divulging financial data such as usernames and passwords. Technical subterfuge schemes plant malware onto computers to steal credentials directly, often using systems that intercept consumers’ account usernames and passwords or misdirect consumers to counterfeit Web sites” [32].

2. Materials and Methods

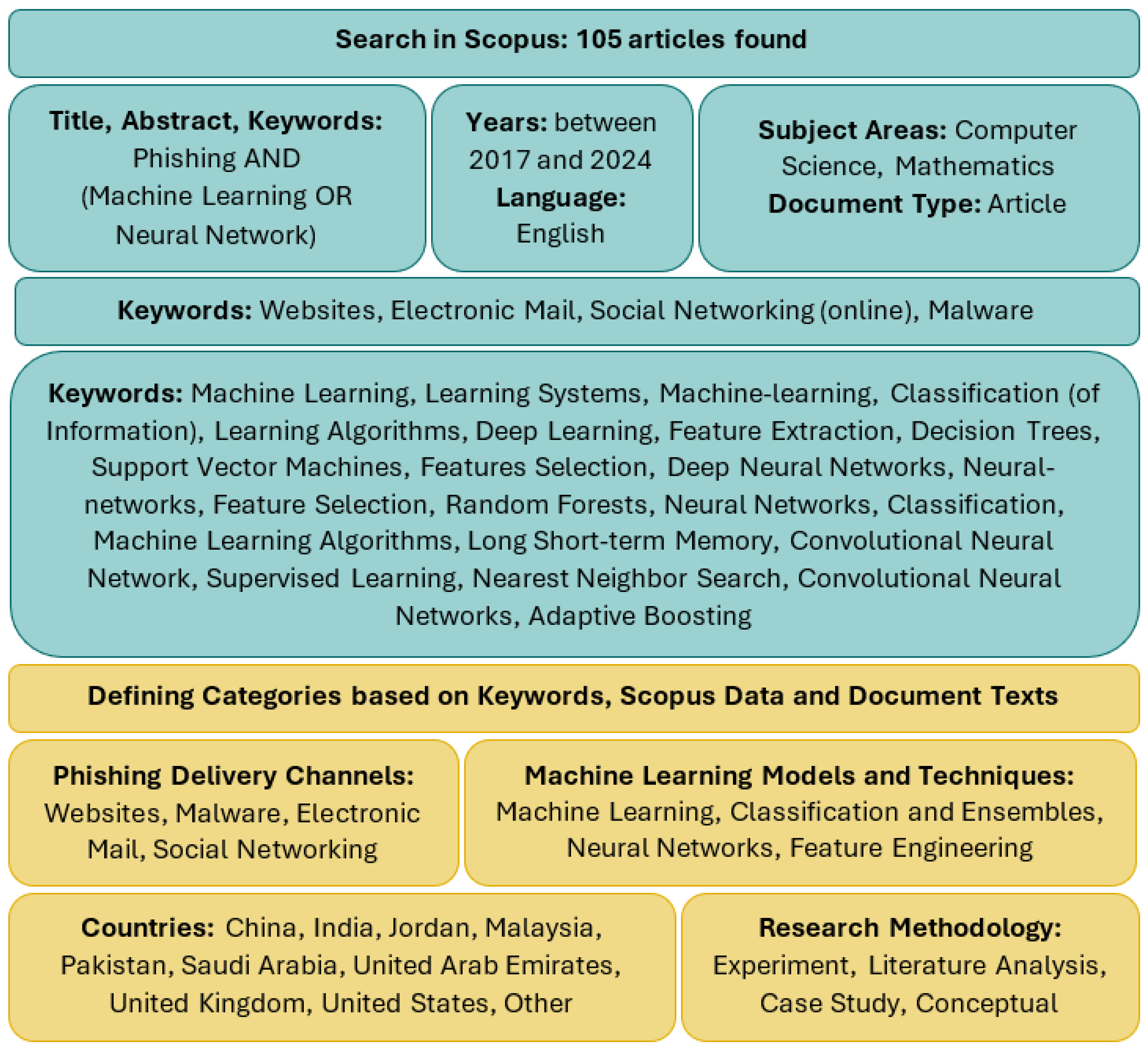

2.1. Data Retrieval and Corpus Construction

2.2. Supplementary Data Sources

2.3. Bibliometric Analysis Procedure

2.4. Review Protocol and Publication Quality

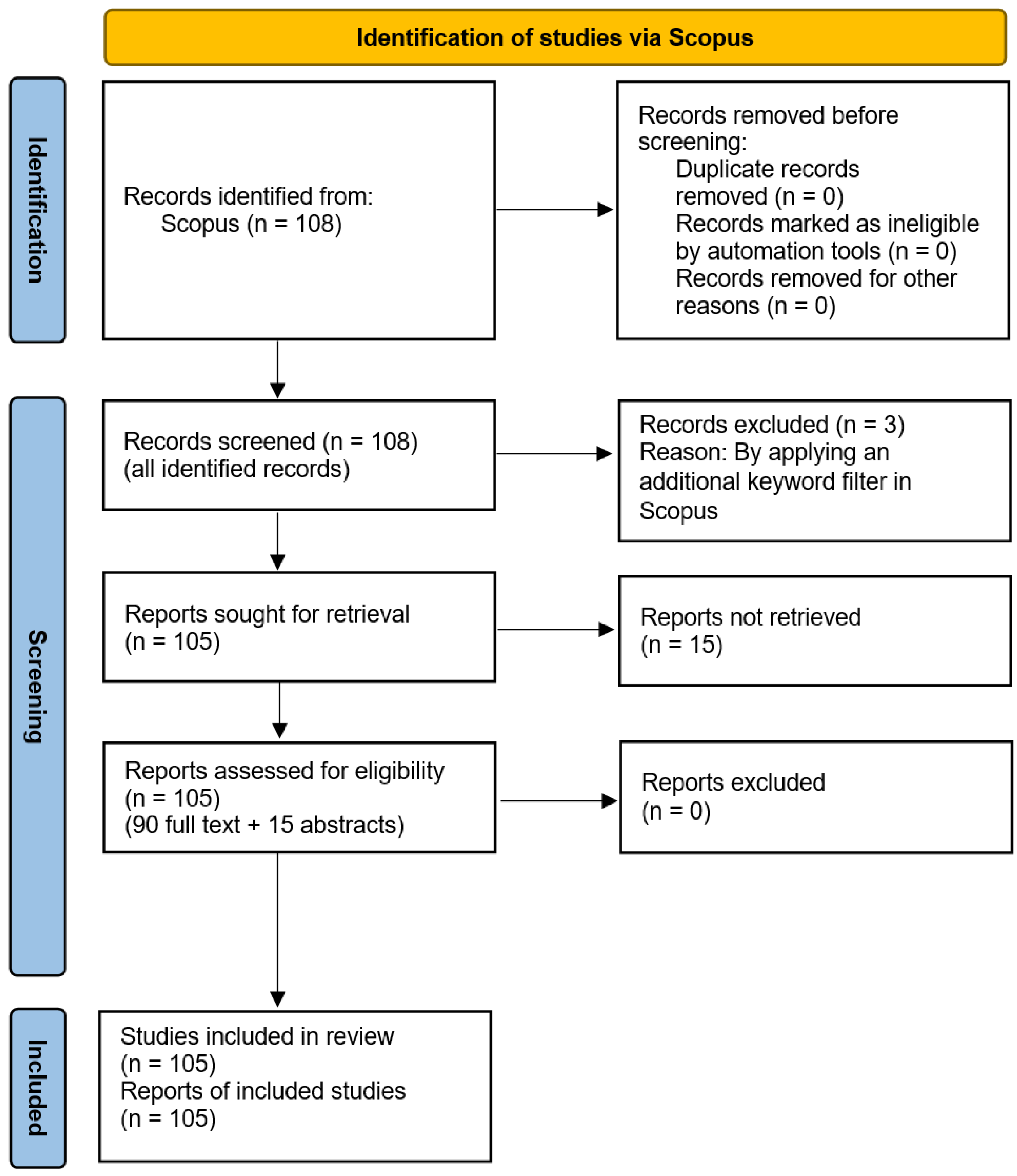

- In the identification stage, a comprehensive search was conducted in the Scopus database. The search strategy applied a defined set of keywords to titles, abstracts, or author keywords to capture relevant publications. Filters restricted the results to English-language articles published within the defined time frame (2017–2024), and records from unrelated subject areas were removed. A total of 108 records were identified.

- In the screening stage, all 108 records identified in the previous step were examined. Three records were excluded after an additional keyword filter was applied in Scopus, leaving 105 records for retrieval.

- In the eligibility stage, the 105 retained records were assessed: 90 as full-text articles and 15 from their abstracts only. Including the abstracts helped maintain methodological consistency and increased the sample size, which was essential for a reliable quantitative analysis. Although abstracts provide less detail than full texts, they contain key information on the scope of the study, the methods applied, and the main findings, which makes them a valuable data source in a systematic review.
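The stage counts reported above can be cross-checked mechanically. The sketch below is a hypothetical illustration, not part of the review's own tooling: the `PrismaFlow` class and its field names are assumptions introduced here. It encodes the reported counts (108 identified, 3 excluded at screening, 90 full texts, 15 abstracts) and verifies that every record retained after screening is accounted for exactly once in the eligibility stage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrismaFlow:
    """Hypothetical container for the record counts at each review stage."""
    identified: int          # records returned by the Scopus query after filters
    excluded_screening: int  # records removed by the additional keyword filter
    full_texts: int          # records assessed as full-text articles
    abstracts_only: int      # records assessed from their abstracts only

    @property
    def retained_after_screening(self) -> int:
        # Records passed on from screening to the eligibility stage.
        return self.identified - self.excluded_screening

    def check(self) -> None:
        # Every retained record must be assessed either as a full text or
        # as an abstract; a mismatch signals a counting error in the flow.
        assessed = self.full_texts + self.abstracts_only
        if assessed != self.retained_after_screening:
            raise ValueError(
                f"{assessed} records assessed, but "
                f"{self.retained_after_screening} retained after screening"
            )


flow = PrismaFlow(identified=108, excluded_screening=3,
                  full_texts=90, abstracts_only=15)
flow.check()  # passes silently for the counts reported above
print(flow.retained_after_screening)  # 105
print(f"{flow.abstracts_only / flow.retained_after_screening:.1%} abstract-only")  # 14.3% abstract-only
```

Encoding the flow this way makes the share of abstract-only records explicit (about one in seven), which is useful context when weighing the reduced detail that abstracts provide.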

2.5. Study Quality and Risk-of-Bias Assessment

2.6. Summary

3. Deployment Checklists by Phishing Delivery Channel

3.1. Deployment Checklist for the Phishing Delivery Channel: Websites

3.1.1. Privacy Controls

3.1.2. Data Collection Risks

3.1.3. Failsafe Behavior and Safe Defaults

3.1.4. Model Updates and Rollback

3.1.5. Explainability for Triage

3.2. Deployment Checklist for the Phishing Delivery Channel: Malware

3.2.1. Privacy Controls

3.2.2. Data Collection Risks

3.2.3. Failsafe Behavior and Safe Defaults

3.2.4. Model Updates and Rollback

3.2.5. Explainability for Triage

3.3. Deployment Checklist for the Phishing Delivery Channel: Electronic Mail

3.3.1. Privacy Controls

3.3.2. Data Collection Risks

3.3.3. Failsafe Behavior and Safe Defaults

3.3.4. Model Updates and Rollback

3.3.5. Explainability for Triage

3.4. Deployment Checklist for the Phishing Delivery Channel: Social Networking

3.4.1. Privacy Controls

3.4.2. Data Collection Risks

3.4.3. Failsafe Behavior and Safe Defaults

3.4.4. Model Updates and Rollback

3.4.5. Explainability for Triage

3.5. Summary

4. Discussion

4.1. Keyword Co-Occurrence Map: Dataset, Parameters, and Metrics

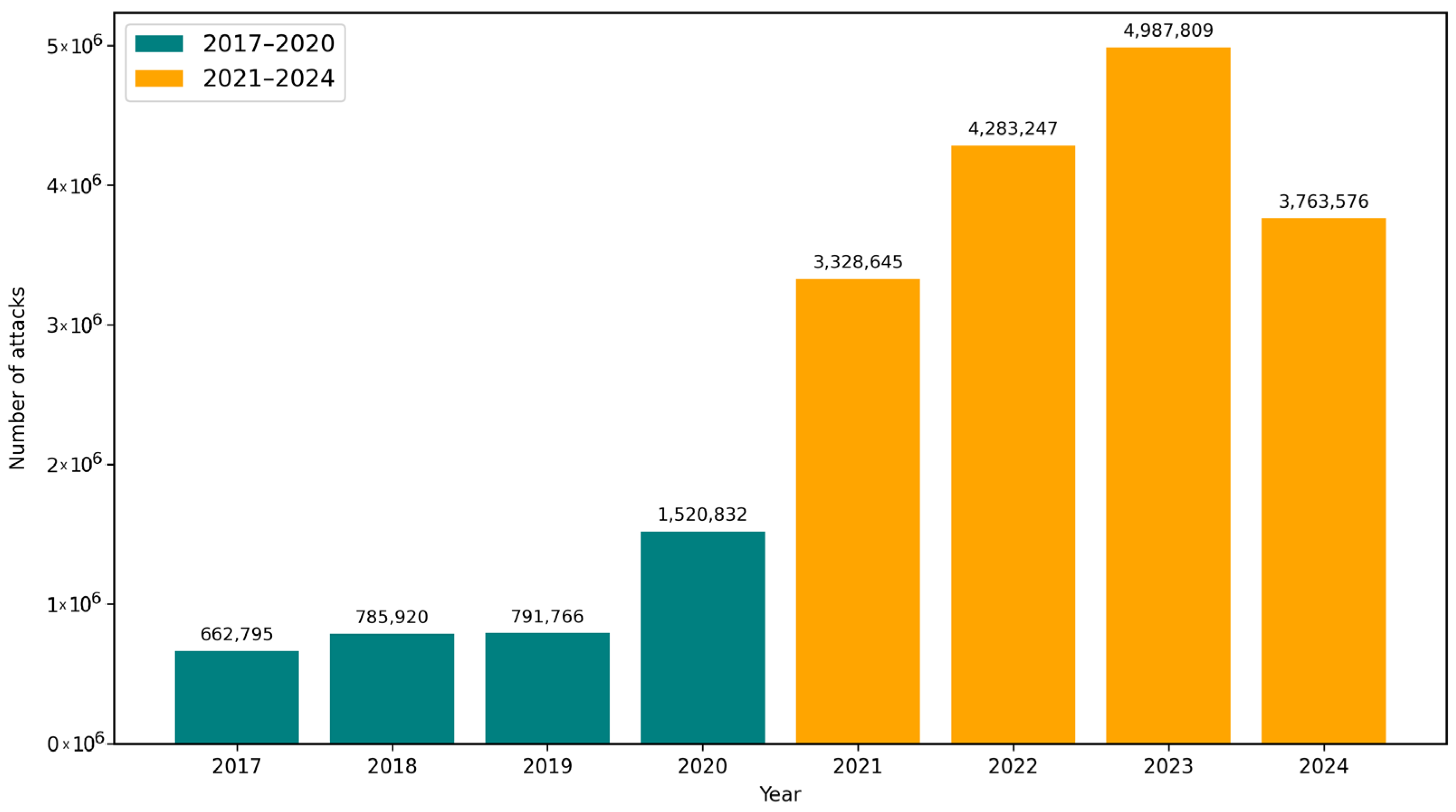

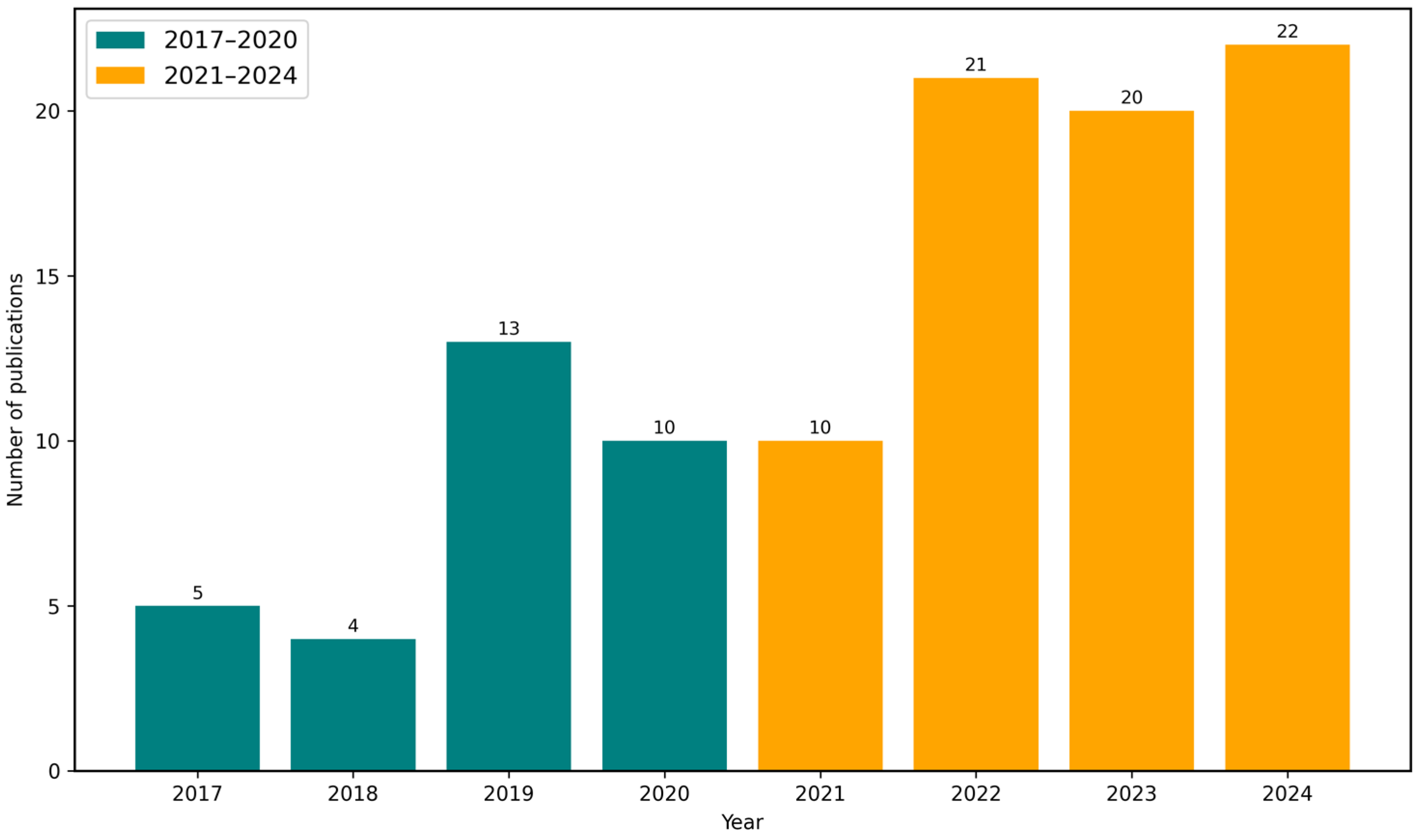

4.2. Trends in Global Phishing Activity

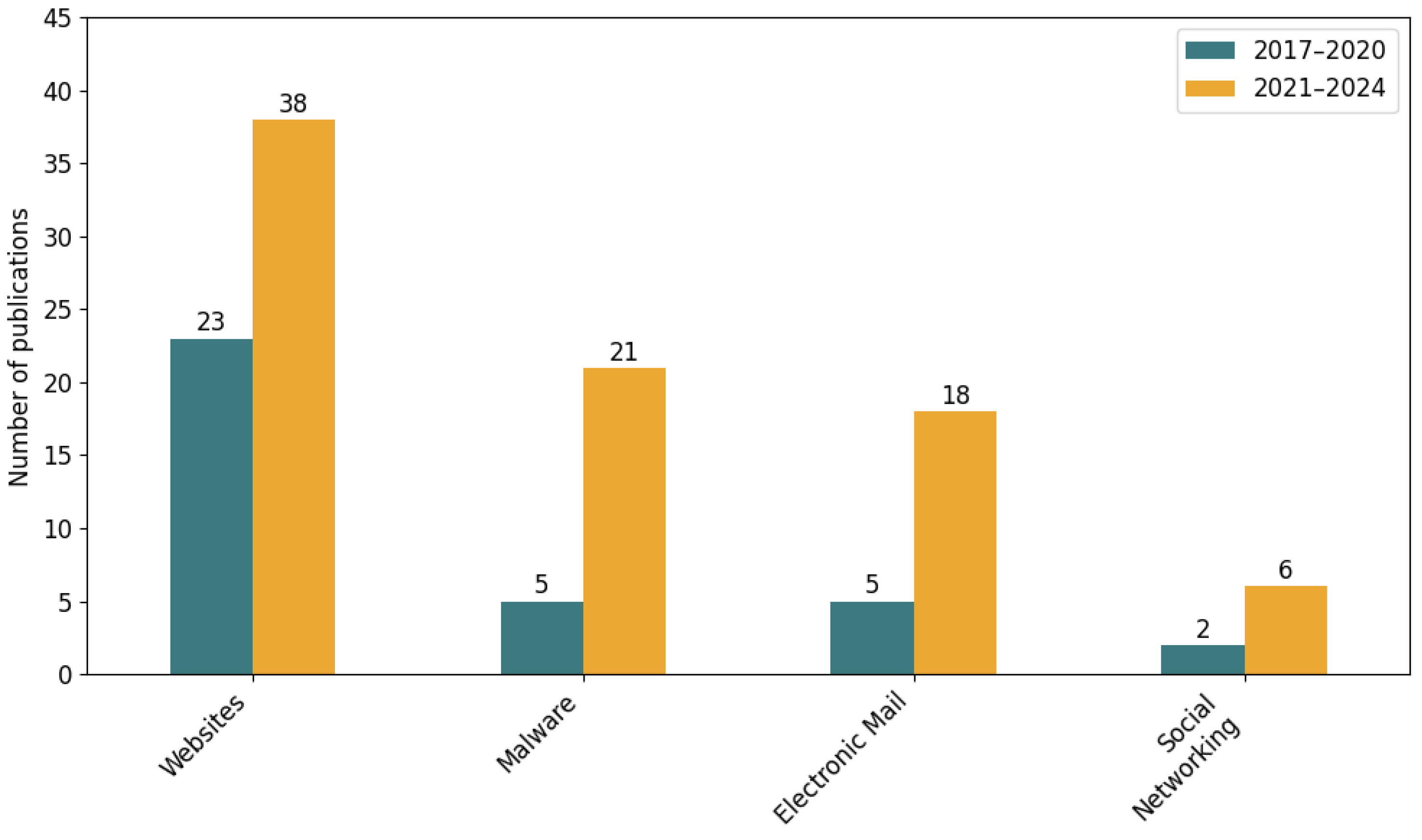

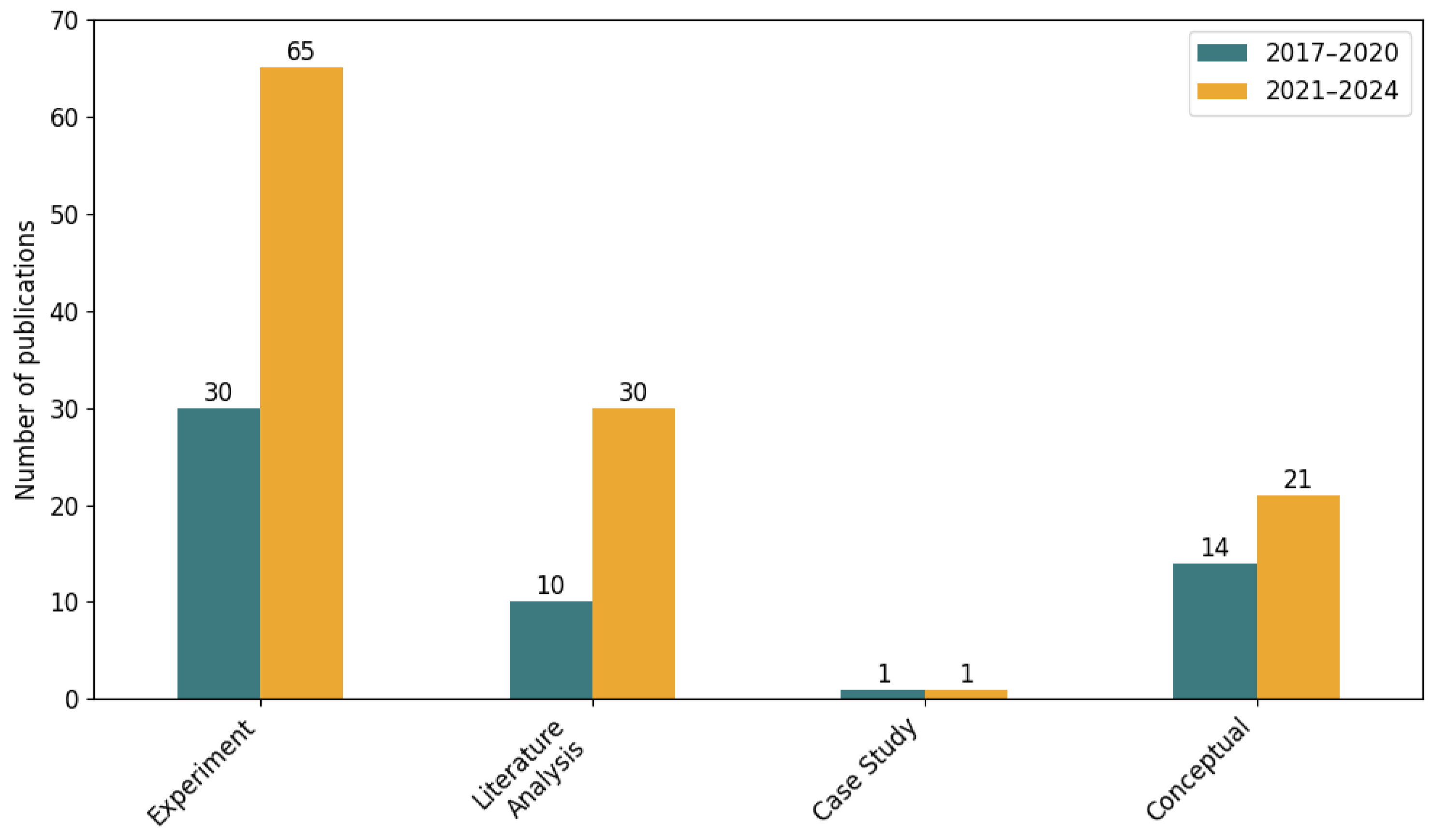

4.3. Categorization Framework for Analyzed Publications

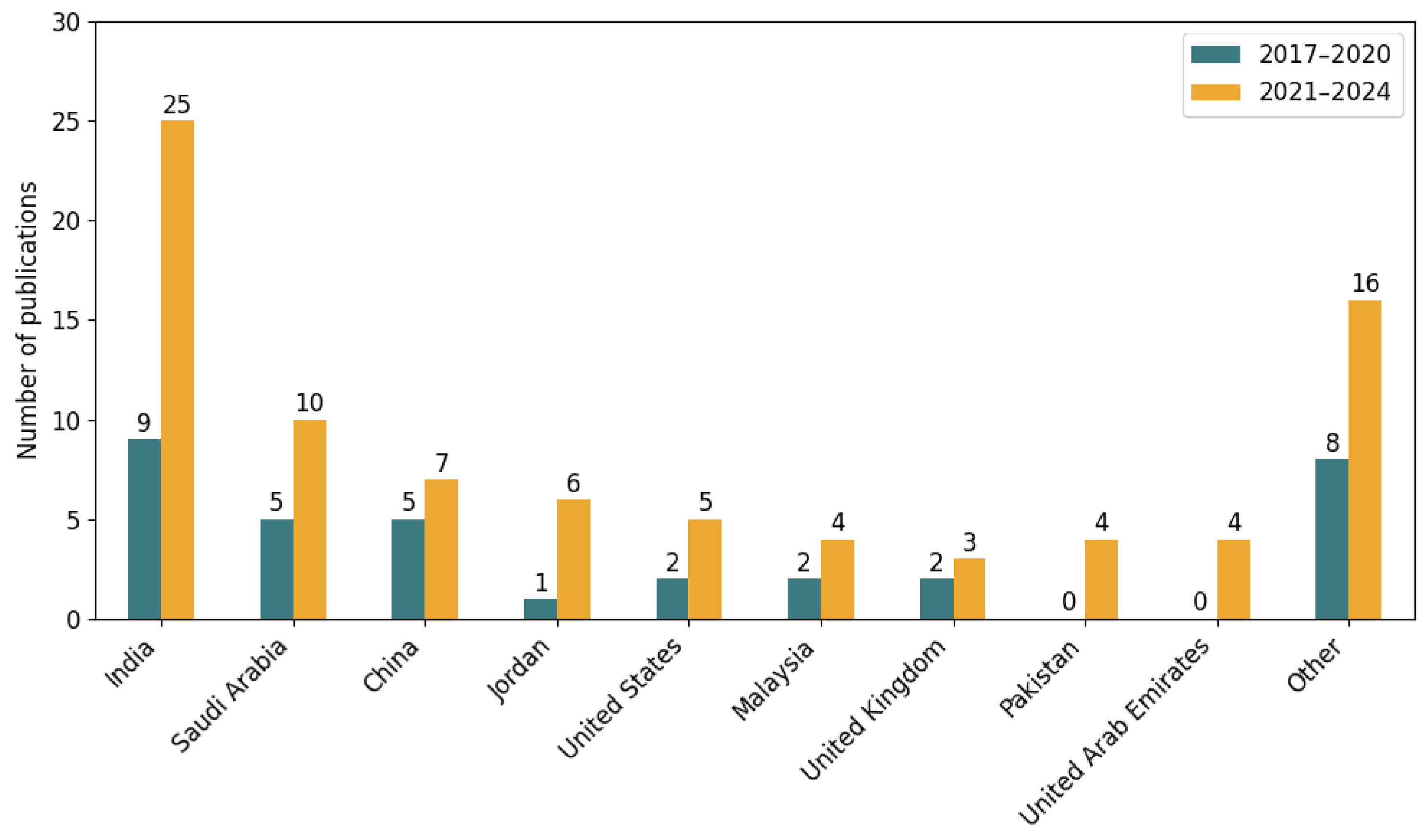

4.4. International Research Contributions in Phishing Detection

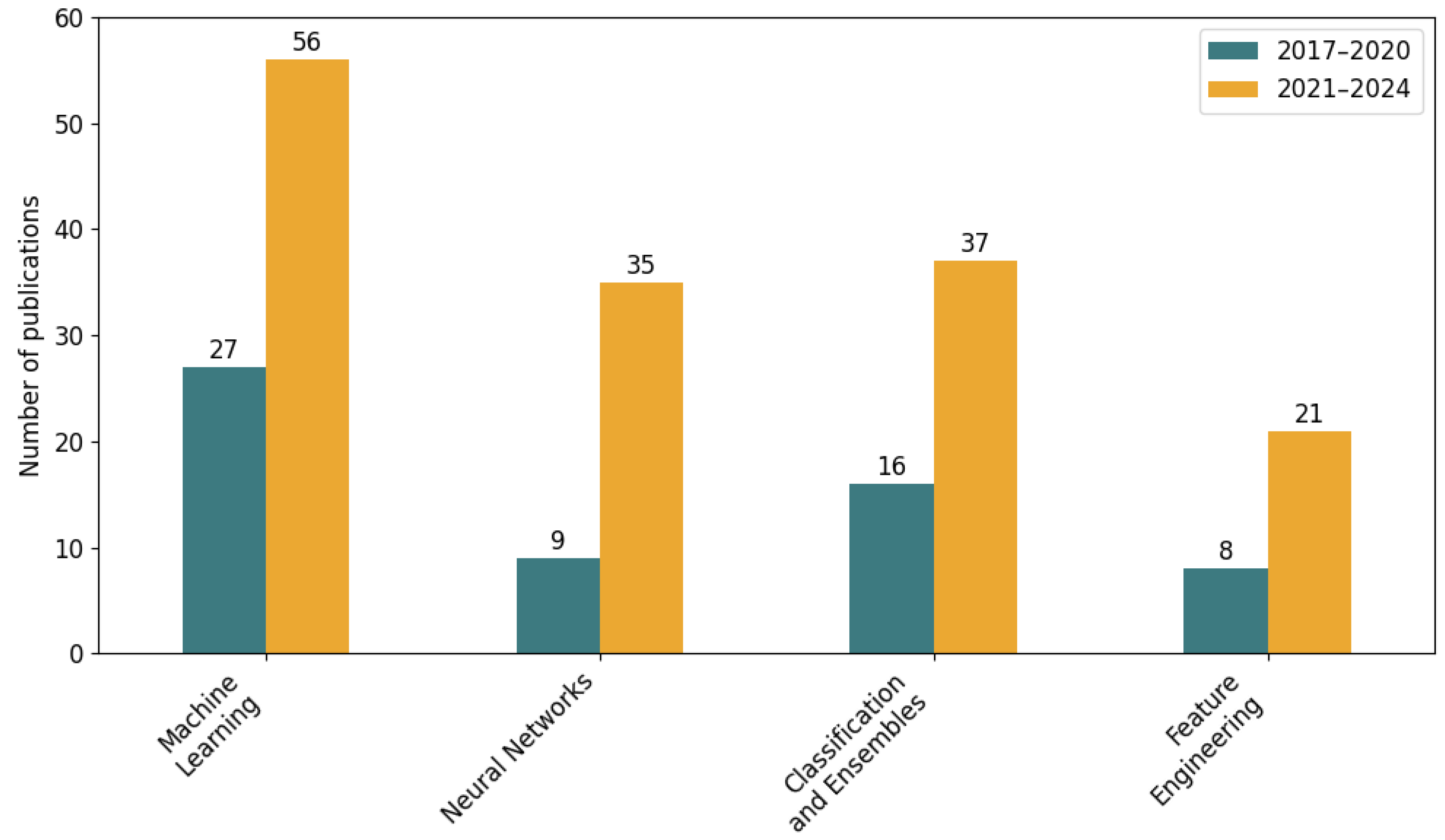

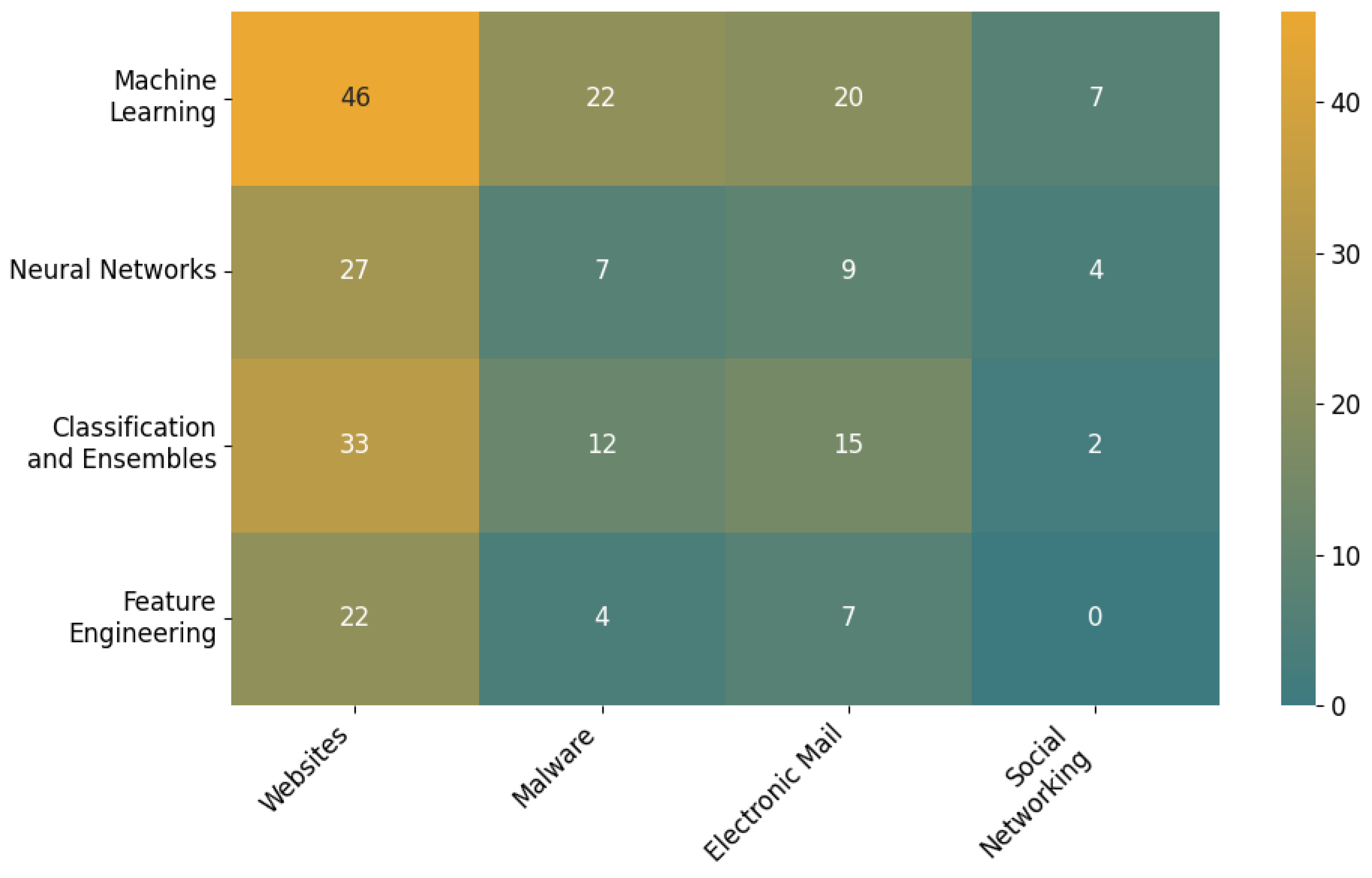

4.5. Technical and Methodological Approaches to Phishing Detection by Channel

4.6. Common Validity Threats Observed in the Reviewed Studies

4.7. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 1st Quarter 2017; Anti-Phishing Working Group: Lexington, MA, USA, 2017.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 2nd Quarter 2017; Anti-Phishing Working Group: Lexington, MA, USA, 2017.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 3rd Quarter 2017; Anti-Phishing Working Group: Lexington, MA, USA, 2017.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 4th Quarter 2017; Anti-Phishing Working Group: Lexington, MA, USA, 2017.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 1st Quarter 2018; Anti-Phishing Working Group: Lexington, MA, USA, 2018.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 2nd Quarter 2018; Anti-Phishing Working Group: Lexington, MA, USA, 2018.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 3rd Quarter 2018; Anti-Phishing Working Group: Lexington, MA, USA, 2018.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 4th Quarter 2018; Anti-Phishing Working Group: Lexington, MA, USA, 2018.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 1st Quarter 2019; Anti-Phishing Working Group: Lexington, MA, USA, 2019.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 2nd Quarter 2019; Anti-Phishing Working Group: Lexington, MA, USA, 2019.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 3rd Quarter 2019; Anti-Phishing Working Group: Lexington, MA, USA, 2019.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 4th Quarter 2019; Anti-Phishing Working Group: Lexington, MA, USA, 2019.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 1st Quarter 2020; Anti-Phishing Working Group: Lexington, MA, USA, 2020.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 2nd Quarter 2020; Anti-Phishing Working Group: Lexington, MA, USA, 2020.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 3rd Quarter 2020; Anti-Phishing Working Group: Lexington, MA, USA, 2020.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 4th Quarter 2020; Anti-Phishing Working Group: Lexington, MA, USA, 2020.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 1st Quarter 2021; Anti-Phishing Working Group: Lexington, MA, USA, 2021.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 2nd Quarter 2021; Anti-Phishing Working Group: Lexington, MA, USA, 2021.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 3rd Quarter 2021; Anti-Phishing Working Group: Lexington, MA, USA, 2021.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 4th Quarter 2021; Anti-Phishing Working Group: Lexington, MA, USA, 2021.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 1st Quarter 2022; Anti-Phishing Working Group: Lexington, MA, USA, 2022.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 2nd Quarter 2022; Anti-Phishing Working Group: Lexington, MA, USA, 2022.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 3rd Quarter 2022; Anti-Phishing Working Group: Lexington, MA, USA, 2022.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 4th Quarter 2022; Anti-Phishing Working Group: Lexington, MA, USA, 2022.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 1st Quarter 2023; Anti-Phishing Working Group: Lexington, MA, USA, 2023.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 2nd Quarter 2023; Anti-Phishing Working Group: Lexington, MA, USA, 2023.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 3rd Quarter 2023; Anti-Phishing Working Group: Lexington, MA, USA, 2023.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 4th Quarter 2023; Anti-Phishing Working Group: Lexington, MA, USA, 2023.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 1st Quarter 2024; Anti-Phishing Working Group: Lexington, MA, USA, 2024.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 2nd Quarter 2024; Anti-Phishing Working Group: Lexington, MA, USA, 2024.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 3rd Quarter 2024; Anti-Phishing Working Group: Lexington, MA, USA, 2024.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report 4th Quarter 2024; Anti-Phishing Working Group: Lexington, MA, USA, 2024.

- Sheng, S.; Wardman, B.; Warner, G.; Cranor, L.; Hong, J.; Zhang, C. An Empirical Analysis of Phishing Blacklists. In Proceedings of the 6th Annual Conference on Email and Anti-Spam (CEAS), Mountain View, CA, USA, 13–14 August 2009; pp. 1–8.

- Rao, R.S.; Pais, A.R. Detection of Phishing Websites Using an Efficient Feature-Based Machine Learning Framework. Neural Comput. Appl. 2019, 31, 3851–3873.

- Aburrous, M.; Hossain, M.; Dahal, K.; Thabtah, F. Intelligent Phishing Detection System for E-Banking Using Fuzzy Data Mining. Expert Syst. Appl. 2010, 37, 7913–7921.

- Awasthi, A.; Goel, N. Phishing Website Prediction Using Base and Ensemble Classifier Techniques with Cross-Validation. Cybersecurity 2022, 5, 22.

- Hr, M.G.; Mv, A.; Gunesh Prasad, S.; Vinay, S. Development of Anti-Phishing Browser Based on Random Forest and Rule of Extraction Framework. Cybersecurity 2020, 3, 20.

- Gopal, S.B.; Poongodi, C. Mitigation of Phishing URL Attack in IoT Using H-ANN with H-FFGWO Algorithm. KSII Trans. Internet Inf. Syst. 2023, 17, 1916–1934.

- Priya, S.; Selvakumar, S.; Velusamy, R.L. Evidential Theoretic Deep Radial and Probabilistic Neural Ensemble Approach for Detecting Phishing Attacks. J. Ambient Intell. Humaniz. Comput. 2023, 14, 1951–1975.

- Wang, W.; Zhang, F.; Luo, X.; Zhang, S. PDRCNN: Precise Phishing Detection with Recurrent Convolutional Neural Networks. Secur. Commun. Netw. 2019, 2019, 2595794.

- Ali, W.; Ahmed, A.A. Hybrid Intelligent Phishing Website Prediction Using Deep Neural Networks with Genetic Algorithm-Based Feature Selection and Weighting. IET Inf. Secur. 2019, 13, 659–669.

- Feng, F.; Zhou, Q.; Shen, Z.; Yang, X.; Han, L.; Wang, J. The Application of a Novel Neural Network in the Detection of Phishing Websites. J. Ambient Intell. Humaniz. Comput. 2024, 15, 1865–1879.

- Al-Alyan, A.; Al-Ahmadi, S. Robust URL Phishing Detection Based on Deep Learning. KSII Trans. Internet Inf. Syst. 2020, 14, 2752–2768.

- Wazirali, R.; Ahmad, R.; Abu-Ein, A.A.-K. Sustaining Accurate Detection of Phishing URLs Using SDN and Feature Selection Approaches. Comput. Netw. 2021, 201, 108591.

- Oram, E.; Dash, P.B.; Naik, B.; Nayak, J.; Vimal, S.; Nataraj, S.K. Light Gradient Boosting Machine-Based Phishing Webpage Detection Model Using Phisher Website Features of Mimic URLs. Pattern Recognit. Lett. 2021, 152, 100–106.

- Jain, A.K.; Gupta, B.B. Two-Level Authentication Approach to Protect from Phishing Attacks in Real Time. J. Ambient Intell. Humaniz. Comput. 2018, 9, 1783–1796.

- Mao, J.; Bian, J.; Tian, W.; Zhu, S.; Wei, T.; Li, A.; Liang, Z. Phishing Page Detection via Learning Classifiers from Page Layout Feature. EURASIP J. Wirel. Commun. Netw. 2019, 2019, 43.

- He, D.; Liu, Z.; Lv, X.; Chan, S.; Guizani, M. On Phishing URL Detection Using Feature Extension. IEEE Internet Things J. 2024, 11, 39527–39536.

- Khatun, M.; Mozumder, M.A.I.; Polash, M.N.H.; Hasan, M.R.; Ahammad, K.; Shaiham, M.S. An Approach to Detect Phishing Websites with Features Selection Method and Ensemble Learning. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 768–775.

- Kulkarni, A.D. Convolution Neural Networks for Phishing Detection. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 15–19.

- Tashtoush, Y.; Alajlouni, M.; Albalas, F.; Darwish, O. Exploring Low-Level Statistical Features of n-Grams in Phishing URLs: A Comparative Analysis with High-Level Features. Clust. Comput. 2024, 27, 13717–13736.

- Almomani, A.; Alauthman, M.; Shatnawi, M.T.; Alweshah, M.; Alrosan, A.; Alomoush, W.; Gupta, B.B. Phishing Website Detection with Semantic Features Based on Machine Learning Classifiers: A Comparative Study. Int. J. Semant. Web Inf. Syst. 2022, 18, 24.

- Jibat, D.; Jamjoom, S.; Al-Haija, Q.A.; Qusef, A. A Systematic Review: Detecting Phishing Websites Using Data Mining Models. Intell. Converg. Netw. 2023, 4, 326–341.

- Prabakaran, M.K.; Meenakshi Sundaram, P.; Chandrasekar, A.D. An Enhanced Deep Learning-Based Phishing Detection Mechanism to Effectively Identify Malicious URLs Using Variational Autoencoders. IET Inf. Secur. 2023, 17, 423–440.

- Samad, S.R.A.; Ganesan, P.; Al-Kaabi, A.S.; Rajasekaran, J.; Singaravelan, M.; Basha, P.S. Automated Detection of Malevolent Domains in Cyberspace Using Natural Language Processing and Machine Learning. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 328–341.

- Jalil, S.; Usman, M.; Fong, A. Highly Accurate Phishing URL Detection Based on Machine Learning. J. Ambient Intell. Humaniz. Comput. 2023, 14, 9233–9251.

- Kulkarni, A.; Brown, L.L. Phishing Websites Detection Using Machine Learning. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 8–13.

- Ndichu, S.; Kim, S.; Ozawa, S.; Misu, T.; Makishima, K. A Machine Learning Approach to Detection of JavaScript-Based Attacks Using AST Features and Paragraph Vectors. Appl. Soft Comput. 2019, 84, 105721.

- Sharma, S.R.; Singh, B.; Kaur, M. Improving the Classification of Phishing Websites Using a Hybrid Algorithm. Comput. Intell. 2022, 38, 667–689.

- Li, Y.; Yang, Z.; Chen, X.; Yuan, H.; Liu, W. A Stacking Model Using URL and HTML Features for Phishing Webpage Detection. Future Gener. Comput. Syst. 2019, 94, 27–39.

- Qasim, M.A.; Flayh, N.A. Enhancing Phishing Website Detection via Feature Selection in URL-Based Analysis. Informatica 2023, 47, 145–155.

- Song, F.; Lei, Y.; Chen, S.; Fan, L.; Liu, Y. Advanced Evasion Attacks and Mitigations on Practical ML-Based Phishing Website Classifiers. Int. J. Intell. Syst. 2021, 36, 5210–5240.

- Mishra, S.; Soni, D. Smishing Detector: A Security Model to Detect Smishing through SMS Content Analysis and URL Behavior Analysis. Future Gener. Comput. Syst. 2020, 108, 803–815.

- Zaimi, R.; Hafidi, M.; Lamia, M. A Deep Learning Mechanism to Detect Phishing URLs Using the Permutation Importance Method and SMOTE-Tomek Link. J. Supercomput. 2024, 80, 17159–17191.

- Mohamad, M.A.; Ahmad, M.A.; Mustaffa, Z. Hybrid Honey Badger Algorithm with Artificial Neural Network (HBA-ANN) for Website Phishing Detection. Iraqi J. Comput. Sci. Math. 2024, 5, 671–682.

- Mahdavifar, S.; Ghorbani, A.A. DeNNeS: Deep Embedded Neural Network Expert System for Detecting Cyber Attacks. Neural Comput. Appl. 2020, 32, 14753–14780.

- Moedjahedy, J.; Setyanto, A.; Alarfaj, F.K.; Alreshoodi, M. CCrFS: Combine Correlation Features Selection for Detecting Phishing Websites Using Machine Learning. Future Internet 2022, 14, 229.

- Hassan, N.H.; Fakharudin, A.S. Web Phishing Classification Model Using Artificial Neural Network and Deep Learning Neural Network. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 535–542.

- Gandotra, E.; Gupta, D. Improving Spoofed Website Detection Using Machine Learning. Cybern. Syst. 2021, 52, 169–190.

- Roy, S.S.; Awad, A.I.; Amare, L.A.; Erkihun, M.T.; Anas, M. Multimodel Phishing URL Detection Using LSTM, Bidirectional LSTM, and GRU Models. Future Internet 2022, 14, 340.

- Shabudin, S.; Sani, N.S.; Ariffin, K.A.Z.; Aliff, M. Feature Selection for Phishing Website Classification. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 587–595.

- Chen, S.; Lu, Y.; Liu, D.-J. Phishing Target Identification Based on Neural Networks Using Category Features and Images. Secur. Commun. Netw. 2022, 2022, 5653270.

- Anitha, J.; Kalaiarasu, M. A New Hybrid Deep Learning-Based Phishing Detection System Using MCS-DNN Classifier. Neural Comput. Appl. 2022, 34, 5867–5882.

- Priya, S.; Selvakumar, S. Detection of Phishing Attacks Using Probabilistic Neural Network with a Novel Training Algorithm for Reduced Gaussian Kernels and Optimal Smoothing Parameter Adaptation for Mobile Web Services. Int. J. Ad Hoc Ubiquitous Comput. 2021, 36, 67–88.

- Maurya, S.; Saini, H.S.; Jain, A. Browser Extension Based Hybrid Anti-Phishing Framework Using Feature Selection. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 579–588.

- Gururaj, H.L.; Mitra, P.; Koner, S.; Bal, S.; Flammini, F.; Janhavi, V.; Kumar, R.V. Prediction of Phishing Websites Using AI Techniques. Int. J. Inf. Secur. Priv. 2022, 16, 14.

- Vrbančič, G.; Fister, I.; Podgorelec, V. Parameter Setting for Deep Neural Networks Using Swarm Intelligence on Phishing Websites Classification. Int. J. Artif. Intell. Tools 2019, 28, 1960008.

- Nagaraj, K.; Bhattacharjee, B.; Sridhar, A.; Sharvani, G.S. Detection of Phishing Websites Using a Novel Twofold Ensemble Model. J. Syst. Inf. Technol. 2018, 20, 321–357.

- Feng, J.; Zou, L.; Nan, T. A Phishing Webpage Detection Method Based on Stacked Autoencoder and Correlation Coefficients. J. Comput. Inf. Technol. 2019, 27, 41–54.

- Gupta, S.; Bansal, H. Trust Evaluation of Health Websites by Eliminating Phishing Websites and Using Similarity Techniques. Concurr. Comput. Pract. Exp. 2023, 35, e7695.

- Ozcan, A.; Catal, C.; Donmez, E.; Senturk, B. A Hybrid DNN–LSTM Model for Detecting Phishing URLs. Neural Comput. Appl. 2023, 35, 4957–4973.

- Alotaibi, B.; Alotaibi, M. Consensus and Majority Vote Feature Selection Methods and a Detection Technique for Web Phishing. J. Ambient Intell. Humaniz. Comput. 2021, 12, 717–727.

- Vaitkevicius, P.; Marcinkevicius, V. Comparison of Classification Algorithms for Detection of Phishing Websites. Informatica 2020, 31, 143–160.

- Zaimi, R.; Hafidi, M.; Lamia, M. A Deep Learning Approach to Detect Phishing Websites Using CNN for Privacy Protection. Intell. Decis. Technol. 2023, 17, 713–728.

- Catal, C.; Giray, G.; Tekinerdogan, B.; Kumar, S.; Shukla, S. Applications of Deep Learning for Phishing Detection: A Systematic Literature Review. Knowl. Inf. Syst. 2022, 64, 1457–1500.

- Gao, B.; Liu, W.; Liu, G.; Nie, F. Resource Knowledge-Driven Heterogeneous Graph Learning for Website Fingerprinting. IEEE Trans. Cogn. Commun. Netw. 2024, 10, 968–981.

- Jain, A.K.; Gupta, B.B. A Machine Learning Based Approach for Phishing Detection Using Hyperlinks Information. J. Ambient Intell. Humaniz. Comput. 2019, 10, 2015–2028.

- Almujahid, N.F.; Haq, M.A.; Alshehri, M. Comparative Evaluation of Machine Learning Algorithms for Phishing Site Detection. PeerJ Comput. Sci. 2024, 10, e2131.

- Hossain, S.; Sarma, D.; Chakma, R.J. Machine Learning-Based Phishing Attack Detection. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 378–388.

- Goud, N.S.; Mathur, A. Feature Engineering Framework to Detect Phishing Websites Using URL Analysis. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 295–303.

- Mehedi, I.M.; Shah, M.H.M. Categorization of Webpages Using Dynamic Mutation Based Differential Evolution and Gradient Boost Classifier. J. Ambient Intell. Humaniz. Comput. 2023, 14, 8363–8374.

- Abu Al-Haija, Q.; Al-Fayoumi, M. An Intelligent Identification and Classification System for Malicious Uniform Resource Locators (URLs). Neural Comput. Appl. 2023, 35, 16995–17011.

- El-Alfy, E.-S.M. Detection of Phishing Websites Based on Probabilistic Neural Networks and K-Medoids Clustering. Comput. J. 2017, 60, 1745–1759.

- Zhang, W.; Jiang, Q.; Chen, L.; Li, C. Two-Stage ELM for Phishing Web Pages Detection Using Hybrid Features. World Wide Web 2017, 20, 797–813.

- Marchal, S.; Armano, G.; Grondahl, T.; Saari, K.; Singh, N.; Asokan, N. Off-the-Hook: An Efficient and Usable Client-Side Phishing Prevention Application. IEEE Trans. Comput. 2017, 66, 1717–1733.

- Abutair, H.; Belghith, A.; AlAhmadi, S. CBR-PDS: A Case-Based Reasoning Phishing Detection System. J. Ambient Intell. Humaniz. Comput. 2019, 10, 2593–2606.

- Muhammad, A.; Murtza, I.; Saadia, A.; Kifayat, K. Cortex-Inspired Ensemble Based Network Intrusion Detection System. Neural Comput. Appl. 2023, 35, 15415–15428.

- Zakaria, W.Z.A.; Abdollah, M.F.; Mohd, O.; Yassin, S.M.W.M.S.M.M.; Ariffin, A. RENTAKA: A Novel Machine Learning Framework for Crypto-Ransomware Pre-Encryption Detection. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 378–385.

- Arshad, M.; Karim, A. Android Botnet Detection Using Hybrid Analysis. KSII Trans. Internet Inf. Syst. 2024, 18, 704–719.

- Binsaeed, K.; Stringhini, G.; Youssef, A.E. Detecting Spam in Twitter Microblogging Services: A Novel Machine Learning Approach Based on Domain Popularity. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 11–22.

- Baruah, S.; Borah, D.J.; Deka, V. Detection of Peer-to-Peer Botnet Using Machine Learning Techniques and Ensemble Learning Algorithm. Int. J. Inf. Secur. Priv. 2023, 17, 16.

- Shang, Y. Detection and Prevention of Cyber Defense Attacks Using Machine Learning Algorithms. Scalable Comput. Pract. Exp. 2024, 25, 760–769.

- Shah, A.; Varshney, S.; Mehrotra, M. DeepMUI: A Novel Method to Identify Malicious Users on Online Social Network Platforms. Concurr. Comput. Pract. Exp. 2024, 36, e7917.

- Almomani, A. Fast-Flux Hunter: A System for Filtering Online Fast-Flux Botnet. Neural Comput. Appl. 2018, 29, 483–493.

- Chipa, I.H.; Gamboa-Cruzado, J.; Villacorta, J.R. Mobile Applications for Cybercrime Prevention: A Comprehensive Systematic Review. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 73–82.

- Ilyasa, S.N.; Khadidos, A.O. Optimized SMS Spam Detection Using SVM-DistilBERT and Voting Classifier: A Comparative Study on the Impact of Lemmatization. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 1323–1333.

- Taherdoost, H. Insights into Cybercrime Detection and Response: A Review of Time Factor. Information 2024, 15, 273.

- Rustam, F.; Ashraf, I.; Jurcut, A.D.; Bashir, A.K.; Zikria, Y.B. Malware Detection Using Image Representation of Malware Data and Transfer Learning. J. Parallel Distrib. Comput. 2023, 172, 32–50.

- Mvula, P.K.; Branco, P.; Jourdan, G.-V.; Viktor, H.L. A Survey on the Applications of Semi-Supervised Learning to Cyber-Security. ACM Comput. Surv. 2024, 56, 1–41.

- Al-Fawa’reh, M.; Abu-Khalaf, J.; Szewczyk, P.; Kang, J.J. MalBoT-DRL: Malware Botnet Detection Using Deep Reinforcement Learning in IoT Networks. IEEE Internet Things J. 2024, 11, 9610–9629.

- Diko, Z.; Sibanda, K. Comparative Analysis of Popular Supervised Machine Learning Algorithms for Detecting Malicious Universal Resource Locators. J. Cyber Secur. Mobil. 2024, 13, 1105–1128.

- Alqahtani, A.S.; Altammami, O.A.; Haq, M.A. A Comprehensive Analysis of Network Security Attack Classification Using Machine Learning Algorithms. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 1269–1280.

- Butnaru, A.; Mylonas, A.; Pitropakis, N. Towards Lightweight URL-Based Phishing Detection. Future Internet 2021, 13, 154.

- Demmese, F.A.; Shajarian, S.; Khorsandroo, S. Transfer Learning with ResNet50 for Malicious Domains Classification Using Image Visualization. Discov. Artif. Intell. 2024, 4, 52.

- Das, L.; Ahuja, L.; Pandey, A. A Novel Deep Learning Model-Based Optimization Algorithm for Text Message Spam Detection. J. Supercomput. 2024, 80, 17823–17848.

- Hans, K.; Ahuja, L.; Muttoo, S.K. Detecting Redirection Spam Using Multilayer Perceptron Neural Network. Soft Comput. 2017, 21, 3803–3814.

- Naswir, A.F.; Zakaria, L.Q.; Saad, S. Determining the Best Email and Human Behavior Features on Phishing Email Classification. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 175–184.

- Das, S.; Mandal, S.; Basak, R. Spam Email Detection Using a Novel Multilayer Classification-Based Decision Technique. Int. J. Comput. Appl. 2023, 45, 587–599.

- Bountakas, P.; Xenakis, C. HELPHED: Hybrid Ensemble Learning PHishing Email Detection. J. Netw. Comput. Appl. 2023, 210, 103545.

- Bhadane, A.; Mane, S.B. Detecting Lateral Spear Phishing Attacks in Organisations. IET Inf. Secur. 2019, 13, 133–140.

- Magdy, S.; Abouelseoud, Y.; Mikhail, M. Efficient Spam and Phishing Emails Filtering Based on Deep Learning. Comput. Netw. 2022, 206, 108826.

- Stevanović, N. Character and Word Embeddings for Phishing Email Detection. Comput. Inf. 2022, 41, 1337–1357.

- Somesha, M.; Pais, A.R. Classification of Phishing Email Using Word Embedding and Machine Learning Techniques. J. Cyber Secur. Mobil. 2022, 11, 279–320.

- Almousa, B.N.; Uliyan, D.M. Anti-Spoofing in Medical Employee’s Email Using Machine Learning Uclassify Algorithm. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 241–251.

- Mohammed, M.A.; Ibrahim, D.A.; Salman, A.O. Adaptive Intelligent Learning Approach Based on Visual Anti-Spam Email Model for Multi-Natural Language. J. Intell. Syst. 2021, 30, 774–792.

- Li, W.; Ke, L.; Meng, W.; Han, J. An Empirical Study of Supervised Email Classification in Internet of Things: Practical Performance and Key Influencing Factors. Int. J. Intell. Syst. 2022, 37, 287–304.

- Loh, P.K.K.; Lee, A.Z.Y.; Balachandran, V. Towards a Hybrid Security Framework for Phishing Awareness Education and Defense. Future Internet 2024, 16, 86.

- Manita, G.; Chhabra, A.; Korbaa, O. Efficient E-Mail Spam Filtering Approach Combining Logistic Regression Model and Orthogonal Atomic Orbital Search Algorithm. Appl. Soft Comput. 2023, 144, 110478.

- Akinyelu, A.A.; Adewumi, A.O. On the Performance of Cuckoo Search and Bat Algorithms Based Instance Selection Techniques for SVM Speed Optimization with Application to E-Fraud Detection. KSII Trans. Internet Inf. Syst. 2018, 12, 1348–1375.

- Siddique, Z.B.; Khan, M.A.; Din, I.U.; Almogren, A.; Mohiuddin, I.; Nazir, S. Machine Learning-Based Detection of Spam Emails. Sci. Program. 2021, 2021, 6508784.

- Abari, O.J.; Sani, N.F.M.; Khalid, F.; Sharum, M.Y.B.; Ariffin, N.A.M. Phishing Image Spam Classification Research Trends: Survey and Open Issues. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 794–805.

- Mughaid, A.; AlZu’bi, S.; Hnaif, A.; Taamneh, S.; Alnajjar, A.; Elsoud, E.A. An Intelligent Cyber Security Phishing Detection System Using Deep Learning Techniques. Clust. Comput. 2022, 25, 3819–3828.

- Akinyelu, A.A.; Ezugwu, A.E.; Adewumi, A.O. Ant Colony Optimization Edge Selection for Support Vector Machine Speed Optimization. Neural Comput. Appl. 2020, 32, 11385–11417.

- Bezerra, A.; Pereira, I.; Rebelo, M.Â.; Coelho, D.; Oliveira, D.A.D.; Costa, J.F.P.; Cruz, R.P.M. A Case Study on Phishing Detection with a Machine Learning Net. Int. J. Data Sci. Anal. 2024, 20, 2001–2020.

- Kaushik, K.; Bhardwaj, A.; Kumar, M.; Gupta, S.K.; Gupta, A. A Novel Machine Learning-Based Framework for Detecting Fake Instagram Profiles. Concurr. Comput. Pract. Exp. 2022, 34, e7349.

- Djaballah, K.A.; Boukhalfa, K.; Guelmaoui, M.A.; Saidani, A.; Ramdane, Y. A Proposal Phishing Attack Detection System on Twitter. Int. J. Inf. Secur. Priv. 2022, 16, 27.

- Khan, A.I.; Unhelkar, B. An Enhanced Anti-Phishing Technique for Social Media Users: A Multilayer Q-Learning Approach. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 18–28.

- Shetty, N.P.; Muniyal, B.; Anand, A.; Kumar, S. An Enhanced Sybil Guard to Detect Bots in Online Social Networks. J. Cyber Secur. Mobil. 2022, 11, 105–126.

- Yamak, Z.; Saunier, J.; Vercouter, L. Automatic Detection of Multiple Account Deception in Social Media. Web Intell. 2017, 15, 219–231.

- Khan, A.A.; Chaudhari, O.; Chandra, R. A Review of Ensemble Learning and Data Augmentation Models for Class Imbalanced Problems: Combination, Implementation and Evaluation. Expert Syst. Appl. 2024, 244, 122778.

- Sharma, S.; Gosain, A. Addressing Class Imbalance in Remote Sensing Using Deep Learning Approaches: A Systematic Literature Review. Evol. Intell. 2025, 18, 23.

- Rezvani, S.; Wang, X. A Broad Review on Class Imbalance Learning Techniques. Appl. Soft Comput. 2023, 143, 110415.

- Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 (General Data Protection Regulation). Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj/eng (accessed on 14 September 2025).

- National Institute of Standards and Technology. NIST Privacy Framework: A Tool for Improving Privacy through Enterprise Risk Management, Version 1.0; NIST: Gaithersburg, MD, USA, 2020. [Google Scholar]

- van Eck, N.J.; Waltman, L. VOSviewer Manual; Centre for Science and Technology Studies (CWTS), Leiden University: Leiden, The Netherlands, 2023. [Google Scholar]

- van Eck, N.J.; Waltman, L. Software Survey: VOSviewer, a Computer Program for Bibliometric Mapping. Scientometrics 2010, 84, 523–538.

- Shukla, P.K.; Veerasamy, B.D.; Alduaiji, N.; Addula, S.R.; Sharma, S.; Shukla, P.K. Encoder Only Attention-Guided Transformer Framework for Accurate and Explainable Social Media Fake Profile Detection. Peer-to-Peer Netw. Appl. 2025, 18, 232.

- Balasubramanian, P.; Liyana, S.; Sankaran, H.; Sivaramakrishnan, S.; Pusuluri, S.; Pirttikangas, S.; Peltonen, E. Generative AI for Cyber Threat Intelligence: Applications, Challenges, and Analysis of Real-World Case Studies. Artif. Intell. Rev. 2025, 58, 336.

- Li, H.; Li, Y.; Li, K. Phishing Email Uniform Resource Locator Detection Based on Large Language Model. In Proceedings of the International Conference on Computer Application and Information Security (ICCAIS 2024), Wuhan, China, 20–22 December 2024; SPIE: Bellingham, WA, USA, 2025; Volume 13562, pp. 1245–1250.

- Zeng, V.; Baki, S.; El Aassal, A.; Verma, R.; Teixeira De Moraes, L.F.; Das, A. Diverse Datasets and a Customizable Benchmarking Framework for Phishing. In Proceedings of the Sixth International Workshop on Security and Privacy Analytics, New Orleans, LA, USA, 18 March 2020.

- Waltman, L.; Van Eck, N.J.; Noyons, E.C.M. A Unified Approach to Mapping and Clustering of Bibliometric Networks. J. Informetr. 2010, 4, 629–635.

- Donthu, N.; Kumar, S.; Mukherjee, D.; Pandey, N.; Lim, W.M. How to Conduct a Bibliometric Analysis: An Overview and Guidelines. J. Bus. Res. 2021, 133, 285–296.

- Anti-Phishing Working Group (APWG). Phishing Activity Trends Report, 1st Quarter 2025; Anti-Phishing Working Group (APWG): Lexington, MA, USA, 2025.

- European Union Agency for Cybersecurity. ENISA Threat Landscape 2024: July 2023 to June 2024; European Union Agency for Cybersecurity (ENISA): Luxembourg, 2024.

- Microsoft Digital Defense Report 2024. Available online: https://cdn-dynmedia-1.microsoft.com/is/content/microsoftcorp/microsoft/final/en-us/microsoft-brand/documents/Microsoft%20Digital%20Defense%20Report%202024%20%281%29.pdf (accessed on 14 September 2025).

| Time Frame * | Dominant Approaches | Example Technologies/Features | Characteristics |
|---|---|---|---|
| 2000–2005 | List-based approaches [33,34] | Blacklists and whitelists (e.g., Google Safe Browsing, Microsoft SmartScreen) | Simple and fast; high false-negative rate for zero-day attacks |
| 2006–2010 | Visual similarity-based approaches [34,35] | DOM structure comparison, screenshot matching | Effective for look-alike pages; computationally expensive |
| 2011–2016 | URL and website content feature-based approaches (heuristics) [34,35] | URL length, HTTPS presence, number of forms | Manual rules; easy to bypass; low adaptability to evolving attacks |
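The heuristic features listed for 2011–2016 (URL length, HTTPS presence, number of forms) can be computed directly from a URL and its HTML. The sketch below is a minimal illustration using only the Python standard library; the function and key names, and the extra `@`-symbol, IP-host, and dot-count features, are hypothetical choices made for illustration rather than the feature set of any reviewed study, and no decision rules or thresholds are included.

```python
from html.parser import HTMLParser
from ipaddress import ip_address
from urllib.parse import urlsplit


class FormCounter(HTMLParser):
    """Counts <form> start tags; HTMLParser lower-cases tag names."""

    def __init__(self):
        super().__init__()
        self.forms = 0

    def handle_starttag(self, tag, attrs):
        if tag == "form":
            self.forms += 1


def host_is_ip(host):
    """True if the host part is a literal IPv4 or IPv6 address."""
    try:
        ip_address(host)
        return True
    except ValueError:
        return False


def heuristic_features(url, html=""):
    """Hypothetical hand-crafted features in the style of 2011-2016 heuristics."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # lower-cased host without port or credentials
    counter = FormCounter()
    counter.feed(html)
    counter.close()
    return {
        "url_length": len(url),
        "uses_https": parts.scheme == "https",
        "has_at_symbol": "@" in url,
        "host_is_ip": host_is_ip(host),
        "num_dots_in_host": host.count("."),
        "num_forms": counter.forms,
    }


features = heuristic_features(
    "http://192.168.10.5/login@secure",
    "<form action='x'></form><FORM></FORM>",
)
print(features)
# -> {'url_length': 32, 'uses_https': False, 'has_at_symbol': True,
#     'host_is_ip': True, 'num_dots_in_host': 3, 'num_forms': 2}
```

Because each feature is a fixed, inspectable rule, an attacker can sidestep it (for example, by rendering the credential form from a script instead of a static `<form>` tag), which matches the low adaptability noted in the table.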

| No. | Study | Data Quality | Class Balance | External Sources Used (Blacklists/Metadata) | Risk of Data Leakage | Validation Method | Model Selection Procedure | Evaluation/System Metrics | Handling of Class Imbalance |
|---|---|---|---|---|---|---|---|---|---|
| 1 | [36] | Construction and sources: combined; UCI Machine Learning Repository “Phishing Websites Data Set”; Kaggle “Phishing website dataset”; Preprocessing: standardization applied by authors; datasets described as preprocessed/normalized; Total items: 13,511. | Not reported | Labels: UCI Phishing Websites Data Set; Kaggle “Phishing website dataset”. Metadata: WHOIS-derived domain age; DNS record presence; web traffic; Google index; PageRank; external links (per dataset feature list). | Medium; datasets from UCI and Kaggle were merged, and separation or deduplication procedures were not described in detail. | 10-fold CV for the ensemble models | Classifiers compared by accuracy across datasets; hyperparameters and selection procedure not described. | Evaluation: Accuracy; Precision; Recall; F1-score; ROC AUC; Cohen’s kappa. System metrics: Not reported. | Partially addressed (metrics only) |
| 2 | [37] | Construction and sources: combined; PhishTank; MillerSmiles; source of benign: Not reported; Acquisition window: Not reported; Preprocessing: Not reported; Total items: 11,055. | Not reported | Labels: PhishTank; MillerSmiles; benign labels: Not reported. Metadata: TLS/SSL certificate information; domain registration length/age (WHOIS); DNS record presence; web traffic rank; PageRank; Google index; links pointing to page; statistical list of phishing IP addresses. | Medium; combined PhishTank and MillerSmiles; deduplication and temporal/host-level separation not described. | 5-fold CV | GridSearchCV for Random Forest; optimal hyperparameters reported; no nested evaluation. | Evaluation: Accuracy, Precision, Recall, F1, confusion matrix. System metrics: controlled testbed; average response time 4 s (prototype) vs. 6 s (Chrome extension), i.e., 33.3% lower time overhead. | Partially addressed (metrics only) |
| 3 | [38] | Construction and sources: single-source; ISCX-URL2016; OpenPhish; PhishTank; UCI Machine Learning Repository; Mendeley website dataset; Preprocessing: removal of empty and NaN values; removal of redundant/empty fields; URL-based features only; Total items: Not reported. | Imbalanced; ISCX-URL2016: benign 35,000/phishing 10,000; OpenPhish: benign 20,025,990/phishing 85,003; PhishTank: benign 48,009/phishing 48,009; UCI: benign 204,863/phishing 24,567; Mendeley: benign 58,000/phishing 30,647 | Labels: ISCX-URL2016; OpenPhish; PhishTank; UCI Machine Learning Repository; Mendeley website dataset; snapshot/version not reported. Metadata: none | High; feature selection performed before dataset split; only an 80/20 random hold-out described; no deduplication or temporal separation detailed | Hold-out split (80/20) | Hyperparameter-optimized ANN; H-FFGWO for feature selection; parameters set after experimentation; no formal search procedure described | Evaluation: Accuracy; Precision; Recall; F1-score. System metrics: Not reported | Partially addressed (metrics only) |
| 4 | [39] | Construction and sources: combined; UCI ML Repository; PhishTank; Starting Point Directory; Acquisition window: UCI accessed 30 Mar 2020; PhishTank and Starting Point Directory accessed 30 Jul 2019; Preprocessing: continuous attributes converted to categorical; duplicate or invalid URL filtering not reported; Total items: UCI_DS1 = 11,055; UCI_DS2 = 1353; Phish_NetDS = 10,493. | Imbalanced; UCI_DS1: phishing 6157, benign 4898; UCI_DS2: Not reported; Phish_NetDS: phishing 4654, benign 5839. | Labels: UCI repository; Phish_NetDS phishing labels from PhishTank, benign from Starting Point Directory. Metadata: WHOIS domain data, domain age checker, Google index/SEO tools; DNS record. | Medium; multiple datasets evaluated with an internal 65/35 hold-out only; no deduplication or temporal split reported. | Hold-out split 65/35; stratification not reported. | Architecture and hyperparameters specified (Deep_Radial m-6-5-4-3-2; activations; epochs = 1000; smoothing = 0.1; RBF spread = 1.0); base-classifier weights optimized with IntSquad (DE + SQP); selection procedure for these settings not described. | Evaluation: Accuracy, Precision, Recall, F1, MCC, TPR, FPR. System metrics: the proposed ensemble was 3.54–5.83% slower than the DNN (test detection time, averaged over multiple runs). | Partially addressed (metrics only) |
| 5 | [40] | Construction and sources: combined; PhishTank phishing + Alexa Top-1M-derived benign; Acquisition window: PhishTank Aug 2006–Mar 2018; Alexa snapshot date not reported; Preprocessing: liveness check, removal of non-surviving or HTML-error pages, de-duplication of benign links via search engine collection; Total items: 490,408 URLs. | Balanced; overall 245,385 phishing/245,023 benign; Train 196,308/196,019; Validation 24,538/24,502; Test 24,539/24,502. | Labels: PhishTank (August 2006–March 2018) for phishing; Alexa top domains with search engine top-10 links for benign. Metadata: none. | Medium; combined sources; no deduplication reported. | Fixed split 392,327/49,040/49,041 and separate 10-fold CV reported. | Hyperparameters explored on the validation set and chosen by accuracy/loss: RNN units {8, 16, 32, 64, 128}, best 64; CNN kernel sizes 2–7, best {5, 6, 7}; batch size 2048; epochs 32; optimizer Adam, learning rate 0.01. | Evaluation: Accuracy, Precision, Recall, F1, AUC. System metrics: training time 4426.15 s and test time 40.66 s; average per-URL detection 0.4 ms. | Partially addressed (metrics only) |
| 6 | [41] | Construction and sources: single-source; UCI Machine Learning Repository “Website Phishing Data Set”; Acquisition window: snapshot retrieved 9 May 2016; Preprocessing: dataset-encoded features with values −1/0/1 as described; Total items: 1353. | Imbalanced; phishing 702, legitimate 548, suspicious 103; per-split distributions: Not reported. | Labels: pre-labeled benchmark (UCI). Metadata: features beyond the URL string included in the UCI dataset (e.g., Age of Domain, Website Traffic, HTTPS/SSL); specific external providers: Not reported. | Medium; no per-fold description for GA; single global “best features by GA”; no nested CV | 10-fold CV, also 70/30 hold-out (reported as yielding similar results; paper presents CV results) | GA used to select and to weight features; DNN architecture and hyperparameters given (TanhWithDropout; 2 hidden layers; 50 neurons each; dropout 0.5; ADADELTA; cross-entropy; max epochs 100) without describing how these settings were chosen. | Evaluation: Accuracy, Sensitivity (TPR), Specificity (TNR), G-mean; multi-class formulas explicitly defined. System metrics: Not reported | Partially addressed (metrics only) |
| 7 | [42] | Public dataset: UCI Phishing Websites (11,055 URLs), collected from PhishTank, MillerSmiles, and Google search operators. | Imbalanced; overall: 55.69% phishing vs. 44.31% benign | Labels: pre-labeled benchmark (UCI). Features: dataset-provided fields only; no external metadata. | High; hyperparameters chosen to maximize test-set accuracy across g, h, activation, cβ. | Random split (80/20), stratified | Manual experimental tuning (Design Risk Minimization + Monte Carlo) over g, h, activation, cβ; selection by test-set accuracy; no nested selection. | Evaluation: Accuracy, TPR, FPR, Precision, Recall, F1, MCC. System metrics: test run time of about 1 s per model. | Partially addressed (metrics only) |
| 8 | [43] | MUPD: collected from PhishTank (phishing) and DomCop top-4M (legitimate); deduplicated and balanced; 2,307,800 URLs after deduplication. Sahingoz: 26,052 URLs after deduplication; also evaluated without preprocessing at 73,575 URLs. | Near-balanced (overall). MUPD: 1,167,201 phishing vs. 1,140,599 benign; Sahingoz: 14,356 vs. 11,696; Sahingoz (no preprocessing): 37,175 vs. 36,400. | Labels: PhishTank (phishing) and benign derived from DomCop top-4M; plus the published Sahingoz dataset. Features: URL string only (character-level CNN); no external metadata. | Low–medium; URLs and hosts deduplicated; both random 60/20/20 and date-based splits reported; temporal drift observed | Random split 60/20/20. Additional time-based split on MUPD: train 2006–2013, validation 2013–2015, test 2015–2018. | CNN (PUCNN) selected by highest validation accuracy; no nested CV reported. | Evaluation: Accuracy, Precision, Recall, F1. System metrics: Not reported. | Adequately addressed (metrics and techniques) |
| 9 | [44] | Sources: 5000 best.com (legitimate), PhishTank (phishing); Construction: combined; Size: 51,200 URLs total. PhishTank access date: 21 May 2021. | Imbalanced; Legitimate 40,000; Phishing 11,200; overall distribution only. | Labels: 5000 best.com (legitimate), PhishTank (phishing). Metadata: none (features derived solely from the URL string). | High | Not reported | FS-CNN hyperparameters fixed; RFE-SVM used for feature selection; feature-map sizes compared | Evaluation: Accuracy, Recall, F1-score, Precision. System metrics: throughput 460 URLs/s; packet inspection time increasing from 77 ms (100 packets) to 1129 ms (5000 packets); memory usage 570 MB; effect of URL length on packet inspection time: from 53 ms (15 characters) to 134 ms (100 characters) | Partially addressed (metrics only) |
| 10 | [45] | Public repository: Mendeley Data, dataset “Phishing Dataset for Machine Learning”; 10,000 webpages total; sources: PhishTank, OpenPhish, Alexa, Common Crawl; 48 features; capture windows: 2015-01–2015-05; 2017-05–2017-06. | Imbalanced; distribution: Not reported. | Labels: PhishTank, OpenPhish; Alexa, Common Crawl. Metadata: none | High; combined multi-source dataset; split procedure not detailed | Hold-out split; ratio: Not reported (training and testing phases mentioned) | Hyperparameters specified for baselines; LightGBM parameter selection: Not reported; overall selection procedure: Not reported | Evaluation: Accuracy, Precision, Recall, F1-score, ROC curves. System metrics: Not reported | Adequately addressed (metrics and technique: random naive oversampling) |
| 11 | [46] | Sources: PhishTank, OpenPhish, Alexa Top Sites; Collection window: January to June 2017; Preprocessing: duplicates removed, HTTP 404 pages excluded; Total: 4000 URLs | Balanced; overall: phishing 2000; benign 2000 | Labels: PhishTank; OpenPhish; Alexa Top Sites (legitimate). Metadata: none | Low | Not applicable | Not applicable (no machine learning model) | Evaluation: True Positive Rate; True Negative Rate; Accuracy. System metrics: Average response time 2358 ms; First-level 1727 ms; Second-level 2043 ms | Partially addressed (metrics only) |
| 12 | [47] | Construction and sources: combined; PhishTank phishing pages plus target and normal pages collected by the authors; comparison vectors from CSS layout features. Preprocessing: manual filtering of invalid pages; exclusion of pages with too-small layout elements or an appearance entirely different from their targets. Total items: 24,051 samples (comparison vectors). | Imbalanced; Train: Positive (similar) 3719, Negative (different) 17,926; Test: Positive 414, Negative 1992 | Labels: PhishTank (phishing URLs); target/normal pages from the authors’ collection. Metadata: none | Medium; pairwise vectors centered on shared target pages; split given by counts without page-level deduplication details | Hold-out split into training and testing sets; ratio not specified | Manual parameter variation reported for SVM gamma, Decision Tree max_depth, AdaBoost n_estimators, Random Forest n_estimators; no formal search or validation split described | Evaluation: Accuracy, Precision, Recall, F1-score. System metrics: Not reported | Partially addressed (metrics only); no explicit resampling or class weighting |
| 13 | [48] | [A] | | | | | | | |
| 14 | [49] | Construction and sources: single-source; UCI Machine Learning Repository “Phishing Websites Data Set”; Preprocessing: dropped index column, recoded feature values to 0/1, removed records with missing values; Total items: 11,055 | Balanced; per-class counts: Not reported | Labels: UCI “Phishing Websites Data Set”; Metadata: none | High; feature selection performed before the train/test split; single 70/30 hold-out; separation details limited | Hold-out split (70/30); stratification: Not reported | Multiple classifiers and feature-selection methods compared and selected by hold-out accuracy; random parameter tuning mentioned, details not reported. | Evaluation: Accuracy. System metrics: Not reported. | Adequately addressed |
| 15 | [50] | Construction and sources: single-source; UCI Machine Learning Repository (Website Phishing Data Set); Total items: 1353. | Imbalanced; Overall: phishing 702, legitimate 548, suspicious 103; Per-split: Not reported. | Labels: UCI Website Phishing Data Set; Metadata: Web Traffic, Domain Age; providers not stated. | Low; single UCI dataset; random 70/30 hold-out; no dataset merging described | Hold-out split (70/30), random | Not reported (architecture and hyperparameters provided without describing the selection procedure) | Evaluation: Accuracy. System metrics: Not reported | Not addressed (accuracy only) |
| 16 | [51] | Construction and sources: single-source; Hannousse&Yahiouche benchmark dataset (Kaggle: web-page-phishing-detection-dataset); Total items: 11,430. | Balanced; overall: Legitimate 5715, Phishing 5715; per-split: Not reported | Labels: benchmark dataset “status” column. Metadata: none. | Medium; random 80/20 hold-out; no stratification stated. | Hold-out split (80/20), random; stratification: Not reported. | Best model reported (CNN with 8 g); architecture outlined; hyperparameters not specified; selection procedure not described. | Evaluation: Accuracy. System metrics: Training time enhancement ratio vs. 41-feature baseline (percent, 4-feature set): Decision tree 88.89; Gradient boosting 86.52; AdaBoost 81.93; XGBoost 73.42; Random forest 65.05; ExtraTrees 61.60; Logistic regression 50.00; LightGBM 44.64; CatBoost 21.62; Naive Bayes 0.00. | Not applicable (balanced dataset) |
| 17 | [52] | Construction and sources: combined; Huddersfield phishing dataset built from PhishTank, MillerSmiles, Google query operators; plus Tan (2018) dataset from PhishTank, OpenPhish, Alexa, Common Crawl; Acquisition windows: 2012–2018 and 2015–2017, respectively; Total items: 12,456. | Mixed: Dataset 2 balanced (5000 phishing/5000 legitimate); Dataset 1 not reported | Labels: PhishTank, MillerSmiles, Google (Huddersfield); PhishTank, OpenPhish; Alexa/Common Crawl (Tan). Metadata: domain age, DNS record, website traffic, PageRank, Google Index, backlinks count, statistical-reports feature. | Medium; combined datasets and random split; no de-duplication or time-based isolation described; features include list/traffic-based signals. | Hold-out split (70/30), random; 10-fold CV also mentioned | Sixteen scikit-learn classifiers compared; RandomForest reported as best; hyperparameters and selection procedure not described. | Evaluation: Accuracy, Precision, Recall, AUC, Mean squared error. System metrics: Not reported | Partially addressed (metrics only) |
| 18 | [53] | [R] | | | | | | | |
| 19 | [54] | Construction and sources: combined; ISCX-URL-2016 (malicious), Kaggle (benign); Preprocessing: one-hot encoding of URL characters (84-symbol alphabet), fixed length 116 via trimming and zero-padding; Total items: 99,658. | Balanced; Train: Not reported; Test: Benign 10,002, Malicious 9911; Validation: Not applicable. | Labels: ISCX-URL-2016 (malicious: phishing, malware, spam, defacement); Kaggle (benign). Metadata: none. | High; VAE trained on the entire dataset before the split, so the test distribution influenced the feature extractor; deduplication and per-domain separation not reported. | Hold-out split (80/20); stratification: Not reported; randomization: Not reported. | VAE latent dimension chosen via loss curves over L ∈ {5, 10, 24, 48, 64} (selected L = 24); DNN architecture and hyperparameters provided without describing the selection procedure; no nested selection. | Evaluation: Accuracy, Precision, Recall, F1-score, ROC. System metrics: Response time 1.9 s; total training time 268 s. | Not applicable (balanced dataset) |
| 20 | [55] | Construction and sources: single-source; Kaggle “Malicious and Benign URLs” dataset; Preprocessing: webpage paragraph text extraction with Requests and BeautifulSoup, text cleaning (lowercasing, stopword removal, lemmatization), vectorization to CSV and merge into combined.csv; Total items: 12,982. | Balanced; overall: Malicious 6478, Benign 6504. | Labels: Kaggle “Malicious and Benign URLs” dataset; Metadata: none. | High; vectorizers fitted on the full dataset and saved to CSV before applying 10-fold CV on combined.csv; no de-duplication or domain-wise split reported. | 10-fold CV | Algorithms compared across feature sets and vectorizers; best reported configuration is Hashing Vectorizer with Extreme Gradient Boosting; architecture, hyperparameters, and selection procedure not described. | Evaluation: Accuracy; Precision; Recall; F1-score. System metrics: Not reported. | Adequately addressed (balanced subset; Precision, Recall, F1-score) |
| 21 | [56] | Construction and sources: multiple public datasets evaluated separately; Kaggle (D1–D2), CatchPhish (D3–D5), Ebbu2017 (D6); Preprocessing: removal of missing and duplicate URLs; normalization of quoted/comma-separated entries; Total items: 969,311 (six datasets). | Imbalanced; D1: phishing 114,203, benign 392,801; D2: phishing 55,914, benign 39,996; D3: phishing 40,668, benign 85,409; D4: phishing 40,668, benign 42,220; D5: phishing 40,668, benign 43,189; D6: phishing 37,175, benign 36,400 | Labels: Kaggle (D1–D2), CatchPhish (D3–D5), Ebbu2017 (D6). Metadata: none | Medium; six pre-labeled public datasets; hold-out 70/30 without stated stratification/time policy; duplicates removed within datasets only; potential cross-split overlap not excluded | Hold-out split (70/30); stratification: Not reported | Eight classifiers compared on hold-out; Random Forest selected as best; hyperparameters WEKA default; selection procedure not described; 30 features chosen via domain knowledge + ReliefF | Evaluation: Accuracy, Precision, TPR, FPR, TNR, FNR, F1-score, ROC. System metrics: Training time RF on D1 384 s; latency, memory, throughput: Not reported | Partially addressed (metrics only) |
| 22 | [57] | Construction and sources: single-source; UCI Machine Learning Repository “Website Phishing Data Set”; Total items: 1353. | Imbalanced; overall: phishing 702, suspicious 103, legitimate 548. | Labels: UCI Machine Learning Repository “Website Phishing Data Set”; Metadata: age of domain; web traffic; SSL final state | Low; single-source dataset with explicitly described exclusive random hold-out splits; no mixing of datasets or reuse of test items indicated. | Neural network: hold-out split 60/20/20, random; Decision tree, Naïve Bayes, SVM: hold-out split 40/60, random. | Neural network architecture specified (9-10-3; backprop) without describing a selection procedure; decision tree pruned, selection procedure not described; SVM and Naïve Bayes hyperparameters and selection procedure: Not reported. | Evaluation: Accuracy, True Positive Rate, False Positive Rate, Confusion matrix. System metrics: Not reported | Partially addressed (metrics only) |
| 23 | [58] | Construction and sources: combined; D3M (malicious) and JSUNPACK plus Alexa top 100 (benign); Preprocessing: regex filtering to plain JS and AST parsing with Esprima, including syntactic validation; Total items: 5024 JS codes. | Balanced; overall: malicious 2512; benign 2512 | Labels: D3M (malicious); JSUNPACK and Alexa top 100 (benign); snapshot dates not reported. Metadata: none | High; large-scale data augmentation (dummy plain-JS and AST-JS manipulations) combined with 10-fold CV performed by splitting feature vectors, with no grouping by original code reported | 10-fold CV | 10-fold CV hyperparameter sweep (vector_size 100–1000; min_count 1–10; window 1–8); selected vector_size = 200, min_count = 5, window = 8; SVM (kernel = linear, C = 1) | Evaluation: Precision; Recall; F1-score; ROC AUC. System metrics: training time per JS code 1.4780 s (PV-DBoW), 1.5290 s (PV-DM); detection time per JS code 0.0019 s (PV-DBoW), 0.0012 s (PV-DM) | Partially addressed (metrics only) |
| 24 | [59] | [A] | | | | | | | |
| 25 | [60] | Construction and sources: combined (Alexa; PhishTank); Acquisition window: 50 K Phishing Detection (50 K-PD) 2009–2017; 50 K Image Phishing Detection (50 K-IPD) 2009–2017; 2 K Phishing Detection (2 K-PD) 2017; Preprocessing: Not reported; Total items: 49,947; 53,103; 2000. | Imbalanced overall; 50 K-PD: Legitimate 30,873, Phishing 19,074; 2 K-PD: Legitimate 1000, Phishing 1000; 50 K-IPD: Legitimate 28,320, Phishing 24,789; per-split counts: Not reported. | Labels: PhishTank (phishing); Alexa rankings plus hyperlinks (legitimate); Metadata: none. | Medium; combined sources and hold-out split; no deduplication or domain/time separation reported. | Hold-out split (70/30); stratification: Not reported. | Two-layer stacking with base models GBDT, XGBoost, LightGBM; base-model candidates compared via 5-fold CV using Kappa and average error; parameters mostly default; architecture and hyperparameters provided without a formal search procedure. | Evaluation: Accuracy, Missing rate, False alarm rate. System metrics: Training time 64 min (CPU i7-6700HQ; 16 GB RAM; 35 K training samples) | Partially addressed (metrics only) |
| 26 | [61] | Construction and sources: single-source; Mendeley Data “Phishing Websites Dataset” (2021); Preprocessing: random down-selection from 80,000 to 8000 URLs; Total items: 8000. | Balanced; Overall: Legitimate 4000; Phishing 4000. | Labels: Mendeley Data “Phishing Websites Dataset” (2021). Metadata: none. | Medium; random 80/20 split at URL level stated; no deduplication or domain-level separation reported. | Hold-out split (80/20); randomization: Not reported; stratification: Not reported. | Not reported; algorithms compared (DT, SVM, RF); hyperparameters: Not reported. | Evaluation: Accuracy, Precision, Recall, F1-score. System metrics: Not reported. | Not applicable (balanced dataset) |
| 27 | [62] | Construction and sources: combined; PhishTank (phishing URLs, phishing websites), PHISHNET (legitimate websites, URLs); Preprocessing: inactive phishing URLs only; extraction of links and texts from webpages; Total items: 2,599,834. | Not reported | Labels: PhishTank, PHISHNET. Metadata: none | Medium; combined sources and no explicit train–test separation; evaluation against production classifiers with unknown training data (GPPF, Bitdefender) | Not applicable | Not applicable (no model trained; evaluation on GPPF and commercial tools) | Evaluation: Attack success rate; Transferability success rate; Detection rate (Pelican). System metrics: Attack crafting time per website < 1 s; Feature inference time: URL/DOM 0.68 h, term 11.66 h, total 12.34 h | Not addressed |
| 28 | [63] | Construction and sources: combined; Almeida SMS spam dataset; Pinterest smishing images converted to text; Preprocessing: punctuation removal, lowercasing, tokenization, stemming, TF-IDF vectorization; short-to-long URL expansion for analysis; Total items: 5858 messages. | Imbalanced; Overall: Smishing 538, Ham 5320; Per-split: Not reported. | Labels: Almeida SMS spam collection; manual extraction of smishing from spam plus Pinterest images converted to text; snapshot/version not reported. Metadata: PhishTank blacklist lookups; domain age from WHOIS/RDAP; other checks on HTML source and APK download. | Medium; combined sources; no deduplication or time-based separation described; model chosen and evaluated on the same dataset without nesting. | 5-fold CV | Classifier families compared; Naive Bayes selected by empirical performance; hyperparameters and selection procedure not reported. | Evaluation: Accuracy, Precision, Recall, F1-score. System metrics: Not reported. | Partially addressed (metrics only) |
| 29 | [64] | Construction and sources: combined; Mendeley Phishing Websites datasets D1 and D2 (legitimate from Alexa; phishing from PhishTank); Preprocessing: datasets verified for null/duplicate samples; SMOTE-Tomek balancing applied. | Imbalanced; D1 overall: Legitimate 27,998, Phishing 30,647; after SMOTE-Tomek: Legitimate 29,194, Phishing 29,194; D2 overall: Legitimate 58,000, Phishing 30,647; after SMOTE-Tomek: Legitimate 56,605, Phishing 56,605 | Labels: PhishTank; Alexa; snapshot: Not reported. Metadata: DNS records and resolver signals (A/NS counts, MX servers, TTL, number of resolved IPs), TLS certificate validity, Sender Policy Framework (SPF), redirects, response time, Google indexing, ASN/IP features; provided as dataset attributes | Medium; SMOTE-Tomek applied before the 80/20 split, so synthetic samples may overlap across train/test; no stratified or temporal split described | Hold-out split (80/20); random; stratification: Not reported | Architectures and hyperparameters provided without describing the selection procedure (XGBoost, CNN, LSTM, CNN-LSTM, LSTM-CNN) | Evaluation: Accuracy; Precision; Recall; F1-score. System metrics: Not reported | Adequately addressed (metrics and technique: SMOTE-Tomek links) |
| 30 | [65] | Construction and sources: single-source; UCI Machine Learning Repository; Total items: Not reported. | Not reported | Labels: Not reported; Metadata: Not reported. | High; separation procedure not described; single-source dataset; potential overlap between training and testing not ruled out. | Hold-out split; split proportions: Not reported; stratification: Not reported. | Architecture and hyperparameters declared without describing the selection procedure; weights optimized via HBA using MSE as fitness. | Evaluation: Accuracy; Precision; Recall; F1-score; Error rate. System metrics: Convergence time 528 s; Learning iterations 1689; Minimum MSE 0.00498. | Partially addressed (metrics only) |
| 31 | [66] | Construction and sources: single-source; UCI Phishing Websites Dataset (UCI repository); Total items: 11,055. | Imbalanced; overall: phishing 4898, legitimate 6157; per-split: Not reported. | Labels: UCI Phishing Websites Dataset (snapshot/version: Not reported). Metadata: Website traffic (Alexa rank), Page rank, Google index, DNS record, Domain registration, SSL final state, Statistical report (Top 10 domains/IPs from PhishTank). | Medium; single-source dataset with random stratified 5-fold CV; no deduplication or time-window separation reported; hyperparameters and architecture selected using the same CV setting | Stratified 5-fold CV; dataset shuffled before batching; 10 runs averaged; non-converged runs discarded. | Grid over Adam parameters α ∈ {0.05, 0.01, 0.1, 0.5, 1}, β1 ∈ {0.1, 0.3, 0.5, 0.7, 0.9}; architectures tested (1–3 hidden layers; various neuron counts); best model chosen by Accuracy/F1 on stratified 5-fold CV; no nested procedure described. | Evaluation: Accuracy; Precision; Recall; F1-score; False Positive Rate; False Negative Rate. System metrics: Not reported. | Partially addressed (metrics only) |
| 32 | [67] | Construction and sources: combined; Tan (PhishTank, OpenPhish, Alexa, General Archives); Hannousse&Yahiouche (PhishTank, OpenPhish, Alexa, Yandex); Acquisition window: two collection sessions (January–May and May–June) across two years (Tan); 2021 build (Hannousse&Yahiouche); Preprocessing: broken/404 pages removed and screenshots saved for filtering (Tan); duplicates and inactive URLs removed, DOM used for limited-lifetime URLs (Hannousse&Yahiouche); Total items: 21,430 (10,000 + 11,430). | Balanced; Tan: 5000 phishing/5000 benign; Hannousse&Yahiouche: 5715 phishing/5715 benign | Labels: PhishTank, OpenPhish; benign from Alexa, General Archives (Tan) and Alexa, Yandex (Hannousse&Yahiouche); Metadata: none | Medium; random 70/30 hold-out stated only for dataset 2; no domain/time-wise isolation described; deduplication mentioned only for dataset 2; Tan collected in sessions across two years | Hold-out split (70/30) (dataset 2); Tan dataset: Not reported | Models compared (RF, SVM, DT, AdaBoost); no hyperparameters or tuning/selection procedure described | Evaluation: Accuracy. System metrics: Execution time (s): 0.983028 [48 features, dataset 1, RF]; 0.970703 [10 features, dataset 1, RF]; 0.969786 [87 features, dataset 2, RF]; 0.957109 [10 features, dataset 2, RF] | Not applicable (balanced) |
| 33 | [68] | Construction and sources: single-source; UCI Machine Learning Repository “Website Phishing Data Set”; Acquisition window: page accessed 4 July 2022; Total items: 1353. | Imbalanced; overall distribution: Legitimate 548; Suspicious 103; Phishing 702. | Labels: UCI “Website Phishing Data Set” (accessed 4 July 2022). Metadata: SSL/TLS final state, domain age, and site traffic provided within the UCI dataset; no additional metadata retrieval by the authors. | Medium; single hold-out split with no details on randomization or deduplication checks; multiple runs on the same split. | Hold-out split (70/30); repetitions: 20 runs; stratification: Not reported. | Architectures and hyperparameters provided without describing the selection procedure; examples: ANN 9-10-10-1; learning rate 0.01; momentum 0.1; epochs 200; batch size 50. | Evaluation: RMSE. System metrics: Not reported | Not addressed |
| 34 | [69] | [A] | |||||||

| 35 | [70] | Construction and sources: single-source; Kaggle “malicious-and-benign-urls” (siddharthkumar25); Acquisition window: accessed 11 September 2022; Preprocessing: character-level tokenization, fixed-length padding/truncation, embedding; Total items: 450,176. | Imbalanced; overall: Phishing 104,438; Benign 345,738. | Labels: Kaggle “malicious-and-benign-urls” (accessed 11 September 2022). Metadata: none. | Medium; single Kaggle snapshot with 70/30 hold-out; no deduplication or temporal separation described. | Hold-out split (70/30); stratification: Not reported. | Architecture and hyperparameters provided without describing the selection procedure; LSTM, Bi-LSTM, GRU models. | Evaluation: Accuracy; Precision; Recall; F1-score. System metrics: Not reported | Partially addressed (metrics only) |

| 36 | [71] | Construction and sources: single-source; UCI Machine Learning Repository “Phishing Websites”; Total items: 11,055. | Imbalanced; Overall: Phishing 4898; Benign 6157; Per-split: Not reported. | Labels: UCI “Phishing Websites” dataset; snapshot/version: Not reported. Metadata: SSLfinal-State, Domain-registration-length, Age-of-domain, DNSRecord, Web-traffic, Page-Rank, Google-Index. | Medium; single-source dataset with 10-fold CV; no deduplication or split details reported. | 10-fold CV; randomization: Not reported; stratification: Not reported. | Random Forest tuned via one-at-a-time parameter sweeps; final parameters reported: maxDepth = 14, numIterations = 105, batchSize = 10; MLP and Naive Bayes hyperparameters/architecture not detailed; selection procedure not described. | Evaluation: Accuracy. System metrics: Processing time with all features: RF 15 s, MLP 945 s, NB 1 s; FSOR: RF 10 s, MLP 600 s, NB 1 s; FSFM: RF 6 s, MLP 360 s, NB 1 s. | Not addressed. |

| 37 | [72] | Construction and sources: single-source; PhishTank URLs with target labels, plus collected WHOIS, DNS, screenshot, HTML, and favicon data; Acquisition window: October 2021–June 2022; Preprocessing: manual removal of invalid/blank pages, correction of mislabels, standardized screenshots; Total items: 3500. | Imbalanced; 70 target-brand classes; per-class counts: Not reported; per-split distributions: stratified 80/20. | Labels: PhishTank target labels; authors corrected some labels during cleaning. Metadata: WHOIS (creation/expiration dates, registrant country), DNS A and CNAME records, HTML text and tag counts, favicon ICO hex, OCR text from screenshots. | Medium; random stratified 80/20 split on URLs; no campaign- or domain-level separation described. | Hold-out split (80/20); stratified random. | Architecture and hyperparameters provided without describing the selection procedure; learning rate 0.1; batch size 50; epochs 100; random state 0. | Evaluation: Accuracy; Macro-F1; Weighted-F1. System metrics: Not reported | Partially addressed (metrics only) |

| 38 | [73] | Construction and sources: single-source; University of Huddersfield (Phishing Websites Dataset No. 1 and No. 2); Preprocessing: duplicate removal; Total items: Not reported. | Not reported | Labels: University of Huddersfield Phishing Websites Dataset No. 1 and No. 2; Metadata: DNS records; domain age; PageRank; Website Traffic; Google Index | Medium; feature selection and preprocessing described as performed outside the cross-validation loop; per-fold separation not detailed | 10-fold CV | Architecture (3 hidden layers, 20 nodes each) and hyperparameters (learning rate 0.001; epochs 50) provided without describing the selection procedure | Evaluation: Accuracy; Precision; Recall; F-score; TPR; TNR; FPR; FNR; MCC. System metrics: Not reported | Partially addressed (metrics only) |

| 39 | [74] | [A] | |||||||

| 40 | [75] | Construction and sources: combined; UCI Phishing Websites dataset (repository) for training and a separate live URL set for evaluation; Total items: 11,055 (UCI) and 2000 live URLs. | Balanced; Overall (live set): Phishing 1000, Legitimate 1000; UCI training distribution: Not reported. | Labels: UCI Phishing Websites dataset for training; labels for the live URL set not described. Metadata: Alexa Top Sites whitelist, PhishTank API blacklist, WHOIS Domain Registration data, DNS record checks, SSL certificate checks, Google index, PageRank. | Medium; dataset splitting and deduplication not described; GridSearchCV used for tuning without a clearly separated evaluation protocol; labeling process for the live set not specified. | Not reported. | Stacked classifiers selected: RF, SVM with RBF kernel, Logistic Regression; GridSearchCV described for SVM and RF; feature importance via RF (Gini) mentioned; search space and selection protocol details not described. | Evaluation: Accuracy; Mean Squared Error. System metrics: Average execution time: proposed framework 0.62 ms; Logistic Regression 0.98 ms; SVM 0.87 ms; Random Forest 1.75 ms. | Not applicable (balanced evaluation set) |

| 41 | [76] | [A] | |||||||

| 42 | [77] | [A] | |||||||

| 43 | [78] | Construction and sources: single-source; UCI Machine Learning Repository phishing websites dataset; Preprocessing: cluster-based oversampling with k-means, removal of 988 “inappropriate” instances; feature selection via correlation filter and Boruta; Total items: 10,068. | Balanced; Overall distribution: 5034 phishing/5034 benign; per-split distributions: Not reported. | Labels: UCI phishing websites dataset (snapshot/version not specified). Metadata: none. | High; oversampling and feature selection performed on the full dataset prior to evaluation; results reported with 10-fold CV without nesting. | 10-fold CV; also reports fixed hold-out partitions 60:40, 70:30, 75:25. | Architecture and hyperparameters provided without describing the selection procedure; twofold FFNN with five hidden layers and eight neurons; SVM kernels: polynomial, RBF; RF parameters not specified. | Evaluation: Accuracy; Precision; Recall; F1-score; MSE. System metrics: Not reported. | Adequately addressed (metrics and techniques) |

| 44 | [79] | [A] | |||||||

| 45 | [80] | Construction and sources: combined; PhishTank (phishing), UNB CIC URL-2016 (legitimate); Total items: 2000. | Balanced; Overall: Phishing 1000, Legitimate 1000; Per-split: Not reported | Labels: PhishTank; UNB CIC URL-2016. Metadata: WHOIS/registration lookup (DNS record, domain age), Alexa ranking; no TLS/DNS TTL/RDAP fields reported | Medium; 80/20 split and 5-fold CV without nesting; algorithm selected on the same data; duplicate handling and stratification not described | Hold-out split 80/20; 5-fold CV (mean CV score reported) | Compared Decision Tree, RF, SVM on the 80/20 split and by mean 5-fold CV score; selected Decision Tree; hyperparameters not described; no nested selection | Evaluation: Accuracy; Precision; Recall; Cross-validation score. System metrics: Not reported | Not applicable (dataset balanced) |

| 46 | [81] | Construction and sources: combined (Ebbu2017; PhishTank; Marchal2014 legitimate URLs); Total items: Ebbu2017 73,575; PhishTank 26,000. | Balanced; Overall: Ebbu2017 Legitimate 36,400, Phishing 37,175; PhishTank dataset Legitimate 13,000, Phishing 13,000; Per-split: Not reported | Labels: Ebbu2017; PhishTank; Marchal2014; Metadata: none | Medium; 10-fold CV without deduplication or temporal controls described; potential overlap of near-duplicate URLs across folds; dataset construction details limited | 10-fold CV on each dataset | Hyperparameter search space reported; best values given (optimizer = adam; activation = relu; dropout = 0.3; epochs = 40; batch size = 128); architecture specified (DNN–LSTM and DNN–BiLSTM); selection procedure not fully described; no nested CV | Evaluation: Accuracy; AUC; F1-score. System metrics: Not reported | Partially addressed (metrics only) |

| 47 | [82] | Construction and sources: combined; Mendeley Data “Phishing dataset for machine learning” and UCI “Phishing Websites” dataset; Total items: 21,055. | Mixed; balanced (Mendeley): Phishing 5000, Legitimate 5000; imbalanced (UCI): Phishing 3793, Legitimate 7262; per-split distributions: Not reported. | Labels: as provided by the two datasets; Metadata: External features present in the datasets (e.g., WebTraffic, SSLfinalState, AgeOfDomain, GoogleIndex, DNSRecord); source services not reported. | Medium; single 70/30 hold-out; no details on randomization, deduplication, or host/domain-level separation | Hold-out split 70/30 train/test; number of runs and stratification not reported. | Architecture: AdaBoost with LightGBM; ≥15 algorithms investigated for comparison; base feature selection methods: RF, Gradient Boosting, LightGBM; hyperparameters not reported; selection procedure not described. | Evaluation: Accuracy; Precision; Recall; F1-score. System metrics: Detection time for entire test set: 14 ms (Dataset 1, full features); 13.9 ms (Dataset 1, consensus); 13.9 ms (Dataset 1, majority); 214 ms (Dataset 2, full features); 185 ms (Dataset 2, majority); 300 ms (Dataset 2, consensus); per-instance detection time 4.63 μs (Dataset 1, consensus); training time figures not reported numerically. | Partially addressed (metrics only) |

| 48 | [83] | Construction and sources: single-source per dataset (three datasets evaluated separately); UCI-2015 (UCI repository), UCI-2016 (UCI repository), MDP-2018 (Mendeley Data); Acquisition window: UCI-2015 donated March 2015; UCI-2016 contributed November 2016; MDP-2018 published March 2018; Total items: 22,408 across the three datasets. | Mixed; UCI-2015 imbalanced (phish 6157; benign 4898); UCI-2016 imbalanced (phish 805; benign 548); MDP-2018 balanced (phishing 5000; benign 5000). | Labels: UCI-2015, UCI-2016, MDP-2018 dataset labels as provided by dataset authors; snapshot months as above; Metadata: none. | Low; 30-fold stratified CV within each dataset; no cross-dataset mixing described. | 30-fold stratified CV (per dataset). | Manual, expert-guided hyperparameter tuning using learning curves and 30-fold CV; best hyperparameters reported per dataset; no formal grid or nested search described. | Evaluation: Accuracy. System metrics: Not reported | Not addressed |

| 49 | [84] | [A] | |||||||

| 50 | [85] | [R] | |||||||

| 51 | [86] | [A] | |||||||

| 52 | [34] | Construction and sources: combined; PhishTank; Alexa Top Sites; Total items: 3526. | Imbalanced; overall: phishing 2119; legitimate 1407; per-split: Not reported. | Labels: PhishTank (phishing), Alexa Top Sites (legitimate); snapshot/version: Not reported. Metadata: WHOIS domain age, Alexa Page Rank, Bing search-engine results using title/description/copyright matching. | Medium; combined sources and repeated random hold-out split; no deduplication or per-domain grouping described, raising the possibility of near-duplicate/domain overlap across splits; authors explicitly note duplicates as a limitation. | Hold-out split 75/25; repeated 10 times with randomly selected training set; metrics averaged across repeats; stratification: Not reported. | Algorithm comparison across RF, J48, LR, BN, MLP, SMO, AdaBoostM1, SVM; RF selected by highest average accuracy over 10 repeated 75/25 splits; RF hyperparameters fixed (ntree = 100; mtry = 4); no inner validation/tuning described. | Evaluation: Sensitivity; Specificity; Precision; Accuracy; Error rate; False positive rate; False negative rate. System metrics: Not reported. | Partially addressed (metrics only) |

| 53 | [87] | Construction and sources: combined; PhishTank (phishing), Alexa top websites, Stuffgate Free Online Website Analyzer, a list of online payment service providers; Preprocessing: removed identical feature vectors; label encoding of class values; Total items: 2544. | Imbalanced; phishing 1428; legitimate 1116 | Labels: PhishTank (2018); Alexa top websites (2018); Stuffgate (2018); online payment service providers list (2018). Metadata: none | Medium; combined sources; random 10-fold CV; no domain/time deduplication described; only “identical values removed” noted | 10-fold CV | Classifier family comparison via 10-fold CV (SMO, Naive Bayes, Random Forest, SVM, AdaBoost, Neural Networks, C4.5, Logistic Regression); selected Logistic Regression; hyperparameters not reported | Evaluation: Accuracy; Precision; Recall/TPR; FPR; TNR; FNR; F1-score; ROC AUC. System metrics: Not reported | Partially addressed (metrics only) |

| 54 | [88] | Construction and sources: combined; Mendeley dataset (benign from Alexa and Common Crawl; phishing from PhishTank and OpenPhish); UCI Phishing Websites dataset; Preprocessing: dropped non-informative/index columns; label normalization; SMOTE applied to Dataset 2; Total items: D1 10,000; D2 12,314 after SMOTE. | Mixed; balanced (D1 5000 phishing; 5000 legitimate); imbalanced (D2 4898 phishing; 6157 legitimate); balanced after SMOTE (D2 6157 phishing; 6157 legitimate) | Labels: Alexa; Common Crawl; PhishTank; OpenPhish; snapshot versions Not reported. Metadata: none. | High; SMOTE described as producing a fully balanced Dataset 2 prior to reporting totals; feature selection/correlation filtering and GridSearchCV discussed without a clearly separated outer evaluation loop; nested CV not specified; CV settings inconsistent (k = 5 vs. stratified k = 10). | Stratified 10-fold CV | GridSearchCV hyperparameter tuning; reported chosen settings include LR (penalty = L2, C = 0.1, solver = saga, max_iter = 500), DT (criterion = gini, max_depth = 3, min_samples_leaf = 5), RF (n_estimators = 150, max_depth = 10, min_samples_split = 5, min_samples_leaf = 2, max_features = log2), KNN (n_neighbors = 3, algorithm = brute), SVC (C = 0.7, kernel = sigmoid), XGBoost (learning_rate = 0.2, n_estimators = 100, max_depth = 5, min_child_weight = 2, subsample = 0.8, colsample_bytree = 1.0), CNN (64 filters, 3 × 3, pool 3 × 3, dense 128, dropout = 0.5), DL (optimizer/learning rate/batch size/dropout tuned). | Evaluation: Accuracy; Precision; Recall; F1-score; FPR. System metrics: CNN training time 94 s 29 ms; other ML models < 10 s; hardware TPU v2-8 (8 cores, 64 GiB). | Adequately addressed (metrics and techniques) |