1. Introduction

Network anomaly detection is critical for maintaining the stability, performance, and security of modern communication systems [1,2,3,4]. It supports key tasks such as Quality of Service (QoS) assurance, early detection of malware and Trojans, and the deployment of Intrusion Detection Systems (IDSs). The core objective is to identify traffic patterns that deviate significantly from normal behavior, particularly in complex, high-volume, and dynamic network environments. Such deviations may indicate system faults or malicious activity. This task is commonly formulated as either a binary or a multi-class classification problem. Binary classification distinguishes between normal and anomalous traffic and is widely adopted due to its simplicity and lower annotation cost. Multi-class classification enables finer-grained identification of specific anomaly types, such as denial-of-service attacks or port scanning, but requires more labeled data and more complex model architectures. In this paper, we adopt the binary classification setting.

Over the years, various techniques have been developed to improve anomaly detection in network traffic. Signature-based methods enable efficient detection of known attack patterns but often fail to generalize to novel or polymorphic threats that evolve over time. Statistical approaches rely on threshold-based rules over manually engineered features, which are fragile under dynamic conditions and insufficient for modeling complex multivariate dependencies [5]. These limitations frequently result in high false positive rates and degraded detection performance in real-world deployments. With the increasing availability of traffic data, deep learning has emerged as the dominant approach due to its capacity to automatically extract features from raw inputs [6]. Many existing models treat network flows as time series and apply recurrent architectures such as Recurrent Neural Networks (RNNs), Long Short-Term Memory networks (LSTMs), or Gated Recurrent Units (GRUs) to capture temporal correlations. More recently, Transformer-based models have been adopted for flow-level anomaly detection, leveraging self-attention mechanisms to capture long-range dependencies and contextual patterns across traffic sequences [7]. These methods have demonstrated strong capabilities in learning temporal patterns relevant to anomaly detection.

However, most existing models represent each network flow as a flat feature vector or a uniform sequence, which overlooks the intrinsic heterogeneity of flow-level attributes. In practice, network flows comprise distinct feature domains, including static fields (e.g., IP addresses, port numbers), dynamic sequences (e.g., packet lengths, inter-arrival times), and aggregated statistics over the flow duration. Flattening these heterogeneous features into a unified representation tends to obscure domain-specific structures, limiting the model’s ability to distinguish complex behaviors. Some prior methods address this issue by processing individual domains in isolation, but they neglect interactions across domains. These cross-domain dependencies often reflect subtle, multi-dimensional anomalies that cannot be captured by modeling each domain independently. We argue that capturing both domain-specific patterns and their inter-relations is crucial for identifying fine-grained or stealthy anomalies, which may manifest only through correlated deviations spanning multiple domains.

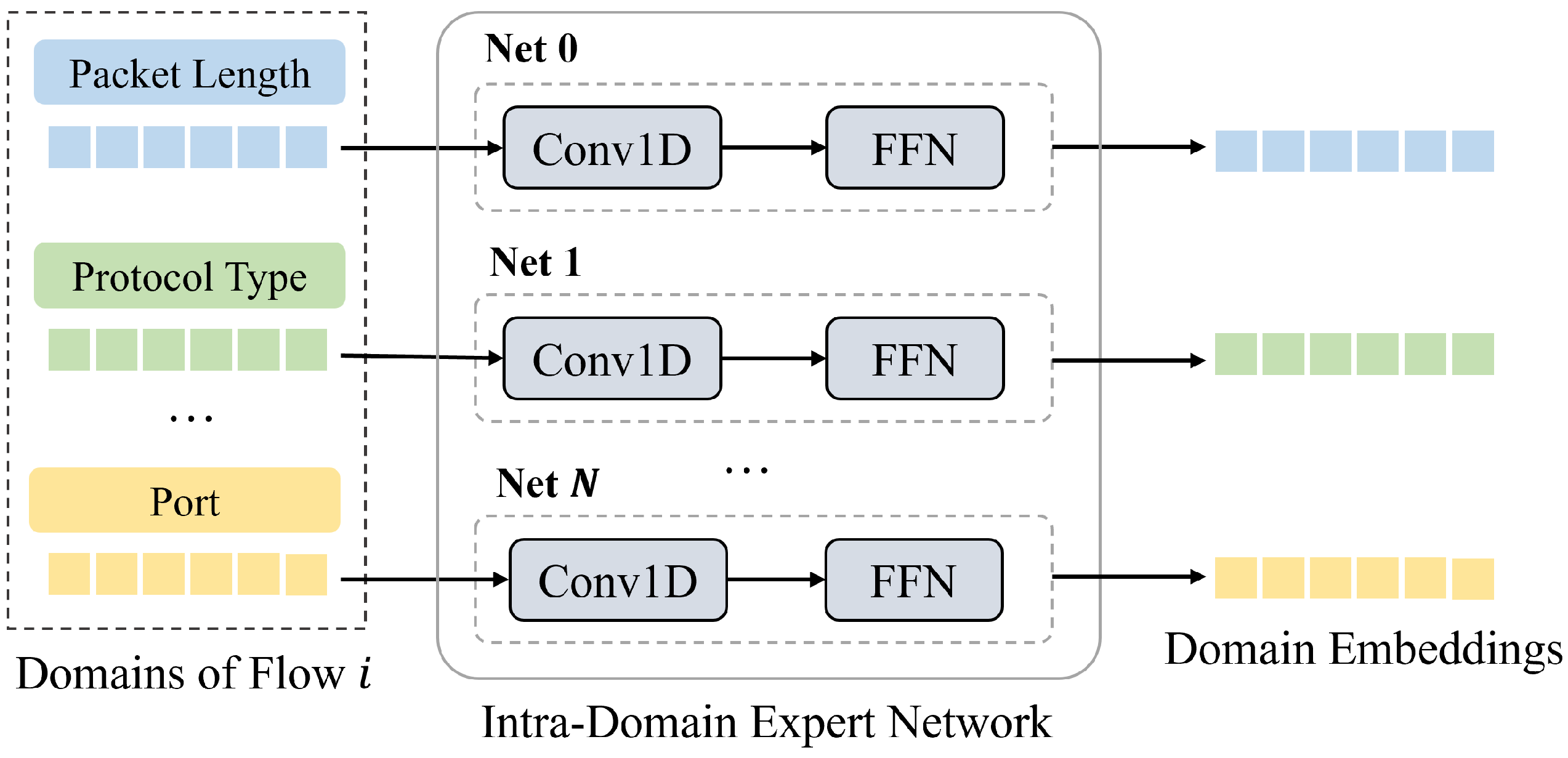

To address these challenges, we propose a domain-aware anomaly detection framework that explicitly models heterogeneous feature domains and integrates them via a cross-domain interaction module before performing temporal modeling. Specifically, flow-level features are partitioned into semantically distinct domains. For each domain, we design an Intra-Domain Expert Network (IDEN) that extracts domain-specific patterns using Convolutional Neural Networks (CNNs) and Feed-Forward Networks (FFNs). The resulting representations are then processed by an Inter-Domain Expert Network (EDEN), which applies attention mechanisms to capture cross-domain dependencies. This yields a unified representation that encodes both intra-domain structure and inter-domain correlations. To further capture temporal dependencies across flows, we apply a Transformer-based encoder to the sequence of flow representations. Empirical results on multiple public datasets demonstrate consistent improvements in detection accuracy, validating the effectiveness of our design. Our contributions are summarized as follows:

We design an Intra-Domain Expert Network (IDEN) to capture domain-specific feature patterns within the same network flow. IDEN leverages convolutional and feed-forward modules to extract structural and statistical patterns from semantically distinct feature domains.

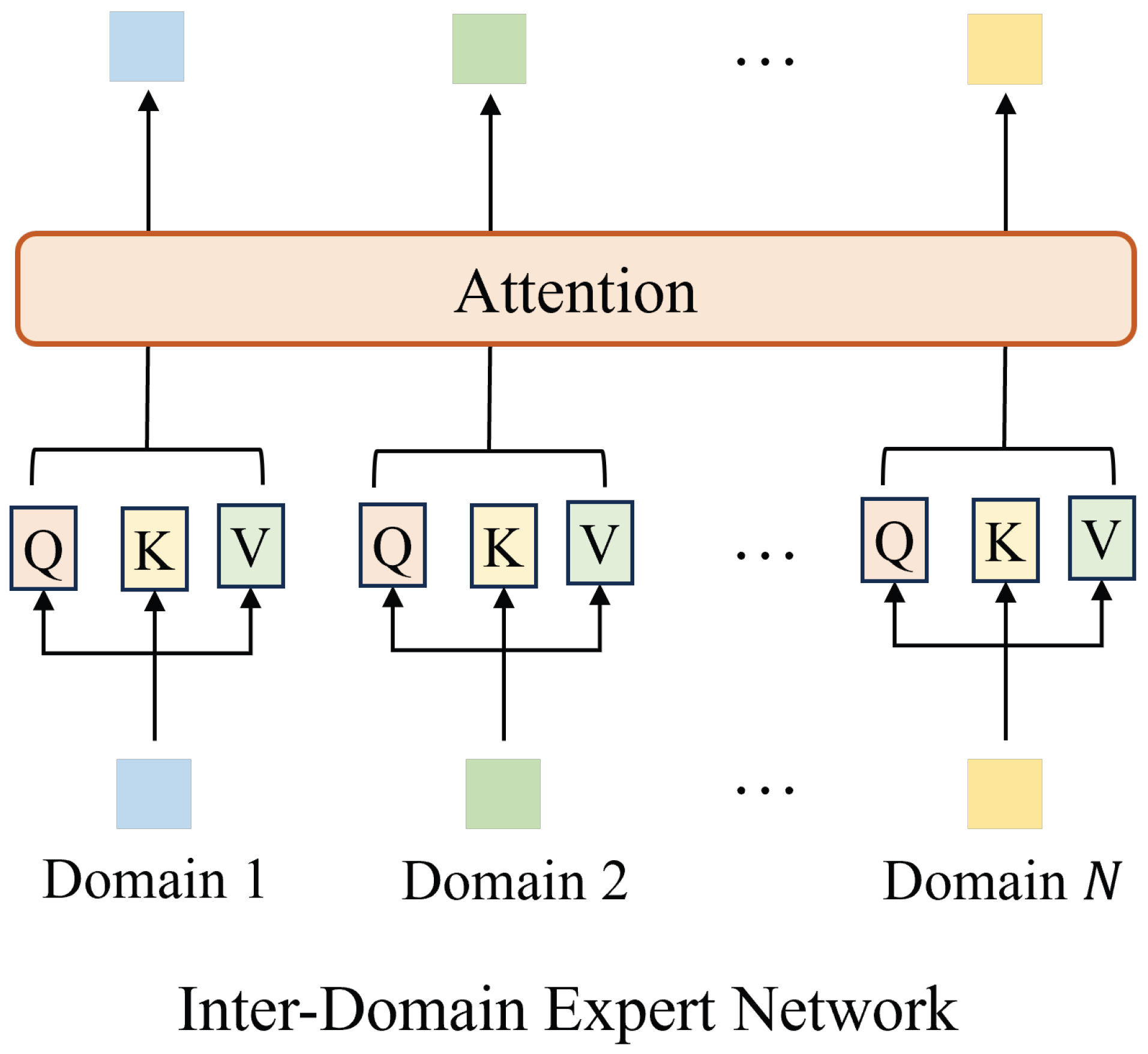

We propose an Inter-Domain Expert Network (EDEN) to capture interactions across feature domains within the same network flow. Built on attention mechanisms, EDEN enables the model to reason over dependencies between heterogeneous features in the flow.

We evaluate our method on multiple public datasets. The results show consistent improvements over strong baselines in terms of detection accuracy.

2. Related Work

Network anomaly detection, a critical component of cybersecurity, focuses on identifying aberrant traffic behaviors or patterns through systematic monitoring and management. A common approach involves binary classification of network traffic into normal or malicious categories [8]. Although many statistical and machine learning methods have been applied to this task, they differ in their analytical approaches and in the features they extract.

2.1. Traditional Network Anomaly Detection

Early methods relied on mapping traffic types by associating specific TCP/UDP port numbers with known applications [9]. Although straightforward, the efficacy of such techniques diminished significantly with the proliferation of port obfuscation tactics and dynamic port allocation. To circumvent the over-reliance on port numbers and enhance scalability, Deep Packet Inspection (DPI) emerged [10]. DPI scrutinizes packet headers and payloads to ascertain the true intent and behavioral patterns of data packets. However, with escalating traffic volumes, DPI’s byte-by-byte analysis became a bottleneck, imposing considerable processing overhead on network devices and ultimately proving ineffective for analyzing traffic encrypted with private or proprietary protocols.

2.2. Deep Learning-Based Network Anomaly Detection

The early application of deep learning in traffic classification has demonstrated remarkable performance in feature learning and anomaly detection [11]. Subsequent research often treated network traffic as sequential data. For instance, FS-Net [12] utilized bidirectional GRUs on packet length sequences, incorporating a reconstruction mechanism within an AutoEncoder to ensure the validity of learned features. MTT [7] adopted a multi-task Transformer trained on truncated packet byte sequences for supervised traffic feature analysis. Recent advancements have explored more efficient and generalizable architectures. NetMamba [13] replaced Transformers with a linear-time state-space model based on the unidirectional Mamba architecture, achieving high accuracy and inference speed on raw traffic classification. Others have conceptualized network traffic as a form of language, that is, a sequence of specialized “words”, and employed sequence models for its analysis [14]. A prevalent limitation across many of these sequence-focused methods is their insufficient consideration of the interactional relationships between network entities.

2.3. Graph and Large Language Model-Based Network Anomaly Detection

To capture such relational aspects, graph-based detection methods have gained attention. These typically model network packets (or flows) as nodes and their transmissions or relationships as edges, thereby constructing traffic interaction graphs. Graph Neural Networks (GNNs) [15] and other graph representation learning techniques are then applied to unearth latent topological information and interaction patterns [15,16,17]. DE-GNN [18], for example, employed dual embedding layers for packet headers and payloads, utilized a PacketCNN for packet-level representations, and subsequently built traffic interaction graphs. Building on this paradigm, Yang et al. [19] modeled flows as nodes in a hypergraph, with hyperedges constructed using KNN [20] to capture potential similarities among traffic instances. Furthermore, the recent ascendancy of large language models (LLMs) [21] has spurred the development of pre-trained foundational models for network traffic, such as NetGPT [22]. Lens [23], on the other hand, leveraged the T5 encoder–decoder architecture to pre-train a foundation model on large-scale packet-level data, combining traffic understanding and generation via multi-objective loss design.

However, many existing approaches overlook the critical fine-grained interplay of heterogeneous features within individual network flows. Our research is thus motivated to develop a framework that explicitly models both distinct intra-domain feature characteristics and their complex inter-domain interactions to better detect subtle anomalies.

3. Method

Our proposed method for network anomaly detection, as illustrated in Figure 1, centers on fine-grained representation learning of network flow data to identify anomalous traffic. The framework is divided into three stages. (1) Data Preprocessing: Traffic flows are reconstructed from PCAP (Packet Capture) files, and their constituent features are grouped into predefined feature domains (e.g., packet sequences, protocol type, header fields) as introduced in Section 3.1. (2) Temporal Multi-feature Interaction Learning: Each domain of a given flow is processed by an Intra-Domain Expert Network (IDEN), composed of a CNN and an FFN, to learn domain-specific embeddings. These embeddings are integrated via an Inter-Domain Expert Network (EDEN) that uses attention to capture cross-domain dependencies, as described in Section 3.2. The resulting unified flow representation is then input into a Cross-Flow Temporal Transformer, which models temporal dependencies across flows in sequence. (3) Classification: A final flow embedding is extracted and passed through a binary classifier to determine whether the sequence is anomalous. The full model is optimized end-to-end using the binary cross-entropy loss, as described in Section 3.3. This architectural design enables deep modeling of intra-flow structures and cross-flow temporal behaviors, thereby enhancing detection performance.

3.1. Data Preprocessing and Feature Domain Definition

The input to our system is raw network traffic captured in PCAP files. We first reconstruct individual network flows from this data. For each flow $F$, a set of primitive features $X = \{x_1, x_2, \dots, x_M\}$ is extracted, where $M$ denotes the number of feature types for a single flow and $x_m$ represents the $m$-th type of features. These include packet-level sequences (e.g., packet lengths, inter-arrival times), flow-level aggregate statistics (e.g., duration, total packets, total bytes), and header information (e.g., IP addresses, port numbers, protocol type). By ordering flows chronologically within a given observation window, we construct a flow sequence $S = (F_1, F_2, \dots, F_T)$, where $T$ is the number of flows in the sequence.
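The flow reconstruction step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: packets are assumed to be already parsed from the PCAP file into records, flows are keyed by a unidirectional 5-tuple (the text does not specify the flow key), and the names `Packet` and `reconstruct_flows` are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    # Hypothetical parsed-packet record; a real pipeline would fill this
    # from a PCAP parser.
    timestamp: float
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str
    length: int


def reconstruct_flows(packets):
    """Group packets by 5-tuple (an assumption) and order flows by start time."""
    groups = defaultdict(list)
    for pkt in sorted(packets, key=lambda p: p.timestamp):
        key = (pkt.src_ip, pkt.dst_ip, pkt.src_port, pkt.dst_port, pkt.protocol)
        groups[key].append(pkt)

    flows = []
    for key, pkts in groups.items():
        times = [p.timestamp for p in pkts]
        flows.append({
            "key": key,
            "start": times[0],
            # Packet-level sequences
            "packet_lengths": [p.length for p in pkts],
            "inter_arrival_times": [b - a for a, b in zip(times, times[1:])],
            # Flow-level aggregate statistics
            "duration": times[-1] - times[0],
            "total_packets": len(pkts),
            "total_bytes": sum(p.length for p in pkts),
        })
    # Chronological ordering yields the flow sequence of length T.
    return sorted(flows, key=lambda f: f["start"])
```

A bidirectional flow key, or a timeout that splits long-lived connections, would be equally plausible design choices; the sketch only shows how the packet-level sequences and aggregate statistics named in the text fall out of the grouping.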

A key aspect of our approach is the conceptualization of these primitive features into $K$ distinct feature domains, $\mathcal{D} = \{D_1, D_2, \dots, D_K\}$. Each domain groups features of a similar nature. Specifically, we define domains such as a Static Header Domain (categorical header fields), Packet Sequence Domains (for lengths and timings), and a Flow Aggregate Domain (numerical flow statistics). Let $X_k$ denote the subset of features from flow $F$ belonging to domain $D_k$.
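As a rough illustration of the manual domain definition, the sketch below assigns each feature to exactly one domain and splits a flow's features accordingly. The domain names follow the text; the individual feature names and the function `partition_features` are hypothetical.

```python
# Hypothetical feature-to-domain assignment; the domain names follow the text,
# the individual feature names are illustrative only.
FEATURE_DOMAINS = {
    "static_header": ["src_ip", "dst_ip", "src_port", "dst_port", "protocol"],
    "packet_length_sequence": ["packet_lengths"],
    "packet_timing_sequence": ["inter_arrival_times"],
    "flow_aggregate": ["duration", "total_packets", "total_bytes"],
}


def partition_features(flow, domains=FEATURE_DOMAINS):
    """Split one flow's feature dict into per-domain feature dicts."""
    assigned = [name for names in domains.values() for name in names]
    if len(assigned) != len(set(assigned)):
        raise ValueError("each feature must belong to exactly one domain")
    return {
        domain: {name: flow[name] for name in names if name in flow}
        for domain, names in domains.items()
    }
```

Keeping the assignment in a single mapping makes the manual partition explicit and easy to change per dataset.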

Features within each $X_k$ are preprocessed into a suitable numerical format. Categorical features are typically converted to dense vectors using one-hot encoding followed by a learnable embedding layer. Numerical features are normalized (z-score normalization). Sequential features are processed into fixed-length sequences of vectors or scalars, often involving padding or truncation. This results in an initial representation $R_k$ for each feature domain $k$ of flow $F$.
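The three preprocessing operations can be sketched in a few lines. The helper names are hypothetical, the learnable embedding layer that follows one-hot encoding is omitted, and in practice the normalization statistics would presumably be computed on the training split only.

```python
import math


def one_hot(value, vocabulary):
    """One-hot encode a categorical value over a fixed vocabulary."""
    return [1.0 if value == v else 0.0 for v in vocabulary]


def z_score(values):
    """Standardize a list of numbers to zero mean and unit variance."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    # Guard against constant columns, where the standard deviation is zero.
    return [(v - mean) / std if std > 0 else 0.0 for v in values]


def pad_or_truncate(sequence, length, pad_value=0.0):
    """Force a sequence to a fixed length by truncating or right-padding."""
    return list(sequence[:length]) + [pad_value] * max(0, length - len(sequence))
```

For example, `pad_or_truncate([60, 1500, 40], 5)` returns `[60, 1500, 40, 0.0, 0.0]`, while a target length of 2 keeps only the first two packet lengths.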

3.2. Temporal Multi-Feature Interaction Learning

This stage is central to our framework and aims to generate a rich, discriminative representation $h_F \in \mathbb{R}^{d_h}$ for each flow $F$, where $d_h$ denotes the dimension of the unified flow representation. This is achieved by first modeling patterns within its constituent feature domains and then capturing the complex interactions between these domains.

3.2.1. Intra-Domain Pattern Learning

For each feature domain $D_k$ of a flow $F$, a dedicated Intra-Domain Expert Network, denoted $\mathrm{IDEN}_k$, is designed to process its initial representation $R_k$ (as derived in Section 3.1) and extract salient domain-specific patterns. The architecture of $\mathrm{IDEN}_k$ implements a sequential processing pipeline. Initially, the input $R_k$ is fed into a Convolutional Neural Network (CNN) module, $\mathrm{CNN}_k$, which applies 1D convolutions to extract local features. This choice is based on the observation that intra-domain features are typically short and locally structured. CNNs efficiently capture such patterns with lower latency and better parallelism, making them preferable for high-throughput detection. The overall structure of the Intra-Domain Expert Network is illustrated in Figure 2.

For domains characterized by sequential data, such as packet length sequences, 1D CNNs are employed to effectively capture local temporal features and structural patterns. In cases where a domain contains a collection of embedded static or numerical features (conceptualized as a 1D sequence of feature vectors), a 1D CNN is similarly used to learn local inter-relationships among these features. This CNN module consists of convolutional layers utilizing ReLU activation functions, followed by a max pooling layer to reduce dimensionality and consolidate features. Let the output of this convolutional feature extraction step be $C_k$. Subsequently, these extracted features are passed through a Feed-Forward Network (FFN) module, $\mathrm{FFN}_k$, comprising a set of fully connected layers with non-linear activations. This FFN serves to learn higher-level, abstract representations from the localized patterns identified by the CNN, enabling complex non-linear transformations and feature integration. The final output of each Intra-Domain Expert Network is thus a refined domain-specific embedding $e_k$, where $e_k \in \mathbb{R}^{d_e}$ and $d_e$ denotes the embedding dimension of each feature domain. Collectively, for each flow $F$, this stage produces a set of $K$ specialized domain embeddings $\{e_1, e_2, \dots, e_K\}$. A residual connection is applied between the input and output of each IDEN to preserve original domain information and stabilize training.
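The CNN-then-FFN pipeline of one expert can be sketched in plain Python for a scalar sequence. This is a toy forward pass under several assumptions: the pooling is taken to be global max pooling per filter, the FFN has a single layer, the residual connection is omitted for brevity, and all function names and weights are hypothetical.

```python
def conv1d(sequence, kernel, bias=0.0):
    """Valid 1D convolution (cross-correlation) with stride 1 over a scalar sequence."""
    k = len(kernel)
    return [
        sum(w * x for w, x in zip(kernel, sequence[i:i + k])) + bias
        for i in range(len(sequence) - k + 1)
    ]


def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]


def dense(vector, weights, biases):
    """Fully connected layer; `weights` holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, biases)]


def iden_forward(sequence, kernels, ffn_weights, ffn_biases):
    """Conv -> ReLU -> global max pool per filter, then a one-layer FFN with ReLU.

    Assumes the sequence is at least as long as every kernel.
    """
    pooled = [max(relu(conv1d(sequence, kernel))) for kernel in kernels]
    return relu(dense(pooled, ffn_weights, ffn_biases))
```

With two filters and a two-unit FFN, a five-element packet-length sequence is reduced to a two-dimensional domain embedding, which is the shape of output the text describes for each expert.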

3.2.2. Inter-Domain Interaction Modeling

To capture the synergistic effects among the diverse feature domains within the same flow $F$, an Inter-Domain Expert Network, as shown in Figure 3, implemented using an attention mechanism, processes the set of domain embeddings $\{e_1, e_2, \dots, e_K\}$. This allows the model to weigh the importance of different domain interactions for forming a holistic flow representation.

Let $E \in \mathbb{R}^{K \times d_e}$ be the matrix formed by stacking the domain embeddings. We employ a standard scaled dot-product attention mechanism. Queries ($\mathbf{Q}$), keys ($\mathbf{K}$), and values ($\mathbf{V}$) are derived from $E$ via learnable linear projections:

$$\mathbf{Q} = E W_Q, \quad \mathbf{K} = E W_K, \quad \mathbf{V} = E W_V,$$

where $W_Q$, $W_K$, and $W_V$ are learnable weight matrices, and $d_k$ is the key/query dimension. The attention output is

$$\mathbf{A} = \mathrm{softmax}\left(\frac{\mathbf{Q}\mathbf{K}^{\top}}{\sqrt{d_k}}\right)\mathbf{V}.$$

The resulting attended features, representing contextually enriched domain embeddings, are then aggregated via mean pooling over the $K$ attended domain vectors to produce the unified flow representation $h_F$:

$$h_F = \frac{1}{K} \sum_{k=1}^{K} \mathbf{a}_k,$$

where $\mathbf{a}_k$ denotes the $k$-th row of $\mathbf{A}$.

The attention output in EDEN is combined with the original domain embeddings via a residual connection to retain complementary information.
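The attention and pooling steps can be sketched with plain lists as a single-head forward pass. Two points are assumptions of this sketch rather than statements from the text: the residual sum is applied before mean pooling, and the value projection keeps the embedding dimension so that the sum is well defined. The function names are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def eden_forward(E, W_q, W_k, W_v):
    """Scaled dot-product attention over K domain embeddings, then mean pooling.

    Returns the pooled flow vector and the K x K attention weights.
    """
    Q, K, V = matmul(E, W_q), matmul(E, W_k), matmul(E, W_v)
    d_k = len(W_k[0])
    scores = [
        [sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K]
        for q_row in Q
    ]
    weights = [softmax(row) for row in scores]
    attended = matmul(weights, V)
    # Residual connection (assumed to precede pooling; needs matching dimensions).
    combined = [[a + e for a, e in zip(a_row, e_row)] for a_row, e_row in zip(attended, E)]
    # Mean pooling over the K domain vectors gives one flow-level vector.
    flow_vector = [sum(col) / len(combined) for col in zip(*combined)]
    return flow_vector, weights
```

Each row of the returned weight matrix sums to one and indicates how strongly one domain attends to the others, which is what makes the cross-domain dependencies inspectable.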

3.2.3. Cross-Flow Temporal Pattern Learning

Anomalous network behavior often manifests as specific sequences of flows over time. To capture these cross-flow dynamics, we employ a Transformer encoder due to its ability to model long-range dependencies and support parallel computation, which is essential for processing sequences of varying lengths in high-throughput traffic environments. A sequence of $T$ flow representations, $H = (h_1, h_2, \dots, h_T)$, where each $h_t \in \mathbb{R}^{d_h}$, is fed into the Transformer. Positional encodings are added to $H$ to incorporate sequence order information, yielding $Z^{(0)}$.
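The text does not state which positional encoding is used. As one common option, the sketch below adds fixed sinusoidal encodings in the style of the original Transformer; this choice and the function names are assumptions, and a learned position embedding would be an equally valid reading.

```python
import math


def sinusoidal_positional_encoding(num_positions, dim):
    """Fixed sine/cosine positional encodings: sine on even, cosine on odd indices."""
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(dim):
            angle = pos / (10000 ** (2 * (i // 2) / dim))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positional_encoding(flow_vectors):
    """Add position information element-wise to a sequence of flow vectors."""
    pe = sinusoidal_positional_encoding(len(flow_vectors), len(flow_vectors[0]))
    return [
        [h + p for h, p in zip(h_row, p_row)]
        for h_row, p_row in zip(flow_vectors, pe)
    ]
```

Because the encoding depends only on the position index and the dimension, it extends to flow sequences of any length without extra parameters.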

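The Transformer consists of $L$ identical layers. Each layer $l$ maps its input $Z^{(l-1)}$ to an output $Z^{(l)}$ through two sub-layers: a multi-head self-attention (MHSA) mechanism and a position-wise Feed-Forward Network (FFN). Layer normalization (LN) and residual connections are applied:

$$\tilde{Z}^{(l)} = \mathrm{LN}\left(Z^{(l-1)} + \mathrm{MHSA}\left(Z^{(l-1)}\right)\right),$$

$$Z^{(l)} = \mathrm{LN}\left(\tilde{Z}^{(l)} + \mathrm{FFN}\left(\tilde{Z}^{(l)}\right)\right).$$

The output of the final layer, $Z^{(L)}$, provides contextualized representations for each flow in the input sequence, where $Z^{(L)} \in \mathbb{R}^{T \times d_h}$.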

3.3. Classification and Optimization

For binary anomaly classification, we prepend a special ‘[CLS]’ token to the Transformer input sequence $S$ and use the output at this position as the aggregate representation $z_{\mathrm{cls}}$. This aggregate vector $z_{\mathrm{cls}}$ is then passed through a final linear layer with a sigmoid activation function $\sigma$ to predict the probability of the input sequence $S$ being anomalous:

$$\hat{y} = \sigma\left(w^{\top} z_{\mathrm{cls}} + b\right),$$

where $w$ and $b$ are learnable parameters. An anomaly is flagged if $\hat{y}$ exceeds a predefined threshold $\tau$.

The entire framework is trained end-to-end. Given a training dataset $\{(S_i, y_i)\}_{i=1}^{N}$, where $y_i$ is the ground-truth label (0 for normal, 1 for abnormal) for the $i$-th sequence $S_i$, we minimize the binary cross-entropy (BCE) [24] loss function:

$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log\left(1 - \hat{y}_i\right) \right],$$

where $\hat{y}_i$ is the model’s predicted probability for the $i$-th sequence. The model parameters are optimized using the AdamW [25] algorithm. Dropout is applied within the FFNs and Transformer layers to mitigate overfitting.
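The classification head and the loss can be written out in a few lines. The sketch below is illustrative only: the function names are hypothetical, the threshold default of 0.5 is not taken from the paper, and the clipping constant `eps` is a numerical safeguard added here.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def predict_proba(z_cls, w, b):
    """Linear layer followed by a sigmoid: probability that a sequence is anomalous."""
    return sigmoid(sum(wi * zi for wi, zi in zip(w, z_cls)) + b)


def bce_loss(y_true, y_prob, eps=1e-12):
    """Mean binary cross-entropy; eps clips probabilities away from 0 and 1."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)


def flag_anomaly(prob, threshold=0.5):
    # The default threshold is an illustrative value, not one reported in the paper.
    return prob > threshold
```

For two sequences with labels 1 and 0 that both receive probability 0.5, the loss equals log 2; it decreases as the predictions move toward the correct labels.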

4. Experiment Results and Discussion

This section first introduces the experimental environment, the datasets, and the evaluation criteria, and then presents the overall performance of the method and the ablation experiments.

4.1. Experiment Setup

The experiments were run in a Python environment based on the TensorFlow framework; the experimental configuration is shown in Table 1. The average per-epoch training time for the NF-CSE-CIC-IDS2018 dataset was approximately 2.61 min. For the ML-EdgeIIoT dataset, it averaged around 1.43 min. The KDD-CUP99 dataset had an average per-epoch training time of about 1.06 min, while the UNSW-NB15 dataset averaged approximately 1.29 min. The total training time varied across datasets depending on their convergence epochs, ranging from approximately 6.00 min to 32.00 min. The total inference time for the NF-CSE-CIC-IDS2018 dataset was approximately 7.67 min. For the ML-EdgeIIoT dataset, the total inference time was around 4.75 min. The KDD-CUP99 dataset took about 2.60 min for inference, and the UNSW-NB15 dataset required approximately 3.00 min.

4.2. Datasets

In this paper, the following four datasets were used for experiments, as shown in Table 2.

NF-CSE-CIC-IDS2018 dataset [26]: This dataset is the NetFlow-based version of CSE-CIC-IDS2018, which was released in 2018 by the Communications Security Establishment (CSE) and the Canadian Institute for Cybersecurity (CIC), and is widely used in cybersecurity research. It covers diverse network attack types with comprehensive and detailed features, integrating massive real-world network traffic and simulating multiple network scenarios. This provides critical data samples for network intrusion detection research.

ML-EdgeIIoT dataset [27]: This dataset focuses on machine learning research in edge Internet of Things (Edge IoT) scenarios and aims to provide data support for network traffic analysis and security detection in edge devices. It includes real traffic from various devices, covering normal communications and common attack types, with detailed annotations of traffic features. It aligns with the characteristics of edge computing devices (resource constraints and dynamic topology changes).

UNSW-NB15 dataset [28]: This dataset, with its rich attack scenarios and diverse features, has become a critical benchmark in the cybersecurity field. Constructed through a combination of simulation and real-world data collection, it covers 9 common attack types and includes 50 traffic feature types. Beyond traditional attack data such as port scanning and denial-of-service (DoS) attacks, it also incorporates new threat samples like Heartbleed vulnerability exploitation, providing researchers with realistic and diverse training materials for building complex attack recognition models.

KDD-CUP99 dataset [29]: This dataset is derived from a simulated military network environment and encompasses 41 network connection features with 23 million network connection records. Attack types are categorized into 4 major classes and 39 sub-types, making it a gold standard for early performance evaluation of intrusion detection algorithms.

4.3. Hyper-Parameter Setting

For model training, we employed the regularizer and dropout method to mitigate overfitting and enhance the model’s generalization ability on unseen data. Through empirical tuning, the regularization parameter was set to , and the dropout rate was fixed at 0.1. The Adam optimizer was adopted with a learning rate of 0.025. The CNN kernel size is 3 × 3 with a stride of 1, designed to capture local patterns in sequential features. The Inter-Domain Expert Network uses eight attention heads to model cross-domain dependencies from multiple perspectives. The Transformer has a depth of four layers. For training, the batch size is 128, and the random seed is set to 42 for result stability. Additionally, the number of training iterations for each experiment was determined by the model’s convergence. Specifically, the NF-CSE-CIC-IDS2018 and ML-EdgeIIoT datasets generally converged around the 12th epoch, the UNSW-NB15 dataset converged approximately at the 7th epoch, and the KDD-CUP99 dataset converged around the 5th epoch. To balance training efficiency and performance stability, we implemented an early stopping strategy with a patience of 5 epochs and a maximum epoch limit of 20. This epoch limit was chosen because most models converged within 1% of their final performance by the 20th epoch during preliminary experiments.
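The early stopping rule described above (patience of 5 epochs, at most 20 epochs) can be sketched independently of any framework. The monitored quantity is assumed here to be a validation loss, which the text does not specify, and the function name is hypothetical.

```python
def train_with_early_stopping(validation_losses, patience=5, max_epochs=20):
    """Return (stop_epoch, best_epoch) for a stream of per-epoch validation losses.

    `validation_losses` stands in for a real training loop; epochs are 1-indexed.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    epoch = 0
    for epoch, loss in enumerate(validation_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
        # Stop when patience is exhausted or the epoch cap is reached.
        if epochs_without_improvement >= patience or epoch >= max_epochs:
            break
    return epoch, best_epoch
```

If the loss last improves at epoch 3, training stops after epoch 8 and the weights from epoch 3 would be the ones to restore.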

4.4. Evaluation Metric

The model was evaluated using four standard metrics: accuracy, precision, recall, and F1-score. All four are derived from the four entries of the confusion matrix, as detailed below:

Accuracy: The most fundamental performance metric, reflecting the overall correctness of the model’s predictions. The calculation formula is as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$

where TP denotes the number of attack flows correctly classified as attacks. FP denotes the number of benign flows incorrectly classified as attacks. TN denotes the number of benign flows correctly classified as normal. FN denotes the number of attack flows incorrectly classified as normal.

Precision: Measures the proportion of actual positive samples among those predicted as positive, reflecting the model’s accuracy in predicting positive cases. The formula is

$$\mathrm{Precision} = \frac{TP}{TP + FP}.$$

Recall: Represents the proportion of actual positive instances correctly predicted by the model. The calculation formula is

$$\mathrm{Recall} = \frac{TP}{TP + FN}.$$

F1-Score: Represents the harmonic mean of precision and recall, used to balance the trade-off between the two metrics. The formula is

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$
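The four metrics follow directly from the confusion-matrix counts. The sketch below is a small helper with a hypothetical name; returning 0.0 when a denominator is zero is a convention chosen here, not one stated in the text.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall, and F1-score from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

With illustrative counts of 90 true positives, 10 false positives, 880 true negatives, and 20 false negatives, accuracy is 0.97, precision is 0.90, recall is about 0.818, and the F1-score is about 0.857.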

4.5. Compared Methods

To comprehensively compare model performance, this study compares the proposed method with representative classical methods and state-of-the-art benchmark approaches. The specific methods are introduced as follows:

SVM [30]: This method constructs a classification hyperplane based on statistical learning theory. By maximizing the margin, it effectively distinguishes normal and abnormal traffic, excelling in small-sample classification problems within high-dimensional feature spaces.

CNN [31]: This method extracts spatial features from traffic data through convolutional layers, enabling automatic learning of local and global features. It demonstrates strong recognition capabilities for network traffic anomalies.

LeNet5 [32]: This method is a classic Convolutional Neural Network architecture that employs an alternating structure of convolution and pooling layers to progressively extract hierarchical representations of traffic features. It has shown stable performance in network traffic pattern recognition tasks.

CNN-LSTM [33]: This method integrates the spatial feature extraction capability of CNN with the temporal modeling advantages of LSTM. It can capture local feature patterns in traffic data and also mine long-term dependency relationships within traffic sequences.

4.6. Performance Comparison

The proposed method significantly outperforms the baseline approaches across all four datasets, as shown in Table 3. On the NF-CSE-CIC-IDS2018 dataset, our method improves accuracy by 4.44%, precision by 5.77%, recall by 3.69%, and F1-score by 5.05%. On the ML-EdgeIIoT dataset, the F1-score increases by 2.54%. For the UNSW-NB15 dataset, our approach improves recall by 0.38% and the F1-score by 0.35%. Even on the KDD-CUP99 dataset, where the baseline already exhibits high performance, our framework still achieves a 0.24% improvement in accuracy, a 0.12% increase in recall, and a 0.15% improvement in the F1-score.

To verify the statistical significance of the model’s performance, paired t-tests were conducted on the accuracy of our model versus the strongest baseline for each of the four datasets, given that the difference data were validated to follow a normal distribution. After ten experimental runs were repeated for each dataset, the results showed that on the NF-CSE-CIC-IDS2018 dataset, the model achieved an average accuracy of 99.85%, representing a 4.44% improvement over the strongest baseline (CNN-LSTM), with a standard deviation of 0.32%. The t-test yielded , . Similarly, validation was performed on the other datasets, and the test results for all datasets indicated , demonstrating that the performance advantages of our model on each dataset are statistically significant.
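The paired t-test used above compares per-run accuracies of two models on the same runs. A minimal sketch of the test statistic is given below; the function name is hypothetical, and the inputs in any usage would be the per-run accuracies, which are not listed in the text. The p-value is not computed here because it requires the Student t distribution; `scipy.stats.ttest_rel` returns both the statistic and the p-value.

```python
import math


def paired_t_statistic(a, b):
    """t statistic and degrees of freedom for paired samples a and b.

    Assumes at least two pairs and non-identical differences.
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)  # sample variance
    return mean / math.sqrt(var / n), n - 1
```

With ten runs per dataset there are nine degrees of freedom, for which the two-sided critical value at the 0.05 level is approximately 2.262.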

Overall, these results strongly validate that by deeply deconstructing and modeling feature domain patterns and their interactions, our proposed framework can effectively capture the subtle and complex anomalies hidden within network traffic data. This capability leads to remarkable performance gains across diverse datasets, making it a robust and reliable solution for network traffic anomaly detection tasks.

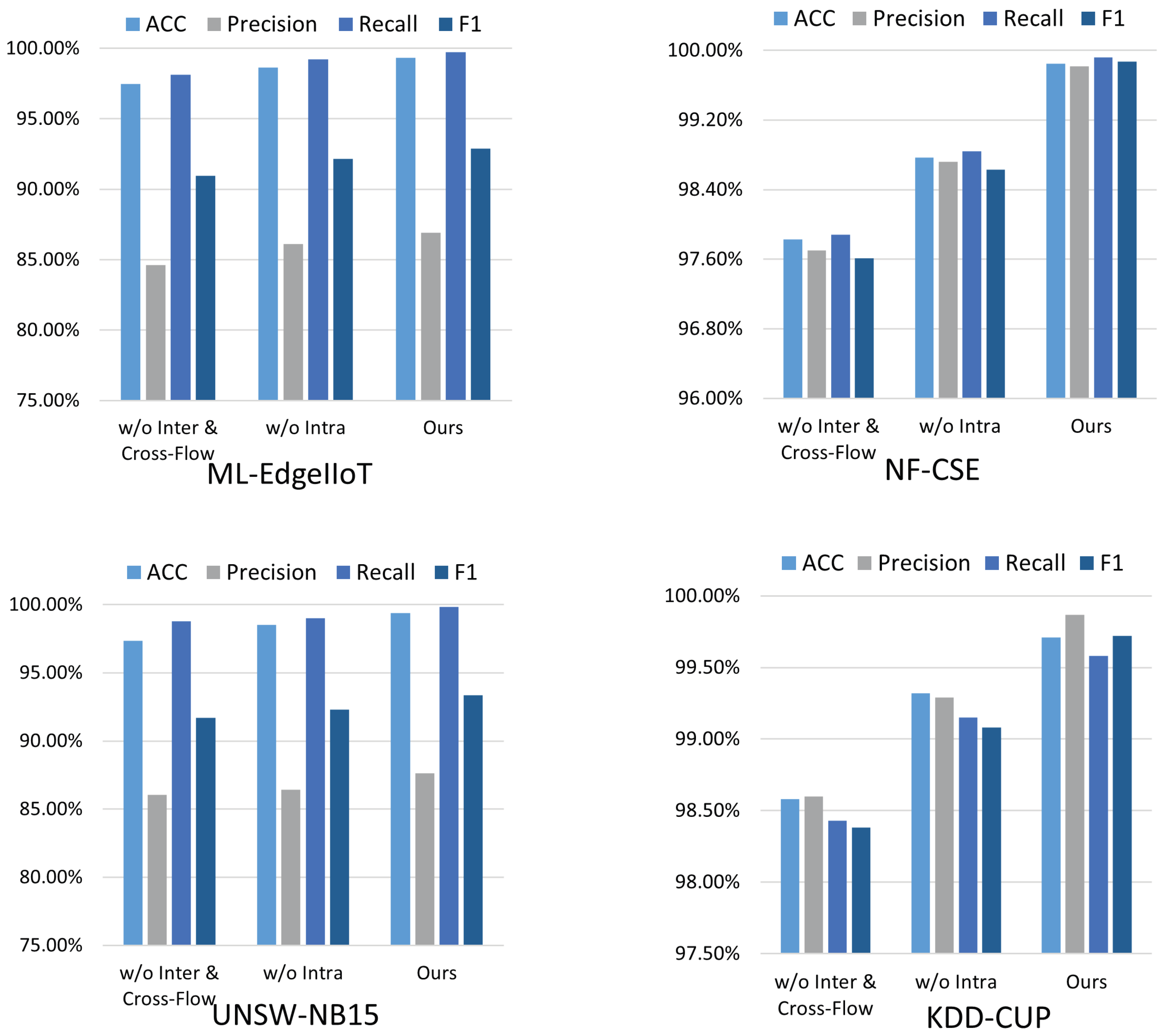

4.7. Ablation Study

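To comprehensively evaluate the contribution of each component in our proposed method, we conducted ablation experiments by systematically removing key modules and comparing their performance against the full model (ours) across four datasets. The ablation variants are defined as follows:

w/o IDEN: the full model with the Intra-Domain Expert Network removed.

w/o EDEN and Cross-Flow: the full model with the Inter-Domain Expert Network and the Cross-Flow Temporal Transformer removed.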

The experimental results reveal significant performance disparities, as shown in Figure 4. On the ML-EdgeIIoT dataset, our model outperforms w/o IDEN by boosting the F1-score from 90.97% to 92.90%. Compared to w/o EDEN and Cross-Flow, ours achieves an additional 0.73% gain in F1-score, demonstrating that integrating intra-domain and inter-domain mechanisms synergistically enhances detection accuracy. In terms of precision, ours improves by 2.29% and 0.78% over w/o IDEN and w/o EDEN and Cross-Flow, respectively, indicating better discrimination of normal and anomalous traffic.

For the NF-CSE-CIC-IDS2018 dataset, the performance gap is even more pronounced. Ours achieves a remarkable 2.26% increase in F1-score compared to w/o IDEN. Against w/o EDEN and Cross-Flow, ours still shows a 1.24% improvement, emphasizing the critical role of Intra-Domain Pattern Learning in handling complex attack patterns. Accuracy improvements reach 2.02% and 1.08% over w/o IDEN and w/o EDEN and Cross-Flow, respectively, highlighting the method’s enhanced reliability in large-scale traffic scenarios.

On the UNSW-NB15 dataset, ours surpasses w/o IDEN with a 1.66% F1-score increase and w/o EDEN and Cross-Flow by 1.05%. Notably, recall improves by 1.06% and 0.82% relative to the two ablation variants, respectively, indicating superior anomaly identification capabilities. Similarly, on the KDD-CUP99 dataset, ours outperforms w/o IDEN by 1.34% in F1-score and w/o EDEN and Cross-Flow by 0.64%, confirming consistent performance gains across diverse network environments.

These results collectively validate that both Intra-Domain Pattern Learning and Inter-Domain Interaction Modeling are indispensable. The former enables fine-grained feature analysis, while the latter captures complex cross-feature dependencies. Their integration significantly enhances the model’s ability to detect subtle anomalies, underscoring the effectiveness of our proposed framework in network traffic anomaly detection.

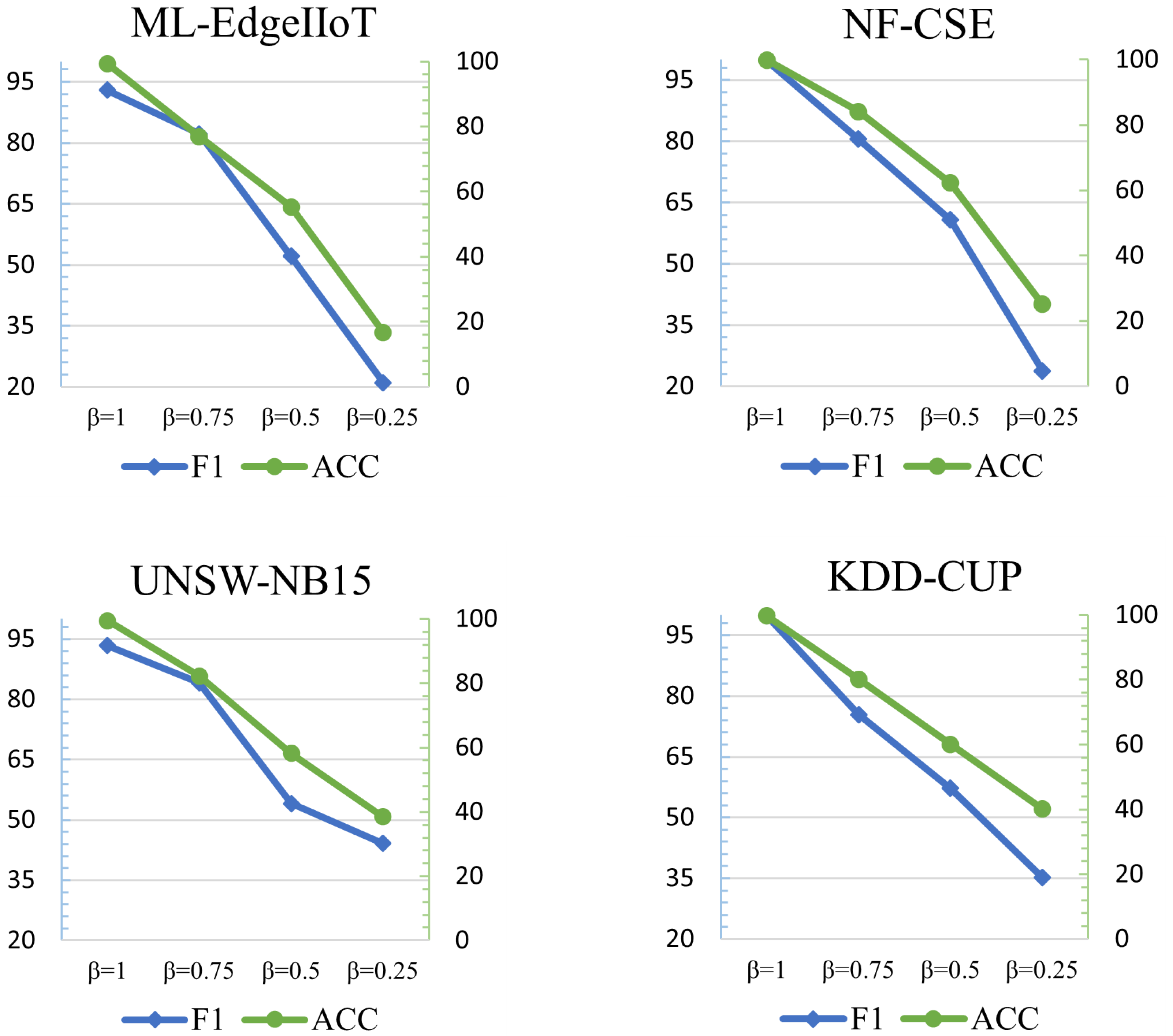

4.8. Parameter Sensitivity Analysis

In the parameter analysis, we use the scaling factor $\alpha$ to determine the number of feature types included in the experiments. When $\alpha = 1$, all features are utilized, while $\alpha = 0.5$ halves the feature count with upward rounding to ensure integer values. The specific numbers of feature types for each dataset are shown in Table 4. This setup allows us to systematically evaluate model performance under different feature dimensions.
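The upward rounding described above amounts to a ceiling operation on the scaled feature count. The helper below is a hypothetical sketch; which feature types are retained at a given scale is not specified in the text and is not modeled here.

```python
import math


def scaled_feature_count(total_features, scale):
    """Number of feature types kept for a scaling factor in (0, 1], rounded up."""
    if not 0 < scale <= 1:
        raise ValueError("scale must be in (0, 1]")
    return math.ceil(scale * total_features)
```

For a dataset with 41 feature types, a scaling factor of 0.5 keeps 21 of them, while a factor of 1 keeps all 41.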

The performance results under different feature quantities set by the scaling factor $\alpha$ are shown in Figure 5. For the NF-CSE-CIC-IDS2018 dataset, reducing $\alpha$ from 1 to 0.5 causes accuracy to drop by 37.68% and the F1-score to decrease by 39.12%. In ML-EdgeIIoT, accuracy and F1-score decline by 44.02% and 40.81%, respectively. For UNSW-NB15, the drops are 41.28% in accuracy and 39.32% in F1-score. KDD-CUP99 experiences a 39.61% reduction in accuracy and a 42.5% decrease in F1-score.

These results collectively demonstrate that halving the feature quantity leads to significant performance degradation across all datasets. The substantial declines highlight the critical role of sufficient feature information in maintaining model performance, indicating that the proposed model can make full use of all feature types to achieve optimal results.

5. Discussion

Our framework, by leveraging fine-grained intra-flow feature interaction learning and cross-flow temporal modeling, effectively identifies complex network anomalies. Its core lies in generating high-quality flow representations to capture dynamic patterns, with its efficacy confirmed by experimental results. Nevertheless, this work has aspects warranting further refinement and points towards several future research directions. The framework’s multi-component architecture (Intra-Domain Expert Network, Inter-Domain Expert Network, cross-flow Transformer) incurs computational overhead, which makes real-time deployment on high-speed links challenging. These computational costs are justified by notable F1-score gains (0.35–2.54%) across datasets like UNSW-NB15 and ML-EdgeIIoT, which reduce false negatives and false positives and thereby lower operational costs. Moreover, its ability to detect subtle cross-domain anomalies often missed by baselines makes the marginal training and inference overhead worthwhile in production. At the same time, the current manual definition of feature domains may limit model generalization, while the interpretability of complex interaction patterns and the Transformer’s efficiency with ultra-long sequences are aspects for further optimization.

While the proposed framework achieves strong performance across multiple benchmarks, several avenues remain for enhancement. In scenarios involving fully encrypted traffic, where protocol fields and payloads are unavailable, adaptation to metadata-level features or representation learning from traffic patterns may further extend its applicability. Moreover, to improve robustness under imbalanced or noisy data distributions, the framework can be integrated with adaptive sampling, cost-sensitive objectives, or semi-supervised fine-tuning. These directions offer practical extensions toward broader deployment contexts.

Future work will focus on adaptive learning mechanisms for feature domains to enhance model robustness, specifically exploring automatic, data-driven feature domain identification strategies such as clustering-based discovery, attention-guided dynamic partitioning, and meta-learning for domain generalization to replace manual segmentation. To meet practical deployment needs, exploration of model compression via techniques like structured pruning of redundant neurons, knowledge distillation from large pre-trained models, and lightweight architecture redesign will be a priority. We will integrate advanced explainable AI techniques for precise attribution of anomaly causes. Furthermore, investigating efficient sequence models to overcome current Transformer limitations with large-scale flow sequences, and extending the framework’s capabilities from binary classification to fine-grained multi-class anomaly identification, are important developmental directions.

6. Conclusions

This paper introduces an innovative framework for network anomaly detection, designed for fine-grained analysis and modeling of individual network flows. The primary contribution of our work lies in a meticulous flow-level learning process: initially, raw features of network flows are partitioned into distinct “feature domains”; subsequently, intrinsic patterns within each feature domain are learned independently, and more importantly, the interactions between the representations of these diverse feature domains within the same flow are explicitly modeled. This analysis mechanism is engineered to generate highly discriminative flow representations. These high-quality representations, in turn, enable our Transformer-based temporal module to effectively capture and identify complex and dynamically evolving anomalous patterns that span the flow sequences. Extensive experimental evaluations on multiple challenging real-world network traffic datasets validate the superior accuracy of the proposed framework.