1. Introduction

Social robots are increasingly being used to assist society in areas such as healthcare [1] and education [2], seeking to engage users in the task [3]. Beyond assistance, social robots are used as everyday companions, providing entertainment and reducing loneliness as expressive and interactive agents [4,5]. Robot companions require effective methods to engage users [6] and natural expressiveness to correctly convey their state using multimodal interaction [7]. The use of affective and natural responses has been reported to positively influence how users perceive robots in dimensions such as trust [8], anthropomorphism [9], likeability [9,10], and intelligence [9].

Interactive games such as Tamagotchi [11] and Pou [12] have previously captured human attention and produced a high level of engagement by simulating a virtual care-dependent pet that the user has to take care of. This approach has recently been studied in our Mini social robot [13] by Carrasco-Martínez et al. [14], who combined it with biologically inspired processes that simulate human-like functions to immerse the user in the interaction. The resulting internal needs, such as hunger, thirst, and boredom, evolve with time, requiring the user to interact with the robot using RFID cards representing objects (e.g., food, toys) that satisfy and reduce these needs. This contribution aims to involve the user in the interaction to help the robot maintain an optimal condition. The approach promotes human–robot interaction (HRI) and user entertainment by inducing the user to look after the robot. The robot exhibits a proactive behaviour that consists of verbally requesting objects from the user (e.g., a toy when bored). Then, the robot reacts to the user’s actions with behaviours that include verbal and non-verbal expressions, accepting or rejecting the objects based on its needs (for example, if the robot is hungry, food objects are accepted and the others are rejected).

Some users who previously used the Mini robot in other experiments without adaptation [10,15] found its behaviour generic, repetitive, and unnatural since the robot does not show an adaptive response to the situation (for example, the robot likes all objects) and always reacts in the same way (the robot repeats the same sentences). This lack of adaptive behaviour and personalisation to the interaction context has also been perceived in other experiments with different scenarios [16,17,18], leading to design strategies that mainly focus on adapting the robot’s speech. This problem highlights the need to design and endow social robot companions with more realistic and multimodal responses to enhance their naturalness and interactivity. The motivation of this work is to develop a system that generates robot preferences during the interaction combined with dynamic emotional responses and then evaluate how users perceive this behaviour using standardised scales.

The goal of this paper is to address the previous limitations by presenting a preference system for biologically inspired robots acting as companions in caring scenarios. The model generates adapted and personalised interaction experiences that enhance the robot’s naturalness and the user’s perception. The contribution of this paper is designing a bioinspired Tamagotchi-like behaviour based on internal processes and emotion with adaptive expressions to study how users perceive the robot. The study evaluates how users perceive the robot in terms of Likeability, Animacy, and Perceived intelligence using the Godspeed series [19] and Agency and Sociability using the Human–Robot Interaction Evaluation Scale (HRIES) [20]. We also included custom questions in the evaluation asking the participants which robot they prefer, their feelings about the interaction, and what emotions and preferences they perceived.

The rest of this paper is organised as follows. Section 2 presents related work conducted to generate robots’ adaptive behaviour during HRI to enhance robots’ naturalness and human–robot bonding. Section 3 describes the Mini social robot and the preferences system to make the robot work as a robot companion. Section 4 describes the user study and evaluation methodology to assess how users perceived Mini’s new capabilities. Section 5 presents the study results. Section 6 discusses the main findings and limitations of the study, Section 7 presents the main limitations, and Section 8 presents the findings and future work.

2. Related Work

Robot companions have been demonstrated in recent years to be useful devices to support older adults with engaging activities [21]. These robots provide assistance, companionship, entertainment, and affection, targeting those who may experience loneliness and require support [21]. Studies conducted with older adults [22,23,24] and general audiences [25,26] suggest that acceptance and positive opinions depend on user characteristics such as experience with pets, age, education, or retirement status. These studies emphasise the need for user-centric design [22], focusing on how naturalness and human-like interaction affect the communication with the robot [25,26]. We review the previous related research conducted to endow robot companions with adaptive behaviour to user characteristics and interaction context. The review aims to analyse how companion robots improve their responses to the different scenarios and users they encounter.

The research developed by Cagiltay et al. [16] examined how six families perceived robot companions in particular houses. They identified that family members have different preferences and think of the robot in various roles, such as assistants, suggesting that adapting its behaviour to each family member might improve HRI and robot use. Umbrico et al. [27] presented a holistic cognitive approach to endow socially assistive robots with intelligent features to reason at different levels of abstraction, understand specific health-related needs, and decide how to act to perform personalised assistive tasks. The paper does not provide a user evaluation of the Koala robot but discusses an experimental assessment and explores the study’s feasibility. Villa et al. [17] addressed behaviour personalisation by improving human–robot conversations with proactive behaviour in assistive tasks to reduce the digital divide and showing emotions with eye expressions. The authors conclude that more diverse expression improves user acceptance and generates a more natural, affective, non-passive behaviour. Jelinek et al. [28] explored how autonomous dialogues and emotional personality traits in the Haru robot influence user perception. Their proposal endows the robot with emotional expressiveness and personality to personalise HRI. The results suggest that the adaptive conversation system improves how users perceive Haru in terms of liveliness, interactivity, and neutrality.

Maroto-Gómez et al. [15] investigated how predicting and selecting activities according to the user characteristics and preferences improved HRI with the Mini companion robot. The authors found that Godspeed dimensions such as Likeability and Perceived intelligence in a robot with personalisation were significantly higher than in a robot with random activity selection. These findings suggest that personalisation improves users’ perception towards robot dimensions related to competence and intelligence. These authors extended the previous research by integrating implicit (user actions) and explicit (ratings) feedback [29] to dynamically adjust user preferences during the execution of entertainment activities. The results highlight that implicit and explicit feedback improve activity selection, while how the robot obtains user feedback during the interaction does not affect user engagement or interaction experience.

Bhat et al. [18] also explored robot adaptation in HRI. The authors developed a human trust–behaviour model based on Bayesian Inverse Reinforcement Learning to learn user preferences in real time in a human–robot teaming task. The study compares different robot strategies with and without learning and adaptation, concluding that adapting to human preferences increases trust in the robot. Similarly, Herse et al. [30] investigated how stimulus difficulty and agent features in a collaborative recommender system influence user perception, trust, and decision making in human–agent collaboration. The authors conclude that user trust is complex to obtain, and trust calibration might consider task context to produce positive effects on the users.

In a recent study, Maroto-Gómez et al. [31] used the MiRo companion robot to integrate a user-adaptive system into its software architecture to adapt its behaviour to preferred user interaction modalities with encouraging behaviours. This approach facilitates HRI by promoting interaction and reducing user limitations such as motor, vision, or audio impairment. Rincon-Arango et al. [32] addressed this line by designing adaptive exercises and interaction procedures to assist older people in maintaining cognitive health and emotional well-being. Their robot enhances user autonomy and improves quality of life by monitoring physical and emotional states and adapting to the needs of each user. Finally, Becker et al. [33] studied the influence of naturalness in robot voice generation on user trust and compliance during HRI. They found that a natural robot voice increased intelligibility and improved communication with participants.

Summary

The previous literature on robot companions highlights that robot personalisation to user characteristics improves dimensions such as trust [18], naturalness [17,28,33], and compliance [31]. However, these papers barely address robot adaptation to the dynamics and context of the interaction from the robot’s point of view using emotions and robot preferences. This paper addresses this research gap by presenting an adaptive preference system for a robot companion with emotional responses to user actions based on RL to enhance robot naturalness during HRI.

3. The Mini Social Robot

Mini [13] is a desktop social robot dedicated to research purposes, which is designed as a multi-purpose companion that provides entertainment and assistance in different tasks. It contains many activities devoted to performing cognitive entertainment exercises to train memory, perception, and other skills. It also includes assistive functions such as monitoring the user or informing about the latest news and weather forecast.

In this paper, we focus on one of its principal entertainment functions, Mini acting as a Tamagotchi pet-like care-dependent companion, which the user has to take care of using RFID cards simulating various object types. This idea, previously presented in [14], intends to engage the user in the interaction and generate human–robot bonding using psychological strategies [34] by creating an immersive interaction experience. The following sections describe the hardware and software of the Mini robot used in this experiment.

3.1. Hardware Capabilities

Mini’s body integrates many sensors to perceive the stimuli available in the environment. The robot has three capacitive touch sensors on its shoulders and belly to sense contact. It has a microphone to capture user speech and understand simple instructions using an automatic speech recogniser to guide how to drive the interaction and the execution of activities. The robot has a 3D camera on its hip to obtain vision information from the front field. Finally, the robot incorporates an RFID reader in its belly to perceive RFID cards that simulate food, drinks, and toys.

Regarding its actuation capabilities, the robot has five degrees of freedom: one to rotate the hip, one per arm to move them up and down, and two in the head to move it horizontally (right and left) and vertically (up and down). It has two screens on its head that simulate two expressive eyes that can be configured in terms of pupil size, eyelid position, blinking speed, and blinking frequency, and three LEDs in the mouth, cheeks, and heart that can be configured in colour and blinking speed. The robot incorporates a speaker to play a voice thanks to a text-to-speech synthesiser. Finally, the robot has a touch screen to display multimedia content such as images, videos, and sound, and obtain user responses to different questions presented as text menus.

The experiment uses two Mini robots with the same appearance, as shown in Figure 1. As further described in the experiment setup, one of the robots incorporates a software module to generate preferences towards RFID objects combined with emotional reactions, and the second robot only shows random expressiveness with no preferences towards the objects. We use the RFID reader from the robot’s sensors to perceive the objects the user gives to the robot, and we use the touch screen to show start and finish buttons that initiate and end the experiment. From its actuators, we use the five degrees of freedom to generate motion, the screens to display configurable eye expressions, LEDs to change expressiveness using colour and blink frequency, and the speaker to play verbal requests, rejections, and acceptances towards the objects given by the user, depending on the preferences and interaction dynamics.

3.2. Software Architecture

Mini features a modular software architecture developed under the ROS environment [35]. The architecture facilitates activities for HRI purposes, managing autonomous decision making based on perception signals and generating appropriate expressiveness.

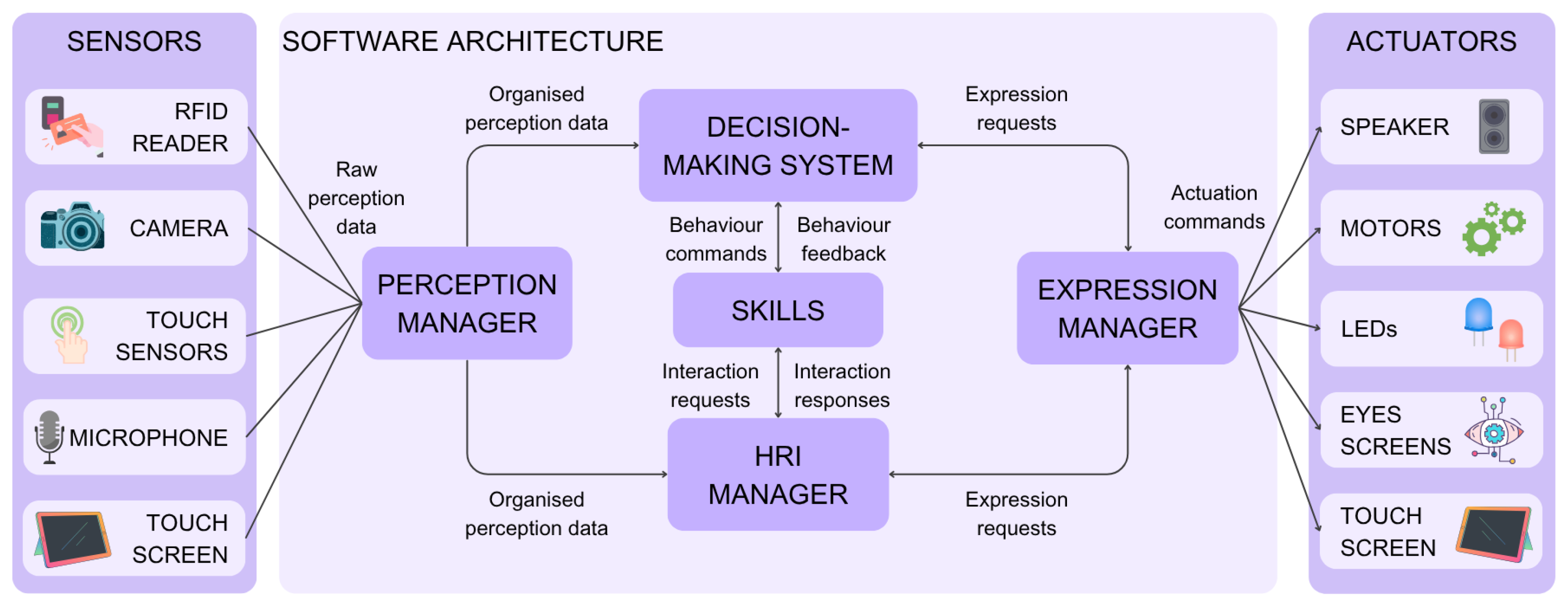

Figure 2 shows the software architecture with its main modules, the Perception Manager, the Decision-Making System, the HRI Manager, the Expression Manager, and the Skills.

The Perception Manager is a module that receives raw perception data from the robot sensors and generates a unified message that homogenises all perception data for its understanding by the rest of the modules in the architecture. The unified message contains meaningful perception data such as the sensor that is providing the information, the kind of object perceived (for the case of RFID tags), the intensity of the stimulus from 0 to 100 units (for discrete stimulus, 0 or 100), and other important information such as the stimulus state (for example, for touch sensors, the touch area).
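The unified message described above can be pictured with a small data structure. The following is only an illustrative sketch: the class name, field names, and helper function are assumptions made for this example and are not taken from Mini’s ROS architecture; only the listed contents (source sensor, kind of object for RFID tags, intensity from 0 to 100 units, and stimulus state) come from the description.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PerceptionMessage:
    """Hypothetical unified perception message (all names are assumptions)."""
    sensor: str                         # sensor providing the data, e.g. "rfid" or "touch"
    intensity: float                    # stimulus intensity, from 0 to 100 units
    object_kind: Optional[str] = None   # only meaningful for RFID tags, e.g. "food"
    state: Optional[str] = None         # extra stimulus state, e.g. the touched area

    def __post_init__(self):
        # Reject intensities outside the 0-100 range described in the text.
        if not 0.0 <= self.intensity <= 100.0:
            raise ValueError("intensity must lie in [0, 100]")


def discrete_stimulus(sensor: str, active: bool, **extra) -> PerceptionMessage:
    """Build a message for a discrete stimulus, which only takes 0 or 100."""
    return PerceptionMessage(sensor=sensor, intensity=100.0 if active else 0.0, **extra)
```

For instance, `discrete_stimulus("rfid", True, object_kind="food")` would represent a detected food card, and `discrete_stimulus("touch", False, state="belly")` an inactive belly sensor.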

The Decision-Making System acts as the robot’s brain, deciding which activity should be executed at every moment. It aggregates information from the Perception Manager and an internal module that generates simulated biological functions such as feeding, moisturising, and entertainment that endow the robot with a lively and natural behaviour. The Decision-Making System activates the Skills, which are modular robot functions such as games or communications that can be started or stopped on demand. This work employs two Skills called Tamagotchi and Communication Skills. The Tamagotchi Skill generates robot requests depending on its internal needs (e.g., being hungry) and reactions to the objects provided by the user that can be accepted or rejected. The Communication Skill manages the touch screen menus to start and stop the experiment.

The HRI Manager hosts the interaction dynamics. This module manages multimodal interaction with the user and the environment by creating interfaces that allow for obtaining and giving information, especially to the user. The HRI Manager resolves conflicts when multiple interaction petitions are received simultaneously, enabling a smooth and appropriate interaction. The HRI Manager works closely with the Expression Manager. This module controls the robot’s actuators to display interaction information (for example, saying a sentence with the speaker, displaying a specific eye expression, or showing a button on the touch screen for the user to press), producing appropriate expressiveness and enabling natural communication with the user.

The software architecture includes an Adaptive Object Preference System integrated into the Decision-Making System. It allows Mini to generate preferences towards the objects used to care for it while behaving in Tamagotchi mode. The following section describes the system’s features and how emotional responses appear in response to the users’ actions.

3.3. Adaptive Object Preference System

The Mini robot is an embodied companion with biologically inspired functions to exhibit a Tamagotchi-like operation.

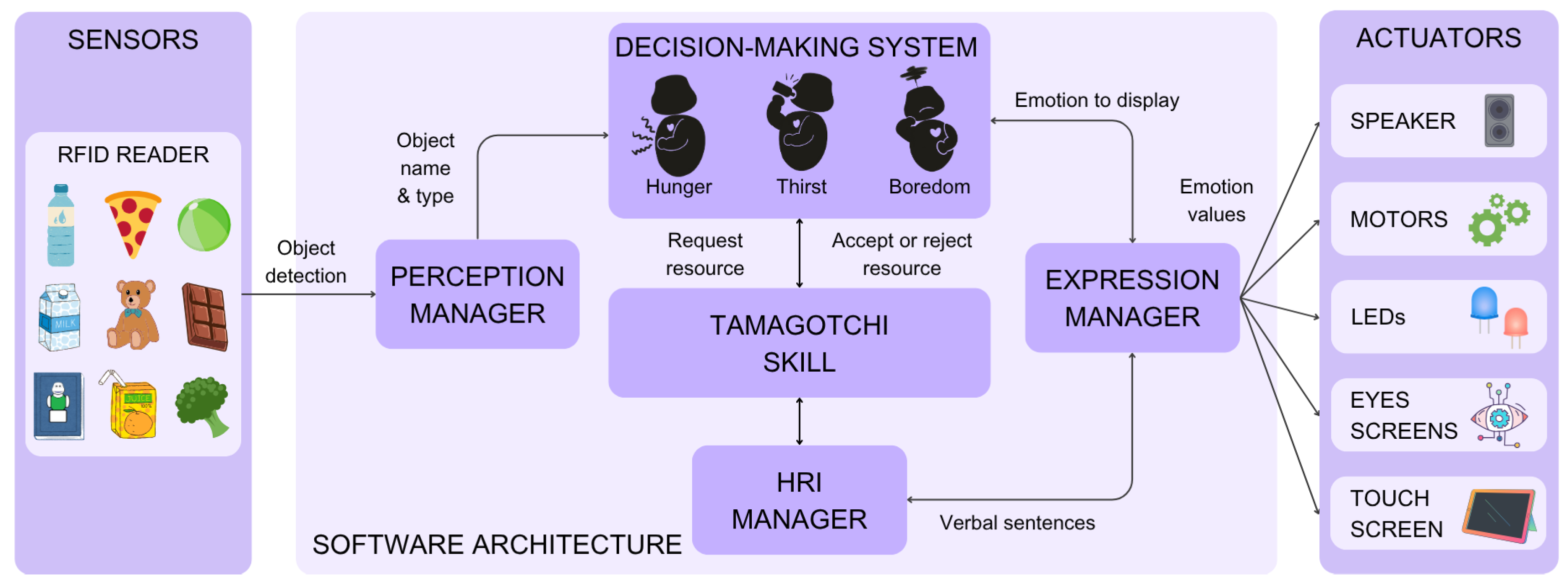

Figure 3 shows a detailed view of the Adaptive Object Preference System and its connection to the other software modules in the robot’s architecture, especially with the Decision-Making System that hosts the learning module. This behaviour involves the user in the interaction by promoting more frequent actions that intend to create human–robot bonds [14]. The three biologically inspired functions designed for the Mini robot are feeding, moisturising, and entertainment. These functions, whose parameters are in Table 1, have a value from 0 to 100 units, where 100 means the process is in perfect condition and 0 means the process needs restoration from the user. All processes start at their ideal value of 100 units. We define that the processes’ values change every 30 s to a random value between 0 and 100 units, creating an internal deficit. The corresponding deficits for the above processes are hunger, thirst, and boredom.
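The evolution of the three processes can be sketched in a few lines. This is a minimal illustration rather than the robot’s implementation: the class and method names are hypothetical, and computing a deficit as the distance from the ideal value of 100 units is an assumption made here for clarity.

```python
import random

PROCESSES = ("feeding", "moisturising", "entertainment")
DEFICIT_NAME = {"feeding": "hunger", "moisturising": "thirst", "entertainment": "boredom"}
IDEAL = 100.0            # ideal value of every process
UPDATE_PERIOD_S = 30.0   # values change every 30 s


class BiologicalProcesses:
    """Hypothetical container for the three simulated processes."""

    def __init__(self, rng=None):
        self.rng = rng or random.Random()
        # All processes start at their ideal value of 100 units.
        self.values = {p: IDEAL for p in PROCESSES}
        self._elapsed = 0.0

    def tick(self, dt_s):
        """Advance time; every 30 s each process jumps to a random value in [0, 100]."""
        self._elapsed += dt_s
        while self._elapsed >= UPDATE_PERIOD_S:
            self._elapsed -= UPDATE_PERIOD_S
            for p in PROCESSES:
                self.values[p] = self.rng.uniform(0.0, 100.0)

    def deficits(self):
        """Deficit per process, taken as the distance from the ideal value (assumption)."""
        return {DEFICIT_NAME[p]: IDEAL - v for p, v in self.values.items()}
```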

The user has to correct these deficits using RFID objects representing food, drinks, and toys. The RFID objects available are broccoli, pizza, and chocolate for the food category; milk, water, and orange juice for drinks; and a teddy bear, a green ball, and a book as toys. The robot has three actions related to each biological process: request, accept, and reject a resource. Requesting a resource occasionally becomes active to ask for something the robot needs. For example, when the robot is thirsty, it requests a drink by saying a specific sentence. Acceptance of a resource becomes active when the robot presents a biological deficit and the user gives the robot the appropriate object. For example, if the robot is bored and receives a teddy bear toy, the robot will thank the user. Finally, the robot rejects a resource if the user gives Mini an object that the robot does not need. For example, if the robot does not present any internal deficit and the user provides water, it will reject it. The sentences used to communicate the robot’s intentions verbally were generic before this work, only recognising the kind of object (food, drink, or toy) but not the specific object given.
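The accept/reject rule used before this work can be summarised as a lookup from the active deficit to the object category that corrects it. The sketch below is illustrative only; the dictionary and function names are hypothetical, while the objects, categories, and examples come from the description above.

```python
# Object cards and their categories, as listed in the text.
CATEGORY_OF = {
    "broccoli": "food", "pizza": "food", "chocolate": "food",
    "milk": "drink", "water": "drink", "orange juice": "drink",
    "teddy bear": "toy", "green ball": "toy", "book": "toy",
}
# Category that corrects each internal deficit.
NEEDED_CATEGORY = {"hunger": "food", "thirst": "drink", "boredom": "toy"}


def react(active_deficit, obj):
    """Return 'accept' or 'reject' for an object given the active deficit (or None)."""
    if active_deficit is None:
        # No internal deficit: any object is rejected.
        return "reject"
    needed = NEEDED_CATEGORY[active_deficit]
    return "accept" if CATEGORY_OF[obj] == needed else "reject"
```

With this rule, `react("boredom", "teddy bear")` yields an acceptance and `react(None, "water")` a rejection, matching the two examples in the paragraph. Note that the rule only looks at the category, which is why the earlier sentences could not distinguish between specific objects.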

The new proposal generates a more natural response by creating an Adaptive Object Preference System that produces dynamic reactions. In this update, acceptance or rejection depends on the biological internal need and whether the robot likes the object received. Preferences towards objects depend on two stages. First, we generate an initial value that depends on the kind of object and some randomness. Then, we dynamically adapt this value depending on factors such as how strongly the robot needs the object (improves liking) or how frequently it receives the same object (generates dislike).

3.3.1. Initial Preferences

The system assigns an initial preference value between $-1$ and 1 to each object the first time the user gives the robot an object. This value is based on its type and a stochastic component, allowing each robot to exhibit distinct preferences. The category of the object (i.e., food, drink, or toy in this setting) influences the likelihood of a positive or negative preference being assigned: food objects are liked with probability $p_{\text{food}}$, drinks with probability $p_{\text{drink}}$, and toys with probability $p_{\text{toy}}$, with $p_{\text{food}}$ being the lowest of the three. This implies that the robot is more likely to prefer toys and drinks than food. This initial assignment, defined empirically, is informed by human studies [36], which demonstrate that variations in flavour, colour, and odour in food elicit a broader range of emotional responses and preferences compared to drinks or toys.

Once the like (1) or dislike ($-1$) factor is determined, the system generates a random value between 0 and 1, which is multiplied by the like/dislike factor, producing a preference value for the object within the range of $-1$ to 1. Mathematically, the initial preference ($P_o^{0}$) for each object ($o$) is computed based on its type and a random factor. Equation (1) defines how the like/dislike factor ($l_o$) is determined, depending on a probability ($p_k$) associated with the kind of object ($k$). This factor is then multiplied by a uniformly distributed random scalar ($u$), which defines the initial preference ($P_o^{0}$), as expressed in Equation (2).

$$l_o = \begin{cases} 1 & \text{with probability } p_k \\ -1 & \text{with probability } 1 - p_k \end{cases} \tag{1}$$

$$P_o^{0} = l_o \cdot u, \qquad u \sim \mathcal{U}(0, 1) \tag{2}$$
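The two-step sampling of the initial preference (a like/dislike factor drawn from a category-dependent probability, then scaled by a uniform random value) could be sketched as follows. The probability values in the dictionary are placeholders chosen for illustration and are not the values used in the study; the only property they respect is that food is less likely to be liked than drinks or toys. The function name is likewise hypothetical.

```python
import random

# Placeholder like probabilities per category (assumed values, not from the study).
LIKE_PROBABILITY = {"food": 0.5, "drink": 0.7, "toy": 0.7}


def initial_preference(kind, rng):
    """Sample an initial preference in (-1, 1) for an object of the given kind."""
    # Step 1: like (+1) or dislike (-1) factor, drawn with the category probability.
    like_factor = 1.0 if rng.random() < LIKE_PROBABILITY[kind] else -1.0
    # Step 2: uniformly distributed magnitude in [0, 1).
    magnitude = rng.random()
    return like_factor * magnitude
```

Because the magnitude is drawn independently of the sign, two robots seeded differently will end up with distinct likes and dislikes for the same set of cards.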

3.3.2. Adaptive Object Preferences

The model uses RL to dynamically update the preference $P_o$ of each object the robot interacts with. RL is a biologically inspired method that enables an agent to learn adaptive behaviour through trial and error. It allows the agent to prioritise and respond to stimuli by interacting with a dynamic environment. Learning occurs by evaluating the benefits or drawbacks of performing a particular action under specific conditions, which is represented numerically as a reward. If an action yields a positive outcome, the reward is high; if it produces no beneficial effect, the reward is low [37].

We apply the Rescorla–Wagner rule [38], which updates predictions by comparing the reward the agent receives with its current estimate, to define the robot’s preferences towards each object. Used in psychology, cognitive modelling, and associative learning, this rule is widely used to represent reactive and adaptive systems, such as the Adaptive Object Preference System proposed in this work. Equation (3) presents the Rescorla–Wagner update adapted to our scenario. The system learns a preference value $P_o$ for each object $o$. These values are updated whenever the robot receives the object, allowing it to adjust its likes or dislikes through interaction. This mechanism enables the robot to display more natural and responsive behaviour over time.

$$P_o \leftarrow P_o + \alpha \, (r - P_o) \tag{3}$$

where $\alpha$ is the learning rate (ranging from 0 to 1), and $r$ is the reward value produced by the reward function, which is also bounded. $P_o$ is the preference for object $o$, which is incrementally updated based on the reward.
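As a small illustration of the Rescorla–Wagner update, the sketch below moves the current preference a fraction of the way towards the received reward. The function name and the learning rate used in the example are assumptions; the study’s actual learning rate is not reproduced here.

```python
def update_preference(preference, reward, learning_rate):
    """Rescorla-Wagner style update: move the estimate towards the reward."""
    if not 0.0 <= learning_rate <= 1.0:
        raise ValueError("learning_rate must lie in [0, 1]")
    # The prediction error is the gap between the reward and the current estimate.
    prediction_error = reward - preference
    return preference + learning_rate * prediction_error
```

For example, with a hypothetical learning rate of 0.1, a preference of 0.5 followed by a reward of $-1$ moves to approximately $0.5 + 0.1 \cdot (-1 - 0.5) = 0.35$. A small learning rate therefore changes preferences slowly, and a learning rate of 1 would replace the preference with the latest reward.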

The reward value ($r$) determines whether the robot increases or decreases its preference for an object compared to previous interactions. Three factors influence this value. First, there is the robot’s internal need for the object at the given moment ($n_o$). For example, if the robot is bored and receives a book, it is more likely to appreciate it. Second, there is the frequency with which the user presents the same object ($f_o$). If the user repeatedly provides the same item, the robot may begin to dislike it. Finally, a stochastic component ($\epsilon$), sampled uniformly from a fixed interval, introduces variability to ensure a more dynamic and less deterministic system. Equation (4) defines the calculation of the reward value, which is bounded.

The robot’s internal needs range from 0 to 100 units. Accordingly, the need factor ($n_o$) is rescaled so that its lowest value indicates no need for the object and its highest value, 1, indicates a high need for the object. The object frequency factor ($f_o$) is set to 0 if the object has not been received in the past 30 min. Otherwise, it takes a negative value proportional to the number of times the object has been presented within that time frame.
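One possible way to combine the three factors is sketched below. It should be read as an assumption-laden illustration, not as the reward function of the study: the additive combination, the clipping to $[-1, 1]$, the lower bound of $-1$ for the need factor, the penalty per repetition, and the noise width are all hypothetical choices. Only the 0 to 100 range of the needs, the upper bound of 1 for the need factor, and the 30 min window come from the description.

```python
import random

FREQUENCY_PENALTY = 0.1   # assumed penalty per presentation within the window
NOISE_HALF_WIDTH = 0.1    # assumed half-width of the uniform noise
WINDOW_S = 30 * 60        # 30 min window, in seconds


def need_factor(need_units):
    """Map a need in [0, 100] linearly to [-1, 1] (the lower bound is an assumption)."""
    return 2.0 * need_units / 100.0 - 1.0


def frequency_factor(presentation_times_s, now_s):
    """0 if the object was not presented in the window, negative otherwise."""
    recent = sum(1 for t in presentation_times_s if now_s - t <= WINDOW_S)
    return -FREQUENCY_PENALTY * recent


def reward(need_units, presentation_times_s, now_s, rng):
    """Assumed additive combination of the three factors, clipped to [-1, 1]."""
    noise = rng.uniform(-NOISE_HALF_WIDTH, NOISE_HALF_WIDTH)
    raw = need_factor(need_units) + frequency_factor(presentation_times_s, now_s) + noise
    return max(-1.0, min(1.0, raw))
```

Under these assumptions, an object that is strongly needed and rarely offered produces a reward close to 1, whereas an unneeded object offered many times in a row saturates at the lower bound.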

3.3.3. Emotional Responses

The robot generates an emotional response when it receives an object from the user. The emotional response depends on the preference value ($P_o$) and the number of times the robot has seen the object. The robot reacts with four of the six basic emotions that Ekman [39] identified across all cultures: joy, surprise, anger, and disgust. We excluded sadness and fear since we did not find a direct relationship between these emotions and our application.

The robot reacts with surprise when perceiving an object for the first time, since no predefined preference exists. In subsequent encounters, its emotional reaction depends on the learned preference value $P_o$. Specifically, the robot reacts with joy if it likes the object (positive preference), with anger if it moderately dislikes it (moderately negative preference), and with disgust if it strongly rejects it (strongly negative preference). In all other situations, the robot remains neutral. The model updates the emotional responses associated with each object dynamically every time the object is perceived.
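The selection of an emotion from the preference value can be sketched as a simple threshold rule. The three threshold constants below are hypothetical placeholders, since the exact boundaries between joy, neutral, anger, and disgust are not reproduced here; the function name is also an assumption.

```python
JOY_MIN = 0.0        # assumed: preferences above this value count as liking
ANGER_MAX = -0.2     # assumed upper bound of a moderate dislike
DISGUST_MAX = -0.6   # assumed upper bound of a strong rejection


def emotion_for(preference, times_seen):
    """Pick the emotional reaction for an object the user has just presented."""
    if times_seen == 0:
        # First encounter: no preference exists yet.
        return "surprise"
    if preference > JOY_MIN:
        return "joy"
    if preference <= DISGUST_MAX:
        return "disgust"
    if preference <= ANGER_MAX:
        return "anger"
    # Remaining values around zero (slightly negative) yield no emotion.
    return "neutral"
```

Since the rule is re-evaluated on every presentation, an object that starts out liked can drift to neutral, anger, or disgust as its learned preference decreases.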

The emotion ranges were inspired by Russell’s Circumplex Model of Affect [40], which conceptualises emotions in a two-dimensional space of valence (pleasantness) and arousal (activation). We map the valence dimension to the robot’s preference values: positive preferences to joy, moderate negative preferences to anger, and strong negative preferences to disgust. Neutral states are in subtly negative values, while surprise emerges when no preference has yet been defined, reflecting novelty and unexpected events. This mapping strategy has been previously applied in adaptive emotion generation for pet robot companions [31], supporting its relevance in social robotics.

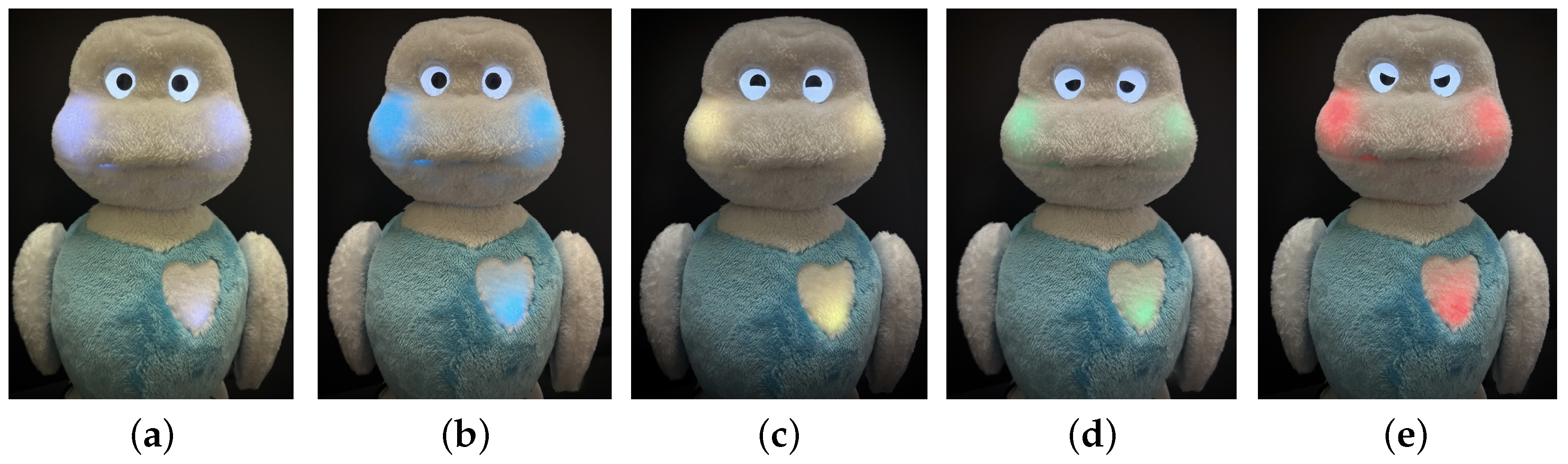

Emotions dynamically modulate the robot’s expressiveness. Figure 4 shows the different emotions in Mini. Given the actuation capacities of the robot, we modulate its eye screens in eyelid position, pupil size, gaze direction, blink frequency, and blink speed; its heart colour and beat speed; its cheeks and mouth colour; the sentences spoken by the robot; and its movements in terms of velocity and frequency. The actuators have predefined ranges. The robot can control the eyes’ blink frequency from 0 to 40 blinks per minute, the blink speed (time to open and close the eyelid) up to 1 s, and the pupil size from 2 mm to 8 mm. For the five motors (hip, two in the arms, and two in the head), the velocity can be set up to 100 rpm and activated at different time intervals. All actuator commands are rescaled from 0 to 100 units, where 0 means no intensity (e.g., no movements or blinking) and 100 means full intensity. The emotions’ parameters (frequency, time, and speed) were set from previous HRI studies with our Mini robot [10] that investigated whether people could perceive the emotions and mood shown by Mini. That study shows that, with these parameters, most people could correctly identify which basic emotion Mini was expressing. Table 2 shows the values each actuator takes to express the different emotions depending on the object preference value.
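The rescaling of actuator commands to the common 0 to 100 scale can be illustrated with a pair of conversion helpers. The linear mapping and the function names are assumptions made for this sketch; the ranges in the example (blink frequency from 0 to 40 per minute, pupil size from 2 mm to 8 mm) are the ones stated above.

```python
def to_units(value, low, high):
    """Linearly rescale a physical value in [low, high] to 0-100 units."""
    if high <= low:
        raise ValueError("high must be greater than low")
    clamped = max(low, min(high, value))
    return 100.0 * (clamped - low) / (high - low)


def from_units(units, low, high):
    """Convert a 0-100 command back to the actuator's physical range."""
    if high <= low:
        raise ValueError("high must be greater than low")
    clamped = max(0.0, min(100.0, units))
    return low + (high - low) * clamped / 100.0
```

Under this linear assumption, 20 blinks per minute corresponds to `to_units(20, 0, 40)`, i.e. 50 units, and a pupil command of 50 units corresponds to `from_units(50, 2.0, 8.0)`, i.e. 5 mm.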

4. User Study

The user study evaluates whether robot users perceive a robot companion acting as a pet-like virtual device with preferences towards objects and emotional responses as more natural and likeable than a robot without such adaptive behaviour. The following sections describe the hypotheses for the experiment, the participants’ information, the experiment dynamics, and the evaluation metrics.

4.1. Hypotheses

The evaluation presented in this study was conducted to assess three hypotheses related to the adaptive and expressive behaviour exhibited by the robot with the preference object learning system.

H1. Endowing a robot with preferences towards objects and emotional reactions would improve the robot’s naturalness. Therefore, participants should rate Robot B’s dimensions of Animacy, Intelligence, and Agency significantly higher than those of Robot A.

H2. Participants prefer the interaction with Robot B rather than with Robot A, as shown in the dimensions Likeability and Sociability and the responses provided in the qualitative analysis.

H3. Participants should recognise the preferences towards objects and the emotions displayed by Robot B and not perceive this behaviour in Robot A.

4.2. Participants

A total of 35 participants aged from 20 to 59 from the University Carlos III of Madrid completed the experiment, 23 men and 12 women. We recruited them by announcing the experiment at the university hall. They all voluntarily agreed to participate without receiving any reward other than the possibility of meeting a social robot. One participant reported very low technology knowledge, four participants reported moderate knowledge, nine participants reported high knowledge, and 21 participants reported very high knowledge. Regarding their robotics knowledge, three participants reported very low knowledge, none reported low knowledge, four reported moderate knowledge, 14 reported high knowledge, and 14 considered themselves experts, reporting very high knowledge. Finally, of the 35 participants, 30 had previously interacted with Mini in other contexts, but none had previously used the Mini robot acting as a virtual Tamagotchi pet with emotions.

4.3. Experiment Dynamics

The experiment dynamics consisted of each participant entering a room with a moderator who explained the experiment. Figure 5 shows the experiment scenario, and the video available at https://www.youtube.com/watch?v=w3_8pzk9meU (accessed on 25 July 2025) shows the system working in real time. The room had two Mini robots with the same appearance placed on the left and right side (tagged with letters A and B, respectively) of a table with their touch screens in front and nine RFID object cards representing food (broccoli, pizza, and chocolate), drinks (water, milk, and orange juice), and toys (teddy bear, green ball, and book). The objects were in the centre of the table between both robots with a chair in front of the table where the user had to sit. Both robots were ready, showing a green Start button on their touch screens. Each robot stayed idle until the participant started the interaction with it.

For the short-term experiment, we decided to pre-set some preferences so that all the emotional reactions the robot can perform would appear. Letting them emerge through learning would be challenging in a short session, since the learning rate defined for the model varies preferences very slowly. Moreover, since initial preferences depend on the kind of object and a random factor determining whether the robot likes or dislikes each object, participants could otherwise experience very different and incoherent sessions, such as the robot liking all objects simultaneously. The robot therefore started by liking one object of each type (chocolate, teddy bear, and orange juice) with a positive preset preference value and disliking one of each type (broccoli, milk, and book) with a negative preset preference value. The other objects (pizza, water, and green ball) started with no preference (0 units). Preferences were reset when a new session started to ensure all participants perceived the same experience. In the long-term robot behaviour, by contrast, these preferences are not reset but saved to define a unique preference system for each robot. Once initial preferences were set, they evolved during the experiment, depending on the user’s actions, following the long-term model definition.

Before starting the interaction, all participants signed a consent privacy form and completed an initial demographic survey on a laptop, asking about their age (open numerical answer), gender (man, woman, other), technology knowledge (5-point Likert scale), robotics knowledge (5-point Likert scale), and whether they had previously interacted with Mini. Once the participant completed the initial survey, the moderator gave basic instructions about the experiment using the same speech: “You have to interact with two social robots with the same appearance in different turns. They will ask you to help them with some things they need. You can interact freely with them and perform the actions you prefer. You decide which one to interact with first. The interaction with each robot starts when you press the green start button on the touch screen. You can pass an object over their belly to interact with them, which is just above the camera. Once you start, a red finish button will appear on the touch screen; press it when you want to finish interacting. After interacting with each robot, you must complete a questionnaire on the laptop, which is identified as Robot A or B. After completing this task, you can use the other robot. Never use both robots at the same time. When you finish both, take the final questionnaire on the laptop”. Questionnaires were ready and opened on the laptop.

After this explanation, the moderator left the room, and the user could start the experiment by choosing the robot they preferred. Participants who chose Robot A first interacted with a general non-expressive robot. The robot requested objects depending on its internal needs (food if hungry, drink if thirsty, and toys if bored). Internal needs and object requests arose at random intervals of 10 to 20 s to avoid overwhelming the user, and only one object type was requested at a time. That is, the robot could not be hungry and thirsty simultaneously. This was programmed so that the robot reacted to only one object at a time, avoiding simultaneous object detections that might lead to overlapping or incorrect responses. Participants could decide when to show objects to the robot. If a user showed an object and the robot needed it (for example, being bored and receiving a book), the robot reacted with a positive sentence thanking the user. If the user gave an object that Mini did not need, it responded with a rejection sentence informing the user that it did not need that object at the time. Robot A showed no expressiveness except random movements and general verbal sentences of acceptance, rejection, and requests for different object types (see Appendix A for the whole robot speech during the experiment). The participant could voluntarily finish the interaction with Robot A by pressing the finish button on the touch screen. No time limit was set. After finishing with Robot A, the robot returned to an idle state, displaying the start button again. The participant then had to complete a questionnaire about Robot A using the laptop.

The interaction with Robot B followed the same dynamics as that with Robot A. The interaction started when the participant pressed the start button on the touch screen and ended when the participant pressed the finish button. The robot made requests in the same way as Robot A. However, the robot’s responses varied depending on the user’s action (see Appendix A for the whole robot speech during the experiment). Object rejection or acceptance depended on whether the robot needed the object and whether the robot liked it. If the robot needed and liked the object, it reacted joyfully and thanked the user for giving it the required object. If the robot did not need or did not like the object, it showed negative emotions and rejected it. All emotional responses modulated the robot’s eye expressions, motion in terms of velocity and frequency, cheeks and heart colour, and heartbeat frequency. Robot B personalised the rejection or acceptance sentence to the object received (e.g., thank you for giving me orange juice, I was so thirsty). Once participants had interacted with both robots, they completed a final qualitative survey. Then, they left the room, and the experiment concluded.
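The difference between the two conditions can be summarised with a minimal sketch. It is only an illustration of the acceptance rules described above, not the robot's actual software: the `Reaction` type, the function names, and the emotion labels are hypothetical, and objects without a defined preference are deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class Reaction:
    accepted: bool
    emotion: str  # hypothetical label; "none" stands for the non-expressive baseline


def robot_a_reaction(needed: bool) -> Reaction:
    """Baseline robot: accepts an object only when it is needed, with no emotion."""
    return Reaction(accepted=needed, emotion="none")


def robot_b_reaction(needed: bool, liked: bool) -> Reaction:
    """Expressive robot: accepts joyfully only when the object is needed AND liked.

    Any other combination is rejected with a negative emotion. How objects with
    no preference are handled is not covered by this sketch.
    """
    if needed and liked:
        return Reaction(accepted=True, emotion="joy")
    return Reaction(accepted=False, emotion="negative")
```

Under these assumptions, a needed but disliked object is accepted by Robot A and rejected by Robot B, which matches the increased object rejection that participants reported for the expressive robot.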

4.4. Evaluation Procedure

Participants had to complete four questionnaires during the experiment: the first before starting the interaction, the second and third after interacting with Robots A and B, and a final questionnaire before leaving the room. The evaluation procedure, whose questionnaires are in Appendix B, was the following:

The first survey retrieved demographic data: age (open numerical answer), gender (man, woman, other), technology knowledge (5-point Likert scale), robotics knowledge (5-point Likert scale), and whether they had previously interacted with Mini (yes/no). This questionnaire was administered following the procedure stated in similar social robotics research [29].

The second and third questionnaires were identical but differentiated between Robot A and B. They asked the user to rate different robot attributes to define a score for the dimensions Animacy, Likeability, and Perceived intelligence from the Godspeed questionnaire series [19] and the dimensions Sociability and Agency from the HRIES questionnaire [20]. Then, we included a question where participants could enumerate (open text) the emotions they perceived in the robot. Finally, we asked participants whether they perceived robot preferences towards objects (Yes/No). The last part of the questionnaire was conducted following our previous work where we evaluated Mini’s emotions [10].

Finally, we asked which robot the participants preferred (A, B, or indifferent) and included an open-text question asking why the participant chose that robot and inviting comments and suggestions. This last part aimed to obtain the participants’ conclusions and suggestions about the experiment, as in most qualitative HRI studies (e.g., [5, 29, 32]).

5. Results

This section presents the quantitative and qualitative outcomes of the study. First, we present an ablation study showing the influence of the parameters on the model, followed by the interaction data obtained during the experiment and the questionnaire results for Robots A and B. Then, a qualitative analysis describes which emotions and object preferences the participants recognised and which robot they liked the most.

5.1. Quantitative Results

5.1.1. Ablation Study

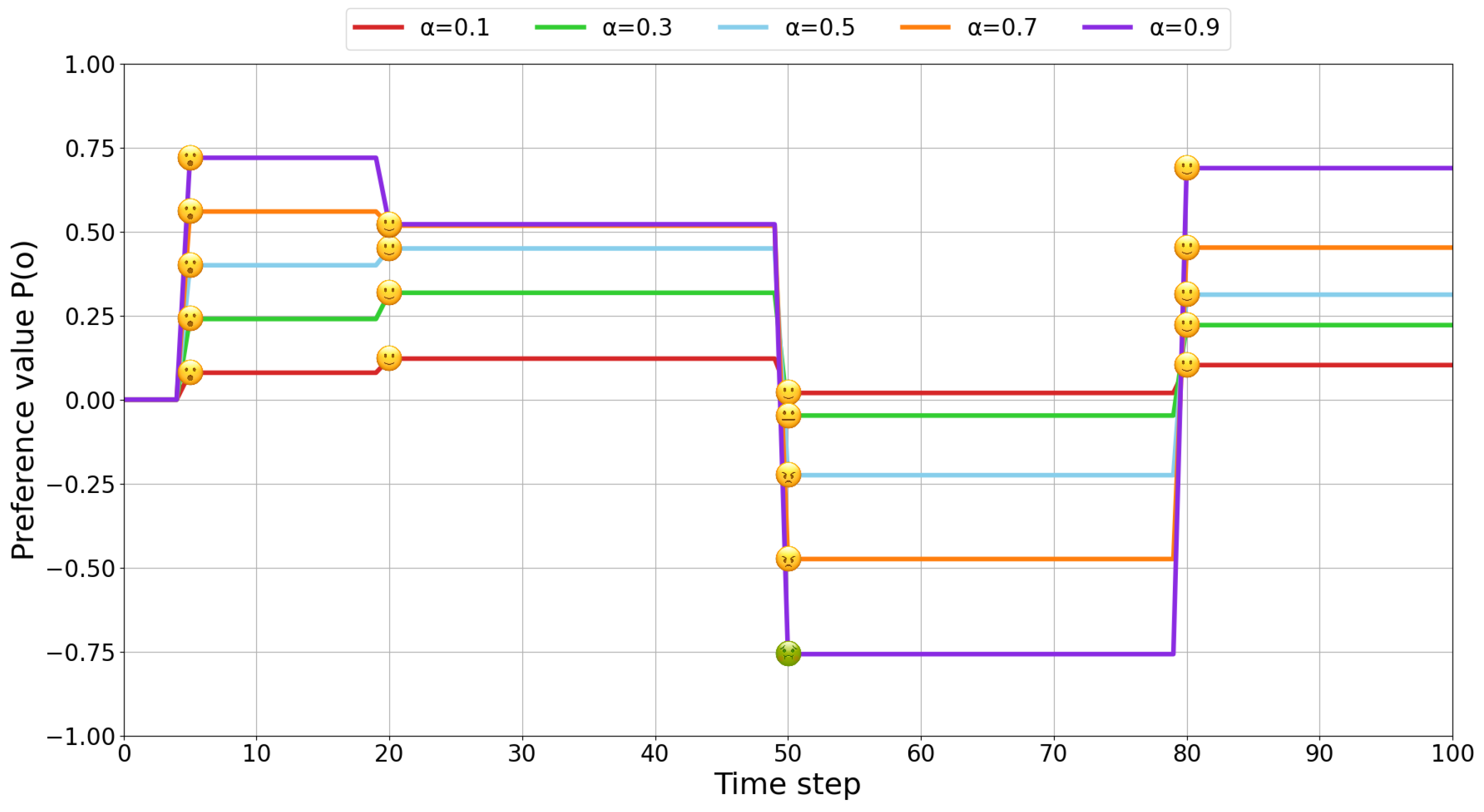

Figure 6 shows the model performance under different learning rates and the robot’s emotional responses when receiving the object. The learning rate regulates how quickly preferences adapt to new rewards. High learning rates promote fast adaptation, producing significant changes in preferences and in the robot’s expressions. Conversely, low learning rates lead to slower, more stable updates and emotional responses.

In this scenario, the robot receives a toy object four times at steps 5, 20, 50, and 80. The first time (step 5), the robot receives a high positive reward due to the kind of object () and the random preference that, in this case, makes the robot like the object. This fact produces a surprise reaction for all learning rates, as no prior preference exists, but different preference values do (higher for higher learning rates). At the time step of 20, the robot moderately needs the toy, and the user gives it to the robot, obtaining a moderate positive reward () and responding this time with joy in all situations. For high learning rates, the preference value decreases slightly, moving to the new reward value of . For low learning rates, the preference value increases since it was below the new reward. At step 50, the object is provided when unnecessary, generating a negative reward (). High learning rates produce big preference changes, leading to anger or disgust, while low learning rates maintain a joyful or neutral state since the preference value does not suffer a big decrease. Finally, at step 80, the robot needs the toy again, receiving a substantial positive reward, which restores joy across all learning rates with stronger changes for higher values.

This experiment highlights the impact of the learning rate on the preference values and the robot’s emotional consistency. Since we aim to model long-term robot behaviour, abrupt preference changes could cause inconsistent emotional expressions toward the same object. To ensure gradual adaptation and emotional stability, we selected a learning rate of as it provides smoother and more reliable preference dynamics.
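The qualitative behaviour described in this ablation can be reproduced with a short sketch. It assumes the preference is updated as an exponential moving average of the rewards, which is consistent with the description above but is not confirmed as the exact update rule of the model. The reward sequence and the two learning rates are hypothetical values chosen only for illustration.

```python
def update_preference(preference: float, reward: float, learning_rate: float) -> float:
    """Move the preference towards the new reward by a fraction `learning_rate`.

    Assumed rule: p <- p + lr * (r - p). This is an illustrative choice.
    """
    return preference + learning_rate * (reward - preference)


def simulate(rewards, learning_rate: float, initial: float = 0.0):
    """Return the preference value after each received reward."""
    history = []
    preference = initial  # no prior preference before the first encounter
    for reward in rewards:
        preference = update_preference(preference, reward, learning_rate)
        history.append(preference)
    return history


# Hypothetical rewards for the four encounters with the toy:
# liked on first encounter, moderately needed, not needed, strongly needed.
rewards = [0.8, 0.5, -0.6, 0.9]

fast = simulate(rewards, learning_rate=0.9)  # hypothetical high learning rate
slow = simulate(rewards, learning_rate=0.1)  # hypothetical low learning rate
```

With these illustrative numbers, the high learning rate overshoots after the first reward and then drops towards the second one, while the low learning rate keeps rising. After the negative reward, only the high learning rate pushes the preference below zero, mirroring the contrast between abrupt and stable emotional responses.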

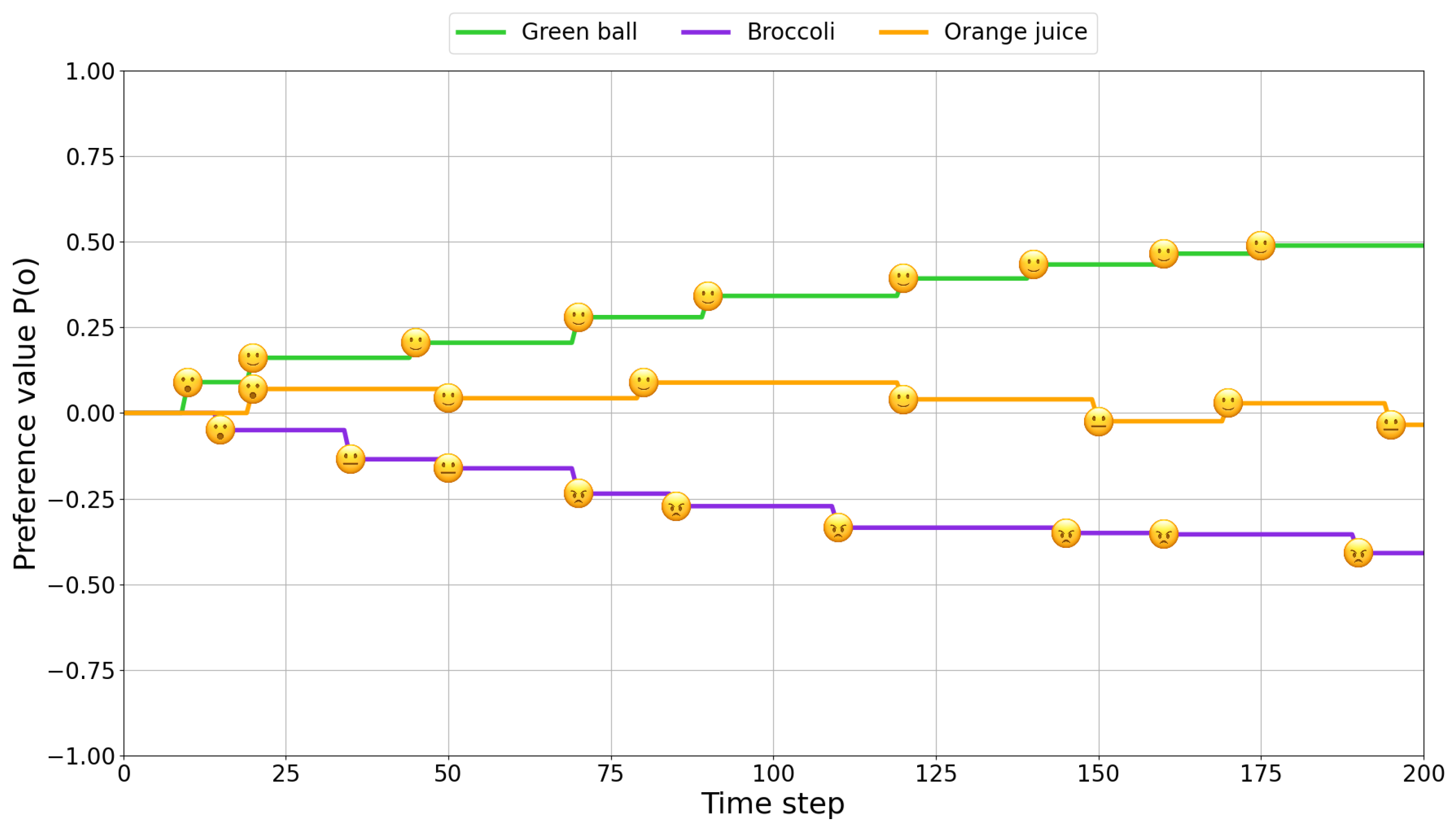

Figure 7 compares the model performance for different objects and dynamics after fixing the learning rate to

. The figure shows three different tendencies: the robot liking the green ball, with the model producing high positive rewards throughout; the robot disliking the broccoli, with a negative reward each time it received the object; and a fluctuating preference towards the orange juice, which the robot moderately liked or disliked depending on when it received the object.

The robot reacts with surprise the first time it receives the green ball. Since it is a toy, the robot’s first reward to define this object’s preference is because the random factor decided the robot liked it. Then, the robot receives this object many times at appropriate moments (when the robot needs to play), increasing the preference value towards the green ball, with the robot responding joyfully. The second case shows the opposite tendency for the broccoli object. The first time the robot receives the object, it receives a reward value of since it is food, and the robot did not like it due to the random factor. The robot reacts with surprise at this first encounter. In subsequent time steps, the preference value worsens since the robot receives the broccoli when it does not need it. Therefore, the robot expresses a neutral state the first two times it receives the broccoli and anger in subsequent ones. Finally, the orange juice object follows a more varied response. The robot likes the orange juice the first time it receives this drink (reward value of ). However, the following times the robot receives orange juice, the preference fluctuates between and points, leading the robot to express joy or a neutral state during the interaction.

5.1.2. Interaction Data

The experiment reported interesting interaction metrics that are noteworthy to mention. The average full session time, including the questionnaires, was s with a maximum session time of s and a minimum session time of s.

Table 3 shows the interaction metrics by robot. Robot A was chosen first 20 times while Robot B was chosen 15 times. The interaction time (without including the questionnaire time) for Robot A was

with a maximum interaction time of

s and a minimum of

. On the other hand, the participants spent with Robot B

s with a maximum time of

and a minimum of

s. Participants dedicated more time to the robot they chose first. When Robot A was chosen first, participants dedicated

s for the interaction with this value dropping to

when Robot A was chosen second. Participants who chose Robot B first spent

s for the interaction, while when they chose Robot B as the second option, they spent

s on average.

Finally, we computed the times participants gave objects to the robots. On average, participants gave objects to the robot per session. The results show that participants interacting with Robot B used more objects () than when they interacted with Robot A ().

5.1.3. Statistical Analysis

We conducted a statistical analysis using SPSS software v26 to compare the scores provided by the participants for the dimensions of Likeability, Animacy, Perceived intelligence, Sociability, and Agency. We performed normality tests using the Shapiro–Wilk test. The normality results, shown in Table 4, indicate normality for the dimensions Animacy and Perceived intelligence and non-normal distributions for Likeability, Sociability, and Agency. Based on the normality results, we performed the parametric paired t-test for the dimensions Animacy and Perceived intelligence and the non-parametric Wilcoxon signed-rank test for Likeability, Sociability, and Agency.

We calculated effect sizes using Cohen’s d [

41] for parametric tests and Rosenthal’s r [

42] for non-parametric tests. The significance level was maintained at

, following this rationale: (i) all hypotheses were defined beforehand; (ii) the dependent variables are not redundant and do not originate from a single underlying construct (i.e., they are not multiple measures of the same variable); and (iii) applying strict corrections in studies with a large number of hypotheses can substantially increase the risk of Type II errors. This approach—avoiding corrections for multiple comparisons under these conditions—has also been supported in the literature [

43].
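The two effect sizes mentioned above can be illustrated with a minimal sketch. It assumes the paired-samples variant of Cohen’s d (mean of the paired differences divided by their standard deviation) and the usual form of Rosenthal’s r (the absolute Z statistic divided by the square root of the number of observations). The analysis itself was run in SPSS, so the function names here are hypothetical and the exact variants used in the study may differ.

```python
import math
import statistics


def cohens_d_paired(scores_a, scores_b):
    """Cohen's d for paired samples: mean difference / SD of the differences.

    Assumes both lists hold one score per participant, in the same order.
    """
    differences = [b - a for a, b in zip(scores_a, scores_b)]
    return statistics.mean(differences) / statistics.stdev(differences)


def rosenthal_r(z_statistic: float, n_observations: int) -> float:
    """Rosenthal's r for a non-parametric test: |Z| / sqrt(N).

    Whether N counts pairs or all observations is a convention that must be
    stated alongside the result; this sketch takes N as given.
    """
    return abs(z_statistic) / math.sqrt(n_observations)
```

For example, a Wilcoxon Z statistic of -2.0 over 16 observations would give r = 0.5 under this convention.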

We also calculated Cronbach’s

[

44] to assess the internal consistency of the questionnaires for Robot A and Robot B conditions. We compared the results to the Godspeed [

19] and HRIES [

20] scales. For Robot A,

values are

for Likeability,

for Animacy,

for Perceived intelligence,

for Sociability, and

for Agency. For Robot B, the values obtained are

for Likeability,

for Animacy,

for Perceived intelligence,

for Sociability, and

for Agency. These values are slightly higher than those reported in the original questionnaires, where Cronbach’s

was from

for Likeability,

for Animacy,

for Perceived intelligence,

for Sociability, and

for Agency. These values indicate high internal consistency since all dimensions were higher than

points, demonstrating that the questionnaires reliably measured the intended constructs.
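As a reference for how this reliability coefficient is obtained, the following sketch computes Cronbach’s alpha from raw item scores using the standard formula: k/(k − 1) multiplied by one minus the ratio between the sum of the item variances and the variance of the participants’ total scores. The data layout (one list of participant scores per questionnaire item) and the function name are assumptions made for illustration.

```python
import statistics


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    `items` is a list with one entry per questionnaire item; each entry is a
    list with one score per participant, in the same participant order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sum of the sample variances of each individual item.
    item_variance_sum = sum(statistics.variance(scores) for scores in items)
    # Variance of each participant's total score across all items.
    totals = [sum(participant_scores) for participant_scores in zip(*items)]
    total_variance = statistics.variance(totals)
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)
```

Two items that every participant rates identically yield an alpha of 1, whereas weaker agreement between items lowers the coefficient.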

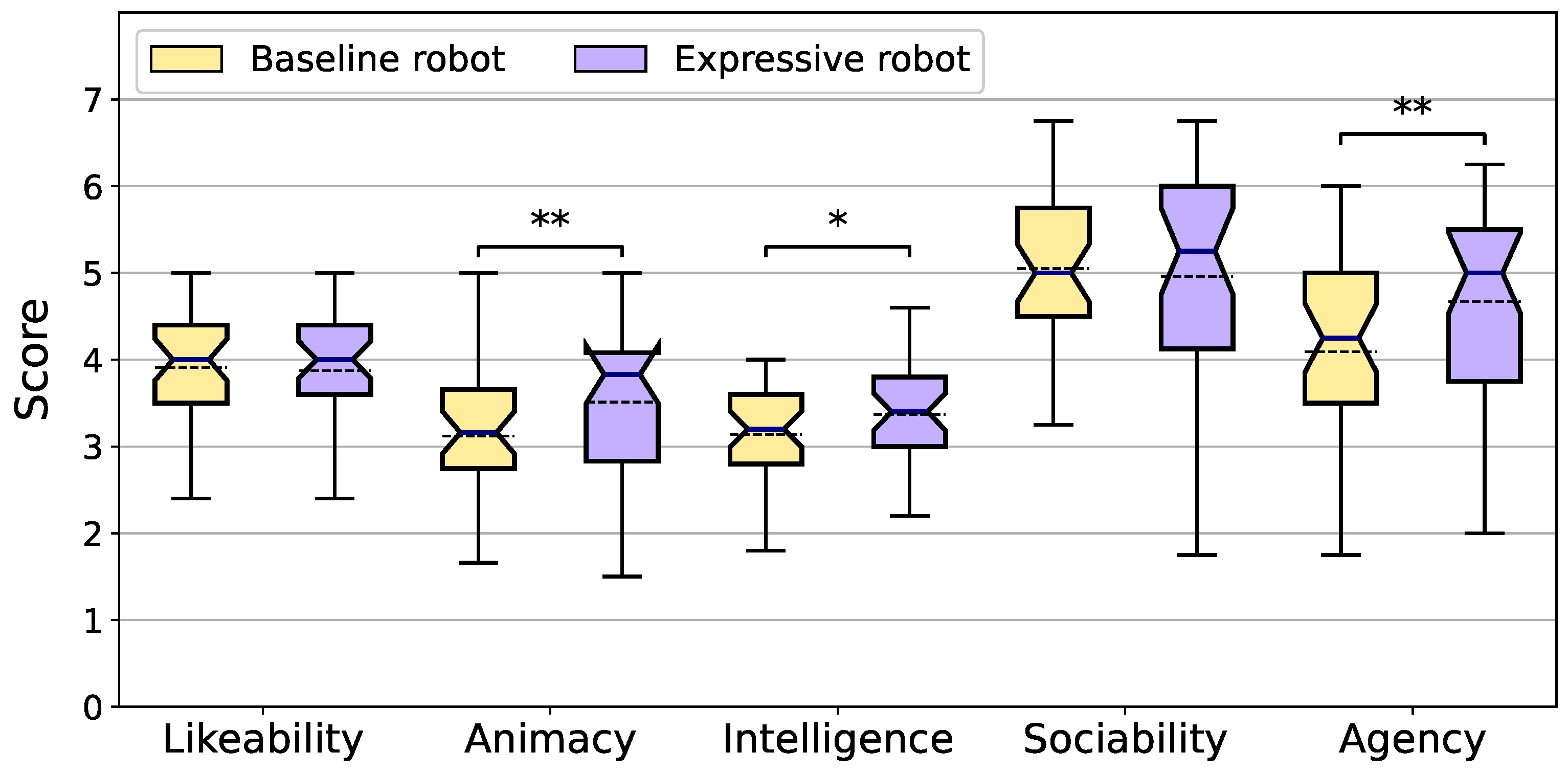

The analysis, represented in

Figure 8 as a boxplot, revealed significant differences between the Robot A Baseline and Robot B Expressive conditions on several measured variables. Specifically, for

Animacy, the paired samples

t-test indicated a significant increase in ratings for Robot B compared to Robot A,

,

, with a medium-to-large effect size (

). Similarly,

Perceived intelligence showed a significant improvement in the expressive robot,

,

, with a medium effect size (

).

Agency ratings were significantly higher for Robot B, with a large effect size,

,

,

, suggesting that the expressive robot was perceived as having considerably more agency.

In contrast, no significant differences were found for Likeability (, , ) or Sociability (, , ) using the Wilcoxon Signed-Rank test. These results indicate that participants’ perceptions of these variables did not change meaningfully between the two robots.

We applied the Benjamini–Hochberg procedure [

45] to account for multiple comparisons across the different dimensions and control the false discovery rate (BH-FDR), ensuring that the observed differences between Robot A and Robot B are statistically robust. For dimensions related to

(Animacy, Intelligence, Agency), differences remained significant after FDR correction: Animacy (

,

), Intelligence (

,

), and Agency (

,

). For the dimensions related to

(Likeability and Sociability), no dimension is significant after applying the correction (Likeability:

,

; Sociability:

,

).
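The Benjamini–Hochberg adjustment can be sketched in a few lines. This is a generic implementation of the step-up procedure and not the tool used for the reported analysis; the function name is hypothetical. Each p-value is multiplied by the number of tests divided by its rank, and monotonicity is then enforced from the largest p-value downwards.

```python
def benjamini_hochberg(p_values):
    """Return BH-adjusted p-values in the same order as the input."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value to the smallest so that each adjusted
    # value never exceeds the adjusted value of a larger raw p-value.
    for rank in range(m, 0, -1):
        index = order[rank - 1]
        running_min = min(running_min, p_values[index] * m / rank)
        adjusted[index] = running_min
    return adjusted
```

A dimension remains significant after correction when its adjusted p-value stays below the chosen significance level.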

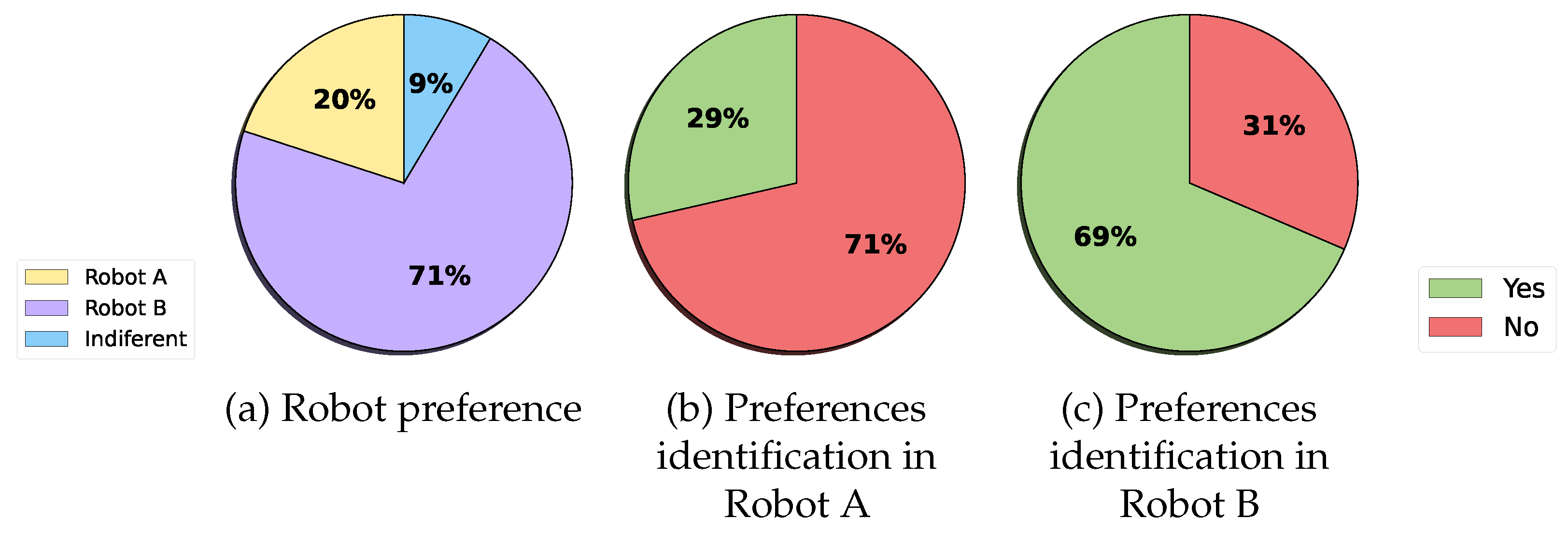

Figure 9 shows pie charts representing the percentage of people who preferred Robot A, preferred Robot B, or showed indifference; the people who incorrectly identified preferences towards objects in Robot A; and the people who correctly identified Robot B’s preferences towards objects. Most people (25 out of 35) preferred Robot B over Robot A (7 out of 35).

The remaining three participants showed indifference towards their preferred robot, suggesting they did not perceive changes between Robot A and B. When asked whether they perceived that Robot A had preferences towards objects, 10 out of 35 incorrectly indicated yes, while the other 25 indicated no (Robot A did not show preferences). On the other hand, 24 people correctly identified Robot B’s preferences, while the other 11 participants reported not perceiving preferences.

5.2. Qualitative Results

The qualitative study aimed to obtain users’ opinions about which emotions they perceived using an open text question. We also asked participants why they preferred each robot and to provide comments and suggestions about the experiment.

5.2.1. Emotion Perception

Although Robot A was not designed with emotions, many participants perceived emotions in it. The most reported terms were joy, surprise, and curiosity. Several users mentioned kindness, tenderness, or playfulness, indicating a tendency to humanise the robot based on movement or timing rather than explicit cues. Some interpreted its actions as signs of hunger or thirst, linking physical interaction patterns to internal states.

Many participants stated they perceived no emotion or felt indifference, boredom, or confusion. Comments such as no emotion, none, indifferent, or monotonous suggest that Robot A’s lack of expressiveness was noticed and sometimes criticised. A few users described the robot as passive, distant, or cold, interpreting its limited variation as emotional flatness. While some tried to rationalise behaviours as signals of internal needs, others dismissed them as mechanical or repetitive. A small number referred to frustration, impatience, or abandonment.

Participants widely reported perceiving a range of emotions in Robot B. The most frequently detected emotion was joy, which was often connected to situations where the robot received preferred objects. Many users described the robot as happy, playful, or enthusiastic, suggesting its positive emotional displays were easily recognised. Emotions such as anger, disgust, and surprise were also frequently identified, usually in response to disliked objects or unexpected behaviours. This indicates that participants were sensitive to the robot’s expressive cues and could distinguish between emotional reactions. Some participants also noted curiosity, tenderness, and satisfaction. A few participants described the robot as confused, tired, or frustrated, showing that users inferred emotions even from ambiguous or repetitive behaviours.

In Robot B, many participants reported motivational or physiological states, such as hunger, thirst, or boredom, associating certain behaviours with internal needs. A few participants criticised the robot as monotonous or repetitive, but even these comments included emotional terms such as serenity or boredom. No participants reported confusion or inability to interpret the robot’s behaviour, suggesting that the emotional cues were accessible to most users.

5.2.2. Robot Preference

Most participants preferred Robot B, citing its greater emotional expressivity, visual cues, and human-like feedback as key reasons. Participants generally highlighted robot features such as coloured lights, eye movements, and facial elements (cheeks and heart) that helped convey emotions like anger, satisfaction, or curiosity. Some participants perceived that Robot B reacted more clearly to different objects, showing preferences and making interactions more natural and engaging. Others described it as more expressive, friendly, or alive, reinforcing the perception that emotional cues improve HRI. Some participants found the robot’s reactions more informative or emotionally coherent, especially when accepting or rejecting objects. One participant stated that the robot’s emotional feedback felt close to a human interaction despite speaking less than Robot A.

Those who preferred Robot A tended to value its consistency, the clarity of its requests, and its verbal coherence. The robot’s responses were perceived as more straightforward, making it easier to understand what it was asking for. A few participants found Robot B’s emotional responses to be negative, intense, or uncomfortable, noting that strong expressions of anger could feel like a personal failure. Others perceived that Robot A responded to all RFID cards and was more coherent in its instructions, which made the interaction more manageable. In these cases, the preference reflected a desire for predictability and clarity over affective richness. Finally, a few participants reported no strong preference between the two robots, indicating that both appeared similar in functionality or failed to elicit a distinct emotional impression.

5.2.3. General Suggestions

Participants provided many suggestions concerning both robots’ interaction quality, system responsiveness, and emotional coherence. One of the most recurring topics was the importance of timely and precise feedback, particularly when presenting objects to the robot. One participant wrote that “clear emotional feedback makes it easier to consider what the robot wants at each moment”. This fact highlights the importance of multimodal expressivity, especially in emotionally interactive systems.

Several participants offered constructive design suggestions, such as integrating camera-based object detection or allowing voice interaction to make the experience more intuitive and organic. Others proposed improvements in interface responsiveness, noting that the “Finish” button lacked visual or auditory feedback, and both robots were slow to start interacting. Despite these limitations, many users found the experience fun and interesting, particularly when the robot reacted with expressive features like stomach sounds or lighting effects. A few also suggested refining the robot’s preferences and reactions to avoid inconsistent or overly strong emotional displays. Participants appreciated the system’s potential but emphasised the need for greater responsiveness, emotional coherence, and interactional clarity to enhance the overall user experience.

6. Discussion

The hypotheses defined in

Section 4.1 aimed to determine whether endowing a social robot with preferences and emotions towards the objects they used in their interactions with people enhances the perception of the robot’s naturalness (

) through the dimensions Animacy, Perceived intelligence, and Agency and the robot’s likeability (

) through the dimensions Likeability and Sociability. In addition, we explored with

whether participants recognised preferences and emotions in Robot B and not in Robot A.

Cronbach’s α indicated high internal consistency in all questionnaire dimensions, confirming the reliability of the scales. The Animacy, Perceived intelligence, and Agency results confirm our initial hypothesis, since Robot B was perceived as significantly more animated, intelligent, and agentic than Robot A. These dimensions refer to the robot’s capacity to exhibit behaviour similar to humans, leaving aside its machine-like appearance and exhibiting rational and decision-making capacities that improve its capacity to solve complex tasks. In addition, the participants’ opinions in the qualitative analysis suggest they positively perceived these features in Robot B, highlighting key aspects such as naturalness or human-like behaviour.

The statistical analysis outcomes did not confirm the likeability hypothesis. The scores associated with the Likeability and Sociability dimensions did not show significant differences between Robots A and B. Participants rated Robot A slightly above Robot B in the Sociability dimension. The explanation for this outcome can be found in the comments provided in the qualitative survey, where participants could report their opinion about the two robots at the end of the experiment. In the open text questions, some participants indicated they found the instructions, explanations, and reactions of Robot A much simpler than those of Robot B, making it easier to follow and understand during the experiment. However, they clearly reported that Robot A was cold and unresponsive, while they mostly agreed that Robot B was more emotional and reacted better to the interaction events. These opinions might explain why some people reported in the questionnaires that they liked Robot A more (easier to understand), although they found Robot B more sociable (the reactions are richer). The statistical results do not support our initial hypothesis, but participants did report a primary preference for Robot B rather than Robot A. These results suggest that further experiments with longer-lasting interactions and more interaction possibilities might be needed to analyse whether the subtle details of each expression or sentence performed by the robot affected the Likeability and Sociability dimensions. Another possible reason is that the Godspeed and HRIES questionnaires could not capture the participants’ real beliefs, possibly due to the specific nature of the pet-like interaction or the wording of the scale items. Finally, according to the participants’ answers in the qualitative study, Robot B’s negative emotions, such as anger and disgust, as well as the increased object rejection caused by the robot’s preferences, negatively affected the participants’ perception of this robot’s likeability, since they indicated they did not like that Robot B showed negative reactions.

The last hypothesis

evaluated in this study was confirmed by the findings presented in

Figure 9 and the qualitative analysis. Most people (

) reported that they perceived Robot B’s preferences towards objects and the emotions it showed when receiving an object. On the other hand, most participants did not perceive preferences towards objects in Robot A (

). The emotions they perceived were mostly joy, anger, and physiological states, although the latter are not emotions. Those participants who perceived joy and anger in Robot A might have been influenced by the robot’s generic acceptance and rejection sentences when receiving an object. Recall that Robot A did not have preferences or emotions. However, it accepted the objects it needed and rejected those it did not need with generic sentences, which might have affected the perception of preferences and emotions, especially for those who interacted with Robot A first.

The interaction metrics in

Table 3 help understand the results. Participants used objects to satisfy the robot’s needs more often with Robot B than with Robot A (

vs.

actions on average), which suggests they enjoyed interacting more with the robot that displayed preferences and emotional reactions and, therefore, supported

. By contrast, participants spent more time with Robot A than with Robot B (

vs.

s on average). At first glance, this may seem inconsistent with the reported preference for Robot B. However, the interaction metrics offer a plausible explanation. Participants spent longer with the robot they encountered first, likely because they were learning how to interact and exploring its behaviour. As Robot A was chosen first more often than Robot B (20 vs. 15 participants), the higher mean interaction time for Robot A need not reflect greater engagement or enjoyment. Although the order in which participants interacted with the robots clearly influenced interaction time, we did not find any statistically significant order effects on the outcome measures. Similarly, while Robots A and B differed subtly in the speech used when reacting to objects provided by participants, this variation did not appear to influence the interaction results.

Compared to the literature, our results extend previous research on adaptive and personalised behaviour in robot companions by improving dimensions such as Animacy, Perceived intelligence, and Agency when robots display biologically inspired expressive behaviours based on preferences and emotion. Past studies highlight the importance of user characteristics, naturalness, and personalisation in robot acceptance and trust [22, 25, 26]. Our results reported statistical differences in user perceptions across key Godspeed dimensions. Unlike studies such as Umbrico et al. [27] and Villa et al. [17], which qualitatively highlight the potential of adaptive behaviours but do not provide empirical validation, our assessment shows statistical support for the benefits of expressiveness in HRI. In addition, compared to previous studies our research group conducted with the Mini robot [15], the values obtained for Likeability decrease from

points to

, for Animacy from

points to

, and for Perceived intelligence from

points to

. Although these values are lower than in the previous study, a direct comparison is impossible since the tasks differ. Our results reinforce the idea of Bhat et al. [18] that expressive and personalised behaviours are paramount to obtain user acceptance, trust, and engagement with companion robots, contributing methodological and empirical insights to the growing literature on socially assistive robotics.

7. Limitations

This study had technical and societal limitations worth mentioning. On the technical side, Robot A had to be restarted twice for different users because an undetected software issue made it stop showing reactions. The affected participants had to start the experiment again from the beginning. To analyse the influence of these problems, we performed a two-step analysis. First, we analysed whether the values of these participants differed from those of the rest of the sample. This evaluation was conducted subjectively and quantitatively using SPSS, which did not identify their data as outliers. Second, we repeated all statistical analyses with and without these two participants. Although the results showed a slight improvement when excluding them, the differences were minimal. We opted to present the analyses with the full sample since we believe technology failures might occur in real-life situations, and the results did not change significantly.

The short-term experiment conducted in this study lasted from eight to ten minutes per participant. It was designed to test the impact of endowing the robot with fixed preferences and emotional reactions and to analyse the participants’ perceptions and preferences towards different robot behaviours. This design facilitated the control of the study conditions. However, these short-term interactions also introduced bias due to novelty effects, limited exposure to the robot’s capabilities, insufficient time to obtain well-defined adaptive responses, and the participants’ inability to observe the robot’s complete repertoire. HRI often requires more prolonged interactions to assess whether behaviours stabilise and remain meaningful over time. For this reason, future studies should extend the interaction duration and allow preferences to adapt dynamically. This approach can reduce these shortcomings and provide a more robust evaluation of the long-term effects of personalised robot behaviour in real application scenarios.

Other limitations of this study are related to the fact that participants could choose which robot to interact with first, which may have influenced the outcomes, biasing the perception of the robots depending on which one they used first. In addition, both robots were simultaneously in the room, which could have affected participants’ attention and behaviour during the experiment. Although Robot A and B differed slightly in their speech, and we did not find statistical differences due to this design, these variations might have influenced participants’ perceptions of the robots. We also found a contradiction in the results obtained for the likeability hypothesis. Although participants mostly stated they preferred Robot B, the quantitative analyses did not show differences in Likeability and Sociability. This suggests that, although the Godspeed and HRIES questionnaires are reliable evaluation methods for HRI, they might not fully capture the users’ opinions, producing inconsistent results or leading to the rejection of hypotheses that may be true.

Regarding social aspects, the primary limitation is that participants all belonged to the university context. Therefore, extending the sample of participants beyond the university setting would be valuable. Conducting new experiments with participants using the robot during more sessions and from more diverse cultural backgrounds and age groups could enhance the study’s external validity and provide more substantial evidence of the positive effects of personalisation in HRI. Such extensions would contribute to a broader understanding of how personalisation strategies can be adapted to real-world scenarios.

8. Conclusions

This paper presents an Adaptive Object Preference System for social robots acting as care-dependent companions. We present a user study to evaluate whether combining such preferences with dynamically generated emotional responses improves the participants’ perceived naturalness and likeability of the robot. The results show that a robot with such behaviour and responses improves in naturalness, but the questionnaire scores did not show that participants liked it more than a robot without preferences and emotions. However, the results also highlight that most participants preferred the robot with preferences and emotions. The qualitative analysis indicates that users correctly perceived the behaviour we wanted to integrate into our Mini social robot.

Future work will integrate new adaptive and personalised behaviours into the social robot to enhance its naturalness and its responsiveness to the different interaction experiences it encounters. For example, adapting the robot’s object preferences to how much it needs an object or to the kind of object, and considering users’ characteristics, such as their cultural background, might produce a more personalised experience. Regarding technical aspects, integrating camera-based object detection and voice interaction might facilitate the interaction between the robot and the participants. We also propose to investigate how using care-dependent robots as companions affects other HRI factors, such as engagement or fun, with the aim of increasing long-term robot use and promoting user–robot bonds. Finally, given the limitations presented in the previous section, it would be valuable to conduct longer-lasting experiments to avoid the potential biases (e.g., novelty or lack of time to perceive the robot’s expression repertoire) that short-term experiments might bring. Such experiments would also make it possible to analyse whether factors such as the speech used by the robot, which robot was used first, and the simultaneous presence of both robots in the room affect the perception of the robot and the participants’ performance.

Author Contributions

Conceptualization, M.M.-G., S.Á.-A. and M.M.; methodology, M.M.-G., S.Á.-A. and M.M.; software, M.M.-G. and S.Á.-A.; validation, M.M.-G., S.Á.-A., J.R.-H. and A.S.-B.; formal analysis, M.M.-G. and S.Á.-A.; investigation, M.M.-G., S.Á.-A., J.R.-H. and A.S.-B.; resources, M.M.-G. and M.M.; data curation, M.M.-G. and S.Á.-A.; writing—original draft preparation, M.M.-G., S.Á.-A., J.R.-H. and A.S.-B.; writing—review and editing, M.M.-G. and M.M.; visualization, M.M.-G. and S.Á.-A.; supervision, M.M.; project administration, M.M.-G. and M.M.; funding acquisition, M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Robots sociales para mitigar la soledad y el aislamiento en mayores (SOROLI), PID2021-123941OA-I00, funded by Agencia Estatal de Investigación (AEI), Spanish Ministerio de Ciencia e Innovación. Robots sociales para reducir la brecha digital de las personas mayores (SoRoGap), TED2021-132079B-I00, funded by Agencia Estatal de Investigación (AEI), Spanish Ministerio de Ciencia e Innovación. Mejora del nivel de madurez tecnológica del robot Mini (MeNiR) funded by MCIN/AEI/10.13039/501100011033 and the European Union NextGeneration EU/PRTR. Portable Social Robot with High Level of Engagement (PoSoRo) PID2022-140345OB-I00 funded by MCIN/AEI/10.13039/501100011033 and ERDF A way of making Europe; Evaluación del comportamiento del robot social Mini en residencias de mayores with grant number 2024/00742/001 in the programme Ayudas para la Actividad Investigadora de los Jóvenes Doctores, Programa Propio de Investigación awarded by Universidad Carlos III de Madrid. This publication is part of the R&D&I project PDC2022-133518-I00, funded by MCIN/AEI/10.13039/501100011033 and by the European Union NextGeneration EU/PRTR.

Informed Consent Statement

The study was conducted in accordance with the Declaration of Helsinki. Informed consent was obtained from all subjects involved in the study. All participants were informed about the data privacy policies of the University Carlos III of Madrid.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Robots’ Speech During the Experiment

The robots’ speech during the experiment can be divided into four groups: introduction, closing, requests, and reactions. The introduction, closing, and requests were identical for Robot A and Robot B; only the reactions differed. Robot A reacted with simple speech. In contrast, Robot B reacted with speech adapted to the perceived object and with emotional expressions that changed depending on how much the robot liked the object. The emotion did not alter the speech.

Appendix A.1. Introduction

Before starting the interaction: Please press the button Start on the touch screen to begin the experiment.

Once the participant pressed Start on the touch screen: Hello, there are nine objects in front of you. If you want to give me one, bring it close to my belly. Please wait a moment until I finish speaking if you are going to provide me with something. To end the experiment, press Finish on the touch screen.

Appendix A.3. Requests

Can you hear my poor robotic guts? I am very hungry. Please give me something to eat.

Do you have anything to eat? I am hungry.

Are you hungry? I am starving! Please give me something you have lying around.

I am dying of thirst, do you have anything to drink?

Are you thirsty? I am very thirsty! Please give me something to drink.

I am so thirsty I could drink an entire lake, please give me something to drink.

I am bored stiff. Do you have any toys?

You do not have any toys lying around, do you?

I am dying of boredom. Do you have any toys you want to show me?

Appendix A.4. Reactions

If Robot A was hungry and received food: Thank you very much, I was starving.

If Robot A was not hungry and received food: I do not need food right now, but thank you anyway.

If Robot A was thirsty and received a drink: Thank you very much, I was so thirsty.

If Robot A was not thirsty and received a drink: I do not need a drink right now, but thank you anyway.

If Robot A was bored and received a toy: Thank you very much, I was so bored.

If Robot A was not bored and received a toy: I do not need a toy right now, but thank you anyway.

For Robot B, reactions were adapted to the kind of object. The following sentences are representative examples of Robot B’s reactions. For the other responses, the robot replaced the italicised word (e.g., chocolate, water, or green ball) with the object received.

If Robot B was hungry and received food: Thank you very much for the chocolate, I was starving.

If Robot B was not hungry and received food: I do not need the broccoli right now, but thank you anyway.

If Robot B was thirsty and received a drink: Thank you very much for the orange juice, I was so thirsty.

If Robot B was not thirsty and received a drink: I do not need water right now, but thank you anyway.

If Robot B was bored and received a toy: Thank you very much for the green ball, I was so bored.

If Robot B was not bored and received a toy: I do not need the teddy bear right now, but thank you anyway.
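
The reaction rules listed in this appendix can be summarised as a small template lookup. The following Python code is only an illustrative sketch and not the implementation running on the robot: the function name `reaction`, the dictionaries, and the category labels (`"food"`, `"drink"`, `"toy"`) are hypothetical names introduced here. It assumes that Robot A is modelled by omitting the object name and Robot B by passing it, and it leaves out the emotional expressions, since these did not alter the speech.

```python
# Hypothetical sketch of the reaction sentences in Appendix A.4.
# All names below are assumptions made for illustration.

# Sentence spoken when the matching need was active (object accepted).
ACCEPT = {
    "food": "Thank you very much{obj}, I was starving.",
    "drink": "Thank you very much{obj}, I was so thirsty.",
    "toy": "Thank you very much{obj}, I was so bored.",
}

# Sentence spoken when the matching need was not active (object rejected).
REJECT = "I do not need {obj} right now, but thank you anyway."

# Generic wording used by Robot A, which does not name the object.
GENERIC = {"food": "food", "drink": "a drink", "toy": "a toy"}


def reaction(category, need_active, object_name=None):
    """Return the reaction sentence for an object of the given category.

    object_name is None for Robot A (simple speech) and a phrase such as
    "the chocolate" or "water" for Robot B (speech adapted to the object).
    """
    if need_active:
        suffix = f" for {object_name}" if object_name else ""
        return ACCEPT[category].format(obj=suffix)
    return REJECT.format(obj=object_name or GENERIC[category])


if __name__ == "__main__":
    print(reaction("food", True))                    # Robot A, hungry
    print(reaction("food", True, "the chocolate"))   # Robot B, hungry
    print(reaction("drink", False, "water"))         # Robot B, not thirsty
```

Keeping the accepted and rejected templates separate from the object name means that the same rule serves both robots; only the argument passed changes.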

Appendix B. Scale Items Used in the Study

Appendix B.1. Demographic Survey

Please indicate your Gender (Male, Female, Other).

Please indicate your Age (Open numerical question).

Please indicate your Technology knowledge (5-point Likert scale).

Please indicate your Robotics knowledge (5-point Likert scale).

Have you previously interacted with Mini? (Yes/No question).

Appendix B.2. Robot Attributes Questionnaires

Animacy items from Godspeed questionnaire

Dead–Alive (5-point Likert scale).

Stagnant–Lively (5-point Likert scale).

Mechanical–Organic (5-point Likert scale).

Artificial–Lifelike (5-point Likert scale).

Inert–Interactive (5-point Likert scale).

Apathetic–Responsive (5-point Likert scale).

Likeability items from Godspeed questionnaire

Dislike–Like (5-point Likert scale).

Unfriendly–Friendly (5-point Likert scale).

Unkind–Kind (5-point Likert scale).

Unpleasant–Pleasant (5-point Likert scale).

Awful–Nice (5-point Likert scale).

Perceived intelligence items from Godspeed questionnaire

Incompetent–Competent (5-point Likert scale).

Ignorant–Knowledgeable (5-point Likert scale).

Irresponsible–Responsible (5-point Likert scale).

Unintelligent–Intelligent (5-point Likert scale).

Foolish–Sensible (5-point Likert scale).

Sociability items from HRIES questionnaire

Warm (7-point Likert scale from Not at all to Totally).

Likeable (7-point Likert scale from Not at all to Totally).

Trustworthy (7-point Likert scale from Not at all to Totally).

Friendly (7-point Likert scale from Not at all to Totally).

Agency items from HRIES questionnaire

Human-like (7-point Likert scale from Not at all to Totally).

Real (7-point Likert scale from Not at all to Totally).

Alive (7-point Likert scale from Not at all to Totally).

Natural (7-point Likert scale from Not at all to Totally).
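
To illustrate how the items above could be aggregated, the following Python sketch computes one score per subscale. It rests on assumptions that this appendix does not state: that ratings are coded as integers from 1 to 5 (Godspeed) or 1 to 7 (HRIES) and that a subscale score is the mean of its item ratings, which is a common convention. The names `SUBSCALES` and `subscale_score` are hypothetical.

```python
# Hypothetical sketch: per-subscale scores as the mean of item ratings.
# Item counts follow the lists in Appendix B.2; the integer coding
# (1..5 and 1..7) and the averaging rule are assumptions.

# subscale name -> (number of items, lowest rating, highest rating)
SUBSCALES = {
    "animacy": (6, 1, 5),
    "likeability": (5, 1, 5),
    "perceived_intelligence": (5, 1, 5),
    "sociability": (4, 1, 7),
    "agency": (4, 1, 7),
}


def subscale_score(name, ratings):
    """Return the mean rating of one participant for one subscale."""
    n_items, low, high = SUBSCALES[name]
    if len(ratings) != n_items:
        raise ValueError(f"{name} expects {n_items} ratings, got {len(ratings)}")
    if any(not (low <= r <= high) for r in ratings):
        raise ValueError(f"{name} ratings must lie between {low} and {high}")
    return sum(ratings) / n_items


if __name__ == "__main__":
    print(subscale_score("likeability", [4, 5, 4, 3, 4]))
    print(subscale_score("agency", [7, 1, 4, 4]))
```

Validating the number of items and the rating range before averaging guards against mixing the 5-point and 7-point questionnaires by mistake.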

Appendix B.3. Personal Opinion Questions

Please write which emotions you perceived in the robot (Open text question).

Did you perceive that the robot had preferences towards the objects on the table? (Yes/No question).

Which robot do you prefer? (Robot A, Robot B, Indifferent).

Why did you select the previous option? (Open text question).

Comments and Suggestions (Open text question).

References

- Ragno, L.; Borboni, A.; Vannetti, F.; Amici, C.; Cusano, N. Application of social robots in healthcare: Review on characteristics, requirements, technical solutions. Sensors 2023, 23, 6820.

- Papakostas, G.A.; Sidiropoulos, G.K.; Papadopoulou, C.I.; Vrochidou, E.; Kaburlasos, V.G.; Papadopoulou, M.T.; Holeva, V.; Nikopoulou, V.A.; Dalivigkas, N. Social robots in special education: A systematic review. Electronics 2021, 10, 1398.

- Oertel, C.; Castellano, G.; Chetouani, M.; Nasir, J.; Obaid, M.; Pelachaud, C.; Peters, C. Engagement in human-agent interaction: An overview. Front. Robot. AI 2020, 7, 92.

- Jung, Y.; Hahn, S. Social Robots As Companions for Lonely Hearts: The Role of Anthropomorphism and Robot Appearance. In Proceedings of the 2023 32nd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Busan, Republic of Korea, 28–31 August 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 2520–2525.

- Ahmed, E.; Buruk, O.O.; Hamari, J. Human–robot companionship: Current trends and future agenda. Int. J. Soc. Robot. 2024, 16, 1809–1860.

- Kok, C.L.; Ho, C.K.; Teo, T.H.; Kato, K.; Koh, Y.Y. A novel implementation of a social robot for sustainable human engagement in homecare services for ageing populations. Sensors 2024, 24, 4466.

- Fernández-Rodicio, E.; Castro-González, Á.; Gamboa-Montero, J.J.; Carrasco-Martínez, S.; Salichs, M.A. Creating Expressive Social Robots That Convey Symbolic and Spontaneous Communication. Sensors 2024, 24, 3671.

- Law, T.; de Leeuw, J.; Long, J.H. How movements of a non-humanoid robot affect emotional perceptions and trust. Int. J. Soc. Robot. 2021, 13, 1967–1978.

- Spatola, N.; Wudarczyk, O.A. Ascribing emotions to robots: Explicit and implicit attribution of emotions and perceived robot anthropomorphism. Comput. Hum. Behav. 2021, 124, 106934.

- Fernández-Rodicio, E.; Maroto-Gómez, M.; Castro-González, Á.; Malfaz, M.; Salichs, M.Á. Emotion and mood blending in embodied artificial agents: Expressing affective states in the mini social robot. Int. J. Soc. Robot. 2022, 14, 1841–1864.

- Lawton, L. Taken by the Tamagotchi: How a toy changed the perspective on mobile technology. Ijournal Stud. J. Fac. Inf. 2017, 2, 1–8.

- Salameh, P. Pou (Version 1.4.120) [Mobile app]. Zakeh, App Store & Google Play. 2012. Available online: https://www.pou.me/ (accessed on 25 July 2025).

- Salichs, M.A.; Castro-González, Á.; Salichs, E.; Fernández-Rodicio, E.; Maroto-Gómez, M.; Gamboa-Montero, J.J.; Marques-Villarroya, S.; Castillo, J.C.; Alonso-Martín, F.; Malfaz, M. Mini: A new social robot for the elderly. Int. J. Soc. Robot. 2020, 12, 1231–1249.

- Martínez, S.C.; Montero, J.J.G.; Gómez, M.M.; Martín, F.A.; Salichs, M.Á. Aplicación de estrategias psicológicas y sociales para incrementar el vínculo en interacción humano-robot. Rev. Iberoam. de Automática e Informática Industrial 2023, 20, 199–212.

- Maroto-Gómez, M.; Castro-González, Á.; Castillo, J.C.; Malfaz, M.; Salichs, M.Á. An adaptive decision-making system supported on user preference predictions for human–robot interactive communication. User Model.-User-Adapt. Interact. 2023, 33, 359–403.

- Cagiltay, B.; Ho, H.R.; Michaelis, J.E.; Mutlu, B. Investigating family perceptions and design preferences for an in-home robot. In Proceedings of the Interaction Design and Children Conference, London, UK, 21–24 June 2020; pp. 229–242.

- Villa, L.; Hervás, R.; Cruz-Sandoval, D.; Favela, J. Design and evaluation of proactive behavior in conversational assistants: Approach with the eva companion robot. In International Conference on Ubiquitous Computing and Ambient Intelligence; Springer: Berlin/Heidelberg, Germany, 2022; pp. 234–245.