Textile Defect Detection Using Artificial Intelligence and Computer Vision—A Preliminary Deep Learning Approach

Abstract

1. Introduction

- This paper introduces an automatic fabric defect detection system that integrates a lightweight framework, such as Ultralytics’ YOLO, with an edge device like the NVIDIA Jetson Orin Nano, aiming to reduce processing latency and enhance production line efficiency.

- A robust dataset consisting of images taken from fabrics with different colors is used, contributing to a more generalized model.

- Advanced data augmentation techniques are employed to train a more robust model capable of generalizing across diverse industrial environments.

- In order to accommodate the constrained resources of edge devices, the trained model is optimized using TensorRT, ensuring compliance with real-time performance requirements.
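The TensorRT optimization mentioned in the last contribution could be done roughly as in the sketch below. It assumes the Ultralytics Python package is available on the target device and that a trained checkpoint exists. The file name `best.pt`, the 640-pixel input size, and the FP16 setting are illustrative assumptions, not values reported in this paper.

```python
"""Sketch: exporting a trained Ultralytics YOLO checkpoint to a TensorRT engine."""


def tensorrt_export_args(imgsz: int = 640, half: bool = True) -> dict:
    """Collect keyword arguments for the Ultralytics export call.

    imgsz and half (FP16) are assumed values, not settings reported by the authors.
    """
    return {"format": "engine", "imgsz": imgsz, "half": half, "device": 0}


def export_to_tensorrt(weights: str = "best.pt") -> str:
    """Export `weights` (a hypothetical path) and return the engine file path."""
    # Imported lazily so this module still loads where Ultralytics is absent.
    from ultralytics import YOLO

    model = YOLO(weights)
    return str(model.export(**tensorrt_export_args()))


if __name__ == "__main__":
    # Only prints the arguments; the export itself needs a GPU and TensorRT.
    print(tensorrt_export_args())
```

The export has to run on the same class of device that will serve the model, since a TensorRT engine is built for a specific GPU and TensorRT version.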

2. State of the Art

2.1. Image Acquisition

2.2. Pre-Processing

2.3. Feature Extraction

2.4. Classification

- i. Traditional classifiers

- ii. Faster R-CNN

- iii. SSD (Single Shot MultiBox Detector)

- iv. YOLOv5

- v. YOLOv8

- vi. YOLOv11

- vii. Comparison

3. Methodology

- System architecture: the end-to-end pipeline design, including hardware and software components;

- Deployment setup: the implementation and integration of the system in a production environment;

- Performance evaluation metrics: the criteria used to assess the system’s performance.

3.1. System Architecture

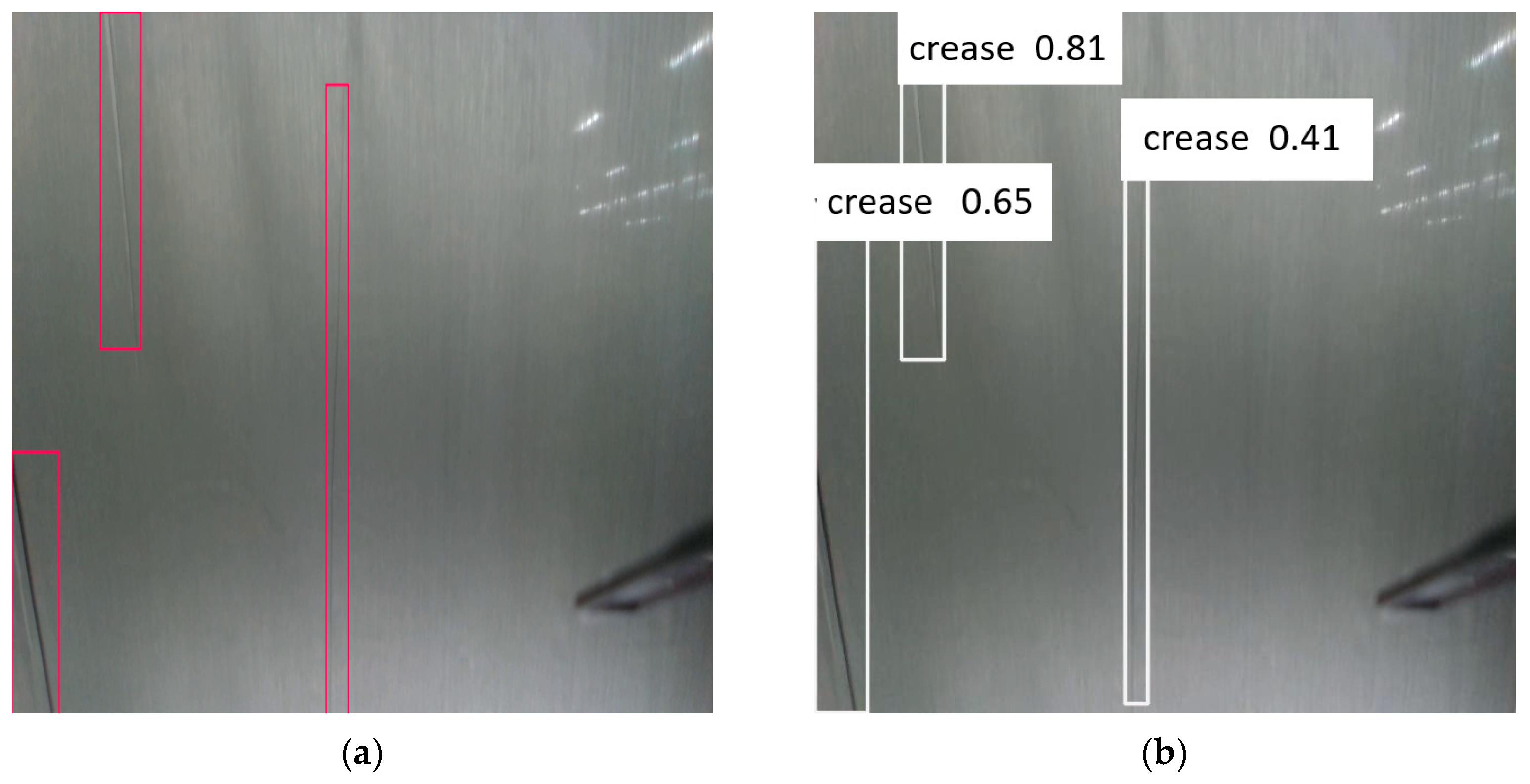

- Model Training: A labeled dataset of fabric images covering three defect types, of which the crease defect is predominant (Table 2), is used to train a deep learning model on a Graphics Processing Unit (GPU) cluster. The trained model is then optimized (e.g., pruned, quantized) for edge deployment.

- Image Acquisition: RGB-D cameras (Intel RealSense D435i) installed on the production line continuously capture image frames of the rolling fabric.

- Edge Device (NVIDIA Jetson Orin Nano): This device executes the AI inference pipeline locally, minimizing latency and enabling real-time defect detection without relying on constant cloud communication.

- Visual Feedback: The output of the detection process is presented in real time on a monitoring dashboard, remotely via RTMP streaming, or through locally saved recordings. This allows operators to supervise and analyze the inspection process.

- Database: Detected defects are stored on a cloud database for traceability, reporting, and further statistical analysis.

- i. Image acquisition

- ii. Pre-processing

- iii. Feature extraction

- iv. Classification

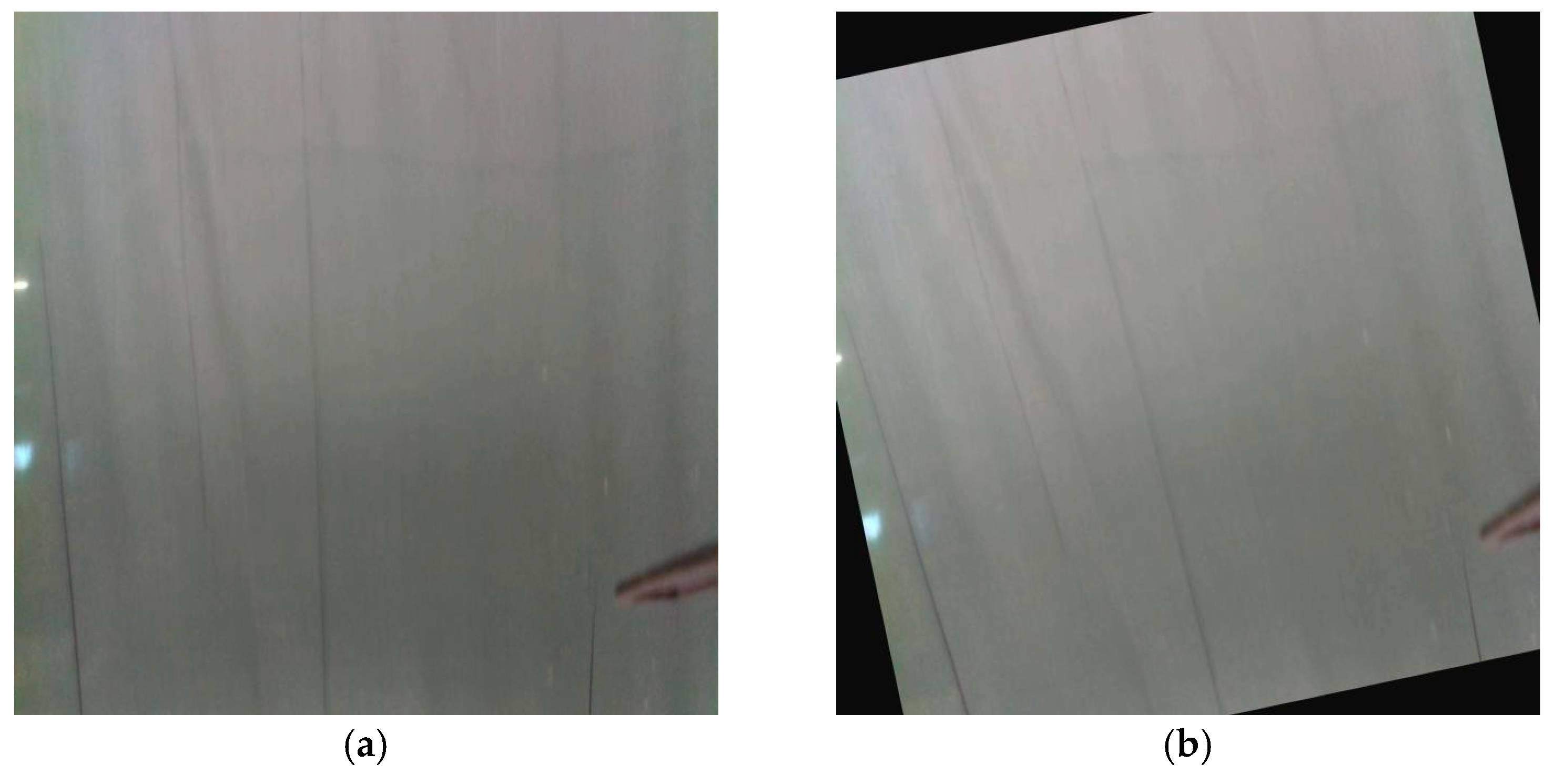

3.2. Setup Implementation

- Camera positioning: As shown in Figure 4a, two RealSense D435i RGB-D cameras were installed above the rolling fabric, fastened securely to a metal pipe at a fixed distance of approximately 1 m from the fabric. This configuration provided a field of view of approximately 1 m in height and 3 m in width. The cameras were oriented perpendicular to the fabric surface, aligned with the direction of fabric movement, and positioned close to the light sources to reduce shadows and reflections. Each camera was accessed through the Video for Linux Two (V4L2) interface, and its frames were processed locally on the Jetson Orin Nano. The camera feeds were configured at a resolution of 640 × 480 pixels and a frame rate of 30 FPS.

- Edge Device Integration: An NVIDIA Jetson Orin Nano (NVIDIA, Santa Clara, CA, USA) was securely mounted near the cameras, with all cables routed and fixed to avoid interference with machine operation. This proximity minimizes data transmission latency and supports real-time inference.

- Lighting Adjustments: Supplemental diffuse LED lights, 120 cm wide, were installed above the fabric to ensure consistent illumination across the surface, as shown in Figure 4b. Poor ambient lighting on the industrial floor initially caused false positives due to shadows and inconsistent texture appearance. Adjusting the angle and intensity of the lighting mitigated these issues.

- A display was connected to the NVIDIA Jetson Orin Nano edge device using DisplayPort, showing the real-time camera feed with bounding boxes over detected defects. This visual feedback interface provides operators with immediate insight into the inspection process, as shown in Figure 4c. The system continuously analyzes the incoming frames and overlays the localization results, which are then recorded and stored.

- Defect metadata and localization information are sent via MQTT to a central database for traceability, remote access, and historical analysis.
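The metadata message sent over MQTT might be structured as in the sketch below. The topic name, field names, and example values are hypothetical, since the payload schema is not specified here. Only the standard library is used to build the message; publishing it would require an MQTT client library, which is left out.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

# Hypothetical topic; the one actually used is not named in the text.
TOPIC = "factory/line1/defects"


@dataclass
class Detection:
    camera_id: int
    label: str          # e.g. "hole", "crease", "color bleeding"
    confidence: float
    box_xyxy: tuple     # pixel coordinates (x1, y1, x2, y2)


def build_payload(det: Detection, timestamp: datetime) -> str:
    """Serialize one detection as a JSON string suitable for an MQTT message."""
    record = asdict(det)
    record["box_xyxy"] = list(det.box_xyxy)
    # Store the time in UTC so records from different machines are comparable.
    record["timestamp"] = timestamp.astimezone(timezone.utc).isoformat()
    return json.dumps(record, sort_keys=True)


example = Detection(camera_id=0, label="hole", confidence=0.91,
                    box_xyxy=(120, 80, 180, 140))
payload = build_payload(example, datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc))
print(TOPIC, payload)
```

Keeping the payload as flat JSON with an explicit UTC timestamp makes the records easy to insert into a database table and to query later for traceability.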

3.3. Dataset Preparation and Model Training

3.4. Real-Time Processing System

- Capturing image streams from the RGB-D cameras;

- Executing the inference pipeline using an optimized deep learning model;

- Displaying real-time detection results on a local monitor;

- Transmitting detection metadata to the database for logging and traceability purposes.
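The four responsibilities listed above can be sketched as a single processing loop. This is a minimal structural outline, not the authors' implementation: the camera, model, display, and database client are passed in as callables, and the stand-ins used in the example are placeholders.

```python
from typing import Callable, Iterable, List


def run_pipeline(
    frames: Iterable[object],
    detect: Callable[[object], List[dict]],
    display: Callable[[object, List[dict]], None],
    publish: Callable[[dict], None],
) -> int:
    """Process frames one by one and return the number of detections sent."""
    sent = 0
    for frame in frames:                # 1. capture from the camera stream
        detections = detect(frame)      # 2. run the optimized model
        display(frame, detections)      # 3. show results on the local monitor
        for det in detections:          # 4. send metadata for logging
            publish(det)
            sent += 1
    return sent


# Placeholder components standing in for the real camera, model and database.
def fake_detect(frame):
    """Pretend that every frame except 'frame-1' contains one crease."""
    return [] if frame == "frame-1" else [{"frame": frame, "label": "crease"}]


shown, logged = [], []
count = run_pipeline(["frame-0", "frame-1", "frame-2"], fake_detect,
                     lambda frame, dets: shown.append(frame), logged.append)
print(count, len(shown))
```

Passing the components in as functions keeps the loop testable without hardware, and lets the display or the database client be swapped without touching the inference step.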

3.5. Evaluation Metrics

4. Experimental Results

4.1. Hole Defect Detection

4.2. Color Bleeding Detection

4.3. Crease Detection

4.4. Performance Metrics

5. Discussion

- The camera employed is optimized for capturing depth and structural features, enhancing the system’s ability to detect physical deformations. However, its limited color sensitivity impairs performance in identifying chromatic irregularities.

- Additionally, no preprocessing techniques tailored to specific defect types were employed. While methods such as contrast enhancement or grayscale adjustment could improve the detection of particular features, they were intentionally omitted to preserve the generalizability of the system. The detection model was designed to operate without class-specific preprocessing, aiming to provide unified detection capabilities across both physical and visual defects.
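For reference, the kind of preprocessing that was deliberately left out can be illustrated with a grayscale conversion followed by a linear contrast stretch. The sketch below works on plain nested lists and is only an illustration of the two operations. It is not part of the system described here, and the tiny input image is made up.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples to luma using ITU-R BT.601 weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]


def stretch_contrast(gray_image, out_min=0.0, out_max=255.0):
    """Linearly rescale intensities so they span [out_min, out_max]."""
    flat = [v for row in gray_image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # uniform image: there is no contrast to stretch
        return [[out_min for _ in row] for row in gray_image]
    scale = (out_max - out_min) / (hi - lo)
    return [[out_min + (v - lo) * scale for v in row] for row in gray_image]


# A made-up 2 x 2 low-contrast patch (all values between 100 and 130).
tiny = [[(100, 100, 100), (110, 110, 110)],
        [(120, 120, 120), (130, 130, 130)]]
stretched = stretch_contrast(to_grayscale(tiny))
rounded = [[round(v) for v in row] for row in stretched]
print(rounded)
```

A step like this can make low-contrast structural defects easier to see, but it also discards the color information that a defect such as color bleeding depends on, which is consistent with the decision to avoid class-specific preprocessing.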

6. Conclusions and Further Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 2D | Two-Dimensional |
| 3D | Three-Dimensional |
| AI | Artificial Intelligence |
| AOI | Automated Optical Inspection |
| AP | Average Precision |
| C2f | Cross-stage partial bottleneck with two convolutions |
| C2PSA | Convolutional block with Parallel Spatial Attention |
| C3k2 | Cross Stage Partial with kernel size 2 |
| CNN | Convolutional Neural Network |
| CSPNet | Cross-Stage Partial Network |
| CSV | Comma-Separated Values |
| CUDA | Compute Unified Device Architecture |
| DCN | Deformable Convolution Network |
| FN | False Negatives |
| FP | False Positives |
| FP16 | 16-bit Floating Point |
| FPS | Frames Per Second |
| GA | Genetic Algorithm |
| GB | Gigabytes |
| GLCM | Gray-Level Co-occurrence Matrix |
| GPU | Graphics Processing Unit |
| HOG | Histogram of Oriented Gradients |
| IoU | Intersection over Union |
| K-NN | K-Nearest Neighbours |
| LED | Light-Emitting Diode |
| Light-repGFPN | Lightweight Replication-based Generalized Feature Pyramid Network |
| LSK | Large Selective Kernel |
| LW-SSD | Lightweight Single Shot Detector |
| mAP | Mean Average Precision |
| MES/ERP | Manufacturing Execution System/Enterprise Resource Planning |
| ML | Machine Learning |
| mm | millimeter |
| MPCA | MaxPool with Coordinate Attention |
| MQTT | Message Queuing Telemetry Transport |
| ms | milliseconds |
| P | Precision |
| R | Recall |
| ReLU | Rectified Linear Unit |
| RGB | Red, Green and Blue |
| RGB-D | Red, Green, Blue and Depth |
| RTMP | Real-Time Messaging Protocol |
| SSD | Single Shot Detector |
| SVM | Support Vector Machine |
| TP | True Positives |
| YOLO | You Only Look Once |

| Model | Accuracy | Computational Requirements | Raw Fabric Detection | Pattern Fabric Detection | FPS | Relevance |
|---|---|---|---|---|---|---|
| SSD | Medium/High | Low/Moderate | Good | Low | High | Useful speed vs. accuracy for raw fabric |
| Faster R-CNN | Very High | High | High | High | Low | High precision benchmark if latency is allowed |
| YOLOv5n | Medium/High | Very Low | Good | Good | Very High | Lightweight YOLO baseline, for low latency and very constrained environments |
| YOLOv8n | High | Very Low | Good | High | Very High | State-of-the-art efficiency for nano models |
| YOLOv11n | High | Very Low | High | High | Very High | Good balance of accuracy and edge performance |

| Defect Type | Instances |
|---|---|
| Hole | 2169 |
| Crease | 27,126 |
| Color bleeding | 1365 |
| Total | 30,660 |
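The class imbalance in these counts can be quantified with a few lines of arithmetic. The sketch below computes each class's share of the annotated instances and, as an illustration only, the common inverse-frequency ("balanced") class weights. Whether any class weighting was used in training is not stated here, so the weights are an assumption.

```python
# Instance counts copied from the defect table.
counts = {"hole": 2169, "crease": 27126, "color bleeding": 1365}
total = sum(counts.values())

# Fraction of all annotated instances belonging to each class.
shares = {name: n / total for name, n in counts.items()}

# Inverse-frequency heuristic: total / (number of classes * class count).
# Illustrative only; not reported as part of the training setup.
weights = {name: total / (len(counts) * n) for name, n in counts.items()}

# Ratio between the most and the least frequent class.
imbalance_ratio = max(counts.values()) / min(counts.values())

for name in counts:
    print(f"{name:15s} share={shares[name]:.3f} weight={weights[name]:.2f}")
print(f"imbalance ratio: {imbalance_ratio:.1f}")
```

With the crease class holding close to nine in ten instances, aggregate scores are dominated by crease performance, so per-class metrics are the more informative view.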

| Augmentation Techniques | mAP@50 |
|---|---|
| No Augmentation | 0.751 |
| Mosaic | 0.806 |
| Mosaic + Brightness | 0.802 |
| Mosaic + Noise | 0.781 |
| Mosaic + Rotation | 0.804 |
| Mosaic + Brightness + Noise | 0.811 |
| Mosaic + Brightness + Rotation | 0.793 |
| Mosaic + Noise + Rotation | 0.767 |
| Mosaic + Brightness + Noise + Rotation | 0.821 |
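To make the ablation easier to read, the sketch below ranks the configurations by their gain over the unaugmented baseline. It uses only the mAP@50 values from the table; the variable names are illustrative.

```python
# mAP@50 values copied from the augmentation table.
map50 = {
    "No Augmentation": 0.751,
    "Mosaic": 0.806,
    "Mosaic + Brightness": 0.802,
    "Mosaic + Noise": 0.781,
    "Mosaic + Rotation": 0.804,
    "Mosaic + Brightness + Noise": 0.811,
    "Mosaic + Brightness + Rotation": 0.793,
    "Mosaic + Noise + Rotation": 0.767,
    "Mosaic + Brightness + Noise + Rotation": 0.821,
}

baseline = map50["No Augmentation"]

# Absolute improvement of every augmented configuration over the baseline.
gains = {name: round(score - baseline, 3)
         for name, score in map50.items() if name != "No Augmentation"}

best = max(gains, key=gains.get)
worst = min(gains, key=gains.get)

for name, gain in sorted(gains.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:40s} +{gain:.3f}")
```

Every augmented configuration improves on the baseline, but the gains are not additive: some three-technique combinations score below Mosaic alone, while the full combination scores highest.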

| Metric | Value |
|---|---|
| Inference time | ~10 ms |
| Frame rate | ~100 FPS |
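The relation between the two figures, and what they imply for the two-camera setup, can be checked with simple arithmetic. The sketch assumes that both 30 FPS camera streams share one model instance and that only inference time counts; capture, pre-processing, and post-processing overheads are ignored, so the headroom is an upper bound.

```python
def fps_from_latency(latency_ms: float) -> float:
    """Frames per second sustained if each frame takes `latency_ms` to process."""
    return 1000.0 / latency_ms


inference_ms = 10.0   # approximate inference time from the table
camera_fps = 30       # per-camera frame rate from the setup description
num_cameras = 2       # cameras installed above the fabric

capacity_fps = fps_from_latency(inference_ms)   # what the model can sustain
required_fps = camera_fps * num_cameras         # what the cameras deliver
budget_ms = 1000.0 / required_fps               # time available per frame
headroom = capacity_fps / required_fps          # >1 means the model keeps up

print(f"capacity {capacity_fps:.0f} FPS, required {required_fps} FPS")
print(f"per-frame budget {budget_ms:.2f} ms, headroom x{headroom:.2f}")
```

Under these assumptions, a 10 ms inference time fits within the per-frame budget for both streams combined.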

| Classes | Precision | Recall | F1 Score | mAP(@50) |
|---|---|---|---|---|
| all | 0.750 | 0.780 | 0.765 | 0.821 |
| hole | 0.890 | 0.760 | 0.820 | 0.870 |
| color bleeding | 0.620 | 0.810 | 0.702 | 0.850 |
| crease | 0.730 | 0.760 | 0.745 | 0.750 |
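The F1 column follows from the precision and recall columns through the harmonic mean, F1 = 2PR / (P + R). The short check below recomputes it from the tabulated values; the dictionary layout is just for illustration.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table.
reported = {
    "all": (0.750, 0.780, 0.765),
    "hole": (0.890, 0.760, 0.820),
    "color bleeding": (0.620, 0.810, 0.702),
    "crease": (0.730, 0.760, 0.745),
}

recomputed = {name: round(f1_score(p, r), 3) for name, (p, r, _) in reported.items()}

for name, (p, r, f1) in reported.items():
    status = "matches" if recomputed[name] == f1 else "differs"
    print(f"{name:15s} reported={f1:.3f} recomputed={recomputed[name]:.3f} ({status})")
```

The recomputed values agree with the table to three decimals. The gap between precision and recall for color bleeding (0.620 vs. 0.810) shows that this class is detected often but with more false positives than the others.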

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Machado, R.; Barros, L.A.M.; Vieira, V.; Silva, F.D.d.; Costa, H.; Carvalho, V. Textile Defect Detection Using Artificial Intelligence and Computer Vision—A Preliminary Deep Learning Approach. Electronics 2025, 14, 3692. https://doi.org/10.3390/electronics14183692