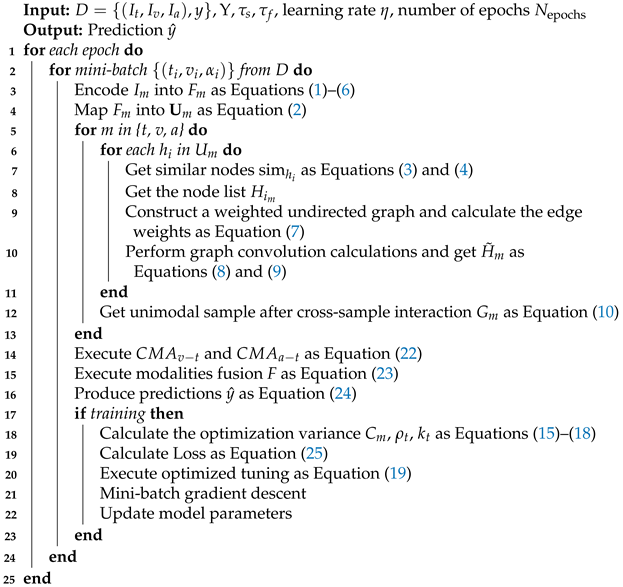

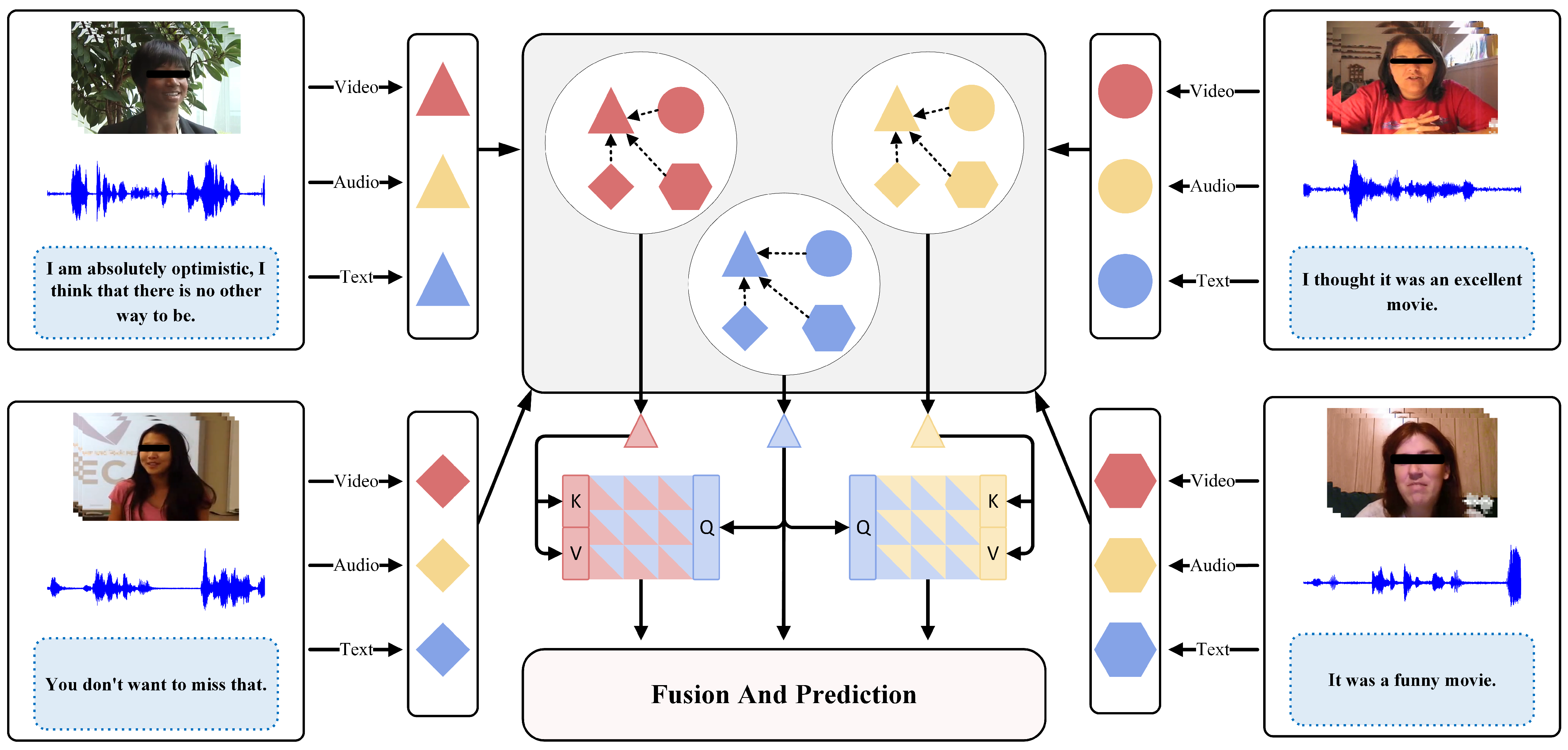

This section describes the proposed Cross-Sample Graph Interaction Network (CSGI-Net). CSGI-Net aims to capture rich unimodal representations by using graph convolution to learn the features that similar samples share within each modality. During training, it also performs dynamic optimization tuning, driven by the differences in optimization progress between modalities, so that each modality is fully optimized.

3.4. Cross-Sample Graph Interaction (CSGI)

To enable the model to better capture the characteristics shared by similar samples within the same modality, we construct a cross-sample interaction graph and apply a custom graph convolution strategy for cross-sample interaction learning. During interaction learning, the graph dynamically adjusts its edge weights based on the similarity between samples so that emotional information is learned accurately.

Definition of similar samples: First, we define a new standard for determining similar samples. Previous studies typically considered only semantic similarity while ignoring emotional factors, which led to the learning of irrelevant or even negative information, increasing noise and affecting model performance. Therefore, when defining similar samples, we consider both semantic and emotional similarities.

For semantic similarity, we first normalize the sample representations within each modality, generating the normalized sample representations to mitigate the adverse effects of outlier samples. Then, we compute the cosine similarity to construct the semantic similarity matrix between samples within the same modality, reflecting the proximity and correlation of the samples in the semantic space.

For emotional similarity, we employ the external tool NLTK to predict the sentiment of the original sentences. The predicted sentiment labels are then mapped through a linear layer to obtain a generated sentiment label. However, since this generated label is derived from the text modality via an external tool, it may differ from the true label. To address this, we introduce a generation loss that aligns the generated sentiment label more closely with the true sentiment label y. Subsequently, we compute the emotional difference between samples using the Manhattan distance and construct the emotional similarity matrix, which captures the emotional relationships between samples. By considering both semantic and emotional similarities, we define a more comprehensive notion of similar samples.

In this context, the semantic similarity matrix is normalized and is computed among the samples of each modality within a batch, while the emotional similarity matrix is normalized and is computed among the samples in the batch. The emotional similarity is built from the true emotional score of each sample i, and both matrices have one row and one column per sample.
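The following minimal NumPy sketch illustrates the two similarity matrices. It is an illustration under stated assumptions rather than the method as published: the function names are hypothetical, and rescaling the Manhattan distance to a similarity in [0, 1] by dividing by the largest distance in the batch is an assumed normalization.

```python
import numpy as np

def semantic_similarity(features):
    """Cosine similarity between all pairs of samples in one modality.

    features: array of shape (N, d), one row per sample.
    """
    features = np.asarray(features, dtype=float)
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    normalized = features / np.clip(norms, 1e-12, None)  # L2-normalize each row
    return normalized @ normalized.T                      # shape (N, N)

def emotional_similarity(scores):
    """Similarity derived from the Manhattan distance between sentiment scores.

    scores: array of shape (N,). The rescaling to [0, 1] is an assumption.
    """
    scores = np.asarray(scores, dtype=float)
    dist = np.abs(scores[:, None] - scores[None, :])      # pairwise |y_i - y_j|
    max_dist = dist.max()
    if max_dist == 0:
        return np.ones_like(dist)                         # all scores identical
    return 1.0 - dist / max_dist
```

Both functions return an N × N matrix, so their outputs can be thresholded element by element against each other.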

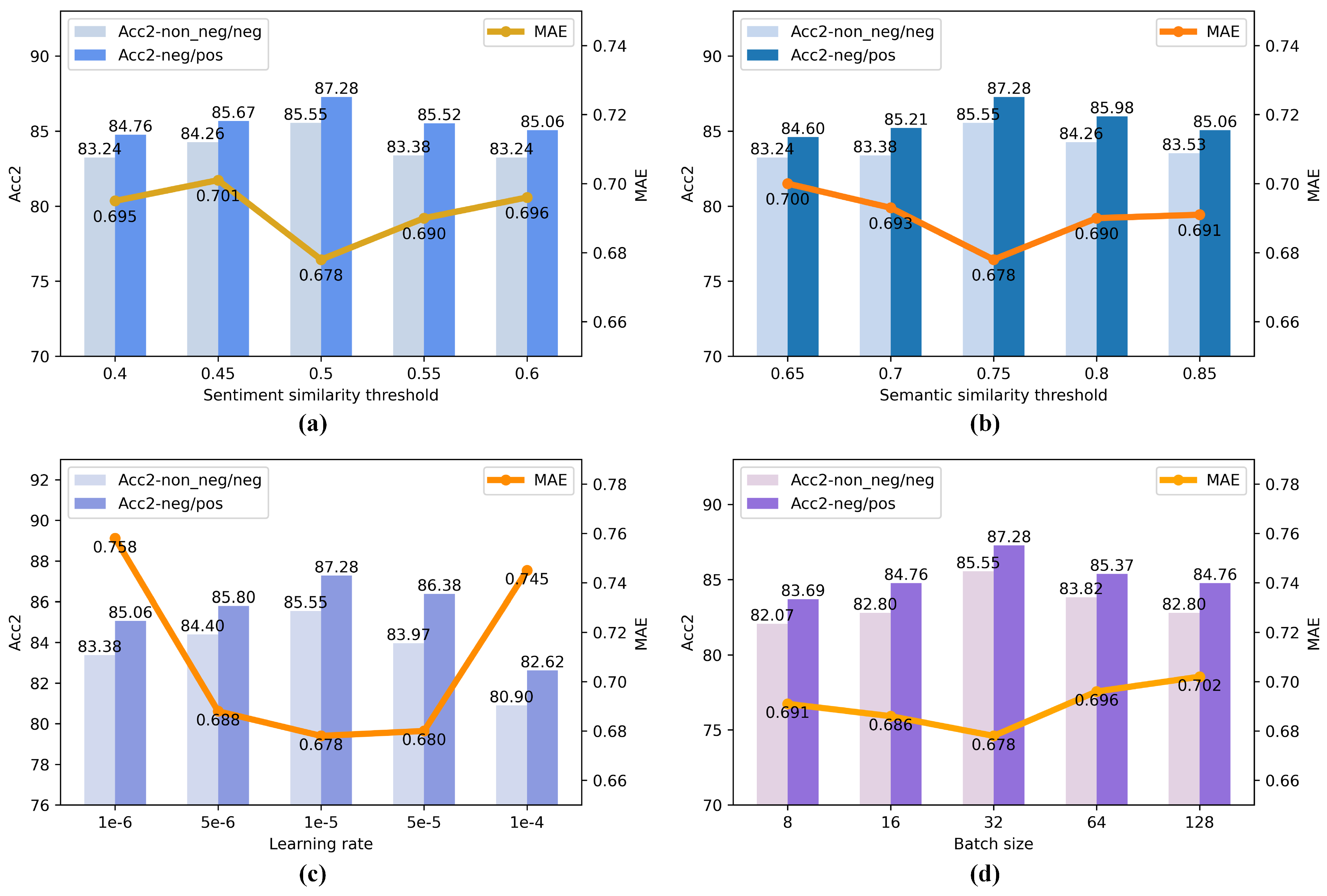

As shown in Figure 3a, after constructing these two similarity matrices, we define two hyperparameters that serve as the thresholds for semantic similarity and emotional similarity, respectively. Using these two thresholds, we filter the elements of the two similarity matrices and generate a Boolean sample mask matrix. Subsequently, we set the positions in the semantic similarity matrix that correspond to False in the mask matrix to negative infinity and select the top K most semantically similar samples for each sample (the anchor sample) in the sample sequence, as illustrated in Figure 3b.

Here, { v, t, a } denotes the three modalities (video, text, and audio).
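The thresholding and top-K selection can be sketched as follows. This is a hedged illustration: `select_similar`, `sem_thr`, and `emo_thr` are hypothetical names, the use of `>=` for the threshold comparison is an assumption, and excluding each anchor from its own candidate list is also an assumption.

```python
import numpy as np

def select_similar(sem_sim, emo_sim, sem_thr, emo_thr, k):
    """Return, for each anchor sample, the indices of up to k similar samples.

    sem_sim, emo_sim: (N, N) similarity matrices for one modality.
    sem_thr, emo_thr: thresholds for semantic and emotional similarity.
    """
    sem_sim = np.asarray(sem_sim, dtype=float)
    emo_sim = np.asarray(emo_sim, dtype=float)
    n = sem_sim.shape[0]
    mask = (sem_sim >= sem_thr) & (emo_sim >= emo_thr)   # sample mask matrix
    np.fill_diagonal(mask, False)                        # assumption: skip the anchor itself
    masked = np.where(mask, sem_sim, -np.inf)            # False positions -> -inf
    order = np.argsort(-masked, axis=1)[:, :k]           # top-k by semantic similarity
    # Fewer than k candidates may pass the mask, so drop masked-out indices.
    return [[int(j) for j in order[i] if mask[i, j]] for i in range(n)]
```

Because masked positions are set to negative infinity before sorting, they can only appear in the top K when fewer than K samples pass both thresholds, and the final filter removes them in that case.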

Graph Construction: After determining the K similar samples within each modality, we proceed to construct a graph for cross-sample interaction. As shown in Figure 4, we consider four common structures when constructing the graph. Among these, (a) contains a large number of redundant edges, resulting in a significant amount of ineffective computation; (b) and (c) require multiple GCN iterations for the anchor sample to learn the common features of all similar samples, which may lead to learning redundant information; whereas (d) can learn the common features of all similar nodes with just one GCN iteration. Therefore, we choose (d) as the basic model for graph construction.

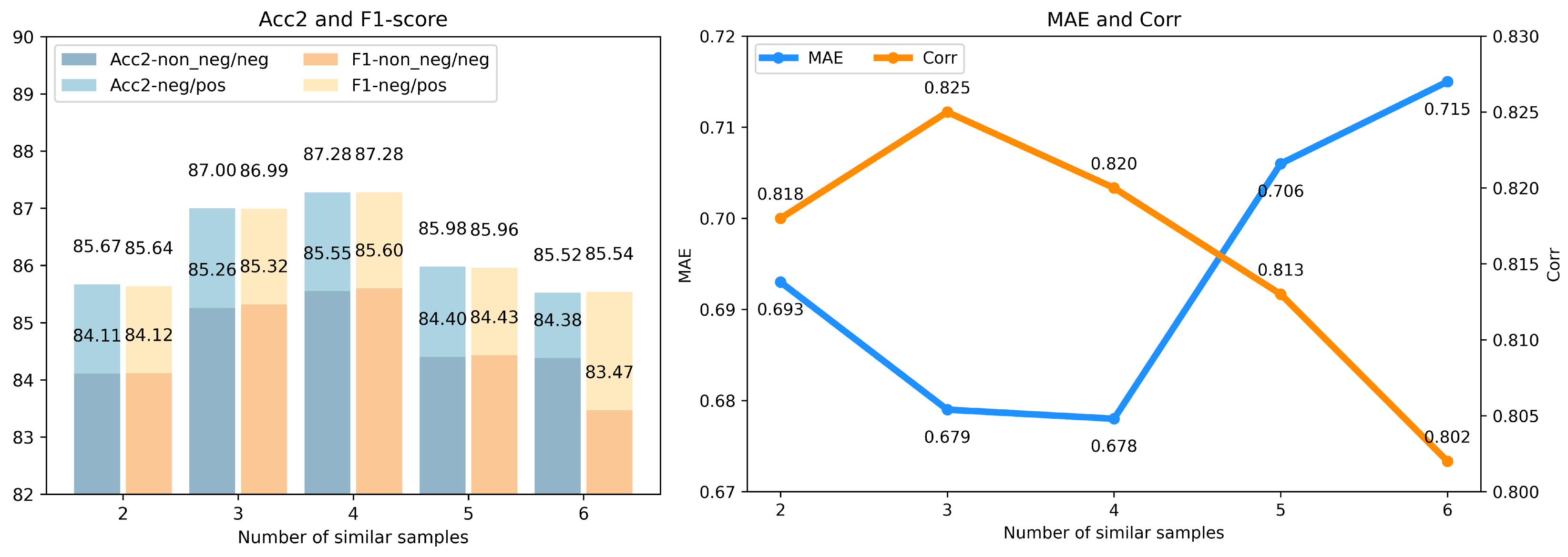

Specifically, we design a star-shaped weighted undirected graph for each sample. The vertex set V has a size of K + 1, comprising the anchor sample and its K similar samples. The anchor sample serves as the central node, while the K similar samples are peripheral nodes. The edge set has a size of K, comprising the K edges that represent the similarity relationships between the peripheral nodes and the central node. For three-modal data with N samples, we construct a graph set consisting of three parts, each containing N independent graphs, with each graph having K + 1 nodes.

Nodes: The nodes in each graph fall into two types: the central node, which represents the i-th sample in modality m (where m is one of v, t, and a), and the peripheral nodes, the j-th of which represents the j-th sample that shows high similarity with the central sample.

Edges: Each sample is regarded as the central node of its own graph. A central node shares semantic features and emotional characteristics, to varying degrees, with K other samples from the training set, so we connect each central node to its K most similar peripheral nodes. Although the similar samples may also resemble one another, we model only the direct similarity between the central node and its peripheral nodes to avoid unnecessary complexity. Edges are therefore created only between the central node and its K similar samples, which captures the relevant semantic and emotional correlations without considering all interrelations among the similar samples.

Taking a batch of 7 samples as an example, we construct the cross-sample interaction graph for the text modality of the first sample. First, we compute the semantic similarity matrix and the sentiment similarity matrix between these samples, both of which are of size 7 × 7. Then, based on the definition of similar samples, we use the semantic threshold and the sentiment threshold to filter the two matrices, masking out the samples that do not meet the criteria. The resulting masks are combined to form the mask matrix.

To build the cross-sample interaction graph for the first sample, we extract the first row of the semantic similarity matrix, the sentiment similarity matrix, and the mask matrix. These rows represent the semantic similarity, sentiment similarity, and similarity mask between the first sample and the other samples, respectively, as shown in Figure 5a. As illustrated there, samples 2 to 5 satisfy the similarity requirements, while samples 6 and 7 are not considered similar to the first sample because they do not satisfy both the semantic and the sentiment criteria.

Finally, the similarity mask from Figure 5a is used as the first row and first column of the adjacency matrix, with the remaining elements set to 0. This results in the adjacency matrix for the cross-sample interaction graph of the first sample. Based on this adjacency matrix, we obtain the cross-sample interaction graph for the text modality of the first sample, as shown in Figure 5b.
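The 7-sample example can be reproduced with a short NumPy sketch. The function name `star_adjacency` is hypothetical, and leaving the diagonal at 0 (no self-loop on the anchor) is an assumption of this sketch.

```python
import numpy as np

def star_adjacency(mask_row, anchor=0):
    """Adjacency matrix of the star graph for one anchor sample.

    mask_row: Boolean vector of length N; True marks samples similar to the anchor.
    anchor:   index of the central node (0 for the first sample).
    """
    row = np.asarray(mask_row, dtype=int)
    n = row.shape[0]
    adj = np.zeros((n, n), dtype=int)
    adj[anchor, :] = row          # mask becomes the anchor's row
    adj[:, anchor] = row          # and the anchor's column (undirected graph)
    adj[anchor, anchor] = 0       # assumption: no self-loop on the anchor
    return adj

# Batch of 7: samples 2 to 5 are similar to sample 1, samples 6 and 7 are not.
mask_row = [False, True, True, True, True, False, False]
A = star_adjacency(mask_row)
```

The resulting matrix is symmetric and has nonzero entries only in the first row and first column, so the peripheral nodes are connected to the anchor but not to one another.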

Edge Weights: We start from a core hypothesis: if two nodes are highly similar, their shared characteristics are particularly significant, and the edge between them should carry a high weight. To capture and quantify this similarity between nodes, we use cosine similarity [30] to define the edge weights. Cosine similarity lets us describe the strength of the relationships between nodes more precisely, and it is especially effective at highlighting these relationships when nodes share multiple characteristics. Each edge weight is the cosine similarity between the feature representation of the i-th sample within modality m, which is the central node, and the feature representation of the j-th peripheral node connected to the central node in the graph.
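For reference, the standard definition of cosine similarity applied to an edge is written out here. The symbols are assumed notation introduced only for this illustration: $h_i^m$ for the feature of the central node, $h_j^m$ for the feature of the j-th peripheral node, and $w_{ij}^m$ for the weight of the edge between them.

```latex
w_{ij}^{m}
  = \cos\!\left(h_i^{m}, h_j^{m}\right)
  = \frac{h_i^{m} \cdot h_j^{m}}
         {\left\lVert h_i^{m} \right\rVert_2 \, \left\lVert h_j^{m} \right\rVert_2}
```

The weight lies in $[-1, 1]$ and depends only on the angle between the two feature vectors, not on their magnitudes.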

Graph Convolution: The graph construction steps above yield the required weighted undirected graph. We then use this graph in a custom Graph Convolutional Network (GCN) to further encode and learn the common features among similar nodes. Specifically, given a weighted undirected graph, we form its symmetric normalized Laplacian matrix from the degree matrix of the graph, the adjacency matrix, and the identity matrix I. The graph convolution operation we designed operates on the feature matrix composed of the features of each node in the graph. In the notation of this operation, the subscript m denotes one of the three modalities (video, text, and audio), and the superscript i denotes the cross-sample interaction graph centered on the i-th sample.

Unlike classical graph convolution operations, we discard the conventional learnable weight matrix W. Instead, we implicitly guide the backpropagation process to enhance the learning of common features among similar nodes. With this design, information flow in the network adapts to node similarity without explicit weight parameters, which improves the model's ability to capture the patterns that similar samples share.
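A propagation step without a weight matrix can be sketched as follows. This is an illustration under assumptions, not the exact operator of the method: it uses the GCN-style normalization $D^{-1/2}(A + I)D^{-1/2}$ with self-loops, applies a ReLU activation, and assumes non-negative edge weights so that every degree is positive. The function names are hypothetical.

```python
import numpy as np

def normalized_adjacency(adj):
    """Symmetrically normalize a weighted adjacency matrix with self-loops.

    Computes D^{-1/2} (A + I) D^{-1/2}. Assumes non-negative edge weights,
    so every degree is at least 1 once the self-loop is added.
    """
    adj = np.asarray(adj, dtype=float)
    a_hat = adj + np.eye(adj.shape[0])       # add self-loops
    deg = a_hat.sum(axis=1)                  # weighted node degrees
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def graph_conv_no_weights(adj, feats):
    """One propagation step with no learnable weight matrix W.

    feats: (num_nodes, d) feature matrix, one row per node.
    The ReLU activation is an assumption of this sketch.
    """
    feats = np.asarray(feats, dtype=float)
    return np.maximum(normalized_adjacency(adj) @ feats, 0.0)
```

With no W, the only quantities that shape the output are the node features and the similarity-based edge weights, so a single step mixes the central node's features with those of its K peripheral nodes in proportion to their similarity.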

Finally, we collect the central node representation of each graph after its interaction with the peripheral nodes and reassemble these representations into a single-modality sample sequence. This sequence is then passed to the subsequent stages of the model for downstream tasks.

3.5. Dynamic Optimization Tuning (DOT)

During the training of multimodal models, we observed that different modalities are optimized at different speeds. This imbalance prevents the model from fully utilizing the sentiment information unique to each modality and hinders overall performance. To address this issue, we propose a Dynamic Optimization Tuning (DOT) strategy that monitors and responds to the performance fluctuations of each modality in real time. The strategy dynamically adjusts the optimization intensity allocated to each modality's feature channels so that all modalities are adequately optimized.

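When employing the gradient descent (GD) strategy, the parameters of the encoder of modality m, where m is one of v, t, and a, are updated at each step by moving against the gradient of the loss, scaled by the learning rate.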

In practice, however, the Adam optimization strategy is widely adopted due to its efficiency and robustness. Its update rule combines the current gradient with running estimates of the first moment (momentum) and the second moment (variance) of the gradient at time step s. Two hyperparameters control the smoothing of the momentum and variance estimates, the learning rate scales the step, and a very small constant is added to prevent division by zero and provide smoothing.
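For reference, the standard textbook forms of the gradient descent and Adam updates are written out here. The notation is assumed for illustration: $\theta_s$ for the parameters at step $s$, $g_s$ for the current gradient, $m_s$ and $v_s$ for the first and second moment estimates, $\beta_1$ and $\beta_2$ for the smoothing hyperparameters, $\eta$ for the learning rate, and $\epsilon$ for the small constant. The bias-correction terms belong to standard Adam and may be written differently elsewhere.

```latex
% Gradient descent
\theta_{s+1} = \theta_s - \eta \, g_s

% Adam
m_s = \beta_1 m_{s-1} + (1 - \beta_1)\, g_s
v_s = \beta_2 v_{s-1} + (1 - \beta_2)\, g_s^{2}
\hat{m}_s = \frac{m_s}{1 - \beta_1^{\,s}}, \qquad
\hat{v}_s = \frac{v_s}{1 - \beta_2^{\,s}}
\theta_{s+1} = \theta_s - \eta \, \frac{\hat{m}_s}{\sqrt{\hat{v}_s} + \epsilon}
```

Because Adam divides the momentum estimate by the square root of the variance estimate, the size of each step is largely independent of the raw gradient magnitude.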

To address this optimization imbalance across modalities, we developed an assessment and tuning scheme. It continuously monitors the differences in learning progress across the modality channels and dynamically adjusts the optimization magnitude for each modality. Specifically, we introduce a quantitative metric, the “single-modality optimization disparity rate”.

To quantify the performance of a single modality channel, we approximate the contribution of channel m to the target prediction by applying the weight of channel m at time step s to the output of its feature mapping function, which has its own learnable parameters and takes the sequence of samples for modality m at time step s as input, and then adding a bias term b. Based on this prediction, we evaluate a performance metric for each modality channel m at the given optimization time step in the multimodal model.

Subsequently, by comparing the performance of the video (v), text (t), and audio (a) modality channels, we identify the dominant modality channel at the current time step s as the channel with the best performance metric. To continuously track the performance gap between each non-dominant modality and the current dominant modality, we introduce a dynamic monitoring metric defined for every modality other than the dominant one. Simultaneously, a second metric measures how far the optimization of the dominant modality is ahead of the overall average performance. This allows appropriate constraints to be placed on the dominant modality, which prevents overfitting and reduces its suppression of the optimization of the other modalities.
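The bookkeeping described here can be sketched in a few lines of Python. The difference-based definitions of the gap and the lead are assumptions made purely for illustration, since other forms such as ratios are equally possible, and `modality_gaps` is a hypothetical name.

```python
def modality_gaps(scores):
    """Identify the dominant modality and measure the gaps around it.

    scores: dict mapping a modality name ('v', 't', 'a') to its performance
            metric at the current time step (higher is better).
    Returns (dominant, gaps, lead), where `gaps` maps each non-dominant
    modality to its distance from the dominant one and `lead` is the
    distance of the dominant modality from the average.
    The difference-based definitions are assumptions of this sketch.
    """
    dominant = max(scores, key=scores.get)
    mean_score = sum(scores.values()) / len(scores)
    gaps = {m: scores[dominant] - s for m, s in scores.items() if m != dominant}
    lead = scores[dominant] - mean_score
    return dominant, gaps, lead
```

For example, with scores of 0.4 for video, 0.9 for text, and 0.5 for audio, text is the dominant modality, and the gaps of video and audio indicate how much additional optimization focus each of them would need.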

Based on the analysis of performance differences across modalities, we designed a dynamic optimization tuning mechanism that adjusts the optimization gradient values for each modality channel and thereby narrows the optimization gap between channels. A hyperparameter controls the extent of gradient tuning. Unlike a fixed adjustment function, the tuning parameter of each modality is learnable, so the model can learn through training how to allocate gradient weights among the modalities. When a modality is poorly optimized at a certain stage (e.g., when its loss decreases slowly or its gradients are unstable), the model can adjust the corresponding parameter for that modality, increasing the learning rate or amplifying the gradient to give it more optimization focus. Conversely, for a modality that is already well optimized, the model can suppress the gradient update to prevent overfitting [11]. Therefore, we integrate the optimization difference coefficient into the Adam optimization strategy and use it when updating the learnable parameters of the encoder of each modality at the current time step s.
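One way to combine a per-modality coefficient with Adam is sketched below. How the coefficient enters the update is an assumption here: the sketch scales the final Adam step, which is equivalent to scaling the learning rate for that modality. The name `modulated_adam_step` and the default hyperparameter values are illustrative.

```python
import numpy as np

def modulated_adam_step(theta, grad, m, v, step, coeff,
                        lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam step whose size is scaled by a per-modality coefficient.

    theta, grad, m, v: arrays of the same shape (parameters, gradient,
                       first and second moment estimates).
    step:  1-based index of the current time step.
    coeff: stands in for the optimization difference coefficient of one
           modality; scaling the step by it is an assumption of this sketch.
    """
    m = beta1 * m + (1 - beta1) * grad            # first moment (momentum)
    v = beta2 * v + (1 - beta2) * grad * grad     # second moment (variance)
    m_hat = m / (1 - beta1 ** step)               # bias correction
    v_hat = v / (1 - beta2 ** step)
    theta = theta - lr * coeff * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v
```

The coefficient is applied to the step rather than to the raw gradient because Adam normalizes the gradient magnitude, so a constant factor on the gradient would largely cancel out. A coefficient above 1 gives an under-optimized modality larger steps, and a coefficient below 1 restrains the dominant one.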

After the optimization tuning step, we add random Gaussian noise to the gradients during training to keep the model from becoming trapped in local optima. Perturbing the gradients in this way encourages the parameters to escape local minima and explore a broader region of the weight space, which can lead to better solutions and improved generalization on unseen data.
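Adding Gaussian noise to a gradient can be sketched as follows. The function name and the `noise_std` parameter are hypothetical, and zero-mean noise with a fixed standard deviation is an assumption, since the noise scale could instead be tied to the statistics of the gradient.

```python
import numpy as np

def add_gradient_noise(grad, noise_std, rng):
    """Return the gradient perturbed by zero-mean Gaussian noise.

    grad:      gradient array of any shape.
    noise_std: standard deviation of the noise (an assumed hyperparameter).
    rng:       a numpy.random.Generator, passed in for reproducibility.
    """
    grad = np.asarray(grad, dtype=float)
    return grad + rng.normal(0.0, noise_std, size=grad.shape)

# Example usage with a fixed seed.
rng = np.random.default_rng(0)
noisy_grad = add_gradient_noise(np.ones(1000), 0.1, rng)
```

Because the noise has zero mean, the expected update direction is unchanged, and only the individual steps are perturbed.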