LR-SQL: A Supervised Fine-Tuning Method for Text2SQL Tasks Under Low-Resource Scenarios

Abstract

1. Introduction

- (1) Low-memory Text2SQL fine-tuning framework. We develop a general supervised fine-tuning framework for Text2SQL under low-GPU-memory scenarios that covers both the schema linking model and the SQL generation model. Our framework is publicly available at https://github.com/hongWin/LR-SQL (accessed on 25 August 2025).

- (2) Large-scale benchmark construction. Starting from the Spider dataset, we construct a large-scale dataset that simulates Text2SQL tasks on large- and medium-scale databases and closely reflects real-world scenarios.

- (3) Schema slicing for efficient linking. We propose a schema linking strategy that decomposes each database into multiple slices with adjustable token capacities, so that the linking stage can be sized to the available GPU memory.
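The two-stage workflow in contributions (1) and (3) can be pictured with a minimal Python sketch. It assumes the schema has already been split into slices (a mapping from table name to its DDL text per slice) and stands in for the fine-tuned models with two hypothetical callables: `link_tables`, which returns the tables of one slice judged relevant to the question, and `generate_sql`, which writes SQL from the question and the filtered schema. Neither name is taken from the LR-SQL repository.

```python
from typing import Callable, Dict, List

# Hypothetical model interfaces: stand-ins for the fine-tuned schema linking
# model and the SQL generation model, not the LR-SQL API.
LinkFn = Callable[[str, Dict[str, str]], List[str]]
GenerateFn = Callable[[str, Dict[str, str]], str]


def text2sql(question: str,
             slices: List[Dict[str, str]],
             link_tables: LinkFn,
             generate_sql: GenerateFn) -> str:
    """Filter relevant tables slice by slice, then generate SQL once."""
    relevant: Dict[str, str] = {}
    for schema_slice in slices:
        # Each linking call sees only one slice, so its prompt length is bounded
        # by the slice token capacity rather than by the whole database.
        for table in link_tables(question, schema_slice):
            if table in schema_slice:  # ignore table names not in this slice
                relevant[table] = schema_slice[table]
    # The generator receives only the union of the filtered tables.
    return generate_sql(question, relevant)


if __name__ == "__main__":
    slices = [
        {"singer": "singer(singer_id, name, age)", "concert": "concert(concert_id, stadium_id)"},
        {"stadium": "stadium(stadium_id, capacity)"},
    ]
    # Toy linker: keep tables whose name appears in the question.
    keyword_linker = lambda q, s: [t for t in s if t in q.lower()]
    # Toy generator: reports which tables it was given.
    echo_generator = lambda q, schema: "-- tables: " + ", ".join(sorted(schema))
    print(text2sql("How many singers are older than 30?", slices, keyword_linker, echo_generator))
```

The design point the sketch tries to capture is that peak memory during linking depends on the slice size, while the number of linking calls grows with the number of slices.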

2. Related Work

2.1. Fine-Tuning-Based Text2SQL Methods

2.2. Low-Resource Training Based on PEFT

2.3. Short-Context-Window Handling for Long Contexts

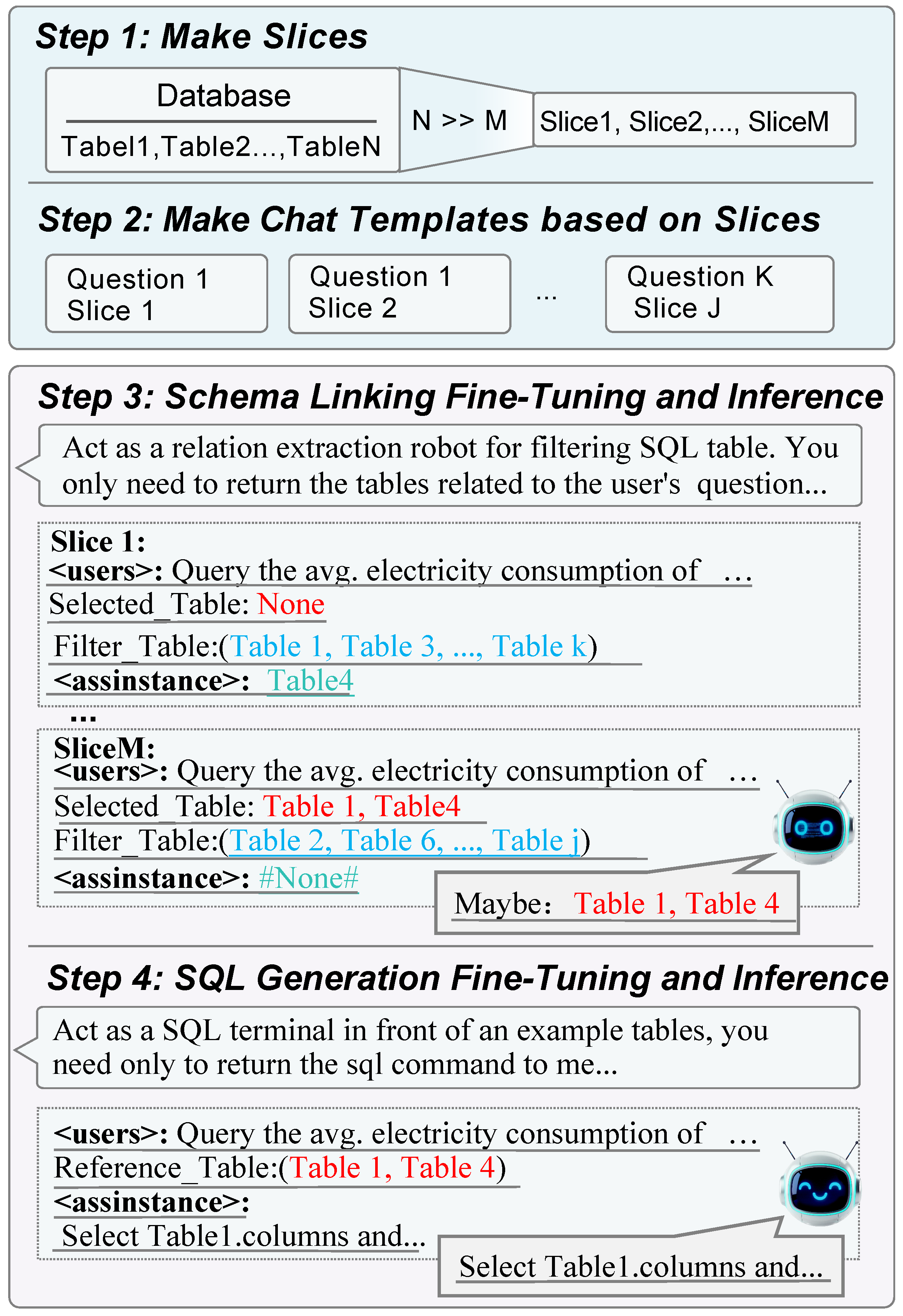

3. Methodology

3.1. Slice Construction

| Algorithm 1 Constructing the slice set W |
|---|
| Require: , , , |
| Ensure: Slice set W |
| 1: Initialize , |
| 2: while do |
| 3: while do |
| 4: if then |
| 5: |
| 6: else |
| 7: , |
| 8: |
| 9: end if |
| 10: end while |
| 11: end while |
| 12: while do |
| 13: if then |
| 14: |
| 15: else |
| 16: , |
| 17: |
| 18: end if |
| 19: end while |
| 20: return W |
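Only the control flow of Algorithm 1 survives in this copy: nested loops that either add an item to the current slice or close it and open a new one, followed by a second loop over what remains. The Python sketch below illustrates that pattern as greedy packing under a token budget; it is not a reconstruction of the paper's exact conditions. The inputs are assumptions: `table_tokens` holds a precomputed token count per table, `fk_groups` lists tables connected by foreign keys (visited first so related tables tend to share a slice), and `capacity` is the slice token capacity.

```python
from typing import Dict, List


def build_slices(table_tokens: Dict[str, int],
                 fk_groups: List[List[str]],
                 capacity: int) -> List[List[str]]:
    """Greedily pack tables into slices whose token total stays within capacity.

    A table larger than `capacity` is still emitted, alone in its own slice.
    """
    # First pass: tables linked by foreign keys, group by group.
    order = [t for group in fk_groups for t in group]
    grouped = set(order)
    # Second pass: tables that belong to no foreign-key group.
    order += [t for t in table_tokens if t not in grouped]

    slices: List[List[str]] = []
    current: List[str] = []
    used = 0
    seen = set()
    for table in order:
        if table in seen:  # a table may be listed in more than one group
            continue
        seen.add(table)
        cost = table_tokens[table]
        if current and used + cost > capacity:
            # Budget exceeded: close the current slice and start a new one.
            slices.append(current)
            current, used = [], 0
        current.append(table)
        used += cost
    if current:
        slices.append(current)
    return slices
```

For example, `build_slices({"singer": 300, "song": 400, "concert": 250, "stadium": 200}, [["singer", "song"], ["concert", "stadium"]], 700)` returns `[["singer", "song"], ["concert", "stadium"]]`; lowering `capacity` yields more, smaller slices and therefore more linking passes.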

3.2. Fine-Tuning on the Slice-Based Related Table Filtering Task

4. Experiments

4.1. Dataset Construction

4.2. Experimental Setup

4.3. Schema Linking Task Evaluation

4.4. CoT Effectiveness Evaluation

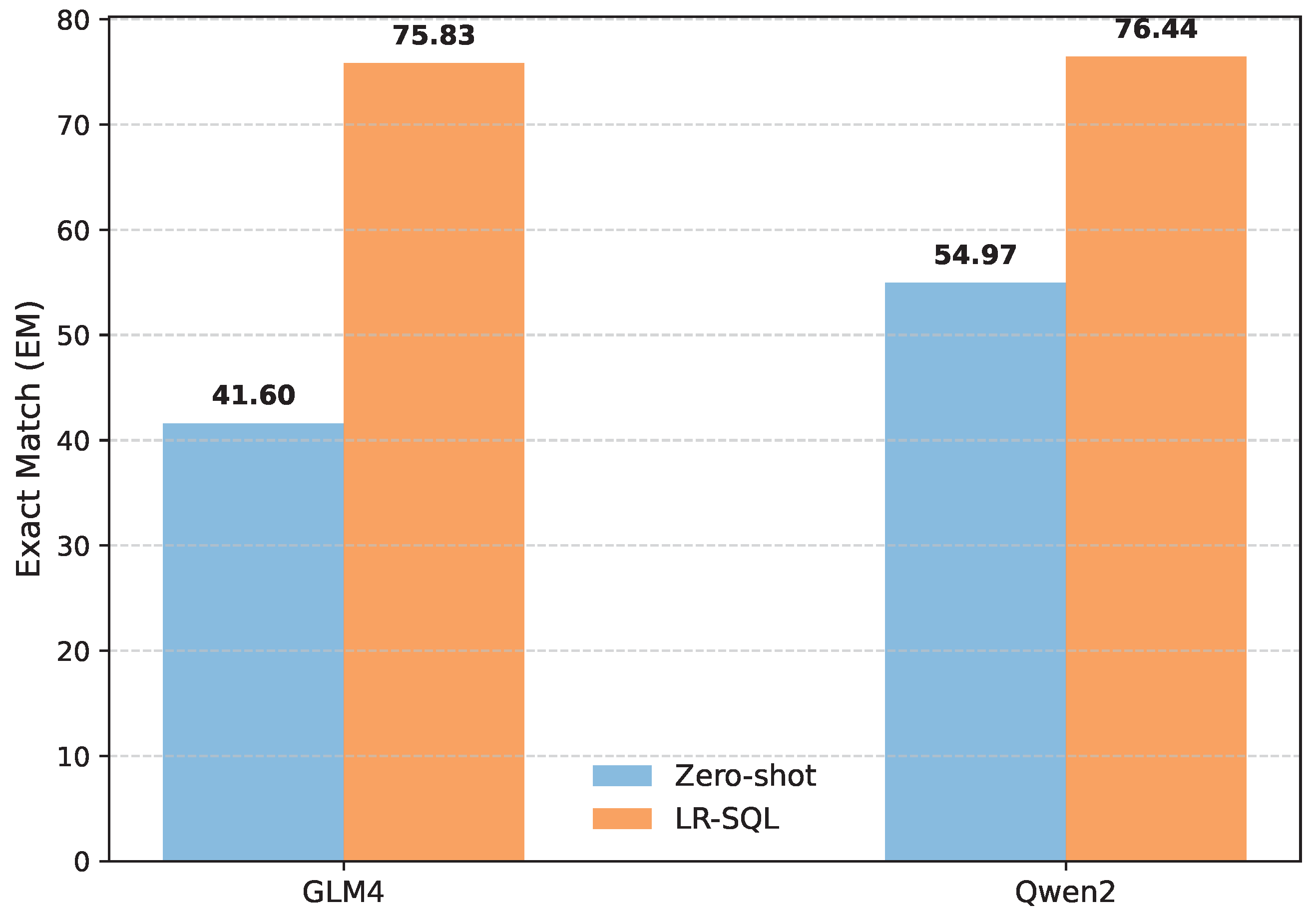

4.5. SQL Generation Evaluation

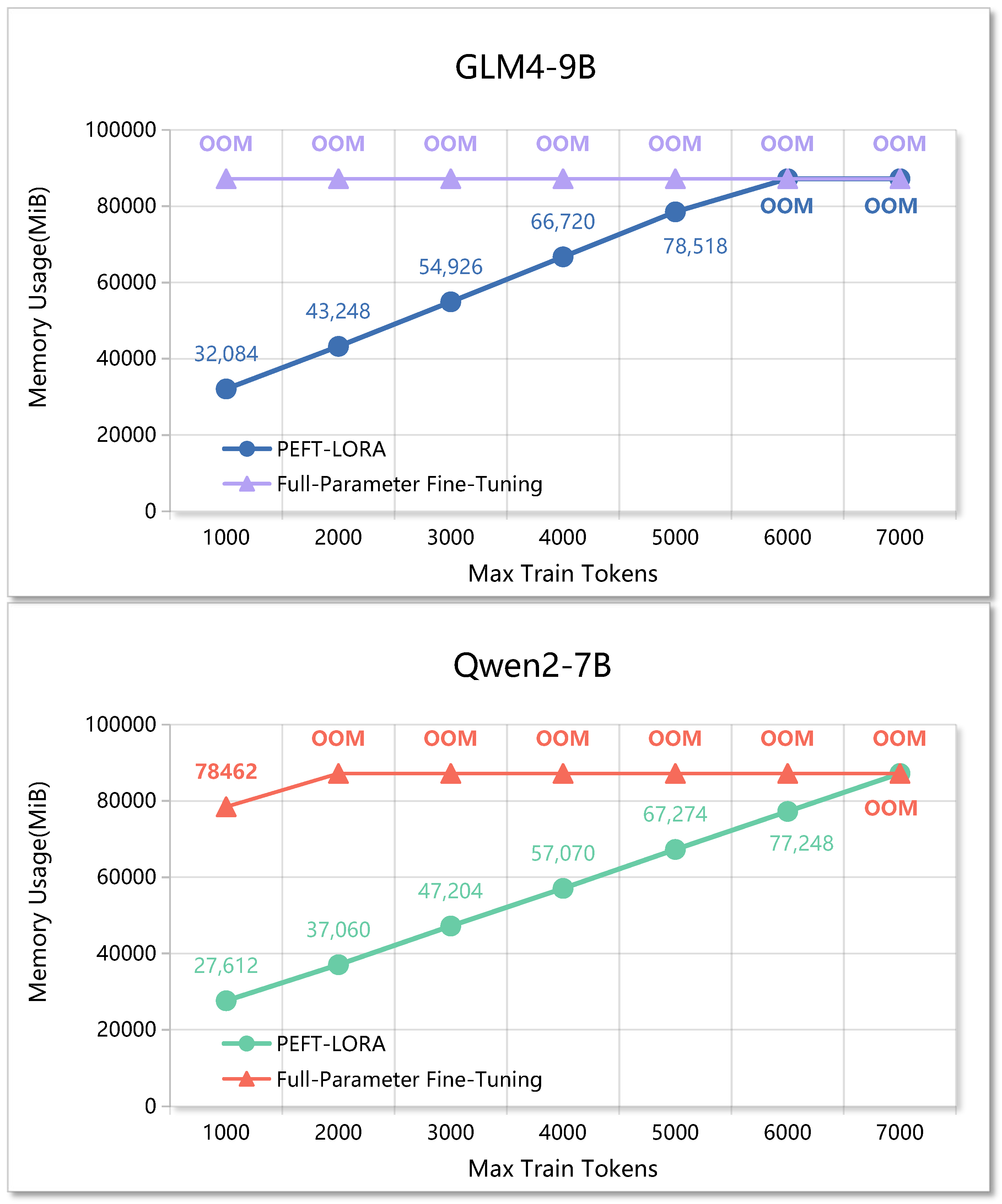

4.6. Slice Token, GPU Memory, and Performance Evaluation

4.7. Slice Size During Inference Evaluation

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Ablation Study on Different Slicing Methods

| Model | Slicing | TotalAcc | FilteredAcc | AvgPre | AvgRecall |
|---|---|---|---|---|---|
| GLM4 | Random | 91.57 | 93.82 | 97.61 | 96.44 |
| GLM4 | Foreign Key | 94.38 | 97.19 | 96.91 | 97.85 |
| Qwen2 | Random | 84.27 | 87.08 | 94.94 | 92.37 |
| Qwen2 | Foreign Key | 89.89 | 92.13 | 94.94 | 94.48 |
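Appendix A contrasts random slicing with foreign-key-based slicing. One plausible way to obtain foreign-key groups, assumed here rather than taken from the paper, is to treat tables as nodes and foreign keys as edges and take the connected components; a small union-find sketch follows. It assumes every foreign key references a table in the given list.

```python
from typing import Dict, Iterable, List, Tuple


def foreign_key_groups(tables: Iterable[str],
                       foreign_keys: Iterable[Tuple[str, str]]) -> List[List[str]]:
    """Group tables into connected components of the foreign-key graph."""
    parent: Dict[str, str] = {t: t for t in tables}

    def find(t: str) -> str:
        # Path halving keeps the union-find trees shallow.
        while parent[t] != t:
            parent[t] = parent[parent[t]]
            t = parent[t]
        return t

    for child, referenced in foreign_keys:
        root_a, root_b = find(child), find(referenced)
        if root_a != root_b:
            parent[root_b] = root_a

    groups: Dict[str, List[str]] = {}
    for t in parent:  # dicts keep insertion order, so the output is deterministic
        groups.setdefault(find(t), []).append(t)
    return list(groups.values())
```

The resulting groups could feed a greedy packer that keeps related tables adjacent, whereas random slicing would presumably shuffle tables before packing and so split foreign-key neighbours more often.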

Appendix B. Further Experiments with QLoRA

| Method | TotalAcc | FilteredAcc | AvgPre | AvgRecall | GPU Usage |
|---|---|---|---|---|---|
| LoRA | 89.89 | 92.13 | 94.94 | 94.48 | 38,374 |
| QLoRA (No Quant.) | 53.38 | 59.55 | 71.3 | 67.6 | 35,813 |
| QLoRA (Quant.) | 62.36 | 63.48 | 80.15 | 73.17 | 35,813 |

Appendix C. Further Experiments on the WikiSQL Dataset

References

- Xiao, D.; Chai, L.; Zhang, Q.W.; Yan, Z.; Li, Z.; Cao, Y. CQR-SQL: Conversational Question Reformulation Enhanced Context-Dependent Text-to-SQL Parsers. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; Goldberg, Y., Kozareva, Z., Zhang, Y., Eds.; pp. 2055–2068.

- Zheng, Y.; Wang, H.; Dong, B.; Wang, X.; Li, C. HIE-SQL: History Information Enhanced Network for Context-Dependent Text-to-SQL Semantic Parsing. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022; Muresan, S., Nakov, P., Villavicencio, A., Eds.; pp. 2997–3007.

- Hui, B.; Geng, R.; Wang, L.; Qin, B.; Li, Y.; Li, B.; Sun, J.; Li, Y. S2SQL: Injecting Syntax to Question-Schema Interaction Graph Encoder for Text-to-SQL Parsers. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022; Muresan, S., Nakov, P., Villavicencio, A., Eds.; pp. 1254–1262.

- Shi, L.; Tang, Z.; Zhang, N.; Zhang, X.; Yang, Z. A Survey on Employing Large Language Models for Text-to-SQL Tasks. ACM Comput. Surv. 2025.

- Li, H.; Zhang, J.; Liu, H.; Fan, J.; Zhang, X.; Zhu, J.; Wei, R.; Pan, H.; Li, C.; Chen, H. CodeS: Towards building open-source language models for text-to-SQL. Proc. ACM Manag. Data 2024, 2, 1–28.

- Lee, D.; Park, C.; Kim, J.; Park, H. MCS-SQL: Leveraging Multiple Prompts and Multiple-Choice Selection For Text-to-SQL Generation. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; Rambow, O., Wanner, L., Apidianaki, M., Al-Khalifa, H., Eugenio, B.D., Schockaert, S., Eds.; pp. 337–353.

- Pourreza, M.; Rafiei, D. DIN-SQL: Decomposed in-context learning of text-to-SQL with self-correction. Adv. Neural Inf. Process. Syst. 2024, 36, 36339–36348.

- Wang, B.; Ren, C.; Yang, J.; Liang, X.; Bai, J.; Chai, L.; Yan, Z.; Zhang, Q.W.; Yin, D.; Sun, X.; et al. MAC-SQL: A Multi-Agent Collaborative Framework for Text-to-SQL. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; Rambow, O., Wanner, L., Apidianaki, M., Al-Khalifa, H., Eugenio, B.D., Schockaert, S., Eds.; pp. 540–557.

- Dong, X.; Zhang, C.; Ge, Y.; Mao, Y.; Gao, Y.; Lin, J.; Lou, D. C3: Zero-shot text-to-SQL with ChatGPT. arXiv 2023, arXiv:2307.07306.

- Houlsby, N.; Giurgiu, A.; Jastrzebski, S.; Morrone, B.; De Laroussilhe, Q.; Gesmundo, A.; Attariyan, M.; Gelly, S. Parameter-Efficient Transfer Learning for NLP. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Chaudhuri, K., Salakhutdinov, R., Eds.; PMLR; Proceedings of Machine Learning Research. Volume 97, pp. 2790–2799.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-rank adaptation of large language models. ICLR 2022, 1, 3.

- Pourreza, M.; Rafiei, D. DTS-SQL: Decomposed Text-to-SQL with Small Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; pp. 8212–8220.

- Xue, S.; Qi, D.; Jiang, C.; Cheng, F.; Chen, K.; Zhang, Z.; Zhang, H.; Wei, G.; Zhao, W.; Zhou, F.; et al. Demonstration of DB-GPT: Next Generation Data Interaction System Empowered by Large Language Models. Proc. VLDB Endow. 2024, 17, 4365–4368.

- yu Zhu, G.; Shao, W.; Zhu, X.; Yu, L.; Guo, J.; Cheng, X. Text2Sql: Pure Fine-Tuning and Pure Knowledge Distillation. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 3: Industry Track), Albuquerque, NM, USA, 30 April–2 May 2025; pp. 54–61.

- Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open foundation and fine-tuned chat models. arXiv 2023, arXiv:2307.09288.

- Shi, W.; Li, S.; Yu, K.; Chen, J.; Liang, Z.; Wu, X.; Qian, Y.; Wei, F.; Zheng, B.; Liang, J.; et al. SEGMENT+: Long Text Processing with Short-Context Language Models. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; pp. 16605–16617.

- Xu, F.; Shi, W.; Choi, E. RECOMP: Improving retrieval-augmented LMs with compression and selective augmentation. arXiv 2023, arXiv:2310.04408.

- Cao, B.; Cai, D.; Lam, W. InfiniteICL: Breaking the Limit of Context Window Size via Long Short-term Memory Transformation. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2025, Vienna, Austria, 27 July–1 August 2025; Che, W., Nabende, J., Shutova, E., Pilehvar, M.T., Eds.; pp. 11402–11415.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

- Zhang, Z.; Yao, Y.; Zhang, A.; Tang, X.; Ma, X.; He, Z.; Wang, Y.; Gerstein, M.; Wang, R.; Liu, G.; et al. Igniting language intelligence: The hitchhiker’s guide from chain-of-thought reasoning to language agents. ACM Comput. Surv. 2025, 57, 1–39.

- Sun, G.; Shen, R.; Jin, L.; Wang, Y.; Xu, S.; Chen, J.; Jiang, W. Instruction Tuning Text-to-SQL with Large Language Models in the Power Grid Domain. In Proceedings of the 2023 4th International Conference on Control, Robotics and Intelligent System, Guangzhou, China, 25–27 August 2023; pp. 59–63.

- Hong, Z.; Yuan, Z.; Chen, H.; Zhang, Q.; Huang, F.; Huang, X. Knowledge-to-SQL: Enhancing SQL Generation with Data Expert LLM. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; Ku, L.W., Martins, A., Srikumar, V., Eds.; pp. 10997–11008.

- Rafailov, R.; Sharma, A.; Mitchell, E.; Manning, C.D.; Ermon, S.; Finn, C. Direct preference optimization: Your language model is secretly a reward model. Adv. Neural Inf. Process. Syst. 2023, 36, 53728–53741.

- Stoisser, J.L.; Martell, M.B.; Fauqueur, J. Sparks of Tabular Reasoning via Text2SQL Reinforcement Learning. In Proceedings of the 4th Table Representation Learning Workshop, Vienna, Austria, 1 August 2025; Chang, S., Hulsebos, M., Liu, Q., Chen, W., Sun, H., Eds.; pp. 229–240.

- Ma, P.; Zhuang, X.; Xu, C.; Jiang, X.; Chen, R.; Guo, J. SQL-R1: Training natural language to SQL reasoning model by reinforcement learning. arXiv 2025, arXiv:2504.08600.

- Sun, R.; Arik, S.Ö.; Muzio, A.; Miculicich, L.; Gundabathula, S.; Yin, P.; Dai, H.; Nakhost, H.; Sinha, R.; Wang, Z.; et al. SQL-PaLM: Improved large language model adaptation for text-to-SQL (extended). arXiv 2023, arXiv:2306.00739.

- Shen, R.; Sun, G.; Shen, H.; Li, Y.; Jin, L.; Jiang, H. SPSQL: Step-by-step parsing based framework for text-to-SQL generation. In Proceedings of the 2023 7th International Conference on Machine Vision and Information Technology (CMVIT), Xiamen, China, 24–26 March 2023; pp. 115–122.

- Zhang, L.; Zhang, L.; Shi, S.; Chu, X.; Li, B. LoRA-FA: Memory-efficient low-rank adaptation for large language models fine-tuning. arXiv 2023, arXiv:2308.03303.

- Gao, Z.; Wang, Q.; Chen, A.; Liu, Z.; Wu, B.; Chen, L.; Li, J. Parameter-efficient fine-tuning with discrete fourier transform. arXiv 2024, arXiv:2405.03003.

- Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. QLoRA: Efficient finetuning of quantized LLMs. Adv. Neural Inf. Process. Syst. 2024, 36, 10088–10115.

- Liao, B.; Herold, C.; Khadivi, S.; Monz, C. ApiQ: Finetuning of 2-Bit Quantized Large Language Model. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; pp. 20996–21020.

- Chen, Y.; Qian, S.; Tang, H.; Lai, X.; Liu, Z.; Han, S.; Jia, J. LongLoRA: Efficient fine-tuning of long-context large language models. arXiv 2023, arXiv:2309.12307.

- Simoulin, A.; Park, N.; Liu, X.; Yang, G. Memory-Efficient Fine-Tuning of Transformers via Token Selection. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; pp. 21565–21580.

- Lester, B.; Al-Rfou, R.; Constant, N. The Power of Scale for Parameter-Efficient Prompt Tuning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online, Punta Cana, Dominican Republic, 7–11 November 2021; Moens, M.F., Huang, X., Specia, L., Yih, S.W.t., Eds.; pp. 3045–3059.

- Li, X.L.; Liang, P. Prefix-Tuning: Optimizing Continuous Prompts for Generation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–16 August 2021; Zong, C., Xia, F., Li, W., Navigli, R., Eds.; pp. 4582–4597.

- Yang, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Li, C.; Liu, D.; Huang, F.; Wei, H.; et al. Qwen2.5 technical report. arXiv 2024, arXiv:2412.15115.

- Liu, A.; Feng, B.; Xue, B.; Wang, B.; Wu, B.; Lu, C.; Zhao, C.; Deng, C.; Zhang, C.; Ruan, C.; et al. DeepSeek-V3 technical report. arXiv 2024, arXiv:2412.19437.

- Gao, T.; Wettig, A.; Yen, H.; Chen, D. How to train long-context language models (effectively). arXiv 2024, arXiv:2410.02660.

- Li, Y.; Dong, B.; Guerin, F.; Lin, C. Compressing Context to Enhance Inference Efficiency of Large Language Models. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 7–11 December 2023; Bouamor, H., Pino, J., Bali, K., Eds.; pp. 6342–6353.

- Lee, K.H.; Chen, X.; Furuta, H.; Canny, J.; Fischer, I. A human-inspired reading agent with gist memory of very long contexts. arXiv 2024, arXiv:2402.09727.

- Chen, H.; Pasunuru, R.; Weston, J.; Celikyilmaz, A. Walking down the memory maze: Beyond context limit through interactive reading. arXiv 2023, arXiv:2310.05029.

- Silberschatz, A.; Korth, H.F.; Sudarshan, S. Database System Concepts; McGraw-Hill: Columbus, OH, USA, 2011.

- Yu, T.; Zhang, R.; Yang, K.; Yasunaga, M.; Wang, D.; Li, Z.; Ma, J.; Li, I.; Yao, Q.; Roman, S.; et al. Spider: A large-scale human-labeled dataset for complex and cross-domain semantic parsing and text-to-SQL task. arXiv 2018, arXiv:1809.08887.

- GLM, T.; Zeng, A.; Xu, B.; Wang, B.; Zhang, C.; Yin, D.; Rojas, D.; Feng, G.; Zhao, H.; Lai, H.; et al. ChatGLM: A Family of Large Language Models from GLM-130B to GLM-4 All Tools. arXiv 2024, arXiv:2406.12793.

- Yang, A.; Yang, B.; Hui, B.; Zheng, B.; Yu, B.; Zhou, C.; Li, C.; Li, C.; Liu, D.; Huang, F.; et al. Qwen2 technical report. arXiv 2024, arXiv:2407.10671.

- Bi, X.; Chen, D.; Chen, G.; Chen, S.; Dai, D.; Deng, C.; Ding, H.; Dong, K.; Du, Q.; Fu, Z.; et al. DeepSeek LLM: Scaling open-source language models with longtermism. arXiv 2024, arXiv:2401.02954.

| Hyperparameter | Search Values |
|---|---|
| LoRA rank (lora_r) | {32, 64, 128} |
| LoRA alpha (lora_alpha) | {16, 32, 64} |
| LoRA dropout (lora_dropout) | {0.05, 0.1, 0.2} |
| Learning rate | {, , } |
| Weight decay | {0.0, 0.01, 0.05} |
| LR scheduler type | {cosine, constant} |
| Warmup ratio | {0.01, 0.05} |
| Max grad norm | {0.1, 0.3, 0.5} |
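The search space above can be enumerated mechanically. The sketch below uses only the standard library; the learning-rate values did not survive in this copy of the table, so they are left out rather than guessed. The key names mirror real configuration fields (`r`, `lora_alpha`, `lora_dropout` in PEFT's `LoraConfig`; `weight_decay`, `lr_scheduler_type`, `warmup_ratio`, `max_grad_norm` in Hugging Face `TrainingArguments`), but whether the authors searched the full Cartesian product is an assumption.

```python
import itertools
from typing import Dict, Iterator, List

# Values copied from the hyperparameter table. The learning rate is omitted
# because its values are missing from the extracted table.
SEARCH_SPACE: Dict[str, List] = {
    "r": [32, 64, 128],
    "lora_alpha": [16, 32, 64],
    "lora_dropout": [0.05, 0.1, 0.2],
    "weight_decay": [0.0, 0.01, 0.05],
    "lr_scheduler_type": ["cosine", "constant"],
    "warmup_ratio": [0.01, 0.05],
    "max_grad_norm": [0.1, 0.3, 0.5],
}


def grid(space: Dict[str, List]) -> Iterator[Dict[str, object]]:
    """Yield every combination in the full Cartesian product of the space."""
    keys = list(space)
    for values in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


if __name__ == "__main__":
    configs = list(grid(SEARCH_SPACE))
    print(len(configs))  # combinations before multiplying by learning rates
    print(configs[0])
```

Without the learning rate the full grid already has 3^5 × 2^2 = 972 points; three learning-rate values would raise that to 2,916 configurations.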

Spider-Large

| Method | TotalAcc | FilteredAcc | AvgPre | AvgRecall | GPU Usage | Inference Time |
|---|---|---|---|---|---|---|
| GLM4 (zero-shot) | 20.62 | 66.58 | 54.0 | 76.97 | 21,333 | 1.12 |
| Qwen2 (zero-shot) | 9.30 | 13.84 | 27.62 | 21.08 | 32,884 | 1.39 |
| DeepSeek (zero-shot) | – | – | – | – | OOW | – |
| GLM4 (compromise) | 78.59 | 83.81 | 87.89 | 88.00 | 59,472 | 1.02 |
| Qwen2 (compromise) | 72.84 | 78.85 | 88.60 | 87.02 | 33,736 | 1.23 |
| DeepSeek (compromise) | 60.57 | 70.50 | 83.14 | 81.59 | 67,770 | 0.62 |
| GLM4 (DTS-SQL) | – | – | – | – | OOM | – |
| Qwen2 (DTS-SQL) | – | – | – | – | OOM | – |
| DeepSeek (DTS-SQL) | – | – | – | – | OOW | – |
| GLM4 (LR-SQL) | 91.38 | 94.26 | 95.50 | 95.76 | 57,152 | 6.49 |
| Qwen2 (LR-SQL) | 84.07 | 87.21 | 92.78 | 91.92 | 48,310 | 5.49 |
| DeepSeek (LR-SQL) | 79.37 | 86.16 | 91.31 | 91.23 | 77,650 | 8.82 |

Spider-Medium

| Method | TotalAcc | FilteredAcc | AvgPre | AvgRecall | GPU Usage | Inference Time |
|---|---|---|---|---|---|---|
| GLM4 (zero-shot) | 25.84 | 74.72 | 58.25 | 82.68 | 21,101 | 1.29 |
| Qwen2 (zero-shot) | 19.66 | 28.65 | 36.70 | 36.14 | 33,590 | 2.68 |
| DeepSeek (zero-shot) | – | – | – | – | OOW | – |
| GLM4 (compromise) | 80.33 | 85.39 | 92.09 | 90.45 | 39,874 | 0.67 |
| Qwen2 (compromise) | 69.66 | 74.15 | 85.95 | 83.47 | 30,268 | 1.06 |
| DeepSeek (compromise) | 71.34 | 77.52 | 86.37 | 85.15 | 60,094 | 0.53 |
| GLM4 (DTS-SQL) | 96.63 | 97.75 | 97.75 | 98.17 | 78,772 | 1.82 |
| Qwen2 (DTS-SQL) | 92.70 | 94.38 | 97.08 | 96.82 | 67,342 | 3.14 |
| DeepSeek (DTS-SQL) | – | – | – | – | OOW | – |
| GLM4 (LR-SQL) | 94.38 | 97.19 | 96.91 | 97.85 | 45,506 | 5.79 |
| Qwen2 (LR-SQL) | 89.89 | 92.13 | 94.94 | 94.48 | 38,374 | 5.33 |
| DeepSeek (LR-SQL) | 75.28 | 88.76 | 87.07 | 91.43 | 75,102 | 6.08 |
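The metric definitions are not part of this copy of the paper. The sketch below shows one plausible reading, stated as an assumption: per-question precision and recall of the predicted table set against the gold table set, averaged over questions (AvgPre, AvgRecall); exact set match as TotalAcc; and full coverage of the gold set as FilteredAcc. This reading is consistent with FilteredAcc never falling below TotalAcc in the tables, but the authors' definitions may differ.

```python
from typing import Dict, List, Set


def linking_metrics(pred: List[Set[str]], gold: List[Set[str]]) -> Dict[str, float]:
    """Table-set metrics under the assumed definitions, in percent."""
    if not gold or len(pred) != len(gold):
        raise ValueError("need one predicted table set per gold table set")
    n = len(gold)
    exact = covered = 0
    precision_sum = recall_sum = 0.0
    for p, g in zip(pred, gold):
        hit = len(p & g)
        exact += int(p == g)    # assumed TotalAcc: predicted set equals gold set
        covered += int(g <= p)  # assumed FilteredAcc: no gold table was dropped
        precision_sum += hit / len(p) if p else 0.0
        recall_sum += hit / len(g) if g else 1.0
    return {
        "TotalAcc": 100 * exact / n,
        "FilteredAcc": 100 * covered / n,
        "AvgPre": 100 * precision_sum / n,
        "AvgRecall": 100 * recall_sum / n,
    }
```

With predictions `[{"singer"}, {"singer", "concert"}]` against gold `[{"singer"}, {"concert"}]`, the second question keeps its gold table but adds an extra one, so it counts toward FilteredAcc and AvgRecall while lowering TotalAcc and AvgPre.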

Spider-Large

| Method | TotalAcc | FilteredAcc | AvgPre | AvgRecall |
|---|---|---|---|---|
| GLM4 (no_CoT) | 88.51 | 94.52 | 95.63 | 96.74 |
| GLM4 (CoT_Injection) | 91.38 | 94.26 | 95.50 | 95.76 |
| GLM4 (CoT) | 87.99 | 91.38 | 94.77 | 94.50 |
| DeepSeek (no_CoT) | 74.15 | 88.51 | 90.07 | 93.72 |
| DeepSeek (CoT_Injection) | 79.37 | 86.16 | 91.31 | 91.23 |
| DeepSeek (CoT) | 75.20 | 85.90 | 91.07 | 92.46 |
| Qwen2 (no_CoT) | 79.90 | 83.03 | 92.15 | 91.19 |
| Qwen2 (CoT_Injection) | 84.07 | 87.21 | 92.78 | 91.92 |
| Qwen2 (CoT) | 66.58 | 67.62 | 90.77 | 81.24 |

Spider-Medium

| Method | TotalAcc | FilteredAcc | AvgPre | AvgRecall |
|---|---|---|---|---|
| GLM4 (no_CoT) | 91.57 | 96.62 | 95.79 | 97.47 |
| GLM4 (CoT_Injection) | 94.38 | 97.19 | 96.91 | 97.85 |
| GLM4 (CoT) | 88.76 | 91.01 | 97.19 | 95.08 |
| DeepSeek (no_CoT) | 73.03 | 88.20 | 87.68 | 92.36 |
| DeepSeek (CoT_Injection) | 75.28 | 88.76 | 87.07 | 91.43 |
| DeepSeek (CoT) | 73.60 | 85.39 | 88.90 | 90.96 |
| Qwen2 (no_CoT) | 88.76 | 92.13 | 94.38 | 94.57 |
| Qwen2 (CoT_Injection) | 89.89 | 92.13 | 94.94 | 94.48 |
| Qwen2 (CoT) | 76.97 | 77.53 | 92.79 | 86.42 |

Spider-Large

| Method | EX | EM | GPU Usage | Total Time |
|---|---|---|---|---|
| LR-SQL | 85.9 | 73.9 | 57,152 | 4.53 + 6.49 |
| Zero-shot | 42.3 | 11.7 | 23,048 | 2.87 |
| DTS-SQL | – | – | OOM | – |
| DB-GPT | – | – | OOM | – |

Spider-Medium

| Method | EX | EM | GPU Usage | Total Time |
|---|---|---|---|---|
| LR-SQL | 84.8 | 77.0 | 45,506 | 4.24 + 5.79 |
| Zero-shot | 41.0 | 14.0 | 20,924 | 2.232 |
| DTS-SQL | 85.4 | 75.3 | 78,772 | 4.32 + 1.82 |
| DB-GPT | 83.1 | 80.9 | 80,498 | 2.66 |
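EX (execution accuracy) and EM (exact match) are the usual Spider metrics. A simplified execution-accuracy check, assuming an SQLite database as used by Spider, runs the predicted and gold queries and compares their results. The official Spider evaluation scripts handle further cases, so the function names and the crude `ORDER BY` test below are illustrative assumptions only.

```python
import sqlite3
from collections import Counter
from typing import Iterable, Tuple


def execution_match(conn: sqlite3.Connection, pred_sql: str, gold_sql: str) -> bool:
    """True if both queries return the same rows (order-sensitive only with ORDER BY)."""
    try:
        pred_rows = conn.execute(pred_sql).fetchall()
    except sqlite3.Error:
        return False  # a prediction that fails to execute counts as wrong
    gold_rows = conn.execute(gold_sql).fetchall()
    if "order by" in gold_sql.lower():  # crude check for an ordered gold query
        return pred_rows == gold_rows
    # Otherwise compare as multisets: row order is ignored, duplicates still count.
    return Counter(pred_rows) == Counter(gold_rows)


def execution_accuracy(conn: sqlite3.Connection,
                       pairs: Iterable[Tuple[str, str]]) -> float:
    """Percentage of (predicted, gold) query pairs whose results match."""
    pairs = list(pairs)
    if not pairs:
        return 0.0
    hits = sum(execution_match(conn, p, g) for p, g in pairs)
    return 100.0 * hits / len(pairs)
```

Execution-based scoring credits a prediction such as `age > 30` against a gold `age >= 31` on integer data, which exact-match scoring would reject; this is one reason EX and EM can rank methods differently.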

Spider-Large

| Method | TotalAcc | FilteredAcc | AvgPre | AvgRecall | GPU Usage | Inference Time |
|---|---|---|---|---|---|---|
| GLM4 (, = 2100) | 87.99 | 93.47 | 94.20 | 94.71 | 69,599 | 5.40 |
| GLM4 (, = 1600) | 91.38 | 94.26 | 95.50 | 95.76 | 57,152 | 6.49 |
| GLM4 (, = 1300) | 90.60 | 93.47 | 95.10 | 95.44 | 50,429 | 7.89 |
| GLM4 (, = 1100) | 87.46 | 91.38 | 94.76 | 94.51 | 45,825 | 9.56 |

Spider-Medium

| Method | TotalAcc | FilteredAcc | AvgPre | AvgRecall | GPU Usage | Inference Time |
|---|---|---|---|---|---|---|
| GLM4 (, = 1600) | 93.82 | 95.50 | 95.36 | 95.88 | 55,059 | 4.49 |
| GLM4 (, = 1100) | 94.38 | 97.19 | 96.91 | 97.85 | 45,506 | 5.79 |
| GLM4 (, = 800) | 92.13 | 95.51 | 95.17 | 96.25 | 39,155 | 7.01 |
| GLM4 (, = 700) | 92.13 | 93.82 | 95.53 | 95.13 | 38,263 | 9.34 |
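In both tables, GPU usage falls and inference time rises as the varied token value shrinks. A back-of-the-envelope sketch makes the trend concrete. It assumes, based on the title of Section 4.6 rather than an explicit statement in this copy, that the value caps the schema tokens per slice and that each slice costs one pass of the linking model; under those assumptions the number of passes is at least the schema size divided by the capacity, rounded up. The 10,000-token schema is a made-up figure.

```python
import math


def min_slices(schema_tokens: int, capacity: int) -> int:
    """Lower bound on the number of slices (and linking passes) for a capacity."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    return math.ceil(schema_tokens / capacity)


if __name__ == "__main__":
    SCHEMA_TOKENS = 10_000  # hypothetical schema size, not taken from the paper
    for capacity in (2100, 1600, 1300, 1100):
        print(capacity, min_slices(SCHEMA_TOKENS, capacity))
```

Halving the capacity roughly doubles the number of passes while shrinking each prompt, which matches the direction of the memory and time columns above. Real slices are packed table by table, so the actual count can exceed this bound when table sizes do not fill each slice exactly.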

Spider-Large

| Method | TotalAcc | FilteredAcc | AvgPre | AvgRecall | Inference Time |
|---|---|---|---|---|---|
| GLM4 (total() = 8) | 91.38 | 94.52 | 95.63 | 96.74 | 6.49 |
| GLM4 (total() = 5) | 83.02 | 88.51 | 92.96 | 92.56 | 4.57 |
| GLM4 (total() = 4) | 81.98 | 86.94 | 92.59 | 91.78 | 3.90 |
| Qwen (total() = 8) | 84.07 | 87.21 | 92.78 | 91.92 | 5.49 |
| Qwen (total() = 5) | 70.49 | 72.06 | 85.33 | 80.47 | 3.60 |
| Qwen (total() = 4) | 68.15 | 69.19 | 82.13 | 76.91 | 3.08 |

Spider-Medium

| Method | TotalAcc | FilteredAcc | AvgPre | AvgRecall | Inference Time |
|---|---|---|---|---|---|
| GLM4 (total() = 8) | 94.38 | 97.19 | 96.91 | 97.85 | 5.79 |
| GLM4 (total() = 5) | 88.7 | 91.57 | 95.13 | 94.42 | 3.91 |
| GLM4 (total() = 4) | 83.70 | 86.51 | 93.63 | 92.13 | 3.30 |
| Qwen (total() = 8) | 89.89 | 92.13 | 94.94 | 94.48 | 4.99 |
| Qwen (total() = 5) | 80.90 | 84.27 | 92.64 | 89.84 | 3.25 |
| Qwen (total() = 4) | 79.21 | 82.02 | 91.95 | 87.78 | 2.72 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wen, W.; Zhang, Y.; Pan, S.; Sun, Y.; Lu, P.; Ding, C. LR-SQL: A Supervised Fine-Tuning Method for Text2SQL Tasks Under Low-Resource Scenarios. Electronics 2025, 14, 3489. https://doi.org/10.3390/electronics14173489
