Electrical Power Optimization of Cloud Data Centers Using Federated Learning Server Workload Allocation

Abstract

1. Introduction

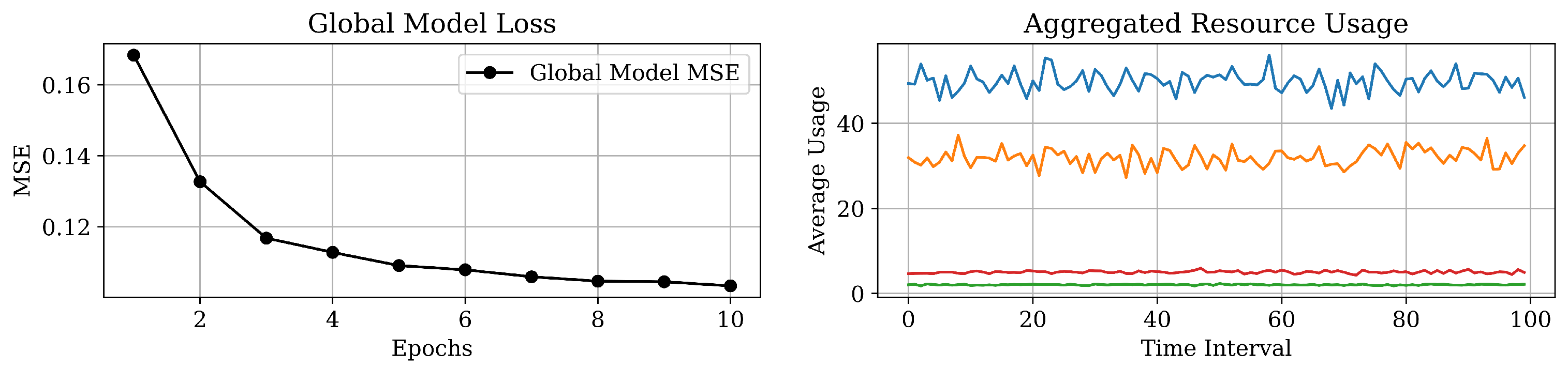

- an FL-based intelligent model is developed to allocate the workload quickly, efficiently, and securely with minimal error;

- the distribution process is completed in just 10 intervals, with the Mean Square Error (MSE) tending toward zero;

- an appendix is provided with the workload shift logs, the full global/local KPIs, and the server status, demonstrating the low error values of the presented KPIs.
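The federated idea behind the first contribution can be illustrated with federated averaging (FedAvg). In FedAvg, each server fits a local model on its own telemetry, and only model weights are shared and averaged. This is a minimal sketch under stated assumptions, not the authors' model. The linear local model, the full-batch gradient descent, and the names `local_update` and `fedavg` are hypothetical. The number of communication rounds is a free parameter and should not be read as the 10 intervals reported above.

```python
from typing import List, Tuple

# One training sample: (utilization features, measured power in W). Hypothetical layout.
Sample = Tuple[List[float], float]

def predict(w: List[float], x: List[float]) -> float:
    """Linear model: w[0] is the bias, w[1:] are per-feature weights."""
    return w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))

def local_update(w: List[float], data: List[Sample], lr: float, epochs: int) -> List[float]:
    """Full-batch gradient descent on the local MSE; raw data never leaves the client."""
    w = list(w)
    n = len(data)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            grad[0] += err
            for j, xj in enumerate(x, start=1):
                grad[j] += err * xj
        # d(MSE)/dw_j = (2/n) * sum(err * feature_j)
        w = [wj - lr * 2.0 * g / n for wj, g in zip(w, grad)]
    return w

def fedavg(clients: List[List[Sample]], n_features: int, rounds: int,
           lr: float = 0.05, epochs: int = 5) -> List[float]:
    """Coordinator loop: broadcast global weights, collect local updates,
    and average them weighted by each client's sample count."""
    w = [0.0] * (n_features + 1)
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        local = [local_update(w, d, lr, epochs) for d in clients]
        w = [sum(len(d) * lw[j] for d, lw in zip(clients, local)) / total
             for j in range(n_features + 1)]
    return w

# Two toy "servers" whose data follow y = 2x + 1; the global model approaches [1.0, 2.0].
clients = [
    [([0.0], 1.0), ([0.5], 2.0), ([1.0], 3.0)],
    [([0.25], 1.5), ([0.75], 2.5)],
]
print(fedavg(clients, n_features=1, rounds=200, lr=0.1))
```

With real telemetry, the features (CPU %, memory GB, storage TB, network Gbps) differ in scale. They would need normalizing before plain gradient descent converges reliably.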

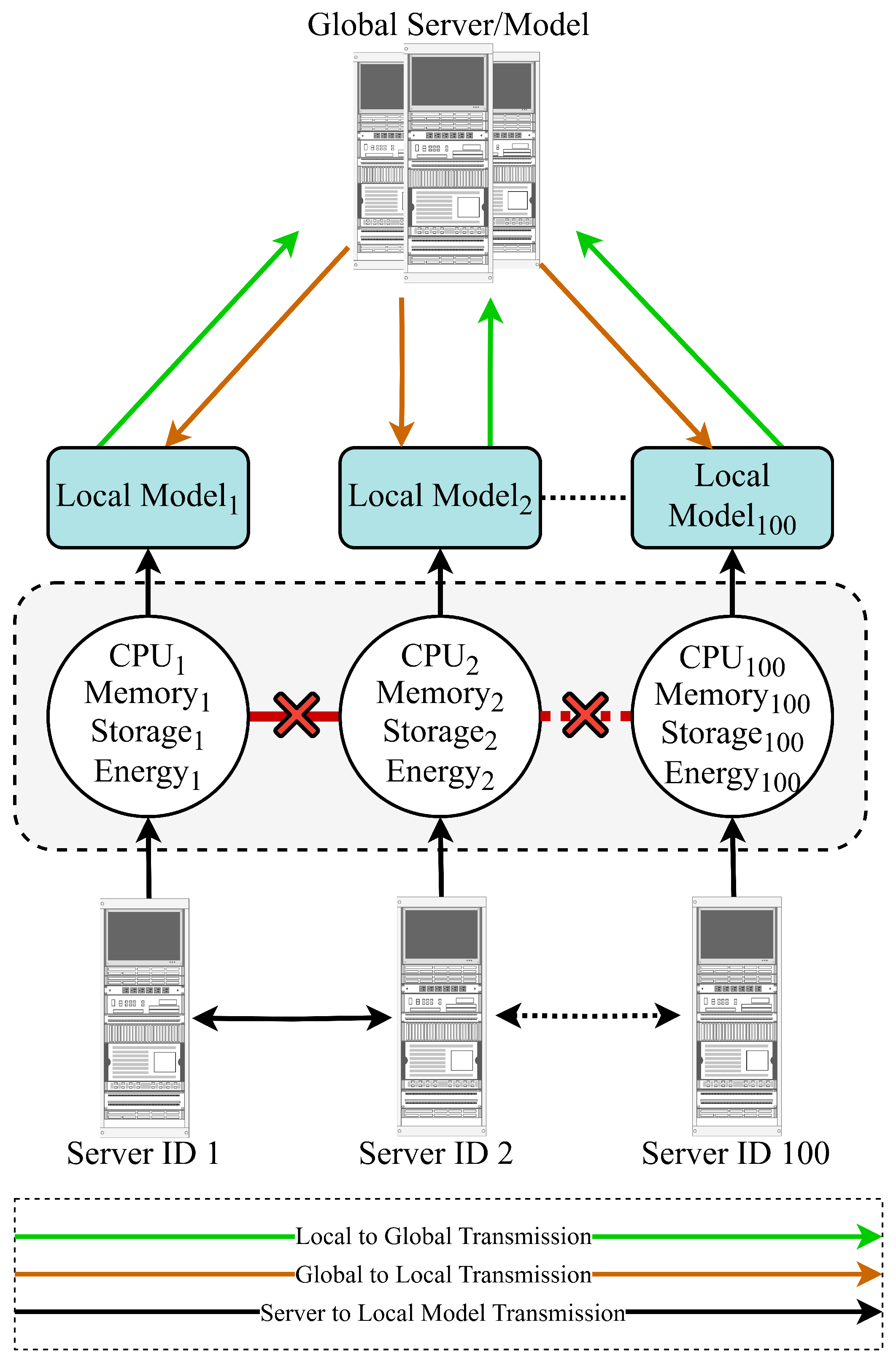

2. Proposed Workload Shifting Strategy

2.1. Preliminary Statement

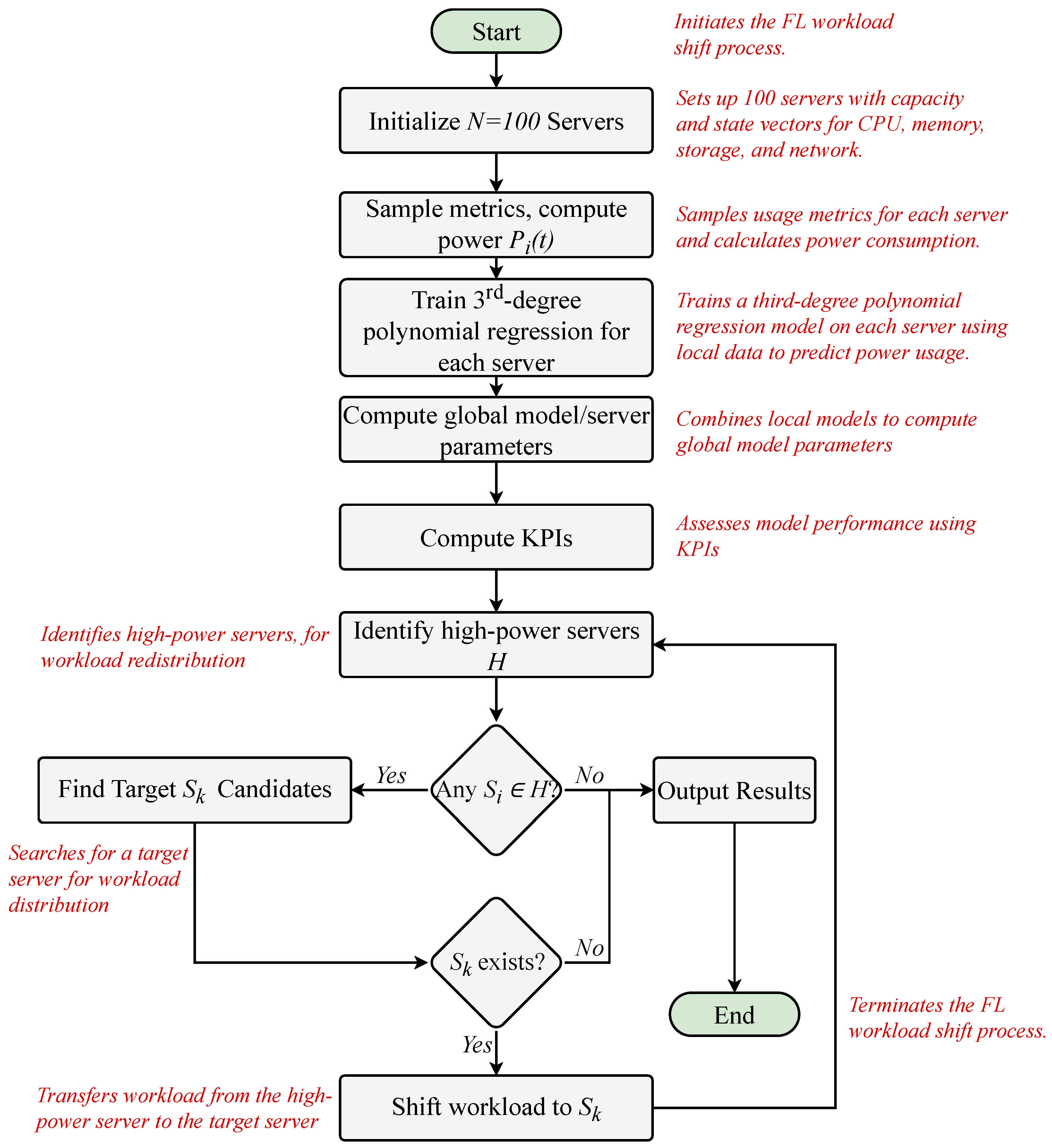

2.2. Intelligent Shifting Strategy

Algorithm 1. Proposed strategy.

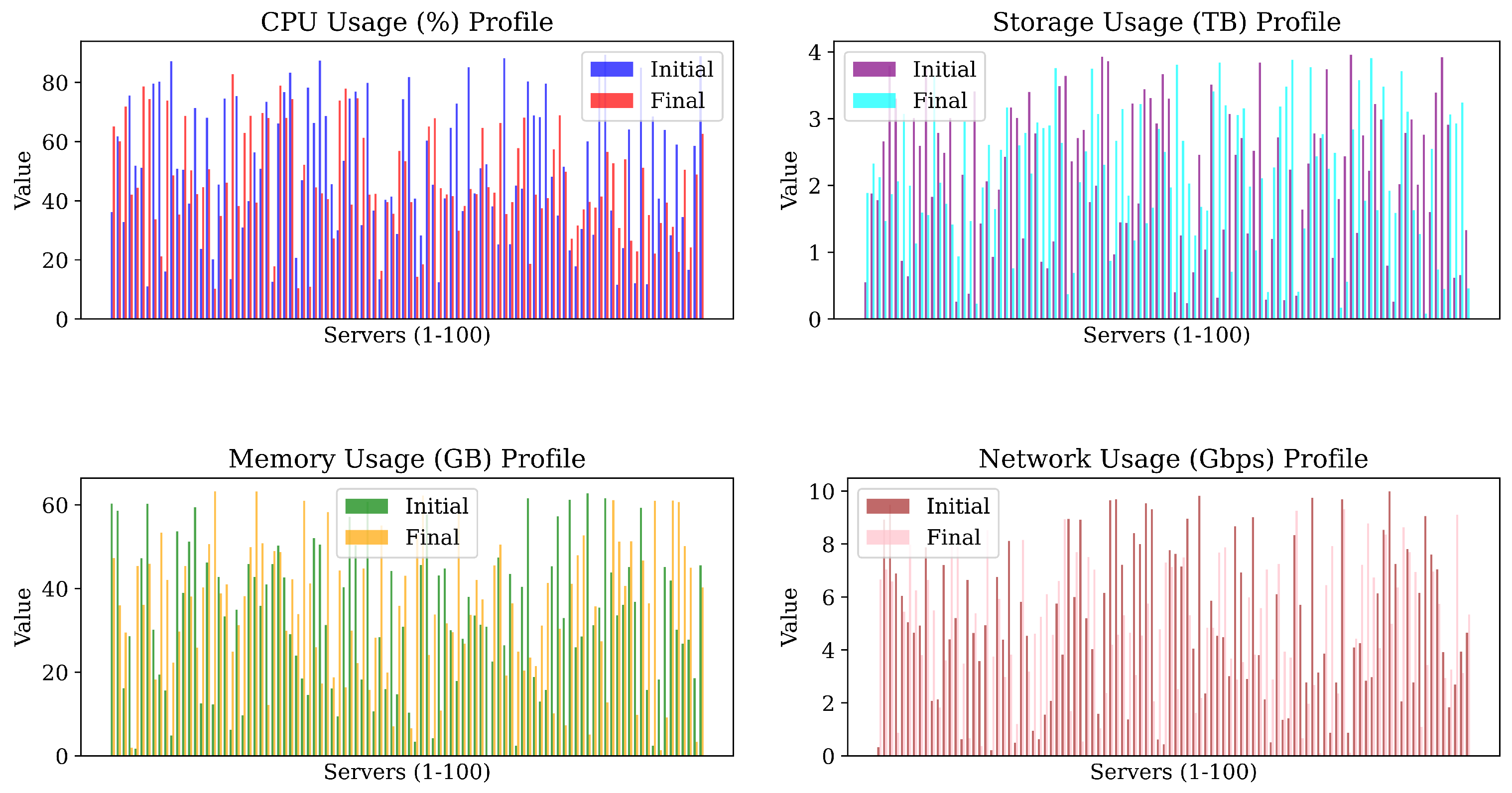
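The steps of Algorithm 1 are not reproduced in the text. What follows is only a hedged sketch of a greedy consolidation loop that emits log lines in the same format as Appendix A.1. Several parts are hypothetical:

- the `Server` fields;
- the `cpu_cap` and `mem_cap` limits;
- the rule that an emptied source is switched off;
- the `predict_power` callback, which stands in for the FL-trained power model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Server:
    name: str
    cpu: float     # CPU usage (%)
    memory: float  # memory usage (GB)

def shift_workloads(
    servers: List[Server],
    predict_power: Callable[[Server], float],  # hypothetical stand-in for the FL model
    cpu_cap: float = 90.0,   # hypothetical CPU headroom limit (%)
    mem_cap: float = 64.0,   # hypothetical memory capacity (GB)
) -> List[str]:
    """Greedy sketch: move each server's whole workload to the target with the
    largest positive predicted saving, if any target has room for it."""
    active: Dict[str, Server] = {s.name: s for s in servers}
    logs: List[str] = []
    for name in [s.name for s in servers]:
        if name not in active:  # already emptied and switched off in this sketch
            continue
        src = active[name]
        best, best_saving = None, 0.0
        for tgt in active.values():
            if tgt.name == src.name:
                continue
            merged = Server(tgt.name, src.cpu + tgt.cpu, src.memory + tgt.memory)
            if merged.cpu > cpu_cap or merged.memory > mem_cap:
                continue
            # saving = (source + target before) - (target after absorbing the load)
            saving = predict_power(src) + predict_power(tgt) - predict_power(merged)
            if saving > best_saving:
                best, best_saving = merged, saving
        if best is None:
            logs.append(f"No suitable target to shift workload from {name}")
        else:
            active[best.name] = best  # target now carries both workloads
            del active[name]          # source is treated as switched off
            logs.append(
                f"Workload shifted from {name} to {best.name} "
                f"with predicted savings of {best_saving:.2f} W"
            )
    return logs

# Toy linear power model with 50 W idle draw: every feasible merge saves 50 W.
demo = [Server("Server-A", 30, 10), Server("Server-B", 40, 10), Server("Server-C", 80, 10)]
for line in shift_workloads(demo, lambda s: 50 + s.cpu):
    print(line)
```

This sketch never reuses a server once it has been emptied. The Appendix A.1 log shows Server-13 receiving workload after shedding its own, so the authors' procedure evidently differs in that respect.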

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| CDC | Cloud Data Center |

| CPU | Central Processing Unit |

| FL | Federated Learning |

| HDD | Hard-Disk Drive |

| IoT | Internet-of-Things |

| IT | Information Technology |

| KPI | Key Performance Indicator |

| MAE | Mean Absolute Error |

| MAPE | Mean Absolute Percentage Error |

| ML | Machine Learning |

| MSE | Mean Square Error |

| NIC | Network Interface Card |

| NSERC | Natural Sciences and Engineering Research Council of Canada |

| RAM | Random-Access Memory |

| RMSE | Root Mean Square Error |

| SSD | Solid-State Drive |

Appendix A

Appendix A.1. Shift Logs

| Shift Log | Shift Log |

|---|---|

| Workload shifted from Server-2 to Server-62 with predicted savings of 13.52 W | Workload shifted from Server-3 to Server-81 with predicted savings of 16.48 W |

| Workload shifted from Server-4 to Server-69 with predicted savings of 23.72 W | No suitable target to shift workload from Server-5 |

| Workload shifted from Server-6 to Server-90 with predicted savings of 17.81 W | Workload shifted from Server-8 to Server-43 with predicted savings of 7.75 W |

| Workload shifted from Server-10 to Server-49 with predicted savings of 9.42 W | Workload shifted from Server-13 to Server-2 with predicted savings of 3.01 W |

| Workload shifted from Server-15 to Server-3 with predicted savings of 11.31 W | Workload shifted from Server-21 to Server-1 with predicted savings of 8.63 W |

| Workload shifted from Server-22 to Server-13 with predicted savings of 5.19 W | Workload shifted from Server-23 to Server-97 with predicted savings of 7.65 W |

| No suitable target to shift workload from Server-24 | No suitable target to shift workload from Server-25 |

| Workload shifted from Server-26 to Server-23 with predicted savings of 2.08 W | Workload shifted from Server-27 to Server-14 with predicted savings of 14.56 W |

| Workload shifted from Server-29 to Server-26 with predicted savings of 2.73 W | Workload shifted from Server-30 to Server-27 with predicted savings of 3.76 W |

| No suitable target to shift workload from Server-33 | No suitable target to shift workload from Server-37 |

| Workload shifted from Server-39 to Server-30 with predicted savings of 0.15 W | Workload shifted from Server-40 to Server-50 with predicted savings of 13.69 W |

| Workload shifted from Server-41 to Server-10 with predicted savings of 6.75 W | Workload shifted from Server-42 to Server-66 with predicted savings of 9.06 W |

| Workload shifted from Server-44 to Server-40 with predicted savings of 7.01 W | Workload shifted from Server-45 to Server-29 with predicted savings of 7.60 W |

| Workload shifted from Server-47 to Server-39 with predicted savings of 5.43 W | Workload shifted from Server-48 to Server-42 with predicted savings of 2.00 W |

| No suitable target to shift workload from Server-54 | No suitable target to shift workload from Server-55 |

| Workload shifted from Server-56 to Server-21 with predicted savings of 8.54 W | Workload shifted from Server-57 to Server-6 with predicted savings of 6.06 W |

| No suitable target to shift workload from Server-58 | Workload shifted from Server-64 to Server-7 with predicted savings of 14.04 W |

| No suitable target to shift workload from Server-65 | Workload shifted from Server-67 to Server-63 with predicted savings of 4.79 W |

| No suitable target to shift workload from Server-68 | No suitable target to shift workload from Server-70 |

| Workload shifted from Server-72 to Server-31 with predicted savings of 7.33 W | No suitable target to shift workload from Server-76 |

| No suitable target to shift workload from Server-77 | No suitable target to shift workload from Server-79 |

| No suitable target to shift workload from Server-83 | No suitable target to shift workload from Server-84 |

| No suitable target to shift workload from Server-85 | No suitable target to shift workload from Server-87 |

| Workload shifted from Server-93 to Server-17 with predicted savings of 12.65 W | No suitable target to shift workload from Server-100 |
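The shift log can be summarized mechanically. The small parser below is a sketch: its regular expressions assume the exact wording used in the table, and the function name is hypothetical. Tallied over all 48 entries above, the log contains 29 shifts, 19 servers with no suitable target, and 252.72 W of total predicted savings.

```python
import re
from typing import Tuple

SHIFT_RE = re.compile(
    r"Workload shifted from (Server-\d+) to (Server-\d+) "
    r"with predicted savings of (\d+(?:\.\d+)?) W"
)
NO_TARGET_RE = re.compile(r"No suitable target to shift workload from (Server-\d+)")

def summarize_shift_log(text: str) -> Tuple[int, int, float]:
    """Return (shifts, servers with no target, total predicted savings in W)."""
    savings = [float(m.group(3)) for m in SHIFT_RE.finditer(text)]
    unplaced = NO_TARGET_RE.findall(text)
    return len(savings), len(unplaced), round(sum(savings), 2)

# The first two rows of the table; both cells of each row are scanned.
sample = """
| Workload shifted from Server-2 to Server-62 with predicted savings of 13.52 W | Workload shifted from Server-3 to Server-81 with predicted savings of 16.48 W |
| Workload shifted from Server-4 to Server-69 with predicted savings of 23.72 W | No suitable target to shift workload from Server-5 |
"""
print(summarize_shift_log(sample))  # (3, 1, 53.72)
```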

Appendix A.2. Server Status

| Server ID | CPU Usage (%) | Memory Usage (GB) | Storage Usage (TB) | Network Usage (Gbps) | Power Consumption (W) |

|---|---|---|---|---|---|

| Server-1 | 36.16 | 60.33 | 0.55 | 0.32 | 124.35 |

| Server-2 | 61.81 | 58.54 | 1.88 | 8.93 | 217.77 |

| Server-3 | 32.80 | 16.18 | 1.78 | 9.48 | 140.56 |

| Server-4 | 75.63 | 28.59 | 2.66 | 6.89 | 226.76 |

| Server-5 | 51.88 | 1.72 | 3.79 | 6.05 | 151.91 |

| Server-6 | 51.16 | 47.22 | 3.30 | 5.05 | 168.22 |

| Server-7 | 11.04 | 60.22 | 0.87 | 4.66 | 106.88 |

| Server-8 | 79.63 | 30.15 | 0.64 | 4.92 | 227.60 |

| Server-9 | 80.35 | 19.41 | 3.01 | 7.88 | 240.05 |

| Server-10 | 16.07 | 15.65 | 2.59 | 2.08 | 78.41 |

| Server-11 | 87.14 | 4.84 | 3.75 | 2.13 | 223.20 |

| Server-12 | 50.85 | 53.68 | 1.83 | 7.21 | 181.94 |

| Server-13 | 50.54 | 38.91 | 2.79 | 4.40 | 159.08 |

| Server-14 | 39.06 | 51.21 | 2.49 | 5.21 | 146.63 |

| Server-15 | 71.42 | 59.42 | 3.01 | 0.63 | 202.16 |

| Server-16 | 23.71 | 12.52 | 0.26 | 6.64 | 108.89 |

| Server-17 | 68.10 | 46.27 | 2.16 | 4.64 | 204.19 |

| Server-18 | 20.15 | 12.32 | 0.38 | 3.58 | 88.20 |

| Server-19 | 45.48 | 42.76 | 3.41 | 4.93 | 153.87 |

| Server-20 | 74.56 | 33.34 | 1.43 | 0.21 | 196.07 |

| Server-21 | 13.53 | 6.22 | 2.06 | 6.75 | 93.23 |

| Server-22 | 75.34 | 34.94 | 0.93 | 4.39 | 215.84 |

| Server-23 | 30.94 | 9.70 | 1.94 | 8.12 | 127.13 |

| Server-24 | 39.89 | 45.86 | 2.43 | 0.49 | 125.02 |

| Server-25 | 56.33 | 42.74 | 3.17 | 5.82 | 181.27 |

| Server-26 | 50.80 | 35.85 | 3.01 | 4.53 | 159.07 |

| Server-27 | 73.50 | 40.96 | 1.21 | 0.95 | 199.23 |

| Server-28 | 12.57 | 45.81 | 3.40 | 0.63 | 84.29 |

| Server-29 | 66.16 | 50.29 | 2.78 | 1.56 | 187.78 |

| Server-30 | 76.71 | 42.64 | 0.86 | 2.08 | 213.18 |

| Server-31 | 83.26 | 29.12 | 0.76 | 5.75 | 240.77 |

| Server-32 | 20.71 | 23.98 | 1.16 | 3.82 | 96.38 |

| Server-33 | 46.95 | 18.50 | 3.49 | 8.95 | 164.99 |

| Server-34 | 78.35 | 14.57 | 3.64 | 6.00 | 222.24 |

| Server-35 | 66.33 | 52.06 | 2.36 | 8.92 | 225.91 |

| Server-36 | 87.45 | 50.45 | 2.71 | 5.20 | 260.92 |

| Server-37 | 68.64 | 31.29 | 2.83 | 4.03 | 195.60 |

| Server-38 | 45.60 | 16.11 | 1.75 | 1.59 | 125.24 |

| Server-39 | 30.02 | 9.39 | 2.00 | 6.15 | 114.23 |

| Server-40 | 53.53 | 40.34 | 3.93 | 9.66 | 194.38 |

| Server-41 | 74.56 | 57.10 | 3.86 | 9.69 | 253.12 |

| Server-42 | 76.85 | 50.34 | 0.97 | 7.22 | 241.72 |

| Server-43 | 31.77 | 18.24 | 1.45 | 1.37 | 98.89 |

| Server-44 | 79.91 | 60.75 | 1.44 | 8.42 | 262.19 |

| Server-45 | 36.71 | 10.66 | 3.23 | 8.00 | 136.61 |

| Server-46 | 13.44 | 28.37 | 1.73 | 9.54 | 119.02 |

| Server-47 | 40.33 | 15.94 | 3.44 | 9.32 | 153.13 |

| Server-48 | 41.44 | 44.16 | 3.31 | 0.62 | 127.52 |

| Server-49 | 28.78 | 14.75 | 2.93 | 0.43 | 89.40 |

| Server-50 | 74.31 | 30.82 | 3.67 | 7.77 | 229.01 |

| Server-51 | 81.90 | 10.33 | 3.30 | 7.63 | 238.39 |

| Server-52 | 40.79 | 3.36 | 0.40 | 7.16 | 135.80 |

| Server-53 | 28.19 | 45.58 | 1.25 | 8.96 | 144.10 |

| Server-54 | 60.33 | 57.66 | 0.24 | 4.05 | 188.16 |

| Server-55 | 45.39 | 4.17 | 0.70 | 9.83 | 159.80 |

| Server-56 | 12.48 | 43.15 | 2.46 | 2.36 | 89.24 |

| Server-57 | 40.82 | 44.78 | 1.04 | 5.86 | 149.38 |

| Server-58 | 64.70 | 30.04 | 3.51 | 4.53 | 188.22 |

| Server-59 | 72.81 | 17.86 | 0.32 | 4.49 | 201.70 |

| Server-60 | 36.48 | 27.95 | 1.34 | 3.01 | 119.07 |

| Server-61 | 85.12 | 38.03 | 3.07 | 8.67 | 267.00 |

| Server-62 | 42.48 | 33.53 | 2.46 | 6.93 | 153.17 |

| Server-63 | 50.98 | 31.37 | 2.71 | 2.90 | 149.61 |

| Server-64 | 52.36 | 30.86 | 1.28 | 9.01 | 183.37 |

| Server-65 | 38.08 | 22.57 | 2.52 | 3.80 | 123.31 |

| Server-66 | 25.20 | 47.43 | 3.84 | 2.13 | 106.74 |

| Server-67 | 88.25 | 26.42 | 0.29 | 0.51 | 230.24 |

| Server-68 | 25.34 | 43.53 | 1.20 | 6.11 | 123.96 |

| Server-69 | 45.19 | 2.40 | 2.72 | 1.36 | 116.24 |

| Server-70 | 44.11 | 40.43 | 0.28 | 1.42 | 133.25 |

| Server-71 | 80.40 | 61.58 | 2.24 | 8.34 | 263.49 |

| Server-72 | 68.94 | 18.84 | 0.35 | 5.71 | 198.49 |

| Server-73 | 68.26 | 12.99 | 1.64 | 2.78 | 179.49 |

| Server-74 | 79.61 | 15.73 | 2.33 | 9.75 | 246.59 |

| Server-75 | 48.16 | 45.33 | 2.78 | 3.15 | 151.66 |

| Server-76 | 35.03 | 57.25 | 2.71 | 3.86 | 135.83 |

| Server-77 | 51.59 | 32.97 | 3.74 | 0.88 | 143.55 |

| Server-78 | 23.30 | 61.23 | 0.92 | 2.77 | 113.50 |

| Server-79 | 17.78 | 25.96 | 1.80 | 9.69 | 123.79 |

| Server-80 | 30.48 | 28.54 | 2.44 | 0.88 | 100.65 |

| Server-81 | 60.14 | 62.78 | 3.96 | 4.10 | 191.13 |

| Server-82 | 28.51 | 31.27 | 1.29 | 4.26 | 112.87 |

| Server-83 | 82.46 | 35.46 | 2.75 | 2.84 | 228.33 |

| Server-84 | 89.44 | 61.59 | 2.22 | 2.97 | 261.25 |

| Server-85 | 36.76 | 43.81 | 3.22 | 6.14 | 143.25 |

| Server-86 | 11.62 | 33.55 | 2.99 | 8.54 | 113.91 |

| Server-87 | 23.99 | 36.07 | 0.80 | 9.99 | 139.01 |

| Server-88 | 64.10 | 45.10 | 0.26 | 7.25 | 207.58 |

| Server-89 | 12.12 | 36.83 | 2.02 | 2.06 | 84.26 |

| Server-90 | 85.06 | 59.24 | 2.79 | 7.81 | 271.58 |

| Server-91 | 11.85 | 15.75 | 2.99 | 2.78 | 77.05 |

| Server-92 | 68.49 | 2.38 | 2.01 | 6.15 | 191.16 |

| Server-93 | 40.73 | 18.24 | 2.76 | 9.06 | 153.95 |

| Server-94 | 63.91 | 45.15 | 1.60 | 7.61 | 209.42 |

| Server-95 | 28.36 | 41.89 | 3.39 | 7.05 | 133.05 |

| Server-96 | 59.04 | 30.19 | 3.92 | 3.92 | 171.55 |

| Server-97 | 34.51 | 26.79 | 2.91 | 1.84 | 110.47 |

| Server-98 | 16.60 | 27.79 | 0.62 | 2.69 | 87.23 |

| Server-99 | 58.53 | 18.53 | 0.66 | 3.94 | 164.27 |

| Server-100 | 88.92 | 45.53 | 1.33 | 4.66 | 259.43 |

| Server ID | CPU Usage (%) | Memory Usage (GB) | Storage Usage (TB) | Network Usage (Gbps) | Power Consumption (W) |

|---|---|---|---|---|---|

| Server-1 | 26.58 | 33.13 | 0.96 | 5.59 | 117.96 |

| Server-2 | 59.43 | 43.76 | 3.87 | 4.24 | 180.19 |

| Server-3 | 59.06 | 7.16 | 1.42 | 6.20 | 171.09 |

| Server-4 | 84.05 | 3.85 | 2.94 | 1.74 | 212.23 |

| Server-5 | 44.37 | 45.36 | 1.87 | 5.45 | 154.94 |

| Server-6 | 72.97 | 8.82 | 0.87 | 6.28 | 205.95 |

| Server-7 | 29.88 | 45.82 | 1.09 | 2.43 | 114.34 |

| Server-8 | 67.53 | 36.51 | 3.99 | 7.60 | 214.25 |

| Server-9 | 21.19 | 53.37 | 1.13 | 6.64 | 124.79 |

| Server-10 | 70.13 | 24.28 | 1.45 | 1.83 | 186.12 |

| Server-11 | 48.54 | 22.26 | 1.56 | 1.82 | 134.86 |

| Server-12 | 35.39 | 29.71 | 3.63 | 3.61 | 121.39 |

| Server-13 | 60.89 | 28.17 | 0.79 | 9.84 | 205.77 |

| Server-14 | 15.90 | 31.40 | 0.16 | 7.55 | 113.18 |

| Server-15 | 84.58 | 51.85 | 2.83 | 6.97 | 262.86 |

| Server-16 | 44.66 | 40.29 | 0.94 | 0.66 | 131.67 |

| Server-17 | 18.14 | 49.26 | 2.88 | 1.95 | 96.72 |

| Server-18 | 10.29 | 63.21 | 1.47 | 0.37 | 89.60 |

| Server-19 | 34.90 | 38.82 | 0.23 | 8.52 | 149.54 |

| Server-20 | 46.19 | 41.04 | 1.97 | 3.75 | 148.82 |

| Server-21 | 77.14 | 28.24 | 1.86 | 2.16 | 207.56 |

| Server-22 | 76.56 | 62.56 | 3.29 | 5.96 | 240.96 |

| Server-23 | 59.81 | 15.66 | 3.41 | 4.41 | 168.54 |

| Server-24 | 68.74 | 49.88 | 3.17 | 1.20 | 193.25 |

| Server-25 | 39.37 | 63.20 | 0.76 | 8.16 | 168.78 |

| Server-26 | 66.15 | 60.65 | 1.66 | 3.25 | 200.31 |

| Server-27 | 68.75 | 13.34 | 3.13 | 0.70 | 172.09 |

| Server-28 | 17.80 | 48.95 | 2.18 | 5.25 | 112.11 |

| Server-29 | 73.28 | 40.92 | 3.54 | 3.12 | 207.86 |

| Server-30 | 67.33 | 10.93 | 2.45 | 8.53 | 205.36 |

| Server-31 | 32.24 | 20.75 | 2.49 | 5.94 | 122.97 |

| Server-32 | 10.43 | 33.88 | 3.76 | 8.94 | 115.14 |

| Server-33 | 52.21 | 60.98 | 2.64 | 1.69 | 162.25 |

| Server-34 | 10.92 | 41.20 | 0.37 | 7.69 | 112.98 |

| Server-35 | 44.50 | 25.97 | 0.69 | 0.54 | 123.62 |

| Server-36 | 42.49 | 17.29 | 2.05 | 7.51 | 148.23 |

| Server-37 | 40.60 | 58.29 | 2.51 | 7.03 | 162.51 |

| Server-38 | 27.32 | 18.77 | 3.75 | 1.04 | 91.28 |

| Server-39 | 68.83 | 48.88 | 3.27 | 0.61 | 190.88 |

| Server-40 | 71.51 | 1.14 | 0.92 | 2.28 | 179.74 |

| Server-41 | 77.51 | 59.91 | 1.74 | 9.16 | 259.87 |

| Server-42 | 78.17 | 30.26 | 2.01 | 1.07 | 206.40 |

| Server-43 | 27.55 | 26.52 | 1.15 | 0.86 | 95.21 |

| Server-44 | 84.28 | 31.62 | 3.70 | 6.11 | 247.00 |

| Server-45 | 84.69 | 56.47 | 2.35 | 9.10 | 276.21 |

| Server-46 | 16.24 | 55.09 | 3.22 | 5.75 | 115.34 |

| Server-47 | 79.00 | 39.80 | 2.87 | 4.13 | 227.36 |

| Server-48 | 71.15 | 14.10 | 3.33 | 9.56 | 222.31 |

| Server-49 | 21.76 | 23.74 | 2.12 | 6.39 | 110.37 |

| Server-50 | 17.64 | 42.47 | 2.04 | 5.99 | 112.06 |

| Server-51 | 39.52 | 6.65 | 1.97 | 2.52 | 112.66 |

| Server-52 | 14.27 | 52.87 | 3.81 | 7.50 | 121.26 |

| Server-53 | 18.49 | 62.22 | 2.67 | 5.32 | 119.70 |

| Server-54 | 65.11 | 24.11 | 2.03 | 1.62 | 172.49 |

| Server-55 | 67.89 | 33.79 | 1.25 | 2.19 | 186.48 |

| Server-56 | 88.48 | 21.60 | 3.36 | 9.69 | 273.84 |

| Server-57 | 84.37 | 63.33 | 3.25 | 9.68 | 282.78 |

| Server-58 | 41.51 | 29.59 | 3.41 | 7.68 | 153.60 |

| Server-59 | 29.89 | 59.17 | 3.84 | 7.88 | 148.16 |

| Server-60 | 38.29 | 26.84 | 3.20 | 3.68 | 125.25 |

| Server-61 | 43.98 | 33.70 | 0.71 | 2.88 | 135.74 |

| Server-62 | 12.57 | 20.13 | 1.12 | 1.42 | 73.26 |

| Server-63 | 29.19 | 18.16 | 2.75 | 3.11 | 102.43 |

| Server-64 | 89.07 | 0.14 | 3.97 | 7.65 | 252.98 |

| Server-65 | 42.73 | 45.52 | 1.03 | 5.58 | 152.56 |

| Server-66 | 27.18 | 35.37 | 1.10 | 6.50 | 124.48 |

| Server-67 | 71.00 | 38.42 | 0.81 | 5.77 | 213.50 |

| Server-68 | 39.57 | 36.43 | 2.27 | 7.25 | 150.25 |

| Server-69 | 15.78 | 23.02 | 1.71 | 3.06 | 85.50 |

| Server-70 | 68.12 | 20.39 | 3.48 | 3.72 | 187.67 |

| Server-71 | 18.64 | 23.54 | 3.88 | 9.26 | 121.65 |

| Server-72 | 84.19 | 42.95 | 0.82 | 1.33 | 230.10 |

| Server-73 | 37.45 | 31.13 | 1.36 | 1.97 | 118.20 |

| Server-74 | 40.87 | 41.29 | 3.77 | 2.68 | 132.76 |

| Server-75 | 57.37 | 10.18 | 2.44 | 0.10 | 142.37 |

| Server-76 | 68.96 | 30.38 | 2.77 | 6.45 | 207.53 |

| Server-77 | 49.86 | 7.29 | 2.25 | 7.92 | 159.99 |

| Server-78 | 27.15 | 41.15 | 2.49 | 2.36 | 107.75 |

| Server-79 | 31.67 | 47.93 | 0.17 | 9.32 | 153.46 |

| Server-80 | 37.07 | 52.71 | 0.56 | 0.12 | 121.58 |

| Server-81 | 10.14 | 1.51 | 2.13 | 1.32 | 61.33 |

| Server-82 | 37.62 | 35.74 | 3.58 | 7.22 | 146.94 |

| Server-83 | 41.45 | 27.39 | 1.77 | 8.78 | 157.53 |

| Server-84 | 56.47 | 12.76 | 3.91 | 6.74 | 170.64 |

| Server-85 | 52.74 | 61.14 | 1.63 | 4.07 | 172.81 |

| Server-86 | 30.80 | 51.26 | 3.48 | 8.36 | 148.76 |

| Server-87 | 53.98 | 40.63 | 1.92 | 4.99 | 170.22 |

| Server-88 | 26.50 | 51.29 | 1.59 | 6.36 | 130.89 |

| Server-89 | 22.93 | 9.78 | 3.71 | 8.63 | 117.12 |

| Server-90 | 14.74 | 42.30 | 2.67 | 4.55 | 101.11 |

| Server-91 | 35.17 | 36.47 | 1.63 | 6.95 | 140.87 |

| Server-92 | 22.12 | 60.96 | 1.27 | 1.09 | 104.70 |

| Server-93 | 65.03 | 2.73 | 0.16 | 6.88 | 186.57 |

| Server-94 | 39.41 | 9.18 | 2.55 | 6.96 | 135.22 |

| Server-95 | 31.24 | 61.05 | 0.74 | 5.74 | 139.45 |

| Server-96 | 22.76 | 60.69 | 0.45 | 2.94 | 113.05 |

| Server-97 | 20.62 | 42.36 | 1.36 | 1.05 | 93.16 |

| Server-98 | 24.23 | 44.98 | 2.93 | 9.11 | 139.30 |

| Server-99 | 48.88 | 3.33 | 3.24 | 3.13 | 132.04 |

| Server-100 | 62.68 | 40.31 | 0.46 | 5.34 | 191.88 |

Appendix A.3. Key Performance Indicator (KPI) Results

| Server ID | MSE | RMSE | MAE | MAPE (%) | Max Error |

|---|---|---|---|---|---|

| Server-1 | 0.288019886 | 0.536674842 | 0.407414447 | 0.339792198 | 1.622196721 |

| Server-2 | 0.143679079 | 0.379050232 | 0.280506548 | 0.216175282 | 0.898467968 |

| Server-3 | 0.21665426 | 0.465461341 | 0.36652428 | 0.263668132 | 0.971990076 |

| Server-4 | 0.373173857 | 0.610879576 | 0.487188992 | 0.275412302 | 1.631013131 |

| Server-5 | 0.208731681 | 0.456871624 | 0.357852293 | 0.228320806 | 1.185042199 |

| Server-6 | 0.147108156 | 0.383546811 | 0.312986989 | 0.224362318 | 0.747794889 |

| Server-7 | 0.189299356 | 0.435085458 | 0.336035581 | 0.204715873 | 1.034696044 |

| Server-8 | 0.408605123 | 0.63922228 | 0.457237627 | 0.270730056 | 1.859744114 |

| Server-9 | 0.211587086 | 0.459985963 | 0.374924532 | 0.224549342 | 0.971419173 |

| Server-10 | 0.1825131 | 0.42721552 | 0.339593358 | 0.23935728 | 0.85592076 |

| Server-11 | 0.253635051 | 0.503621933 | 0.379972311 | 0.248127547 | 1.150486454 |

| Server-12 | 0.225669973 | 0.475047338 | 0.406611321 | 0.3102983 | 0.960371416 |

| Server-13 | 0.094360431 | 0.30718143 | 0.252409474 | 0.200814966 | 0.640344365 |

| Server-14 | 0.302661204 | 0.55014653 | 0.42620723 | 0.253016251 | 1.221272865 |

| Server-15 | 0.285990736 | 0.534781017 | 0.409273366 | 0.26982675 | 1.205406524 |

| Server-16 | 0.250397089 | 0.500396931 | 0.400337679 | 0.317240687 | 1.07236305 |

| Server-17 | 0.278019845 | 0.527275872 | 0.379042567 | 0.279337347 | 1.636041854 |

| Server-18 | 1.020521979 | 1.010208879 | 0.49968058 | 0.268664801 | 4.190005521 |

| Server-19 | 0.149685399 | 0.386891974 | 0.279974347 | 0.180448322 | 1.240260652 |

| Server-20 | 0.136795186 | 0.369858332 | 0.314281343 | 0.201287592 | 0.742281187 |

| Server-21 | 0.112351125 | 0.335188193 | 0.276323084 | 0.18674372 | 0.824673614 |

| Server-22 | 0.083980936 | 0.289794645 | 0.232239024 | 0.133418298 | 0.816562594 |

| Server-23 | 0.19826916 | 0.445274252 | 0.357429672 | 0.264406976 | 1.094375506 |

| Server-24 | 0.153307012 | 0.391544393 | 0.325146951 | 0.194859783 | 0.843868315 |

| Server-25 | 0.158074416 | 0.397585735 | 0.332671808 | 0.22732026 | 0.740955843 |

| Server-26 | 0.34961145 | 0.591279503 | 0.469602161 | 0.393595619 | 1.343870624 |

| Server-27 | 0.383106782 | 0.618956204 | 0.515446155 | 0.302164396 | 1.481931778 |

| Server-28 | 0.239750188 | 0.489642919 | 0.401846654 | 0.27536771 | 0.943554317 |

| Server-29 | 0.149861799 | 0.387119876 | 0.315367458 | 0.225995105 | 0.831474733 |

| Server-30 | 0.243664358 | 0.493623701 | 0.442694099 | 0.324449773 | 0.942202404 |

| Server-31 | 0.213965539 | 0.462564092 | 0.383968332 | 0.265624438 | 1.097994193 |

| Server-32 | 0.283132078 | 0.532101567 | 0.414620036 | 0.262500203 | 1.306626006 |

| Server-33 | 0.24075324 | 0.490666118 | 0.304929508 | 0.190057331 | 1.714911534 |

| Server-34 | 0.24129362 | 0.49121647 | 0.414519732 | 0.305410191 | 1.091901808 |

| Server-35 | 0.310205829 | 0.556961245 | 0.365207649 | 0.278979797 | 1.734774363 |

| Server-36 | 0.130170172 | 0.360791036 | 0.27359611 | 0.17411401 | 0.97542151 |

| Server-37 | 0.175343993 | 0.418740962 | 0.332946971 | 0.194698666 | 0.961919336 |

| Server-38 | 0.151165767 | 0.38880042 | 0.287546795 | 0.181059817 | 0.92740031 |

| Server-39 | 0.106570255 | 0.326450999 | 0.256648645 | 0.147139756 | 0.891935238 |

| Server-40 | 0.282211062 | 0.531235411 | 0.441983982 | 0.297124348 | 1.06575691 |

| Server-41 | 0.320506716 | 0.566133126 | 0.463157872 | 0.269869683 | 1.074913764 |

| Server-42 | 0.172203811 | 0.41497447 | 0.313372563 | 0.226483997 | 1.047679801 |

| Server-43 | 0.248587879 | 0.498585879 | 0.383483712 | 0.257047075 | 1.194580549 |

| Server-44 | 0.237500343 | 0.487340069 | 0.381357534 | 0.307854165 | 1.390176242 |

| Server-45 | 0.2873351 | 0.536036472 | 0.376344658 | 0.218862792 | 1.542055562 |

| Server-46 | 0.164098636 | 0.405090898 | 0.31128204 | 0.217664912 | 0.866150269 |

| Server-47 | 0.223043929 | 0.472275268 | 0.389329035 | 0.245089365 | 0.985277919 |

| Server-48 | 0.124291922 | 0.352550595 | 0.279970604 | 0.174045753 | 0.921008532 |

| Server-49 | 0.074173869 | 0.272348801 | 0.223834175 | 0.153860858 | 0.506896217 |

| Server-50 | 0.161806619 | 0.402251935 | 0.323480686 | 0.168374213 | 0.818635545 |

| Server-51 | 0.28695786 | 0.535684478 | 0.412762758 | 0.305712547 | 1.136709953 |

| Server-52 | 0.169854974 | 0.412134656 | 0.331153987 | 0.214316524 | 0.813005245 |

| Server-53 | 0.130965492 | 0.361891547 | 0.274170546 | 0.202188108 | 0.873418091 |

| Server-54 | 0.146228503 | 0.382398357 | 0.327244519 | 0.239873378 | 0.802019592 |

| Server-55 | 0.353041049 | 0.594172575 | 0.468246656 | 0.342257718 | 1.595488547 |

| Server-56 | 0.157685905 | 0.397096846 | 0.355754448 | 0.277316131 | 0.823957055 |

| Server-57 | 0.270427949 | 0.520026873 | 0.415985664 | 0.282063982 | 1.035317278 |

| Server-58 | 0.110685132 | 0.332693751 | 0.285319633 | 0.182375083 | 0.655095498 |

| Server-59 | 0.368798463 | 0.607287792 | 0.49740175 | 0.301383688 | 1.62412723 |

| Server-60 | 0.152845312 | 0.390954361 | 0.309457174 | 0.203283908 | 0.787128093 |

| Server-61 | 0.235201582 | 0.484975858 | 0.380321796 | 0.242149849 | 1.040096973 |

| Server-62 | 0.100551039 | 0.317097838 | 0.229201797 | 0.134879404 | 0.955221638 |

| Server-63 | 0.133849852 | 0.36585496 | 0.299541541 | 0.236562403 | 0.709002268 |

| Server-64 | 0.068723717 | 0.262152088 | 0.213213477 | 0.137388957 | 0.558875403 |

| Server-65 | 0.567116999 | 0.753071709 | 0.58463521 | 0.395867914 | 1.956126235 |

| Server-66 | 0.104314934 | 0.322978225 | 0.275117314 | 0.166961436 | 0.604527755 |

| Server-67 | 0.290494596 | 0.538975506 | 0.41687961 | 0.277596746 | 1.431545898 |

| Server-68 | 0.335048633 | 0.578833856 | 0.418753193 | 0.31476363 | 1.453659872 |

| Server-69 | 0.148529611 | 0.385395396 | 0.297588715 | 0.215797524 | 0.791886707 |

| Server-70 | 0.216042043 | 0.464803231 | 0.349667038 | 0.264718525 | 1.427185467 |

| Server-71 | 0.152160305 | 0.390077306 | 0.295899058 | 0.189600637 | 0.947513679 |

| Server-72 | 0.154688069 | 0.393304041 | 0.331560081 | 0.207139506 | 0.863362419 |

| Server-73 | 0.231904618 | 0.48156476 | 0.409981717 | 0.259838197 | 1.131348952 |

| Server-74 | 0.136793213 | 0.369855665 | 0.292197578 | 0.195001818 | 1.104089215 |

| Server-75 | 0.180965599 | 0.425400516 | 0.366064791 | 0.276172057 | 0.860999199 |

| Server-76 | 0.40614478 | 0.637294893 | 0.514251469 | 0.341010814 | 1.505812213 |

| Server-77 | 0.175170333 | 0.418533551 | 0.325789148 | 0.224255349 | 0.893631677 |

| Server-78 | 0.125250286 | 0.353907171 | 0.295959505 | 0.220443856 | 0.648130923 |

| Server-79 | 0.308050347 | 0.555022835 | 0.434492682 | 0.285079579 | 1.219725973 |

| Server-80 | 0.207692354 | 0.455732766 | 0.381242711 | 0.239516091 | 0.995601431 |

| Server-81 | 0.092338752 | 0.303872921 | 0.226098291 | 0.151000059 | 0.718063343 |

| Server-82 | 0.225619895 | 0.474994626 | 0.402616341 | 0.26016455 | 0.81739475 |

| Server-83 | 0.170847918 | 0.413337536 | 0.33433923 | 0.240323692 | 0.828825791 |

| Server-84 | 0.214898062 | 0.46357099 | 0.354496629 | 0.205628531 | 1.114321498 |

| Server-85 | 0.28557323 | 0.534390522 | 0.428073637 | 0.331329721 | 1.253368573 |

| Server-86 | 0.140731429 | 0.375141878 | 0.317541873 | 0.199777736 | 0.981867625 |

| Server-87 | 0.168453961 | 0.410431433 | 0.340101725 | 0.230023298 | 0.856947381 |

| Server-88 | 0.077854281 | 0.279023799 | 0.23123805 | 0.142973189 | 0.545854272 |

| Server-89 | 0.355486978 | 0.596227287 | 0.458696506 | 0.279569843 | 1.383205455 |

| Server-90 | 0.149650248 | 0.386846543 | 0.336745785 | 0.228402421 | 0.620414751 |

| Server-91 | 0.349009518 | 0.590770275 | 0.469441888 | 0.282826999 | 1.225862792 |

| Server-92 | 0.25827372 | 0.508206375 | 0.39752475 | 0.251108176 | 1.498208969 |

| Server-93 | 0.24310175 | 0.493053496 | 0.38824014 | 0.271074649 | 1.249693837 |

| Server-94 | 0.147146475 | 0.383596761 | 0.294795232 | 0.173635179 | 0.836771993 |

| Server-95 | 0.174318363 | 0.417514507 | 0.345945238 | 0.184409476 | 0.768998776 |

| Server-96 | 0.542794664 | 0.736745997 | 0.492112586 | 0.333549744 | 2.361707797 |

| Server-97 | 0.278893734 | 0.528103905 | 0.407813203 | 0.294310927 | 1.411883016 |

| Server-98 | 0.239546102 | 0.489434472 | 0.360121415 | 0.216057785 | 1.492884951 |

| Server-99 | 0.195686325 | 0.44236447 | 0.36313613 | 0.261168707 | 0.962433125 |

| Server-100 | 0.15706884 | 0.396319113 | 0.29315825 | 0.230787365 | 0.953071812 |
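Two identities give a quick sanity check on the per-server KPI table. RMSE must equal the square root of MSE, and for any set of errors MAE ≤ RMSE ≤ Max Error. The sketch below checks both identities on four rows copied from the table, including the largest-error servers (Server-18, Server-65, Server-96). The function name and tolerance are illustrative choices.

```python
import math

# (server, MSE, RMSE, MAE, Max Error) copied from the KPI table above
ROWS = [
    ("Server-1", 0.288019886, 0.536674842, 0.407414447, 1.622196721),
    ("Server-18", 1.020521979, 1.010208879, 0.49968058, 4.190005521),
    ("Server-65", 0.567116999, 0.753071709, 0.58463521, 1.956126235),
    ("Server-96", 0.542794664, 0.736745997, 0.492112586, 2.361707797),
]

def kpis_consistent(mse: float, rmse: float, mae: float, max_err: float,
                    rel_tol: float = 1e-6) -> bool:
    """RMSE == sqrt(MSE) (within rounding) and MAE <= RMSE <= Max Error."""
    return math.isclose(math.sqrt(mse), rmse, rel_tol=rel_tol) and mae <= rmse <= max_err

for name, *vals in ROWS:
    print(name, "consistent" if kpis_consistent(*vals) else "INCONSISTENT")
```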


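| Metric Name | Formulation | Best/Worst |
|---|---|---|
| MSE | $\frac{1}{n}\sum_{i=1}^{n}(y_i-\hat{y}_i)^2$ | 0/∞ |
| MAE | $\frac{1}{n}\sum_{i=1}^{n}\lvert y_i-\hat{y}_i\rvert$ | 0/∞ |
| MAPE | $\frac{100\%}{n}\sum_{i=1}^{n}\left\lvert\frac{y_i-\hat{y}_i}{y_i}\right\rvert$ | 0%/100% |
| RMSE | $\sqrt{\frac{1}{n}\sum_{i=1}^{n}(y_i-\hat{y}_i)^2}$ | 0/∞ |
| Max. Error | $\max_{i}\lvert y_i-\hat{y}_i\rvert$ | 0/∞ |

Here $y_i$ and $\hat{y}_i$ denote the actual and predicted values of sample $i$, and $n$ is the number of samples.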
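The five metrics can be computed together from paired actual and predicted values. This plain-Python sketch uses the standard definitions of each metric. The function name and the dictionary keys are illustrative, not taken from the paper's code.

```python
import math
from typing import Dict, Sequence

def regression_kpis(actual: Sequence[float], predicted: Sequence[float]) -> Dict[str, float]:
    """Standard error metrics; MAPE is undefined when any actual value is zero."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [a - p for a, p in zip(actual, predicted)]
    n = len(errors)
    mse = sum(e * e for e in errors) / n
    return {
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "MAE": sum(abs(e) for e in errors) / n,
        "MAPE (%)": 100.0 / n * sum(abs(e / a) for e, a in zip(errors, actual)),
        "Max Error": max(abs(e) for e in errors),
    }

# Two power readings (W) against hypothetical predictions: errors of -1 W and +2 W.
print(regression_kpis([100.0, 200.0], [101.0, 198.0]))
```

Because MSE and RMSE square each error, they weigh the occasional large miss more heavily than MAE does. This is why a server such as Server-18 can have a modest MAE but the highest MSE in the table.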

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Safari, A.; Rahimi, A. Electrical Power Optimization of Cloud Data Centers Using Federated Learning Server Workload Allocation. Electronics 2025, 14, 3423. https://doi.org/10.3390/electronics14173423
