Abstract

To address issues like haze, blurring, and color distortion in underwater images, this paper proposes a novel underwater image enhancement model called U-Vision Mamba, built on the Vision Mamba framework. The core innovation lies in a U-shaped network encoder for multi-scale feature extraction, combined with a novel multi-scale sparse attention fusion module to effectively aggregate these features. This fusion module leverages sparse attention to capture global context while preserving fine details. The decoder then refines these aggregated features to generate high-quality underwater images. Experimental results on the UIEB dataset demonstrate that U-Vision Mamba significantly reduces image blurring and corrects color distortion, achieving a PSNR of 25.65 dB and an SSIM of 0.972. Both comprehensive subjective evaluation and objective metrics confirm the model’s superior performance and robustness, making it a promising solution for improving the clarity and usability of underwater imagery in applications like marine exploration and environmental monitoring.

1. Introduction

Underwater image recognition technology used in underwater drones holds significant potential in fields like aquatic exploration, resource surveying, and ecological monitoring. For instance, in pollution detection, underwater drones can capture images in contaminated water areas. Image recognition can differentiate between various pollutants, such as oil spills and plastic debris. Additionally, these drones can be used to monitor ecological changes in water bodies. By patrolling and identifying aquatic habitats, they can detect alterations in wildlife and habitats, enabling timely protective actions. However, as depth increases and factors like suspended particles, light absorption, and refraction in water come into play, images suffer from reduced contrast, color distortion, blurriness, and noise. These issues not only degrade the visual quality of underwater images but also hinder accurate analysis and recognition of underwater scenes.

Therefore, enhancing underwater image quality is crucial. Underwater image enhancement techniques improve the clarity and contrast of images captured in complex underwater environments, so that the images represent real-world conditions more accurately. These techniques provide clearer visual data, benefiting fields such as marine science, environmental conservation, and underwater robotic navigation.

To address the issues described above, current enhancement algorithms fall into three main categories. The first category is based on physical models, such as the optical transmission model [1] and the underwater dark channel method [2]. However, these methods often rely heavily on accurate estimates of water depth and other environmental parameters, which are difficult to obtain in real-world scenarios, limiting their practical applicability. The second category is based on non-physical models, including histogram equalization [3], gamma correction [4], and white balance adjustment [5]. Although computationally efficient, these methods often over-enhance images, introducing artifacts and noise, and they generalize poorly across diverse underwater environments. The third category comprises deep-learning-based enhancement methods, which have recently gained prominence because of their strong performance. Cao et al. [6] proposed a model consisting of two fully convolutional networks that restores underwater images by estimating background light and scene depth. Liu et al. [7] introduced a deep residual framework for underwater image enhancement. Chen et al. [8] proposed an improved Retinex algorithm with an attention mechanism. Liu et al. [9] proposed a global-feature dual-attention fusion method based on generative adversarial networks. Chen et al. [10] used a fusion network to fuse the individual correlations of the images and introduced self-attention aggregation.
However, traditional Convolutional Neural Networks (CNNs) used in these deep learning methods are inherently limited in their ability to capture long-range dependencies, hindering the effective integration of global contextual information crucial for accurate color correction and haze removal in underwater images. While Transformer-based methods, such as that of Ren et al. [11], can alleviate this long-range dependency limitation, their quadratic computational complexity poses a significant challenge for processing high-resolution underwater images.

Motivated by the need to overcome these limitations, we propose U-Vision Mamba, an underwater image enhancement model based on the Vision Mamba framework [12]. Vision Mamba, built on the selective structured state space model (S6), offers a compelling solution for three reasons. First, it efficiently captures long-range dependencies and models global context, which addresses the key limitation of CNNs and is vital for holistic underwater restoration tasks such as color constancy and dehazing. Second, its complexity is linear in sequence length, which enables efficient processing of high-resolution underwater imagery and avoids the computational bottleneck of Transformers. Third, its selective mechanism dynamically focuses on relevant image regions while suppressing noise and irrelevant details, which mitigates the artifacts and over-enhancement common in non-physical models, improves robustness, and leads to better enhancement performance. By combining these strengths, U-Vision Mamba is designed specifically to tackle the core challenges faced by existing methods.

The U-Vision Mamba architecture utilizes a U-shaped encoder–decoder structure. A key innovation is the incorporation of a novel multi-scale sparse attention fusion module within this structure to effectively aggregate features across different scales. The encoder extracts multi-scale feature representations from the degraded input. These features are then processed by the multi-scale sparse attention fusion module, which computes attention weights to selectively fuse the most informative features across scales. Finally, the decoder progressively refines these aggregated features to reconstruct the high-quality enhanced underwater image. Extensive experimental results demonstrate that U-Vision Mamba effectively addresses underwater image degradation, significantly mitigating blur and correcting color distortion, achieving state-of-the-art performance in both subjective visual quality and objective metrics.

2. Methodological Model

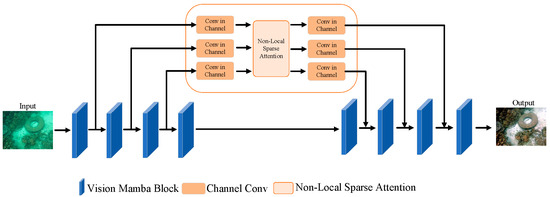

The network architecture consists of an encoder, a decoder, and a multi-scale sparse attention fusion module; both the encoder and the decoder are based on Vision Mamba [12], as shown in Figure 1.

Figure 1.

Underwater image enhancement algorithm model based on Vision Mamba.

Before describing U-Vision Mamba in detail, it is useful to place it within the landscape of underwater image enhancement techniques. Deep-learning-based approaches have emerged as a powerful alternative that uses data-driven learning to overcome the limitations of traditional methods. U-Net architectures [13] capture local features through convolutional layers and have shown promise, but they can fall short in capturing the long-range dependencies needed to understand global context. Transformer-based methods [14] capture global context through self-attention but can be computationally expensive, particularly for high-resolution underwater images. Generative adversarial networks (GANs) can generate realistic images, but they often suffer from training instability, and the quality of the generated images is difficult to control. In contrast, U-Vision Mamba is trained with supervised reconstruction losses rather than an adversarial objective (Section 2.3), which gives a more stable training process and greater control over enhancement quality. Compared with conventional Convolutional Neural Networks (CNNs), U-Vision Mamba uses Vision Mamba blocks, built on state–space models, to capture global information more effectively and thereby address the limitations of previous methods.

First, the raw underwater image is fed into the Vision Mamba-based encoder, which performs preliminary feature extraction. The feature maps obtained before each down-sampling operation in the encoder are passed to the multi-scale sparse attention fusion module, which aggregates the features from the different scales, computes attention over them, and passes the result to the decoder. The decoder concatenates the features reconstructed by up-sampling with the aggregated features from the fusion module and finally generates the enhanced underwater image.
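To make the data flow concrete, the following NumPy sketch traces feature-map shapes through a three-level U-shaped encoder–decoder. It is a shape-level illustration under stated assumptions, not the authors' implementation: each Vision Mamba stage is replaced by a random 1 × 1 channel projection (`proj`), down-sampling is 2 × 2 average pooling, up-sampling is nearest-neighbour, the fusion module is an identity placeholder (`fuse`), and the encoder widths 16, 32, and 64 are borrowed from the channel counts quoted in Section 2.2. All function names are hypothetical.

```python
import numpy as np

def down(x):
    """2x2 average pooling (stand-in for the encoder's down-sampling)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def up(x):
    """Nearest-neighbour 2x up-sampling (stand-in for the decoder's up-sampling)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def proj(x, c_out, rng):
    """Random 1x1 channel projection; a hypothetical placeholder for a Vision Mamba stage."""
    w = rng.standard_normal((c_out, x.shape[0])) / np.sqrt(x.shape[0])
    return np.einsum("oc,chw->ohw", w, x)

def fuse(skips):
    """Placeholder for the multi-scale sparse attention fusion module (identity here)."""
    return skips

def u_shape_forward(img, widths=(16, 32, 64), seed=0):
    """Trace a (C, H, W) image through encoder, skip fusion, and decoder."""
    rng = np.random.default_rng(seed)
    c_in, h, w = img.shape
    m = 2 ** len(widths)
    # Pad (assumption) so that every down-sampling step halves the size exactly.
    x = np.pad(img, ((0, 0), (0, -h % m), (0, -w % m)), mode="edge")
    skips = []
    for c in widths:                               # encoder
        x = proj(x, c, rng)
        skips.append(x)                            # feature map taken before down-sampling
        x = down(x)
    skips = fuse(skips)                            # multi-scale fusion of the skip features
    for skip in reversed(skips):                   # decoder
        x = up(x)
        x = np.concatenate([x, skip], axis=0)      # concatenate along the channel axis
        x = proj(x, skip.shape[0], rng)
    out = proj(x, c_in, rng)
    return out[:, :h, :w]                          # crop the padding away
```

For a single-channel 375 × 500 (height × width) input, the sketch pads to 376 × 504 internally and returns an array of shape (1, 375, 500); how the real network handles sizes that are not divisible by the down-sampling factor is not stated in the paper.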

2.1. Vision Mamba

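Mamba is a novel selective structured state–space model, and the Mamba block is the core component of Vision Mamba (Vim). Vim marks the image sequence with positional embeddings and compresses the visual representation with a bidirectional state–space model, which addresses the inherent position sensitivity of visual data and gives Vim strong performance in capturing global context. Given a 2D input image $\mathbf{t} \in \mathbb{R}^{H \times W \times C}$, Vim first transforms it into flattened 2D patches $\mathbf{x}_{p} \in \mathbb{R}^{J \times (P^{2} \cdot C)}$, where $(H, W)$ is the spatial size of the input image, $C$ is the number of channels, $P$ is the patch size, and $J = HW/P^{2}$ is the number of patches. Next, each patch is linearly projected to a vector of size $D$, and positional embeddings $\mathbf{E}_{pos} \in \mathbb{R}^{(J+1) \times D}$ are added, as shown in Equation (1):

$$\mathbf{T}_{0} = [\mathbf{t}_{cls}; \mathbf{t}_{p}^{1}\mathbf{W}; \mathbf{t}_{p}^{2}\mathbf{W}; \cdots; \mathbf{t}_{p}^{J}\mathbf{W}] + \mathbf{E}_{pos} \quad (1)$$

where $\mathbf{t}_{p}^{j}$ is the $j$-th patch of $\mathbf{t}$ and $\mathbf{W} \in \mathbb{R}^{(P^{2} \cdot C) \times D}$ is a learnable projection matrix. The term $\mathbf{t}_{cls}$ is a class token prepended to the patch sequence. The token sequence $\mathbf{T}_{l-1}$ is then fed into the $l$-th layer of the Vision Mamba encoder to obtain the output $\mathbf{T}_{l}$, as shown in Equation (2):

$$\mathbf{T}_{l} = \mathbf{Vim}(\mathbf{T}_{l-1}) + \mathbf{T}_{l-1} \quad (2)$$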

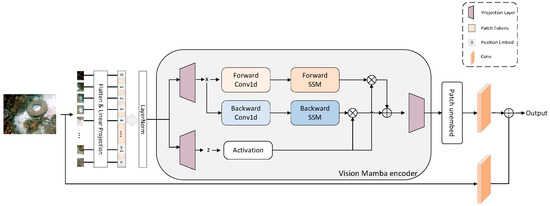

where the Vim block represents the standard Vision Mamba block. To improve feature extraction for underwater images, we normalize the image sequence before feeding it into the Vision Mamba encoder. This normalization supports more effective multi-level feature extraction in the deep network and reduces the complexity of the input data, easing the burden on the Vision Mamba encoder. After the Vision Mamba encoder, the output patches are reassembled into a complete 2D feature map. To strengthen the model's ability to capture image details, to make it more robust when capturing multi-scale and multi-level information, and to adjust the number of channels of the feature map, we append two convolutional blocks to the end of the Vision Mamba block. The outputs of the two convolutional blocks are summed to combine their feature representations, as shown in Figure 2.

Figure 2.

Vision Mamba block.
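The reassembly step and the two summed convolutional blocks described above can be sketched in NumPy. This is an illustrative sketch under assumptions that the paper does not state: the class token has already been dropped, patch tokens are in row-major patch order with each token laid out as (row, column, channel), the two blocks are parallel branches applied to the same feature map, and the 3 × 3 and 1 × 1 kernel sizes are hypothetical (any normalization or activation inside the real blocks is omitted). The names `unpatchify`, `conv2d_same`, and `conv_tail` are illustrative.

```python
import numpy as np

def unpatchify(tokens, H, W, P, C):
    """Reassemble (J, P*P*C) patch tokens into a (C, H, W) feature map."""
    gh, gw = H // P, W // P
    x = tokens.reshape(gh, gw, P, P, C).transpose(0, 2, 1, 3, 4).reshape(H, W, C)
    return x.transpose(2, 0, 1)

def conv2d_same(x, w, b):
    """Stride-1 cross-correlation with zero 'same' padding.

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k; b: (C_out,).
    """
    c_out, _, k, _ = w.shape
    p = k // 2
    H, W = x.shape[1:]
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((c_out, H, W))
    for i in range(k):
        for j in range(k):
            # Accumulate the contribution of kernel tap (i, j) for all pixels at once.
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + H, j:j + W])
    return out + b[:, None, None]

def conv_tail(feat, w3, b3, w1, b1):
    """Two parallel convolution branches (hypothetical 3x3 and 1x1) whose outputs are summed.

    Both branches map C_in to C_out channels, so the sum also adapts the channel count.
    """
    return conv2d_same(feat, w3, b3) + conv2d_same(feat, w1, b1)
```

Summing two branches with different receptive fields is one simple way to mix a local 3 × 3 context with a per-pixel channel projection; the actual composition of the blocks in Figure 2 may differ.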

This design choice aims to leverage the strengths of both state–space models (Vision Mamba) and convolutional networks. Vision Mamba efficiently captures long-range dependencies, while the convolutional blocks provide enhanced local feature extraction and channel adaptation.
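Equations (1) and (2) can be illustrated with a simplified NumPy sketch. `patch_embed` builds the token sequence of Equation (1), and `vim_layer` applies the residual update of Equation (2) using a bidirectional selective scan. The scan is a heavily simplified stand-in for the real Vim block, written under stated assumptions: it keeps only an input-dependent step size Δ, input-dependent projections B and C, a diagonal state matrix A discretized as exp(ΔA), and the simplified input term ΔB, and it omits the normalization, linear projections, 1D convolution, and gating of the actual block. All names and parameter shapes are hypothetical.

```python
import numpy as np

def softplus(z):
    """Numerically stable softplus, used to keep the step size positive."""
    return np.logaddexp(0.0, z)

def patch_embed(img, P, W, t_cls, E_pos):
    """Equation (1): T0 = [t_cls; t_p^1 W; ...; t_p^J W] + E_pos.

    img: (H, W_img, C) with H and W_img divisible by P;
    W: (P*P*C, D) projection; t_cls: (D,); E_pos: (J+1, D).
    """
    H, W_img, C = img.shape
    patches = (img.reshape(H // P, P, W_img // P, P, C)
                  .transpose(0, 2, 1, 3, 4)
                  .reshape(-1, P * P * C))          # (J, P*P*C), row-major patch order
    return np.vstack([t_cls[None, :], patches @ W]) + E_pos

def selective_scan(x, A, W_delta, W_B, W_C):
    """Simplified selective state-space scan over a token sequence x of shape (L, D).

    A: (D, N) with negative entries so the state decays; W_delta: (D, D);
    W_B, W_C: (D, N). One pass over the sequence, so the cost is linear in L.
    """
    delta = softplus(x @ W_delta)                   # (L, D) input-dependent step sizes
    B, C = x @ W_B, x @ W_C                         # (L, N) input-dependent projections
    h = np.zeros((x.shape[1], A.shape[1]))          # hidden state, one row per channel
    y = np.empty_like(x)
    for t in range(x.shape[0]):
        A_bar = np.exp(delta[t][:, None] * A)       # discretized state transition
        B_bar = delta[t][:, None] * B[t][None, :]   # simplified discretized input matrix
        h = A_bar * h + B_bar * x[t][:, None]
        y[t] = h @ C[t]
    return y

def vim_layer(T, fwd_params, bwd_params):
    """Equation (2): T_l = Vim(T_{l-1}) + T_{l-1}, with a forward and a backward scan."""
    y_fwd = selective_scan(T, *fwd_params)
    y_bwd = selective_scan(T[::-1], *bwd_params)[::-1]
    return T + y_fwd + y_bwd
```

A single forward scan only carries information from earlier tokens to later ones; adding a second scan over the reversed sequence lets every token receive context from both directions, which is the intuition behind the bidirectional design, while the cost stays linear in the number of tokens.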

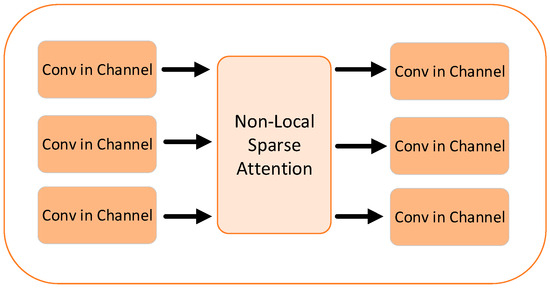

2.2. Multi-Scale Sparse Attention Fusion Module

The multi-scale sparse attention fusion module designed in this paper aggregates the features that U-Vision Mamba extracts at different scales into a single overall attention computation and computes the attention sequentially. A residual connection preserves the integrity of the input information, so the model can capture fine image details while understanding global context during enhancement. The module combines multi-scale feature fusion with a sparse attention mechanism, Non-Local Sparse Attention (NLSA) [15], as shown in Figure 3. Building on the concept of non-local attention, it efficiently captures long-range dependencies within the multi-scale feature maps. Because locality-sensitive hashing (LSH) restricts attention to features that fall into the same hash bucket, the computational cost is much lower than that of full self-attention, which makes the module better suited to high-resolution underwater images.

Figure 3.

Multi-scale sparse attention fusion module.

First, feature maps with 16, 32, and 64 channels are input sequentially, and three convolution blocks reduce their channel counts to 4, 4, and 8, respectively, before they are fed into the sparse attention module. In the sparse attention module, multiple hash rounds (four by default) compute sparse attention. Locality-sensitive hashing (LSH) assigns each pixel to a hash bucket, and the resulting hash code determines which pixel features are aggregated together during sparse attention. Because computation is restricted to the features within each bucket, the mechanism avoids the high cost of global attention. After the features are assigned to buckets and sorted, padding is applied by concatenating them with part of the original feature data so that all blocks have a consistent size. The features are then L2-normalized, unnormalized attention scores are computed, and a SoftMax function converts the scores into the final attention weights. These weights are applied to the hash-grouped and sorted features via Einstein summation (einsum). Finally, the attention-weighted features are fused with the input to produce the final multi-scale features, and three convolution blocks at the end of the module restore the channel counts required by the U-Vision Mamba decoder.
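The hash, sort, chunk, and attend procedure described above can be sketched in NumPy for a single flattened feature map `x` of shape (N, C), with N pixels and C channels. This is a simplified illustration in the spirit of NLSA [15], not the module's actual implementation: queries and values are the features themselves, keys are their L2-normalized copies, attention is restricted to fixed-size chunks of the bucket-sorted sequence (the last chunk is allowed to be shorter instead of being padded, and neighbouring chunks are not attended to), and the hash rounds are combined by a plain average. The defaults for `n_buckets` and `chunk` and all function names are hypothetical; only the four hash rounds come from the text.

```python
import numpy as np

def softmax(s, axis=-1):
    """Numerically stable softmax along the given axis."""
    s = s - s.max(axis=axis, keepdims=True)
    e = np.exp(s)
    return e / e.sum(axis=axis, keepdims=True)

def lsh_buckets(x, n_buckets, rng):
    """Angular LSH by random rotation: bucket = argmax over [xR, -xR] (n_buckets even)."""
    R = rng.standard_normal((x.shape[1], n_buckets // 2))
    xr = x @ R
    return np.argmax(np.concatenate([xr, -xr], axis=1), axis=1)

def sparse_attention(x, n_hashes=4, n_buckets=8, chunk=16, seed=0):
    """Bucketed sparse self-attention over x (N, C), fused with x by a residual."""
    rng = np.random.default_rng(seed)
    n = x.shape[0]
    k = x / (np.linalg.norm(x, axis=1, keepdims=True) + 1e-6)    # L2-normalized keys
    out = np.zeros_like(x)
    for _ in range(n_hashes):
        order = np.argsort(lsh_buckets(x, n_buckets, rng), kind="stable")  # group by bucket
        xs, ks = x[order], k[order]
        ys = np.empty_like(xs)
        for s in range(0, n, chunk):                  # attend only within each chunk
            q, kc = xs[s:s + chunk], ks[s:s + chunk]
            w = softmax(np.einsum("ic,jc->ij", q, kc))   # unnormalized scores -> weights
            ys[s:s + chunk] = np.einsum("ij,jc->ic", w, xs[s:s + chunk])
        y = np.empty_like(ys)
        y[order] = ys                                 # undo the sort
        out += y / n_hashes                           # average over hash rounds
    return x + out
```

Each hash round computes on the order of N × `chunk` query–key scores instead of the N² scores of full attention. When `chunk` is at least N, the whole sequence falls into one chunk and the sketch reduces to dense softmax attention, which is a convenient sanity check.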

2.3. Loss Functions

In deep learning, the choice and design of the loss function are indispensable, and researchers have proposed many loss functions, such as pixel-level mean squared error (MSE), perceptual loss, adversarial loss, and structural similarity (SSIM) loss. To quantify the difference between the enhanced image and the reference image, better recover useful information, and obtain high-quality enhanced underwater images, this paper builds the model's loss from three terms: an L1 loss, an L1 gradient loss, and an SSIM loss. The L1 loss computes the pixel-level absolute difference between the enhanced image and the target image; minimizing it during training drives the enhanced image closer to the target. The L1 loss is

$$\mathcal{L}_{1} = \frac{1}{N}\sum_{i=1}^{N}\left|\hat{y}_{i} - y_{i}\right| \quad (3)$$

where $N$ is the total number of pixels in the image, $\hat{y}_{i}$ is the value of the enhanced image at the $i$-th pixel position, and $y_{i}$ is the value of the corresponding target image at the $i$-th pixel position.

The gradient loss computes the L1 norm of the differences between the gradients of the generated image and the target image in the horizontal and vertical directions. It guides the model to retain as much detail and edge information as possible during training, which makes it well suited to enhancement tasks that must preserve or restore texture and edges. The gradient loss is

$$\mathcal{L}_{grad} = \frac{1}{N}\sum_{i=1}^{N}\left(\left|\nabla_{x}\hat{y}_{i} - \nabla_{x}y_{i}\right| + \left|\nabla_{y}\hat{y}_{i} - \nabla_{y}y_{i}\right|\right) \quad (4)$$

where $\nabla_{x}\hat{y}_{i}$ and $\nabla_{y}\hat{y}_{i}$ are the horizontal and vertical gradients of the generated image at pixel $i$, and $\nabla_{x}y_{i}$ and $\nabla_{y}y_{i}$ are the horizontal and vertical gradients of the target image at pixel $i$, respectively.

The structural similarity (SSIM) index measures the quality of the enhanced underwater image by jointly considering its luminance, contrast, and structure. It effectively measures the structural similarity between the enhanced image and the reference image and therefore guides the model toward high-quality images that better match human visual perception. The SSIM loss is built on the SSIM index, as shown in Equation (5):

$$\mathrm{SSIM}(\hat{y}, y) = \frac{(2\mu_{\hat{y}}\mu_{y} + C_{1})(2\sigma_{\hat{y}y} + C_{2})}{(\mu_{\hat{y}}^{2} + \mu_{y}^{2} + C_{1})(\sigma_{\hat{y}}^{2} + \sigma_{y}^{2} + C_{2})} \quad (5)$$

where $\mu_{\hat{y}}$ and $\mu_{y}$ are the mean values of the enhanced image and the target image, respectively, $\sigma_{\hat{y}}^{2}$ and $\sigma_{y}^{2}$ are their variances, $\sigma_{\hat{y}y}$ is their covariance, and $C_{1}$ and $C_{2}$ are small constants that stabilize the division. The SSIM loss is then defined as

$$\mathcal{L}_{SSIM} = 1 - \mathrm{SSIM}(\hat{y}, y) \quad (6)$$

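In summary, the total loss function in this paper is

$$\mathcal{L}_{total} = \mathcal{L}_{1} + \lambda\left(\mathcal{L}_{grad} + \mathcal{L}_{SSIM}\right) \quad (7)$$

To balance the contribution of the gradient and structural (SSIM) losses against the primary L1 term, we introduce a single weighting factor, $\lambda$, shared by both terms in view of their synergistic effect on image restoration quality.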
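The three loss terms and their weighted combination can be sketched in NumPy for images scaled to [0, 1]. Two simplifications are assumptions rather than details from the paper: SSIM is computed over the whole image as a single window (practical implementations usually average SSIM over local Gaussian windows), and gradients are forward differences. The constants $C_1 = 0.01^2$ and $C_2 = 0.03^2$ are the common choices for a unit data range. The paper does not report the value of the weighting factor, so `lam = 0.5` is a placeholder; all function names are hypothetical.

```python
import numpy as np

def l1_loss(pred, target):
    """Mean absolute pixel difference (L1 loss)."""
    return np.mean(np.abs(pred - target))

def _dx(img):
    """Horizontal forward difference along the last (width) axis."""
    return img[..., :, 1:] - img[..., :, :-1]

def _dy(img):
    """Vertical forward difference along the height axis."""
    return img[..., 1:, :] - img[..., :-1, :]

def gradient_loss(pred, target):
    """L1 distance between image gradients, averaged separately per direction."""
    return (np.mean(np.abs(_dx(pred) - _dx(target)))
            + np.mean(np.abs(_dy(pred) - _dy(target))))

def ssim_global(pred, target, c1=0.01 ** 2, c2=0.03 ** 2):
    """SSIM over the whole image as one window; assumes values in [0, 1]."""
    mu_p, mu_t = pred.mean(), target.mean()
    var_p, var_t = pred.var(), target.var()
    cov = np.mean((pred - mu_p) * (target - mu_t))
    return (((2 * mu_p * mu_t + c1) * (2 * cov + c2))
            / ((mu_p ** 2 + mu_t ** 2 + c1) * (var_p + var_t + c2)))

def total_loss(pred, target, lam=0.5):
    """L1 + lam * (gradient loss + SSIM loss); lam = 0.5 is a placeholder value."""
    ssim_term = 1.0 - ssim_global(pred, target)
    return l1_loss(pred, target) + lam * (gradient_loss(pred, target) + ssim_term)
```

A uniform brightness shift illustrates the division of labour: it increases the L1 loss but leaves the gradient loss at zero, so the gradient term only penalizes errors in edges and texture.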

3. Experimentation and Analysis

3.1. Experimental Settings

This paper uses the publicly available UIEB dataset [16], which contains 950 original underwater images, including images with color distortion and low contrast caused by different depths, turbidity, and lighting conditions. The images are split into training and test sets at a ratio of 9:1. During preprocessing, all input images are resized to a fixed size of 500 × 375, and the pixel values are normalized to [0, 1] with a ToTensor operation. In addition, to improve the structural representation of the images and reduce interference from color bias, we convert the RGB images to the YCrCb color space and use only the Y channel for training. The model is trained for 150 epochs with a learning rate of 0.0001 using the Adam optimizer. The experiments were run on Ubuntu 20.04 on an x86 platform with an Intel Core i9-11900K CPU, 64 GB of RAM, and an NVIDIA GeForce RTX 2080 Ti GPU, using Python 3.10 and PyTorch 2.1.
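The preprocessing steps can be sketched as follows. The sketch scales a uint8 RGB image to [0, 1] (as ToTensor does), keeps only the Y channel of YCrCb using the commonly used ITU-R BT.601 luma weights, and draws a random 9:1 split of image indices. Resizing to 500 × 375 is omitted because the interpolation method is not reported, and drawing the split at random with a fixed seed is an assumption (the paper does not say how the split was made); the function names are hypothetical.

```python
import numpy as np

def to_y_channel(rgb_uint8):
    """Scale an (H, W, 3) uint8 RGB image to [0, 1] and keep only the Y (luma) channel.

    Uses the BT.601 weights Y = 0.299 R + 0.587 G + 0.114 B and returns a
    (1, H, W) array, mirroring the layout of a one-channel tensor.
    """
    x = rgb_uint8.astype(np.float64) / 255.0
    y = 0.299 * x[..., 0] + 0.587 * x[..., 1] + 0.114 * x[..., 2]
    return y[None, :, :]

def split_train_test(n_images, train_fraction=0.9, seed=0):
    """Random train/test split of image indices (9:1 by default)."""
    idx = np.random.default_rng(seed).permutation(n_images)
    n_train = int(round(train_fraction * n_images))
    return idx[:n_train], idx[n_train:]
```

For the 950 UIEB images, a 9:1 split yields 855 training images and 95 test images.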

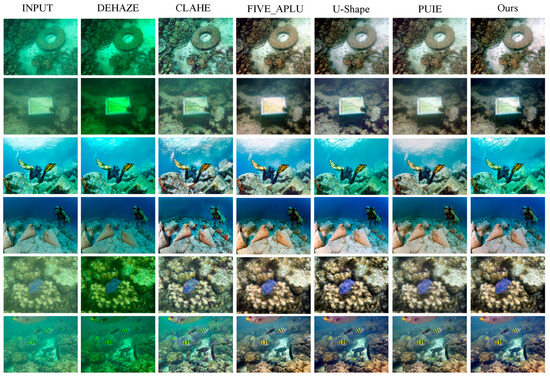

3.2. Subjective Experimental Comparison

The UIEB dataset is widely used to evaluate and compare underwater image enhancement algorithms. To show the enhancement effect of the proposed algorithm more intuitively, we compare it with the CLAHE algorithm [17], the DEHAZE algorithm [18], the FIVE_APLU algorithm [19], the U-Shape algorithm [20], and the PUIE algorithm [21], as shown in Figure 4, where INPUT is the original image. DEHAZE exacerbates the color bias, producing oversaturated colors and low overall brightness. CLAHE improves the dark and bright areas of the image simultaneously, but its output has low color saturation and weak color rendition. FIVE_APLU reproduces color better than the original image and makes objects stand out more clearly from the background, but its brightness and exposure are uneven, and the signage in the central area is too bright. U-Shape and PUIE both handle haze, blurring, and color bias better than the preceding methods, and U-Shape produces better color saturation. The proposed method effectively removes the blurring caused by haze: image clarity increases significantly, the scene appears more transparent, colors are more distinct, object edges look more natural, and clear underwater images are obtained. Overall, the proposed method performs well in dehazing, color correction, and global contrast, and it outperforms the other compared methods.

Figure 4.

Subjective comparison of underwater image enhancement algorithms using the UIEB dataset.

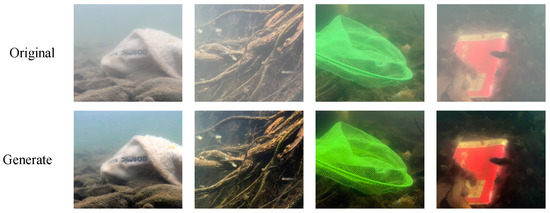

To further verify the effectiveness of the model for underwater image enhancement, we captured underwater scenes in the Huajiang River Basin in Guilin and applied the model to them, as shown in Figure 5.

Figure 5.

Underwater image enhancement verification.

3.3. Objective Quality Assessment

Objective quality assessment evaluates enhancement algorithms with quantitative indicators, avoiding the bias inherent in subjective evaluation. This paper uses four objective indicators for quantitative analysis: Peak Signal-to-Noise Ratio (PSNR) [22], structural similarity (SSIM) [22], Underwater Color Image Quality Evaluation (UCIQE) [23], and Underwater Image Quality Measure (UIQM) [24]. PSNR measures the difference between the enhanced image and the reference image; a higher value indicates that the two are closer, with less distortion and better quality. SSIM reflects the structural similarity of images and accounts for characteristics of human vision. It uses the mean, standard deviation, and covariance as estimates of luminance, contrast, and structural similarity, respectively; the closer the value is to one, the more similar the structures of the two images. UCIQE mainly evaluates the color fidelity of an image, and UIQM is a comprehensive quality index; unlike PSNR and SSIM, both are computed without a reference image, and for both a higher value indicates a better enhancement effect. The compared methods are evaluated on these four indicators, and the best values are highlighted in bold, as shown in Table 1.

Table 1.

Evaluation indicators of different methods using the UIEB dataset.

PSNR and SSIM: The higher PSNR and SSIM values of U-Vision Mamba indicate that it generates images that are more similar to the ground truth, with less distortion and better structural preservation. This suggests that the Vision Mamba architecture is effective in feature extraction and image reconstruction, particularly for underwater images.

UCIQE: The higher UCIQE score of U-Vision Mamba suggests that it produces images with better color balance and less color distortion, which is a crucial aspect of underwater image enhancement. This result indicates that the Vision Mamba architecture can better capture the features specific to underwater images, such as color casts and low contrast.

UIQM: Although its UIQM score is slightly lower than that of U-Shape, U-Vision Mamba remains competitive on this metric and delivers the most balanced results across the four metrics.

The experiments show that the proposed method outperforms the other methods on UCIQE, PSNR, and SSIM, with values of 0.4986, 25.6463 dB, and 0.9724, respectively. This indicates that the algorithm balances brightness, color, edge detail, contrast, and saturation during underwater image enhancement. Although its UIQM is slightly lower than that of U-Shape, its mean UIQM of 2.1964 remains above average among the compared methods. Overall, the images enhanced by the proposed algorithm better restore the color, contours, and texture of objects, make objects distinct from the background, and agree more closely with human visual perception. Taken together, the proposed algorithm achieves the best overall performance among the compared methods.
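PSNR has a simple closed form, $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$, where MAX is the largest possible pixel value. The NumPy sketch below assumes images scaled to [0, 1], so MAX = 1; the paper does not state the data range or color space in which PSNR was computed, and the function names are hypothetical.

```python
import numpy as np

def psnr(pred, target, data_range=1.0):
    """Peak signal-to-noise ratio in dB between an enhanced image and its reference."""
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")              # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)

def mse_for_psnr(psnr_db, data_range=1.0):
    """Invert the PSNR definition: the mean squared error implied by a PSNR value."""
    return data_range ** 2 / 10.0 ** (psnr_db / 10.0)
```

Under the [0, 1] assumption, the reported PSNR of 25.6463 dB corresponds to an MSE of about 0.0027, that is, a root-mean-square error of roughly 0.052 of the full intensity range (about 13 grey levels on an 8-bit scale).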

3.4. Comparison with Other Deep Learning Models

To further validate the effectiveness of our U-Vision Mamba model, we conducted additional comparative experiments with other deep learning models, specifically Convolutional Neural Networks (CNNs), which are widely used in image processing tasks. We chose U-Net and UWCNN as our comparative baselines. U-Net is a classic encoder–decoder architecture known for its strong performance in image segmentation and enhancement tasks. UWCNN is another CNN model specifically designed for underwater image enhancement.

We used the same UIEB dataset used in the previous experiments to ensure a fair comparison. The dataset consists of underwater images with varying degrees of color cast, low contrast, and haze, along with their corresponding ground truth images. Both U-Net and UWCNN were trained using the same training set of the UIEB dataset. Training was conducted over 150 epochs with a learning rate of 0.0001 using the Adam optimizer. The batch size was set to 16 for all models. We maintained consistency in other hyperparameters to ensure a fair comparison. The U-Net model was implemented with a standard encoder–decoder architecture, including skip connections between the encoder and the decoder. The UWCNN model was implemented according to the architecture described in [21], with convolutional layers specifically designed for underwater image enhancement.

The quantitative results of the comparison between our U-Vision Mamba model and the CNN models are presented in Table 2.

Table 2.

Performance comparison with CNN models using the UIEB dataset.

From the results in Table 2, it is evident that our U-Vision Mamba model outperforms both U-Net and UWCNN in terms of UCIQE, PSNR, and SSIM metrics. While U-Net provides reasonable performance, it still falls short of the results achieved by our model. UWCNN, although designed specifically for underwater image enhancement, shows inferior performance in PSNR and SSIM, indicating larger errors and image differences compared to U-Vision Mamba.

3.5. Ablation Experiment

To verify the effectiveness of the Vision Mamba block and the multi-scale sparse attention fusion module in the proposed algorithm, we conducted an ablation experiment; the results are shown in Table 3.

Table 3.

Ablation experiment.

In experiment A, the Vision Mamba block in the network structure was removed, and the original U-Net network convolution block was used for feature extraction and learning. In experiment B, the sparse attention fusion module in the network structure was removed during the experiment, and the multi-scale features extracted by the Vision Mamba block were directly output through skip connections.

The PSNR and SSIM results show that the method performs best when it is complete. The Vision Mamba block has a strong capacity for modeling global context and, combined with the embedding and encoding operations, extracts image information more effectively. The multi-scale sparse attention fusion module aggregates features from different scales of the U-shaped network and computes attention over them, which strengthens the model's ability to extract multi-scale information and contributes significantly to dehazing and color correction.

4. Conclusions

This paper presents an innovative underwater image enhancement network, U-Vision Mamba, complemented by a sparse attention fusion module. The proposed method effectively addresses common challenges in underwater imaging, such as low contrast, color distortion, and blurring, by preserving image details and capturing global features. The enhanced images demonstrate a significant improvement in correcting green and blue color casts and eliminating blur, resulting in more natural and visually appealing outputs. Furthermore, the algorithm outperforms existing methods in terms of objective metrics, including PSNR and SSIM.

While the method shows promising results, several limitations warrant consideration. First, the model’s generalization capability is primarily validated on the UIEB dataset, which may limit its performance in diverse real-world underwater environments. Second, the model’s computational complexity poses challenges for deployment on resource-constrained devices, such as underwater drones. Lastly, the current loss function and sparse attention fusion module may not be optimal for all underwater scenarios, and the integration of Mamba with CNNs could be further optimized for enhanced performance.

Future research will focus on addressing these limitations. Specifically, we aim to enhance the model’s generalization by training on more diverse datasets and employing advanced data augmentation techniques. Additionally, we will explore model compression techniques, such as pruning and quantization, to reduce computational demands and facilitate deployment on embedded systems. Further studies will also investigate alternative loss functions, incorporating metrics like color constancy and sharpness, and refine the sparse attention fusion module for improved efficiency. Lastly, we plan to explore novel integration strategies between Mamba models and CNNs to leverage their complementary strengths. These efforts will contribute to advancing underwater image enhancement technology and enable practical, real-world applications.

Author Contributions

Conceptualization, Y.W.; methodology, Y.W. and Z.C.; software, Z.C.; validation, Y.W. and Z.T.; formal analysis, M.A.-B.; investigation, Z.C. and Z.T.; resources, Y.W.; data curation, Y.W. and Z.C.; writing—original draft preparation, M.A.-B.; writing—review and editing, Z.T.; visualization, Z.C.; supervision, Y.W.; project administration, Y.W.; funding acquisition, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Guangxi Science and Technology Plan Project (Grant No. AB25069501, No. AD22035141), the Special Research Project on the Strategic Development of Distinctive Interdisciplinary Fields (GUAT Special Research Project on the Strategic Development of Distinctive Interdisciplinary Fields), and Guilin University of Aerospace Technology (No. TS2024431).

Data Availability Statement

The original contributions presented in this study are included in the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wen, H.; Tian, Y.; Huang, T.; Gao, W. Single Underwater Image Enhancement with a New Optical Model. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), Beijing, China, 19–23 May 2013; pp. 2423–2426.

- Drews, P.L., Jr.; Nascimento, E.R.; Moraes, F.M.; Botelho, S.S.C.; Campos, M.F.M. Underwater Depth Estimation and Image Restoration Based on Single Images. IEEE Comput. Graph. Appl. 2016, 36, 24–35.

- Pizer, S.M.; Johnston, R.E.; Ericksen, J.P.; Yankaskas, B.C.; Muller, K.E. Contrast-Limited Adaptive Histogram Equalization: Speed and Effectiveness. In Proceedings of the SPIE Conference on Medical Imaging IV: Image Processing, Newport Beach, CA, USA, 5–9 February 1990; Volume 1233, pp. 337–346.

- Rizzi, A.; Gatta, C.; Marini, D. Color Correction Between Gray World and White Patch. In Proceedings of the SPIE Conference on Human Vision and Electronic Imaging VII, San Jose, CA, USA, 21–24 January 2002; Volume 4662, pp. 239–249.

- Ancuti, C.; Ancuti, C.O.; De Smedt, T.; Schelkens, P. Enhancing Underwater Images and Videos by Fusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 81–88.

- Cao, K.; Peng, Y.T.; Cosman, P.C. Underwater Image Restoration Using Deep Networks to Estimate Background Light and Scene Depth. In Proceedings of the IEEE Southwest Symposium on Image Analysis and Interpretation (SSIAI), Las Vegas, NV, USA, 8–10 April 2018; pp. 181–184.

- Liu, P.; Wang, G.; Qi, H.; Zhang, C.; Zheng, H.; Yu, Z. Underwater Image Enhancement with a Deep Residual Framework. IEEE Access 2019, 7, 94614–94629.

- Chen, X.; Li, J.; Hua, Z. Retinex Low-Light Image Enhancement Network Based on Attention Mechanism. Multimed. Tools Appl. 2023, 82, 4235–4255.

- Liu, X.; Lin, S.; Tao, Z. Underwater Image Enhancement of Global-Feature Dual-Attention-Fusion Adversarial Network. Electro-Opt. Control 2022, 29, 43–48.

- Kumar, N.; Manzar, J.; Shivani; Garg, S. Underwater Image Enhancement using Deep Learning. Multimed. Tools Appl. 2023, 82, 46789–46809.

- Ren, T.; Xu, H.; Jiang, G.; Yu, M.; Zhang, X.; Wang, B.; Luo, T. Reinforced Swin-Convs Transformer for Simultaneous Underwater Sensing Scene Image Enhancement and Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient Visual Representation Learning with Bidirectional State Space Model. arXiv 2024, arXiv:2401.09417.

- Naik, A.; Swarnakar, A.; Mittal, K. Shallow-UWnet: Compressed Model for Underwater Image Enhancement (Student Abstract). In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 15102–15103.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

- Mei, Y.; Fan, Y.; Zhou, Y. Image Super-Resolution with Non-Local Sparse Attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 5627–5636.

- Li, C.; Guo, J.; Cong, R.; Pang, Y.; Chen, C.; Wang, Z. An Underwater Image Enhancement Benchmark Dataset and Beyond. IEEE Trans. Image Process. 2020, 29, 4376–4389.

- Mohammed, I.M.; Isa, N.A.M. Contrast Limited Adaptive Local Histogram Equalization Method for Poor Contrast Image Enhancement. IEEE Access 2025, 13, 62600–62632.

- He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

- Jiang, J.; Zheng, H.; Wu, Y.; Cao, X.; Li, Y.; Duan, J. Five A+ Network: You Only Need 9K Parameters for Underwater Image Enhancement. arXiv 2023, arXiv:2305.08824.

- Yao, H.; Guo, R.; Zhao, Z.; Zang, Y.; Zhao, X.; Lei, T.; Wang, H. U-Trans CNN: A U-shape transformer-CNN fusion model for underwater image enhancement. Displays 2025, 88, 103047.

- Li, C.; Anwar, S.; Porikli, F. Underwater Scene Prior Inspired Deep Underwater Image and Video Enhancement. Pattern Recognit. 2020, 98, 107038.

- Hore, A.; Ziou, D. Image Quality Metrics: PSNR vs. SSIM. In Proceedings of the 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

- Yang, M.; Sowmya, A. An Underwater Color Image Quality Evaluation Metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Panetta, K.; Gao, C.; Agaian, S. Human-Visual-System-Inspired Underwater Image Quality Measures. IEEE J. Ocean. Eng. 2016, 41, 541–551.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).