Modeling Learning Outcomes in Virtual Reality Through Cognitive Factors: A Case Study on Underwater Engineering

Abstract

1. Introduction

- Leveraging insights from an interdisciplinary viewpoint, to what extent do immersive engagement and flow state mediate the relationship between learning styles (e.g., Kolb’s experiential learning theory) and learning outcomes in a VR-based educational environment?

- Incorporating insights from educational sciences, psychology, and immersive technologies, in what ways do differences in learning styles (e.g., Kolb’s experiential learning theory) influence student performance in VR educational environments?

Interdisciplinary Approaches in Virtual Reality Applications

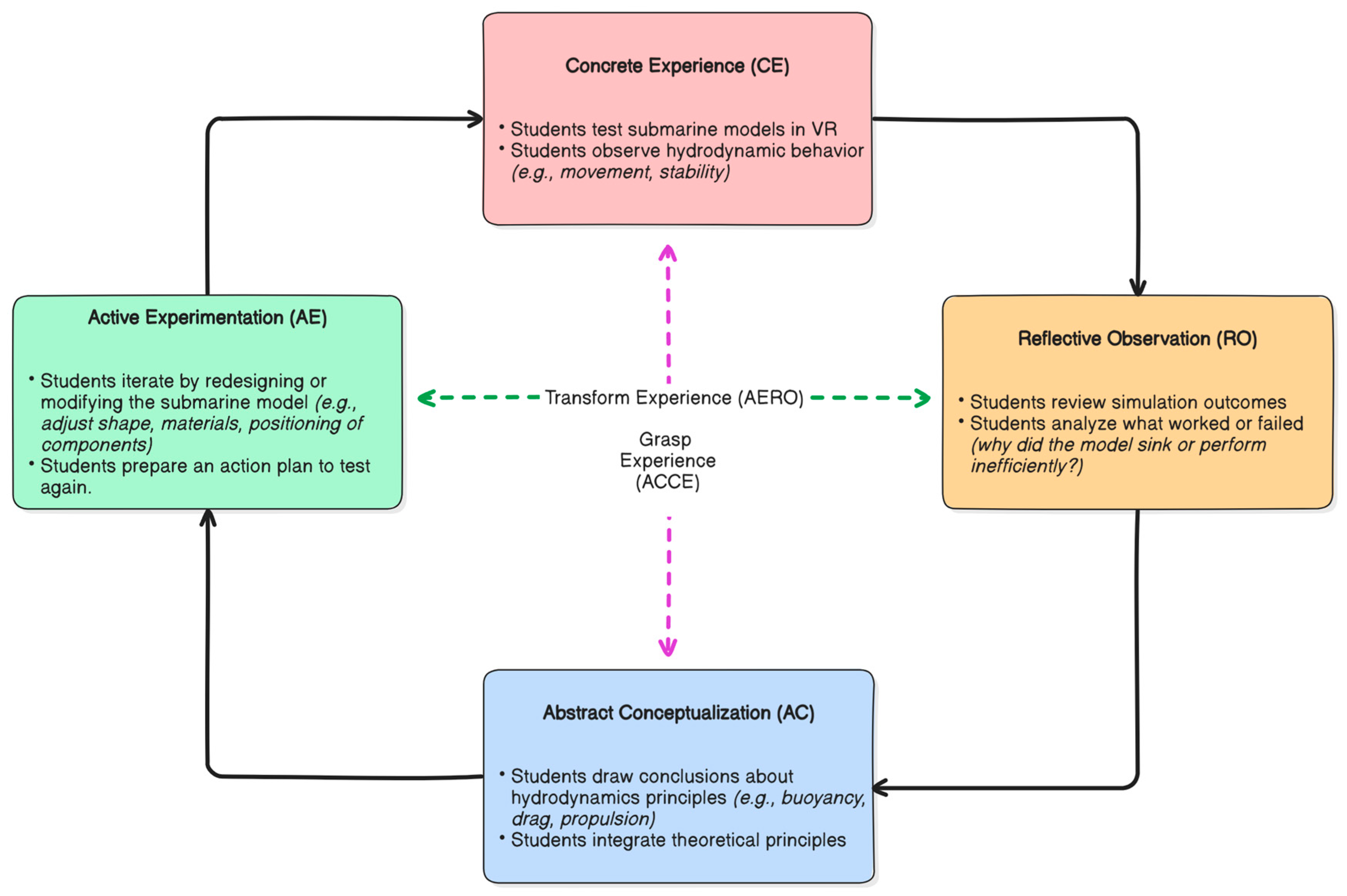

2. Kolb’s Experiential Learning Theory in Designing Virtual Reality Environments for Engineering Education

3. Research Methodology

3.1. Research Aim

3.2. Hypothesis

3.3. Target Group

3.4. Methods

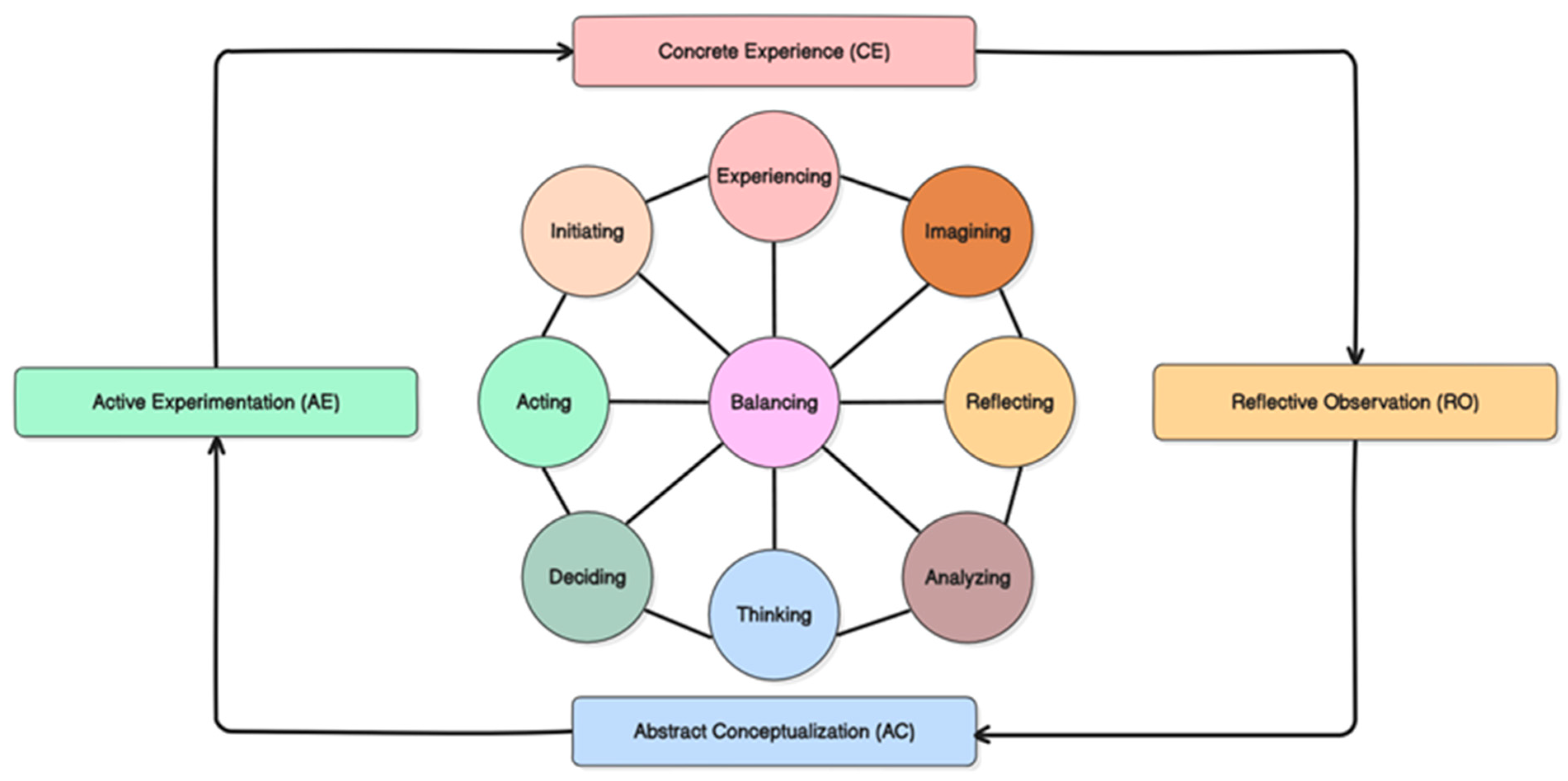

3.4.1. The Kolb Experiential Learning Profile (KELP)

- (a) Concrete Experience (CE): Learners immerse themselves fully, openly, and without bias in novel experiences, emphasizing direct involvement and sensory engagement.

- (b) Reflective Observation (RO): They contemplate and examine these experiences from diverse viewpoints, fostering introspection and nuanced understanding.

- (c) Abstract Conceptualization (AC): They synthesize observations into coherent concepts, forming logically robust theories that explain patterns and relationships.

- (d) Active Experimentation (AE): They apply these theories practically to inform decision-making and address real-world problems, testing ideas through action.

3.4.2. The Flow State Scale (FSS)

- Challenge–Skill Balance: Perceiving that personal skills match the task’s demands;

- Action–Awareness Merging: Experiencing seamless integration of actions and awareness;

- Clear Goals: Having a clear understanding of objectives;

- Unambiguous Feedback: Receiving immediate, clear feedback on performance;

- Concentration on the Task: Maintaining deep focus without distractions;

- Sense of Control: Feeling in command of the activity;

- Loss of Self-Consciousness: Becoming less aware of self and external judgments;

- Transformation of Time: Perceiving time as altered, either speeding up or slowing down;

- Autotelic Experience: Finding the activity intrinsically rewarding.
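
The Flow Result values in Appendix B (e.g., 3.778 or 4.528) fall within the Likert response range. That is consistent with an overall score computed as the mean of item responses. The sketch below shows that kind of scoring under stated assumptions. It assumes each of the nine dimensions above is measured by several Likert items and that the overall score is the mean of all items. The dimension keys, the `fss_scores` helper, and the example respondent are hypothetical; they are not part of the published instrument.

```python
from statistics import mean

# The nine FSS dimensions listed above (key names are hypothetical).
FSS_DIMENSIONS = (
    "challenge_skill_balance",
    "action_awareness_merging",
    "clear_goals",
    "unambiguous_feedback",
    "concentration_on_task",
    "sense_of_control",
    "loss_of_self_consciousness",
    "transformation_of_time",
    "autotelic_experience",
)


def fss_scores(responses_by_dimension):
    """Return (per-dimension means, overall mean) from Likert item responses."""
    missing = [d for d in FSS_DIMENSIONS if d not in responses_by_dimension]
    if missing:
        raise ValueError(f"missing dimensions: {missing}")
    subscales = {d: mean(responses_by_dimension[d]) for d in FSS_DIMENSIONS}
    # Overall score: mean over every item, not over the dimension means.
    all_items = [r for d in FSS_DIMENSIONS for r in responses_by_dimension[d]]
    return subscales, mean(all_items)


# Hypothetical respondent: four items per dimension, two dimensions rated lower.
example = {d: [4, 4, 4, 4] for d in FSS_DIMENSIONS}
example["challenge_skill_balance"] = [3, 3, 3, 3]
example["action_awareness_merging"] = [3, 3, 3, 3]
subscales, overall = fss_scores(example)
print(round(overall, 3))  # 3.778
```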

3.4.3. Immersive Tendencies Questionnaire (ITQ)

3.4.4. Basic Needs in Games (BANG)

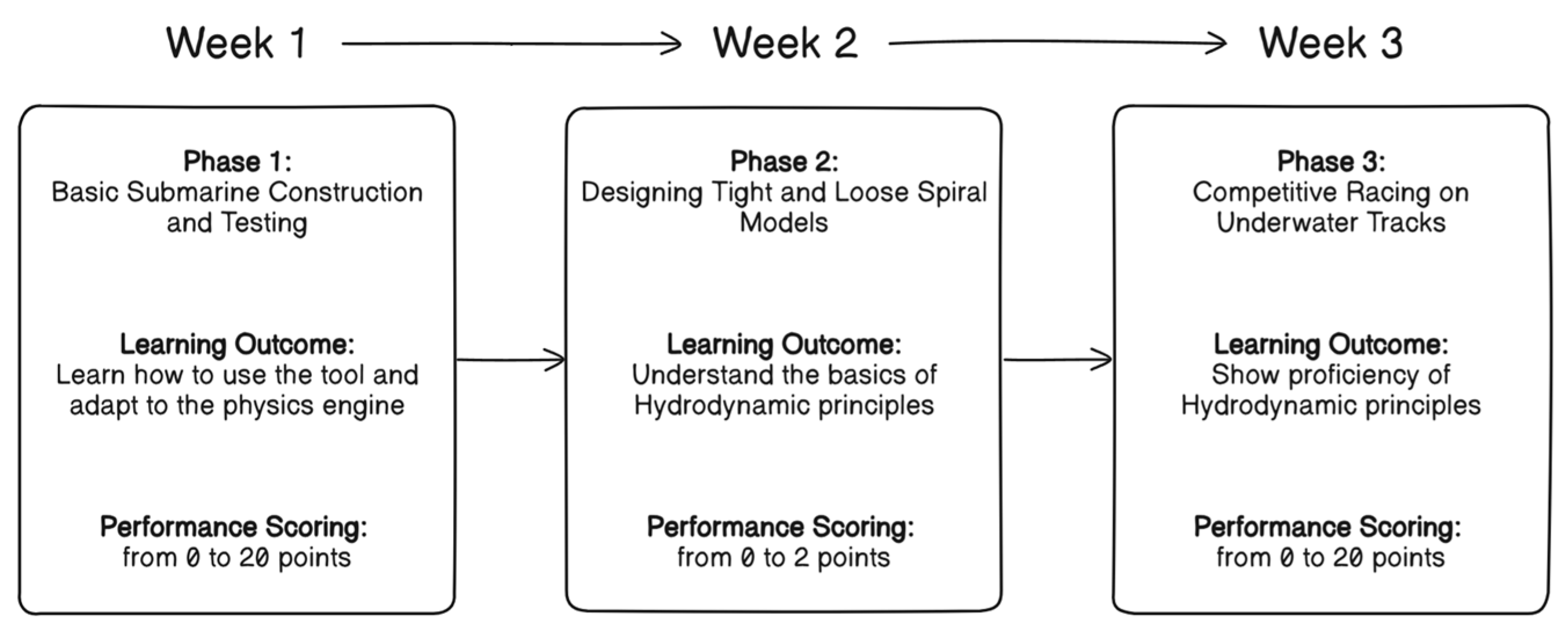

3.4.5. Scoring Performance

- Phase 1 (Basic Submarine Construction and Testing, scored on a 0–20 scale): This structured phase emphasizes foundational building and initial testing.

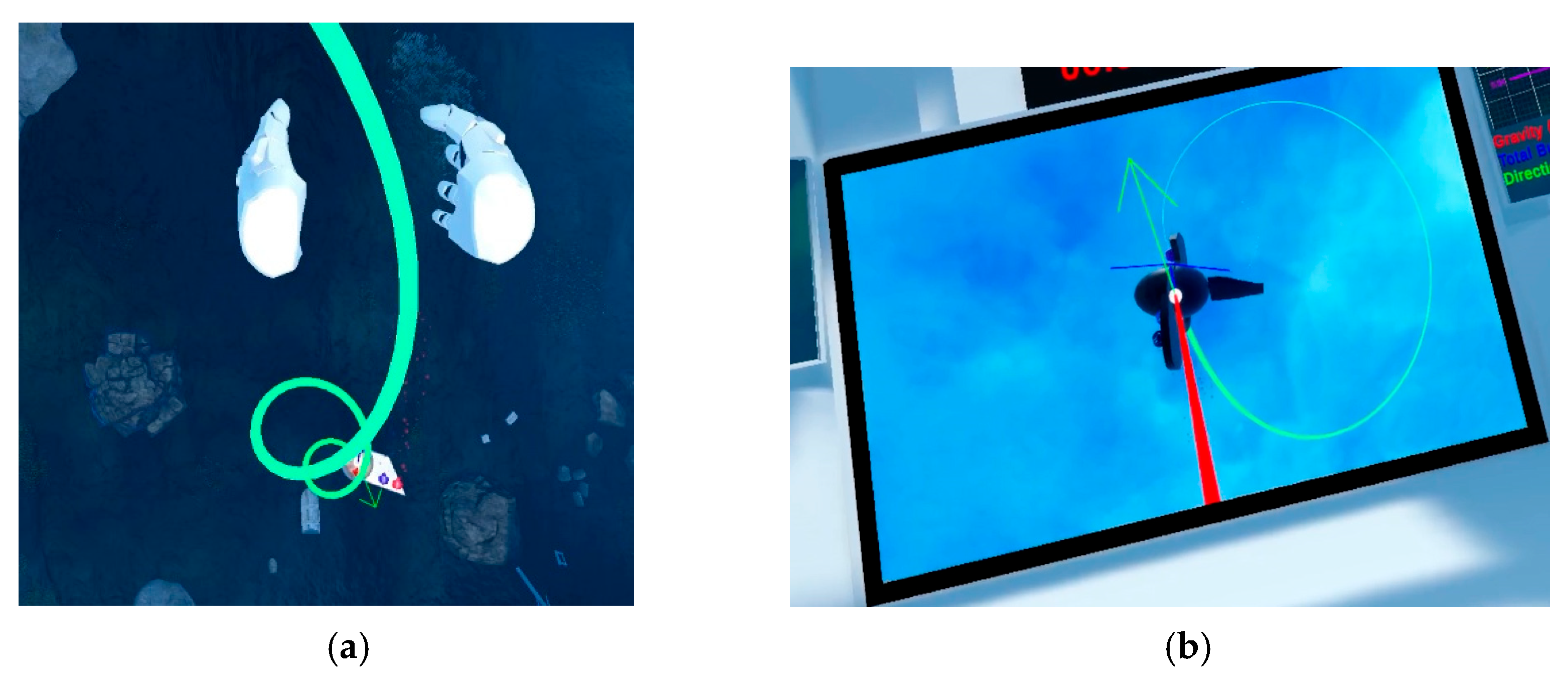

- Phase 2 (Designing Tight and Loose Spiral Models, scored on a 0–2 scale): This phase focuses on strategic planning and iterative refinement. Each of the two required models is scored pass/fail: 2 points for successfully completing both, 1 point for completing one, and 0 points for completing neither.

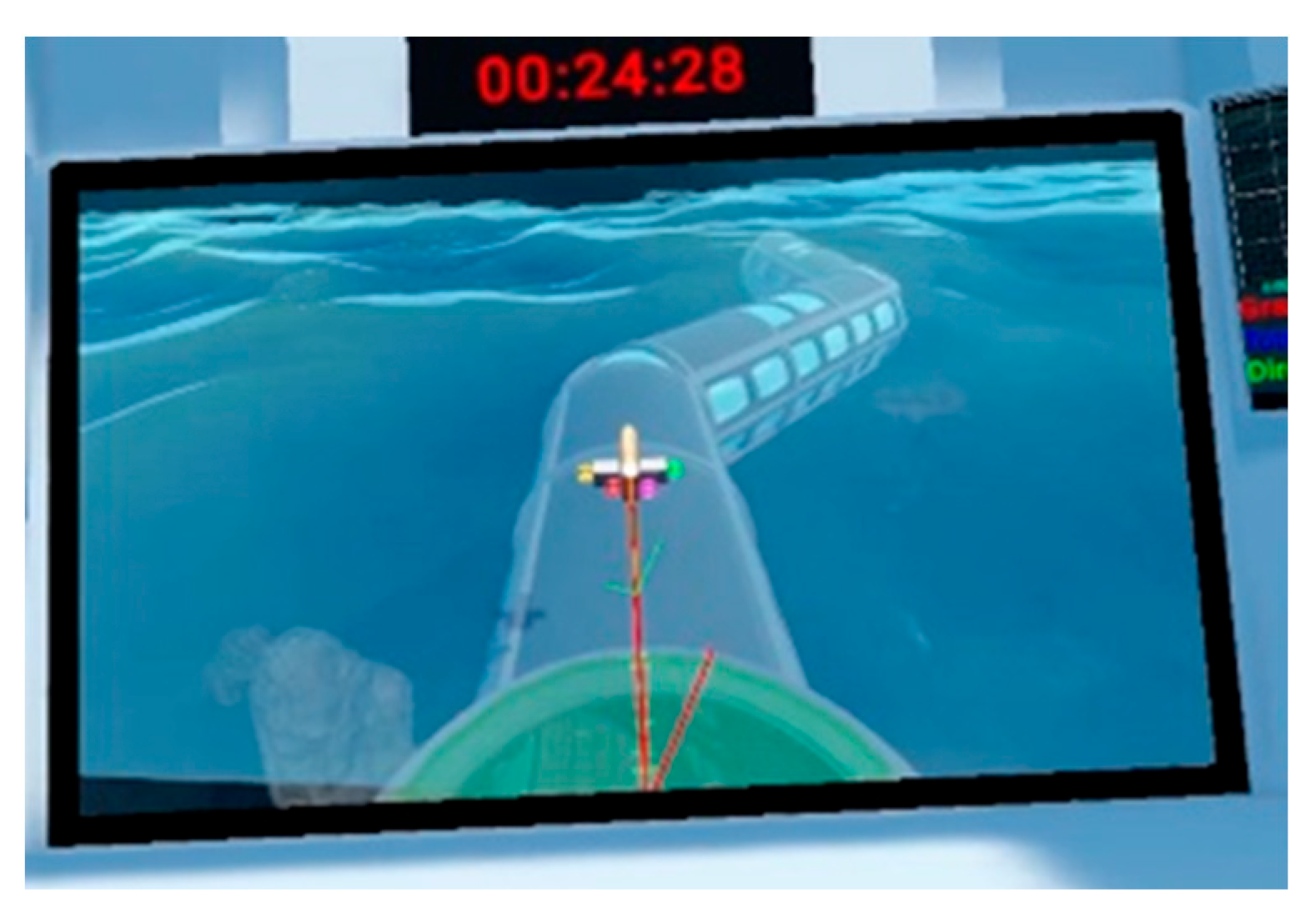

- Phase 3 (Competitive Racing on Underwater Tracks, scored on a 0–20 scale): This dynamic phase demands real-time adaptation and quick decision-making. The scoring system was designed to be simple yet engaging. Points were awarded for each track completed: 1 point for Track 1, 2 points for Track 2, and 3 points for the more complex Track 3. Additional points were awarded for winning the race against an opponent.
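
The Phase 2 and Phase 3 rules described above can be sketched as a minimal scoring routine. The text does not state how many points a race win is worth, so `win_bonus` is a hypothetical parameter. Clamping Phase 3 to 0–20 is an assumption based on the stated scale. The function names are illustrative only.

```python
# Points per completed track, as described above.
TRACK_POINTS = {1: 1, 2: 2, 3: 3}


def phase2_score(tight_spiral_ok, loose_spiral_ok):
    """One point per successfully completed spiral model (0-2 scale)."""
    return int(tight_spiral_ok) + int(loose_spiral_ok)


def phase3_score(completed_tracks, races_won, win_bonus, max_points=20):
    """Track points plus a hypothetical per-win bonus, clamped to 0..max_points.

    completed_tracks holds one track number (1, 2 or 3) per successful run
    (an assumption about how repeated runs are counted).
    """
    points = sum(TRACK_POINTS[track] for track in completed_tracks)
    points += races_won * win_bonus
    return max(0, min(points, max_points))


print(phase2_score(True, False))  # 1
print(phase3_score([1, 2, 3], races_won=1, win_bonus=2))  # 8
```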

3.5. Statistical Methods

- The measurement model: which examines how well the observed variables (indicators) relate to their underlying theoretical concepts (latent variables);

- The structural model: which tests the hypothesized relationships between the latent variables themselves.

- Path Analysis: depicts the standardized path coefficients between latent variables (learning styles → VR experience → performance). These coefficients represent the strength and direction of the relationships and typically range between −1 and +1. SmartPLS estimates them with an iterative algorithm that maximizes the explained variance (R²) of the dependent variables (performance, i.e., learning outcomes). The percentages shown in the diagrams are R² values: the proportion of variance in each dependent latent variable explained by its predictors (e.g., R² = 0.494 means that 49.4% of the variance in performance is explained).

- Covariance: refers to the degree to which two latent variables vary together. SmartPLS calculates these values from the estimated model using the covariance of the constructs’ composite scores. Because these scores are standardized, the values are equivalent to correlations.

- Average Variance Extracted (AVE): measures convergent validity—in other words, how well a construct explains the variance of its indicators. AVE > 0.50 indicates adequate convergence [29].

- Discriminant Validity (Fornell–Larcker Criterion): ensures that a construct is more strongly related to its own indicators than to other constructs. The square root of AVE for each construct should be greater than its correlations with other constructs.

- Cronbach’s α and Composite Reliability: assess internal consistency (values > 0.70 indicate good reliability).

- Variance Inflation Factor (VIF): checks whether indicators are excessively correlated, which can bias estimates. VIF < 3.3 indicates no critical collinearity.
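
The bootstrapping table in the results suggests that each endogenous construct has a single predictor (learning styles → VR, VR → performance). Under that assumption, the standardized path coefficient equals the correlation between the two constructs, so R² is simply its square. The sketch below derives R², adjusted R², and Cohen’s f² from the two path coefficients. It uses n = 26 participants, the number listed in Appendix B. With these inputs it reproduces, up to rounding, the values reported in the results: R² = 0.247 and 0.494, adjusted R² = 0.216 and 0.473, and f² = 0.329 and 0.977. The function names are illustrative.

```python
def r2_single_predictor(beta):
    """With one standardized predictor, R^2 is the squared path coefficient."""
    return beta ** 2


def adjusted_r2(r2, n, k):
    """Adjusted R^2 for n observations and k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def cohens_f2(r2_included, r2_excluded=0.0):
    """Effect size f^2; dropping the only predictor leaves R^2 = 0."""
    return (r2_included - r2_excluded) / (1 - r2_included)


N = 26  # participants listed in Appendix B
for label, beta in [("Learning styles -> VR", 0.49748), ("VR -> Performance", 0.702998)]:
    r2 = r2_single_predictor(beta)
    print(f"{label}: R2={r2:.3f}, adj. R2={adjusted_r2(r2, N, 1):.3f}, f2={cohens_f2(r2):.3f}")
# Learning styles -> VR: R2=0.247, adj. R2=0.216, f2=0.329
# VR -> Performance: R2=0.494, adj. R2=0.473, f2=0.977
```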

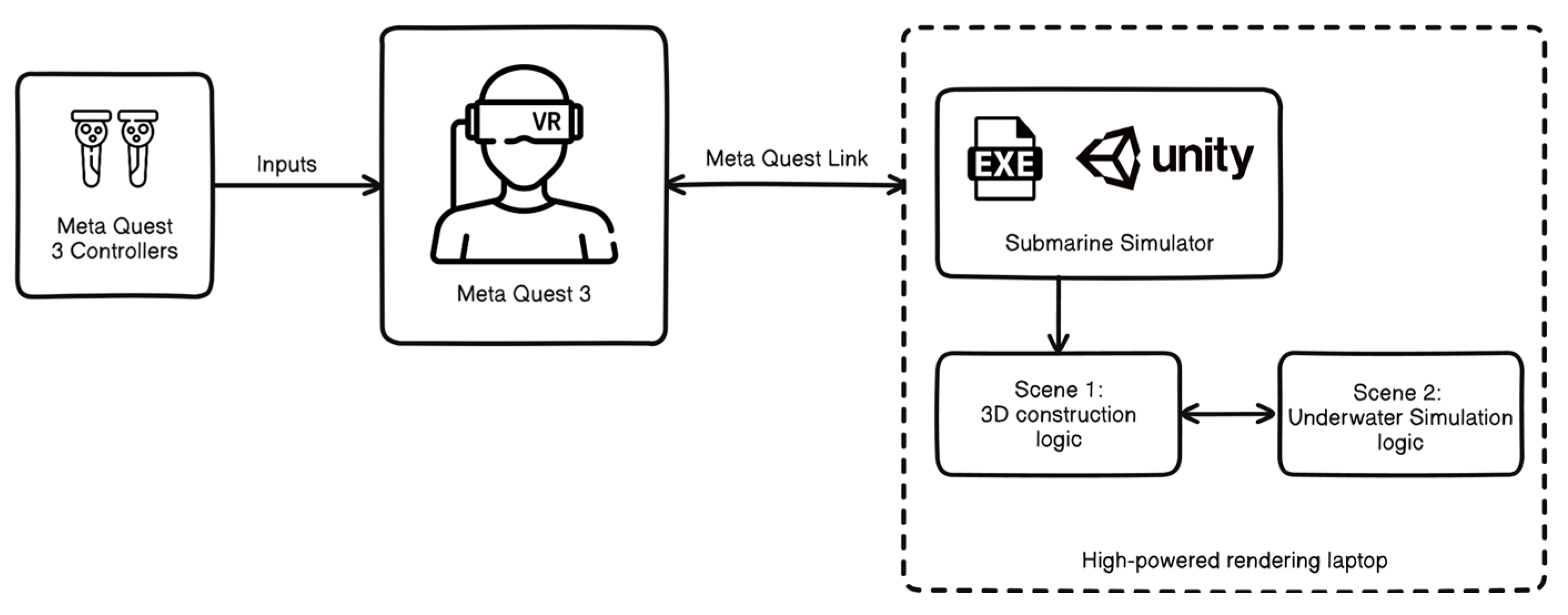
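
The reliability and validity criteria listed above follow standard formulas. The sketch below is a plain-Python illustration of those formulas, not SmartPLS’s implementation:

- Cronbach’s α from raw item scores.
- Composite reliability and AVE from standardized outer loadings.
- The Fornell–Larcker check.
- VIF from the R² of regressing one indicator on the others.

The usage lines take the performance construct’s AVE (0.513) and its correlations with learning styles and VR (0.289629 and 0.702998) from the results tables. All function names are hypothetical.

```python
import math
from statistics import variance


def cronbach_alpha(items):
    """items: k lists of item scores, each holding one score per respondent."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's summed score
    item_variance = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


def composite_reliability(loadings):
    """Composite reliability (rho_c) from standardized outer loadings."""
    total = sum(loadings)
    error_variance = sum(1 - loading ** 2 for loading in loadings)
    return total ** 2 / (total ** 2 + error_variance)


def average_variance_extracted(loadings):
    """AVE: mean squared standardized loading (> 0.50 suggests convergent validity)."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)


def fornell_larcker_ok(ave, correlations_with_other_constructs):
    """True if sqrt(AVE) exceeds every correlation with another construct."""
    root_ave = math.sqrt(ave)
    return all(abs(r) < root_ave for r in correlations_with_other_constructs)


def vif(r2_on_other_indicators):
    """VIF = 1 / (1 - R^2) from regressing one indicator on the others."""
    return 1 / (1 - r2_on_other_indicators)


# Performance construct, values taken from the results tables.
print(round(math.sqrt(0.513), 3))  # 0.716
print(fornell_larcker_ok(0.513, [0.289629, 0.702998]))  # True
```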

3.6. Technical Setup and Architecture

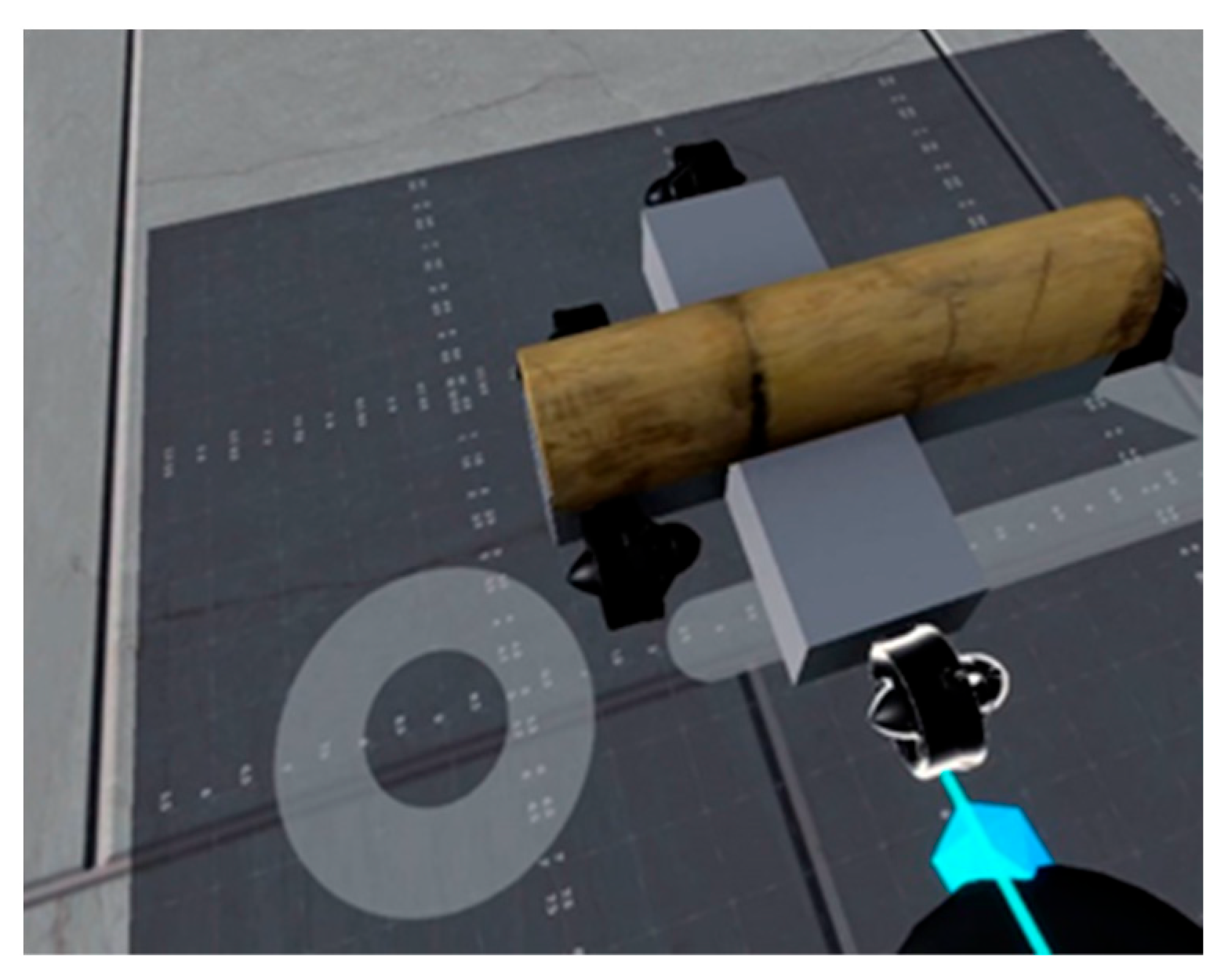

- Inserting, manipulating, and duplicating 3D objects using VR controllers;

- Scaling 3D objects proportionally, by mirroring, or in free form, enabling users to optimize the dimensions of all submarine components;

- Snapping mechanisms (directly onto an object face or at an angle) to facilitate combining different shapes into an ensemble;

- Activating vertical and horizontal guides to enhance construction accuracy;

- Symmetrical construction modes, in which modifications made relative to any plane (OX/OY/OZ) are automatically mirrored on the opposite side.
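
The symmetrical construction mode and angle snapping can each be illustrated with a small geometric operation. This is a hypothetical sketch, not the application’s code. It reflects a point across an axis-aligned plane (the plane perpendicular to the chosen axis at a given offset) and rounds a rotation angle to a fixed step. The 15° default step and all names are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def mirror(p: Vec3, axis: str, plane_offset: float = 0.0) -> Vec3:
    """Reflect p across the plane perpendicular to axis ('x', 'y' or 'z') at plane_offset."""
    if axis == "x":
        return Vec3(2 * plane_offset - p.x, p.y, p.z)
    if axis == "y":
        return Vec3(p.x, 2 * plane_offset - p.y, p.z)
    if axis == "z":
        return Vec3(p.x, p.y, 2 * plane_offset - p.z)
    raise ValueError(f"unknown axis: {axis!r}")


def snap_angle(angle_deg: float, step_deg: float = 15.0) -> float:
    """Round an angle to the nearest multiple of step_deg (exact ties round to even)."""
    return round(angle_deg / step_deg) * step_deg


# Editing a vertex on one side produces its mirrored twin on the other side.
vertex = Vec3(1.0, 2.0, 3.0)
print(mirror(vertex, "x"))  # Vec3(x=-1.0, y=2.0, z=3.0)
print(snap_angle(37.0))  # 30.0
```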

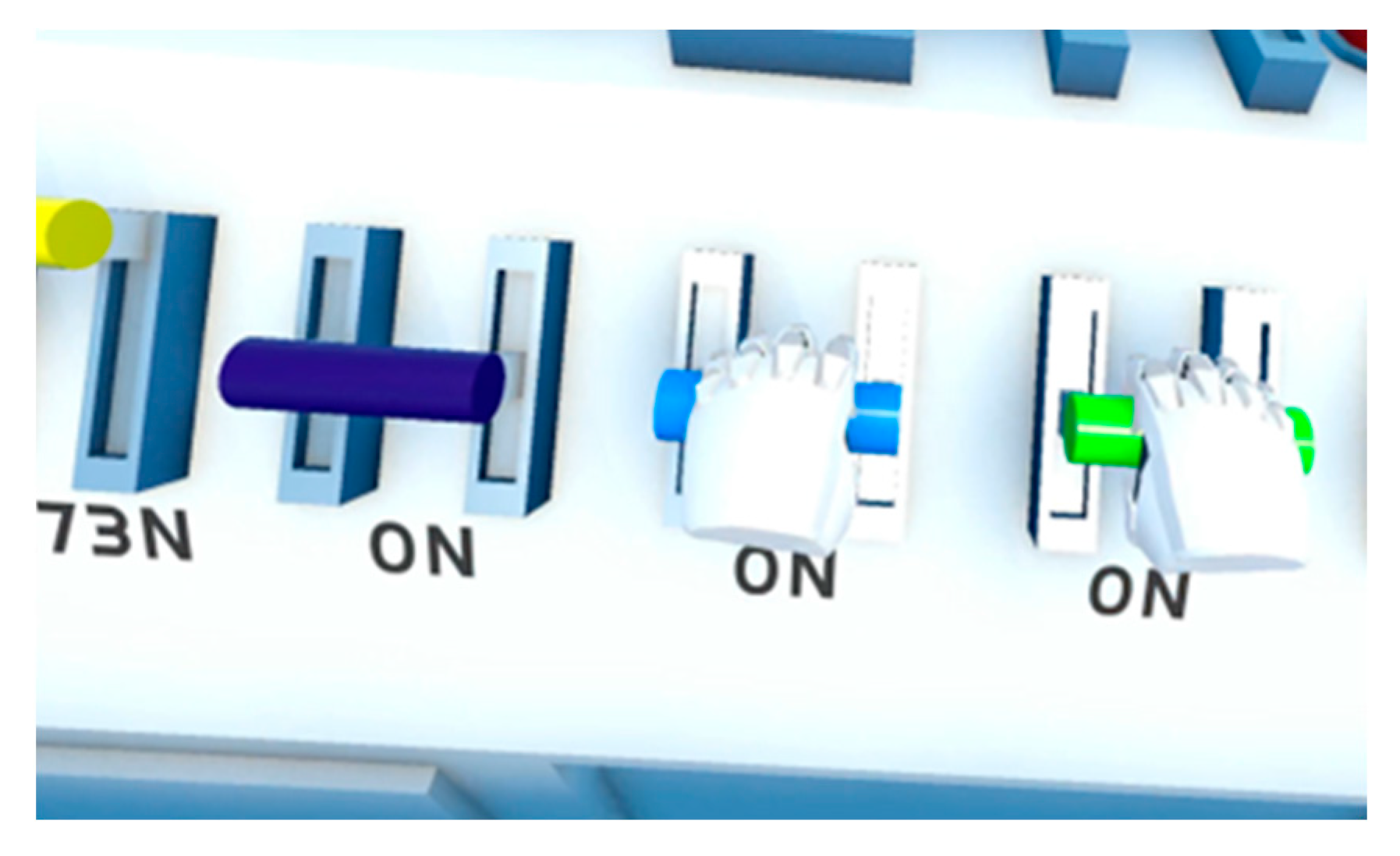

- Activation and deactivation of thrusters to accelerate the model in a given direction;

- Application of custom-built physics simulations to visualize the model’s behavior under submerged conditions;

- Rendering of a green trajectory trace to visualize the model’s path;

- Toggling between third-person and first-person perspectives;

- Restarting the underwater simulation by re-immersing the submarine model;

- Transitioning back to the construction scene for iterative refinements.
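
The custom physics is not detailed here. Purely as an illustrative assumption, the floatability behavior that participants test can be approximated with Archimedes’ principle: buoyant force = fluid density × g × displaced volume. The sketch integrates this with a semi-implicit Euler step and linear drag. The seawater density, drag coefficient, time step, and function names are hypothetical values chosen for illustration, not the authors’ simulation.

```python
G = 9.81  # gravitational acceleration, m/s^2
SEAWATER_DENSITY = 1025.0  # kg/m^3, a typical value (assumption)


def net_vertical_force(mass_kg, displaced_volume_m3, fluid_density=SEAWATER_DENSITY):
    """Buoyancy minus weight, in newtons; positive means the model rises."""
    buoyancy = fluid_density * G * displaced_volume_m3
    weight = mass_kg * G
    return buoyancy - weight


def simulate_depth(mass_kg, displaced_volume_m3, steps=500, dt=0.02, drag_coeff=2.0):
    """Integrate vertical motion; returns (height change in m, final velocity in m/s)."""
    height, velocity = 0.0, 0.0
    for _ in range(steps):
        force = net_vertical_force(mass_kg, displaced_volume_m3) - drag_coeff * velocity
        velocity += force / mass_kg * dt  # semi-implicit Euler: update velocity first
        height += velocity * dt  # then position, using the new velocity
    return height, velocity


print(net_vertical_force(1.5, 0.002))  # about 5.4 N: this model floats upward
print(net_vertical_force(3.0, 0.002))  # negative: this model sinks
```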

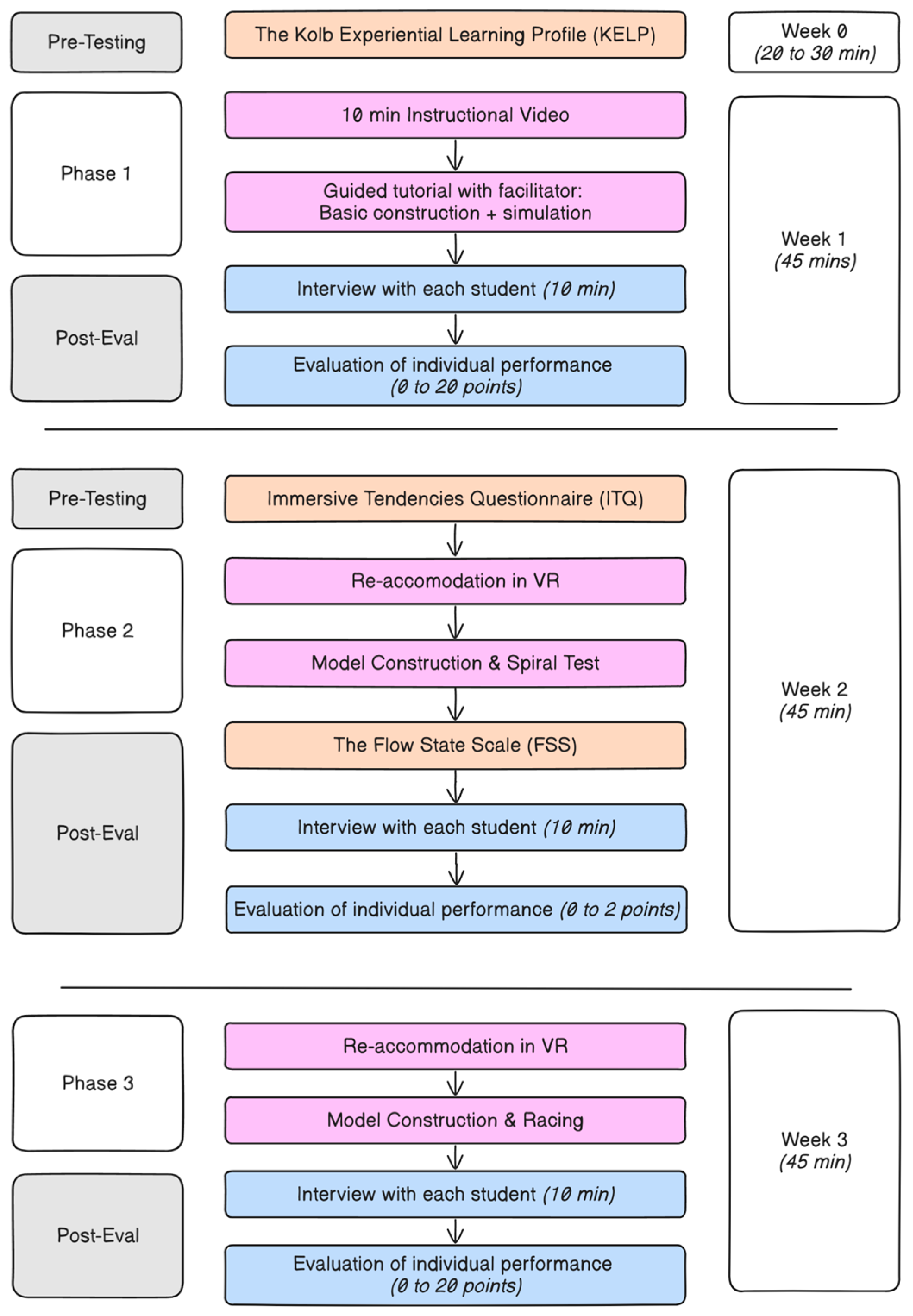

3.7. Research Design

4. Results

4.1. Path Analyses

4.2. Covariance Analysis of Latent Constructs

4.3. Construct Reliability and Validity

4.4. Discriminant Validity

4.5. Model Fit

4.6. Bootstrapping Path Coefficients

5. Discussion

6. Conclusions

Limitations

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

- Concrete Experience (CE)—Learners immerse themselves fully, openly, and without preconceived biases in novel experiences, emphasizing direct involvement and sensory engagement;

- Reflective Observation (RO)—They contemplate and examine these experiences from diverse viewpoints, fostering introspection and nuanced understanding;

- Abstract Conceptualization (AC)—They synthesize observations into coherent concepts, forming logically robust theories that explain patterns and relationships;

- Active Experimentation (AE)—They apply these theories practically to inform decision-making and address real-world problems, testing ideas through action.

- Experiencing: Thrives on hands-on engagement, relying on intuition and emotional connection to immerse fully in new experiences;

- Imagining: Combines creativity and reflection, brainstorming innovative ideas by exploring diverse perspectives with empathy;

- Reflecting: Focuses on deep observation, analyzing experiences from multiple angles to uncover patterns and insights;

- Analyzing: Excels at systematic analysis, organizing observations into structured, logical frameworks;

- Thinking: Prioritizes logical reasoning, developing precise, theory-driven solutions through objective analysis;

- Deciding: Focuses on practical problem-solving, using theories to make informed decisions and achieve measurable outcomes;

- Acting: Thrives in dynamic settings, implementing ideas and adapting quickly to achieve tangible results;

- Initiating: Proactively embraces new challenges, combining risk-taking with enthusiasm to explore innovative solutions;

- Balancing: Adapts flexibly across all learning cycle stages, seamlessly integrating experiencing, reflecting, theorizing, and acting.

- ACCE (Abstract Conceptualization minus Concrete Experience): This represents the “perceiving” dimension, measuring an individual’s preference for abstract thinking versus concrete feeling when grasping new information. A positive ACCE score indicates a stronger inclination toward AC—favoring logical analysis, theoretical models, and objective reasoning (e.g., conceptualizing principles like buoyancy in a submarine simulation). A negative score leans toward CE, emphasizing tangible, hands-on experiences and intuitive, relational approaches (e.g., immersing in sensory feedback from the VR environment). This dimension highlights how learners prefer to initially encounter and internalize experiences, with balanced scores suggesting flexibility between the two.

- AERO (Active Experimentation minus Reflective Observation): This is the “processing” dimension, assessing the preference for active doing versus reflective watching when transforming experiences into knowledge. A positive AERO score points to a bias toward AE—prioritizing practical application, experimentation, and risk-taking to test ideas (e.g., iteratively redesigning a submarine model and running tests). A negative score favors RO, focusing on careful observation, contemplation, and diverse viewpoints before acting (e.g., reviewing simulation outcomes to understand failures). Like ACCE, this dimension underscores processing strategies, and neutral scores indicate adaptability.
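
The two dimension scores follow directly from the four mode scores. The sketch below computes them and gives only a coarse, sign-based reading. It does not reproduce the KELP’s nine-style classification, whose cut-off points are not given here. The class and function names are hypothetical. Because the mean is linear, the Appendix B mode means yield the reported mean dimension scores: ACCE = 35.1 − 20.0 = 15.1 and AERO = 27.3 − 26.6 = 0.7. The same does not hold for medians or quartiles.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KolbModeScores:
    ce: float  # Concrete Experience
    ro: float  # Reflective Observation
    ac: float  # Abstract Conceptualization
    ae: float  # Active Experimentation

    @property
    def acce(self) -> float:
        """Perceiving dimension: AC minus CE (positive leans abstract)."""
        return self.ac - self.ce

    @property
    def aero(self) -> float:
        """Processing dimension: AE minus RO (positive leans active)."""
        return self.ae - self.ro


def _lean(score, positive, negative, tolerance):
    if score > tolerance:
        return positive
    if score < -tolerance:
        return negative
    return "balanced"


def describe(scores, tolerance=0.0):
    """Coarse, sign-based reading of ACCE and AERO (not the KELP nine-style mapping)."""
    return (
        _lean(scores.acce, "abstract (AC)", "concrete (CE)", tolerance),
        _lean(scores.aero, "active (AE)", "reflective (RO)", tolerance),
    )


group_means = KolbModeScores(ce=20.0, ro=26.6, ac=35.1, ae=27.3)
print(round(group_means.acce, 1), round(group_means.aero, 1))  # 15.1 0.7
print(describe(group_means))  # ('abstract (AC)', 'active (AE)')
```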

Appendix B

Descriptive Statistics of Learning Styles and Dimensions Within Target Group

| Dimension | Mean | Std Dev | Min | 25% | Median | 75% | Max |
|---|---|---|---|---|---|---|---|
| CE (Concrete Experience) | 20.0 | 6.7 | 11 | 16.0 | 17.0 | 22.0 | 37 |
| RO (Reflective Observation) | 26.6 | 5.5 | 16 | 22.3 | 26.5 | 29.5 | 39 |
| AC (Abstract Conceptualization) | 35.1 | 5.9 | 24 | 30.3 | 36.0 | 39.0 | 44 |
| AE (Active Experimentation) | 27.3 | 5.9 | 14 | 25.0 | 27.0 | 29.0 | 41 |
| ACCE (AC − CE) | 15.1 | 8.5 | −9 | 11.0 | 16.5 | 20.0 | 27 |
| AERO (AE − RO) | 0.7 | 8.8 | −15 | −5.8 | 0.0 | 8.3 | 15 |

| Main Style | Count | Percentage |
|---|---|---|
| Analyzing | 10 | 38.5% |
| Thinking | 6 | 23.1% |
| Acting | 3 | 11.5% |
| Reflecting | 2 | 7.7% |
| Balancing | 2 | 7.7% |
| Initiating | 2 | 7.7% |
| Deciding | 1 | 3.8% |

| Flex | Count | Percentage of Total Mentions |
|---|---|---|
| Balancing | 20 | 19.8% |
| Reflecting | 19 | 18.8% |
| Thinking | 13 | 12.9% |
| Analyzing | 11 | 10.9% |
| Imagining | 11 | 10.9% |
| Deciding | 10 | 9.9% |
| Experiencing | 7 | 6.9% |
| Acting | 7 | 6.9% |

| User ID | Main Learning Style | Flow Result (FSS) | Immersive Result (ITQ) | BANG Result (Satisfaction) | Learning Outcome Phase 1 | Learning Outcome Phase 2 | Learning Outcome Phase 3 |
|---|---|---|---|---|---|---|---|
| 290 | Thinking | 3.778 | 4.071 | 15 | 20 | 2 | 12 |
| 709 | Acting | 4.028 | 3.429 | 17 | 19 | 2 | 11 |
| 227 | Analyzing | 4.528 | 4.286 | 14.5 | 20 | 2 | 10 |
| 512 | Initiating | 4.222 | 3.857 | 17 | 19 | 2 | 8 |
| 177 | Balancing | 4.389 | 3.893 | 16 | 17 | 2 | 4 |
| 443 | Balancing | 4.194 | 3.929 | 18.5 | 18 | 2 | 3 |
| 187 | Initiating | 3.972 | 4.214 | 15.5 | 17 | 2 | 2 |
| 159 | Thinking | 4.333 | 4.179 | 12 | 17 | 2 | 2 |
| 830 | Analyzing | 4.194 | 3.571 | 17.5 | 17 | 2 | 0 |
| 670 | Thinking | 3.778 | 4.464 | 17.5 | 20 | 2 | 0 |
| 590 | Analyzing | 3.556 | 4.071 | 17.5 | 18 | 2 | 0 |
| 595 | Analyzing | 3.361 | 4.036 | 16 | 19 | 1 | 5 |
| 682 | Deciding | 3.972 | 4.071 | 15.5 | 16 | 1 | 3 |
| 341 | Reflecting | 4.361 | 4.536 | 15.5 | 19 | 1 | 2 |
| 411 | Thinking | 3.861 | 3.893 | 15 | 18 | 1 | 2 |
| 840 | Analyzing | 3.194 | 3.679 | 16.5 | 15 | 1 | 2 |
| 388 | Analyzing | 3.972 | 3.857 | 16 | 18 | 1 | 1 |
| 723 | Analyzing | 4.111 | 4.143 | 14.5 | 17 | 1 | 0 |
| 985 | Reflecting | 2.528 | 3.786 | 18.5 | 17 | 1 | 0 |
| 779 | Thinking | 3.361 | 4.179 | 20 | 18 | 1 | 0 |
| 298 | Acting | 3.333 | 4.286 | 13 | 18 | 1 | 0 |
| 484 | Acting | 4.528 | 3.679 | 16.5 | 16 | 1 | 0 |
| 770 | Analyzing | 3.222 | 4.036 | 15.5 | 16 | 1 | 0 |
| 955 | Thinking | 3.722 | 4.179 | 15.5 | 15 | 1 | 0 |
| 642 | Analyzing | 2.500 | 3.750 | 13 | 14 | 1 | 0 |
| 256 | Analyzing | 3.778 | 3.500 | 17 | 15 | 0 | 4 |

References

- Ghazali, A.K.; Aziz, N.A.A.; Aziz, K.A.; Kian, N.T. The usage of virtual reality in engineering education. Cogent Educ. 2024, 11, 2319441.

- Wong, J.Y.; Azam, A.B.; Cao, Q.; Huang, L.; Xie, Y.; Winkler, I.; Cai, Y. Evaluations of Virtual and Augmented Reality Technology-Enhanced Learning for Higher Education. Electronics 2024, 13, 1549.

- Conesa, J.; Martínez, A.; Mula, F.; Contero, M. Learning by Doing in VR: A User-Centric Evaluation of Lathe Operation Training. Electronics 2024, 13, 2549.

- Bano, F.; Alomar, M.A.; Alotaibi, F.M.; Serbaya, S.H.; Rizwan, A.; Hasan, F. Leveraging Virtual Reality in Engineering Education to Optimize Manufacturing Sustainability in Industry 4.0. Sustainability 2024, 16, 7927.

- Manolis, C.; Burns, D.J.; Assudani, R.; Chinta, R. Assessing experiential learning styles: A methodological reconstruction and validation of the Kolb Learning Style Inventory. Learn. Individ. Differ. 2013, 23, 44–52.

- Poirier, L.; Ally, M. Considering Learning Styles When Designing for Emerging Learning Technologies. In Emerging Technologies and Pedagogies in the Curriculum; Yu, S., Ally, M., Tsinakos, A., Eds.; Bridging Human and Machine: Future Education with Intelligence; Springer: Singapore, 2020.

- Whitman, G.M. Learning Styles: Lack of Research-Based Evidence. Clear. House: A J. Educ. Strateg. Issues Ideas 2023, 96, 111–115.

- Huang, C.L.; Luo, Y.F.; Yang, S.C.; Lu, C.M.; Chen, A.S. Influence of Students’ Learning Style, Sense of Presence, and Cognitive Load on Learning Outcomes in an Immersive Virtual Reality Learning Environment. J. Educ. Comput. Res. 2019, 58, 596–615.

- Hauptman, H.; Cohen, A. The synergetic effect of learning styles on the interaction between virtual environments and the enhancement of spatial thinking. Comput. Educ. 2011, 57, 2106–2117.

- Chen, C.J.; Seong, C.T.; Wan, M.F.; Wan, I. Are Learning Styles Relevant to Virtual Reality? J. Res. Technol. Educ. 2005, 38, 123–141.

- Pedram, S.; Howard, S.; Kencevski, K.; Perez, P. Investigating the Relationship Between Students’ Preferred Learning Style on Their Learning Experience in Virtual Reality (VR) Learning Environment. In Human Interaction and Emerging Technologies, Proceedings of the International Conference on Human Interaction and Emerging Technologies (IHIET 2019), Nice, France, 22–24 August 2019; Ahram, T., Taiar, R., Colson, S., Choplin, A., Eds.; Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2020; Volume 1018.

- Kampling, H. The Role of Immersive Virtual Reality in Individual Learning. In Proceedings of the Hawaii International Conference on System Sciences, Hilton Waikoloa Village, HI, USA, 2–6 January 2018.

- Lin, Y.; Wang, S. The Study and Application of Adaptive Learning Method Based on Virtual Reality for Engineering Education. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2019; Volume 1015, pp. 301–308.

- Cao, L.; He, M.; Wang, H. Effects of Attention Level and Learning Style Based on Electroencephalo-Graph Analysis for Learning Behavior in Immersive Virtual Reality. IEEE Access 2023, 11, 53429–53438.

- Gyed, F.A.S.; Tehseen, M.; Tariq, S.; Muhammad, A.K.; Yazeed, Y.G.; Habib, H. Integrating educational theories with virtual reality: Enhancing engineering education and VR laboratories. Soc. Sci. Humanit. Open 2024, 10, 101207.

- Hassan, L.; Jylhä, H.; Sjöblom, M.; Hamari, J. Flow in VR: A Study on the Relationships Between Preconditions, Experience and Continued Use. In Proceedings of the Hawaii International Conference on System Sciences, Maui, HI, USA, 7–10 January 2020.

- Rutrecht, H.; Wittmann, M.; Khoshnoud, S.; Igarzábal, F.A. Time Speeds Up During Flow States: A Study in Virtual Reality with the Video Game Thumper. Timing Time Percept. 2021, 9, 353–376.

- Guerra-Tamez, C.R. The Impact of Immersion through Virtual Reality in the Learning Experiences of Art and Design Students: The Mediating Effect of the Flow Experience. Educ. Sci. 2023, 13, 185.

- Mütterlein, J. The Three Pillars of Virtual Reality? Investigating the Roles of Immersion, Presence, and Interactivity. In Proceedings of the Hawaii International Conference on System Sciences, Hilton Waikoloa Village, HI, USA, 2–6 January 2018. Available online: http://hdl.handle.net/10125/50061 (accessed on 22 July 2025).

- Zaharias, P.; Andreou, I.; Vosinakis, S. Educational Virtual Worlds, Learning Styles and Learning Effectiveness: An Empirical Investigation. 2010. Available online: https://api.semanticscholar.org/CorpusID:14092908 (accessed on 22 July 2025).

- Horváth, I. An Analysis of Personalized Learning Opportunities in 3D VR. Front. Comput. Sci. 2021, 3, 673826.

- Majgaard, G.; Weitze, C. Virtual Experiential Learning, Learning Design and Interaction in Extended Reality Simulations. In Proceedings of the 14th European Conference on Games Based Learning ECGBL 2020, Virtual, 24–25 September 2020. Available online: https://www.researchgate.net/publication/344412172_Virtual_Experiential_Learning_Learning_Design_and_Interaction_in_Extended_Reality_Simulations (accessed on 5 March 2025).

- Konak, A.; Clark, T.K.; Nasereddin, M. Using Kolb’s Experiential Learning Cycle to improve student learning in virtual computer laboratories. Comput. Educ. 2014, 72, 11–22.

- Kolb, D. The Kolb Experiential Learning Profile a Guide to Experiential Learning Theory, KELP Psychometrics and Research on Validity. The Kolb Experiential Learning Profile (KELP) Tech Guide. 2021. Available online: https://www.academia.edu/87570273/The_Kolb_Experiential_Learning_Profile_A_Guide_to_Experiential_Learning_Theory_KELP_Psychometrics_and_Research_on_Validity (accessed on 22 July 2025).

- Jackson, S.A.; Marsh, H. Development and validation of a scale to measure optimal experience: The Flow State Scale. J. Sport Exerc. Psychol. 1996, 18, 17–35.

- Witmer, B.G.; Singer, M.J. Measuring Presence in Virtual Environments: A Presence Questionnaire. Presence 1998, 7, 225–240. Available online: https://cs.uky.edu/~sgware/reading/papers/witmer1998measuring.pdf (accessed on 6 March 2024).

- Ballou, N.; Denisova, A.; Ryan, R.; Scott Rigby, C.; Deterding, S. The Basic Needs in Games Scale (BANGS): A new tool for investigating positive and negative video game experiences. Int. J. Hum.-Comput. Stud. 2024, 188, 103289.

- Ringle, C.M.; Wende, S.; Becker, J.-M. SmartPLS 3; SmartPLS GmbH: Boenningstedt, Germany, 2015. Available online: http://www.smartpls.com (accessed on 5 March 2025).

- Hair, J.F.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM), 3rd ed.; Sage: Thousand Oaks, CA, USA, 2022.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Routledge: Oxford, UK, 1988.

- Sarstedt, M.; Ringle, C.M.; Hair, J.F. Partial Least Squares Structural Equation Modeling. In Handbook of Market Research; Homburg, C., Klarmann, M., Vomberg, A.E., Eds.; Springer: Cham, Switzerland, 2021.

- Van Laar, S.; Braeken, J. Caught off Base: A Note on the Interpretation of Incremental Fit Indices. Struct. Equ. Model. A Multidiscip. J. 2022, 29, 935–943.

| Kolb’s Learning Stage | Learning Objectives and Cognitive Tasks |
|---|---|
| Concrete Experience (CE) | Learning Objective: |
| Reflective Observation (RO) | Learning Objective: |
| Abstract Conceptualization (AC) | Learning Objective: |
| Active Experimentation (AE) | Learning Objective: |

| Chapter 1: Building Mode |

| |

| Chapter 2: Floatability Testing and Underwater Navigation |

| |

| Chapter 3: Exploratory Learning |

| |

| | Learning Styles | Performance | VR |
|---|---|---|---|
| Learning styles | 1.000 | 0.290 | 0.497 |
| Performance | 0.290 | 1.000 | 0.703 |
| VR | 0.497 | 0.703 | 1.000 |

| Dependent Construct | f² (Predictor: VR) | f² (Predictor: Learning Styles) | f² (Predictor: Performance) | R² | R² Adjusted |
|---|---|---|---|---|---|
| VR | - | 0.329 | - | 0.247 | 0.216 |
| Learning styles | - | - | - | - | - |
| Performance | 0.977 | - | - | 0.494 | 0.473 |

| Variables | Cronbach’s Alpha | rho_A | Composite Reliability | AVE |
|---|---|---|---|---|
| Learning styles | - | 1.000 | - | - |
| Performance | 0.741 | 0.831 | 0.738 | 0.513 |
| VR | - | 1.000 | - | - |

| Variables | Learning Styles | Performance |
|---|---|---|
| Learning styles | - | - |
| Performance | 0.289629 | 0.716043 |
| VR | 0.49748 | 0.702998 |

| Variables | VIF |
|---|---|
| Abstract Conceptualization | 2.644784 |
| Active Experimentation | 3.273493 |
| Concrete Experience | 2.00095 |
| Reflective Observation | 2.122368 |
| Style | 1.127902 |
| Flow | 1.201899 |
| Immersion | 1.173842 |
| Mindset | 1.314606 |
| Satisfaction | 1.07815 |
| Points1 | 1.684684 |
| Points2 | 1.450584 |
| Points3 | 1.416396 |

| | Saturated Model | Estimated Model |
|---|---|---|
| SRMR | 0.096 | 0.096 |
| d_ULS | 0.724 | 0.730 |
| d_G | 0.449 | 0.454 |
| Chi-Square | 44.431 | 44.725 |

| Path | Original Sample (O) | Sample Mean (M) | Standard Deviation (STDEV) | T Statistics (\|O/STDEV\|) | p Values |
|---|---|---|---|---|---|
| Learning styles -> VR | 0.497 | 0.708 | 0.183 | 2.723 | 0.007 |
| VR -> Performance | 0.703 | - | - | - | - |
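
In this table, the t statistic is the original estimate divided by its bootstrap standard deviation. From the displayed values, 0.497 / 0.183 ≈ 2.72; the small gap to 2.723 reflects rounding. The sketch below illustrates the procedure in plain Python for a single-predictor path, where the standardized coefficient equals the correlation of the two composite scores. It is a simplified stand-in, not SmartPLS’s bootstrapping routine, which re-estimates the full PLS model on each resample. The two-sided p value uses a normal approximation, which is an assumption, and all names are hypothetical.

```python
import math
import random
from statistics import mean, stdev


def pearson(x, y):
    """Pearson correlation; equals the standardized slope for one predictor."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_and_p(original, boot_sd):
    """t = |O / STDEV|; two-sided p from the standard normal (assumption)."""
    t = abs(original / boot_sd)
    return t, math.erfc(t / math.sqrt(2))


def bootstrap_path(x, y, n_boot=5000, seed=42):
    """Resample cases with replacement and summarize the coefficient's distribution."""
    rng = random.Random(seed)
    n = len(x)
    original = pearson(x, y)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        try:
            estimates.append(pearson([x[i] for i in idx], [y[i] for i in idx]))
        except ZeroDivisionError:
            continue  # skip degenerate resamples where a variable has zero variance
    boot_sd = stdev(estimates)
    t, p = t_and_p(original, boot_sd)
    return {"O": original, "M": mean(estimates), "STDEV": boot_sd, "t": t, "p": p}


# Check against the reported row: O = 0.497, STDEV = 0.183.
t, p = t_and_p(0.497, 0.183)
print(f"t = {t:.2f}, p = {p:.3f}")  # t = 2.72, p = 0.007
```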

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Stănescu, A.-B.; Travadel, S.; Rughiniș, R.-V.; Bucea-Manea-Țoniș, R. Modeling Learning Outcomes in Virtual Reality Through Cognitive Factors: A Case Study on Underwater Engineering. Electronics 2025, 14, 3369. https://doi.org/10.3390/electronics14173369
