Machine Vision in Human-Centric Manufacturing: A Review from the Perspective of the Frozen Dough Industry

Abstract

1. Introduction

2. Background and Related Work

2.1. Human-Aided Quality Control and Process Monitoring

2.2. Safety and Risk Prevention

2.3. Ergonomics and Operator Assistance

2.4. Integration into Cyber-Physical Systems

2.5. Tactile Sensing and Multimodal Perception

2.6. Challenges of Vision in Food Manufacturing

2.7. Research Gap in Real-World, Human-Centric Applications

3. Review Strategy and Sources

- What is the role of machine vision in enhancing human–machine collaboration within the frozen dough manufacturing environment?

- What are the applications of machine vision that can contribute to improved safety and productivity in human-centric factories?

3.1. Stage I—Review Design

3.1.1. Phase 0—Identifying the Need for the Review

3.1.2. Phase 1—Formulating the Review Proposal

3.1.3. Phase 2—Developing the Review Protocol

3.2. Stage II—Conducting the Review

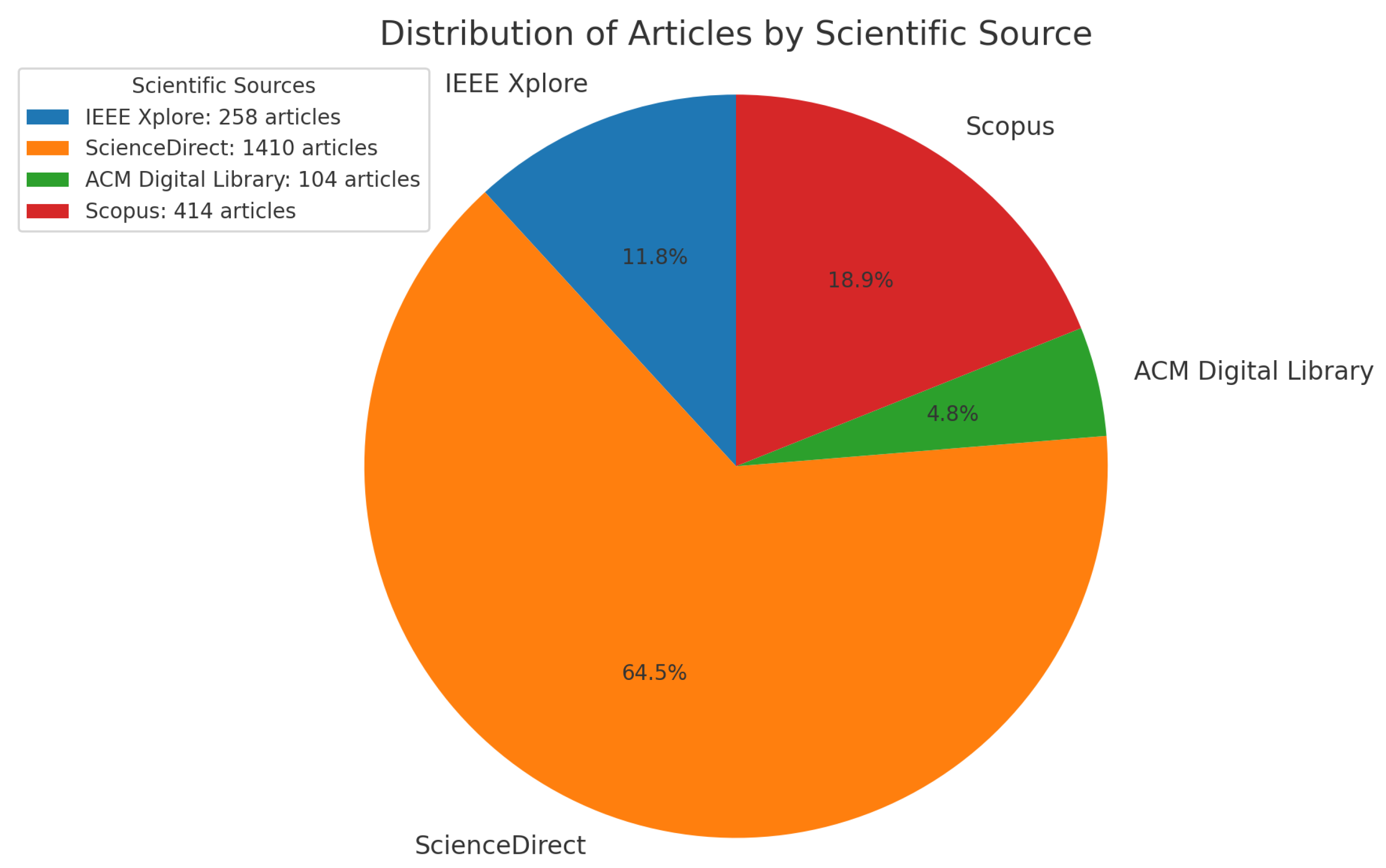

3.2.1. Phase 3—Research Identification

3.2.2. Phase 4—Study Selection

- Topical relevance: Articles had to focus on or be directly related to the role of computer vision in human-centric frozen dough manufacturing environments.

- Publication timeframe: Only studies published between 2017 and 2024 were selected, to ensure up-to-date findings.

- Language: Only English-language publications were included to ensure consistency and comprehensibility in the review process.

- Full-text availability: Only articles with full-text access were included, to allow in-depth reading and analysis.
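The four inclusion criteria above can be expressed as a simple screening filter. The sketch below is illustrative only. The `Record` fields are hypothetical, and the keyword check is an assumed stand-in for topical relevance, which in a review like this one is judged by reading rather than by keyword matching.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical bibliographic record; field names are illustrative."""
    title: str
    year: int
    language: str
    full_text_available: bool
    keywords: frozenset[str]


# Assumed keyword proxy for topical relevance (not the review's actual procedure).
RELEVANCE_TERMS = frozenset({"machine vision", "computer vision"})


def passes_screening(rec: Record, start: int = 2017, end: int = 2024) -> bool:
    """Apply the four inclusion criteria: relevance, timeframe, language, access."""
    relevant = bool(rec.keywords & RELEVANCE_TERMS)
    in_window = start <= rec.year <= end  # inclusive publication window
    english = rec.language.lower() == "english"
    return relevant and in_window and english and rec.full_text_available


def screen(records: list[Record]) -> list[Record]:
    """Keep only records that satisfy every criterion, preserving input order."""
    return [r for r in records if passes_screening(r)]
```

Encoding the criteria as one predicate keeps the screening decision for each record reproducible and easy to audit.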

3.2.3. Phase 5—Quality Assessment

3.2.4. Phase 6—Data Extraction and Monitoring

3.2.5. Phase 7—Data Synthesis

3.3. Stage III—Reporting and Dissemination of Results

3.3.1. Phase 8—Reporting and Recommendations

4. The Frozen Dough Industry

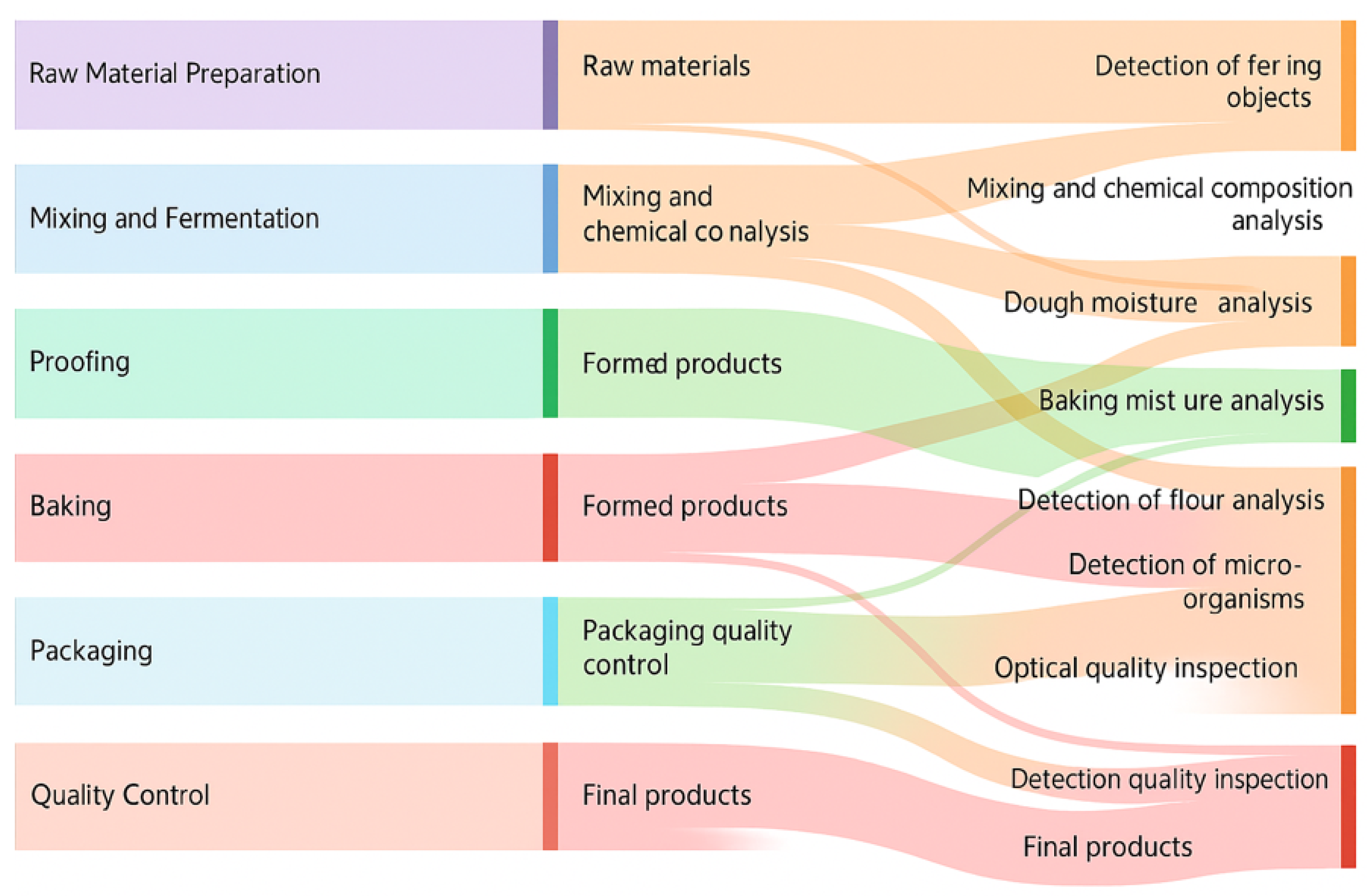

4.1. Machine Vision Techniques in Frozen Dough Production

4.1.1. Raw Material Inspection

4.1.2. Fermentation and Dough Preparation

4.1.3. Product Shaping

4.1.4. Filling and Assembly

4.1.5. Parbaking

4.1.6. Freezing Process

4.1.7. Packaging

4.1.8. Quality Control

5. Results

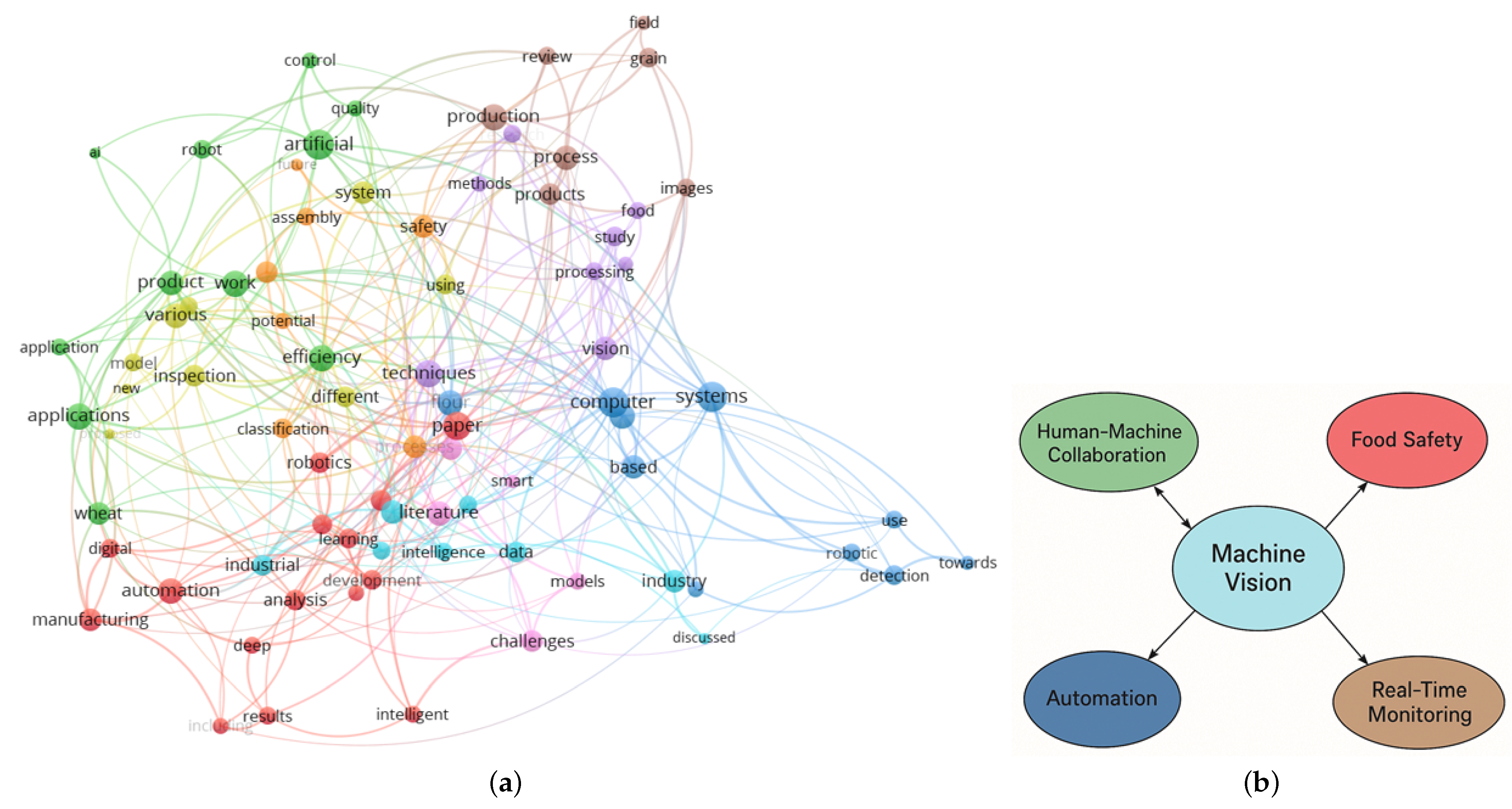

5.1. Trends in Publications on Machine Vision Applications

Bibliometric Analysis

5.2. Research Question 1: What Is the Role of Machine Vision in Enhancing Human–Machine Collaboration in the Frozen Dough Factory?

5.2.1. Human–Machine Collaboration

5.2.2. Automated Task Support

5.2.3. Impact on Worker Skills

5.2.4. Development of Collaborative Solutions

5.2.5. The Role of Real-Time Feedback

5.3. Research Question 2: What Are the Applications of Machine Vision That Can Enhance Safety and Productivity in Human-Centered Factories?

5.3.1. Enhancing Worker Safety

5.3.2. Improving Productivity

5.4. Business Intelligence and AI for Vision-Driven Decision Support

Case Study Limitation Note

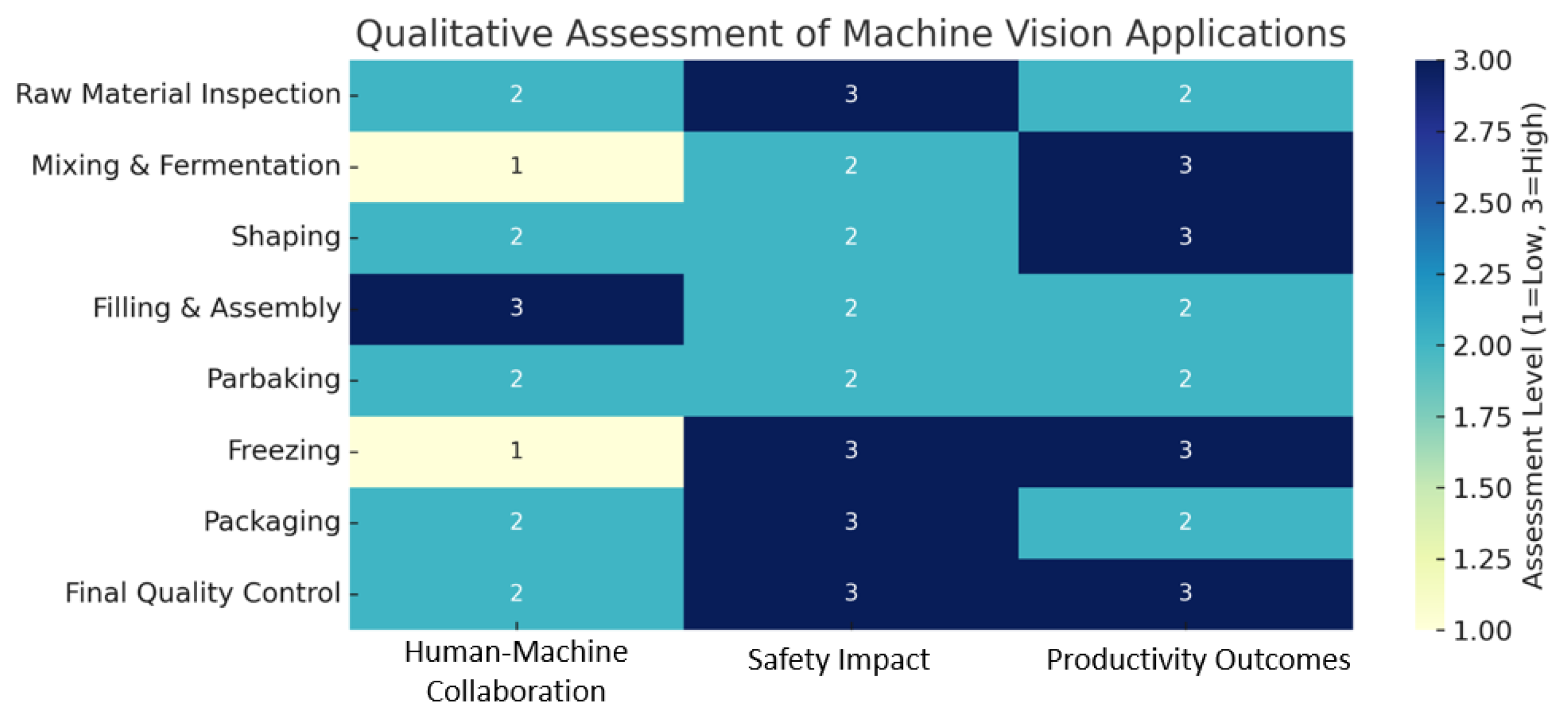

6. Assessment Framework

6.1. Assessment Criteria and Methodology

- Human–Machine Collaboration: the extent to which machine vision technologies enhance the interaction between human operators and automated systems. This includes aspects such as task guidance, ergonomic support, and intuitive feedback mechanisms.

- Safety Impact: the effectiveness of machine vision in reducing operational risks, detecting unsafe behaviors or anomalies, and enhancing real-time safety monitoring throughout the production process.

- Productivity Outcomes: the contribution of vision systems to process efficiency, error reduction, waste minimization, and overall throughput improvement.
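The three criteria above are scored for each production stage described in Section 4.1. The following is a minimal sketch of how such per-stage scores could be aggregated to identify the strongest criterion overall and the weakest stage for each criterion. The criterion keys and the numeric scale (for example 1 to 5) are assumptions, and no values from the review's evaluation are reproduced here.

```python
from statistics import mean

# Hypothetical keys for the three assessment criteria.
CRITERIA = ("collaboration", "safety", "productivity")


def summarize(scores: dict[str, dict[str, float]]) -> dict[str, object]:
    """Aggregate per-stage criterion scores.

    `scores` maps a production stage name to {criterion: score}.
    The scoring scale is an assumption, not taken from the review.
    """
    # Mean score of each criterion across all stages.
    per_criterion = {c: mean(stage[c] for stage in scores.values()) for c in CRITERIA}
    # Criterion with the highest mean, i.e. the strongest impact area.
    strongest = max(per_criterion, key=per_criterion.get)
    # For each criterion, the stage with the lowest score marks an improvement opportunity.
    weakest_stage = {c: min(scores, key=lambda s: scores[s][c]) for c in CRITERIA}
    return {"means": per_criterion, "strongest": strongest, "weakest_stage": weakest_stage}
```

Reporting the weakest stage per criterion mirrors the kind of observation made in the analysis, for example that early stages show low collaborative integration.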

6.2. Evaluation Results

6.3. Analysis and Interpretation

- Safety is the strongest impact area for machine vision, with consistently high scores across stages such as freezing, packaging, and final quality control. These systems are particularly effective in hazard detection, posture monitoring, and foreign object identification.

- Productivity is notably enhanced in stages like mixing & fermentation, shaping, and freezing, where visual feedback and real-time analysis improve consistency and reduce processing errors.

- Human–Machine Collaboration is strongest in tasks that involve dynamic interaction, such as filling & assembly, where adaptive guidance and co-robotic systems are implemented. However, early stages such as raw material inspection or fermentation still exhibit relatively low collaborative integration, indicating opportunities for improvement.
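Hazard detection of the kind mentioned above is often implemented as a zone-intrusion check on detector output. A person bounding box from an object detector (for example a YOLO-family model) is tested against a restricted region, such as the area around a freezer or packaging machine. The sketch below assumes axis-aligned boxes in pixel coordinates and a hypothetical overlap threshold of 0.3. It illustrates a common technique and is not a method reported in the reviewed studies.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box: (x1, y1) is the top-left corner, (x2, y2) the bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        # Clamp to zero so an empty (inverted) intersection has no area.
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def overlap_fraction(person: Box, zone: Box) -> float:
    """Fraction of the person box that lies inside the zone (0.0 to 1.0)."""
    inter = Box(
        max(person.x1, zone.x1), max(person.y1, zone.y1),
        min(person.x2, zone.x2), min(person.y2, zone.y2),
    ).area()
    a = person.area()
    return inter / a if a > 0 else 0.0


def zone_alerts(people: list[Box], zone: Box, threshold: float = 0.3) -> list[int]:
    """Indices of detected people whose overlap with the zone exceeds the threshold."""
    return [i for i, p in enumerate(people) if overlap_fraction(p, zone) > threshold]
```

Measuring overlap relative to the person box, rather than using intersection over union, keeps the alert meaningful when the restricted zone is much larger than a single worker.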

6.4. Summary of Insights

7. Technological Readiness Evaluation Framework

- (1) Algorithmic Robustness: This dimension assesses the reliability, adaptability, and generalizability of the vision algorithm under real-world conditions, including variable lighting, product heterogeneity, and operational disturbances. For instance, deep learning models such as YOLOv8 must demonstrate high accuracy not only in laboratory settings but also on high-speed production lines with fluctuating visual inputs.

- (2) Human–Machine Integration: A crucial factor in human-centric manufacturing is the seamless interaction between operators and intelligent systems. This dimension evaluates how effectively the vision system communicates outputs, enables intervention, and supports explainability. Systems that provide interpretable visual feedback and ergonomic interfaces are rated higher in readiness for Industry 5.0 use cases.

- (3) Infrastructure Compatibility: This refers to the degree of interoperability between the vision system and existing production infrastructure, including programmable logic controllers (PLCs), digital twins, cyber-physical systems (CPS), and industrial IoT platforms. Readiness increases with the ease of integration, modularity, and standard protocol support (e.g., OPC UA, MQTT).

- (4) Ethical and Social Compliance: Technologies in Industry 5.0 must also align with ethical principles, including worker privacy, data transparency, and fairness. This dimension evaluates whether the deployment of machine vision respects workplace norms and safeguards against surveillance misuse. A system’s readiness is contingent on its compliance with legal and ethical frameworks.
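One way to turn the four dimensions above into a single readiness indicator is to combine a weighted mean with a minimum-score gate. Under this rule, a system that is strong in algorithmic robustness but weak on ethical compliance is not rated as deployment-ready. This is a hypothetical sketch. The 0 to 1 scale, the equal default weights, and the 0.4 gate are assumptions for illustration and are not values defined by the framework.

```python
from typing import Optional

# Hypothetical keys for the four readiness dimensions.
DIMENSIONS = (
    "algorithmic_robustness",
    "human_machine_integration",
    "infrastructure_compatibility",
    "ethical_social_compliance",
)


def readiness(scores: dict[str, float],
              weights: Optional[dict[str, float]] = None,
              gate: float = 0.4) -> tuple[float, bool]:
    """Return (weighted readiness score, deployable flag).

    Scores are assumed to lie in [0, 1]. The system is flagged deployable only
    if no single dimension falls below `gate` (a weakest-link rule).
    """
    missing = set(DIMENSIONS) - set(scores)
    if missing:
        raise ValueError(f"missing dimensions: {sorted(missing)}")
    weights = weights or {d: 1.0 for d in DIMENSIONS}  # equal weights by default
    total_w = sum(weights[d] for d in DIMENSIONS)
    score = sum(weights[d] * scores[d] for d in DIMENSIONS) / total_w
    deployable = min(scores[d] for d in DIMENSIONS) >= gate
    return score, deployable
```

The gate prevents a high average from masking a failing dimension, which reflects the statement above that readiness is contingent on legal and ethical compliance.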

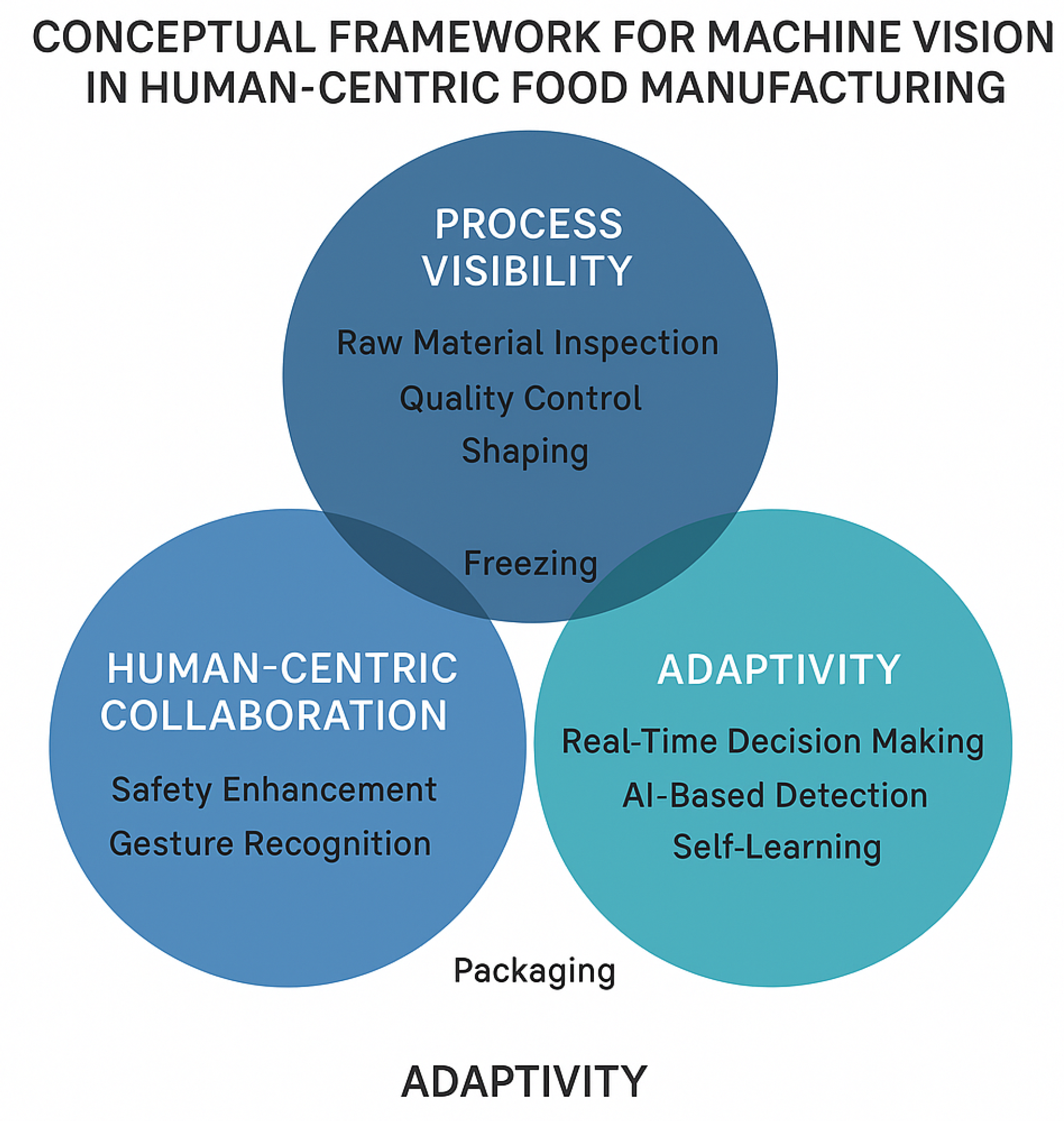

7.1. Conceptual Framework for Vision-Driven Human-Centric Manufacturing

7.2. Limitations and Open Challenges

8. Conclusions and Future Work

- Developing lightweight, ergonomic interfaces for long-term human use,

- Extending the role of digital twins in real-time decision support,

- Exploring the interoperability of vision systems with IoT infrastructures,

- Ensuring cybersecurity in increasingly connected environments.
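Interoperability with IoT infrastructures, including the MQTT support listed under infrastructure compatibility in Section 7, usually begins with a stable, self-describing message format for vision events. The sketch below builds such a payload and topic string using only the standard library. The topic hierarchy and field names are hypothetical. Publishing the message would additionally require an MQTT client library (for example Eclipse Paho), which is outside the scope of this sketch.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def topic_for(site: str, line: str, stage: str) -> str:
    """Build a hypothetical topic of the form site/line/stage/vision/events."""
    for part in (site, line, stage):
        # '/' separates MQTT topic levels; '+' and '#' are wildcards not allowed when publishing.
        if not part or any(ch in part for ch in "/+#"):
            raise ValueError(f"invalid topic level: {part!r}")
    return f"{site}/{line}/{stage}/vision/events"


def vision_event(label: str, confidence: float, bbox: tuple[int, int, int, int],
                 model: str, ts: Optional[datetime] = None) -> str:
    """Serialize one detection as compact JSON (the schema is an assumption)."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    ts = ts or datetime.now(timezone.utc)  # timezone-aware UTC timestamp
    payload = {
        "timestamp": ts.isoformat(),
        "model": model,
        "label": label,
        "confidence": round(confidence, 3),
        "bbox_xyxy": list(bbox),
    }
    return json.dumps(payload, separators=(",", ":"), sort_keys=True)
```

Sorted keys and compact separators produce deterministic messages. This simplifies logging and comparison on the consumer side, such as a digital twin or a dashboard.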

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Catalog of Scientific Studies and Application Overview

| Reference | Category | Focus Area | Methodology | Key Contribution | Application Context |
|---|---|---|---|---|---|
| Zhao et al. (2024) [20] | Smart Production | Vision-Guided Robots | Semi-Supervised Learning | Improved accuracy and efficiency in robot control, reducing response time. | Textile industry for quality inspection and automation. |
| Mandapaka et al. (2023) [67] | Automated Quality Inspection | Product Dimension Analysis | Computer Vision | Presents an automated inspection system for analyzing product dimensions. | Industrial production and quality control. |
| Nadon et al. (2018) [48] | Robotics | Manipulation of Non-Rigid Objects | Systematic Review | Robotics offers solutions for automation, precision, and safety in the food sector. | Automated food processing and packaging. |
| Zafar et al. (2024) [58] | Collaborative Robotics | Digital Twins and Human Augmentation | Systematic Literature Review | Synergies between robots, AI, and Industry 5.0 enhance human–robot collaboration. | Human–robot collaborative manufacturing in Industry 5.0. |
| Yousif et al. (2024) [78] | Safety | Safety and Vision Systems | Framework Development and Application | The Safety 4.0 framework uses machine vision for proactive hazard prevention. | Industrial worker safety and protection systems. |
| Yang et al. (2024) [76] | Computer Vision | Mass Estimation for Kimchi Cabbage | Experimental Study | A novel hybrid vision technique significantly improves mass estimation accuracy. | Agricultural processing and food quality control. |
| Wu et al. (2020) [82] | Robotics | Human Guidance by Mobile Robots | Adaptive Trajectory Prediction | Improved prediction of human movement to support mobile robots in industrial tasks. | Industrial robots for human–robot collaborative operations. |
| Akundi et al. (2021) [43] | Automated Quality Inspection | Product Dimension Analysis | Computer Vision | Presents an automated system for dimension-based quality inspection. | Industrial production and quality assurance. |
| Wang et al. (2024) [53] | Computer Vision | Inspection Systems in the Tobacco Industry | Case Study | Machine vision enhances inspection accuracy and reduces human error. | Automation for quality control in tobacco manufacturing. |
| Wang et al. (2024) [53] | Medical Manufacturing | Smart Production of Medical Devices | Literature Review | Explores challenges and future trends in smart manufacturing for medical applications. | Advanced systems for customized and precise medical device production. |
| Wang et al. (2023) [15] | Smart Manufacturing | Comparative Review of SM and IM | Bibliometric Review | Identifies key traits and evolution of Smart and Intelligent Manufacturing models. | Industry 4.0 and cyber-physical production systems. |
| Wang et al. (2019) [47] | Quality Assurance | Assembly Defect Detection | Image Processing & Deep Learning | Combines traditional and AI-based methods to detect defects on assembly lines. | Automated defect detection in industrial production. |
| Velesaca et al. (2021) [86] | Food Processing | Grain Classification | Comprehensive Review | Reviews vision-based techniques for grain quality assessment and classification. | Grain sorting and quality control systems. |
| Pereira et al. (2022) [54] | Service Robotics | Action Taxonomy in Food Services | Critical Review | Proposes a taxonomy of human actions for robotic automation in food services. | Optimization of food service operations through robotics. |
| Steger et al. (2018) [87] | Computer Vision Algorithms | Integrated Vision Techniques | Framework Development | Provides foundational techniques for applying computer vision in industrial contexts. | Quality inspection, defect detection, and robotic automation. |
| Sontakke et al. (2024) [74] | Smart Manufacturing Education | Teaching Smart Manufacturing Techniques | Case Study | Applies real-world defect detection data to enhance engineering education. | Undergraduate education in chemical engineering. |
| Siripatrawan et al. (2024) [32] | Food Safety Inspection | Fungal Contamination Classification in Rice | Hyperspectral Imaging & SVM | Achieved 93.4% accuracy in classifying fungal contamination using Gaussian SVM. | Rapid, non-destructive food safety inspection. |
| Sharma et al. (2023) [63] | Vision for Smart Manufacturing | Vision Systems in Industry 4.0 | Review | Combines sensors and neural networks to reduce errors and enhance productivity. | Cable manufacturing and defect detection systems. |
| Aviara et al. (2022) [35] | Food Quality Control | Hyperspectral Imaging for Grain Inspection | Review | Enables accurate quality analysis and defect detection in grains. | Agricultural grain quality assurance and classification. |
| Tzampazaki et al. (2024) [11] | Machine Vision | Transition from Industry 4.0 to Industry 5.0 | Systematic Review | Analyzes how machine vision contributes to productivity and product quality. | Industrial production using vision for automation and control. |
| Fan et al. (2022) [62] | Digital Twins | Human-Centric Digital Twin | Case Study | Integrates human data to adapt robotic behavior for effective collaboration. | Smart manufacturing and ergonomic optimization. |
| Modoni et al. (2023) [12] | Digital Twins | Human-Centered Industry | Implementation Framework | Proposes a framework integrating digital systems to enhance human–machine collaboration. | Furniture manufacturing with optimized interactions. |
| Sahoo et al. (2022) [66] | Smart Manufacturing | Technological Advancements in Manufacturing | Systematic Review | Reviews how IoT, AI, and automation enhance efficiency and reduce human error. | Industry 4.0 technologies in smart factories. |
| Nivelle et al. (2017) [51] | Food Science | Par-Baking Effects on Bread | Experimental Study | Demonstrates that par-baking reduces crumb hardening, improving bread quality and texture. | Bread production and quality stability. |
| Rokhva et al. (2024) [31] | Food Industry | Food Recognition with AI | Experimental Study | MobileNetV2 enables accurate, efficient food recognition, reducing waste. | Food production and monitoring. |
| Ren et al. (2024) [72] | Smart Manufacturing | Embedded Intelligence | Framework Development | Introduces AI-driven intelligence for flexible production systems. | Human-centered production and robotics. |
| Ondras et al. (2022) [42] | Robotics & Vision | Robotic Dough Shaping | Experimental Control Policies | Touch and vision-based control improves accuracy in dough shaping. | Digital services and product-service lifecycle management. |
| Lullien-Pellerin (2024) [88] | Grain Characterization | Wheat Quality Assessment | Omics Systems & Microscopy | Links wheat quality to microstructure and composition using multivariate analysis. | High-quality product development and cereal assurance. |
| Prasad et al. (2024) [68] | Smart Monitoring | Real-Time Material Tracking & Cloud Integration | Case Study | Cobots and vision systems improve process control and decision-making. | Real-time tracking in machining operations. |
| Agote-Garrido et al. (2023) [16] | Human-Centered Production | Sociotechnical Systems | Theoretical Model | Proposes a model combining sustainable technology with a human-centered approach. | Sustainable and resilient industrial systems. |
| Zhong et al. (2017) [89] | Smart Manufacturing | CPS and IoT Integration | Systematic Review | Analyzes IoT and CPS integration in smart factories with automated real-time interactions. | Digital twins and predictive analytics in factories. |
| Medina-García et al. (2024) [37] | Food Quality & Authenticity | Whole Grain Bread Authenticity | Hyperspectral Imaging & Chemometrics | Accurately verifies whole grain content for quality and fraud prevention. | Quality control in bakery production. |
| Nilsson et al. (2020) [30] | Smart Manufacturing | Categorization of Indicator Lights | Experimental Study | YOLOv2 and AlexNet achieved over 99% accuracy in detecting indicator lights in legacy systems. | Machine monitoring in old factories without IT integration. |
| Liu et al. (2023) [55] | Robotics & Vision | Food Package Recognition & Sorting | Machine Vision & Structured Light | Structured light improved packaging classification and reduced errors. | Logistics and food product management. |
| Mark et al. (2021) [61] | Worker Assistance Systems | Operator 4.0 & Cognitive Support | Systematic Review | Reviews systems enhancing worker effectiveness without replacing human roles. | Worker support in complex production tasks. |
| Nahavandi (2019) [90] | Industry 5.0 | Human–Machine Collaboration | Systematic Review | Introduced the concept of Industry 5.0, focusing on human–robot collaboration to enhance productivity. | Robotic collaboration and industrial process optimization. |
| Leiva-Valenzuela et al. (2018) [41] | Pattern Recognition | Detection of Undesirable Food Compounds | Computer Vision-Based Recognition | Accurate classification of undesirable food compounds using statistical methods. | Food quality control and defect prevention. |
| Lin et al. (2023) [80] | Agricultural Automation | Vision in Modern Agriculture | Experimental Study | Achieved 11.8% error in automated sorting and evaluation of apples. | Automated classification and quality monitoring of fruit. |
| Li et al. (2023) [91] | Human–Robot Collaboration | Proactive Human–Robot Interaction with AI | Framework Proposal | Robots with cognitive and predictive capabilities for proactive cooperation. | Collaborative robotics in flexible production lines. |
| Li et al. (2023) [71] | Deep Learning in Production | Reinforcement Learning Applications | Systematic Review | Explores adaptive decision-making in smart production using reinforcement learning. | Intelligent automation and decision-making in design and production. |
| Li et al. (2024) [92] | Smart Warehousing | Vision in Logistics | Experimental System Design | Enhances logistics efficiency and reduces human error using machine vision. | Automated sorting and storage in warehouses. |
| Leng et al. (2024) [64] | Industry 5.0 | Collaborative AI in Industry | Foresight Review | Examines integration of collaborative, self-learning AI in Industry 5.0 systems. | Product design, production, and maintenance. |
| Kang et al. (2024) [36] | Artificial Intelligence | Predicting Flour Properties during Milling | Machine Learning | Predicts flour properties using image-based analysis of grain during peeling. | Flour quality control and product customization. |
| Konstantinidis et al. (2023) [13] | Dairy Automation | Yogurt Cup Recognition | Experimental Analysis | YOLO and Mask R-CNN models achieved nearly perfect classification. | Dairy product packaging automation. |
| Konstantinidis et al. (2023) [1] | Zero-Defect Manufacturing | Digital Twin for Defect Prevention | Framework Development | Combines vision systems and digital twins to eliminate defects in dairy. | Quality assurance in dairy production. |
| Konstantinidis et al. (2021) [25] | Automotive Industry | Vision Systems in Industry 4.0 | Review | Highlights AI-powered vision systems for defect detection and optimization. | Process automation and quality control in automotive. |
| Kanth et al. (2023) [65] | Robotics | AI and Vision in Collaborative Robots | Case Studies | Demonstrates object detection in collaborative environments. | Smart factories for assembly and product handling. |
| Jia et al. (2023) [14] | Food Safety | Colorimetric Sensors for Food Quality | Comprehensive Review | Vision systems enable real-time classification of food safety parameters. | Real-time food quality and safety monitoring. |
| Page et al. (2021) [93] | Industrial Policy | Resilience and Sustainability | Strategic Policy | Proposes a human-centric, green and digital industry transformation in Europe. | European policy for sustainable, worker-centered industry. |
| Ji et al. (2021) [49] | Smart Manufacturing | Robotic Assembly Automation | Experimental Framework | Uses learning-by-observation for assembly with minimal human intervention. | Flexible assembly lines for small-batch manufacturing. |
| Qiu et al. (2023) [56] | Robotics & Manipulation | End-Effector Evaluation for Food Handling | Metric Evaluation | Proposes metrics to assess the efficiency and flexibility of robotic systems in food handling. | Food processing and packaging. |
| Javaid et al. (2021) [75] | Robotics in Industry 4.0 | Enhancing Industry 4.0 Applications | Review | Examines 18 robotics applications, focusing on automation, safety, and data collection. | Smart production systems and risk mitigation. |
| Vasudevan et al. (2024) [22] | Robotics & Vision | Primary Food Handling & Packaging | Systematic Review | Highlights computer vision and robotics in food material handling and automated packaging. | Industrial automation and error reduction. |
| Maddikunta et al. (2022) [79] | Industry 5.0 | Technologies and Applications | Systematic Review | Analyzes 6G, edge computing, and digital twins as key Industry 5.0 enablers. | Human-centric smart manufacturing and healthcare. |
| Jimoh et al. (2024) [59] | Non-Invasive Techniques | Food Quality Assessment | Review of Advanced Techniques | Non-invasive technologies improve accuracy and speed in evaluating food quality. | Food fraud detection and chemical safety. |
| Wakchaure et al. (2023) [94] | Robotics | Robotics in the Food Industry | Systematic Review | Robotics offers precise, safe, and automated solutions for food processing. | Automated food handling and packaging. |
| Ylikoski (2022) [50] | Production Optimization | Bakery Production Lines | Process Analysis & Experimental Study | Proposes workflow and machinery improvements in cinnamon roll lines. | Small-scale bakery production. |
| Yang et al. (2024) [76] | Human–Machine Interaction | Industry 5.0 and Smart Production | Systematic Review | Emphasizes HMI for human-centered, resilient, and sustainable production. | Human–machine collaboration in smart factories. |
| Murengami et al. (2025) [38] | Artificial Intelligence | Dough Monitoring via Color Imaging | Deep Learning (YOLOv8s & SEM) | Achieved 83% accuracy in dough monitoring using color features. | Bread production and quality assurance. |
| Ghobakhloo (2020) [57] | Digitization & Sustainability | Industry 4.0 Operations | Interpretive Structural Modeling | Shows the sustainability benefits (economic, social, environmental) of Industry 4.0. | Clean energy, emissions reduction, and wellbeing. |
| Fan et al. (2022) [62] | Vision-Based Collaboration | Holistic Scene Understanding | Systematic Review | Reviews vision-based approaches to proactive HRC focusing on people, objects, and environments. | Personalized manufacturing and HRC. |
| Fattahi et al. (2024) [33] | Food Quality Control | Wheat Flour Variety Classification | FT-MIR Spectroscopy & Chemometrics | Achieved 100% classification accuracy of Iranian wheat varieties. | Quality control and fraud prevention in flour industry. |
| Deng et al. (2024) [46] | Computer Vision | Aerospace Quality Inspection | Review | Vision systems improve detection of drilling and assembly defects. | Aerospace component inspection. |
| da Silva Ferreira et al. (2024) [2] | Agricultural Quality Control | Dragonfruit Maturity Classification | Comparative Study | Vision transformers outperformed ResNet in maturity classification. | Fruit grading in agriculture. |
| Derossi et al. (2023) [44] | Robotics | Unconventional Robotics in Food Industry | Analysis & Framework Proposal | Presents novel directions to improve flexibility and processes using robotics. | Food production and packaging. |
| Cuellar et al. (2023) [21] | Industry 4.0 in Construction | Construction and Infrastructure Technologies | Mixed Methods Analysis | Highlights gaps in AI and robotics adoption in construction. | Building information modeling and infrastructure planning. |
| Ciccarelli et al. (2023) [81] | Human-Centered Industry | Operator 4.0 | Systematic Literature Review | Explores how AR/VR technologies support human roles in Industry 5.0. | Worker empowerment and safety in smart factories. |
| Chakravartula et al. (2023) [28] | Food Processing | Smart Monitoring in Food Drying | Experimental Prototype | Vision systems reduce over- and under-drying using preventive PAT tools. | Agricultural food drying and quality monitoring. |
| Castillo-Ortiz et al. (2024) [95] | Gastronomy Education | Standardization in Culinary Training | Case Study | Computer vision ensures hygiene and consistency in culinary education. | Culinary training and hygiene standard compliance. |
| Barthwal et al. (2024) [39] | Artificial Intelligence | AI in the Food Industry | Comprehensive Review | Analyzes current AI trends for automation in food processing. | Automated production and process optimization. |
| Bhana et al. (2023) [45] | Industrial Safety | PPE Compliance with Vision | Experimental Study | YOLOv8 achieved 86% accuracy in detecting correct PPE usage. | Workplace safety monitoring and PPE detection. |
| Liu et al. (2023) [55] | Deep Learning & Vision | AI and Vision in Food Processing | Review | Highlights deep learning trends and challenges in food automation. | Food processing and classification in industry. |
| Azadnia et al. (2023) [73] | Agricultural Waste Reduction | Hawthorn Ripeness Detection | Experimental Study | Inception-V3 achieved 100% accuracy in ripeness classification, reducing waste. | Agricultural product grading and waste reduction. |
| Hassoun et al. (2024) [4] | Smart Manufacturing | Vision Sensors for In-Process Control | Prototype Development | Vision sensors improve autonomy in quality control with real-time adjustments. | Precision manufacturing and industrial quality assurance. |
| Zhao et al. (2024) [20] | Food Industry Applications | Vision-Based Food Quantification | Literature Review | Addresses safety, quality, and nutrition challenges using machine vision. | Automated food processing and quality assurance. |
| Xiao et al. (2022) [52] | Computer Vision | Food Detection via Vision | Systematic Review | Reviews vision capabilities for food quality and safety control. | Quality control and fraud detection in food. |
| Alimam et al. (2023) [29] | Digital Twins | Digital Triplets in Industry 5.0 | Systematic Review | Introduces digital triplet framework for advanced human–machine integration. | Cognitive collaboration in Industry 5.0. |
| Mehdizadeh (2022) [96] | Food Quality Control | Hyperspectral Imaging for Grain Inspection | Review | Hyperspectral imaging enables precise grain quality and defect detection. | Grain classification and quality assurance in agriculture. |
| Ahmad et al. (2022) [40] | Vision for Smart Manufacturing | Deep Learning for Object Detection | Review | Deep learning enhances defect detection accuracy in production lines. | Automated quality inspection with DL. |
| Hamza et al. (2024) [70] | Cyber-Physical Systems | Real-Time Vision Integration | Prototype Application | Real-time vision improves feature detection and operation accuracy. | High-speed industrial quality inspection. |
| Adjogble et al. (2023) [69] | Intelligent Manufacturing | AI in Production Processes | Framework Proposal | Smart AI systems enhance sustainability, efficiency, and decision-making. | Sustainable and intelligent production systems. |
| Villani et al. (2024) [26] | Digital Transformation | Industry 4.0 & Food Quality | Systematic Review | Explores the impact of digital transformation on quality and waste reduction. | Digitalization in quality control and food waste minimization. |
| Hashmi et al. (2022) [60] | Surface Quality Control | Vision for Surface Roughness | Comprehensive Review | Vision systems offer high-speed, automated surface quality measurements. | Surface inspection in manufacturing processes. |

References

- Konstantinidis, F.K.; Balaska, V.; Symeonidis, S.; Psarommatis, F.; Psomoulis, A.; Giakos, G.; Mouroutsos, S.G.; Gasteratos, A. Achieving zero defected products in diary 4.0 using digital twin and machine vision. In Proceedings of the 16th International Conference on PErvasive Technologies Related to Assistive Environments, Corfu, Greece, 5–7 July 2023; pp. 528–534. [Google Scholar]

- da Silva Ferreira, M.V.; Junior, S.B.; da Costa, V.G.T.; Barbin, D.F.; Barbosa, J.L., Jr. Deep computer vision system and explainable artificial intelligence applied for classification of dragon fruit (Hylocereus spp.). Sci. Hortic. 2024, 338, 113605. [Google Scholar] [CrossRef]

- Sheikh, R.A.; Ahmed, I.; Faqihi, A.Y.A.; Shehawy, Y.M. Global perspectives on navigating Industry 5.0 knowledge: Achieving resilience, sustainability, and human-centric innovation in manufacturing. J. Knowl. Econ. 2024, 1–36. [Google Scholar] [CrossRef]

- Hassoun, A.; Jagtap, S.; Trollman, H.; Garcia-Garcia, G.; Duong, L.N.; Saxena, P.; Bouzembrak, Y.; Treiblmaier, H.; Para-López, C.; Carmona-Torres, C.; et al. From Food Industry 4.0 to Food Industry 5.0: Identifying technological enablers and potential future applications in the food sector. Compr. Rev. Food Sci. Food Saf. 2024, 23, e370040. [Google Scholar] [CrossRef] [PubMed]

- Guruswamy, S.; Pojić, M.; Subramanian, J.; Mastilović, J.; Sarang, S.; Subbanagounder, A.; Stojanović, G.; Jeoti, V. Toward better food security using concepts from industry 5.0. Sensors 2022, 22, 8377. [Google Scholar] [CrossRef]

- Sood, S.; Singh, H. Computer vision and machine learning based approaches for food security: A review. Multimed. Tools Appl. 2021, 80, 27973–27999. [Google Scholar] [CrossRef]

- Madhavan, M.; Sharafuddin, M.A.; Wangtueai, S. Measuring the Industry 5.0-readiness level of SMEs using Industry 1.0–5.0 practices: The case of the seafood processing industry. Sustainability 2024, 16, 2205. [Google Scholar] [CrossRef]

- Roy, S.; Singh, S. XR and digital twins, and their role in human factor studies. Front. Energy Res. 2024, 12, 1359688. [Google Scholar] [CrossRef]

- Deeba, K.; Chinnpa Prabu Shankar, K.; Gnanavel, S.; Elsisi, M. Artificial Intelligence, Computer Vision, and Robotics for Industry 5.0. In Next Generation Data Science and Blockchain Technology for Industry 5.0: Concepts and Paradigms; John Wiley & Sons: Hoboken, NJ, USA, 2025; pp. 295–324. [Google Scholar]

- Licardo, J.T.; Domjan, M.; Orehovački, T. Intelligent robotics—A systematic review of emerging technologies and trends. Electronics 2024, 13, 542. [Google Scholar] [CrossRef]

- Tzampazaki, M.; Zografos, C.; Vrochidou, E.; Papakostas, G.A. Machine vision—Moving from Industry 4.0 to Industry 5.0. Appl. Sci. 2024, 14, 1471. [Google Scholar] [CrossRef]

- Modoni, G.E.; Sacco, M. A human digital-twin-based framework driving human centricity towards industry 5.0. Sensors 2023, 23, 6054. [Google Scholar] [CrossRef]

- Konstantinidis, F.K.; Balaska, V.; Symeonidis, S.; Tsilis, D.; Mouroutsos, S.G.; Bampis, L.; Psomoulis, A.; Gasteratos, A. Automating dairy production lines with the yoghurt cups recognition and detection process in the Industry 4.0 era. Procedia Comput. Sci. 2023, 217, 918–927. [Google Scholar] [CrossRef]

- Jia, X.; Ma, P.; Tarwa, K.; Wang, Q. Machine vision-based colorimetric sensor systems for food applications. J. Agric. Food Res. 2023, 11, 100503. [Google Scholar] [CrossRef]

- Wang, G.; Liu, B.; Wang, J.; Wang, J. Intelligent Inspection System of Tobacco Enterprise Measuring Equipment Based on Machine Vision. In Proceedings of the 2023 4th International Conference on Computer Science and Management Technology, Xi’an, China, 13–15 October 2023; pp. 291–296. [Google Scholar]

- Agote-Garrido, A.; Martín-Gómez, A.M.; Lama-Ruiz, J.R. Manufacturing system design in industry 5.0: Incorporating sociotechnical systems and social metabolism for human-centered, sustainable, and resilient production. Systems 2023, 11, 537. [Google Scholar] [CrossRef]

- Panghal, A.; Chhikara, N.; Sindhu, N.; Jaglan, S. Role of Food Safety Management Systems in safe food production: A review. J. Food Saf. 2018, 38, e12464. [Google Scholar] [CrossRef]

- Pang, J.; Zheng, P.; Fan, J.; Liu, T. Towards cognition-augmented human-centric assembly: A visual computation perspective. Robot. Comput.-Integr. Manuf. 2025, 91, 102852. [Google Scholar] [CrossRef]

- Lins, T.; Oliveira, R.A.R. Cyber-physical production systems retrofitting in context of industry 4.0. Comput. Ind. Eng. 2020, 139, 106193. [Google Scholar] [CrossRef]

- Zhao, Z.; Wang, R.; Liu, M.; Bai, L.; Sun, Y. Application of machine vision in food computing: A review. Food Chem. 2024, 463, 141238. [Google Scholar] [CrossRef]

- Cuellar, S.; Grisales, S.; Castaneda, D.I. Constructing tomorrow: A multifaceted exploration of Industry 4.0 scientific, patents, and market trend. Autom. Constr. 2023, 156, 105113. [Google Scholar] [CrossRef]

- Vasudevan, S.; Mekhalfi, M.L.; Blanes, C.; Lecca, M.; Poiesi, F.; Chippendale, P.I.; Fresnillo, P.M.; Mohammed, W.M.; Lastra, J.L.M. Robotics and Machine Vision for Primary Food Manipulation and Packaging: A Survey. IEEE Access 2024, 12, 152579–152613. [Google Scholar] [CrossRef]

- Navarro-Guerrero, N.; Toprak, S.; Josifovski, J.; Jamone, L. Visuo-haptic object perception for robots: An overview. Auton. Robot. 2023, 47, 377–403.

- Palanikumar, K.; Natarajan, E.; Ponshanmugakumar, A. Application of machine vision technology in manufacturing industries—A study. In Machine Intelligence in Mechanical Engineering; Elsevier: Amsterdam, The Netherlands, 2024; pp. 91–122.

- Konstantinidis, F.K.; Mouroutsos, S.G.; Gasteratos, A. The role of machine vision in industry 4.0: An automotive manufacturing perspective. In Proceedings of the 2021 IEEE International Conference on Imaging Systems and Techniques (IST), Kaohsiung, Taiwan, 24–26 August 2021; pp. 1–6.

- Villani, V.; Picone, M.; Mamei, M.; Sabattini, L. A digital twin driven human-centric ecosystem for industry 5.0. IEEE Trans. Autom. Sci. Eng. 2024, 22, 11291–11303.

- Chai, J.J.; O’Sullivan, C.; Gowen, A.A.; Rooney, B.; Xu, J.L. Augmented/mixed reality technologies for food: A review. Trends Food Sci. Technol. 2022, 124, 182–194.

- Chakravartula, S.S.N.; Bandiera, A.; Nardella, M.; Bedini, G.; Ibba, P.; Massantini, R.; Moscetti, R. Computer vision-based smart monitoring and control system for food drying: A study on carrot slices. Comput. Electron. Agric. 2023, 206, 107654.

- Alimam, H.; Mazzuto, G.; Tozzi, N.; Ciarapica, F.E.; Bevilacqua, M. The resurrection of digital triplet: A cognitive pillar of human-machine integration at the dawn of industry 5.0. J. King Saud Univ.-Comput. Inf. Sci. 2023, 35, 101846.

- Nilsson, F.; Jakobsen, J.; Alonso-Fernandez, F. Detection and classification of industrial signal lights for factory floors. In Proceedings of the 2020 International Conference on Intelligent Systems and Computer Vision (ISCV), Fez, Morocco, 9–11 June 2020; pp. 1–6.

- Rokhva, S.; Teimourpour, B.; Soltani, A.H. Computer vision in the food industry: Accurate, real-time, and automatic food recognition with pretrained MobileNetV2. Food Humanit. 2024, 3, 100378.

- Siripatrawan, U.; Makino, Y. Assessment of food safety risk using machine learning-assisted hyperspectral imaging: Classification of fungal contamination levels in rice grain. Microb. Risk Anal. 2024, 27, 100295.

- Fattahi, S.H.; Kazemi, A.; Khojastehnazhand, M.; Roostaei, M.; Mahmoudi, A. The classification of Iranian wheat flour varieties using FT-MIR spectroscopy and chemometrics methods. Expert Syst. Appl. 2024, 239, 122175.

- Liu, Y.; Pu, H.; Sun, D.W. Hyperspectral imaging technique for evaluating food quality and safety during various processes: A review of recent applications. Trends Food Sci. Technol. 2017, 69, 25–35.

- Aviara, N.A.; Liberty, J.T.; Olatunbosun, O.S.; Shoyombo, H.A.; Oyeniyi, S.K. Potential application of hyperspectral imaging in food grain quality inspection, evaluation and control during bulk storage. J. Agric. Food Res. 2022, 8, 100288.

- Kang, S.; Kim, Y.; Ajani, O.S.; Mallipeddi, R.; Ha, Y. Predicting the properties of wheat flour from grains during debranning: A machine learning approach. Heliyon 2024, 10, e36472.

- Medina-García, M.; Roca-Nasser, E.A.; Martínez-Domingo, M.A.; Valero, E.M.; Arroyo-Cerezo, A.; Cuadros-Rodríguez, L.; Jiménez-Carvelo, A.M. Towards the establishment of a green and sustainable analytical methodology for hyperspectral imaging-based authentication of wholemeal bread. Food Control 2024, 166, 110715.

- Murengami, B.G.; Jing, X.; Jiang, H.; Liu, X.; Mao, W.; Li, Y.; Chen, X.; Wang, S.; Li, R.; Fu, L. Monitor and classify dough based on color image with deep learning. J. Food Eng. 2025, 386, 112299.

- Barthwal, R.; Kathuria, D.; Joshi, S.; Kaler, R.; Singh, N. New trends in the development and application of artificial intelligence in food processing. Innov. Food Sci. Emerg. Technol. 2024, 92, 103600.

- Ahmad, H.M.; Rahimi, A. Deep learning methods for object detection in smart manufacturing: A survey. J. Manuf. Syst. 2022, 64, 181–196.

- Leiva-Valenzuela, G.A.; Mariotti, M.; Mondragón, G.; Pedreschi, F. Statistical pattern recognition classification with computer vision images for assessing the furan content of fried dough pieces. Food Chem. 2018, 239, 718–725.

- Ondras, J.; Ni, D.; Deng, X.; Gu, Z.; Zheng, H.; Bhattacharjee, T. Robotic dough shaping. In Proceedings of the 2022 22nd International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 27 November–1 December 2022; pp. 300–307.

- Akundi, A.; Reyna, M. A machine vision based automated quality control system for product dimensional analysis. Procedia Comput. Sci. 2021, 185, 127–134.

- Derossi, A.; Di Palma, E.; Moses, J.; Santhoshkumar, P.; Caporizzi, R.; Severini, C. Avenues for non-conventional robotics technology applications in the food industry. Food Res. Int. 2023, 173, 113265.

- Bhana, R.; Mahmoud, H.; Idrissi, M. Smart industrial safety using computer vision. In Proceedings of the 2023 28th International Conference on Automation and Computing (ICAC), Birmingham, UK, 30 August–1 September 2023; pp. 1–6.

- Deng, L.; Liu, G.; Zhang, Y. A review of machine vision applications in aerospace manufacturing quality inspection. In Proceedings of the 2024 4th International Conference on Computer, Control and Robotics (ICCCR), Shanghai, China, 19–21 April 2024; pp. 31–39.

- Wang, J.; Hu, H.; Chen, L.; He, C. Assembly defect detection of atomizers based on machine vision. In Proceedings of the 2019 4th International Conference on Automation, Control and Robotics Engineering, Shenzhen, China, 19–21 July 2019; pp. 1–6.

- Nadon, F.; Valencia, A.J.; Payeur, P. Multi-modal sensing and robotic manipulation of non-rigid objects: A survey. Robotics 2018, 7, 74.

- Ji, S.; Lee, S.; Yoo, S.; Suh, I.; Kwon, I.; Park, F.C.; Lee, S.; Kim, H. Learning-based automation of robotic assembly for smart manufacturing. Proc. IEEE 2021, 109, 423–440.

- Ylikoski, M. Optimization of Gateau Fazer’s Production Line. Bachelor’s Thesis, Metropolia University of Applied Sciences, Helsinki, Finland, 2022.

- Nivelle, M.A.; Bosmans, G.M.; Delcour, J.A. The impact of parbaking on the crumb firming mechanism of fully baked tin wheat bread. J. Agric. Food Chem. 2017, 65, 10074–10083.

- Xiao, Z.; Wang, J.; Han, L.; Guo, S.; Cui, Q. Application of machine vision system in food detection. Front. Nutr. 2022, 9, 888245.

- Wang, X.V.; Xu, P.; Cui, M.; Yu, X.; Wang, L. A literature survey of smart manufacturing systems for medical applications. J. Manuf. Syst. 2024, 76, 502–519.

- Pereira, D.; Bozzato, A.; Dario, P.; Ciuti, G. Towards Foodservice Robotics: A taxonomy of actions of foodservice workers and a critical review of supportive technology. IEEE Trans. Autom. Sci. Eng. 2022, 19, 1820–1858.

- Liu, X.; Liang, J.; Ye, Y.; Song, Z.; Zhao, J. A food package recognition and sorting system based on structured light and deep learning. In Proceedings of the 2023 International Joint Conference on Robotics and Artificial Intelligence, Shanghai, China, 7–9 July 2023; pp. 19–25.

- Qiu, Z.; Paul, H.; Wang, Z.; Hirai, S.; Kawamura, S. An evaluation system of robotic end-effectors for food handling. Foods 2023, 12, 4062.

- Ghobakhloo, M. Industry 4.0, digitization, and opportunities for sustainability. J. Clean. Prod. 2020, 252, 119869.

- Zafar, M.H.; Langås, E.F.; Sanfilippo, F. Exploring the synergies between collaborative robotics, digital twins, augmentation, and industry 5.0 for smart manufacturing: A state-of-the-art review. Robot. Comput.-Integr. Manuf. 2024, 89, 102769.

- Jimoh, K.A.; Hashim, N. Recent advances in non-invasive techniques for assessing food quality: Applications and innovations. Adv. Food Nutr. Res. 2025, 114, 301–352.

- Hashmi, A.W.; Mali, H.S.; Meena, A.; Hashmi, M.F.; Bokde, N.D. Surface Characteristics Measurement Using Computer Vision: A Review. CMES-Comput. Model. Eng. Sci. 2023, 135, 917–1005.

- Mark, B.G.; Rauch, E.; Matt, D.T. Worker assistance systems in manufacturing: A review of the state of the art and future directions. J. Manuf. Syst. 2021, 59, 228–250.

- Fan, J.; Zheng, P.; Li, S. Vision-based holistic scene understanding towards proactive human–robot collaboration. Robot. Comput.-Integr. Manuf. 2022, 75, 102304.

- Sharma, A.; Kulkarni, A. Vision System for Smart Manufacturing: A Review. In Proceedings of the 2023 IEEE Engineering Informatics, Melbourne, Australia, 22–23 November 2023; pp. 1–9.

- Leng, J.; Zhu, X.; Huang, Z.; Li, X.; Zheng, P.; Zhou, X.; Mourtzis, D.; Wang, B.; Qi, Q.; Shao, H.; et al. Unlocking the power of industrial artificial intelligence towards Industry 5.0: Insights, pathways, and challenges. J. Manuf. Syst. 2024, 73, 349–363.

- Kanth, R.; Heikkonen, J. Machine Vision and Artificial Intelligence in Robotics for Smart Factory. In Proceedings of the 2023 IEEE International Conference on Emerging Trends in Engineering, Sciences and Technology (ICES&T), Bahawalpur, Pakistan, 9–11 January 2023; pp. 1–4.

- Sahoo, S.; Lo, C.Y. Smart manufacturing powered by recent technological advancements: A review. J. Manuf. Syst. 2022, 64, 236–250.

- Mandapaka, S.; Diaz, C.; Irisson, H.; Akundi, A.; Lopez, V.; Timmer, D. Application of automated quality control in smart factories—A deep learning-based approach. In Proceedings of the 2023 IEEE International Systems Conference (SysCon), Vancouver, BC, Canada, 17–20 April 2023; pp. 1–8.

- Prasad, P.D.; Patel, D.; Muthuswamy, S.; Karumbu, P. In Situ Material Identification, Machining Monitoring, and Cloud Logging Integrated with AI and Machine Vision. In Proceedings of the 2024 5th International Conference on Innovative Trends in Information Technology (ICITIIT), Kottayam, India, 15–16 March 2024; pp. 1–6.

- Adjogble, F.K.; Warschat, J.; Hemmje, M. Advanced Intelligent Manufacturing in Process Industry Using Industrial Artificial Intelligence. In Proceedings of the 2023 Portland International Conference on Management of Engineering and Technology (PICMET), Monterrey, Mexico, 23–27 July 2023; pp. 1–16.

- Hamza, S.A.; Jesser, A. Advancing Industry 4.0 with real-time machine vision integration in cyber-physical systems. In Proceedings of the 2024 IEEE 3rd International Conference on Computing and Machine Intelligence (ICMI), Mt. Pleasant, MI, USA, 13–14 April 2024; pp. 1–5.

- Li, C.; Zheng, P.; Yin, Y.; Wang, B.; Wang, L. Deep reinforcement learning in smart manufacturing: A review and prospects. CIRP J. Manuf. Sci. Technol. 2023, 40, 75–101.

- Ren, L.; Dong, J.; Liu, S.; Zhang, L.; Wang, L. Embodied intelligence toward future smart manufacturing in the era of AI foundation model. IEEE ASME Trans. Mechatron. 2024, 30, 2632–2642.

- Azadnia, R.; Fouladi, S.; Jahanbakhshi, A. Intelligent detection and waste control of hawthorn fruit based on ripening level using machine vision system and deep learning techniques. Results Eng. 2023, 17, 100891.

- Sontakke, M.; Yerimah, L.E.; Rebmann, A.; Ghosh, S.; Dory, C.; Hedden, R.; Bequette, B.W. Integrating smart manufacturing techniques into undergraduate education: A case study with heat exchanger. Comput. Chem. Eng. 2024, 191, 108858.

- Javaid, M.; Haleem, A.; Singh, R.P.; Suman, R. Substantial capabilities of robotics in enhancing industry 4.0 implementation. Cogn. Robot. 2021, 1, 58–75.

- Yang, J.; Liu, Y.; Morgan, P.L. Human-machine interaction towards Industry 5.0: Human-centric smart manufacturing. Digit. Eng. 2024, 2, 100013.

- Yang, H.I.; Min, S.G.; Yang, J.H.; Eun, J.B.; Chung, Y.B. A novel hybrid-view technique for accurate mass estimation of kimchi cabbage using computer vision. J. Food Eng. 2024, 378, 112126.

- Yousif, I.; Samaha, J.; Ryu, J.; Harik, R. Safety 4.0: Harnessing computer vision for advanced industrial protection. Manuf. Lett. 2024, 41, 1342–1356.

- Maddikunta, P.K.R.; Pham, Q.V.; Prabadevi, B.; Deepa, N.; Dev, K.; Gadekallu, T.R.; Ruby, R.; Liyanage, M. Industry 5.0: A survey on enabling technologies and potential applications. J. Ind. Inf. Integr. 2022, 26, 100257.

- Lin, S.; Qi, X. Development of Intelligent Agricultural Automation Based on Computer Vision. In Proceedings of the 2023 International Conference on Integrated Intelligence and Communication Systems (ICIICS), Kalaburagi, India, 24–25 November 2023; pp. 1–6.

- Ciccarelli, M.; Papetti, A.; Germani, M. Exploring how new industrial paradigms affect the workforce: A literature review of Operator 4.0. J. Manuf. Syst. 2023, 70, 464–483.

- Wu, H.; Xu, W.; Yao, B.; Hu, Y.; Feng, H. Interacting multiple model-based adaptive trajectory prediction for anticipative human following of mobile industrial robot. Procedia Comput. Sci. 2020, 176, 3692–3701.

- Yin, Y.; Zheng, P.; Li, C.; Wang, L. A state-of-the-art survey on Augmented Reality-assisted Digital Twin for futuristic human-centric industry transformation. Robot. Comput.-Integr. Manuf. 2023, 81, 102515.

- Sevetlidis, V.; Pavlidis, G.; Balaska, V.; Psomoulis, A.; Mouroutsos, S.G.; Gasteratos, A. Enhancing Weakly Supervised Defect Detection Through Anomaly-Informed Weighted Training. IEEE Trans. Instrum. Meas. 2024, 73, 3538310.

- Symeonidis, S.; Peikos, G.; Arampatzis, A. Unsupervised consumer intention and sentiment mining from microblogging data as a business intelligence tool. Oper. Res. 2022, 22, 6007–6036.

- Velesaca, H.O.; Suárez, P.L.; Mira, R.; Sappa, A.D. Computer vision based food grain classification: A comprehensive survey. Comput. Electron. Agric. 2021, 187, 106287.

- Steger, C.; Ulrich, M.; Wiedemann, C. Machine Vision Algorithms and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2018.

- Lullien-Pellerin, V. How can we evaluate and predict wheat quality? J. Cereal Sci. 2024, 104001.

- Zhong, R.Y.; Xu, X.; Klotz, E.; Newman, S.T. Intelligent manufacturing in the context of industry 4.0: A review. Engineering 2017, 3, 616–630.

- Nahavandi, S. Industry 5.0—A human-centric solution. Sustainability 2019, 11, 4371.

- Li, S.; Zheng, P.; Liu, S.; Wang, Z.; Wang, X.V.; Zheng, L.; Wang, L. Proactive human–robot collaboration: Mutual-cognitive, predictable, and self-organising perspectives. Robot. Comput.-Integr. Manuf. 2023, 81, 102510.

- Li, M.; Liu, Y.; Xu, G.; Ma, Z. The intelligent warehousing system combined with machine vision is constructed. In Proceedings of the 2024 3rd International Symposium on Control Engineering and Robotics, Changsha, China, 24–26 May 2024; pp. 104–108.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Wakchaure, M.; Patle, B.K.; Mahindrakar, A.K. Application of AI techniques and robotics in agriculture: A review. Artif. Intell. Life Sci. 2023, 3, 100057.

- Castillo-Ortiz, I.; Villar-Patiño, C.; Guevara-Martínez, E. Computer vision solution for uniform adherence in gastronomy schools: An artificial intelligence case study. Int. J. Gastronomy Food Sci. 2024, 37, 100997.

- Mehdizadeh, S.A. Machine vision based intelligent oven for baking inspection of cupcake: Design and implementation. Mechatronics 2022, 82, 102746.

| Domain | Environment | Product Variability | Vision Complexity |
|---|---|---|---|
| Automotive | Structured | Low | Medium |
| Pharmaceutical | Cleanroom | Very Low | Low |
| Frozen Dough | Semi-structured | High | High |
| Electronics | Structured | Medium | Medium |
| Agriculture | Unstructured | Very High | Very High |

| Review Stage | Number of Studies |
|---|---|
| Identified via IEEE Xplore, ScienceDirect, ACM, Scopus | 2186 |
| Removed prior to screening | 162 |
| Screened | 2024 |
| Excluded after screening | 334 |
| Sought for full-text retrieval | 1690 |
| Could not be retrieved | 30 |
| Assessed for eligibility | 1660 |
| Excluded after full-text assessment | 1575 |
| Final included studies | 85 |
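The study-selection counts above form a sequential PRISMA-style flow, in which each stage equals the previous one minus its exclusions. The short Python sketch below re-derives the downstream counts from the identification total and the four exclusion figures as a consistency check. The function name `prisma_flow` is hypothetical, and the 1690 figure is treated as the number of reports sought for full-text retrieval, since 30 of them could not be obtained.

```python
# Stage counts transcribed from the study-selection table above.
identified = 2186
removed_before_screening = 162
excluded_after_screening = 334
not_retrieved = 30
excluded_full_text = 1575


def prisma_flow(identified, removed, excluded_screen, not_retrieved, excluded_full):
    """Derive each downstream stage by subtracting the exclusions in order."""
    screened = identified - removed
    sought = screened - excluded_screen      # reports sought for full text
    assessed = sought - not_retrieved        # full texts actually assessed
    included = assessed - excluded_full      # studies kept in the review
    return {
        "screened": screened,
        "sought_for_retrieval": sought,
        "assessed": assessed,
        "included": included,
    }


flow = prisma_flow(identified, removed_before_screening, excluded_after_screening,
                   not_retrieved, excluded_full_text)
for stage, n in flow.items():
    print(f"{stage}: {n}")
```

Running it prints 2024, 1690, 1660 and 85 for the four derived stages, matching the table, so the reported flow is internally consistent.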

| Application (%) | Description |
|---|---|
| Foreign object detection (13.33%) | Detects contaminants in raw materials using high-resolution imaging, ensuring food safety. |
| Packaging defect inspection (6.67%) | Identifies issues like misaligned labels and damaged seals during final packaging. |
| Dimensional inspection (6.67%) | Ensures uniform shape and size of products during shaping. |
| Elasticity and texture analysis (6.67%) | Evaluates dough elasticity in real-time to maintain consistency. |
| Uniformity and moisture control (6.67%) | Monitors dough homogeneity and moisture to ensure stable production. |
| Crack and flaw detection (6.67%) | Detects visible cracks and imperfections. |
| Dimensional analysis (6.67%) | Supports consistent size measurements across production stages. |
| Fermentation monitoring (6.67%) | Observes fermentation progress via structural and moisture analysis. |
| Moisture and chemical composition detection (6.67%) | Monitors raw material composition for quality assurance. |
| Dynamic shaping (6.67%) | Adjusts shaping parameters in real-time using robotic feedback. |
| Visual quality inspection (6.67%) | Ensures visual appeal and quality consistency of products. |
| Thickness uniformity monitoring (6.67%) | Controls and verifies even product thickness. |
| Robotic mechanism adaptation (6.67%) | Enables robots to adapt to production changes dynamically. |
| Dough condition classification (6.67%) | Classifies dough as under-, well-, or over-fermented for process optimization. |
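The percentages in the application table are consistent with a simple tally. As an illustration only, the sketch below assumes (this is not stated in the review) that they are shares of 15 application mentions: two for foreign object detection and one for each of the remaining 13 rows. Under that assumption, 2/15 rounds to 13.33% and 1/15 to 6.67%.

```python
# HYPOTHETICAL tallies: we assume each percentage is a share of 15 application
# mentions (2 for foreign object detection, 1 for each of the other 13 rows).
# The review does not report these counts; they are inferred from 13.33% / 6.67%.
counts = {"Foreign object detection": 2}
others = [
    "Packaging defect inspection", "Dimensional inspection",
    "Elasticity and texture analysis", "Uniformity and moisture control",
    "Crack and flaw detection", "Dimensional analysis",
    "Fermentation monitoring", "Moisture and chemical composition detection",
    "Dynamic shaping", "Visual quality inspection",
    "Thickness uniformity monitoring", "Robotic mechanism adaptation",
    "Dough condition classification",
]
counts.update({name: 1 for name in others})

total = sum(counts.values())  # 15 under this assumption
# Round each share independently to two decimals, as the table does.
shares = {name: round(100 * n / total, 2) for name, n in counts.items()}

print(total)
print(shares["Foreign object detection"], shares["Dough condition classification"])
print(round(sum(shares.values()), 2))
```

Because each row is rounded independently, the rounded shares add up to 100.04% rather than exactly 100%; this is a rounding artifact, not a counting error.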

| Production Stage | Human–Machine Collaboration | Safety Impact | Productivity Outcomes |
|---|---|---|---|
| Raw Material Inspection | Medium | High | Medium |
| Mixing & Fermentation | Low | Medium | High |
| Shaping | Medium | Medium | High |
| Filling & Assembly | High | Medium | Medium |
| Parbaking | Medium | Medium | Medium |
| Freezing | Low | High | High |
| Packaging | Medium | High | Medium |
| Final Quality Control | Medium | High | High |
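The stage matrix is qualitative, but encoding it on an ordinal scale makes it easy to query, for example to list the stages where vision has a high safety impact. The sketch below assumes a Low < Medium < High ordering mapped to the integers 1 to 3; this mapping and the helper `stages_at_least` are illustrative choices, not part of the review.

```python
# Qualitative ratings transcribed from the production-stage table above.
# Tuple order: (human-machine collaboration, safety impact, productivity outcomes).
ratings = {
    "Raw Material Inspection": ("Medium", "High", "Medium"),
    "Mixing & Fermentation": ("Low", "Medium", "High"),
    "Shaping": ("Medium", "Medium", "High"),
    "Filling & Assembly": ("High", "Medium", "Medium"),
    "Parbaking": ("Medium", "Medium", "Medium"),
    "Freezing": ("Low", "High", "High"),
    "Packaging": ("Medium", "High", "Medium"),
    "Final Quality Control": ("Medium", "High", "High"),
}

# ASSUMPTION: an ordinal Low < Medium < High scale, mapped to 1..3 for filtering.
LEVEL = {"Low": 1, "Medium": 2, "High": 3}
COLUMNS = ("collaboration", "safety", "productivity")


def stages_at_least(column, level):
    """Return stages whose rating in `column` is at least `level`, in table order."""
    idx = COLUMNS.index(column)
    return [stage for stage, row in ratings.items() if LEVEL[row[idx]] >= LEVEL[level]]


high_safety = stages_at_least("safety", "High")
high_productivity = stages_at_least("productivity", "High")
both = [stage for stage in high_safety if stage in high_productivity]

print(high_safety)
print(stages_at_least("collaboration", "High"))
print(both)
```

For instance, intersecting the stages rated High for safety with those rated High for productivity leaves Freezing and Final Quality Control.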

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Balaska, V.; Tserkezis, A.; Konstantinidis, F.; Sevetlidis, V.; Symeonidis, S.; Karakatsanis, T.; Gasteratos, A. Machine Vision in Human-Centric Manufacturing: A Review from the Perspective of the Frozen Dough Industry. Electronics 2025, 14, 3361. https://doi.org/10.3390/electronics14173361