Image Captioning Model Based on Multi-Step Cross-Attention Cross-Modal Alignment and External Commonsense Knowledge Augmentation

Abstract

1. Introduction

- The inherent richness of image semantics versus the limited textual descriptions in training data leads to insufficient cross-modal alignment precision, thereby degrading the quality of visual–textual feature integration. Consequently, the generated captions suffer from attribute omission, reduced accuracy, and impoverished diversity.

- Existing approaches often fail to effectively incorporate external commonsense knowledge to assist decoding during caption generation. This lack of integration prevents the GPT-2 decoder from being adequately guided by such knowledge, which in turn constrains caption diversity, semantic depth, and commonsense plausibility. Consequently, despite advances in knowledge graph technology, developing efficient mechanisms to integrate external commonsense knowledge to enhance descriptive capabilities remains a critical challenge.

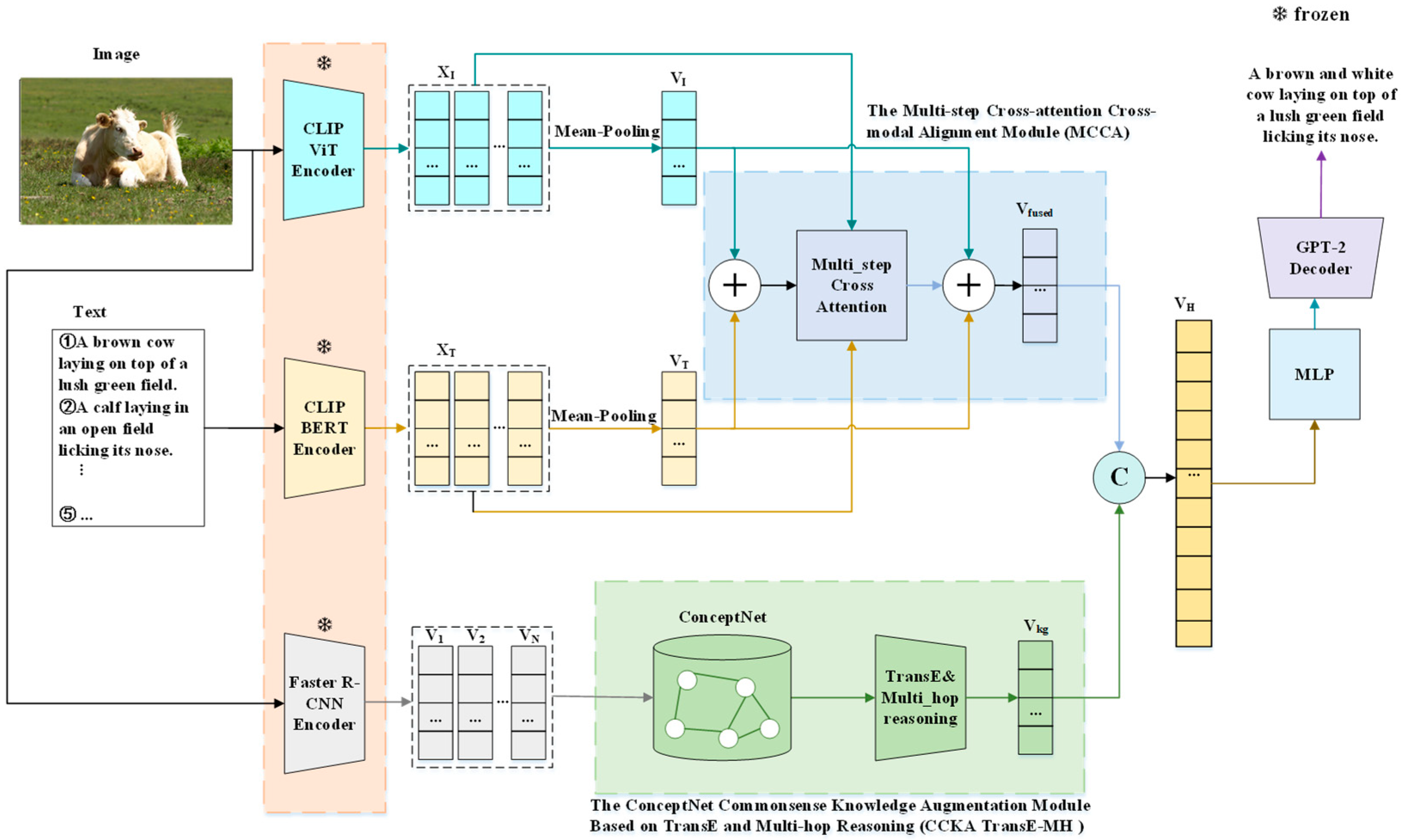

- This article proposes an image captioning architecture built upon the CLIP and GPT-2 backbone, integrating the following key components: the Vision Transformer (ViT) visual encoder (from CLIP) for global semantics, a region-based visual encoder using Faster Region-based Convolutional Neural Network (Faster R-CNN) for regional object-centric details, the Bidirectional Encoder Representations from Transformers (BERT) text encoder, a multi-step cross-attention cross-modal alignment module, an external commonsense knowledge augmentation module (leveraging ConceptNet), and the GPT-2 text decoder for description generation. This architecture embodies a trilevel enhancement through “global + regional + knowledge” integration: ViT captures global image semantics, Faster R-CNN extracts regional object particulars, and ConceptNet provides implicit relational commonsense knowledge. By synergizing multimodal deep integration with external knowledge augmentation, the framework achieves notable improvements in terms of fine-grained semantic understanding, cross-modal alignment precision, hallucination suppression, and text generation quality.

- This article presents a multi-step cross-attention mechanism that iteratively performs alignment and fusion of visual and textual features across multiple rounds. This iterative process significantly enhances semantic consistency and cross-modal fusion accuracy between image and text, while simultaneously improving the model’s robustness and generalization capabilities.

- This article incorporates the open knowledge graph ConceptNet: region-specific object features extracted by Faster R-CNN are mapped to ConceptNet entities, and multiple triplet sets are then retrieved and combined with TransE embeddings and multi-hop reasoning, enabling a deep integration of external commonsense knowledge with cross-modal image-text features. Through knowledge association and expansion, this approach enriches the semantics of the generated text, further improving its location awareness, detail awareness, richness, accuracy, and cognitive plausibility with respect to the depicted objects.

- This article presents an empirical study on the Microsoft Common Objects in COntext (MSCOCO) and Flickr30k Entities (Flickr30k) datasets. Experimental results demonstrate that the proposed model outperforms existing methods across multiple evaluation metrics. Furthermore, ablation studies and qualitative analysis validate the effectiveness of the multi-step cross-attention cross-modal alignment module and the external commonsense knowledge enhancement module.

2. Related Works

2.1. Vision–Language Pre-Trained Model CLIP and Generative Pre-Trained Model GPT-2

2.2. Image Captioning Method Based on the Pre-Trained Model

2.3. Image Captioning Model with Knowledge Graph Grounding and Cross-Attention Mechanisms

3. Model Design

3.1. Model Structure

3.2. Multi-Step Cross-Attention Cross-Modal Alignment Module

- Text-aligned representation computation. With the current query vector as the query and the local visual features as the keys and values, cross-attention is computed to update the textual query vector (as shown in Equation (4)):

- Image-aligned representation computation. With the current query vector as the query and the local textual features as the keys and values, cross-attention is computed to update the image query vector (as shown in Equation (5)):

- Query vector update. The text-aligned and image-aligned representations are multiplied element-wise to generate a new query vector, as shown in Equation (6), and the process then proceeds to Step 2:
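The three steps above can be sketched in NumPy as follows. This is a minimal illustration rather than the article's implementation: it assumes single-head scaled dot-product attention without learned projections, and the names `cross_attention`, `multi_step_alignment`, and `num_steps` are hypothetical. The default of five iterations mirrors the `iterations_K` value in the parameter table.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(query, keys_values):
    """Single-head scaled dot-product attention (assumed form).

    query:       (d,)   current query vector
    keys_values: (n, d) local features used as both keys and values
    """
    d = query.shape[-1]
    weights = softmax(keys_values @ query / np.sqrt(d))  # (n,)
    return weights @ keys_values                         # (d,)

def multi_step_alignment(query, visual_feats, text_feats, num_steps=5):
    """Iterate the alignment for `num_steps` rounds and return the final query."""
    for _ in range(num_steps):
        # Text-aligned representation: attend over local visual features.
        text_aligned = cross_attention(query, visual_feats)
        # Image-aligned representation: attend over local textual features.
        image_aligned = cross_attention(query, text_feats)
        # Query update: element-wise product of the two aligned representations.
        query = text_aligned * image_aligned
    return query

rng = np.random.default_rng(0)
q0 = rng.normal(size=8)              # toy initial query
visual = rng.normal(size=(6, 8))     # 6 toy region features
textual = rng.normal(size=(4, 8))    # 4 toy token features
fused_query = multi_step_alignment(q0, visual, textual, num_steps=5)
```

A full model would normally add learned query, key, and value projections and multiple heads; they are left out here so that the update rule itself stays visible.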

3.3. ConceptNet Commonsense Knowledge Augmentation Module Based on TransE and Multi-Hop Reasoning

3.3.1. ConceptNet Entity Linking with Region-Based Visual Features Extracted by Faster R-CNN
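As a rough illustration of the entity-linking idea, the sketch below normalizes detector class labels into ConceptNet-style concept URIs and retrieves the triplets that mention them. The helper names and the tiny in-memory triplet list are hypothetical stand-ins; the article's actual linking procedure and the real ConceptNet graph are not reproduced here.

```python
def label_to_concept_uri(label, lang="en"):
    """Turn a detector label such as 'Traffic Light' into '/c/en/traffic_light'."""
    term = "_".join(label.strip().lower().split())
    return f"/c/{lang}/{term}"

# Toy (head, relation, tail) triplets standing in for ConceptNet.
TOY_TRIPLES = [
    ("/c/en/dog", "/r/IsA", "/c/en/animal"),
    ("/c/en/dog", "/r/CapableOf", "/c/en/bark"),
    ("/c/en/frisbee", "/r/UsedFor", "/c/en/play"),
]

def retrieve_triples(labels, triples):
    """Return every triplet whose head or tail matches one of the detected labels."""
    uris = {label_to_concept_uri(label) for label in labels}
    return [t for t in triples if t[0] in uris or t[2] in uris]

# Labels as a region detector might emit them (illustrative only).
linked = retrieve_triples(["Dog", "frisbee"], TOY_TRIPLES)
```

Exact string matching is the simplest possible linker; synonyms, plurals, and multi-word labels would need extra handling in practice.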

3.3.2. ConceptNet Knowledge Embedding via TransE and Multi-Hop Reasoning

- Path Search: Extract multi-hop paths that form relational chains from ConceptNet (as shown in Equation (13)). Each path is a sequence of entities connected by relations, and the hop depth is set to 4.

- Path Vector Encoding: An LSTM sequence model [28,29] encodes the path information, and its final hidden state serves as the path's global representation. This vector integrates the sequential dependencies among all entities and relations along the path (as shown in Equation (14)):

- Path Score Function: The path score is computed as the similarity between the path vector and target entity vector, while enforcing alignment between the path’s endpoint and target entity. The path scoring and loss functions are formalized in Equations (15) and (16), respectively:

- Joint Loss Function: The unified loss function, which combines TransE's single-hop loss (direct relations) with the multi-hop path reasoning loss, is formalized in Equation (17), where each of the two terms is weighted by a hyperparameter.

- Parameter Update: Optimize entity vectors, relation vectors, and path encoder parameters via gradient descent.
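The pipeline above can be sketched on a toy graph. This is a simplified illustration under stated assumptions, not the article's code: the path search is a plain depth-limited traversal, the TransE term uses the standard translation distance ‖h + r − t‖ with a margin ranking loss, cosine similarity stands in for the path-to-target similarity, and the LSTM path encoder is omitted. All function names and the weights `alpha` and `beta` are hypothetical.

```python
import numpy as np

def find_paths(triples, start, max_hops=4):
    """Enumerate relational chains from `start` with 1..max_hops edges (no revisits)."""
    out_edges = {}
    for h, r, t in triples:
        out_edges.setdefault(h, []).append((r, t))
    paths, stack = [], [(start, [], {start})]
    while stack:
        node, path, seen = stack.pop()
        if path:
            paths.append(path)
        if len(path) == max_hops:
            continue  # hop-depth limit reached
        for r, t in out_edges.get(node, []):
            if t not in seen:
                stack.append((t, path + [(node, r, t)], seen | {t}))
    return paths

def transe_distance(h, r, t):
    """TransE translation distance: small when h + r is close to t."""
    return np.linalg.norm(h + r - t)

def margin_loss(pos_distance, neg_distance, margin=1.0):
    """Margin ranking loss between a true triplet and a corrupted one."""
    return max(0.0, margin + pos_distance - neg_distance)

def path_score(path_vec, target_vec):
    """Cosine similarity between a path vector and the target entity vector."""
    denom = np.linalg.norm(path_vec) * np.linalg.norm(target_vec)
    return float(path_vec @ target_vec / denom)

def joint_loss(single_hop_loss, multi_hop_loss, alpha=1.0, beta=0.5):
    """Weighted sum of the single-hop and multi-hop terms (weights are placeholders)."""
    return alpha * single_hop_loss + beta * multi_hop_loss

# Toy chain a -> b -> c -> d -> e -> f; only paths of up to 4 hops are kept.
chain = [("a", "r1", "b"), ("b", "r2", "c"), ("c", "r3", "d"),
         ("d", "r4", "e"), ("e", "r5", "f")]
paths_from_a = find_paths(chain, "a", max_hops=4)
```

In a trainable version, the path vector passed to `path_score` would come from the sequence encoder, and the gradients of `joint_loss` would update the entity vectors, relation vectors, and encoder parameters together.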

3.4. GPT-2-Based Text Decoder Module

- Cross-Modal Feature Fusion and Joint Representation Generation

- Dimensionality Transformation
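The dimensionality transformation can be pictured as a small mapping network that lifts fused cross-modal features into the decoder's embedding space. The sketch below assumes a two-layer MLP; the 512 and 768 widths follow the CLIP encoder and GPT-2 dimensions in the parameter table, while the hidden width, the initialization, and the function names are illustrative assumptions.

```python
import numpy as np

CLIP_DIM = 512   # CLIP encoder width listed in the parameter table
GPT2_DIM = 768   # GPT-2 hidden width listed in the parameter table

def init_mlp(rng, in_dim=CLIP_DIM, hidden_dim=640, out_dim=GPT2_DIM):
    """Randomly initialize a two-layer MLP (hidden_dim is a hypothetical choice)."""
    scale = 0.02
    return {
        "w1": rng.normal(0.0, scale, size=(in_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "w2": rng.normal(0.0, scale, size=(hidden_dim, out_dim)),
        "b2": np.zeros(out_dim),
    }

def to_decoder_space(fused, params):
    """Map fused features of shape (n, 512) to decoder inputs of shape (n, 768)."""
    hidden = np.tanh(fused @ params["w1"] + params["b1"])
    return hidden @ params["w2"] + params["b2"]

rng = np.random.default_rng(42)
params = init_mlp(rng)
fused = rng.normal(size=(10, CLIP_DIM))   # 10 toy fused feature vectors
prefix = to_decoder_space(fused, params)  # would be fed to the decoder as prefix embeddings
```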

3.5. Joint Training and Fine-Tuning

- CLIP Cross-Modal Image-Text Feature Alignment

- Autoregressive Text Generation Optimization

- Total Loss Function for Joint Training
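A minimal sketch of how the three objectives listed above might fit together is given below. It assumes the alignment term is the symmetric contrastive loss commonly used with CLIP (with the temperature of 0.07 from the parameter table), the generation term is token-level cross-entropy, and the total is a weighted sum. The weights `lambda_align` and `lambda_caption` and all function names are hypothetical.

```python
import numpy as np

def log_softmax(x, axis=-1):
    # Numerically stable log-softmax.
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def clip_contrastive_loss(img, txt, temperature=0.07):
    """Symmetric image-to-text and text-to-image contrastive loss over a batch."""
    img = img / np.linalg.norm(img, axis=1, keepdims=True)
    txt = txt / np.linalg.norm(txt, axis=1, keepdims=True)
    logits = img @ txt.T / temperature       # (batch, batch) scaled cosine similarities
    idx = np.arange(len(img))                # matching pairs sit on the diagonal
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_i2t + loss_t2i)

def caption_nll(token_logits, target_ids):
    """Mean negative log-likelihood of the target tokens (autoregressive objective)."""
    logp = log_softmax(token_logits, axis=-1)
    return -logp[np.arange(len(target_ids)), target_ids].mean()

def total_loss(align_loss, caption_loss, lambda_align=1.0, lambda_caption=1.0):
    """Weighted sum of the alignment and generation losses (weights are placeholders)."""
    return lambda_align * align_loss + lambda_caption * caption_loss

rng = np.random.default_rng(0)
img_emb = rng.normal(size=(4, 16))
matched = clip_contrastive_loss(img_emb, img_emb)                  # perfectly aligned pairs
mismatched = clip_contrastive_loss(img_emb, img_emb[::-1].copy())  # every pair misaligned
```

With a temperature this low the logits are sharply scaled, so the mismatched batch is penalized much more heavily than the aligned one.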

4. Experiments

4.1. Experimental Datasets

4.2. Evaluation Metrics

4.3. Experimental Setup

4.3.1. Experimental Environment Configuration

4.3.2. Experimental Parameter Configuration

4.4. Model Performance Benchmarking

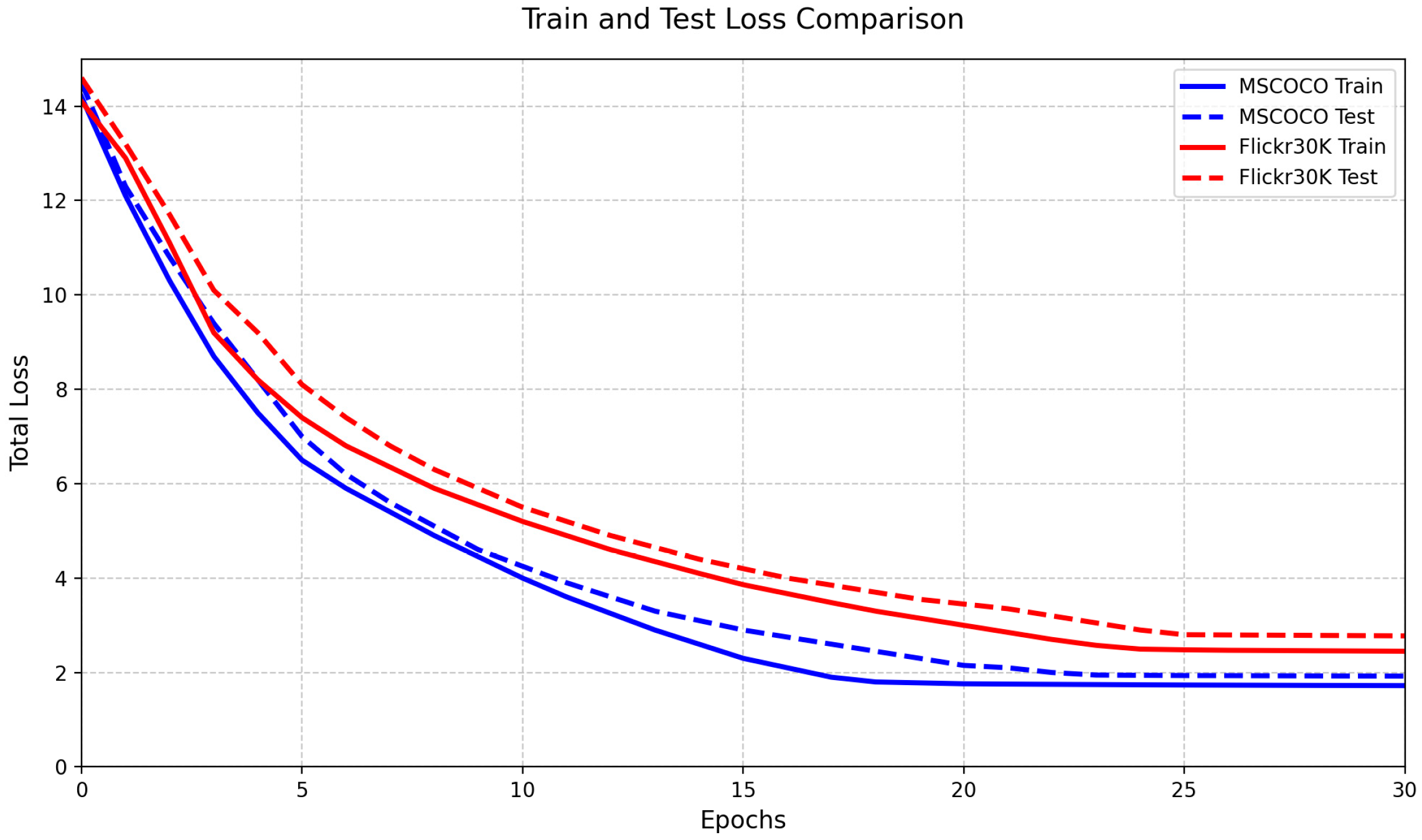

4.4.1. Comparative Analysis of Loss Functions

4.4.2. Comparative Analysis of Performance Metrics

4.5. Ablation Study

- Effectiveness of the Multi-Step Cross-Attention Cross-Modal Fusion Module

- Effectiveness of the External Commonsense Knowledge Enhancement Module

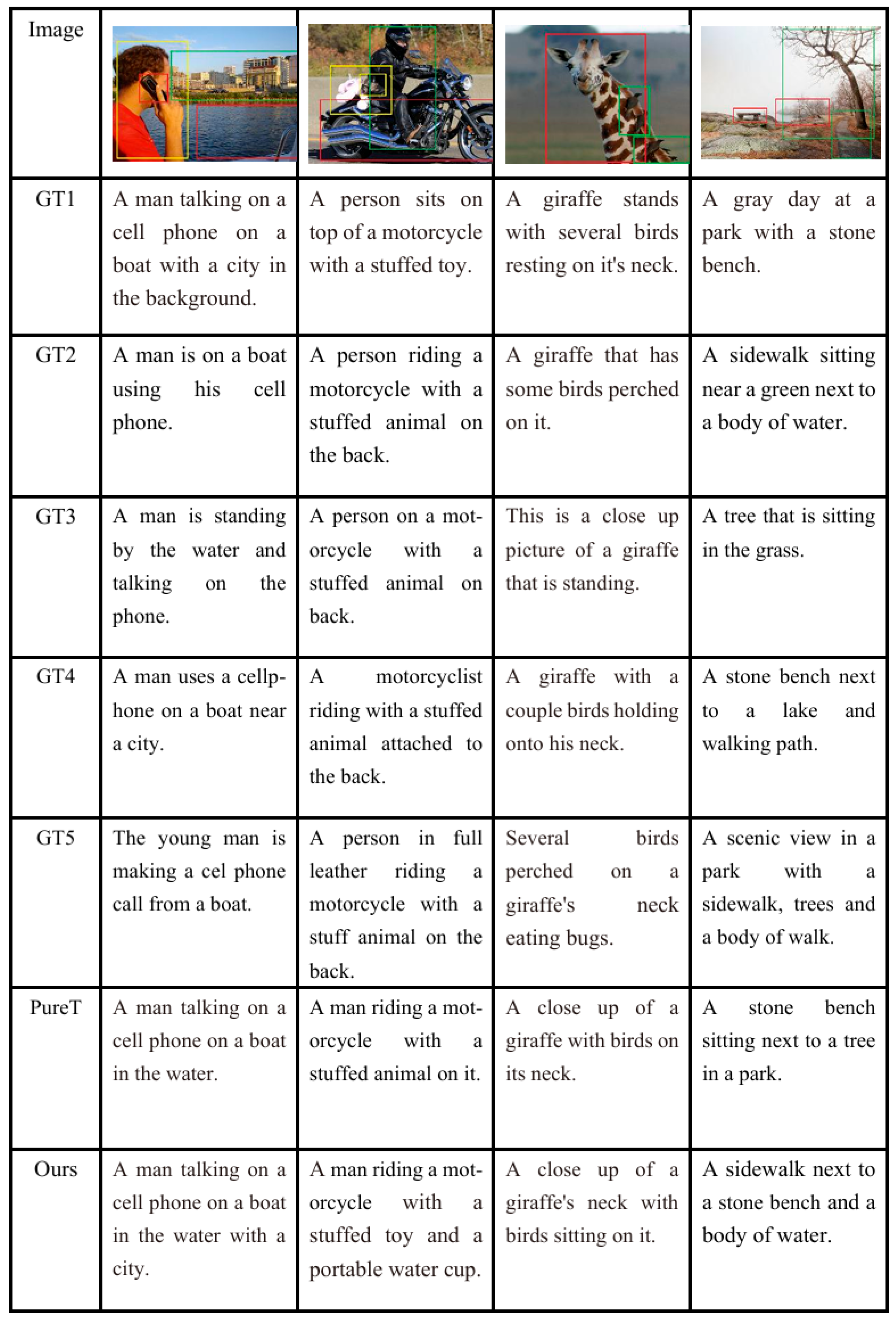

4.6. Qualitative Results Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bernardi, R.; Cakici, R.; Elliott, D.; Erdem, A.; Erdem, E.; Ikizler-Cinbis, N.; Keller, F.; Muscat, A.; Plank, B. Automatic Description Generation from Images: A Survey of Models, Datasets, and Evaluation Measures. J. Artif. Intell. Res. 2016, 55, 409–442.

- Barraco, M.; Cornia, M.; Cascianelli, S.; Baraldi, L.; Cucchiara, R. The Unreasonable Effectiveness of CLIP Features for Image Captioning: An Experimental Analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–20 June 2022; pp. 4662–4670.

- Luo, Z.; Hu, Z.; Xi, Y.; Zhang, R.; Ma, J. I-Tuning: Tuning Frozen Language Models with Image for Lightweight Image Captioning. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–8 June 2023; pp. 1–5.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models from Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021; ML Research Press: New York, NY, USA, 2021; pp. 8748–8763. Available online: https://proceedings.mlr.press/v139/radford21a (accessed on 12 July 2024).

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models Are Unsupervised Multitask Learners. OpenAI Blog. 2019. Available online: https://cdn.openai.com/better-language-models/language_models_are_unsupervised_multitask_learners.pdf (accessed on 1 June 2024).

- Ramos, R.; Martins, B.; Elliott, D.; Kementchedjhieva, Y. SmallCap: Lightweight Image Captioning Prompted with Retrieval Augmentation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 2840–2849.

- Mokady, R.; Hertz, A.; Bermano, A.H. ClipCap: CLIP Prefix for Image Captioning. arXiv 2021, arXiv:2111.09734.

- Li, Y.; Pan, Y.; Yao, T.; Mei, T. Comprehending and Ordering Semantics for Image Captioning. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17990–17999.

- Fang, Z.; Wang, J.; Hu, X.; Liang, L.; Gan, Z.; Wang, L.; Yang, Y.; Liu, Z. Injecting Semantic Concepts into End-to-End Image Captioning. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 17988–17998.

- Zeng, Z.; Zhang, H.; Lu, R.; Wang, D.; Chen, B.; Wang, Z. ConZIC: Controllable Zero-Shot Image Captioning by Sampling-Based Polishing. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 23465–23476.

- Nukrai, D.; Mokady, R.; Globerson, A. Text-only Training for Image Captioning Using Noise-Injected CLIP. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 4055–4063. Available online: https://aclanthology.org/2022.findings-emnlp.299/ (accessed on 16 July 2025).

- Wang, J.; Yan, M.; Zhang, Y.; Sang, J. From Association to Generation: Text-Only Captioning by Unsupervised Cross-Modal Mapping. arXiv 2023, arXiv:2304.13273.

- Liu, H.; Singh, P. ConceptNet—A practical commonsense reasoning tool kit. BT Technol. J. 2004, 22, 211–226.

- Speer, R.; Chin, J.; Havasi, C. ConceptNet 5.5: An Open Multilingual Graph of General Knowledge. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), San Francisco, CA, USA, 4–9 February 2017; pp. 4444–4451.

- Huang, F.; Li, Z.; Wei, H.; Zhang, C.; Ma, H. Boost Image Captioning with Knowledge Reasoning. Mach. Learn. 2020, 109, 2313–2332.

- Li, T.; Wang, H.; He, B.; Chen, C.W. Knowledge-Enriched Attention Network with Group-Wise Semantic for Visual Storytelling. arXiv 2022, arXiv:2203.05346.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Wei, X.; Zhang, T.; Li, Y.; Wang, Y.; Wu, F. Multi-Modality Cross Attention Network for Image and Sentence Matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10938–10947.

- Lukovnikov, D.; Fischer, A. Layout-to-Image Generation with Localized Descriptions Using ControlNet with Cross-Attention Control. arXiv 2024, arXiv:2402.13404.

- Song, Z.; Hu, Z.; Zhou, Y.; Zhao, Y.; Hong, R.; Wang, M. Embedded Heterogeneous Attention Transformer for Cross-Lingual Image Captioning. IEEE Trans. Multimed. 2024, 26, 9008–9020.

- Cao, S.; An, G.; Cen, Y.; Yang, Z.; Lin, W. CAST: Cross-modal retrieval and visual conditioning for image captioning. Pattern Recognit. 2024, 153, 110555.

- Cao, J.; Fang, J.; Meng, Z.; Liang, S. Knowledge graph embedding: A survey from the perspective of representation spaces. ACM Comput. Surv. 2024, 56, 1–42.

- Gu, X.; Chen, G.; Wang, Y.; Zhang, L.; Luo, T.; Wen, L. Text with knowledge graph augmented transformer for video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 19–24 June 2023; pp. 18941–18951.

- Han, S.; Liu, J.; Zhang, J.; Gong, P.; Zhang, X.; He, H. Lightweight dense video captioning with cross-modal attention and knowledge-enhanced unbiased scene graph. Complex Intell. Syst. 2023, 9, 4995–5012.

- Chen, H.; Ding, G.; Liu, X.; Lin, Z.; Liu, J.; Han, J. IMRAM: Iterative Matching with Recurrent Attention Memory for Cross-Modal Image-Text Retrieval. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12673–12682.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Bordes, A.; Usunier, N.; Garcia-Durán, A.; Weston, J.; Yakhnenko, O. Translating Embeddings for Modeling Multi-Relational Data. In Proceedings of the 27th International Conference on Neural Information Processing Systems—Volume 2 (NIPS’13), Lake Tahoe, NV, USA, 5–10 December 2013; Curran Associates Inc.: Red Hook, NY, USA, 2013; pp. 2787–2795. Available online: https://dl.acm.org/doi/10.5555/2999792.2999923 (accessed on 16 July 2025).

- Van Houdt, G.; Mosquera, C.; Nápoles, G. A Review on the Long Short Term Memory Model. Artif. Intell. Rev. 2020, 53, 5929–5955.

- Wang, J.; Ge, C.; Li, Y.; Zhao, H.; Fu, Q.; Cao, K.; Jung, H. A Two-Layer Network Intrusion Detection Method Incorporating LSTM and Stacking Ensemble Learning. Comput. Mater. Contin. 2025, 83, 5129–5153.

- Bisong, E. The Multilayer Perceptron (MLP). In Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners; Apress: Springer Nature: Berkeley, CA, USA, 2019; pp. 401–405.

- Berger, U.; Stanovsky, G.; Abend, O.; Frermann, L. Surveying the Landscape of Image Captioning Evaluation: A Comprehensive Taxonomy, Trends and Metrics Analysis. arXiv 2024, arXiv:2408.04909.

- Evtikhiev, M.; Bogomolov, E.; Sokolov, Y.; Bryksin, T. Out of the BLEU: How should we assess quality of the Code Generation models? J. Syst. Softw. 2023, 203, 111741.

- Lin, C. ROUGE: A Package for Automatic Evaluation of Summaries. In Annual Meeting of the Association for Computational Linguistics; Association for Computational Linguistics: Stroudsburg, PA, USA, 2004; Available online: https://api.semanticscholar.org/CorpusID:964287 (accessed on 20 July 2025).

- Banerjee, S.; Lavie, A. METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; Association for Computational Linguistics: Stroudsburg, PA, USA, 2005; pp. 65–72. Available online: https://aclanthology.org/W05-0909/ (accessed on 20 July 2025).

- Vedantam, R.; Zitnick, C.L.; Parikh, D. CIDEr: Consensus-Based Image Description Evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 4566–4575.

- Anderson, P.; Fernando, B.; Johnson, M.; Gould, S. SPICE: Semantic Propositional Image Caption Evaluation. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Springer Nature: Cham, Switzerland, 2016; pp. 382–398.

- Cornia, M.; Stefanini, M.; Baraldi, L.; Cucchiara, R. Meshed-Memory Transformer for Image Captioning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10575–10584.

- Ji, J.; Luo, Y.; Sun, X.; Chen, F.; Luo, G.; Wu, Y.; Gao, Y.; Ji, R. Improving Image Captioning by Leveraging Intra- and Inter-Layer Global Representation in Transformer Network. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; pp. 1655–1663.

- Song, Z.; Zhou, X.; Dong, L.; Tan, J.; Guo, L. Direction Relation Transformer for Image Captioning. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual, 20–24 October 2021; pp. 5056–5064.

- Tewel, Y.; Shalev, Y.; Schwartz, I.; Wolf, L. Zerocap: Zero-Shot Image-to-Text Generation for Visual-Semantic Arithmetic. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17918–17928.

- Wang, Y.; Xu, J.; Sun, Y. End-to-End Transformer Based Model for Image Captioning. In Proceedings of the 36th AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; pp. 2585–2594.

- Wang, J.; Wang, W.; Wang, L.; Wang, Z.; Feng, D.D.; Tan, T. Learning Visual Relationship and Context-Aware Attention for Image Captioning. Pattern Recognit. 2020, 98, 107075.

- Zhong, Y.; Wang, L.; Chen, J.; Yu, D.; Li, Y. Comprehensive Image Captioning via Scene Graph Decomposition. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 211–229.

- Zhang, W.; Shi, H.; Tang, S.; Xiao, J.; Yu, Q.; Zhuang, Y. Consensus Graph Representation Learning for Better Grounded Image Captioning. arXiv 2021, arXiv:2112.00974.

- Du, R.; Zhang, W.; Li, S.; Chen, J.; Guo, Z. Spatial Guided Image Captioning: Guiding Attention with Object’s Spatial Interaction. IET Image Process. 2024, 18, 3368–3380.

- Denton, R.; Díaz, M.; Kivlichan, I.; Prabhakaran, V.; Rosen, R. Whose Ground Truth? Accounting for Individual and Collective Identities Underlying Dataset Annotation. arXiv 2021, arXiv:2112.04554.

- Lei, Z.; Zhou, C.; Chen, S.; Huang, Y.; Liu, X. A Sparse Transformer-Based Approach for Image Captioning. IEEE Access 2020, 8, 213437–213446.

- Wang, L.; Hu, Y.; Xia, Z.; Chen, E.; Jiao, M.; Zhang, M.; Wang, J. Video Description Generation Method Based on Contrastive Language–Image Pre-Training Combined Retrieval-Augmented and Multi-Scale Semantic Guidance. Electronics 2025, 14, 299.

- Chen, T.; Li, Z.; Wei, J.; Xian, T. Mixed Knowledge Relation Transformer for Image Captioning. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022.

- Li, Z.; Tang, H.; Peng, Z.; Qi, G.; Tang, J. Knowledge-Guided Semantic Transfer Network for Few-Shot Image Recognition. IEEE Trans. Neural Netw. Learn. Syst. 2023, 1–15.

| Parameter Name | Parameter Value |
|---|---|
| lr (learning rate) | 1 × 10⁻⁴ |
| lr decay | 0.9 |
| lr_step_size | 1 |
| batch_size | 64 |
| Optimizer | AdamW |
| Beam size | 5 |
| CLIPViT | ViT-B/32 |
| CLIPEncoder_dim | 512 |
| GPT-2_dim | 768 |
| Epochs | 30 |
| iterations_K | 5 |
| Anchor_scale | [8, 16, 32] |
| RoI pooling size | 7 × 7 |
| RPN_POST_NMS_TOP_N_TEST | 1000 |
| CrossModal_Adapter_dim | 2048 → 512 |
| Weight decay rate | 1 × 10⁻² |
| Dropout probability | 0.3 |
| Temperature (τ) | 0.07 |
| Random seed | 42 |
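To make the learning-rate settings concrete, the sketch below assumes they describe a step-decay schedule in which the rate is multiplied by the decay factor once every `lr_step_size` epochs. That reading is an assumption, and the function name `learning_rate` is hypothetical.

```python
def learning_rate(epoch, base_lr=1e-4, decay=0.9, step_size=1):
    """Step decay: multiply base_lr by `decay` once every `step_size` epochs."""
    return base_lr * decay ** (epoch // step_size)

# Per-epoch learning rates over the 30 training epochs listed in the table.
schedule = [learning_rate(epoch) for epoch in range(30)]
```

Under this reading the rate starts at 1 × 10⁻⁴ and falls to roughly 4.7 × 10⁻⁶ by the final epoch.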

| Method Category | Method | B-1 | B-4 | M | R | C | S |
|---|---|---|---|---|---|---|---|
| Semantic Perception Enhancement models | M2Transformer [37] | 80.8 | 39.1 | 29.2 | 58.6 | 131.2 | 22.6 |
| Semantic Perception Enhancement models | GET [38] | 81.5 | 39.5 | 29.3 | 58.9 | 131.6 | 22.8 |
| Spatial Relation Enhancement models | DRT [39] | 81.7 | 40.4 | 29.5 | 59.3 | 133.2 | 23.3 |
| Zero-Shot Learning models | Zerocap [40] | 81.9 | 40.7 | 29.8 | 58.7 | 136.4 | 23.5 |
| Grid Feature Enhancement models | PureT [41] | 82.1 | 40.9 | 30.2 | 60.1 | 138.2 | 24.2 |
| The method of this article | Ours | * 82.7 | * 42.1 | * 30.9 | * 60.4 | * 142.6 | * 24.3 |

| Method Category | Method | B-1 | B-4 | M | R | C | S |
|---|---|---|---|---|---|---|---|
| Semantic Perception Enhancement models | A_R_L [42] | 69.8 | 27.7 | 21.5 | 48.5 | 57.4 | - |
| Semantic Perception Enhancement models | Sub-GC [43] | 70.7 | 28.5 | 22.3 | - | 61.9 | 16.4 |
| Structured Semantic Enhancement models | CGRL [44] | 72.5 | 27.8 | 22.4 | - | 65.2 | 16.8 |
| Spatial Relation Enhancement models | SGA [45] | 73.2 | 30.4 | 22.6 | 50.8 | 68.4 | 16.4 |
| The method of this article | Ours | * 76.3 | * 33.8 | * 30.5 | * 52.6 | * 78.4 | * 16.8 |

| Baseline | Multi-Step Cross-Attention | External Knowledge Augmentation | B-1 | B-4 | M | R | C | S | SUM |
|---|---|---|---|---|---|---|---|---|---|
| √ | | | 80.2 | 39.8 | 28.3 | 57.9 | 134.5 | 22.4 | 363.1 |
| √ | √ | | 81.6 | 41.9 | 29.8 | 59.7 | 140.4 | 23.6 | 377.0 |
| √ | √ | √ | * 82.7 | * 42.1 | * 30.9 | * 60.4 | * 142.6 | * 24.3 | * 383.0 |

| Baseline | Multi-Step Cross-Attention | External Knowledge Augmentation | B-1 | B-4 | M | R | C | SUM |
|---|---|---|---|---|---|---|---|---|
| √ | | | 74.5 | 32.4 | 29.1 | 49.7 | 65.8 | 251.5 |
| √ | √ | | 75.8 | 33.1 | 30.3 | 51.9 | 73.5 | 264.6 |
| √ | √ | √ | * 76.3 | * 33.8 | * 30.5 | * 52.6 | * 78.4 | * 271.6 |
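The SUM column in the two ablation tables is the plain sum of the individual metric scores, so each module's contribution can be read off as a difference between rows. The short check below recomputes those sums from the reported values. The dictionary keys and helper name are illustrative, and the tables are referred to only by their order of appearance.

```python
# Metric rows copied from the two ablation tables (SUM column excluded).
FIRST_TABLE = {
    "baseline": [80.2, 39.8, 28.3, 57.9, 134.5, 22.4],
    "+ cross-attention": [81.6, 41.9, 29.8, 59.7, 140.4, 23.6],
    "+ cross-attention + knowledge": [82.7, 42.1, 30.9, 60.4, 142.6, 24.3],
}
SECOND_TABLE = {
    "baseline": [74.5, 32.4, 29.1, 49.7, 65.8],
    "+ cross-attention": [75.8, 33.1, 30.3, 51.9, 73.5],
    "+ cross-attention + knowledge": [76.3, 33.8, 30.5, 52.6, 78.4],
}

def metric_sums(table):
    """Return the rounded sum of metric scores for each configuration."""
    return {name: round(sum(scores), 1) for name, scores in table.items()}

first_sums = metric_sums(FIRST_TABLE)
second_sums = metric_sums(SECOND_TABLE)

# Gain in SUM from adding the multi-step cross-attention module to the baseline.
first_gain_attention = round(first_sums["+ cross-attention"] - first_sums["baseline"], 1)
# Further gain in SUM from adding external knowledge augmentation on top of it.
first_gain_knowledge = round(
    first_sums["+ cross-attention + knowledge"] - first_sums["+ cross-attention"], 1
)
```

The same subtraction applies to the second table and to any single metric column.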

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, L.; Jiao, M.; Li, Z.; Zhang, M.; Wei, H.; Ma, Y.; An, H.; Lin, J.; Wang, J. Image Captioning Model Based on Multi-Step Cross-Attention Cross-Modal Alignment and External Commonsense Knowledge Augmentation. Electronics 2025, 14, 3325. https://doi.org/10.3390/electronics14163325
