1. Introduction

The rapid development of edge and fog computing has driven the deployment of geo-distributed systems [1]. Kubernetes (K8s), the most widely used container orchestration tool, has been extensively studied to adapt it to these distributed environments [2,3]. When applying K8s to edge computing environments where resources are often limited, clearly defining resource usage limits is essential. A Resource Quota sets the maximum amount of resources each service or tenant can utilize. It prevents any single service from monopolizing resources and enables efficient management of limited resources at edge computing sites [4].

However, current K8s-based management platforms only provide manual resource quota configuration. This might be an issue when edge computing sites serve multiple simultaneous services that have dynamic traffic patterns (alternating surge and light traffic periods), for example, e-commerce applications [5] (high traffic surges during major sale events), video streaming [6] (huge request volumes during big sports events), or Artificial Intelligence (AI)/Machine Learning (ML) applications [7] (high demand during training periods). During high-demand periods, although K8s-based systems are normally assisted by pod auto-scaling mechanisms, slow quota allocation caused by manual traffic monitoring and manual quota configuration can prevent the necessary pods from being scaled up. This issue might cause performance degradation, pod failures, or service disruptions. Meanwhile, simultaneous services at the same edge site do not have their low- and high-demand periods at the same time. During the low-demand periods of a specific service, redundant or excessive quota allocation might occupy valuable resources that other services may need, thereby affecting their performance.

Our proposed approach in this article addresses two research gaps related to resource quota auto-scaling in edge environments. First, there is no prior research work about dynamic multi-cluster quota auto-scaling. Previous works have only addressed pod- and node-level auto-scaling problems. Second, unlike other machine learning prediction-based auto-scaling approaches, we propose a backup mechanism to handle incorrect predictions and unpredictable scenarios.

Regarding the first gap, the auto-scaling level, previous studies have focused only on pod and node auto-scaling, without addressing quota auto-scaling. Works on pod auto-scaling have proposed solutions to enhance two basic Kubernetes mechanisms: the Horizontal Pod Auto-scaler (HPA) and the Vertical Pod Auto-scaler (VPA). HPA automatically adjusts the number of pod replicas based on workload demands, enabling applications to handle fluctuations in traffic or resource utilization effectively [8]. Meanwhile, VPA modifies resource allocations, such as CPU and memory, for individual pods, optimizing performance by aligning resources with actual needs [9]. Studies on pod auto-scaling enhancement, such as [10,11,12], address the weaknesses of HPA and VPA and improve traffic adaptation capabilities and resource utilization. Meanwhile, K8s node auto-scaling studies propose solutions to dynamically add or remove nodes to meet cluster-wide resource demands [13,14]. Node- and pod-level auto-scaling solutions do not consider the service deployment scenario in which a resource quota is defined. As previously discussed, a mismatch between the allocated resource quota and the actual resources required for pod and node auto-scaling can negatively impact service performance and lead to inefficient resource utilization.

While there are currently no available research works on automatic quota scaling, several open-source and third-party tools exist for multi-cluster quota management, such as Karmada [15], Open Policy Agent (OPA) Gatekeeper [16], and Kyverno [17]. These tools can enforce cluster-wide quota thresholds, prevent misuse or over-provisioning, and automatically generate quota definitions when Kubernetes namespaces are created. However, they do not offer mechanisms for dynamically adjusting quotas, that is, scaling them up or down based on real-time demand. Enabling this capability requires a custom controller that can integrate with these multi-cluster quota management tools. In this paper, we use Karmada as an example and extend its framework with a hybrid quota auto-scaling mechanism.

Regarding the second gap, machine learning prediction-based methods for auto-scaling, previous studies did not take into account inaccurate predictions and unforeseen scenarios. ML prediction-based approaches, such as those of [18,19,20,21,22], adjust auto-scaling configurations based on forecast resource demands. Hence, these solutions enable the K8s system to quickly adapt to service traffic fluctuations and avoid negative impacts on service performance. However, machine learning prediction models cannot guarantee perfect accuracy. Even slight inaccuracies can impact service performance. Moreover, service traffic and resource demand patterns evolve over time. Sudden, unforeseen changes that deviate from previously learned patterns (e.g., a midnight flash-sale traffic burst or the launch of a new service at the edge) can also lead to performance issues. This limitation underscores the necessity of a supplementary mechanism that can serve as a fallback to quota prediction, ensuring that quota adjustments remain both accurate and adequate to meet real-time demands, even when the prediction model underperforms.

To address these two problems, this study proposes a Resource Quota Scaling Framework that combines machine learning-based proactive and reactive mechanisms to automatically adjust quotas. Our framework enhances Karmada [15], an open-source control-plane solution designed for managing applications and resource quotas in edge environments. Karmada allows service operators to manually configure a Federated Resource Quota (FRQ) resource that enables global and fine-grained management of quotas for applications under the same namespace running on different clusters. Our proposed proactive and reactive auto-scaling mechanisms dynamically configure this Karmada resource to achieve efficient resource quota allocation.

The main contributions of this work are as follows:

Proactive Resource Quota Scaling: A machine learning-based approach to predict and scale resource quotas to accommodate future workload demands effectively.

Reactive Resource Quota Scaling: A method to dynamically adjust quotas when inaccurate predictions or unpredictable events are detected, correcting unsuitable decisions made by Proactive Quota Scaling.

Integrated Scaling Framework: A comprehensive framework that not only adjusts resource quotas but also manages the scaling up and down of worker nodes, enabling efficient and flexible resource management in response to workload requirements.

2. Related Works

In this section, we review recent studies relevant to our proposed framework, covering resource auto-scaling, orchestration strategies, and the integration of machine learning in Kubernetes auto-scaling.

Some studies on multi-cluster service orchestration, including [23,24,25], have emphasized efficient workload distribution to optimize performance and resource utilization in distributed environments. However, workload distribution cannot reach its full potential without appropriate resource scaling mechanisms to ensure sufficient resources are available for workloads, especially during sudden surges in demand. This limitation can lead to workload placement failing to meet actual resource requirements, negatively affecting system performance and responsiveness. This study addresses these shortcomings by integrating a dynamic quota scaling mechanism to balance resources according to workload fluctuations, ensuring stability and efficiency in multi-cluster systems.

Previous studies on auto-scaling did not consider auto-scaling at the quota level; they can be categorized into pod-level and node-level auto-scaling.

Regarding pod scaling methods, K8s provides auto-scaling mechanisms such as the HPA and VPA [8,9], which enable automatic adjustment of pod numbers and resource allocations. While these tools are effective for managing resources, their limitations in handling resource optimization under dynamic workload conditions have led to significant research into hybrid auto-scaling approaches. Study [10] proposes a hybrid auto-scaling method that combines horizontal and vertical scaling with Long Short-Term Memory (LSTM)-based workload prediction to optimize resource utilization in single-cluster environments. However, this approach does not address dynamic resource quota adjustments, leading to potential quota breaches when workloads experience sudden surges. In the context of serverless environments, study [11] introduces a hybrid auto-scaling framework for Knative that leverages traffic prediction to optimize scaling and reduce Service Level Objective (SLO) violations. Despite its contributions, it does not tackle the issue of managing shared resource quotas, resulting in possible resource contention when multiple applications scale simultaneously. Similarly, study [12] applies reinforcement learning to minimize quota violations and improve resource efficiency but assumes static quota configurations, limiting its adaptability to highly dynamic workloads. Although these studies have significantly advanced auto-scaling techniques, they do not resolve the challenge of applications exceeding predefined resource quotas during scaling. This highlights the critical need for a dynamic resource quota scaling mechanism that can adapt quotas in real time to ensure efficient resource allocation and system stability in complex, multi-cluster environments.

Regarding node scaling methods, studies on scaling worker nodes to expand cluster resources and meet workload demands typically follow one of two approaches. Study [13] proposes a combined proactive and reactive auto-scaler mechanism for both Kubernetes nodes and pods. Study [14] introduces the concept of standby worker nodes, which are pre-created and switched to an active state when needed, significantly reducing response times compared to creating nodes from scratch. While these approaches effectively address global resource scaling, they do not tackle the challenge of precise resource allocation to specific namespaces or quota adjustments to accommodate high-demand workloads. This highlights the need for more flexible resource quota management mechanisms in multi-tenant environments.

Regarding methods for optimizing Kubernetes auto-scaling, machine learning-based prediction is a frequently used method. Studies often employ techniques such as LSTM, Informer, and Autoregressive Integrated Moving Average (ARIMA) to enhance prediction accuracy while minimizing inefficiencies in resource allocation. In [18], LSTM was applied to forecast resource demands based on both historical and real-time data, enabling proactive container scaling to meet future demand. Study [19] compared the performance of LSTM and ARIMA models and subsequently selected the most suitable one for their proactive Kubernetes auto-scaler. The authors leveraged the predictive capabilities of these models to detect irregular traffic fluctuations and proactively scale pods, thereby preventing resource shortages and service crashes. In another approach [20], the authors evaluated the performance of Informer, Recurrent Neural Network (RNN), LSTM, and ARIMA in predicting online application workloads. Their results demonstrated the effectiveness of these models for predictive auto-scaling, showing improvements over the default K8s HPA in terms of reduced application response time and lower SLO violation rates. Similarly, ARIMA and LSTM were also employed in [21,22] for predictive auto-scaling across various cloud, edge, and IoT workloads. However, a common limitation of all machine learning-based approaches is that no model can guarantee perfect forecasting accuracy. Even minor prediction errors may adversely impact service performance. Moreover, traffic and resource demand patterns are inherently dynamic and may change over time. Abrupt and unforeseen deviations from learned patterns can also lead to performance degradation.

In conclusion, first, there has been no research focusing on auto-scaling at the quota level for member clusters in K8s multi-cluster environments. Previous works only addressed auto-scaling at the pod and node levels. Second, while machine learning-based prediction is a popular auto-scaling approach that can also be applied at the quota level, mechanisms for handling inaccurate predictions and unforeseen cases are lacking.

To fill these gaps, we propose a resource quota auto-scaling framework that combines a machine learning prediction-based proactive quota auto-scaling mechanism and a reactive quota auto-scaling mechanism for handling inaccurate predictions and unforeseeable cases. Our proactive and reactive mechanisms are implemented on top of Karmada’s resource quota management feature to support efficient, dynamic, and automatic quota auto-scaling in K8s multi-cluster systems.

3. Proposed Hybrid Resource Quota Scaling Framework for Kubernetes-Based Edge Computing Systems

Our proposed system, the Resource Quota Scaling Framework, is designed to dynamically adjust resource quotas in multi-cluster K8s environments, ensuring efficient resource allocation for workloads with fluctuating demands. Operating on top of the K8s and Karmada’s Application Programming Interfaces (APIs), the framework integrates monitoring, workload forecasting, proactive scaling, and reactive scaling mechanisms to optimize resource management across member clusters.

3.1. Framework Component Overview

Karmada is a widely used open-source project designed for multi-cloud and multi-cluster K8s environments. A key feature provided by Karmada is FRQ, which enables cross-cluster resource synchronization by propagating resource quota definitions from the management cluster to the edge clusters. FRQ allows administrators to centrally define and adjust Kubernetes resource quotas for the same application deployed across multiple clusters, without the need to manually configure namespaces and resource quotas in each cluster. With this capability, Karmada FRQ facilitates synchronized monitoring and control of resource consumption for each service, while uniformly updating resource quotas. This minimizes management complexity and ensures that applications operate efficiently without resource-related issues.
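For orientation, the shape of an FRQ object can be sketched as a plain Python dictionary. This is a minimal sketch rather than a definitive manifest: the helper `build_frq`, the namespace `shop`, the cluster names, and the CPU figures are hypothetical, and the field names (`overall`, `staticAssignments`, `clusterName`, `hard`) are assumed to follow the Karmada `policy.karmada.io/v1alpha1` API and should be checked against the deployed Karmada version.

```python
def build_frq(name, namespace, overall_cpu, per_cluster_cpu):
    """Return a FederatedResourceQuota manifest as a plain dict.

    Field names are an assumption based on the Karmada
    policy.karmada.io/v1alpha1 API; verify them before use.
    """
    return {
        "apiVersion": "policy.karmada.io/v1alpha1",
        "kind": "FederatedResourceQuota",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Total quota for the namespace across all member clusters.
            "overall": {"cpu": str(overall_cpu)},
            # Per-cluster share that is propagated to each member cluster.
            "staticAssignments": [
                {"clusterName": cluster, "hard": {"cpu": str(cpu)}}
                for cluster, cpu in sorted(per_cluster_cpu.items())
            ],
        },
    }


# Hypothetical example: 100 CPUs overall, split across two edge clusters.
frq = build_frq("shop-quota", "shop", 100, {"edge-a": 60, "edge-b": 40})
```

Scaling a quota then amounts to changing the `overall` and `hard` values of this single object instead of editing a ResourceQuota in every member cluster.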

Since our work focuses on resource quota scaling in multi-cluster environments, we leverage the Karmada FRQ feature to take advantage of its centralized resource quota management capabilities. However, to overcome the limitations of static FRQ configuration in highly dynamic workload scenarios, we developed custom Kubernetes controllers capable of both proactive and reactive adjustments to Karmada FRQ. These adjustments enable the system to meet fluctuating workload demands while maintaining service request performance.

Our solution integrates seamlessly with existing multi-cluster cloud management systems by utilizing Karmada’s built-in orchestration capabilities and extending them through custom Kubernetes controllers, which follow Kubernetes’ native extension paradigm. This approach ensures compatibility and maintainability in Kubernetes-based systems such as Karmada.

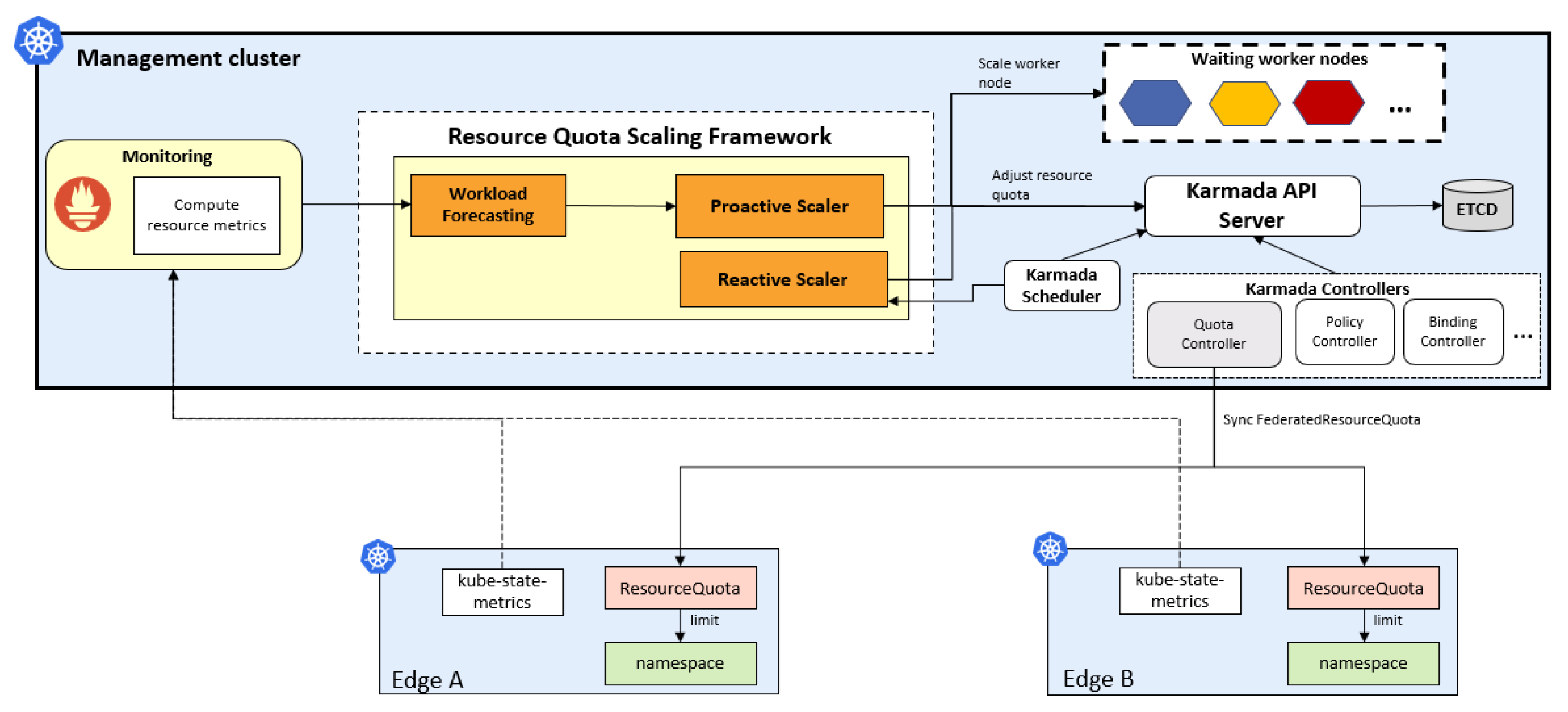

The system architecture is shown in Figure 1, and the flow of its components is described below.

Monitoring and Metrics Collection: The system collects real-time metrics from edge clusters using Prometheus [26], including resource usage data (CPU, memory) and namespace-level metrics via kube-state-metrics [27]. These metrics serve as the foundation for forecasting and scaling decisions.

Workload Forecasting: This component uses machine learning models to predict future workload demands based on historical and real-time metrics. These forecasts enable the system to anticipate changes in resource requirements and plan scaling actions proactively.

Quota Proactive Scaling: This module anticipates resource demands based on workload forecasts and adjusts resource quotas in advance. By scaling quotas proactively, this mechanism ensures that resources are available to meet predicted workload surges, reducing the risk of resource shortages or performance degradation during peak demands.

Quota Reactive Scaling: This component responds to scenarios where resource demands cannot be predicted accurately or deviate significantly from forecasts. It continuously monitors real-time metrics and adjusts quotas dynamically to ensure workloads have sufficient resources. Reactive scaling acts as a safety mechanism to handle unexpected workload fluctuations or inaccuracies in predictions, maintaining system reliability.

Quota Adjustment and Synchronization: The Quota Controller integrates with the Karmada API Server to apply scaling decisions across member clusters. It updates the FederatedResourceQuota dynamically to allocate resources appropriately among namespaces, ensuring high-demand workloads are adequately supported.

Worker Node Scaling: When cluster-wide resources become insufficient, the system activates pre-provisioned worker nodes from a pool of waiting nodes. This ensures that additional physical resources are available quickly, minimizing delays in meeting scaling requirements.
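As an illustration of the monitoring step in the flow above, the following sketch builds the kind of Prometheus queries such a component might issue. It is an assumption-laden example, not the queries used in the framework: the helper names and the server address are hypothetical, `container_cpu_usage_seconds_total` and `kube_resourcequota` are the metric names commonly exposed by cAdvisor and kube-state-metrics, and the `resource` label value depends on how the quota is defined. No network call is made.

```python
from urllib.parse import urlencode


def cpu_usage_query(namespace, window="1m"):
    """PromQL for the CPU cores consumed by all containers in a namespace."""
    return (
        "sum(rate(container_cpu_usage_seconds_total"
        f'{{namespace="{namespace}"}}[{window}]))'
    )


def quota_query(namespace, resource="requests.cpu", kind="hard"):
    """PromQL for the configured (hard) or consumed (used) namespace quota."""
    return (
        "kube_resourcequota"
        f'{{namespace="{namespace}",resource="{resource}",type="{kind}"}}'
    )


def instant_query_url(base_url, promql):
    """Build the URL of a Prometheus instant query (/api/v1/query)."""
    params = urlencode({"query": promql})
    return base_url.rstrip("/") + "/api/v1/query?" + params


# Hypothetical Prometheus address and namespace.
url = instant_query_url("http://prom:9090/", cpu_usage_query("shop"))
```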

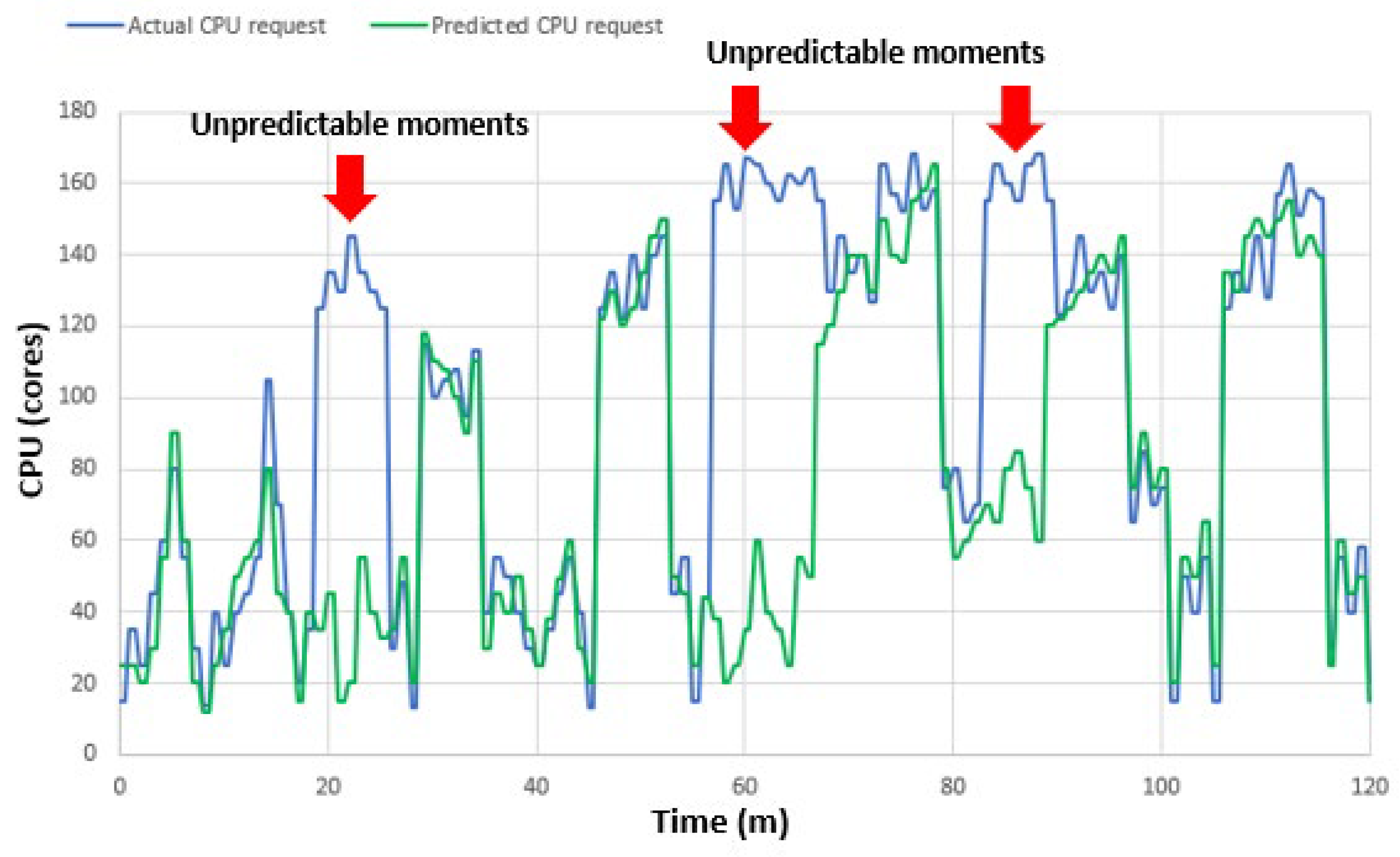

3.2. Workload Forecasting

The workload forecasting component enables proactive adjustments to resource quotas in multi-cluster Kubernetes environments. Accurate predictions of resource demands are essential for aligning resource allocation with workload requirements, minimizing inefficiencies such as underutilization or over-provisioning. We applied the LSTM time series forecasting model, which was used in several of our previous works [10,11].

The LSTM model was trained using the Alibaba Cloud cluster trace dataset [28], which contains detailed resource usage data from real-world environments, making it an ideal candidate for resource forecasting experiments. Following the approaches in our previous works [10,11], we demonstrate CPU resource forecasting as a representative example for other types of resources. The LSTM model is designed to predict the CPU resource demands of individual namespaces, enabling precise adjustments to resource allocations at a granular level. It employs a sliding window technique, where the historical data from the previous 10 min is used as input to predict CPU usage for the subsequent one-minute interval. Further details of the LSTM model, including its architecture, hyperparameter tuning, and performance evaluation, can be found in [10,11].
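The sliding window described above can be sketched as follows. This is a minimal illustration of how training pairs might be built, assuming one CPU sample per minute (the sampling interval is not stated here); the function name `make_windows` and the toy series are hypothetical, and the model itself is omitted.

```python
def make_windows(series, window=10, horizon=1):
    """Split a per-minute CPU series into (input window, target) pairs.

    Each input holds `window` consecutive samples (10 min of history) and
    the target is the sample `horizon` steps after the window (next minute).
    """
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        inputs = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((inputs, target))
    return pairs


# Toy series of 12 one-minute samples: 0.0, 1.0, ..., 11.0
cpu = [float(v) for v in range(12)]
pairs = make_windows(cpu)
```

With 12 samples this yields two pairs: minutes 0 to 9 predicting minute 10, and minutes 1 to 10 predicting minute 11.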

The evaluation of the LSTM model was conducted using two widely accepted metrics: Root Mean Square Error (RMSE) and Mean Absolute Error (MAE). Table 1 compares the performance of LSTM with several traditional approaches, including ARIMA, Linear Regression (LR), Elastic Net (EN), Ordinary Least Squares (OLS), and Non-Negative Least Squares (NNLS). The results show that LSTM consistently achieves the lowest RMSE and MAE values, with an RMSE of 0.683 and an MAE of 0.482 on the Alibaba Cloud cluster trace dataset. These results demonstrate that the LSTM model is sufficiently reliable to handle the complexity and variability of workload patterns, which are often characterized by rapid changes and interdependencies.
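For reference, the two error metrics follow their standard definitions and can be computed as below. The sample values are illustrative only and are unrelated to the results in Table 1.

```python
import math


def rmse(actual, predicted):
    """Root Mean Square Error: square root of the mean squared difference."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mae(actual, predicted):
    """Mean Absolute Error: mean of the absolute differences."""
    absolute = [abs(a - p) for a, p in zip(actual, predicted)]
    return sum(absolute) / len(absolute)


# Illustrative values: one of three forecasts is off by 2 CPU units.
actual = [1.0, 2.0, 3.0]
predicted = [1.0, 2.0, 5.0]
```

RMSE penalizes large individual errors more heavily than MAE, which is why both are usually reported together.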

Incorporating LSTM-based workload forecasting into our proposed system not only ensures precise resource allocation but also reduces the risks associated with under-provisioning or over-provisioning. The model helps our system adapt to dynamic workload fluctuations and enables seamless proactive quota adjustments, which in turn support service SLO guarantees and avoid wasted resource allocation.

It should be emphasized that the objective of this work is not to propose an optimal resource workload forecasting model. Instead, this study aims to design a system that integrates workload forecasting with a backup mechanism to address inaccurate predictions and unforeseen scenarios. Consequently, how the accuracy of the LSTM model affects the resource quota scaling performance is not evaluated herein. Any sufficiently accurate time series forecasting model may serve as a replacement.

3.3. Quota Proactive Scaling and Worker Node Scaling

Proactive Quota Scaling (or Proactive Scaler) is implemented as a custom K8s controller that receives predicted workload inputs from the workload forecasting models. Based on these predictions, it dynamically adjusts resource quotas and worker nodes in multi-cluster K8s environments by modifying the Karmada FRQ K8s Custom Resource (CR). Algorithm 1 shows the implementation details of this mechanism, which addresses both increasing and decreasing resource demands to achieve efficient scaling.

When an increase in workload is predicted (ω_pred > CurrentQuota), the algorithm first evaluates cluster resource availability to determine whether the current resources (CurrentClusterResources) can meet the predicted demand. If sufficient, the quota is directly adjusted to match the predicted workload, calculated as AdjustedQuota = ω_pred, and updated via the FRQ CR. This ensures prompt allocation of resources without interrupting deployments. If available resources are insufficient, the algorithm calculates the additional resources required, defined as Shortfall = ω_pred − CurrentClusterResources. It then determines the number of additional worker nodes required and reactivates waiting nodes that are currently paused or hibernated. Node hibernation/pause in our context refers to a virtual machine (VM) hibernation technique (e.g., Azure VM Hibernation [28], OpenStack VM Pause [29]). When a VM is paused or hibernated, it stops running while its working state is preserved (in RAM for a paused VM, on disk for a hibernated VM). Upon reactivation, the VM resumes from its stored state, significantly reducing boot time compared to a full initialization. In our experimental testbed, WaitingWorkerNodes (WWNs) are activated by unpausing previously paused OpenStack VMs. Once the waiting nodes are activated, the quota is adjusted to accommodate the predicted workload, allowing deployments to proceed without delay. This proactive approach prevents deployment failures and ensures that high-demand workloads are adequately supported.
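The scale-up decision described above can be sketched as a small pure function. This is a minimal sketch under stated assumptions, not the controller itself: the function name `plan_scale_up`, the uniform per-node capacity `node_capacity`, and the behavior when too few waiting nodes exist (here an exception) are hypothetical, since the text does not specify them.

```python
import math


def plan_scale_up(predicted, current_quota, cluster_resources,
                  node_capacity, waiting_nodes):
    """Return (new_quota, nodes_to_activate) for a predicted workload.

    Assumes every waiting node contributes `node_capacity` resource units.
    """
    if predicted <= current_quota:
        # No predicted increase: nothing to do in the scale-up path.
        return current_quota, 0
    if cluster_resources >= predicted:
        # Enough capacity already: only the quota changes.
        return predicted, 0
    # Shortfall = predicted workload - current cluster resources.
    shortfall = predicted - cluster_resources
    needed = math.ceil(shortfall / node_capacity)
    if needed > waiting_nodes:
        # Assumption: the source does not say what happens in this case.
        raise RuntimeError("not enough waiting worker nodes")
    # The quota is raised once the waiting nodes have been activated.
    return predicted, needed
```

For example, with a predicted demand of 120, a cluster capacity of 100, and 16 units per node, the shortfall of 20 requires two waiting nodes.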

Conversely, when a workload decrease is predicted (ω_pred < CurrentQuota), the algorithm initiates scale-down logic to optimize resource usage. The quota is reduced to the lowest threshold, among the levels manually predefined by the service operator, that still covers the predicted usage, thereby avoiding over-provisioning while maintaining sufficient resources for the workload. For example, in a system with predefined quota levels {20, 40, 60, 80, 100}, a predicted usage of 77 results in the quota being reduced to 80; a predicted usage of 42 leads to a quota of 60; and a predicted usage of 19 reduces the quota to 20. Additionally, the algorithm must determine the necessary workload rescheduling to optimize cluster resource utilization and eliminate redundant worker nodes (Lines 18 and 19 in Algorithm 1). For instance, if the predicted required resource level is 7, with workloads currently utilizing 3 and 4 resource units across two nodes, and each node has a maximum capacity of 10 resource units, it is more efficient to reschedule all workloads onto a single worker node and decommission the redundant one. This strategy reduces resource wastage and improves overall efficiency.
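The level selection and the node consolidation example can be reproduced with a short sketch. The function names are hypothetical, the fallback to the highest level when the prediction exceeds every predefined level is an assumption, and `min_nodes_for` gives only a lower bound that assumes workloads can be packed freely onto nodes.

```python
import math
from bisect import bisect_left


def nearest_quota_level(levels, predicted):
    """Smallest predefined level that is not below the predicted usage."""
    ordered = sorted(levels)
    index = bisect_left(ordered, predicted)
    if index == len(ordered):
        # Assumption: cap at the highest level if none is large enough.
        return ordered[-1]
    return ordered[index]


def min_nodes_for(loads, node_capacity):
    """Lower bound on the number of nodes needed for the given loads."""
    return max(1, math.ceil(sum(loads) / node_capacity))


levels = [20, 40, 60, 80, 100]
# Two nodes carrying 3 and 4 units, each with a capacity of 10 units.
redundant_nodes = 2 - min_nodes_for([3, 4], 10)
```

Here `nearest_quota_level(levels, 77)` gives 80, `nearest_quota_level(levels, 42)` gives 60, and `redundant_nodes` is 1, matching the examples in the text.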

By combining predictive scaling with real-time adjustments, the Proactive Quota Scaling mechanism ensures that the system remains prepared for workload fluctuations, enabling seamless operation while maintaining SLO compliance and enhancing system sustainability through reduced idle resources.

Algorithm 1. Proactive Quota Scaling

Require: Predicted Workload (ω_pred), CurrentQuota, CurrentClusterResources, WaitingWorkerNodes, PredefinedQuotaLevels

1: while True do

2: if ω_pred > CurrentQuota then // Case 1: Predicted Increase

3: // Predicted increase: Scale 1 minute in advance

4: if CurrentClusterResources ≥ ω_pred then

5: AdjustedQuota ← ω_pred

6: UpdateQuota(AdjustedQuota) // Scale 1 minute early to prepare resources

7: else

8: Shortfall ← ω_pred − CurrentClusterResources

9: PendingNodes ← CalculateRequiredNodes(Shortfall)

10: ActivateWaitingNodes(PendingNodes, WaitingWorkerNodes)

11: UpdateQuota(ω_pred) // Adjust the quota once the waiting nodes are active

12: end if

13: else if ω_pred < CurrentQuota then // Case 2: Predicted Decrease

14: // Predicted decrease: Scale down only when the system actually reduces demand

15: AdjustedQuota ← GetNearestQuotaLevel(PredefinedQuotaLevels, ω_pred)

16: WaitUntilActualDemandDecreases() // Wait until the system actually reduces demand

17: if CurrentUsage < CurrentQuota then

18: RescheduleWorkloadToMaxResourceUtilization()

19: StopRedundantNode()

20: end if

21: end if

22: Wait for the next PredictedWorkload (ω_pred)

23: end while

3.4. Quota Reactive Scaling

Quota Reactive Scaling (or Reactive Scaler) is another critical component of the proposed system. Similarly to the Proactive Scaler, it is implemented as a custom K8s controller. The Reactive Scaler receives failure deployment notifications triggered by resource shortages in a namespace caused by resource quota limits. Upon receiving such a notification, it automatically calculates the required adjustment and updates the resource quota by modifying the Karmada FRQ CR. The detailed implementation is presented in Algorithm 2. This mechanism is specifically designed to handle unforeseen or inaccurately predicted resource shortages.

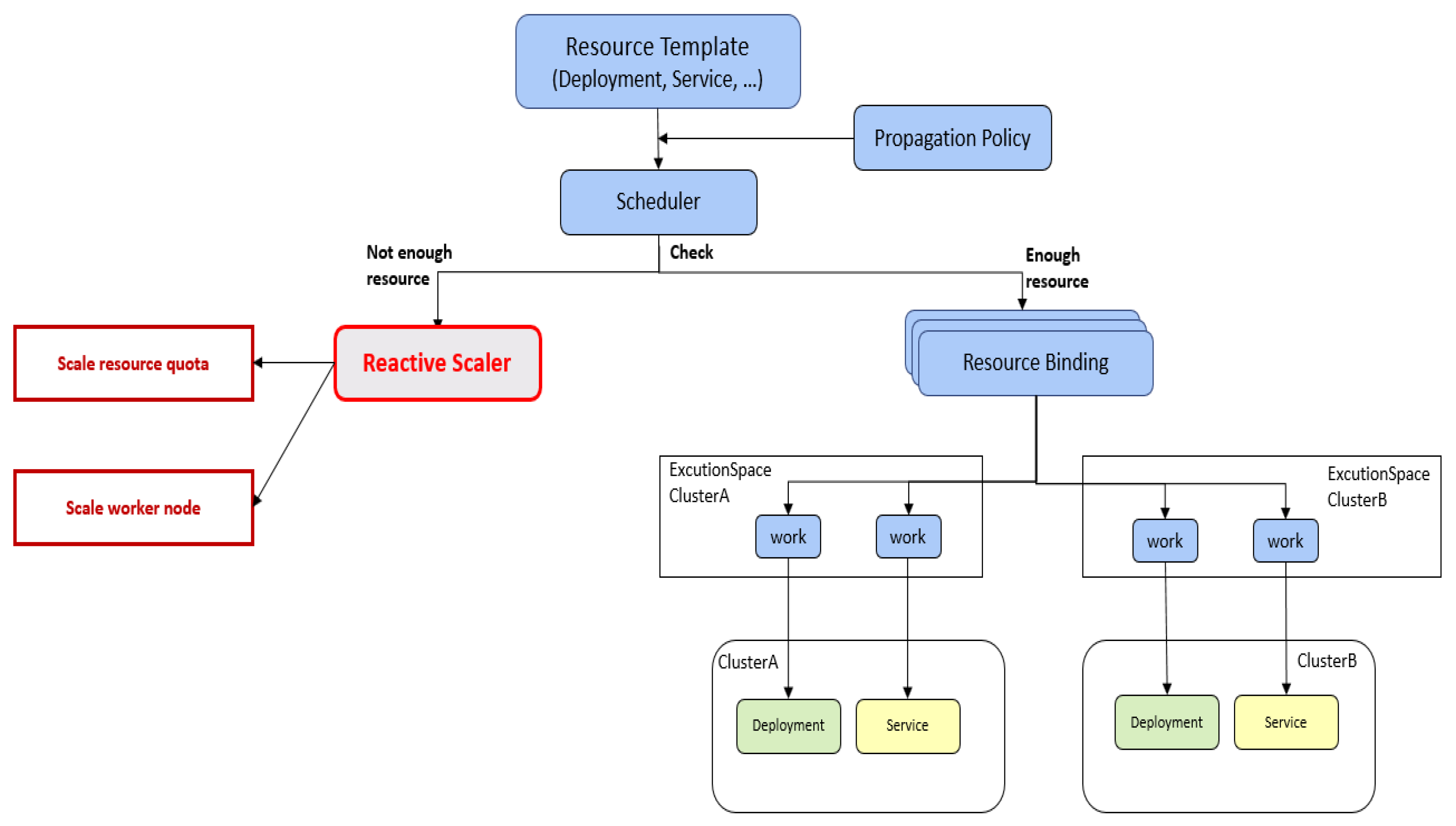

As illustrated in Figure 2 by the black outlined boxes, Karmada’s default deployment workflow verifies the available resources of member clusters after determining the propagation policy and scheduling decisions. If the required resources are unavailable, Karmada returns an error notification (e.g., “No policy match”) and terminates the deployment process. This default behavior introduces significant delays in workload execution and causes unnecessary disruptions, thereby reducing the flexibility and responsiveness of the orchestration system.

Algorithm 2. Reactive Quota Scaling

Require: WorkloadRequest (W_req), CurrentQuota (Q_current), ResourceAvailability (R_avail), FederatedResourceQuota (FRQ), WaitingWorkerNodes (WWN)

1: Monitor incoming workload request W_req

2: if W_req ≤ Q_current then // Case 1: Sufficient Quota

3: Proceed with deployment

4: Return “DeploymentSuccess”

5: end if

6: Shortfall ← W_req − Q_current

7: if R_avail ≥ Shortfall then // Case 2: Adjust Quota

8: AdjustedQuota ← Q_current + Shortfall

9: Update FederatedResourceQuota (FRQ) with AdjustedQuota

10: Proceed with deployment

11: Return “DeploymentSuccess”

12: else // Case 3: Insufficient Cluster Resources

13: PendingNodes ← CalculateRequiredNodes(Shortfall − R_avail)

14: ActivateWaitingNodes(PendingNodes, WWN)

15: AdjustedQuota ← Q_current + Shortfall

16: Update FederatedResourceQuota (FRQ) with AdjustedQuota

17: Proceed with deployment

18: end if

To overcome these limitations, the proposed system incorporates the Reactive Quota Scaling mechanism, as illustrated by the red outlined boxes in Figure 2. Unlike the default workflow, the Reactive Scaler dynamically adjusts the resource quotas of member clusters using the FRQ CR when resource shortages are detected. By evaluating real-time workload requirements and current cluster resource availability, the Reactive Quota Scaling component ensures that additional resources are allocated promptly, allowing deployments to proceed smoothly without manual intervention or disruption. In cases where adjusting the resource quota is insufficient to meet the workload demands, the Reactive Scaler is capable of scaling up additional worker nodes. These nodes are activated dynamically to supplement the cluster’s capacity, ensuring that even unexpected spikes in demand are handled efficiently.

Algorithm 2 is designed to dynamically adjust resource quotas in multi-cluster K8s environments based on incoming workload demands. The algorithm operates in three primary cases to ensure seamless deployment and efficient resource utilization. First, if the incoming workload request (W_req) is less than or equal to the current quota (Q_current), the system proceeds with deployment immediately. This ensures minimal latency when existing resources are sufficient. Second, in cases where W_req exceeds Q_current, the algorithm calculates the shortfall between the required and current quota. If the available cluster resources (R_avail) can satisfy the shortfall, the quota is increased by the shortfall amount, updated in the FRQ, and the deployment proceeds without disruption.

Finally, when R_avail is insufficient to meet the shortfall, the algorithm calculates the number of additional worker nodes required. It then reactivates paused WWNs to bridge the resource gap. Once the WWNs are activated, the quota is updated in the FRQ, and the deployment is executed.
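The three cases can be condensed into a small decision function. This is a sketch of the logic only, under stated assumptions: the function name `reactive_scale`, the uniform per-node capacity `node_capacity`, and the exception raised when the waiting pool is too small are hypothetical; updating the FRQ and activating nodes are left to the caller.

```python
import math


def reactive_scale(w_req, q_current, r_avail, node_capacity, waiting_nodes):
    """Return (new_quota, nodes_to_activate) for an incoming request.

    Mirrors the three cases above; assumes each waiting node adds
    `node_capacity` resource units when activated.
    """
    if w_req <= q_current:
        # Case 1: the current quota already covers the request.
        return q_current, 0
    shortfall = w_req - q_current
    if r_avail >= shortfall:
        # Case 2: raise the quota by the shortfall, no new nodes needed.
        return q_current + shortfall, 0
    # Case 3: activate enough waiting nodes to cover the remaining gap.
    needed = math.ceil((shortfall - r_avail) / node_capacity)
    if needed > waiting_nodes:
        # Assumption: the source does not describe this situation.
        raise RuntimeError("not enough waiting worker nodes")
    return q_current + shortfall, needed
```

For instance, a request of 150 against a quota of 100 with 10 free units and 16 units per node leaves a gap of 40, so three waiting nodes are activated before the quota is raised to 150.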

By integrating real-time monitoring, dynamic quota adjustment, and on-demand node activation, the Reactive Scaler significantly enhances Karmada’s orchestration capabilities. This mechanism ensures that workloads are deployed even under resource constraints, maintains compliance with SLOs, optimizes resource allocation, and minimizes deployment delays in unpredictable workload scenarios.

5. Results and Discussion

5.1. Deployment Failure and Quota Allocation Efficiency

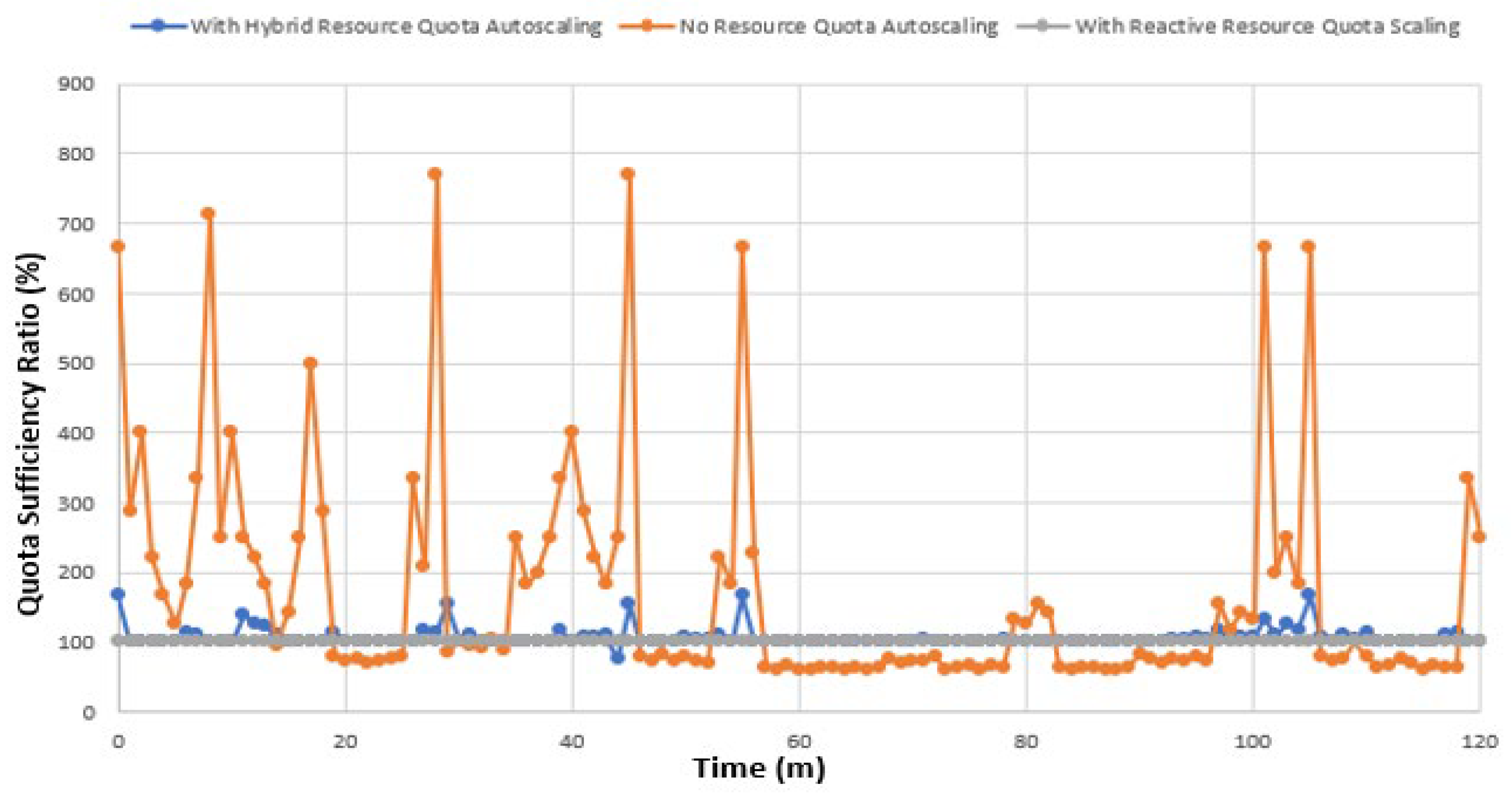

This section evaluates the effectiveness of the proposed Hybrid Resource Quota Auto-scaling against the other two approaches in terms of handling dynamic workload demands. The workloads are simulated with fluctuating CPU demands, including sudden traffic bursts. A workload is considered failed if it remains in the Pending state for more than 1 min due to insufficient resources. Two metrics are used for evaluation:

A QSR close to 100% indicates efficient resource allocation, while QSR > 100% reflects over-provisioning (resource wastage), and QSR < 100% indicates under-provisioning (insufficient resources).

2. Deployment Failure Ratio (DFR): Quantifies the percentage of workloads failing to deploy due to insufficient resources. It is calculated as follows:
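Read literally, DFR is the number of failed workloads divided by the number of submitted workloads, expressed as a percentage. A minimal sketch under that assumption, using the 1 min Pending rule stated above; the function name and the per-workload pending-time input are hypothetical:

```python
def deployment_failure_ratio(pending_seconds, timeout=60.0):
    """Percentage of workloads that stayed Pending longer than `timeout`.

    `pending_seconds` holds, for each submitted workload, the time it
    spent in the Pending state before starting (or being abandoned).
    """
    if not pending_seconds:
        return 0.0
    failed = sum(1 for seconds in pending_seconds if seconds > timeout)
    return 100.0 * failed / len(pending_seconds)


# Illustrative values: two of four workloads exceed the 60 s limit.
dfr = deployment_failure_ratio([2.0, 5.0, 61.0, 120.0])
```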

Effective resource management requires flexibility in adjusting quotas to meet the dynamic demands of workloads.

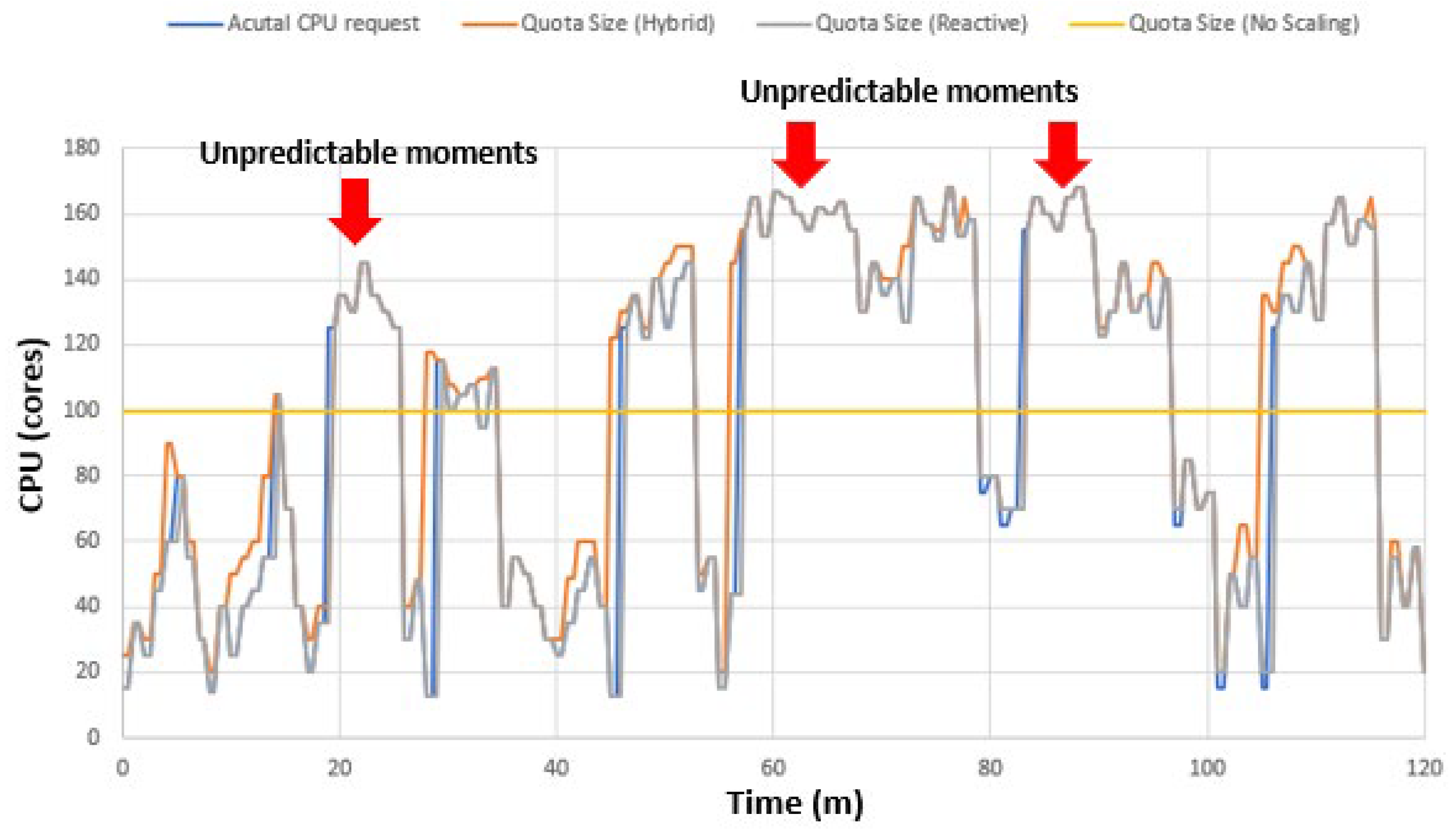

Figure 5 illustrates the performance of different scaling methods in aligning resource quotas with workload requirements in one representative experiment run.

For the No Quota Scaling approach, the quota remains fixed and cannot adapt to actual workload demands. During workload surges, fixed quotas result in resource under-provisioning, leading to workloads remaining in a pending state or causing deployment failures. Conversely, during periods of low demand, static quotas cause over-provisioning and resource wastage, as unused resources remain allocated.

For the Reactive and Hybrid Resource Quota Scaling approaches, the QSR of the Reactive approach consistently remains at 100%, demonstrating its capability to match quotas with real-time monitored resource demands. Although the QSR performance of the hybrid approach is nearly identical, occasional minor over-provisioning is observed due to unpredictable spikes in resource demand. These fluctuations require some time for the hybrid approach to re-adjust resource quota allocations through the Reactive Quota Scaling mechanism. Nevertheless, this minor disadvantage in QSR is an intentional trade-off by the proposed hybrid approach to prevent service latency SLO violations, as discussed in the following evaluation section.

5.2. Service Deployment Time

This section evaluates the three quota scaling approaches in terms of service deployment time, which is defined as follows:

Figure 6 shows the service deployment time performance comparison in one representative experiment run.

For the No Quota Scaling approach, the service deployment time remains within normal bounds (under 5 s) when resource demand stays below the fixed quota of 100 CPUs. The other two approaches share the same service deployment time during these periods. However, when resource demand exceeds this fixed quota, pods remain in the pending state and eventually fail to deploy due to deployment timeouts. Since there is no quota adjustment mechanism and pod initialization does not occur, the service deployment time is recorded as zero for most of the experiment duration during periods of excessive demand.

For the Reactive Quota Scaling approach, when resource demand exceeds the currently configured quota, two scenarios may occur. In the first scenario, the required quota extension is not large enough to trigger the provisioning of new worker nodes. In this case, the system simply reconfigures the resource quota, and the new pods are initialized normally. The resulting service deployment time is relatively short, approximately 5 s, as indicated by the low spikes in Figure 6. In the second scenario, the required quota extension is substantial enough to initiate the scaling of new worker nodes. Here, the quota adjustment time includes the worker node provisioning time, resulting in a total service deployment time exceeding 30 s, as shown by the nine high spikes in Figure 6. It is also worth noting that the service deployment time curves of the reactive and hybrid approaches overlap at the three orange spikes.

For the proposed Hybrid Quota Scaling approach, high service deployment time occurs only when the LSTM forecasting model in the Proactive Scaler inaccurately predicts the required resource demand. In such cases, the Reactive Scaler reconfigures the resource quota based on real-time monitored demand and initiates the provisioning of new worker nodes. As a result, service deployment time becomes high. These instances correspond to the three high orange spikes shown in Figure 6. Conversely, during the other six blue spike events, where only the Reactive Scaling system experiences delays, the Hybrid Scaling system accurately forecasts the future resource demand and preemptively provisions the necessary worker nodes. Therefore, the service deployment time of the Hybrid system remains low in these scenarios.
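The difference between the two approaches can be illustrated with a toy model. The sketch below is not the evaluated implementation. It assumes that a quota-only reconfiguration adds about 5 s and that node provisioning adds a further 30 s (placeholders taken from the orders of magnitude reported above), and that node capacity only grows. The class, function names, and demand and forecast series are hypothetical.

```python
from dataclasses import dataclass

QUOTA_UPDATE_S = 5.0     # assumed delay when only the quota is reconfigured
NODE_PROVISION_S = 30.0  # assumed extra delay when new worker nodes are provisioned


@dataclass
class Cluster:
    quota_cpu: float     # currently configured resource quota
    capacity_cpu: float  # CPU backed by already-provisioned worker nodes


def reactive_step(cluster: Cluster, demand_cpu: float) -> float:
    """Align the quota with observed demand; return the extra delay (s) it causes."""
    if demand_cpu <= cluster.quota_cpu:
        delay = 0.0                                # demand fits the current quota
    elif demand_cpu <= cluster.capacity_cpu:
        delay = QUOTA_UPDATE_S                     # quota change only
    else:
        delay = QUOTA_UPDATE_S + NODE_PROVISION_S  # new worker nodes are needed
        cluster.capacity_cpu = demand_cpu
    cluster.quota_cpu = demand_cpu                 # quota tracks the monitored demand
    return delay


def hybrid_step(cluster: Cluster, forecast_cpu: float, demand_cpu: float) -> float:
    """Pre-provision from the forecast, then let the reactive path fix any miss."""
    # Proactive phase: done ahead of time, so no delay is charged to the request.
    cluster.capacity_cpu = max(cluster.capacity_cpu, forecast_cpu)
    cluster.quota_cpu = forecast_cpu
    # Reactive backup: only costs time when the forecast was too low.
    return reactive_step(cluster, demand_cpu)


demand = [80.0, 150.0, 90.0, 220.0]
forecast = [80.0, 150.0, 90.0, 160.0]  # the last forecast underestimates the burst

reactive_cluster = Cluster(quota_cpu=100.0, capacity_cpu=100.0)
hybrid_cluster = Cluster(quota_cpu=100.0, capacity_cpu=100.0)
reactive_delays = [reactive_step(reactive_cluster, d) for d in demand]
hybrid_delays = [hybrid_step(hybrid_cluster, f, d) for f, d in zip(forecast, demand)]
print("reactive:", reactive_delays)
print("hybrid:  ", hybrid_delays)
```

In this toy run, the reactive model pays the provisioning delay at every burst, while the hybrid model pays it only at the step where the forecast misses, mirroring the overlap of the two curves at the orange spikes.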

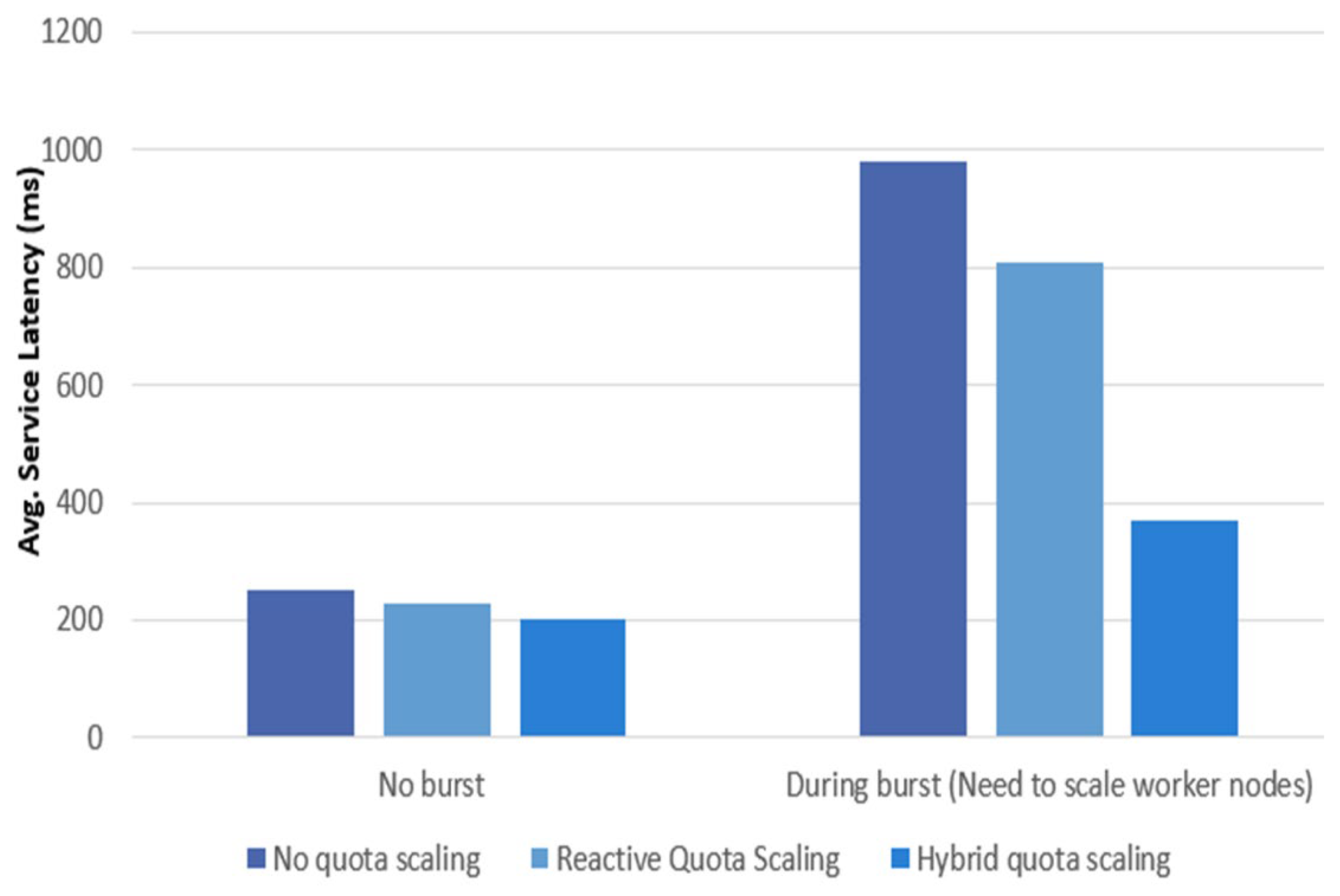

5.3. Service Response Latency

This section evaluates the average service latency of the three quota scaling approaches. We observed the average latency of service requests generated by the Locust traffic generator [32] during low and high resource demand periods (“No Burst” and “During Burst”). The reported averages are computed over 30 experimental runs, with standard deviations ranging from 30 to 50 ms across the three quota scaling systems under comparison. The results are shown in Figure 7.

In the “No Burst” scenario, we measured service latency during periods of low resource demand that did not require the provisioning of additional worker nodes. The three systems exhibit slight differences in service latency. The No Quota Scaling approach shows the highest latency due to its inability to dynamically adjust resource quotas, resulting in minor resource shortages and insufficient capacity to scale additional pods. However, the overall latency remains relatively low because the resource deficit is minimal. In contrast, the Reactive Quota Scaling and Hybrid Quota Scaling approaches successfully avoid this issue by adjusting quotas dynamically. The hybrid approach demonstrates slightly lower latency, as it proactively adjusts the quota in advance.

In the “During Burst” scenario, we measured service latency during periods of high resource demand that necessitate the provisioning of additional worker nodes. In this case, the differences in service latency among the three approaches are more pronounced. The No Quota Scaling approach incurs extremely high latency due to severe resource shortages caused by its fixed quota configuration. While the reactive approach is capable of adjusting the quota, it only initiates the provisioning of new worker nodes after detecting a burst in demand. Consequently, the service latency remains high during the node provisioning delay, resulting in a significantly higher average latency compared to the hybrid approach. The hybrid approach achieves the lowest latency by preemptively provisioning additional worker nodes when the Proactive Scaler accurately predicts the burst in resource demand. Nonetheless, its average latency is higher than in the “No Burst” scenario due to occasional prediction inaccuracies that require intervention from the Reactive Scaling mechanism to correct misallocated resource quotas.

5.4. Evaluation Summary

The comparison in Table 2 highlights the strengths and limitations of the three scaling methods (No Quota Scaling, Reactive Quota Scaling, and Hybrid Quota Scaling) across key metrics. The reported QSR and DFR averages are computed over 30 experimental runs, with standard deviations ranging from 2 to 3% across the three quota scaling systems under comparison. No Quota Scaling exhibits poor performance, with a high Quota Sufficiency Ratio (178.08%), reflecting significant resource over-provisioning during low-demand periods, and a Deployment Failure Ratio of 34.68%, indicating its inability to handle sudden traffic surges. It also suffers from the highest service latency during both “No Burst” (250 ms) and “Burst” (980 ms) scenarios due to its static nature.

In contrast, Reactive Quota Scaling effectively eliminates deployment failures (0%) and maintains an optimal Quota Sufficiency Ratio (100%). However, it struggles with longer deployment times during “Burst” scenarios (45 s) due to delays in scaling additional worker nodes, which also results in higher latency (810 ms).

Hybrid Quota Scaling demonstrates the best overall performance by balancing proactive resource allocation and reactive adjustments. With a near-optimal Quota Sufficiency Ratio (105.08%) and no deployment failures (0%), it achieves shorter deployment times (3.87 s in “No Burst” and 17.11 s in “Burst”) and the lowest service latency across scenarios (200 ms in “No Burst” and 410 ms in “Burst”). The reactive adjustment mechanism helps reduce quota redundancy from unpredictable events to a minimal level. These results confirm the effectiveness of Hybrid Quota Scaling in optimizing resource utilization and maintaining system responsiveness under dynamic workload conditions.
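To put the reported averages in relative terms, the short sketch below computes the percentage reduction achieved by the hybrid approach in the “Burst” scenario. It uses only the figures quoted in this summary; the helper name `relative_reduction` is our own.

```python
def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage by which `improved` is lower than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - improved) / baseline * 100.0


# (baseline approach, baseline value, hybrid value), as quoted in this summary.
reported_burst = {
    "service latency (ms)": [("No Quota Scaling", 980.0, 410.0),
                             ("Reactive Quota Scaling", 810.0, 410.0)],
    "deployment time (s)": [("Reactive Quota Scaling", 45.0, 17.11)],
}

for metric, rows in reported_burst.items():
    for baseline_name, baseline, hybrid in rows:
        pct = relative_reduction(baseline, hybrid)
        print(f"Burst {metric}: hybrid is {pct:.1f}% lower than {baseline_name}")
```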

6. Conclusions and Future Works

This research proposes a hybrid scaling framework for multi-cluster Kubernetes edge computing environments, integrating both proactive and reactive scaling mechanisms to address the challenges of dynamic resource allocation. The proactive component employs machine learning-based workload forecasting to pre-allocate resources, thereby reducing deployment latency and minimizing resource waste. Meanwhile, the reactive component dynamically adjusts resource quotas in real time to accommodate forecasting inaccuracies and unexpected workload surges, ensuring seamless deployment and stable system performance. Experimental results demonstrate the effectiveness of the proposed framework, achieving lower latency and improved resource utilization compared to traditional approaches. These findings highlight its potential as a scalable and adaptable solution for modern cloud infrastructures.

For future work, we plan to incorporate predictive failure analysis and explore resource-waste-free, energy-efficient scaling strategies to further enhance the system’s sustainability and reliability.