A Trustworthy Dataset for APT Intelligence with an Auto-Annotation Framework

Abstract

1. Introduction

2. Related Work

2.1. Automated Annotation Methods

2.2. CTI Data Quality Assessment

3. Methodology

3.1. Pre-Annotation Preparation

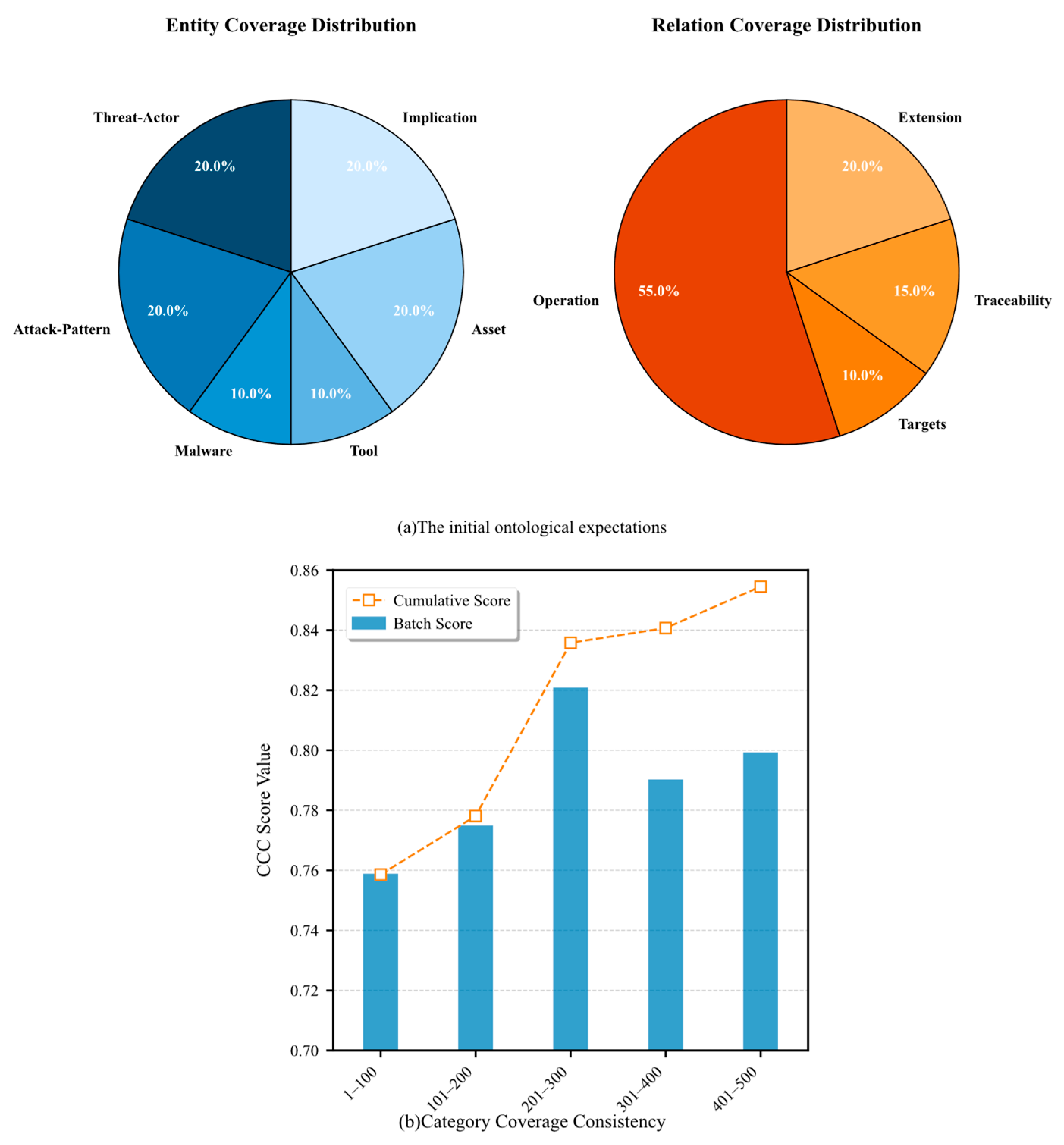

3.1.1. APT Dual-Layer Ontology

3.1.2. Trusted Data Collection

3.2. Dynamic Trustworthy Annotation Process

3.3. Annotation Quality Quantitative Indicator System

3.3.1. Basic Structural Indicators

- Category Density Anomaly Index (CDA)

- Category Distribution Balance Index (CDB)

3.3.2. Logical Consistency Indicators

- LLMs Annotation Consistency (LAC)

- Category Coverage Consistency (CCC)

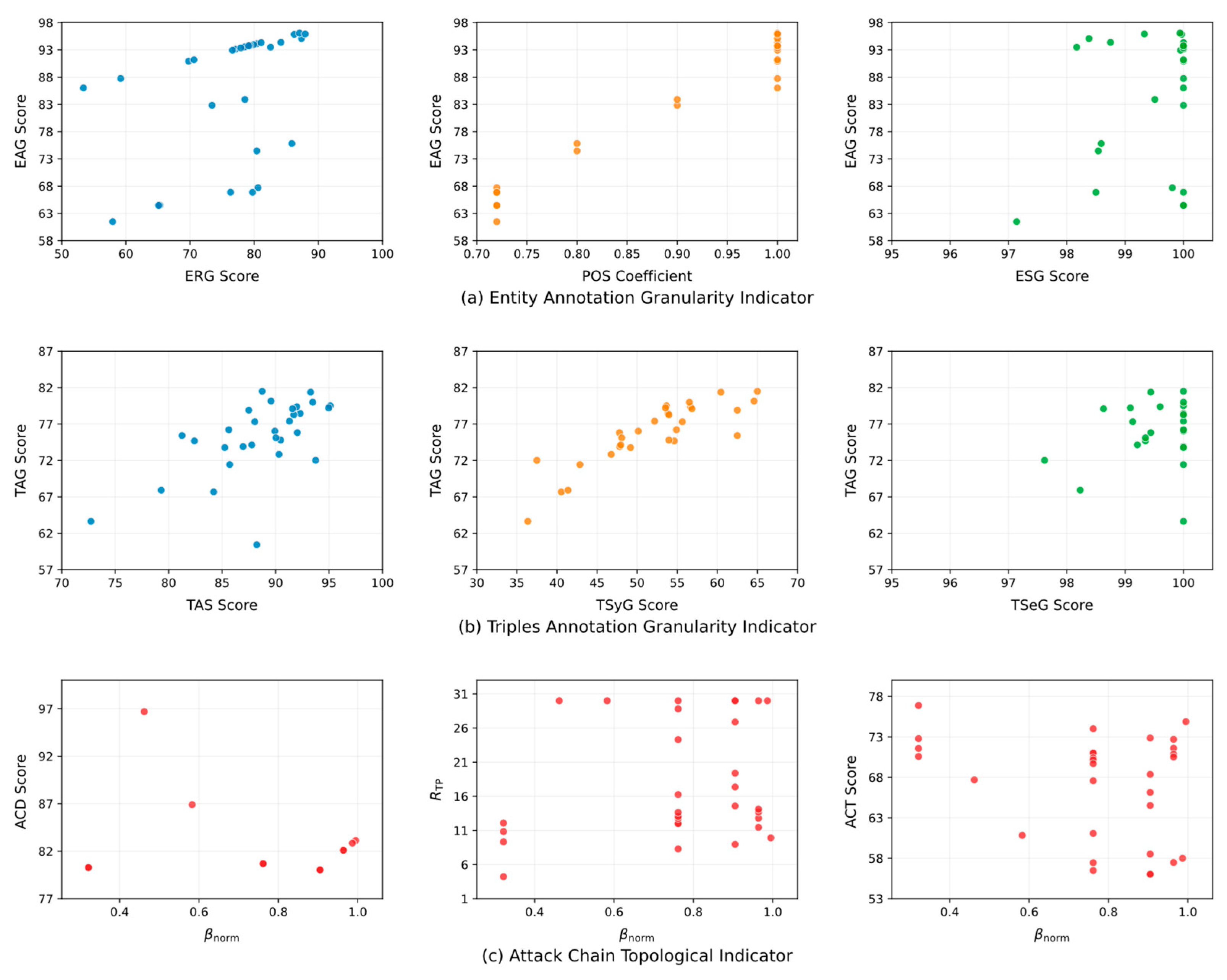

- Entity Annotation Granularity (EAG)

- Triples Annotation Granularity (TAG)

3.3.3. Attack Chain Topological Indicator

- Attack Chain Depth Score

- Attack Chain Total Penalty Ratio

3.4. Post-Annotation Evaluation

4. Experiments

4.1. Data Collection

4.2. Experimental Setup

4.2.1. Model Selection

- Large Model Selection

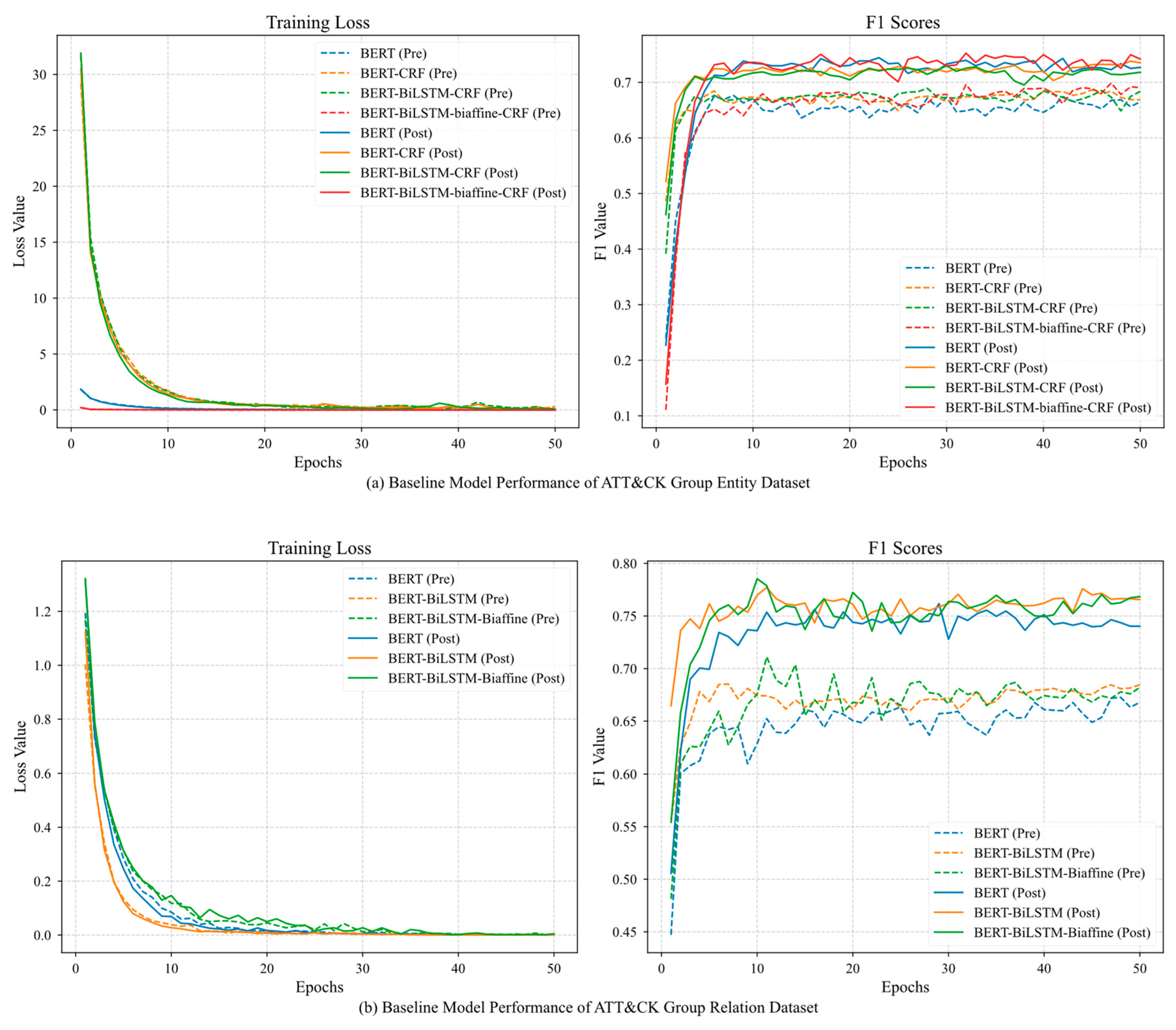

- Baseline Models

4.2.2. Parameter Configuration

4.2.3. Experimental Environment

4.3. Annotation Framework Efficiency Validation

4.4. Evaluation Indicator Validation

4.4.1. Pilot Annotation Phase Indicator Validation

- LAC Indicator Experiment

- CCC Indicator Experiment

- CDB Indicator Experiment

4.4.2. Formal Annotation Phase Indicator Validation

4.4.3. Generalizability Experiments of Evaluation System

4.5. External Consistency Experiment

4.6. Dataset Information

4.7. Baseline Model Experiments

5. Conclusions

6. Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Guo, Z.; Zhu, G.; Yang, Y. Research on Railway Network Security Performance Based on APT Characteristics. Netinfo Secur. 2024, 24, 802–811.

2. Zhou, Y.; Tang, Y.; Yi, M.; Xi, C.; Lu, H. CTI View: APT Threat Intelligence Analysis System. Secur. Commun. Netw. 2022, 2022, 9875199.

3. Liu, Y.; Han, X.; Zuo, W.; Lv, H.; Guo, J. CTI-JE: A Joint Extraction Framework of Entities and Relations in Unstructured Cyber Threat Intelligence. In Proceedings of the 2024 27th International Conference on Computer Supported Cooperative Work in Design, CSCWD 2024, Tianjin, China, 8–10 May 2024; pp. 2728–2733.

4. Lv, H.; Han, X.; Cui, H.; Wang, P.; Zuo, W.; Zhou, Y. Joint Extraction of Entities and Relationships from Cyber Threat Intelligence based on Task-specific Fourier Network. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–8.

5. Zuo, J.; Gao, Y.; Li, X.; Yuan, J. An End-to-end Entity and Relation Joint Extraction Model for Cyber Threat Intelligence. In Proceedings of the 2022 7th International Conference on Big Data Analytics, ICBDA 2022, Guangzhou, China, 4–6 March 2022; pp. 204–209.

6. Ahmed, K.; Khurshid, S.K.; Hina, S. CyberEntRel: Joint extraction of cyber entities and relations using deep learning. Comput. Secur. 2023, 136, 103579.

7. Huang, Y.; Su, M.; Xu, Y.; Liu, T. NER in Cyber Threat Intelligence Domain Using Transformer with TSGL. J. Circuits Syst. Comput. 2023, 32, 12.

8. Wei, T.; Li, Z.; Wang, C.; Cheng, S. Cybersecurity Threat Intelligence Mining Algorithm for Open Source Heterogeneous Data. Comput. Sci. 2023, 50, 330–337.

9. Gao, Y.; Li, X.; Peng, H.; Fang, B.; Yu, P.S. HinCTI: A Cyber Threat Intelligence Modeling and Identification System Based on Heterogeneous Information Network. IEEE Trans. Knowl. Data Eng. 2022, 34, 708–722.

10. Li, Z.; Zeng, J.; Chen, Y.; Liang, Z. AttacKG: Constructing Technique Knowledge Graph from Cyber Threat Intelligence Reports. In Computer Security – ESORICS 2022; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2022; Volume 13554 LNCS, pp. 589–609.

11. Wang, X.; Liu, X.; Ao, S.; Li, N.; Jiang, Z.; Xu, Z.; Xiong, Z.; Xiong, M.; Zhang, X. DNRTI: A large-scale dataset for named entity recognition in threat intelligence. In Proceedings of the 2020 IEEE 19th International Conference on Trust, Security and Privacy in Computing and Communications, TrustCom 2020, Guangzhou, China, 29 December 2020–1 January 2021; pp. 1842–1848.

12. Wang, X.; He, S.; Xiong, Z.; Wei, X.; Jiang, Z.; Chen, S.; Jiang, J. APTNER: A Specific Dataset for NER Missions in Cyber Threat Intelligence Field. In Proceedings of the 2022 IEEE 25th International Conference on Computer Supported Cooperative Work in Design, CSCWD 2022, Hangzhou, China, 4–6 May 2022; pp. 1233–1238.

13. Liu, Y.; Shi, R.; Chen, Y.; Gong, X.; Guo, Q.; Zhang, X. APTTOOLNER: A Chinese Dataset of Cyber Security Tool for NER Task. In Proceedings of the 2023 3rd Asia-Pacific Conference on Communications Technology and Computer Science, ACCTCS 2023, Shenyang, China, 25–27 February 2023; pp. 368–373.

14. Luo, Y.; Ao, S.; Luo, N.; Su, C.; Yang, P.; Jiang, Z. Extracting threat intelligence relations using distant supervision and neural networks. In Advances in Digital Forensics XVII; IFIP Advances in Information and Communication Technology; Springer: Cham, Switzerland, 2021; Volume 612, pp. 193–211.

15. Li, Y.; Guo, Y.; Fang, C.; Liu, Y.; Chen, Q.; Cui, J. A Novel Threat Intelligence Information Extraction System Combining Multiple Models. Secur. Commun. Netw. 2022, 2022, 8477260.

16. Cheng, S.; Li, Z.; Wei, T. Threat intelligence entity relation extraction method integrating bootstrapping and semantic role labeling. J. Comput. Appl. 2023, 43, 1445–1453.

17. Shang, W.; Wang, B.; Zhu, P.; Ding, L.; Wang, S. A Span-based Multivariate Information-aware Embedding Network for joint relational triplet extraction of threat intelligence. Knowl. Based Syst. 2024, 295, 111829.

18. Ren, Y.; Xiao, Y.; Zhou, Y.; Zhang, Z.; Tian, Z. CSKG4APT: A Cybersecurity Knowledge Graph for Advanced Persistent Threat Organization Attribution. IEEE Trans. Knowl. Data Eng. 2023, 35, 5695–5709.

19. Piplai, A.; Mittal, S.; Joshi, A.; Finin, T.; Holt, J.; Zak, R. Creating Cybersecurity Knowledge Graphs From Malware After Action Reports. IEEE Access 2020, 8, 211691–211703.

20. Zhou, Y.; Ren, Y.; Yi, M.; Xiao, Y.; Tan, Z.; Moustafa, N.; Tian, Z. CDTier: A Chinese Dataset of Threat Intelligence Entity Relationships. IEEE Trans. Sustain. Comput. 2023, 8, 627–638.

21. Rastogi, N.; Dutta, S.; Gittens, A.; Zaki, M.J.; Aggarwal, C. TINKER: A framework for Open source Cyberthreat Intelligence. In Proceedings of the 2022 IEEE 21st International Conference on Trust, Security and Privacy in Computing and Communications, TrustCom 2022, Wuhan, China, 9–11 December 2022; pp. 1569–1574.

22. Li, H.; Shi, Z.; Pan, C.; Zhao, D.; Sun, N. Cybersecurity knowledge graphs construction and quality assessment. Complex Intell. Syst. 2023, 10, 1201–1217.

23. Wang, G.; Liu, P.; Huang, J.; Bin, H.; Wang, X.; Zhu, H. KnowCTI: Knowledge-based cyber threat intelligence entity and relation extraction. Comput. Secur. 2024, 141, 103824.

24. Sarhan, I.; Spruit, M. Open-CyKG: An Open Cyber Threat Intelligence Knowledge Graph. Knowl.-Based Syst. 2021, 233, 107524.

25. Jo, H.; Lee, Y.; Shin, S. Vulcan: Automatic extraction and analysis of cyber threat intelligence from unstructured text. Comput. Secur. 2022, 120, 102763.

26. Lu, H.; Jin, C.; Helu, X.; Du, X.; Guizani, M.; Tian, Z. DeepAutoD: Research on Distributed Machine Learning Oriented Scalable Mobile Communication Security Unpacking System. IEEE Trans. Netw. Sci. Eng. 2022, 9, 2052–2065.

27. Barron, R.; Eren, M.E.; Bhattarai, M.; Wanna, S.; Solovyev, N.; Rasmussen, K.; Alexandrov, B.S.; Nicholas, C.; Matuszek, C. Cyber-Security Knowledge Graph Generation by Hierarchical Nonnegative Matrix Factorization. In Proceedings of the 12th International Symposium on Digital Forensics and Security, ISDFS 2024, San Antonio, TX, USA, 29–30 April 2024.

28. Knuth, D.E.; Morris, J.H., Jr.; Pratt, V.R. Fast Pattern Matching in Strings. SIAM J. Comput. 1977, 6, 323–350.

29. Behzadan, V.; Aguirre, C.; Bose, A.; Hsu, W. Corpus and Deep Learning Classifier for Collection of Cyber Threat Indicators in Twitter Stream. In Proceedings of the 2018 IEEE International Conference on Big Data, Big Data 2018, Seattle, WA, USA, 10–13 December 2018; pp. 5002–5007.

30. Schlette, D.; Böhm, F.; Caselli, M.; Pernul, G. Measuring and visualizing cyber threat intelligence quality. Int. J. Inf. Secur. 2021, 20, 21–38.

31. Zibak, A.; Sauerwein, C.; Simpson, A.C. Threat Intelligence Quality Dimensions for Research and Practice. Digit. Threat. Res. Pract. 2022, 3, 1–22.

32. Sakellariou, G.; Fouliras, P.; Mavridis, I. A Methodology for Developing & Assessing CTI Quality Metrics. IEEE Access 2024, 12, 6225–6238.

33. Ge, W.; Wang, J. SeqMask: Behavior Extraction Over Cyber Threat Intelligence Via Multi-Instance Learning. Comput. J. 2024, 67, 253–273.

34. Grafarend, E.W. Linear and Nonlinear Models. Fixed effects, random effects, and mixed models. Geomatica 2006, 60, 382–383. Available online: https://go.gale.com/ps/i.do?p=AONE&sw=w&issn=11951036&v=2.1&it=r&id=GALE%7CA674565700&sid=googleScholar&linkaccess=fulltext (accessed on 2 July 2025).

35. Ceriani, L.; Verme, P. The origins of the Gini index: Extracts from Variabilità e Mutabilità (1912) by Corrado Gini. J. Econ. Inequal. 2012, 10, 421–443. Available online: https://link.springer.com/article/10.1007/s10888-011-9188-x (accessed on 2 July 2025).

36. Shannon, C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–423.

37. Cohen, J. A Coefficient of Agreement for Nominal Scales. Educ. Psychol. Meas. 1960, 20, 37–46.

38. Beran, R. Minimum Hellinger Distance Estimates for Parametric Models. Ann. Stat. 1977, 5, 445–463. Available online: https://www.jstor.org/stable/2958896 (accessed on 2 July 2025).

39. Aghaei, E.; Niu, X.; Shadid, W.; Al-Shaer, E. SecureBERT: A Domain-Specific Language Model for Cybersecurity. In Security and Privacy in Communication Networks; Lecture Notes of the Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering, LNICST; Springer: Cham, Switzerland, 2023; Volume 462, pp. 39–56.

40. Bauer, J.; Kiddon, C.; Yeh, E.; Shan, A.; Manning, C.D. Semgrex and Ssurgeon, Searching and Manipulating Dependency Graphs. April 2024. Available online: https://arxiv.org/pdf/2404.16250 (accessed on 2 July 2025).

41. Li, Y.; Luo, H.; Wang, Q.; Li, J. An Advanced Persistent Threat Model of New Power System Based on ATT&CK. Netinfo Secur. 2023, 23, 26–34.

42. Xiang, G.; Shi, C.; Zhang, Y. An APT Event Extraction Method Based on BERT-BiGRU-CRF for APT Attack Detection. Electronics 2023, 12, 3349.

43. Tan, Y.; Huang, W.; You, Y.; Su, S.; Lu, H. Recognizing BGP Communities Based on Graph Neural Network. IEEE Netw. 2024, 38, 282–288.

| Literature | Ontology | Mode | Annotation Quality Evaluation | ||||

|---|---|---|---|---|---|---|---|

| EN | RE | Expert | Flow | Architecture | Baseline | ||

| [2,7,8] | √ | manual | √ | ||||

| [9] | √ | manual | √ | √ | |||

| [10] | √ | manual | √ | √ | |||

| [11,12,13] | √ | manual | √ | √ | √ | ||

| [14] | √ | auto | √ | √ | √ | ||

| [6,15,16] | √ | √ | manual | √ | |||

| [17,18,19] | √ | √ | manual | √ | √ | ||

| [20,21] (CS40K) | √ | √ | auto | √ | √ | √ | |

| [21] (CS3K) [22,23,24,25] | √ | √ | manual | √ | √ | √ | |

| OURs | √ | √ | auto | √ | √ | √ | √ |

| Task | Type | SubType |
|---|---|---|
| Entity | Threat initiator | Threat-Actor, Malware, Tool, Attack-Pattern |
| Entity | Asset | Vulnerability, Configuration, File, Infrastructure, Credential |
| Entity | Implication | Location, Identity, Industry, Observed-Data |
| Relation | Base Relations | targets |
| Relation | Operation | uses, exploits, interacts-with, requires |
| Relation | Traceability | located-at, indicates, attributed-to, has |
| Relation | Extension | variant-of, affects, related-to |
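
The two layers of the ontology table (Type and SubType, for entities and for relations) can be encoded as a simple lookup structure. The following is a minimal sketch, not the authors' implementation: the names `ENTITY_ONTOLOGY`, `RELATION_ONTOLOGY`, `parent_type`, and `is_valid_triple` are hypothetical, and the check covers label membership only, since the table states no domain or range constraints for the relations.

```python
# Hypothetical encoding of the dual-layer ontology table.
# Keys are the first-layer Types; values are the second-layer SubTypes.
ENTITY_ONTOLOGY = {
    "Threat initiator": ["Threat-Actor", "Malware", "Tool", "Attack-Pattern"],
    "Asset": ["Vulnerability", "Configuration", "File", "Infrastructure", "Credential"],
    "Implication": ["Location", "Identity", "Industry", "Observed-Data"],
}

RELATION_ONTOLOGY = {
    "Base Relations": ["targets"],
    "Operation": ["uses", "exploits", "interacts-with", "requires"],
    "Traceability": ["located-at", "indicates", "attributed-to", "has"],
    "Extension": ["variant-of", "affects", "related-to"],
}


def parent_type(subtype, ontology):
    """Return the first-layer Type that contains `subtype`, or None if unknown."""
    for type_name, subtypes in ontology.items():
        if subtype in subtypes:
            return type_name
    return None


def is_valid_triple(head_label, relation, tail_label):
    """Check that every label of a (head, relation, tail) triple is in the ontology.

    Assumption: only label membership is checked; the source table gives no
    constraints on which entity types a relation may connect.
    """
    return (
        parent_type(head_label, ENTITY_ONTOLOGY) is not None
        and parent_type(relation, RELATION_ONTOLOGY) is not None
        and parent_type(tail_label, ENTITY_ONTOLOGY) is not None
    )


if __name__ == "__main__":
    print(parent_type("Malware", ENTITY_ONTOLOGY))
    print(is_valid_triple("Threat-Actor", "uses", "Malware"))
```

A lookup like this lets an annotation pipeline reject labels outside the 13 entity subtypes and 12 relation subtypes before any quality indicator is computed.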

| Literature | CDA | CDB | LAC | CCC | EAG | TAG | ACT | Score |
|---|---|---|---|---|---|---|---|---|
| DNRTI [11] |  |  |  |  |  |  |  | 78.89 |
| APTNER [12] |  |  |  |  |  |  |  | 65.67 |
| HACKER [14] |  |  |  |  |  |  |  | 61.14 |
| CVTIKG [17] |  |  |  |  |  |  |  | 57.44 |
| Ours (LAPTKG) |  |  |  |  |  |  |  | 83.65 |

CDA and CDB are Basic Structural Indicators; LAC, CCC, EAG, and TAG are Logical Consistency Indicators; ACT is the Attack Chain Topological Indicator.

Marks in the indicator columns denote whether the indicator was not evaluated at all, partially evaluated, or fully evaluated according to the design.

| Entity Type | ATT&CK | Report | Relation Type | ATT&CK | Report |
|---|---|---|---|---|---|
| Threat-Actor | 1490 | 493 | uses | 1250 | 801 |
| Attack-Pattern | 1472 | 859 | requires | 1095 | 487 |
| Infrastructure | 726 | 650 | interacts-with | 827 | 571 |
| Tool | 635 | 474 | targets | 497 | 540 |
| Malware | 562 | 748 | attributed-to | 446 | 205 |
| Configuration | 416 | 378 | related-to | 367 | 877 |
| Identity | 396 | 635 | exploits | 336 | 79 |
| File | 393 | 293 | has | 321 | 391 |
| Observed-Data | 382 | 458 | affects | 301 | 212 |
| Credential | 206 | 79 | located-at | 295 | 216 |
| Location | 141 | 449 | indicates | 98 | 157 |
| Industry | 117 | 306 | variant-of | 74 | 147 |
| Vulnerability | 117 | 72 | - | - | - |
| Total | 7053 | 5895 | Total | 5907 | 4687 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qi, R.; Xiang, G.; Zhang, Y.; Yang, Q.; Cheng, M.; Zhang, H.; Ma, M.; Sun, L.; Ma, Z. A Trustworthy Dataset for APT Intelligence with an Auto-Annotation Framework. Electronics 2025, 14, 3251. https://doi.org/10.3390/electronics14163251
