Abstract

The demand for high-quality private data in large language models is growing significantly. However, private data is often scattered across different entities, leading to significant data silo issues. To alleviate such problems, we propose a novel multi-party collaborative training framework for large language models, named MPCTF. MPCTF consists of several components to achieve multi-party collaborative training: (1) a one-click launch mechanism with multi-node and multi-GPU training capabilities, significantly simplifying user operations while enhancing automation and optimizing the collaborative training workflow; (2) four data partitioning strategies for splitting client datasets during the training process, namely fixed-size strategy, percentage-based strategy, maximum data volume strategy, and total data volume and available GPU memory strategy; (3) multiple aggregation strategies; and (4) multiple privacy protection strategies to achieve privacy protection. We conducted extensive experiments to validate the effectiveness of the proposed MPCTF. The experimental results demonstrate that the proposed MPCTF achieves superior performance; for example, our MPCTF acquired an accuracy rate of 65.43 and outperformed the existing work, which acquired an accuracy rate of 14.25 in the experiments. Moreover, we hope that our proposed MPCTF can promote the development of collaborative training for large language models.

1. Introduction

Large language models (LLMs), such as GPT-4 [1] and DeepSeek-R1 [2], have developed rapidly and are now applied across many real-world domains, including chatbots [3,4], intelligent agents [5,6], personal assistants [7,8], and knowledge-based question answering systems [9,10]. Compared to smaller-scale models, LLMs demonstrate impressive emergent capabilities and exhibit superior contextual understanding, reasoning abilities, and generalization performance. These advantages give them unprecedented potential across numerous application domains. Consequently, LLMs have attracted significant attention in both academia and industry, where they are increasingly becoming a pivotal force driving innovation and transformation.

LLM-based technologies are reshaping human–computer interaction and driving the intelligent transformation of industry. In healthcare, LLMs can assist in diagnosis and drug discovery. In education, they can provide personalized learning solutions. In finance, they can be applied to intelligent investment advice and risk management. With the optimization of algorithms and the growth of computing power, the inferential capabilities of LLMs are progressively improving, and their application scenarios continue to expand. However, the performance of LLMs is highly dependent on the quality of large-scale datasets. High-quality private data is a valuable resource for achieving precise understanding and efficient application in specific domains, and it has become critical for optimizing LLMs and improving their performance. In reality, such data is typically scattered across different enterprises or institutions. Due to legal restrictions and concerns about private data leakage, it cannot be effectively shared, resulting in data silos. These data barriers severely hinder the further development of LLMs.

Although federated learning frameworks have made some progress, federated fine-tuning of LLMs remains immature, and current frameworks still face several limitations. Firstly, some frameworks require manual configuration, code modifications, and manual transfer of models and data, and participants cannot fine-tune LLMs across multiple machines and GPUs during federated training. Secondly, these frameworks require manual dataset splitting and use the same data size for training in each round. Thirdly, they require manual code modifications to adjust model fusion strategies and do not support automated configuration through configuration files. Fourthly, they require manual code modifications to adjust privacy protection strategies and lack simple, flexible configuration support.

We propose a novel multi-party collaborative training framework for large language models, named MPCTF, to alleviate the problems mentioned above. MPCTF consists of a one-click launch mechanism with multi-node and multi-GPU training capabilities that significantly simplifies user operations and enhances automation, thereby further optimizing multi-party training processes. Four data partitioning strategies automatically split the client datasets during the training process. Specifically, the data partitioning strategies are composed of the fixed-size strategy, the percentage-based strategy, the maximum data volume strategy, and the total data volume and available GPU memory strategy. These data partitioning strategies can facilitate the adjustment of the amount of training data for each round and change the dataset according to the round. Multiple model aggregation strategies, consisting of FedAvg, FedProx, FedAdam, and FedAdagrad, are integrated into MPCTF. These model aggregation strategies and their relevant parameters can be automatically configured through the configuration file in the proposed MPCTF. Moreover, multiple privacy protection strategies, such as the server-side fixed clipping strategy, the server-side adaptive clipping strategy, the client-side fixed clipping strategy, and the client-side adaptive clipping strategy, are integrated into MPCTF to achieve data protection. The different privacy protection strategies can be simply and flexibly configured within the proposed MPCTF framework. We performed extensive experiments to demonstrate the effectiveness of the proposed MPCTF, and the experimental results validate that the proposed MPCTF achieves superior performance.

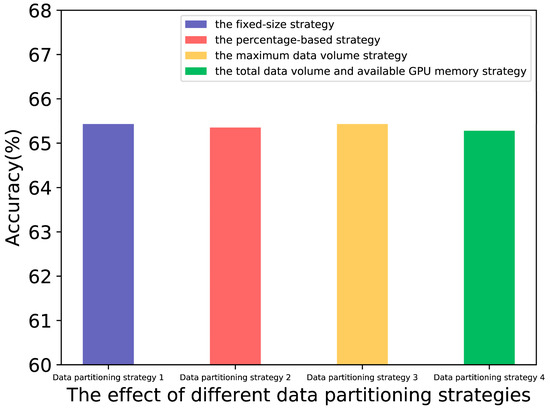

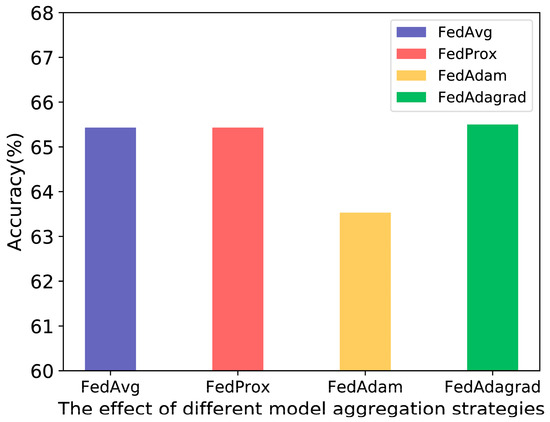

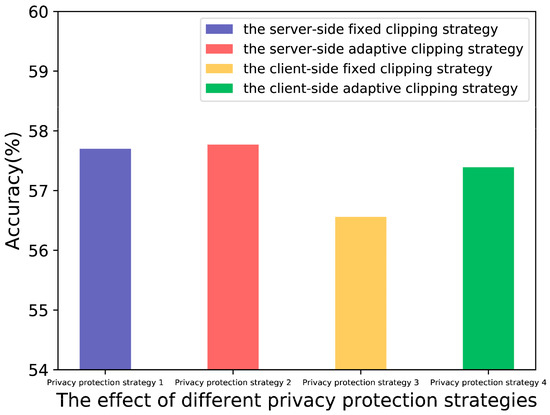

The main contributions of this work are the following: (1) We propose the MPCTF framework for multi-party collaborative training of LLMs, which can effectively utilize the local data of each participant for collaborative training to obtain a more generalized global model. The proposed MPCTF acquired an accuracy rate of 65.43 in the experiments. In the proposed MPCTF, the one-click start training mechanism can uniformly start training through a configuration file, and the multi-GPU training mechanism enables internal multi-machine and multi-card training for participants and allows for flexible use of different training resources. The existing federated learning frameworks require relatively complex configuration files to start training, and some frameworks are weak at multi-machine and multi-card training for the participant. (2) We propose four data partitioning strategies to achieve collaborative utilization of data and increase the methods of data usage. The four data partitioning strategies acquired accuracies of 65.43, 65.35, 65.43, and 65.28 in the experiments. The data splitting methods of some existing frameworks are simple and lack multiple splitting methods. (3) We integrate multiple aggregation strategies to achieve model aggregation in the server by various methods. The multiple model aggregation strategies acquired accuracies of 65.43, 65.43, 63.53, and 65.50 in the experiments. Some current frameworks lack support for multiple aggregation methods. (4) We develop multiple privacy protection strategies that can integrate differential privacy to achieve data protection in different ways. The multiple privacy protection strategies acquired accuracies of 57.70, 57.77, 56.56, and 57.39 in the experiments. The privacy protection methods of some current frameworks are relatively limited, and they are weak in supporting multiple data privacy protection methods.

The rest of this paper is structured as follows. Section 2 reviews the relevant literature on federated learning and privacy protection. Section 3 details the methodology of the proposed model in terms of the one-click launch mechanism with multi-node and multi-GPU training, data partitioning strategy, model aggregation strategy, and privacy protection. Section 4 presents the datasets, experimental setting, results, and analysis. Finally, Section 5 concludes the paper.

2. Related Work

In this section, we review the work related to our study. We first discuss the related work on federated learning and then introduce some prior work on privacy protection.

2.1. Federated Learning

The collaborative multi-party training of LLMs shares certain similarities with federated learning (FL). Both aim to enhance model performance through distributed collaboration while preserving data privacy. FS-LLM [11], which is built upon FS [12], provides an end-to-end benchmarking pipeline that leverages parameter-efficient fine-tuning (PEFT) algorithms. By training or transferring only a small number of parameters through adapters, it enables fine-tuning of LLMs in federated learning scenarios. Additionally, FS-LLM can fine-tune LLMs in FL settings with low communication and computational costs, even without access to the complete model, and its plug-and-play subroutines can support interdisciplinary research. The reported results show the effectiveness of this framework. However, FS-LLM is weak in multi-machine and multi-card training, data partitioning, and privacy protection. Flower [13] is a framework specifically designed for federated learning that supports TensorFlow 2.x, PyTorch 2.x, and other frameworks. It enables collaborative model training across devices without sharing raw data, adopts a client–server architecture, and uses gRPC for communication. Its capabilities for multi-machine and multi-card training, data partitioning, and privacy protection are also weak. FATE-LLM [14] is an industrial-grade federated learning framework specifically designed to support the training and fine-tuning of both large language models (LLMs) and small language models (SLMs). It aims to address key challenges faced by LLMs in practical applications, including high training costs, substantial data requirements, and data privacy concerns. However, the deployment of this framework is rather complex. Pei et al. [15] systematically review the key challenges and solutions of federated learning under data, model, and system heterogeneity, but their discussion of issues such as privacy protection is somewhat limited. Hu et al. [16] analyze the security and privacy threats in federated learning and further explore its application challenges in fields such as healthcare and finance.

2.2. Privacy Protection

The collaborative multi-party training of LLMs and privacy protection are inherently interconnected. Generally, privacy-preserving technologies are comprehensive solutions that employ encryption, anonymization, access control, and other technical methods to ensure data security, so that personal or organizational data is not illegally obtained or misused during collection, storage, transmission, and usage. TAOISM [17] is a model privacy protection technology based on trusted execution environments (TEEs). It aims to address mutual distrust between users and providers by deploying the provider’s foundational model within the user’s TEE. This keeps the user’s confidential data secure against leakage and prevents unauthorized access to the provider’s proprietary model information. AlphaEdit [18] proposes a null-space constrained knowledge editing method that projects perturbations onto a null space that preserves existing knowledge. This approach enables specific knowledge to be updated while protecting original knowledge, and it offers a novel perspective for privacy preservation in federated learning. Charles et al. [19] proposed a user-level differential privacy method for fine-tuning LLMs. They aim to address the uneven privacy protection that arises when traditional differential privacy treats single data points as the privacy unit during LLM fine-tuning.

3. Methodology

In this section, we first provide an overall introduction to the proposed MPCTF. Then, we introduce in detail the key capabilities of the proposed MPCTF, which are the one-click launch mechanism with multi-node and multi-GPU training, data partitioning strategy, model aggregation strategy, and privacy protection.

3.1. Overview

In this section, we provide an overall introduction to our proposed MPCTF. The overall architecture is illustrated in Figure 1. The multi-party collaborative training of LLMs focuses on multi-party training and data protection. The multi-party collaborative training of LLMs can not only empower the entire large model development process with high availability but also further improve the value of private data. The proposed MPCTF can enable each participant to train the local model using their local dataset, thereby allowing training to be conducted without the training data leaving their organization. During the training process, the server receives the partial parameters of the model sent by each participant for model aggregation, which can achieve a global model with stronger generalization.

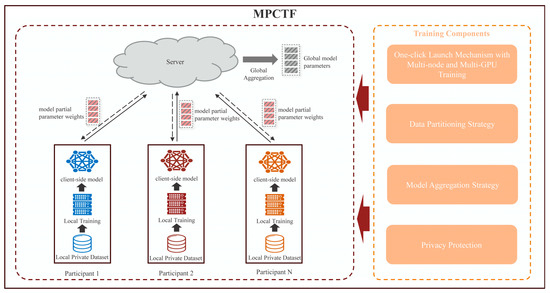

Figure 1. The overall architecture of the proposed MPCTF. The training components on the right side are the capabilities required for multi-party collaborative training. These training components involve the model training, data processing, model aggregation, and privacy protection in the proposed MPCTF.

As illustrated on the left side of Figure 1, the multi-party collaborative training of LLMs involves the following processes. Firstly, when all the participants and the server have joined the training framework and the preparatory work is completed, the server initiates the training, and then the training process begins. Secondly, each participant uses their high-quality private data to train the local large language model through local GPU computing resources. In order to save bandwidth resources and enhance communication efficiency between each participant and the server, the parameter-efficient fine-tuning method LoRA [20] is adopted for training LLMs in MPCTF. Once the training of the current round is completed, the current participant will send the trained model’s partial parameter weights of the current round to the server. Thirdly, when the server receives the model partial parameter weights from all the participants, the server begins to perform global aggregation to obtain the global model parameters, and then the global model on the server is updated based on the aggregated global model parameters. Fourthly, the model’s partial parameter weights of the updated model are sent from the server to each participant. Each participant updates the local client-side model, and then each participant begins a new round of training. Finally, the framework repeats the above process continuously until the number of training rounds reaches the predefined value. After the training is finished, the final trained global model can be obtained.
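
The round structure described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the MPCTF implementation: the names `Weights`, `average`, and `run_rounds` are hypothetical, the LoRA adapter weights are modeled as plain lists of floats, and each participant's local fine-tuning is abstracted as a callable.

```python
from typing import Callable, Dict, List

# Hypothetical representation: adapter (LoRA) parameter name -> flat list of values.
Weights = Dict[str, List[float]]


def average(updates: List[Weights]) -> Weights:
    """Unweighted mean of the participants' adapter weights (placeholder aggregator)."""
    return {
        name: [sum(vals) / len(updates) for vals in zip(*(u[name] for u in updates))]
        for name in updates[0]
    }


def run_rounds(
    global_weights: Weights,
    local_trainers: List[Callable[[Weights, int], Weights]],
    num_rounds: int,
) -> Weights:
    """Repeat local training, aggregation, and broadcast for a fixed number of rounds."""
    for round_idx in range(num_rounds):
        # Each participant starts from the current global adapter weights,
        # trains on its own private data, and returns only the adapter weights.
        updates = [train(global_weights, round_idx) for train in local_trainers]
        # The server aggregates once all participants have reported.
        global_weights = average(updates)
        # Broadcast is implicit: the next iteration hands the new weights to everyone.
    return global_weights
```

Only the adapter weights cross the participant boundary in this sketch, which mirrors the motivation for using LoRA to limit communication.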

In addition, several training components ensure effective collaborative training across multiple participants in the proposed MPCTF framework: the one-click launch mechanism with multi-node and multi-GPU training, the data partitioning strategy, the model aggregation strategy, and privacy protection. These components and the fundamental framework collectively constitute a complete training system. All components are coordinated through a unified control mechanism to achieve automated management of the training workflow. During the training process, the parameter-efficient fine-tuning method LoRA is used to reduce communication overhead. One of the key factors influencing communication overhead is the model size, since larger models inherently generate greater communication costs. In addition, a larger learning rate may make convergence difficult, while a smaller learning rate may require more training rounds and time to converge. Moreover, the developed privacy protection strategies use differential privacy to achieve data protection. A larger noise value provides better protection but may have a significant impact on the model’s performance. In the subsequent subsections, we describe these components in detail.

3.2. One-Click Launch Mechanism with Multi-Node and Multi-GPU Training

The one-click launch mechanism with multi-node and multi-GPU training is designed to enable users to operate conveniently and enhance the level of automation. Specifically, the one-click launch mechanism with multi-node and multi-GPU training consists of the one-click start training and the multi-node and multi-GPU training. In the one-click launching training mechanism, the central server can initiate the training of all participants with just one configuration file. Different participants can customize their training configurations. In addition, the central server is capable of conducting real-time monitoring of the resource status of each participating entity. The one-click launching training mechanism can automatically generate training scripts for each participant and their sub-nodes. Furthermore, this mechanism enables the automated modification of relevant configurations and data dissemination during the collaborative training process of each participant.

The multi-node and multi-GPU training mechanism is developed to conduct the actual model training task within a single participant by leveraging multiple machines and multiple GPUs, and it can be compatible with various kinds of LLMs. In the specific training process, each participant can receive the weights from the server and store them locally. Internal nodes among the participants can distribute weights, initiate training, and transmit the optimal model weights to the server upon completion of each round in the training process. The multi-node and multi-GPU training mechanism also supports participants utilizing varying quantities of GPU resources or different hardware. In addition, the designed multi-node and multi-GPU training mechanism enables participants to configure their environmental variables based on their network conditions, such as determining whether to utilize InfiniBand (IB) or employ specific network interface cards for communication.

In the multi-node and multi-GPU training mechanism, users can specify the machines and GPUs to be used through the configuration file. In other words, the multi-node and multi-GPU training mechanism of the proposed MPCTF is used to enable each participant to perform model training using the designated machines and GPUs. If the multi-node and multi-GPU training mechanism is absent, the proposed MPCTF cannot perform training on multiple machines or GPUs.
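
To make the idea of generating per-node training scripts from one configuration file concrete, the following sketch builds launch commands for each participant. It rests on several assumptions that the paper does not confirm: the configuration keys are hypothetical, the launcher is assumed to be PyTorch's `torchrun`, and InfiniBand or network-interface selection is assumed to be expressed through the NCCL environment variables `NCCL_IB_DISABLE` and `NCCL_SOCKET_IFNAME`.

```python
from typing import Dict, List

# Hypothetical configuration; key names are illustrative, not MPCTF's actual schema.
config = {
    "participants": {
        "client_a": {
            "nodes": ["10.0.0.1", "10.0.0.2"],
            "gpus_per_node": 4,
            # Assumed mapping: disable InfiniBand and pin a specific interface.
            "env": {"NCCL_IB_DISABLE": "1", "NCCL_SOCKET_IFNAME": "eth0"},
        },
        "client_b": {"nodes": ["10.0.1.1"], "gpus_per_node": 8, "env": {}},
    },
    "train_script": "train.py",
    "master_port": 29500,
}


def build_launch_commands(cfg: dict) -> Dict[str, List[str]]:
    """Return one launch command per node for every participant."""
    commands: Dict[str, List[str]] = {}
    for name, part in cfg["participants"].items():
        nodes = part["nodes"]
        env = " ".join(f"{k}={v}" for k, v in sorted(part["env"].items()))
        per_node = []
        for rank in range(len(nodes)):
            cmd = (
                f"torchrun --nnodes={len(nodes)} --node_rank={rank} "
                f"--nproc_per_node={part['gpus_per_node']} "
                f"--master_addr={nodes[0]} --master_port={cfg['master_port']} "
                f"{cfg['train_script']}"
            )
            # Prefix participant-specific environment variables, if any.
            per_node.append(f"{env} {cmd}".strip())
        commands[name] = per_node
    return commands
```

Because each participant carries its own `nodes`, `gpus_per_node`, and `env` entries, participants with different hardware or network conditions can be described in the same file.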

3.3. Data Partitioning Strategy

The data partitioning strategy is proposed to split the training data. It can conveniently adjust the amount of training data used in each round, and the framework can seamlessly replace the corresponding datasets as the training rounds progress. The data partitioning strategy consists of the fixed-size strategy, the percentage-based strategy, the maximum data volume strategy, and the total data volume and available GPU memory strategy.

Specifically, the fixed-size strategy sets the amount of training data for each round according to the fixed size, which can be customized in the proposed MPCTF. The value of size in the fixed-size strategy determines the amount of training data in each round. The percentage-based strategy sets the amount of training data for each round according to the percentage of the total data of each participant. The amount of training data in each round is obtained by multiplying the quantity of the whole dataset and the percentage value. The maximum data volume strategy can automatically set the amount of training data for each round based on the specific participant who has the largest total data volume. In the maximum data volume strategy, the amount of training data in each round is determined by dividing the quantity of the largest dataset among the participants by the number of training rounds. The total data volume and available GPU memory strategy can automatically allocate the amount of training data for each round according to the total data amount of each participant and the total available GPU memory. In other words, the amount of training data in each round is determined jointly by the available GPU memory and the quantity of the whole datasets within each participant. In the proposed MPCTF, different data partitioning strategies can be flexibly customized and used during the training according to actual scenario requirements.
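
The per-round data amounts implied by these descriptions can be written out directly. The first three functions follow the rules as stated above. The fourth is only a hypothetical stand-in, because the paper does not give the exact formula that combines data volume and GPU memory: it assumes a made-up `samples_per_gb` factor that caps an even per-round share. All function names are illustrative.

```python
from typing import Dict


def fixed_size(size: int) -> int:
    """Fixed-size strategy: every round uses the configured number of samples."""
    return size


def percentage_based(total_samples: int, percentage: float) -> int:
    """Percentage-based strategy: a fraction of the participant's whole dataset."""
    return round(total_samples * percentage)


def maximum_data_volume(totals: Dict[str, int], num_rounds: int) -> int:
    """Maximum data volume strategy: largest participant dataset divided by the rounds."""
    return max(totals.values()) // num_rounds


def memory_aware(
    total_samples: int, free_gpu_memory_gb: float, samples_per_gb: float, num_rounds: int
) -> int:
    """ASSUMPTION: cap an even per-round share by a memory-derived limit.

    The paper only says the amount depends jointly on total data volume and
    available GPU memory; this particular rule is a placeholder.
    """
    even_share = -(-total_samples // num_rounds)  # ceiling division
    return min(even_share, int(free_gpu_memory_gb * samples_per_gb))
```

With 5000 samples per participant and 10 rounds, the fixed-size strategy with a size of 500, the percentage-based strategy with 10%, and the maximum data volume strategy all yield 500 samples per round.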

3.4. Model Aggregation Strategy

The model aggregation strategy aims to integrate the trained local models of each participant to improve the generalization of the global model. Aggregation methods such as FedAvg, FedProx, FedAdam, and FedAdagrad are integrated into the model aggregation strategy in the proposed MPCTF.

FedAvg computes a weighted average of local models, and it offers a simple yet effective aggregation approach. FedProx introduces a regularization term to mitigate local model divergence during training. FedAdam updates model parameters by incorporating momentum and adaptive learning rates, which combines the benefits of both techniques for federated optimization. FedAdagrad updates model parameters by adaptively adjusting the learning rate. If the model aggregation strategy is absent, the proposed MPCTF is unable to aggregate the models trained by the participants, and thus it cannot conduct the training for each round and cannot generate the final global model. In the proposed MPCTF, different model aggregation strategies can be selected, and the relevant parameters of each strategy can be customized through a unified configuration interface.
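
As a rough illustration of two of these aggregation rules, the sketch below implements a sample-count-weighted FedAvg and a single FedAdam server step in plain Python. It is a sketch under assumptions rather than MPCTF's code: weights are modeled as dictionaries of float lists, the function names are hypothetical, and the hyperparameter defaults (`lr`, `beta1`, `beta2`, `tau`) are illustrative values, not those used in the experiments.

```python
import math
from typing import Dict, List, Tuple

Weights = Dict[str, List[float]]


def fedavg(client_results: List[Tuple[Weights, int]]) -> Weights:
    """Average client weights, weighting each client by its number of samples."""
    total = sum(n for _, n in client_results)
    first = client_results[0][0]
    return {
        name: [
            sum(w[name][i] * n for w, n in client_results) / total
            for i in range(len(first[name]))
        ]
        for name in first
    }


def fedadam_step(
    global_w: Weights, averaged_w: Weights, m: Weights, v: Weights,
    lr: float = 0.1, beta1: float = 0.9, beta2: float = 0.99, tau: float = 1e-3,
) -> Tuple[Weights, Weights, Weights]:
    """One server-side Adam update that treats (average - global) as a pseudo-gradient."""
    new_w, new_m, new_v = {}, {}, {}
    for name in global_w:
        delta = [a - g for a, g in zip(averaged_w[name], global_w[name])]
        # Momentum (first moment) and adaptive scaling (second moment).
        new_m[name] = [beta1 * mi + (1 - beta1) * d for mi, d in zip(m[name], delta)]
        # FedAdagrad would instead accumulate: vi + d * d.
        new_v[name] = [beta2 * vi + (1 - beta2) * d * d for vi, d in zip(v[name], delta)]
        new_w[name] = [
            g + lr * mi / (math.sqrt(vi) + tau)
            for g, mi, vi in zip(global_w[name], new_m[name], new_v[name])
        ]
    return new_w, new_m, new_v
```

FedProx is not shown because its regularization term acts inside each participant's local training objective rather than in the server-side aggregation step.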

3.5. Privacy Protection

The privacy protection strategy is developed to achieve strict protection of training data by introducing differential privacy technology. In the proposed MPCTF, the privacy protection strategy comprises the server-side fixed clipping strategy, the server-side adaptive clipping strategy, the client-side fixed clipping strategy, and the client-side adaptive clipping strategy.

In both the server-side fixed clipping strategy and the server-side adaptive clipping strategy, the server performs a unified clipping operation on all participants’ updates, which reduces the communication overhead of the clipping values. In both the client-side fixed clipping and client-side adaptive clipping strategies, the participants perform the clipping themselves, which reduces the server’s computational overhead to a certain extent. The fixed clipping strategy truncates the values of the model parameters with a preset fixed threshold to prevent individual outliers from interfering with the global model, whereas the adaptive clipping strategy truncates them with a dynamically adjusted threshold. All privacy protection strategies employ differential privacy to protect the training data. In the subsequent experiments, the models obtained using the different privacy protection strategies perform very similarly. A larger noise value provides better protection but may have a significant impact on the model’s performance, so privacy protection can reduce model performance. Users can customize the noise value to achieve different levels of privacy protection. Overall, in the proposed MPCTF, the differential privacy strategies can be selected, and the relevant parameters of each strategy can be customized through a unified configuration interface.
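
The clipping and noise operations can be illustrated with a short sketch. It assumes a common differential privacy formulation that the paper does not spell out: an update is clipped by its L2 norm, Gaussian noise with standard deviation `noise_multiplier * clip_norm / num_clients` is added to the averaged update, and the adaptive threshold follows a geometric rule that moves toward a target quantile of the observed update norms. All names and default values are hypothetical.

```python
import math
import random
from typing import List


def l2_norm(update: List[float]) -> float:
    return math.sqrt(sum(x * x for x in update))


def clip_update(update: List[float], clip_norm: float) -> List[float]:
    """Fixed clipping: rescale the update so its L2 norm is at most clip_norm."""
    norm = l2_norm(update)
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def add_gaussian_noise(
    averaged_update: List[float], noise_multiplier: float, clip_norm: float,
    num_clients: int, rng: random.Random,
) -> List[float]:
    """Add Gaussian noise calibrated to the clipping threshold (assumed calibration)."""
    std = noise_multiplier * clip_norm / num_clients
    return [x + rng.gauss(0.0, std) for x in averaged_update]


def adapt_clip_norm(
    clip_norm: float, norms: List[float], target_quantile: float = 0.5, lr: float = 0.2
) -> float:
    """Adaptive clipping: shrink the threshold if too many norms fall below it, else grow it.

    A private implementation would also add noise to the fraction `below`;
    that step is omitted here for brevity.
    """
    below = sum(1 for n in norms if n <= clip_norm) / len(norms)
    return clip_norm * math.exp(-lr * (below - target_quantile))
```

Whether these functions run on the server or on each participant is the difference between the server-side and client-side variants; the arithmetic is the same in both cases.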

4. Experiments

In this section, we first introduce the dataset used for training and testing. Subsequently, we describe the experimental setting. Finally, we present extensive experimental results and conduct detailed analyses of these experimental results.

4.1. Datasets

The statistics of the fine-tuning dataset and the testing datasets curated in the proposed MPCTF are presented in Table 1. In Table 1, the column “Total number” denotes the total size of each dataset, the column “Average length of training” denotes the average length of the dataset used for training LLMs in the proposed MPCTF, and the column “Type” indicates whether the dataset is used for fine-tuning or for model evaluation. The symbol “-” indicates that an item is not applicable, and the entries “Training dataset” and “Evaluation dataset” denote the training dataset and the evaluation dataset used in the experiments, respectively.

Table 1. Statistics of all datasets.

The MPCT-CoT dataset was derived from existing fine-tuning datasets mainly composed of problems and reasoning answers in the field of mathematics. It draws on four datasets: DeepScaleR [21], LIMO [22], OpenR1 [23], and OpenThoughts [24]. We consistently used the MPCT-CoT dataset for the fine-tuning experiments in MPCTF. In addition, we used the OpenCompass toolkit [25] and the GSM8K test dataset [26], which consists of mathematical problems, to evaluate the performance of the model.

In the process of collecting the MPCT-CoT dataset, we first performed data cleaning on these four datasets. Then, we formed a large candidate dataset from all the cleaned datasets, where the <think></think> tag was used in each sample to represent the reasoning process. The main challenge in this process is how to effectively clean the data, as the quality of data cleaning affects the model’s performance. For instance, if the model is trained on a dataset containing a large amount of meaningless symbols, it will seriously damage the performance of the model. From the large candidate dataset, we sampled 10,000 samples to constitute the final MPCT-CoT dataset.

4.2. Experiment Setting

We employed Qwen1.5-7B-Chat [27] as the base model in our experiments. Low-Rank Adaptation (LoRA) [20] was adopted for parameter-efficient fine-tuning. In multi-party collaborative training, two participants were employed to simulate real-world scenarios, and each participant used A800 GPUs for fine-tuning. We divided the MPCT-CoT dataset equally into two sub-datasets of 5000 samples each, and each participant used one of them for multi-party collaborative training. Unless otherwise stated, the total number of training rounds was 10, the chunk size of the fixed-size strategy in the data partitioning setting was 500, and the learning rate was set to 1 × 10⁻⁴. It should be noted that our proposed MPCTF requires participants to have GPUs such as the A800 for training LLMs, and there must be a stable network connection between the server and the participants for transmitting parameters.
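
The arithmetic of this setting can be checked with a small sketch: 10,000 samples are split into two halves of 5000, and 10 rounds of 500 samples cover each half exactly once. The candidate pool size, the use of integer ids as stand-ins for samples, and the assumption that rounds consume consecutive, non-overlapping chunks are all illustrative choices that the paper does not specify.

```python
import random

rng = random.Random(0)

# Stand-in sample ids; the real candidate pool size is not given in the paper.
candidate_pool = list(range(50_000))
mpct_cot = rng.sample(candidate_pool, 10_000)

# Equal split between the two participants.
sub_a, sub_b = mpct_cot[:5_000], mpct_cot[5_000:]


def round_chunk(data: list, round_idx: int, chunk_size: int = 500) -> list:
    """ASSUMPTION: round r trains on the r-th consecutive chunk of the local data."""
    start = round_idx * chunk_size
    return data[start:start + chunk_size]


# Ten rounds of 500 samples each walk through a participant's 5000 samples once.
chunks_a = [round_chunk(sub_a, r) for r in range(10)]
```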

4.3. Results and Analysis

In this section, we present a large number of experimental results and conduct detailed analyses and discussions on these experimental results.

4.3.1. Comparison of Multi-Party Collaborative Training with Centralized Training and the Existing Work

To validate the effectiveness of multi-party collaborative training in our proposed MPCTF, we performed comparative experiments of our proposed MPCTF with centralized training and the existing work. In centralized training, the large language model was fine-tuned on a single machine based on the overall MPCT-CoT dataset. We used MS-Swift [28] to conduct the experiments of centralized training. In the multi-party collaborative training, two participants were employed to fine-tune LLMs using our proposed MPCTF. In addition, we also compared our proposed MPCTF with the existing work, namely FS-LLM [11]. The experimental results of multi-party collaborative training, centralized training, and the existing work are shown in Table 2. The experimental results of FS-LLM [11] are reported from the original paper. In Table 2, Centralization-model-1, Centralization-model-2, and Centralization-model-3 represent the models obtained when the value of the epoch is 1, 2, and 3 in the centralized training. The MPCTF model denotes the model obtained using our proposed MPCTF in the multi-party collaborative training. The centralized training type means that the model was fine-tuned through centralized training. The multi-party collaborative training type means that the model was fine-tuned through multi-party collaborative training. The symbol “-” in the type denotes the existing work.

Table 2. The experimental results of multi-party collaborative training, centralized training, and the existing work. The best result is marked in bold. The second-best result is marked with an asterisk.

As shown in Table 2, our proposed MPCTF achieves performance remarkably close to that of the best model trained through centralized training. The performance difference between the best centralized model, Centralization-model-1, and the MPCTF model is only 0.07. We performed a paired t-test, and the result was p < 0.05. We also repeated the experiment on the model obtained using our proposed MPCTF, and the standard deviation was 0.04. In addition, the model trained with our proposed MPCTF exceeds the other centrally trained models, namely Centralization-model-2 and Centralization-model-3. These comparisons demonstrate the effectiveness of our proposed MPCTF: its performance is close to that of the best centralized training model, and it outperforms some centralized training models. Moreover, we compared our proposed MPCTF with the existing work, namely FS-LLM [11]. As can be seen from Table 2, our proposed MPCTF acquired an accuracy of 65.43 and outperformed the existing work, which acquired an accuracy of 14.25. The training loss graph of the MPCTF model is presented in Figure 2.

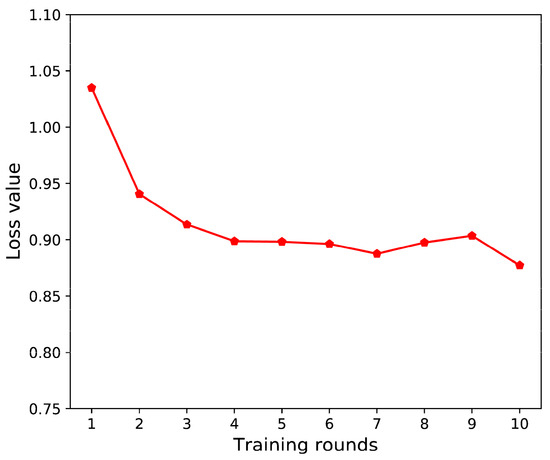

Figure 2. The training loss graph of the MPCTF model. From the graph, we can observe that as the number of training rounds increases, the model gradually converges.

Furthermore, we also discuss the comparative analysis between some baseline frameworks and our proposed MPCTF. The existing baseline federated learning methods, such as FS-LLM [11] and Flower [13], lack the core capabilities of our proposed MPCTF. Specifically, the participants are unable to effectively utilize multiple machines and multiple cards for training. In our proposed MPCTF, the participants can use multiple machines and multiple cards for training, which can accelerate training and reduce communication costs. In addition, the baseline frameworks lack privacy protection or have few privacy protection methods. In our proposed MPCTF, we propose multiple privacy protection strategies to achieve data protection. In addition, our proposed MPCTF can initiate the training of all participants with just one configuration file and supports multiple data partitioning strategies and model aggregation strategies, which are also absent in some baseline frameworks.

4.3.2. Effect of Different Values of Batch Size

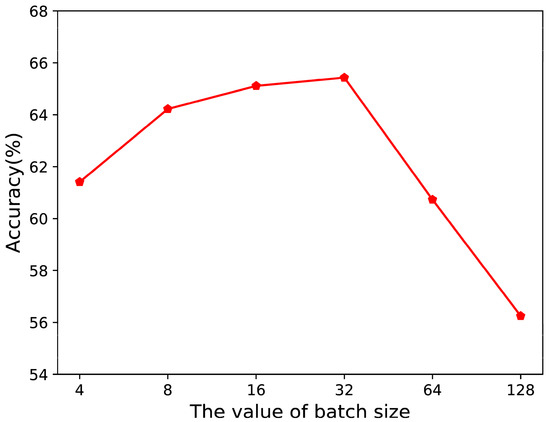

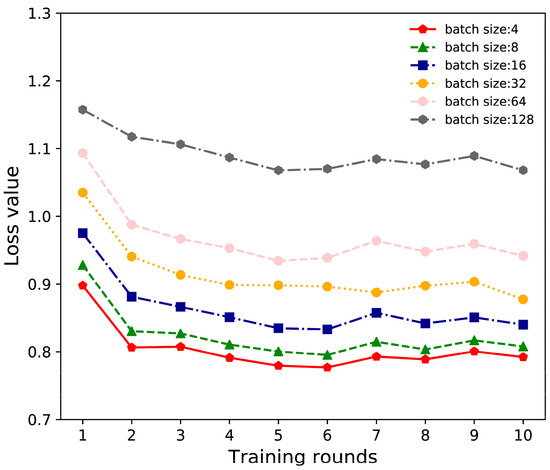

To investigate the effect of batch size, we set the batch size to 4, 8, 16, 32, 64, and 128. The learning rate was 1 × 10⁻⁴ for every batch size. The results are reported in Figure 3, and the training loss graph for each batch size is presented in Figure 4. From Figure 3, we can observe that the model achieves the best performance when the batch size is 32: performance gradually improves as the batch size increases from 4 to 32 and gradually decreases as it increases from 32 to 128. A possible reason is that with a small batch size the gradient estimate is noisy, which makes the parameter update direction unstable, whereas a large batch size reduces the randomness of the gradients, which may lower the generalization performance of the model. From Figure 4, we can observe that the model gradually converges as the number of training rounds increases.

Figure 3.

The effect of different values of batch size. When the batch size is 32, the model achieves the best performance. The performance of the model gradually improves when the batch size of the model increases from 4 to 32, and the performance of the model gradually decreases when the batch size of the model increases from 32 to 128.

Figure 4.

The training loss graph for each batch size. From the graph, we can observe that as the number of training rounds increases, the model gradually converges.
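The noise argument in the batch-size discussion can be illustrated with a toy simulation. The sketch below is not the experiment reported here: it assumes per-sample gradients are independent scalar draws with unit variance (a simplification), and the function name `minibatch_gradient_std` is hypothetical. Under that assumption, the standard deviation of the mini-batch mean shrinks roughly as 1/sqrt(batch size).

```python
import random
import statistics


def minibatch_gradient_std(batch_size, trials=2000, seed=0):
    """Empirical std of the mean of `batch_size` i.i.d. unit-variance 'gradients'.

    Assumption: each per-sample gradient is a scalar drawn from N(0, 1).
    """
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.gauss(0.0, 1.0) for _ in range(batch_size))
        for _ in range(trials)
    ]
    return statistics.pstdev(means)


# Batch sizes used in the experiments above; theory predicts about 1/sqrt(b).
for b in (4, 8, 16, 32, 64, 128):
    print(b, round(minibatch_gradient_std(b), 3))
```

The simulation only covers the small-batch side of the trade-off (noisier updates); the loss of generalization at large batch sizes is an empirical observation that this toy model does not reproduce.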

4.3.3. Effect of Different Data Partitioning Strategies

In the proposed MPCTF, there are four data partitioning strategies, which are the fixed-size strategy, the percentage-based strategy, the maximum data volume strategy, and the total data volume and available GPU memory strategy. These designed data partitioning strategies can easily and automatically adjust the amount of training data used in each training round based on a simple configuration.

To explore the effect of different data partitioning strategies, we fine-tuned LLMs with each of the four strategies, namely the fixed-size strategy, the percentage-based strategy, the maximum data volume strategy, and the total data volume and available GPU memory strategy, each under its own configuration. Specifically, in the fixed-size strategy, the chunk size was set to 500, and in the percentage-based strategy, the percentage was set to 0.1. The experimental results of different data partitioning strategies are presented in Figure 5.

Figure 5.

The effect of different data partitioning strategies.

The experimental results show that model performance is largely the same across the four data partitioning strategies in multi-party collaborative training for LLMs, so in practical scenarios users can choose the strategy that best fits their setting. From Figure 5, we can also observe that the fixed-size strategy performs slightly better than the other data partitioning strategies on the MPCT-CoT dataset. In general, the fixed-size strategy can be employed first; if the model performance does not meet the requirements, the other data partitioning strategies can be tried.

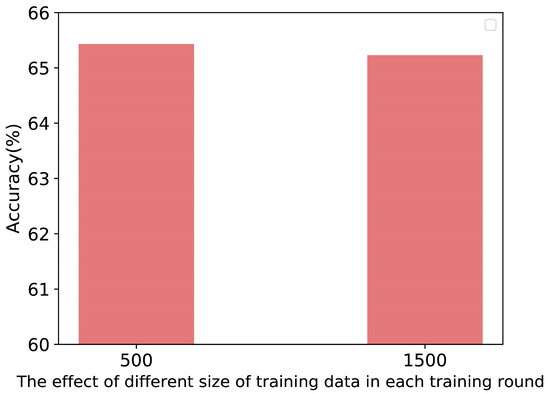

To further investigate the effect of different sizes of training data in each training round, we performed additional experiments on the value of chunk size in the fixed-size strategy. We set the chunk size to different values: 500 and 1500. It should be noted that in the experiments, the values of chunk size in the fixed-size strategy were 500 and 1500, which are equivalent to the percentage values of 0.1 and 0.3 in the percentage-based strategy, respectively.
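The equivalence between the two settings can be made concrete with a small sketch. It assumes a client dataset of 5000 samples, which is the size implied by 500 ↔ 0.1 and 1500 ↔ 0.3, and it assumes that one chunk is consumed per training round with wrap-around once all chunks have been used. The function names are hypothetical and this is not the MPCTF implementation.

```python
def fixed_size_chunks(samples, chunk_size):
    """Split samples into consecutive chunks of at most chunk_size items."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [samples[i:i + chunk_size] for i in range(0, len(samples), chunk_size)]


def percentage_chunks(samples, fraction):
    """Split samples into chunks that each hold `fraction` of the dataset."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    chunk_size = max(1, round(len(samples) * fraction))
    return fixed_size_chunks(samples, chunk_size)


def chunk_for_round(chunks, round_idx):
    """Pick the chunk for a training round (assumed wrap-around schedule)."""
    return chunks[round_idx % len(chunks)]


client_data = list(range(5000))  # assumed dataset size, see lead-in
by_size = fixed_size_chunks(client_data, 500)
by_pct = percentage_chunks(client_data, 0.1)
print(len(by_size), len(by_pct), by_size == by_pct)
```

With a chunk size of 1500, the same dataset yields four chunks, the last of which holds only the remaining 500 samples.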

The experimental results of different sizes of training data in each training round are reported in Figure 6. From Figure 6, we can observe that, in the fixed-size strategy or the percentage-based strategy, the model performs better when the chunk size per training round is 500, while a larger chunk size, such as 1500, leads to worse performance. The main reason is that when the chunk size per training round is relatively large, the models trained by different participants diverge more from one another, which increases the variance of the model parameter updates and consequently affects the convergence of the model.

Figure 6.

The effect of different sizes of training data in each training round.

4.3.4. Effect of Different Model Aggregation Strategies

In the proposed MPCTF, there are four model aggregation strategies, which are FedAvg, FedProx, FedAdam, and FedAdagrad. These model aggregation strategies are integrated to aggregate the partial parameter weights of the local models uploaded by each participant to obtain a global model with better generalization performance. To investigate the effect of different model aggregation strategies, we performed experiments on four model aggregation strategies. Specifically, the proximal hyperparameter μ was set to 0.01 in the FedProx strategy. In the FedAdam strategy, the learning rate was set to 0.001. In the FedAdagrad strategy, the learning rate was set to 0.1. The experimental results of different model aggregation strategies are reported in Figure 7.

Figure 7.

The effect of different model aggregation strategies.
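The aggregation strategies named in this subsection can be sketched in a few lines. The code below is a minimal illustration on plain Python lists, not the MPCTF implementation: `fedavg` is the sample-weighted average, `fedprox_penalty` is the proximal term that FedProx adds to each client's local loss, and `adaptive_server_step` follows the usual FedAdam and FedAdagrad server updates, treating the averaged client change as a pseudo-gradient. The function names and the values of `beta1`, `beta2`, and `tau` are assumptions; only μ = 0.01 and the two learning rates come from the text.

```python
import math


def fedavg(client_weights, client_sizes):
    """Average client weight vectors, weighted by local sample counts."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def fedprox_penalty(local_w, global_w, mu=0.01):
    """Proximal term (mu / 2) * ||w - w_global||^2 added to the local loss."""
    return 0.5 * mu * sum((a - b) ** 2 for a, b in zip(local_w, global_w))


def adaptive_server_step(global_w, avg_w, state, lr, kind="adam",
                         beta1=0.9, beta2=0.99, tau=1e-3):
    """One FedAdam or FedAdagrad server update; `state` holds lists m and v."""
    new_w = []
    for i, (g, a) in enumerate(zip(global_w, avg_w)):
        delta = a - g  # pseudo-gradient: averaged client change
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * delta
        if kind == "adam":
            state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * delta ** 2
        else:  # adagrad: accumulate squared pseudo-gradients
            state["v"][i] += delta ** 2
        new_w.append(g + lr * state["m"][i] / (math.sqrt(state["v"][i]) + tau))
    return new_w


clients = [[1.0, 2.0], [3.0, 4.0]]
sizes = [100, 300]
avg = fedavg(clients, sizes)
state = {"m": [0.0, 0.0], "v": [0.0, 0.0]}
print(avg, adaptive_server_step([0.0, 0.0], avg, state, lr=0.001, kind="adam"))
```

FedAvg and FedProx share the same server-side average and differ only in the client objective, whereas FedAdam and FedAdagrad change how the server applies the averaged update.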

As can be seen in Figure 7, the performance of models obtained through multi-party collaborative training remains largely consistent across most of the model aggregation strategies in the proposed MPCTF. In addition, we performed each experiment multiple times; the experimental results for FedAvg, FedProx, FedAdam, and FedAdagrad are 65.43 ± 0.31, 65.43 ± 0.23, 63.53 ± 0.44, and 65.50 ± 0.35, respectively. In practical scenarios, users can use the specific model aggregation strategy for the multi-party collaborative training. In general, FedAvg serves as the default model aggregation strategy within our proposed MPCTF. If the model performs poorly, other model aggregation strategies can be tried for multi-party collaborative training of LLMs.

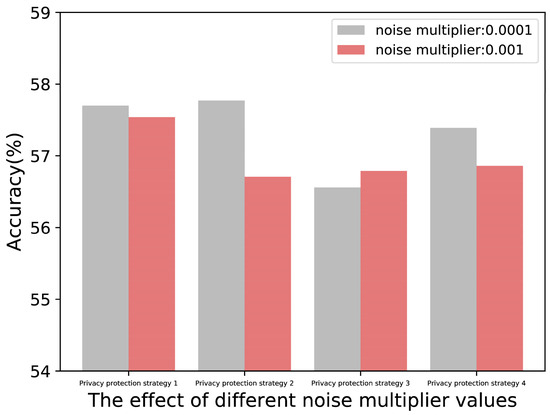

4.3.5. Effect of Different Privacy Protection Strategies

To investigate the effect of different privacy protection strategies, we performed experiments on each of them. In the proposed MPCTF, there are four privacy protection strategies, which are the server-side fixed clipping strategy, the server-side adaptive clipping strategy, the client-side fixed clipping strategy, and the client-side adaptive clipping strategy. These strategies can, to a certain extent, protect each participant from leaking information about its training data during the training process. We set the noise multiplier to 0.0001 and the gradient clip norm to 1 in all four privacy protection strategies. The experimental results of different privacy protection strategies are reported in Figure 8.

Figure 8.

The effect of different privacy protection strategies.
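The clip-then-noise pattern behind these strategies can be sketched as follows. This is a minimal illustration, assuming the common Gaussian mechanism in which each client update is clipped to an L2 norm bound and noise with standard deviation noise multiplier × clip norm is added to the summed updates. The function names are hypothetical; where the clipping happens (server or client) and how an adaptive bound is chosen in MPCTF are not shown.

```python
import math
import random


def clip_update(update, clip_norm):
    """Scale an update so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def private_average(client_updates, clip_norm=1.0, noise_multiplier=0.0001, seed=0):
    """Clip each update, sum, add Gaussian noise, then average.

    Assumption: noise std = noise_multiplier * clip_norm, applied to the sum.
    """
    rng = random.Random(seed)
    clipped = [clip_update(u, clip_norm) for u in client_updates]
    summed = [sum(col) for col in zip(*clipped)]
    std = noise_multiplier * clip_norm
    noisy = [x + rng.gauss(0.0, std) for x in summed]
    return [x / len(clipped) for x in noisy]


updates = [[3.0, 4.0], [0.3, 0.4]]  # hypothetical client updates
print(clip_update(updates[0], 1.0))  # norm 5.0 is scaled down to norm 1.0
print(private_average(updates))
```

The defaults mirror the settings used in this experiment (noise multiplier 0.0001, gradient clip norm 1).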

As can be seen in Figure 8, the models obtained with most privacy protection strategies perform similarly. We performed each experiment multiple times; the experimental results for privacy protection strategies 1, 2, 3, and 4 (the server-side fixed clipping, server-side adaptive clipping, client-side fixed clipping, and client-side adaptive clipping strategies, respectively) are 57.70 ± 0.21, 57.77 ± 0.32, 56.56 ± 0.29, and 57.39 ± 0.17. The results also show the cost of privacy protection. From Table 2, we can observe that the accuracy of the model trained without a privacy protection strategy in multi-party collaborative training is 65.43. According to Figure 8, when the noise multiplier is 0.0001 and the gradient clip norm is 1, the models trained with a privacy protection strategy are between 7.66 and 8.87 lower in accuracy than the model trained without one. The main reason is that the noise injected into the model makes it difficult for the model to capture the knowledge in the local training data during training, which in turn reduces its performance. In general, the more noise a privacy protection strategy adds, the more difficult it is for attackers to recover the training data. In practical scenarios with a high demand for privacy protection, users can train with larger noise to achieve stronger data protection.
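The reported range of accuracy differences can be checked directly from the mean accuracies quoted above. The short sketch below only redoes that arithmetic; the variable names are illustrative.

```python
baseline = 65.43  # accuracy without a privacy protection strategy (Table 2)

# Mean accuracies reported for the four privacy protection strategies.
dp_accuracy = {
    "server-side fixed clipping": 57.70,
    "server-side adaptive clipping": 57.77,
    "client-side fixed clipping": 56.56,
    "client-side adaptive clipping": 57.39,
}

gaps = {name: round(baseline - acc, 2) for name, acc in dp_accuracy.items()}
for name, gap in gaps.items():
    print(f"{name}: {gap:.2f}")
print("range:", min(gaps.values()), "to", max(gaps.values()))
```

The smallest gap comes from the server-side adaptive clipping strategy and the largest from the client-side fixed clipping strategy.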

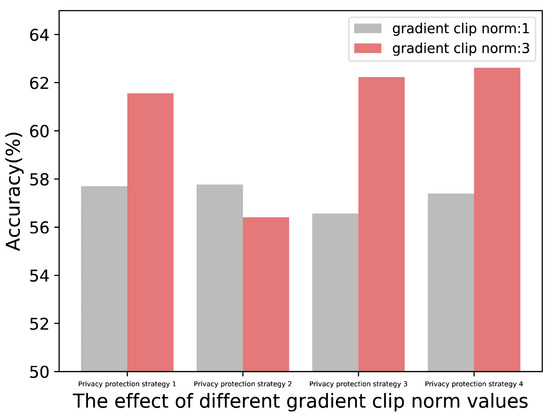

To further explore the effect of noise multiplier values and gradient clip norm values in the privacy protection strategy, we performed additional experiments on different noise multiplier values and gradient clip norm values. Specifically, we adjusted the value of the noise multiplier to 0.001 and the value of the gradient clip norm to 3. The experimental results of the effect of different noise multiplier values are reported in Figure 9, and the experimental results of the effect of different gradient clip norm values are reported in Figure 10. In Figure 9 and Figure 10, the privacy protection strategies 1, 2, 3, and 4 represent the server-side fixed clipping strategy, the server-side adaptive clipping strategy, the client-side fixed clipping strategy, and the client-side adaptive clipping strategy, respectively.

Figure 9.

The effect of different noise multiplier values.

Figure 10.

The effect of different gradient clip norm values.

The experimental results in Figure 9 show that the performance of the model with a smaller noise multiplier value is better than that of the model with a larger noise multiplier value in most privacy protection strategies. We believe the main reason is that reducing the noise multiplier value will reduce the noise injected into the model parameters, thereby reducing the interference with the model’s generalization ability.

The experimental results in Figure 10 show that the performance of the model with a larger gradient clip norm value is better than that of the model with a smaller gradient clip norm value in most privacy protection strategies. We believe the main reason is that the lower gradient clip norm value overly compresses the gradients, leading to the loss of effective gradient information and preventing the model from learning complex patterns from the local training data.
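The two explanations above can be illustrated numerically. The sketch below assumes the common convention that an update is rescaled by min(1, clip norm / update norm) and that the Gaussian noise has standard deviation noise multiplier × clip norm. The update norm of 2.5 is a hypothetical value chosen for illustration, not a measurement from the experiments, and the function names are hypothetical as well.

```python
def clip_scale(update_norm, clip_norm):
    """Factor applied to an update so its L2 norm does not exceed clip_norm."""
    return min(1.0, clip_norm / update_norm) if update_norm > 0 else 1.0


def noise_std(noise_multiplier, clip_norm):
    """Gaussian noise std, assuming std = noise_multiplier * clip_norm."""
    return noise_multiplier * clip_norm


update_norm = 2.5  # hypothetical L2 norm of one client update
for clip_norm in (1.0, 3.0):
    for z in (0.0001, 0.001):
        print(clip_norm, z, clip_scale(update_norm, clip_norm), noise_std(z, clip_norm))
```

For this hypothetical update, a clip norm of 1 keeps only 40% of the update's magnitude, while a clip norm of 3 leaves it unchanged. Under the assumed convention, the noise standard deviation also grows with the clip norm, so the two settings interact.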

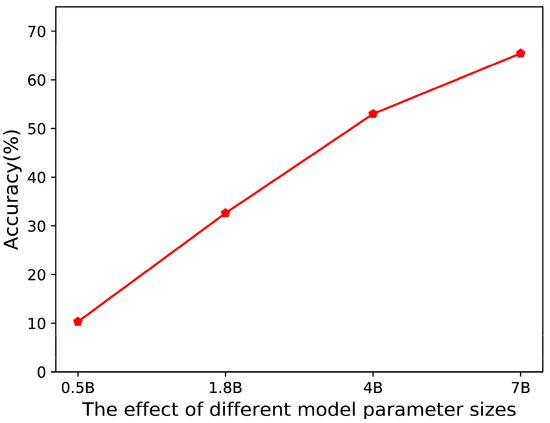

4.3.6. Effect of Different Model Parameter Sizes

The parameter size of an LLM refers to the number of parameters in the model. In general, more parameters mean that the model has stronger representational capabilities and memory potential. To investigate the effect of different model parameter sizes, we conducted experiments on models of different sizes. The experimental results are presented in Figure 11. On the one hand, the results demonstrate that the proposed MPCTF can support multi-party collaborative training of LLMs of different sizes. On the other hand, the larger the model parameter size, the better the model performs, and the 0.5B model obtains the worst result. The main reason is that larger models can generally learn more complex patterns and broader knowledge from the training dataset and have stronger generalization capabilities.

Figure 11.

The effect of different model parameter sizes.

5. Conclusions

In this paper, we propose a novel MPCTF for multi-party collaborative training of LLMs. The proposed MPCTF can effectively alleviate the problem of data silos, and it can perform multi-party collaborative training of LLMs without sharing the private data of each participant with other institutions or organizations. In other words, participants conduct local training on their respective datasets, while the server performs global aggregation of the model parameters. In the proposed MPCTF, the one-click launch mechanism with multi-node and multi-GPU training is designed to simplify and optimize the operations and processes of multi-party collaborative training; it reduces cumbersome configuration and enhances the level of automation. The four data partitioning strategies are developed to flexibly customize and automatically configure the amount of training data used in each round, and they automatically switch to the next data segment during training. A variety of model aggregation strategies are integrated to effectively aggregate the models of all clients and obtain a global model with stronger generalization ability. Different privacy protection strategies are employed to protect, to a certain extent, the security of data during multi-party collaborative training. The experimental results show that the proposed MPCTF achieves superior performance and validate its effectiveness. In future work, we plan to integrate other efficient optimizers and adapt more privacy protection methods to the proposed MPCTF.

Author Contributions

Conceptualization, N.L. and D.L.; methodology, N.L.; validation, N.L.; investigation, N.L.; writing—original draft preparation, N.L.; writing—review and editing, N.L. and D.L.; visualization, N.L.; supervision, N.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S. Gpt-4 technical report. arXiv 2023, arXiv:2303.08774.

2. Guo, D.; Yang, D.; Zhang, H.; Song, J.; Zhang, R.; Xu, R.; Zhu, Q.; Ma, S.; Wang, P.; Bi, X. Deepseek-r1: Incentivizing reasoning capability in llms via reinforcement learning. arXiv 2025, arXiv:2501.12948.

3. Kim, J.K.; Chua, M.; Rickard, M.; Lorenzo, A. ChatGPT and large language model (LLM) chatbots: The current state of acceptability and a proposal for guidelines on utilization in academic medicine. J. Pediatr. Urol. 2023, 19, 598–604.

4. Shafee, S.; Bessani, A.; Ferreira, P.M. Evaluation of LLM-based chatbots for OSINT-based Cyber Threat Awareness. Expert Syst. Appl. 2024, 261, 125509.

5. Guan, Y.; Wang, D.; Chu, Z.; Wang, S.; Ni, F.; Song, R.; Zhuang, C. Intelligent agents with llm-based process automation. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 5018–5027.

6. Zhong, N.; Wang, Y.; Xiong, R.; Zheng, Y.; Li, Y.; Ouyang, M.; Shen, D.; Zhu, X. Casit: Collective intelligent agent system for internet of things. IEEE Internet Things J. 2024, 11, 19646–19656.

7. Dong, X.L.; Moon, S.; Xu, Y.E.; Malik, K.; Yu, Z. Towards next-generation intelligent assistants leveraging llm techniques. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Long Beach, CA, USA, 6–10 August 2023; pp. 5792–5793.

8. Shu, Y.; Zhang, H.; Gu, H.; Zhang, P.; Lu, T.; Li, D.; Gu, N. RAH! RecSys–Assistant–Human: A Human-Centered Recommendation Framework with LLM Agents. IEEE Trans. Comput. Soc. Syst. 2024, 11, 6759–6770.

9. Wang, F.; Shi, D.; Aguilar, J.; Cui, X.; Jiang, J.; Shen, L.; Li, M. LLM-KGMQA: Large language model-augmented multi-hop question-answering system based on knowledge graph in medical field. Knowl. Inf. Syst. 2025, 67, 6461–6503.

10. Lin, X.; Huang, Z.; Zhang, Z.; Zhou, J.; Chen, E. Explore What LLM Does Not Know in Complex Question Answering. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; pp. 24585–24594.

11. Kuang, W.; Qian, B.; Li, Z.; Chen, D.; Gao, D.; Pan, X.; Xie, Y.; Li, Y.; Ding, B.; Zhou, J. Federatedscope-llm: A comprehensive package for fine-tuning large language models in federated learning. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 5260–5271.

12. Xie, Y.; Wang, Z.; Gao, D.; Chen, D.; Yao, L.; Kuang, W.; Li, Y.; Ding, B.; Zhou, J. Federatedscope: A flexible federated learning platform for heterogeneity. arXiv 2022, arXiv:2204.05011.

13. Beutel, D.J.; Topal, T.; Mathur, A.; Qiu, X.; Fernandez-Marques, J.; Gao, Y.; Sani, L.; Li, K.H.; Parcollet, T.; de Gusmão, P.P.B. Flower: A friendly federated learning research framework. arXiv 2020, arXiv:2007.14390.

14. Fan, T.; Kang, Y.; Ma, G.; Chen, W.; Wei, W.; Fan, L.; Yang, Q. Fate-llm: A industrial grade federated learning framework for large language models. arXiv 2023, arXiv:2310.10049.

15. Pei, J.; Liu, W.; Li, J.; Wang, L.; Liu, C. A review of federated learning methods in heterogeneous scenarios. IEEE Trans. Consum. Electron. 2024, 70, 5983–5999.

16. Hu, K.; Gong, S.; Zhang, Q.; Seng, C.; Xia, M.; Jiang, S. An overview of implementing security and privacy in federated learning. Artif. Intell. Rev. 2024, 57, 204.

17. Zhang, Z.; Gong, C.; Cai, Y.; Yuan, Y.; Liu, B.; Li, D.; Guo, Y.; Chen, X. No privacy left outside: On the (in-) security of tee-shielded dnn partition for on-device ml. In Proceedings of the 2024 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19 May–23 May 2024; pp. 3327–3345.

18. Fang, J.; Jiang, H.; Wang, K.; Ma, Y.; Jie, S.; Wang, X.; He, X.; Chua, T.-S. Alphaedit: Null-space constrained knowledge editing for language models. arXiv 2024, arXiv:2410.02355.

19. Charles, Z.; Ganesh, A.; McKenna, R.; McMahan, H.B.; Mitchell, N.; Pillutla, K.; Rush, K. Fine-tuning large language models with user-level differential privacy. arXiv 2024, arXiv:2407.07737.

20. Hu, E.J.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2022.

21. Luo, M.; Tan, S.; Wong, J.; Shi, X.; Tang, W.Y.; Roongta, M.; Cai, C.; Luo, J.; Zhang, T.; Li, L.E. Deepscaler: Surpassing o1-preview with a 1.5 b model by scaling rl. Notion Blog 2025, 3, 5.

22. Ye, Y.; Huang, Z.; Xiao, Y.; Chern, E.; Xia, S.; Liu, P. Limo: Less is more for reasoning. arXiv 2025, arXiv:2502.03387.

23. Face, H. Open r1: A Fully Open Reproduction of Deepseek-r1, January 2025. Available online: https://github.com/huggingface/open-r1 (accessed on 5 March 2025).

24. Guha, E.; Marten, R.; Keh, S.; Raoof, N.; Smyrnis, G.; Bansal, H.; Nezhurina, M.; Mercat, J.; Vu, T.; Sprague, Z. OpenThoughts: Data Recipes for Reasoning Models. arXiv 2025, arXiv:2506.04178.

25. Contributors, O. OpenCompass: A Universal Evaluation Platform for Foundation Models. 2023. Available online: https://github.Com/Open-Compass/Opencompass (accessed on 5 March 2025).

26. Cobbe, K.; Kosaraju, V.; Bavarian, M.; Chen, M.; Jun, H.; Kaiser, L.; Plappert, M.; Tworek, J.; Hilton, J.; Nakano, R. Training verifiers to solve math word problems. arXiv 2021, arXiv:2110.14168.

27. Bai, J.; Bai, S.; Chu, Y.; Cui, Z.; Dang, K.; Deng, X.; Fan, Y.; Ge, W.; Han, Y.; Huang, F. Qwen technical report. arXiv 2023, arXiv:2309.16609.

28. Zhao, Y.; Huang, J.; Hu, J.; Wang, X.; Mao, Y.; Zhang, D.; Jiang, Z.; Wu, Z.; Ai, B.; Wang, A. Swift: A scalable lightweight infrastructure for fine-tuning. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; pp. 29733–29735.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).