Abstract

With the proliferation of LEO satellite constellations and increasing demands for on-orbit intelligence, satellite networks generate massive, heterogeneous, and privacy-sensitive data. Ensuring efficient model collaboration under strict privacy constraints remains a critical challenge. This paper proposes HiSatFL, a cross-domain adaptive and privacy-preserving federated learning framework tailored to the highly dynamic and resource-constrained nature of satellite communication systems. The framework incorporates an orbital-aware hierarchical FL architecture, a multi-level domain adaptation mechanism, and an orbit-enhanced meta-learning strategy to enable rapid adaptation with limited samples. In parallel, privacy is preserved via noise-calibrated feature alignment, differentially private adversarial training, and selective knowledge distillation, guided by a domain-aware dynamic privacy budget allocation scheme. We further establish a unified optimization framework balancing privacy, utility, and adaptability, and derive convergence bounds under dynamic topologies. Experimental results on diverse remote sensing datasets demonstrate that HiSatFL significantly outperforms existing methods in accuracy, adaptability, and communication efficiency, highlighting its practical potential for collaborative on-orbit AI.

1. Introduction

1.1. Background

With the rapid advancement of global digital transformation, satellite communication technologies are experiencing unprecedented growth. As of 2024, over 8000 satellites are operating in orbit, with more than 75% being commercial satellites [1]. Large-scale LEO constellations such as SpaceX Starlink and Amazon Kuiper are constructing global broadband infrastructures, and it is projected that over 100,000 satellites will be in orbit by 2030 [2]. These networks not only support traditional communication services but also serve as critical carriers for Earth observation, environmental monitoring, and massive IoT data collection.

Modern satellite systems are generating data at an exponential rate. A single high-resolution Earth observation satellite can produce several terabytes of raw data daily, and this volume increases to the petabyte scale in large constellations [3]. The traditional “downlink-then-process” paradigm is facing bottlenecks due to limited bandwidth, overloaded ground processing, and strict latency requirements [4]. Intelligent onboard processing has thus become indispensable. However, individual satellites have limited computational resources, making it difficult to handle complex AI tasks independently. This naturally leads to distributed collaborative AI, where federated learning (FL) becomes a fitting paradigm with its characteristic of training models locally without data sharing [5].

The hierarchical architecture of satellite communication systems reflects the unique advantages and complementary characteristics of satellites operating at different orbital altitudes. Low Earth Orbit (LEO) satellites, positioned between 300 and 2000 km above the Earth’s surface, offer low communication latency (5–25 milliseconds) and high-resolution observational capabilities. However, their limited coverage necessitates the deployment of large-scale constellations to achieve global reach. Medium Earth Orbit (MEO) satellites, located between 2000 and 35,786 km, provide a favorable trade-off between coverage area and latency. A single MEO satellite can cover continental-scale regions, making it well-suited as a regional data aggregation node. Geostationary Earth Orbit (GEO) satellites, fixed at an altitude of 35,786 km above the equator, deliver stable global coverage and persistent communication links. Although their end-to-end latency is relatively high (approximately 280 milliseconds), GEO satellites play an indispensable role in global coordination and long-term data analysis.

Satellites across different orbital layers exhibit pronounced domain heterogeneity in both data characteristics and processing capabilities. LEO satellites, due to their proximity to Earth, are capable of collecting high-resolution, multispectral observational data. Nevertheless, their rapid orbital motion (with periods of 90–120 min) leads to highly dynamic data with fine spatiotemporal granularity but limited coverage continuity. MEO satellites strike a balance between spatial resolution and temporal coverage, making them ideal for regional-scale environmental monitoring and trend analysis. In contrast, although GEO satellites typically offer lower spatial resolution, their geostationary nature allows for continuous, wide-area observations. This makes them particularly valuable in application scenarios requiring persistent surveillance, such as meteorological monitoring and disaster early warning systems. The stratified distribution of such domain-specific characteristics naturally lays a technical foundation for the design of hierarchical federated learning architectures.

Satellite networks inherently exhibit multi-domain data heterogeneity due to variations in geography, climate, terrain, and mission type. Cross-domain learning is crucial for satellite AI systems. Spatial domain differences are manifested in statistical disparities between data collected from polar regions (e.g., ice coverage) and tropical areas (e.g., rainforests) [6]. Temporal domain shifts occur due to seasonal changes and diurnal cycles [7]. Technical heterogeneity arises from diverse onboard sensors across satellite generations and manufacturers [8]. Task heterogeneity varies from land cover classification to complex target tracking [9]. Existing domain adaptation methods are primarily designed for static environments and are ill-suited for the high dynamics of satellite networks. Traditional FL assumes relatively stable network topologies and stationary data distributions—assumptions that fail under satellite conditions [10], where topologies change every 90–120 min, and satellites operate under strict constraints in power, computation, storage, and communication [11].

From the privacy perspective, satellite data introduces unique challenges. Unlike typical Internet data, satellite data contains precise geolocation and timestamp metadata, making it highly sensitive [12]. Location privacy threats arise from model inversion attacks that infer collection locations, potentially compromising national security or proprietary interests [13]. Temporal privacy risks involve inference of activity patterns and resource dynamics from periodic satellite observations [14]. Multi-party privacy conflicts also emerge, as satellite data often involves stakeholders with varying privacy expectations [15]. Existing privacy-preserving methods, such as differential privacy, face deployment difficulties in resource-constrained environments [16,17]. Moreover, domain adaptation and privacy protection tend to conflict, as adaptation demands more feature sharing, which increases privacy risks. Hence, achieving effective cross-domain adaptation while preserving privacy becomes a complex tri-objective optimization problem balancing utility, adaptability, and privacy.

1.2. Motivation and Contributions

Despite extensive studies in federated learning, domain adaptation, and privacy preservation, existing research faces three critical limitations:

Theoretical Limitations: Existing FL theories mostly rely on static network assumptions and lack analytical foundations for dynamic topologies [18]. Domain adaptation theories are mainly developed for centralized learning and seldom consider federated multi-domain setups [19]. Moreover, privacy and domain adaptation are often studied independently, lacking an integrated theoretical framework [20].

Technical Limitations: Most FL algorithms are tailored for terrestrial networks, ignoring the unique characteristics of satellite systems [21]. Privacy mechanisms are often implemented post hoc, lacking end-to-end optimization [22]. Hierarchical FL studies focus more on algorithms than the integration with physical network architectures [23].

Given the inherently layered structure of satellite networks and the distinct data attributes of each orbital tier, conventional flat federated learning paradigms are ill-suited to fully exploit the advantages of such hierarchical systems. The dynamic nature of LEO satellites, the regional aggregation potential of MEO satellites, and the global coordination capabilities of GEO satellites must be effectively integrated within the federated learning framework. As a result, a key technical challenge lies in the design of a hierarchical federated learning architecture that aligns with the physical network topology while maximizing the computational and communication capabilities of each orbital layer.

This work addresses the following fundamental research question:

How can we design a federated learning framework that enables effective cross-domain adaptation and robust privacy preservation in a highly dynamic and resource-constrained satellite communication environment?

This question involves key challenges including convergence under time-varying graphs, unified modeling of multi-dimensional domain shifts, and the deep integration of privacy mechanisms with adaptation strategies.

Our key contributions are as follows:

Orbit-Aware Hierarchical Federated Architecture: We propose the first integration of physical LEO-MEO-GEO layers with logical sensing–aggregation–coordination layers to enable layered aggregation and intelligent scheduling in dynamic satellite networks.

Privacy-Aware Multi-Level Domain Adaptation: We introduce a novel domain adaptation mechanism that integrates orbital periodicity modeling, hierarchical domain graphs, and uncertainty-driven fusion to enable effective cross-domain transfer while preserving privacy.

Orbit-Enhanced Meta-Learning for Fast Adaptation: We design a novel meta-learning algorithm with orbital periodic constraints and orbital similarity weighting, enabling fast adaptation to new domains with few-shot supervision and online task switching.

This work extends federated learning theory to extreme dynamic environments and develops a new multi-dimensional domain-aware FL framework. It also provides a practical foundation for collaborative onboard AI across heterogeneous satellite constellations.

2. Related Work

2.1. Federated Learning

Federated learning (FL) is a distributed machine learning paradigm first introduced by McMahan et al. [24] in 2016, where multiple clients collaboratively train a shared model while keeping data decentralized to preserve privacy and overcome data silos. The seminal FedAvg algorithm performs multiple local SGD updates followed by weighted averaging at a central server. Li et al. [25] proposed FedProx by introducing proximal terms to address system and statistical heterogeneity. Karimireddy et al. [26] introduced SCAFFOLD using control variates to mitigate client drift and improve convergence under non-IID conditions.

A central challenge in FL is statistical heterogeneity. To address non-IID data, various methods have emerged. FedNova [27] normalizes local updates across clients. Data-sharing methods introduce small public datasets to align distributions. Hsu et al. [28] proposed a data sharing-based approach to mitigate the Non-IID problem by sharing a small amount of public data among clients. Communication efficiency is another practical constraint. DGC [29] employs gradient sparsification, quantization, and error feedback to reduce communication costs. SignSGD [30] transmits only gradient signs. FetchSGD [31] combines gradient compression with adaptive local updates.

2.2. Domain Adaptation and Transfer Learning

Domain adaptation addresses distributional shifts between source and target domains. Theoretical foundations by Ben-David et al. [32]. provide generalization bounds. DANN [33] uses adversarial training to learn domain-invariant features. DAN [34] and JAN [35] align feature distributions using multi-kernel and joint MMD, respectively. Adversarial methods like ADDA [36] and CDAN [37] further exploit classifier information or conditional alignment. MCD [38] leverages classifier disagreement for sample transferability detection.

In multi-source settings, DCTN [39] uses expert networks and dynamic weighting. Zhao et al. [40] proposed a theoretical and analytical framework for multi-source domain adaptation and gave a generalization bound for multi-source domain adaptation. M3SDA [41] aligns higher-order moments across domains. Meta-learning introduces fast adaptation: MAML [42] learns initialization that generalizes across tasks. Meta-domain adaptation frameworks [43,44] train across multiple domain pairs for efficient generalization.

2.3. Privacy-Preserving Machine Learning

Differential privacy (DP) [45], introduced by Dwork, is the most widely accepted formalism for privacy guarantees. DP-SGD [46] incorporates noise into clipped gradients to ensure DP during training. Rényi differential privacy (RDP) [47] allows tighter accounting. In FL, DP-FedAvg [48] applies local DP at clients with secure aggregation. Local vs. global DP models [49,50] balance client autonomy and central control. Secure multi-party computation (SMC) techniques [51,52,53], such as secure aggregation and homomorphic encryption, have also been applied in FL for strong privacy guarantees.

2.4. Intelligent Satellite Networks

Modern satellite networks exhibit hierarchical structures. LEO satellites offer low latency and high capacity, making them ideal for edge sensing [54,55]. SDN [56] architectures have been proposed to handle satellite dynamics via centralized control. AI-driven routing and resource management optimize traffic flows and spectrum usage [57,58]. Onboard AI systems have enabled tasks like cloud detection and disaster response [59,60].

Recent studies incorporate FL in satellite–ground architectures, but few consider domain heterogeneity, dynamic topology, or privacy. For instance, FL frameworks for SAGIN (Space–Air–Ground Integrated Networks) [61] integrate DP and reinforcement learning. Secure computation techniques [62] have been applied in proximity operations to protect satellite capabilities.

Recently, the SatFed method proposed by Zhang et al. [63] also addresses federated learning in LEO satellite networks, but its technical approach differs fundamentally from this work. SatFed primarily focuses on satellite-assisted terrestrial federated learning, employing LEO satellites as communication relays between ground devices. It mainly optimizes satellite-to-ground communication bandwidth constraints and ground device heterogeneity issues, improving communication efficiency through a freshness-prioritized model queuing mechanism and multi-graph heterogeneity modeling. In comparison, our HiSatFL framework demonstrates broader applicability and deeper technical innovations:

- (1)

- In architectural design, HiSatFL proposes an orbit-aware three-tier hierarchical federated architecture (LEO-MEO-GEO), elevating satellites from the role of communication relays to active learning participants, achieving deep integration of physical network topology with logical learning structures;

- (2)

- In technical content, HiSatFL not only addresses communication efficiency but more importantly, systematically solves for the first time the unified optimization of multi-dimensional domain adaptation (spatial, temporal, technical, and mission domains), dynamic topology adaptation, and privacy preservation with domain adaptation in satellite networks;

- (3)

- In theoretical contributions, HiSatFL establishes federated learning convergence theory on time-varying graphs and provides differential privacy guarantees with dynamic budget allocation, while SatFed mainly focuses on communication optimization analysis.

3. Cross-Domain Adaptive Privacy-Preserving Federated Learning

3.1. Hierarchical Satellite Federated Learning Architecture

To address the high dynamicity, resource constraints, and multi-dimensional cross-domain characteristics of satellite communication environments, this section proposes a novel Hierarchical Satellite Federated Learning Architecture (HiSatFed). This architecture is based on the physical–logical hierarchical mapping principle, organically unifying the physical orbital stratification of LEO-MEO-GEO with the logical functional stratification of sensing–aggregation–coordination. Through innovative mechanisms such as orbit-aware scheduling and hierarchical asynchronous aggregation, it achieves efficient cross-domain adaptive federated learning under extremely dynamic environments.

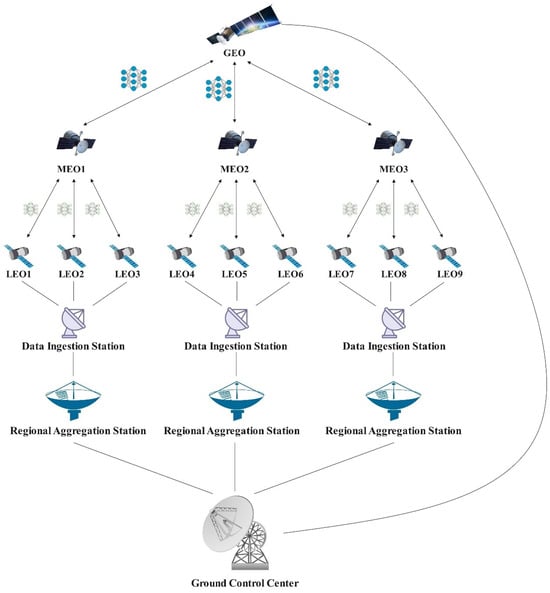

The core of the HiSatFed architecture is the three-layer design of the space segment, as illustrated in Figure 1. The LEO sensing layer consists of low-earth-orbit satellites deployed at 300–2000 km altitude, responsible for data collection and local model training functions. LEO satellites leverage their low-earth-orbit advantages to acquire high-resolution Earth observation data, execute local feature extraction and model updates through onboard AI processors, and form dynamic collaborative clusters with neighboring satellites via inter-satellite links for local aggregation. The MEO aggregation layer comprises medium-earth-orbit satellites at 2000–35,786 km altitude, undertaking regional data aggregation and cross-domain adaptation core functions. MEO satellites cover continental-scale geographical regions, coordinate and manage dozens of LEO satellites, execute complex domain adaptation and transfer learning algorithms, and achieve knowledge transfer across spatial, temporal, and technical domains. The GEO coordination layer consists of Geostationary Earth Orbit satellites, serving as global coordination centers to formulate federated learning strategies, fuse cross-regional knowledge, conduct long-term trend analysis, and ensure consistency and optimality of network-wide learning.

Figure 1.

Schematic diagram of Hierarchical Satellite Federated Learning Architecture.

The ground control center serves as the system’s nerve center, primarily connecting with GEO satellites and undertaking global monitoring, orbit prediction, hyperparameter optimization, and emergency response functions. Regional aggregation stations mainly communicate with MEO satellites, responsible for regional data preprocessing, algorithm optimization, and cross-regional coordination. Data access stations conduct high-frequency data exchange with LEO satellites, providing edge intelligent processing and communication protocol adaptation. The processing results from data access stations serve as inputs to regional aggregation stations, while the optimization strategies of regional aggregation stations guide the operation of data access stations.

The hierarchical architecture design fully considers the physical characteristic differences among various track layers:

Physical characteristics of the LEO layer:

- -

- Orbital velocity: 7.8 km/s, resulting in rapid ground track changes;

- -

- Doppler shift: ±4.2 kHz, affecting the stability of the communication link;

- -

- Orbital decay: Atmospheric drag causes an average annual decrease in altitude of about 1–2 km;

- -

- Visible duration: 8–12 min for a single transit.

Physical properties of the MEO layer:

- -

- Orbital velocity: 3.9 km/s, providing more stable regional coverage;

- -

- Orbital period: 6 h, suitable for regional data aggregation cycle;

- -

- Radiation environment: Due to the influence of the Van Allen radiation belt, equipment reliability needs to be considered.

Physical characteristics of the GEO layer:

- -

- Orbital velocity: 3.07 km/s, synchronous with the Earth’s rotation;

- -

- Fixed coverage: Continuously covering 1/3 of the Earth’s surface;

- -

- Propagation delay: 280 milliseconds one-way delay, affecting real-time requirements.

These physical characteristics are directly mapped to the system design of federated learning, such as the rapid dynamic changes in the LEO layer corresponding to the rapid adaptation requirements of the meta-learning module, the regional stability in the MEO layer corresponding to the hierarchical aggregation strategy, and the global coverage in the GEO layer corresponding to the global coordination function.

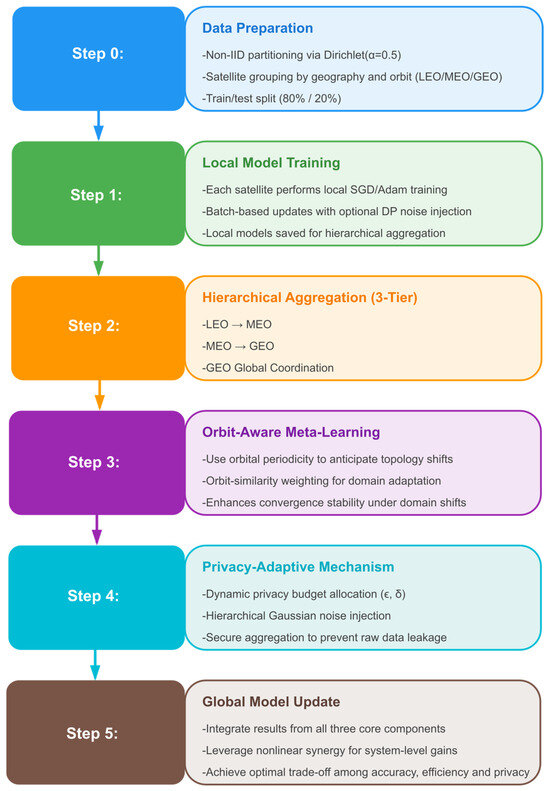

The overview of the research methodology in this article is presented in Figure 2.

Figure 2.

Overview diagram of the methodology.

3.2. Multi-Level Domain Adaptation Mechanism

To address the unique challenges of satellite network environments, this paper defines the multi-dimensional domain space as follows:

Definition 1 (Multi-dimensional Domain Space).

Let denote the multi-dimensional domain space of a satellite network, where represents the Spatial Domain, characterizing changes in geospatial location; represents the Temporal Domain, capturing the effects of temporal evolution; represents the Technical Domain, signifying sensor technology differences; and represents the Mission Domain, indicating task complexity levels.

Traditional domain adaptation methods assume that domain shifts are singular and static. However, domain variations in satellite network environments exhibit characteristics of multi-dimensional coupling, periodic evolution, and hierarchical distribution. The periodic domain variation patterns inherent in satellite orbits endow satellite spatial domain changes with predictable periodic characteristics, providing a theoretical foundation for designing domain-aware adaptation strategies. The multi-level domain adaptation mechanism proposed in this paper effectively addresses the complex multi-dimensional domain variation problems in satellite networks through three core components: hierarchical domain identification, progressive domain adaptation, and multi-source domain fusion.

3.2.1. Hierarchical Domain Identification

Traditional domain adaptation methods typically assume that domain boundaries are pre-given, but in dynamic satellite environments, domain boundaries are fuzzy and time-varying. This paper proposes a multi-scale domain representation method based on orbital dynamics awareness, combining satellite physical motion laws with domain modeling to establish a predictive domain representation framework.

Let data samples in the satellite network be , and based on the multi-dimensional domain definition framework in Section 3, establish the multi-scale domain representation function as follows:

where is the feature vector, is the label, is the spatial position coordinate, is the timestamp, is the orbital parameter, and represents the hierarchy level.

Orbit-aware representation of spatial domain combines orbital dynamics, considering not only instantaneous position but also orbital prediction information. Grid partitioning follows hierarchical principles, with grid size growing exponentially with hierarchy level, expressed as follows:

where represents the latitude or longitude grid size (degrees) of the -th layer, represents the base grid sizer.

Multi-scale modeling of temporal domain considers the multiple temporal periodicities of satellite systems, including comprehensive representation of diurnal cycles, seasonal cycles, and orbital cycles; specifically

where represents the diurnal cycle, represents the seasonal cycle, and represents the orbital cycle. Here, days denotes the annual cycle, which is used to model the impact of seasonal variations on satellite observational data. This includes annually periodic phenomena such as vegetation phenology, snow cover dynamics, and sea ice evolution. represents the orbital period of the satellite. For typical Low Earth Orbit (LEO) satellites—such as Starlink satellites operating at an altitude of approximately 550 km—the orbital period is approximately minutes. This parameter is crucial for capturing the periodic variations in observational geometry, solar incidence angles, and atmospheric path lengths driven by orbital motion.

Hierarchical clustering of technical domain employs sensor feature-based hierarchical clustering method, determining technical domain identifiers through spectral clustering:

where represents the -th layer feature extractor, is the center of the k-th technical domain cluster, and is the number of clusters in the -th layer.

Domain heterogeneity in satellite network environments exhibits a distinctly hierarchical structure, rendering conventional flat clustering methods insufficient for capturing the multi-scale characteristics of such domain distributions. Specifically, domain heterogeneity manifests at the following hierarchical levels:

Macroscopic-Level Heterogeneity:

At the continental scale (e.g., Europe, North America, Asia–Pacific), substantial differences exist in climatic zones, topographic features, and land-use patterns. These macroscopic variations lead to systematic shifts in the statistical distribution of satellite observation data across regions. Flat clustering methods, constrained to a single layer of abstraction, fail to simultaneously account for both global similarity and local variability.

Mesoscopic-Level Heterogeneity:

Within continental regions, individual countries or geographic sub-units exhibit intermediate-scale environmental gradients. For instance, the gradual transition from the boreal coniferous forests of Northern Europe to the sclerophyllous woodlands of the Mediterranean reflects changes in vegetation and climate conditions. Capturing such transitional patterns necessitates an intermediate layer of clustering.

Microscopic-Level Heterogeneity:

At the local scale, fine-grained domain variation is primarily influenced by anthropogenic activities, micro-topography, and specific land cover types. This small-scale heterogeneity requires low-level clustering strategies capable of identifying and adapting to detailed domain shifts.

Let denote the distance between domains and . The objective function of traditional single-layer clustering is given by the following:

In contrast, the hierarchical clustering strategy is defined by a multi-level objective function:

where is the weight assigned to level , represents the level-specific distance metric, and denotes the cluster assignment of domain at level .

Comparative experiments demonstrate that hierarchical clustering exhibits significant advantages in addressing multi-scale domain heterogeneity. In domain adaptation tasks, it outperforms flat clustering by improving adaptation accuracy, accelerating convergence, and enhancing generalization performance. These improvements are primarily attributed to the ability of hierarchical clustering to capture similarity patterns across different levels of abstraction, thereby enabling more precise and progressive transfer pathways. This leads to substantially improved efficiency and effectiveness in cross-domain knowledge transfer.

Based on multi-scale domain representation, this paper constructs a hierarchical graph structure of domains to model containment relationships and similarities between domains. The domain hierarchy graph is defined as , where is the domain node set, specifically expressed as follows:

where represents the domain node set of the -th layer, and represents the k-th domain node of the -th layer.

Using multi-dimensional domain distance (defined as the weighted sum of distances across different dimensions), domain similarity is computed as follows:

Here is the multi-dimensional domain distance, expressed as follows:

where is the distance measure for the k-th dimension, and is the weight coefficient learned through attention mechanism.

3.2.2. Progressive Domain Adaptation

Based on the orbital periodic domain variation theory (satellite spatial domain changes exhibit periodicity with period T), this paper designs an orbital period-aware progressive domain adaptation strategy. This strategy utilizes the periodic characteristics of orbits to predict domain change trends and plan optimal adaptation paths.

Let the source domain be and the target domain be , with the adaptation path defined as the following domain sequence:

where is the lowest common ancestor domain, is the target domain, and m is the path length.

Based on the optimal path, this paper designs a hierarchical progressive adaptation algorithm. The core idea of this algorithm is to start with coarse-grained domains and gradually refine to the target domain, with each step fully utilizing knowledge from the previous layer. The total adaptation loss is defined as the weighted sum of adaptation losses at each layer:

where is the hierarchical weight of the -th layer, satisfying , and is the single-layer adaptation loss function.

The adaptation loss for each layer combines classification loss, domain discrimination loss, and feature alignment loss, specifically expressed as follows:

where is the classification loss, is the domain discrimination loss, is the feature alignment loss, and , are loss balancing parameters.

To better utilize the domain hierarchical structure, this paper designs an adaptive weight learning mechanism. This mechanism dynamically adjusts weights at each layer based on performance feedback during the adaptation process:

where are learnable parameters updated through gradient descent, and is the domain similarity function.

3.2.3. Multi-Source Domain Fusion

In satellite networks, multiple source domains often exist that can provide useful information for the target domain. Unlike traditional methods, this paper’s source domain selection strategy considers orbital configuration similarity and spatiotemporal coverage of data acquisition. Given K source domains , the importance weight of source domain for target domain combines domain distance and orbital similarity, specifically expressed as follows:

where is the orbital configuration similarity, and are the orbital parameters of the source and target domains, respectively, and is the temperature parameter controlling the sharpness of weight distribution.

The orbital configuration similarity function, OrbitSim(), is computed based on a weighted Euclidean distance over the six classical orbital elements. Given two orbital configurations, and , the orbital similarity is defined as follows:

where denotes the normalization factor for the orbital element, and is the corresponding weight coefficient reflecting the relative importance of each element. The six orbital elements considered are as follows: semi-major axis, eccentricity, inclination, right ascension of the ascending node (RAAN), argument of perigee, and mean anomaly. The weights are assigned based on the influence of each element on the satellite’s observational geometry and coverage characteristics.

When multiple source domains provide conflicting information, this paper designs a conflict resolution strategy based on uncertainty quantification and orbital confidence. The conflict degree between source domains is comprehensively evaluated through feature differences and confidence differences:

where and are the feature representations of the i-th and j-th source domains, respectively, and is the confidence difference.

Conflict resolution strategy includes orbital confidence-based selection and uncertainty-based soft fusion. When conflicts are detected, the system selects the source domain with the highest orbital confidence or uses uncertainty as fusion weights for soft fusion. The core idea of soft fusion is to allocate fusion weights based on the uncertainty degree of each source domain, with lower uncertainty source domains receiving higher weights.

3.2.4. Sensor-Aware Domain Adaptation Strategies

In real-world satellite networks, satellites from different generations and manufacturers are often equipped with heterogeneous sensor technologies, exhibiting substantial variations in technical specifications. This heterogeneity introduces unique challenges for domain adaptation in federated learning. This section elaborates on how domain adaptation techniques can be tailored to incorporate sensor-specific information to enable effective knowledge transfer across heterogeneous sensing domains.

Sensor Feature Standardization Mechanism:

To address spectral discrepancies across multispectral sensors, an adaptive feature alignment strategy is proposed as follows:

Here, denotes the spectral characteristics of the sensor, including minimum and maximum wavelengths, spectral resolution, and signal-to-noise ratio (SNR). The transformation function performs cross-sensor data alignment through spectral response function interpolation and noise normalization.

Real-World Application Scenarios:

Scenario 1: Cross-Platform Collaboration between Landsat and Sentinel

Consider federated collaboration between the Landsat-8 OLI sensor (9 bands, 30 m resolution) and the Sentinel-2 MSI sensor (13 bands, 10–60 m resolution). A band-mapping matrix is constructed as follows:

This enables the projection of the nine-band feature space of Landsat-8 into the 13-band feature space of Sentinel-2, ensuring physical consistency in spectral information.

Scenario 2: Fusion of High- and Low-Resolution Sensors

In resource-constrained LEO satellites equipped with low-resolution sensors (e.g., MODIS, 250 m resolution), knowledge sharing with high-resolution sensors (e.g., WorldView, 0.5 m resolution) is facilitated through resolution-aware domain adaptation:

Here, denotes the upsampling operation, and and represent the high- and low-resolution feature extractors, respectively.

Sensor Uncertainty Quantification:

To model measurement uncertainty across different sensors, a Bayesian framework is employed as follows:

where denotes the intrinsic noise characteristics of the sensor. During domain adaptation, this noise is used as a weighting factor to ensure that data from high-quality sensors are assigned proportionally greater influence.

3.3. Meta-Learning Driven Fast Adaptation

In satellite network environments, domain changes often exhibit sudden and real-time characteristics. Traditional domain adaptation methods require large amounts of data and long training times to adapt to new domains, making it difficult to meet satellite systems’ requirements for rapid response. Although domain changes in satellite networks are complex, they possess certain periodic and predictable patterns. Based on this observation, this section proposes a meta-learning [64] driven fast adaptation mechanism that enables the system to quickly adapt when encountering new domains by learning the ability to learn quickly.

3.3.1. Orbit-Period-Aware Meta-Learning

Traditional meta-learning methods typically assume that task distributions are static, but in satellite networks, task distributions themselves change periodically with orbital cycles. This paper proposes an orbit-period-aware meta-learning framework that fully utilizes the periodic characteristics of orbital motion to design meta-learning tasks. Given the domain change sequence experienced by satellites within orbital periods, meta-learning tasks are defined as follows:

where is the support set for fast adaptation training data, is the query set for evaluating adaptation effectiveness test data, and is the orbital phase parameter, with t being time and being the orbital period.

To fully utilize orbital periodicity, an orbital phase-based task sampling strategy is designed as follows:

where Z is the normalization constant ensuring the probability distribution sums to 1, is the target orbital phase, is the phase sampling variance controlling sampling concentration, and is the orbital weight function reflecting the importance of different orbital phases.

The orbital phase-based task sampling is presented in Algorithm 1.

| Algorithm 1. Orbital phase-based task sampling strategy |

| Input: Target orbital phase , orbital period phase sampling variance , orbital weighting function , candidate task set , orbital phase corresponding to each task , number of tasks to sample N |

| Output: Sampled task set |

|

1: Initialize sampled task set 2: Compute normalization constant: 3: for i = 1 to N do: 4: Compute sampling probability for task : 5: end for 6: Construct cumulative probability distribution: 7: for k = 1 to K do: 8: Generate random number 9: Use binary search to find index idx in such that 10: Add task to 11: end for 12: return |

The core principle of this algorithm leverages the periodic characteristics of orbital dynamics by employing Gaussian kernel functions to perform importance-weighted sampling of tasks across different orbital phases, ensuring that the sampled tasks better represent learning scenarios in the vicinity of the target orbital phase. The algorithm first computes similarity weights for each candidate task relative to the target orbital phase, where these weights combine a Gaussian decay term based on phase distance with orbital-specific quality assessment factors. Through constructing a cumulative probability distribution and utilizing inverse transform sampling, the algorithm efficiently samples the required number of tasks according to the computed probability distribution.

The innovation of this algorithm lies in deeply integrating orbital dynamics knowledge into the task sampling process of meta-learning, enabling meta-models to better adapt to the periodic domain shift patterns characteristic of satellite networks. Compared to traditional uniform random sampling or simple similarity-based sampling approaches, this method significantly enhances the rapid adaptation capability of meta-learning under new orbital phases, particularly demonstrating superior generalization performance in few-shot learning scenarios.

The algorithm has a time complexity of , where N is the total number of candidate tasks and K is the number of tasks to be sampled. The probability computation phase requires time, cumulative distribution construction requires time, and K binary search operations for sampling require a total of time.

Based on orbital periodicity observations, this paper proposes an orbital dynamics-constrained meta-optimization algorithm. This algorithm introduces orbital dynamics constraints based on the standard MAML framework, ensuring smoothness of model parameters for adjacent orbital phases. The meta-objective function is defined as follows:

where are the meta-model parameters, are the parameters after fast adaptation on task , is the task-specific loss function, is the orbital constraint regularization term, and is the regularization coefficient balancing task loss and orbital constraints.

The orbital constraint regularization term considers physical constraints of orbital dynamics, specifically the following:

where is the phase difference, is the phase difference weight function, and is the smoothness control parameter.

3.3.2. Few-Shot Domain Adaptation

In satellite networks, labeled data for new domains is often scarce, requiring strong few-shot learning capabilities. Based on the prototypical network framework, this paper designs orbit-enhanced prototypical networks by incorporating orbital information. Traditional prototypical networks consider only feature similarity when computing class prototypes, introducing orbital similarity weights as follows:

where is the prototype vector for class k, is the support sample set for class k, is the similarity weight between sample i and the target orbit, is the feature representation of sample , and and are the orbital parameters of the sample and target, respectively.

Orbital similarity weight is defined as follows:

where is the scale parameter controlling orbital similarity sensitivity, and is the orbital distance calculated based on orbital element differences.

In few-shot scenarios, uncertainty quantification is crucial for reliable domain adaptation. This paper adopts a Bayesian meta-learning framework to quantify the model’s epistemic and aleatoric uncertainties. The variational Bayesian meta-learning objective function is defined as follows:

where is the variational posterior distribution of parameters, is the prior distribution of parameters, is the KL divergence measuring the difference between two distributions, and is the KL weight coefficient balancing task loss and regularization term.

Considering the impact of orbital position on observation quality, an orbit-aware uncertainty calibration mechanism is designed as follows:

where is the calibrated uncertainty, represents the model-predicted uncertainty, and is a comprehensive indicator that reflects the data quality of satellite observations at specific orbital positions. It takes into account various factors through which the orbital configuration—such as altitude, inclination, and eccentricity—affects observation quality, and is used to calibrate the uncertainty in model predictions.

3.3.3. Online Domain Adaptation

In the dynamic environment of satellite networks, domain changes may occur at any time, requiring real-time detection mechanisms to trigger adaptation processes. This paper designs a multi-level domain change detection algorithm that detects domain drift at different abstraction levels.

Data-level detection based on statistical distance is as follows:

In terms of the data-level detection threshold, the MMD statistic threshold is , with a significance level of (corresponding to a 99% confidence level). The sample window size is samples, and the detection period is once per training round.

The determination of the MMD threshold is based on the following Bootstrap resampling method:

where B = 1000 represents the number of Bootstrap resamplings.

Feature-level detection based on feature distribution changes is as follows:

Regarding the feature-level detection threshold, the Mahalanobis distance threshold is set as , with a degree of freedom d = 512 (the dimension of the final hidden layer in ResNet-18). The covariance estimation method is based on the Shrinkage Estimator, and the historical window length is set to rounds of training data.

The update of the feature covariance matrix employs exponential moving average is as follows:

The attenuation factor is set to 0.1.

Prediction-level detection based on prediction performance degradation is as follows:

where and represent data distributions at times and , respectively, and are data samples at corresponding times, and are the mean and covariance matrix of features, respectively, is the prediction accuracy at time , MMD is the maximum mean discrepancy, and is the matrix trace.

Regarding the prediction-level detection threshold, the accuracy drop threshold is set at (representing a 5% performance decrease), with an evaluation window of rounds of training. The statistical test employed is the Wilcoxon signed-rank test, and the p-value threshold is set at .

The trend detection of predictive performance employs the Mann–Kendall trend test:

The comprehensive domain change indicator is obtained by weighted fusion of multi-level detection results:

where , , and represent the weight coefficients of each level detection. For the setting of the weighting coefficients , , and , we adopt a task-aware empirical tuning strategy, augmented with domain-specific statistical heuristic weighting. When , the domain adaptation process is triggered, where is the domain drift threshold. is determined using an adaptive statistical thresholding method based on the moving average and standard deviation of recent drift indicators. This strategy enables dynamic adjustment to drift characteristics across different tasks and orbital phases.

The duration parameter settings are as follows:

Minimum trigger duration: Adaptation is triggered only after drift is detected in three consecutive rounds.

Adaptation cooling-off period: After completing one domain adaptation, no new adaptations will be triggered within 10 rounds.

Historical statistics update cycle: Re-evaluate detection parameters every 20 rounds.

After detecting domain changes, the model needs to be quickly updated to adapt to the new domain. This paper designs an incremental meta-model update algorithm that can quickly adapt to new domains without forgetting historical knowledge. To prevent catastrophic forgetting, an experience replay buffer is maintained, and updates are performed through the following incremental meta-optimization objective:

where is the current task loss, is the experience replay buffer, represents the importance weight of -th historical task, represents the loss function of the -th task in the buffer, represents the replay weight coefficient, represents the regularization weight coefficient, and represents the model parameters from the previous time step (round t − 1). For weight optimization, we adopt a meta-learning-driven joint learnable weighting strategy, which dynamically and jointly adjusts the three weights—, , and —to enable the model to effectively adapt to new tasks while retaining previously acquired knowledge. This approach facilitates efficient and stable cross-domain federated learning.

3.4. Privacy-Preserving Federated Learning

Based on the aforementioned multi-level domain adaptation mechanism and meta-learning driven fast adaptation technologies, this section proposes a Cross-Domain Adaptive Hierarchical Federated Learning Architecture (Hierarchical Satellite Federated Learning, HiSatFL). This architecture combines physical network topology with logical learning structure, achieving three-layer collaborative learning of LEO local model training, MEO local aggregation, and GEO global aggregation. Meanwhile, this section designs privacy-aware domain adaptation mechanisms that achieve efficient cross-domain knowledge transfer while protecting sensitive information.

3.4.1. Hierarchical Aggregation Mechanism

The HiSatFL architecture deploys federated learning computational tasks in layers according to satellite orbital altitude, forming a three-layer structure of “sensing-aggregation-coordination”. Given a system containing LEO satellites, MEO satellites, and GEO satellites, the hierarchical aggregation process is defined as follows:

LEO Local Training: The local objective function of the i-th LEO satellite in the t-th training round is as follows:

where represents the local model parameters of the i-th LEO satellite in the t-th round, including the weights and biases of each neural network layer, the parameters of local domain adaptation modules, and local regularization hyperparameters, is the local dataset of the i-th LEO satellite, denotes the size of the local dataset on the i-th LEO satellite, represents the loss function, represents the neural network forward propagation function, is the orbit-aware regularization term, and is the orbital phase of the i-th LEO satellite in the t-th round.

The local update rule is as follows:

where is the LEO layer learning rate and is the gradient operator with respect to parameter , and is the loss function value under current parameters.

MEO Regional Aggregation: The j-th MEO satellite is responsible for aggregating LEO satellite models within its coverage area. Given that the coverage set of MEO satellite j is , the regional aggregation weight is as follows:

where represents the data volume of LEO satellite i, represents the link quality between LEO satellite i and MEO satellite j, and represents domain similarity calculated based on the multi-dimensional domain distance in Equation (7).

The MEO regional aggregation model is as follows:

where are the regional aggregation model parameters of the j-th MEO satellite.

GEO Global Aggregation: The GEO layer performs final global aggregation, considering the importance of each MEO region:

where is the weight of the j-th MEO region in global aggregation, represents the importance weight of region j based on coverage area and data quality, and is the regional model quality assessment value.

The global model update is expressed as follows:

Due to the high dynamicity of satellite networks, traditional random client selection strategies are no longer applicable. This paper designs an orbit-dynamics-aware client selection mechanism that intelligently selects based on orbital prediction, link quality, and domain adaptation requirements.

For the t-th round of federated learning, the availability assessment of LEO satellite i is as follows:

where is the geometric visibility probability of satellite i at time t, represents communication link availability probability, and is the computational resource availability probability.

Geometric visibility probability is calculated based on orbital dynamics:

where is the indicator function, represents the elevation angle of satellite i relative to the ground station at time t, is the minimum visible elevation threshold, represents eclipse duration, and is the orbital period.

Combining the multi-level domain adaptation mechanism in Section 3.2, client selection also needs to consider domain diversity and adaptation requirements:

where is the domain value of LEO satellite i, representing the informational contribution and representativeness of its local data domain within the current federated learning task. It serves as a critical indicator for evaluating the extent to which the satellite’s local data can enhance the generalization capability of the global model. A higher domain value implies that the satellite’s data exhibits greater domain discrepancy, richer information content, or stronger collaborative potential in the current training round, and is therefore more desirable for participation in model aggregation. is the domain adaptation requirement, reflecting the current global model’s adaptation degree to this domain.

Domain value calculation is expressed as follows:

where represents domain similarity measure, and represents the weight of other clients in current aggregation.

3.4.2. Privacy-Aware Domain Adaptation

Traditional domain adaptation methods achieve cross-domain knowledge transfer through feature alignment, but directly sharing feature representations may leak sensitive information. This paper designs a privacy-constrained feature alignment mechanism that achieves effective feature distribution alignment while protecting privacy. Given source domain features and target domain features , the traditional MMD alignment loss is as follows:

where and are the number of samples in source and target domains, respectively, is the feature representation of the i-th sample in the source domain, is the feature representation of the j-th sample in the target domain, represents kernel function mapping to reproducing kernel Hilbert space , and is the norm in space .

To protect privacy, this paper adds calibrated noise in feature computation as follows:

where and are noise-added feature representations, represents multi-dimensional Gaussian noise, is the sensitivity of feature representation, is the privacy budget parameter, is the failure probability parameter, and is the identity matrix.

The privacy-preserving feature alignment loss is as follows:

According to the domain hierarchy structure in Section 3.2.1, the privacy budget consumption for the -th layer in the t-th round is defined as follows:

where L represents the number of system layers (LEO = 1, MEO = 2, GEO = 3), denotes the number of satellites in the -th layer, represents the contribution importance of the -th layer to the overall federated learning task in the t-th training round, which depends on coverage contribution, communication and computational quality, and represents the complexity and diversity of data domains that the -th layer needs to process in the t-th round, which depends on geographical diversity, temporal variability, and technological heterogeneity.

Objective: To prove whether this dynamic allocation mechanism itself satisfies differential privacy and how it integrates with the privacy guarantees of the overall system.

Assumptions:

Assumption 1 (Parameter Non-sensitivity).

The computation of dynamic parameters and relies only on the following non-sensitive information: orbital physical parameters, network topology information, and task configuration parameters.

Assumption 2 (Budget Conservation).

For any round t

Assumption 3 (Parameter Boundedness).

There exist constants such that

Theorem 1 (Privacy Guarantees of Dynamic Budget Allocation).

Let the HiSatFL system adopt the dynamic budget allocation mechanism from Formula (51). Under Assumptions 1–3, this mechanism satisfies the following properties:

Allocation Privacy: satisfies-differential privacy.

System Privacy: The overall system satisfies-differential privacy.

Budget Optimality: The allocation strategy maximizes expected utility under given constraints.

Lemma 1 (Privacy of Allocation Function).

Under Assumption 1, the budget allocation function satisfies -differential privacy.

Proof

Let , be neighboring datasets such that . We need to prove the following:

According to Assumption 1, the computation process of the allocation function is as follows:

Step 1: Weight computation

where depends only on non-sensitive parameters and is independent of dataset .

Step 2: Complexity computation

where similarly depends only on non-sensitive parameters.

Step 3: Budget allocation

Since both and are independent of dataset content,

This proves that satisfies -differential privacy. □

Lemma 2 (Single-layer Privacy Mechanism).

The privacy mechanism of the -th layer satisfies -differential privacy under allocated budget .

Proof:

The -th layer adopts Gaussian mechanism for gradient protection:

where the noise standard deviation is as follows:

The gradient sensitivity is as follows:

According to the privacy guarantee of Gaussian mechanism, for neighboring datasets , , and any output set ,

Therefore, satisfies -differential privacy. □

Lemma 3 (Hierarchical Composition Theorem).

Privacy guarantees of HiSatFL’s hierarchical architecture under parallel-sequential hybrid composition.

Proof

Step 1: Intra-layer parallel composition

Within the -th layer, multiple satellites execute local computations in parallel, processing disjoint data subsets. According to the parallel composition theorem:

Step 2: Inter-layer sequential composition

Different layers proceed in LEO → MEO → GEO order, where each layer’s input contains the output from the previous layer. According to the sequential composition theorem,

Step 3: Budget conservation verification

By Assumption 2,

Therefore, the overall system satisfies -differential privacy. □

Complete Proof of Theorem 1.

Part 1 (Allocation Privacy):

By Lemma 1, the budget allocation mechanism satisfies -differential privacy.

Part 2 (System Privacy):

Combining Lemmas 2 and 3, the overall system satisfies the following: -Differential Privacy, where .

Part 3 (Budget Optimality):

We need to prove that the allocation strategy maximizes system utility under given constraints.

Let the system utility function be

Under constraint , using Lagrange multiplier method:

For linear utility function , the optimality condition is as follows:

Solving: for all .

Combined with the constraint condition, we obtain the optimal allocation:

This is exactly the form of Formula (51), proving the optimality of the allocation strategy.

Domain adversarial training achieves domain-invariant feature learning through adversarial loss, but gradient information may leak domain-specific knowledge. This paper integrates differential privacy mechanisms in domain adversarial training, designing privacy-preserving domain adversarial training algorithms.

Traditional domain adversarial loss is as follows:

where represents the feature extractor, represents the domain discriminator, and and are the source and target domain data distributions, respectively. denotes the mathematical expectation. Specifically, represents the expectation taken over data sampled from the source domain distribution , and computes the average value of .

In privacy-preserving settings, noise is added to the domain discriminator gradients:

where represents the privacy-preserving domain discriminator gradient, is the gradient clipping function with threshold , is the privacy budget for domain adversarial training, and are the domain discriminator parameters.

To balance privacy protection and domain adaptation effectiveness, a privacy-aware adversarial weight adjustment mechanism is designed:

where is the adversarial weight at round t, is the initial adversarial weight, is the privacy decay coefficient, represents the consumed privacy budget, represents the current inter-domain difference calculated based on MMD distance.

In the hierarchical architecture, upper-layer satellites (such as MEO, GEO) can serve as teacher networks to transfer knowledge to lower-layer satellites (such as LEO), but traditional knowledge distillation may leak sensitive information from teacher models. This paper designs privacy-preserving knowledge distillation mechanisms. In the HiSatFL architecture, knowledge distillation proceeds hierarchically:

GEO → MEO Distillation: GEO global model transfers knowledge to MEO regional models;

MEO → LEO Distillation: MEO regional model transfers knowledge to LEO local models.

Traditional knowledge distillation loss is as follows:

where and are the output probability distributions of student and teacher networks, respectively, and represents KL divergence.

To protect teacher model privacy, noise is added to teacher network outputs:

where represents the noise-added teacher network output, is the logits output of the teacher network, represents the distillation temperature parameter, and represents the knowledge distillation noise variance, and is the identity matrix of size , where is the number of categories.

Noise variance is calculated based on privacy budget:

where represents the sensitivity of logits output, and represents the privacy budget for knowledge distillation, and represents the failure probability parameter. Equation (75) corresponds to the standard formulation of noise variance in the Gaussian mechanism under differential privacy. The privacy guarantee theorem associated with the Gaussian mechanism can be derived straightforwardly based on this formulation. The constant factor 1.25 arises from technical proofs within the theoretical framework of differential privacy.

To reduce privacy leakage risks, this paper designs a selective knowledge transfer mechanism that only transmits valuable knowledge to the student network. The knowledge value evaluation function is defined as follows:

where the student network’s uncertainty for sample is , the teacher network’s confidence for sample is , and the relevance of sample to the target domain is represented as . Here is the information entropy function, is the student network’s prediction probability distribution, is the total number of categories in the classification task, represents the maximum possible entropy, represents the teacher network’s prediction probability for class , represents the domain to which sample belongs, and is the temperature parameter.

The knowledge transfer decision is represented as follows:

where is the knowledge transfer threshold, dynamically adjusted based on privacy budget and computational resources. □

3.4.3. Orbit-Period-Aware Federated Optimization

Due to the high dynamicity of satellite networks, traditional federated learning convergence analysis no longer applies. This paper establishes convergence theory for time-varying networks based on orbital periodic characteristics.

Definition 2 (Satellite Network Time-Varying Graph).

The topology of a satellite network at time t can be represented as a time-varying graph , where is the node set, representing in-orbit satellites, is the edge set, representing communication links, and is the weight matrix, representing link quality.

Orbital Periodicity Assumption: Assume that satellite network topology changes exhibit periodicity, i.e.,

where is the orbital period.

Theorem 2 (Hierarchical Federated Learning Convergence).

Under the orbital periodicity assumption and the following conditions:

- Loss function satisfies -strong convexity and -smoothness;

- Local gradients are bounded: ;

- Data heterogeneity is bounded: .

The convergence rate of the HiSatFL algorithm satisfies the following condition:

where is the local loss function of the -th satellite, is the global loss function, is the global model parameters at round , is the optimal parameters, represents the total number of satellites participating in training, is the noise standard deviation corresponding to the privacy budget, is the strong convexity parameter, is the smoothness parameter, and is the data heterogeneity measure, where are the global model parameters at round T, are the optimal parameters, represents the total number of satellites participating in training, and is the noise standard deviation corresponding to the privacy budget.

The above convergence rate formula indicates that the hierarchical architecture maintains convergence while privacy protection introduces additional convergence error.

Traditional federated learning uses fixed aggregation weights, but this strategy cannot adapt to network and data changes in dynamic satellite environments. This paper designs adaptive aggregation weight optimization mechanisms.

The weight of LEO satellite i in MEO aggregation comprehensively considers multiple factors:

where each component is defined as follows:

where represents the data volume of LEO satellite i, represents the set of LEO satellites in current aggregation, represents the remaining privacy budget of satellite i at round t, is the quality sensitivity adjustment parameter controlling the influence of model quality on aggregation weights, is the domain diversity contribution degree where larger values indicate more unique domains for that satellite, and is the domain diversity reward parameter controlling the positive incentive degree of domain diversity on aggregation weights.

Weight normalization is expressed as follows:

where represents the normalized aggregation weight.

In satellite communication environments, packet loss is primarily caused by the following factors: signal attenuation, atmospheric interference, satellite attitude variations, and ground station obstructions. The packet loss rate can be modeled as a distance-dependent function:

where represents the distance between the satellite and the ground station or another satellite, is the atmospheric attenuation coefficient, and denotes the signal-to-noise ratio threshold.

Considering the retransmission mechanism of the TCP/IP protocol, the effective transmission latency for model aggregation is as follows:

where is the ideal aggregation latency, and n represents the average number of retransmissions.

For the LEO-MEO-GEO three-tier architecture, the total aggregation latency is modeled as follows:

where is the propagation delay at the -th layer, with being the speed of light. denotes the processing delay at the -th layer. represents the retransmission delay.

Impact of Doppler Shift on Communication Quality:

The high-speed motion of LEO satellites (approximately 7.8 km/s) induces significant Doppler shifts, which degrade communication link quality. The Doppler shift magnitude is given by the following:

where is the carrier frequency, is the relative velocity, and is the line-of-sight angle.

The increased bit error rate (BER) due to Doppler shift can be modeled as follows:

Here, is the ideal BER, is the Doppler sensitivity coefficient, and is the channel bandwidth.

Communication Window Analysis Under Orbital Dynamics Constraints:

The duration of satellite communication windows directly affects the completion of aggregation processes. For LEO satellites, the visibility window duration is as follows:

where is the orbital angular velocity, is the Earth’s radius, is the satellite altitude, and is the minimum elevation angle.

Accounting for communication window constraints, the effective aggregation completion probability is as follows:

where is the cumulative distribution function of the total latency.

Based on real-time communication quality assessment, HiSatFL employs an adaptive aggregation strategy:

Communication Quality Metric:

where is the remaining communication window time, and .

Dynamic Aggregation Weight Adjustment:

Here, is the transmission latency of the i-th satellite, and is the latency tolerance threshold.

For a typical LEO constellation (altitude: 550 km, inclination: 53°):

Packet Loss Impact:

- -

- Clear-sky conditions: , latency increase factor ~1.0001.

- -

- Adverse weather: , latency increase factor ~1.01.

- -

- Obstructed scenarios: , latency increase factor ~1.11.

Doppler Shift Impact:

- -

- Ku-band (14 GHz): Maximum shift ~±364 kHz.

- -

- For 100 MHz bandwidth: Relative shift ~0.36%.

- -

- BER increase ~5–15%, leading to higher retransmission probability.

Communication Window Constraints:

- -

- Single-pass duration: ~5–10 min.

- -

- High-elevation effective time: ~2–4 min.

- -

- Aggregation completion probability: >95% of transmissions must finish within the window.

These quantitative analyses demonstrate that HiSatFL’s hierarchical architecture and adaptive aggregation mechanism effectively address the inherent challenges of satellite communication environments, ensuring aggregation efficiency and reliability under dynamic conditions.

The Hierarchical Satellite Federated Learning main algorithm is shown in Algorithm 2.

| Algorithm 2. Hierarchical Satellite Federated Learning Main Algorithm (HiSatFL) |

| Input: Satellite network , Multi-domain dataset , Orbital parameters , Privacy budget , failure probability , System parameters (learning rate), (local epochs), (global rounds) |

| Output: Global model |

|

1: // ========== System Initialization Phase ========== 2: Initialize global model 3: Initialize meta-learning parameters 4: Construct orbital predictor 5: Initialize privacy accountant 6: for to T do: 7: // ========== Orbital-Aware Scheduling Phase ========== 8: 9: 10: 11: // ========== Dynamic Privacy Budget Allocation ========== 12: for each do: 13: 14: 15: end for 16: 17: // ========== LEO Layer Local Training and Domain Adaptation ========== 18: for each in parallel do: 19: 20: 21: // Domain drift detection 22: 23: 24: if then: 25: // Orbital-aware meta-learning adaptation 26: 27: 28: else: 29: 30: end if 31: 32: // Local training with privacy protection 33: for to do: 34: 35: 36: 37: 38: end for 39: 40: // Update privacy accountant 41: 42: end for 43: // == MEO Layer Regional Aggregation and Cross-Domain Fusion == 44: for each do: 45: 46: 47: // Multi-source domain fusion 48: 49: 50: 51: // Regional aggregation with privacy protection 52: 53: 54: // Progressive domain adaptation 55: 56: end for 57: // ========== GEO Layer Global Coordination and Knowledge Distillation ========== 58: 59: 60: // Privacy-preserving knowledge distillation 61: 62: 63: // ========== Meta-Learning Parameter Update ========== 64: 65: 66: // ========== Convergence Check and Privacy Verification ========== 67: if then: 68: break 69: end if 70: 71: 72: end for 73: output |

Algorithm 2 represents the core execution framework of the Hierarchical Satellite Federated Learning system proposed in this research. The algorithm integrates key technical components including orbital-aware scheduling, dynamic privacy budget allocation, multi-level domain adaptation, and meta-learning-based rapid adaptation, achieving efficient cross-domain federated learning in highly dynamic satellite network environments.

The algorithm adopts a hierarchical execution architecture that decomposes the complex satellite federated learning task into three levels: LEO layer local training and domain adaptation, MEO layer regional aggregation and cross-domain fusion, and GEO layer global coordination and knowledge distillation. Through orbital-aware client selection and topology prediction, the algorithm can efficiently complete model training and aggregation within satellite visibility windows. The dynamic privacy budget allocation mechanism adaptively adjusts privacy protection intensity according to the domain complexity and importance weights of each layer, maximizing model performance while ensuring differential privacy guarantees. Multi-source domain fusion and conflict resolution strategies handle knowledge transfer conflicts between different domains, while the orbital-aware meta-learning mechanism enables rapid adaptation capabilities for new domains.

The overall time complexity of Algorithm 2 is , where the Orbital-aware scheduling phase time complexity is as follows: , which includes the following:

Topology prediction time complexity: ;

Visibility computation time complexity: ;

Client selection time complexity: ;

Dynamic privacy budget allocation time complexity: ;

LEO layer local training time complexity: ;

MEO layer regional aggregation time complexity: ;

GEO layer global coordination time complexity: ;

Meta-learning update time complexity: .

The complexity analysis demonstrates that the algorithm achieves polynomial-time execution while handling the inherent complexity of multi-layer satellite networks, making it computationally feasible for real-world deployment in resource-constrained satellite environments.

4. Experimental Results and Evaluation

4.1. Experimental Setup

4.1.1. Experimental Environment

The hardware environment for this experiment consists of an Intel Core i7-7820HQ processor, 32 GB memory, NVIDIA Quadro P5000 graphics card, and 2 TB hard disk. The software environment utilizes PyCharm 17.0.10 as the development platform, Python 3.9 as the programming language, PyTorch 2.3 as the deep learning framework, and CUDA 11.8 configuration to fully leverage GPU performance.

The Low Earth Orbit (LEO) layer is simulated with a constellation of 24 satellites deployed at an altitude of 550 km, with an orbital inclination of 53 degrees and an approximate orbital period of 96 min. These satellites are evenly distributed across six orbital planes, each containing four satellites, thereby achieving full global coverage. Each LEO satellite is responsible for data sensing and onboard local training, and is equipped with limited computational resources, reflecting the constraints commonly encountered in edge computing scenarios.

The Medium Earth Orbit (MEO) layer comprises six satellites positioned at an altitude of 10,000 km with an orbital inclination of 55 degrees and an orbital period of approximately six hours. These MEO satellites are arranged across three orbital planes, with two satellites per plane, and function primarily as regional relays and aggregators.

At the Geostationary Earth Orbit (GEO) layer, three satellites are stationed at an altitude of 35,786 km directly above the equator, located at longitudes 0°, 120°, and 240°, respectively, to provide seamless global coverage. GEO satellites are equipped with the highest computational capacity and the most stable network connectivity, and are tasked with global model aggregation, cross-domain coordination, and long-term strategic decision-making.

Physical dynamics modeling:

To ensure the authenticity of the experiment, this study employs a high-precision orbital dynamics model to simulate satellite motion. The orbital velocity of a LEO satellite is approximately 7.8 km per second, and it orbits the Earth once within a 96 min orbital period. The orbital dynamics equations take into account the following primary physical factors:

- (1)

- Earth’s gravitational field: Utilizing the WGS84 Earth gravity model, incorporating the effects of J2–J6 order harmonic terms, it is specifically represented as follows:

- (2)

- Atmospheric drag: LEO satellites are affected by residual atmospheric drag at an altitude of 550 km, which is specifically expressed as follows:

Among them, atmospheric density is kg/m3.

- (3)

- Solar radiation pressure: It affects the long-term evolution of satellite orbits, and is specifically expressed as follows:

- (4)

- Third-body gravity of the Moon and the Sun: These physical effects have a significant impact on MEO and GEO satellites. They directly affect the visibility window, communication link quality, and data acquisition geometry of the satellites, thereby influencing the data distribution and communication scheduling of federated learning.

The experimental configurations of this work are listed in Table 1, and the data configurations are detailed in Table 2.

Table 1.

Experimental settings.

Table 2.

Data configuration.

4.1.2. Datasets

This paper employs four representative remote sensing image datasets to construct multi-dimensional cross-domain scenarios for validating algorithm effectiveness, with specific datasets as follows:

EuroSAT [61]: 27,000 multispectral Sentinel-2 images (64 × 64 px, 13 bands) across 10 land-use classes. Enables cross-domain learning validation for multispectral knowledge transfer.

UC-Merced [65]: 2100 high-resolution RGB images (256 × 256 px, 30 cm spatial resolution). Ideal for few-shot/transfer learning validation in data-scarce federated scenarios.

RSI-CB [66]: 36,000-image benchmark covering 45 global scene categories. Enables evaluation of complex multi-class federated learning with geographical diversity.

Overhead MNIST [67]: 70,000 aerial-perspective grayscale images (28 × 28 px). Provides rapid validation for cross-domain federated learning prototypes.

The cross-domain design in the spatial domain is achieved through geographical region division, subdividing Europe into three sub-regions: Northern Europe, Southern Europe, and Central Europe. North America is treated as an independent domain, while the Asia–Pacific region is constructed based on RSI-CB data, and the synthetic domain uses Overhead MNIST data. The cross-domain in the temporal domain is achieved by simulating seasonal and diurnal changes. Data from spring is enhanced with increased brightness and green channel intensity, data from summer is enhanced with contrast and saturation, data from autumn is filtered with a warm tone, data from winter is reduced in brightness and added with a cool tone, and data from nighttime is significantly reduced in brightness and increased with noise to simulate low-light conditions. The cross-domain in the technical domain simulates different sensor characteristics. The high-resolution optical domain uses original images, the medium-resolution optical domain is downsampled to 128 × 128 pixels, and the low-resolution optical domain is downsampled to 64 × 64 pixels. The synthetic aperture radar domain is simulated by adding speckle noise and grayscale processing, and the multispectral domain simulates multiband data through random channel combinations.

4.1.3. Evaluation Metrics

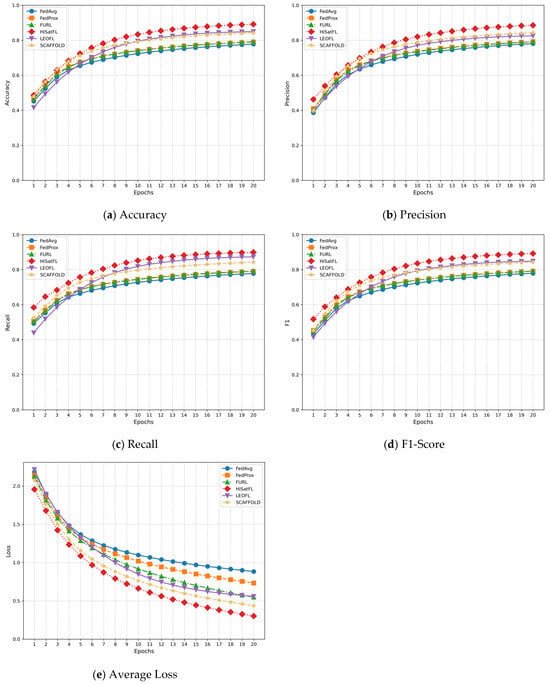

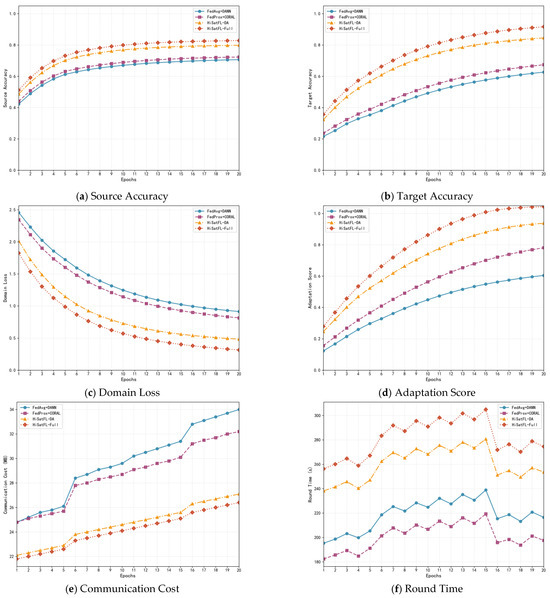

This paper evaluates the proposed method based on accuracy, precision, F1-score, average loss, source accuracy, target accuracy, and other metrics.

The accuracy metric is defined as follows:

where TP (True Positive) represents the number of samples correctly predicted as positive class, TN (True Negative) represents the number of samples correctly predicted as negative class, FP (False Positive) represents the number of samples incorrectly predicted as positive class, and FN (False Negative) represents the number of samples incorrectly predicted as negative class.

The precision metric is defined as follows:

which represents the proportion of actual positive samples among all samples predicted as positive class, reflecting the model’s ability to reduce false positives.

The recall metric is defined as follows:

which represents the proportion of correctly predicted positive samples among all actual positive samples, reflecting the model’s ability to reduce false negatives.

The F1-score is defined as follows:

Its meaning is the harmonic mean of precision and recall, comprehensively reflecting the model’s classification performance.

The average loss is defined as follows:

where N represents the total number of satellites participating in training, represents the local loss function of the i-th satellite, and represents the global model parameters.

Source accuracy is defined as follows:

where represents the source domain dataset, is the model’s prediction for input x, and represents the indicator function that returns 1 when the condition is true and 0 otherwise.

Target accuracy is defined as follows:

where represents the target domain dataset.

Domain loss is defined as follows:

where MMD (Maximum Mean Discrepancy) is defined as follows: , , and represent the feature distributions of source and target domains, respectively, is the feature mapping function, is the reproducing kernel Hilbert space, and and are the number of samples in the source and target domains, respectively.

Adaptation score is defined as follows:

where , , and are weight coefficients satisfying .

Communication cost is defined as follows:

where denotes the set of LEO satellites participating in training during the r-th communication round; represents the byte size of the model parameters; refers to the local model parameters of the i-th LEO satellite; and denotes the aggregated model generated by the j-th MEO satellite.

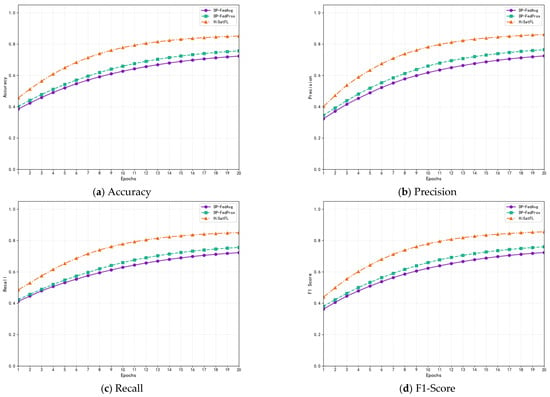

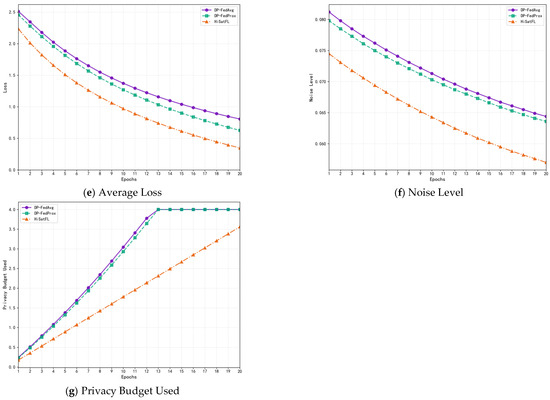

4.2. Experimental Results and Analysis