1. Introduction

Massive Multiple-Input Multiple-Output (MIMO) technology is one of the key enablers of 6G [1]. By deploying hundreds of antennas at the base station (BS), it can serve multiple users in parallel on the same time-frequency resource [2]. With its excellent energy efficiency and spectrum efficiency, massive MIMO is regarded as a core driving force for the development of next-generation communication systems. Meanwhile, owing to its ultra-high spatial degrees of freedom, massive MIMO is also a supporting technology for Integrated Sensing and Communication (ISAC) [3], which enables joint communication and wireless sensing and has received extensive research attention [4].

In the downlink, efficient precoding or beamforming techniques underpin the performance of massive MIMO. Related studies include traditional precoding methods [5,6,7,8,9,10] and neural network-based precoding methods [11,12,13,14,15,16,17,18,19].

Matched Filter (MF) precoding is the simplest precoding scheme, where the precoding matrix is obtained directly as the conjugate transpose of the channel matrix, but its performance is limited [5]. Zero Forcing (ZF) and Minimum Mean Square Error (MMSE) precoding further take the inter-user interference into account, yet their capability still falls short of modern requirements due to inherent limitations [6,7]. More advanced precoding methods have subsequently been devised. For example, a Gradient Descent (GD) based precoding scheme is proposed in [10], where GD is implicitly used to solve the Lagrangian formulation of the precoding problem under power constraints. The authors of [8,9] employed Semidefinite Relaxation (SDR) in precoding design, which reformulates the nonconvex precoding design problems into tractable semidefinite programs (SDPs). However, the high computational complexity restricts the practical use of these approaches.

To further enhance the performance of the MIMO system, neural network-based precoding methods have been proposed. Liquid Neural Networks (LNNs) are used in [11] to generate precoding matrices; they utilize gradient-based optimization to extract high-order channel information and incorporate a residual connection to ease training. Fully connected neural networks (FCNNs) are employed in [12,13], which use fully connected layers to map estimated channels to precoding vectors and incorporate a scalar quantization layer to handle discrete phase constraints. The authors of [14,15,16] adopted deep reinforcement learning (DRL) by formulating the NP-hard precoding problem as a Markov decision process (MDP). DRL’s model-free nature proves particularly effective in multi-hop and multi-user scenarios, where conventional optimization struggles with non-convexity and high dimensionality. Meta-learning (ML) is leveraged in [17,18,19] to enable the neural networks to learn optimization strategies across diverse scenarios, thereby improving the efficiency of solving the non-convex precoding problem. By feeding the gradients of precoding matrices into lightweight neural networks and employing nested optimization loops, these frameworks dynamically adjust parameters to navigate complex environments, ensuring consistent performance under varying channel conditions. All of the above works report strong precoding performance. However, the use of large-scale networks leads to a steep increase in computational complexity when the number of antennas is large.

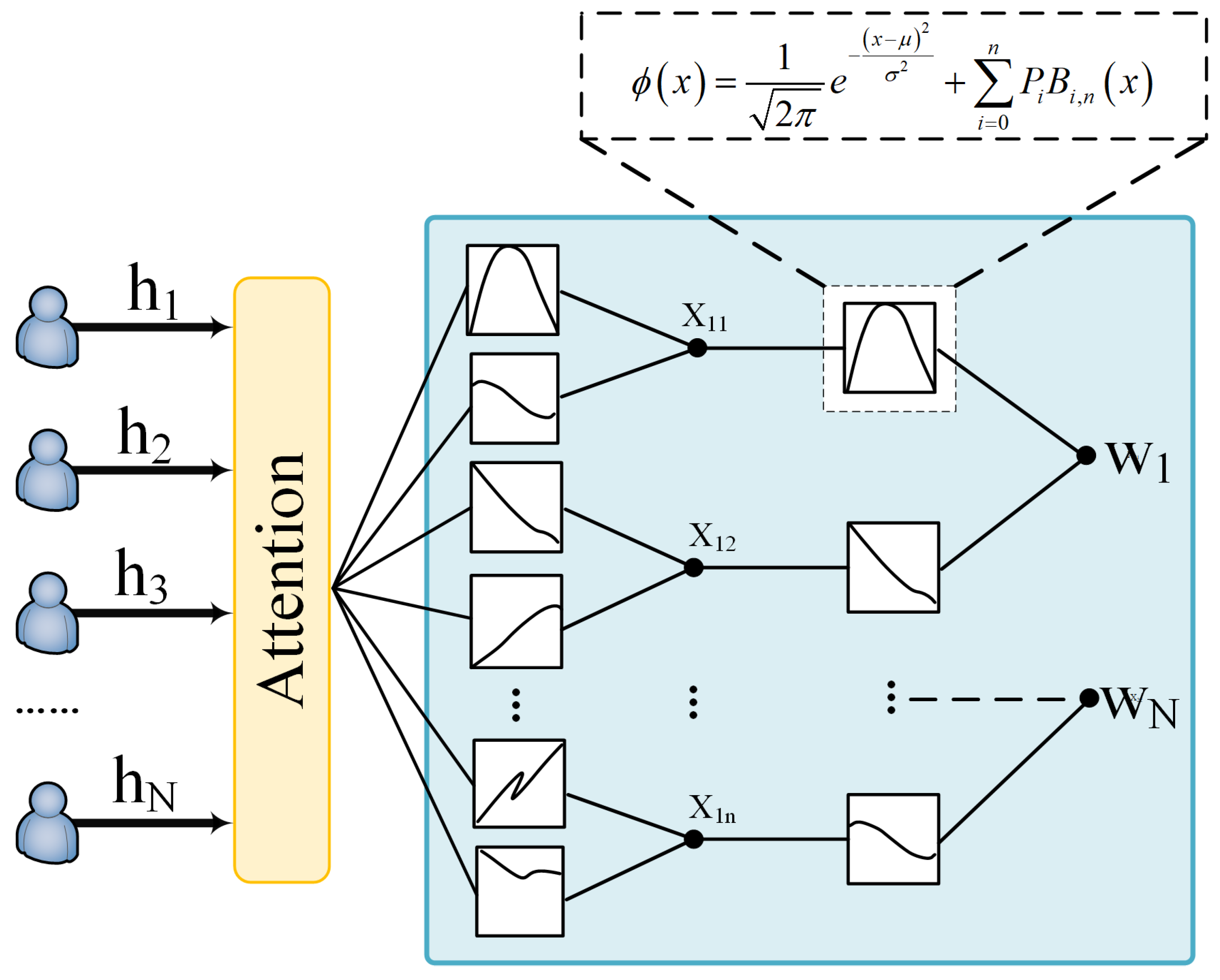

In the case of ISAC, where the communication users require high data rate services and the sensing users require accurate target detection and environment sensing capabilities, a multidimensional challenge is posed to the downlink precoding design. To address this issue, we propose a novel KAN and Attention-based ISAC Precoding (KAIP) scheme for massive MIMO ISAC systems. Based on the attention mechanism and the Kolmogorov–Arnold Network (KAN) [20], we design a neural network to generate the precoding matrix for both the communication and the sensing users, taking into account the received power (RP) of the sensing users as well as the sum rate (SR) obtained by the communication users. The detailed contributions of this work are summarized as follows:

The precoding problem is formulated for massive MIMO ISAC systems, which takes both the communication users and the sensing users into consideration.

We propose the novel KAIP scheme that employs KAN and the self-attention mechanism for precoding design in massive MIMO ISAC systems. The attention mechanism in KAIP deeply extracts interference features from the channel matrix to reduce multi-user interference, while the KAN network is responsible for directly generating the precoding matrix with low complexity.

To evaluate the performance of KAIP, extensive simulations are carried out. The proposed KAIP is compared with existing approaches, namely ZF, MMSE, an FCNN-based scheme, and a CNN-based scheme. Numerical results show that the proposed KAIP scheme achieves significant performance gains at rather low complexity.

The rest of the paper is organized as follows. In Section 2, the system model and the problem formulation are provided. The proposed KAIP scheme is introduced in Section 3. The numerical results are presented in Section 4, and conclusions are drawn in Section 5.

2. System Model and Problem Formulation

Consider the downlink of a massive MIMO ISAC system. The BS is equipped with

M antennas. There are

N single-antenna users in the system, including

communication users and

sensing users. The distance between different users and the BS varies, and there are obstacles between them. An illustration of the considered scenario is given in

Figure 1.

The signal received by the

n-th user is denoted as

. Let

be the received signal vector at all the

N users, which is expressed as (

1).

where

is the channel matrix from the BS to the N users;

is the precoding matrix;

with

denotes the symbols for the

n-th user,

is the noise vector, and

.

Using (

1), we can obtain the received signal vector of the communicating users

and that of the sensing users

, respectively, as (

2).

where

and

are the channel matrix for

communicating users and

sensing users, respectively;

and

are the precoding matrix for the communicating users and the sensing users, respectively;

and

with

and

denote the symbols for the

i-th communicating user and the

j-th sensing user, respectively;

,

and

,

denote the noise matrix of the communicating users and the sensing users, respectively.

The received signal at the

i-th communication user, i.e.,

is given by (

3).

where

and

are the

i-th and the

j-th column of

and

, respectively;

is the noise of the

i-th communication user;

represents the interference from other communication users and

represents the interference from all the sensing users to the communication user’s received signal, respectively.

is the

i-th row of

, denoting the channel for the

i-th communication user, which is modeled as (

4).

where

denotes the small-scale fading coefficients and

denotes the pathloss of the

i-th communication user, which is at a distance of

from the BS. The pathloss is modeled as

, where

is the path loss exponent, which usually takes values ranging from 2.0 to 6.0 [

21];

c is the pathloss at a reference distance and can be treated as a constant.
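The channel model described above can be illustrated with a minimal sketch. It assumes the common power-law pathloss form c·d^(−α) and a channel row formed as the square root of the pathloss times i.i.d. CN(0, 1) small-scale fading; both forms, and the function names `pathloss` and `rayleigh_channel_row`, are assumptions made for illustration only.

```python
import numpy as np

def pathloss(distance_m, alpha=3.0, c=1.0):
    """Large-scale pathloss, assumed here to follow c * d^(-alpha)."""
    return c * np.asarray(distance_m, dtype=float) ** (-alpha)

def rayleigh_channel_row(num_antennas, distance_m, alpha=3.0, c=1.0, rng=None):
    """One user's 1 x M channel: sqrt(pathloss) times CN(0, 1) fading (assumed form)."""
    rng = np.random.default_rng() if rng is None else rng
    # Small-scale fading with unit average power per antenna.
    g = (rng.standard_normal(num_antennas)
         + 1j * rng.standard_normal(num_antennas)) / np.sqrt(2)
    return np.sqrt(pathloss(distance_m, alpha, c)) * g

rng = np.random.default_rng(0)
h_near = rayleigh_channel_row(256, 30.0, rng=rng)  # user at 30 m
h_far = rayleigh_channel_row(256, 40.0, rng=rng)   # user at 40 m

# With alpha = 3, moving from 30 m to 40 m lowers the average channel gain
# by a factor of (40 / 30)^3, i.e., about 2.37.
print(pathloss(30.0) / pathloss(40.0))
```

With the path loss exponent of 3.0 used later in the simulations, this ratio shows how quickly the average channel gain falls off with distance.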

For the

i-th communication user, the signal to interference plus noise ratio (SINR) is given by (

5).

The achievable rate can be expressed as (

6).

Then the SR of the MIMO system can be obtained as (

7).
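As a hedged illustration of the SINR, achievable rate, and SR described above, the following sketch treats the signals intended for the other communication users and for all sensing users as interference, as in the text. The function name `sinr_and_sum_rate`, the matrix shapes, and the base-2 logarithm (rates in bit/s/Hz) are assumptions of this sketch.

```python
import numpy as np

def sinr_and_sum_rate(H_c, W_c, W_s, noise_power):
    """Per-user SINR, per-user rate, and sum rate for the communication users.

    H_c: (Nc, M) channels of the communication users (one row per user).
    W_c: (M, Nc) precoding vectors of the communication users (one column per user).
    W_s: (M, Ns) precoding vectors of the sensing users (one column per user).
    """
    gain_c = np.abs(H_c @ W_c) ** 2   # gain_c[i, k] = |h_i w_k|^2
    gain_s = np.abs(H_c @ W_s) ** 2   # gain_s[i, j] = |h_i w_j^s|^2
    signal = np.diag(gain_c)                  # desired-signal power
    inter_comm = gain_c.sum(axis=1) - signal  # interference from other communication users
    inter_sens = gain_s.sum(axis=1)           # interference from all sensing users
    sinr = signal / (inter_comm + inter_sens + noise_power)
    rates = np.log2(1.0 + sinr)
    return sinr, rates, rates.sum()

# Toy example: 2 communication users, 4 antennas, orthogonal channels.
H_c = np.eye(2, 4, dtype=complex)
W_c = np.eye(4, 2, dtype=complex)

# No sensing stream: each user sees SINR = 1, so the SR is 2 bit/s/Hz.
_, _, sr_clean = sinr_and_sum_rate(H_c, W_c, np.zeros((4, 1), dtype=complex), 1.0)

# A sensing stream on antenna 0 leaks into user 0 and halves its SINR.
W_s = np.zeros((4, 1), dtype=complex)
W_s[0, 0] = 1.0
sinr_leak, _, sr_leak = sinr_and_sum_rate(H_c, W_c, W_s, 1.0)
print(sr_clean, sinr_leak, sr_leak)
```

The toy example makes the coupling explicit: power steered towards a sensing user directly reduces the rate of any communication user whose channel overlaps with that beam.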

The massive MIMO ISAC system also needs to sense the position of the sensing users. In this work, we take the RP at the sensing user as the metric for evaluating the sensing performance of the system [22]. The RP for the

j-th sensing user

is denoted as (

8).

where

is modeled in the same way as the communication users. To ensure the sensing accuracy, the RP should be no less than a predefined threshold

. Therefore, we have (

9).
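The RP constraint can be checked numerically as in the short sketch below. It assumes that the RP of a sensing user is the total power delivered to that user by all transmitted streams; this definition, the function names, and the example threshold are assumptions for illustration. If only the user's own stream is counted, a single term of the sum would be kept instead.

```python
import numpy as np

def received_power(H_s, W):
    """RP per sensing user, assumed here to be sum_k |h_j w_k|^2 over all streams.

    H_s: (Ns, M) channels of the sensing users, W: (M, N) full precoding matrix.
    """
    return np.sum(np.abs(H_s @ W) ** 2, axis=1)

def meets_rp_threshold(H_s, W, threshold):
    """True if every sensing user receives at least `threshold` power."""
    return bool(np.all(received_power(H_s, W) >= threshold))

# Toy example with 2 sensing users and 2 antennas.
H_s = np.array([[1.0, 0.0], [0.0, 2.0]], dtype=complex)
W = np.eye(2, dtype=complex)
rp = received_power(H_s, W)
print(rp, meets_rp_threshold(H_s, W, 1.0), meets_rp_threshold(H_s, W, 2.0))
```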

To this end, we can formulate the precoding problem for massive MIMO ISAC systems as

This optimization problem is difficult to solve due to the non-convex objective and the constraint C2. In the following section, we propose to use a machine learning-based scheme to solve (10) and introduce the novel KAIP scheme.

4. Numerical Results and Analysis

In order to evaluate the behavior of the proposed KAIP scheme, we carried out extensive numerical studies. We consider a scenario where the number of antennas at the BS is M = 256 and randomly generate a set of 10,000 channel matrices, including 5000 Rician fading channel samples and 5000 Rayleigh fading channel samples. The transmit power is normalized to 1 W, whilst the threshold power is set as

W. The path loss exponent

is set to 3.0. The weights in the loss function are set to

, and the training set and test set for the KAIP model training are divided in an 8:2 ratio. The parameters for training KAIP are shown in

Table 1. The proposed KAIP is trained and tested using an Intel i7-12700H CPU (made by Hewlett-Packard in Beijing, China) with Python 3.11 and PyTorch 2.1.2.
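A dataset of this kind can be sketched as follows. The Rician K-factor, the random-phase line-of-sight component, the omission of pathloss, and all function names are assumptions of this sketch; only the equal mix of Rician and Rayleigh samples and the 8:2 split are taken from the setup described above.

```python
import numpy as np

def rayleigh(shape, rng):
    """i.i.d. CN(0, 1) entries."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

def rician(shape, rng, k_factor=3.0):
    """Rician fading with an assumed K-factor and a simplified LoS term.

    The LoS component is modeled as a unit-modulus entry with a random phase,
    which is a simplification and not a geometric array response.
    """
    los = np.exp(1j * rng.uniform(0.0, 2.0 * np.pi, shape))
    return (np.sqrt(k_factor / (k_factor + 1.0)) * los
            + np.sqrt(1.0 / (k_factor + 1.0)) * rayleigh(shape, rng))

def make_dataset(n_per_type, n_users, n_antennas, train_frac=0.8, seed=0):
    """Mix Rician and Rayleigh channel samples, shuffle, and split train/test."""
    rng = np.random.default_rng(seed)
    shape = (n_per_type, n_users, n_antennas)
    H = np.concatenate([rician(shape, rng), rayleigh(shape, rng)], axis=0)
    H = H[rng.permutation(H.shape[0])]
    n_train = int(round(train_frac * H.shape[0]))
    return H[:n_train], H[n_train:]

# Small demo; n_per_type=5000 would give the 10,000-sample set with an 8:2 split.
train_set, test_set = make_dataset(n_per_type=50, n_users=20, n_antennas=256)
print(train_set.shape, test_set.shape)
```

The demo uses 50 samples per fading type to keep memory use small; the shapes scale linearly with `n_per_type`.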

To benchmark the proposed KAIP, the following schemes are used for comparison:

ZF: inter-user interference is eliminated by using the pseudo-inverse of the channel matrix as the precoding matrix.

MMSE: the precoding matrix is generated by balancing inter-user interference and noise enhancement.

FCNN-based precoding scheme [24]: two fully connected layers are adopted in the FCNN. The parameters of the FCNN are summarized in Table 2.

CNN-based precoding scheme [25]: two convolutional layers are adopted in the CNN. The parameters of the CNN are summarized in Table 3.
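For reference, the two conventional baselines can be sketched in a few lines of NumPy. The sketch assumes an N × M channel matrix with N ≤ M, a total transmit power constraint enforced by scaling, and the regularized-inverse form of MMSE precoding with regularization N·σ²/P; the function names are chosen for illustration.

```python
import numpy as np

def normalize_power(W, total_power=1.0):
    """Scale W so that the total transmit power sum |W|^2 equals total_power."""
    return W * np.sqrt(total_power / np.sum(np.abs(W) ** 2))

def zf_precoder(H, total_power=1.0):
    """ZF: right pseudo-inverse H^H (H H^H)^-1 of the N x M channel matrix."""
    return normalize_power(np.linalg.pinv(H), total_power)

def mmse_precoder(H, noise_power, total_power=1.0):
    """MMSE (regularized ZF): H^H (H H^H + (N * sigma^2 / P) I)^-1."""
    n_users = H.shape[0]
    Hh = H.conj().T
    reg = (n_users * noise_power / total_power) * np.eye(n_users)
    return normalize_power(Hh @ np.linalg.inv(H @ Hh + reg), total_power)

rng = np.random.default_rng(0)
H = (rng.standard_normal((4, 16)) + 1j * rng.standard_normal((4, 16))) / np.sqrt(2)

W_zf = zf_precoder(H)
W_mmse = mmse_precoder(H, noise_power=0.1)

# With ZF, the effective channel H @ W is a scaled identity: no inter-user interference.
effective = H @ W_zf
print(np.max(np.abs(effective - np.diag(np.diag(effective)))))
```

The regularization term is what lets MMSE trade a small amount of residual interference for less noise enhancement; as the noise power tends to zero, the MMSE precoder approaches the ZF precoder.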

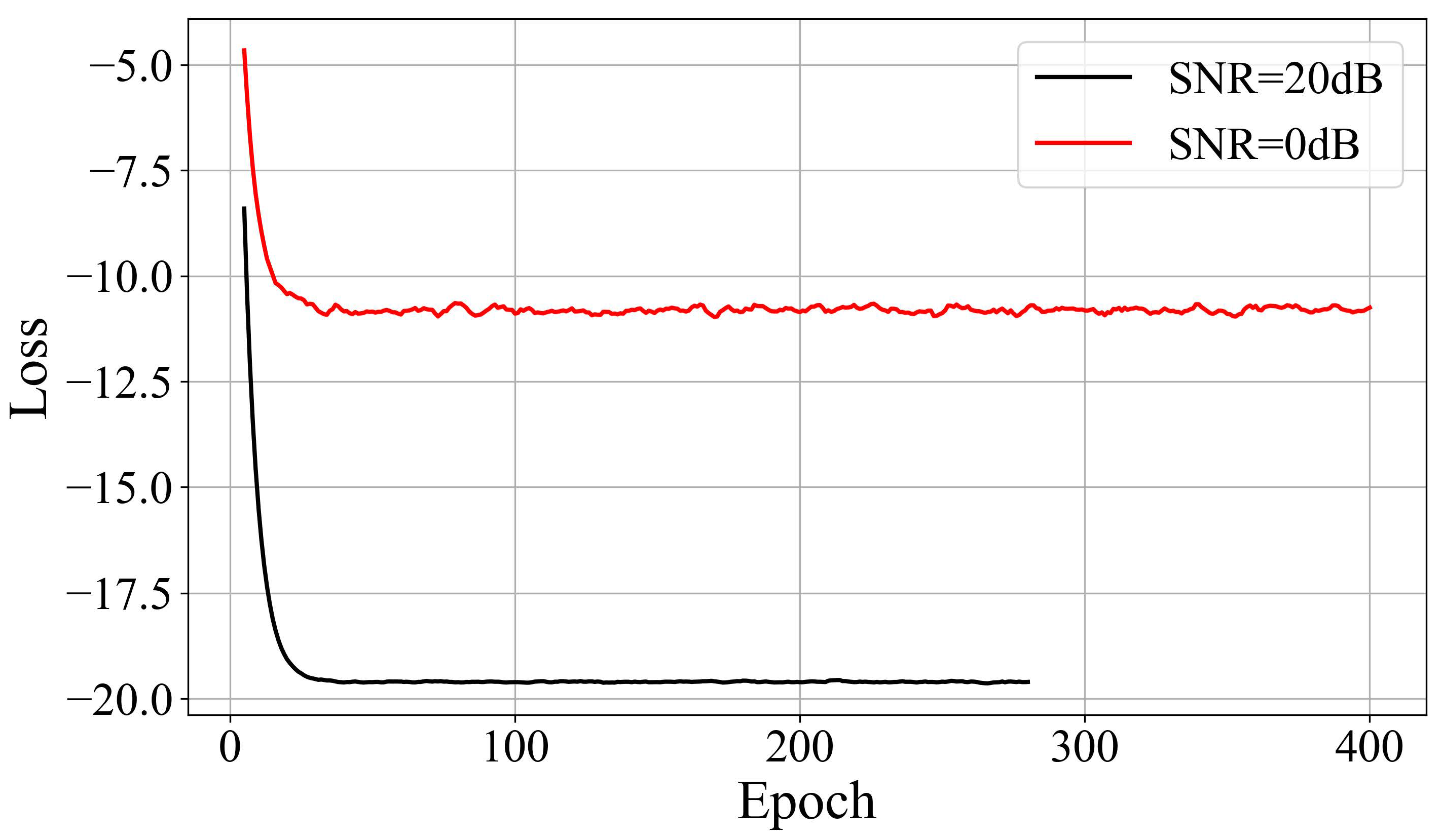

4.1. Convergence Analysis

Figure 4 shows the convergence curves of two representative experiments, in terms of the number of epochs needed to reach convergence.

The convergence curves illustrate the training dynamics of the proposed KAIP scheme in a Rician fading channel with 16 communication users and 4 sensing users. The horizontal axis (Epoch) represents the number of training epochs, where each epoch corresponds to a full pass of the training dataset through the network. The vertical axis (Loss) quantifies the value of the composite loss function defined in Equation (16).

The first curve (black line, SNR = 20 dB) shows rapid convergence, with the loss stabilizing near −19.6 by epoch 280, accompanied by minimal oscillations. This behavior reflects KAIP’s ability to efficiently optimize precoding matrices under high-SNR conditions, where strong signal dominance reduces gradient noise during training. In contrast, the second curve (red line, SNR = 0 dB) converges more slowly, reaching −10.8 by epoch 400, with larger fluctuations due to heightened interference and channel uncertainty in low-SNR scenarios. The distinct convergence patterns underscore KAIP’s adaptability: higher SNR accelerates optimization by providing clearer gradient directions, while lower SNR introduces instability, necessitating more iterations to mitigate noise-induced perturbations in the loss landscape. The reduced oscillation amplitude at SNR = 20 dB further confirms the scheme’s stability in favorable channel conditions.

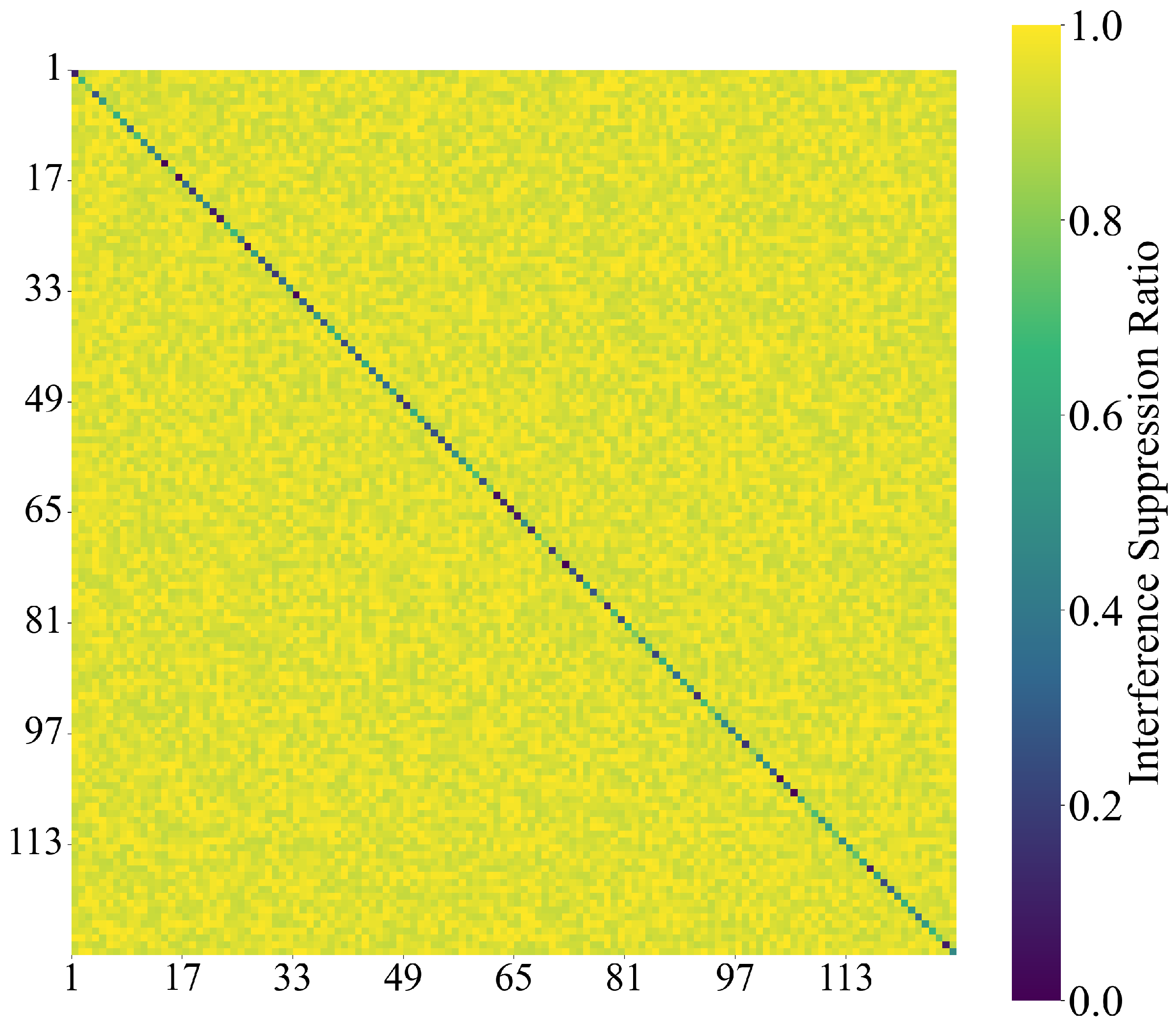

4.2. ISR

To quantitatively validate the attention mechanism’s interference suppression capability, we analyze the Interference Suppression Ratio (ISR) heatmap generated from a 128-user scenario, where ISR is defined as follows:

. The larger the ISR, the stronger the interference suppression capability. The simulation results of ISR are shown in Figure 5.

The simulation results show that the off-diagonal blocks exhibit graduated suppression levels (close to yellow, ratio = 0.4–0.8), reflecting strong interference suppression capability. Meanwhile, the strong diagonal dominance (close to purple, ratio ≈ 0.2) confirms effective self-signal preservation.

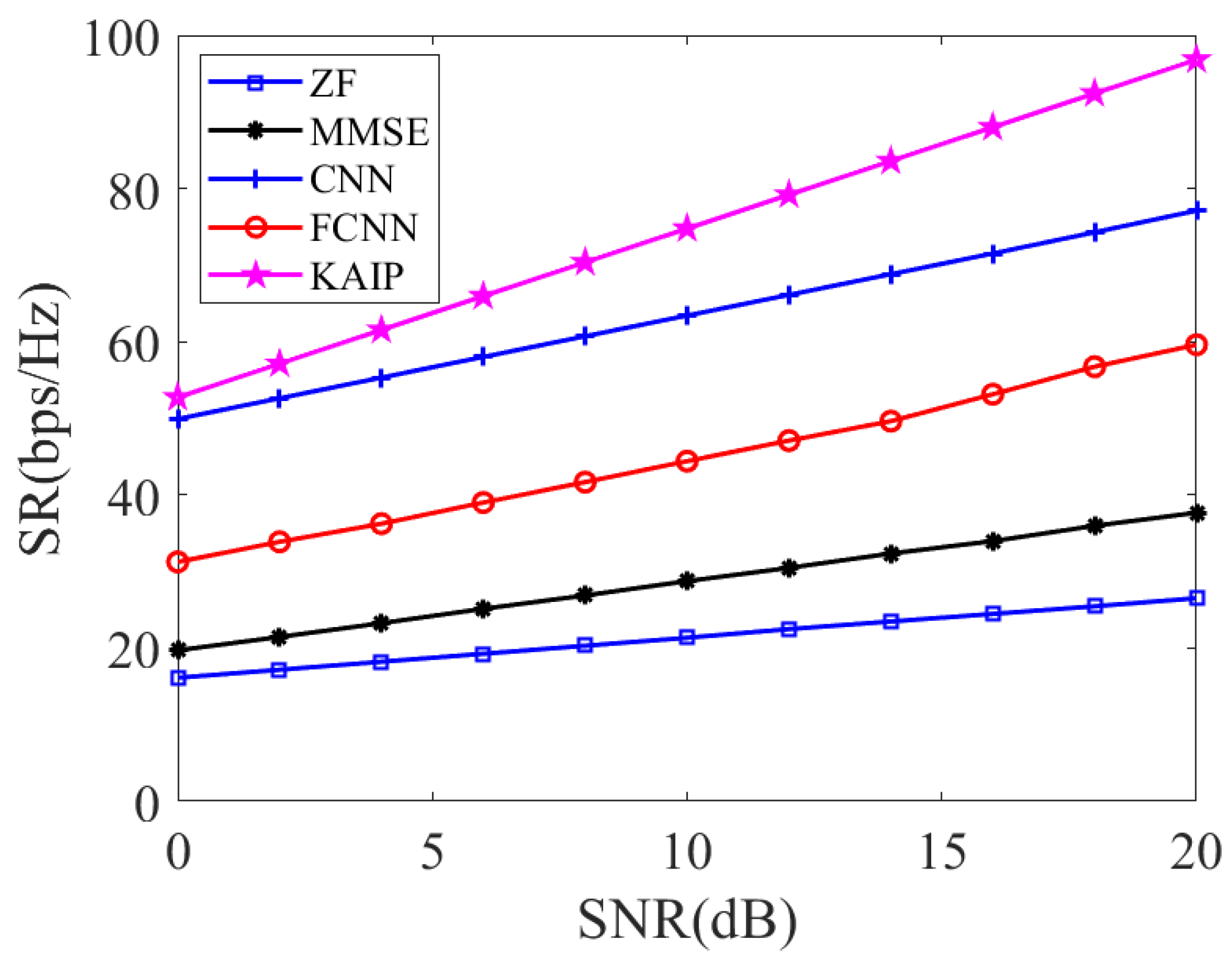

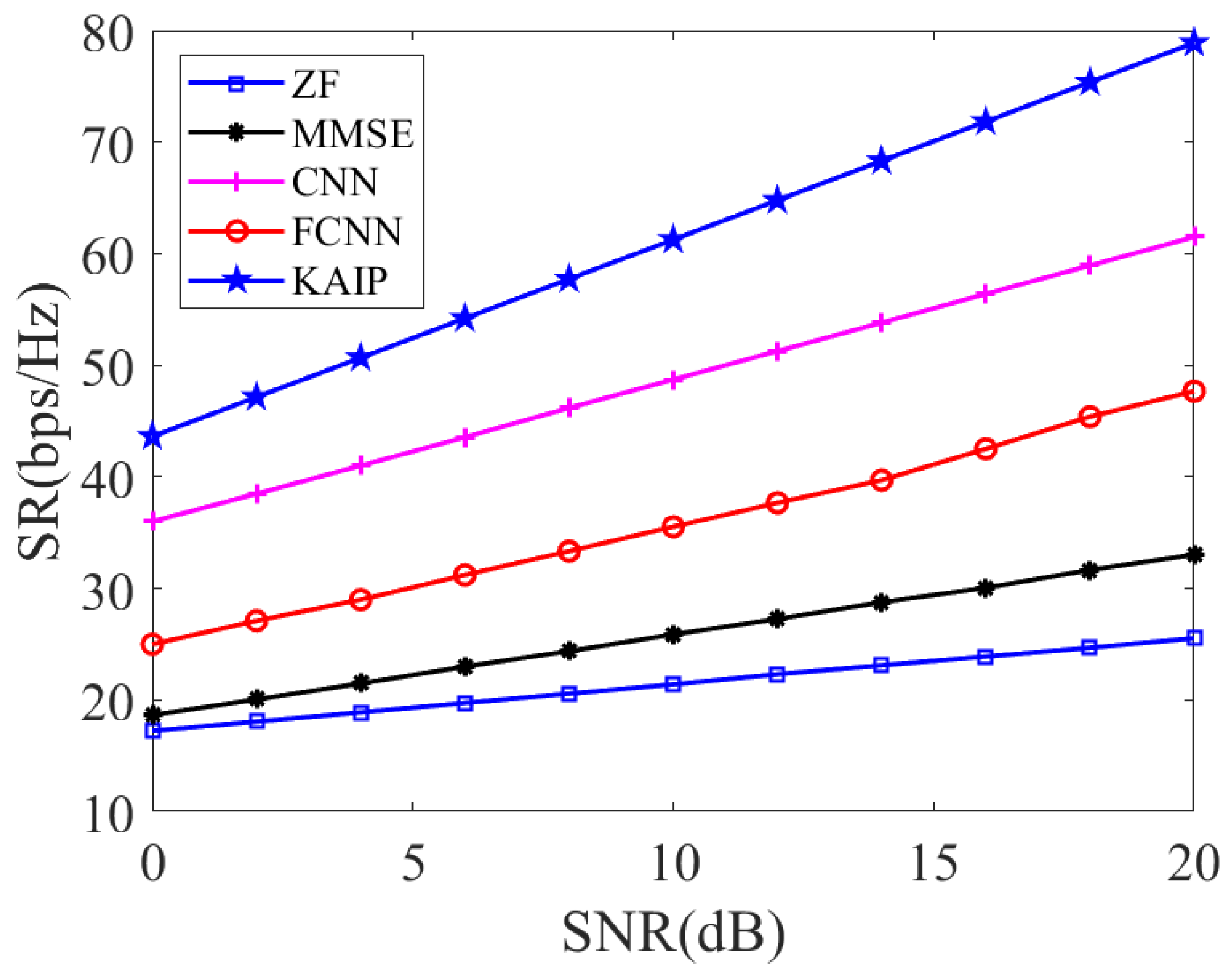

4.3. SR

The SR performance is tested with different signal-to-noise ratios (SNRs) in both Rician and Rayleigh fading channels, where the number of communication users is

. The SNR is defined as

. As shown in Figure 6 and Figure 7, the SR increases with the SNR in both Rician and Rayleigh fading channels. The deep learning-based schemes (i.e., FCNN, CNN, and KAIP) significantly outperform the conventional ZF and MMSE. Moreover, the proposed KAIP achieves the highest SR, with a gain of around 15% over CNN-based precoding and 70% over FCNN-based precoding. This performance stems from the fact that KAN has a stronger nonlinear fitting ability than FCNN and CNN. Therefore, KAIP adapts better to different channel environments.

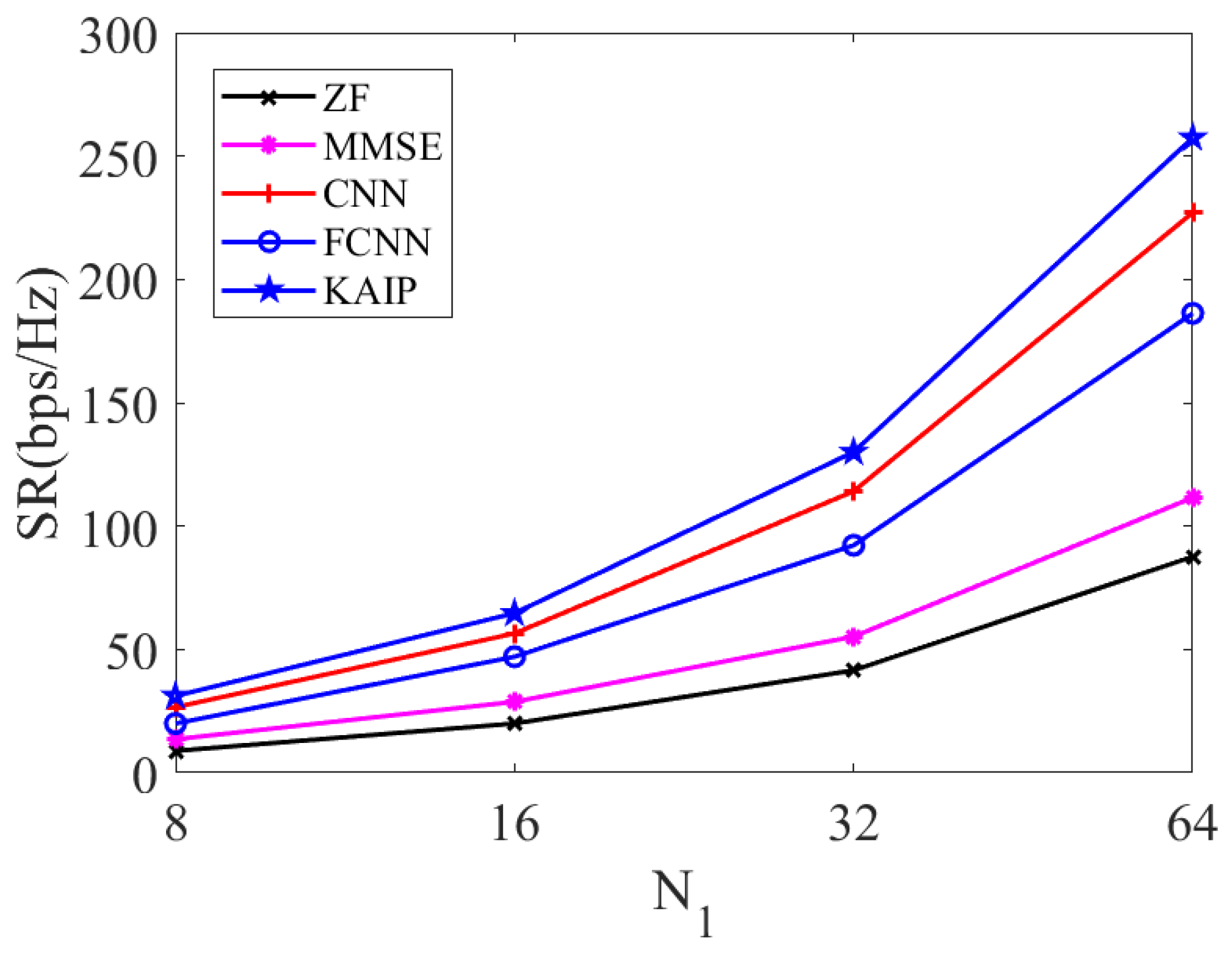

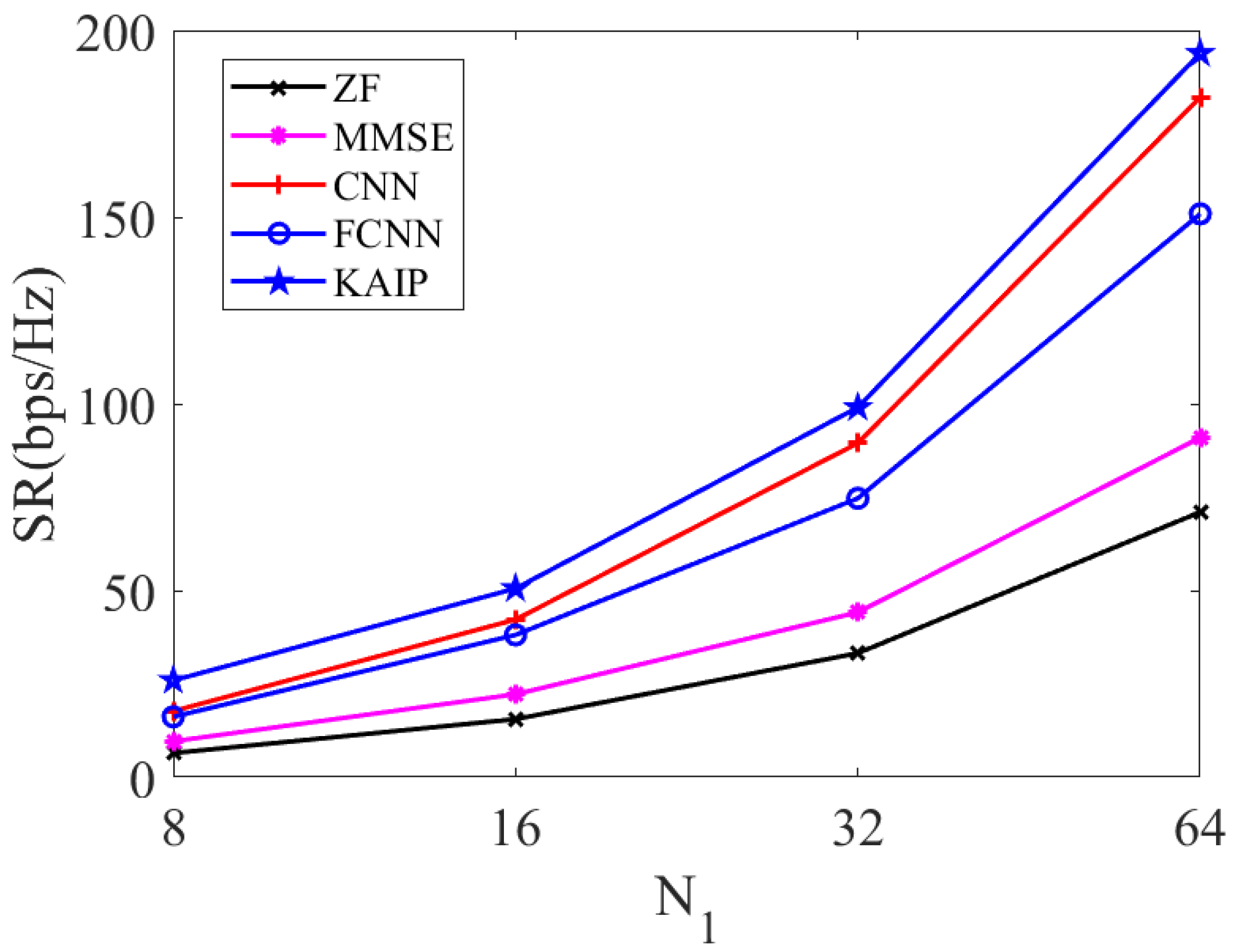

The SR performance under Rician and Rayleigh fading channels for different numbers of communication users is given in Figure 8 and Figure 9, respectively, where the SNR is set to 10 dB. The figures show that the SR increases with the number of users in both fading cases. Among all the curves, the proposed KAIP obtains the highest SR, with a significant gain over its counterparts.

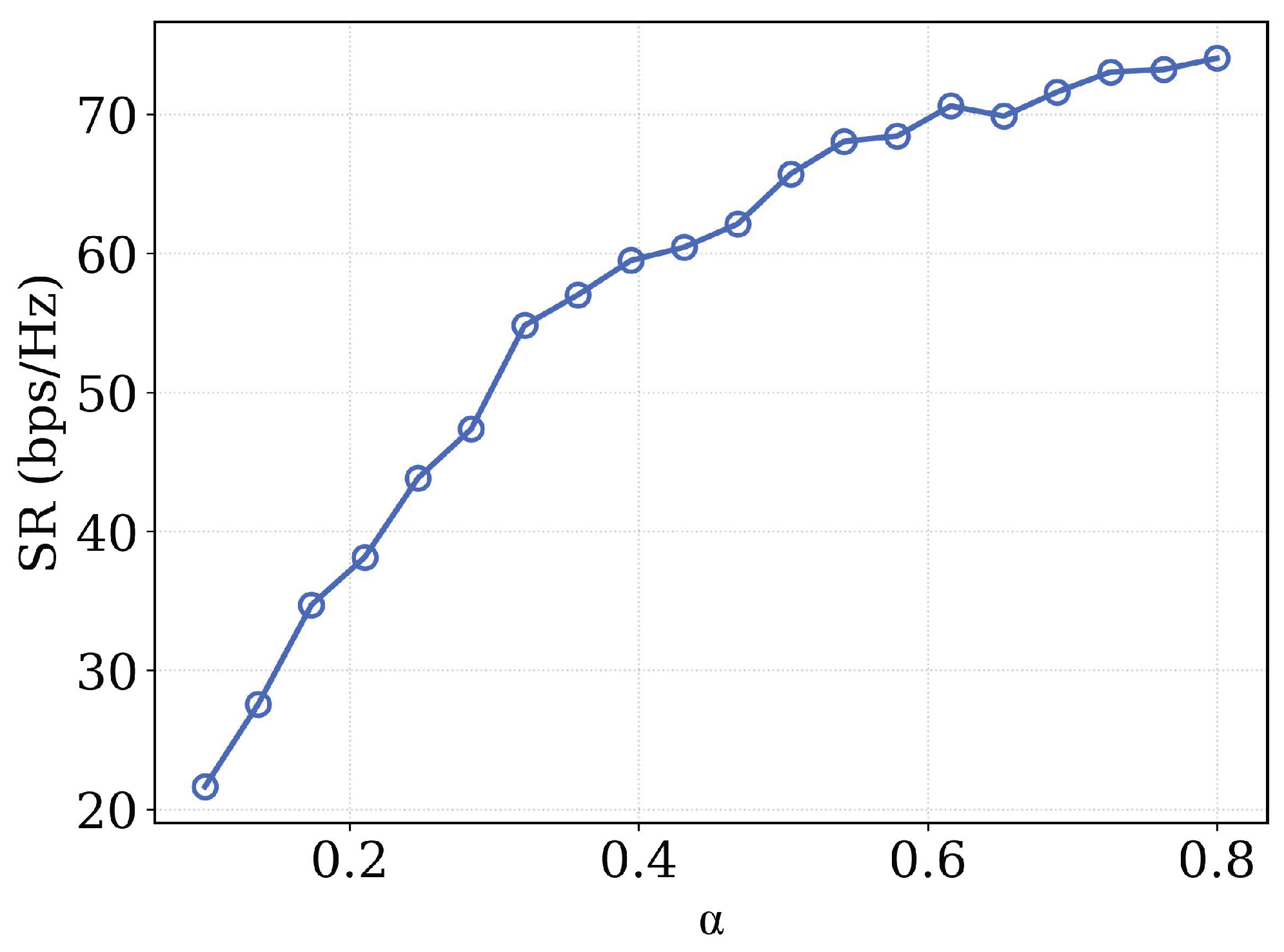

4.4. Analysis of , and

The experimental results presented in

Figure 10,

Figure 11 and

Figure 12 provide a comprehensive analysis of how the key parameters

,

and

influence overall system performance across their respective operational ranges of 0.1–0.8, 0.1–0.5 and 0.1–0.5. The SR, which measures the spectral efficiency of the system, exhibits saturating growth as parameter

increases, where initial rapid improvements in data rates gradually approach an asymptotic limit at higher

values, suggesting the existence of fundamental capacity constraints within the system architecture. This enhancement in spectral efficiency, however, introduces significant performance trade-offs that manifest through two distinct mechanisms. First, the system experiences progressively higher rate variance among users as

increases, indicating a substantial degradation in fairness metrics where certain users achieve disproportionately higher throughput compared to others in the network. Second, the inverse relationship observed between

and the channel adaptability parameter

reveals that the improved spectral efficiency comes at the expense of reduced system agility in responding to dynamic channel conditions, potentially compromising performance in time-varying wireless environments. Similarly, the inverse correlation between

and

further confirms that gains in spectral efficiency directly impact fairness considerations, establishing a fundamental performance trade-off that system designers must carefully balance when optimizing these parameters for specific operational requirements and quality of service objectives. The comprehensive analysis of these interrelated effects provides valuable insights for developing adaptive parameter tuning strategies that can maintain optimal system performance across diverse network conditions and user requirements.

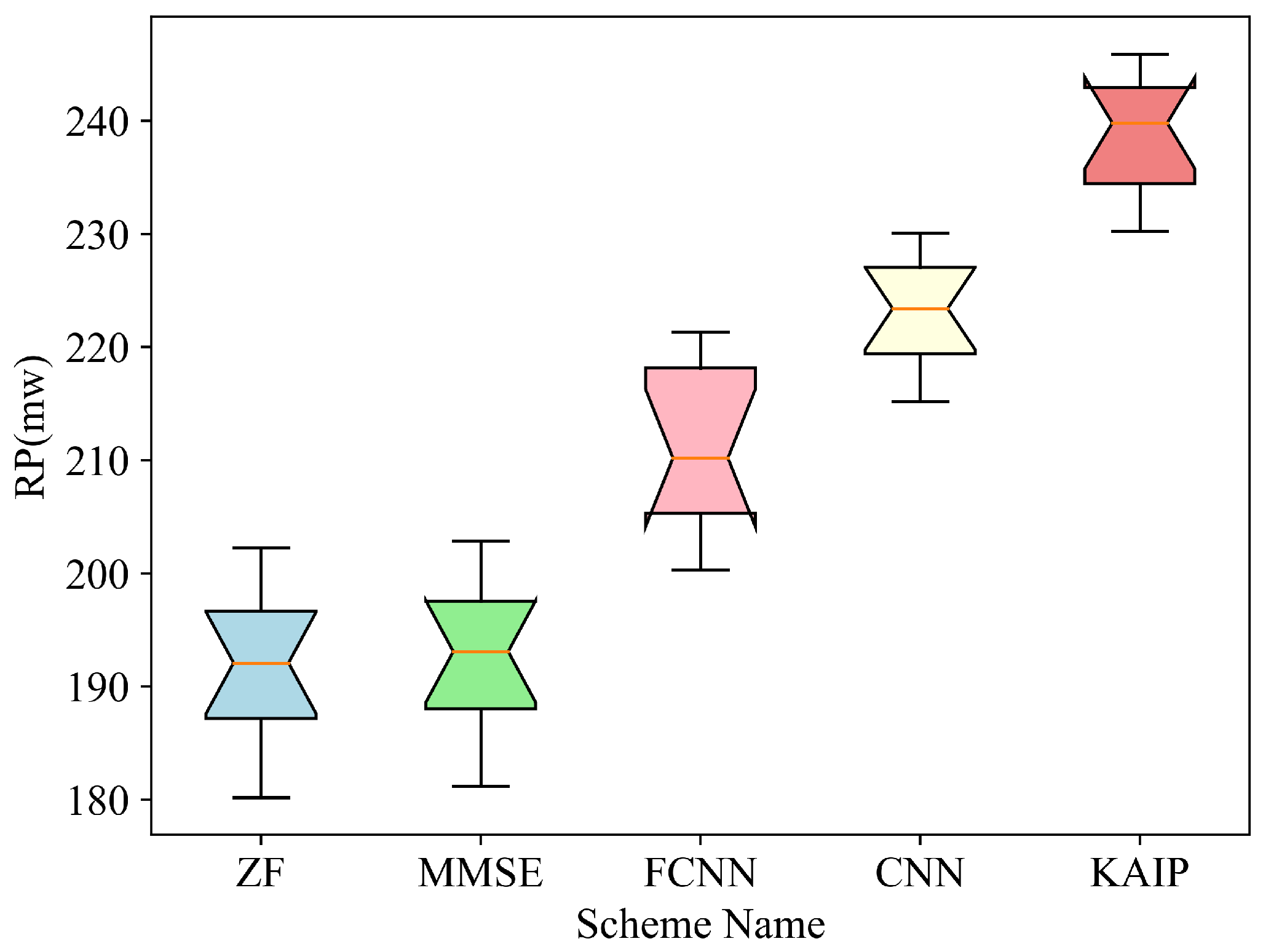

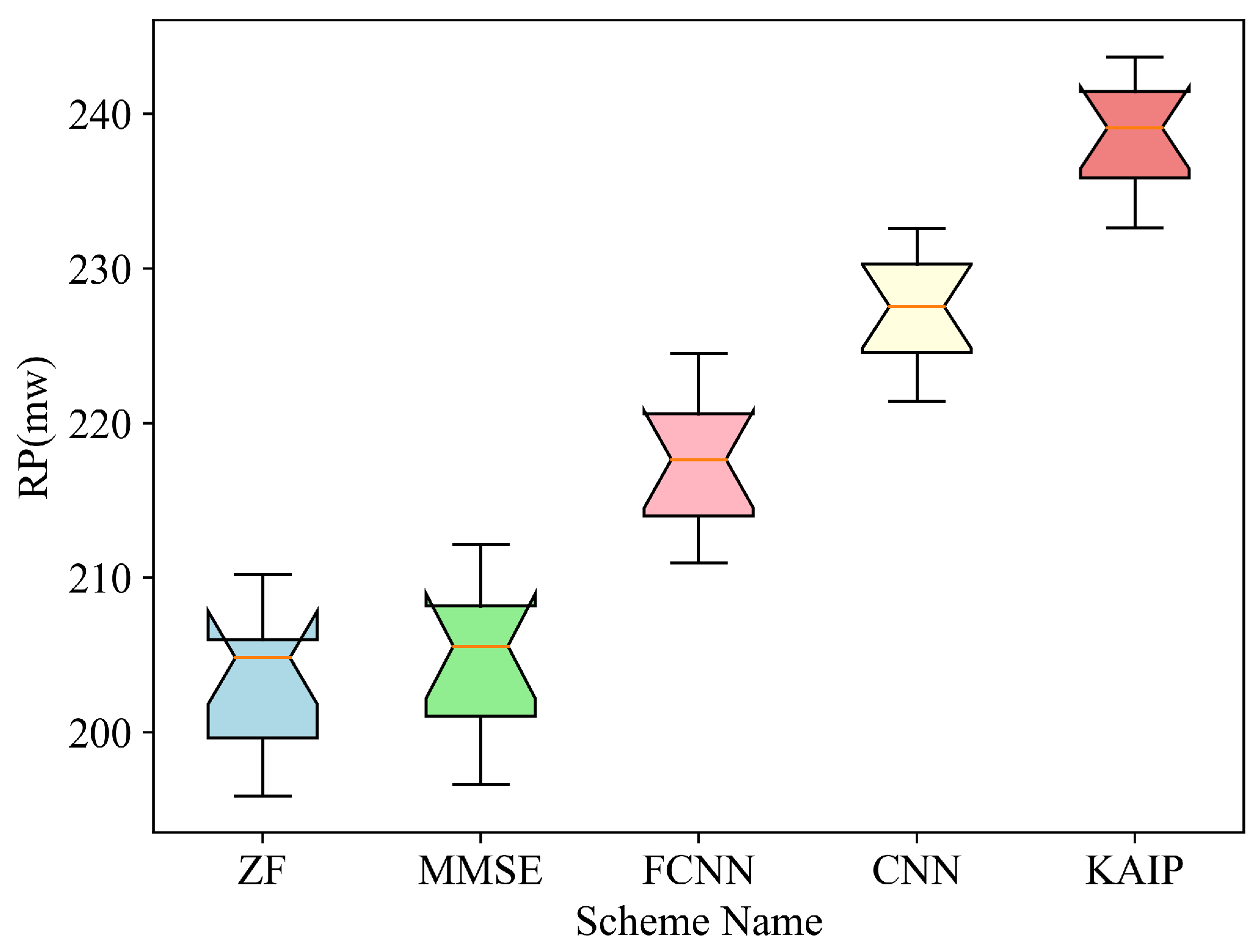

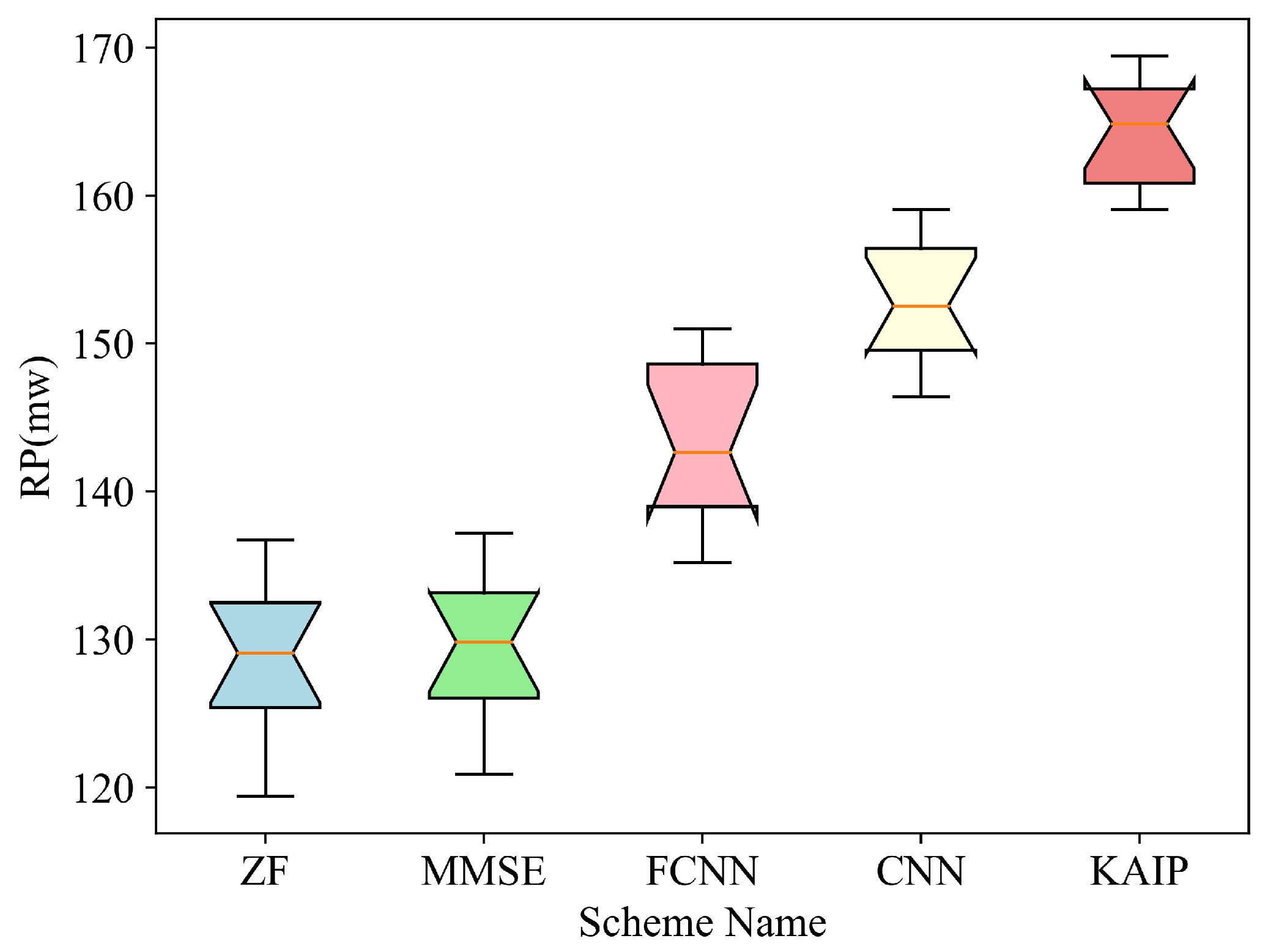

4.5. RP

The RP of four sensing users is evaluated at distances of 30 m and 40 m from the BS in both Rician and Rayleigh fading channels. As shown in Figure 13, Figure 14, Figure 15 and Figure 16, the deep learning-based schemes (i.e., FCNN, CNN, and KAIP) significantly outperform the conventional ZF and MMSE. The proposed KAIP achieves the highest RP, with a gain of around 4% over CNN-based precoding and 11% over FCNN-based precoding. The RP decreases as the distance between the sensing user and the BS increases. The performance of the proposed KAIP is attributed to the fact that the neurons of KAN are composed of nonlinear functions, so its nonlinear fitting ability is much stronger than that of FCNN and CNN. Therefore, the KAIP-generated precoding matrix adapts better to the different channel conditions at the same distance.

4.6. Complexity

The computation time (CT) of all the algorithms is given in Figure 17 for different numbers of users. As shown in Figure 17, the CT of the deep learning-based schemes (i.e., FCNN, CNN, and KAIP) is significantly higher than that of the conventional ZF and MMSE. However, KAIP outperforms the FCNN-based and CNN-based schemes, reducing the CT by around 90% and 96% compared with CNN-based and FCNN-based precoding, respectively. This advantage arises because the KAN layer in KAIP can complete the data fitting with fewer hidden layers and fewer neurons. Therefore, the computational complexity of KAIP is much lower than that of the other deep learning-based schemes.
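A CT measurement of this kind can be sketched for the ZF baseline as follows. The averaging over repeated runs, the use of `time.perf_counter`, and the function name `time_zf` are assumptions of this sketch and not necessarily the timing procedure behind Figure 17; absolute values depend on the hardware.

```python
import time
import numpy as np

def time_zf(n_users, n_antennas=256, repeats=20, seed=0):
    """Average wall-clock time (seconds) of one ZF pseudo-inverse computation."""
    rng = np.random.default_rng(seed)
    H = (rng.standard_normal((n_users, n_antennas))
         + 1j * rng.standard_normal((n_users, n_antennas))) / np.sqrt(2)
    start = time.perf_counter()
    for _ in range(repeats):
        np.linalg.pinv(H)  # the dominant cost of ZF precoding
    return (time.perf_counter() - start) / repeats

# CT of ZF for a growing number of users with M = 256 antennas.
for n_users in (10, 20, 40):
    print(n_users, time_zf(n_users))
```

The same wrapper can time any precoder by replacing the call inside the loop, which keeps the comparison across schemes on an equal footing.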

Furthermore, Table 4 provides a theoretical comparison of the computational complexity of KAIP and the baselines. The computational complexity of ZF is primarily determined by the matrix inversion and multiplication operations [26], represented as

. MMSE shares a similar complexity of

due to its reliance on regularized matrix inversion [

26]. FCNN involves dense matrix multiplications across layers [

27], leading to a complexity of

.

is the number of nodes in the first FCNN layer.

is the number of nodes in the second FCNN layer. The computational complexity of CNN is determined by its convolution operations [

28], represented as

, where

represents the first kernel size and

represents the second kernel size. KAIP combines KAN and attention mechanisms [

29], leading to a complexity of

, where

represents the number of neurons in hidden layer 1 after pruning, and

represents the number of neurons in hidden layer 2 after pruning.

4.7. Ablation Experiments

The ablation experiments were conducted with 256 BS antennas serving 16 communication users and 4 sensing users. Three scheme variants (KAIP, No Attention, and No KAN) were evaluated on the key metrics (RP, SR, and CT) under different SNRs (14 dB, 16 dB, 18 dB, and 20 dB), user distances (30 m, 40 m, and 50 m), and system scales (N = 10 and 100 users). Mixed Rician/Rayleigh fading channels were used to validate the individual contributions of the attention mechanism and the KAN layers. The ablation results are shown in Table 5 and Table 6.

For RP, removing the attention mechanism reduces RP by 10–16% across distances (30–50 m), demonstrating its critical role in interference suppression and power allocation for sensing users. The degradation worsens with distance (e.g., −16% at 50 m), highlighting attention’s adaptability to pathloss. Without KAN, RP drops by 3–7%, showing KAN’s ability to fine-tune precoding weights for optimal power delivery, especially in far-field scenarios.

For SR, the absence of attention causes a severe 22–23% SR loss at all SNRs, proving its necessity for multi-user interference management and channel feature extraction. The absence of KAN layers reduces SR by 11–12%, as KAN’s nonlinear fitting capability better adapts to complex channel conditions.

4.8. SR-Weighted (SW)

Finally, from a cost-benefit trade-off perspective, we define a new SW precoding metric inspired by [30] under a Rayleigh fading channel. SW is defined as

. The proposed precoding metric SW quantifies the system efficiency by measuring the joint communication-sensing performance per unit of computational cost. The results are depicted in Figure 18.

The experimental evaluation shows that, from a cost-benefit standpoint, KAIP achieves a favorable balance between performance gains and computational efficiency. Compared with the traditional ZF and MMSE methods, KAIP achieves a 62–137% higher SW, while maintaining a consistent 14–23% advantage over the deep learning baselines, i.e., the FCNN and CNN architectures. These gains are attributed to KAIP’s synergistic design, which combines complementary techniques. The integrated attention mechanism contributes a notable 23% enhancement in SR through interference suppression, while the KAN layers provide a 12% improvement in RP with only an 8% incremental processing overhead. The ablation studies further confirm that each component contributes meaningfully to the overall system performance without introducing excessive complexity. This balance between performance enhancement and computational cost positions KAIP as a practical candidate for real-world deployment scenarios where both efficiency and effectiveness are critical.

5. Conclusions

In this work, we have proposed a novel precoding scheme, i.e., KAIP, to improve the performance of massive MIMO ISAC systems in both Rayleigh and Rician fading scenarios. KAIP’s attention layer has been shown to suppress the inter-user interference, whilst KAN’s spline-based nonlinear activation can adapt to different channel conditions with low complexity. The proposed KAIP-based precoding scheme outperforms the ZF, MMSE, FCNN-based, and CNN-based precoding schemes, achieving significant improvements in both SR and RP, with a reduction of over 90% in CT compared with the other deep learning-based schemes. Furthermore, we provide an analysis of KAIP’s computational complexity, convergence, and the interference suppression capability of the attention layers, along with experimental verification.

However, this study does not consider scenarios where users are in high-speed motion or channels are time-varying, which limits the applicability of KAIP. Adaptation to high-mobility scenarios is important and is left for future work. In addition, although KAN is a key component of KAIP, we do not analyze its intrinsic properties (e.g., approximation guarantees). The rationality and specific performance of KAN have been thoroughly discussed in [31], and thus, they are not elaborated on in this paper.