Abstract

Smart contracts have become integral to decentralized applications, yet their programmability introduces critical security risks, exemplified by high-profile exploits such as the DAO and Parity Wallet incidents. Existing vulnerability detection methods, including static and dynamic analysis, as well as machine learning-based approaches, often struggle with emerging threats and rely heavily on large, labeled datasets. This study investigates the effectiveness of open-source, lightweight large language models (LLMs) fine-tuned using parameter-efficient techniques, including Quantized Low-Rank Adaptation (QLoRA), for smart contract vulnerability detection. We introduce the EVuLLM dataset to address the scarcity of diverse evaluation resources and demonstrate that our fine-tuned models achieve up to 94.78% accuracy, surpassing the performance of larger proprietary models, while significantly reducing computational requirements. Moreover, we emphasize the advantages of lightweight models deployable on local hardware, such as enhanced data privacy, reduced reliance on internet connectivity, lower infrastructure costs, and improved control over model behavior, factors that are especially critical in security-sensitive blockchain applications. We also explore Retrieval-Augmented Generation (RAG) as a complementary strategy, achieving competitive results with minimal training. Our findings highlight the practicality of using locally hosted LLMs for secure, efficient, and reproducible smart contract analysis, paving the way for broader adoption of AI-driven security in blockchain ecosystems.

1. Introduction

Blockchain technology, introduced with Bitcoin [1], has evolved into a transformative force across various domains, particularly through its application in smart contracts. These self-executing contracts facilitate decentralised applications (dApps), automating transactions without intermediaries. Smart contracts have found applications across various sectors such as financial services, supply chain operations, healthcare systems, and public administration [2,3,4,5], offering advantages such as transparency, security, and efficiency.

Ethereum’s introduction in 2015 [6] represented a major step forward by enabling fully programmable smart contracts. These contracts form the foundation of decentralised finance (DeFi) platforms, automating lending, trading, and compliance processes [7]. They also improve security by reducing Distributed Denial-of-Service (DDoS) attacks and trust problems in cloud computing [8]. However, this programmability also introduces vulnerabilities that can lead to significant financial losses.

Past security breaches highlight the vital need for secure smart contracts. The DAO attack (2016) exploited a reentrancy weakness, causing a loss of over USD 50 million. Similarly, the Parity Wallet hack (2017) resulted in USD 150 million in Ether being permanently locked due to an uninitialized contract [9]. These incidents show that small coding mistakes can cause massive losses, requiring strong vulnerability detection methods.

Current security approaches, including static and dynamic analysis, effectively find known vulnerabilities but have trouble with new attack methods [10]. Machine Learning (ML) techniques have been tested to improve detection, using operation code sequences and labeled datasets. However, these methods need large amounts of labeled data, which is still scarce. Large Language Models (LLMs) show great potential in finding complex, meaning-based vulnerabilities that traditional methods cannot detect [11]. Their strong language understanding helps them recognize subtle code patterns and security flaws in context. However, using these powerful models is often restricted by high costs and heavy computing needs, creating obstacles to their widespread use in security workflows.

To address these limitations, this study investigates the use of open-source, lightweight LLMs that can be deployed locally without specialized or expensive hardware. These models are adapted using Parameter-Efficient Fine-Tuning (PEFT) techniques, such as Low-Rank Adaptation (LoRA) and its quantized variant (QLoRA), which significantly reduce memory and computational demands while preserving performance. We highlight the strategic value of small, locally hosted LLMs over large, proprietary alternatives for detecting vulnerabilities in Ethereum smart contracts. Locally deployable models offer practical advantages in security-sensitive domains: by keeping data on premises, they enhance privacy and reduce the risk of information leakage. Unlike proprietary APIs, which may have usage restrictions, unpredictable latency, or opaque internal mechanisms, open local models provide full transparency and control over inference. This fosters trust and enables precise fine-tuning and reproducibility. Furthermore, the low computational footprint of these models allows them to run on consumer-grade hardware, making them suitable for resource-constrained settings and eliminating reliance on external cloud infrastructure. This self-sufficiency supports scenarios requiring offline use, regulatory compliance, or fine-grained customization.

Building on these motivations, this paper makes the following key contributions:

- C1: We propose an enhanced vulnerability detection approach for Ethereum smart contracts through the fine-tuning of Large Language Models (LLMs) using parameter-efficient fine-tuning (PEFT) techniques, including Quantized Low-Rank Adaptation (QLoRA), which achieves improved accuracy and efficiency.

- C2: We demonstrate the viability of open-source, lightweight LLMs for smart contract analysis, significantly lowering the financial and computational barriers to adoption.

- C3: We introduce a novel, combined evaluation dataset to address the scarcity of diverse, code-snippet-based benchmarks in smart contract security research.

- C4: We develop fine-tuned LLMs capable of effectively identifying vulnerabilities in Ethereum smart contracts across a range of common patterns.

These improvements aim to make smart contract vulnerability detection more accessible and effective, addressing key security challenges in blockchain systems.

The rest of this paper is structured as follows: In Section 2, we provide essential background information to establish the context for our study. Section 3 reviews related work, highlighting previous approaches and identifying gaps that our research addresses. In Section 4, we present our methodology in detail, describing the techniques and procedures employed. Section 5 discusses the results obtained and provides an in-depth analysis of their implications. Finally, Section 6 summarizes the study and suggests avenues for future investigation.

2. Background

This section introduces key concepts related to Large Language Models (LLMs), fine-tuning strategies, and Parameter-Efficient Fine-Tuning (PEFT), providing foundational knowledge necessary to understand the methods and contributions of this work.

2.1. Large Language Models

LLMs are sophisticated AI frameworks built upon the Transformer architecture, aimed at understanding and producing text that resembles human language [12]. They leverage self-attention mechanisms to understand linguistic patterns and relationships across sequences, enabling tasks such as translation, summarization, and dialogue generation [13]. Training these models involves a two-phase approach using extensive text datasets: pretraining, where they learn general language representations through tasks like masked language modeling, and fine-tuning, which refines them for specific applications.

LLMs exhibit emergent capabilities, such as zero-shot and few-shot learning, allowing them to generalise across tasks with minimal additional training [14,15]. Notable models include OpenAI’s GPT-4, Google’s Gemini, and Meta’s LLaMA. Their scale, often reaching hundreds of billions of parameters, enables robust contextual understanding, making them the foundation of modern generative AI applications.

2.2. Fine-Tuning Large Language Models

Fine-tuning customizes pre-trained LLMs for particular tasks or domains by modifying their parameters [15,16]. Traditional full-parameter fine-tuning retrains the entire model but is computationally expensive. Efficiency-focused methods like PEFT adjust only a portion of the model’s parameters, lowering computational expenses with little loss in performance. Techniques like Reinforcement Learning from Human Feedback (RLHF) further refine models to align with user preferences and ethical guidelines.

PEFT optimizes fine-tuning by adjusting only select model parameters, significantly reducing memory and compute requirements [17]. One such approach, Low-Rank Adaptation (LoRA), introduces small trainable matrices to existing model weights, achieving efficient adaptation with fewer parameters [18]. QLoRA extends this by incorporating 4-bit quantization and double quantization techniques, further minimizing memory use while preserving model performance [19].
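The savings described above can be illustrated with simple arithmetic. The sketch below assumes a single weight matrix of shape d_out × d_in adapted with two low-rank factors of rank r, and it estimates weight storage only; it ignores quantization constants, activations, and optimizer state. The function names and the example sizes (a 4096 × 4096 layer, rank 16, a 7-billion-parameter model) are illustrative assumptions, not values taken from this study.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for LoRA: factors B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)


def weight_memory_gib(num_params: int, bits_per_param: int) -> float:
    """Approximate storage for the weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 2**30


if __name__ == "__main__":
    # Hypothetical layer and model sizes, chosen only for illustration.
    d_out, d_in, rank = 4096, 4096, 16
    full = full_finetune_params(d_out, d_in)
    lora = lora_trainable_params(d_out, d_in, rank)
    print(f"full fine-tuning: {full:,} trainable parameters per layer")
    print(f"LoRA (r={rank}): {lora:,} trainable parameters per layer")
    print(f"fraction trained: {lora / full:.4%}")

    model_params = 7_000_000_000
    print(f"16-bit weights: {weight_memory_gib(model_params, 16):.2f} GiB")
    print(f" 4-bit weights: {weight_memory_gib(model_params, 4):.2f} GiB")
```

Under these assumptions the adapter trains well under one percent of the layer's parameters, and storing the frozen base weights in 4 bits takes a quarter of the space needed at 16 bits.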

Fine-tuning allows the model to internalize domain-specific knowledge by adapting its weights during training. As such, it is a training-time strategy that results in a modified model capable of improved inference in that domain.

2.3. Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) enhances LLM performance by dynamically incorporating external information into the inference process [20,21]. Unlike fine-tuning, which permanently adjusts a model’s internal parameters, RAG leaves the base model unchanged. Instead, it retrieves relevant context documents or examples at inference time, appending them to the model’s input prompt. This approach enables the model to produce more accurate and context-aware outputs, especially in domains that require up-to-date, task-specific, or factual information.

RAG is particularly valuable in scenarios where training resources are limited, or frequent model updates are impractical. By offloading part of the reasoning to an external retrieval system, RAG can achieve competitive performance without additional training, making it an attractive alternative to fine-tuning in constrained environments.

In this work, we evaluate RAG as an alternative strategy to fine-tuning for smart contract vulnerability detection. While RAG does not modify model weights, it benefits from integrating relevant vulnerability descriptions, code examples, or documentation at runtime.
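The retrieve-then-prompt flow can be sketched in a few lines. This is a minimal illustration, not the pipeline used in this study: it swaps a real embedding model and vector store for a toy bag-of-words cosine similarity, and the knowledge-base entries, function names, and prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter


def _vectorize(text):
    """Toy stand-in for an embedding: lower-cased identifier counts."""
    return Counter(re.findall(r"[A-Za-z_]\w*", text.lower()))


def _cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, knowledge_base, k=2):
    """Return the k knowledge-base entries most similar to the query."""
    q = _vectorize(query)
    ranked = sorted(
        knowledge_base,
        key=lambda doc: _cosine(q, _vectorize(doc["text"])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(code, knowledge_base, k=2):
    """Prepend retrieved examples to the input; the model itself is unchanged."""
    context = "\n".join(
        f"- [{doc['label']}] {doc['text']}" for doc in retrieve(code, knowledge_base, k)
    )
    return (
        "Relevant examples:\n"
        f"{context}\n\n"
        "Classify the following Solidity function as safe or vulnerable:\n"
        f"{code}"
    )


# Hypothetical knowledge base of labeled snippets.
KNOWLEDGE_BASE = [
    {"label": "vulnerable",
     "text": 'msg.sender.call{value: amount}(""); balances[msg.sender] -= amount;'},
    {"label": "safe", "text": "require(owner == msg.sender); paused = true;"},
    {"label": "safe", "text": "return totalSupply;"},
]

QUERY = (
    "function withdraw(uint amount) public { "
    'msg.sender.call{value: amount}(""); balances[msg.sender] = 0; }'
)

if __name__ == "__main__":
    print(build_prompt(QUERY, KNOWLEDGE_BASE, k=2))
```

Because only the prompt changes, swapping or extending the knowledge base requires no retraining, which is the property that makes RAG attractive when training is infeasible.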

3. Related Work

This section reviews existing research on the use and security of smart contracts, contextualizing our work within ongoing efforts to improve reliability in blockchain-based financial systems.

The majority of DeFi applications exist on the Ethereum blockchain [22], primarily due to the advantages offered by the Ethereum Virtual Machine (EVM) and other features that facilitate development [23]. As smart contracts are a crucial component of DeFi applications, their security is paramount, particularly given their immutability after deployment. To mitigate financial risks and ensure the reliability of DeFi applications, numerous researchers and developers have focused on enhancing the security of smart contracts.

3.1. Static and Machine Learning-Based Analysis

Several static analysis tools have been developed to identify vulnerabilities in Ethereum smart contracts. Notably, Ethainter [24] is a security analyzer that detects complex vulnerabilities in Ethereum smart contracts by employing information flow analysis, modeling key concepts such as sanitization and persistent storage, and accurately identifying real-world exploits. Ethainter has demonstrated high performance, achieving % precision and successfully testing exploits on over 800 contracts. S-gram [25] combines N-gram language modeling with basic static semantic analysis to spot vulnerabilities, achieving over 90% accuracy on 1500 contracts. SAILFISH [26] detects state inconsistency bugs by leveraging a storage dependency graph (SDG) and value-summary analysis, outperforming previous tools in both speed and accuracy. SmartDagger [27] uses bytecode-based analysis with neural machine translation models to detect vulnerabilities across contracts, achieving % precision and % recall on 250 contracts. SMARTEST [28] improves symbolic execution with statistical language models to focus on likely vulnerable transaction sequences. It performed better than other tools, finding critical previously unknown vulnerabilities.

Machine learning-based techniques have also been applied to smart contract vulnerability detection and repair. ContractWard [29] extracts bigrams from simplified opcodes instead of source code to classify contracts using a one-vs-rest method for multi-label classification. The best performance was achieved with XGBoost when SMOTETomek was used for balancing, attaining a micro F1-score of %. SmartFix [30] automates vulnerability repair using a generate-and-verify approach with formal verification and statistical models. It ranks candidate patches using feedback from the verifier, attaining a % success rate in fixing vulnerabilities like integer overflows and underflows, Ether-leak, suicidal, reentrancy, and improper use of tx.origin.

3.2. Large Language Models (LLMs)

Several studies have explored using LLMs to improve smart contract vulnerability detection while reducing false positives. GPTScan [31] combines GPT-based analysis with static verification to identify logic vulnerabilities, achieving precision over 90%.

Hybrid approaches integrate LLMs with traditional techniques to refine detection. IRIS [32] enhances static analysis by leveraging LLMs to infer taint specifications and apply contextual filtering, reducing false positives. LLM4FUZZ [33] improves fuzz testing by using LLM-generated insights to guide testing towards high-risk code regions, outperforming existing fuzzing tools in critical vulnerability detection.

3.3. Fine-Tuned LLMs

Fine-tuning LLMs has emerged as a key strategy in improving smart contract vulnerability detection. Ma et al. [34] propose a framework comprising two stages that separates classification and justification, using multiple agents (an initial voting-based classifier, a reasoner, a ranker, and a critic) to refine explanations. This approach leverages a zero-shot chain-of-thought (CoT) method, achieving an F1-score of . Similarly, Boi et al. [35] fine-tune Llama-2-7b-chat-hf and GPT-2-XL on annotated smart contracts mapped to the Open Worldwide Application Security Project (OWASP) Top 10 and Smart Contract Weakness Classification (SWC) categories, improving both detection accuracy and remediation relevance.

To address the high cost of fine-tuning, Yang et al. [36] employ PEFT with Llama2-13B and CodeLlama, classifying vulnerabilities into nine predefined categories. This approach balances efficiency and accuracy, achieving precision rates of 31–36%.

Sóley [37] concentrates on uncovering logic flaws in Ethereum smart contracts by leveraging a dataset-oriented strategy. It preprocesses contract code from GitHub (https://github.com/, accessed on 11 August 2025), slices it into smaller segments, and fine-tunes CodeBERTa on labeled instances, allowing for a more structured and data-driven vulnerability identification process.

3.4. Retrieval-Augmented Generation

Recent work also investigates enhancing LLM-based vulnerability detection through knowledge retrieval and context-aware prompting. Specifically, LLM4Vuln [38] enhances reasoning in Ethereum smart contract analysis by integrating vector-based knowledge retrieval, function calling, and structured prompt schemes like Chain of Thought (CoT). Evaluated across 4950 scenarios with GPT-4, Mixtral, and Code Llama, LLM4Vuln significantly reduces false positives and improves accuracy. Similarly, VulnHunt-GPT [39] enhances vulnerability detection by leveraging prompt engineering and context enrichment via LangChain and LlamaIndex, thereby augmenting GPT-3.5-turbo’s ability to analyze smart contracts. When evaluated on the SmartBugs Curated dataset, VulnHunt-GPT demonstrated improved detection performance across 10 vulnerability categories.

Other works integrate RAG to address data limitations. VulScribeR [40] generates diverse vulnerable code samples to enhance Deep Learning-based Vulnerability Detection (DLVD) models. It employs three strategies: Mutation (modifying existing vulnerabilities), Injection (embedding vulnerabilities into clean samples), and Extension (adding logic to expand vulnerable code), filtered through a fuzzy parser. Yu [41] also applies RAG for vulnerability detection, constructing a vector store of 830 vulnerable contracts using Pinecone and OpenAI’s text-embedding-ada-002. GPT-4-1106 analyzes retrieved data, achieving a % success rate in guided detection and % in blind detection.

3.5. Summary

Existing vulnerability detection approaches fall into three main categories: Static Analysis, ML, and LLMs.

Static Analysis tools like SmartDagger analyze code without execution to detect vulnerabilities such as reentrancy and integer overflow. While effective at identifying syntactic and semantic issues, they struggle with complex logic vulnerabilities and have high false positive rates.

ML-based approaches, such as ContractWard and SmartFix, detect vulnerabilities by learning patterns in code data. These models reduce dependency on predefined rules but require high-quality labeled datasets, which are often scarce.

LLM-based methods, including GPTScan and VulnHunt-GPT, leverage contextual understanding and semantic analysis to achieve state-of-the-art results. Techniques like fine-tuning and RAG further improve performance. However, challenges such as high computational costs, limited customisation, and reliance on proprietary models remain.

An overview of the approaches discussed in this section is presented in Table 1. The first column lists the reference for each study while categorising it under its respective methodological approach: Static Analysis, ML, or LLMs. Additionally, a brief description of the methodology employed in each work is provided to highlight key techniques and contributions.

Table 1. Overview and categorisation of existing vulnerability detection approaches in smart contracts.

Table 2 presents a comparative analysis of LLM-based approaches, highlighting their key characteristics. The first column categorizes each approach by the specific LLM model employed, facilitating direct comparisons between different architectures. The table further assesses performance in terms of model size, availability (free or paid), PEFT, quantization strategies (e.g., QLoRA), and evaluation metrics. It is important to note that it was not feasible to report the same performance metric for all approaches due to differences in evaluation methodologies across studies.

Table 2. Comparison of LLM-based approaches for smart contract vulnerability detection: model characteristics, fine-tuning techniques, and performance metrics.

Approaches like GPTScan [31] and VulnHunt-GPT [39] leverage pre-trained models (e.g., GPT-3.5 and GPT-4) to analyze vulnerabilities directly using prompt engineering. While these methods exhibit reasonable performance (e.g., GPTScan with % F1-score), they face certain limitations:

- High Cost: Proprietary models such as GPT-4 and Claude-v1.3 incur significant computational and financial costs, particularly for large-scale analysis of smart contracts.

- Limited Customisation: Pre-trained models cannot be adapted easily to domain-specific patterns without fine-tuning, leading to higher false positives and lower precision.

Despite these challenges, pre-trained models are valuable as baseline tools. On the other hand, fine-tuning methods, including Sóley [37] and the multi-agent framework proposed in Ma et al. [34], demonstrate significantly higher performance, achieving F1-scores above 90%. While these approaches show a shift toward free models, most of the selected architectures still rely on models with a large number of parameters, making the process more time-consuming and less scalable.

There is a clear opportunity to explore smaller models that reduce the cost and complexity of fine-tuning while maintaining competitive accuracy.

RAG frameworks, such as LLM4Vuln [38], integrate knowledge retrieval systems with LLMs, dynamically fetching relevant information to augment code analysis. While RAG reduces the need for retraining large models, it introduces higher runtime latency due to dynamic retrieval operations.

Finally, with respect to the datasets available, Table 3 highlights the range of datasets employed in vulnerability detection, distinguishing between contract-level, function-level, and snippet-level datasets.

Table 3. Overview of the datasets employed in the literature.

Contract-based vulnerability detection presents significant challenges due to the complexity and modularity of smart contracts. Contracts often consist of multiple interdependent functions, external calls, and inherited behaviours, making it difficult to isolate specific vulnerabilities. Moreover, vulnerabilities can arise from interactions between functions rather than from a single function itself, requiring extensive context for accurate classification. This complexity makes contract-level datasets less suitable for fine-tuning LLMs for binary classification. To overcome this, we opted for function-level datasets, as they allow for a more granular approach to vulnerability detection. By focusing on individual functions, we reduce noise introduced by unrelated contract logic and ensure that models learn vulnerability patterns directly from function implementations.

While the TrustLLM dataset already provides function-level vulnerability classification, there remains a critical need for additional datasets to improve model generalization and robustness.

As summarized in Table 2, prior work has already explored the use of PEFT for smart contract vulnerability detection. Specifically, Boi et al. [35] applied QLoRA to fine-tune a Llama-2 7B model, achieving an accuracy of % in identifying vulnerabilities, demonstrating that LLMs can match the performance of traditional detection tools. Similarly, Yang et al. [36] fine-tuned Llama2 13B and CodeLlama 13B models on a labeled function dataset, reaching an accuracy of 34%. These studies reflect a growing interest in PEFT within this domain. However, our work differs in both scope and methodology: we introduce a novel evaluation dataset (EVuLLM), systematically benchmark multiple PEFT-compatible models, and combine fine-tuning with ensemble prompt engineering and RAG strategies. This comprehensive evaluation reveals performance gains not only from fine-tuning but also from advanced prompt and inference techniques, highlighting our contributions beyond PEFT alone.

While existing approaches to smart contract vulnerability detection employ static and dynamic analysis, and more recently LLMs, they often face limitations in scalability, transparency, and accessibility. Many LLM-based methods depend on large proprietary models or require full fine-tuning, which entails significant financial and computational costs, posing barriers for researchers and developers in resource-constrained settings. Our approach addresses these challenges by demonstrating that lightweight, open-source LLMs fine-tuned using PEFT can deliver state-of-the-art accuracy, even outperforming larger proprietary models, while significantly reducing resource demands.

Unlike prior studies that frequently rely on narrow or proprietary datasets, we introduce EVuLLM, a publicly available benchmark that consolidates and extends existing resources to support more comprehensive evaluation. Furthermore, our study explores RAG-based methods for smart contract analysis, showing that retrieval-enhanced inference can serve as an effective alternative to fine-tuning when computational resources or labeled data are limited. Together, these contributions promote a more accessible, reproducible, and privacy-preserving path for deploying AI-powered security tools within blockchain ecosystems.

4. Methodology

This section describes the methodology used in this study, focusing on the techniques and tools applied to address smart contract vulnerability detection. It begins with an overview of the datasets used, including their structure and preparation. First, TrustLLM, an existing dataset, is described. Following that, our own EVuLLM extended dataset is introduced. Next, the fine-tuning process for LLMs is detailed, covering the tools, model selection, and parameter configurations. Finally, it outlines our RAG approach, including its architecture, components, and implementation to enhance the model’s performance.

Fine-tuning and RAG represent two fundamentally different approaches: the former embeds task knowledge into the model parameters through training, while the latter injects task-relevant information at inference without retraining. We include RAG in our experiments not as a direct competitor to fine-tuning, but as a practical alternative for environments where training is infeasible or where flexibility and external knowledge integration are prioritized. Both approaches are evaluated on the same dataset to ensure a fair and transparent comparison of their effectiveness.

4.1. TrustLLM Dataset

The TrustLLM dataset, originally introduced in Ma et al. [34], is designed for evaluating smart contract security through classification and justification tasks. It consists of three splits: training, validation, and test sets, comprising 1734 positive and negative samples derived from Code4rena’s smart contract auditing reports [43].

The dataset supports two primary tasks: (1) classification, which determines whether a given Solidity code snippet is safe or vulnerable, and (2) justification, which provides an explanatory rationale for the classification. Each dataset entry includes an ID, an input prompt guiding the model’s analysis, and a completion representing the model’s generated response.

For the classification task, five prompt variations are utilized to enhance linguistic diversity, mitigate bias, and improve robustness. These variations rephrase instructions while maintaining the core objective of the security assessment. Similarly, the justification task employs five distinct prompt templates to refine the model’s ability to provide structured, context-aware explanations. The validation set consists of 567 code snippets, split across both tasks.

The test set, comprising 709 Solidity code snippets (343 safe, 366 vulnerable), is structured in JSON format. Each entry contains meta-information (contract and function names, Solidity code, and function call relationships), input prompts for the justification task, a ground truth label indicating security status, and a target reason explaining the classification. The prompts are designed to incorporate varying levels of contextual information, including function call relationships, further strengthening the dataset’s applicability to real-world smart contract auditing.

4.2. EVuLLM Dataset

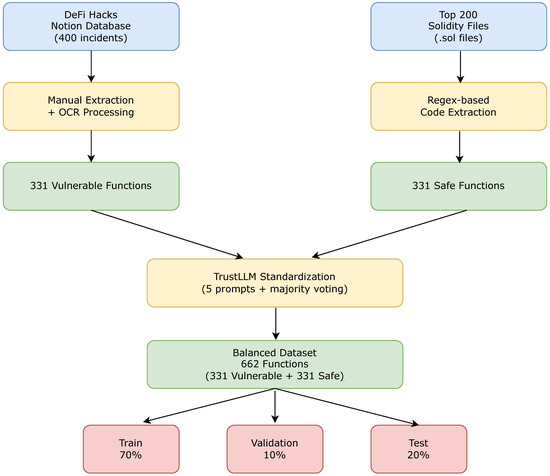

While TrustLLM remains a fundamental benchmark, evaluating models on a single dataset may not sufficiently assess their generalizability. To enhance the robustness of results and provide an additional benchmark dataset, we curated a complementary function-level dataset. Given that our prompts require function-level inputs rather than entire contracts, we utilised the DeFiHacks dataset from SunSec [44] and the Top200 dataset from GPTScan [31], as no other function-level dataset was available. This contributes to a more comprehensive and reliable evaluation of smart contract vulnerability detection systems, ultimately enhancing their real-world applicability.

The DeFiHacks dataset documents 400 Decentralized Finance (DeFi) hack incidents in a structured table. The table offers an in-depth technical analysis of each exploit, detailing how vulnerabilities were leveraged, including attack transaction links and analysis discussions from security researchers on forums and social media. Several of the 400 incidents lacked explicit code snippets, yielding a final dataset of 331 entries.

To balance the DeFiHacks dataset with safe examples, we added functions from the GPTScan Top200 dataset. This dataset includes 303 open-source contract projects spanning six prominent Ethereum-compatible blockchains. Given that these contracts have undergone extensive audits and enjoy broad usage, they are regarded as largely free from critical vulnerabilities, serving as a reliable benchmark for comparison.

We extracted function snippets from these contracts using regular expressions to find contract definitions and function code. Contracts were located using the “contract” keyword, while functions were extracted using patterns that capture their complete multi-line code. The extracted contract names were used as labels to ensure that each function was linked to the correct contract. Function names and complete code snippets were also saved. The processed data were then stored in JSONL format.
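The extraction step can be sketched as follows. This is a simplified illustration rather than the released processing code: the regular expressions, field names, and sample contract are assumptions, and the sketch uses brace counting (not a pure regex) to find the end of each multi-line function body. It does not handle braces inside comments or string literals.

```python
import json
import re

# Hypothetical patterns: a contract header and a function header up to its "{".
CONTRACT_RE = re.compile(r"\bcontract\s+(\w+)[^{]*\{")
FUNCTION_RE = re.compile(r"\bfunction\s+(\w+)\s*\([^)]*\)[^{;]*\{")


def _block_end(source, open_idx):
    """Return the index just past the brace that closes source[open_idx]."""
    depth = 0
    for i in range(open_idx, len(source)):
        if source[i] == "{":
            depth += 1
        elif source[i] == "}":
            depth -= 1
            if depth == 0:
                return i + 1
    return len(source)  # unbalanced input: fall back to the end of the text


def extract_functions(source):
    """Collect one record per function, labeled with its enclosing contract."""
    records = []
    for contract in CONTRACT_RE.finditer(source):
        body = source[contract.end():_block_end(source, contract.end() - 1)]
        for func in FUNCTION_RE.finditer(body):
            code = body[func.start():_block_end(body, func.end() - 1)]
            records.append(
                {"contract": contract.group(1), "function": func.group(1), "code": code}
            )
    return records


def to_jsonl(records):
    """Serialize records as JSON Lines, one object per line."""
    return "\n".join(json.dumps(r) for r in records)


SAMPLE = """
contract Vault is Ownable {
    mapping(address => uint256) balances;

    function deposit() public payable {
        balances[msg.sender] += msg.value;
    }

    function withdraw(uint256 amount) external {
        if (balances[msg.sender] >= amount) {
            balances[msg.sender] -= amount;
        }
    }
}
"""

if __name__ == "__main__":
    print(to_jsonl(extract_functions(SAMPLE)))
```

Excluding `;` before the opening brace skips body-less declarations such as interface functions, so only functions with code are kept.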

A total of functions were extracted from the Solidity source files in the GPTScan-Top200 dataset. To ensure balance, a subset of 331 functions was randomly sampled to complement the vulnerable examples in the DeFi Hacks dataset.

To align with TrustLLM’s methodology, we reformatted the extracted code snippets to match its structure and combined them into a unified dataset. The resulting EVuLLM dataset contains 662 entries, evenly split between safe and vulnerable function snippets. We constructed this extended dataset by merging function snippets from the GPTScan-Top200 and DeFi Hacks datasets. Both sources were stored in JSONL format and processed using a custom function to correct formatting issues. After loading, we combined the datasets into a single collection for analysis. To maintain logical organization, entries were grouped based on the first six characters of their unique IDs and kept consistent during dataset splitting. We then shuffled the collection to introduce randomness before partitioning it. The dataset was divided into training (70%), validation (10%), and test (20%) sets. Finally, we stored the training and validation sets in JSONL format and the test set in JSON format.
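One way to read the grouping and splitting procedure is sketched below. It assumes that "kept consistent" means all entries sharing the same six-character ID prefix land in the same split, and that shuffling is applied at the group level; the function name, the `id` field, and the fixed seed are illustrative assumptions rather than details of the released code.

```python
import random
from collections import defaultdict


def grouped_split(entries, ratios=(0.7, 0.1, 0.2), prefix_len=6, seed=0):
    """Split entries into train/validation/test, keeping ID-prefix groups intact."""
    groups = defaultdict(list)
    for entry in entries:
        groups[entry["id"][:prefix_len]].append(entry)

    # Shuffle group keys (not individual entries) so groups are never divided.
    keys = sorted(groups)
    random.Random(seed).shuffle(keys)

    total = len(entries)
    train_cap = ratios[0] * total
    val_cap = (ratios[0] + ratios[1]) * total

    train, val, test = [], [], []
    seen = 0
    for key in keys:
        if seen < train_cap:
            bucket = train
        elif seen < val_cap:
            bucket = val
        else:
            bucket = test
        bucket.extend(groups[key])
        seen += len(groups[key])
    return train, val, test


if __name__ == "__main__":
    # Hypothetical IDs: 50 groups of two entries each.
    data = [{"id": f"{g:06d}-{j}"} for g in range(50) for j in range(2)]
    train, val, test = grouped_split(data)
    print(len(train), len(val), len(test))
```

Because whole groups are assigned at once, the realized proportions only approximate 70/10/20 when group sizes are uneven.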

Figure 1 shows the processing pipeline described here.

Figure 1. Processing pipeline for the EVuLLM dataset.

The complete dataset as well as all relevant processing code is publicly available under a Creative Commons license (https://github.com/Datalab-AUTH/EVuLLM-dataset, accessed on 11 August 2025).

We used TrustLLM primarily because it is a well-established benchmark dataset introduced in prior literature, allowing for meaningful comparison with existing vulnerability detection models. Its structured format, real-world provenance from Code4rena audits, and support for both classification and justification tasks make it ideal for fine-tuning and evaluation. In contrast, EVuLLM was introduced in this work as a complementary dataset to enhance robustness and test generalization. It was constructed from real-world smart contract data (DeFiHacks and Top200) and adapted for function-level classification, which aligns better with the input granularity of our models. Using both datasets enables a broader and more reliable assessment: TrustLLM offers continuity with prior work, while EVuLLM adds diversity and context breadth that help validate our findings across multiple real-world settings.

4.3. Fine-Tuning LLMs for Vulnerability Detection

Building on the preceding discussion of the potential of LLMs to automate vulnerability detection, and on our review of state-of-the-art LLM-based methods, this section outlines the tools and approaches used to fine-tune LLMs for vulnerability detection in smart contracts.

We used the Unsloth library [45] for fine-tuning. It applies optimization techniques that reduce memory usage and training time, and it integrates with the Hugging Face ecosystem. For baseline comparisons, we used the BitsAndBytes library [46], which provides low-bit quantization for memory-efficient model loading.

The HuggingFace Transformers [47] and Datasets [48] libraries were used for model configuration and dataset management. The PEFT library [49] supports parameter-efficient approaches such as LoRA [18] and QLoRA [19], allowing for memory-efficient training.

All experiments were conducted on the Aristotelis HPC cluster at AUTh, specifically on the Ampere partition. This partition has eight NVIDIA A100 GPUs with 40 GB of HBM2 memory each, supported by AMD EPYC 7742 processors and 1 TB of RAM per node. One GPU was used for all experiments.

4.3.1. Model Selection

The selection of models for this study involved a systematic evaluation of various pre-trained LLMs. The goal was to identify models that strike an optimal balance between scalability and performance accuracy while meeting the specific requirements of smart contract vulnerability detection. Furthermore, compatibility with the Unsloth library was a crucial consideration in the model selection process.

The final selection consisted of two distinct categories: code-specialized models and general-purpose models. This classification was designed to enable a comprehensive evaluation of domain-specific capabilities and broader adaptability. The selected models, along with their key characteristics, including the number of parameters, pre-quantization status, and instruction-tuned status, are presented in Table 4.

Table 4.

Selected models for fine-tuning.

Model suitability is heavily influenced by dataset size [55]. For large datasets with over 1000 samples, base models are generally preferred, as they enable extensive fine-tuning to capture complex patterns and contextual dependencies. For medium-sized datasets (ranging from 300 to 1000 samples), both base and instruction-tuned models are suitable, with the choice depending on the prioritization of domain-specific customization or broader generalization. In contrast, for smaller datasets with fewer than 300 samples, instruction-tuned models are recommended, as they retain pre-trained capabilities while requiring minimal fine-tuning, thereby reducing the risk of overfitting and preserving task-specific performance.

While these recommendations provide general guidance, they may not be universally applicable, and empirical experimentation is advised where feasible. In our case, the models listed in Table 4 demonstrated superior performance for the given task.

The proprietary GPT-4o model was also included to provide a high anchor for the performance of the open models: given its considerably larger size, it was expected to outperform all of them before fine-tuning.

4.3.2. Fine-Tuning Methodology

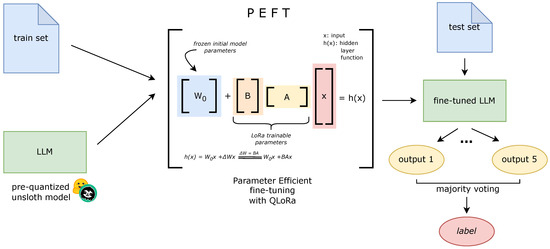

This study employed a Parameter-Efficient Fine-Tuning (PEFT) approach, using LoRA and its quantized variant, QLoRA, to adapt LLMs to downstream tasks with minimal additional computational overhead. Fine-tuning began by loading both the model and the tokenizer with Unsloth’s FastLanguageModel module, which provides functionality similar to the AutoModel interface of the Transformers library. Models were retrieved in a pre-quantized format directly from Unsloth’s Hugging Face repository. FastLanguageModel also supports Rotary Position Embeddings (RoPE), enabling long-context processing.

The training and validation datasets were supplied in JSONL format and preprocessed to match the model’s input requirements. A formatting function concatenated each sample’s “prompt” and “completion” fields and appended the End-of-Sequence (EOS) token, obtained from the model’s tokenizer, to mark the end of the sequence. The dataset was then shuffled and mapped into the tokenization-ready format.

This formatting guaranteed that each example contained both the input and the target text, which is essential for supervised fine-tuning, and preserved correct sequence alignment.
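The formatting step can be sketched as follows. The `prompt` and `completion` field names come from the text; the output key `text`, the placeholder EOS string, and the sample content are assumptions for illustration. In practice the EOS token would be read from the loaded tokenizer rather than hard-coded.

```python
# Placeholder EOS string (assumption); normally taken from the tokenizer's eos_token.
EOS_TOKEN = "</s>"


def format_example(sample, eos_token=EOS_TOKEN):
    """Concatenate prompt and completion, then terminate the sequence with EOS."""
    return {"text": sample["prompt"] + sample["completion"] + eos_token}


# Hypothetical sample in the prompt/completion layout.
samples = [
    {
        "prompt": "Is the following function vulnerable?\nfunction f() public {}\nAnswer: ",
        "completion": "safe",
    },
]
formatted = [format_example(s) for s in samples]
print(formatted[0]["text"])
```

Appending the EOS token teaches the model where a completion ends, which helps keep generated answers from running on after the label.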

The LoRA configuration specified the transformer submodules to be fine-tuned and introduced trainable parameters into those components. Parameters such as rank, alpha scaling, and dropout were carefully selected to balance model expressiveness with computational and memory efficiency. Additionally, training settings such as batch size and gradient accumulation steps were configured to accommodate hardware constraints while preserving training stability.

A high-level overview of the fine-tuning architecture is presented in Figure 2. The diagram illustrates how a pre-quantised model, sourced from Hugging Face, is fine-tuned using LoRA while the base model weights remain frozen. LoRA adapters are injected into specific transformer modules to enable task-specific learning, so the model is adapted efficiently with only a small addition to its memory footprint.
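For reference, the low-rank update behind this setup can be written in the standard LoRA notation [18]. The symbols $W_0$ (frozen weight matrix), $A$ and $B$ (trainable adapter matrices), $r$ (rank), $\alpha$ (scaling factor), and the dimensions $d$ and $k$ are the usual ones from the LoRA formulation, not values taken from this study's configuration.

```latex
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
```

Only $A$ and $B$ are updated during training, so each adapted matrix contributes $r(d + k)$ trainable parameters instead of $dk$. In QLoRA, $W_0$ is additionally stored in 4-bit precision while the adapters remain in higher precision.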

Figure 2.

Overview of the fine-tuning architecture using QLoRA.

The hyperparameters used for fine-tuning with the TrustLLM and EVuLLM datasets are shown in Table 5. While we did not perform an exhaustive hyperparameter search due to computational constraints, we based our choices on a combination of empirical testing, prior findings from similar PEFT-based LLM studies and recommendations from the Unsloth library and Hugging Face documentation for 4-bit fine-tuning with QLoRA.

Table 5.

Hyperparameter values used for fine-tuning.

In particular, we experimented with a small number of configurations and selected the ones that offered the best trade-off between performance and training stability on a validation subset of the TrustLLM and EVuLLM datasets. While not exhaustive, our approach prioritizes practical reproducibility and reflects real-world constraints in deploying efficient fine-tuning pipelines for smart contract analysis.

Once the training parameters were configured, training was started. After completion, the model was uploaded to the Hugging Face Hub, and all relevant training logs and evaluation metrics were stored to support downstream analysis and visualization.

For evaluation, a distinct set of five prompts, different from those used during training, was used to test how well the fine-tuned model generalizes. Each test prompt elicited a generated response from the model, and the final prediction was obtained by majority voting over the five responses, which makes the result robust to variations in prompt wording.
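A minimal sketch of the majority-voting step is shown below. It assumes each generated response is reduced to a label with a simple regular expression. The pattern, the tie-breaking fallback, and the example responses are hypothetical and do not reproduce the study's exact extraction rules. Note that such a naive pattern would also match negated phrases such as "not vulnerable".

```python
import re
from collections import Counter

# Hypothetical extraction pattern: first standalone "safe" or "vulnerable".
LABEL_PATTERN = re.compile(r"\b(vulnerable|safe)\b", re.IGNORECASE)


def extract_label(response):
    """Return the first label found in a response, or None if there is none."""
    match = LABEL_PATTERN.search(response)
    return match.group(1).lower() if match else None


def majority_vote(responses, fallback="vulnerable"):
    """Aggregate per-prompt predictions into a single label."""
    votes = Counter(label for label in map(extract_label, responses) if label)
    if not votes:
        return fallback
    ranked = votes.most_common()
    # A tie can occur when some responses contain no label; use the fallback then.
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return fallback
    return ranked[0][0]


# One response per evaluation prompt (five prompts, as in the text).
responses = [
    "The function is vulnerable to reentrancy.",
    "safe",
    "Label: vulnerable",
    "This looks safe to me.",
    "Vulnerable",
]
print(majority_vote(responses))
```

With five prompts and two classes, a tie is impossible as long as every response yields a label; the fallback only matters when extraction fails for some responses.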

4.4. Implementation of a RAG Framework for Vulnerability Detection Using Ollama

As previously mentioned, interest in RAG frameworks has been growing because they can add external knowledge, making outputs more accurate and relevant to the context.

The RAG framework used in this study includes several components, which we explain in detail in the following sections.

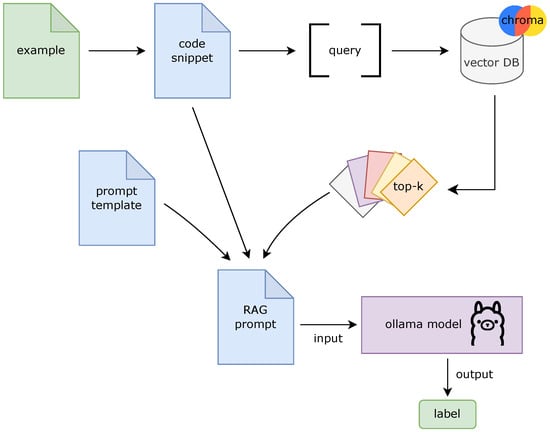

Architecture Design for the RAG Framework

The system started by converting input queries into dense vector representations using a pre-trained embedding model. An overview of the complete architecture is presented in Figure 3.

Figure 3.

RAG architecture outline.

ChromaDB, an open-source vector database [56], formed the core of the retrieval system. During setup, ChromaDB was filled with processed documents, each converted into a vector space using the same embedding model. Before adding them to ChromaDB, documents went through a processing pipeline designed to improve retrieval quality. Each document contained two parts: a code snippet and its matching label, taken directly from the training data. Since the dataset had five prompt versions for each entry, we chose only the representative code snippet (function) to keep things consistent. Processing also included breaking text into tokens and shortening or dividing long snippets to fit token limits. These documents were then converted to vectors using the same model used for input queries, ensuring they were represented in the same way.

Retrieval works in the same manner as few-shot learning. When receiving a test input, the system extracts the code snippet, converts it to a vector, and uses it to search for similar snippets in the vector database. The system finds the top-k most similar examples based on cosine similarity, providing contextual knowledge to the generative model. These examples aim to provide the benefits of fine-tuning without needing to retrain the model.

The retrieval component utilizes approximate nearest neighbor (ANN) search to quickly locate relevant training examples. The number of retrieved examples (k) can be adjusted. Retrieved entries comprise code-label pairs that assist the model in making classifications.
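The retrieval logic can be illustrated with a small sketch. For clarity it uses exact brute-force cosine similarity over an in-memory list, not the approximate nearest neighbor index of a vector database, and the three-dimensional embeddings are toy values. All names (`store`, `top_k`, the document fields) are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vec, store, k=3):
    """Return the k stored code-label documents most similar to the query."""
    ranked = sorted(
        store,
        key=lambda doc: cosine_similarity(query_vec, doc["embedding"]),
        reverse=True,
    )
    return ranked[:k]


# Toy store: each document holds a snippet, its label, and a made-up embedding.
store = [
    {"code": "function a() public {}", "label": "safe", "embedding": [1.0, 0.0, 0.0]},
    {"code": "function b() public {}", "label": "vulnerable", "embedding": [0.9, 0.1, 0.0]},
    {"code": "function c() public {}", "label": "safe", "embedding": [0.0, 1.0, 0.0]},
]
neighbours = top_k([1.0, 0.05, 0.0], store, k=2)
print([doc["label"] for doc in neighbours])
```

An ANN index trades a small loss in exactness for much faster search on large collections; on a dataset of a few hundred snippets, the exact search above would return essentially the same neighbours.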

The prompt used by the RAG framework comprises three components:

- Instructions: Task description and classification guidance.

- Input Code Snippet: The Solidity code under analysis.

- Response Placeholder: Marks where the model generates its output.

The input code is embedded and used to query the vector database. Retrieved examples, combined with the input and prompt template, form the complete prompt that is fed to the language model, which subsequently classifies the code.
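The prompt assembly described above might look like the following sketch. The instruction wording, the section markers ("Example", "Input", "Label"), and the function name are assumptions for illustration; the actual template used in the study is not reproduced here.

```python
# Hypothetical instruction text; the study's exact wording is not shown in this section.
INSTRUCTIONS = (
    "Classify the Solidity function below as 'safe' or 'vulnerable'. "
    "Labeled examples are provided for reference."
)


def build_prompt(code, examples):
    """Combine instructions, retrieved code-label pairs, and the input snippet."""
    parts = [INSTRUCTIONS, ""]
    for i, example in enumerate(examples, start=1):
        parts += [f"Example {i}:", example["code"], f"Label: {example['label']}", ""]
    # The trailing "Label:" is the response placeholder the model completes.
    parts += ["Input:", code, "Label:"]
    return "\n".join(parts)


retrieved = [
    {"code": "function a() public {}", "label": "safe"},
    {"code": "function b() public payable {}", "label": "vulnerable"},
]
prompt = build_prompt("function withdraw() public {}", retrieved)
print(prompt)
```

Placing the retrieved pairs before the input mirrors a few-shot layout: the model sees labeled examples in the same format it is asked to complete.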

Ollama [57] was employed in this study for its user-friendly interface and support for a diverse set of LLMs, including models optimized for general-purpose tasks, embeddings, and vision. Within the RAG framework, three models, CodeGemma (8B), Granite Code (8B), and Gemma 2 (9B), were evaluated for their ability to classify code snippets as “safe” or “vulnerable.” Model configurations were standardized through predefined parameter settings to ensure consistency across evaluations. An overview of the selected models is presented in Table 6. Notably, the CodeGemma model used in our RAG experiments differed from the one used in our fine-tuning experiments: the RAG experiments employed the CodeGemma-8B model from the Ollama library, while the fine-tuning experiments used the smaller CodeGemma-7B variant provided by the Unsloth library via the Hugging Face repository. These models differ not only in parameter count but also in quantization method and intended usage context.

Table 6.

Models employed for the RAG framework.

The proprietary GPT-4o model served as a high anchor in this case and was expected to outperform all open models without retrieval.

ChromaDB [56] was utilized as the vector database for indexing and retrieving training instances (hereafter “documents”). The dataset, consisting of code-label pairs, was embedded into dense vector representations using a pre-trained embedding model and subsequently stored in ChromaDB for similarity-based retrieval.

LangChain [61] was adopted to orchestrate the RAG pipeline, offering a modular framework for integrating LLMs and vector databases. Within this setup, LangChain handled the end-to-end interaction between input queries, ChromaDB, and Ollama models.

The process commenced with embedding the input query, followed by the retrieval of semantically similar documents from ChromaDB. The retrieved context was appended to the query and passed to the selected LLM for label generation.

5. Results and Discussion

This section presents and analyzes the results of our proposed detection methods, evaluating their effectiveness through standard classification metrics to assess the performance impact of the RAG approach and fine-tuning strategies.
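For reference, the standard classification metrics can be computed from predictions as in the sketch below. It assumes that "vulnerable" is treated as the positive class, which is a common convention but is not stated explicitly in this section. The labels in the example are made up.

```python
def classification_metrics(y_true, y_pred, positive="vulnerable"):
    """Compute accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up labels: 2 true positives, 1 false negative, 1 false positive, 2 true negatives.
y_true = ["vulnerable", "vulnerable", "vulnerable", "safe", "safe", "safe"]
y_pred = ["vulnerable", "vulnerable", "safe", "vulnerable", "safe", "safe"]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Under this convention, low recall means missed vulnerabilities, while low precision means safe functions flagged as vulnerable.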

5.1. Baseline Performance

To establish a baseline, models were initially evaluated without fine-tuning or retrieval. This included two scenarios: (i) models in the RAG setup with retrieval disabled and (ii) models prior to fine-tuning. Additionally, a proprietary GPT-4o model was included as a high-performance anchor. The results provide insight into the individual contributions of retrieval and fine-tuning to overall performance.

5.1.1. RAG Models Without Retrieval

Models were evaluated using isolated prompts, excluding the retrieved examples. Table 7 reports results on TrustLLM and EVuLLM datasets.

Table 7.

Baseline evaluation results for RAG (no retrieval).

The proprietary GPT-4o model outperformed all open models, which was expected given its significantly larger size compared to the other models.

Among the open models, Gemma 2 consistently outperformed the others on both datasets. In contrast, CodeGemma and Granite Code exhibited lower recall, indicating a tendency to miss vulnerabilities, which is particularly concerning in security-focused contexts. A likely explanation is that these models were trained for code generation rather than security analysis, making them less sensitive to potential security flaws in smart contracts.

5.1.2. Models Used for Fine-Tuning (Pre-Fine-Tuning)

Baseline results for the models used in fine-tuning, prior to adaptation, are presented in Table 8, alongside the GPT-4o model, which serves as a high-performance anchor. Predictions were extracted using regex-based filtering, and final labels were determined through majority voting.

Table 8.

Baseline evaluation results for models used in fine-tuning.

As anticipated, the proprietary GPT-4o model significantly outperformed all open models in most cases. Notably, most open models demonstrated high recall but low precision, with Mistral v0.3 achieving the best overall balance. This suggests that the models were overly cautious, frequently misclassifying safe contracts as vulnerable in an effort to avoid missing actual threats. The results underscore the necessity of fine-tuning to mitigate false alarms and enhance decision reliability.

5.2. Retrieval-Augmented Generation Results

Results for RAG evaluation are shown in Table 9. Gemma 2 demonstrated the best overall performance on the EVuLLM dataset, while CodeGemma achieved the highest recall across both datasets. Granite Code performed slightly worse, particularly in terms of recall. The substantial improvement in results compared to the baseline can be attributed to RAG’s ability to provide relevant examples during inference. By retrieving similar vulnerability patterns from the training data, the models acquire contextual knowledge that enables them to make more accurate classifications without requiring costly retraining. This approach effectively emulates few-shot learning by granting models access to relevant examples at prediction time.

Table 9.

RAG evaluation results.

In comparison to the baseline evaluation results presented in Table 7, it is evident that metrics have significantly improved across the board for open models. When benchmarked against the proprietary GPT-4o model, the results demonstrate that RAG techniques are highly effective in specializing models with a substantially smaller number of parameters, enabling them to reach performance levels comparable to, and sometimes even surpassing, those of large proprietary models. These findings confirm that RAG enhances performance beyond that of pre-trained models, with gains contingent upon the model’s ability to effectively utilize the retrieved context.

5.3. Fine-Tuning Experiments

This section evaluates the performance of our fine-tuned models using PEFT techniques, specifically LoRA and QLoRA, on the TrustLLM and EVuLLM datasets.

5.3.1. TrustLLM Dataset

Table 10 presents the evaluation results of our models, which were fine-tuned using PEFT. Each model is based on a corresponding base implementation, as detailed in Table 4, which includes the original source and reference for reproducibility.

Table 10.

Fine-tuning results on the TrustLLM dataset.

Compared with the baseline results in Table 8, performance increases markedly on all metrics.

The fine-tuning results demonstrate that parameter-efficient fine-tuning (PEFT) techniques can significantly enhance model performance for smart contract vulnerability detection. Notably, most models achieved accuracy exceeding 90%, representing a substantial improvement from their baseline performance of around 50%. The fine-tuned version of the CodeGemma model outperformed all other models across all metrics, including the proprietary GPT-4o model. This success can be attributed to the models learning to recognize vulnerability patterns specific to smart contracts during the fine-tuning process. An exception was Qwen 2.5 Coder, which attained low scores; however, this model was trained for only five epochs due to hardware limitations, likely insufficient for effective learning of vulnerability patterns. Interestingly, general-purpose models performed comparably to code-specialized ones, suggesting that the fine-tuning process effectively adapts models to the domain, regardless of their initial specialization.

The fine-tuned models that were produced using the TrustLLM dataset are publicly available under an Apache 2.0 license:

- TrustLLM Qwen 2.5 Coder: https://huggingface.co/emandana/TrustLLM_Qwen2_5_Coder_7B_Instruct_4b (accessed on 11 August 2025),

- TrustLLM CodeGemma: https://huggingface.co/emandana/TrustLLM_codegemma_7b_4b (accessed on 11 August 2025),

- TrustLLM Mistral v0.3: https://huggingface.co/emandana/TrustLLM_mistral_7b_Instruct_v03_4b (accessed on 11 August 2025),

- TrustLLM Mistral Nemo: https://huggingface.co/emandana/TrustLLM_Mistral_Nemo_Instruct_2407_4b (accessed on 11 August 2025),

- TrustLLM LLaMA 3.1: https://huggingface.co/emandana/TrustLLM_Meta_Llama_3.1_8B_Instruct_4b (accessed on 11 August 2025).

5.3.2. EVuLLM Dataset

This section evaluates the generalization capabilities of our models using the extended EVuLLM dataset. The performance of our fine-tuned models is presented in Table 11, which builds upon the original models listed in Table 4.

Table 11.

Fine-tuning results on the EVuLLM dataset.

The results on the EVuLLM dataset demonstrate that fine-tuned models can achieve outstanding performance, with most models attaining accuracy exceeding 89% and CodeGemma reaching nearly 95% across all metrics. This strong performance indicates that the models have successfully learned generalizable vulnerability patterns that are effective across different types of smart contract code. CodeGemma’s superior performance can be attributed to its specialized code understanding, combined with the comprehensive LoRA configuration that adapted a larger number of model components. The consistently high performance across models confirms that parameter-efficient fine-tuning (PEFT) using 4-bit quantization and LoRA/QLoRA yields state-of-the-art results with reduced computational requirements.

The fine-tuned models that were produced using the EVuLLM dataset are publicly available under an Apache 2.0 license:

- EVuLLM Qwen 2.5 Coder: https://huggingface.co/emandana/EVuLLM_Qwen2_5_Coder_7B_Instruct_4b_d200 (accessed on 11 August 2025),

- EVuLLM Mistral v0.3: https://huggingface.co/emandana/EVuLLM_mistral_7b_Instruct_v03_4b_d200 (accessed on 11 August 2025),

- EVuLLM Mistral Nemo: https://huggingface.co/emandana/EVuLLM_Mistral_Nemo_Instruct_2407_4b_d200 (accessed on 11 August 2025),

- EVuLLM LLaMA 3.1: https://huggingface.co/emandana/EVuLLM_Meta_Llama_3.1_8B_Instruct_4b_d200 (accessed on 11 August 2025),

- EVuLLM CodeGemma: https://huggingface.co/emandana/EVuLLM_codegemma_7b_4b_d200 (accessed on 11 August 2025).

5.4. Classification Examples

To gain a deeper understanding of our models’ limitations and inform future enhancements, we present a selection of representative examples of correctly and incorrectly classified functions. These cases demonstrate how the models handle various smart contract patterns and highlight potential sources of error. By examining both successful predictions and misclassifications, we aim to provide insight into the practical behavior of the models and their limitations, particularly in scenarios involving complex security contexts or ambiguous patterns.

Figure 4 shows a function that the CodeGemma model, using the RAG approach, misclassified as safe, despite being labeled as vulnerable in the dataset.

Figure 4.

The preSign function.

The vulnerability arises from the ability to arbitrarily pre-sign orders, which can lead to replay attacks or unauthorized trades. Despite the presence of the onlyOwner modifier, this function introduces a serious security risk due to its unrestricted pre-authorization logic. The model appears to have relied heavily on the presence of the onlyOwner modifier as a safety indicator, leading to a superficial generalization that “owner-only functions are safe.” Without contextually similar examples in the retrieved set to challenge this assumption, the model failed to capture the deeper security issue. This illustrates a key limitation of the RAG-based approach: its effectiveness depends on the relevance of retrieved context. If retrieved samples do not reflect the specific vulnerability class, the model may default to pattern-based heuristics rather than deeper semantic understanding.

Interestingly, while the CodeGemma model misclassified the preSign function as safe, the Granite Code model correctly identified it as vulnerable. This discrepancy underscores how model architecture and training data can significantly affect the ability to recognize subtle or context-dependent vulnerabilities, such as those involving unrestricted authorization mechanisms.

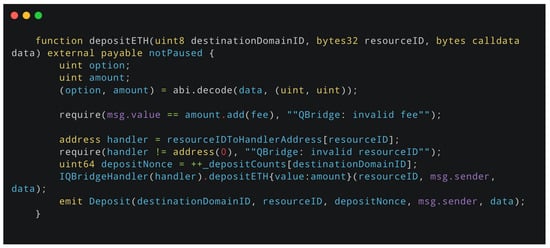

In contrast to the earlier misclassification example, the depositETH function from the QBridge contract (Figure 5) was correctly classified as safe by all models. This function includes a set of robust validation checks, such as verifying the message value against the expected amount plus fee, confirming the existence of a valid handler for the given resource ID, and emitting appropriate events. The model’s correct prediction in this case can be attributed to its ability to recognize standard, well-structured patterns of secure fund handling and authorization logic. Moreover, similar examples present in the retrieval context likely contributed to the model’s accurate decision, reinforcing the importance of representative training data and relevant contextual examples during prediction.

Figure 5.

The depositETH function.

5.5. Cost and Performance Analysis

In this section, we provide a cost analysis for commercially available large language models, focusing on the publicly disclosed pricing of ChatGPT. We also present detailed performance metrics for our fine-tuned and RAG models. Although the infrastructure used for training our models was provided at no direct cost, we report runtime, memory usage, and efficiency measurements to illustrate the practical benefits and resource requirements of our approach. This comparison highlights the potential cost efficiency and performance trade-offs associated with using open-source lightweight models versus commercial alternatives.

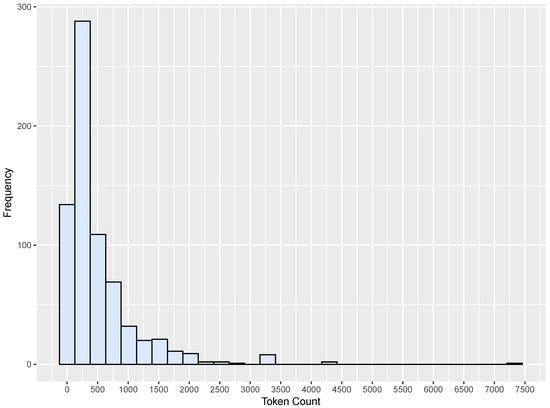

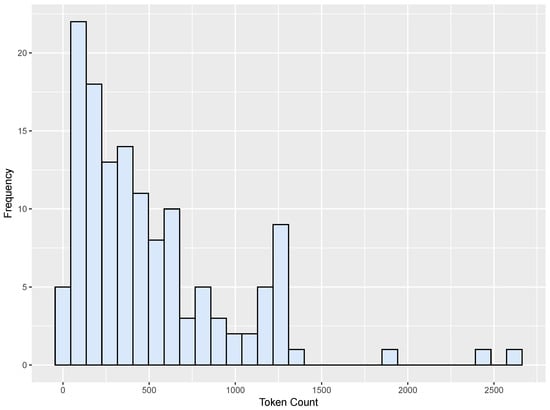

The TrustLLM and EVuLLM datasets included functions with an average size of about 500 tokens. The token distribution for each respective dataset is shown in Figure 6 and Figure 7.

Figure 6.

Token distribution for the TrustLLM dataset.

Figure 7.

Token distribution for the EVuLLM dataset.

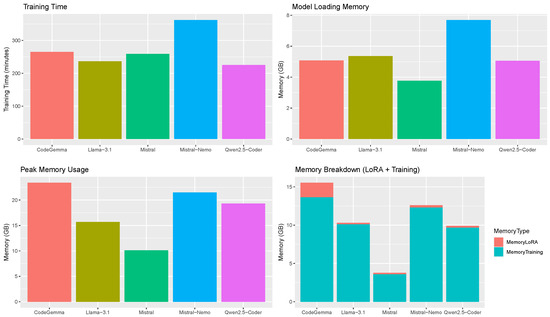

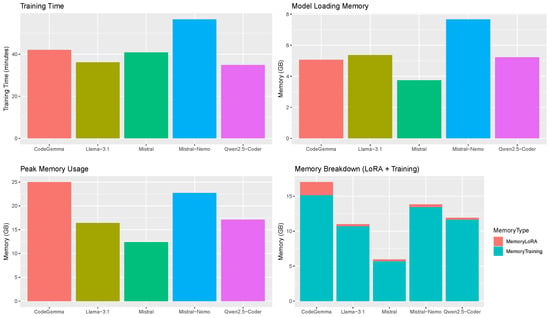

We assessed the computational requirements for fine-tuning the local models used in our study. For the EVuLLM dataset, training times ranged from 34.9 to 56.6 min per model, with peak memory usage between 12.4 GB and 25.0 GB, and model loading memory ranging from 3.74 GB to 7.67 GB. In contrast, training on the TrustLLM dataset required substantially more time, ranging from 225.1 to 362.0 min, with peak memory usage between 10.1 GB and 23.4 GB, and loading memory between 3.76 GB and 7.69 GB. Detailed per-model results are presented in Figure 8 and Figure 9. The memory breakdown metrics shown in these figures list how much memory was occupied by LoRA adapter parameters and how much for training-related computations like gradients and optimizer states.

Figure 8.

Performance metrics for fine-tuning models using the TrustLLM dataset.

Figure 9.

Performance metrics for fine-tuning models using the EVuLLM dataset.

Using similar hardware to the one we used for fine-tuning our models on a standard cloud platform (e.g., an AWS g6e.xlarge instance) currently incurs a cost of approximately USD per hour. Therefore, fine-tuning one model with the EVuLLM dataset would cost approximately USD 2 at most, while fine-tuning one model with the TrustLLM dataset would cost approximately USD 12 at most.

The proprietary ChatGPT-4o model used in this study for inference has a pricing structure of USD 5 per 1 million input tokens and USD 20 per 1 million output tokens. Given that each API request typically generates approximately 3000 output tokens, we estimate the cost of evaluating the vulnerability of a single function to be around USD on average. The complete TrustLLM dataset includes a total of input tokens and costs approximately USD 45 to run the complete dataset through the ChatGPT-4o API. Similarly, the EVuLLM dataset includes a total of tokens and costs approximately USD to run through the ChatGPT-4o API.
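The per-request arithmetic can be made explicit with a short sketch. The prices and the figure of roughly 3000 output tokens come from the text; the 500 input tokens assume that a request contains little more than one function of average size, which ignores any instruction text in the prompt. The result is plain arithmetic on these figures and is not the value reported by the study.

```python
def api_cost_usd(input_tokens, output_tokens, usd_per_m_input=5.0, usd_per_m_output=20.0):
    """Cost of one API request under per-million-token pricing."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


# Assumed request size: ~500 input tokens and ~3000 output tokens.
per_function = api_cost_usd(input_tokens=500, output_tokens=3000)
print(f"{per_function:.4f} USD per function")
```

At these prices, output tokens account for almost all of the cost, so limiting the length of the generated response would reduce the cost of a request far more than shortening the input.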

The inference performance of our fine-tuned models reached approximately 1000 tokens per second when running on the same A100 GPUs used during the fine-tuning process. As a result, processing an average function with 500 input tokens and generating around 3000 output tokens takes only a few seconds on this hardware. The entire TrustLLM dataset takes about 36 min to run, with the associated cost being approximately USD 1 on a standard cloud platform. The entire EVuLLM dataset completes in less than 7 min, with an associated cost of approximately USD 0.12 on a standard cloud platform.
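The per-function latency follows directly from the throughput figure, as the sketch below shows. It assumes, as a simplification, that the stated throughput applies equally to input and output tokens; the function name is illustrative.

```python
def inference_seconds(input_tokens, output_tokens, tokens_per_second):
    """Approximate processing time for one request at a fixed token throughput."""
    return (input_tokens + output_tokens) / tokens_per_second


# Average function from the text: ~500 input tokens, ~3000 output tokens, ~1000 tokens/s.
seconds = inference_seconds(500, 3000, 1000)
print(f"{seconds:.1f} s per function")
```

In practice, prompt processing is usually faster than token-by-token generation, so this estimate is dominated by the output length.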

Although our fine-tuned models were trained on high-end GPUs, they remain deployable on commodity hardware. On a mid-range laptop equipped with an Intel i7-1165G7 CPU (2.80 GHz) and no dedicated GPU, inference speed drops to approximately 5–10 tokens per second. In this configuration, processing a single function of average size may take several minutes. In contrast, on a modern desktop system with a high-performance GPU, such as the NVIDIA RTX 3090 Ti, inference speed exceeds 50 tokens per second, enabling a significantly more responsive user experience. On such a system, processing an average function typically takes only a few seconds. The power consumption of this desktop configuration is approximately 450 W, with the operating cost depending on local electricity rates.

In contrast, RAG approaches do not require fine-tuning and can operate entirely on standard hardware. However, they tend to be slower in practice: in our experiments, RAG-based methods were consistently about four times slower than our fine-tuned models. RAG therefore represents a trade-off: it eliminates the need for specialized fine-tuning hardware, but at the cost of significantly longer inference times.

Estimating the cost of inference on local hardware is inherently challenging due to the many factors involved. Unlike cloud-based APIs with transparent, usage-based pricing, local inference costs depend on variables such as hardware acquisition and depreciation, electricity consumption, cooling, system maintenance, and potential opportunity costs associated with hardware availability. These costs also vary widely depending on the scale of deployment and the specific hardware used. As such, while performance metrics like tokens per second provide useful insight, translating them into a precise monetary cost is non-trivial and highly context-dependent.

These results demonstrate that our approach not only achieves high performance but also offers a highly cost-efficient alternative to large proprietary models, particularly for privacy-sensitive or resource-constrained environments.

6. Conclusions and Future Work

This section summarizes the key contributions and findings of our study on LLM-based smart contract vulnerability detection and outlines potential directions for future research and improvement.

Our study demonstrates the effectiveness of integrating LLMs into smart contract vulnerability detection. We present publicly available, high-performance models that achieve up to 94.78% accuracy on Solidity code, showing that smaller, open-source LLMs can match or exceed the performance of significantly larger proprietary models in both accuracy and reliability.

We released the comprehensive EVuLLM dataset designed to support the evaluation of prompt engineering and majority-voting strategies. This resource is intended to improve classification robustness and reduce bias.

We also developed a suite of fine-tuned models trained on the TrustLLM dataset and our extended EVuLLM dataset. These models confirmed that domain-specific fine-tuning leads to significant performance gains. Our best-performing model, based on CodeGemma, achieved 94.78% accuracy on EVuLLM and 92.52% on TrustLLM, surpassing GPT-4o despite using far fewer parameters. These results highlight the strength of parameter-efficient fine-tuning techniques such as LoRA and QLoRA, which deliver high task performance with lower memory and compute requirements.

Our findings emphasize the practical advantages of deploying lightweight LLMs locally. By using open-source, resource-efficient models, our approach enables use cases where privacy, transparency, and infrastructure independence are critical, such as in security-sensitive smart contract analysis. In contrast to proprietary services, these models offer full control and customization, making them well-suited for constrained or regulated environments.

We further explored retrieval-augmented generation (RAG) as a complementary technique. Although retrieval quality can vary, our best-performing RAG model, Gemma 2, achieved 79.1% accuracy and a 78.8% F1-score on EVuLLM. While RAG underperforms compared to fine-tuned models, it still outperforms earlier non-fine-tuned baselines and demonstrates meaningful potential for security-focused tasks with minimal training.

This work also identifies several limitations that guide future research. The current binary classification framework (safe vs. vulnerable) limits the granularity of insights, and the relatively small dataset size with coarse annotations constrains generalizability. Future efforts should aim to develop larger, more detailed datasets that include specific vulnerability types and their locations. Our function-level analysis also lacks inter-function and contract-level context, highlighting the need for models capable of capturing broader semantic relationships. Additionally, it is important to evaluate models on a continuously updated set of real-world contracts that were not used during training. As part of future work, we recommend curating and annotating datasets from newly deployed contracts to support ongoing benchmarking.

To address these limitations, future research should prioritize the construction of larger, better-labeled datasets that enable multi-class vulnerability detection and fine-grained localization. Incorporating full contract-level representations and static analysis features could further enhance model understanding. Improving RAG pipelines through more effective context filtering and semantic similarity retrieval may also boost reliability.

Beyond smart contract auditing, the methods described in this paper, particularly lightweight fine-tuning and hybrid LLM-retrieval techniques, can be applied to other security-sensitive code analysis tasks. These include detecting misconfigurations in infrastructure-as-code (e.g., Terraform) or identifying vulnerabilities in API definitions. The same methodology could extend to automated compliance checks and secure software development workflows where code correctness is critical.

From a methodological standpoint, smaller model variants, such as those with 3B or 1B parameters, remain underexplored and could provide further advantages in low-resource environments. Optimizing prompt efficiency and systematically tuning RAG parameters may also help close the performance gap between retrieval-based and fine-tuned approaches.

Finally, future work should explore multi-class vulnerability classification based on established taxonomies such as the Smart Contract Weakness Classification (SWC) registry and OWASP. This requires richer datasets with detailed annotations, as well as new model architectures capable of handling more complex output structures.
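To make the notion of a more complex output structure concrete, the sketch below shows one possible shape for a multi-class, location-aware prediction and how it reduces to the binary label used in this study. The class names `Finding` and `FunctionReport` and their fields are hypothetical; only the two SWC identifiers and their titles are taken from the SWC registry.

```python
from dataclasses import dataclass, field

# Two entries from the SWC registry; a full taxonomy would list many more.
SWC_TITLES = {
    "SWC-101": "Integer Overflow and Underflow",
    "SWC-107": "Reentrancy",
}


@dataclass(frozen=True)
class Finding:
    """One predicted weakness with its location (hypothetical schema)."""
    swc_id: str
    start_line: int
    end_line: int

    def __post_init__(self):
        # Reject labels outside the taxonomy and malformed line ranges.
        if self.swc_id not in SWC_TITLES:
            raise ValueError(f"unknown SWC id: {self.swc_id}")
        if not 1 <= self.start_line <= self.end_line:
            raise ValueError("invalid line range")


@dataclass
class FunctionReport:
    """Model output for a single function: zero or more findings."""
    function_name: str
    findings: list = field(default_factory=list)

    @property
    def is_vulnerable(self) -> bool:
        # The binary safe/vulnerable label is recoverable from the richer output.
        return bool(self.findings)


report = FunctionReport("withdraw", [Finding("SWC-107", 12, 14)])
print(report.is_vulnerable, [SWC_TITLES[f.swc_id] for f in report.findings])
```

Because the binary label is derived from the list of findings, a model trained on such a schema could still be compared against the binary results reported here.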

Overall, this study establishes a foundation for LLM-driven smart contract security. By leveraging lightweight, fine-tuned, and open-source models, it opens the path to accessible, efficient, and secure automated vulnerability detection in blockchain ecosystems.

Author Contributions

Conceptualization, G.V.; methodology, E.M.; software, E.M.; validation, E.M. and G.V.; investigation, E.M. and G.V.; resources, E.M. and G.V.; data curation, E.M.; writing—original draft preparation, E.M.; writing—review and editing, G.V. and A.V.; visualization, E.M.; supervision, G.V. and A.V.; project administration, A.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data available in a publicly accessible repository.

Acknowledgments

Results presented in this work have been produced using the Aristotle University of Thessaloniki (AUTh) High-Performance Computing Infrastructure and Resources. The authors gratefully acknowledge the support provided by the AUTh IT Centre throughout the course of this research.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CoT | Chain of Thought |
| DAO | Decentralized Autonomous Organization |
| DeFi | Decentralized Finance |
| HPC | High Performance Computing |
| LLM | Large Language Model |
| LoRA | Low-Rank Adaptation |
| PEFT | Parameter-Efficient Fine-Tuning |
| QLoRA | Quantized Low-Rank Adaptation |
| RAG | Retrieval-Augmented Generation |

References

- Nakamoto, S. Bitcoin: A Peer-to-Peer Electronic Cash System. 2008. Available online: https://assets.pubpub.org/d8wct41f/31611263538139.pdf (accessed on 11 August 2025).

- Kshetri, N. Blockchain’s roles in meeting key supply chain management objectives. Int. J. Inf. Manag. 2018, 39, 80–89.

- Mengelkamp, E.; Gärttner, J.; Rock, K.; Weinhardt, C.; Bretschneider, B. Designing microgrid energy markets: A case study: The Brooklyn Microgrid. Appl. Energy 2018, 210, 870–880.

- Azaria, A.; Ekblaw, A.; Vieira, T.; Lippman, A. MedRec: Using Blockchain for Medical Data Access and Permission Management. In Proceedings of the 2016 2nd International Conference on Open and Big Data (OBD), Vienna, Austria, 22–24 August 2016; pp. 25–30.

- Zheng, Z.; Xie, S.; Dai, H.N.; Chen, X.; Wang, H. An overview of blockchain technology: Architecture, consensus, and future trends. In Proceedings of the 2017 IEEE International Congress on Big Data (BigData Congress), Boston, MA, USA, 11–14 December 2017; pp. 557–564.

- Wood, G. Ethereum: A secure decentralised generalised transaction ledger. Ethereum Proj. Yellow Pap. 2014, 151, 1–32.

- Hewa, T.; Ylianttila, M.; Liyanage, M. Survey on Blockchain Based Smart Contracts: Applications, Opportunities and Challenges. J. Netw. Comput. Appl. 2021, 177, 102857.

- Zheng, Z.; Xie, S.; Dai, H.N.; Chen, W.; Chen, X.; Weng, J.; Imran, M. An Overview on Smart Contracts: Challenges, Advances and Platforms. Future Gener. Comput. Syst. 2020, 105, 475–491.

- Macrinici, D.; Cartofeanu, C.; Gao, S. Smart Contract Applications within Blockchain Technology: A Systematic Mapping Study. Telemat. Inform. 2018, 35, 2337–2354.

- Zhang, Z.; Zhang, B.; Xu, W.; Lin, Z. Demystifying Exploitable Bugs in Smart Contracts. In Proceedings of the 2023 IEEE/ACM 45th International Conference on Software Engineering (ICSE), Melbourne, Australia, 14–20 May 2023; pp. 615–627.

- Zhou, X.; Cao, S.; Sun, X.; Lo, D. Large Language Model for Vulnerability Detection and Repair: Literature Review and the Road Ahead. arXiv 2024, arXiv:2404.02525.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

- Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the Opportunities and Risks of Foundation Models. arXiv 2022, arXiv:2108.07258.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

- Howard, J.; Ruder, S. Universal Language Model Fine-tuning for Text Classification. arXiv 2018, arXiv:1801.06146.

- Kumar, A.B.V. Fine-Tuning LLM: Parameter Efficient Fine-Tuning (PEFT) —LoRA, QLoRA (Part 1). 2024. Available online: https://abvijaykumar.medium.com/fine-tuning-llm-parameter-efficient-fine-tuning-peft-lora-qlora-part-1-571a472612c4 (accessed on 27 September 2012).

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

- Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. QLoRA: Efficient Finetuning of Quantized LLMs. arXiv 2023, arXiv:2305.14314.

- Lewis, P.S.H.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. arXiv 2020, arXiv:2005.11401.

- Borgeaud, S.; Mensch, A.; Hoffmann, J.; Cai, T.; Rutherford, E.; Millican, K.; van den Driessche, G.; Lespiau, J.B.; Damoc, B.; Clark, A.; et al. Improving language models by retrieving from trillions of tokens. arXiv 2022, arXiv:2112.04426.

- Schär, F. Decentralized Finance: On Blockchain- and Smart Contract-Based Financial Markets. In Federal Reserve Bank of St. Louis Review, Second Quarter; Federal Reserve Bank of St. Louis: St. Louis, MO, USA, 2021; pp. 153–174.

- Gemini. Why Are Most dApps Built on Ethereum? 2023. Available online: https://www.gemini.com/cryptopedia/dapps-ethereum-decentralized-application (accessed on 4 September 2024).

- Brent, L.; Grech, N.; Lagouvardos, S.; Scholz, B.; Smaragdakis, Y. Ethainter: A Smart Contract Security Analyzer for Composite Vulnerabilities. In Proceedings of the 41st ACM SIGPLAN Conference on Programming Language Design and Implementation, New York, NY, USA, 15–20 June 2020; PLDI: Seoul, Republic of Korea, 2020; pp. 454–469.

- Liu, H.; Liu, C.; Zhao, W.; Jiang, Y.; Sun, J. S-gram: Towards Semantic-Aware Security Auditing for Ethereum Smart Contracts. In Proceedings of the 33rd ACM/IEEE International Conference on Automated Software Engineering, New York, NY, USA, 3–7 September 2018; ASE ’18. pp. 814–819.

- Bose, P.; Das, D.; Chen, Y.; Feng, Y.; Kruegel, C.; Vigna, G. SAILFISH: Vetting Smart Contract State-Inconsistency Bugs in Seconds. arXiv 2021, arXiv:2104.08638.

- Liao, Z.; Zheng, Z.; Chen, X.; Nan, Y. SmartDagger: A Bytecode-Based Static Analysis Approach for Detecting Cross-Contract Vulnerability. In Proceedings of the 31st ACM SIGSOFT International Symposium on Software Testing and Analysis, New York, NY, USA, 18–22 July 2022; ISSTA: Trondheim, Norway, 2022; pp. 752–764.

- So, S.; Hong, S.; Oh, H. SmarTest: Effectively Hunting Vulnerable Transaction Sequences in Smart Contracts through Language Model-Guided Symbolic Execution. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Vancouver, BC, Canada, 11–13 August 2021; pp. 1361–1378.

- Wang, W.; Song, J.; Xu, G.; Li, Y.; Wang, H.; Su, C. ContractWard: Automated Vulnerability Detection Models for Ethereum Smart Contracts. IEEE Trans. Netw. Sci. Eng. 2021, 8, 1133–1144.

- So, S.; Oh, H. SmartFix: Fixing Vulnerable Smart Contracts by Accelerating Generate-and-Verify Repair Using Statistical Models. In Proceedings of the 31st ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering, San Francisco, CA, USA, 3–9 December 2023; pp. 185–197.

- Sun, Y.; Wu, D.; Xue, Y.; Liu, H.; Wang, H.; Xu, Z.; Xie, X.; Liu, Y. GPTScan: Detecting Logic Vulnerabilities in Smart Contracts by Combining GPT with Program Analysis. In Proceedings of the IEEE/ACM 46th International Conference on Software Engineering, Lisbon, Portugal, 14–20 April 2024; pp. 1–13.

- Li, Z.; Dutta, S.; Naik, M. LLM-Assisted Static Analysis for Detecting Security Vulnerabilities. arXiv 2024, arXiv:2405.17238.

- Shou, C.; Liu, J.; Lu, D.; Sen, K. LLM4Fuzz: Guided Fuzzing of Smart Contracts with Large Language Models. arXiv 2024, arXiv:2401.11108.

- Ma, W.; Wu, D.; Sun, Y.; Wang, T.; Liu, S.; Zhang, J.; Xue, Y.; Liu, Y. Combining Fine-Tuning and LLM-based Agents for Intuitive Smart Contract Auditing with Justifications. arXiv 2024, arXiv:2403.16073.

- Boi, B.; Esposito, C.; Lee, S. Smart Contract Vulnerability Detection: The Role of Large Language Model (LLM). SIGAPP Appl. Comput. Rev. 2024, 24, 19–29.

- Yang, Z.; Man, G.; Yue, S. Automated Smart Contract Vulnerability Detection Using Fine-tuned Large Language Models. In Proceedings of the 2023 6th International Conference on Blockchain Technology and Applications, New York, NY, USA, 26–28 June 2024; ICBTA ’23. pp. 19–23.

- Soud, M.; Nuutinen, W.; Liebel, G. Soley: Identification and Automated Detection of Logic Vulnerabilities in Ethereum Smart Contracts Using Large Language Models. arXiv 2024, arXiv:2406.16244.

- Sun, Y.; Wu, D.; Xue, Y.; Liu, H.; Ma, W.; Zhang, L.; Shi, M.; Liu, Y. LLM4Vuln: A Unified Evaluation Framework for Decoupling and Enhancing LLMs’ Vulnerability Reasoning. arXiv 2024, arXiv:2401.16185.

- Boi, B.; Esposito, C.; Lee, S. VulnHunt-GPT: A Smart Contract Vulnerabilities Detector Based on OpenAI chatGPT. In Proceedings of the 39th ACM/SIGAPP Symposium on Applied Computing, New York, NY, USA, 8–12 April 2024; SAC ’24. pp. 1517–1524.

- Daneshvar, S.S.; Nong, Y.; Yang, X.; Wang, S.; Cai, H. Exploring RAG-based Vulnerability Augmentation with LLMs. arXiv 2024, arXiv:2408.04125.

- Yu, J. Retrieval Augmented Generation Integrated Large Language Models in Smart Contract Vulnerability Detection. arXiv 2024, arXiv:2407.14838.

- David, I.; Zhou, L.; Qin, K.; Song, D.; Cavallaro, L.; Gervais, A. Do You Still Need a Manual Smart Contract Audit? arXiv 2023, arXiv:2306.12338.

- Code4rena. 2024. Available online: https://code4rena.com (accessed on 5 January 2025).

- MetaTrustLabs. Github Repository–GPTScan Top200. 2024. Available online: https://github.com/MetaTrustLabs/GPTScan-Top200 (accessed on 23 November 2024).

- Unsloth AI. Unsloth: Fine-Tuning and Reinforcement Learning for LLMs. 2024. Available online: https://github.com/unslothai/unsloth (accessed on 25 May 2025).

- Dettmers, T. Bitsandbytes: 8-Bit Optimizers and Quantization Routines. 2022. Available online: https://github.com/TimDettmers/bitsandbytes (accessed on 25 May 2025).

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-Art Natural Language Processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; Liu, Q., Schlangen, D., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 38–45.

- Lhoest, Q.; Villanova del Moral, A.; Jernite, Y.; Thakur, A.; von Platen, P.; Patil, S.; Chaumond, J.; Drame, M.; Plu, J.; Tunstall, L.; et al. Datasets: A Community Library for Natural Language Processing. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online/Punta Cana, Dominican Republic, 7–11 November 2021; Adel, H., Shi, S., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 175–184.

- Mangrulkar, S.; Gugger, S.; Debut, L.; Belkada, Y.; Paul, S.; Bossan, B. PEFT: State-of-the-Art Parameter-Efficient Fine-Tuning Methods. 2022. Available online: https://github.com/huggingface/peft (accessed on 25 May 2025).

- Unsloth AI. Codegemma 7B Instruct 4bit. 2024. Available online: https://huggingface.co/unsloth/codegemma-7b-it-bnb-4bit (accessed on 5 January 2025).