Analysis of OpenCV Security Vulnerabilities in YOLO v10-Based IP Camera Image Processing Systems for Disaster Safety Management

Abstract

1. Introduction

2. Related Research

2.1. Research on Security Vulnerabilities in IP Cameras

2.2. Types of Attacks and Preventive Measures Against Video Surveillance Systems

2.3. Integrated Fire and Safety Tool Detection Algorithm Using YOLO Network

2.4. YOLOv5, YOLOv8, YOLOv10: Evolution and Comparative Analysis of Object Detectors for Real-Time Vision

3. Proposal

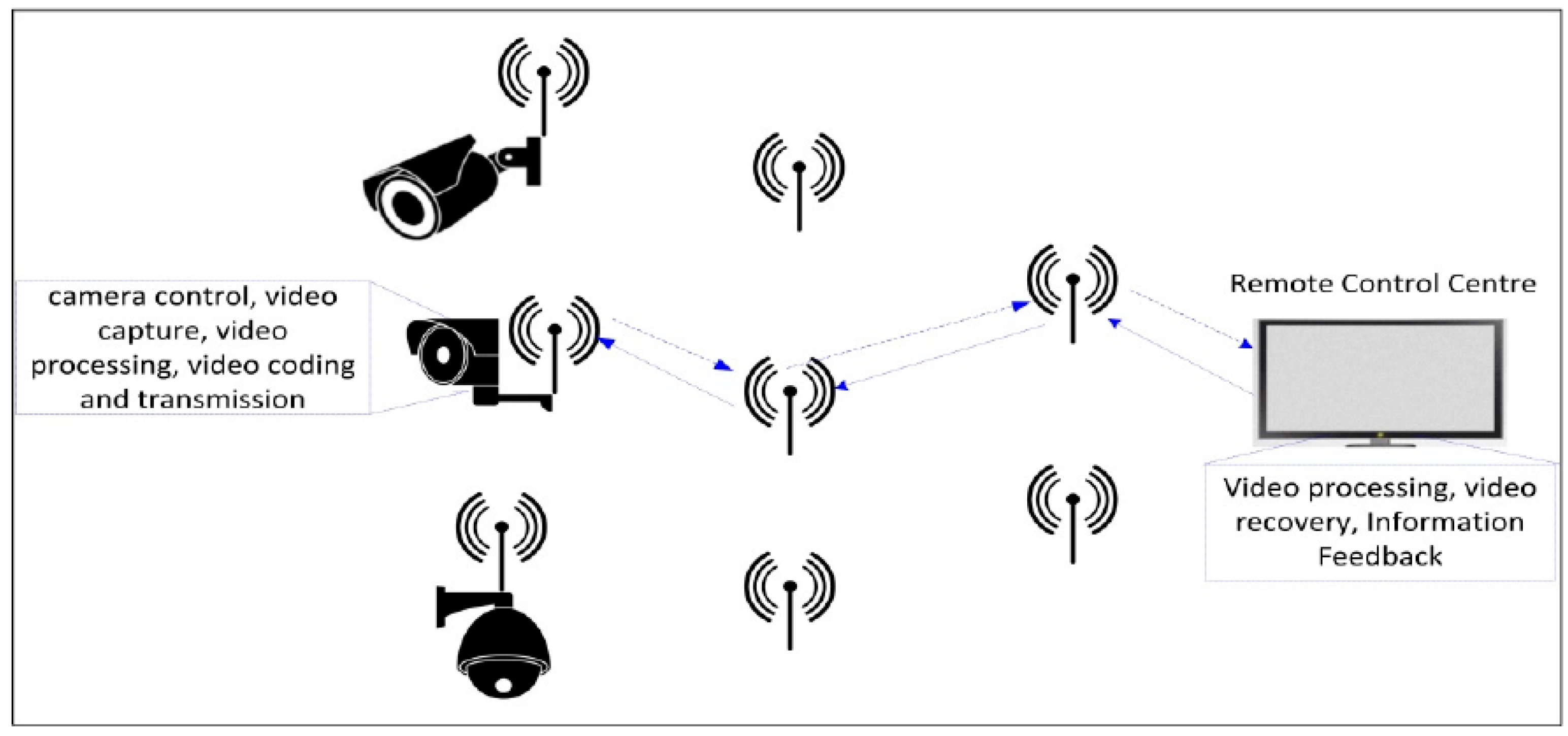

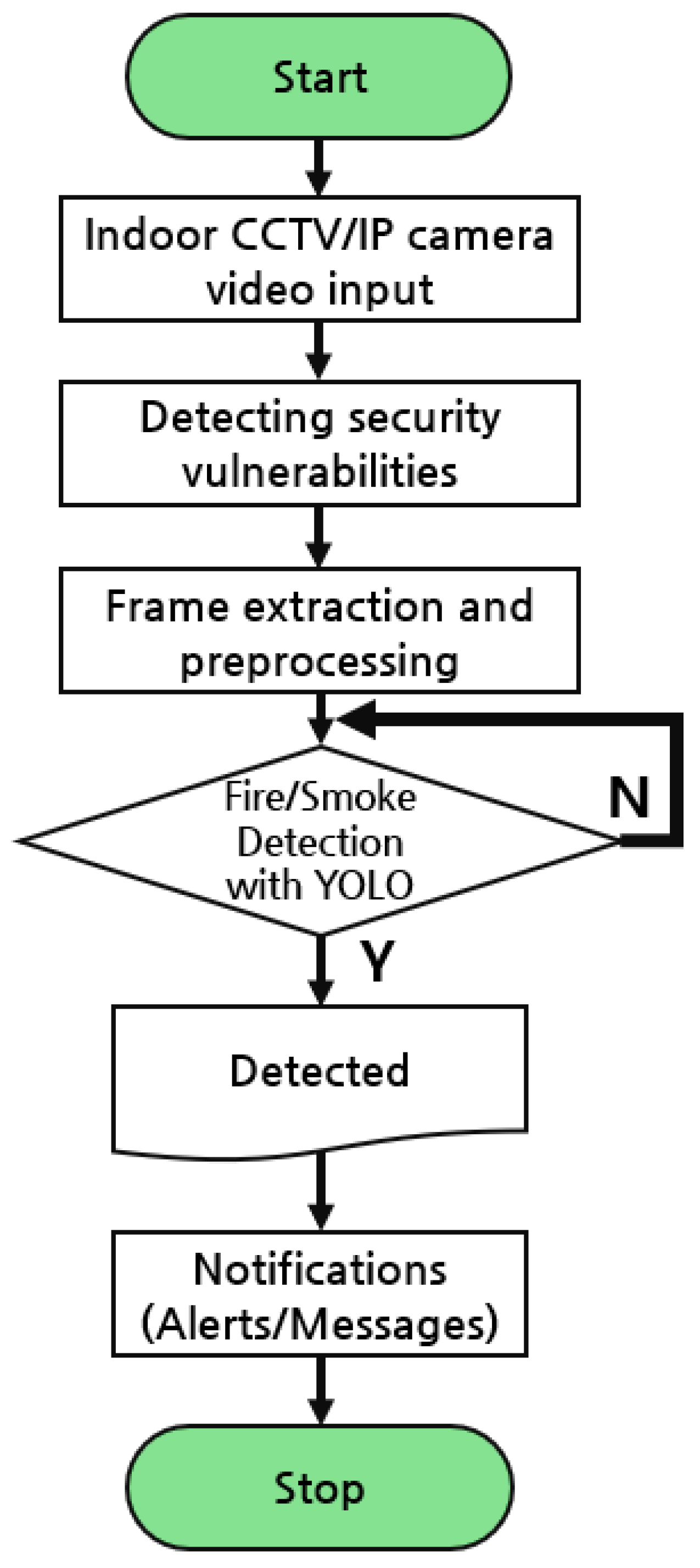

3.1. Disaster Safety System for Fire and Smoke Using YOLO v10

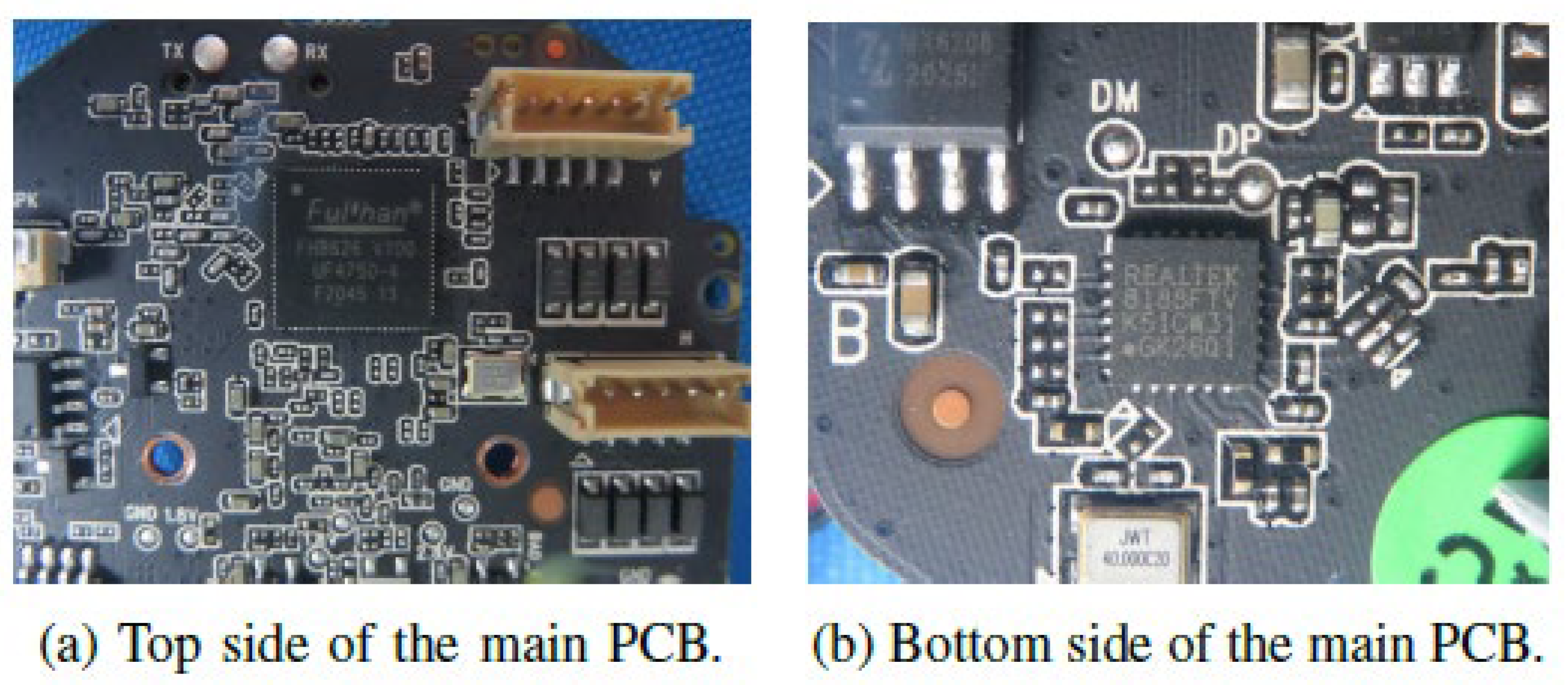

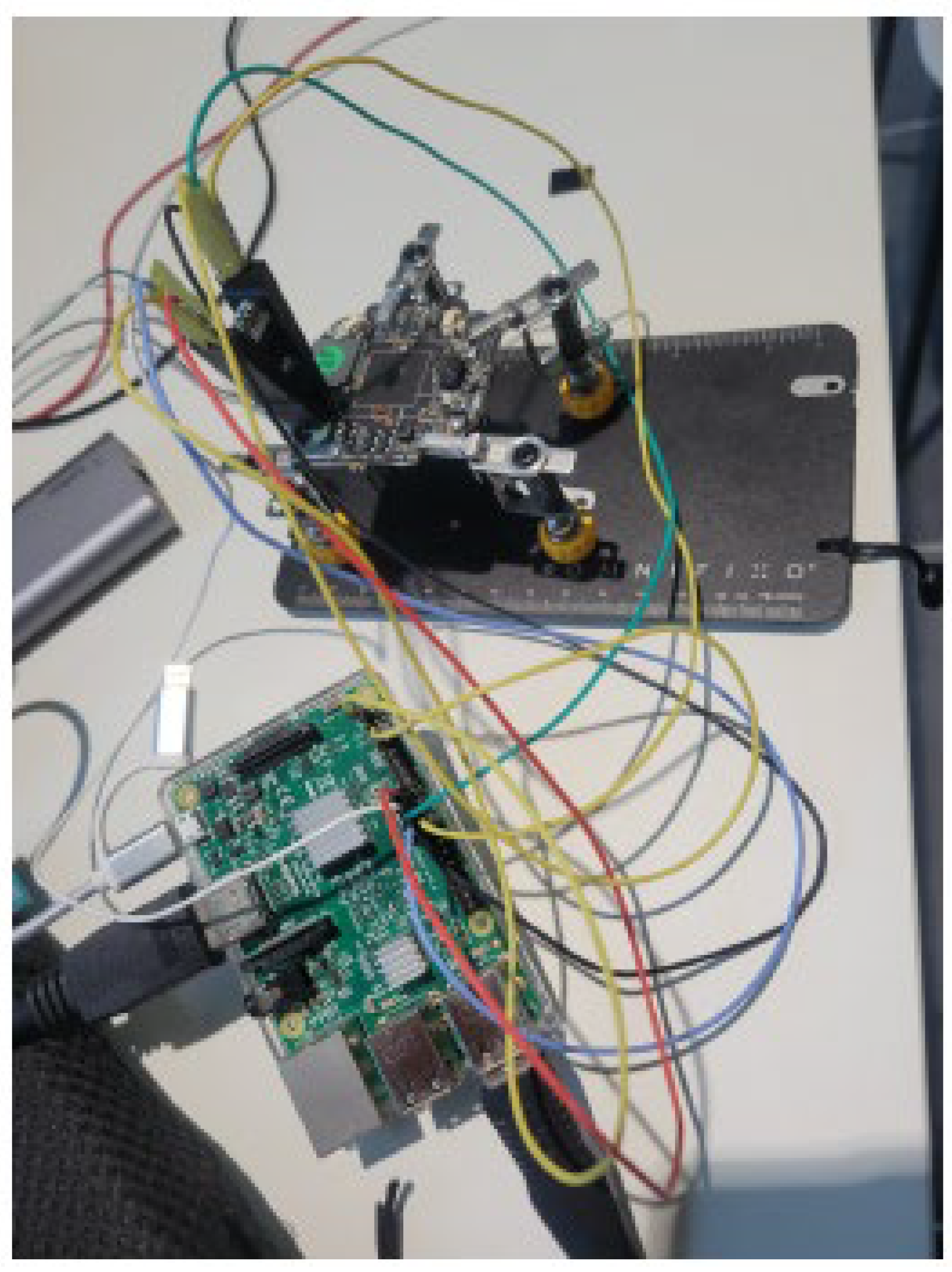

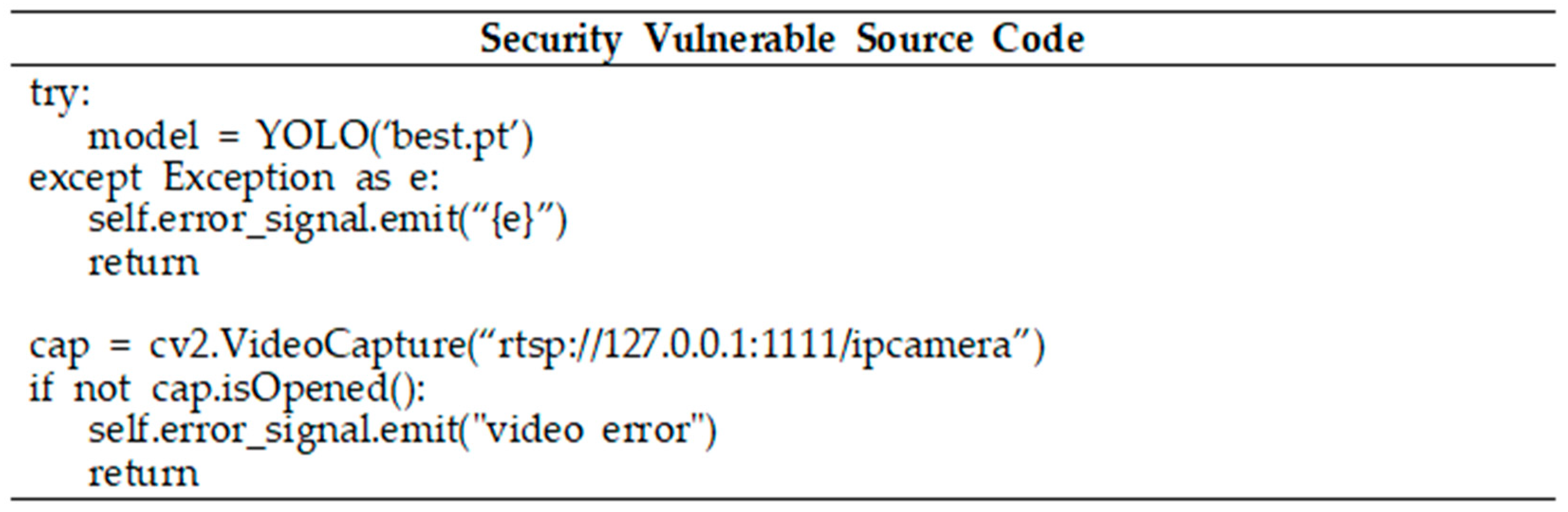

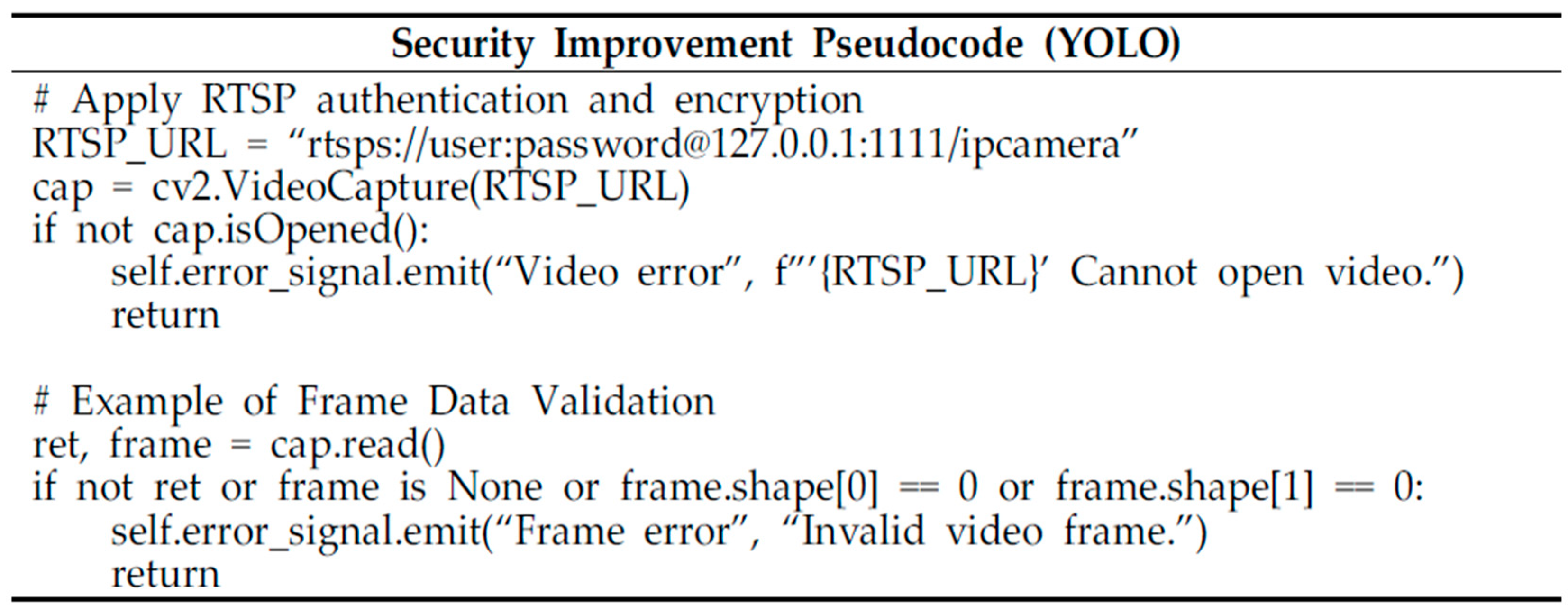

3.2. OpenCV Vulnerability Improvement for IP Camera

4. Experimental Results and Analysis

4.1. Experimental Environment

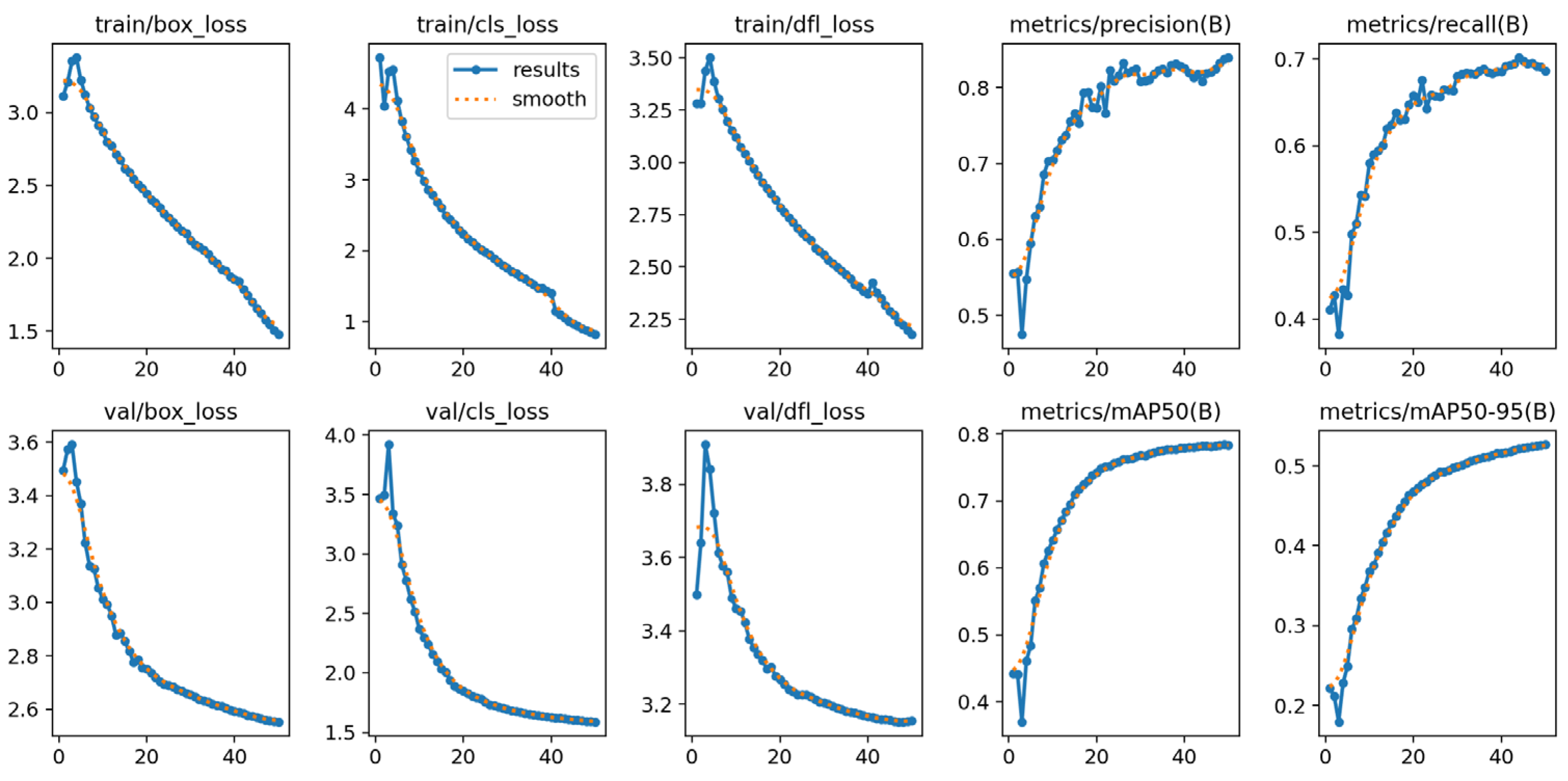

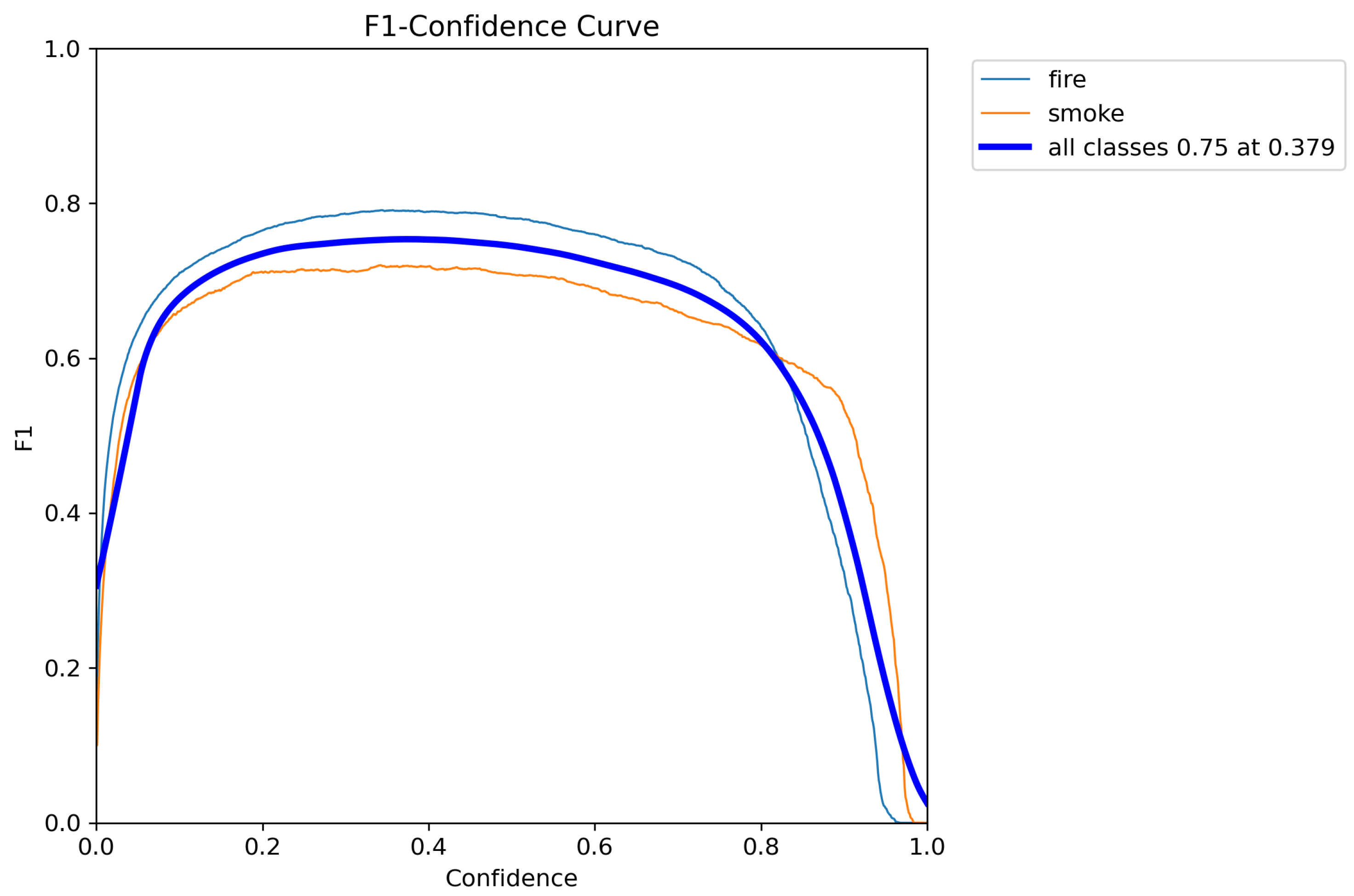

4.2. Results of Indoor Fire Detection Experiment Using YOLO v10

4.3. Results of Security Vulnerability Testing on OpenCV Code

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Irene, S.; Prakash, A.J.; Uthariaraj, V.R. Person search over security video surveillance systems using deep learning methods: A review. Image Vis. Comput. 2024, 143, 104930.

- Ganapathy, S.; Ajmera, D. An Intelligent Video Surveillance System for Detecting the Vehicles on Road Using Refined YOLOV4. Comput. Electr. Eng. 2023, 113, 109036.

- Sapkota, R.; Flores-Calero, M.; Qureshi, R.; Badgujar, C.; Nepal, U.; Poulose, A.; Zeno, P.; Vaddevolu, U.B.P.; Khan, S.; Shoman, M.; et al. YOLO advances to its genesis: A decadal and comprehensive review of the You Only Look Once (YOLO) series. Artif. Intell. Rev. 2025, 58, 274.

- Biondi, P.; Bognanni, S.; Bella, G. Vulnerability assessment and penetration testing on IP camera. In Proceedings of the 2021 8th International Conference on Internet of Things: Systems, Management and Security (IOTSMS), Gandia, Spain, 6–9 December 2021; pp. 1–8.

- Moon, J.; Bukhari, M.; Kim, C.; Nam, Y.; Maqsood, M.; Rho, S. Object detection under the lens of privacy: A critical survey of methods, challenges, and future directions. ICT Express 2024, 10, 1124–1144.

- Ahmad, H.M.; Rahimi, A. SH17: A dataset for human safety and personal protective equipment detection in manufacturing industry. J. Saf. Sci. Resil. 2025, 6, 175–185.

- Stabili, D.; Bocchi, T.; Valgimigli, F.; Marchetti, M. Finding (and exploiting) vulnerabilities on IP Cameras: The Tenda CP3 case study. Adv. Comput. Secur. 2024, 195–210.

- Bhardwaj, A.; Bharany, S.; Ibrahim, A.O.; Almogren, A.; Rehman, A.U.; Hamam, H. Unmasking vulnerabilities by a pioneering approach to securing smart IoT cameras through threat surface analysis and dynamic metrics. Egypt. Inform. J. 2024, 27, 100513.

- Vennam, P.; T.C., P.; B.M., T.; Kim, Y.G.; B.N., P.K. Attacks and Preventive Measures on Video Surveillance Systems: A Review. Appl. Sci. 2021, 11, 5571.

- Wang, X.; Cai, L.; Zhou, S.; Jin, Y.; Tang, L.; Zhao, Y. Fire Safety Detection Based on CAGSA-YOLO Network. Fire 2023, 6, 297.

- Li, X.; Liang, Y. Fire-RPG: An Urban Fire Detection Network Providing Warnings in Advance. Fire 2024, 7, 214.

- Hussain, M. Yolov5, yolov8 and yolov10: The go-to detectors for real-time vision. arXiv 2024, arXiv:2407.02988.

| Division | Detail |
|---|---|
| OS | Ubuntu Server 22.04 (Jammy) |
| Kernel | x86_64 Linux 5.15.0-1018-nvidia |
| CPU | Intel Core i7 12th generation 12700 (3.2 GHz) |
| RAM | 64 GB (DDR5-5600) |
| GPU | NVIDIA Tesla M40 24 GB (GDDR5) |
| Disk | SSD 1 TB + HDD 2 TB |
| Python | 3.11 |
| Framework | PyTorch 2.12 + CUDA 12.4 |
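When reproducing experiments on a comparable machine, it can help to record the local software and hardware stack and compare it with the rows of the table above. The following is a minimal sketch under stated assumptions, not the authors' tooling: the function name `collect_environment` and the dictionary keys are hypothetical, and PyTorch is treated as optional so the script still runs where it is not installed.

```python
import importlib.util
import platform
import sys


def collect_environment() -> dict:
    """Gather fields that roughly correspond to rows of the environment table."""
    env = {
        "os": platform.platform(),      # OS name and version string
        "kernel": platform.release(),   # kernel release, e.g. the "5.15.0-..." part
        "machine": platform.machine(),  # architecture, e.g. "x86_64"
        "python": f"{sys.version_info.major}.{sys.version_info.minor}",
        "torch": None,
        "cuda": None,
        "gpu": None,
    }
    # PyTorch is optional here (assumption): skip these fields if it is absent.
    if importlib.util.find_spec("torch") is not None:
        import torch

        env["torch"] = torch.__version__
        env["cuda"] = torch.version.cuda  # None for CPU-only builds
        if torch.cuda.is_available():
            env["gpu"] = torch.cuda.get_device_name(0)
    return env


if __name__ == "__main__":
    for key, value in collect_environment().items():
        print(f"{key:>8}: {value}")
```

Running the script prints one line per field. Fields left as `None` indicate that PyTorch or a CUDA-capable GPU was not detected on the local machine.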

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jung, D.-Y.; Kim, N.-H. Analysis of OpenCV Security Vulnerabilities in YOLO v10-Based IP Camera Image Processing Systems for Disaster Safety Management. Electronics 2025, 14, 3216. https://doi.org/10.3390/electronics14163216
