1. Introduction

With autonomous vehicles (AVs) becoming increasingly common, ensuring that they can safely handle a wide range of driving situations is more important than ever. To specify and test this capability, researchers use the concept of the Operational Design Domain (ODD). The definition of an ODD can vary depending on the perspective of the end user, system designer, or other stakeholders. It is extensible in nature, allowing new attributes or details to be added based on input from these roles [1].

A widely accepted definition describes an ODD as the set of operating conditions under which a given driving automation system or a specific feature of it is designed to function. These conditions may include environmental, geographical, and time-of-day restrictions, as well as the presence or absence of certain traffic or roadway characteristics [2]. In essence, the ODD defines the operational environment within which an AV is intended to operate reliably and safely [2].

Given the safety-critical nature of AVs, precise specification of the ODD is essential. As the level of autonomy increases, decision-making responsibility shifts increasingly from humans to machines, requiring AVs to manage a growing range of unexpected real-world scenarios. Consequently, if an AV operates outside its defined ODD, its safety can no longer be assured [3].

In simulation-based development and testing of AVs, the ODD plays a central role by delineating the specific conditions under which the system must perform as intended [4]. By specifying operational constraints, such as weather, traffic, and road conditions, developers can ensure that AV systems are tested and validated within clearly defined boundaries [2,5]. In this context, an ODD specification must satisfy attributes such as searchability, exchangeability, extensibility, machine readability, human readability (via constrained natural language), measurability, and verifiability [4]. Together, these attributes enable simulations to support systematic testing across key metrics, including performance, safety, and reliability.

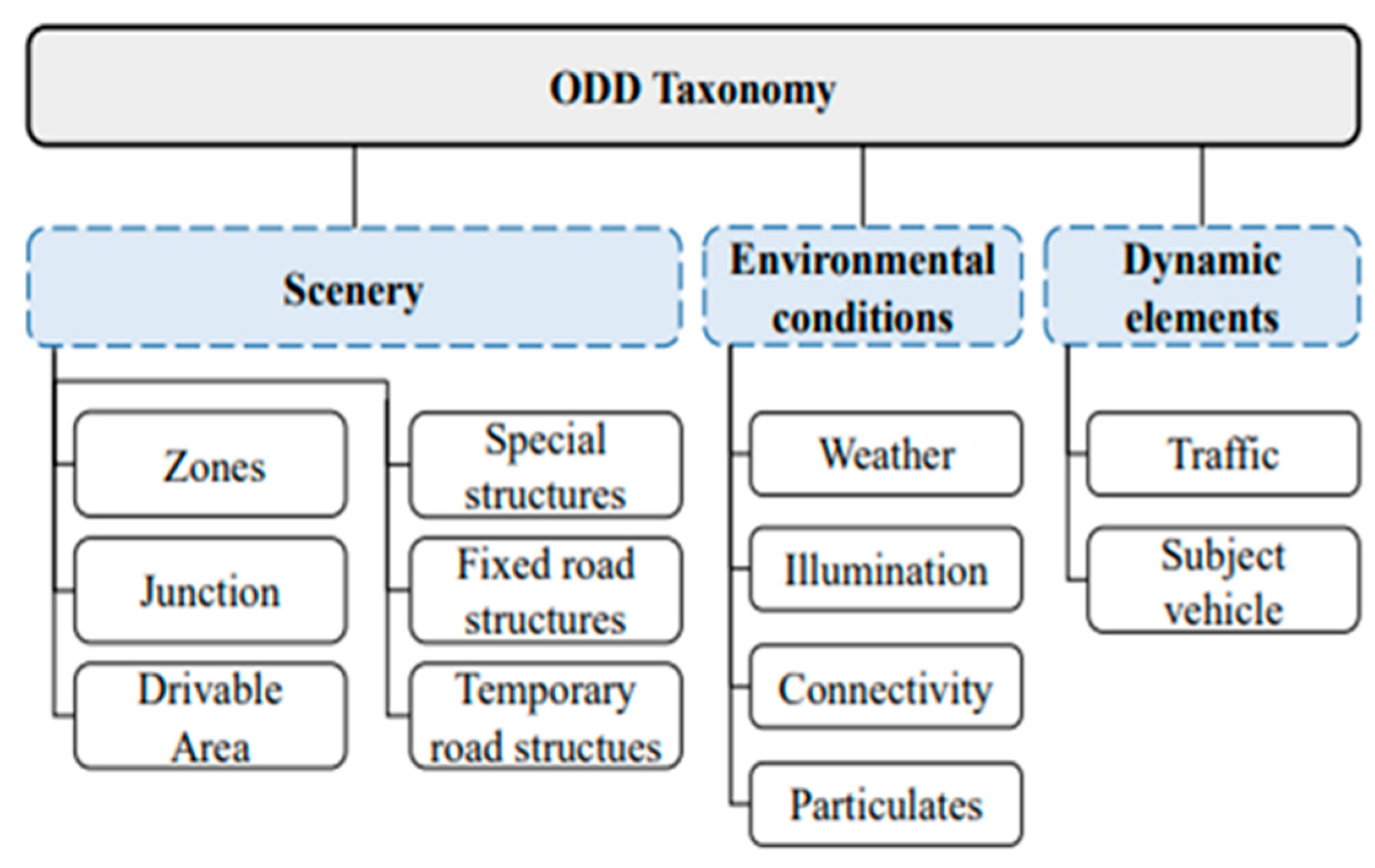

Figure 1 highlights the operational constraints used to design and test simulation scenarios that reflect real-world complexities for AVs.
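The attributes listed above (machine readability, measurability, verifiability) become concrete once an ODD is stored as data rather than prose. The following is a minimal sketch, assuming a hypothetical `OddSpec` structure with numeric limits and allowed categories; the attribute names and limits are illustrative and are not taken from PAS 1883 [1] or ASAM OpenODD [4]. It checks whether measured conditions fall inside the ODD.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class OddSpec:
    """Hypothetical machine-readable ODD: numeric ranges plus allowed categories."""

    numeric_limits: Dict[str, Tuple[float, float]] = field(default_factory=dict)
    allowed_values: Dict[str, Set[str]] = field(default_factory=dict)

    def violations(self, conditions: Dict[str, object]) -> List[str]:
        """Return human-readable reasons why `conditions` fall outside the ODD."""
        reasons = []
        for name, (low, high) in self.numeric_limits.items():
            value = conditions.get(name)
            if value is None:
                reasons.append(f"{name}: not measured")  # unmeasured means unverifiable
            elif not low <= value <= high:
                reasons.append(f"{name}={value} outside [{low}, {high}]")
        for name, allowed in self.allowed_values.items():
            value = conditions.get(name)
            if value not in allowed:
                reasons.append(f"{name}={value!r} not in {sorted(allowed)}")
        return reasons

    def contains(self, conditions: Dict[str, object]) -> bool:
        return not self.violations(conditions)


# Illustrative ODD for an urban feature (limits are made up for the example).
urban_odd = OddSpec(
    numeric_limits={"precipitation_mm_per_h": (0.0, 4.0), "speed_limit_kmh": (0.0, 50.0)},
    allowed_values={"road_type": {"urban_arterial", "urban_local"}, "time_of_day": {"day"}},
)

inside = {"precipitation_mm_per_h": 1.5, "speed_limit_kmh": 50,
          "road_type": "urban_local", "time_of_day": "day"}
outside = {"precipitation_mm_per_h": 12.0, "speed_limit_kmh": 50,
           "road_type": "highway", "time_of_day": "day"}
print(urban_odd.contains(inside))     # True
print(urban_odd.violations(outside))  # two reasons: rain rate and road type
```

Returning reasons rather than a bare boolean keeps the check human-readable, which also serves the human readability attribute.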

Converting ODDs into simulation scenarios for autonomous vehicles presents several challenges, primarily due to the complexity and variability of the real-world conditions that define an ODD [6]. The manual creation of such scenarios is often labor-intensive and time-consuming. Critical tasks, such as data labeling for AV simulations, are still predominantly carried out by engineers with minimal automation support [7].

Furthermore, as AV technology evolves, the scope of ODDs naturally expands. This growth necessitates continual updates to simulation scenarios to ensure they remain effective, scalable, and reflective of the intended operational environment. Most existing methods for generating simulation scenarios rely heavily on manual input or rigid rule-based systems. While these approaches are functional, they often lack the flexibility required to capture the full complexity of real-world driving, particularly in rare or edge-case situations, such as unexpected pedestrian behavior or sudden weather changes [8].

This is where large language models (LLMs), such as GPT-4 and LLaMA, offer significant potential. Due to their ability to comprehend and generate natural language, LLMs can be employed to transform textual ODD descriptions into detailed, executable driving scenarios suitable for testing and validation.

2. Related Works

2.1. Scenario Generation for Autonomous Vehicles

Scenario generation offers significant advantages in terms of scene diversity, hazard representation, interpretability, and generation efficiency, all of which are crucial for the testing and validation of intelligent vehicles. These benefits directly contribute to enhancing the safety and reliability of autonomous driving technologies [9].

Scenario generation technologies generally fall into two main categories. The first category comprises model-based approaches, such as virtual simulation, which use precise physical modeling, efficient numerical simulation, high-fidelity image rendering, and other techniques to construct realistic driving environments. These environments encompass elements such as vehicles, roads, weather, lighting conditions, traffic participants, and various in-vehicle sensors [10]. Given the vast diversity of features in real-world driving environments and their complex impact on onboard sensor systems, virtual testing scenarios are generated by integrating geometric mapping, physical mapping, pixel-level rendering, and probabilistic modeling. These scenarios can be tailored to meet various application-specific requirements.

The second category includes data-driven approaches, which focus on extracting latent patterns from large volumes of real-world scenario data to accurately replicate their characteristics and statistical distributions. Representative techniques in this category include generative adversarial networks (GANs) and accelerated sampling methods [11].

2.2. LLM Applications for AVs

With the advancement of large language models (LLMs), researchers have begun exploring their application in the design of systems and modules related to autonomous driving. Typical applications include visual perception, vehicle control, and motion planning.

In the area of visual perception, Wu et al. [12] proposed PromptTrack, a framework that leverages cross-modal feature fusion to predict and infer referent objects within complex visual environments. Similarly, Elhafsi et al. [13] employed the contextual reasoning capabilities of LLMs to identify semantically abnormal visual scenarios.

For vehicle control, Language MPC introduces algorithms that use LLM-based reasoning to translate high-level decisions into executable driving commands [14]. GPT Co-pilot [15] analyzes the characteristics of various controllers and uses GPT to select appropriate control strategies for different road environments. DriveGPT4 interprets vehicle movements and responds to user queries, thereby enhancing human–machine interaction, and predicts control commands in an end-to-end manner [16].

Collectively, these frameworks demonstrate the potential of LLMs to improve reasoning, contextual understanding, and adaptability in autonomous vehicle systems, with promising performance across various tasks.

3. Methodology

As illustrated in Figure 1, ODDs are classified based on three key factors essential for scenario specification. These factors are used to create three corresponding scenario groups, which form the basis of a comprehensive scenario generation pipeline. Together, these groups constitute a complete ODD scenario.

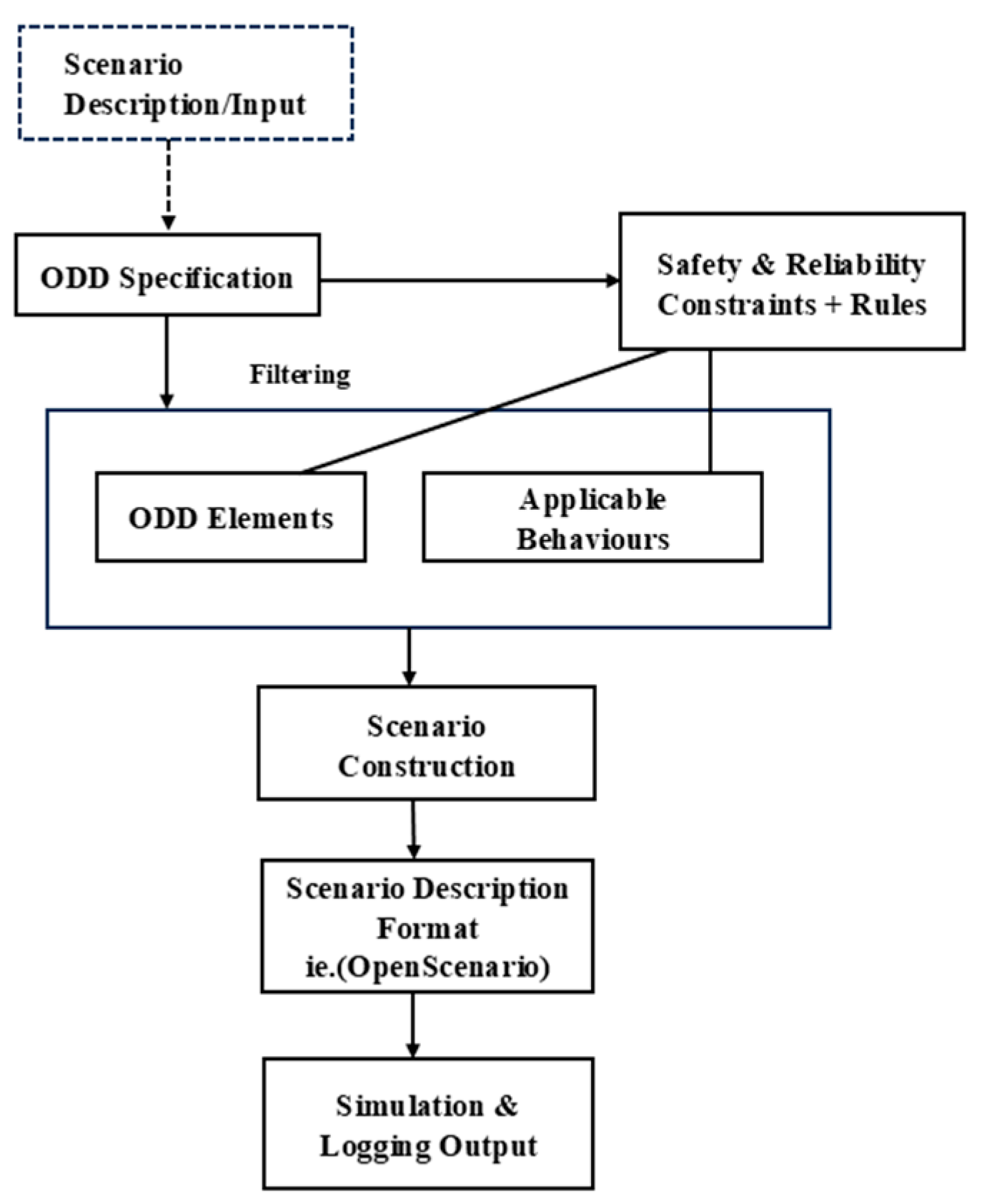

Figure 2 illustrates a workflow designed to enable developers to comprehensively test autonomous vehicles within their intended operational environments, ensuring safety and reliability. The proposed pipeline employs a large language model (LLM) to decompose and interpret ODD descriptions into extensible components, which can then be transformed into executable scenarios on simulation platforms, such as CARLA. Utilizing prompt engineering techniques, the approach aims to generate accurate and realistic simulation scenarios by incorporating chain-of-thought reasoning and domain-specific few-shot learning, thereby converting inputs into high-quality outputs with minimal errors.

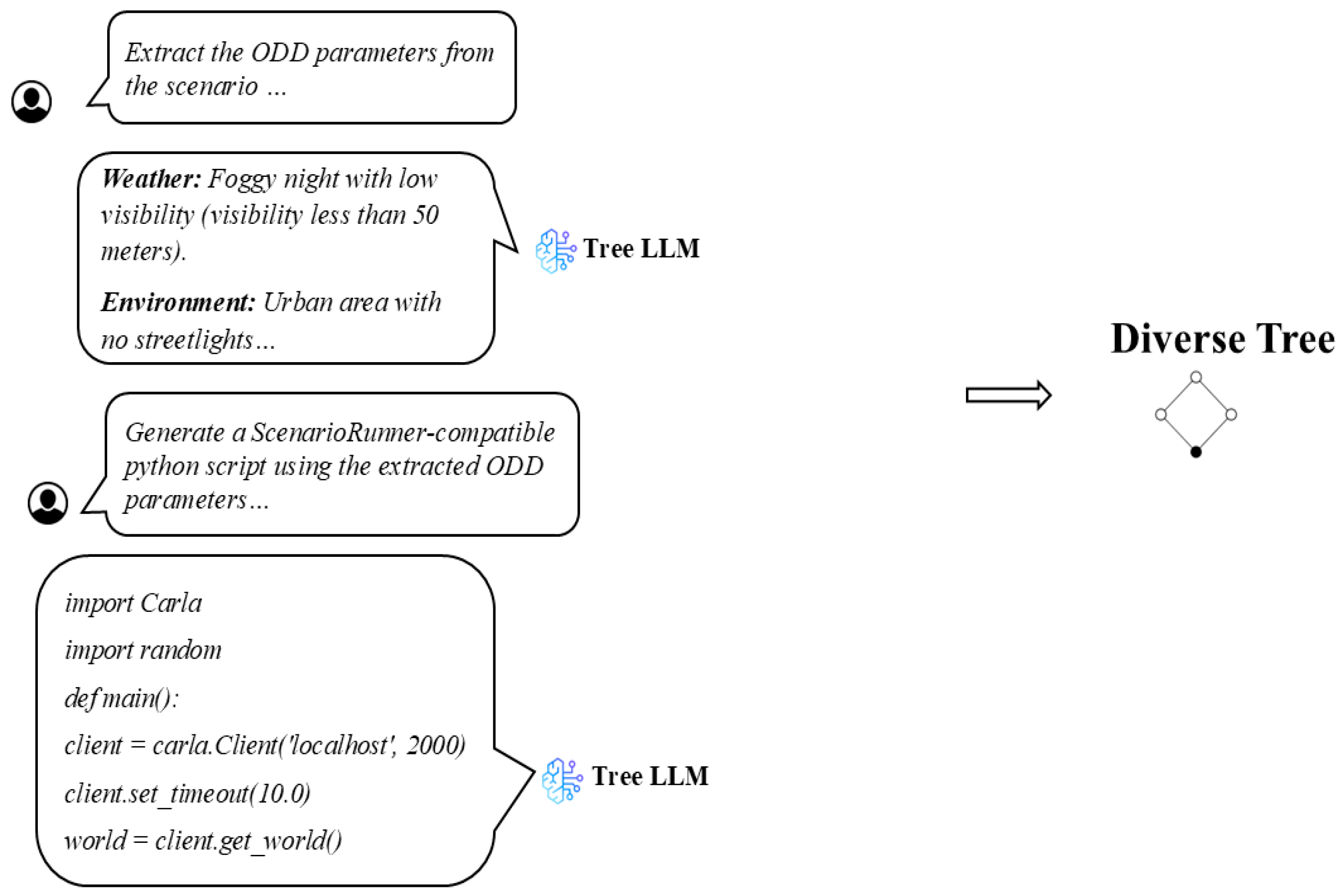

Figure 3 illustrates how simple scenarios can be used as prompts to generate more complex scenarios through large language models (LLMs) within a parallel framework. This approach facilitates virtual-real interaction by integrating descriptive, prescriptive, and predictive learning [17].

3.1. Environmental ODD Group

This scenario group encompasses situations in which an environmental element significantly deviates from its normal state, thereby affecting the performance of the autonomous vehicle (AV). These scenarios typically do not involve dynamic interactions with other road users; instead, they arise from anomalies in the surrounding environment that the AV must detect and respond to appropriately. Environmental conditions in this group can affect every AV function, from perception and planning to actuation control, by degrading visibility, sensor accuracy, vehicle maneuverability (due to changes in drivable surface conditions), and communication systems [18]. Examples include extreme weather events, such as heavy snowfall or dense fog, and unexpected road obstructions caused by static objects, such as fallen trees or large debris [18].

3.2. Scenery ODD Group

This scenario group includes spatially fixed elements within the AV’s operational environment, such as lane configurations (including the number of lanes, lane width, and lane markings) and static infrastructure. While these elements have fixed locations, their operational states may change dynamically. For example, the direction of traffic flow on certain drivable areas may vary depending on the time of day or day of the week. Other factors influencing AV perception and decision-making include the presence or absence of lane markings, lane availability (e.g., lane closures), and temporary modifications to static elements, such as construction zones, road signs, barriers, and sidewalks. Additionally, movable infrastructure, such as bridges that open and close to allow boat traffic, illustrates a changing operational state despite the fixed spatial location of the bridge itself [18]. Therefore, all attributes with fixed locations are classified as scenery elements, even though their states may vary over time [18].

3.3. Dynamic ODD Group

This scenario group comprises the movable elements of the ODD, such as traffic participants and the subject vehicle. Dynamic elements introduce additional complexity to the operating environment due to their diverse nature and the presence of both predictable and unpredictable behaviors [18].
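The three scenario groups can be represented as separate containers that together form one ODD scenario, which is the decomposition the pipeline asks the LLM to produce. This is a sketch only: the class and field names (`EnvironmentalGroup`, `SceneryGroup`, `DynamicGroup`, `OddScenario`) are hypothetical, and only `vehicle.toyota.prius` is an actual CARLA blueprint ID used in this paper.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EnvironmentalGroup:
    """Deviations from normal conditions, without interacting road users (Section 3.1)."""
    weather: str = "clear"
    static_obstructions: List[str] = field(default_factory=list)


@dataclass
class SceneryGroup:
    """Spatially fixed elements whose operational state may still change (Section 3.2)."""
    lanes: int = 2
    closed_lanes: List[int] = field(default_factory=list)
    construction_zone: bool = False


@dataclass
class DynamicGroup:
    """Movable elements: the ego vehicle and other traffic participants (Section 3.3)."""
    ego_blueprint: str = "vehicle.toyota.prius"
    participants: List[str] = field(default_factory=list)


@dataclass
class OddScenario:
    """A complete ODD scenario is the combination of all three groups."""
    environmental: EnvironmentalGroup
    scenery: SceneryGroup
    dynamic: DynamicGroup

    def open_lanes(self) -> int:
        # A lane closure changes the state of a fixed element, not its location.
        return self.scenery.lanes - len(set(self.scenery.closed_lanes))


scenario = OddScenario(
    environmental=EnvironmentalGroup(weather="dense_fog", static_obstructions=["fallen_tree"]),
    scenery=SceneryGroup(lanes=3, closed_lanes=[2], construction_zone=True),
    dynamic=DynamicGroup(participants=["pedestrian", "cyclist"]),
)
print(scenario.open_lanes())  # 2
```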

4. Model-Based Approach

The model-based approach relies on predefined rules, logic, and domain knowledge to structure the outputs. Specifically, the LLM is guided by carefully designed rule-based prompts to extract structured parameters from ODD descriptions. These rules ensure that the generated parameters are formatted into an executable syntax compatible with the target simulation system. In this work, we use ScenarioRunner and the CARLA simulation platform [19]. The generated simulation scenarios are then evaluated against predefined performance metrics, such as scenario accuracy, logical consistency, and execution success rate, to iteratively refine the scenario generation process.
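As an illustration of rule-based guidance, the parameters extracted by the LLM can be requested as JSON and checked against explicit rules before any simulation code is generated. The rule table, parameter names, and ranges below are assumptions for illustration (the 0 to 100 range mirrors the percentage scale of CARLA weather fields); they are not the exact rules used in this work.

```python
import json
from typing import List, Tuple

# Hypothetical rules: parameter -> (accepted types, validity check, description for feedback)
RULES = {
    "map": (str, lambda v: v.startswith("Town"), "a CARLA town name such as Town03"),
    "fog_density": ((int, float), lambda v: 0 <= v <= 100, "a percentage in [0, 100]"),
    "precipitation": ((int, float), lambda v: 0 <= v <= 100, "a percentage in [0, 100]"),
    "num_pedestrians": (int, lambda v: v >= 0, "a non-negative integer"),
}


def validate_extraction(llm_output: str) -> Tuple[dict, List[str]]:
    """Parse the LLM's JSON answer and list every rule it violates."""
    try:
        params = json.loads(llm_output)
    except json.JSONDecodeError as exc:
        return {}, [f"not valid JSON: {exc.msg}"]
    if not isinstance(params, dict):
        return {}, ["top-level JSON value must be an object"]

    errors = []
    for name, (accepted, check, description) in RULES.items():
        if name not in params:
            errors.append(f"missing parameter '{name}'")
            continue
        value = params[name]
        # bool is a subclass of int in Python, so reject it explicitly;
        # `check` only runs once the type test has passed.
        if isinstance(value, bool) or not isinstance(value, accepted) or not check(value):
            errors.append(f"'{name}'={value!r}: expected {description}")
    errors.extend(f"unknown parameter '{name}'" for name in sorted(set(params) - set(RULES)))
    return params, errors


good = '{"map": "Town03", "fog_density": 35, "precipitation": 20, "num_pedestrians": 6}'
print(validate_extraction(good)[1])  # []
print(validate_extraction('{"map": "Downtown", "fog_density": 140}')[1])
```

The error strings can be returned to the LLM verbatim as feedback for a corrected extraction.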

4.1. CARLA ScenarioRunner

ScenarioRunner is a module designed to define and execute traffic scenarios within the CARLA simulator [19]. It enables users to create complex traffic situations and test autonomous driving algorithms in a controlled virtual environment. In addition to scenarios written directly through its Python interface, ScenarioRunner supports scenarios defined using the OpenSCENARIO 1.0 standard [20], which provides standardized scenario descriptions.

4.2. Prompt Engineering

Prompt engineering refers to techniques that use task-specific inputs, known as prompts, to adapt pre-trained large language models (LLMs) to new tasks. This enables models to make predictions based solely on the prompts, without requiring updates to the underlying model parameters. Prompts can be created either manually [9] or generated automatically as natural language hints or vector representations [21].

Prompt engineering is generally divided into two categories: hard prompts and soft prompts. Hard prompts [9,22,23] consist of manually crafted, interpretable text tokens that are discrete and typically used for selecting samples during in-context learning, whereas soft prompts are continuous vector representations that are learned rather than written by hand. Leveraging prompt engineering, LLMs and multimodal large models can transfer learned representations from pre-training to various downstream applications, including content generation [24], image or video retrieval [25], semantic segmentation [26], and other multimodal tasks [27].

4.3. Prompt Engineering for Scenario Generation

The goal of scenario prompting is to provide an input prompt with a consistent and structured format for describing ODD parameters, ensuring that the LLM accurately understands the task and generates the desired output. The initial step is to define what an ODD is, outline some of its components, and explain its relevance to autonomous vehicle simulations.

The subsequent step involves abstracting the scenario into a format that conveys high-level information. This is achieved by providing an example scenario containing a detailed list of ODD elements, organized according to the scenario groups described in the previous section, while allowing the LLM the flexibility to generate additional details. This approach avoids over-specification, leaving room for creativity, while maintaining sufficient control over scenario generation.
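The two prompting steps described above (define the ODD and its relevance, then give an example scenario organized by scenario groups while leaving room for additional details) can be assembled programmatically so that every prompt has the same structure. A minimal sketch, assuming a hypothetical `build_scenario_prompt` helper; the wording of the template is illustrative, and the ODD definition paraphrases the one quoted in Section 1 [2].

```python
ODD_DEFINITION = (
    "An Operational Design Domain (ODD) is the set of operating conditions under which "
    "a driving automation system, or a feature of it, is designed to function."
)

SCENARIO_GROUPS = {
    "Environmental": "weather, lighting, and static obstructions",
    "Scenery": "spatially fixed elements such as lanes, signs, and construction zones",
    "Dynamic": "the ego vehicle and other moving traffic participants",
}


def build_scenario_prompt(example_scenario: str, odd_description: str) -> str:
    """Step 1: define the ODD; step 2: show a grouped example; then state the task."""
    group_lines = "\n".join(f"- {name}: {scope}" for name, scope in SCENARIO_GROUPS.items())
    return (
        f"{ODD_DEFINITION}\n"
        "Simulation tests of autonomous vehicles must stay within these conditions.\n\n"
        f"Describe every scenario using these ODD groups:\n{group_lines}\n\n"
        f"Example scenario:\n{example_scenario}\n\n"
        "Task: write a new scenario for the ODD below. Cover all three groups; "
        "you may add plausible details that the ODD does not specify.\n"
        f"ODD: {odd_description}\n"
    )


prompt = build_scenario_prompt(
    example_scenario="Environmental: light rain. Scenery: Town03 four-way intersection. "
                     "Dynamic: jaywalking pedestrian.",
    odd_description="Suburban school zone, early morning, light fog.",
)
print(prompt)
```

The final instruction leaves room for creativity, while the fixed group list keeps control over the structure of the answer.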

4.4. LLM Scenario Generation Pipeline Workflow Based on the Model-Based Approach

The pipeline leverages the Tree of Thoughts (ToT) paradigm [28], which enables LLMs to explore multiple reasoning paths during scenario generation. A few-shot Chain of Thought (CoT) prompting approach is employed to generate these scenarios. The process begins by introducing to the LLM the concept of scenario simulation for autonomous vehicles based on ODD descriptions and requesting it to produce similar example scenarios, referred to as the initial-tree. This initial-tree is supplemented with classification patterns corresponding to the ODD scenario groups intended for scenario generation. Subsequently, the LLM is prompted to generate a scenario that satisfies all ODD requirements and incorporates all three ODD scenario groups. The resulting LLM output, referred to as the tree-LLM instance, is illustrated in Figure 4.
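To make the Tree of Thoughts idea concrete, the sketch below implements a generic breadth-first variant: each level proposes several partial scenarios, an evaluator scores them, and only the best few are expanded further. This is not the authors' implementation; `propose` and `score` are hypothetical stand-ins for LLM calls (in a real pipeline, the LLM would propose continuations and also rate them), and the toy evaluator simply counts hazard keywords.

```python
from typing import Callable, List


def tree_of_thoughts_bfs(
    root: str,
    propose: Callable[[str], List[str]],
    score: Callable[[str], float],
    depth: int = 3,
    beam_width: int = 2,
) -> str:
    """Breadth-first ToT: expand every kept state, score children, keep the best few."""
    frontier = [root]
    for _ in range(depth):
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        # sorted() is stable, so ties keep their proposal order.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)


# Hypothetical stand-ins for LLM calls: each level decides one ODD scenario group.
OPTIONS_PER_LEVEL = [
    ["Environmental: light rain", "Environmental: heavy snow"],
    ["Scenery: three lanes with a construction zone", "Scenery: two lanes"],
    ["Dynamic: jaywalking pedestrian", "Dynamic: ambulance running a red light"],
]


def propose(state: str) -> List[str]:
    level = state.count("\n")  # number of groups already decided
    if level >= len(OPTIONS_PER_LEVEL):
        return []
    return [f"{state}\n{option}" for option in OPTIONS_PER_LEVEL[level]]


def score(state: str) -> float:
    # Toy evaluator: count hazard keywords; a real pipeline would ask the LLM to rate the plan.
    return float(sum(word in state for word in ("rain", "construction", "pedestrian", "ambulance")))


best = tree_of_thoughts_bfs("Urban intersection scenario", propose, score)
print(best)
```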

The next step in the pipeline involves prompting the tree-LLM to generate executable ScenarioRunner code for the CARLA simulator based on the scenario descriptions; this output pattern is referred to as the diverse-Tree. At this stage, outputs produced by tree-LLM may contain inconsistencies and errors. The generated code can encompass numerous elements or highly complex interactions that exceed CARLA’s simulation capabilities and resource constraints, so the diverse-Tree is not guaranteed to be executable. According to the CARLA documentation, common errors include syntax errors, runtime errors, and timeout errors [29]. When generating ScenarioRunner code, the tree-LLM follows the specifications of the diverse-Tree. This stage involves parsing, i.e., mapping elements from the scenario description to the corresponding ScenarioRunner components and attributes, to facilitate scenario construction, as illustrated in Figure 5.
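Because the diverse-Tree is not guaranteed to run, a generated script can be labeled with the three error classes mentioned above before it is handed to the refinement stage. The following sketch assumes a hypothetical `classify_script` helper that compiles the code and then runs it in a separate Python process with a time limit. It handles standalone snippets only; an actual ScenarioRunner scenario also needs a running CARLA server, and LLM-generated code should be executed in a proper sandbox rather than a plain subprocess.

```python
import subprocess
import sys


def classify_script(source: str, timeout_s: float = 5.0) -> str:
    """Label a generated script as ok, syntax_error, runtime_error, or timeout_error."""
    try:
        compile(source, "<generated>", "exec")  # catches syntax errors without running
    except SyntaxError:
        return "syntax_error"
    try:
        # A fresh interpreter keeps crashes and hangs out of the calling process.
        result = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return "timeout_error"
    return "ok" if result.returncode == 0 else "runtime_error"


print(classify_script("print('scenario loaded')"))                # ok
print(classify_script("def setup(:\n    pass"))                    # syntax_error
print(classify_script("raise RuntimeError('actor spawn failed')"))  # runtime_error
```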

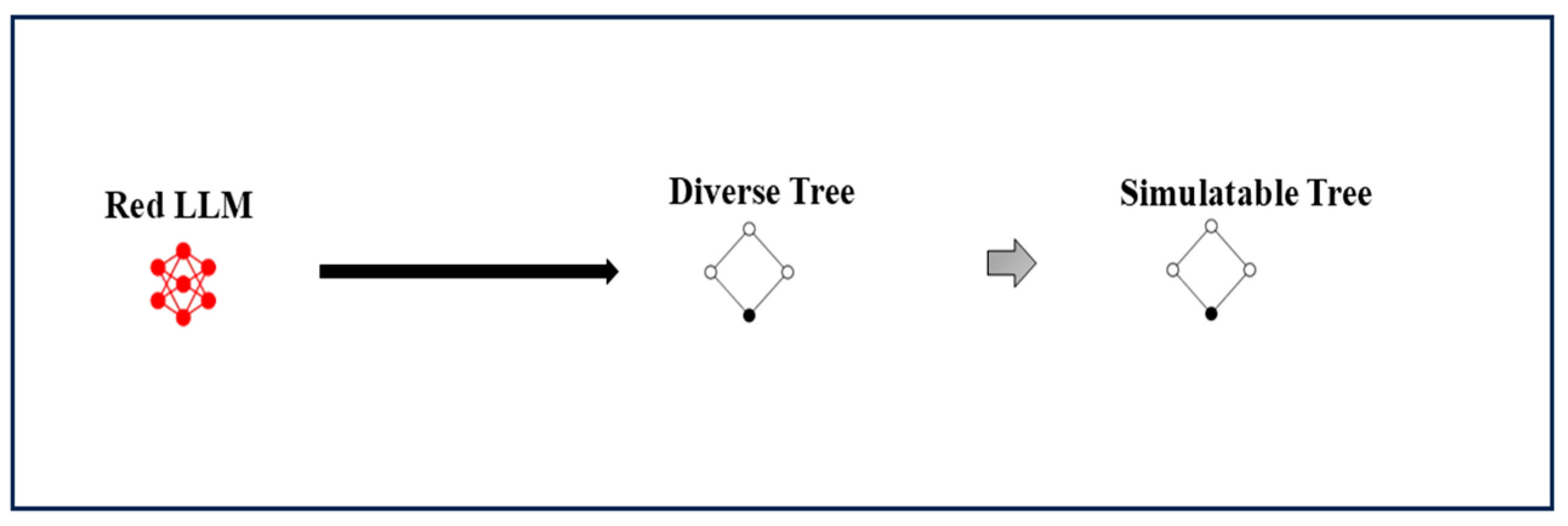

To evaluate the quality of the tree-LLM output, we adopt a strategy inspired by LLM-based red teaming, a security and testing methodology that leverages large language models to identify vulnerabilities, weaknesses, or potential failures in systems, algorithms, or processes [30]. This LLM instance is referred to as red-LLM. Using a few-shot prompting technique, red-LLM transforms the diverse-Tree output into a refined pattern termed the simulatable-tree (see Figure 6).

The simulatable-tree process involves providing red-LLM with correct reference ScenarioRunner code, relevant CARLA assets, and a set of potential errors and configuration inconsistencies to anticipate, thereby minimizing the likelihood of generating incorrect outputs. This iterative procedure produces an enhanced LLM instance, referred to as augmented-LLM. The output of augmented-LLM is then used to initialize scenario simulations within CARLA, as illustrated in Figure 7. The resulting code is expected to be error-free and to execute smoothly within the CARLA environment.
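The generate, check, and refine cycle between tree-LLM and red-LLM can be expressed as a small control loop. In this minimal sketch, `generate` and `refine` are hypothetical placeholders for the two LLM instances and `check` returns an error label such as those used above; the toy functions that follow only demonstrate the control flow (the toy checker uses `exec`, which must never be applied to untrusted code outside a sandbox).

```python
from typing import Callable, List, Tuple


def refine_until_executable(
    scenario: str,
    generate: Callable[[str], str],
    refine: Callable[[str, str], str],
    check: Callable[[str], str],
    max_rounds: int = 3,
) -> Tuple[str, List[str]]:
    """Generate once (tree-LLM role), then repair (red-LLM role) until the check passes."""
    code = generate(scenario)
    history = [check(code)]
    # At most `max_rounds` refinements; every refined version is checked again.
    while history[-1] != "ok" and len(history) <= max_rounds:
        code = refine(code, history[-1])  # the error label is fed back to the refiner
        history.append(check(code))
    return code, history


# Toy stand-ins (hypothetical) that only demonstrate the control flow.
def toy_generate(description: str) -> str:
    return "print(math.sqrt(16))"  # forgets the import, like a typical first draft


def toy_refine(code: str, error: str) -> str:
    if error == "runtime_error" and "import math" not in code:
        return "import math\n" + code
    return code


def toy_check(code: str) -> str:
    try:
        exec(compile(code, "<generated>", "exec"), {})  # never do this with untrusted code
    except SyntaxError:
        return "syntax_error"
    except Exception:
        return "runtime_error"
    return "ok"


final_code, history = refine_until_executable("urban intersection", toy_generate, toy_refine, toy_check)
print(history)  # ['runtime_error', 'ok']
```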

4.5. Script/Code Generation Prompt Engineering

The objective of script generation prompting is to translate the scenario description into executable CARLA code while maintaining a structured and reproducible prompting framework for generating additional scenarios. The generated code must be both syntactically and logically correct, conforming to the CARLA ScenarioRunner API.

Table 1 presents examples illustrating how ODD parameters are mapped to corresponding CARLA code elements.

Prompt 1. Generate a CARLA ScenarioRunner-compatible Python script that defines a simulation scenario based on the provided ODD parameters.
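Table 1 is not reproduced here, but the kind of mapping it describes can be sketched for weather and time of day. The keyword names returned below (`cloudiness`, `precipitation`, `precipitation_deposits`, `wetness`, `fog_density`, `sun_altitude_angle`) are attributes of `carla.WeatherParameters` in the CARLA Python API; the lookup tables and numeric presets are assumptions chosen for illustration. The function does not import `carla`, so it can be tested without a simulator.

```python
from typing import Dict

# Hypothetical presets: ODD vocabulary -> values on CARLA's 0-100 (or degree) scales.
PRECIPITATION = {"none": 0.0, "light rain": 30.0, "heavy rain": 90.0}
FOG_DENSITY = {"none": 0.0, "light fog": 25.0, "dense fog": 80.0}
CLOUDINESS = {"clear": 0.0, "overcast": 80.0}
SUN_ALTITUDE_DEG = {"early morning": 10.0, "midday": 70.0, "night": -30.0}


def odd_to_weather_kwargs(odd: Dict[str, str]) -> Dict[str, float]:
    """Translate ODD weather terms into keyword arguments for carla.WeatherParameters."""
    precipitation = PRECIPITATION[odd.get("precipitation", "none")]
    fog = FOG_DENSITY[odd.get("fog", "none")]
    return {
        "cloudiness": CLOUDINESS[odd.get("sky", "clear")],
        "precipitation": precipitation,
        "precipitation_deposits": precipitation / 2,  # puddles follow rain intensity
        "wetness": max(precipitation, 40.0 if fog > 0 else 0.0),  # fog implies some dew
        "fog_density": fog,
        "sun_altitude_angle": SUN_ALTITUDE_DEG[odd.get("time_of_day", "midday")],
    }


scenario_1 = odd_to_weather_kwargs({"precipitation": "light rain", "sky": "overcast"})
scenario_2 = odd_to_weather_kwargs({"fog": "light fog", "time_of_day": "early morning"})
print(scenario_1)
# With a running CARLA server (not executed here):
#   world.set_weather(carla.WeatherParameters(**scenario_1))
```

An ODD term without an entry raises a `KeyError`, which surfaces unsupported vocabulary before any code reaches the simulator.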

4.6. Refinement Prompt Engineering

The goal of refinement prompting is to identify and correct errors in the generated CARLA code, ensure compatibility with the CARLA ScenarioRunner API, optimize ego vehicle behavior to reflect real-world dynamics consistent with the ODD, enhance code maintainability and readability, and verify the logical correctness of event sequences and conditions. To achieve this, the red-LLM is employed to effectively refine and debug the generated CARLA scripts. An example prompt for this process is as follows:

Prompt 2. Refine the generated CARLA script to ensure correctness, efficiency, and compliance with CARLA ScenarioRunner. Identify and fix errors, optimize vehicle behavior, and validate execution flow.

Specific debugging and error detection prompts can also be used to find and fix common syntax, logic, and runtime errors.

Prompt 3. Check the generated ScenarioRunner script for syntax errors, missing imports, and incorrect function calls. Ensure proper indentation and formatting.
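Some of the checks requested above can also be performed deterministically with Python's standard `ast` module before the script reaches ScenarioRunner. A minimal sketch, assuming a hypothetical `precheck_script` helper that reports syntax errors and watched module names (by default `carla` and `py_trees`, which ScenarioRunner scenarios commonly use) that are referenced without being imported. It does not detect incorrect function calls, which still require the LLM review or a run against CARLA.

```python
import ast
from typing import Iterable, List


def precheck_script(source: str, watched_names: Iterable[str] = ("carla", "py_trees")) -> List[str]:
    """Report syntax errors and watched modules that are used without being imported."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error on line {exc.lineno}: {exc.msg}"]

    bound = set()  # names bound by import statements anywhere in the file
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            bound.update(alias.asname or alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom):
            bound.update(alias.asname or alias.name for alias in node.names)

    used = {node.id for node in ast.walk(tree)
            if isinstance(node, ast.Name) and isinstance(node.ctx, ast.Load)}
    missing = sorted((used & set(watched_names)) - bound)
    return [f"'{name}' is used but never imported" for name in missing]


print(precheck_script("import carla\nloc = carla.Location(x=1.0)\n"))     # []
print(precheck_script("import py_trees\nloc = carla.Location(x=1.0)\n"))  # ["'carla' is used but never imported"]
```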

5. Experiments

The experiments were conducted by prompting a pre-trained LLaMA 3 model to generate scripts, which were subsequently executed with ScenarioRunner (versions 0.9.13 and 0.9.15) through its Python API, using Python 3.7. Simulations were run alternately on CARLA 0.9.13 and 0.9.15. A Jupyter notebook was prepared in advance to ensure a proper connection with CARLA; this notebook establishes a client connection through the Python API and verifies the availability of essential assets, such as maps, vehicle blueprints, and props. This verification step is necessary because asset availability may differ between CARLA versions, which can affect simulation execution.
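The asset check performed by the notebook can be sketched as a comparison between what a scenario requires and what a given CARLA version reports. The helper `missing_assets` and the blueprint ID `vehicle.hypothetical.school_bus` are hypothetical; the commented lines show how the available lists could be obtained with the CARLA Python API (`carla.Client`, `get_available_maps`, `get_blueprint_library`) and are not executed here.

```python
from typing import Dict, Iterable, List


def missing_assets(
    required_maps: Iterable[str],
    required_blueprints: Iterable[str],
    available_maps: Iterable[str],
    available_blueprints: Iterable[str],
) -> Dict[str, List[str]]:
    """Return the required maps and blueprints that the CARLA server does not provide."""
    # Map paths look like '/Game/Carla/Maps/Town03'; compare by the final path component.
    map_names = {path.rsplit("/", 1)[-1] for path in available_maps}
    return {
        "maps": sorted(set(required_maps) - map_names),
        "blueprints": sorted(set(required_blueprints) - set(available_blueprints)),
    }


# With a running server the inputs could be collected like this (not executed here):
#   import carla
#   client = carla.Client("localhost", 2000)
#   client.set_timeout(10.0)
#   available_maps = client.get_available_maps()
#   available_blueprints = [bp.id for bp in client.get_world().get_blueprint_library()]

report = missing_assets(
    required_maps=["Town03"],
    required_blueprints=["vehicle.toyota.prius", "vehicle.hypothetical.school_bus"],
    available_maps=["/Game/Carla/Maps/Town01", "/Game/Carla/Maps/Town03"],
    available_blueprints=["vehicle.toyota.prius", "walker.pedestrian.0001"],
)
print(report)  # {'maps': [], 'blueprints': ['vehicle.hypothetical.school_bus']}
```

A non-empty report indicates that an available substitute asset must be chosen before the script is executed, as was done with the water truck in Scenario 2.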

The designed scenarios tested various aspects of autonomous vehicle performance, including high-speed highway maneuvers, low-visibility rural navigation, construction zones, and dynamic urban environments. Two distinct scenario examples are presented, with detailed breakdowns of their descriptions and the scenario dynamics, actions, and events implemented within the CARLA environment.

5.1. Scenario 1

The first scenario, referred to as the Urban Downtown Intersection Scenario, combines the complexity of urban environments with dynamic actors and environmental challenges, while adhering to typical ODD constraints. These constraints include mapped urban roads, moderate weather conditions, and defined operational hours, thereby introducing realism and complexity. This scenario is configured with the following parameters:

A high-definition urban downtown area using CARLA’s Town03 map with multiple lanes, well-marked roadways, designated pedestrian crosswalks, and advanced traffic signal systems.

Weather and Time-of-Day: Light rain with intermittent overcast skies, representing midday lighting conditions to ensure optimal sensor performance.

Scenario Layout and Road Features:

The ego vehicle starts on a three-lane road (one lane per direction and one turning lane), heading toward a busy four-way intersection that is controlled by adaptive traffic lights and has marked crosswalks on all sides.

On the approach to the intersection, a temporary construction zone is active on the right-hand side (indicated by traffic signs and NPC construction workers).

Dynamic Actors and Events:

Multiple NPC vehicles obey traffic rules.

Standard pedestrians crossing at the crosswalks, including one or two “unpredictable” pedestrian agents that may jaywalk (e.g., by stepping into the street unexpectedly) to test emergency braking and evasive maneuvers.

At least one NPC cyclist rides along a designated bike lane adjacent to the road. The cyclist may change speed or swerve slightly to simulate realistic behavior in an urban setting.

An emergency vehicle (e.g., an ambulance) can approach the intersection and may run a red light to simulate urgency, requiring the ego vehicle to yield and re-plan its path.

Scenario Dynamics and Triggers:

Ego vehicle (vehicle.toyota.prius) is tasked to navigate from a defined start point on the primary road toward and through the intersection while safely managing interactions with other dynamic agents.

Ego vehicle (vehicle.toyota.prius) must react appropriately to the construction zone by merging to an open lane well in advance.

Ego vehicle (vehicle.toyota.prius) must recognize and yield to the emergency vehicle by safely pulling over or stopping if necessary.

Ego vehicle (vehicle.toyota.prius) must detect and respond to jaywalking pedestrians with emergency braking or evasive steering.

Sensor and Perception Considerations: A 360-degree LiDAR providing point clouds for object detection and high-resolution cameras simulating forward, rear, and side views.

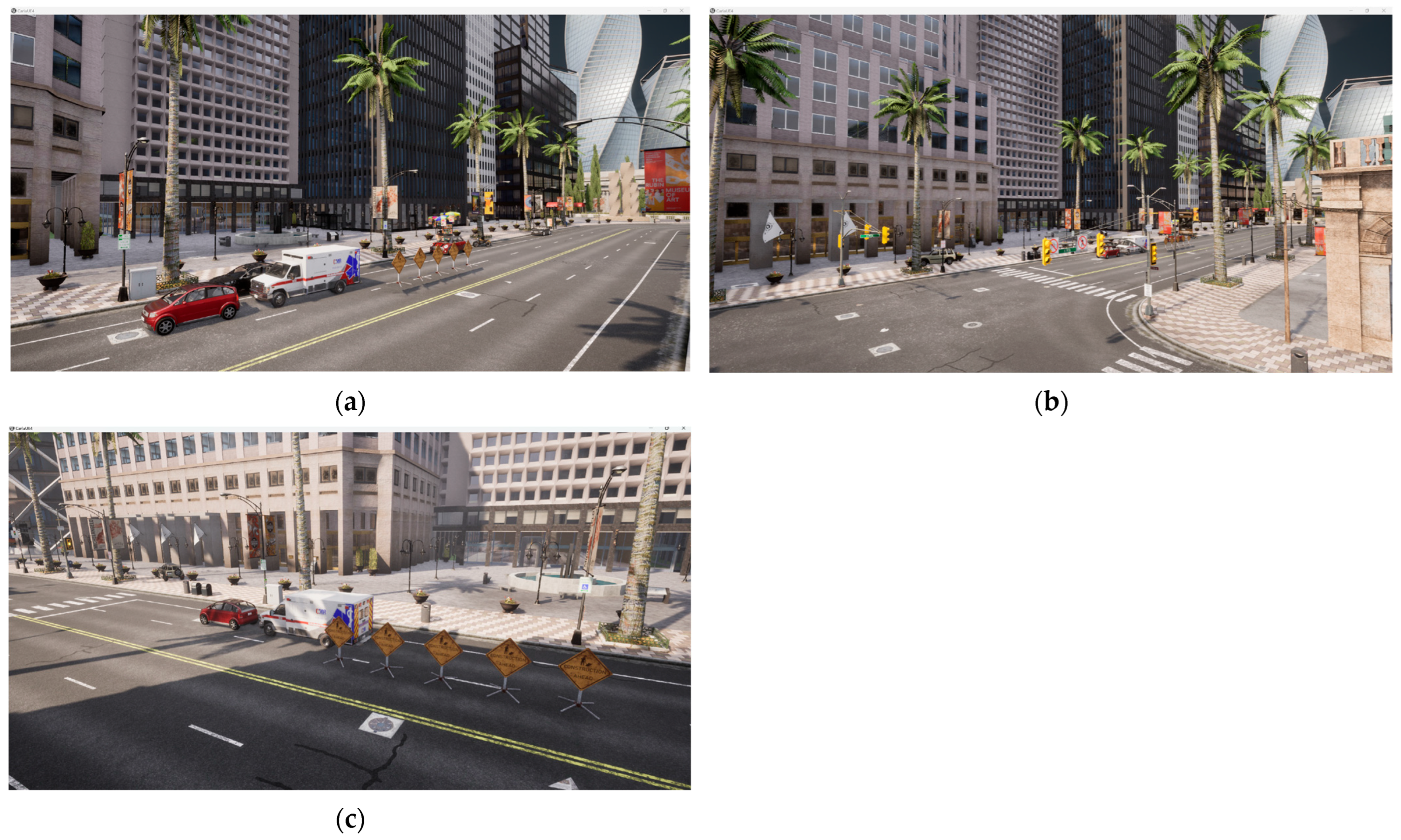

Observations from Scenario 1 (Figure 8) indicate that assets classified under the Operational Environment loaded correctly and generally functioned as intended, whereas the dynamic actors exhibited execution shortcomings. Static elements, such as well-marked roadways, designated pedestrian crosswalks, and advanced traffic signal systems, are low in complexity and largely pre-integrated into CARLA town maps, which facilitates reliable loading. However, dynamic actors presented challenges related to timing and synchronization: pedestrians crossed the intersection either too early or too late, and both cyclists and emergency vehicles started moving before the ego vehicle reached the appropriate trigger point.
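The timing problem described above can be addressed by starting each dynamic event only when the ego vehicle comes within a trigger distance. ScenarioRunner provides atomic trigger conditions for this purpose (for example, `InTriggerDistanceToLocation`); the sketch below shows only the underlying logic in plain Python, with hypothetical event names, positions, and radii, and a simplified straight-line ego trajectory.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class TriggeredEvent:
    """A dynamic event that must wait until the ego vehicle is close enough."""
    name: str
    trigger_point: Point
    radius_m: float
    fired: bool = False


def update_triggers(ego_xy: Point, events: List[TriggeredEvent]) -> List[str]:
    """Start each event exactly once, when the ego enters its trigger radius."""
    started = []
    for event in events:
        dx = ego_xy[0] - event.trigger_point[0]
        dy = ego_xy[1] - event.trigger_point[1]
        if not event.fired and math.hypot(dx, dy) <= event.radius_m:
            event.fired = True
            started.append(event.name)
    return started


events = [
    TriggeredEvent("cyclist_swerve", trigger_point=(40.0, 0.0), radius_m=15.0),
    TriggeredEvent("ambulance_runs_red_light", trigger_point=(80.0, 0.0), radius_m=25.0),
]
timeline = []
for x in range(0, 101, 10):  # simplified ego motion: 10 m per tick along the x axis
    for name in update_triggers((float(x), 0.0), events):
        timeline.append((x, name))
print(timeline)  # [(30, 'cyclist_swerve'), (60, 'ambulance_runs_red_light')]
```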

5.2. Scenario 2

The second scenario, referred to as the Suburban Residential Area with School Zone Dynamics, simulates a suburban neighborhood characterized by narrow streets, residential houses, and a designated school zone featuring reduced speed limits, enhanced signage, and pedestrian crosswalks near schools. This scenario is configured with the following parameters:

Time of Day: Early morning with soft, low-angle sunlight.

Atmospheric Conditions: Light fog or dew (simulated with a moderate fog density and wetness).

Static (Parked) Vehicles: Parked along the roadside.

Speed and Maneuvering: Emphasis on low-speed driving and careful maneuvering, because the school bus present in the scene must move in accordance with the school zone’s low-speed requirements.

Safety: Enhanced detection and reaction to vulnerable road users with strict adherence to yielding rules.

Dynamic Actors and Events:

Parked vehicles lining the street, with occasional moving vehicles entering/exiting driveways.

Children and school buses appearing unpredictably at crosswalks.

Cyclists and pedestrians using sidewalks and crossing the street between parked cars.

Scenario Dynamics and Triggers:

Ego vehicle (vehicle.toyota.prius) must detect stop signs, slow zones, or a stopped school bus.

Ego vehicle (vehicle.toyota.prius) must yield to crossing pedestrians.

Ego vehicle (vehicle.toyota.prius) may have to stop suddenly or replan due to obstruction.

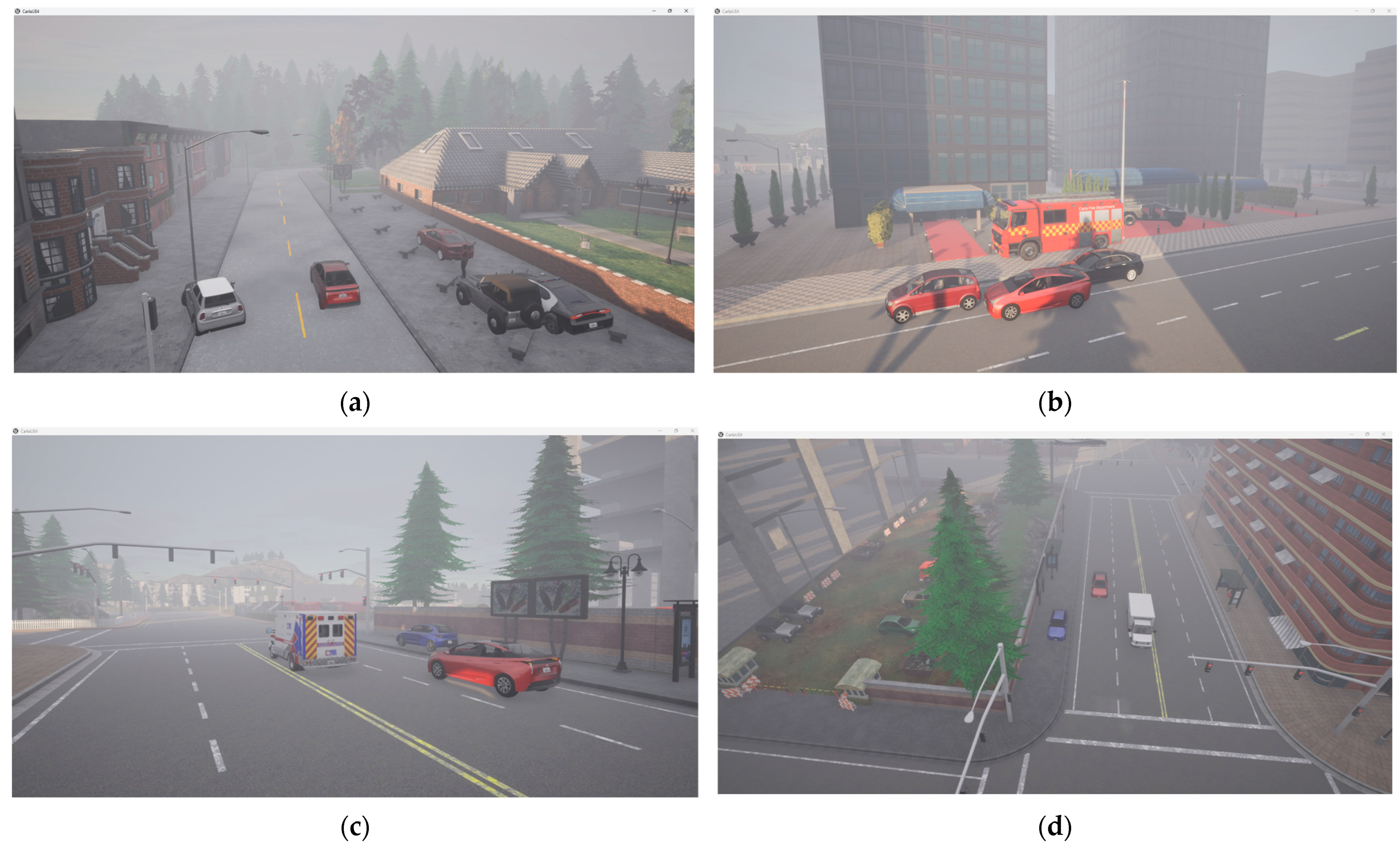

Observations from Scenario 2 (Figure 9) revealed outcomes similar to those in Scenario 1. The vehicle substituted for the school bus (a water truck), along with environmental conditions such as soft, low-angle sunlight and light fog, loaded correctly as part of the Operational Environment assets. However, dynamic actors frequently spawned too close to the roadway due to improper execution timing. Consequently, persistent timing and synchronization problems diminished the overall realism of the scenario.
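One way to mitigate actors spawning too close to the roadway is to filter candidate spawn points geometrically before placing pedestrians. The sketch below assumes the lane centerline is available as a list of 2D points (in CARLA it could be sampled from the map's waypoints); the helper names and the 3 m and 20 m thresholds are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def distance_to_polyline(p: Point, polyline: Sequence[Point]) -> float:
    """Shortest distance from p to a lane centerline given as connected 2D points."""
    best = math.inf
    for (x1, y1), (x2, y2) in zip(polyline, polyline[1:]):
        dx, dy = x2 - x1, y2 - y1
        seg_len_sq = dx * dx + dy * dy
        # Project p onto the segment, clamped to the segment's end points.
        t = 0.0
        if seg_len_sq > 0:
            t = max(0.0, min(1.0, ((p[0] - x1) * dx + (p[1] - y1) * dy) / seg_len_sq))
        best = min(best, math.hypot(p[0] - (x1 + t * dx), p[1] - (y1 + t * dy)))
    return best


def safe_spawn_points(
    candidates: Sequence[Point],
    centerline: Sequence[Point],
    min_offset_m: float = 3.0,
    ego_xy: Optional[Point] = None,
    min_ego_gap_m: float = 20.0,
) -> List[Point]:
    """Keep candidates that are clear of the lane and not right next to the ego vehicle."""
    kept = []
    for p in candidates:
        if distance_to_polyline(p, centerline) < min_offset_m:
            continue  # would spawn on or too close to the roadway
        if ego_xy is not None and math.hypot(p[0] - ego_xy[0], p[1] - ego_xy[1]) < min_ego_gap_m:
            continue  # would appear immediately in front of the ego vehicle
        kept.append(p)
    return kept


centerline = [(0.0, 0.0), (100.0, 0.0)]
candidates = [(10.0, 1.0), (30.0, 4.0), (50.0, -5.0), (5.0, 6.0)]
print(safe_spawn_points(candidates, centerline, ego_xy=(0.0, 0.0)))  # [(30.0, 4.0), (50.0, -5.0)]
```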

6. Results

6.1. Why Use Chain-of-Thought (CoT) Prompting

The Chain-of-Thought (CoT) prompting technique decomposes complex scenario generation tasks into structured, logical reasoning steps. This approach promotes logical consistency in the generated scenarios and enables a more realistic, context-aware creation process that aligns better with ODD specifications. Consequently, CoT prompting increases the likelihood of producing executable scripts. Experimental results show that employing CoT prompting leads to measurable improvements in scenario accuracy, execution success rates, and overall pipeline efficiency.

6.2. Pipeline Application Metrics

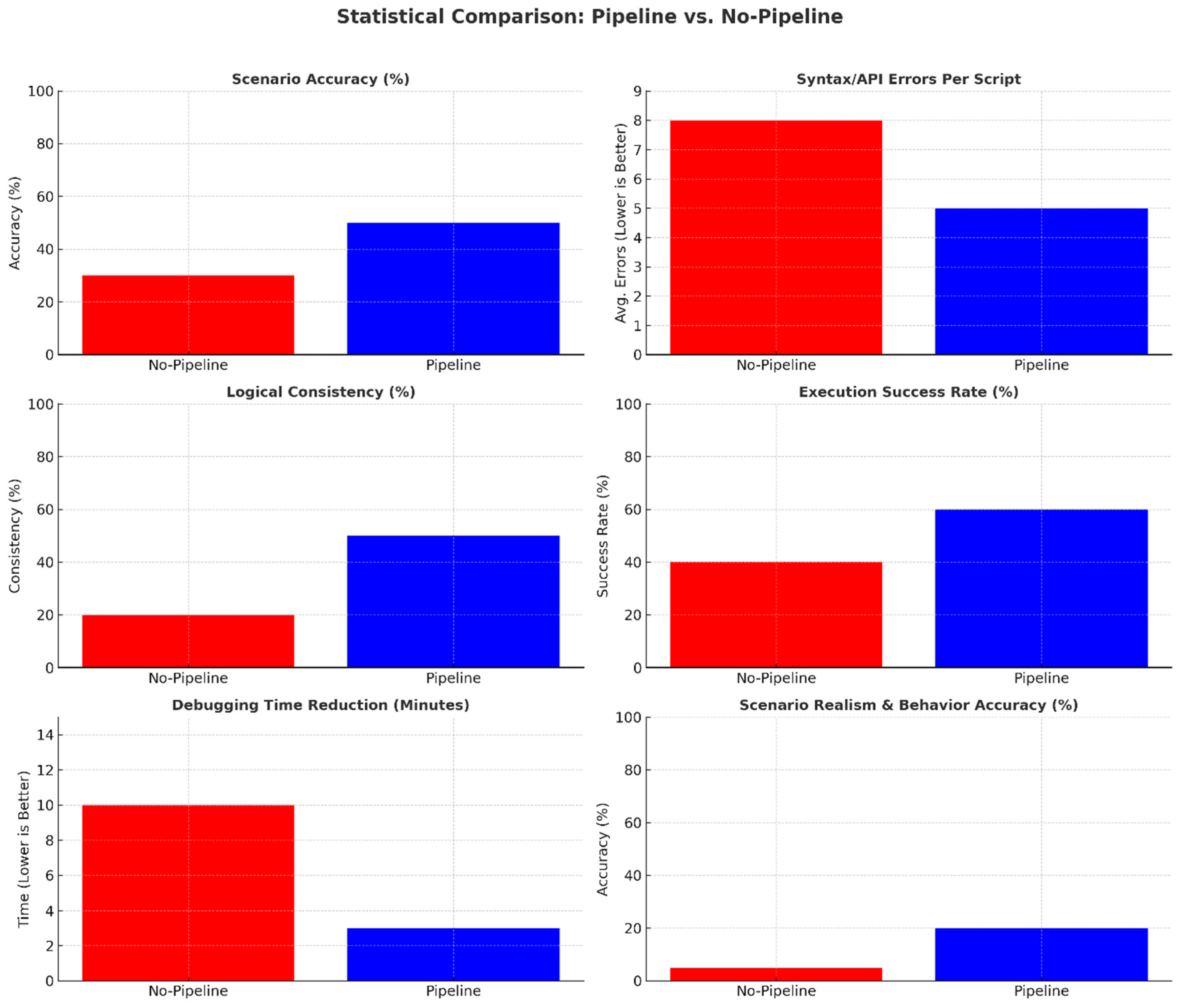

The evaluation comprised eight scenarios classified under the Environmental and Dynamic groups of the ODD categories. These scenarios simulated real-world driving challenges, including emergency braking events, lane-blocking situations, road construction zones, lane-merging maneuvers, and overtaking scenarios. Each was designed to test the model’s capacity to handle variations in environmental conditions, dynamic agent interactions, and infrastructure constraints.

To assess the impact of the proposed pipeline, experiments compared two main variants: the Baseline (without pipeline), representing conventional methods, and the With Pipeline variant, which applies the model-based approach. Following the generation and execution of the eight scenarios with and without the pipeline, relevant performance attributes were recorded for analysis. The full set of evaluation metrics is listed in Table 2.

6.3. Comparisons of LLM Output Quality (Pipeline vs. No-Pipeline)

Figure 10 summarizes the metric results obtained from the simulation of each scenario. The sample size is n = 8, with each metric averaged over eight independent scenario generations per condition. Results are presented as mean ± standard deviation (SD), reflecting both central tendency and variability. Statistical significance was assessed using paired t-tests (α = 0.05) comparing the Baseline (no pipeline) and Pipeline conditions. The percentage improvement, x, is calculated using the formula:

Aggregate improvements obtained with the pipeline are reported in Table 3.
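The formula for x is not reproduced in this text. A common definition, assumed here, is the relative change with respect to the Baseline, signed so that a positive value means improvement: x = 100 · (Pipeline − Baseline) / Baseline for metrics where higher is better, and x = 100 · (Baseline − Pipeline) / Baseline for metrics such as error counts. The sketch below implements that assumption together with the paired t statistic for n = 8 scenarios; the input values are synthetic and are not the results reported in Table 3. `scipy.stats.ttest_rel` would additionally return the p-value.

```python
import math
import statistics
from typing import Sequence


def percent_improvement(baseline: float, pipeline: float, higher_is_better: bool = True) -> float:
    """Assumed definition: relative change vs. Baseline, positive when the pipeline is better."""
    change = pipeline - baseline if higher_is_better else baseline - pipeline
    return 100.0 * change / baseline


def paired_t_statistic(baseline: Sequence[float], pipeline: Sequence[float]) -> float:
    """t = mean(d) / (sd(d) / sqrt(n)) with per-scenario differences d = pipeline - baseline."""
    diffs = [p - b for b, p in zip(baseline, pipeline)]
    return statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(len(diffs)))


# Two-sided critical value of Student's t for alpha = 0.05 with n - 1 = 7 degrees of freedom.
T_CRITICAL_DF7 = 2.365

# Synthetic per-scenario success rates for n = 8 scenarios (illustration only).
baseline = [0.50, 0.40, 0.60, 0.55, 0.45, 0.50, 0.35, 0.65]
pipeline = [0.80, 0.70, 0.85, 0.75, 0.80, 0.70, 0.65, 0.90]

t = paired_t_statistic(baseline, pipeline)
print(f"t = {t:.2f}, significant: {abs(t) > T_CRITICAL_DF7}")
print(f"x = {percent_improvement(statistics.mean(baseline), statistics.mean(pipeline)):.1f}%")
```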

6.4. Improvements and Advantages of the LLM-Based Automated Scenario Generation Pipeline

The proposed pipeline introduces a semi-automated framework for transforming textual Operational Design Domain (ODD) descriptions into executable CARLA simulation scenarios. It follows a structured process that begins with a fully automated scenario generation phase, which leverages structured prompt engineering and the Tree of Thoughts (ToT) reasoning paradigm. This stage requires no human-authored logic beyond prompt design. The subsequent script refinement phase is semi-automated and employs the red-LLM instance to apply known simulation error patterns and corresponding corrections. Although largely automated, this phase may require minor manual interventions, such as spawn-point adjustments or compensation for assets missing from CARLA.

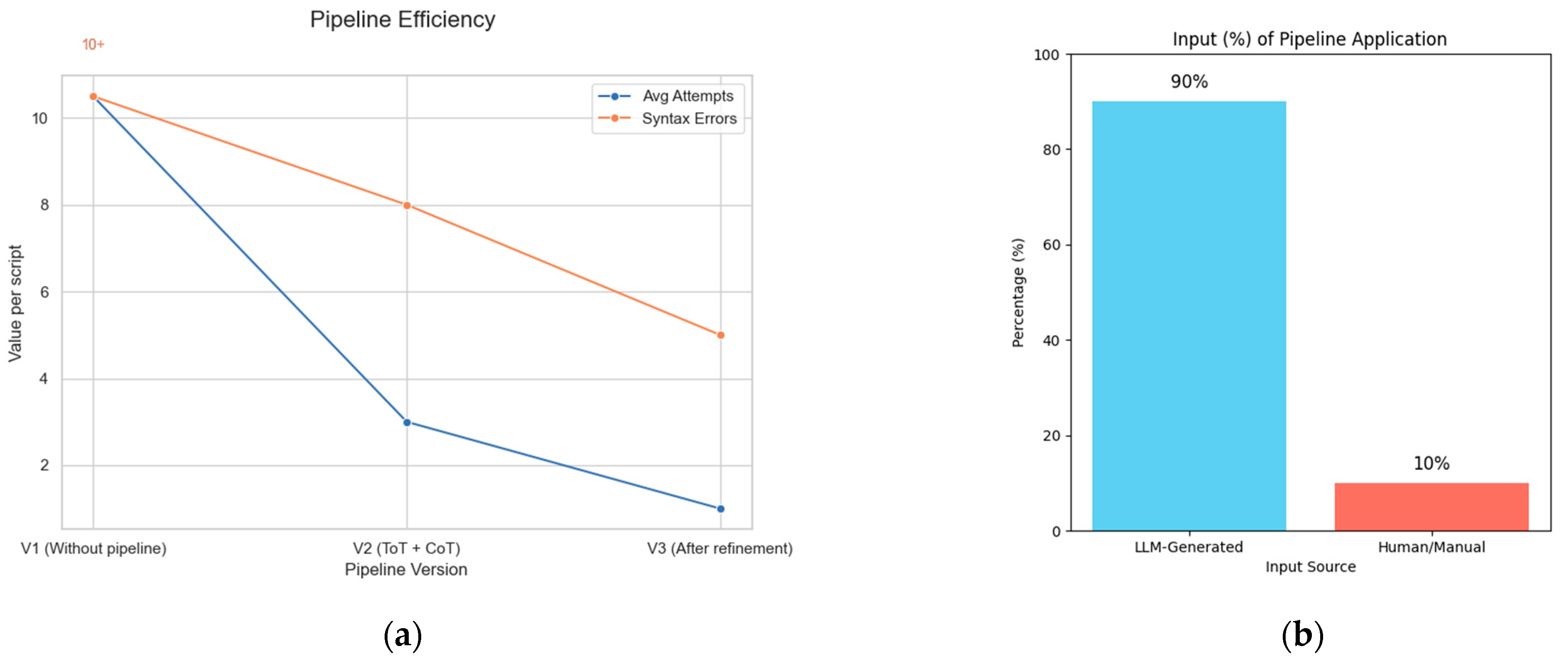

Figure 11 presents visual summaries of the pipeline’s performance.

Figure 11a compares the average number of prompt generations required to produce a valid simulation script against the average number of errors encountered per script.

Figure 11b illustrates the proportion of code generated by the LLM (90%) versus human contributions (10%), which were mainly limited to debugging and addressing CARLA-specific limitations.
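The text does not specify how the 90%/10% split was measured. One plausible line-level measure, assumed here, is the fraction of lines in the final working script that were kept verbatim from the LLM output; the `authorship_share` helper below is hypothetical and uses the standard `difflib` module.

```python
import difflib


def authorship_share(llm_script: str, final_script: str) -> float:
    """Fraction of lines in the final script kept verbatim from the LLM output."""
    llm_lines = llm_script.splitlines()
    final_lines = final_script.splitlines()
    if not final_lines:
        return 0.0
    # autojunk=False disables difflib's popularity heuristic, which could otherwise
    # ignore very frequent lines in long scripts.
    matcher = difflib.SequenceMatcher(a=llm_lines, b=final_lines, autojunk=False)
    kept = sum(block.size for block in matcher.get_matching_blocks())
    return kept / len(final_lines)


generated = [f"step_{i}()" for i in range(10)]
final = list(generated)
final[4] = "spawn_point = adjusted_point  # manual fix for a CARLA limitation"
share = authorship_share("\n".join(generated), "\n".join(final))
print(f"LLM: {share:.0%}, human: {1 - share:.0%}")  # LLM: 90%, human: 10%
```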

7. Discussion and Future Work

Although fully automated scenario generation has not yet been achieved, experimental results demonstrate that static elements and environmental conditions defined within the ODD can be reliably mapped into CARLA simulation scenarios using our approach and an LLM. This significantly reduces the manual effort required to develop test cases for autonomous driving tasks, representing a key advancement in the development pipeline.

Future experiments may explore a data-driven alternative that leverages machine learning techniques to further automate scenario generation. This approach would use a pre-trained large language model (LLM) fine-tuned on a curated dataset of real-world ODD descriptions, parameter extraction and parsing examples, and CARLA ScenarioRunner code. The dataset would be partitioned into training and testing subsets so that generalization performance can be evaluated on held-out examples. Fine-tuning could be performed using frameworks such as Hugging Face Transformers, PyTorch, or TensorFlow.

For models such as LLaMA 3, this adaptation may involve either full fine-tuning (i.e., adjusting model weights) or inference-time methods, such as prompt engineering and embedding-based conditioning. Additionally, an iterative refinement loop can be employed, where the outputs are evaluated and fed back into the model to improve the quality and compatibility of generated simulation code over time.
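The dataset preparation outlined above can be sketched in a few lines: each example pairs an ODD description with ScenarioRunner code, and a fixed-seed shuffle holds out a test subset. The record fields (`instruction`, `input`, `output`) follow a common instruction-tuning convention and are an assumption, as are the helper names; the instruction text reuses the script generation prompt from Section 4.5.

```python
import json
import random
from typing import Dict, List, Tuple

INSTRUCTION = (
    "Generate a CARLA ScenarioRunner-compatible Python script that defines a "
    "simulation scenario based on the provided ODD parameters."
)


def make_record(odd_description: str, scenario_code: str) -> Dict[str, str]:
    """One supervised example: ODD text in, ScenarioRunner code out."""
    return {"instruction": INSTRUCTION, "input": odd_description, "output": scenario_code}


def train_test_split(
    records: List[Dict[str, str]], test_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[Dict[str, str]], List[Dict[str, str]]]:
    """Shuffle with a fixed seed and hold out a test subset for measuring generalization."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize one JSON object per line, a format commonly used for fine-tuning data."""
    return "\n".join(json.dumps(record) for record in records)


records = [make_record(f"ODD description {i}", f"# ScenarioRunner code {i}") for i in range(10)]
train, test = train_test_split(records)
print(len(train), len(test))  # 8 2
```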

8. Conclusions

This study explores the potential of using large language models (LLMs) to automate the transformation of Operational Design Domain (ODD) descriptions into simulation scenarios for autonomous vehicles.

A key finding was that static elements related to the operational environment, such as weather conditions and road configurations, were reliably instantiated. In contrast, dynamic components often exhibited inaccuracies and inconsistent behavior, highlighting limitations in the current prompt engineering and script generation strategies when handling complex, interactive elements.

Several critical research questions emerged from these observations:

How can prompt engineering be optimized to improve the consistency and accuracy of generated scripts?

What are the underlying causes of dynamic actor execution errors, and how can these be systematically addressed?

What methodologies can effectively incorporate simulation feedback into the fine-tuning process for better scenario generation?

Addressing these questions in future work could significantly improve the reliability and realism of dynamically rich simulation scenarios. Iterative refinement strategies, such as red teaming techniques, may provide a structured mechanism to identify and correct failure points in the generation pipeline.

In summary, this paper presented a pipeline that leverages LLMs to bridge the gap between abstract ODD definitions and executable simulation scenarios in CARLA. By integrating model-based reasoning with data-driven techniques, the pipeline provides a structured approach that begins with scenario decomposition and progresses through code generation and refinement. Experimental results demonstrated the effectiveness of the approach for static scenario components, while also identifying key areas for improvement in handling dynamic elements.

Author Contributions

Conceptualization, A.A.D. and U.B.; Formal analysis, A.A.D.; Investigation, A.A.D.; Methodology, A.A.D.; Project administration, U.B.; Supervision, U.B.; Writing—original draft, A.A.D.; Writing—review and editing, U.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors thank Markus Lange-Hegermann for his valuable discussions and insights during the development of this work.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ODD | Operational Design Domain |
| LLM | Large Language Model |
| AV | Autonomous Vehicle |
| ToT | Tree of Thoughts |
| CoT | Chain of Thought |
| ISO | International Organization for Standardization |
| NPC | Non-Player Character |
| OE | Operational Environment |

References

1. PAS 1883:2020; Operational Design Domain (ODD) Taxonomy for an Automated Driving System (ADS)—Specification. BSI Standards Limited: London, UK, 2020. Available online: https://knowledge.bsigroup.com/products/operational-design-domain-odd-taxonomy-for-an-automated-driving-system-ads-specification (accessed on 20 November 2024).

2. SAE J3016; Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. SAE International: Warrendale, PA, USA, 2021.

3. Berman, B. The Key to Autonomous Vehicle Safety Is ODD. Available online: https://www.sae.org/news/2019/11/odds-for-av-testing (accessed on 21 November 2024).

4. ASAM. ASAM OpenODD V1.0.0. Available online: https://www.asam.net/standards/detail/openodd (accessed on 20 November 2024).

5. Miao, Q.; Zheng, W.; Lv, Y.; Huang, M.; Ding, W.; Wang, F.-Y. DAO to HANOI via DeSci: AI paradigm shifts from AlphaGo to ChatGPT. IEEE/CAA J. Autom. Sin. 2023, 10, 877–897.

6. Irvine, P.; Zhang, X.; Khastgir, S.; Schwalb, E.; Jennings, P. A two-level abstraction ODD definition language: Part I. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; pp. 2614–2621.

7. Esenturk, E.; Wallace, A.G.; Khastgir, S.; Jennings, P. Identification of traffic accident patterns via cluster analysis and test scenario development for autonomous vehicles. IEEE Access 2022, 10, 6660–6675.

8. Li, X.; Wang, F.-Y. Scenarios engineering: Enabling trustworthy and effective AI for autonomous vehicles. IEEE Trans. Intell. Veh. 2023, 8, 2305–3210.

9. Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

10. Li, X.; Wang, Y.; Yan, L.; Wang, K.; Deng, F.; Wang, F.-Y. ParallelEye-CS: A new dataset of synthetic images for testing the visual intelligence of intelligent vehicles. IEEE Trans. Veh. Technol. 2019, 68, 9619–9631.

11. Li, L.; Lin, Y.; Wang, Y.; Wang, F.-Y. Simulation driven AI: From artificial to actual and vice versa. IEEE Intell. Syst. 2023, 38, 3–8.

12. Wu, D.; Han, W.; Liu, Y.; Wang, T.; Xu, C.Z.; Zhang, X.; Shen, J. Language prompt for autonomous driving. arXiv 2023, arXiv:2309.04379.

13. Elhafsi, A.; Sinha, R.; Agia, C.; Schmerling, E.; Nesnas, I.A.D.; Pavone, M. Semantic anomaly detection with large language models. Auton. Robot. 2023, 47, 1035–1055.

14. Sha, H.; Mu, Y.; Jiang, Y.; Chen, L.; Xu, C.; Luo, P.; Li, S.E.; Tomizuka, M.; Zhan, W.; Ding, M. Language MPC: Large language models as decision makers for autonomous driving. arXiv 2023, arXiv:2310.03026.

15. Wen, L.; Fu, D.; Li, X.; Cai, X.; Ma, T.; Cai, P.; Dou, M.; Shi, B.; He, L.; Qiao, Y. DiLu: A knowledge-driven approach to autonomous driving with large language models. arXiv 2023, arXiv:2309.16292.

16. Wang, X.; Zhu, Z.; Huang, G.; Chen, X.; Zhu, J.; Lu, J. DriveDreamer: Towards real-world-driven world models for autonomous driving. arXiv 2023, arXiv:2309.09777.

17. Zhang, X.; Khastgir, S.; Asgari, H.; Jennings, P. Test framework for automatic test case generation and execution aimed at developing trustworthy AVs from both verifiability and certifiability aspects. In Proceedings of the IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 312–319.

18. ISO 34503:2023(E); ODD Taxonomy—Top Level ODD Classification. International Organization for Standardization: Geneva, Switzerland, 2023.

19. Scenario Runner Documentation. CARLA ROS Scenario Runner Documentation. Available online: https://scenario-runner.readthedocs.io/en/latest (accessed on 29 December 2024).

20. ASAM. ASAM OpenSCENARIO V1.0.0. Available online: https://releases.asam.net/OpenSCENARIO/1.0.0/ASAM_OpenSCENARIO_BS-1-2_User-Guide_V1-0-0.html (accessed on 28 November 2024).

21. Zhang, Z.; Zhang, A.; Li, M.; Smola, A. Automatic chain of thought prompting in large language models. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023; pp. 754–767.

22. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

23. Perez, E.; Kiela, D.; Cho, K. True few-shot learning with language models. Adv. Neural Inf. Process. Syst. 2021, 34, 11054–11070.

24. Li, X.; Teng, S.; Liu, B.; Dai, X.; Na, X.; Wang, F.-Y. Advanced scenario generation for calibration and verification of autonomous vehicles. IEEE Trans. Intell. Veh. 2023, 8, 3211–3216.

25. Gabeur, V.; Sun, C.; Alahari, K.; Schmid, C. Multi-modal transformer for video retrieval. In Proceedings of the European Conference on Computer Vision, Glasgow, Scotland, 23–28 August 2020; pp. 214–229.

26. Huang, P.; Han, J.; Liu, N.; Ren, J.; Zhang, D. Scribble-supervised video object segmentation. IEEE/CAA J. Autom. Sin. 2021, 9, 339–353.

27. Tian, Y.; Li, X.; Wang, K.; Wang, F.-Y. Training and testing object detectors with virtual images. IEEE/CAA J. Autom. Sin. 2018, 5, 539–546.

28. Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.L.; Cao, Y.; Narasimhan, K. Tree of Thoughts: Deliberate Problem Solving with Large Language Models. arXiv 2023, arXiv:2305.10601v2.

29. CARLA Documentation. Running CARLA—Can’t Run A Script. Available online: https://carla.readthedocs.io/en/latest/build_faq (accessed on 17 December 2024).

30. Ge, S.; Zhou, C.; Hou, R.; Khabsa, M.; Wang, Y.C.; Wang, Q.; Han, J.; Mao, Y. MART: Improving LLM Safety with Multi-round Automatic Red-Teaming. arXiv 2023, arXiv:2311.07689v1.

Figure 1.

BSI PAS 1883: ODD Taxonomy [1].

Figure 2.

High-level workflow for logical scenario generation based on ODD.

Figure 3.

Illustration of LLM-driven scenario generation.

Figure 4.

Creating a new LLM instance from the Initial Tree by applying the ToT prompting technique.

Figure 5.

The script generation process of the Tree LLMs, which map out the ODD parameters.

Figure 6.

RedLLM facilitating the transformation of the Diverse Tree into the Simulatable Tree.

Figure 7.

Final LLM instance, referred to as the Augmented LLM.

Figure 8.

Screenshots from Scenario 1. (a) The urban downtown intersection scenario, featuring multiple lanes, well-marked roadways, designated pedestrian crosswalks, and advanced traffic signal systems; (b) the absence of construction workers (NPC pedestrians) walking slowly near the cones and traffic signs; (c) a temporary construction zone on the right-hand side of the approach to the intersection, with lane closures indicated by cones and traffic signs, requiring the vehicle to perform a lane change safely.

Figure 9.

Screenshots from Scenario 2. (a) A suburban residential area with school zone dynamics, featuring parked vehicles along the roadside and occasional moving vehicles on a narrow street; (b) a substitute for a school bus (e.g., a water truck) spawned statically on the sidewalk, alongside occasional moving vehicles on the highway; (c) early morning conditions characterized by soft, low-angle sunlight and light fog or dew, simulated with moderate fog density and wetness; (d) the absence of pedestrians on crosswalks, with occasional cyclists moving unpredictably along the highway.

Figure 10.

Comparison of metrics for eight scenarios with and without the pipeline, covering scenario script generation, refinement, and simulation.

Figure 11.

Performance charts for the pipeline metrics. (a) Pipeline versions vs. average prompts and errors per script; (b) share of work: 90% of the code/logic generated by the LLM and 10% human input for debugging and asset issues.

Table 1.

ODD-to-code parameter mapping.

| ODD Parameter | CARLA Code Representation |
|---|---|
| Weather (rain, fog, etc.) * | carla.WeatherParameters() |
| Environment (road type, lane markings, etc.) | CARLA world and map settings |
| Actors (vehicles, pedestrians, etc.) | carla.Vehicle() and carla.Walker() |
| Event Timeline | Scenario step sequence |
| Behavior (speed, braking, lane changes, etc.) | scenario trigger() and behavior.py |
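
The weather row of Table 1 can be made concrete with a small translation layer from qualitative ODD attributes to numeric weather settings. The sketch below is illustrative. The keyword names (`cloudiness`, `precipitation`, `fog_density`, `wetness`, `sun_altitude_angle`) are fields of `carla.WeatherParameters`. The intensity levels, sun elevations, ODD attribute keys, and the function `odd_weather_to_params` are hypothetical choices, not values from the paper. The `carla` module is deliberately not imported, so the mapping can be checked without a simulator.

```python
from typing import Dict

# Hypothetical intensity levels on CARLA's 0-100 weather scale; real values
# would need calibration against the ODD specification.
LEVELS: Dict[str, float] = {"none": 0.0, "light": 20.0, "moderate": 50.0, "heavy": 90.0}

# Hypothetical sun elevation (degrees above the horizon) per time of day.
SUN_ALTITUDE: Dict[str, float] = {"early_morning": 10.0, "noon": 80.0, "night": -30.0}


def odd_weather_to_params(odd: Dict[str, str]) -> Dict[str, float]:
    """Translate qualitative ODD weather attributes into keyword arguments
    named after carla.WeatherParameters fields."""
    rain = LEVELS[odd.get("rain", "none")]
    return {
        "cloudiness": LEVELS[odd.get("clouds", "none")],
        "precipitation": rain,
        "fog_density": LEVELS[odd.get("fog", "none")],
        # Assumption: road wetness follows rain, or dew when it is wetter.
        "wetness": max(rain, LEVELS[odd.get("dew", "none")]),
        "sun_altitude_angle": SUN_ALTITUDE[odd.get("time_of_day", "noon")],
    }


# Scenario 2-style ODD: early morning, moderate fog density and wetness.
params = odd_weather_to_params(
    {"time_of_day": "early_morning", "fog": "moderate", "dew": "moderate"}
)
```

With a running CARLA server, the resulting dictionary could be applied as `world.set_weather(carla.WeatherParameters(**params))`. Keeping the mapping separate from the simulator call makes it easy to unit-test the "Weather and Environment Effects" check.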

Table 2.

Performance metrics measured across the process, from the instantiation of the Initial Tree to the final output of the Augmented LLM.

| Metric | Description |
|---|---|
| Scenario Accuracy | Percentage of scenarios correctly mapped from the ODD: (Correct Mappings/Total Scenarios) × 100%. |
| Code Validity (Syntax/API Errors) | Number of syntax/API errors per script, counted by running the generated scripts. |
| Logical Consistency (Event Sequencing) | Agreement between the expected and generated event order in each scenario. |
| Execution Success Rate | Percentage of scripts that run without manual fixes: (Successful Runs/Total Runs) × 100%. |
| Debugging Time Reduction | Time taken to fix errors in a script before it runs correctly. |
| Vehicle Behavior Accuracy | Whether the generated vehicle behaviors match the expected real-world actions. |
| Weather and Environment Effects | Whether the ODD weather settings are properly applied in CARLA. |
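
The ratio-based metrics in Table 2 reduce to simple counts over per-script outcomes. The sketch below shows one way to aggregate them. The `ScriptResult` record and its field names are hypothetical, and how each outcome is judged (e.g., what counts as a correct mapping) is left to the evaluation protocol.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ScriptResult:
    # Hypothetical per-script record; field names are illustrative.
    correctly_mapped: bool   # scenario matches its ODD description
    ran_without_fixes: bool  # executed in CARLA with no manual edits
    syntax_api_errors: int   # errors counted while running the script


def percentage(hits: int, total: int) -> float:
    """(hits / total) x 100%, returning 0 for an empty set."""
    return 100.0 * hits / total if total else 0.0


def summarize(results: List[ScriptResult]) -> Dict[str, float]:
    n = len(results)
    return {
        # (Correct Mappings / Total Scenarios) x 100%
        "scenario_accuracy_pct": percentage(sum(r.correctly_mapped for r in results), n),
        # (Successful Runs / Total Runs) x 100%
        "execution_success_pct": percentage(sum(r.ran_without_fixes for r in results), n),
        # Mean syntax/API errors per script
        "errors_per_script": sum(r.syntax_api_errors for r in results) / n if n else 0.0,
    }
```

Counting booleans with `sum` relies on `True` being treated as 1, which keeps each formula a one-line transcription of its Table 2 definition.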

Table 3.

Improvement (%) achieved by applying the pipeline across all scenarios.

| Metric | Baseline (Mean ± SD) | Pipeline (Mean ± SD) | Improvement (%) | p-Value |
|---|---|---|---|---|
| Scenario Accuracy | 30 ± 4% | 50 ± 5% | +66.7% | 0.004 |
| Logical Consistency (Correct Event Sequencing) | 20 ± 6% | 50 ± 5% | +150% | 0.002 |
| Syntax/API Errors per Script | 8 ± 2 errors | 5 ± 1.5 errors | −37.5% | 0.015 |
| Execution Success Rate (Without Manual Fixes) | 40 ± 5% | 60 ± 4% | +50% | 0.008 |
| Time to Debug (min) | 10 ± 2 min | 3 ± 1 min | −70% | 0.002 |
| Vehicle Behavior Accuracy | 5 ± 3% | 20 ± 4% | +300% | 0.001 |
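
The Improvement column in Table 3 is consistent with the relative change of the pipeline mean with respect to the baseline mean, (pipeline − baseline) / baseline × 100%. The short check below recomputes it from the table's means. For metrics that are already percentages, this is a relative change, not a difference in percentage points. For example, scenario accuracy rises by 20 percentage points, which is +66.7% relative to the 30% baseline. The p-values cannot be recomputed from the reported means alone.

```python
def relative_change(baseline: float, pipeline: float) -> float:
    """Percentage change of the pipeline mean relative to the baseline mean."""
    return 100.0 * (pipeline - baseline) / baseline


# (baseline mean, pipeline mean) as reported in Table 3.
TABLE_3 = {
    "Scenario Accuracy": (30, 50),
    "Logical Consistency": (20, 50),
    "Syntax/API Errors per Script": (8, 5),
    "Execution Success Rate": (40, 60),
    "Time to Debug": (10, 3),
    "Vehicle Behavior Accuracy": (5, 20),
}

for metric, (base, pipe) in TABLE_3.items():
    print(f"{metric}: {relative_change(base, pipe):+.1f}%")
```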

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).