Towards Energy Efficiency of HPC Data Centers: A Data-Driven Analytical Visualization Dashboard Prototype Approach

Abstract

1. Introduction

1.1. Background

1.2. Aim and Objectives

- Conducting a literature review on previous work related to digital twins for data centers and analyzing the structure and characteristics of the dataset.

- Examining the available datasets to characterize their features.

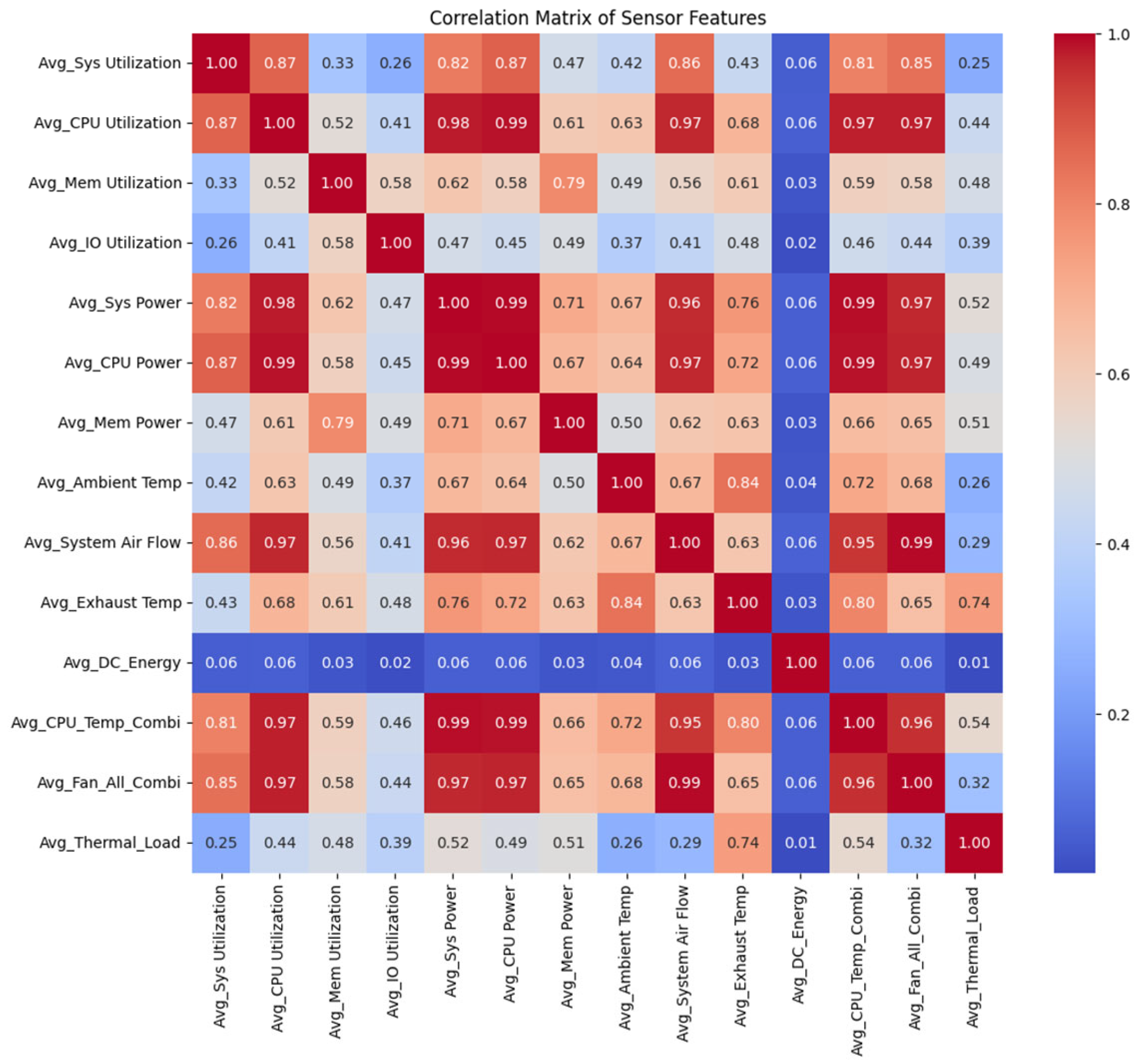

- Understanding the relationships and dependencies among variables within and between the sensor and job datasets.

- Developing and training predictive models for thermal and energy consumption.

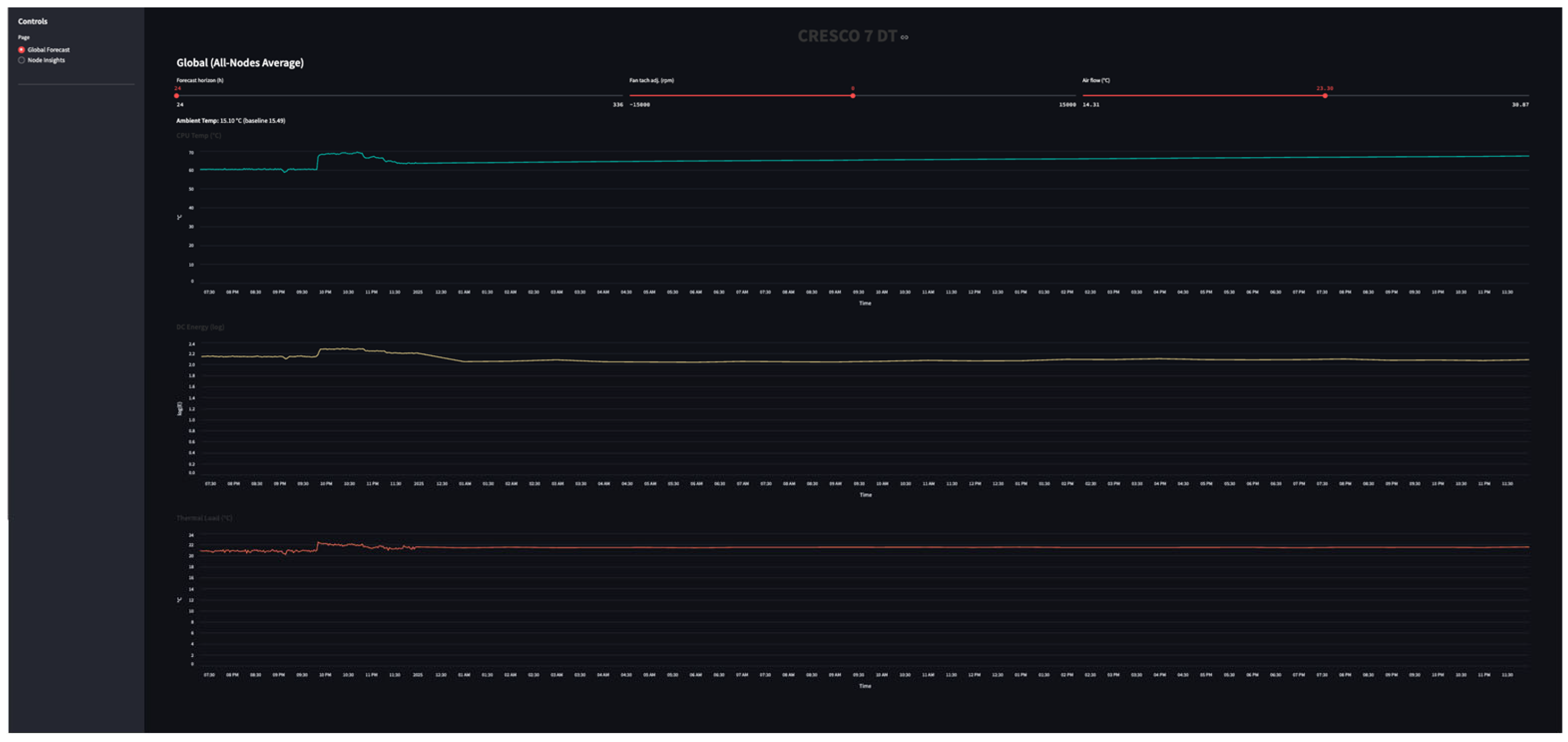

- Integrating the predictive models into a digital twin-based representation of the HPC data center.

- Phase 1: Descriptive analysis of the dataset.

- Phase 2: Correlation and inferential analysis.

- Phase 3: Predictive modeling for energy and thermal metrics.

- Phase 4: Visualization of the digital twin-inspired analytical dashboard.

1.3. CRESCO7 Cluster

1.4. Rationale

1.5. Contribution of Research

1.6. Paper Structure

2. Related Work

2.1. Current Data Center Situation

2.2. Energy Consumption for Power Management: Challenges and Improvements

2.3. Advanced Cooling Technologies and Energy Implications

| Cooling Technology | Typical Efficiency (PUE or Energy Saving) | Relative Cost (CapEx) | Adoption (Current Trend) |
|---|---|---|---|
| Traditional Air Cooling | PUE ~1.3–1.5 (baseline) | Low (baseline) | Nearly universal in legacy designs (dominant overall) |
| Air Cooling + Rear-Door Heat Exchanger | PUE ~1.2–1.3 (improved by ~10–20%) | Medium | Moderate adoption |
| Direct Liquid (Cold Plate) | PUE ~1.1–1.2 (significant improvement) | Medium–High | Growing adoption in HPC and AI clusters (select deployments) |
| Single-Phase Immersion Cooling (Sp-LIC) | PUE ~1.03–1.05 (near optimal, 10–50% energy saving) [22] | High (specialized infrastructure needed) | Limited adoption (niche trials by hyperscalers; a few production deployments) |
| Two-Phase Immersion Cooling | PUE ~1.02–1.05 (similar to single-phase) | Very High (complex systems, costly fluid) | Very limited (experimental deployments only) |
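The PUE ranges in the table convert directly into energy. PUE is total facility energy divided by IT equipment energy, so for a fixed IT load the non-IT overhead (cooling, power conversion, lighting) equals IT energy × (PUE − 1). The sketch below assumes a hypothetical annual IT load of 1000 MWh. It compares 1.4 and 1.04, the midpoints of the traditional air cooling and single-phase immersion rows.

```python
def facility_energy(it_energy_mwh: float, pue: float) -> float:
    """Total facility energy implied by an IT energy figure and a PUE (PUE = total / IT)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0 by definition")
    return it_energy_mwh * pue


def overhead_energy(it_energy_mwh: float, pue: float) -> float:
    """Non-IT energy (cooling, power conversion, lighting) = IT energy * (PUE - 1)."""
    return facility_energy(it_energy_mwh, pue) - it_energy_mwh


# Hypothetical annual IT load; PUEs are midpoints of two table rows.
IT_MWH = 1000.0
for label, pue in [("Traditional air cooling", 1.4), ("Single-phase immersion", 1.04)]:
    print(f"{label:<24} PUE {pue:.2f}: facility {facility_energy(IT_MWH, pue):7.1f} MWh, "
          f"overhead {overhead_energy(IT_MWH, pue):6.1f} MWh")

saving = facility_energy(IT_MWH, 1.4) - facility_energy(IT_MWH, 1.04)
print(f"Saving: {saving:.1f} MWh ({saving / facility_energy(IT_MWH, 1.4):.1%} of facility energy)")
```

Under these assumptions, moving from PUE 1.4 to 1.04 removes 360 MWh of overhead, roughly a quarter of total facility energy. The IT energy itself does not change, so PUE-based savings say nothing about how efficiently the IT load is used.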

2.4. Digital Twins for HPC Data Centers

2.5. Reliability and Adoption of Immersion Cooling

2.6. Machine Learning for Energy Savings in Data Centers

3. Methodology

3.1. Phase 1: Descriptive Statistics and Data Analysis

3.2. Phase 2: Inferential Statistical Analysis

3.3. Phase 3: Machine Learning Model for Cooling Optimization

- (i) Deep learning models, such as Long Short-Term Memory (LSTM) networks, require significantly larger training datasets and extensive tuning. They are also prone to overfitting when trained on only four months of data. Their training and inference times are also considerably longer, which could introduce latency in real-time applications.

- (ii) Simpler statistical models such as ARIMA or Prophet rely on specific structures (seasonality or trends) and typically model one time series at a time. This makes it difficult to incorporate multiple correlated inputs, such as utilization rates or fan speeds, without considerable manual feature engineering for each relationship.

- (iii) The other candidate models were resource-intensive and took much longer to train. This would degrade the responsiveness of our analytical dashboard and defeat its intended purpose.
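Point (ii) is essentially about feature construction: a multivariate regressor can take several correlated sensor series as inputs in one pass. The sketch below turns aligned, equally spaced series into a supervised (X, y) table for one-step-ahead prediction. The column names come from the Sensor Data table. The lag count and the toy values are hypothetical. Any tabular regressor (for example, a tree ensemble) could then be fitted on X and y. This sketch does not claim to reproduce the model the authors selected.

```python
from collections.abc import Sequence


def make_lagged_dataset(
    series: dict[str, Sequence[float]],
    target: str,
    lags: int = 2,
) -> tuple[list[list[float]], list[float]]:
    """Build (X, y) for one-step-ahead regression from aligned sensor series.

    Row t holds every column's values at times t-lags .. t-1 (columns in sorted
    order, including the target's own history); the label is target[t].
    """
    if lags < 1:
        raise ValueError("lags must be at least 1")
    lengths = {len(values) for values in series.values()}
    if len(lengths) != 1:
        raise ValueError("need at least one series, all of equal length")
    n = lengths.pop()
    names = sorted(series)  # fixed column order keeps features aligned across rows
    X: list[list[float]] = []
    y: list[float] = []
    for t in range(lags, n):
        row: list[float] = []
        for name in names:
            row.extend(series[name][t - lags:t])
        X.append(row)
        y.append(series[target][t])
    return X, y


# Toy, equally spaced readings for illustration only (not CRESCO7 data).
sensors = {
    "CPU Utilization": [20.0, 40.0, 60.0, 80.0, 70.0],
    "Fan1A Tach": [6000.0, 6400.0, 7200.0, 8100.0, 7800.0],
    "CPU 1 Temp": [41.0, 45.0, 50.0, 56.0, 54.0],
}
X, y = make_lagged_dataset(sensors, target="CPU 1 Temp", lags=2)
print(len(X), "rows x", len(X[0]), "features")  # → 3 rows x 6 features
```

Each additional input column adds `lags` features without any per-relationship modeling. A univariate model such as ARIMA would need that modeling to be added by hand.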

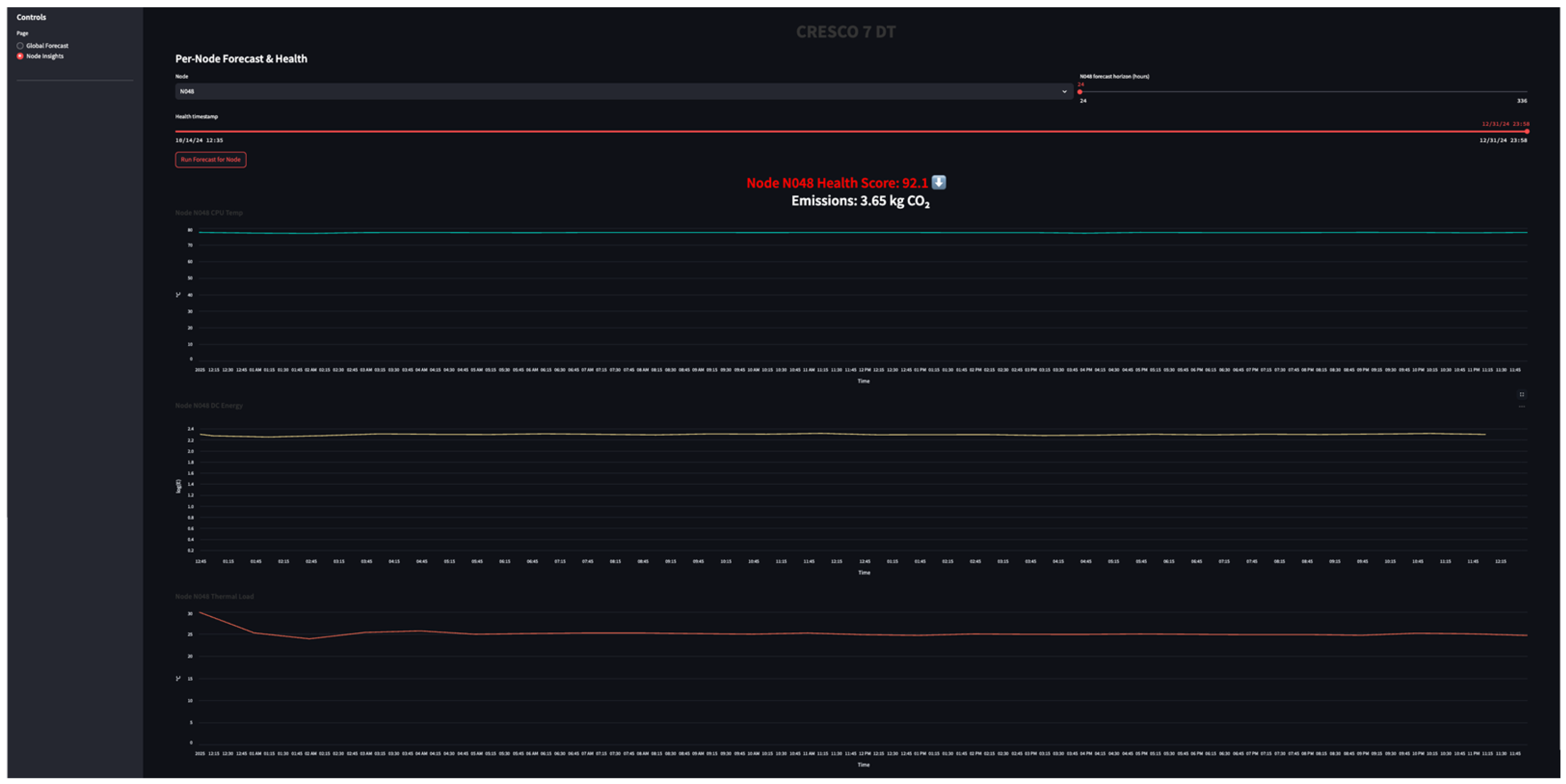

3.4. Phase 4: Analytical Visualization Dashboard Development

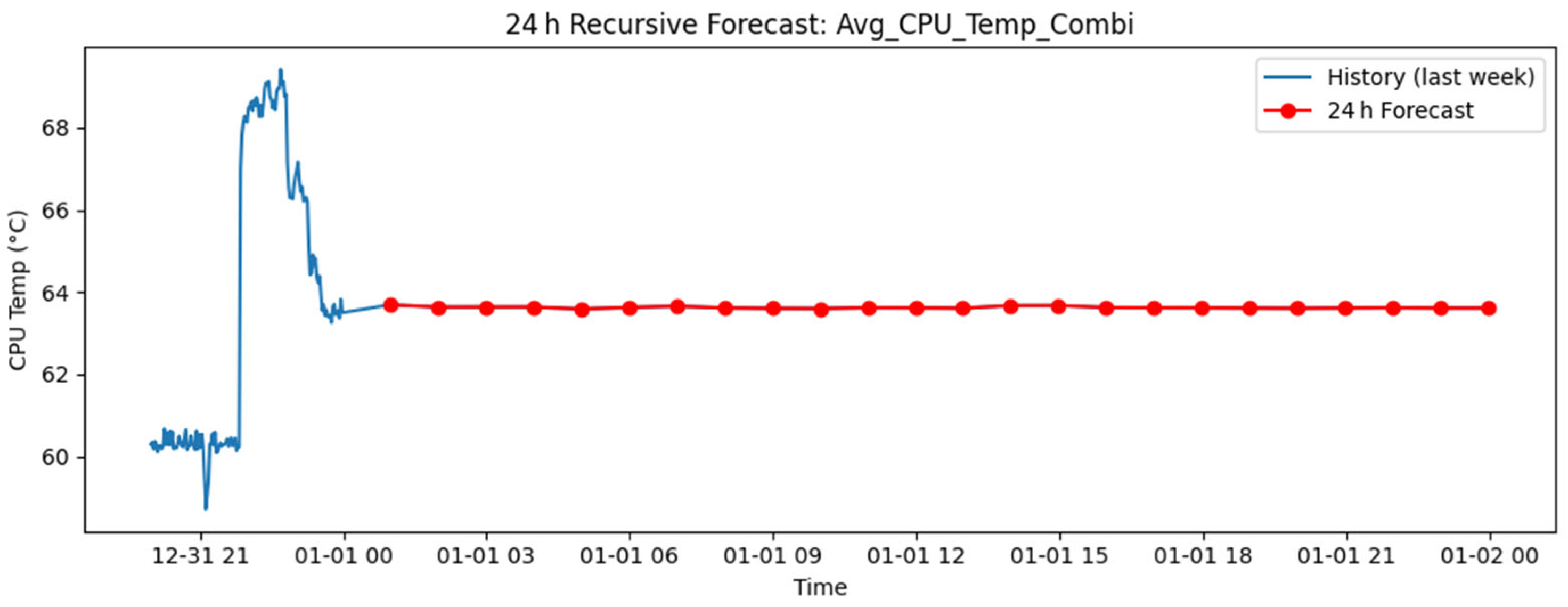

- Average CPU temperature.

- Total data center energy consumption.

- Average thermal load.
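The three headline indicators can be computed from batches of raw sensor rows. The sketch below assumes each row is a dict keyed by the Sensor Data column names. It also relies on two hypothetical assumptions, which are labeled in the code. First, `DC Energy` is treated as a per-interval reading, so totals are sums. Second, thermal load is approximated as `Exhaust Temp` minus `Ambient Temp`. That choice matches the °C units reported for thermal load, but it is not a definition stated in this section.

```python
from statistics import fmean


def dashboard_kpis(rows: list[dict[str, float]]) -> dict[str, float]:
    """Headline dashboard indicators from a batch of sensor rows.

    Hypothetical assumptions (not confirmed by the paper):
    - "DC Energy" is a per-interval reading, so the total is a plain sum
      (a cumulative counter would need last-minus-first per node instead);
    - thermal load is approximated as Exhaust Temp - Ambient Temp, in °C.
    """
    if not rows:
        raise ValueError("no sensor rows")
    # Both CPU sockets contribute equally to the average CPU temperature.
    cpu_temps = [row[col] for row in rows for col in ("CPU 1 Temp", "CPU 2 Temp")]
    return {
        "avg_cpu_temp_c": fmean(cpu_temps),
        "total_dc_energy": sum(row["DC Energy"] for row in rows),
        "avg_thermal_load_c": fmean(row["Exhaust Temp"] - row["Ambient Temp"] for row in rows),
    }
```

A dashboard would typically call this per refresh window, such as the latest hour of readings. With that design, the indicators stay cheap to recompute as new data arrive.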

3.5. Scalability and Retraining

4. Results and Discussion

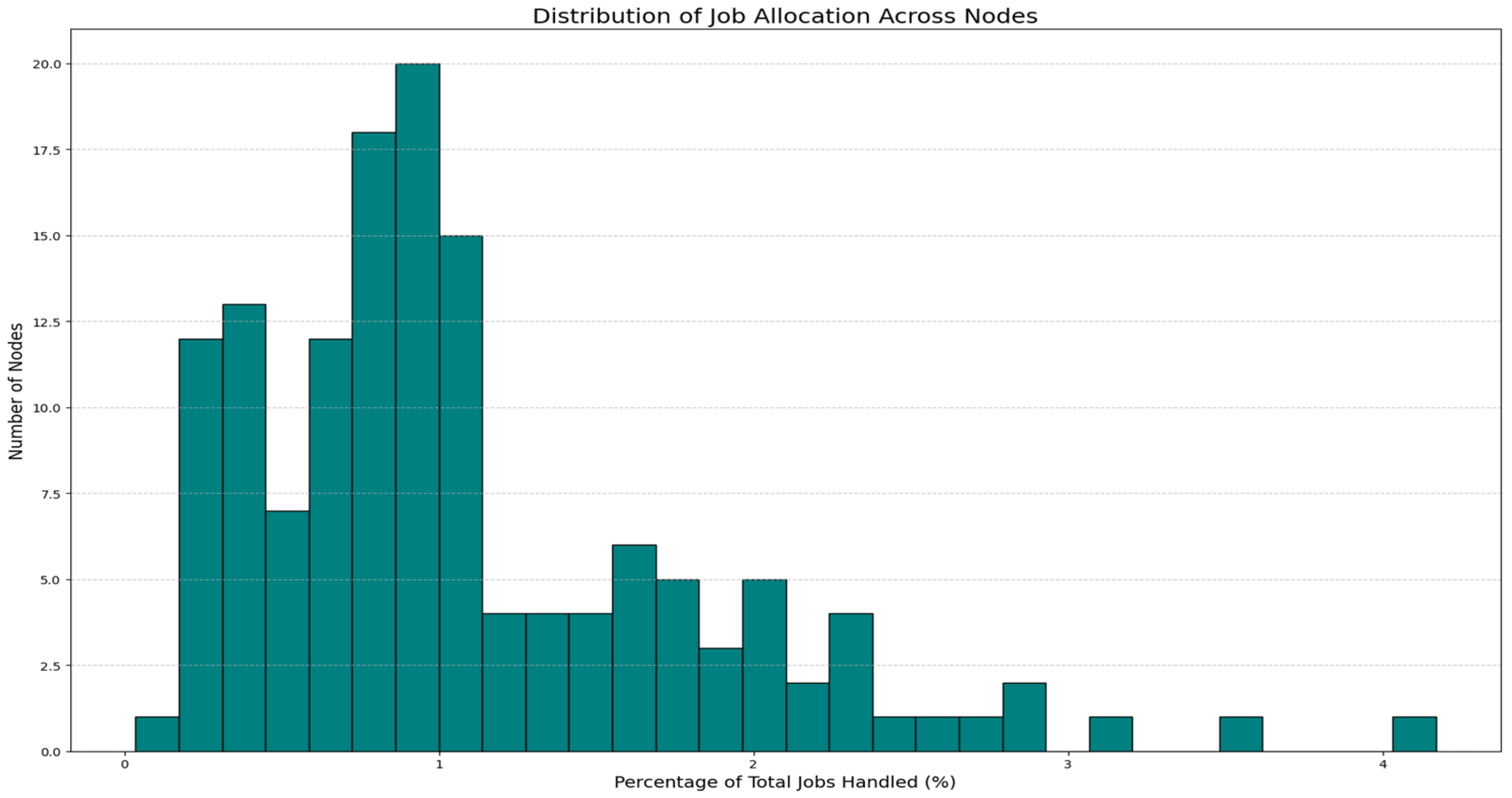

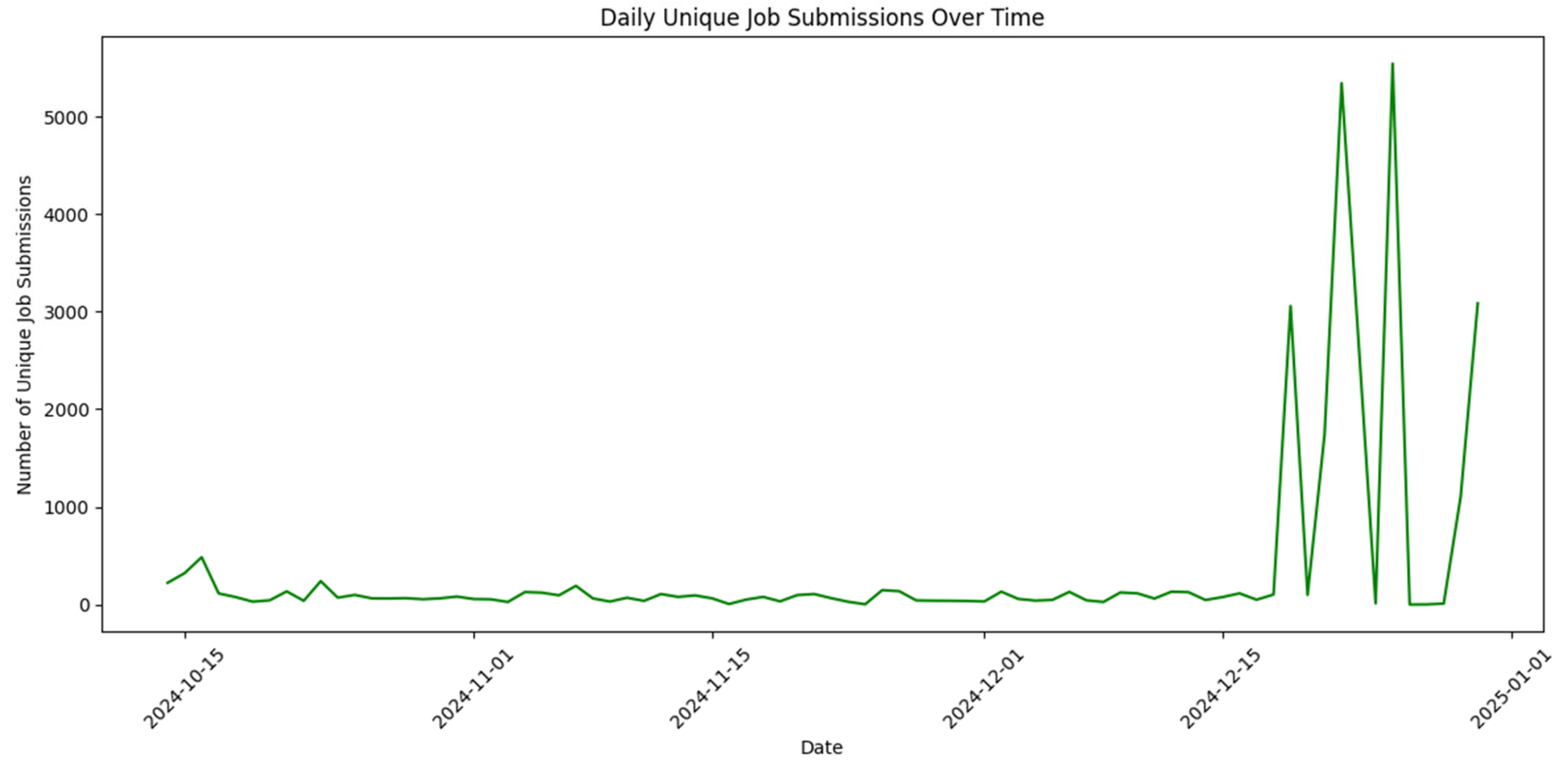

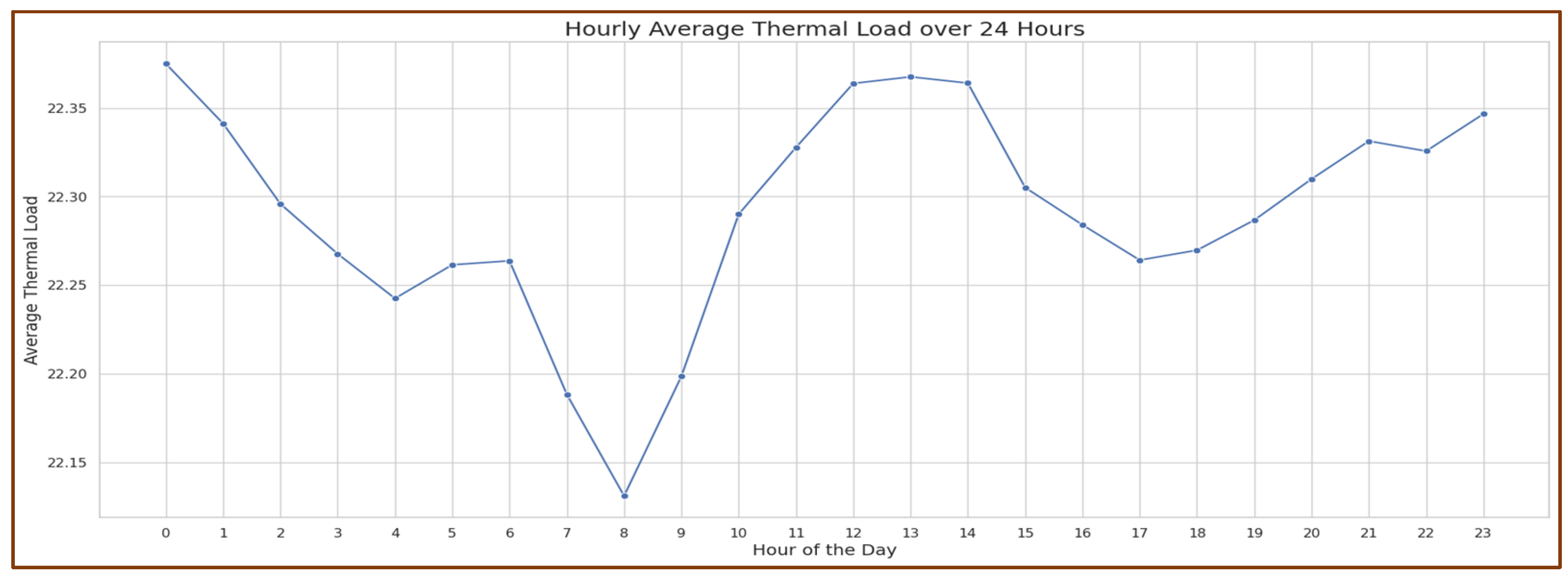

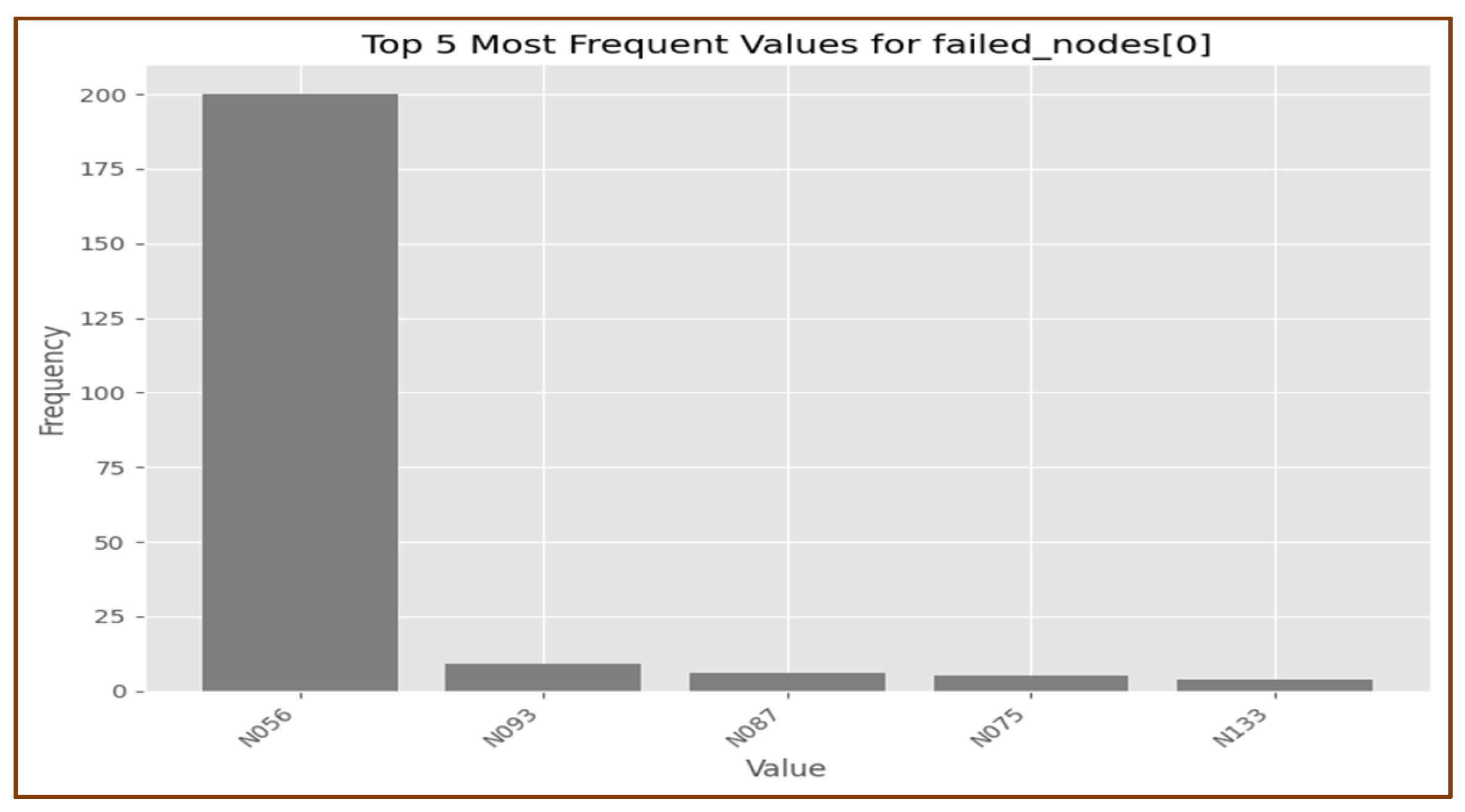

4.1. Phase 1: Data Exploration and Analysis

4.2. Phase 2: Inferential Statistics

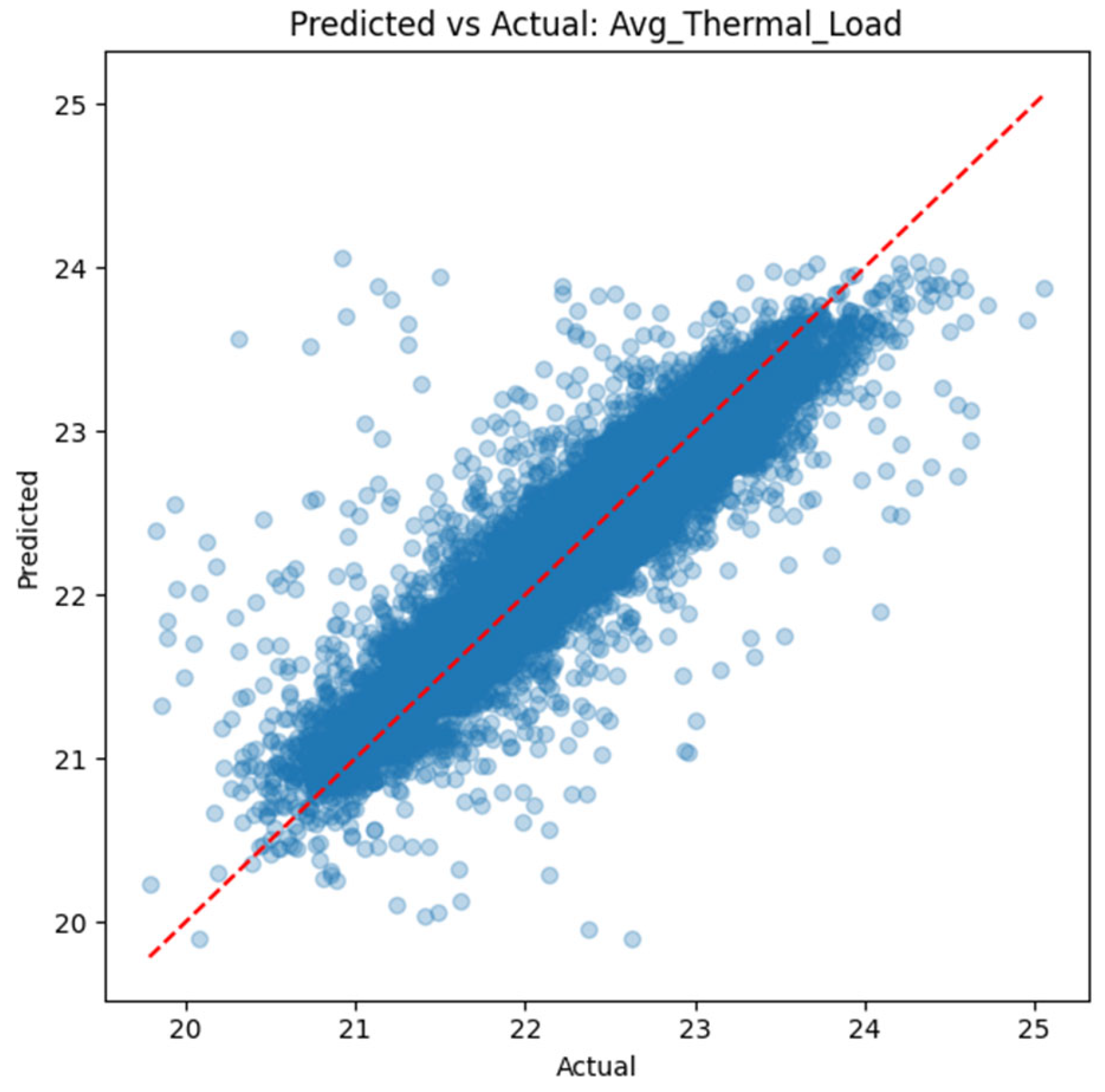

4.3. Phase 3: Predictive Modeling of Hot Aisle Temperature and Cooling Parameters

- CPU temperature: RMSE = 0.50 °C, MAE = 0.28 °C, MAPE = 0.41%

- DC energy: RMSE = 0.04, MAE = 0.03, MAPE = 1.44%

- Thermal load: RMSE = 0.26 °C, MAE = 0.18 °C, MAPE = 0.81%
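The three error metrics follow their standard definitions:

- RMSE = sqrt(mean((y − ŷ)²))
- MAE = mean(|y − ŷ|)
- MAPE = 100 × mean(|(y − ŷ) / y|)

RMSE and MAE are in the units of the target. MAPE is a percentage. A minimal plain-Python sketch:

```python
import math
from collections.abc import Sequence


def regression_metrics(actual: Sequence[float], predicted: Sequence[float]) -> dict[str, float]:
    """RMSE and MAE (in the data's units) and MAPE (percent) for paired values."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    errors = [a - p for a, p in zip(actual, predicted)]
    n = len(errors)
    return {
        "rmse": math.sqrt(sum(e * e for e in errors) / n),
        "mae": sum(abs(e) for e in errors) / n,
        "mape_pct": 100.0 * sum(abs(e / a) for e, a in zip(errors, actual)) / n,
    }
```

RMSE is always at least as large as MAE. The gap between them grows when a few errors are much larger than the rest, so the CPU temperature figures above (RMSE 0.50 °C versus MAE 0.28 °C) suggest some occasional larger misses. MAPE is scale-relative, which is why it cannot be compared across targets the way RMSE can within one target.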

4.4. Phase 4: Building the Analytical Visualization Dashboard with Modeling

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Galkin, N.; Ruchkin, M.; Vyatkin, V.; Yang, C.-W.; Dubinin, V. Automatic Generation of Data Center Digital Twins for Virtual Commissioning of Their Automation Systems. IEEE Access 2023, 11, 4633–4644.

2. Avgerinou, M.; Bertoldi, P.; Castellazzi, L. Trends in Data Center Energy Consumption under the European Code of Conduct for Data Center Energy Efficiency. Energies 2017, 10, 1470.

3. Muller, U.; Strunz, K. Resilience of Data Center Power System: Modelling of Sustained Operation under Outage, Definition of Metrics, and Application. J. Eng. 2019, 2019, 8419–8427.

4. Haghighat, M.; Shoukourian, H.; Bui, H.H.; Karanasos, K.; Sehgal, N. Towards Greener Large-Scale AI Training Systems: Challenges and Design Opportunities. arXiv 2025, arXiv:2503.11011.

5. Chinnici, A.; Ahmadzada, E.; Kor, A.-L.; De Chiara, D.; Domínguez-Díaz, A.; de Marcos Ortega, L.; Chinnici, M. Towards Sustainability and Energy Efficiency Using Data Analytics for HPC Data Center. Electronics 2024, 13, 3542.

6. Arora, N.K.; Mishra, I. United Nations Sustainable Development Goals 2030 and Environmental Sustainability: Race against Time. Environ. Sustain. 2019, 2, 339–342.

7. Berezovskaya, Y.; Yang, C.-W.; Mousavi, A.; Vyatkin, V.; Minde, T.B. Modular Model of a Data Center as a Tool for Improving Its Energy Efficiency. IEEE Access 2020, 8, 46559–46573.

8. Statista. Amount of Data Created Daily (2025). Statista, 2024. Available online: https://explodingtopics.com/blog/data-generated-per-day (accessed on 24 July 2025).

9. Safari, A.; Sorouri, H.; Rahimi, A.; Oshnoei, A. A Systematic Review of Energy Efficiency Metrics for Optimizing Cloud Data Center Operations and Management. Electronics 2025, 14, 2214.

10. Koronen, C.; Åhman, M.; Nilsson, L.J. Data Centers in Future European Energy Systems—Energy Efficiency, Integration and Policy. Energy Effic. 2019, 13, 129–144.

11. Ganapathy, S.; Rajendran, P.; Yuvaraj, S.; Rufus, H.A.N.; Chaithanya, T.R.; Solanki, R.S. Computational Engineering-based Approach on Artificial Intelligence and Machine Learning-Driven Robust Data Center for Safe Management. J. Mach. Comput. 2023, 3, 465–474.

12. Hossain, A.; Abdurahman, A.; Islam, M.A.; Ahmed, K. Power-Aware Scheduling for Multi-Center HPC Electricity Cost Optimization. arXiv 2025, arXiv:2503.11011v1.

13. Christensen, J.D.; Therkelsen, J.; Georgiev, I.; Sand, H. Data Center Opportunities in the Nordics. Nordic Council of Ministers. 2018. Available online: https://www.norden.org/en/publication/data-centre-opportunities-nordics (accessed on 24 July 2025).

14. Nur, M.M.; Kettani, H. Challenges in Protecting Data for Modern Enterprises. J. Econ. Bus. Manag. 2020, 8, 67–73.

15. Hamann, H.; Klein, L. A Measurement Management Technology for Improving Energy Efficiency in Data Centers and Telecommunication Facilities; Office of Scientific and Technical Information (OSTI), U.S. Department of Energy: Oak Ridge, TN, USA, 2012.

16. Kim, J.H.; Shin, D.U.; Kim, H. Data Center Energy Evaluation Tool Development and Analysis of Power Usage Effectiveness with Different Economizer Types in Various Climate Zones. Buildings 2024, 14, 299.

17. Agonafer, D.; Bansode, P.; Saini, S.; Gullbrand, J.; Gupta, A. Single Phase Immersion Cooling for Hyper Scale Data Centers: Challenges and Opportunities. In Proceedings of the ASME Heat Transfer Summer Conference (HT2023), Washington, DC, USA, 10–12 July 2023.

18. Jia, D.; Lv, X.; Guo, T.; Xu, C.; Liu, C. Design of a New Integrated Air-Water Cooling Method to Improve Energy Use in Data Centers. In Proceedings of the 6th International Conference on Energy Systems and Electrical Power (ICESEP), Wuhan, China, 21–23 June 2024; pp. 214–217.

19. Heydari, A.; Eslami, B.; Chowdhury, U.; Radmard, V.; Shahi, P.; Miyamura, H.; Tradat, M.; Chen, P.; Tuholski, D.; Gray, K.; et al. A Comparative Data Center Energy Efficiency and TCO Analysis for Different Cooling Technologies. In Proceedings of the ASME International Technical Conference and Exhibition on Packaging and Integration of Electronic and Photonic Microsystems (InterPACK2023), San Diego, CA, USA, 24–26 October 2023.

20. Gao, T.; Kumar, E.; Sahini, M.; Ingalz, C.; Heydari, A.; Lu, W.; Sun, X. Innovative Server Rack Design with Bottom Located Cooling Unit. In Proceedings of the IEEE Intersociety Conference on Thermal and Thermomechanical Phenomena in Electronic Systems (ITherm), Las Vegas, NV, USA, 31 May–3 June 2016; pp. 1172–1181.

21. Chen, H.; Li, D.; Wang, S.; Chen, T.; Zhong, M.; Ding, Y.; Li, Y.; Huo, X. Numerical Investigation of Thermal Performance with Adaptive Terminal Devices for Cold Aisle Containment in Data Centers. Buildings 2023, 13, 268.

22. Haghshenas, K.; Setz, B.; Blosch, Y.; Aiello, M. Enough hot air: The role of immersion cooling. Energy Inform. 2023, 6, 14.

23. Fouquet, F.; Hartmann, T.; Cecchinel, C.; Combemale, B. GreyCat: A Framework to Develop Digital Twins at Large Scale. In Proceedings of the ACM/IEEE 27th International Conference on Model Driven Engineering Languages and Systems, Linz, Austria, 22–27 September 2024.

24. Botín-Sanabria, D.M.; Mihaita, A.-S.; Peimbert-García, R.E.; Ramírez-Moreno, M.A.; Ramírez-Mendoza, R.A.; de Lozoya-Santos, J.J. Digital Twin Technology Challenges and Applications: A Comprehensive Review. Remote Sens. 2022, 14, 1335.

25. Baig, S.-u.-R.; Iqbal, W.; Berral, J.L.; Carrera, D. Adaptive Sliding Windows for Improved Estimation of Data Center Resource Utilization. Future Gener. Comput. Syst. 2020, 104, 212–224.

26. Elyasi, N.; Bellini, A.; Klungseth, N.J. Digital Transformation in Facility Management: An Analysis of the Challenges and Benefits of Implementing Digital Twins in the Use Phase of a Building. IOP Conf. Ser. Earth Environ. Sci. 2023, 1176, 012001.

27. Breen, D. Immersion Cooling (Part 1): Redefining Reliability Standards for Immersion Cooling in the Data Center. Electronic Design, 15 May 2025. Available online: https://www.electronicdesign.com/technologies/industrial/article/55290691/molex-redefining-reliability-standards-for-immersion-cooling-in-the-data-center (accessed on 25 July 2025).

28. Thomas, E. Turn Up the Volume: Data Center Liquid Immersion Cooling Advancements Fill 2024. Data Center Frontier, May 2024. Available online: https://www.datacenterfrontier.com/cooling/article/55130995/turn-up-the-volume-data-center-liquid-immersion-cooling-advancements-so-far-in-2024 (accessed on 25 July 2025).

29. Kumar, R.; Khatri, S.K.; Divan, M.J. Data Center Air Handling Unit Fan Speed Optimization Using Machine Learning Techniques. In Proceedings of the 9th International Conference on Reliability, Infocom Technologies and Optimization (ICRITO), Noida, India, 3–4 September 2021; pp. 1–10.

30. Panwar, S.S.; Rauthan, M.M.S.; Barthwal, V. A Systematic Review on Effective Energy Utilization Management Strategies in Cloud Data Centers. J. Cloud Comput. 2022, 11, 95.

31. Saxena, D.; Kumar, J.; Singh, A.K.; Schmid, S. Performance Analysis of Machine Learning Centered Workload Prediction Models for Cloud. IEEE Trans. Parallel Distrib. Syst. 2023, 34, 1313–1330.

32. Islam, M.R.; Subramaniam, M.; Huang, P.-C. Image-based Deep Learning for Smart Digital Twins: A Review. Artif. Intell. Rev. 2025, 58, 146.

33. Wang, G.; Xu, W.; Zhang, L. What Can We Learn from Four Years of Data Center Hardware Failures? In Proceedings of the 47th IEEE/IFIP International Conference on Dependable Systems and Networks (DSN’17), Baltimore, MD, USA, 26–29 June 2017; pp. 45–56.

34. International Data Corporation (IDC). IDC Report Reveals AI-Driven Growth in Datacenter Energy Consumption, Predicts Surge in Datacenter Facility Spending Amid Rising Electricity Costs. Press Release, September 2024. Available online: https://my.idc.com/getdoc.jsp?containerId=prUS52611224 (accessed on 24 July 2025).

35. Samatas, G.G.; Moumgiakmas, S.S.; Papakostas, G.A. Predictive maintenance—Bridging artificial intelligence and IoT. arXiv 2021, arXiv:2103.11148.

36. Meza, J.; Wu, Q.; Kumar, S.; Mutlu, O. Revisiting memory errors in large-scale production data centers: Analysis and modeling of new trends from the field. In Proceedings of the 45th IEEE/IFIP International Conference on Dependable Systems and Networks (DSN’15), Rio de Janeiro, Brazil, 22–25 June 2015; pp. 415–426.

37. U.S. EPA. eGRID 2022 Technical Guide; Table A-15: CO2 Emissions Output Emission Rates (lbs/MWh); U.S. Environmental Protection Agency: Washington, DC, USA, January 2024.

| Dataset | Columns |
|---|---|
| Jobs Data | Id, JobID, user, State, ExitStatus, Ncpus, Nnodes, CPU Utilized (s-core), CPU Efficiency (%), Walltime (s), Memory utilized (GB), Memory allocated (GB), Memory Efficiency (%), rat, Submit, Start, End, Node |
| Sensor Data | Id, data ITA, NodeNumber, Failed nodes [0], Sys Utilization, CPU Utilization, Mem Utilization, IO Utilization, Sys Power, CPU Power, Mem Power, CPU 1 Temp, CPU 2 Temp, Fan1A Tach, Fan1B Tach, Fan2A Tach, Fan2B Tach, Fan3A Tach, Fan3B Tach, Fan4A Tach, Fan4B Tach, Fan5A Tach, Fan5B Tach, Ambient Temp, System Air Flow, Exhaust Temp, DC Energy |
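Several Jobs Data columns appear to be derived from others. The sketch below recomputes them using the conventions of Slurm's `seff` report. This is an assumption, since the paper does not state how these fields were produced. Under that convention, CPU Efficiency = CPU Utilized / (Ncpus × Walltime) × 100, and Memory Efficiency = Memory utilized / Memory allocated × 100. Recomputing them is a cheap consistency check before relating job records to sensor readings.

```python
def job_efficiencies(job: dict[str, float]) -> tuple[float, float]:
    """Recompute (CPU Efficiency %, Memory Efficiency %) for one Jobs Data row.

    Assumed seff-style definitions (not confirmed by the paper):
      CPU Efficiency    = CPU Utilized (s-core) / (Ncpus * Walltime (s)) * 100
      Memory Efficiency = Memory utilized (GB) / Memory allocated (GB) * 100
    """
    core_seconds_available = job["Ncpus"] * job["Walltime (s)"]
    if core_seconds_available <= 0 or job["Memory allocated (GB)"] <= 0:
        raise ValueError("job has no allocated cores, walltime, or memory")
    cpu_eff = 100.0 * job["CPU Utilized (s-core)"] / core_seconds_available
    mem_eff = 100.0 * job["Memory utilized (GB)"] / job["Memory allocated (GB)"]
    return cpu_eff, mem_eff


# Hypothetical job row for illustration only.
job = {"Ncpus": 48, "Walltime (s)": 3600, "CPU Utilized (s-core)": 120000,
       "Memory utilized (GB)": 30.0, "Memory allocated (GB)": 96.0}
cpu, mem = job_efficiencies(job)
print(f"CPU {cpu:.1f}%, memory {mem:.1f}%")
```

Rows where the recomputed values differ noticeably from the stored `CPU Efficiency (%)` or `Memory Efficiency (%)` would point either to a different definition or to data quality issues worth inspecting.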

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Veigas, K.L.; Chinnici, A.; De Chiara, D.; Chinnici, M. Towards Energy Efficiency of HPC Data Centers: A Data-Driven Analytical Visualization Dashboard Prototype Approach. Electronics 2025, 14, 3170. https://doi.org/10.3390/electronics14163170
