1. Introduction

With the proliferation of large-scale digital literature repositories, there has been an increasing demand for information retrieval systems capable of leveraging diverse cues such as cover images, Optical Character Recognition (OCR)-extracted text, and multilingual content. This trend poses dual challenges for retrieval systems in handling both multimodal and cross-lingual information [1,2]. Cross-modal retrieval (e.g., image–text matching) and cross-lingual retrieval (e.g., Chinese–English document search) have emerged as two critical subfields in information retrieval, each achieving significant advances in recent years [3,4]. For cross-modal retrieval, early efforts focused on learning joint embedding spaces between images and textual descriptions. For instance, Contrastive Language–Image Pretraining (CLIP) proposed by OpenAI demonstrated that contrastive learning on 400 million image–text pairs enables the acquisition of general-purpose visual-semantic representations without requiring additional labels [5]. The success of CLIP showcased the potential of large-scale pretraining and proved that visual models can benefit directly from natural language supervision. Similarly, Google’s ALIGN model, trained on billions of web image–alt text pairs, further enhanced the alignment between visual and textual modalities [6]. In the realm of cross-lingual retrieval, multilingual pretrained language models have significantly improved semantic alignment across languages [7], enabling users to issue queries in one language while retrieving relevant documents in another. Such capabilities have been widely adopted in cross-lingual information retrieval and question answering.

However, most existing cross-modal retrieval models are developed within a monolingual (primarily English) context [4,8,9], rendering them unsuitable for multilingual scenarios. Conversely, conventional cross-lingual retrieval approaches typically rely solely on textual alignment, failing to incorporate visual information. Consequently, integrating cross-modal and cross-lingual retrieval into a unified framework has become an emerging research focus [3]. One critical obstacle lies in the highly imbalanced distribution of multilingual image–text datasets, with over 90% of available pairs skewed toward high-resource languages such as English. In contrast, cross-modal data for low-resource languages such as Chinese remain scarce [4]. To mitigate this issue, various strategies involving machine translation and multilingual corpora have been proposed to augment and align datasets. For example, SMALR introduced by Burns et al. incorporates cross-lingual consistency constraints to retrieve similar results for translated queries, supporting ten languages and outperforming previous models with minimal additional parameters [10]. Similarly, the MURAL model proposed by Jain et al. leverages large-scale translation pairs within contrastive learning, significantly improving retrieval performance across over 100 languages, particularly benefiting low-resource languages compared to models trained only on English image–text data [4].

On the architectural side, achieving unified alignment across modalities and languages remains technically challenging. Cross-encoder-based multimodal pretraining models such as M3P [1] and UC2 [2] are capable of jointly processing images and multilingual text, thereby capturing deep cross-modal and cross-lingual interactions. Despite their expressive capacity, these models impose substantial computational overhead during inference, which hinders their deployment in large-scale retrieval scenarios. In contrast, dual-encoder architectures enable efficient retrieval by independently encoding images and text, yet they face significant challenges in aligning representation spaces across different modalities and languages [5,6]. To address this, recent research has explored a variety of alignment strategies, including contrastive learning for joint embedding alignment, multitask loss functions combining image–text matching with translation consistency, and reranking mechanisms guided by translated queries [4,11,12]. Nevertheless, issues of performance instability and cross-modal inconsistency persist. For example, Nie et al. observed that cross-lingual pretraining often yields uneven retrieval performance across different languages. Moreover, conventional cross-modal models may suffer from ranking inconsistency when retrieving image–text pairs described in multiple languages, due to optimization bias during training [3]. In addition, most existing studies focus on aligning global image semantics with textual descriptions while overlooking the rich information embedded in scene text within images. Only a few works have attempted to incorporate scene text into retrieval models [13]. For instance, Miyawaki et al. proposed scene-text-enhanced dual encoders to improve English image–text retrieval [14]; however, their approach did not address cross-lingual scenarios.

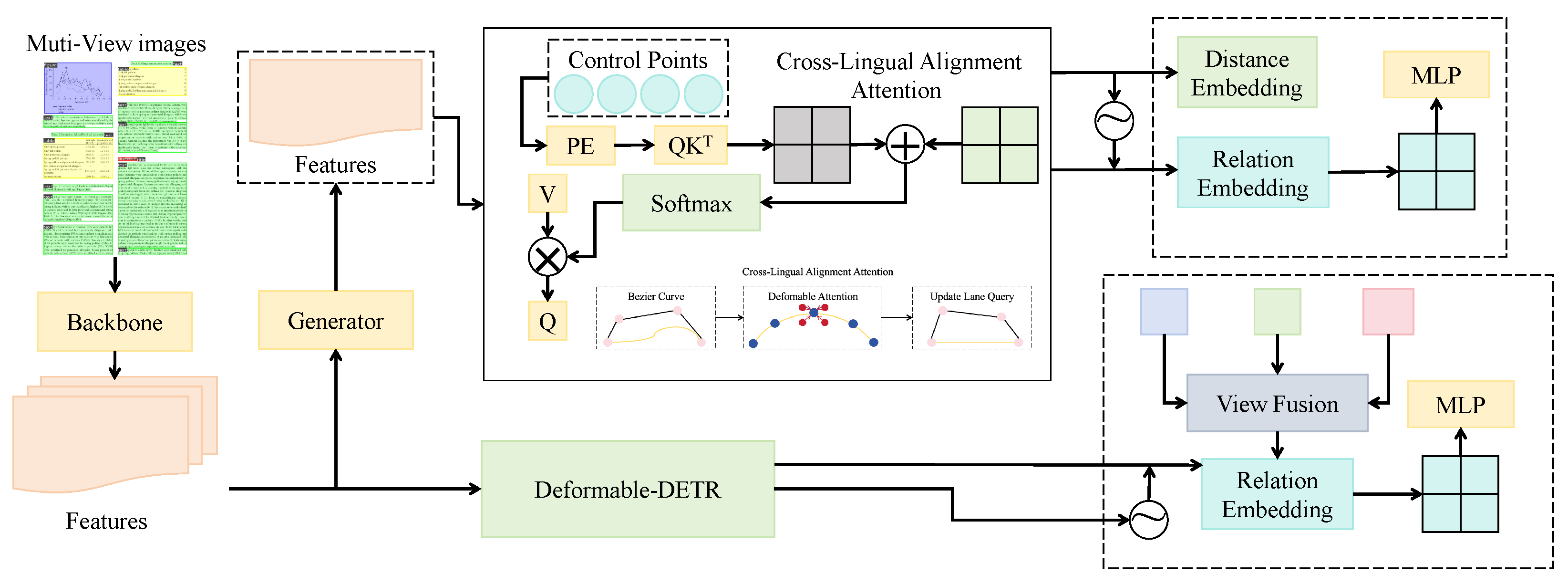

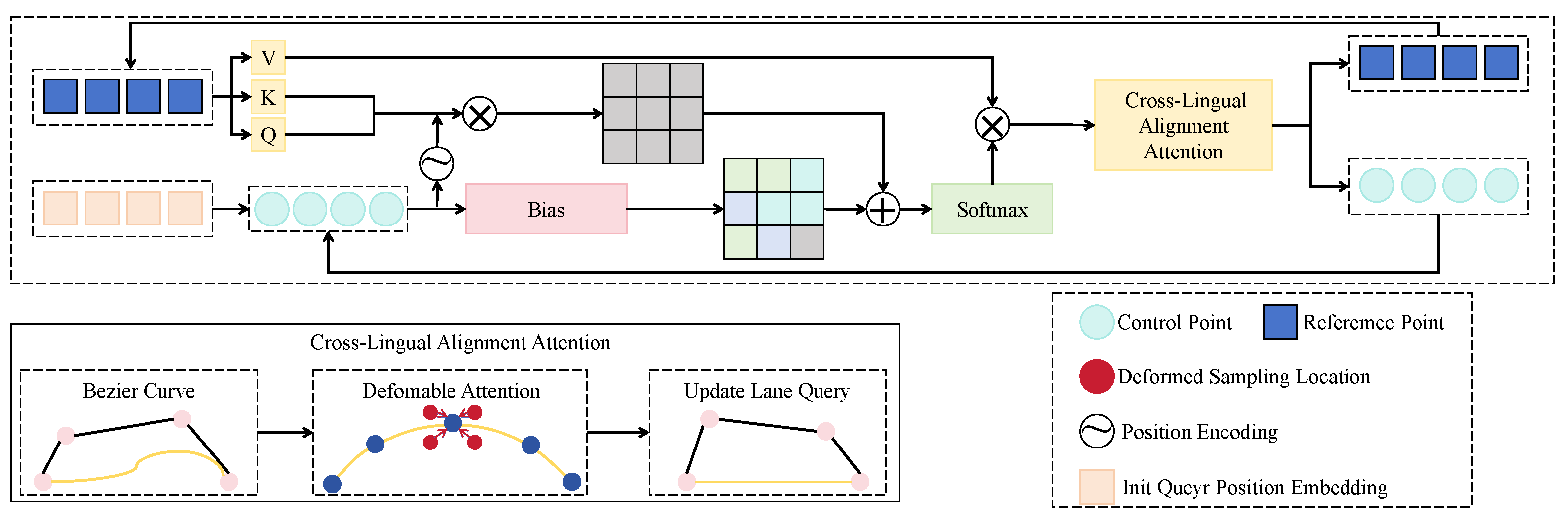

To address the aforementioned challenges, a cross-modal and cross-lingual retrieval model that integrates visual features, OCR text, and bilingual semantics is proposed. Specifically, a multi-encoder architecture based on a unified embedding space is designed, comprising visual, OCR, and multilingual text encoders. These encoders enable unified mapping of image features, scene text, and Chinese–English semantic representations, facilitating efficient retrieval across modalities and languages. For the first time, PubLayNet and WikiCLIR datasets are jointly utilized for end-to-end training, bridging visual–OCR alignment with Chinese–English cross-lingual retrieval and enhancing the model’s generalization across tasks. The proposed architecture consists of four key modules: visual encoding, OCR encoding, language encoding, and a multimodal fusion layer, supporting flexible retrieval using combinations of image, OCR text, Chinese queries, and English documents. Furthermore, a multi-loss training strategy is introduced, incorporating contrastive losses for visual–text, OCR–text, and cross-lingual pairs. These components collaboratively optimize the model to enhance representational consistency and robustness across modalities and languages, effectively reducing retrieval biases introduced by semantic and modality mismatches. The main contributions of this work can be summarized as follows:

We propose a novel architecture that seamlessly integrates visual, OCR, and bilingual semantic features into a shared embedding space, enabling retrieval across arbitrary modality and language combinations.

We are the first to jointly train on PubLayNet (image–OCR) and WikiCLIR (Chinese–English) datasets, bridging visual–OCR alignment with cross-lingual retrieval in a single unified model.

We design a structure-aware OCR encoder and a cross-lingual alignment module based on deformable attention, enhancing fine-grained semantic consistency across modalities and languages.

We introduce a joint contrastive loss combining visual–text, OCR–text, and cross-lingual objectives, significantly improving robustness and mitigating semantic bias in cross-modal and cross-lingual retrieval.

Extensive experiments on image→OCR, Chinese→English, and joint multimodal retrieval tasks demonstrate superior results compared to strong baselines such as CLIP, LayoutLMv3, and UNITER.

4. Results and Discussion

4.1. Experimental Settings

4.1.1. Hardware and Software Platform

All experiments were conducted on a high-performance computing workstation equipped with an NVIDIA RTX 3090 GPU (24 GB VRAM), an Intel Core i9-12900K CPU, and 128 GB of memory, operating under Ubuntu 20.04. All models were implemented using the PyTorch 1.13.1 framework, with training accelerated by CUDA 11.6. The multilingual encoders were instantiated from the HuggingFace Transformers library using XLM-R large, while the visual encoders included pretrained ResNet-50 and ViT-B/16 models. The OCR module was implemented using Tesseract and LayoutLMv3. Data preprocessing and augmentation were performed with OpenCV, NLTK, and jieba. The AdamW optimizer was employed with an initial learning rate of , a batch size of 32, and 20 total training epochs. All models were evaluated under identical settings to ensure reproducibility and fair comparison.

4.1.2. Baselines

To comprehensively validate the effectiveness of the proposed method in multimodal and multilingual retrieval tasks, several representative models were selected as baselines. These included CLIP [5], LayoutLMv3 [20], UNITER (single-stream architecture) [16], an image-to-OCR baseline [30], and a Chinese-to-English document retrieval baseline (CLIR baseline) [31]. CLIP, as a representative dual-encoder model, demonstrates strong performance in image–text alignment, especially in image-to-OCR tasks. LayoutLMv3 integrates visual, textual, and layout information, making it suitable for OCR understanding in structured document scenarios. UNITER, as a single-stream cross-modal pretraining model, captures fine-grained associations between image regions and language, yielding stable retrieval performance across joint tasks. The CLIR baseline utilizes multilingual encoders such as mBERT or XLM-R to model cross-lingual representation between Chinese queries and English documents, serving as a fundamental benchmark for language alignment. The image-to-OCR baseline defines a visual-only alignment task between document images and text regions, allowing evaluation of visual retrieval performance without linguistic input.

4.1.3. Evaluation Metrics

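A variety of standard metrics were adopted to evaluate the model from multiple dimensions, including retrieval precision, ranking relevance, and overall retrieval capability. These included Precision@K, Recall@K, Mean Average Precision (mAP), Mean Reciprocal Rank (MRR), Normalized Discounted Cumulative Gain (nDCG@K), and F1@K. Their mathematical definitions are given as follows:

$$\mathrm{Precision@}K = \frac{1}{K}\sum_{i=1}^{K} rel_i, \qquad \mathrm{Recall@}K = \frac{1}{R}\sum_{i=1}^{K} rel_i$$

$$\mathrm{AP} = \frac{1}{R}\sum_{k=1}^{N} P(k)\, rel_k, \qquad \mathrm{mAP} = \frac{1}{Q}\sum_{q=1}^{Q} \mathrm{AP}_q$$

$$\mathrm{MRR} = \frac{1}{Q}\sum_{i=1}^{Q} \frac{1}{rank_i}$$

$$\mathrm{DCG@}K = \sum_{i=1}^{K} \frac{rel_i}{\log_2(i+1)}, \qquad \mathrm{nDCG@}K = \frac{\mathrm{DCG@}K}{\mathrm{IDCG@}K}$$

$$\mathrm{F1@}K = \frac{2 \cdot \mathrm{Precision@}K \cdot \mathrm{Recall@}K}{\mathrm{Precision@}K + \mathrm{Recall@}K}$$

Here, $rel_i$ denotes the relevance of the $i$-th ranked document (typically 0 or 1), $R$ is the total number of relevant documents for a given query, and $N$ is the length of the ranked list. $P(k)$ is the precision at the $k$-th position, $Q$ represents the total number of queries, and $rank_i$ indicates the rank position of the first relevant document for the $i$-th query. $\mathrm{IDCG@}K$ is the ideal DCG when all relevant documents are perfectly ranked. Precision@K measures the proportion of relevant items among the top $K$ results, reflecting retrieval accuracy. Recall@K measures the share of all relevant documents that appear among the top $K$ results. mAP averages, over all queries, the precision measured at each rank where a relevant document appears. MRR emphasizes the rank of the first relevant document, offering insight into real-world user experience. nDCG@K assesses ranking quality by discounting relevant items that appear lower in the ranking. F1@K is the harmonic mean of Precision@K and Recall@K. These complementary metrics enable a comprehensive assessment of performance in complex multimodal and multilingual retrieval scenarios.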
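As an illustration of how the metrics described above can be computed for a single query, the following is a minimal sketch assuming binary relevance labels. The function names and the example ranking are hypothetical and are not taken from the evaluation code used in the experiments; the DCG discount is assumed to be the common `log2(i + 1)` form.

```python
import math


def precision_at_k(rels, k):
    """Fraction of the top-k results that are relevant (rels holds 0/1 labels)."""
    return sum(rels[:k]) / k


def recall_at_k(rels, k, num_relevant):
    """Fraction of all relevant documents that appear in the top-k results."""
    return sum(rels[:k]) / num_relevant if num_relevant else 0.0


def f1_at_k(rels, k, num_relevant):
    """Harmonic mean of Precision@K and Recall@K."""
    p = precision_at_k(rels, k)
    r = recall_at_k(rels, k, num_relevant)
    return 2 * p * r / (p + r) if (p + r) else 0.0


def average_precision(rels, num_relevant):
    """Mean of the precision values at each rank holding a relevant document."""
    hits, total = 0, 0.0
    for i, rel in enumerate(rels, start=1):
        if rel:
            hits += 1
            total += hits / i
    return total / num_relevant if num_relevant else 0.0


def reciprocal_rank(rels):
    """1 / rank of the first relevant document, or 0 if none was retrieved."""
    for i, rel in enumerate(rels, start=1):
        if rel:
            return 1.0 / i
    return 0.0


def ndcg_at_k(rels, k, num_relevant):
    """DCG@K divided by the DCG of an ideal ranking (assumed log2 discount)."""
    dcg = sum(rel / math.log2(i + 1) for i, rel in enumerate(rels[:k], start=1))
    ideal_hits = min(k, num_relevant)
    idcg = sum(1.0 / math.log2(i + 1) for i in range(1, ideal_hits + 1))
    return dcg / idcg if idcg else 0.0


# Hypothetical ranking for one query: 3 relevant documents exist, 2 were retrieved.
example_rels = [1, 0, 1, 0, 0]
example_scores = {
    "P@5": precision_at_k(example_rels, 5),
    "R@5": recall_at_k(example_rels, 5, num_relevant=3),
    "F1@5": f1_at_k(example_rels, 5, num_relevant=3),
    "AP": average_precision(example_rels, num_relevant=3),
    "RR": reciprocal_rank(example_rels),
    "nDCG@5": ndcg_at_k(example_rels, 5, num_relevant=3),
}
```

mAP and MRR then follow by averaging `average_precision` and `reciprocal_rank` over all queries.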

4.2. Performance Comparison Across Tasks and Models in Multimodal and Multilingual Retrieval

This experiment was designed to evaluate the overall performance of various model types across three retrieval tasks: image-to-OCR unimodal retrieval, Chinese-to-English cross-lingual retrieval, and joint multimodal retrieval. Representative models including CLIP, LayoutLMv3, UNITER, task-specific baselines, and the proposed method were selected to systematically compare their adaptability and expressive capacity under multilingual and multimodal scenarios. The objective of the experimental design was to investigate the performance boundaries of unimodal structures, single-stream architectures, and dual-stream cross-modal frameworks under different retrieval contexts and to assess the advantages of the proposed unified alignment framework in complex practical tasks.

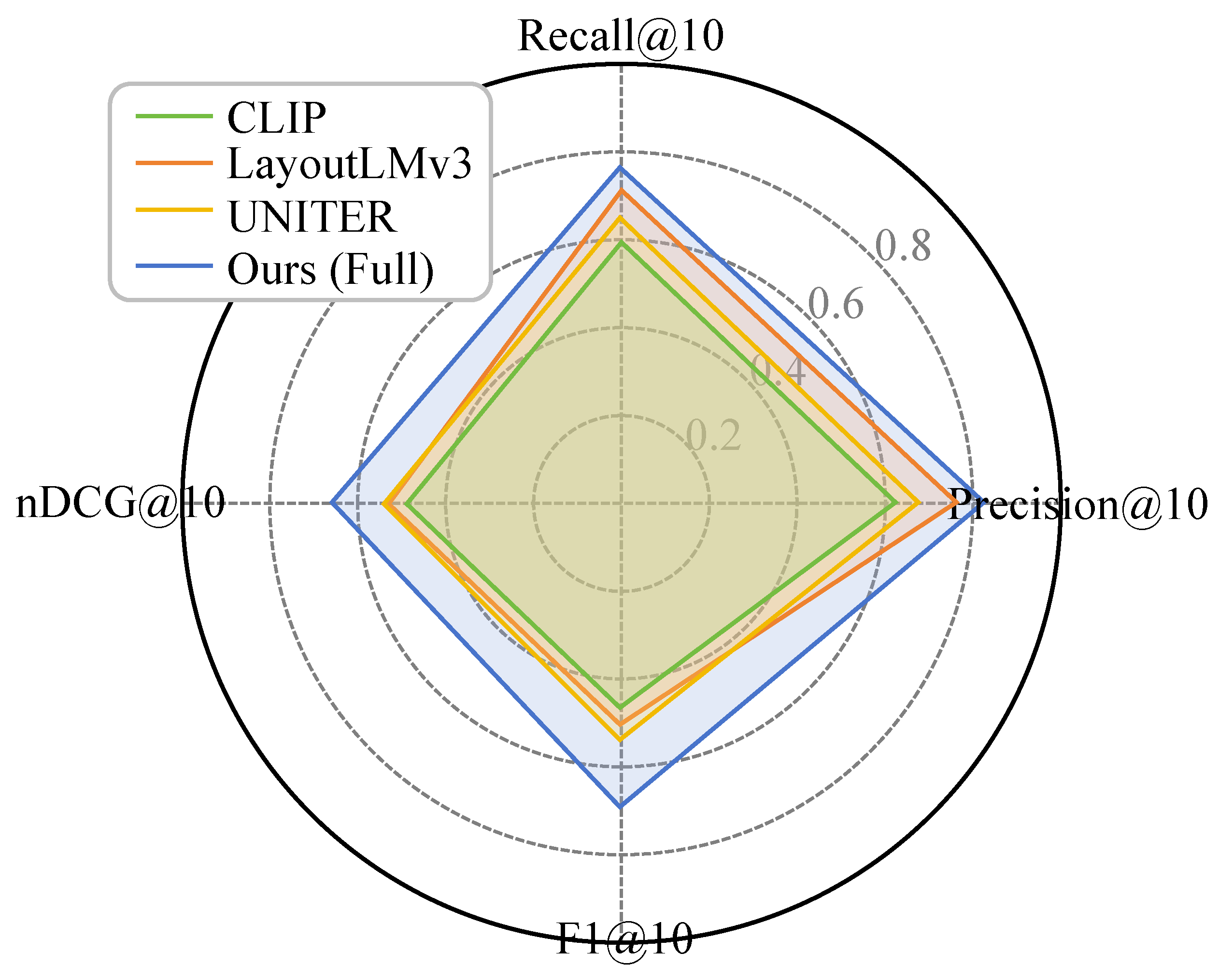

As shown in Table 2 and Figure 4, the proposed model achieved superior performance across all three tasks. Notably, in the joint multimodal setting, a Precision@10 of 0.693 was attained, surpassing UNITER (0.566) and CLIP (0.528) by a considerable margin. Although CLIP, as a typical dual-encoder model, demonstrates efficient inference, its reliance on contrastive loss for cross-modal constraint results in limited capability to capture local structures and linguistic details, leading to suboptimal performance in the image-to-OCR task (Precision@10 = 0.621). LayoutLMv3, due to its integration of OCR and layout features, adapts well to structured document scenarios, achieving a Precision@10 of 0.778 in the same task, but exhibits limitations in cross-lingual contexts. UNITER, being a single-stream cross-modal transformer, offers stronger semantic modeling, reflected in higher nDCG@10 scores compared to CLIP, but lacks structural awareness. The proposed method unifies image, OCR, and language modalities via a shared semantic embedding space and a joint contrastive learning strategy, forming a compact and aligned representation path in low-dimensional space. This mitigates the projection shift issue caused by modal switching in conventional frameworks and facilitates optimal performance under multi-task conditions.

4.3. Performance on Long-Tail Queries and Noisy Inputs

To better approximate real-world retrieval scenarios, we further evaluated the proposed framework and baseline models under two challenging conditions: long-tail queries and noisy document inputs. Long-tail queries refer to infrequent or domain-specific terms that rarely appear in the training corpus, which are representative of niche user information needs in practical retrieval settings. To simulate this, we extracted a subset of evaluation queries with low occurrence frequency and assessed retrieval performance specifically on this subset. Noisy document inputs were generated to mimic realistic degradations that occur in scanned or historical materials, including Gaussian noise, JPEG compression artifacts, random skew, and synthetic OCR errors such as character substitutions or deletions. These conditions were applied to both the image → OCR and Chinese → English retrieval tasks. The resulting performance comparison between the proposed framework and representative baselines is presented in Table 3, illustrating the robustness of different models when facing rare query distributions and degraded document quality.

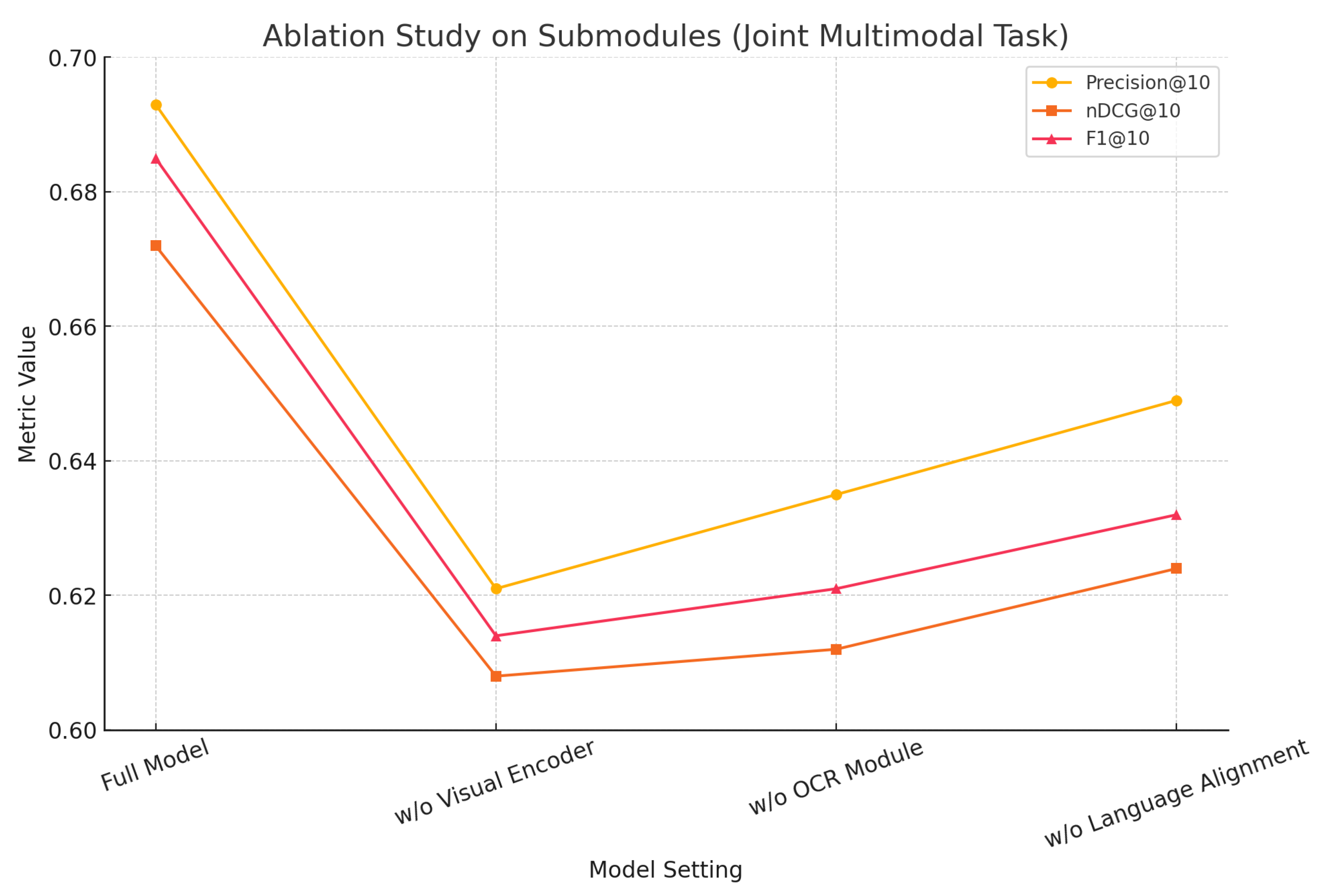
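The synthetic OCR errors mentioned above (character substitutions and deletions) can be sketched as follows. This is only an illustrative approximation: the function name, the error rates, and the choice of lowercase Latin letters as substitution candidates are assumptions, since the exact corruption procedure is not specified in the text.

```python
import random
import string


def corrupt_ocr_text(text, sub_rate=0.05, del_rate=0.02, seed=None):
    """Inject character-level substitutions and deletions into OCR text.

    sub_rate and del_rate are hypothetical per-character probabilities.
    Whitespace is preserved so that word boundaries stay intact.
    """
    rng = random.Random(seed)  # local generator keeps runs reproducible
    corrupted = []
    for ch in text:
        if ch.isspace():
            corrupted.append(ch)
            continue
        roll = rng.random()
        if roll < del_rate:
            continue  # deletion: drop the character entirely
        if roll < del_rate + sub_rate:
            # substitution: replace with a random letter (may equal the original)
            corrupted.append(rng.choice(string.ascii_lowercase))
        else:
            corrupted.append(ch)
    return "".join(corrupted)


noisy_title = corrupt_ocr_text("document layout analysis", seed=0)
```

Fixing the seed makes the degraded evaluation set reproducible, so that all models are compared on identical corrupted inputs.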

4.4. Ablation Study of Submodules (Joint Multimodal Task)

To validate the contribution of key submodules—including the visual encoder, OCR module, and language alignment module—an ablation study was conducted within the joint multimodal retrieval setting. Each submodule was removed individually from the full model, and the impact on Precision@10, nDCG@10, and F1@10 was evaluated. This experiment quantitatively assessed the role of each modality within the fusion framework and provided empirical evidence for the effectiveness of the proposed collaborative mechanism in supporting unified retrieval.

As presented in Table 4 and Figure 5, the full model outperformed all ablated variants, indicating that each submodule provides complementary benefits. Removing the visual encoder reduced Precision@10 to 0.621, highlighting the importance of global structure and visual semantics from the document cover. The removal of the OCR module also caused a performance drop in F1@10, demonstrating the indispensability of localized text region features for fine-grained semantic modeling. Excluding the language alignment module led to a notable decline in nDCG@10, emphasizing its core role in aligning multilingual queries and ensuring accurate semantic ranking. From a mathematical perspective, the visual encoder contributes high-dimensional compressed representations of global image features, the OCR module introduces auxiliary alignment through local structural modeling, and the language alignment module enhances cross-lingual consistency through shared embeddings and contrastive optimization. The joint embedding of these modalities within a unified space enables multi-center, multi-scale feature representation, enhancing convergence stability and query robustness under multimodal conditions. Thus, the synergistic integration of submodules not only improves local task-specific outcomes but also systematically enhances global semantic alignment and retrieval quality.

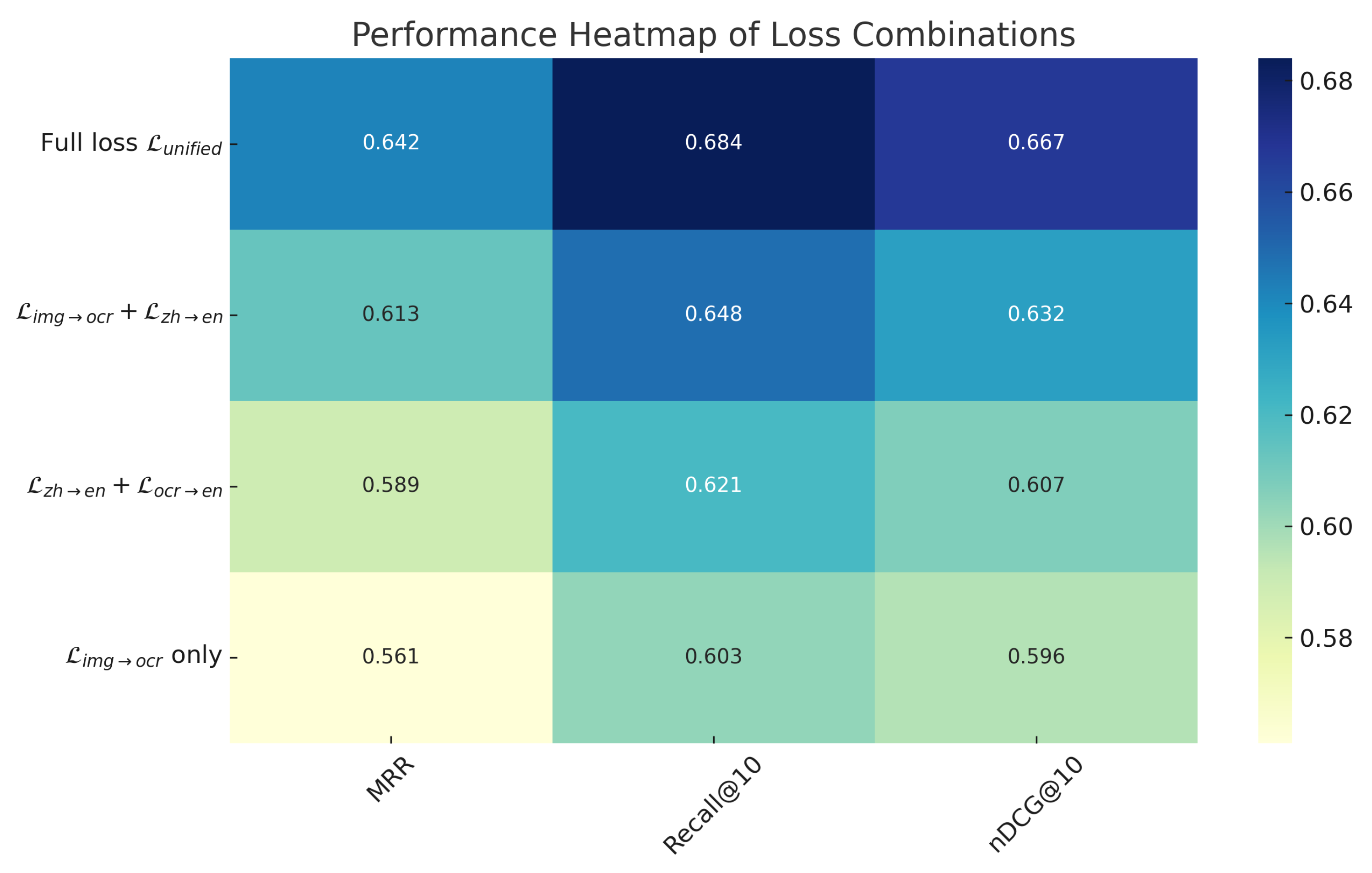

4.5. Ablation Results of Joint Loss Functions for Unified Embedding Retrieval

This experiment was conducted to investigate the specific contributions of different loss functions in the learning of a unified multimodal embedding space. A series of ablation configurations were designed by selectively removing key alignment terms across modalities and languages, with the aim of evaluating the impact of each loss component on retrieval performance. The experiment focused on metrics such as MRR, Recall@10, and nDCG@10, to assess the role of complete versus partial loss structures in achieving semantic mapping stability and consistent ranking performance in multilingual and multimodal settings. This design provides insight into the objective function’s role in modeling semantic alignment across modalities.

As presented in Table 5 and Figure 6, the complete joint loss achieved the best performance across all metrics, with an MRR of 0.642, significantly surpassing all partial loss settings. When the cross-modal language loss was removed, thus excluding the direct alignment between language modalities and image/OCR representations, nDCG@10 dropped to 0.632, indicating a weakened semantic boundary across modalities and reduced ranking precision. Further excluding the visual loss and retaining only language-level alignment between Chinese and English OCR resulted in an MRR of 0.589, emphasizing the importance of the visual modality in constructing global semantic representations. The configuration trained without any language-guided loss term yielded the poorest performance (MRR = 0.561), which can be attributed to the absence of language-guided semantic abstraction. Without linguistic supervision, the embedding space failed to decode query semantics effectively, leading to degenerated semantic projection structures. Mathematically, the joint contrastive loss not only expands intra-modal positive-negative pairs but also forms hierarchical geometric relationships across modality-specific subspaces through multi-objective optimization. This experiment demonstrates that relying solely on either visual or linguistic alignment is insufficient to achieve comprehensive semantic consistency. A complete loss framework is therefore essential to build a robust and generalizable retrieval system across modalities.
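To make the structure of such a joint objective concrete, the sketch below combines three InfoNCE-style contrastive terms (visual–text, OCR–text, and cross-lingual) into one weighted sum. It is an assumption-laden illustration rather than the loss used in the experiments: the function names, the temperature of 0.07, the equal weights, and the choice of English text embeddings as the shared anchor are all hypothetical, and plain Python lists stand in for framework tensors.

```python
import math


def _normalize(vec):
    """Scale a vector to unit length (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def info_nce(queries, keys, temperature=0.07):
    """InfoNCE loss where queries[i] and keys[i] form the positive pair.

    All other keys in the batch act as negatives for queries[i].
    """
    q = [_normalize(v) for v in queries]
    k = [_normalize(v) for v in keys]
    total = 0.0
    for i, qi in enumerate(q):
        logits = [sum(a * b for a, b in zip(qi, kj)) / temperature for kj in k]
        peak = max(logits)  # subtract the max for a stable log-sum-exp
        log_z = peak + math.log(sum(math.exp(l - peak) for l in logits))
        total += log_z - logits[i]  # negative log-softmax of the positive pair
    return total / len(q)


def joint_contrastive_loss(visual, ocr, zh_text, en_text, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of visual-text, OCR-text, and cross-lingual terms.

    The weights and the use of en_text as the common anchor are assumptions.
    """
    w_vt, w_ot, w_cl = weights
    return (w_vt * info_nce(visual, en_text)
            + w_ot * info_nce(ocr, en_text)
            + w_cl * info_nce(zh_text, en_text))
```

Setting one of the weights to zero reproduces the kind of partial-loss configuration examined in the ablation, in which a single alignment term is dropped from the objective.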

4.6. Discussion

4.6.1. Practical Application Analysis

The proposed unified multimodal and multilingual retrieval framework demonstrates significant advantages across diverse real-world scenarios, particularly in library information services, cross-language document retrieval, and multimodal educational resource recommendation. In traditional university library systems, users typically rely on cover images, OCR-extracted content tables, or chapter information for fuzzy search. However, existing systems are predominantly unimodal and incapable of handling mixed queries involving images, text, and language. By incorporating visual encoders and OCR alignment modules, the proposed method enables structured retrieval through simple cover image inputs. The system automatically parses layout and text content, facilitating linkage to relevant documents, papers, or collections. On international platforms such as multilingual digital libraries or Wikimedia Commons, users often encounter retrieval barriers between Chinese queries and English content. Through the proposed cross-lingual alignment mechanism, Chinese queries can be mapped to English documents, images, or OCR content without the need for external translation tools, significantly enhancing retrieval accuracy and efficiency. In educational domains, particularly for digitalized K–12 content management, educators can upload textbook covers or screenshots to automatically retrieve related subjects or multilingual reference materials. Moreover, in domains like news media management and digital copyright monitoring, the joint modeling of cover images, OCR abstracts, and multilingual retrieval supports consistent cross-modal copyright verification.

4.6.2. Computational Efficiency Analysis

Although the proposed framework integrates multiple components—visual encoding, structure-aware OCR processing, and multilingual language modeling—it has been designed with computational efficiency in mind. The architecture adopts a dual-encoder retrieval paradigm, allowing visual and textual inputs to be encoded independently. This enables pre-computation of embeddings and efficient similarity search at inference time, avoiding the need for computationally expensive joint query–document encoding for every retrieval request. In addition, the modular structure of the framework allows the visual encoder, OCR module, and language encoder to operate in parallel. During both training and inference, these modules process their respective modalities concurrently before feature fusion in the unified semantic space. This parallelism reduces end-to-end latency and improves throughput in large-scale retrieval tasks. Furthermore, training efficiency is improved by leveraging pretrained backbone models for the visual and multilingual encoders, which reduces convergence time and minimizes redundant computation in early layers. The OCR component is similarly integrated into the pipeline in a way that avoids repeated text extraction for static document collections, further saving processing time in deployment scenarios. Through these design choices, the framework achieves strong retrieval performance while keeping computational demands manageable for large-scale multimodal and multilingual document retrieval applications.
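The pre-computation strategy described above can be illustrated with a minimal in-memory index: document embeddings are normalized and stored once, and each query requires only one encoding pass followed by a cosine-similarity ranking. The class and method names are hypothetical, the vectors are toy values, and a production system would more likely rely on an approximate nearest-neighbor library than on this exhaustive scan.

```python
import math


def _unit(vec):
    """Return the vector scaled to unit length (zero vectors are unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


class EmbeddingIndex:
    """Stores precomputed document embeddings for cosine-similarity search."""

    def __init__(self):
        self.doc_ids = []
        self.vectors = []

    def add(self, doc_id, embedding):
        # Normalize once at indexing time so search reduces to a dot product.
        self.doc_ids.append(doc_id)
        self.vectors.append(_unit(embedding))

    def search(self, query_embedding, k=10):
        """Return the k (doc_id, score) pairs with the highest cosine similarity."""
        q = _unit(query_embedding)
        scored = [
            (doc_id, sum(a * b for a, b in zip(q, vec)))
            for doc_id, vec in zip(self.doc_ids, self.vectors)
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


# Toy embeddings standing in for the outputs of the document-side encoders.
index = EmbeddingIndex()
index.add("doc_a", [1.0, 0.0])
index.add("doc_b", [0.0, 1.0])
index.add("doc_c", [1.0, 1.0])
top_hits = index.search([0.1, 1.0], k=2)
```

Because the document side is encoded offline, the per-query cost is limited to encoding the query and scanning the index, which is the property that distinguishes dual-encoder retrieval from joint query–document encoding.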

4.6.3. Generalization and Robustness Analysis

The proposed unified multimodal multilingual retrieval framework is inherently language-agnostic in design. By projecting visual, OCR, and multilingual textual representations into a shared embedding space, the architecture can in principle accommodate languages beyond Chinese and English without requiring structural changes. The use of multilingual pretrained language encoders further facilitates transfer to additional languages, as such encoders already embed semantic knowledge across a wide variety of language families. We expect that, with appropriate parallel corpora or translation-based augmentation, the framework could be adapted to low-resource languages, even in scenarios where annotated multimodal training data is scarce. The design also supports robustness to variations in document quality. Because retrieval relies on complementary signals from both visual layout features and OCR-extracted text, the system is likely to remain effective even when one modality is partially degraded, as might occur with scanned receipts, historical documents, or other noisy inputs. For example, when OCR accuracy drops due to low resolution or complex layouts, visual features can still provide discriminative cues, and conversely, clean textual cues can compensate for less informative visual patterns. This multimodal complementarity positions the framework to better handle challenging real-world document conditions compared to unimodal approaches. In summary, while the current evaluation is limited to Chinese–English retrieval and to the synthetically degraded inputs of Section 4.3, the framework’s modularity, multilingual foundation, and cross-modal design suggest promising potential for extension to low-resource languages and for robust performance under naturally noisy document conditions in future applications.

4.7. Limitation and Future Work

Despite the superior performance exhibited by the proposed unified multimodal multilingual retrieval framework across multiple tasks, certain limitations remain. First, model training currently relies on predefined modality pairs and alignment labels (e.g., cover–OCR pairs or Chinese–English document alignments), which may limit scalability in scenarios with insufficient coverage. Second, the current system lacks user interaction feedback mechanisms, hindering dynamic adaptation and online optimization based on real-time user behaviors such as clicks, corrections, or ratings. Future research directions include introducing generative multimodal augmentation and multilingual knowledge graph supervision to improve robustness under low-resource conditions. In addition, human-in-the-loop approaches and reinforcement learning from human feedback (RLHF) can be explored to incorporate explicit and implicit user feedback into the training process, enabling the system to learn personalized retrieval preferences and adapt to evolving information needs. Such user-driven online learning mechanisms would allow the model to be updated from real-time feedback and to refine its performance continuously, thereby promoting widespread deployment of intelligent retrieval systems in real-world multimodal and multilingual environments.

5. Conclusions

With the rapid growth of library information systems, digital literature platforms, and cross-lingual knowledge retrieval demands, conventional retrieval systems based on single modality or single language have become inadequate for supporting efficient information access in multimodal and multilingual environments. To address this challenge, a unified multimodal and multilingual aligned retrieval framework has been proposed. By constructing a shared semantic space for visual inputs, OCR texts, and Chinese–English language representations, end-to-end cross-modal alignment and high-precision retrieval have been achieved across images, texts, and languages. The proposed model architecture integrates multimodal embedding encoders, a cross-lingual attention mechanism, and a joint contrastive learning loss, enabling support for arbitrary combinations of query modalities—such as cover image queries, OCR content alignment, and cross-language retrieval—across complex real-world scenarios. The effectiveness of the proposed framework has been extensively validated through experimental evaluations. In tasks including image → OCR, Chinese → English document retrieval, and joint multimodal input scenarios, the proposed model consistently outperformed existing baselines across multiple key metrics such as Precision@10, Recall@10, and nDCG@10. Specifically, in the joint multimodal input task, a Precision@10 of 0.693 and an F1@10 of 0.685 were achieved, demonstrating significant improvements over mainstream baseline models. Additional ablation studies confirmed the complementary contributions of the visual, OCR, and language alignment modules, while the loss function ablation results further highlighted the critical role of multimodal contrastive learning strategies in constructing a unified semantic space.