ImpactAlert: Pedestrian-Carried Vehicle Collision Alert System

Abstract

1. Introduction

2. Related Work

2.1. Smart Canes for the Visually Impaired

2.2. Pedestrian-Carried Vehicle Alerting Systems

3. Materials and Methods

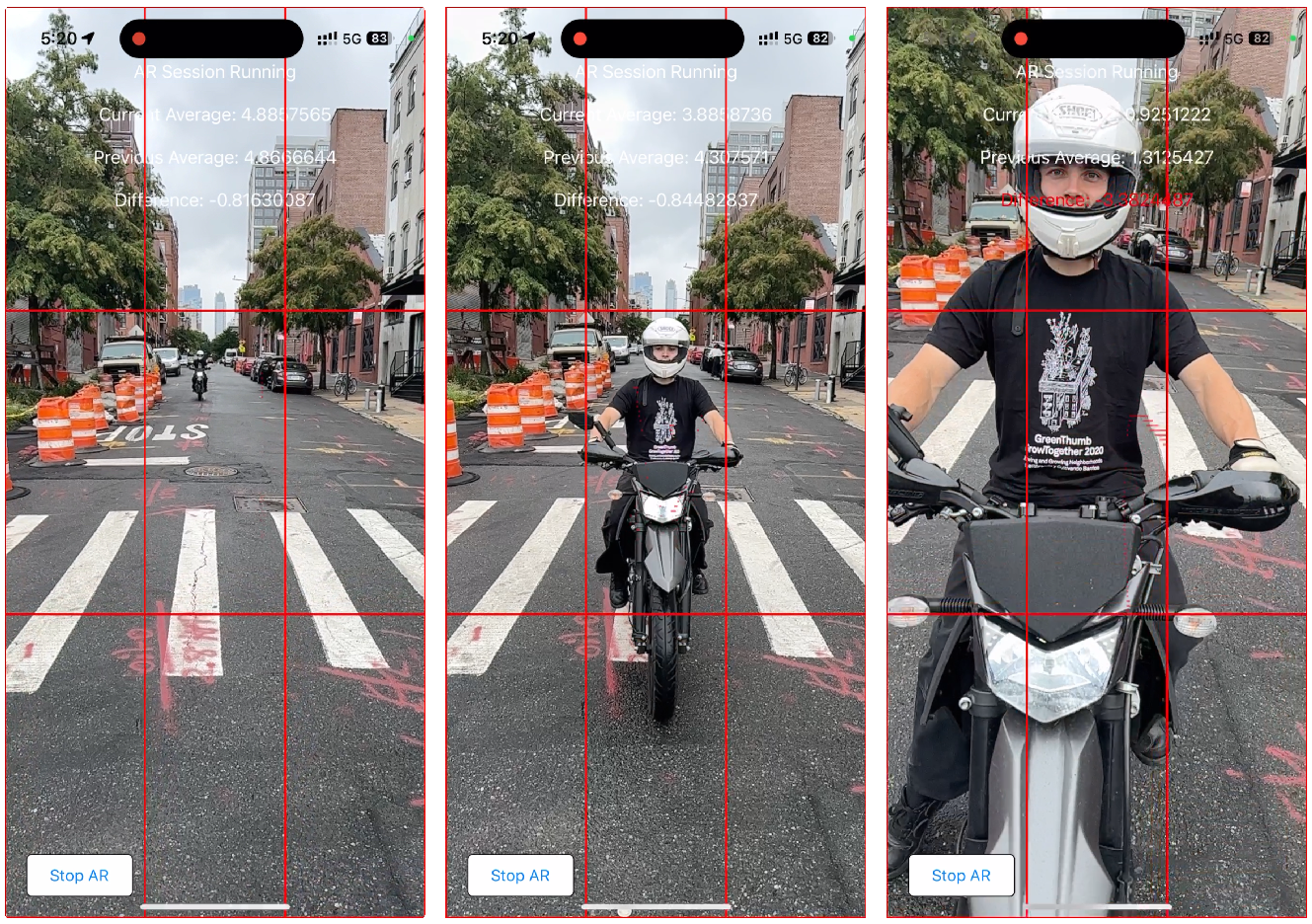

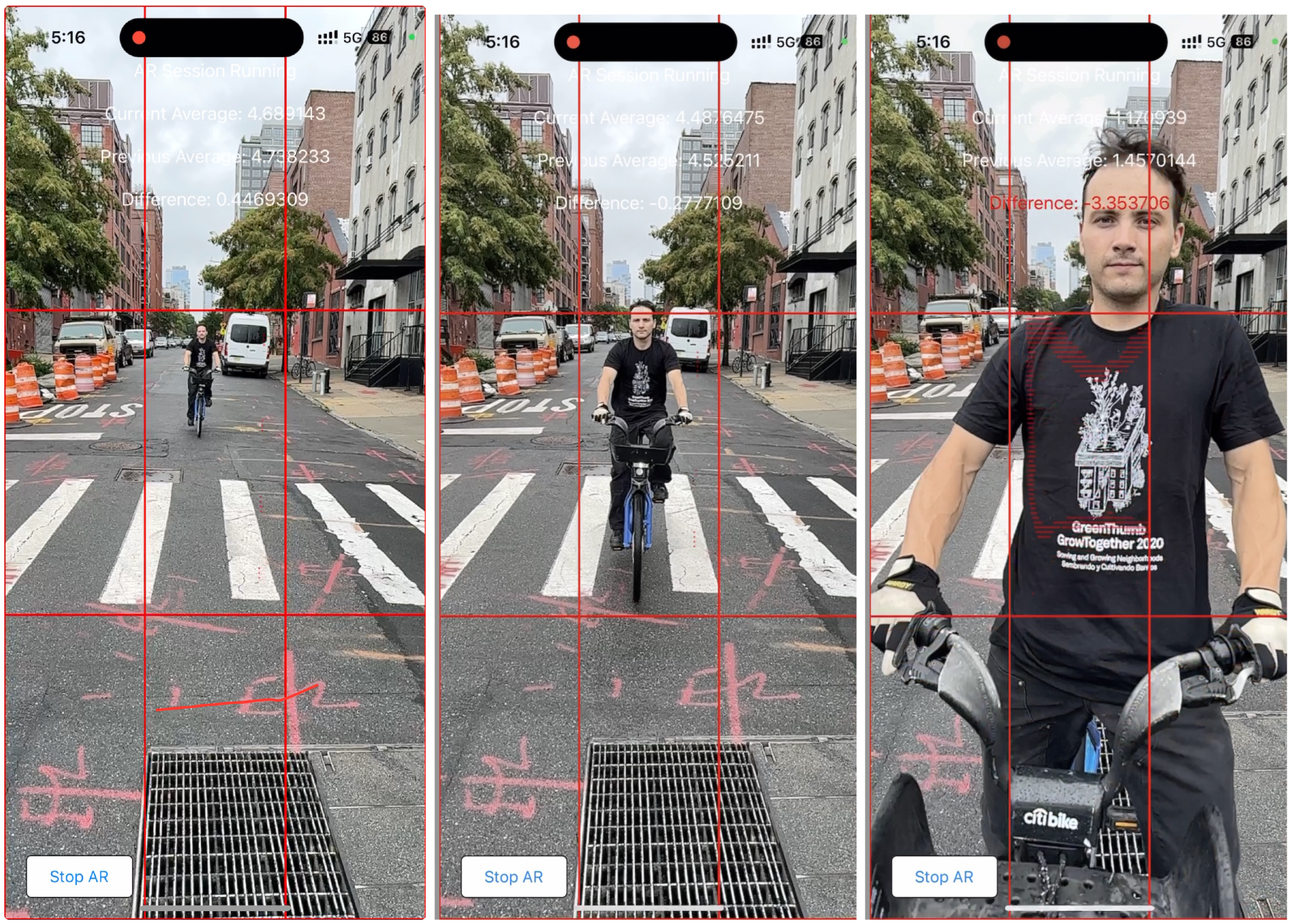

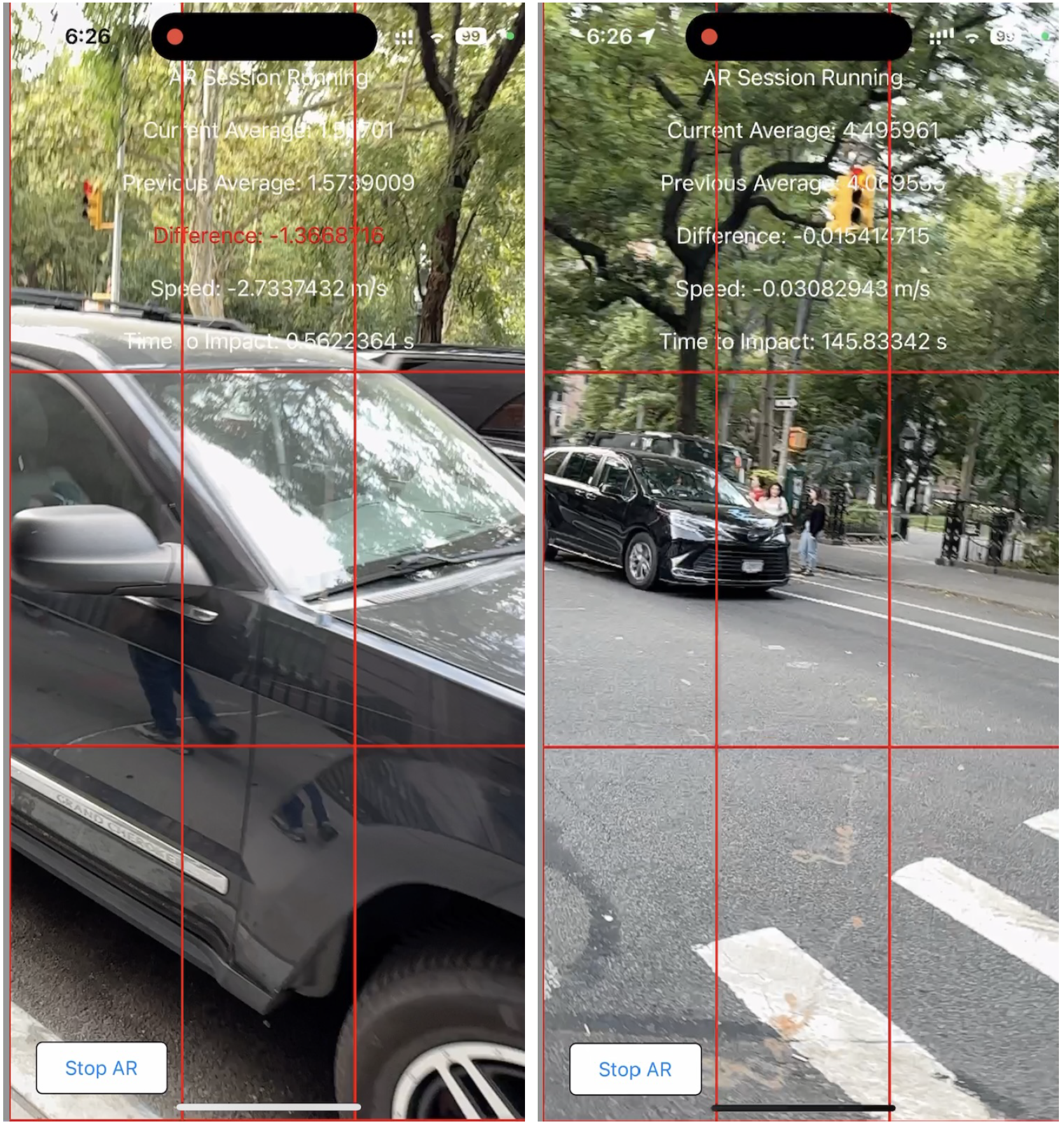

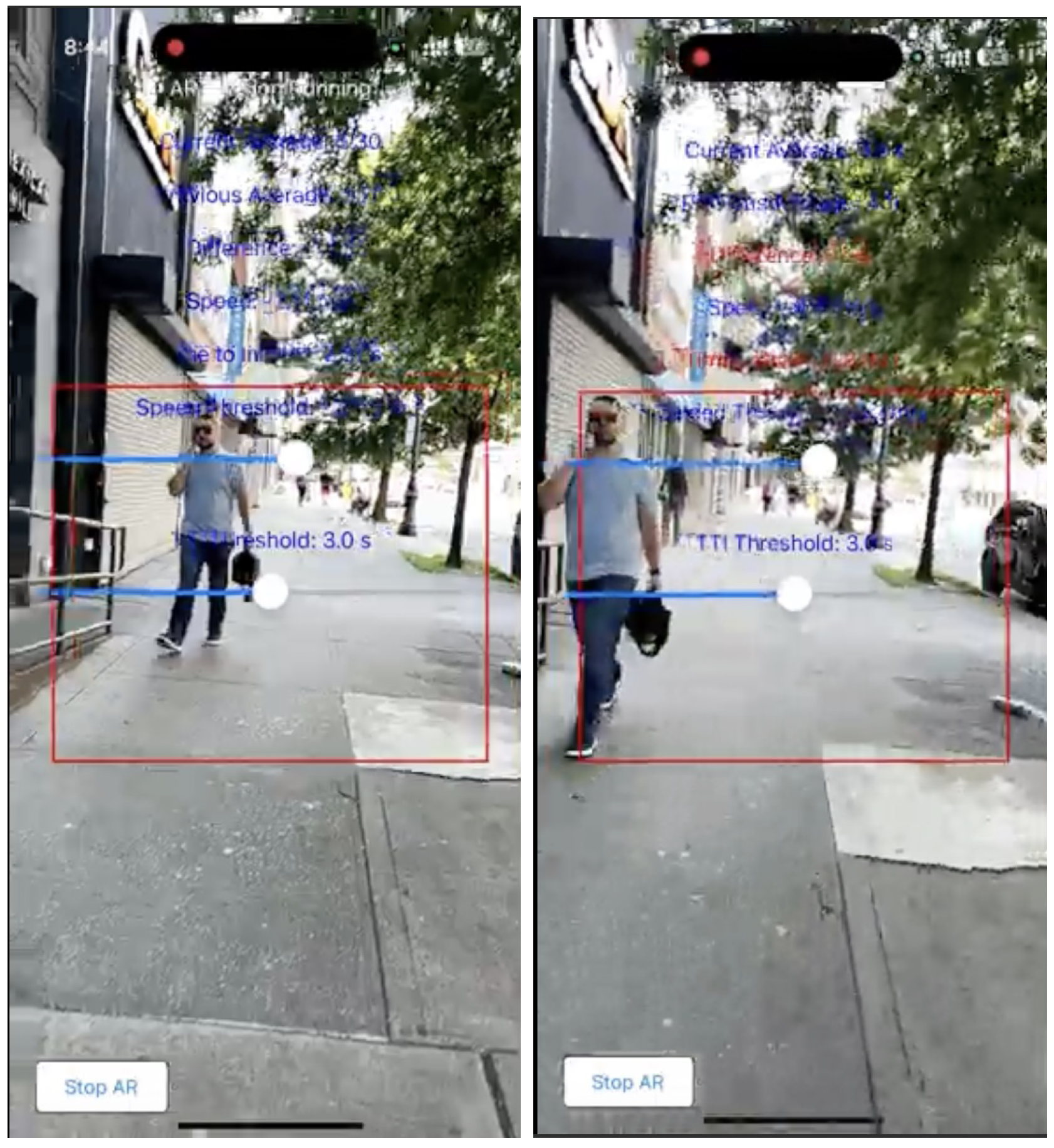

3.1. Threat Detection Technology and Thresholds

3.2. Algorithms

- Objects that are static relative to the pedestrian should not influence the calculation of threatDistance or speed, so we consider only objects whose distance has changed. Note, however, that the pedestrian may walk toward a fixed object, in which case the pedestrian should still be alerted.

- Further, if the pedestrian moves the camera so that some pixels register static objects as having moved toward the pedestrian, approximately the same number of pixels will register static objects as having moved away from the pedestrian, so the two effects largely cancel.

- Approaching objects (or objects that the pedestrian approaches) will occupy more of the screen and therefore cause more pixels to register relative movement towards the pedestrian, reducing the threatDistance and therefore increasing the possibility of threat detection.

- By contrast, objects moving to the side will occupy less of the screen and therefore cause more pixels to register movement away from the pedestrian, reducing the possibility of false alarms.
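The cancellation argument in these bullets can be illustrated with a small sketch. It assumes two consecutive frames of per-pixel depths in metres and a hypothetical noise floor `min_change`; the function name and the sample values are illustrative and are not taken from the ImpactAlert code.

```python
def mean_signed_change(prev_depths, curr_depths, min_change=0.05):
    """Average depth change (metres) over pixels whose depth actually changed.

    Negative values mean the scene is, on balance, getting closer.
    Pixels that changed by less than min_change (a hypothetical noise floor)
    are treated as static and ignored, so unchanged scenery contributes nothing.
    """
    changes = [c - p for p, c in zip(prev_depths, curr_depths)
               if abs(c - p) >= min_change]
    if not changes:
        return 0.0
    return sum(changes) / len(changes)


# Camera pan across static scenery: about as many pixels "approach" as "recede".
pan_prev = [4.0, 4.0, 5.0, 5.0, 6.0, 6.0]
pan_curr = [3.8, 4.2, 4.8, 5.2, 6.0, 6.0]

# Approaching object: it covers more pixels, and all of them get closer.
obj_prev = [4.0, 4.0, 4.0, 4.0, 6.0, 6.0]
obj_curr = [3.5, 3.5, 3.5, 3.5, 6.0, 6.0]

print(mean_signed_change(pan_prev, pan_curr))  # -> 0.0
print(mean_signed_change(obj_prev, obj_curr))  # -> -0.5
```

The panning frames average to zero, while the approaching object yields a clearly negative value.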

| Algorithm 1 Main Routine: Process the middle depth data from the LiDAR frame, looking only at close points that are moving. With the default thresholds, an alert is sounded if the object is approaching faster than 2.2 m/s and the time to impact is less than 3 s. The user can adjust these thresholds. |

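The decision step of Algorithm 1 can be sketched as follows. This is a minimal sketch, not the authors' implementation: it assumes speed is the average net movement divided by the frame interval (negative when approaching, matching the sign of the speeds in Appendix A), that time to impact is distance divided by closing speed, and that a 9999 s sentinel means "not approaching". The function names and the sentinel constant are hypothetical.

```python
# Hypothetical sentinel for "not approaching"; mirrors the 9999.00 s entries in Appendix A.
NO_IMPACT_S = 9999.0


def time_to_impact(threat_distance_m: float, speed_mps: float) -> float:
    """Seconds until impact at constant closing speed (negative speed = approaching)."""
    if speed_mps >= 0:
        return NO_IMPACT_S
    return threat_distance_m / -speed_mps


def should_alert(threat_distance_m: float, net_move_m: float, frame_dt_s: float,
                 speed_threshold_mps: float = 2.2, tti_threshold_s: float = 3.0):
    """Apply the default thresholds to one pair of frames.

    net_move_m is the average depth change of the moving close points between
    two frames taken frame_dt_s apart. Both thresholds are user-adjustable.
    """
    speed = net_move_m / frame_dt_s  # m/s, negative when the object approaches
    tti = time_to_impact(threat_distance_m, speed)
    alert = speed < -speed_threshold_mps and tti < tti_threshold_s
    return alert, speed, tti


# An object 4 m away closing at 4 m/s.
print(should_alert(4.0, -1.0, 0.25))  # -> (True, -4.0, 1.0)
```

Both comparisons are strict, matching "faster than 2.2 m/s" and "less than 3 s" in the routine's description.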

| Algorithm 2 Subroutine: ExtractDepth. The most important screen grid points are in the center of the screen because those points indicate objects that may approach the pedestrian. In our training experiments, objects not in the center of the screen were not a threat because they moved off to the side. |

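ExtractDepth can be approximated by cropping a centred window from the depth grid. In this sketch the grid is a row-major list of lists in metres, and both the rectangular window and the default `fraction` of one third are assumptions; the paper does not give the window size here.

```python
from typing import List

Grid = List[List[float]]  # row-major depth grid, metres


def extract_center(depth: Grid, fraction: float = 1 / 3) -> Grid:
    """Return the centred window of a depth grid.

    fraction is the share of rows and of columns to keep (hypothetical default).
    At least one row and one column are always kept.
    """
    rows, cols = len(depth), len(depth[0])
    keep_r = max(1, round(rows * fraction))
    keep_c = max(1, round(cols * fraction))
    r0 = (rows - keep_r) // 2  # top edge of the centred window
    c0 = (cols - keep_c) // 2  # left edge of the centred window
    return [row[c0:c0 + keep_c] for row in depth[r0:r0 + keep_r]]


grid = [[float(10 * r + c) for c in range(6)] for r in range(6)]
print(extract_center(grid, fraction=0.5))
# -> [[11.0, 12.0, 13.0], [21.0, 22.0, 23.0], [31.0, 32.0, 33.0]]
```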

| Algorithm 3 Subroutine: FindThreatDistanceMove. Determine the distance of the close points in the center of the screen that have moved as well as their average net movement. |

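FindThreatDistanceMove can be sketched as a filter over the centre-window depths of two consecutive frames, flattened into lists. The 5 m range for "close", the 0.05 m noise floor for "moved", and taking threatDistance as the mean distance of the selected points are all assumptions made for illustration; averaging is chosen so that more approaching pixels pull threatDistance down, as described in the bullets above.

```python
from typing import List, Optional, Tuple


def find_threat_distance_move(prev_center: List[float], curr_center: List[float],
                              max_range_m: float = 5.0, min_change_m: float = 0.05
                              ) -> Optional[Tuple[float, float]]:
    """Return (threat_distance, average_net_movement) for close points that moved.

    'Close' means the current depth is within max_range_m; 'moved' means the
    depth changed by at least min_change_m. Both limits are hypothetical.
    Returns None when no close point moved.
    """
    moved = [(c, c - p) for p, c in zip(prev_center, curr_center)
             if c <= max_range_m and abs(c - p) >= min_change_m]
    if not moved:
        return None
    threat_distance = sum(c for c, _ in moved) / len(moved)
    net_move = sum(d for _, d in moved) / len(moved)  # negative = approaching
    return threat_distance, net_move


# Two close points approach by 0.5 m; a far point and a static point are ignored.
print(find_threat_distance_move([3.0, 3.0, 8.0, 2.0], [2.5, 2.5, 7.0, 2.0]))
# -> (2.5, -0.5)
```

The returned pair is exactly what the main routine needs to derive speed and time to impact.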

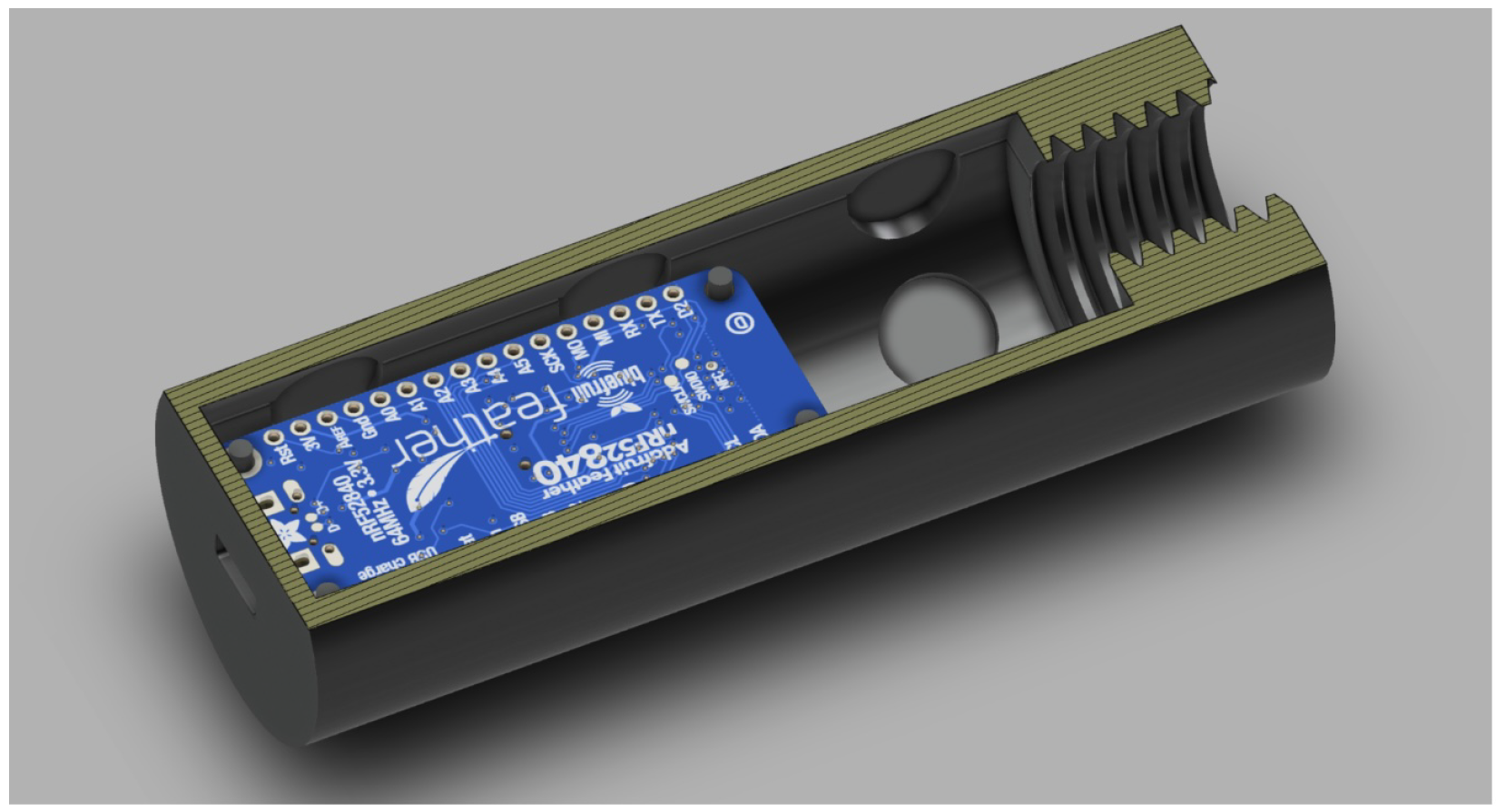

4. HapticHandle: An Inexpensive Cane Handle for Visually Impaired Pedestrians

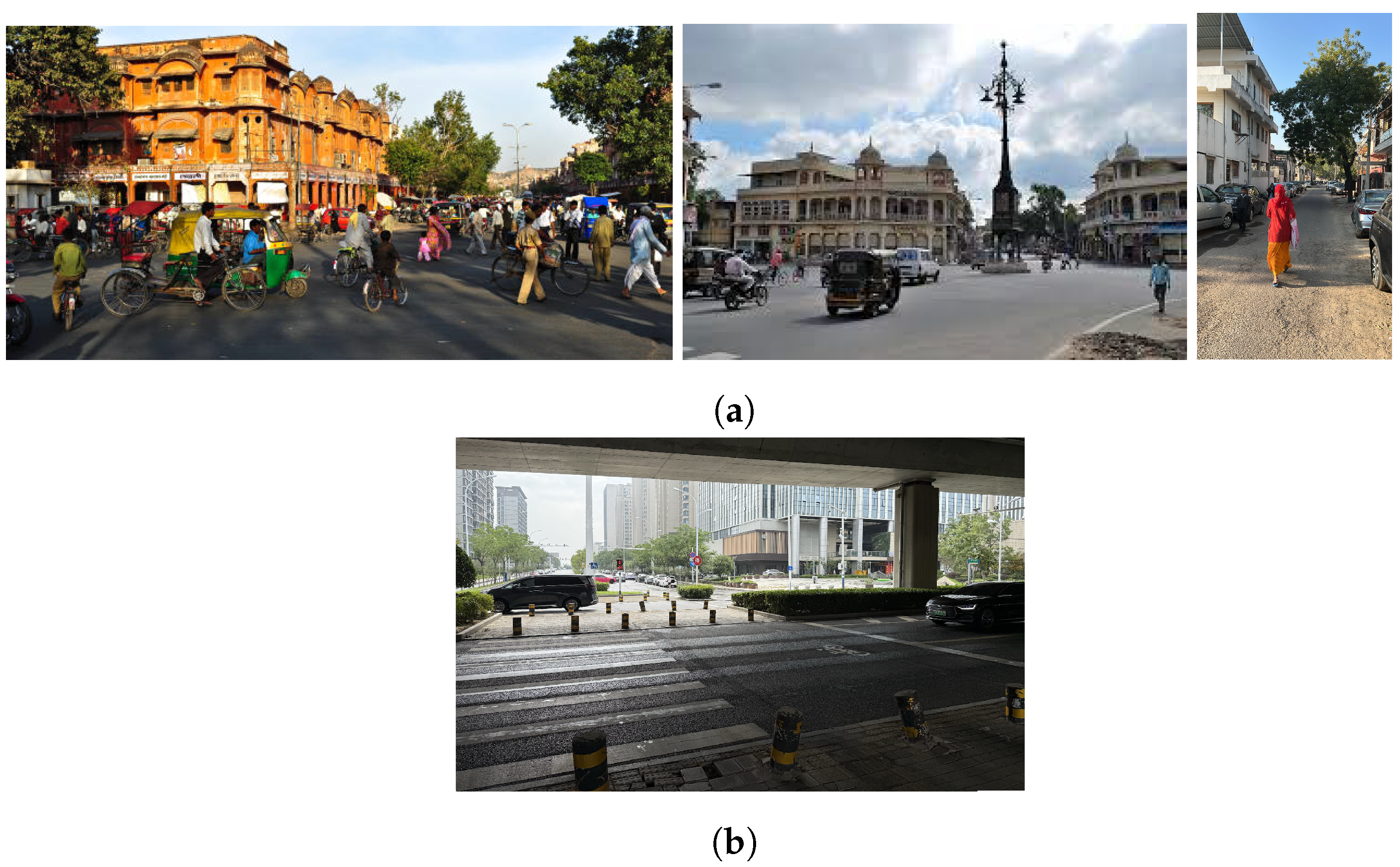

5. Threat Types

5.1. Scooters/Motorcycles

5.2. Bicycle

5.3. Static Threats

5.4. Pedestrian to Pedestrian Collisions

6. Experimental Results

6.1. Precision, Recall, and F1-Score

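- Precision: TP / (TP + FP), the fraction of alerts that corresponded to genuine threats.

- Recall: TP / (TP + FN), the fraction of genuine threats that triggered an alert.

- F1 Score: 2 × Precision × Recall / (Precision + Recall), the harmonic mean of precision and recall.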
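As a check on these definitions, the sketch below recomputes the metrics from raw counts. The counts are those reported for the default thresholds (−2.2 m/s, 3 s) in the threshold evaluation table; accuracy, which that table also reports, is included. The function name is illustrative.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int):
    """Return (accuracy, precision, recall, F1) from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0  # guard: no alerts raised
    recall = tp / (tp + fn) if tp + fn else 0.0     # guard: no genuine threats
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return accuracy, precision, recall, f1


# Default thresholds: TP=51, FP=3, FN=2, TN=29.
print([round(m, 3) for m in classification_metrics(51, 3, 2, 29)])
# -> [0.941, 0.944, 0.962, 0.953]
```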

6.2. Vehicle-Based Confusion Matrix

6.3. Quality Evaluations for Different Thresholds

7. Sensitivity Slider

8. Limitations

9. Future Work

10. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Raw Data Results

| Video | Timestamp | Speed | Time to Impact | Threat |
|---|---|---|---|---|
| Walking1 | 0:03:50 | −0.77 m/s | 5.48 s | No |
| Walking1 | 0:04:10 | −0.63 m/s | 6.33 s | No |
| Walking1 | 0:04:30 | −2.50 m/s | 1.75 s | Yes |
| Walking1 | 0:04:50 | −3.63 m/s | 1.12 s | Yes |
| Walking1 | 0:05:10 | −4.28 m/s | 0.91 s | Yes |
| Walking1 | 0:05:30 | −6.34 m/s | 0.58 s | Yes |
| Walking1 | 0:05:50 | −7.05 m/s | 0.47 s | Yes |
| Walking2 | 0:03:00 | −0.27 m/s | 21.38 s | No |
| Walking2 | 0:03:20 | −7.73 m/s | 0.73 s | Yes |
| Walking2 | 0:03:40 | −7.77 m/s | 0.62 s | Yes |
| Walking2 | 0:04:00 | −8.78 m/s | 0.52 s | Yes |
| Walking2 | 0:04:20 | −10.40 m/s | 0.39 s | Yes |
| Walking2 | 0:04:40 | −8.80 m/s | 0.41 s | Yes |
| Walking2 | 0:05:00 | −8.10 m/s | 0.37 s | Yes |
| Walking3 | 0:04:30 | −2.70 m/s | 1.64 s | Yes |
| Walking3 | 0:04:50 | −1.63 m/s | 2.59 s | No |
| Walking3 | 0:05:10 | −3.17 m/s | 1.04 s | Yes |
| Walking3 | 0:05:30 | −2.35 m/s | 1.46 s | Yes |
| Walking3 | 0:05:50 | 0.35 m/s | 9999.00 s | No |
| Walking3 | 0:06:10 | 0.14 m/s | 9999.00 s | No |
| Walking3 | 0:06:30 | −0.24 m/s | 22.47 s | No |
| Walking3 | 0:06:50 | −0.37 m/s | 1391.66 s | No |
| Walking3 | 0:16:55 | −6.08 m/s | 1.26 s | Yes |
| Walking3 | 0:17:15 | −3.18 m/s | 1.24 s | Yes |
| Walking3 | 0:17:35 | −2.99 m/s | 1.61 s | Yes |
| Walking3 | 0:17:55 | −4.23 m/s | 0.82 s | Yes |
| Walking4 | 0:05:00 | −2.11 m/s | 2.51 s | No |
| Walking4 | 0:05:20 | −2.45 m/s | 1.46 s | No |
| Walking4 | 0:06:00 | −4.09 m/s | 1.02 s | No |
| Walking4 | 0:06:20 | −0.20 m/s | 4.40 s | No |
| Walking5 | 0:02:20 | −6.76 m/s | 0.44 s | Yes |
| Walking5 | 0:03:10 | −4.13 m/s | 0.69 s | Yes |
| Walking6 | 0:04:30 | −5.67 m/s | 0.45 s | Yes |
| Walking6 | 0:04:50 | −9.66 m/s | 0.23 s | Yes |
| Walking6 | 0:05:10 | −11.32 m/s | 0.17 s | Yes |
| Walking6 | 0:05:30 | −10.38 m/s | 0.16 s | Yes |
| Walking7 | 0:17:40 | −2.69 m/s | 1.99 s | Yes |
| Walking7 | 0:18:00 | −3.31 m/s | 1.39 s | Yes |
| Walking7 | 0:18:20 | −7.09 m/s | 0.69 s | Yes |
| Walking7 | 0:18:40 | −2.09 m/s | 1.76 s | Yes (False Negative) |
| Walking7 | 0:19:00 | −4.44 m/s | 0.73 s | Yes |
| Walking8 | 0:05:00 | −3.39 m/s | 1.72 s | Yes |
| Walking8 | 0:05:20 | −3.73 m/s | 1.71 s | Yes |
| Walking8 | 0:05:40 | −0.14 m/s | 9999.00 s | No |
| Car1 | 0:01:15 | −1.67 m/s | 4.52 s | No |
| Car1 | 0:01:35 | −5.08 m/s | 1.58 s | Yes |
| Car1 | 0:01:55 | −6.02 m/s | 1.18 s | Yes |
| Car1 | 0:02:15 | −7.18 m/s | 0.94 s | Yes |
| Car1 | 0:02:35 | −10.58 m/s | 0.59 s | Yes |
| Car1 | 0:02:55 | −8.93 m/s | 0.7 s | Yes |
| Car1 | 0:03:15 | −0.11 m/s | 12.7 s | No |
| Car2 | 0:01:30 | −2.30 m/s | 4.18 s | No |
| Car2 | 0:01:50 | −3.78 m/s | 2.82 s | Yes |
| Car2 | 0:02:10 | −6.04 m/s | 1.56 s | Yes |
| Car2 | 0:02:30 | −7.54 m/s | 1.07 s | Yes |
| Car2 | 0:02:50 | −8.23 m/s | 0.36 s | Yes |
| Car2 | 0:03:10 | −4.05 m/s | 1.76 s | Yes |
| Car2 | 0:03:30 | −6.16 m/s | 1.18 s | Yes |
| Car2 | 0:03:50 | −0.08 m/s | 116.03 s | No |
| Car3 | 0:00:45 | −3.92 m/s | 9999.00 s | No |
| Car3 | 0:01:05 | 4.33 m/s | 9999.00 s | No |
| Car3 | 0:01:25 | 3.37 m/s | 9999.00 s | No |
| Car3 | 0:01:45 | −0.95 m/s | 8.92 s | No |
| Bus1 | 0:16:40 | −0.68 m/s | 24.91 s | No |
| Bus1 | 0:17:00 | −0.62 m/s | 21.24 s | No |
| Bus1 | 0:17:20 | −4.12 m/s | 3.44 s | No |
| Bus1 | 0:17:40 | −6.35 m/s | 1.57 s | Yes |
| Bus1 | 0:18:00 | −10.90 m/s | 0.76 s | Yes |
| Bus1 | 0:18:20 | −12.26 m/s | 0.62 s | Yes |
| Bus1 | 0:18:40 | −11.96 m/s | 0.70 s | Yes |
| Bus1 | 0:19:00 | −7.24 m/s | 1.40 s | Yes |
| Bus2 | 0:05:25 | −1.54 m/s | 7.62 s | No |
| Bus2 | 0:05:45 | −2.92 m/s | 3.71 s | No |
| Bus2 | 0:06:05 | −3.61 m/s | 3.00 s | No |
| Bus2 | 0:06:35 | −3.20 m/s | 3.57 s | No |
| Bus2 | 0:06:55 | −3.05 m/s | 3.54 s | No |
| Scooter1 | 0:00:22 | −4.50 m/s | 2.73 s | Yes |
| Scooter1 | 0:00:42 | −0.90 m/s | 11.81 s | Yes (False Negative) |
| Scooter1 | 0:01:05 | −0.53 m/s | 21.63 s | No |
| Scooter2 | 0:00:35 | −3.5 m/s | 1.03 s | Yes |
| Scooter2 | 0:00:55 | −4.86 m/s | 1.03 s | No |
| Scooter3 | 0:04:50 | −0.25 m/s | 45.90 s | No |
| Scooter3 | 0:05:10 | −6.03 m/s | 2.37 s | Yes |
| Scooter3 | 0:05:30 | −8.75 m/s | 1.39 s | Yes |
| Scooter3 | 0:05:50 | −7.97 m/s | 1.09 s | Yes |
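The raw rows can be checked against the Scooter row of the vehicle-based confusion matrix by applying the default alert rule (speed below −2.2 m/s and time to impact below 3 s). This sketch treats the last column as the ground-truth threat label, which is consistent with the rows annotated as false negatives; the data structure and function name are illustrative.

```python
# (clip, speed m/s, time to impact s, ground-truth threat), copied from the Scooter rows.
SCOOTER_ROWS = [
    ("Scooter1", -4.50, 2.73, True),
    ("Scooter1", -0.90, 11.81, True),  # annotated as a false negative
    ("Scooter1", -0.53, 21.63, False),
    ("Scooter2", -3.5, 1.03, True),
    ("Scooter2", -4.86, 1.03, False),
    ("Scooter3", -0.25, 45.90, False),
    ("Scooter3", -6.03, 2.37, True),
    ("Scooter3", -8.75, 1.39, True),
    ("Scooter3", -7.97, 1.09, True),
]


def confusion_counts(rows, speed_threshold_mps=2.2, tti_threshold_s=3.0):
    """Tally TP/TN/FP/FN by comparing the alert rule with the ground-truth label."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for _clip, speed, tti, is_threat in rows:
        alert = speed < -speed_threshold_mps and tti < tti_threshold_s
        if alert:
            counts["TP" if is_threat else "FP"] += 1
        else:
            counts["FN" if is_threat else "TN"] += 1
    return counts


print(confusion_counts(SCOOTER_ROWS))
# -> {'TP': 5, 'TN': 2, 'FP': 1, 'FN': 1}
```

The strict inequalities matter: Bus2 at 0:06:05 has a time to impact of exactly 3.00 s, and the bus row of the confusion matrix reports no false positives, so that row does not trigger an alert.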


| Vehicle Type | True Positives (TP) | True Negatives (TN) | False Positives (FP) | False Negatives (FN) |
|---|---|---|---|---|
| Bus | 5 | 8 | 0 | 0 |
| Car | 11 | 8 | 0 | 0 |
| Scooter | 5 | 2 | 1 | 1 |
| Walking | 30 | 11 | 2 | 1 |

| Speed Threshold (m/s) | Time-to-Impact Threshold (s) | True Positives (TP) | False Positives (FP) | False Negatives (FN) | True Negatives (TN) | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|---|---|---|---|---|
| −5.0 | 3 | 32 | 0 | 21 | 32 | 0.753 | 1.000 | 0.604 | 0.753 |
| −3.0 | 3 | 46 | 2 | 7 | 30 | 0.894 | 0.958 | 0.868 | 0.911 |
| −2.2 | 1 | 25 | 0 | 28 | 32 | 0.671 | 1.000 | 0.472 | 0.641 |
| −2.2 | 3 | 51 | 3 | 2 | 29 | 0.941 | 0.944 | 0.962 | 0.953 |
| −2.2 | 5 | 51 | 9 | 2 | 23 | 0.871 | 0.850 | 0.962 | 0.903 |
| −1.0 | 3 | 52 | 5 | 1 | 27 | 0.929 | 0.912 | 0.981 | 0.945 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rawat, R.; Lant, C.; Yuan, H.; Shasha, D. ImpactAlert: Pedestrian-Carried Vehicle Collision Alert System. Electronics 2025, 14, 3133. https://doi.org/10.3390/electronics14153133

Rawat R, Lant C, Yuan H, Shasha D. ImpactAlert: Pedestrian-Carried Vehicle Collision Alert System. Electronics. 2025; 14(15):3133. https://doi.org/10.3390/electronics14153133

Chicago/Turabian style: Rawat, Raghav, Caspar Lant, Haowen Yuan, and Dennis Shasha. 2025. "ImpactAlert: Pedestrian-Carried Vehicle Collision Alert System" Electronics 14, no. 15: 3133. https://doi.org/10.3390/electronics14153133

APA style: Rawat, R., Lant, C., Yuan, H., & Shasha, D. (2025). ImpactAlert: Pedestrian-Carried Vehicle Collision Alert System. Electronics, 14(15), 3133. https://doi.org/10.3390/electronics14153133