1. Introduction

Driven by a new wave of technological revolution and industrial transformation, the manufacturing industry is accelerating toward a development stage characterized by high-end, intelligent, and personalized production. The deep integration of next-generation information technologies with advanced manufacturing techniques is driving the evolution of intelligent manufacturing from automation to autonomous intelligence. Represented by the European Union’s “Industry 5.0” initiative, this new wave of industrial transformation emphasizes human-centric manufacturing, advocating deep collaboration between humans and robots and aiming to unify technological empowerment with human value [1]. Against this backdrop, Human–Robot Collaboration (HRC) has become a key direction in the evolution of intelligent manufacturing [2]. This trend is particularly evident in high-end equipment manufacturing sectors such as aerospace, where the increasing complexity, precision requirements, and customization of assembly processes render traditional assembly methods, which rely on manual experience and paper-based process documentation, insufficient to meet the demands for high efficiency, high quality, and traceability in production [3].

The assembly of complex aerospace products, particularly in confined spaces such as launcher electronic compartments, faces challenges such as multi-component integration, high-precision requirements, and spatial constraints [4]. Traditional manual assembly methods, lacking effective task planning and real-time guidance, are prone to errors, inefficiency, and poor traceability, and therefore fall short of current demands for high reliability and rapid responsiveness in production. Consequently, there is an urgent need to develop novel human–robot integrated assembly approaches to overcome the cognitive and operational limitations of conventional processes and to reduce the workload on operators [5]. Augmented Reality (AR), a key interactive technology that integrates virtual information with real-world operational environments, overlays virtual guidance information onto the physical workspace during assembly, offering a new way to improve the visibility and accessibility of assembly processes [6]. Meanwhile, with the rapid advancement of Large Language Models (LLMs) in knowledge reasoning and natural language understanding, these models can assist in constructing interpretable assembly knowledge systems and support intelligent reasoning and real-time response for task planning, anomaly detection, and process compliance in assembly operations [7].

LLMs offer innovative approaches to HRC by continuously accumulating and updating experiential knowledge. With ongoing advancements in computational power and data aggregation, the capabilities of these models have been significantly enhanced. The GPT series, for instance, uses pre-training and fine-tuning to understand and follow human instructions, thereby enabling the accurate interpretation and resolution of complex problems [8]. Given the complexity of HRC assembly scenarios, LLMs are well-positioned to bridge the gap between humans and the physical world, significantly enhancing the flexibility of collaborative robotic assembly processes [9]. Wang et al. [10] proposed an LLM-based navigation method for human–robot interactive mobile inspection robots, which can replace human operators in hazardous industrial environments and perform complex navigation tasks in response to natural language instructions. Chen et al. [11] introduced an autonomous HRC assembly approach driven by LLM and digital twin technologies, in which an LLM-powered intelligent assistant is integrated into the control system of a collaborative robotic arm to facilitate autonomous HRC. Liu et al. [12] proposed a framework combining knowledge graphs and LLMs for fault diagnosis in aerospace assembly. Using subgraph embedding and retrieval-augmented knowledge fusion techniques, this framework significantly enhances reasoning capabilities and enables efficient fault localization and resolution. Bao et al. [13] developed an LLM-assisted AR assembly methodology tailored to complex product assembly, integrating an LLM with AR and constructing a matching process information model to achieve intelligent LLM-guided assembly assistance. Benefiting from powerful autonomous reasoning and computational capabilities, LLMs provide novel approaches for HRC. However, challenges remain, including limited flexibility in task planning, incorrect application of inferred process knowledge, and a lack of professional rigor in generated assembly plans. Furthermore, achieving seamless interaction between LLMs and HRC systems, as well as enabling more natural and intuitive collaborative assembly processes, still requires extensive exploration.

AR technology integrates virtual information with the real environment by superimposing digital content, such as images, text, or models, onto the user’s field of view, allowing users to perceive virtual elements overlaid on physical objects [14]. This capability enables operators to better understand the operational context and use mapped virtual information to support the execution of assembly tasks [15]. Liu et al. [16] designed a user-centered AR-enhanced interactive interface, allowing personnel to perform assisted maintenance tasks through visual guidance, thereby mitigating the impact of spatial constraints and human factors. Fang et al. [17] proposed a human-centric, multimodal, context-aware AR method for recognizing stages in on-site assembly. This method can automatically confirm actual assembly outcomes, reduce the cognitive load associated with triggering subsequent instructions, and support real-time validation of the current task state during operation. Yan et al. [18] introduced a mixed reality (MR)-based remote collaborative assembly approach that combines information recommendation and visual enhancement. This method optimizes users’ attention allocation during remote collaboration, improves the visibility of key guidance information, and enhances the efficiency and user experience of MR-assisted assembly. Chan et al. [19] developed an AR-assisted interface for HRC-based assembly, demonstrating that the AR interface effectively reduces physical intervention requests and improves the utilization of cobots.

In AR-assisted HRC assembly, tracking and registration technologies are critical components. These technologies enable the accurate integration of virtual 3D models or information with the physical world, thereby supporting precise virtual-to-real alignment [20]. As a core technology of AR systems, virtual–real mapping ensures accurate alignment between virtual 3D models and real components [21]. Among the tracking and registration technologies required for virtual–real mapping, ORB-SLAM2 (Oriented FAST and Rotated BRIEF Simultaneous Localization and Mapping) is one of the most widely adopted visual SLAM methods. Xi et al. [22] improved ORB-SLAM2 by incorporating the Progressive Sample Consensus (PROSAC) algorithm for outlier rejection and added dense point cloud and octree map construction threads to generate maps suitable for robotic navigation and path planning. Zhang et al. [23] employed a Grid-based Motion Statistics (GMS) algorithm to optimize the performance of ORB-SLAM2, thereby reducing processing time and correcting matching errors.

Although significant progress has been made in AR-based guidance and tracking registration technologies, limitations persist in complex HRC assembly environments. Existing AR-assisted assembly solutions mostly focus on guiding predefined procedures, with limited support for intelligent interaction and information integration. Moreover, assembly scenarios often lack prominent texture features, and natural feature-based tracking methods and visual SLAM techniques struggle under such low-texture or textureless conditions. To better meet the demands of complex assembly tasks, the robustness of AR-based HRC systems must be further improved.

The integration of LLMs with AR technologies has further enhanced the real-time performance and intelligence of HRC assembly. By leveraging the advanced data processing and reasoning capabilities of LLMs together with the real-time information visualization of AR, a more seamless fusion between humans and the assembly environment can be achieved [24]. To address the challenges that persist in HRC assembly, this paper proposes an intelligent HRC assembly approach that integrates LLM-driven reasoning with AR-based interaction. This method is motivated by the human-centric development paradigm of Industry 5.0 and aims to support emerging requirements in HRC. The goal is to overcome the rigid human–robot relationships in traditional assembly processes and to promote more organic integration between humans and robots. By employing domain-specific knowledge reasoning via an LLM, information-enhanced visualization, and interactive fusion techniques, the proposed approach enables operators to accurately comprehend task information and assembly context, thereby facilitating more natural and efficient collaboration. This research primarily focuses on addressing key challenges encountered during the assembly of complex aerospace products in confined spaces, such as limited visibility, difficulty in accessing domain knowledge, and heavy reliance on decision-making support. The main contributions of this paper are as follows:

- (1)

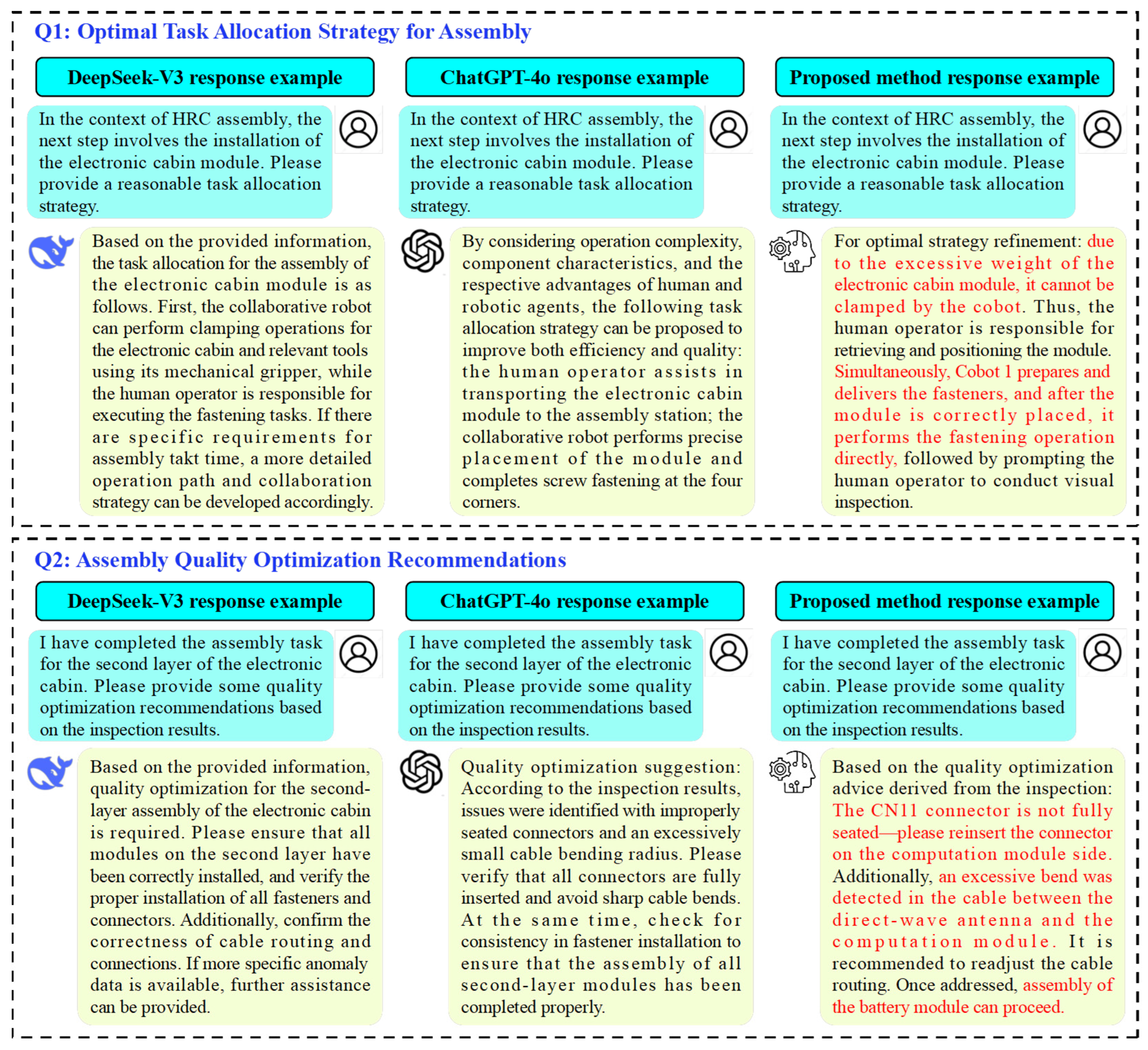

A knowledge reasoning method for complex aerospace product assembly assisted by an LLM is proposed. By parsing assembly tasks into sequential components and integrating them with process requirements, a structured process information model is constructed. This enables LLM-driven intelligent task guidance and optimized decision-making for assembly quality. The method effectively leverages the respective strengths of humans and robots in task execution, thereby improving the efficiency of HRC during the assembly process.

- (2)

An intelligent AR-based visual interaction system is developed, which achieves a virtual–real fusion of assembly scenarios through virtual scene construction and real-time tracking of assembly components. The system employs AR technology to provide enhanced visual guidance and intelligent interaction throughout the assembly process. This significantly improves the visibility and responsiveness of assembly information, increases operational efficiency, reduces error rates, and enhances the overall experience of HRC assembly.

- (3)

Validation of the proposed intelligent HRC assembly approach is conducted through a case study involving the assembly of a complex aerospace product. The results demonstrate the feasibility and effectiveness of the proposed system in real-world assembly scenarios.

2. Methodology

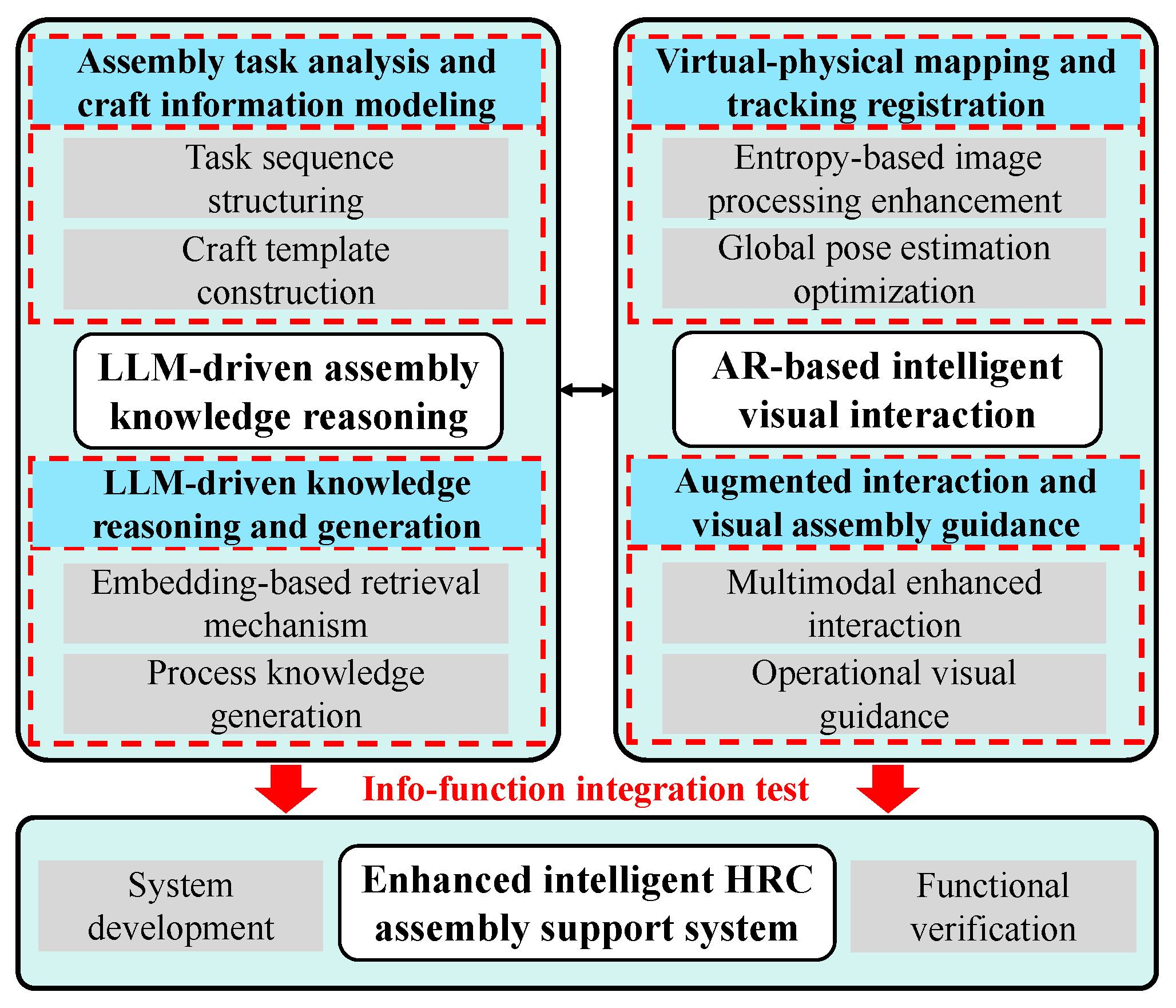

The proposed method integrates LLM and AR technologies to construct an enhanced intelligent HRC assembly assistance system. As illustrated in Figure 1, the system framework is divided into two main modules: assembly knowledge reasoning and intelligent visual interaction. The process begins with assembly task analysis and process information modeling. The assembly workflow is structured through task sequence decomposition and process template construction. These structured data serve as inputs for LLM-driven knowledge reasoning and generation, including retrieval augmentation and contextual knowledge synthesis, thereby enabling data-based decision support for assembly processes. In parallel, virtual–real mapping and tracking registration are achieved via entropy-based image processing and optimized global pose estimation, ensuring precise alignment of virtual content with the physical scene. Furthermore, AR-based intelligent visual interaction provides multimodal enhanced interaction and visual guidance for operators. By integrating these functions, seamless interaction between the LLM and human operators is realized, allowing knowledge reasoning to be effectively embedded within the assembly process.

2.1. Assembly Task Analysis and Process Information Modeling

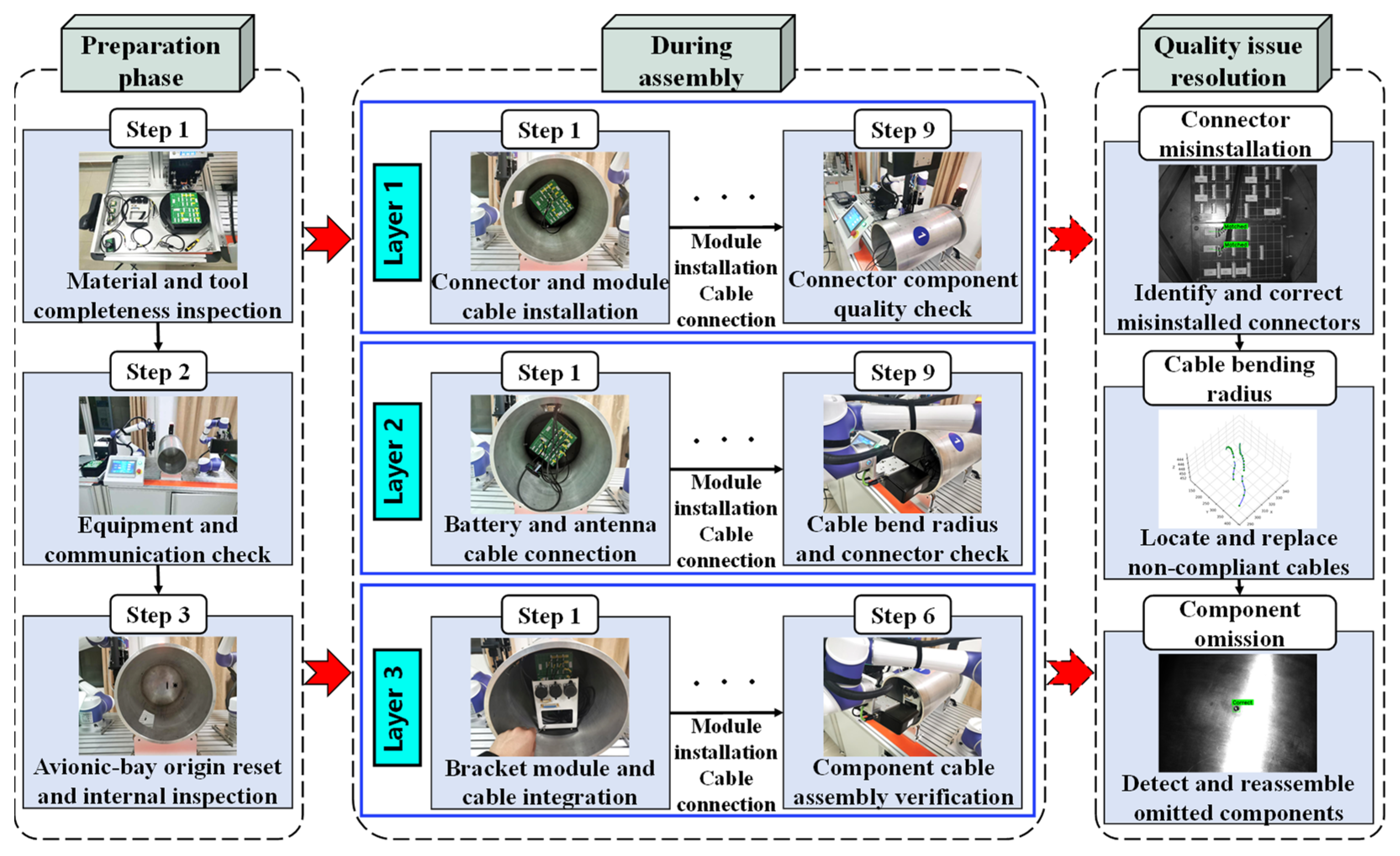

To enable intelligent assistance and knowledge reasoning in the HRC assembly of complex products, it is essential to first conduct a systematic analysis of assembly tasks and construct a structured process information model. As shown in Figure 2, the assembly of a specific model of an aerospace electronic cabin is selected as a representative scenario. Based on its assembly process documentation and operational procedures, the overall assembly task is divided into three primary stages: the preparation stage, the assembly execution stage, and the quality issue resolution stage. The assembly process is further structured into three hierarchical layers, progressing from inside to outside, each of which is decomposed into several subtasks. Each subtask includes key information such as operational objectives, assembly objects, required tools, and assembly constraints. The directed relationships among subtasks represent task dependencies: a subtask can only be executed after the completion of its predecessor, as indicated by the directional arrows. The layers must be executed sequentially, with each layer triggered by the successful completion of the previous one. Furthermore, three typical quality issues and their corresponding solutions are predefined within the model. This structured task representation serves as a knowledge template to support subsequent reasoning processes such as assembly sequence planning and task allocation.
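The precedence rule described above (a subtask runs only after its predecessors complete) can be sketched as a topological sort over the subtask graph. This is a minimal illustration, not the authors' implementation: the function name `execution_order`, the subtask IDs, and the dictionary layout are all hypothetical.

```python
from collections import deque


def execution_order(dependencies):
    """Return one valid execution order using Kahn's topological sort.

    `dependencies` maps each subtask ID to the list of subtask IDs that
    must be completed first. Every predecessor must also appear as a key.
    """
    indegree = {task: len(preds) for task, preds in dependencies.items()}
    successors = {task: [] for task in dependencies}
    for task, preds in dependencies.items():
        for pred in preds:
            successors[pred].append(task)

    # Subtasks with no unfinished predecessor are ready to execute.
    ready = deque(sorted(t for t, d in indegree.items() if d == 0))
    order = []
    while ready:
        task = ready.popleft()
        order.append(task)
        for nxt in successors[task]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)

    if len(order) != len(dependencies):
        raise ValueError("cyclic dependency among subtasks")
    return order


# Hypothetical subtask IDs: layer 1 must finish before layer 2, and so on.
EXAMPLE_TASKS = {
    "L1-T1": [],
    "L1-T2": ["L1-T1"],
    "L2-T1": ["L1-T2"],
    "L2-T2": ["L2-T1"],
    "L3-T1": ["L2-T2"],
}
```

Calling `execution_order(EXAMPLE_TASKS)` yields the subtasks in an order that respects every arrow in the graph; a cycle in the dependencies is reported as an error rather than silently ignored.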

On this basis, the execution roles for each task are assigned by leveraging the respective advantages of human operators and cobots in handling different task types. To further enhance the automation of information processing, the multidimensional data involved in HRC scenarios must be effectively integrated to ensure consistency throughout the complex assembly process. Subsequently, the textual information of each assembly task is encoded into a structured sequence input template, as shown in Figure 3, which includes fields such as Task ID, Process Description, Required Resources, Execution Role, and Expected Outcome. The resulting process information model serves not only as a foundational data source for the AR-based visual interaction system but also as the input for LLM-driven knowledge reasoning in assembly. Through structured representation and semantic embedding, this model provides reliable knowledge support for subsequent intelligent task recommendation and reasoning for quality optimization.
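As a rough illustration of such a template, the five named fields can be held in a small record type and serialized to text before embedding. The class name `AssemblyTask`, the method `to_template`, the role labels, and the text layout are assumptions made for this sketch; the paper does not specify the serialization format.

```python
from dataclasses import dataclass, field


@dataclass
class AssemblyTask:
    """One subtask record using the five fields named in the text."""
    task_id: str
    process_description: str
    required_resources: list = field(default_factory=list)
    execution_role: str = "human"  # hypothetical labels: "human", "cobot", "human+cobot"
    expected_outcome: str = ""

    def to_template(self) -> str:
        """Serialize the record into a line-per-field text block."""
        resources = ", ".join(self.required_resources) or "none"
        return (
            f"Task ID: {self.task_id}\n"
            f"Process Description: {self.process_description}\n"
            f"Required Resources: {resources}\n"
            f"Execution Role: {self.execution_role}\n"
            f"Expected Outcome: {self.expected_outcome}"
        )
```

Keeping one field per line makes the serialized text easy to embed for retrieval and easy to render as an AR text prompt, which matches the dual use of the model described above.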

2.2. LLM-Driven Assembly Knowledge Reasoning and Generation

Building upon the structured modeling of assembly tasks and process information, this study further develops an assembly knowledge reasoning mechanism driven by an LLM to support the execution of complex tasks and the generation of optimization suggestions in HRC scenarios. This mechanism leverages a retrieval-augmented generation (RAG) framework to enable intelligent access to structured assembly knowledge and context-aware responses, as illustrated in Figure 4.

Based on the previously constructed process information templates, each subtask is transformed into a structured input comprising elements such as task description, target component, executor role, process specifications, and inspection requirements. These process data are encoded into text and embedded into high-dimensional vectors, forming the foundation of a knowledge base that supports reasoning by the LLM. When an assembly-related query is initiated—either by the operator or the system—the input is first standardized and converted into a query vector. This vector is then compared with all embedded knowledge entries in the database using similarity computation to retrieve the most relevant information. The retrieved content is concatenated with the original query and fed into the LLM as context prompts, enabling a knowledge-enhanced generation process under the RAG architecture.
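The retrieval step described above (embed the query, rank knowledge entries by similarity, prepend the best matches to the prompt) can be sketched as follows. The `embed` function here is a toy bag-of-words stand-in for a real embedding model, cosine similarity is assumed as the similarity measure, and all function names and the prompt layout are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, knowledge_base, top_k=2):
    """Return the top_k knowledge entries most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        knowledge_base,
        key=lambda entry: cosine(query_vec, embed(entry)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, knowledge_base, top_k=2):
    """Concatenate the retrieved entries with the original query."""
    context = "\n".join(f"- {entry}" for entry in retrieve(query, knowledge_base, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"
```

In a real deployment the knowledge entries would be embedded once and stored in a vector index instead of being re-embedded per query; the sketch recomputes them only to stay self-contained.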

For complex aerospace electronic cabin assembly tasks, the reasoning module not only provides precise assembly instructions and key considerations but also dynamically analyzes whether the task requires multi-agent collaboration. It can autonomously determine the optimal division of labor between human operators and cobots. Moreover, by integrating quality inspection feedback, the LLM can propose quality optimization suggestions—such as modifying cable routing strategies or adjusting fastener installation sequences. To ensure that the knowledge output aligns with operational conventions and communication norms specific to HRC assembly scenarios, a prompt-based fine-tuning mechanism is designed. This enables the LLM to tailor its response format, linguistic style, and granularity of information to better suit the requirements of aerospace assembly contexts, thereby enhancing both the accuracy and the professionalism of the reasoning outcomes.

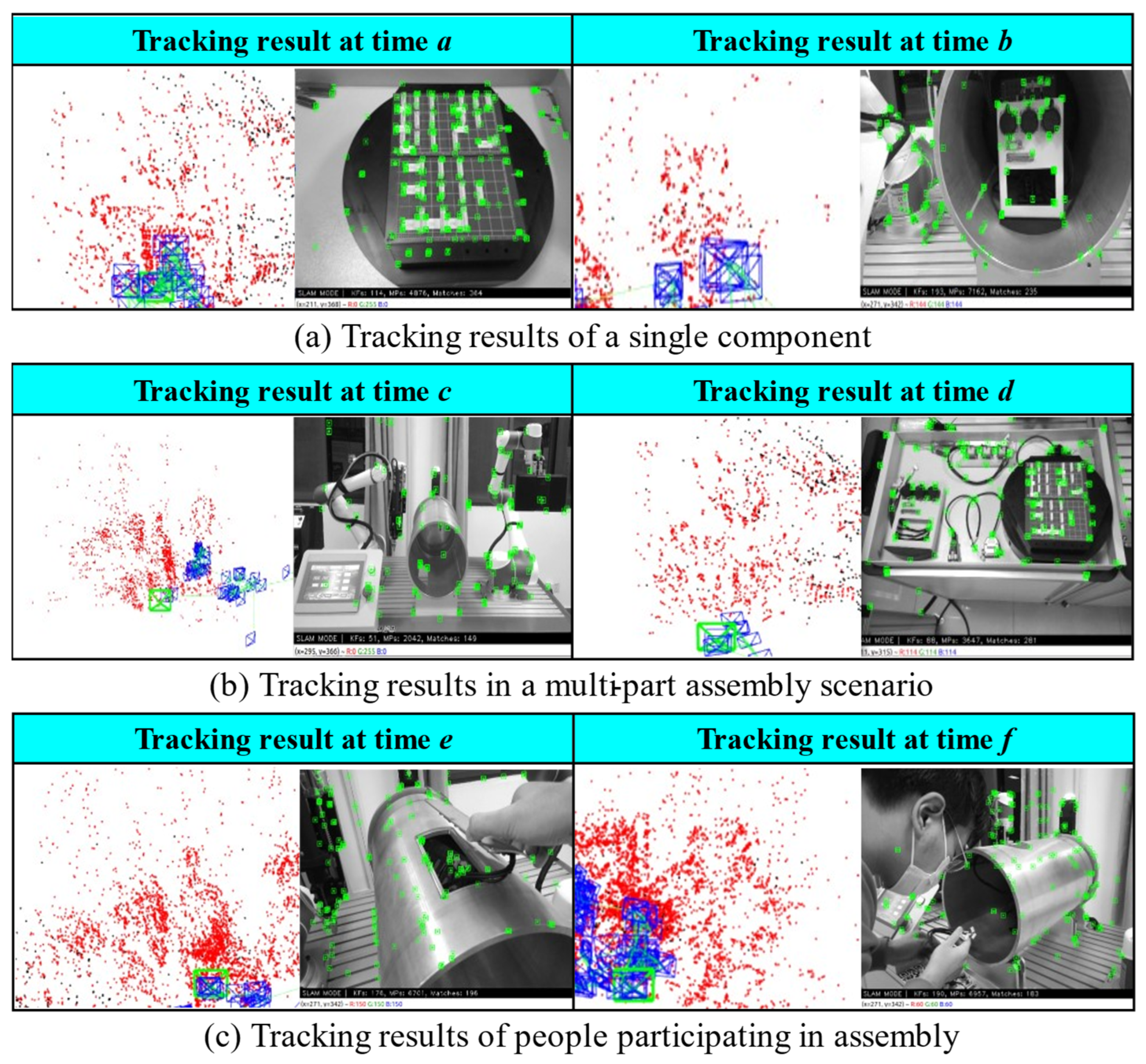

2.3. Virtual–Real Mapping and Component Tracking Registration in Assembly Scenarios

To enable AR-assisted HRC assembly, it is essential to ensure precise and stable integration of virtual scenes with real-world environments, as well as accurate virtual–real mapping of assembly components. This requires the use of 3D tracking and registration algorithms. ORB-SLAM2, one of the most widely used algorithms for 3D tracking and registration, offers high real-time performance and accuracy. However, its heavy reliance on feature point extraction and matching makes it less stable in complex, low-texture environments commonly encountered in HRC assembly tasks. To enhance the performance of 3D tracking and registration for AR-assisted HRC assembly, this study introduces improvements to the original ORB-SLAM2 algorithm, aiming to meet the requirements of virtual scene mapping and precise component tracking, as illustrated in Figure 5.

Information entropy reflects the richness of texture and pixel gradient variation within local image regions during feature extraction. It is calculated as follows:

$$H = -\sum_{i} p(x_i) \log_2 p(x_i)$$

where $p(x_i)$ denotes the probability of a pixel having grayscale value $i$ in the image. A probability close to 1 implies lower uncertainty in image information.
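The entropy measure described above can be sketched in a few lines. This assumes the standard Shannon form with a base-2 logarithm and takes a flat sequence of grayscale values as input; the function names and the block-filtering helper are illustrative, and the threshold is left as a caller-supplied parameter.

```python
import math
from collections import Counter


def image_entropy(pixels):
    """Shannon entropy, in bits, of a flat sequence of grayscale values."""
    total = len(pixels)
    if total == 0:
        raise ValueError("empty image region")
    counts = Counter(pixels)
    # p(x_i) is the fraction of pixels that take grayscale value i.
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


def blocks_above(blocks, threshold):
    """Keep only the image blocks whose entropy reaches the threshold."""
    return [block for block in blocks if image_entropy(block) >= threshold]
```

A uniform block (a single gray level) has zero entropy, while a block split evenly between two gray levels has an entropy of one bit, which is why low-texture regions fall below an entropy threshold and can be treated differently during feature extraction.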

The entropy value is closely tied to the characteristics of the assembly scene. Since images from different scenes contain varying levels of information richness, their optimal entropy thresholds differ. Therefore, fixed thresholds often fail to yield satisfactory feature extraction and matching results across varied viewing angles in HRC assembly. To address this, an adaptive entropy threshold determination method is proposed, as follows:

where $H(i)_{\mathrm{ave}}$ is the average image entropy computed from the first $i$ frames captured during the initial run in the scene, and $\delta$ is a correction factor empirically set to 0.5 to achieve effective results. The resulting $E_0$ serves as the adaptive threshold for feature extraction in the current scene.

To achieve seamless virtual–real fusion in assembly scenes, global pose estimation is required. This estimation problem is transformed into a nonlinear least squares optimization to reduce complexity and enhance precision. Using keyframes and 3D coordinates of target points from the physical environment, the Efficient Perspective-n-Point (EPnP) algorithm with Gauss–Newton optimization is employed for real-time pose estimation of the AR headset. This ensures spatial consistency between virtual models and the physical scene, enabling stable and accurate AR-assisted guidance for HRC assembly.

$$P_i^{N} = \sum_{j=1}^{4} a_{ij} C_j^{N}, \qquad \sum_{j=1}^{4} a_{ij} = 1$$

where $P_i^{N}$ represents the 3D coordinates of a target point in the real world, and $C_j^{N}$ represents the 3D coordinates of the control points. The parameter $a_{ij}$ defines the barycentric coordinates that linearly relate the target point to its associated control points. The transformation from the real world to the AR reference frame is expressed as

By solving the camera projection matrix M, real-time localization and tracking can be performed. This enhanced ORB-SLAM2-based registration algorithm thus enables reliable virtual–real mapping and supports visualized assembly guidance in AR-enhanced HRC scenarios.
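The barycentric relation between a target point and its control points, on which EPnP is built, can be illustrated with a small solver. This is a sketch under stated assumptions: four non-coplanar control points are supplied by the caller (EPnP implementations typically derive them from the centroid and principal axes of the point set), the function names are hypothetical, and the Gauss–Newton refinement and projection step are not shown.

```python
def solve_linear(a, b):
    """Solve a small dense system a @ x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Partial pivoting: pick the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("control points are degenerate (coplanar)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def barycentric(point, controls):
    """Weights a_j with point = sum_j a_j * C_j and sum_j a_j = 1."""
    # Three rows for the x, y, z components plus one row for the sum constraint.
    a = [[c[k] for c in controls] for k in range(3)] + [[1.0] * 4]
    b = list(point) + [1.0]
    return solve_linear(a, b)


def reconstruct(weights, controls):
    """Rebuild the 3D point from its barycentric weights."""
    return tuple(sum(w * c[k] for w, c in zip(weights, controls)) for k in range(3))
```

Because the weights depend only on the geometry of the points relative to the control points, they stay the same in any rigidly transformed frame, which is what lets EPnP reduce pose estimation to locating the four control points in the camera frame.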

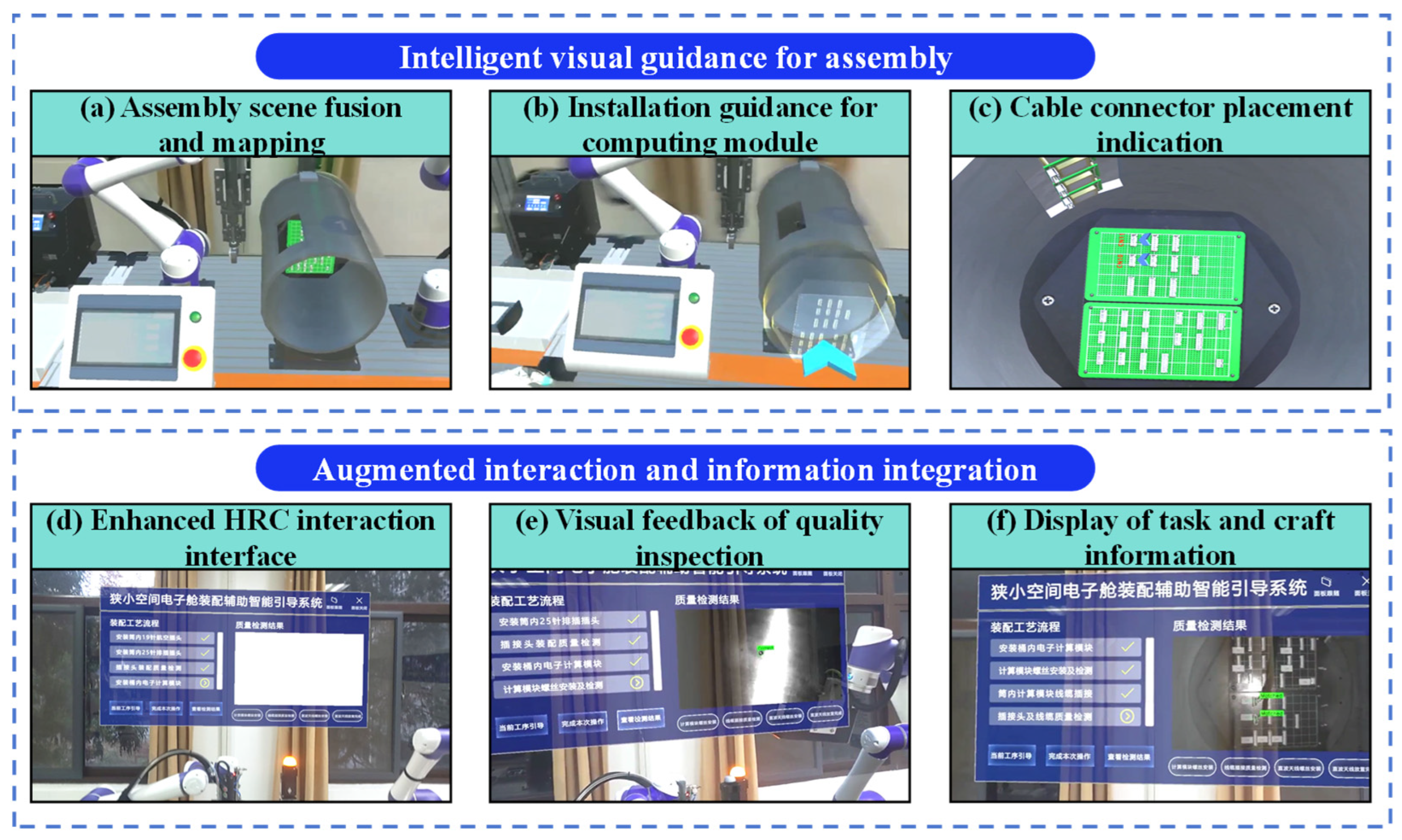

2.4. Human–Robot Augmented Interaction and Assembly Visualization Guidance

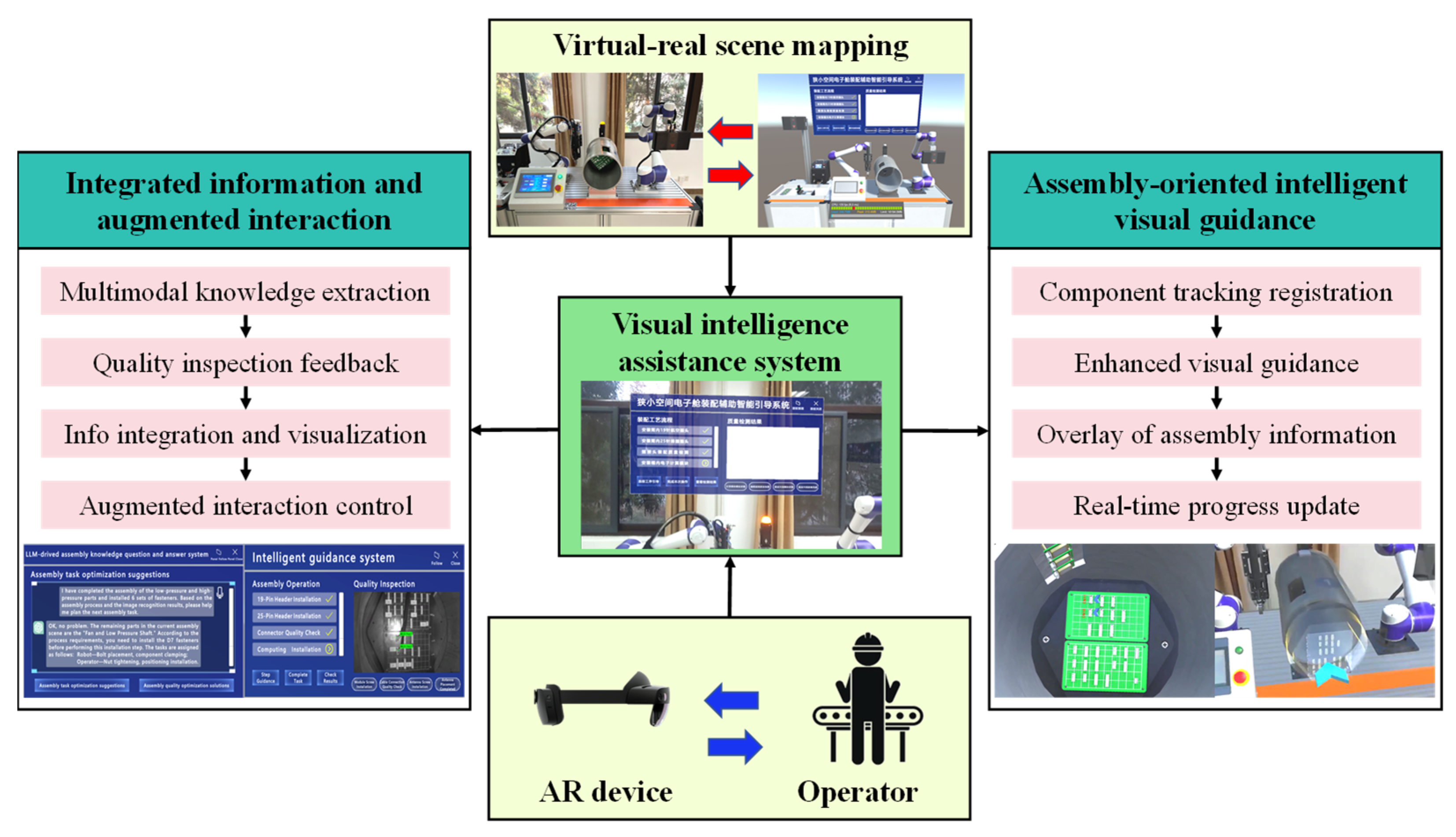

The virtual HRC assembly scenario is constructed using Unity3D v2019, in which the digital twin models of cobots and assembly components are integrated. These models can be tracked and mapped in real time through wearable AR devices, enabling seamless fusion of the physical and virtual environments. Based on the virtual–real scene mapping and component tracking registration, an HRC assembly support system is constructed, with further development of augmented interaction and intelligent guidance modules. As shown in Figure 6, the system enables the dynamic presentation of assembly knowledge and interactive control through AR devices, providing intelligent assistance for HRC and realizing visual guidance throughout the assembly process. The system first extracts multimodal information from LLM reasoning results, including assembly procedures, quality inspection standards, and collaborative action prompts, and associates this information with the current task status of the operator. Then, via the AR interface, these data are overlaid onto the actual assembly scene in the form of text prompts, 3D animations, and flow diagrams, enabling visual instruction of task information, dynamic presentation of assembly steps, and real-time feedback on quality issues. To enhance the naturalness and efficiency of interaction, operators can interact with the AR interface using voice commands, gestures, or eye movements without interrupting their ongoing tasks. This allows for quick retrieval of task information, review of past tasks, and control of cobots. Through AR technology, the system achieves seamless interaction between the LLM and the operator, effectively integrating knowledge reasoning into the assembly process. This not only improves operational efficiency and accuracy but also significantly reduces the operator’s cognitive load, enabling a more natural and efficient collaborative assembly experience.

4. Discussion

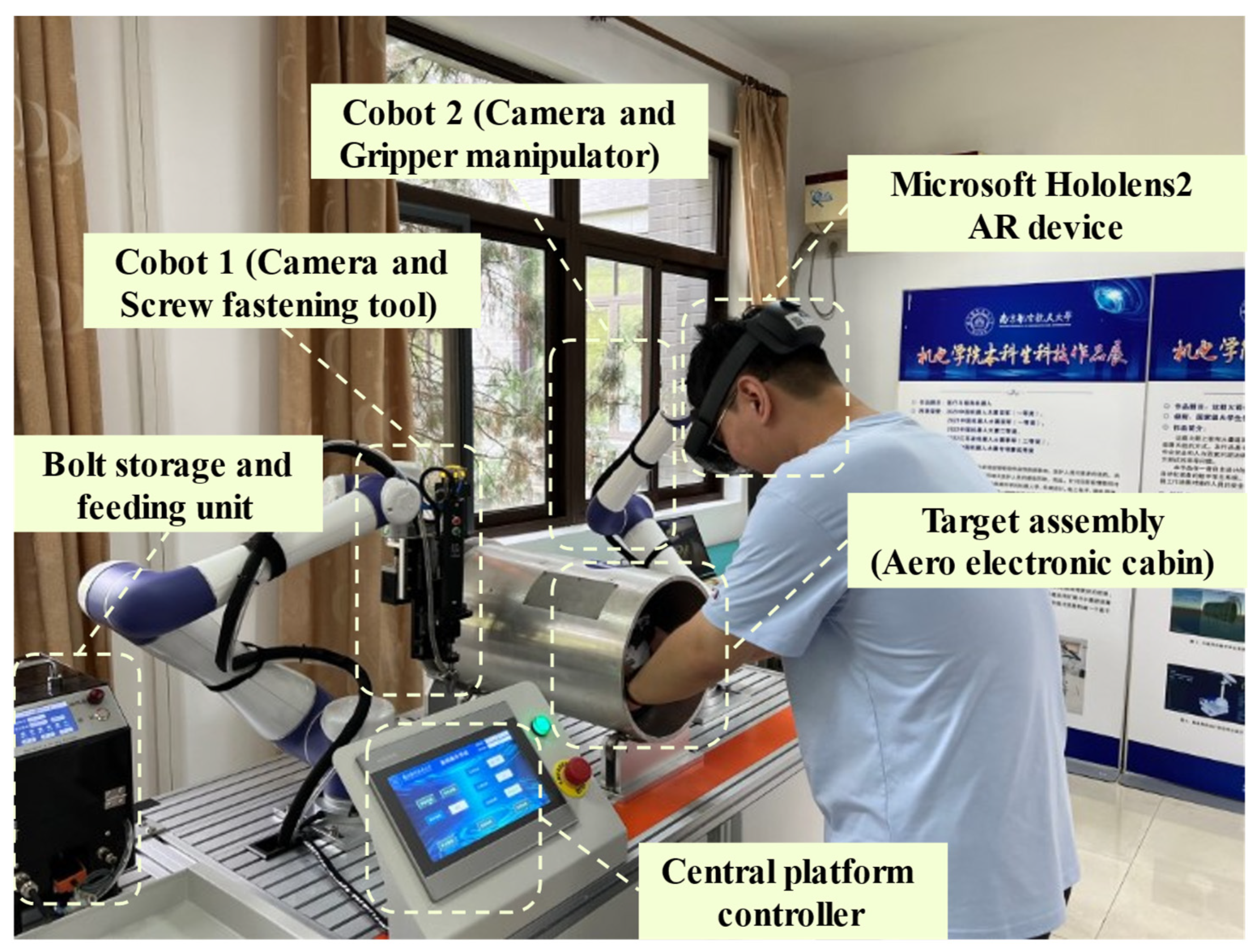

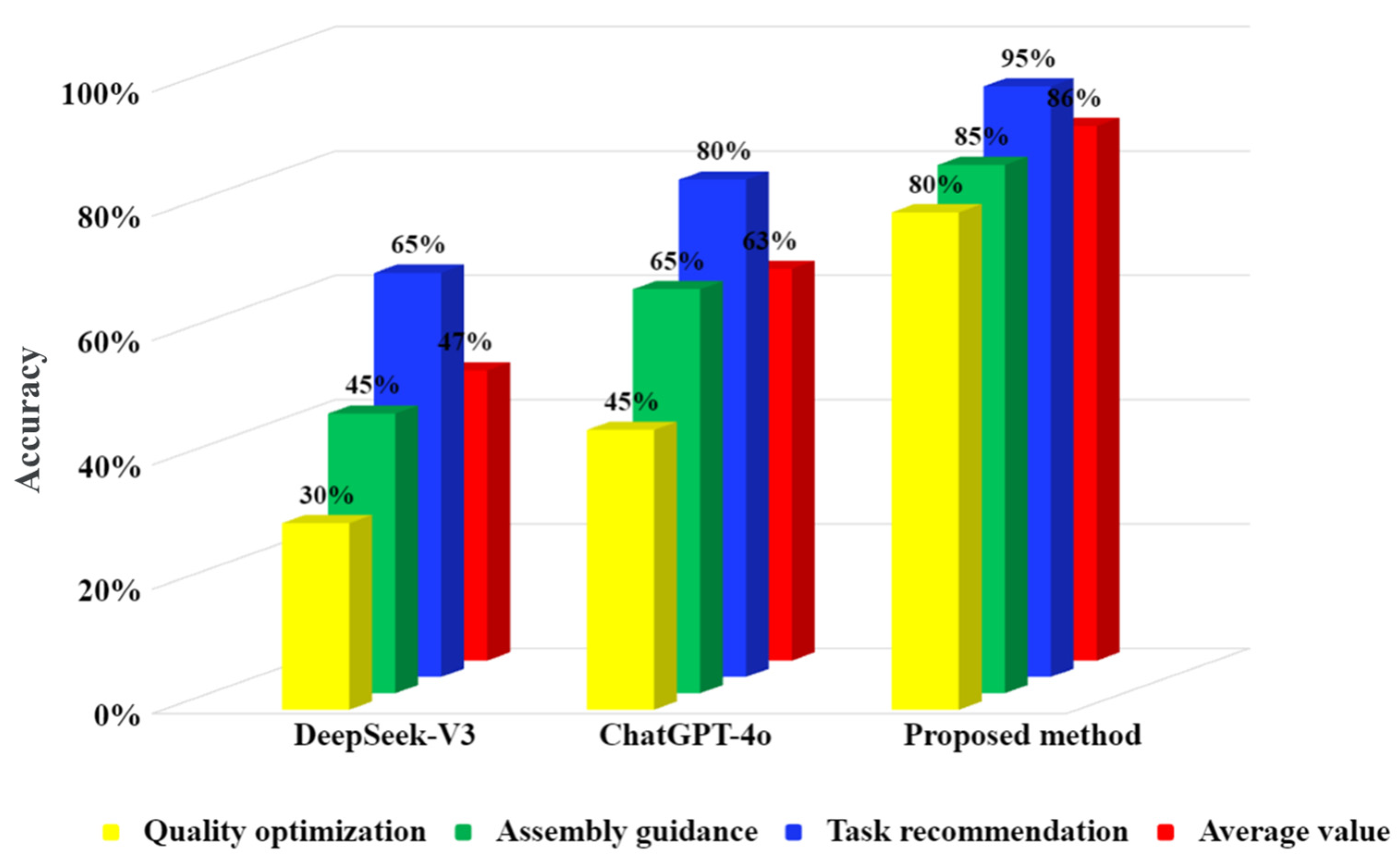

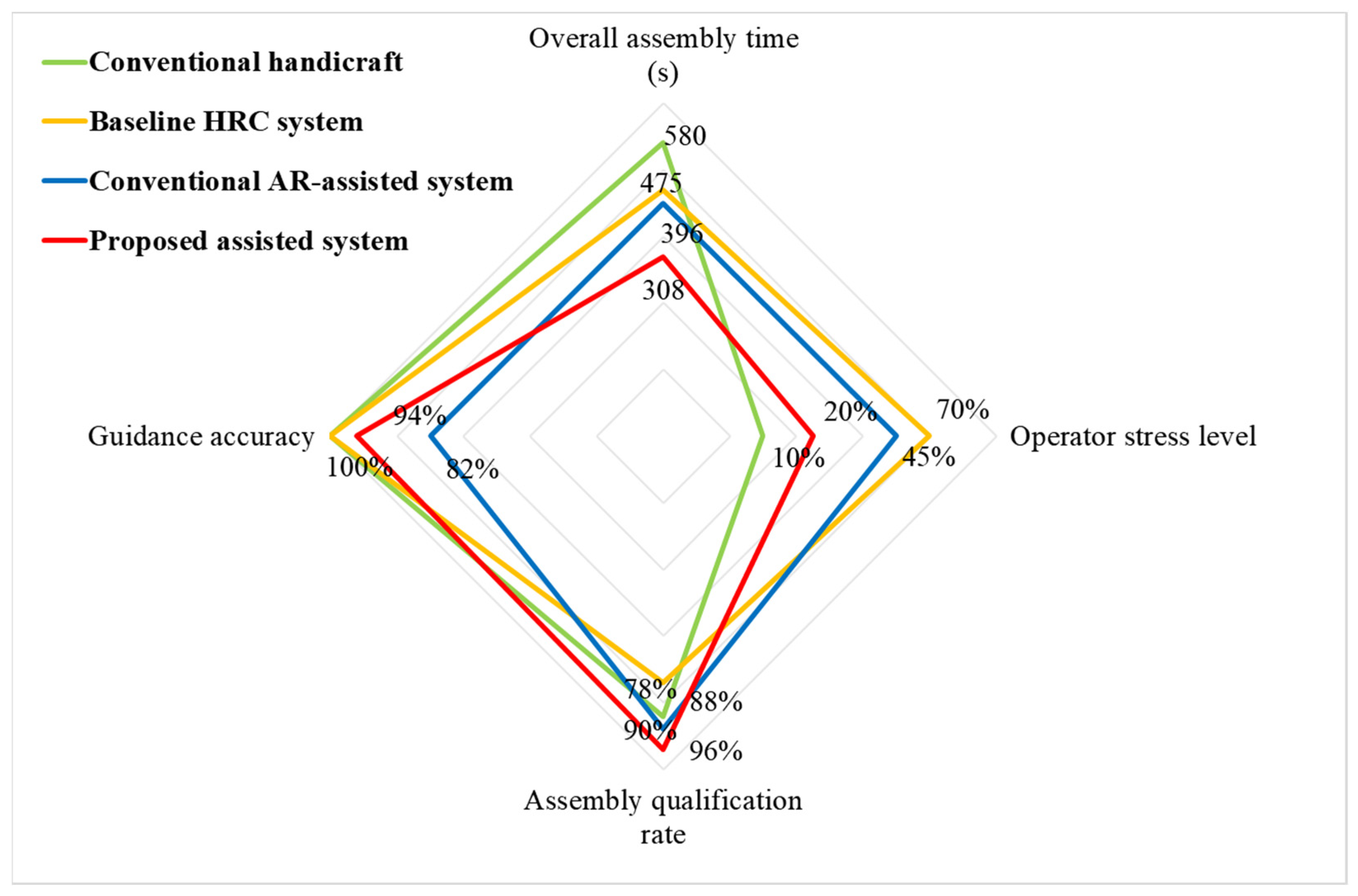

To evaluate the effectiveness of the proposed augmented intelligent HRC assembly assistance system and to demonstrate its advantages over traditional HRC approaches and conventional AR-based assistance systems, a comparative experiment was conducted using an aerospace electronic cabin assembly task. Twenty participants were randomly assigned to four groups, each performing the assembly using one of the following methods: (1) conventional manual assembly, (2) a baseline HRC system, (3) a conventional AR-based assistance system, and (4) the proposed method. The baseline HRC system refers to a setup in which only a cobot is used to assist the assembly process. The robot is equipped with a sensor-integrated end-effector capable of performing programmed material transport and screw-fastening tasks, with manual command input required via a controller. The conventional AR-assisted system, as adopted in existing studies, uses MRTK to provide assembly guidance, which is limited to visual overlays of assembly steps and requirements. For each group, we recorded the total assembly time, assembly pass rate, and guidance accuracy. In addition, participants’ stress levels were assessed using a structured questionnaire, with evaluation criteria and corresponding scores shown in Table 2. The average values for each metric across participants in a group were used as the final results, as illustrated in Figure 12. Experimental results indicate that the use of HRC systems significantly improves the assembly efficiency and pass rate compared to manual assembly, but tends to increase operator stress. In contrast, the proposed system not only reduces total assembly time and improves assembly quality but also effectively alleviates operator stress during the collaborative process. Furthermore, compared to the conventional AR assistance system, the improved tracking and registration algorithm in our method enhances guidance accuracy, reducing issues such as misassembly or omissions caused by mapping drift or tracking loss. In summary, the proposed system demonstrates notable improvements in both assembly efficiency and quality while mitigating the cognitive burden on human operators. It also exhibits strong operational stability across multiple trials, highlighting its practical applicability and robustness in complex HRC assembly tasks.

This paper proposes an enhanced intelligent HRC assembly assistance system that integrates an LLM with AR technology, significantly improving collaboration efficiency and assembly quality in complex assembly tasks. In the representative aerospace electronic cabin assembly scenario, the system effectively realizes knowledge-driven task reasoning and suggestion generation, as well as intelligent guidance based on visual interaction. Despite demonstrating strong practical value and deployment potential, the system still faces certain limitations in real-world applications. Firstly, the current approach heavily relies on the completeness and accuracy of predefined structured process templates. In scenarios with insufficient knowledge coverage or inconsistent expression, the stability of reasoning outputs may be affected. Secondly, the visual tracking component used for virtual–real mapping may experience performance degradation under dynamic occlusions or varying lighting conditions, which can impact the robustness of AR-based guidance. Future research will focus on enhancing the adaptability and generalization of large models across diverse industrial tasks. This includes introducing multimodal perception mechanisms to improve understanding of operator intent and behavioral states, as well as exploring dynamic knowledge graphs and interactive knowledge learning to strengthen the system’s self-adaptation and evolutionary capabilities.