1. Introduction

Person Re-Identification (Re-ID) [1], as a subtask of image retrieval, aims to identify and track specific individuals across non-overlapping camera views under varying perspectives, illumination conditions, and pose variations. This technology holds transformative potential for intelligent surveillance, public safety, and smart infrastructure systems, driving extensive research efforts in recent years [2,3,4]. Traditional Re-ID methodologies predominantly focus on unimodal frameworks leveraging visible-light imagery [5,6,7]. However, visible surveillance cameras suffer severe limitations in low-light and nocturnal environments, where captured images often lack the discriminative color and texture cues essential for accurate identification. In contrast, infrared cameras are inherently robust to ambient illumination, enabling person imaging even in total darkness. Modern surveillance systems increasingly integrate dual-mode sensing, switching between RGB and infrared imaging modalities, which motivates Visible-Infrared cross-modal person Re-Identification (VI-ReID) [8,9] for robust all-weather target recognition and monitoring.

VI-ReID aims to re-identify a target individual across non-overlapping camera views and across modalities, despite variations in illumination, pose, and sensor characteristics. It offers distinct advantages over traditional visible-spectrum methods, particularly under poor lighting, and the complementary nature of visible and infrared (IR) cameras expands applicability across diverse environments. However, two primary challenges hinder VI-ReID performance: intra-person variations (e.g., pose, illumination, occlusion) and cross-modal discrepancies arising from the fundamentally distinct imaging mechanisms of the visible and infrared spectra. These discrepancies manifest as significant appearance inconsistencies for the same individual across modalities. For instance, visible-light imagery relies on ambient illumination to capture surface reflectance, while infrared imagery encodes thermal radiation patterns emitted by the human body. This results in intrinsic differences in texture granularity, chromaticity, and contrast, which render traditional unimodal feature extraction and matching ineffective for cross-modal generalization. Furthermore, infrared imagery has impoverished textural and chromatic cues and is vulnerable to environmental noise. These limitations severely impede feature alignment and discriminative representation learning, necessitating new solutions to bridge the modality gap.

To address the aforementioned issues, early efforts focused on designing dual-branch architectures that extract features from each modality separately before performing cross-modal fusion. One representative method, AGW [7], introduced a two-stream network with a Weighted Regularization Triplet (WRT) loss, setting a strong baseline for subsequent studies in VI-ReID. The WRT loss was particularly effective in balancing intra-class compactness and inter-class separability under cross-spectral conditions, offering insights into loss function design for heterogeneous data learning. Another line of work explored data augmentation through synthetic image generation. Zhang et al. [10] proposed the Diverse Embedding Expansion Network (DEEN), which generates diverse visual appearances to enrich training samples. This approach not only mitigates the lack of real-world cross-modality data but also enhances the model’s robustness against illumination variations, a common challenge in low-light environments. In parallel, multi-channel input strategies have been adopted to exploit complementary information across modalities. For example, MSCMNet [11] incorporated both original and enhanced versions of visible and infrared images, enabling more discriminative feature learning through multi-scale fusion. Despite these advances, methods relying solely on global feature representations often suffer from performance degradation due to misalignment caused by pose variation and occlusion. To tackle this limitation, DDAG [12] introduced a dynamic dual-attentive aggregation mechanism that adaptively refines local features through cropping, slicing, and segmentation, thereby capturing context-aware representations that are more resilient to spatial misalignment. Fang et al. [13] proposed semantic alignment and affinity inference to address part misalignment issues. However, these methods neglect higher-order spatial relationships between body parts. Compressing full-body features into compact vectors risks diluting critical discriminative cues (e.g., distinct facial features or clothing patterns), as these details may be averaged out during dimensionality reduction.

Unlike CNNs that process features in a grid-like manner, Graph Neural Networks (GNNs) enable explicit cross-modal interactions through graph edges, allowing complementary information exchange between infrared and visible features. Moreover, their message passing mechanisms inherently preserve both local details and global contextual features during information propagation. Feng et al. [14] proposed a graph-based architecture that integrates local body-part features with relational reasoning via graph neural networks, effectively modeling both homogeneous and heterogeneous structural dependencies. However, directly applying GNNs to VI-ReID faces several challenges. First, infrared images suffer from poor parsing quality due to low contrast and missing texture details, leading to unreliable graph construction. Second, without proper guidance, information propagation in graphs may cause feature dilution rather than enhancement. Third, the semantic gap between modalities requires careful design of cross-modal graph interactions.

To address these limitations, we propose the Parsing-guided Differential Enhancement Graph Learning (PDEGL) method for VI-ReID. By constructing a Graph Neural Network (GNN), our method explicitly models high-order topological relationships among body components, enabling inter-node information propagation and adaptive feature fusion. This structured relational reasoning allows the GNN to learn feature representations that are both highly discriminative and more robust to the aforementioned cross-modal and intra-class variations. Specifically, PDEGL employs human parsing to decompose pedestrian images into constituent body parts, which are then treated as nodes within a GNN. This shifts the matching process from direct, often unstable image-feature comparisons to a more robust evaluation of spatial interdependencies among key body parts. PDEGL operates through a dual-branch architecture. The first branch processes global features extracted from the original Visible (VIS) and Infrared (IR) images using a ResNet50 backbone. These features are further refined by our Position-sensitive Spatial-Channel Attention (PSCA) module, which dynamically emphasizes the discriminative spatial regions and feature channels that are pivotal for bridging cross-modal discrepancies. The second branch focuses on part-based analysis. While the Self-Correction for Human Parsing (SCHP) [15] network achieves strong performance on visible images, IR images have low resolution, no chromatic information, and poor signal-to-noise ratios, which leads to parsing inaccuracies. To address this problem, we propose a Differential Infrared Part Enhancement (DIPE) module, inspired by analog differential amplifiers [16] that suppress common-mode noise while amplifying differential signals. The DIPE module fuses information from the raw IR image and its initial parsing result through a channel-weighted aggregation mechanism, which rectifies local parsing inaccuracies and produces more complete and accurate IR human parsing images. Subsequently, we introduce the Parsing Structural Graph (PSG) module, where the refined parts serve as GNN nodes and the edges are defined by an adjacency matrix encoding human anatomical topology. Within each node, pixel connectivity is established via an eight-connected strategy. This graph structure explicitly models the spatial distributions and interdependencies of body parts, enabling identity matching through structural consistency analysis rather than purely appearance-based comparison. Through the interaction of PSCA, DIPE, and PSG, our framework matches individuals by comparing the structural congruence of their part graphs, which leads to a more discriminative and robust person representation across visible and infrared modalities.

Our main contributions are summarized as follows:

We propose a novel Parsing-guided Differential Enhancement Graph Learning (PDEGL) method, which explicitly constructs interconnected body-component graphs of the target person. By transforming person re-identification into a high-level structural matching problem, PDEGL captures spatial configurations and inter-dependencies among anatomical regions, demonstrating exceptional robustness against inherent appearance variations in cross-modal scenarios.

We introduce a Differential Infrared Part Enhancement (DIPE) module. Inspired by the operational principles of differential amplifiers, the DIPE module fuses information from the raw infrared image and its initial parsing result via adaptive channel weighting. This mechanism is specifically designed to rectify the local inaccuracies prevalent in infrared human parsing, yielding more accurate part segmentations. To model the internal structural relationships among the parts of the human body, the proposed Parsing Structural Graph (PSG) module is then applied to the DIPE-enhanced IR human parsing images.

We design a Position-sensitive Spatial-Channel Attention (PSCA) module, integrated with the ResNet50 backbone. PSCA dynamically accentuates salient global features and informative channels, effectively mitigating cross-modal discrepancies within the global feature representations.

Extensive experimental evaluations on the challenging SYSU-MM01, RegDB, and LLCM datasets demonstrate that our proposed PDEGL method achieves highly competitive performance.

3. The Proposed Method

In this section, we introduce the proposed Parsing-guided Differential Enhancement Graph Learning (PDEGL) method in detail. First, we introduce the overall structure of the PDEGL method. Subsequently, we elaborate on the design details of the Differential Infrared Part Enhancement (DIPE) module, Parsing Structural Graph (PSG) module, and Position-sensitive Spatial-Channel Attention (PSCA) module. Lastly, we provide the total loss for our PDEGL method.

3.1. Overall Structure

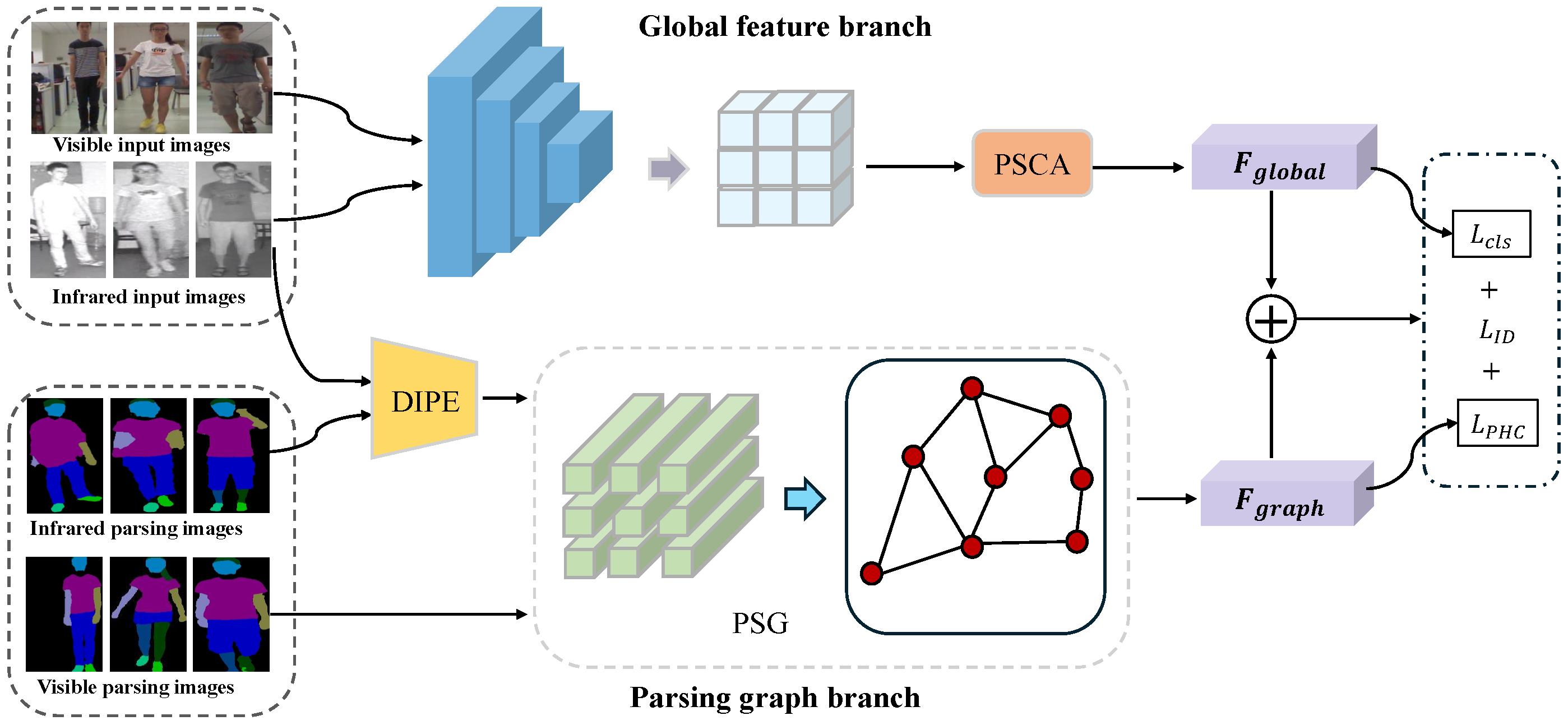

As shown in Figure 1, the proposed PDEGL method adopts a dual-branch network structure, comprising a global feature branch and a parsing graph branch. First, the input Visible (VIS) and Infrared (IR) pedestrian images are decomposed into anatomically meaningful components (e.g., head, arms, and legs) with the Self-Correction for Human Parsing (SCHP) [15] method. The global feature branch extracts global features from raw images through a ResNet50 backbone, augmented by a novel Position-sensitive Spatial-Channel Attention (PSCA) module. This mechanism dynamically focuses on the most discriminative spatial regions and channel-wise features, enhancing cross-modal feature alignment. Central to our PDEGL framework, the parsing graph branch specializes in part-based graph representation learning. Because SCHP suffers from parsing inaccuracies in IR images, owing to their low resolution and the absence of chromatic information, we introduce a Differential Infrared Part Enhancement (DIPE) module. Inspired by analog differential amplifiers, DIPE fuses raw IR inputs with preliminary parsing results via channel-wise weighted aggregation, correcting local parsing errors and refining IR part segmentation. Subsequently, these parsed components serve as nodes in the Parsing Structural Graph (PSG) module. The adjacency matrix encodes spatial topological relationships between body parts, while intra-node pixel connectivity is established through an eight-connected strategy. This GNN module explicitly models spatial distributions and interdependencies among body components, transforming person re-identification from simple feature matching into a robust structural consistency comparison. Finally, a component-level heterogeneous center loss supervises the learning of discriminative cross-modal part graphs, encouraging the alignment of VIS and IR embeddings under challenging conditions.

3.2. Differential Infrared Part Enhancement Module

When the pretrained SCHP is applied directly to infrared (IR) pedestrian images, the parsing accuracy often deteriorates significantly due to inherent limitations of IR images, such as low spatial resolution and the absence of chromatic and textural details. Such erroneous IR parsing results subsequently mislead the Graph Neural Network (GNN) learning process, compromising its ability to capture genuine human structural relationships and thereby degrading cross-modal matching performance.

To address this challenge, we propose a Differential Infrared Part Enhancement (DIPE) module, whose core design principle is inspired by the characteristics of differential amplifier circuits that amplify differential-mode signals while suppressing common-mode noise. Analogously, the DIPE module aims to intelligently integrate low-level spatial information from raw IR images with semantic part-aware features generated by the preliminary SCHP parsing. By adaptively amplifying subtle discrepancies between these two modalities, leveraging critical details in the raw IR data to rectify parsing inaccuracies, the module achieves precise correction and enhancement of the initial infrared parsing results.

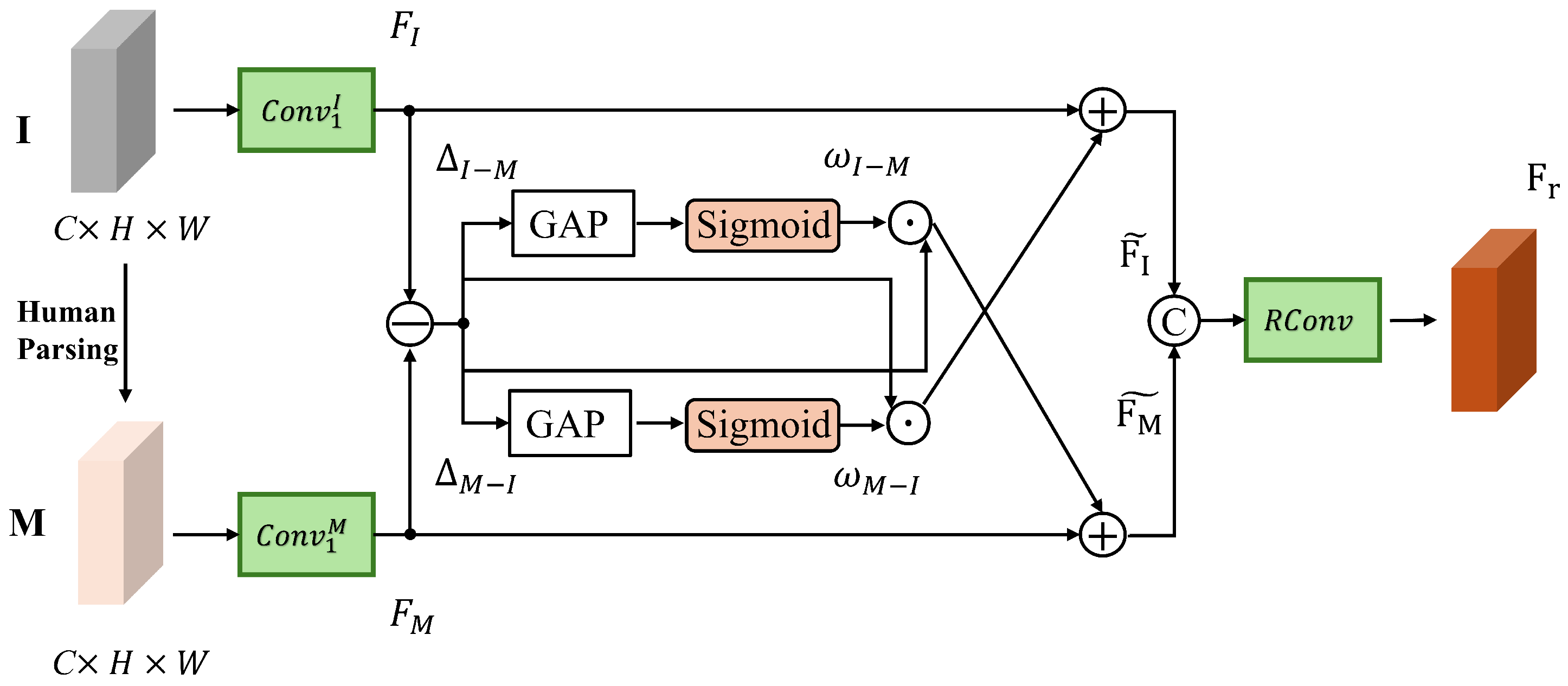

The DIPE module adopts a dual-stream parallel processing architecture, as illustrated in Figure 2. It takes the raw infrared image I and the SCHP parsed semantic map M as input, where M encodes semantic label information for 20 anatomical regions (e.g., head, upper body, pants).

In the feature encoding phase, multi-level shared-weight convolutional layers are first applied to the raw infrared image I and the parsed semantic map M, generating a feature map for each input. Subsequently, bidirectional differential signals are computed to capture complementary information between the two inputs. One differential signal highlights regions where the raw IR image contains noise or background clutter absent in the parsed map, while the other identifies body part structures missing from the raw image due to low thermal contrast. The differential signals are then compressed into global descriptors through Global Average Pooling (GAP), and adaptive channel attention weights are generated by the Sigmoid activation function.

These weights dynamically modulate feature response strengths across channels, enhancing identity-discriminative features while suppressing modality-specific artifacts. Based on them, we further construct a differential-aware feature map, in which ⊕ denotes element-wise addition and ⊙ denotes channel-wise multiplication. Through this cross-enhancement strategy, the raw-image features and the parsing features achieve complementary fusion.

Finally, after channel concatenation, the spatial resolution of the differential-aware feature map is gradually restored by a deconvolution network, which outputs the enhanced infrared human body feature map.
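The fusion step described above can be sketched in NumPy. This is only an illustration under stated assumptions: the differential signals are taken to be plain feature differences, the cross-enhancement is taken to add each stream to the channel-gated other stream, and the shared convolutional encoder and the deconvolution decoder are omitted. The names `dipe_fuse`, `f_ir`, and `f_parse` are hypothetical and do not come from the paper.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def dipe_fuse(f_ir, f_parse):
    """Fuse two (C, H, W) feature maps from the shared encoder (assumed form)."""
    # Bidirectional differential signals, assumed to be plain differences.
    d_ir = f_ir - f_parse        # content present only in the raw IR stream
    d_parse = f_parse - f_ir     # content present only in the parsing stream
    # Global average pooling gives one descriptor per channel; sigmoid gates it.
    w_ir = sigmoid(d_ir.mean(axis=(1, 2)))        # shape (C,)
    w_parse = sigmoid(d_parse.mean(axis=(1, 2)))  # shape (C,)
    # Cross-enhancement (assumed): element-wise addition of each stream with
    # the channel-wise re-weighted features of the other stream.
    e_ir = f_ir + w_parse[:, None, None] * f_parse
    e_parse = f_parse + w_ir[:, None, None] * f_ir
    # Channel concatenation; a decoder would restore the resolution afterwards.
    return np.concatenate([e_ir, e_parse], axis=0)

rng = np.random.default_rng(0)
f_ir = rng.standard_normal((4, 8, 4))
f_parse = rng.standard_normal((4, 8, 4))
fused = dipe_fuse(f_ir, f_parse)
print(fused.shape)  # (8, 8, 4)
```

When the two streams agree exactly, both differences are zero and every gate equals 0.5, so the sketch reduces to a uniform rescaling; the gates only become selective where the raw image and the parsing disagree.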

3.3. Parsing Structural Graph Module

Conventional VI-ReID approaches typically perform feature extraction on holistic body representations. However, this coarse-grained strategy fails to exploit semantic and spatial relationships between anatomical components, making it highly sensitive to pose variations and occlusions. By contrast, we propose the Parsing Structural Graph (PSG) module to construct graph-structured representations from DIPE-enhanced parsing results, explicitly modeling inter-part spatial dependencies and semantic correlations through Graph Neural Networks (GNNs). The PSG module independently processes every input pedestrian sample in a weight-sharing manner, whether derived from RGB or DIPE-enhanced infrared inputs. Specifically, it represents parsed human bodies as graphs where semantically consistent components (e.g., head, torso, upper/lower limbs) serve as nodes, while their inherent anatomical and spatial relationships define edges. This formulation enables the network to generate more robust feature representations by learning relational configurations of human body parts.

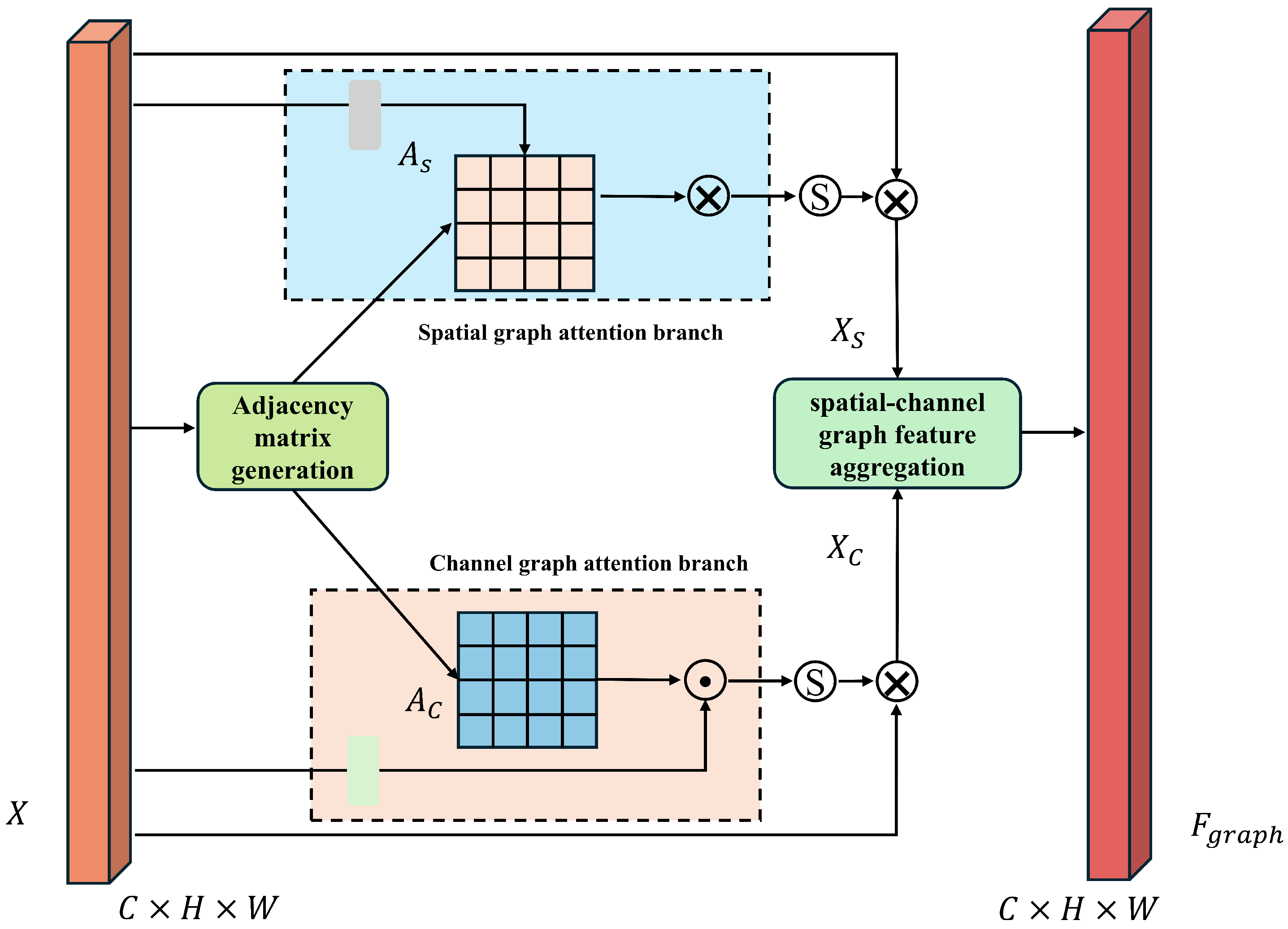

As shown in Figure 3, for each parsed pedestrian image we construct an undirected graph whose nodes represent the body semantic components. A spatial adjacency matrix is then formulated to encode the anatomical spatial topology between body parts; it is defined in terms of the set of neighbors of each node i.
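As a small illustration of such a neighbor-based adjacency matrix, the sketch below builds one for six coarse body parts. The part list, the edge list, and the inclusion of self-loops are assumptions made for this example; the paper's graph is built from the SCHP parsing labels, and its exact node set and edges are not reproduced here.

```python
# Hypothetical coarse body parts and anatomical links, for illustration only.
PARTS = ["head", "torso", "left_arm", "right_arm", "left_leg", "right_leg"]
EDGES = [
    ("head", "torso"),
    ("torso", "left_arm"),
    ("torso", "right_arm"),
    ("torso", "left_leg"),
    ("torso", "right_leg"),
]

def build_adjacency(parts, edges, self_loops=True):
    """Return a symmetric 0/1 matrix with A[i][j] = 1 when j neighbors i."""
    index = {name: i for i, name in enumerate(parts)}
    n = len(parts)
    adj = [[0] * n for _ in range(n)]
    for a, b in edges:
        i, j = index[a], index[b]
        adj[i][j] = 1
        adj[j][i] = 1  # undirected graph: mirror every edge
    if self_loops:
        for i in range(n):
            adj[i][i] = 1  # assumed: each node also attends to itself
    return adj

adjacency = build_adjacency(PARTS, EDGES)
for name, row in zip(PARTS, adjacency):
    print(f"{name:>9}: {row}")
```

In this toy topology the torso row is fully populated, while the head connects only to itself and the torso, so information from a leg can reach the head only through the torso.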

Subsequently, the spatial graph attention branch maps the input feature map to a low-dimensional space to obtain projected feature vectors, from which the spatial graph attention weights are calculated.

The core objective of the spatial graph attention branch is to dynamically assign importance to different parts based on their contextual relationships. By computing feature similarities and constraining the results with the predefined anatomical adjacency matrix, the model learns which part combinations are most critical for identity recognition. The aggregated feature is then computed from these attention weights, a scaling factor, and the spatially flattened feature vector.
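A minimal sketch of adjacency-constrained attention is given below. The scaled dot-product similarity, the masking with negative infinity before the softmax, and the residual form `x + gamma * (attn @ x)` are assumptions chosen for illustration; `masked_graph_attention`, `w_proj`, and `gamma` are hypothetical names.

```python
import numpy as np

def masked_graph_attention(x, adj, w_proj, gamma=1.0):
    """x: (K, D) node features, adj: (K, K) 0/1 matrix with self-loops,
    w_proj: (D, d) projection to a low-dimensional space."""
    z = x @ w_proj                                  # projected features (K, d)
    scores = z @ z.T / np.sqrt(z.shape[1])          # pairwise similarities
    scores = np.where(adj > 0, scores, -np.inf)     # keep anatomical neighbors only
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn = attn / attn.sum(axis=1, keepdims=True)   # row-wise softmax
    out = x + gamma * (attn @ x)                    # scaled aggregation plus input
    return out, attn

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 4))
w = rng.standard_normal((4, 2))
adj = np.array([[1, 1, 0],
                [1, 1, 1],
                [0, 1, 1]])
out, attn = masked_graph_attention(x, adj, w, gamma=0.5)
print(attn.round(3))
```

Because masked entries receive exactly zero weight, nodes that are not anatomical neighbors cannot exchange information within a single layer, which is the constraint the adjacency matrix is meant to impose.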

Figure 3. The workflow of the PSG module to construct the spatial structure relationship of human body parts.


The channel adjacency matrix models the long-range dependencies between multi-modal feature channels through a chain-like connection: it is a sparse matrix that constrains information propagation to adjacent channels. The channel graph attention branch then models pedestrian features through a channel-wise correlation matrix. In this way, it captures dependencies between feature channels, which enables the model to learn more discriminative feature combinations and adaptively enhance the channels most critical for identity recognition. After the channel adjacency matrix is applied as a constraint, the channel graph attention weights are obtained through normalization, and the channel graph feature map is computed by weighted aggregation.
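The chain-like channel constraint can be illustrated as follows. The sketch assumes that "adjacent channels" means index distance at most one (including the channel itself) and that normalization is a row-wise softmax; `chain_adjacency` and `channel_graph_attention` are hypothetical helper names.

```python
import numpy as np

def chain_adjacency(num_channels):
    """Tridiagonal 0/1 matrix: channel i connects to i-1, i, and i+1."""
    idx = np.arange(num_channels)
    return (np.abs(idx[:, None] - idx[None, :]) <= 1).astype(float)

def channel_graph_attention(x):
    """x: (C, N) features with one row per channel."""
    adj = chain_adjacency(x.shape[0])
    corr = x @ x.T                                   # (C, C) channel correlation
    corr = np.where(adj > 0, corr, -np.inf)          # keep adjacent channels only
    corr = corr - corr.max(axis=1, keepdims=True)    # numerical stability
    attn = np.exp(corr)
    attn = attn / attn.sum(axis=1, keepdims=True)    # row-wise normalization
    return attn @ x, attn                            # weighted aggregation

rng = np.random.default_rng(1)
feat = rng.standard_normal((4, 6))
chan_out, chan_attn = channel_graph_attention(feat)
print(chain_adjacency(4))
```

With a single layer each channel only mixes with its two neighbors; dependencies over longer channel distances would have to emerge from stacking layers or from the parallel attention heads.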

At this point, the spatial and channel graph attention branches have jointly captured the local spatial dependencies and channel correlations among the parts of the human body. Spatial-channel graph feature aggregation is then carried out: the score of each adjacent node is calculated and normalized, and the node features are updated by aggregating the neighbor information.

After the single-layer graph attention mechanism is constructed, multiple parallel and independent attention heads are used to capture feature relationships from different subspaces. The results obtained from all attention heads are concatenated to form the final graph feature output.

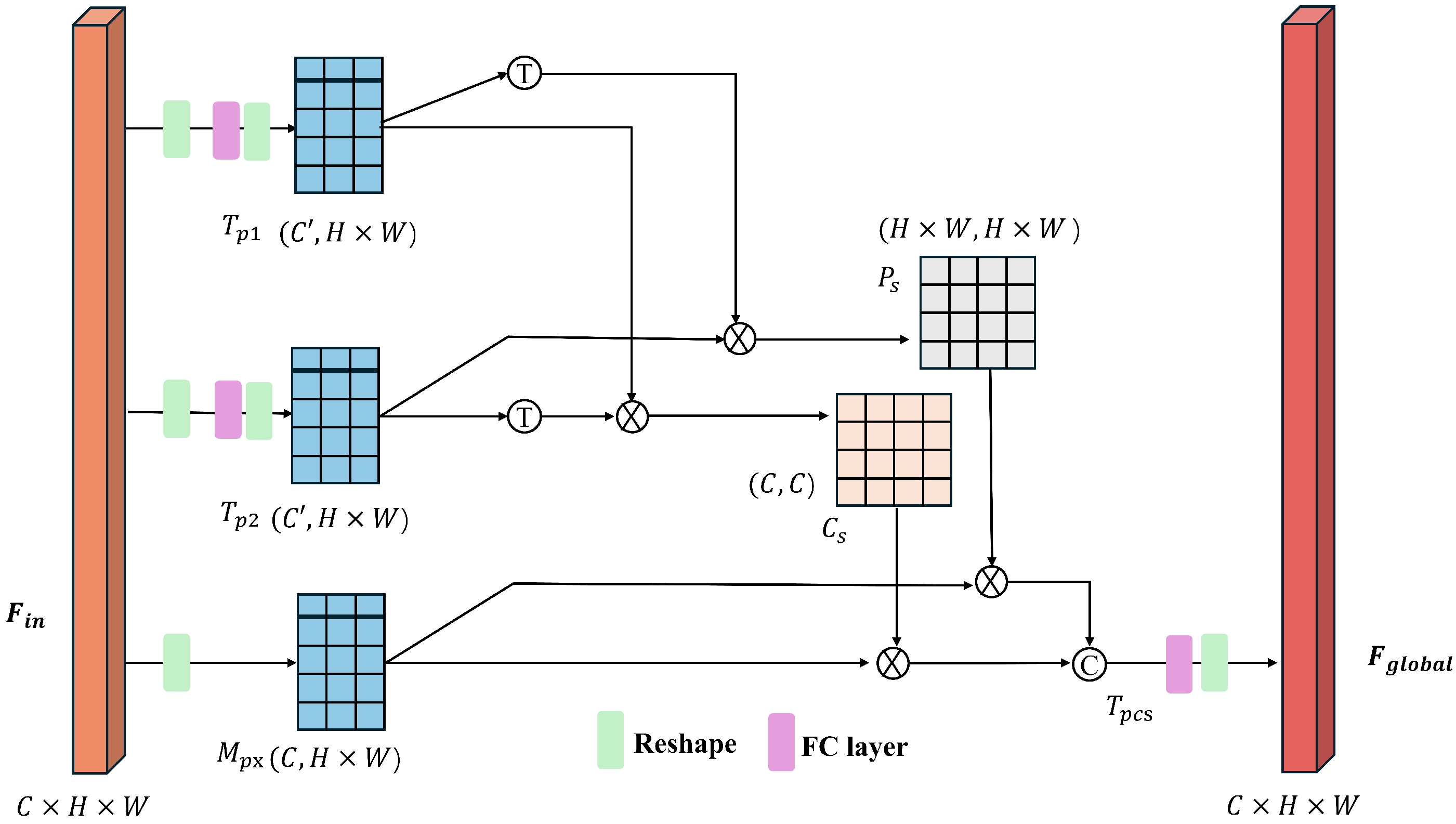

3.4. Position-Sensitive Spatial-Channel Attention Module

Due to the inherent differences between imaging modalities in VI-ReID tasks, the appearance features of pedestrians captured in the infrared and visible modalities are highly heterogeneous. Although the proposed PDEGL method can learn the structural correspondence among the parts of the human body through the PSG module introduced above, global features remain an important complement that provides overall context information. However, the global features extracted directly from the ResNet50 backbone cannot fully adapt to the large differences between modalities, which limits their effectiveness in cross-modal matching.

To solve this problem and enhance the representation ability of the global feature branch, we design the Position-sensitive Spatial-Channel Attention (PSCA) module. It enables the attention mechanism to adaptively focus on the most important spatial dependencies and feature channels according to the spatial region of the feature map. The core idea is to decompose the feature map into multiple patches and to perform attention operations independently in the spatial and channel dimensions within each patch, so that the feature enhancement strategy is position-aware. The structure of the PSCA module is shown in Figure 4.

Specifically, the similarity between any two locations in the input feature map is first calculated, and the importance weight of each location is then dynamically adjusted based on this similarity to refine the final feature representation. To make better use of the spatial and channel information in the input feature map, it is first reshaped by the convolutional layer. Each resulting matrix is then uniformly divided by column into patches, which are processed in parallel and converted into three tensors, where N stands for the number of image patches. Next, the associations between spatial locations and between channels are captured by a position self-correlation matrix and a channel self-correlation matrix, both computed for each patch i.

The position self-correlation matrix represents the pairwise relationship between different positions in the feature map, and its elements indicate the importance of one position relative to another. Similarly, each element of the channel self-correlation matrix represents the importance of one channel relative to another. The original input feature of the patch is weighted by its position self-correlation matrix and its channel self-correlation matrix, so that the position and channel relationships of the input feature map are merged.

This operation fuses the salient features extracted by channel attention and spatial attention to form a holistic enhanced representation for the current image patch. Finally, the feature maps of all patches are aggregated to obtain the final output of the global feature branch, which is a global feature map that integrates position-sensitive spatial and channel context information.
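The patch-wise attention can be sketched as below. Several choices here are assumptions rather than details from the paper: the convolutional projections are replaced by the identity, "by column" is read as splitting along the width axis, both self-correlation matrices are softmax-normalized inner products, and the two attended features are summed with the input. The function name `psca` is hypothetical.

```python
import numpy as np

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def psca(x, num_patches):
    """x: (C, H, W) feature map; W must be divisible by num_patches."""
    c = x.shape[0]
    outputs = []
    for patch in np.split(x, num_patches, axis=2):   # column-wise patches
        v = patch.reshape(c, -1)                     # (C, L) with L = H * W / N
        pos = softmax(v.T @ v)                       # (L, L) position self-correlation
        chn = softmax(v @ v.T)                       # (C, C) channel self-correlation
        # Position-attended plus channel-attended features plus the input itself.
        enhanced = v @ pos.T + chn @ v + v
        outputs.append(enhanced.reshape(patch.shape))
    return np.concatenate(outputs, axis=2)           # reassemble the full map

rng = np.random.default_rng(2)
x = rng.standard_normal((4, 6, 4))
y = psca(x, num_patches=2)
print(y.shape)  # (4, 6, 4)
```

Restricting the position matrix to one patch keeps it at size L × L instead of (H·W) × (H·W), which is one practical motivation for computing attention per region rather than over the whole map.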

3.5. Loss Function

Aligning and discriminating cross-modal features at the component level is the key to supervising the training of our proposed PDEGL method. This means minimizing the feature differences between corresponding components of the same pedestrian in different modalities, while increasing the feature differences between corresponding components of different pedestrians. Specifically, we maintain a learnable central prototype for each human body component of each identity in the training set, with the same dimension as the feature of a single component. These central prototypes are updated along with the network parameters during the training process. Based on this, we design the Parsing-level Hetero-Center Loss. It is computed from the enhanced feature of the kth component of the ith sample output by the PSG module, the identity label of the ith sample, and a preset margin parameter M that ensures sufficient spacing between the component centers of different identities.

One term of this loss pulls the corresponding components of the same identity towards the shared component center in the feature space, thereby reducing cross-modal differences. The other term enhances the discriminative power of component-level features by pushing apart the component centers of different identities. In this way, the loss optimizes the feature space at the component level, enabling the model to learn fine-grained representations that are robust to modality changes and sensitive to identities.
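A component-level center loss with a pull term and a margin-based push term can be sketched as follows. The squared-distance pull, the hinge on center-to-center distance, and the unweighted sum of the two terms are assumptions for illustration; the function name and argument layout are hypothetical.

```python
import numpy as np

def parsing_hetero_center_loss(feats, labels, centers, margin):
    """feats: (B, K, D) component features, labels: (B,) identity indices,
    centers: (num_ids, K, D) learnable prototypes, margin: scalar."""
    # Pull term: each component feature moves towards its identity's center.
    pull = np.mean(np.sum((feats - centers[labels]) ** 2, axis=-1))
    # Push term: centers of different identities are kept at least `margin` apart.
    ids = np.unique(labels)
    push_terms = []
    for a in range(len(ids)):
        for b in range(a + 1, len(ids)):
            dist = np.linalg.norm(centers[ids[a]] - centers[ids[b]], axis=-1)  # (K,)
            push_terms.append(np.maximum(0.0, margin - dist))
    push = float(np.mean(push_terms)) if push_terms else 0.0
    return float(pull) + push

centers = np.array([[[0.0, 0.0]], [[10.0, 0.0]]])   # 2 identities, 1 component
labels = np.array([0, 1, 0, 1])
feats = centers[labels].copy()                       # features sit on their centers
print(parsing_hetero_center_loss(feats, labels, centers, margin=1.0))   # 0.0
print(parsing_hetero_center_loss(feats, labels, centers, margin=12.0))  # 2.0
```

In the toy example the centers are 10 apart, so a margin of 1 is already satisfied and the loss vanishes, whereas a margin of 12 leaves a violation of 2. Since the pull term does not distinguish modalities, VIS and IR features of the same identity are drawn to the same prototype.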

Furthermore, we design a novel sample classification center loss to enhance the model's global sensitivity to target pedestrians in different modalities while promoting the close aggregation of samples of the same identity.

The pedestrian identity constraint loss measures the difference between the identity labels predicted by the model and the true labels, using the cross-entropy loss.

Ultimately, the overall loss function adopted for training our PDEGL method is the weighted sum of the Parsing-level Hetero-Center Loss, the sample classification center loss, and the pedestrian identity constraint loss.

4. Experiments

4.1. Datasets

We conducted extensive experiments on three widely used datasets in the VI-ReID task, SYSU-MM01 [8], RegDB [9], and LLCM [10], to verify the effectiveness of our proposed PDEGL method. The datasets are schematically shown in Figure 5 and detailed below.

SYSU-MM01. The SYSU-MM01 dataset contains images in both visible (RGB) and infrared (IR) modalities. The captured images are taken by six cameras, four of which were RGB cameras and two were IR cameras. The images are captured in different indoor and outdoor scenes, including bright indoor environments and dark outdoor environments. The researchers captured a total of 45,863 images from 491 pedestrians, of which 30,071 are RGB images and 15,792 are IR images.

RegDB. The RegDB dataset was collected with a dual-modal acquisition system comprising both visible and infrared cameras. It covers 412 pedestrian identities (158 male and 254 female), with 10 visible and 10 infrared images per identity, totaling 8240 images. In terms of viewing angle, 156 of the 412 identities were captured from the front and the remaining 256 from the back.

LLCM. The LLCM dataset contains 7800 pedestrian RGB images and 7800 IR images, totaling 15,600 images. The dataset provides 390 pedestrian identities, and each pedestrian identity has 20 images in each of the above two modalities. The pedestrian images in it are collected by two cameras with overlapping perspectives, and pedestrians walk between the two cameras. It is one of the larger cross-modal pedestrian re-identification datasets at present, providing important data support for research in the field of cross-modal pedestrian re-identification.

Evaluation Metrics. We use the Cumulative Matching Characteristics (CMC) and the mean Average Precision (mAP) as evaluation metrics when comparing the performance of the PDEGL method with other SOTA methods.
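For reference, both metrics can be computed from ranked gallery lists as in the sketch below. It is a simplified illustration: dataset-specific protocol details, such as camera filtering and repeated gallery sampling, are omitted, and the function names are hypothetical.

```python
def rank_k_and_ap(query_id, ranked_gallery_ids, ks=(1, 5)):
    """Return CMC hits at each k and the average precision for one query."""
    hits = [gid == query_id for gid in ranked_gallery_ids]
    # CMC at k: 1 if a correct match appears within the top-k results.
    cmc = {k: float(any(hits[:k])) for k in ks}
    # Average precision: mean of the precision values at each correct match.
    num_hits, precisions = 0, []
    for rank, hit in enumerate(hits, start=1):
        if hit:
            num_hits += 1
            precisions.append(num_hits / rank)
    ap = sum(precisions) / len(precisions) if precisions else 0.0
    return cmc, ap

def evaluate(rankings, ks=(1, 5)):
    """rankings: list of (query_id, ranked_gallery_ids) pairs."""
    results = [rank_k_and_ap(q, g, ks) for q, g in rankings]
    cmc = {k: sum(c[k] for c, _ in results) / len(results) for k in ks}
    mean_ap = sum(ap for _, ap in results) / len(results)
    return cmc, mean_ap

rankings = [
    (7, [3, 7, 7, 5]),   # first correct match at rank 2
    (2, [2, 9, 9, 9]),   # correct match at rank 1
]
cmc, mean_ap = evaluate(rankings)
print(cmc)      # {1: 0.5, 5: 1.0}
print(mean_ap)  # (7/12 + 1) / 2 = 0.7916...
```

Rank-1 only rewards the top result, whereas mAP also reflects how highly all remaining correct gallery images are ranked, which is why the two numbers can diverge for the same method.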

4.2. Implementation Details

Our proposed PDEGL method was implemented with the PyTorch framework [50] on an NVIDIA 4090 GPU. Following [7,51], a ResNet50 [52] pre-trained on ImageNet was employed as the backbone for global feature extraction. We removed the final fully connected classification layer and used the feature map output by layer4. All input images were resized to a fixed resolution. For data augmentation, we adopted common strategies, including random horizontal flipping and random erasing [Random Erasing Data Augmentation]. Each training batch contained 8 randomly selected pedestrian identities with 8 different images per identity, a sampling scheme designed to reflect the diversity of pedestrians in real scenes. The entire network was trained end-to-end using the AdamW optimizer with weight decay. A cosine annealing learning rate schedule was employed over a total of 120 epochs, with a warm-up strategy for the first 10 epochs during which the learning rate was increased linearly.
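The schedule described above (10 warm-up epochs with a linear increase, then cosine annealing up to epoch 120) can be sketched as a plain function. The learning-rate values passed in the example are placeholders, not the paper's settings, and two details are assumptions: the cosine phase is taken to span the epochs after warm-up, and the rate is taken to decay to `min_lr = 0`.

```python
import math

def lr_at_epoch(epoch, base_lr, warmup_start_lr,
                warmup_epochs=10, total_epochs=120, min_lr=0.0):
    """Learning rate at a given epoch for linear warm-up plus cosine annealing."""
    if epoch < warmup_epochs:
        # Linear ramp from warmup_start_lr to base_lr.
        frac = epoch / warmup_epochs
        return warmup_start_lr + frac * (base_lr - warmup_start_lr)
    # Cosine decay from base_lr to min_lr over the remaining epochs.
    progress = (epoch - warmup_epochs) / (total_epochs - warmup_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

# Placeholder values chosen only to make the curve easy to read.
for epoch in (0, 5, 10, 65, 120):
    print(epoch, round(lr_at_epoch(epoch, base_lr=1.0, warmup_start_lr=0.1), 4))
```

Warm-up avoids large early updates to the pre-trained backbone while the newly initialized modules are still far from a useful solution; the cosine phase then lowers the step size smoothly instead of in discrete drops.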

4.3. Comparison with State-of-the-Art Methods

In this section, to verify the effectiveness of the proposed PDEGL method, we compare it with several SOTA methods under the same conditions described in Section 4.2 on the SYSU-MM01, RegDB, and LLCM datasets. Experimental results show that our proposed Parsing-guided Differential Enhancement Graph Learning (PDEGL) method achieves excellent performance on all three datasets.

Comparison on SYSU-MM01. As shown in Table 1, the proposed PDEGL method demonstrates highly competitive performance on SYSU-MM01. In the All search mode, our method achieves 75.2% mAP and 74.1% Rank-1 accuracy. Under the Indoor search setting, PDEGL achieves 86.4% mAP and 82.8% Rank-1 accuracy. These results are well ahead of the second-best method, DEEN, and more than 10% ahead of PMWGCN, which also adopts a graph learning strategy, demonstrating the ability of PDEGL to learn discriminative features in complex indoor environments. This advantage stems from the proposed Differential Infrared Part Enhancement (DIPE) module and the GNN-based high-order relation modeling, which alleviate the problems of infrared parsing errors and modality differences.

Comparison on RegDB. On the RegDB dataset, our proposed PDEGL method achieves 87.2% mAP and 91.3% Rank-1 in the visible to infrared (VIS to IR) mode. The Rank-1 accuracy is only 0.3% below that of MMN. Similar results are observed in the infrared to visible (IR to VIS) mode. RegDB is characterized by large differences between modalities but aligned viewing angles. We therefore attribute this performance to the proposed Position-sensitive Spatial-Channel Attention (PSCA) module, which effectively achieves local-global alignment of cross-modal features.

Comparison on LLCM. On the challenging LLCM dataset, facing complex posture change and occlusion scenes, our method demonstrates consistent superiority. The PDEGL method achieves 67.6% mAP and 63.2% Rank-1 on the visible to infrared (VIS to IR) mode and 63.4% mAP and 55.2% Rank-1 on the infrared to visible (IR to VIS) mode. This is attributed to our graph learning strategy to explicitly model the spatial topological relationships of human body parts, significantly enhancing the robustness of the network against occlusion and pose changes.

4.4. Ablation Study

In this section, we follow the experimental configuration in Section 4.2 and conduct a systematic ablation study of the proposed components on the SYSU-MM01 and RegDB datasets. We choose ResNet-50, following the settings of AGW [7], as the baseline. The detailed experimental results are presented in Table 2.

Effectiveness of PSCA. To verify the effectiveness of the PSCA module, we integrated it into the baseline, resulting in “+PSCA”, as shown in Table 2. Specifically, in the “VIS to IR” (“IR to VIS”) mode on the RegDB dataset, PSCA improves mAP by 3.3% (4.5%) and Rank-1 by 6.2% (4.9%) compared with the baseline. Similar improvements can also be clearly observed on the SYSU-MM01 dataset. These results confirm the effectiveness of PSCA. By establishing a position-channel cooperative perception mechanism, PSCA increases the weight contrast of salient regions in pedestrian images while avoiding feature confusion between modalities, providing an effective path for cross-modal feature learning.

Effectiveness of PSG. To verify the effectiveness of our Parsing Structural Graph (PSG) module, we integrate it with the baseline as “+PSG” in Table 2. PSG treats each parsed human body part as a node in a graph and encodes the spatial connection topology between parts, so that target pedestrians can be matched on the basis of high-dimensional structural relationships. Specifically, under the “All search” (“Indoor search”) mode on the SYSU-MM01 dataset, it improves mAP by 11.1% (9.9%) and Rank-1 by 4.2% (3.1%) over the baseline. These results demonstrate the validity of the PSG module.
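To make the idea of treating parsed body parts as graph nodes concrete, the following is a minimal sketch under stated assumptions. The part list and edge list are hypothetical placeholders, and the propagation step uses the standard symmetric normalization with self-loops from graph convolutional networks, without learned weights. It is not the PSG module itself, whose exact node set and update rule are not reproduced here.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical body parts and skeleton-like connections (assumed for illustration).
PARTS = ["head", "torso", "arms", "legs", "feet"]
EDGES = [("head", "torso"), ("torso", "arms"), ("torso", "legs"), ("legs", "feet")]


def normalized_adjacency(
    parts: Sequence[str], edges: Sequence[Tuple[str, str]]
) -> List[List[float]]:
    """Build D^{-1/2} (A + I) D^{-1/2} for an undirected part graph."""
    n = len(parts)
    index = {name: i for i, name in enumerate(parts)}
    # Start from the identity matrix so every node keeps its own feature.
    adj = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for a, b in edges:
        i, j = index[a], index[b]
        adj[i][j] = adj[j][i] = 1.0
    degree = [sum(row) for row in adj]
    return [
        [adj[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
        for i in range(n)
    ]


def propagate(
    norm_adj: List[List[float]], features: List[List[float]]
) -> List[List[float]]:
    """One message-passing step: each part aggregates its neighbours' features."""
    n, dim = len(features), len(features[0])
    return [
        [sum(norm_adj[i][k] * features[k][d] for k in range(n)) for d in range(dim)]
        for i in range(n)
    ]


norm_adj = normalized_adjacency(PARTS, EDGES)
# One-dimensional toy features: only the head carries a signal.
updated = propagate(norm_adj, [[1.0], [0.0], [0.0], [0.0], [0.0]])
```

After one step the head signal reaches only the torso, its direct neighbour, which illustrates how the connection topology constrains which parts exchange information.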

Effectiveness of DIPE. To verify the effectiveness of our Differential Infrared Part Enhancement (DIPE) module, we evaluate “+DIPE”, where only DIPE is added to the baseline. As shown in Table 2, DIPE alone achieves consistent improvements: gains of 1.8%/4.0% (mAP/Rank-1) on RegDB VIS to IR, and a 6.0% mAP improvement on SYSU-MM01 All search. The full model is reported in the last row as “+PSCA+PSG+DIPE”. Compared with “+PSCA+PSG” in the “VIS to IR” (“IR to VIS”) mode on the RegDB dataset, mAP and Rank-1 improve by 0.9% (1.2%) and 2.6% (1.7%), respectively. The refined parsing serves as better input for the subsequent graph modules, as evidenced by the substantial performance boost in the full model (75.2% mAP). These results demonstrate that the DIPE module effectively compensates for the parsing errors inherent in IR imaging by fusing complementary information from the original and parsed infrared images, significantly improving the quality of part-level graph representations for our PDEGL method.

Effectiveness of loss function. Here, we conduct a detailed ablation study on the SYSU-MM01 dataset under the All search mode to verify the validity of the designed loss function. As shown in Table 3, the proposed PDEGL method performs poorly when using only the identity loss. After introducing the sample classification center loss, the performance of PDEGL improves significantly: mAP and Rank-1 improve by 4.5% and 5.6%, respectively. This indicates that the discriminative ability of the model for sample classification has been effectively enhanced. Then, we adopt the designed Parsing-level Hetero-Center Loss to supervise the PDEGL method under the graph learning strategy. The metrics of mAP and Rank-1 improve by 7.4% and 10.1%, respectively, compared with

. It can be confirmed that the Parsing-level Hetero-Center Loss reduces the feature differences between corresponding body parts of the same pedestrian across modalities while increasing the feature differences between corresponding parts of different pedestrians. Finally, according to our design of the loss function, the PDEGL method performs best with the adoption of

and

.
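As an illustration of the cross-modality effect described above, the following sketch computes only the pull term of a hetero-center-style loss applied per body part: for each identity, the visible and infrared centers of every part are computed and their squared Euclidean distance is averaged. This is a simplified assumption for illustration; the term that separates different pedestrians and the exact weighting used in PDEGL are omitted, and all names and the data layout are hypothetical.

```python
from typing import Dict, List

Vector = List[float]
PartFeatures = List[Vector]  # one feature vector per body part for one image


def mean_vector(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    count = len(vectors)
    return [sum(v[d] for v in vectors) / count for d in range(len(vectors[0]))]


def part_centers(samples: List[PartFeatures]) -> List[Vector]:
    """Center of each body part over all images of one identity in one modality."""
    num_parts = len(samples[0])
    return [mean_vector([sample[p] for sample in samples]) for p in range(num_parts)]


def part_level_center_pull(
    visible: Dict[int, List[PartFeatures]],
    infrared: Dict[int, List[PartFeatures]],
) -> float:
    """Mean squared distance between visible and infrared part centers.

    Only identities present in both modalities contribute.
    """
    total, count = 0.0, 0
    for person_id in visible.keys() & infrared.keys():
        vis_centers = part_centers(visible[person_id])
        ir_centers = part_centers(infrared[person_id])
        for vis_c, ir_c in zip(vis_centers, ir_centers):
            total += sum((a - b) ** 2 for a, b in zip(vis_c, ir_c))
            count += 1
    return total / count if count else 0.0


# Toy batch: one identity, two parts, two-dimensional features (illustrative only).
toy_visible = {1: [[[0.0, 0.0], [1.0, 1.0]], [[2.0, 0.0], [1.0, 3.0]]]}
toy_infrared = {1: [[[1.0, 0.0], [1.0, 0.0]]]}
pull = part_level_center_pull(toy_visible, toy_infrared)
```

Minimizing such a term moves the two modality centers of each part toward each other, which corresponds to reducing the feature differences of corresponding parts of the same pedestrian across modalities.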

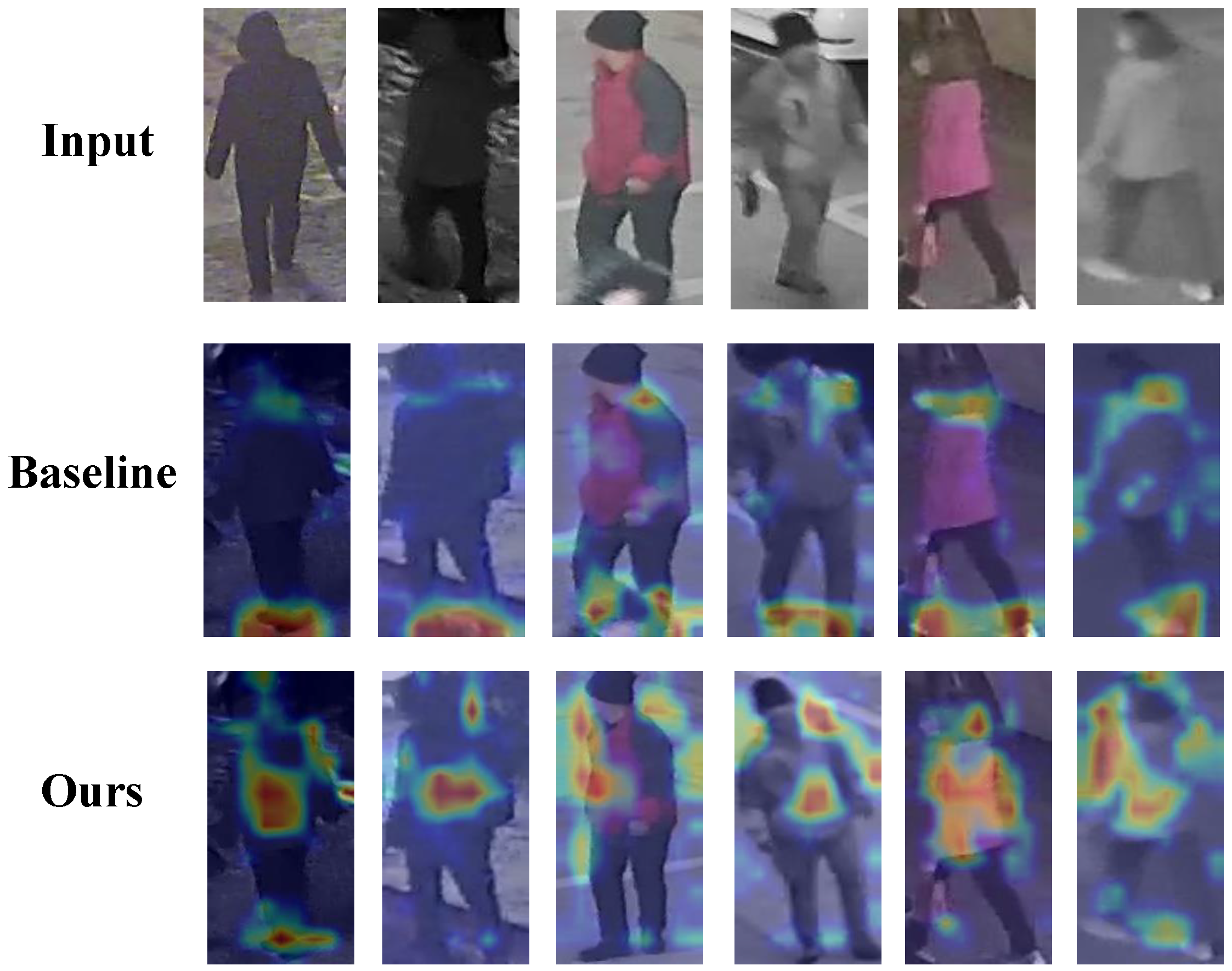

4.5. Visualization

Heatmap interest visualization. To verify the advantages of the proposed PDEGL method in feature extraction and attention allocation, we compare the attention heat maps of the baseline and the proposed method. The comparison results are shown in Figure 6. The baseline’s attention over the human trunk is relatively scattered, and it assigns high attention to some unimportant areas. This indicates that the baseline may have limitations in feature extraction and fails to fully focus on the important regions of pedestrians. By contrast, our PDEGL method pays more attention to the important regions of pedestrians (such as the head, trunk, and legs), and the highlighted areas in the heat map are more concentrated and distinct. These observations suggest that PDEGL extracts the key features of pedestrians more effectively and allocates less attention to unimportant areas.

Retrieval results visualization. The Top-10 retrieval results in the “VIS to IR” and “IR to VIS” modes on the LLCM dataset are shown in Figure 7. Correct identity matches are marked with green boxes, and incorrect matches are marked with red boxes. As queries, we select four fixed pedestrian samples for retrieval in the two modes. Figure 7 shows that the proposed PDEGL method yields highly accurate matching results, although some incorrect matches remain. Specifically, two different pedestrians may have very similar body proportions and postures, in which case they may be wrongly identified as the same person because their graph structures match closely. Examples include the first column in the “VIS to IR” mode and the fourth column in the “IR to VIS” mode.
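The ranked lists behind such a visualization can be produced with a few lines of code. The sketch below ranks gallery features by similarity to a query and flags each of the top-k results as correct or incorrect, mirroring the green and red boxes. Cosine similarity is an assumption made here for illustration, since the matching metric is not restated in this section, and the function names and toy data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_matches(
    query_feature: Sequence[float],
    query_id: int,
    gallery: List[Tuple[int, Sequence[float]]],
    k: int = 10,
) -> List[Tuple[int, int, bool]]:
    """Return (gallery index, identity, is_correct) for the k most similar items.

    gallery holds (identity, feature) pairs from the other modality.
    """
    order = sorted(
        range(len(gallery)),
        key=lambda i: cosine_similarity(query_feature, gallery[i][1]),
        reverse=True,
    )
    return [(i, gallery[i][0], gallery[i][0] == query_id) for i in order[:k]]


# Toy gallery with two identities (illustrative data only).
toy_gallery = [(7, [1.0, 0.0]), (3, [0.0, 1.0]), (7, [0.9, 0.1])]
results = top_k_matches([1.0, 0.0], query_id=7, gallery=toy_gallery, k=2)
```

An entry flagged as incorrect corresponds to a red box: a gallery image of a different identity whose features are nevertheless close to the query, as in the similar-proportion failure cases discussed above.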

4.6. Robustness Analysis and Limitations

While our PDEGL method achieves competitive performance on standard benchmarks, real-world deployment faces significant challenges beyond controlled datasets. Current VI-ReID datasets lack diversity in weather conditions, severe occlusions, and infrared imaging variations, which limits comprehensive robustness assessment. To understand these practical limitations, we analyze our method’s performance under the challenging conditions available in the LLCM dataset and compare it with the representative DEEN. The retrieval results of both methods are presented in Figure 8.

The upper rows show a person wearing a bulky black coat with poor thermal contrast. Our method fails to retrieve correct matches (Ranks 1–6 are incorrect), while DEEN achieves better results with two correct matches in the top-5. The failure of PDEGL stems from parsing degradation and graph structure ambiguity. Specifically, the low thermal contrast and bulky clothing in thermal images make accurate body part segmentation extremely challenging, causing DIPE to produce unreliable parsing results. Furthermore, the lack of clear body part boundaries introduces graph structure ambiguity, which diminishes the discriminative power of the constructed graph and forces matches to rely on the overall silhouette rather than on detailed structural cues. In the lower rows, this contrast is even more obvious. The target pedestrian carries a red bag and has a unique posture, which severely disrupts the expected human topological structure and causes our graph-based matching to fail when the target is compared with individuals lacking similar attachments.

Although PDEGL achieves higher overall performance metrics, as shown in Table 1, DEEN shows better robustness under extreme conditions. This can be attributed to its diverse embedding strategy, which expands visual representations through an expansion module and captures appearance variations beyond structural patterns, maintaining robustness when structural cues are unreliable.

It can be concluded that although structured methods like PDEGL perform well when body parts are clearly visible and parsed correctly, they become vulnerable when these assumptions are violated. Methods that emphasize appearance diversity and global features exhibit better fail-safe behavior under extreme conditions. Closing this robustness gap is a priority for our future work.

5. Conclusions and Future Work

In this paper, we propose a novel Parsing-guided Differential Enhancement Graph Learning (PDEGL) method for Visible-Infrared Person Re-Identification (VI-ReID). The PDEGL framework is built upon a dual-branch architecture that separately handles global feature learning and part-based graph analysis. Its core contributions include the Differential Infrared Part Enhancement (DIPE) module for correcting infrared parsing errors, the Parsing Structural Graph (PSG) module for modeling complex inter-part topological relationships, and the Position-sensitive Spatial-Channel Attention (PSCA) module for enhancing global feature discriminability. Extensive evaluations on the SYSU-MM01, RegDB, and LLCM datasets demonstrate that PDEGL achieves state-of-the-art or highly competitive performance, substantially outperforming existing methods, particularly in scenarios with parsing errors and significant appearance variations. These results underscore the efficacy of explicitly modeling structural relationships and enhancing feature quality across modalities. The PDEGL framework offers a robust solution for VI-ReID, advancing the potential for reliable cross-modal person identification in diverse real-world applications.

Looking forward, several important directions remain to be explored. First, collecting and annotating datasets under extreme weather conditions would enable more realistic evaluation of VI-ReID methods. Second, developing adaptive graph topologies that can handle varying body configurations and severe occlusions would improve robustness. Third, incorporating uncertainty estimation mechanisms would help identify unreliable matches in challenging scenarios. Finally, exploring domain adaptation techniques could address the generalization gap between controlled datasets and real-world deployments. These efforts are crucial for transitioning VI-ReID from laboratory success to practical applications.