1. Introduction

In today's highly interconnected digital age, social media platforms such as Twitter and Weibo have become indispensable channels for information dissemination during public health events [1]. They not only provide governments and health organizations with convenient channels to issue alerts, health guidance, and updates on the epidemic to the public [2,3], but also facilitate rapid information flow and effective resource allocation by supporting two-way communication between affected communities and health institutions. However, the vast scale of user-generated content also presents significant challenges, particularly in accurately extracting valuable information from a large amount of redundant or ineffective information [4]. Although existing research has extensively explored information detection in the context of natural disasters [5], attention to public health events remains relatively insufficient. These events often possess unique attributes such as complex semantic features and diverse dissemination paths, necessitating differentiated modeling.

Effective information detection in public health events faces multiple challenges. First, the heterogeneity of social media data makes uniform information quality difficult to achieve. User-generated text typically contains informal language, emotional expressions, and context dependency [

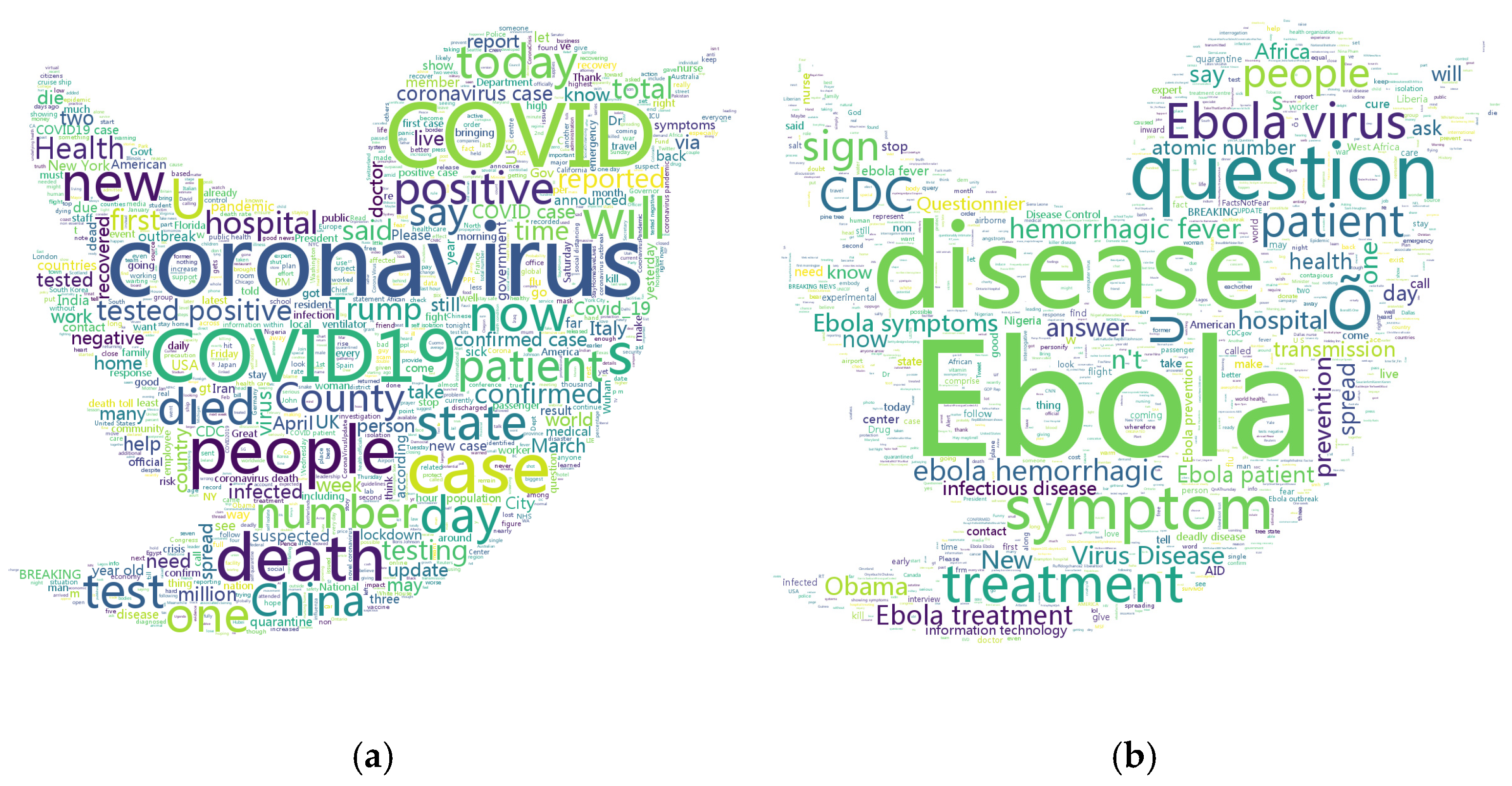

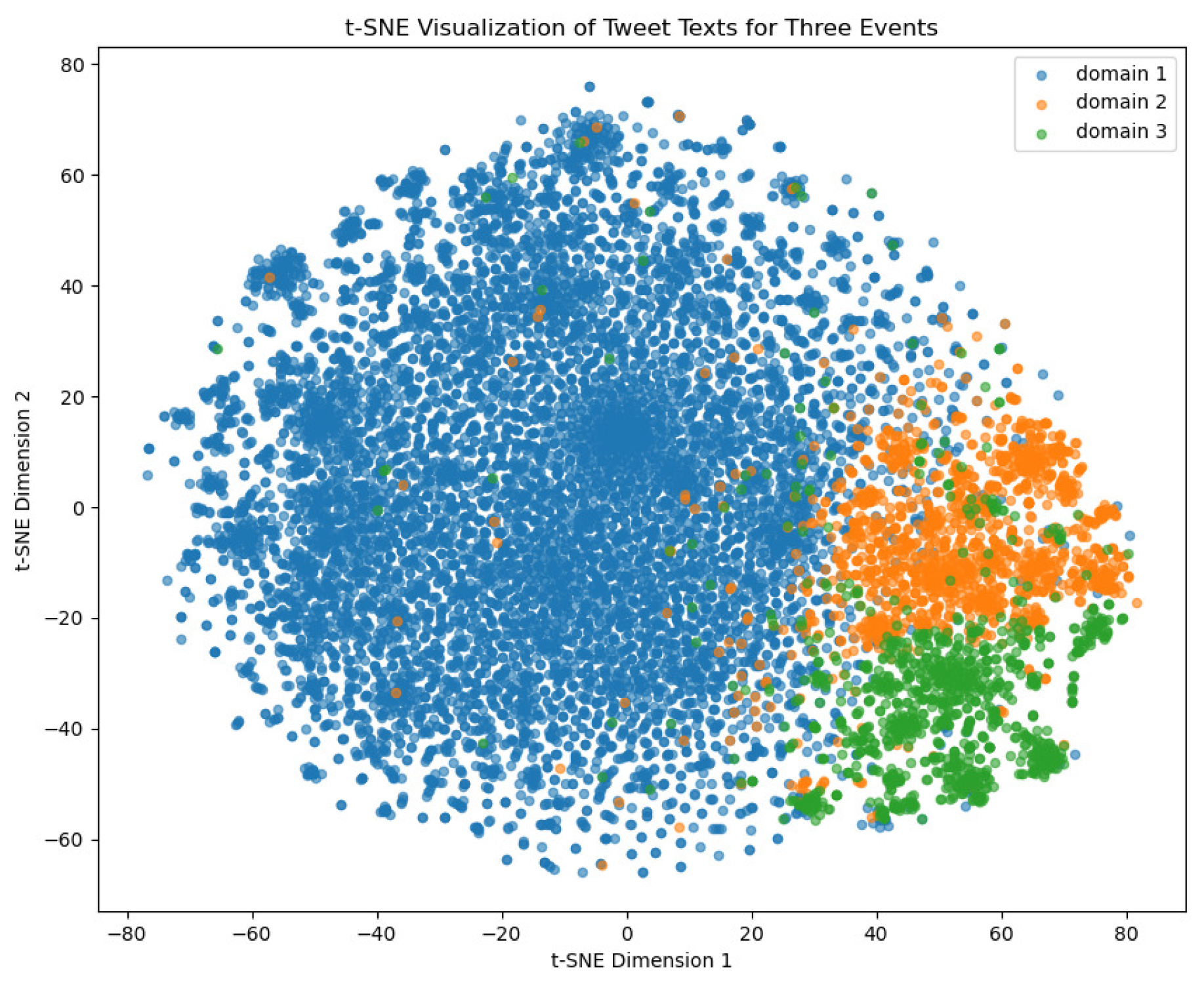

4], which increases the complexity of understanding and classification. Second, the dynamic nature of data distribution further exacerbates the difficulty of detection. In different public health events, the semantic features and distribution patterns of information may exhibit significant variations, thereby causing notable domain shifts that pose challenges to cross-event generalization. As shown in

Figure 1, we randomly selected 1000 tweets related to the COVID-19 and Ebola events, respectively, and conducted in-depth visual analysis of the semantic distribution differences between different events. Word clouds showed that “coronavirus”, “COVID19” and “death” frequently appeared in COVID-19 tweets, whereas “Ebola”, “disease” and “symptom” were more prominent in Ebola-related tweets. These differences reflect the distinct semantic emphases and information needs of each event. Further, we used the t-SNE [

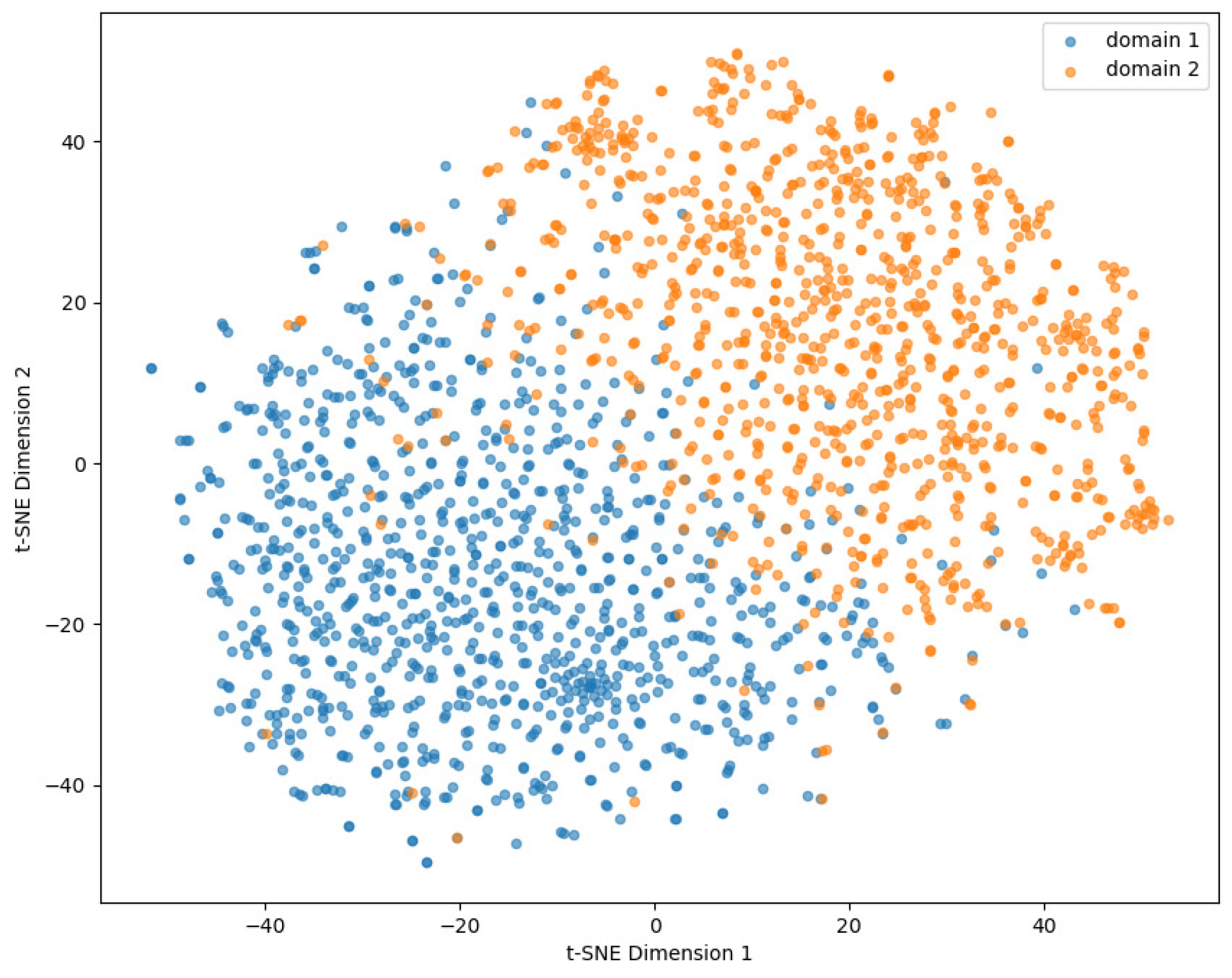

6] method to perform dimensionality reduction and visualization on the above tweets (as shown in

Figure 2) to reveal the distribution structure of text features in the low-dimensional space. The results show that tweets related to COVID-19 (blue) and Ebola (orange) formed clearly distinguishable clusters in the two-dimensional space, with well-defined domain boundaries. This indicates that, in the context of public health events, knowledge learned by a model from one event may encounter significant generalization challenges when directly transferred to another, due to the pronounced semantic and structural differences.

These challenges indicate that traditional information detection methods based on supervised learning have significant limitations in dealing with the complexity of public health events, and their performance often declines due to the lack of sufficient labeled data and cross-event adaptability. In recent years, domain adaptation, as a transfer learning approach [7], has garnered significant attention in the field of information detection. Domain adaptation seeks to reduce distribution disparities between the source domain and the target domain by learning domain-invariant features or adjusting model parameters, thereby enhancing model generalization in the unlabeled target domain. In this context, adversarial learning [8] has emerged as a research focus due to its superior performance in feature alignment, whereby a domain discriminator is introduced to distinguish source and target domain features, enabling the feature extractor to generate domain-invariant representations and thus facilitate cross-domain knowledge transfer. Although these methods have made progress in improving detection performance, the diversity and complexity of public health events continue to pose greater demands on model generalization and adaptability, as single-domain adaptation mechanisms and one-hot vector label representations may fail to adequately capture cross-event semantic variations and differences in sample contributions.

From the perspective of sample labels, tweets in public health events vary significantly in terms of quality, semantic expression, and content structure, resulting in inconsistent transfer values in different target events. Traditional methods usually use hard-coded representations for category labels and treat all source domain samples equally, failing to reflect the strength of the association between different tweets and the target domain. This approach may conceal some essential transfer features and introduce a considerable amount of irrelevant or weakly relevant information, leading to the issue of label confusion. Label confusion primarily arises when the model fails to accurately align the label spaces of the source domain and the target domain due to significant semantic deviations of labels across different domains, resulting in incorrect assessments. Taking the COVID-19 and Ebola events as examples, although both belong to the category of infectious diseases, the tweets “COVID-19 daily cases update” and “Ebola virus detected in DR Congo” emphasize two different semantic cores of the labels, namely epidemic statistics and virus origin. Direct transfer would lead to an information mismatch. This difference in label distribution reflects the distance between the semantic centers of labels across domains, thus highlighting the necessity of constructing a label confusion awareness mechanism.

Based on the above background, this paper proposes a new framework—Label Confusion Domain Adversarial Network (LCDAN)—for detecting effective information in public health events. The core idea of LCDAN is to introduce label-level confusion modeling on the basis of the traditional adversarial domain adaptation framework, so as to achieve dual alignment of the semantic space and the label space simultaneously. On the one hand, the model extracts domain-invariant text features by introducing an adversarial training mechanism between the domain discriminator and the feature extractor, thus alleviating the distribution shift problem caused by event specificity. On the other hand, it is difficult to solve the potential conflicts at the label level only by relying on the adversarial mechanism. Especially in cross-event tasks, the semantic centers represented by the source domain labels may deviate significantly from those in the target domain. For example, in the Ebola event, tweets in the “symptom” category are often related to virus spread, while in the COVID-19 event, similar tweets are more likely to express public emotions and policy feedback. Such differences may cause confusion in the category prediction output by the model, even in the shared feature space.

To further strengthen the unified modeling of semantic structure and label structure, LCDAN introduces the label confusion distribution mechanism, which incorporates the label modeling process into the adversarial training framework for collaborative optimization. Through this mechanism, the model can actively suppress the interference of source samples that have obvious domain features but inconsistent labels during training, while emphasizing the key samples that are consistent with the target event both semantically and in terms of labels. This alleviates the negative transfer problem caused by label confusion and improves the information recognition ability and generalization performance of the model on new events. The innovation of LCDAN lies in combining the advantages of adversarial learning and label confusion: adversarial domain adaptation ensures the alignment of cross-domain features, while label confusion helps the model learn meta-knowledge for adapting to new events. At the same time, a dynamic sample weighting mechanism is introduced to adjust sample weights and optimize the transfer effect. This design gives LCDAN significant advantages in dealing with the complex data characteristics of public health events. The main contributions of this paper include the following aspects:

We propose the LCDAN framework, which integrates adversarial domain adaptation and label confusion, providing a novel solution for detecting effective information in public health events.

We address the text distribution shift that arises between different domains of public health events. Experiments conducted on multiple public health event datasets demonstrate that LCDAN can effectively distinguish relevant information from irrelevant information in public health events and exhibits strong transfer performance.

We propose a label confusion method for transfer learning in public health events. By introducing label confusion between events and learning probabilistic label distributions, this method adjusts source domain sample weights to optimize transfer performance, evaluates sample importance to the target domain, and enhances the model’s ability to identify relevant information.

We introduce the informative message detection task into the public health field. Compared with general events, the information in public health tweets is complex and time-sensitive. This study expands the application boundary of cross-domain information detection.

The rest of the paper is structured as follows. Section 2 reviews related work concerning public health events, domain adaptation, and adversarial learning. Section 3 introduces the proposed LCDAN methodology. Section 4 presents empirical results across three domains, accompanied by detailed analysis and discussion. Finally, Section 5 concludes the paper.

3. Methodology

3.1. Framework

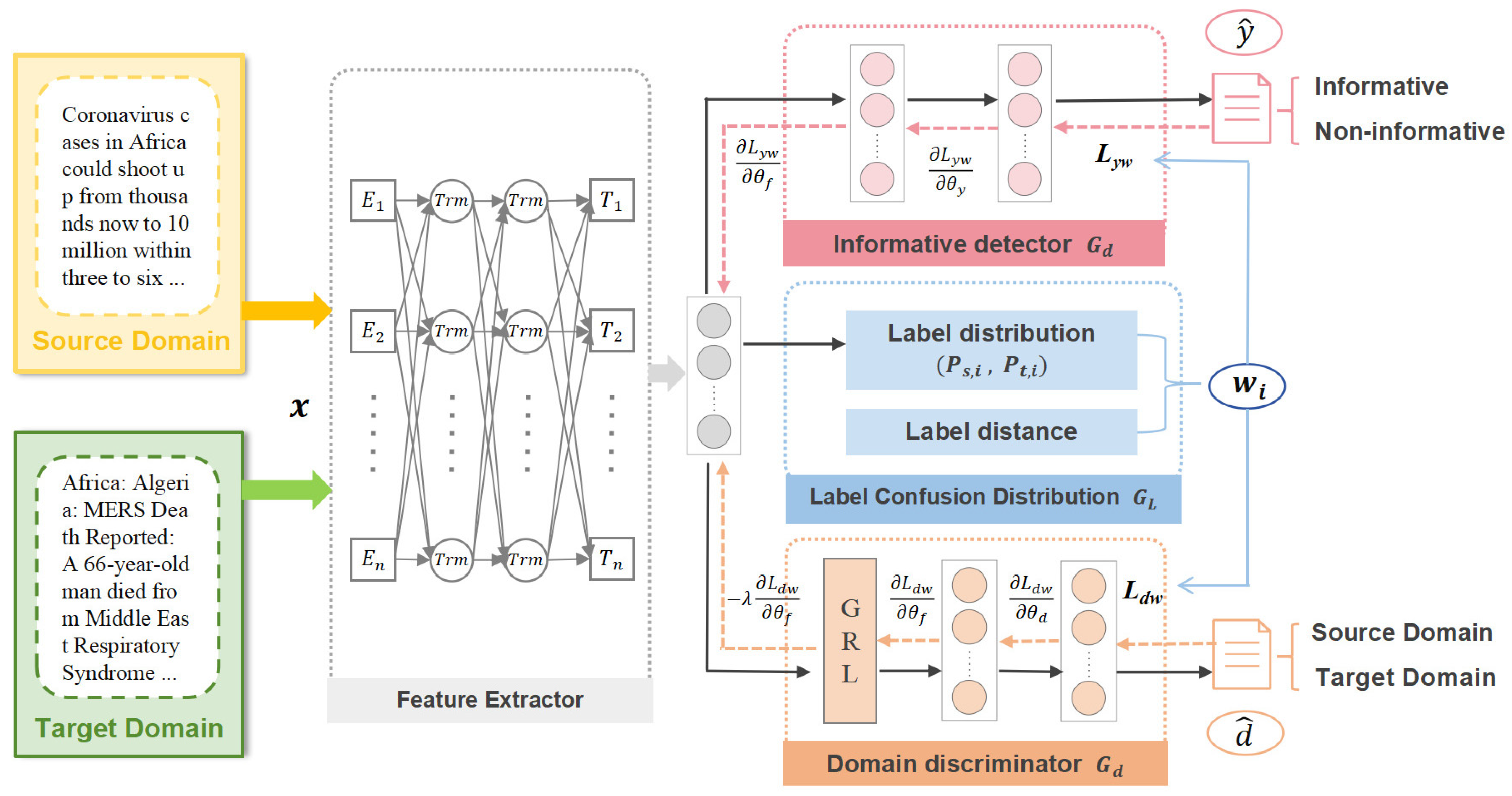

As a central platform for information dissemination and public engagement in public health events, social media generates text data characterized by high heterogeneity, significant context dependency, and strong event specificity. These characteristics render the accurate identification of effective information from vast social media data a highly challenging task. To address this issue, a novel detection framework—Label Confusion Domain Adversarial Network (LCDAN)—is proposed, integrating adversarial learning with label confusion-aware mechanisms to enhance both information identification capabilities and cross-domain generalization performance in multi-event contexts. The design of the LCDAN framework is based on the following key considerations. First, social media text, typically unstructured and informal in expression, requires a feature extraction module capable of capturing deep semantic information to replace the reliance of traditional methods on shallow information. Second, to enhance cross-event transfer capabilities, event-specific feature interference must be suppressed through adversarial training, enabling the model to learn more generalizable representations. However, relying solely on feature alignment is insufficient to address differences in cross-domain label spaces. Due to significant semantic shifts in labels across different public health events, the transfer value of source domain tweets to the target task remains uncertain. If one-hot labels are uniformly applied and source samples are treated with equal weight, label confusion issues are likely to arise, thereby leading to negative transfer. Therefore, LCDAN introduces a label confusion distribution modeling mechanism to finely construct the semantic association between source samples and the target task in the label space. By dynamically weighting and regulating the weights of training samples, transfer optimization is achieved at the label level.

Specifically, the LCDAN framework comprises the following modules: the feature extractor maps social media texts to the semantic space based on a pretrained text model and extracts context-related features; the informative detector performs supervised classification on the extracted features to determine whether a tweet contains effective information; the domain discriminator, utilizing a gradient reversal mechanism, implements adversarial training to align source and target domain distributions in the feature space, reducing event-specific biases; and, most importantly, the label confusion distribution module combines the label distribution and semantic center distance to measure the potential value of source samples for the target domain and adjusts their contributions during training through a dynamic weight mechanism, enhancing the model’s sensitivity to transfer-relevant samples and alleviating the label confusion problem caused by semantic mismatch. Through the synergistic operation of these modules, LCDAN establishes a detection framework that integrates feature alignment and label optimization, thereby improving the model’s generalization across multi-source heterogeneous events. The label confusion mechanism not only makes source sample selection more discriminative but also provides an optimization approach for cross-event knowledge transfer based on semantic alignment, which distinguishes LCDAN from existing adversarial models. The process is illustrated in Figure 3.

The notations used frequently in this article are summarized in Table 2.

3.2. Feature Extractor

When identifying informative messages related to public health events on social networks, the text feature encoder encodes the original text data from different domains. Tweets typically contain numerous entities related to events and their domains, which vary across domains, thereby significantly impacting the classification performance of the classifier. Traditional text representation methods, such as bag-of-words models or static word embeddings, are inadequate due to their inability to sufficiently capture context dependency and event specificity. To address this, BERT [29] is adopted for text feature extraction, whereby latent semantic and contextual information is captured through a multi-layer bidirectional Transformer encoder, producing high-quality semantic representations. Given an input text sequence $X = \{x_1, x_2, \ldots, x_n\}$, where $n$ denotes the text length, BERT first converts each token $x_i$ into a token representation through a tokenizer. Subsequently, each token is transformed into an input representation through three types of embeddings: token embeddings $E_{tok}$, positional embeddings $E_{pos}$, and segment embeddings $E_{seg}$. Specifically, for a token $x_i$, its input vector $e_i$ is computed as

$$e_i = E_{tok}(x_i) + E_{pos}(i) + E_{seg}(x_i)$$

The input vector sequence $E = [e_1, e_2, \ldots, e_n] \in \mathbb{R}^{n \times d}$, where $d$ denotes the embedding dimension, is fed into BERT’s multi-layer Transformer encoder, which, through a self-attention mechanism, produces context-dependent output representations $H$:

$$H = F_e(E)$$

where $F_e$ denotes the BERT feature extractor, and $H$ represents the fixed-dimensional feature representation for the text $X$.

To obtain a global feature representation of the text, the output vector of the [CLS] token, $h_{[CLS]}$, is typically extracted and mapped to the target feature space through a fully connected layer:

$$f = \mathrm{ReLU}(W_f h_{[CLS]} + b_f)$$

where ReLU denotes the activation function, $W_f \in \mathbb{R}^{d_f \times d_h}$ represents the weight matrix, $b_f \in \mathbb{R}^{d_f}$ represents the bias vector, $d_h$ denotes the BERT output dimension, and $d_f$ denotes the target feature dimension.

The module takes the raw text sequence $X$ as input and produces the feature $f$, providing semantically rich input for the information detection classifier and domain discriminator. The BERT Transformer encoder, owing to its bidirectional context modeling and self-attention mechanism, effectively captures the semantic features of public health event texts, thereby enhancing classification performance.
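The two computations described in this subsection, summing the token, positional, and segment embeddings and projecting the [CLS] output through a fully connected layer with ReLU, can be sketched as follows. This is a minimal NumPy illustration, not the authors' implementation: the table sizes, the random embedding tables, and the names `input_embeddings` and `project_cls` are assumptions, and the Transformer encoder itself is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
VOCAB_SIZE, MAX_LEN, NUM_SEGMENTS = 100, 16, 2
HIDDEN_DIM, FEATURE_DIM = 8, 4

# Random stand-ins for BERT's three learned embedding tables.
token_table = rng.normal(size=(VOCAB_SIZE, HIDDEN_DIM))
position_table = rng.normal(size=(MAX_LEN, HIDDEN_DIM))
segment_table = rng.normal(size=(NUM_SEGMENTS, HIDDEN_DIM))


def input_embeddings(token_ids, segment_ids):
    """Sum token, positional, and segment embeddings for each position."""
    token_ids = np.asarray(token_ids)
    segment_ids = np.asarray(segment_ids)
    positions = np.arange(len(token_ids))
    return (token_table[token_ids]
            + position_table[positions]
            + segment_table[segment_ids])


def project_cls(cls_vector, weight, bias):
    """Map the [CLS] output to the target feature space: ReLU(W h + b)."""
    return np.maximum(0.0, weight @ cls_vector + bias)


token_ids = [1, 42, 7, 2]    # position 0 plays the role of [CLS]
segment_ids = [0, 0, 0, 0]
embedded = input_embeddings(token_ids, segment_ids)  # shape (4, HIDDEN_DIM)

# The encoder is omitted; the embedded [CLS] row stands in for its output.
W = rng.normal(size=(FEATURE_DIM, HIDDEN_DIM))
b = np.zeros(FEATURE_DIM)
feature = project_cls(embedded[0], W, b)             # shape (FEATURE_DIM,)
```

In practice the embedding tables and the encoder would come from a pretrained BERT checkpoint, and only the projection layer would be newly initialized.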

3.3. Informative Detector

The informative detector $G_y$ outputs $\hat{y}$, which represents the probability that the post is “Informative”, based on the features $f$ output by the feature extractor. Let the label be $y \in \{0, 1\}$, where $y = 1$ represents informative and $y = 0$ represents not-informative text. The structure of $G_y$ includes a two-layer fully connected network, equipped with a ReLU activation function and a softmax output layer, which is expressed as

$$\hat{y} = \mathrm{softmax}\left(W_2\,\mathrm{ReLU}(W_1 f + b_1) + b_2\right)$$

where $\hat{y}$ denotes the predicted probability distribution, and $W_1, W_2$ and $b_1, b_2$ represent the network parameters. For the source domain data, the optimization objective of the informative detector is to minimize the cross-entropy loss:

$$\mathcal{L}_y = -\frac{1}{N_s}\sum_{i=1}^{N_s}\left[y_i \log \hat{y}_i + (1 - y_i)\log(1 - \hat{y}_i)\right]$$

where $y_i$ and $\hat{y}_i$ denote the true label and predicted probability, respectively, for the $i$-th sample, and $N_s$ is the number of source domain samples.

Effective information detection must address the generalization challenges posed by new domain events, as solely minimizing detection loss tends to capture domain-specific knowledge, making generalization difficult. Consequently, the model must be trained to learn more general feature representations to capture common features across all events.
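The detector described above, two fully connected layers with a ReLU activation and a softmax output trained with cross-entropy, can be sketched in NumPy as follows. The layer sizes, random parameters, and function names are illustrative assumptions rather than the paper's configuration.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def detector_forward(features, w1, b1, w2, b2):
    """Two fully connected layers: ReLU hidden layer, softmax output."""
    hidden = np.maximum(0.0, features @ w1 + b1)
    return softmax(hidden @ w2 + b2)


def cross_entropy(probs, labels, eps=1e-12):
    """Mean negative log-probability assigned to the true class."""
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(picked + eps)))


rng = np.random.default_rng(0)
features = rng.normal(size=(5, 4))          # 5 tweets, 4-dim features (assumed)
w1, b1 = rng.normal(size=(4, 6)), np.zeros(6)
w2, b2 = rng.normal(size=(6, 2)), np.zeros(2)
labels = np.array([1, 0, 1, 1, 0])          # 1 = informative, 0 = not informative

probs = detector_forward(features, w1, b1, w2, b2)
loss = cross_entropy(probs, labels)
```

With two output classes, the softmax cross-entropy used here is equivalent to the binary cross-entropy on the "informative" probability.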

3.4. Domain Discriminator

To reduce domain-specific features, a domain discriminator is introduced, which, through adversarial training, encourages the feature extractor to generate domain-invariant shared features. The domain discriminator $G_d$ distinguishes whether the feature $f$ originates from the source or target domain, drawing on the concept of adversarial learning. Let the domain label be $d \in \{0, 1\}$, where $d = 0$ denotes the source domain and $d = 1$ denotes the target domain. Its structure consists of a multi-layer fully connected network, with the output representing the probability distribution of event categories:

$$\hat{d} = \mathrm{softmax}\left(W_{d2}\,\mathrm{ReLU}(W_{d1} f + b_{d1}) + b_{d2}\right)$$

where $W_{d1} \in \mathbb{R}^{d_m \times d_f}$, $W_{d2} \in \mathbb{R}^{K \times d_m}$, $d_f$ is the dimension of the feature $f$, $K$ represents the number of event categories, and $d_m$ denotes the intermediate layer dimension.

To implement adversarial training, a gradient reversal layer (GRL) is introduced between the feature extractor and the domain discriminator. The GRL maintains the input unchanged during forward propagation but multiplies the gradient by a negative coefficient $-\lambda$ during back propagation, thereby enabling the feature extractor to maximize the loss of the domain discriminator, promoting the generation of event-invariant features. The loss function of the domain discriminator is defined as the cross-entropy loss:

$$\mathcal{L}_d = -\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{K} d_{ik}\log \hat{d}_{ik}$$

where $N$ is the number of samples, $d_{ik}$ is the one-hot domain label of the $i$-th sample, and $\hat{d}_{ik}$ is the corresponding predicted probability.
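The behaviour of the gradient reversal layer can be illustrated with a small framework-free sketch: the forward pass is the identity, and the backward pass multiplies the incoming gradient by a negative coefficient. The class name `GradientReversal` and the value of `lam` are assumptions for illustration; in an automatic differentiation framework the same idea would be written as a custom function with a user-defined backward pass.

```python
import numpy as np


class GradientReversal:
    """Identity in the forward pass; scales gradients by -lam in the backward pass."""

    def __init__(self, lam=1.0):
        self.lam = lam

    def forward(self, features):
        # Features pass through unchanged on the way to the discriminator.
        return features

    def backward(self, grad_from_discriminator):
        # The sign flip makes the feature extractor ascend the discriminator loss.
        return -self.lam * grad_from_discriminator


grl = GradientReversal(lam=0.5)
features = np.array([[1.0, -2.0], [0.5, 3.0]])
grad = np.array([[0.2, 0.4], [-1.0, 0.0]])

out = grl.forward(features)
reversed_grad = grl.backward(grad)
```

Because only the gradient is altered, the discriminator still trains to minimize its own loss while the feature extractor receives the opposite update direction.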

3.5. Label Confusion Distribution

The label confusion distribution takes the feature vector generated by the feature extractor as input, quantifies the importance of source domain samples to the target domain through label distributions and domain center distances, and assigns a weight to each sample to adjust its influence during training, thereby optimizing the model’s performance in the target domain.

Effective information detection in the public health domain faces challenges due to the high timeliness, diverse sources, and varied content of information. Traditional methods typically employ one-hot vectors to represent true labels. However, one-hot representations may fail to adequately capture the relationships between samples and labels [28] and are susceptible to mislabeling, leading to difficulties in distinguishing similar labels during prediction and causing label confusion issues. Particularly in cross-domain scenarios, if domain labels of source samples are uniformly assigned while ignoring inter-sample differences, the model may overfit to source domain-specific features, thereby reducing adaptability to the target domain. In this study, the objective is to extract features that maximally distinguish whether samples contain effective information while minimally identifying their domain. Consequently, by introducing label confusion to domain labels, the model’s reliance on domain-specific features can be effectively reduced, thereby enhancing the learning of general effective information features. Although achieving a true label distribution theoretically is challenging, it can be approximated by mining the semantic information underlying instances and labels.

Assume the output space is $\mathcal{Y}$, which contains $C$ possible labels. For each source domain sample $x_i^s$, its label distribution is defined as a vector $p_i = (p_{i1}, \ldots, p_{iC})$, where $p_{ic}$ denotes the probability that the sample belongs to label $c$. The domain label of each sample is represented by a two-dimensional vector $d_i = (d_i^s, d_i^t)$, corresponding to the probabilities of belonging to the source and target domains, respectively, satisfying the constraint $d_i^s + d_i^t = 1$. The domain label can be approximated through the output of the domain discriminator, $d_i \approx G_d(f_i^s)$. Here, $f_i^s$ is the feature vector generated by the feature extractor from the source domain sample $x_i^s$. For a target domain sample $x_j^t$, its domain label is fixed as $(0, 1)$, indicating complete affiliation with the target domain.

Public health events fall into different categories. Although each belongs to the scope of public health, their natures, impacts, and response methods vary greatly. These differences are reflected in the semantics of data labels, resulting in significant variations in label distances between different domains. To better understand and leverage these differences, the label distance is defined as the cosine distance between the source domain’s central label representation and the target domain’s central label representation. The central label representations for the source and target domains are, respectively, defined as

where

,

,

and

denote the central label representations and the number of labels for the source and target domains, respectively.

The label distance is calculated as

By integrating label distributions and domain label center distances, the dynamic weight of the source domain sample is calculated. Specifically, the weight

of the source domain sample

is defined as

Here, when the probability that a sample belongs to the source domain approaches 1, the sample may contain significant source domain-specific information, and its weight should be reduced to mitigate negative transfer. The resulting weights are applied to both the information detection loss and the domain discrimination loss.
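The cosine distance between the source and target label centers, as described in this subsection, can be sketched as follows. The sketch assumes that each domain center is the mean of per-sample label vectors, which is one plausible reading and not necessarily the paper's exact definition; the soft label values are hypothetical, and the sample weight formula itself is not reproduced here.

```python
import numpy as np


def center(label_vectors):
    """Mean of per-sample label vectors (assumed definition of a domain center)."""
    return np.asarray(label_vectors, dtype=float).mean(axis=0)


def cosine_distance(u, v):
    """1 minus the cosine similarity of two non-zero vectors."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    return 1.0 - float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


source_labels = [[0.9, 0.1], [0.7, 0.3]]   # hypothetical soft labels, source event
target_labels = [[0.2, 0.8], [0.4, 0.6]]   # hypothetical soft labels, target event

label_distance = cosine_distance(center(source_labels), center(target_labels))
```

A distance near 0 indicates that the two events use the label space in a similar way, while a larger distance signals a stronger semantic mismatch between them.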

3.6. Loss Function

LCDAN uses a weighted mechanism of label confusion to more accurately align the distributions of different domains, reduce the influence of irrelevant or abnormal source tweets on unlabeled target tweets, and more effectively detect effective information in new domains. Through dynamic weighting, the label confusion mechanism can adjust the contribution of source domain samples to the training loss and optimize the cross-domain adaptability of the model. The optimized information detection loss is

The weighted domain discrimination loss is

The total loss function comprises two components: the weighted information detection loss and the weighted domain discrimination loss. The final loss of LCDAN is formulated as a linear combination of these two losses, in which a hyperparameter $\lambda$ balances the trade-off between effective information detection and domain discrimination.

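A gradient reversal layer (GRL) is incorporated before the fully connected layer of the domain discriminator to facilitate a min-max game between the feature extractor and the domain discriminator. During training, the parameters of the domain discriminator are optimized by minimizing the domain discrimination loss, while the feature extractor is optimized to minimize the information detection loss and simultaneously maximize the domain discrimination loss.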
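A sample-weighted cross-entropy and a linear combination of the two losses can be sketched as below. The averaging scheme (a plain mean of the weighted per-sample losses), the additive sign convention in `total_loss`, and all numeric values are assumptions made for illustration; the sketch relies on the gradient reversal layer to supply the adversarial sign.

```python
import numpy as np


def weighted_cross_entropy(probs, labels, weights, eps=1e-12):
    """Per-sample cross-entropy, scaled by sample weights and averaged."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels)
    weights = np.asarray(weights, dtype=float)
    per_sample = -np.log(probs[np.arange(len(labels)), labels] + eps)
    return float(np.mean(weights * per_sample))


def total_loss(detection_loss, domain_loss, lam):
    """Linear combination; the GRL is assumed to handle the adversarial sign."""
    return detection_loss + lam * domain_loss


probs = [[0.5, 0.5], [0.9, 0.1]]     # hypothetical detector outputs
labels = [0, 1]
uniform = weighted_cross_entropy(probs, labels, [1.0, 1.0])
down_weighted = weighted_cross_entropy(probs, labels, [1.0, 0.0])
combined = total_loss(1.0, 2.0, lam=0.5)
```

Setting a sample's weight to zero removes its contribution entirely, which is the limiting case of down-weighting source tweets that carry strongly source-specific information.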

4. Experiments

4.1. Datasets

This study is based on the open-source datasets provided in references [30,31]. Tweets related to three typical public health events were selected to construct the informative message recognition task. These three public health events are COVID-19, Ebola virus disease, and Middle East Respiratory Syndrome (MERS). Specifically, the COVID-19 Twitter dataset was obtained from [30], while tweets on Ebola virus disease and MERS were extracted from [31]. These three events are public health events that have attracted significant global attention in recent years, with notable social impact and transmissibility. COVID-19 exemplifies a global pandemic, Ebola represents one of the most severe infectious diseases worldwide, and MERS is characterized by cross-border transmission of a respiratory illness. The selection of these three events enables a comprehensive evaluation of the proposed method’s capability to identify informative messages across diverse types and scales of public health crises, thereby validating the model’s robustness and applicability. All tweets were classified as either informative messages or non-informative content. For example, the tweet “Correction: PA has 83 new cases of COVID-19, bringing our statewide total to 268 cases, said PA Health Secretary Dr. Rachel Levine. That is more than double the new cases Wednesday” provides an epidemic update and is considered a typical informative message. In contrast, the tweet “Starting off the weekend because I am never afraid. I am legend on AMC already feeling better. #COVID-19”, despite mentioning keywords, lacks useful information and is not considered an informative message. This classification provides high-quality sample support for subsequent model training and cross-domain transfer, facilitating the validation of the proposed method’s effectiveness in identifying informative messages in public health scenarios. For more details about these datasets, please see [30,31].

We manually deleted some unnecessary data and performed simple data augmentation on the imbalanced data.

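Table 3 shows the label distribution of the three public health events. Six informative message detection tasks are constructed, one for each ordered source → target pair of the three events: COVID-19 → Ebola, COVID-19 → MERS, Ebola → COVID-19, Ebola → MERS, MERS → COVID-19, and MERS → Ebola. Our datasets are available at https://github.com/yyy-2200/LCDAN (accessed on 4 July 2025).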

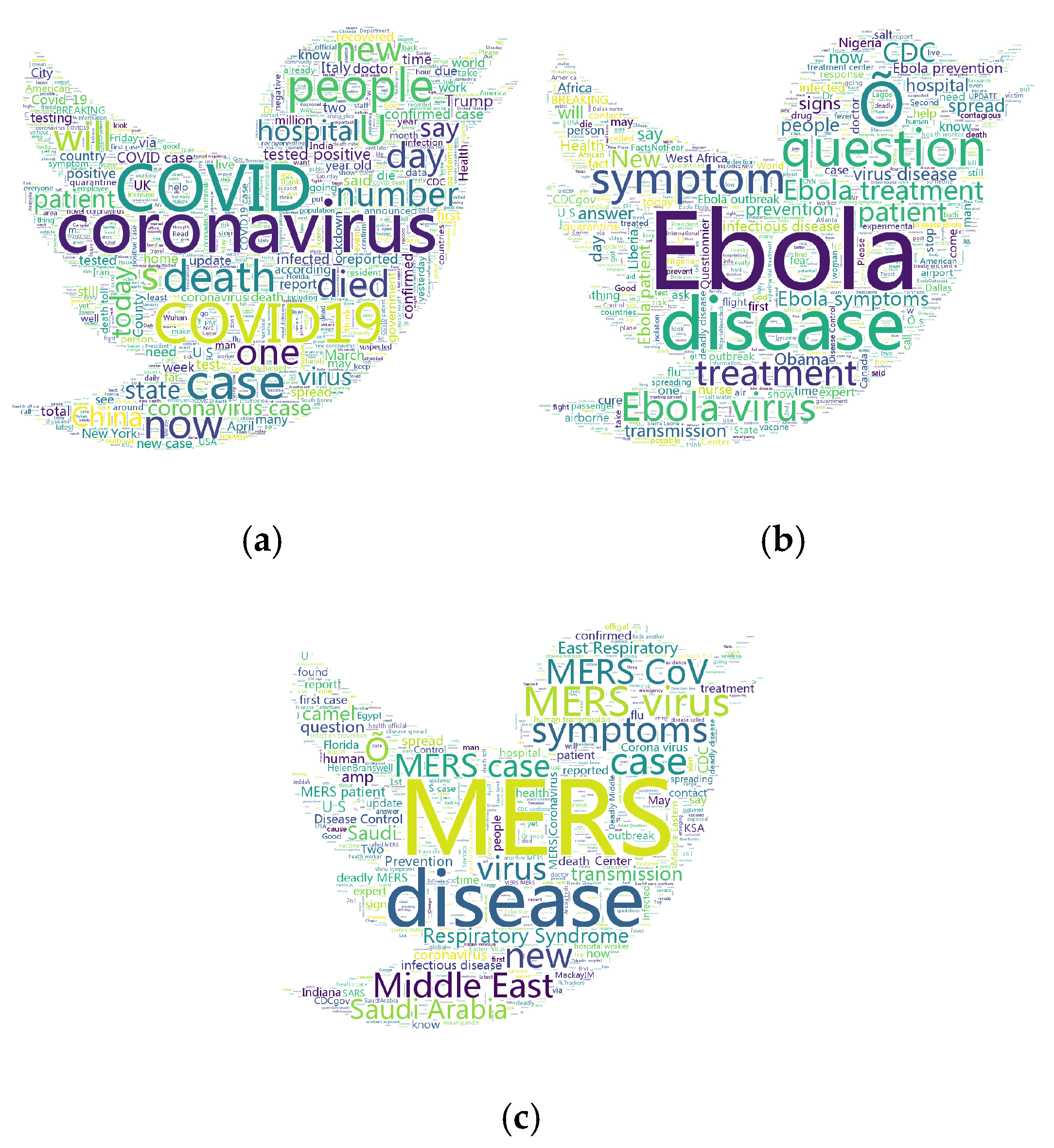

Considering the different text characteristics in different domains, we visualized the word clouds of specific domains, as shown in Figure 4. The word cloud display clearly shows that Twitter significantly emphasizes the themes and locations of all emergencies. For the COVID-19 event, words such as the number of people, death, and COVID-19 appear frequently in the word cloud. In the Ebola tweets, Ebola is the most frequently occurring word. Meanwhile, words such as disease, treatment, and symptoms are also very common. Regarding Middle East Respiratory Syndrome, MERS is one of the most frequently occurring words, and words such as the Middle East, Saudi Arabia, and disease are also prominent in the vocabulary. The word cloud shows obvious differences between texts in different domains. When identifying informative messages across multiple domains, it is crucial to consider knowledge transfer in the text.

As shown in Figure 5, the t-SNE visualization results illustrate the distribution of tweet texts from three public health events (i.e., COVID-19, Worldwide Ebola, and Middle East Respiratory Syndrome) in the semantic space, where blue nodes represent COVID-19, orange nodes represent Ebola, and cyan nodes represent Middle East Respiratory Syndrome. It can be clearly observed that tweets from different events form relatively distinct clustering structures in the embedding space, reflecting significant differences in language expression, thematic content, and user focus across different event domains. This cross-event distribution inconsistency (i.e., domain shift) indicates strong event dependency in text features, further confirming the challenge of achieving robust generalization in cross-domain informative message detection tasks when relying solely on single-event training models.

4.2. Experimental Settings

For each detection task, we split the dataset into training, validation, and test sets with a ratio of . The splits were randomly sampled but fixed across all runs to ensure consistency and comparability. Additionally, all target domain data was strictly held out during training to prevent data leakage and maintain the integrity of the transfer learning evaluation. In the LCDAN parameter settings, the dropout rate is set to 0.3 to prevent overfitting. The training batch size is 32, and the learning rate is . We used metrics commonly used in classification tasks, including accuracy, precision, recall, and F1-score.
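For reference, the four reported metrics can be computed from predictions as in the sketch below, which treats "informative" (label 1) as the positive class. Whether the paper reports positive-class, macro, or weighted averages is not stated here, so this choice is an assumption, and the example labels are made up.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical ground truth and predictions for five tweets.
scores = binary_metrics([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
```

The guards against empty denominators return 0.0 when a class is never predicted or never present, which avoids division errors on small or skewed test sets.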

We selected baseline models from different categories, including traditional feature extraction-based models, deep learning models, and deep domain adaptation models, to verify the effectiveness of the model.

4.2.1. Text Topic Model

To compare models, we initially selected topic modeling, a widely adopted and interpretable approach for short text classification. Specifically, we chose BERTopic [32], the prevalent model in this category, as our baseline for comparison.

BERTopic is a topic modeling tool based on BERT embeddings and clustering, extracting document topics through Transformer models. It integrates HDBSCAN clustering and c-TF-IDF to automatically discover topics, making it suitable for analyzing dynamic and diverse text data.

4.2.2. Deep Learning-Based Text Encoder

The proposed model was compared with traditional deep learning models, including Text Convolutional Neural Network (Text-CNN), Attention-based Recurrent Neural Network (Att-RNN), and Bidirectional Long Short-Term Memory (Bi-LSTM).

- (a) Text-CNN is a deep learning model widely applied to various NLP tasks. For text classification tasks, Text-CNN utilizes convolutional neural networks to extract relevant features from text data automatically.

- (b) Att-RNN is an extension of the standard RNN, incorporating an attention mechanism. This enhancement enables the model to more effectively capture dependencies, handle variable-length sequences, and provide interpretability. Additionally, a sigmoid function in the fully connected layer is used to predict the informativeness of content.

- (c) Bi-LSTM offers significant advantages over LSTM in capturing bidirectional context and long-range dependencies, which is crucial for understanding text sequences. These advantages make Bi-LSTM a preferred choice for many NLP tasks where contextual understanding is critical.

4.2.3. Pretrained Models

We selected several widely used pretrained text models for fine-tuning on the information recognition task. The pretrained models included in the comparison are RoBERTa [33] and BERTweet [34].

- (a) RoBERTa is an optimized NLP model that enhances BERT’s pretraining performance through larger-scale data, longer sequences, dynamic masking, and hyperparameter tuning.

- (b) BERTweet, a model based on RoBERTa, is optimized for Twitter data, pretrained on a large corpus of tweets, and excels at handling short sentences and non-standard language in social media text.

4.2.4. Deep Domain Adaptation Models

The deep domain adaptation models compared in this study include EANN [23], Margin Disparity Discrepancy (MDD) [35], and BDANN [26]. Details of these models are provided below.

- (a) Margin Disparity Discrepancy (MDD) is a technique used in domain adaptation and machine learning, primarily designed to address domain shift issues. MDD aims to reduce distribution differences between source and target domains in domain adaptation scenarios. This is achieved by explicitly minimizing the disparity in decision boundaries between source and target domain data points.

- (b) Event Adversarial Neural Network (EANN): A multimodal feature extractor, an event discriminator, and a fake news detector are jointly trained for multimodal fake news detection. As this study focuses on the text modality, the visual modality feature extraction component was removed, and the model is denoted as EANN-text.

- (c) BDANN: A BERT-based domain adaptation neural network, which eliminates event-specific dependencies through a feature extractor, a domain classifier, and a fake news detector, used for fake news detection. Similarly, the visual modality feature extraction component was removed, and the model is denoted as BDANN-text.

4.3. Informative Message Detection Results

Table 4 and Table 5 present the experimental results of the baseline models and the proposed method across six tasks. It is evident that the proposed method significantly outperforms the baseline methods in all evaluated tasks. For instance, in the

task, the proposed method achieved an accuracy of 0.914 and an F1 score of 0.911, surpassing all other baseline methods. Similarly, in other tasks, the method demonstrates consistently excellent performance. However, among the transfer tasks, the

task exhibits relatively poorer performance compared to others, likely attributable to greater disparities between the source and target domains in this specific task.

Although Text-CNN can extract local features, its performance is marginally lower than that of the proposed method, which integrates both local and global feature representations. The proposed method also outperforms memory-based neural networks such as Bi-LSTM and Att-RNN on short text data associated with public health events. While Bi-LSTM and Att-RNN are effective at modeling sequential dependencies, they handle short, event-driven text less well, likely because tweets related to public health events are typically short. Additionally, LCDAN outperforms MDD, EANN-text, and BDANN-text, suggesting that using label confusion to dynamically weight samples enables finer-grained learning at the sample level and is effective for informative message detection.

In conclusion, the proposed method exhibits substantial performance improvements in detecting effective information related to public health events, thereby confirming its effectiveness in cross-domain transfer scenarios.

4.4. Ablation Study

To clearly illustrate the roles of the feature extractor

, domain discriminator

, and label confusion distribution

, an ablation study was conducted for the six detection tasks. As shown in

Table 6,

denotes the LCDAN without the label confusion distribution, and

denotes the LCDAN without the domain discriminator. When

is removed, the importance of each source tweet to the target event cannot be obtained. Furthermore, when

is removed alone,

cannot optimize the model to reduce distribution differences between events, rendering the model equivalent to a non-transfer learning method (BERT).

Compared to the LCDAN, removal of the feature extractor results in an average accuracy decrease of 20.92%, confirming the effectiveness of the feature extractor. In the task, the accuracy drops to 0.591, highlighting the critical role of this module in extracting deep semantic features across domains. The removal of the feature extractor has a particularly large impact on tasks requiring fine-grained feature representations; for instance, in the COVID-19 to MERS task, the accuracy decreases from 0.854 to 0.631, a relative reduction of 26.11%. This may be attributed to the diverse linguistic patterns in public health event texts, which the feature extractor captures effectively.

Removal of the domain discriminator results in an average accuracy decrease of 5.75% compared to the LCDAN, indicating that knowledge transfer significantly enhances the detection performance of deep models on new data. In the task, the accuracy decreases from 0.867 to 0.739, a significant reduction, highlighting the critical role of the domain discriminator in aligning feature distributions between source and target domains, particularly in tasks with significant domain distribution differences. In tasks with smaller domain differences, such as , the impact is relatively minor, with the accuracy decreasing from 0.897 to 0.884, indicating that the impact of this module is positively correlated with the degree of domain differences.
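As a quick check on the figures quoted in the ablation paragraphs above, the sketch below computes absolute and relative accuracy drops for the accuracy pairs given in the text. The helper name `relative_drop` and the dictionary labels are illustrative; the text does not state whether the reported averages (20.92% and 5.75%) are relative or absolute, so only the per-task pairs are used here.

```python
def relative_drop(before: float, after: float) -> float:
    """Relative decrease from `before` to `after`, as a percentage."""
    return (before - after) / before * 100.0


# Accuracy pairs quoted in the ablation text (full model, ablated model).
quoted = {
    "feature extractor removed (COVID-19 to MERS)": (0.854, 0.631),
    "domain discriminator removed (larger domain gap)": (0.867, 0.739),
    "domain discriminator removed (smaller domain gap)": (0.897, 0.884),
}

for name, (full, ablated) in quoted.items():
    absolute = full - ablated
    relative = relative_drop(full, ablated)
    print(f"{name}: -{absolute:.3f} absolute, -{relative:.2f}% relative")
```

The first pair reproduces the 26.11% reduction stated for the COVID-19 to MERS task, which indicates that this figure is a relative rather than an absolute decrease.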

4.5. Parameter Sensitivity

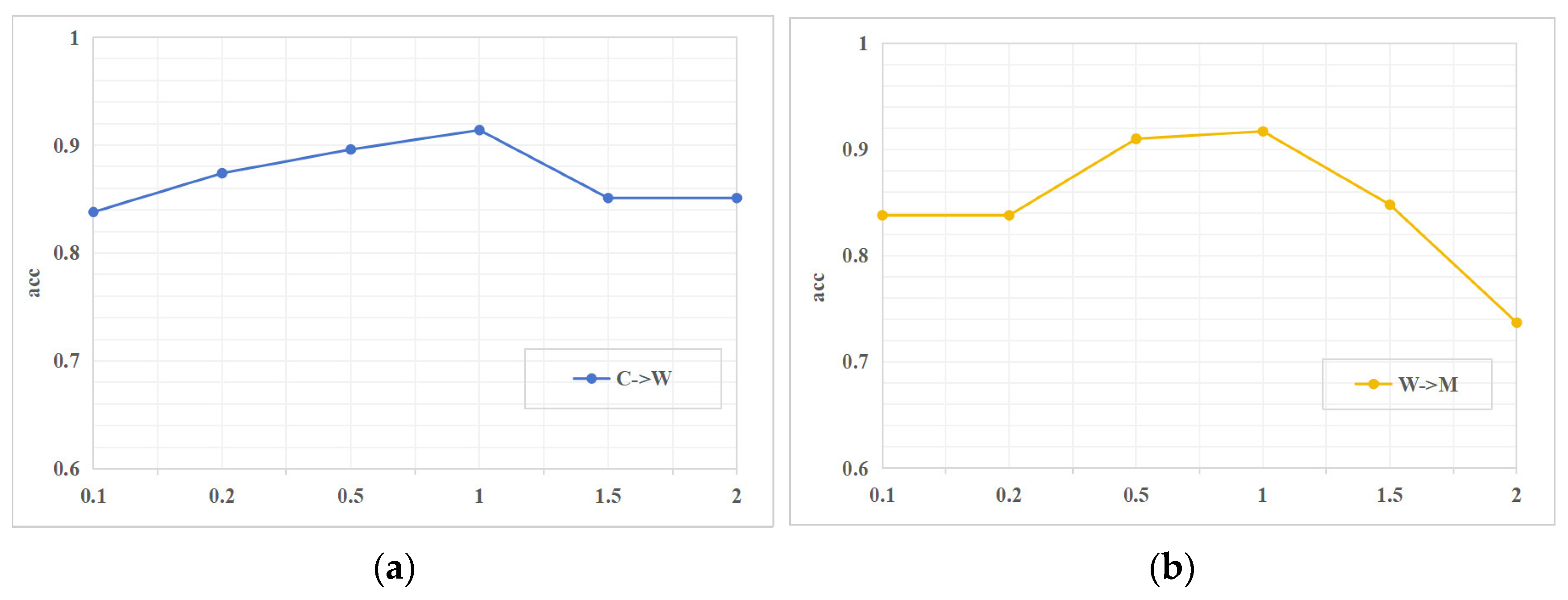

The hyperparameter

controls the trade-off between loss terms. To investigate parameter sensitivity, experiments were conducted on two selected tasks. The experimental results for varying

in the set

are shown in

Figure 6.

The model achieves optimal performance when , effectively balancing task-specific classification and domain alignment, thereby enhancing cross-domain generalization capability. Deviating from this value degrades performance: when , accuracy decreases rapidly. This trend indicates that an excessively large causes the model to overemphasize domain feature alignment, neglecting task-specific classification requirements. Conversely, a smaller also impairs performance, though the impact is less severe. This suggests that insufficient domain adaptation leaves residual distribution differences, limiting the effectiveness of transfer learning. The accuracy exhibits an inverted U-shaped curve with respect to , with as the peak, indicating optimal coordination between classification and domain alignment objectives at this point.

The response of different tasks to variations in further reveals its impact. In the task , the accuracy decreases from 0.914 at to 0.851 at , indicating high sensitivity to excessive alignment. In contrast, the task exhibits greater robustness, with accuracy only slightly decreasing from 0.917 at to 0.910 at . This difference may stem from domain similarity, where tasks with closer domains exhibit higher tolerance to variations in , whereas tasks with greater domain differences require more precise hyperparameter tuning.
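One way to picture the trade-off discussed above is to assume the common additive form of a two-term objective, in which the alignment term is scaled by the trade-off coefficient before being added to the classification term. The sketch below is written under that assumption and is not the exact objective of the LCDAN; the names `combined_objective`, `alignment_share`, and `trade_off`, the loss values, and the coefficient values are all illustrative placeholders rather than settings or results from this study.

```python
def combined_objective(classification_loss, alignment_loss, trade_off):
    """Assumed additive objective: classification term plus a weighted alignment term."""
    return classification_loss + trade_off * alignment_loss


def alignment_share(classification_loss, alignment_loss, trade_off):
    """Fraction of the combined objective contributed by the alignment term."""
    weighted = trade_off * alignment_loss
    return weighted / (classification_loss + weighted)


# Placeholder loss values and coefficients, not the grid used in the experiments.
for trade_off in (0.1, 1.0, 10.0):
    total = combined_objective(0.5, 0.5, trade_off)
    share = alignment_share(0.5, 0.5, trade_off)
    print(f"trade_off={trade_off}: total={total:.2f}, alignment share={share:.2f}")
```

Under this assumption, a large coefficient lets the alignment term dominate the objective, while a small one leaves it with little influence, which is consistent with the two failure modes described in the sensitivity analysis.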

4.6. Case Analysis

We selected the EANN-text model for comparison with the LCDAN to analyze misclassified samples. The main errors of EANN-text stemmed from over-reliance on event-specific features at the expense of the overall semantics of the text, which led to misjudgments. For example, the sample “I’ve been thinking. Maybe it would be a good idea for the CDC to focus on disease prevention instead of bike paths” was misjudged as informative by EANN-text because it contained “cdc” and “disease prevention”, although it is in fact an expression of opinion. The LCDAN reduced the weight of such opinion-type texts through the label confusion mechanism and correctly identified the sample as non-informative. Conversely, the sample “Via @NatureNews: #Ebola by numbers: 1 person infected usually spreads the disease to 1–2 people”, which reports factual data, was misjudged as non-informative by EANN-text, reflecting its insufficient semantic understanding. In contrast, the model proposed in this paper introduces label confusion and sample weighting mechanisms, which dynamically adjust the training weights of samples according to their label distribution and semantic features, thereby improving recognition accuracy. By modeling the label distribution and semantic consistency, this mechanism reduced the model's reliance on single keywords and better captured the context and factual content. For both EANN-text misjudgments above, the proposed method classified the sample correctly.

To further explore the limitations of the LCDAN in public health event information detection, we analyzed the misclassified samples in the task and found that the main errors were concentrated in three scenarios: non-standard expressions with complex semantics, keyword misguidance, and cross-domain semantic differences. In texts related to public health events, non-standard expressions (such as irony and metaphor) increased the difficulty of semantic understanding, making it hard for the model to distinguish accurately between informative and non-informative content. For example, the humorous mention of “Ebola” in the sample “Easiest way to dump your girlfriend is to tell the police she has Ebola-like symptoms” was misjudged as informative. In addition, the model was sensitive to public health keywords (such as “disease”) and ignored their context, resulting in non-informative texts being misclassified as informative. For example, “Review: The End of Plagues: The Global Battle against Infectious Disease by John Rhodes” was misjudged as informative because it contained “infectious disease”, while the semantics of “review” were ignored. Although LCDAN performed well in most tasks, addressing non-standard expressions and domain differences will require further optimization, such as enhancing context modeling, introducing sentiment analysis, and expanding multilingual training data, to improve its robustness and adaptability.

By effectively detecting informative messages on social media, this study provides a valuable tool for public health event management. With the detected informative messages, the government or public health institutions can monitor the development trend of events in real time, issue early warning information in a timely manner, and prevent the spread of misleading information. Simultaneously, aggregating and analyzing the detected informative messages can provide decision makers with information from multiple perspectives and help formulate response strategies.

5. Discussion

Although LCDAN shows strong potential for cross-domain applications, it still faces several challenges and limitations. First, although the proposed application of label confusion techniques in domain adaptation for public health event detection has demonstrated effectiveness, future work can further explore advanced label confusion strategies to enhance domain adaptability. Second, the current evaluation is primarily based on three infectious disease outbreaks—COVID-19, Ebola, and MERS—due to the limited availability of other high-quality annotated public health datasets. In future research, once suitable datasets become available, we will seek to incorporate additional public health scenarios to assess the generalizability of LCDAN across diverse event types comprehensively.

Automatic information detection in health crises presents ethical challenges. The system may misclassify non-informative content as critical, or amplify unverified information, potentially impacting public perception and decision-making. Therefore, it is necessary to incorporate validation mechanisms to mitigate such risks. Additionally, practical deployment of such systems requires careful handling of personal data, adherence to privacy regulations, and effective integration with existing public health systems to provide timely and dependable support for emergency decision-making and resource coordination.