The real-time 3D sensor data visualization system proposed in this study aims to represent diverse sensor information collected in digital twin environments intuitively and reliably. To achieve this, the system is built on the Unity3D game engine and leverages the GPU’s parallel processing capabilities to process large-scale 3D models and large volumes of sensor data in real time. This section describes the following: the system architecture and its components; GPU-based real-time sensor data interpolation; enhancement of physical representation accuracy using geodesic distance; and advanced rendering techniques for improving visual fidelity.

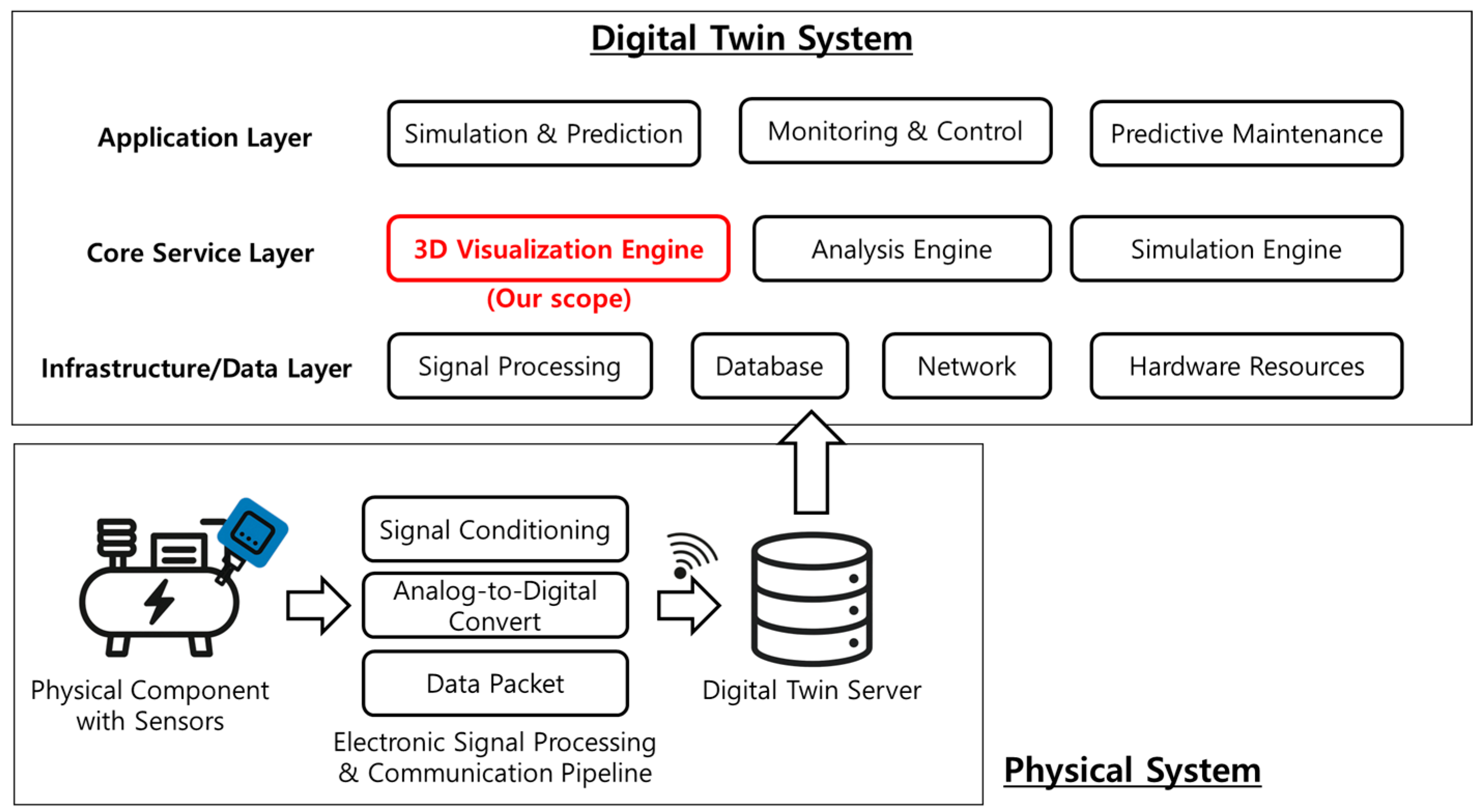

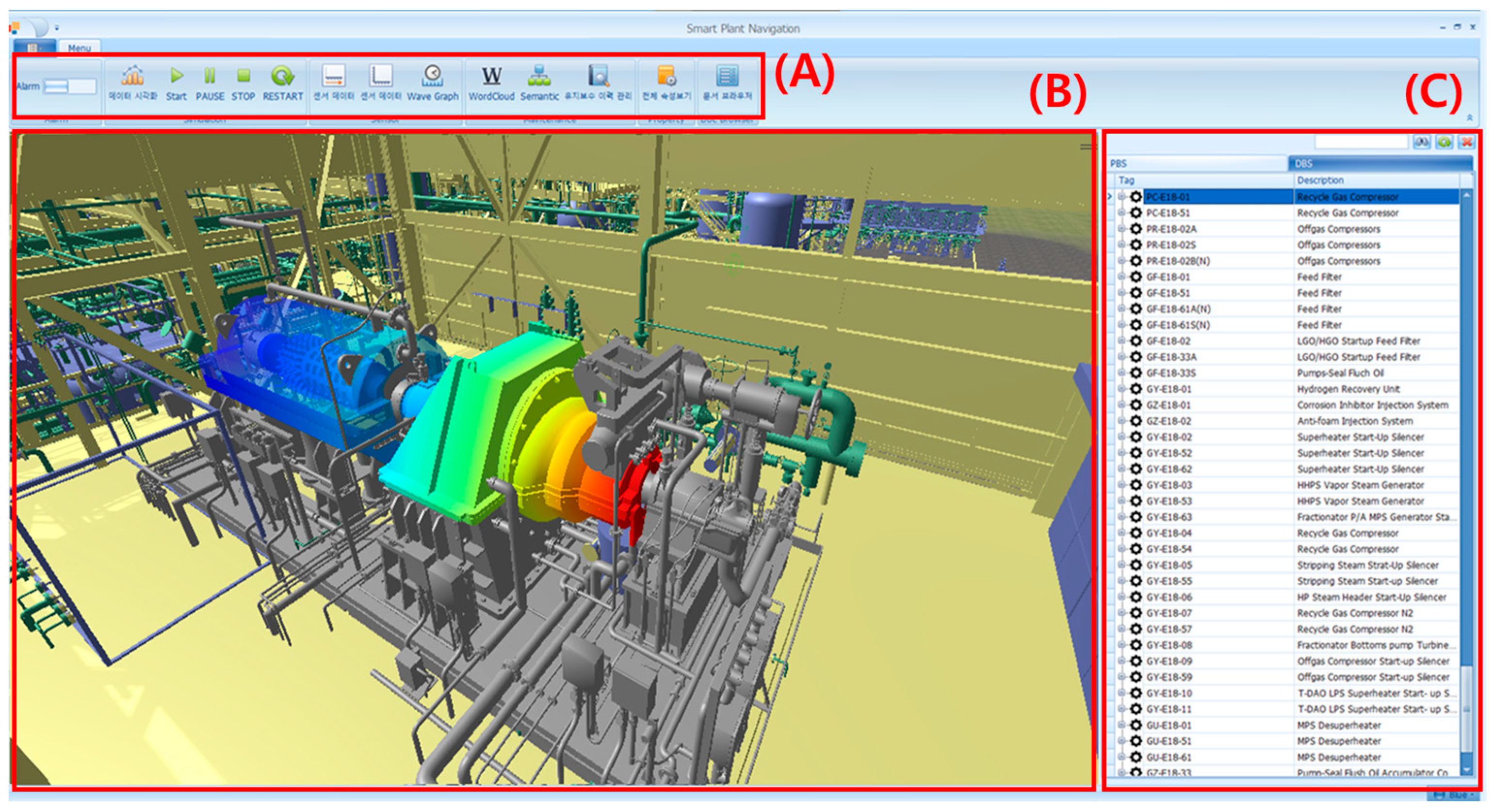

3.1. System Architecture

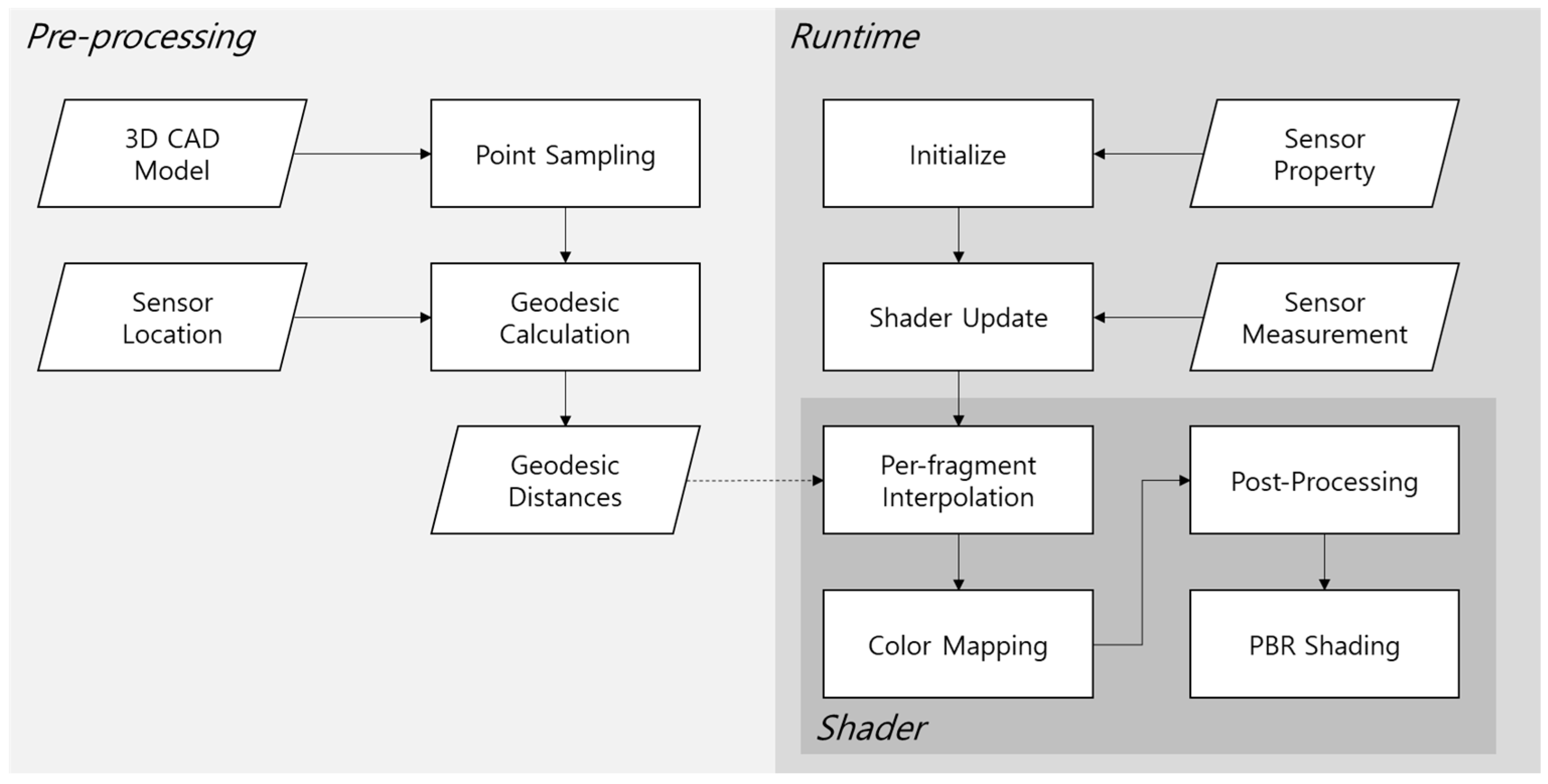

The overall processing flow of the proposed system is illustrated in Figure 2. The system inputs are 3D CAD model information and sensor information (including sensor installation locations, attributes of each sensor, and sensor measurements). The output is an image representing the current state of the actual facility where sensors are installed, mapped as colors onto the 3D CAD model. The proposed system can generate images that reflect real-time changes in sensor measurements. In typical computer graphics applications, such images are generated in real time by shaders operating on the GPU, and in this study, the core proposed algorithms are also implemented as shader programs.

First, the pre-processing stage involves the pre-calculation of geodesic distances for the geodesic distance interpolation function based on the 3D CAD model and sensor locations. The method proposed in this study utilizes the distance between locations on the 3D CAD model surface and the sensors as key information for interpolation. For distance calculation, a method allowing the selective use of either Euclidean distance or geodesic distance was chosen. Because the real-time calculation of geodesic distance is difficult, an approach of pre-calculating these distances was adopted; further details are provided in Section 3.3.

In the runtime visualization stage, the information required for program execution is first initialized. During this process, the attributes of each sensor are configured, including the maximum and minimum measurable values for each sensor and the sensor attenuation parameters. These values are used by the interpolation algorithm. Once measurements begin to arrive from the actual sensors, the sensor information is aggregated in the Shader Update component and then transmitted to the shaders running on the GPU.

Within the shaders, based on this information, sensor measurement values for each visible surface of the 3D CAD model are first calculated via per-fragment interpolation. Subsequently, the color value for that surface is determined through color mapping based on the calculated measurement. Depending on user selection, operations for ramp shading and additional post-processing are performed. The final computed color is then used as the Albedo value for the PBR (Physically Based Rendering) Shader and is displayed on the screen through the remaining PBR pipeline of the Unity3D engine, which was utilized for the experiments in this study. The per-fragment interpolation method, which is central to the proposed approach, is detailed in Section 3.2.

3.2. GPU-Based Real-Time Sensor Data Interpolation

As previously mentioned, this study aims to propose a system for digital twins that enables the real-time observation of an entire object’s state based on discrete data acquired from a few sensors installed at specific locations. In existing digital twin monitoring systems, sensor measurements for such state monitoring are typically displayed as 2D graphs. This approach imposes an additional cognitive load on users attempting to understand the object’s state within a spatial context, leading to difficulties in intuitively perceiving the states of multiple objects. To aid in understanding an object’s state in a spatial context, another common method involves using engineering simulation tools to generate and then visualize the state of the entire 3D CAD model through simulation. However, this method has the disadvantage of being inapplicable to real-time monitoring processes, as simulations can take from several minutes to hours.

To address these issues, this study designs a method to estimate the state of the entire 3D CAD model in real-time through interpolation based on discrete sensor data. The proposed interpolation algorithm is illustrated below in Algorithm 1:

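Algorithm 1. Fragment shading algorithm for sensor group visualization

Input: 3D mesh $M$, camera $V$, and sensor group $S = \{(p_i, v_i, s_i)\}_{i=1}^{N}$ with decay control parameter $k$, where $p_i$ denotes the world coordinate, $v_i$ denotes the measured value, and $s_i$ denotes the strength of each sensor. The sensor group shares the minimum and maximum values it can measure, $v_{\min}$ and $v_{\max}$.

Output: $C = \{c_x\}$, where $c_x$ denotes the color of each pixel

$X \leftarrow$ world space coordinates of the pixels of $M$ visible from $V$, $C \leftarrow \emptyset$

for $i$ in $S$ do

normalize $v_i$ to $[0, 1]$ using $v_{\min}$ and $v_{\max}$

end for

for $x$ in $X$ do (parallel calculation)

for $i$ in $S$ do

compute the influence weight $w_i$ of sensor $i$ at $x$ (Equation (1)) and add its contribution to the interpolated value $v$ (Equation (2))

end for

$c_x \leftarrow$ color mapped from $v$

end for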

We introduce the concept of a “sensor group,” defined as a collection of sensors that measure the same physical quantity and share common specifications. For the purpose of normalization within our algorithm, the shared minimum and maximum values from these specifications are used as an absolute reference to map each sensor’s reading to a [0, 1] range. To ensure a clear and unambiguous visualization, our system is designed to process and display one sensor group at a time.
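The normalization step can be sketched as follows. This is a minimal illustration, not the authors' shader code: the function name `normalize_reading`, the 0 to 100 range, and the clamping of out-of-range readings are assumptions made for the example.

```python
def normalize_reading(value, v_min, v_max):
    """Map a raw sensor reading to [0, 1] using the group's shared range."""
    if v_max <= v_min:
        raise ValueError("v_max must be greater than v_min")
    t = (value - v_min) / (v_max - v_min)
    # Assumption: clamp so that out-of-range readings still map into [0, 1].
    return min(1.0, max(0.0, t))


# Hypothetical temperature sensor group sharing a 0-100 degree range.
readings = [80.0, 30.0, 120.0]
normalized = [normalize_reading(r, 0.0, 100.0) for r in readings]
```

Because the range is shared across the group, two sensors reporting the same raw value always map to the same normalized value, and therefore to the same color.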

A 3D mesh model undergoes a transformation process to be rendered on the screen, typically through the model–view–projection transformation in a vertex shader. Subsequently, through the viewport transformation, the screen space coordinates (pixel coordinates) of each triangle constituting the 3D model are calculated. In Algorithm 1, $X$ signifies the world space coordinates of the pixels that represent a specific model; that is, each element is the coordinate value in 3D space of a pixel visible on the screen. The objective of the algorithm is to determine the set of color values, $C$, for these pixels. To achieve this, the algorithm proceeds through a two-stage calculation process.

The first stage is to calculate the influence weight ($w_i$) of each sensor for every pixel. The principle behind our weighting scheme is that the state at any point on a surface can be represented based on nearby sensor measurements. This approach assumes that sensors located closer to a given point provide a stronger contribution to its representation than those farther away. While various alternative weighting functions exist, and different choices may yield more physically plausible results for specific phenomena, this research adopts the softmax function (Equation (1)) as a representative method that embodies this core principle. Here, $N$ represents the total number of attached sensors. The term $d_i$ is the normalized inverse of the distance between the pixel’s world space coordinates and the $i$-th sensor. Consequently, if the distance between a sensor and a pixel is short, $d_i$ will have a value close to 1.0, and if the distance is large, its value will be close to 0.0. The strength parameter $s_i$ provides control over the influence of each sensor. A higher $s_i$ value results in the sensor exerting a stronger influence over a narrower area, whereas a lower strength value leads to a weaker influence spread over a wider area.

Once these sensors’ influence weights are calculated, they are used to compute the final interpolated sensor value, $v$. Again, various design choices exist for this calculation. In this study, we adopt Equation (2) as a representative method, which defines the contribution of each sensor and provides further controllability over the final representation.

This design ensures that pixel values at sensor locations strongly reflect their respective sensor readings, with values becoming attenuated as the distance increases. When multiple sensors are attached to an object, the influence of each sensor on a given pixel is controlled by the weights $w_i$. Furthermore, the decay control parameter $k$ allows users to adjust how quickly the visualized influence falls off with distance. A larger $k$ produces a sharper, more localized falloff, while a smaller $k$ results in a more gradual decay. For instance, if a measured property is known to be relatively uniform across a component, a user can select a small $k$. This creates a visual representation with a very slow decay, making the output more consistent with the expected physical behavior in that specific scenario.
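Equations (1) and (2) are not reproduced in this text. One form that is consistent with the description above (softmax weights over the per-sensor terms, followed by a distance-dependent attenuation controlled by the decay parameter) is given here as an assumption, not as the authors' exact definition. The symbols $w_i$, $d_i$, $s_i$, $v_i$, $k$, and $N$ follow the roles described in the text.

$$
w_i = \frac{\exp(s_i\, d_i)}{\sum_{j=1}^{N} \exp(s_j\, d_j)} \qquad (1)
$$

$$
v = \sum_{i=1}^{N} w_i \, v_i \, d_i^{\,k} \qquad (2)
$$

Under this form, a pixel located at a sensor ($d_i = 1$) receives that sensor's reading scaled only by its weight, and a larger $k$ shrinks $d_i^{\,k}$ faster as $d_i$ falls below 1, which matches the sharper falloff described for larger decay values.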

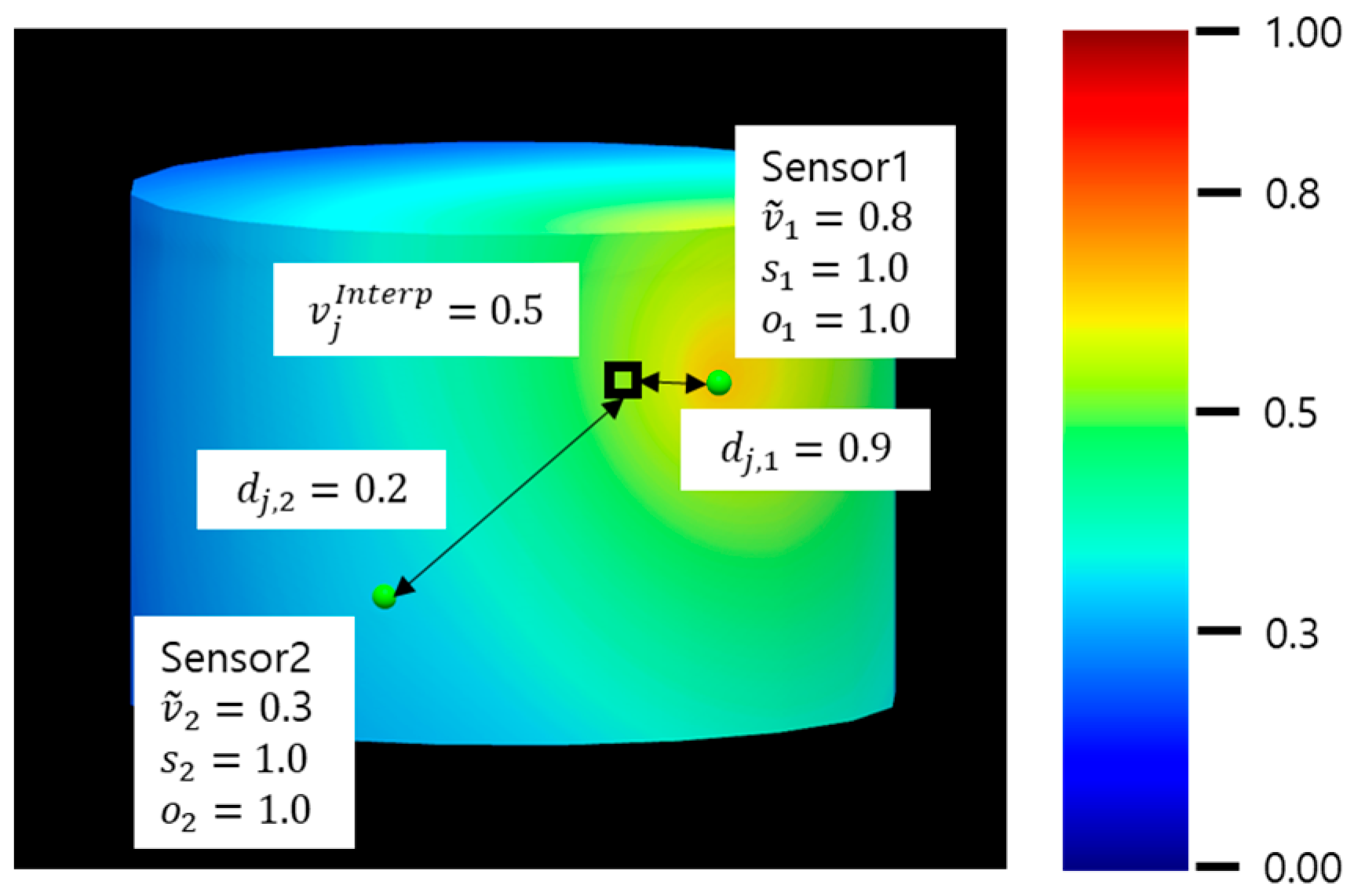

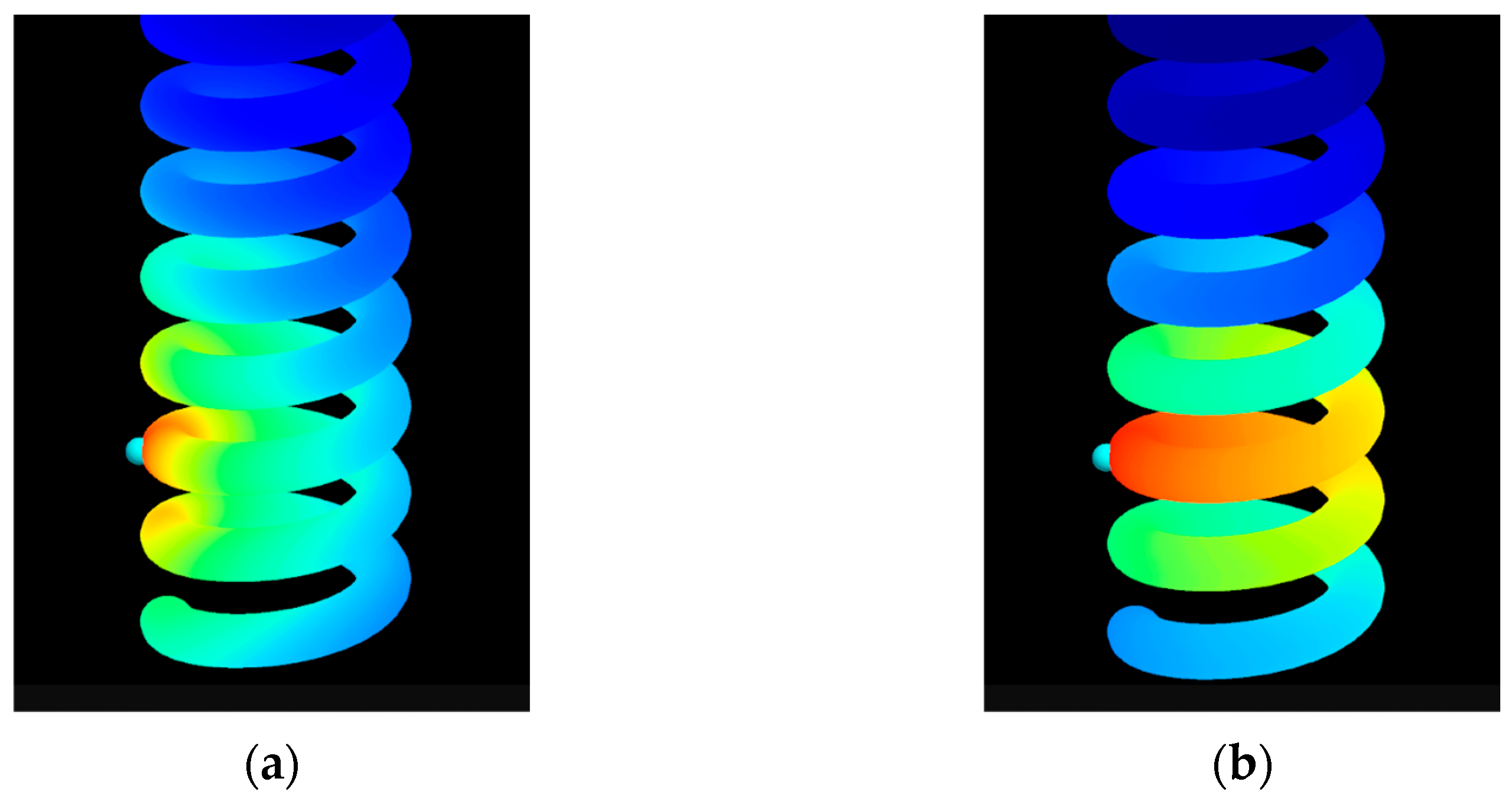

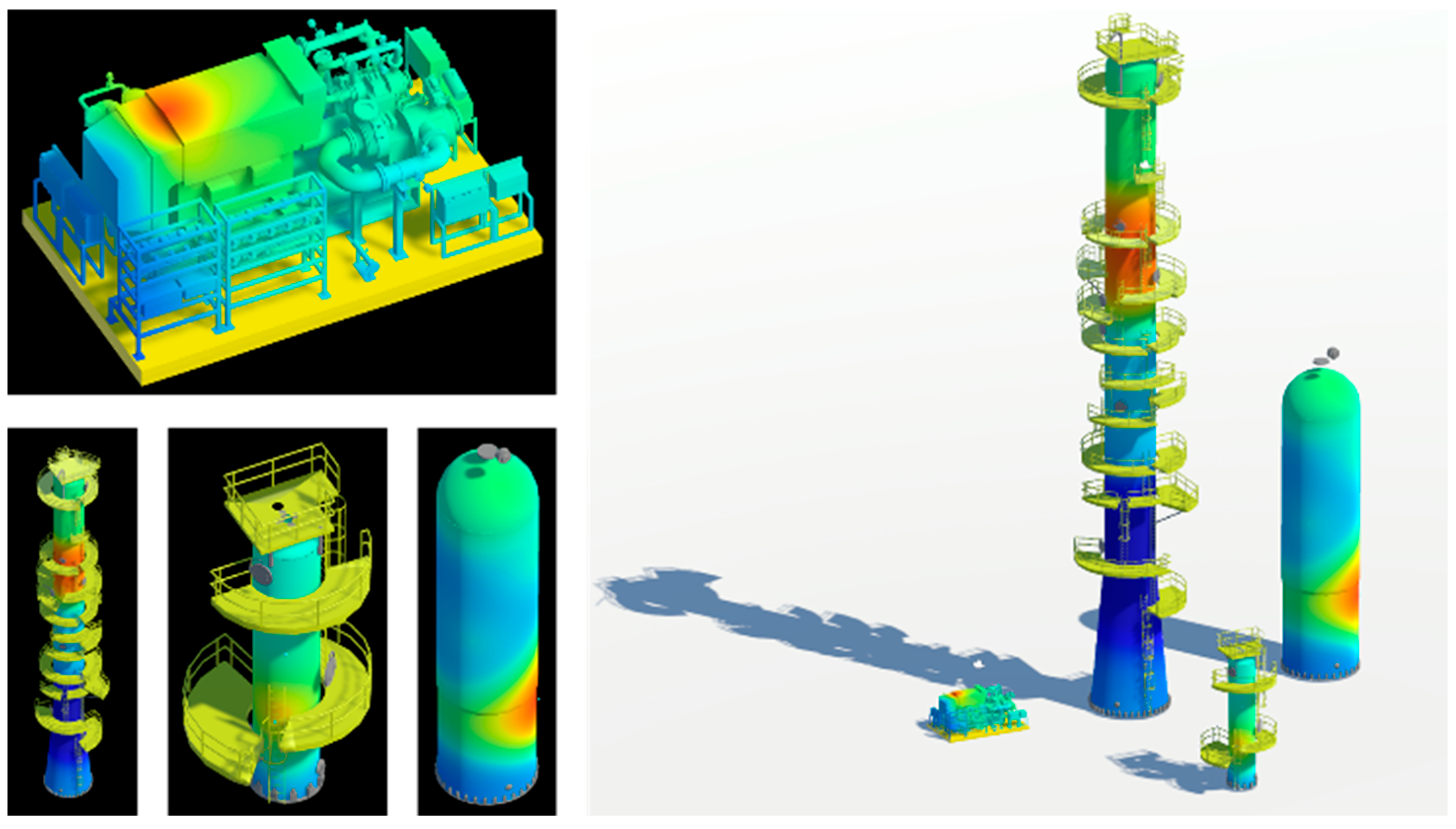

Ultimately, when these interpolated measurements are visualized on screen via color mapping, a result similar to Figure 3 is produced. In the provided example, sensor locations are indicated by green spheres. If we assume the normalized sensor readings are 0.8 and 0.3, and the distance values from a specific pixel (highlighted with a bold border) to these sensors are 0.9 and 0.2, respectively, the proposed method calculates an interpolated value of approximately 0.5 for that pixel. As shown in the figure, this demonstrates the attenuation effect based on sensor proximity.
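The arithmetic of this example can be checked with a short sketch. It assumes a particular form of the two stages (softmax weights over strength times distance term, then each reading scaled by its weight and by the distance term raised to the decay parameter), with strength 1.0 for both sensors and decay 1.0; these choices and the function name `interpolate` are assumptions for illustration, not values stated in the text.

```python
import math


def interpolate(values, d, strengths, k):
    """Blend normalized readings using softmax weights and distance decay.

    values    -- normalized sensor readings in [0, 1]
    d         -- per-sensor distance terms in [0, 1] (1 = at the sensor)
    strengths -- per-sensor strength parameters
    k         -- decay control parameter
    """
    logits = [s * di for s, di in zip(strengths, d)]
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Each sensor contributes its reading, scaled by its weight and by d**k.
    return sum(w * v * di ** k for w, v, di in zip(weights, values, d))


result = interpolate([0.8, 0.3], [0.9, 0.2], [1.0, 1.0], 1.0)
print(round(result, 2))  # prints 0.5
```

With these assumed parameters the nearer sensor receives a weight of about 0.67, so the pixel value is dominated by the 0.8 reading and then attenuated by its distance term.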

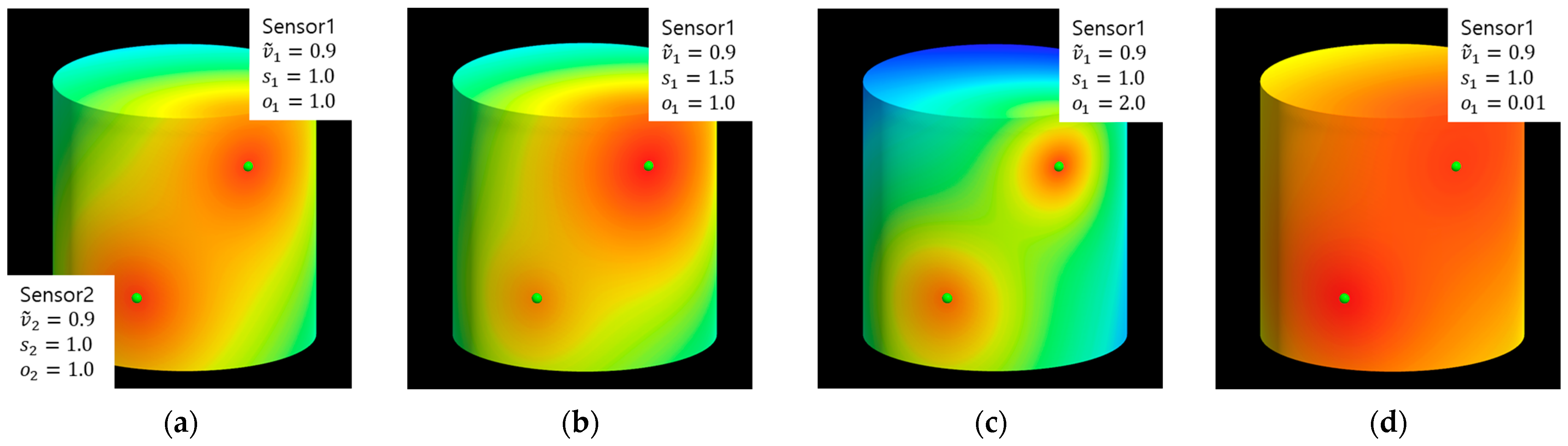

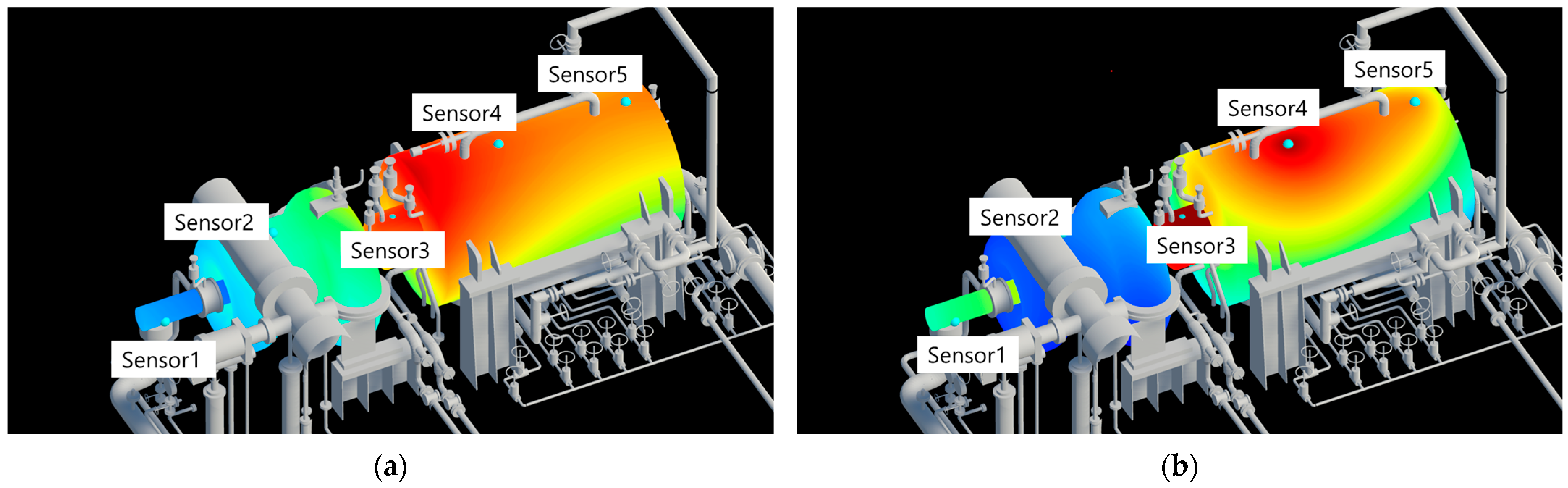

Figure 4 illustrates the effects of the strength ($s$) and decay control ($k$) parameters on the visualization for two sensors on the cylinder’s surface. As demonstrated, adjusting these parameters allows a user to generate a wide range of visual representations from the same sensor data. The determination of which representation is most ‘plausible’ is not absolute; it is dependent on the specific physical properties being measured and the characteristics of the component, such as its material. This highlights the primary objective of our system: to provide a controllable, real-time, and intuitive visualization tool, rather than a predictive simulation. Consequently, the selection of appropriate parameter values to best represent a given scenario is intended to be determined by a domain expert.

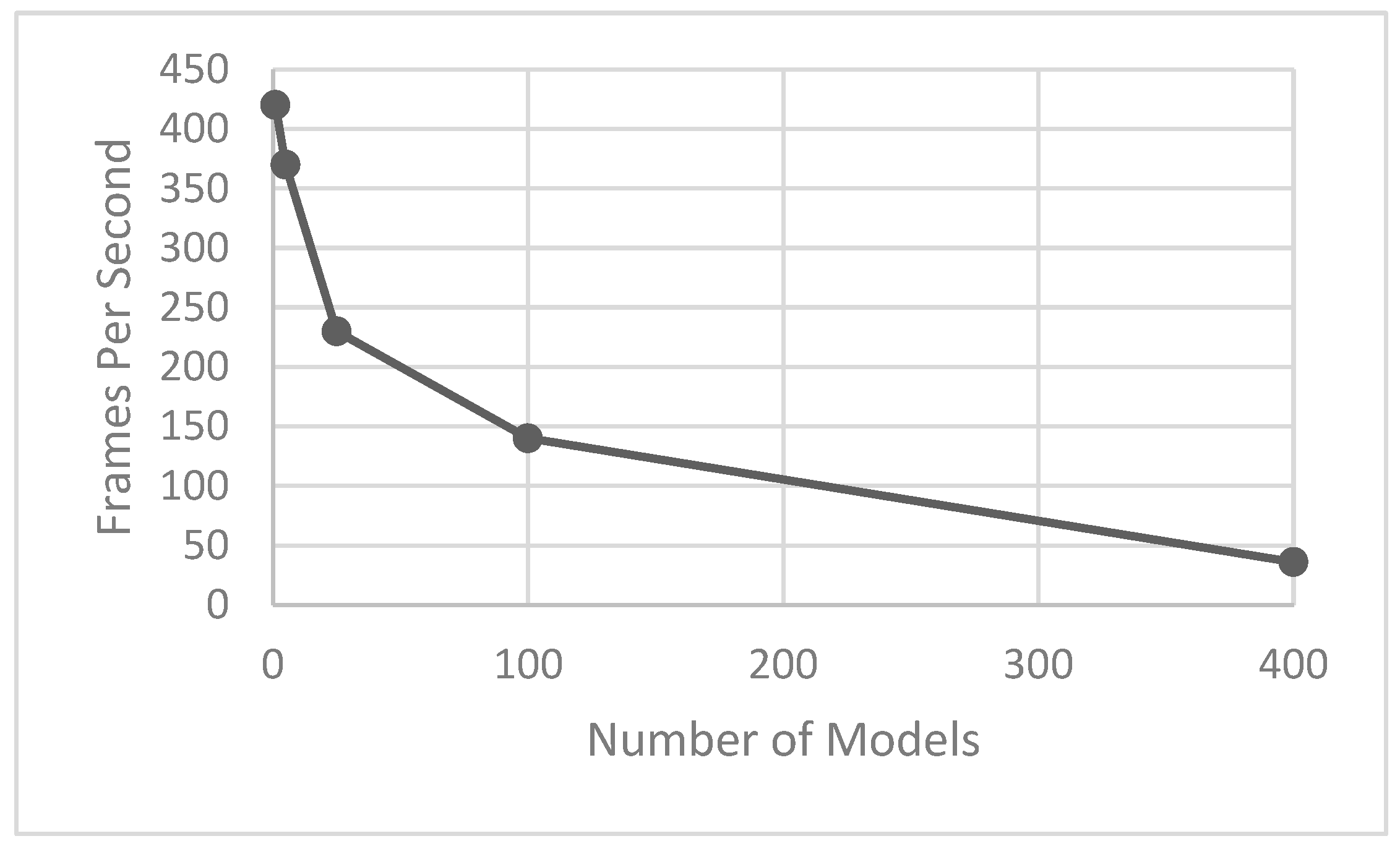

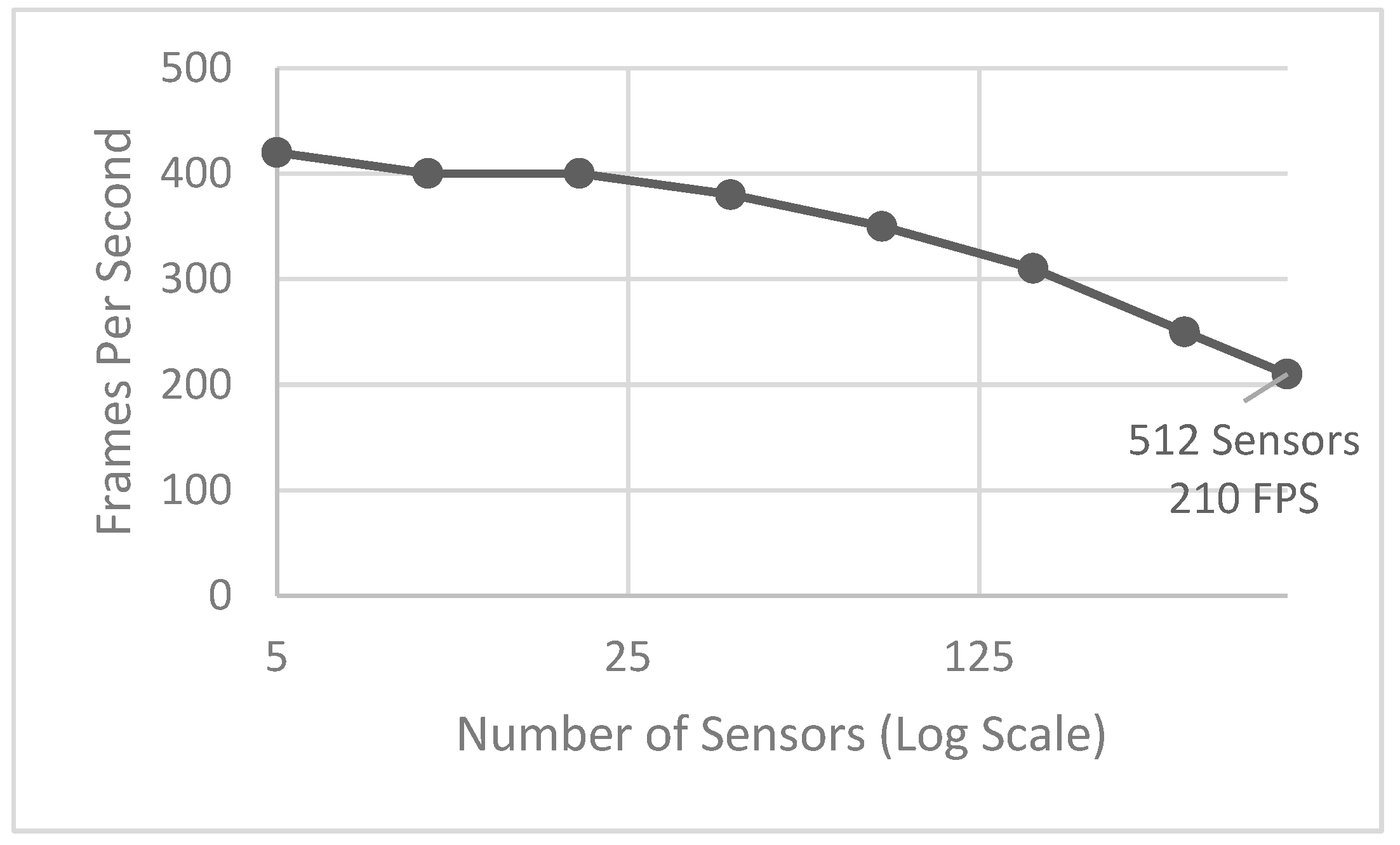

Since these calculations are implemented on the GPU via shaders, they can be performed in parallel for each pixel. This enables real-time computation for all pixels where the model is displayed. Ultimately, the color corresponding to the interpolated measurement value is sampled from a Colormap image, and this color is displayed on the screen. In Figure 3, for example, this process is executed in parallel not only for the highlighted pixel but for all visible pixels of the model, so that their colors are determined and displayed simultaneously. Because the GPU executes these computations in parallel at high speed, the system can immediately reflect dynamically changing sensor measurements.
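The colormap lookup can be sketched on the CPU as follows. This is only an illustration of the idea: the three-stop `COLORMAP`, the linear filtering between stops, and the function name `sample_colormap` are assumptions, and a shader would perform the equivalent texture sample once per fragment.

```python
def sample_colormap(colormap, t):
    """Linearly sample a 1D colormap (list of RGB tuples) at t in [0, 1]."""
    t = min(1.0, max(0.0, t))
    pos = t * (len(colormap) - 1)
    i = min(int(pos), len(colormap) - 2)  # index of the lower stop
    f = pos - i                           # blend factor toward the upper stop
    lo, hi = colormap[i], colormap[i + 1]
    return tuple((1.0 - f) * a + f * b for a, b in zip(lo, hi))


# Hypothetical three-stop colormap: blue -> green -> red.
COLORMAP = [(0.0, 0.0, 1.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]

# One interpolated value per visible pixel. Each lookup is independent of the
# others, which is what allows the GPU to evaluate all pixels in parallel.
pixel_values = [0.0, 0.25, 0.5, 1.0]
pixel_colors = [sample_colormap(COLORMAP, v) for v in pixel_values]
```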

3.3. Enhancing Physical Representativeness Using Geodesic Distance

Physical phenomena represented by sensor data, such as temperature distribution on a structure’s surface or stress propagation, often exhibit the characteristic of propagating along the object’s surface. In such cases, interpolating sensor value influences using geodesic distance—which represents the shortest path on the object’s surface—is more physically plausible than using Euclidean distance (a simple straight line between two points). This approach can significantly enhance the reliability of the visualization results.
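A simple case shows how far the two distances can diverge. For two points at the same height on a cylinder, the Euclidean distance is the chord through the interior, while the geodesic distance is the arc along the surface. The sketch below uses a hypothetical helper `chord_and_arc` and a unit radius purely for illustration.

```python
import math


def chord_and_arc(radius, angle):
    """Distances between two points at equal height on a cylinder.

    angle is the angular separation in radians, assumed to lie in [0, pi].
    Returns (euclidean, geodesic).
    """
    euclidean = 2.0 * radius * math.sin(angle / 2.0)  # straight chord
    geodesic = radius * angle                         # arc along the surface
    return euclidean, geodesic


# Diametrically opposite points on a cylinder of radius 1.
euclid, geo = chord_and_arc(1.0, math.pi)
```

For opposite points the surface path is longer than the chord by a factor of π/2, so an interpolation based on Euclidean distance would let a sensor influence the far side of the cylinder more strongly than surface propagation would suggest.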

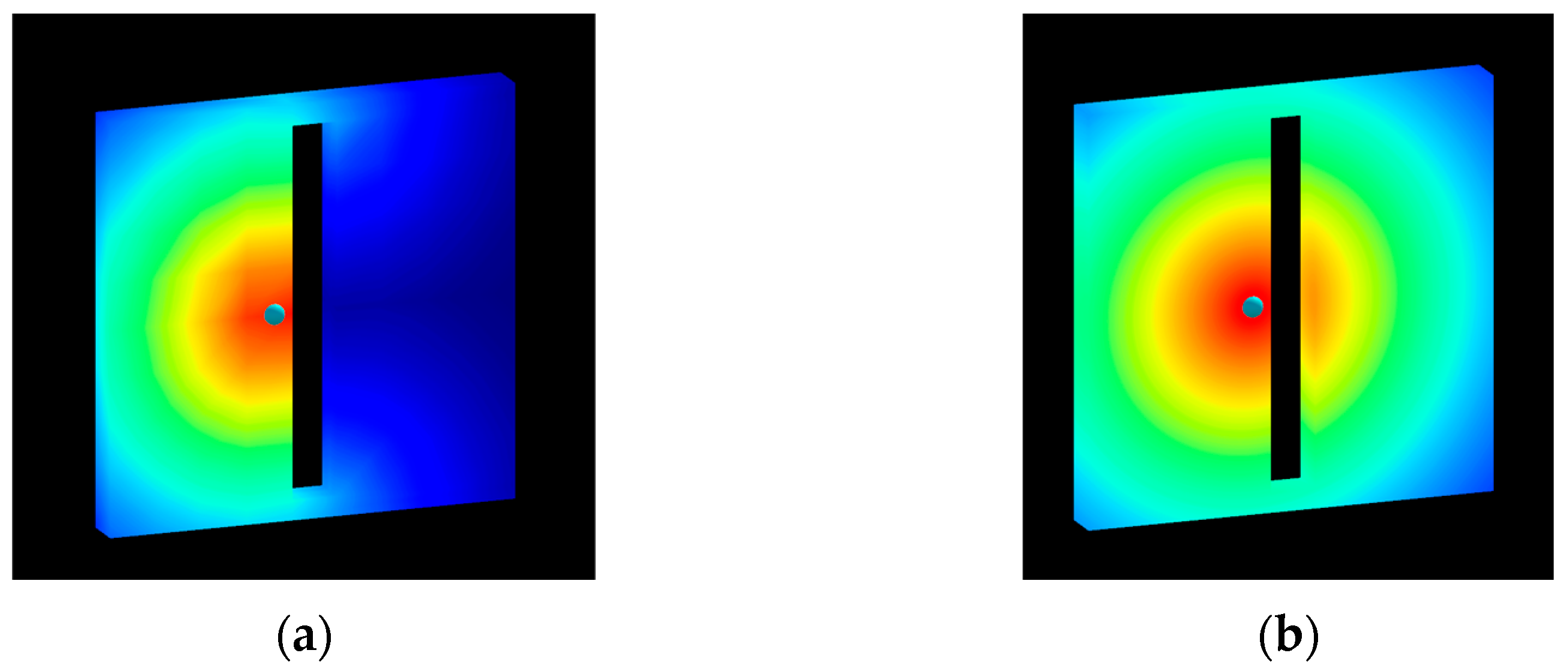

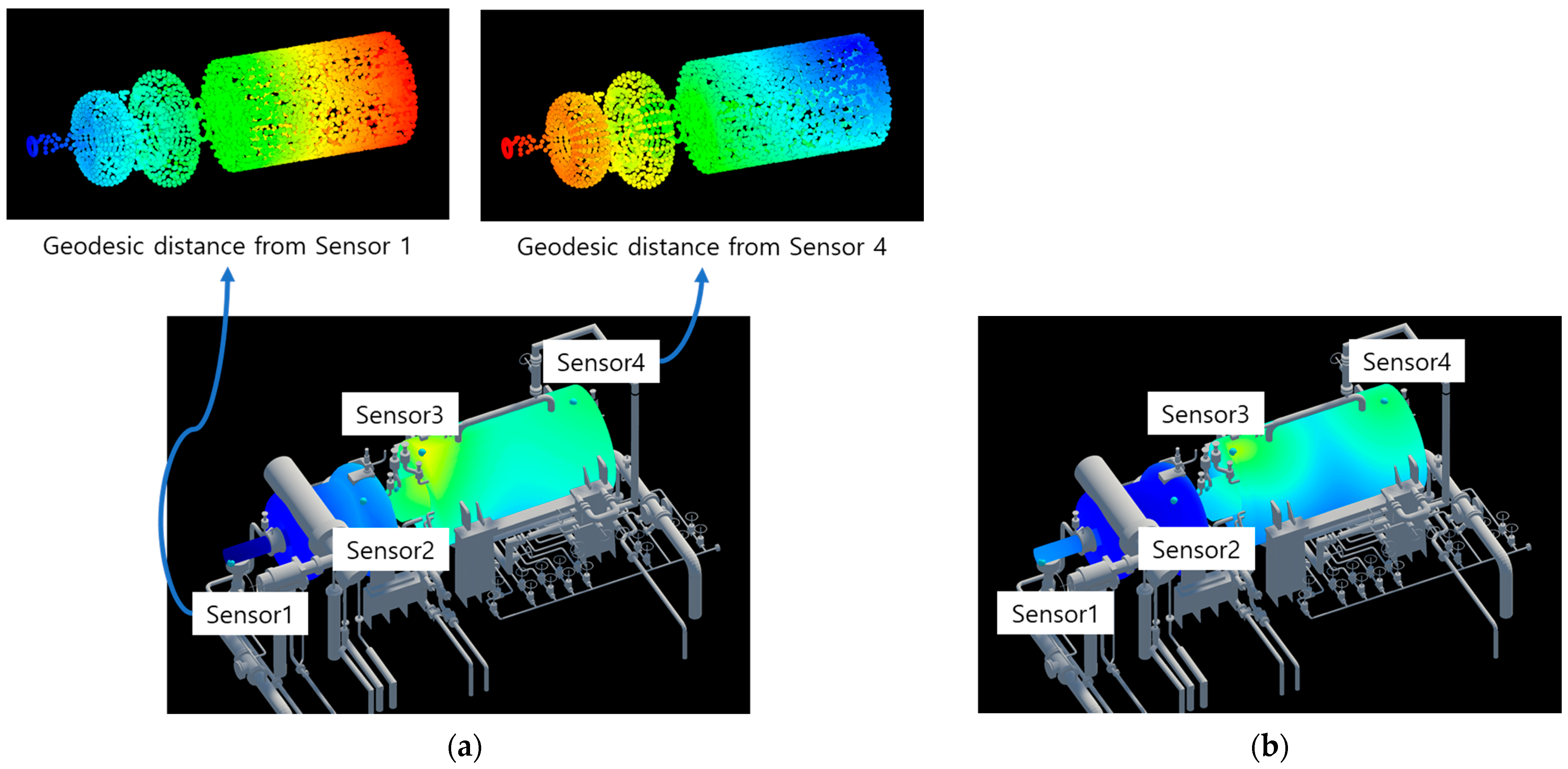

Figure 5 illustrates a typical case of this limitation. Figure 5a shows an example where Euclidean distance is used for the distance calculation described in Section 3.2. As can be seen in the figure, because weights and attenuation are calculated based on the straight-line distance between the sensor location and points on the 3D CAD model surface, values are interpolated and displayed irrespective of surface connectivity. For instance, if the measured value is temperature and the object possesses heat-conducting properties, the state shown in Figure 5b would represent a more physically reliable result. In this study, to enable visualization that considers such physical connectivity of an object, the system was developed to allow the selection of a geodesic distance calculation based on the Heat Method.

3.3.1. Heat-Method-Based Geodesic Distance Calculation

Geodesic distance refers to the length of the shortest path between two points on a curved surface, such as a 3D mesh. Unlike Euclidean distance, which simply represents the straight-line distance in space, geodesic distance is measured along the surface’s topology. Therefore, it is utilized as a key element in various computer graphics and vision fields for analyzing phenomena that occur along an object’s actual surface (e.g., heat conduction, stress propagation), as well as for applications like robot path planning, shape analysis, and segmentation.

A classical algorithm for calculating exact geodesic distances on a 3D polygonal mesh is the Mitchell, Mount, and Papadimitriou (MMP) algorithm [17], which has a time complexity of $O(N^2 \log N)$, where $N$ is the number of mesh vertices. Subsequently, Surazhsky et al. [18] improved computational efficiency by proposing enhanced exact algorithms alongside practical approximation algorithms. These exact computation methods often entail high computational costs, making their real-time application to large-scale meshes challenging. Consequently, various approximation algorithms are being researched to meet the specific requirements (e.g., speed, accuracy) of particular application domains.

The Heat Method proposed by Crane et al. [19] is a geodesic distance calculation technique that effectively approximates distances on curved surfaces based on heat flow simulation. The core idea of this method is to divide the distance calculation into two main stages. First, the heat diffusion equation is solved for a short time to obtain the heat distribution, denoted as $u$. From this distribution, a normalized gradient field, $X = -\nabla u / |\nabla u|$, is computed, which indicates the direction of increasing distance. Subsequently, by taking the divergence of this vector field, $\nabla \cdot X$, the Poisson equation, $\Delta \phi = \nabla \cdot X$, is solved to finally obtain the geodesic distance function, $\phi$.
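The stages of the Heat Method can be illustrated on the simplest possible domain. The sketch below is a toy under stated assumptions, not the implementation used in this study: it runs on a path graph with unit-length edges, where the gradient and divergence reduce to differences along edges, and it uses a small dense solver in place of the sparse pre-factorized solvers used in practice. The names `solve` and `heat_method_on_path` and the time step `t=1.0` are chosen for the example.

```python
def solve(A, b):
    """Solve the dense system A x = b by Gaussian elimination with pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - tail) / M[r][r]
    return x


def heat_method_on_path(n, source, t=1.0):
    """Approximate distances from `source` on a path of n nodes, unit edges."""
    # Graph Laplacian L (negative semi-definite convention).
    L = [[0.0] * n for _ in range(n)]
    for i in range(n - 1):
        L[i][i] -= 1.0
        L[i + 1][i + 1] -= 1.0
        L[i][i + 1] += 1.0
        L[i + 1][i] += 1.0

    # Step 1: one implicit heat step, (I - t L) u = delta at the source.
    A = [[(1.0 if i == j else 0.0) - t * L[i][j] for j in range(n)]
         for i in range(n)]
    u = solve(A, [1.0 if i == source else 0.0 for i in range(n)])

    # Step 2: normalized negative gradient on each edge (i, i + 1).
    X = []
    for i in range(n - 1):
        g = u[i + 1] - u[i]
        X.append(-g / abs(g))

    # Step 3: divergence at the nodes, then the Poisson equation L phi = div X.
    div = [0.0] * n
    for i, x in enumerate(X):
        div[i] += x
        div[i + 1] -= x
    # L is singular (constants lie in its null space), so pin phi[source] = 0.
    L[source] = [1.0 if j == source else 0.0 for j in range(n)]
    div[source] = 0.0
    return solve(L, div)


distances = heat_method_on_path(7, source=2)
```

On this path the recovered values equal the hop distance from the source node. On triangle meshes or point clouds the same three steps apply with the corresponding discrete Laplacian, gradient, and divergence operators, and the result is an approximation whose quality depends on the time step.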

The Heat Method offers several advantages: it is relatively simple to implement, and because it utilizes standard linear partial differential equation solvers, it is numerically stable and efficient. Notably, after a single pre-factorization step, distance calculations can be performed very rapidly, making it well-suited for applications that require repetitive distance queries. This method possesses the generality to be applied to various types of domains, including not only triangle meshes but also point clouds or grids. It is widely recognized for achieving an excellent balance between accuracy and computational speed in practical applications.

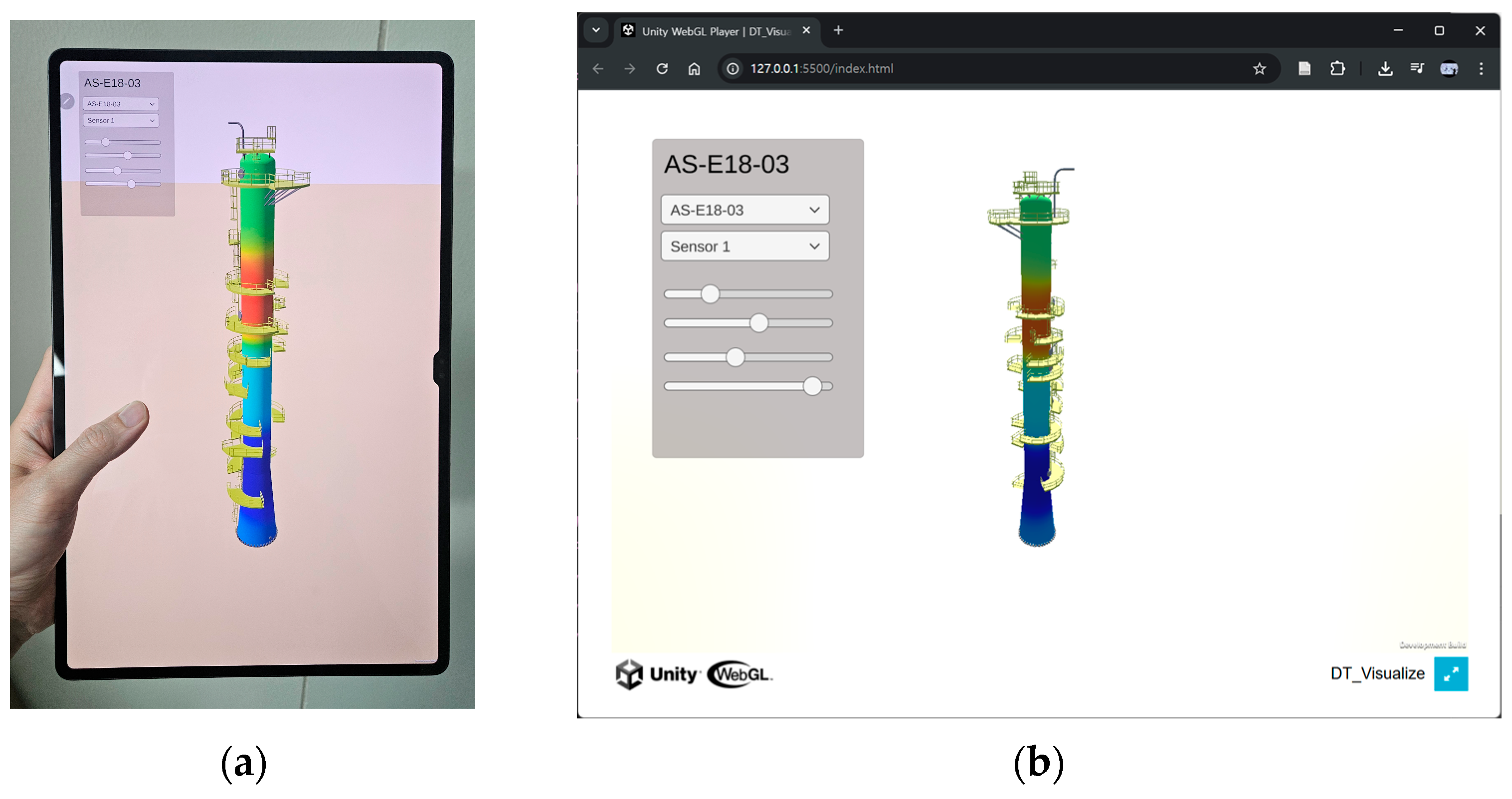

In the system proposed in this study, geodesic distances from each sensor location to all vertices on the 3D model surface are pre-calculated and stored during a pre-processing stage. At runtime, these stored values are retrieved and utilized for interpolation. The system is configured to allow users to select either Euclidean distance or the pre-calculated geodesic distance for the interpolation process, depending on the type of sensor data or the specific analytical objective.

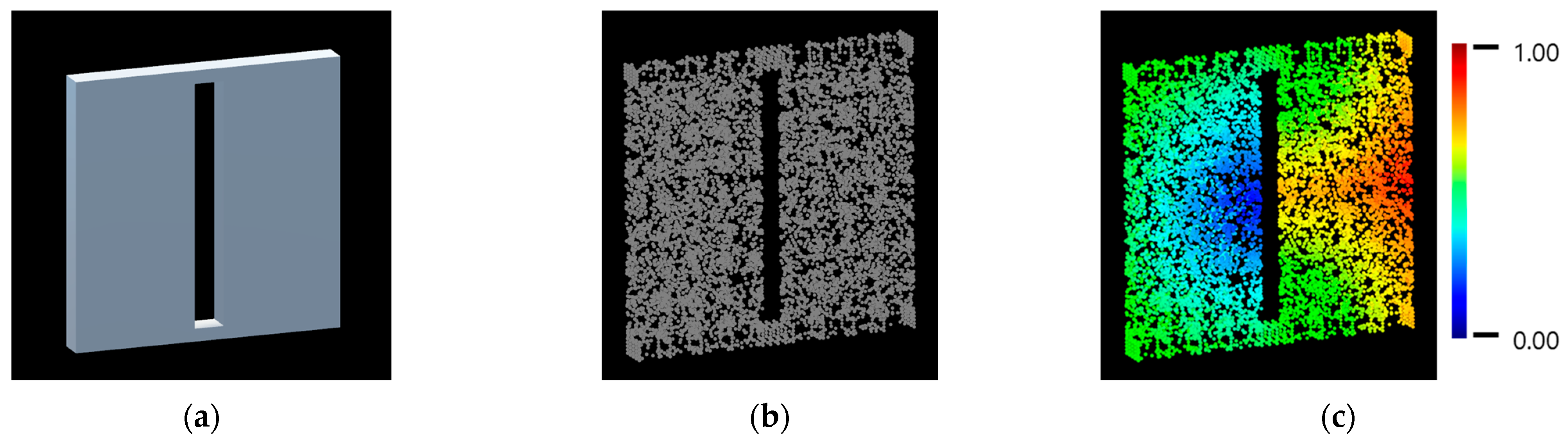

3.3.2. Point Cloud Sampling for CAD Model Connectivity Issues

The previously mentioned Heat-Method-based geodesic distance calculation, while feasible for ideal 3D CAD models based on their triangle mesh information, is often not directly applicable in practical scenarios. In actual digital twin construction, 3D CAD models representing objects are typically utilized after being automatically converted from existing 3D design resources. During this conversion process, various issues can arise due to interference between different design components, the limitations of the conversion tools, or suboptimal parameter selections. These problems include the formation of non-manifold geometry resulting from face intersection issues (where triangles improperly overlap or penetrate each other), and face gap problems where parts of a single object lose their intended surface connectivity. An illustration of such phenomena is provided in Figure 6.

While these modeling imperfections can be rectified through manual correction by modeling experts or by carefully tuning conversion parameters, such solutions incur significant time and expenses. This is particularly problematic for digital twin applications, which often involve a multitude of complex objects, making manual intervention or extensive parameter optimization impractical on a large scale.

To address these practical problems, this study introduces a point-cloud-sampling-based geodesic distance calculation method that does not directly rely on the mesh’s topological connectivity. The pre-processing steps for this approach are as follows:

1. A point cloud is generated by uniformly sampling a specific number of points from the surface of the 3D model intended for visualization. The vertices that constitute the original 3D model are also included in this point cloud set.

2. Within the generated point cloud, the k-nearest neighbors (kNN) are identified for each point. Based on this proximity, a virtual connectivity graph is constructed among the points. This process depends solely on geometric proximity, irrespective of the actual connectivity state of the original mesh.

3. The Heat Method is applied to this constructed point cloud graph to calculate the geodesic distances from the points corresponding to sensor locations to all other points in the cloud.

4. From the comprehensive geodesic distance information computed for the entire point cloud, the data corresponding specifically to the vertices of the original 3D model is extracted. This provides the geodesic distance value from each sensor to every vertex of the model.
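The proximity-graph idea in these steps can be sketched in a few lines. Note the substitution: for brevity this example computes distances on the kNN graph with Dijkstra's shortest-path algorithm rather than the Heat Method used in this study, and it uses a hand-made 2D U-shaped point set instead of points sampled from a CAD model. The function names, the sampling step of 0.5, and `k=2` are assumptions for illustration.

```python
import heapq
import math


def knn_graph(points, k):
    """Connect each point to its k nearest neighbours (undirected edges)."""
    graph = {i: {} for i in range(len(points))}
    for i, p in enumerate(points):
        others = sorted(
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        )
        for dist, j in others[:k]:
            graph[i][j] = dist
            graph[j][i] = dist
    return graph


def graph_distances(graph, source):
    """Shortest-path length from source to every node (Dijkstra)."""
    dist = {node: math.inf for node in graph}
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale queue entry
        for v, w in graph[u].items():
            if d + w < dist[v]:
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))
    return dist


# Points sampled every 0.5 units along a U-shaped profile whose two arms
# are 3 units apart.
step = 0.5
left = [(0.0, 3.0 - i * step) for i in range(7)]   # (0, 3) down to (0, 0)
bottom = [(i * step, 0.0) for i in range(1, 7)]    # (0.5, 0) to (3, 0)
right = [(3.0, i * step) for i in range(1, 7)]     # (3, 0.5) up to (3, 3)
points = left + bottom + right

sensor, target = 0, len(points) - 1                # tips of the two arms
geodesic = graph_distances(knn_graph(points, k=2), sensor)[target]
euclidean = math.dist(points[sensor], points[target])
```

The tips of the two arms are 3 units apart in a straight line but 9 units apart along the sampled shape. The choice of `k` and the sampling density matter: if the gap between two unconnected parts is smaller than the spacing between samples, the kNN graph can bridge the gap and shorten the computed distance.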

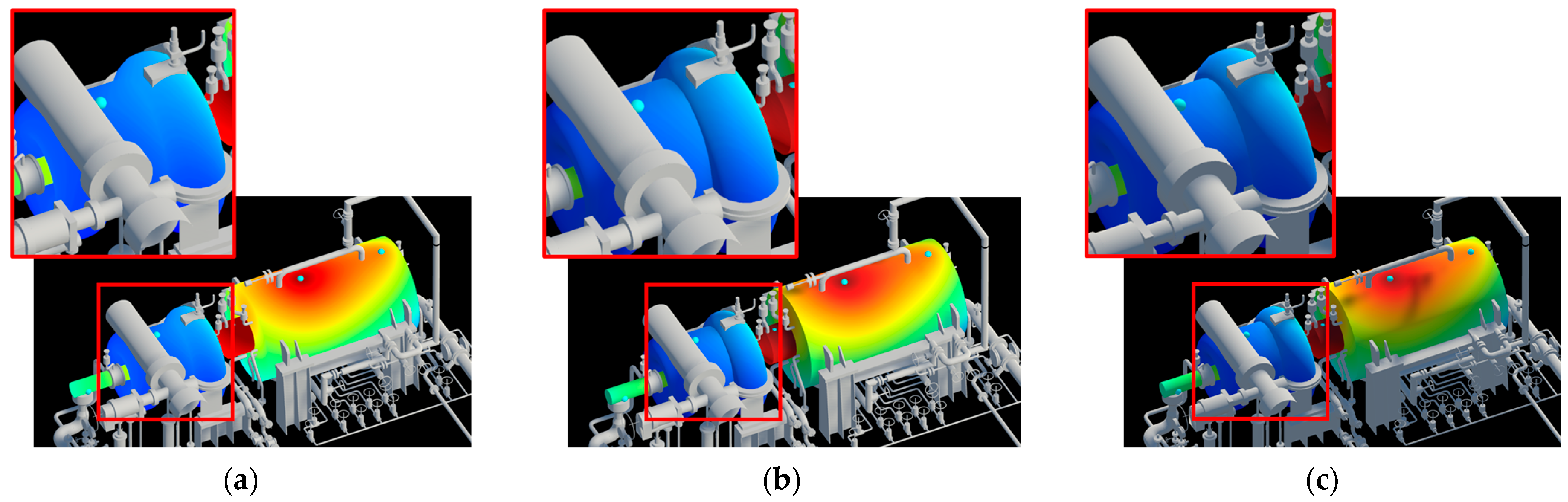

The data generated through steps 1–3 of the process described above is illustrated in Figure 7. The visualization result obtained using this generated data is shown in Figure 8a. A comparison with Figure 8b, which utilizes Euclidean distance, reveals that the visualization achieved using the geodesic distance calculation method (Figure 8a) produces more physically reliable results.