TSE-APT: An APT Attack-Detection Method Based on Time-Series and Ensemble-Learning Models

Abstract

1. Introduction

- The proposed TSE-APT method achieved an accuracy of 97.32% and a false positive rate of 0.69% on the CIC-IDS2018 dataset, outperforming the baseline model.

- Combining dynamic time-series features with static flow data improves detection accuracy by over 2% and recall by approximately 3% compared to using static features alone.

- A dynamic weighting strategy for the sub-models is proposed. It improves the F1 score by approximately 2% and recall by over 3%, identifying new attacks more accurately.

2. Related Works

2.1. Temporal Feature

2.2. Ensemble Learning

2.3. Attention Mechanisms

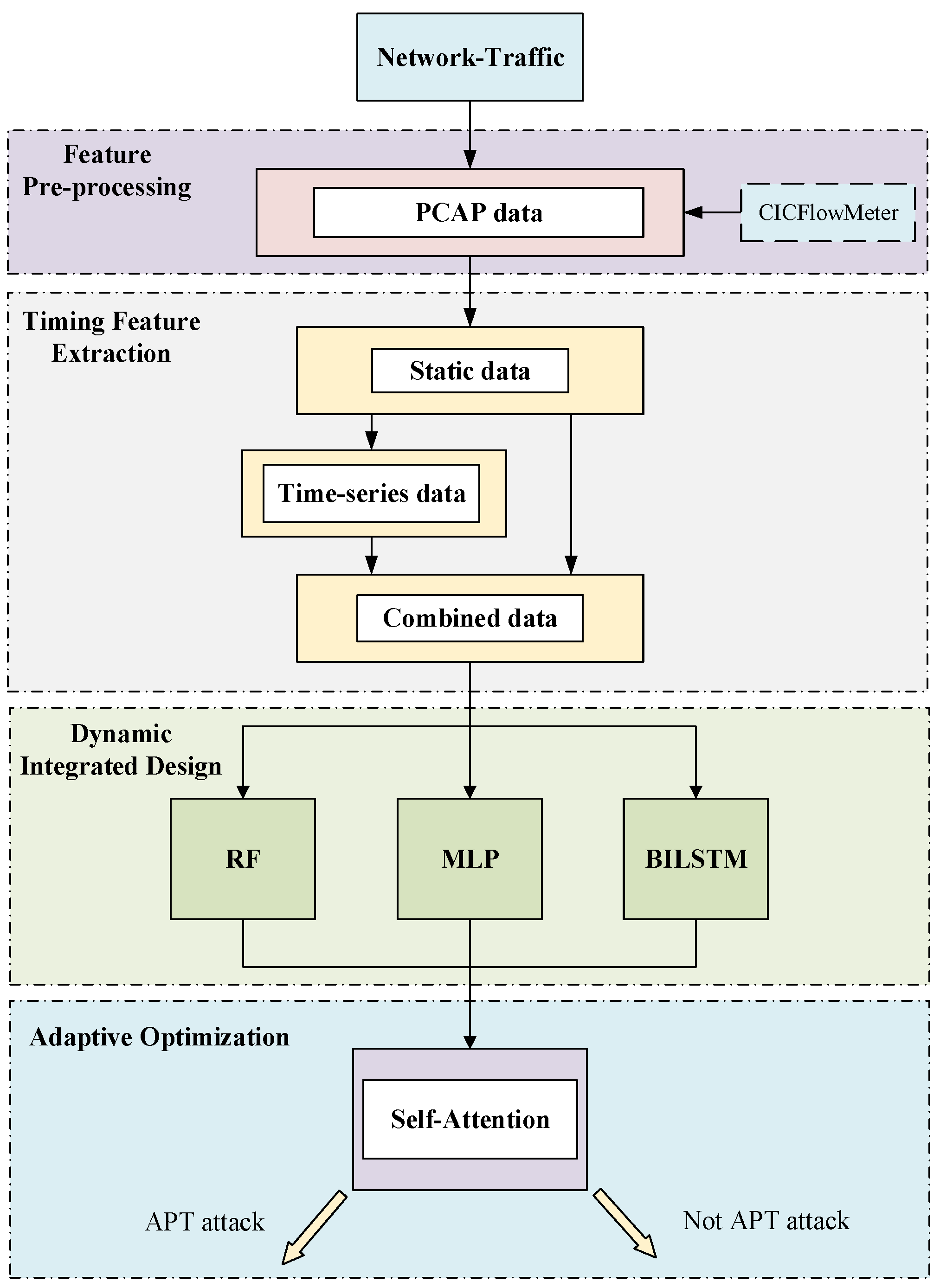

3. Materials and Methods

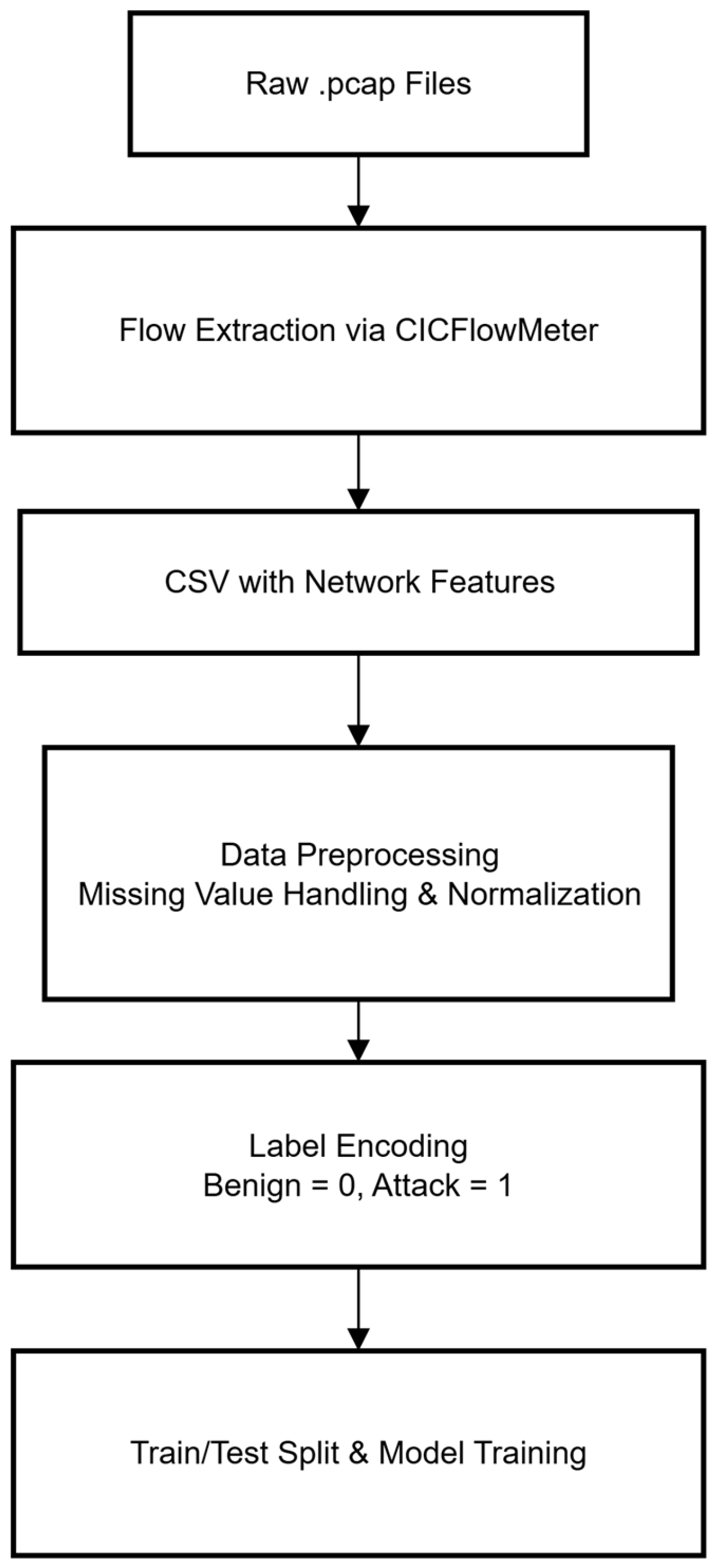

3.1. Feature Preprocessing

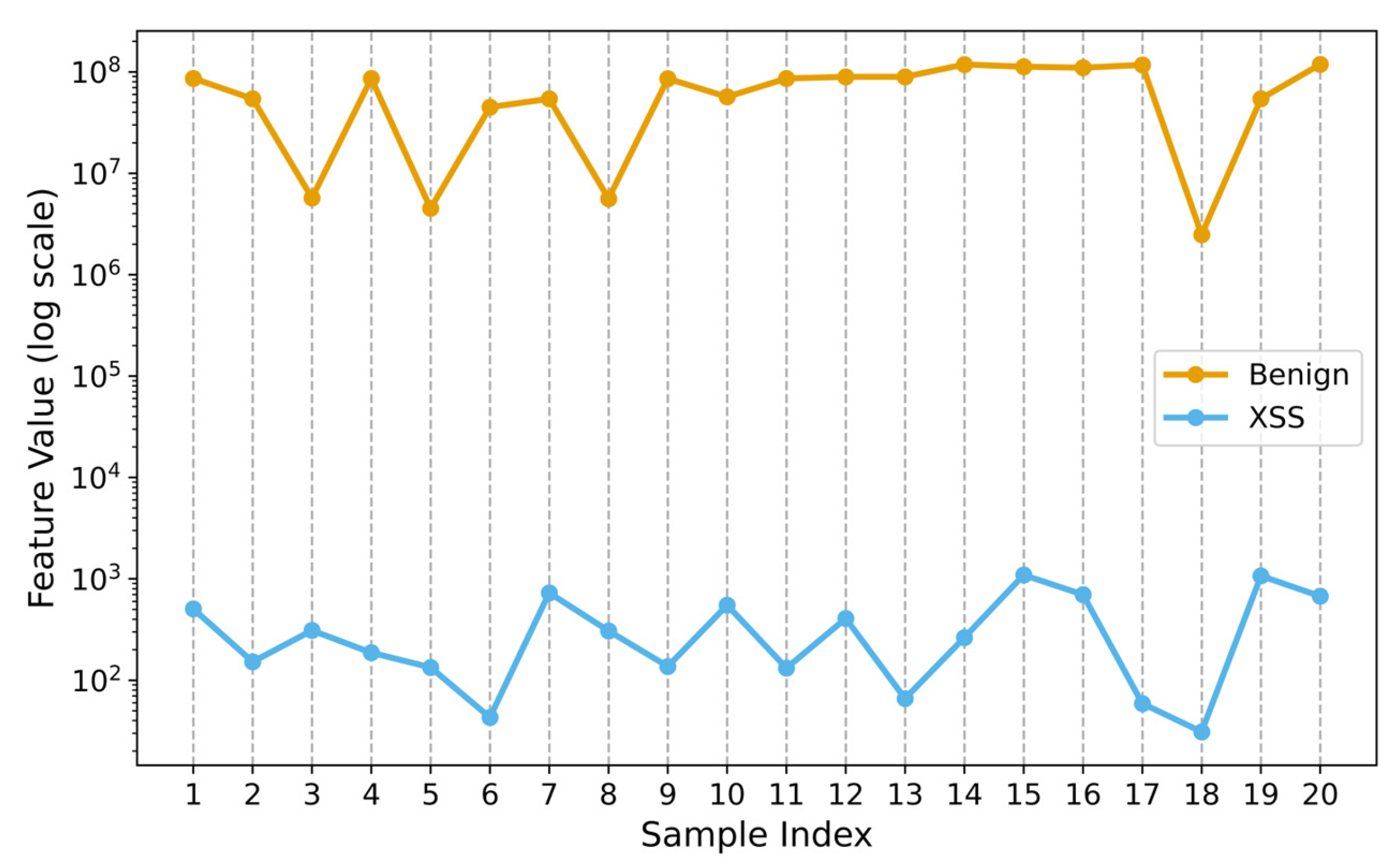

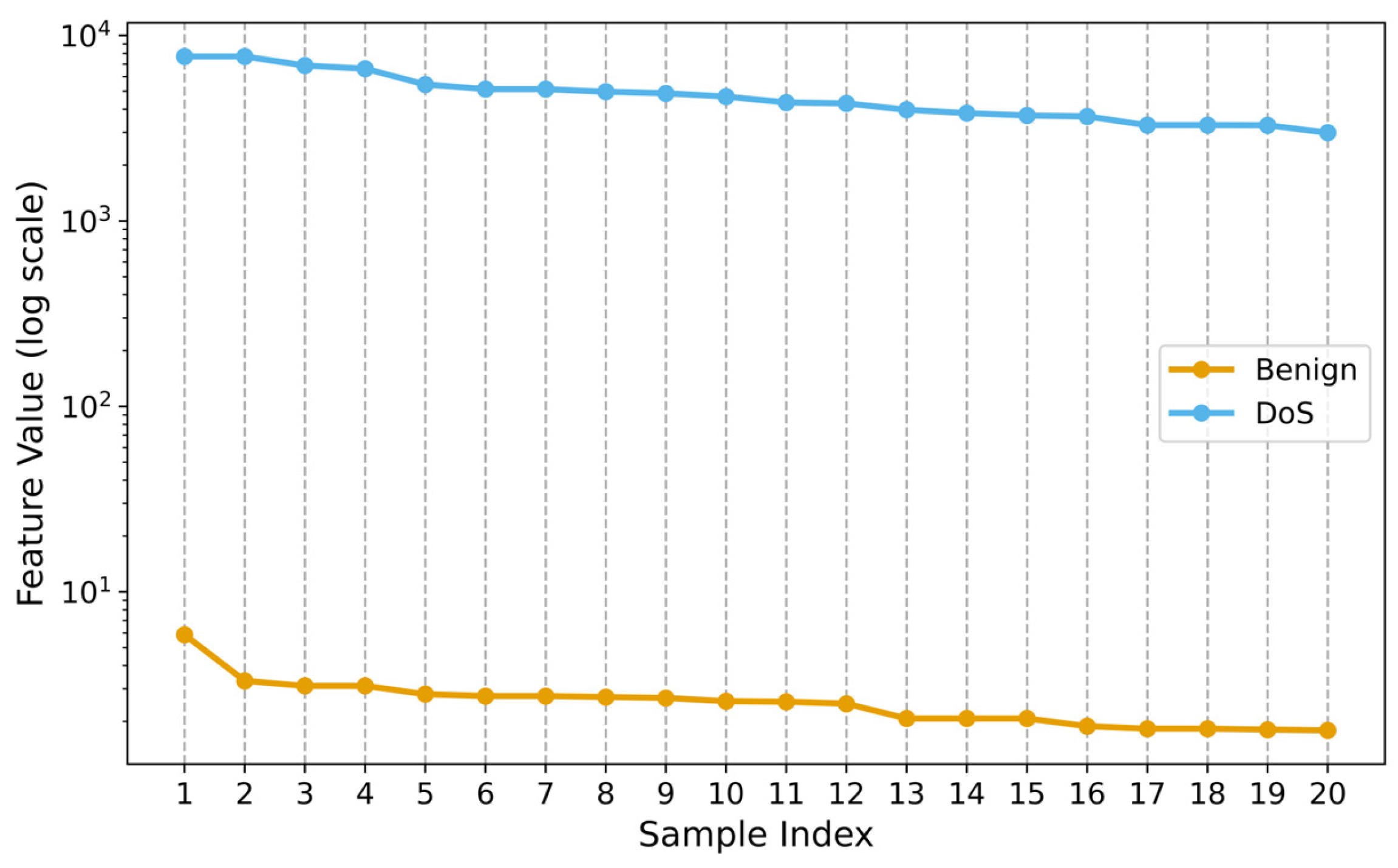

3.2. Temporal Feature Extraction

3.2.1. Packet Arrival Interval (IAT, Fwd IAT, Bwd IAT)

3.2.2. Flow Rate Change Within the Sliding Window (FRC)

3.2.3. Flow Variance of the Flow Within the Window (FV)

3.2.4. Flow Variance of the Flow Within the Window (F2)
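The temporal features named in the headings of Section 3.2 can be illustrated with a minimal sketch. The definitions used here are assumptions, since only the feature names are given: IAT is taken as the gap between consecutive packet timestamps, FRC as the change in byte rate between consecutive windows, and FV as the population variance of packet sizes inside a window. The function names, the `(timestamp, size)` packet layout, and the window and step values are hypothetical.

```python
from statistics import mean, pvariance

def iat_stats(timestamps):
    """Summary statistics of packet inter-arrival times (assumed IAT definition)."""
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if not gaps:
        return {"mean": 0.0, "min": 0.0, "max": 0.0}
    return {"mean": mean(gaps), "min": min(gaps), "max": max(gaps)}

def window_features(packets, window, step):
    """Per-window byte rate, rate change (FRC) and size variance (FV).

    packets: list of (timestamp_seconds, size_bytes), sorted by timestamp.
    """
    if not packets:
        return []
    t, end = packets[0][0], packets[-1][0]
    rows, prev_rate = [], None
    while t <= end:
        sizes = [size for ts, size in packets if t <= ts < t + window]
        rate = sum(sizes) / window
        # Assumed FRC: difference from the previous window's byte rate.
        frc = 0.0 if prev_rate is None else rate - prev_rate
        # Assumed FV: population variance of packet sizes in the window.
        fv = pvariance(sizes) if len(sizes) > 1 else 0.0
        rows.append({"start": t, "rate": rate, "frc": frc, "fv": fv})
        prev_rate = rate
        t += step
    return rows

# Hypothetical flow: (timestamp in seconds, packet size in bytes).
packets = [(0.0, 100), (0.5, 300), (1.2, 200), (1.8, 200), (2.1, 500)]
iat = iat_stats([ts for ts, _ in packets])
rows = window_features(packets, window=1.0, step=1.0)
for row in rows:
    print(row)
```

Fwd IAT and Bwd IAT would follow by applying `iat_stats` to the forward and backward packets of a flow separately. Setting `step` smaller than `window` yields overlapping windows.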

3.3. Ensemble-Learning Module Design

3.3.1. Sub-Model Training

3.3.2. Self-Attention Mechanism

| Algorithm 1 Self-attention mechanism | |
|---|---|
| Input: Dimension X, predictions P[1], P[2], P[3], number of attention heads H | |
| Output: final malicious probability Y | |
| 1. | Q ← WQ·X /* Query vector */ |
| 2. | K ← WK·X /* Key vector */ |
| 3. | V ← WV·X /* Value vector */ |
| 4. | for h = 1 to H do |
| 5. | |
| 6. | |
| 7. | end for |
| 8. | C ← concat(C1 … CH) |
| 9. | γ ← FC(P) /* self-confidence */ |
| 10. | Ĉ ← C ⨁ γ /* concatenation */ |
| 11. | ω ← Softmax(FCw(Ĉ)) /* weight generator */ |
| 12. | |
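Steps 5, 6, and 12 of Algorithm 1 are blank in this copy, so the following is only a sketch of how the remaining steps could fit together. It assumes standard scaled dot-product attention per head for steps 5 and 6, and a weighted sum of the sub-model predictions for step 12. The mean-pooling over tokens, the layer sizes, the random weights, and all function names are illustrative assumptions, not the authors' implementation.

```python
import math
import random

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(W, x):
    """Matrix-vector product W·x for a list-of-rows matrix."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention_fusion(X, P, W, n_heads):
    """X: list of token vectors; P: sub-model probabilities P[1..3]."""
    Q = [matvec(W["Q"], x) for x in X]            # step 1
    K = [matvec(W["K"], x) for x in X]            # step 2
    V = [matvec(W["V"], x) for x in X]            # step 3
    d_head = len(Q[0]) // n_heads
    C = []
    for h in range(n_heads):                      # step 4
        lo, hi = h * d_head, (h + 1) * d_head
        pooled = [0.0] * d_head
        for q in Q:
            # Assumed step 5: scaled dot-product attention weights.
            A = softmax([dot(q[lo:hi], k[lo:hi]) / math.sqrt(d_head) for k in K])
            # Assumed step 6: attention-weighted sum of the value vectors.
            ctx = [sum(a * v[lo + j] for a, v in zip(A, V)) for j in range(d_head)]
            # Assumption: mean-pool the per-token contexts into one vector.
            pooled = [p + c / len(Q) for p, c in zip(pooled, ctx)]
        C.extend(pooled)                          # step 8: concat(C1 ... CH)
    gamma = matvec(W["conf"], P)                  # step 9: self-confidence
    C_hat = C + gamma                             # step 10: concatenation
    omega = softmax(matvec(W["w"], C_hat))        # step 11: weight generator
    Y = dot(omega, P)                             # assumed step 12: weighted sum
    return Y, omega

rng = random.Random(0)
d_model, d_attn, n_heads, d_conf, n_tokens = 8, 16, 4, 4, 6

def rand_matrix(rows, cols):
    return [[rng.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]

W = {
    "Q": rand_matrix(d_attn, d_model),
    "K": rand_matrix(d_attn, d_model),
    "V": rand_matrix(d_attn, d_model),
    "conf": rand_matrix(d_conf, 3),
    "w": rand_matrix(3, d_attn + d_conf),
}
X = rand_matrix(n_tokens, d_model)    # placeholder feature tokens
P = [0.91, 0.78, 0.85]                # placeholder sub-model outputs
Y, omega = attention_fusion(X, P, W, n_heads)
print(f"weights={[round(w, 3) for w in omega]} Y={Y:.3f}")
```

Because ω comes from a softmax, the weights are non-negative and sum to one, so under this reading Y always lies between the smallest and largest sub-model prediction.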

3.3.3. Meta-Model Further Optimization

4. Experimental Analysis

4.1. Experimental Configuration

4.1.1. Experimental Environment

4.1.2. Experimental Data

4.1.3. Classification of Experiments

4.1.4. Model Training Process

4.2. Performance Evaluation

4.2.1. Introducing Time-Series Detection Efficiency

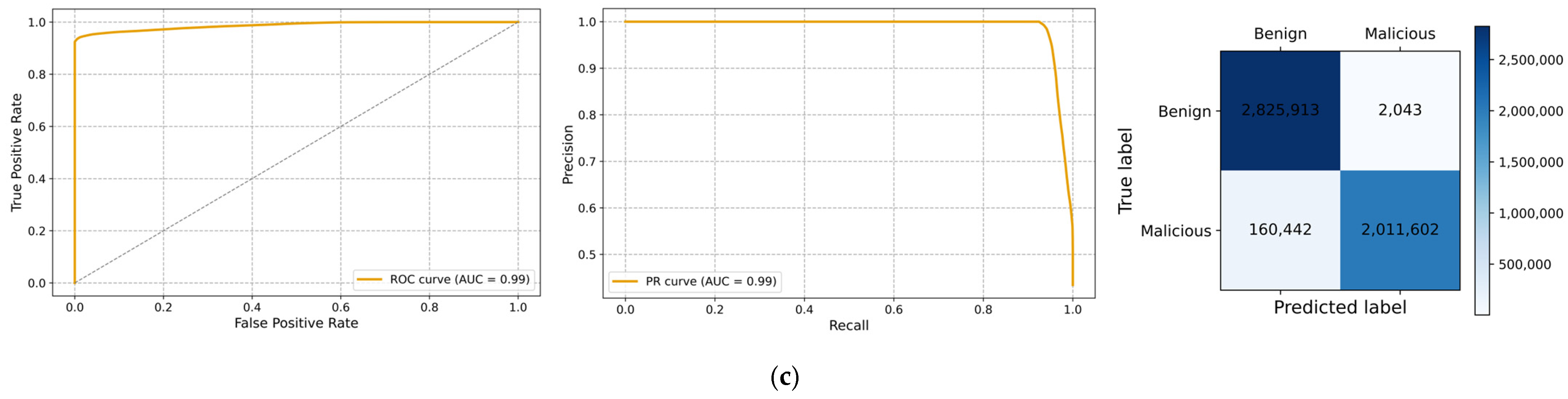

4.2.2. Ensemble-Learning Model Detection Efficiency

4.2.3. Ablation Studies on the Method Components

4.2.4. Feature Importance Analysis

4.2.5. Comparison of Different Classification Methods

4.2.6. Overhead Analysis

5. Discussion

5.1. Justification of the Dataset for APT Detection

5.2. Discussion of Data-Processing

5.2.1. Design of Time Windows and Step Sizes

5.2.2. The Dataset Seems to Be Unbalanced

5.2.3. Flowchart Description

5.3. Discussion with the Rest of the Models

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Metric | Formula |
|---|---|
| Accuracy | (TP + TN)/(TP + FP + FN + TN) |
| Precision | TP/(TP + FP) |
| Recall | TP/(TP + FN) |
| F1 Score | 2 × (Precision × Recall)/(Precision + Recall) |
| False Positive Rate | FP/(FP + TN) |
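As a worked illustration of these metrics, the sketch below computes all five from the four confusion-matrix counts. Recall is taken as the fraction of actual positives that are detected, TP/(TP + FN). The function name and the counts are hypothetical and are not taken from the experiments.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute the evaluation metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "fpr": fp / (fp + tn),
    }

# Hypothetical counts for illustration only.
m = classification_metrics(tp=90, fp=5, fn=10, tn=895)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```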

| Type | Size | Malware Types | Packets |
|---|---|---|---|
| Malware traffic | 961 MB | 15 | 2,172,044 |
| Normal traffic | 1.2 GB | 0 | 2,827,956 |

| Model | Hyperparameter | Search Space | Selected |
|---|---|---|---|
| RF | Number of Trees | [10, 50, 100] | 50 |
| RF | Random State | [0, 42, 100] | 42 |
| MLP | Hidden Layers | [2, 3, 4, 5] | 4 |
| MLP | Activation Function | [ReLU, GELU, Tanh] | GELU |
| MLP | Dropout Rate | [0.1, 0.2, 0.3, 0.5] | 0.3 |
| BiLSTM | Hidden Size | [64, 128, 256] | 128 |
| BiLSTM | Layers | [1, 2] | 1 |
| BiLSTM | CNN Kernel Size | [3, 5, 7] | 3 |
| BiLSTM | CNN Channels | [64, 128, 256] | 128 |
| BiLSTM | Dropout | [0.1, 0.3, 0.5] | 0.3 |
| BiLSTM | Sequence Length | [4, 6, 8] | 6 |
| XGBoost | Booster | [gbtree, gblinear] | gbtree |
| XGBoost | Max Depth | [3, 6, 10] | 6 |
| XGBoost | Subsample | [0.6, 0.8, 1.0] | 0.8 |
| XGBoost | Colsample_bytree | [0.6, 0.8, 1.0] | 0.8 |
| XGBoost | Number of Trees | [50, 100, 150] | 100 |
| Self-Attention | Attention Heads | [2, 4, 8] | 4 |
| Self-Attention | Q/K/V Dimensions | [32, 64, 128] | 64 |
| Self-Attention | Feedforward Dimension | [128, 256, 512] | 256 |

| Algorithm | Parameter | Features | Accuracy (%) | Precision (%) | Recall (%) | F1 (%) | FPR (%) |
|---|---|---|---|---|---|---|---|
| RF | Tree = 50 | Static | 94.45 | 95.88 | 91.81 | 95.82 | 2.12 |
| RF | Tree = 50 | Combined | 96.23 | 98.42 | 94.11 | 96.20 | 1.17 |
| MLP | Unite = 4 | Static | 94.80 | 97.32 | 91.28 | 96.20 | 0.49 |
| MLP | Unite = 4 | Combined | 96.33 | 98.20 | 93.45 | 96.19 | 0.58 |
| BiLSTM | Hidden = 64 | Static | 94.50 | 96.29 | 91.61 | 95.89 | 1.26 |
| BiLSTM | Hidden = 64 | Combined | 96.78 | 98.93 | 94.06 | 96.25 | 0.77 |

| Comparison | McNemar’s Statistic | p-Value |
|---|---|---|
| RF vs. MLP | 29,610.04 | <0.001 |
| RF vs. BiLSTM | 29,152.00 | <0.001 |
| MLP vs. BiLSTM | 658.44 | <0.001 |
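For readers unfamiliar with McNemar's test, the following sketch shows how such a statistic can be obtained from two classifiers' predictions on the same test set. It counts only the samples on which the two models disagree. The continuity correction is an assumption, since the table does not state which variant was used, and the function name and toy labels are hypothetical.

```python
import math

def mcnemar(y_true, pred_a, pred_b, correction=True):
    """McNemar's chi-square statistic and p-value (1 degree of freedom)."""
    # b: model A correct, model B wrong; c: model A wrong, model B correct.
    b = sum(1 for y, a, o in zip(y_true, pred_a, pred_b) if a == y and o != y)
    c = sum(1 for y, a, o in zip(y_true, pred_a, pred_b) if a != y and o == y)
    if b + c == 0:
        return 0.0, 1.0
    diff = abs(b - c) - (1 if correction else 0)
    stat = max(diff, 0) ** 2 / (b + c)
    # Survival function of the chi-square distribution with 1 dof.
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value

# Toy data: model A errs on 2 samples, model B errs on 8 different samples.
y_true = [1] * 10 + [0] * 10
pred_a = [1 - y if i < 2 else y for i, y in enumerate(y_true)]
pred_b = [1 - y if 2 <= i < 10 else y for i, y in enumerate(y_true)]
stat, p_value = mcnemar(y_true, pred_a, pred_b)
print(f"statistic={stat:.2f}, p={p_value:.4f}")
```

A small p-value indicates that the two models make errors on different samples at significantly different rates; it does not by itself say which model is better.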

| Algorithm | Parameter | Accuracy (%) | Precision (%) | Recall (%) | F1 (%) | FPR (%) |
|---|---|---|---|---|---|---|
| Logistic regression | C = 1.0 | 96.55 | 99.09 | 93.92 | 95.90 | 0.66 |
| XGBoost | gbtree | 96.92 | 99.34 | 94.56 | 96.36 | 0.67 |
| Self-attention | Hidden = 128 | 97.32 | 99.26 | 96.23 | 97.51 | 0.69 |

| Modification | Accuracy (%) | Precision (%) | Recall (%) | F1 (%) | FPR (%) |
|---|---|---|---|---|---|
| Full model (TSE-APT) | 97.32 | 99.26 | 96.23 | 97.51 | 0.69 |
| - Self-attention | 96.92 | 99.34 | 94.56 | 96.36 | 0.67 |
| - Ensemble learning | 96.78 | 98.93 | 94.06 | 96.25 | 0.77 |
| - Time-series features | 94.50 | 96.29 | 91.61 | 95.89 | 1.61 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cheng, M.; Xiang, G.; Yang, Q.; Ma, Z.; Zhang, H. TSE-APT: An APT Attack-Detection Method Based on Time-Series and Ensemble-Learning Models. Electronics 2025, 14, 2924. https://doi.org/10.3390/electronics14152924
