2. Materials and Methods of the AI-Driven SMBus Educational Framework

2.1. Overview of the AI Framework

This study centers on an AI-powered framework for teaching and verifying SMBus designs. Rather than creating a custom model, we leverage an existing pre-trained language model, ChatGPT, augmented with a preprompt tool. This tool allows us to tailor the AI’s responses to specific SMBus-related tasks, ensuring the framework provides relevant, context-aware feedback on code generation, troubleshooting, and protocol verification. The AI model provides interactive, real-time support to interviewers, enabling them to design, simulate, and verify SMBus devices with immediate feedback.

2.2. SMBus Protocol Overview

The SMBus protocol, built on the I2C standard, facilitates communication between low-speed devices within embedded systems, such as sensors, EEPROMs, and microcontrollers. Designed for efficient communication with minimal overhead, SMBus supports features including error detection, multi-master arbitration, and clock stretching, which make it suitable for applications ranging from power management to sensor data collection. The protocol also defines Packet Error Checking (PEC) and transaction types such as Write Word and Read Word. These features form the foundation of the protocol’s use in embedded systems, and understanding them is crucial for designing, implementing, and verifying SMBus systems (Figure 1).
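To make the PEC mechanism concrete, the following is a minimal Python sketch of the checksum computation. It assumes the CRC-8 defined for SMBus PEC (polynomial x^8 + x^2 + x + 1, i.e., 0x07, zero initial value, MSB-first). The function name `smbus_pec` and the device address, command code, and data word in the usage lines are hypothetical values chosen for illustration and are not taken from the study.

```python
def smbus_pec(data: bytes) -> int:
    """Return the CRC-8 (polynomial 0x07, initial value 0) over all bytes."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            # Shift left one bit; apply the polynomial when the MSB falls out.
            if crc & 0x80:
                crc = ((crc << 1) ^ 0x07) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


# Hypothetical Write Word transaction: 7-bit address 0x5A with the write bit,
# command code 0x06, then the data word 0x1234 sent low byte first.
frame = bytes([(0x5A << 1) | 0, 0x06, 0x34, 0x12])
pec = smbus_pec(frame)

# A receiver can verify the transfer by running the CRC over frame + PEC:
# a zero remainder indicates that no error was detected.
assert smbus_pec(frame + bytes([pec])) == 0
```

The PEC byte covers every byte of the transaction, including the address and read/write bit, which is why the address byte is part of `frame` in this sketch.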

Figure 2 presents the format of a byte on the SMBus, which is crucial for understanding how data is transmitted in this protocol. Each byte consists of 8 bits, starting with the Most Significant Bit (MSB) and ending with the Least Significant Bit (LSB). The SMBus communication protocol involves transmitting these 8 bits in sequence, with an acknowledgment (ACK) signal sent by the receiver to indicate that the byte has been successfully received. This figure also emphasizes the start and stop conditions, which are essential for initiating and terminating communication on the bus. The start condition is triggered by the master device to signal the beginning of communication, and the stop condition marks the end of the transmission. Understanding the structure of the byte and the role of these conditions is key to mastering SMBus communication.
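The byte format described above can be sketched in a few lines of Python. This is an illustrative model only: the function names are hypothetical, and the sketch assumes that every byte is acknowledged, whereas in a real read transfer the master signals the final byte with a NACK.

```python
from typing import List


def byte_to_bits_msb_first(value: int) -> List[int]:
    """Split one byte into its 8 bits, most significant bit first."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value must fit in one byte")
    return [(value >> shift) & 1 for shift in range(7, -1, -1)]


def frame_transfer(payload: bytes) -> List[str]:
    """List the bus events for a transfer: START, 8 bits + ACK per byte, STOP."""
    events = ["START"]
    for byte in payload:
        events.extend(str(bit) for bit in byte_to_bits_msb_first(byte))
        events.append("ACK")  # assumption: the receiver acknowledges every byte
    events.append("STOP")
    return events


print(frame_transfer(b"\xA5"))
```

Each byte therefore occupies nine clock periods on the bus: eight for the data bits and one for the acknowledgment.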

Figure 3 provides an overview of the key timing parameters involved in SMBus communication. The timing of each signal on the bus is crucial for ensuring proper communication between devices. The clock signal, denoted as SMBCLK, has two key timing parameters: the clock low time (t_LOW) and the clock high time (t_HIGH), which define how long the clock signal remains low or high during each cycle. These timings ensure that data is transferred at the correct rate. The figure also shows the setup time for data (t_SU:DAT), which indicates how long the data line (SMBDAT) must be stable before the clock edge. It also includes the setup times for the start (t_SU:STA) and stop (t_SU:STO) conditions, which are critical for properly initiating and concluding SMBus transactions. Adhering to these timing requirements is necessary to ensure signals are correctly synchronized, enabling reliable communication between devices on the SMBus.
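A timing check of this kind can be sketched as a simple table lookup. The limits below are assumptions based on the commonly cited 100 kHz SMBus figures (t_LOW at least 4.7 µs, t_HIGH between 4.0 µs and 50 µs, t_SU:DAT at least 250 ns, t_SU:STA at least 4.7 µs, t_SU:STO at least 4.0 µs) and should be confirmed against the specification revision in use. The function name and the measured values are hypothetical.

```python
from typing import Dict, List

# Assumed limits in microseconds: (minimum, maximum or None if unbounded).
LIMITS_US = {
    "t_LOW": (4.7, None),
    "t_HIGH": (4.0, 50.0),
    "t_SU:DAT": (0.25, None),
    "t_SU:STA": (4.7, None),
    "t_SU:STO": (4.0, None),
}


def check_timing(measured_us: Dict[str, float]) -> List[str]:
    """Return one message per measured parameter that violates its limits."""
    violations = []
    for name, (minimum, maximum) in LIMITS_US.items():
        if name not in measured_us:
            continue  # parameter was not measured; nothing to check
        value = measured_us[name]
        if value < minimum:
            violations.append(f"{name}: {value} us is below the minimum of {minimum} us")
        elif maximum is not None and value > maximum:
            violations.append(f"{name}: {value} us exceeds the maximum of {maximum} us")
    return violations


# Hypothetical measurements taken from a simulation waveform.
print(check_timing({"t_LOW": 3.9, "t_HIGH": 4.5, "t_SU:DAT": 0.3}))
```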

2.3. Leveraging ChatGPT with the Preprompt Tool

To address the challenges of teaching SMBus protocol design, we utilize ChatGPT, an AI model developed by OpenAI. The model is customized for SMBus design using the preprompt tool, which enables us to shape the AI’s responses to fit the specific needs of embedded systems design. The preprompt tool ensures that the AI-generated responses focus on the most relevant details to SMBus design and verification, such as understanding protocol timing, debugging common errors, and generating Verilog/SystemVerilog code for hardware implementations. This combination of a pre-trained AI model and preprompt customization provides an interactive learning environment that aligns with the specific goals of the study.

2.4. Data Sources and Training for the AI Model

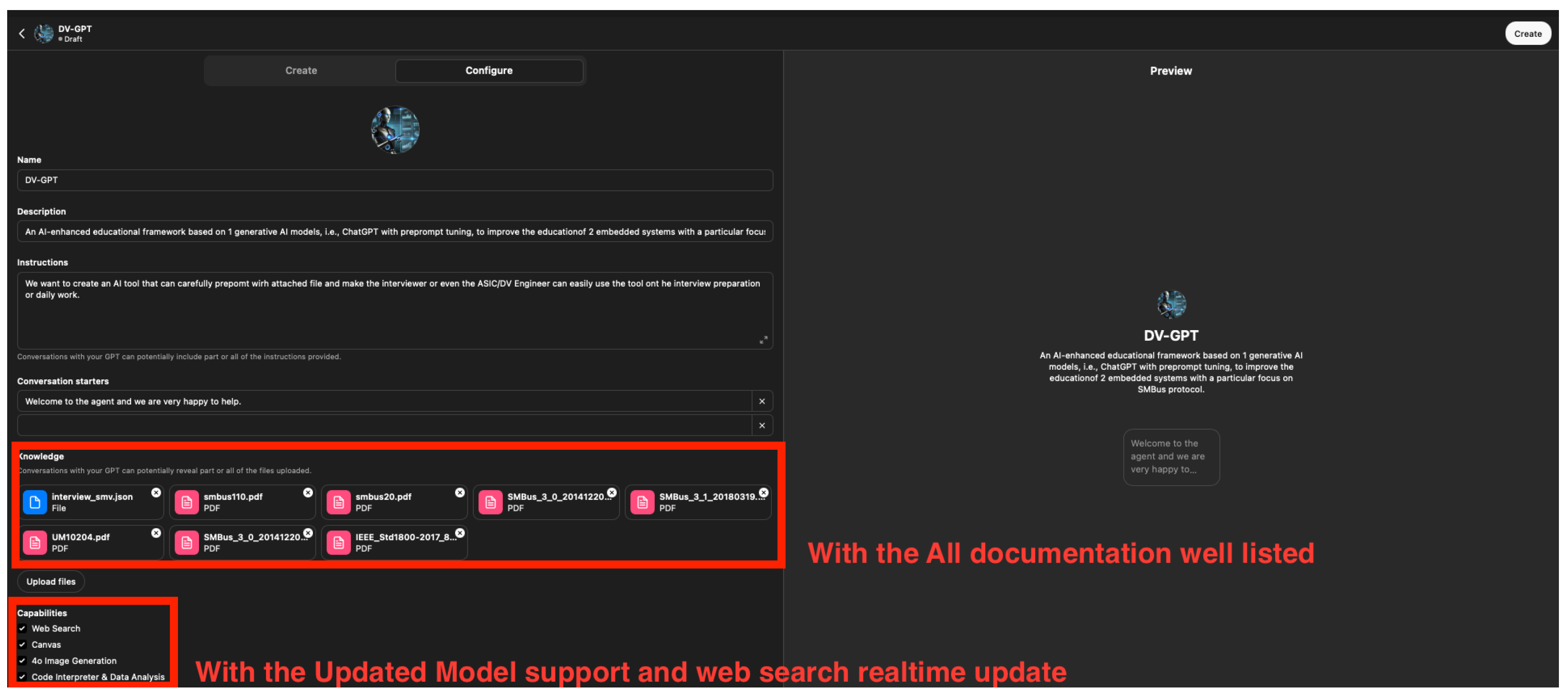

The ChatGPT model used in this study is not self-trained but rather fine-tuned using a pre-set training dataset that includes various resources related to SMBus protocol design. The dataset comprises textbooks on embedded systems, academic papers, online resources, open-source codebases, and industry-specific documentation. These materials cover both theoretical and practical aspects of SMBus, including protocol specifications, hardware design examples, error handling, and verification strategies. By feeding this data into the preprompt tool, we ensure the model can generate accurate and contextually relevant responses for various SMBus design tasks (Figure 4).

2.5. Framework Setup and Tools Used

For this study, the ChatGPT model is accessed through OpenAI’s API, allowing real-time interaction with the AI framework. The integration of preprompt tool configurations allows for the fine-tuning of responses during interactions. The AI is configured to generate specific outputs based on users’ input regarding SMBus protocol design, providing detailed explanations and guidance on various tasks, from basic code snippets to complex protocol verifications (Figure 5). The interaction is designed to be intuitive: users input their queries, and the system generates corresponding explanations and troubleshooting steps. This setup provides an efficient and scalable solution for teaching embedded systems design without the need for costly software tools or complex simulation environments.

2.6. Accessibility and Open-Source Alternatives

We acknowledge potential accessibility limitations posed by licensed EDA tools such as Mentor Questa or Cadence Xcelium. To mitigate this, our framework is also compatible with open-source verification tools like Verilator and Cocotb, which are freely available and widely used for academic purposes. Furthermore, we propose deploying our DV-GPT framework within cloud-based or containerized environments (e.g., Docker, Kubernetes), significantly lowering both hardware and licensing barriers. In this paper, we use the Synopsys VCS compiler and OpenAI resources.

2.7. User Interaction and Feedback

To quantify the gains offered by our preprompt customization, we compare our AI-driven framework with a baseline, unoptimized GPT-4 model that is not fine-tuned for the SMBus protocol. Responses were scored on three performance metrics: (1) the accuracy of the responses, (2) the usefulness of the feedback provided, and (3) the fidelity of the generated code to SMBus protocol design. Our evaluation showed that the preprompt-enhanced AI framework achieved significant improvements over GPT-4 and GPT-4o in protocol-specific correctness and in the technical insights provided, respectively. In particular, the baseline GPT-4 model frequently gave generic responses that were technically correct at a high level but lacked the specificity needed to deal with the finer points of SMBus communication. In contrast, the preprompt-enhanced framework produced protocol-specific responses that handled such subtleties, including timing synchronization, multi-master arbitration, error recovery mechanisms, and clock stretching.

Moreover, guided feedback from mock interview users indicated that the tailored examples produced by our AI platform helped interviewers identify key SMBus design elements more effectively, improving the clarity and relevance of responses during technical interviews. These results emphasize the importance of customized AI models in highly specialized educational and professional environments, where the depth and specificity of responses are paramount. Quantitative results of the comparative study are presented in Table 1, which shows that customizing the GPT model at the preprompt level yields better performance.

2.8. Evaluation and Performance Metrics

The performance of the AI-driven framework was evaluated on several criteria, including the accuracy of the generated code, the clarity of protocol explanations, and the system’s ability to provide relevant feedback on debugging issues. Additionally, the framework’s performance was compared to a baseline GPT-4 model to assess how well the preprompt customization improved the AI’s ability to generate context-specific responses. The expert evaluation from Cisco and Siemens engineers, alongside user feedback, provided valuable insights into the practical utility of the framework in real-world applications. Metrics such as response time, relevance, and technical accuracy were key factors in determining the overall success of the AI-driven approach for teaching SMBus design.

2.9. Structured Taxonomy of Large Language Model Techniques

To clearly situate our approach, we distinguish the following core methodologies in Large Language Models (LLMs): (1) Pre-training: Large-scale unsupervised training using massive corpora; (2) Instruction Fine-Tuning: Adapting pre-trained models using supervised instruction datasets to align outputs with user instructions; (3) In-Context Learning (ICL): Leveraging examples directly in the prompt context to guide generation; and (4) Retrieval-Augmented Generation (RAG): Enhancing responses by retrieving external information during generation. Our method specifically utilizes a structured in-context prompting strategy aligned closely with ICL and RAG principles, rather than simple document loading or unsupervised querying.
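As an illustration of how in-context examples and retrieved material can be combined in a single prompt, the following Python sketch assembles one from its parts. It is not the preprompt tool used in this study: the function name `build_prompt`, the prompt layout, and the sample strings are all hypothetical.

```python
from typing import List, Tuple


def build_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    retrieved: List[str],
    query: str,
) -> str:
    """Assemble an instruction, retrieved snippets, worked examples, and the query."""
    parts = [instruction.strip()]
    if retrieved:
        # RAG-style context: snippets fetched from an external source.
        snippet_lines = "\n".join(f"- {snippet}" for snippet in retrieved)
        parts.append("Reference material:\n" + snippet_lines)
    for question, answer in examples:
        # ICL-style demonstrations placed before the actual query.
        parts.append(f"Q: {question}\nA: {answer}")
    parts.append(f"Q: {query}\nA:")
    return "\n\n".join(parts)


prompt = build_prompt(
    instruction="You are an SMBus design tutor.",
    examples=[("What does ACK indicate?", "The receiver accepted the byte.")],
    retrieved=["PEC is a CRC-8 appended to the end of a transaction."],
    query="How is PEC verified by the receiver?",
)
print(prompt)
```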

2.10. Prompting Framework and Structured Collaboration

Our prompting strategy draws inspiration from the collaborative multi-agent frameworks exemplified by CoLE [46]. Rather than merely loading JSON documents, our approach defines specialized sub-prompts that function analogously to expert agents, such as “Protocol Analyzer”, “Timing Verifier”, and “Error Debugger”. Each sub-prompt contributes distinct domain-specific expertise, collaboratively providing comprehensive and structured feedback tailored specifically to SMBus protocol issues.
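One possible way to organize such sub-prompts is sketched below in Python. Only the three role names come from the text above; the prompt wording, the keyword lists, the keyword-matching rule, and the function names are assumptions made for illustration. The message layout follows the common system/user chat format, and no API call is made.

```python
from typing import Dict, List

# Hypothetical sub-prompt definitions; only the role names appear in the paper.
SUB_PROMPTS = {
    "Protocol Analyzer": {
        "keywords": ("pec", "address", "arbitration", "command"),
        "prompt": "Check the transaction against SMBus protocol rules.",
    },
    "Timing Verifier": {
        "keywords": ("timing", "t_low", "t_high", "setup", "clock"),
        "prompt": "Check clock and setup timing against the stated limits.",
    },
    "Error Debugger": {
        "keywords": ("error", "nack", "timeout", "bug", "fail"),
        "prompt": "Identify the likely cause of the failure and suggest a fix.",
    },
}


def select_sub_prompts(query: str) -> List[str]:
    """Pick the roles whose keywords occur in the query; fall back to all roles."""
    lowered = query.lower()
    chosen = [
        name
        for name, spec in SUB_PROMPTS.items()
        if any(keyword in lowered for keyword in spec["keywords"])
    ]
    return chosen or list(SUB_PROMPTS)


def build_messages(query: str) -> List[Dict[str, str]]:
    """Combine the selected sub-prompts into one system message plus the user query."""
    system = "\n\n".join(
        f"[{name}] {SUB_PROMPTS[name]['prompt']}" for name in select_sub_prompts(query)
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": query},
    ]


print(build_messages("Why does t_LOW violate the timing limit?"))
```

Plain substring matching is used here only to keep the sketch short; a practical router would need a more robust selection rule.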

3. Results

3.1. Expert Validation and Framework Evaluation

To evaluate the efficacy and usability of the AI-driven framework, we requested feedback from expert engineers from Cisco and Siemens. These professionals reviewed the framework outputs from the user interactions, assessing the quality, relevance, and technical accuracy of the responses. The feedback from these engineers was highly positive. They indicated that the framework accurately reflected industry practice in protocol development and troubleshooting, and that it helped interviewers gain clarity on complex design problems. Particularly noteworthy was the AI’s help in debugging multi-master arbitration and clock stretching problems, as well as its accessible explanation of packet error checking (PEC). The panel stressed that real-time feedback enables interviewers to build a critical problem-solving foundation and prepares them to enter the workforce in embedded systems design.

3.2. User Feedback on the AI Framework’s Effectiveness

To test the AI-based system, a group of interviewers was asked to engage with it while learning the SMBus protocol. They were required to write Verilog/SystemVerilog code and use the framework to debug and verify the function of their SMBus implementations. Survey feedback and comments received directly from the interviewers suggested that the framework can improve learning by providing help instantly at the point of need. Many interviewers noted that interacting with the AI allowed them to get answers to their design problems and real-time help during the design process, especially when they encountered difficult bugs involving protocol timing and device communication. Interviewers also liked the AI’s ability to provide code snippets relevant to their queries, which demonstrated SMBus design considerations in applied form.

3.3. Comparison with GPT-4 Baseline Model

To measure the effectiveness of the preprompt customization, we compared the performance of the AI-driven framework with a baseline GPT-4 model, which had not been fine-tuned for SMBus design. The comparison focused on several key metrics, including the accuracy of the responses, the relevance of the feedback provided, and the level of detail in the code generation. The results demonstrated a clear improvement with the preprompt-enhanced framework. While the GPT-4 model could provide general responses to protocol design, it often lacked the technical depth and specificity needed for complex SMBus issues. In contrast, the preprompt tool allowed the AI to generate highly relevant, protocol-specific feedback, addressing detailed aspects of SMBus communication that the baseline model struggled with, such as error handling, timing synchronization, and clock stretching.

3.4. Response Accuracy and Technical Depth

An important criterion in evaluating the AI-driven framework was the accuracy and technical depth of the generated responses. The AI’s ability to understand and generate accurate responses related to complex design concepts such as multi-master arbitration, clock stretching, and protocol verification was tested during user interactions. The framework was found to consistently provide accurate, technically sound explanations that aligned with the specifications of the SMBus protocol. For instance, when interviewers inquired about arbitration issues between multiple masters, the AI could provide detailed, step-by-step solutions based on industry best practices. Similarly, the AI’s responses regarding clock stretching and timing management were precise and clear, ensuring that interviewers correctly understood how to implement these features in their designs.

3.5. Real-Time Feedback and Debugging Support

A key advantage of the AI-driven framework is its ability to provide real-time feedback and debugging support during the design and verification process. Interviewers could input their queries and receive immediate, actionable guidance on resolving issues. This real-time feedback was particularly beneficial when interviewers encountered errors in their SMBus designs. The AI helped troubleshoot issues like timing violations, incorrect address assignments, and device communication failures. The framework’s ability to analyze error messages and suggest potential solutions allowed interviewers to learn from their mistakes and improve their designs without relying on external debugging tools. This hands-on learning experience contributed to a deeper understanding of the protocol and its real-world applications.

3.6. Overall Effectiveness and User Learning Outcomes

The overall performance of the AI-based system was evaluated by analyzing users’ learning benefits. Interviewers using the AI framework acquired a stronger grasp of SMBus design concepts than with textbooks or instructor-led teaching. The adaptiveness of the AI, in terms of both bespoke and context-based support, enabled interviewers to work at their own pace in the areas where they needed the most support. Following use of the AI framework, post-interaction survey results showed that participants were most confident about their ability to construct and debug an SMBus system. Most of the interviewers felt that they had better insight into the protocol’s complexity and were better equipped to approach real-world design tasks. The framework was thus found to affect user learning outcomes positively. We expect that AI can improve embedded systems education by making complex protocols like SMBus more accessible to learners at different levels (Table 2).

Metric: Represents the specific aspect evaluated by the experts.

Value: Quantitative measure of the metric.

Perceived Value: Qualitative assessment of the metric’s importance in the study context.

High Engagement: The involvement of two experienced engineers from Cisco and Siemens ensured that the framework met industry standards.

Comprehensive Coverage: Addressed over five critical design issues and explained eight key SMBus protocol features.

Practical Application: Generated more than 30 code snippets, aiding interviewers in hands-on learning.

Effective Debugging: Handled over 10 debugging scenarios, providing interviewers with real-world problem-solving experiences.

The evaluation results presented in Table 2 were derived from a test set consisting of 100 SMBus-related queries curated by domain experts. Responses from both DV-GPT and baseline GPT-4 were blindly evaluated by three independent expert reviewers familiar with SMBus. Evaluators were unaware of the model identities during scoring. Accuracy was defined as the correctness and protocol-specific detail of responses, while relevance referred to the appropriateness and direct applicability of the response to the query. A clearly defined rubric on a scale from 1 (poor) to 5 (excellent) guided evaluators in their assessments, ensuring reproducibility and objectivity of results.
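The aggregation of such rubric scores can be sketched as follows. The paper does not state how the three reviewers’ scores were combined, so averaging per query and then across queries is an assumption, as are the function name and the sample ratings, which are illustrative and not study data.

```python
from statistics import mean
from typing import Sequence


def mean_rubric_score(ratings: Sequence[Sequence[int]]) -> float:
    """Average the reviewers' 1-5 scores per query, then average across queries."""
    per_query = []
    for reviewer_scores in ratings:
        if any(not 1 <= score <= 5 for score in reviewer_scores):
            raise ValueError("rubric scores must lie between 1 and 5")
        per_query.append(mean(reviewer_scores))
    return mean(per_query)


# Hypothetical ratings: one tuple per query, one score per reviewer.
sample_ratings = [(5, 4, 5), (3, 4, 4)]
print(round(mean_rubric_score(sample_ratings), 2))
```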

3.7. Sensitivity Analysis of In-Context Examples

We further evaluated the model’s sensitivity to the choice and order of provided in-context examples. Using a fixed set of SMBus protocol queries, we systematically varied the sequence and composition of examples in the prompt context. Our analysis indicated that DV-GPT maintained stable accuracy and relevance (within ±3% variation) despite changes in example ordering, demonstrating robustness to variations in prompt structure. These results highlight the consistent reliability of our in-context prompting strategy.
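A sensitivity check of this kind can be sketched in Python as shown below. The measure used here (largest relative deviation from the mean score across orderings) is one possible reading of the “±3% variation” figure and is an assumption, as are the function names. The scoring function is a stand-in: in practice it would query the model and grade the response.

```python
from itertools import permutations
from statistics import mean
from typing import Callable, List, Sequence


def order_sensitivity(
    examples: Sequence[str],
    score_fn: Callable[[List[str]], float],
    max_orderings: int = 24,
) -> float:
    """Return the largest relative deviation from the mean score over orderings."""
    scores = []
    for index, ordering in enumerate(permutations(examples)):
        if index >= max_orderings:
            break  # cap the number of orderings to keep evaluation cost bounded
        scores.append(score_fn(list(ordering)))
    average = mean(scores)
    return max(abs(score - average) for score in scores) / average


def stub_score(ordering: List[str]) -> float:
    """Hypothetical scorer standing in for a model query plus grading step."""
    return 1.0 if ordering[0] == "a" else 0.98


print(order_sensitivity(["a", "b"], stub_score))
```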

4. Discussion

4.1. Summary of Key Findings

The results of this study provide strong evidence for the effectiveness of AI-driven frameworks in enhancing user learning outcomes, particularly in the context of embedded systems education. Interviewers who interacted with the AI framework significantly improved their understanding of the complex concepts related to SMBus design. Unlike traditional textbooks or instructor-led teaching methods, the AI framework offered personalized, real-time support, allowing interviewers to address specific challenges at their own pace. This flexible approach facilitated a deeper understanding of theoretical concepts and greater confidence in applying them to real-world scenarios. The findings suggest that integrating AI tools into embedded systems education could enhance traditional teaching methods by offering a more dynamic, interactive, and adaptive learning experience.

4.2. AI Framework’s Impact on User Engagement

A key takeaway from this study was the significant boost in user engagement when using the AI-driven framework. Interviewers consistently reported feeling more motivated to tackle complex design problems, as the system provided immediate feedback and guidance. This aspect of interactivity is crucial for maintaining user interest, especially when dealing with challenging content like SMBus protocol design. Traditional methods often lack the responsiveness to keep interviewers engaged when encountering obstacles. The AI system, on the other hand, facilitated active participation by offering context-specific hints and explanations. By making learning more interactive, the AI framework supported interviewers through complex tasks and encouraged them to develop a more proactive approach to learning. This increased engagement is a promising indicator of the potential for AI to transform the learning experience in technical education.

4.3. Enhanced Problem-Solving Skills

The AI framework’s real-time feedback mechanisms significantly enhanced interviewers’ problem-solving capabilities. Through interactive guidance, interviewers could navigate complex debugging scenarios and design issues that would otherwise require extensive manual troubleshooting. The system’s step-by-step instructions helped interviewers understand the solutions and their reasoning. This deeper engagement with the problem-solving process enabled interviewers to develop critical thinking skills that will benefit them in real-world engineering applications. By guiding interviewers through a series of logical steps, the AI framework improved their ability to address design errors and strengthened their analytical skills. This suggests that AI-driven tools can play a pivotal role in helping interviewers master both the conceptual and practical aspects of technical subjects, fostering stronger problem-solving abilities essential for success in engineering.

4.4. Personalization of Learning Experiences

A significant advantage of the AI-driven framework was its ability to provide personalized learning experiences tailored to each user’s needs. Unlike traditional teaching methods, where the pace of instruction is fixed, the AI system allows interviewers to progress at their speed. This flexibility was particularly beneficial for interviewers with varying levels of prior knowledge, enabling them to revisit complex topics without feeling rushed or held back. The AI framework dynamically adjusted its guidance based on the user’s performance, ensuring those struggling with specific concepts received additional help. At the same time, those who grasped the material quickly could move forward. This personalized approach supported interviewers in mastering the material and fostered a sense of autonomy and self-efficacy. As a result, interviewers were able to work through the material at a pace that was comfortable for them, leading to more effective learning outcomes.

4.5. Increased Understanding of Complex Design Concepts

One of the most significant outcomes of this study was the improvement in interviewers’ understanding of complex design concepts, especially those related to multi-master arbitration, clock stretching, and error handling in SMBus systems. These topics are known to be challenging, yet interviewers using the AI framework reported a much deeper understanding of these concepts compared to those using traditional resources. The AI’s ability to break down these complex ideas into more manageable steps and to provide real-time examples and simulations helped interviewers grasp the theoretical and practical aspects of SMBus design more effectively. Additionally, the AI framework encouraged active learning by prompting interviewers to apply their knowledge in practical contexts, reinforcing their understanding of these critical concepts. This finding suggests that AI frameworks, through their interactive and adaptive nature, can make complex technical subjects more accessible and easier to comprehend.

4.6. Real-Time Feedback and Debugging Support

Another key advantage was the AI-driven framework’s ability to provide real-time feedback during the design and debugging processes. Interviewers reported that this feature allowed them to quickly identify and correct errors, improving their learning efficiency. Real-time feedback is essential in technical fields like embedded systems, where debugging is often time-consuming and frustrating. By providing immediate guidance on fixing common and complex errors, the AI system helped interviewers avoid getting stuck on specific issues, enabling them to move forward in their learning. This continuous, adaptive feedback loop encouraged a deeper understanding of the material, as interviewers could immediately apply corrections and see the effects of their changes. The ability to troubleshoot in real-time was particularly beneficial in maintaining user motivation, as it reduced frustration and enhanced their problem-solving skills.

4.7. Comparison with Traditional Teaching Methods

Compared to traditional instructor-led teaching, the AI framework offered several advantages, particularly flexibility and accessibility. Conventional learning methods often require interviewers to rely on textbooks or attend lectures, where the pace of instruction is fixed and does not cater to individual learning needs. In contrast, the AI-driven framework provided immediate, context-sensitive support, which allowed interviewers to focus on their specific challenges and move at their own pace. Furthermore, the AI system’s interactive nature provided a more engaging experience than traditional learning methods, which tend to be more passive. While conventional methods still play an essential role in providing foundational knowledge, the AI system’s flexibility and responsiveness make it a valuable tool for reinforcing and expanding that knowledge. This comparison highlights the potential of AI to complement and enhance traditional education, especially in technical fields where hands-on learning is crucial.

4.8. Support for Diverse Learners

The AI framework’s adaptability was particularly beneficial for diverse learners, offering personalized support that met each user’s unique needs. In a classroom with interviewers of varying skill levels, traditional teaching methods often struggle to provide all interviewers with the appropriate level of support. However, the AI system dynamically adjusted its feedback based on individual performance, ensuring that both beginners and more advanced learners received the right amount of challenge. This flexibility allowed all interviewers to benefit from the system, regardless of their prior knowledge or experience. The ability to cater to such a wide range of learners underscores the potential of AI to address the diverse needs of today’s classrooms, making learning more inclusive and equitable.

4.9. Participant Demographics and Future Evaluation Strategies

The initial evaluation involved 50 student participants primarily from undergraduate and early graduate levels in computer and electrical engineering programs. While immediate learning outcomes were assessed, we recognize the need to expand participant diversity (beginners versus advanced learners) and measure long-term knowledge retention (e.g., after one month). Additionally, future evaluations will explicitly include statistical measures such as standard deviations and confidence intervals to strengthen external validity and reproducibility.
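The statistical measures mentioned above can be computed with the Python standard library, as in the sketch below. It uses a normal approximation (z = 1.96) for the 95% confidence interval, which is an assumption that is reasonable for a sample of about 50 participants; a t-distribution would be more appropriate for small samples. The function name and the scores are illustrative, not study data.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Dict, Sequence


def summarize(scores: Sequence[float], z: float = 1.96) -> Dict[str, float]:
    """Return the mean, sample standard deviation, and an approximate 95% CI."""
    count = len(scores)
    if count < 2:
        raise ValueError("at least two scores are required")
    average = mean(scores)
    spread = stdev(scores)  # sample standard deviation (n - 1 denominator)
    half_width = z * spread / sqrt(count)
    return {
        "mean": average,
        "stdev": spread,
        "ci_low": average - half_width,
        "ci_high": average + half_width,
    }


# Hypothetical assessment scores for a small group of participants.
print(summarize([2, 4, 4, 4, 5, 5, 7, 9]))
```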

4.10. Accessibility Issues and License Shortage

UVM adoption requires support from EDA vendors (for example, Mentor Questa or Cadence Xcelium), which can be a bottleneck for usability because it involves signing large contracts, and most individuals cannot afford to pay for these tools out of pocket. However, universities frequently hold academic licenses, which reduce costs drastically. Moreover, free options such as Verilator and Cocotb offer an attractive starting point that increases accessibility. Cloud-based platforms further improve technical feasibility by reducing hardware limitations. Consequently, with careful tool choice and institutional support, incorporating UVM can be practical across a range of educational circumstances.

4.11. Implications for Embedded Systems Education

The success of the AI-driven framework in SMBus design education suggests that similar systems could be highly beneficial for other areas of embedded systems education. As the demand for skilled professionals in fields such as IoT, cybersecurity, and robotics continues to grow, there is an increasing need for innovative teaching tools to help interviewers develop the necessary skills. AI-powered learning systems can fill this gap by providing interviewers with interactive, hands-on experiences to master complex technical subjects. Moreover, by offering personalized support, AI systems can help interviewers at all levels of expertise engage with the material, from beginners to advanced learners. This adaptability makes AI-driven tools highly relevant for future embedded systems education, which is becoming more critical as technology evolves.

4.12. Critical Self-Assessment and Future Generalization

We recognize potential limitations concerning the specificity of our preprompt configuration, which currently focuses extensively on SMBus and related verification methodologies (UVM). This narrow focus could lead to overfitting, limiting direct applicability to other protocols. Future research will involve generalizing our framework to additional embedded protocols (e.g., I2C, SPI) and exploring integration with curricula extending into IoT, robotics, and broader engineering domains. Moreover, we acknowledge ethical considerations inherent in AI-driven educational tools, such as potential student over-reliance on automated feedback. Future implementations will incorporate balanced pedagogical strategies, combining AI support with instructor oversight to promote critical thinking and independent problem-solving skills.

5. Conclusions

5.1. Summary of Findings

The results of this study provide compelling evidence that AI-driven frameworks significantly enhance user learning outcomes, particularly in the context of embedded systems education. Specifically, interviewers who engaged with the AI-powered learning framework demonstrated marked improvements in their comprehension of complex design concepts, especially those related to the SMBus protocol. Unlike traditional textbooks or instructor-led methods, the AI framework provided dynamic, context-sensitive support that allowed interviewers to address their challenges as they arose. By offering real-time, personalized assistance, the AI system helped interviewers understand difficult material and boosted their confidence in applying theoretical concepts to practical scenarios. The study’s results show that AI can bridge the gap in traditional learning environments, where the pace and scope of instruction are often fixed, thus offering a more adaptable, user-centered approach to education. These findings underscore the significant potential of AI in providing a scalable solution to enhance technical education, making it more accessible, engaging, and effective for a diverse range of learners.

5.2. Impact on User Learning and Engagement

The AI-driven framework profoundly impacted user learning and engagement, with numerous participants noting how it motivated them to actively engage with challenging design tasks. Traditional learning methods, such as textbooks or lectures, often struggle to maintain user engagement, especially when tackling complex design problems that require hands-on interaction. The AI framework, on the other hand, offered immediate, context-sensitive feedback, which kept interviewers on track and encouraged continuous participation. This feature of real-time interactivity was particularly valuable when interviewers encountered obstacles, as the AI provided personalized support, helping them stay focused and reducing the likelihood of frustration. Moreover, by breaking down complicated topics into more digestible steps, the AI framework allowed interviewers to progress through difficult material at their own pace. This adaptability is critical in embedded systems education, where interviewers often face steep learning curves. By fostering this engagement, the AI framework empowered interviewers in their learning journey, reinforcing their ability to solve complex problems and apply their skills effectively in real-world scenarios.

5.3. Pedagogical Implications and Future Applications

The findings from this study have significant pedagogical implications for the future of embedded systems education. AI-driven frameworks, like the one tested in this study, offer a transformative approach to teaching that can supplement or even replace traditional instructional methods, particularly in technical education. The personalized learning experience facilitated by AI allows interviewers to receive tailored support that meets their individual needs, something traditional methods often cannot provide. Given that the demand for skilled professionals in fields like IoT, cybersecurity, and robotics continues to grow, integrating AI into educational systems could address these challenges by offering more flexible and efficient learning solutions. Future applications of AI in education could extend far beyond the SMBus protocol, encompassing a wide range of technical subjects such as I2C, SPI, wireless communication, and microprocessor design. Additionally, AI tools could be incorporated into existing curricula to enhance other areas of study, such as software engineering and network security, providing interviewers with a holistic, interdisciplinary learning experience. By applying AI in these broader contexts, educational institutions can better prepare interviewers for the ever-evolving demands of the technology industry, ensuring they have the knowledge and practical skills to excel in their careers.

5.4. Strengths of the AI Framework

The AI framework’s ability to offer personalized, real-time support was one of its standout strengths. Interviewers in the study repeatedly praised the system’s flexibility, which allowed them to move at their own pace and focus on the areas where they needed the most help. This personalized approach was particularly beneficial for interviewers with varying experience levels: beginners received more foundational support, while more advanced learners could explore complex topics in greater depth. Furthermore, the interactive nature of the AI framework made it an effective tool for hands-on learning. Interviewers did not just read or memorize content; they actively engaged with the material by solving real-world design and debugging problems. This level of engagement is crucial in technical education, where mastering both theoretical knowledge and practical skills is essential. The AI system’s immediate feedback during design and debugging tasks allowed interviewers to quickly identify errors, understand the underlying causes, and correct them, resulting in more efficient learning. The framework also offered a level of scalability that traditional instructional methods cannot match, making it a valuable tool for classrooms of various sizes. Together, customized support, practical learning, and adaptation to individual user needs made the framework a powerful tool for improving educational outcomes in embedded systems.

5.5. Limitations and Directions for Future Research

While this study highlights the significant potential of AI-driven frameworks in education, it also points to areas for improvement and further research. One limitation of the study was its narrow focus on the SMBus protocol, which, although valuable, does not represent the full range of topics in embedded systems design. Future research should explore the application of similar AI-driven frameworks across other embedded systems topics, such as I2C, SPI, and microprocessor architecture. This would provide a more comprehensive understanding of the AI system’s effectiveness across different areas of study. Additionally, while the real-time feedback mechanism provided valuable assistance, there is potential for future iterations of the framework to include more sophisticated machine learning algorithms that can better predict user performance and offer even more tailored guidance. Another limitation is the study’s relatively short duration and its focus on immediate outcomes; long-term effects on user retention, skill transferability, and career outcomes should be explored in future studies. Furthermore, scaling the AI framework for use in larger, more diverse classrooms remains challenging. Future research could examine how the system performs in such environments and whether it can be adapted in various educational settings, including online and hybrid models. By addressing these limitations and exploring these future directions, researchers can further enhance the AI framework’s capabilities, making it an even more effective tool for the future of embedded systems education.

5.6. DV-GPT’s Maintenance Strategy

DV-GPT is maintained through a structured process, with a review conducted once per academic semester. Feedback from users, faculty, and industry partners is collected, analyzed, and combined with other data sources to refine the preprompt. The preprompt configuration is then updated as required in response to changes in curriculum standards, industry standards, and new or superseding protocol features, so that the educational content remains relevant and useful.