Autonomous Textile Sorting Facility and Digital Twin Utilizing an AI-Reinforced Collaborative Robot

Abstract

1. Introduction

2. Related Work

3. Contributions

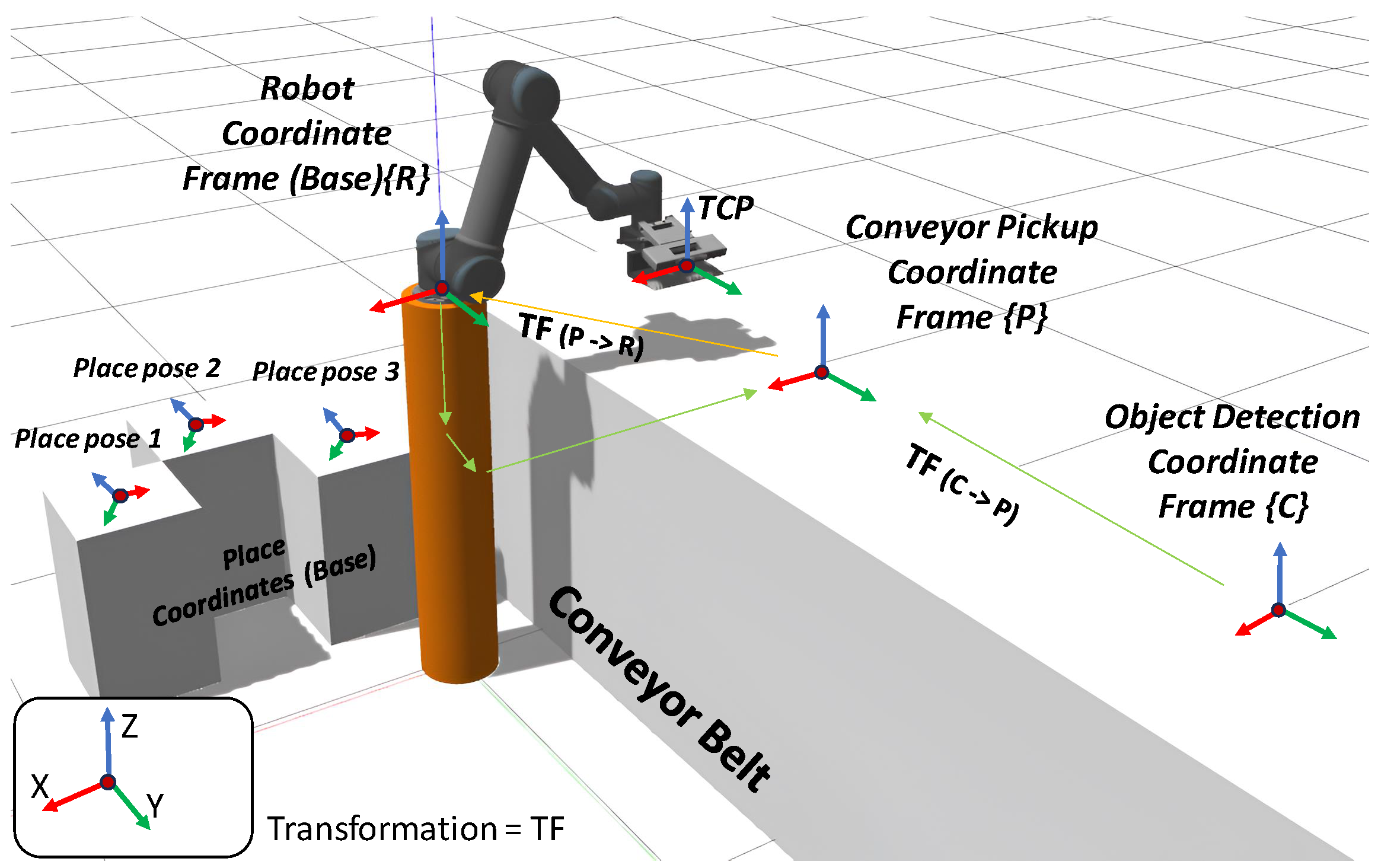

- Propose a system architecture of integrated hardware and software components that enables real-time textile classification, localization, and sorting on a moving conveyor belt, guided by an RGB-D camera and a computing device that runs multithreaded software for real-time control.
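
The localization step of this architecture can be illustrated with a minimal sketch that assumes a standard pinhole camera model, a depth image aligned to the colour image and expressed in metres, and a belt moving at a constant, known speed. It back-projects the pixel at the centre of a detected textile into camera coordinates and then shifts the point along the belt direction to compensate for the time between image capture and grasp. The intrinsics, the belt direction vector (assumed here to be expressed in the camera frame), and the latency value are hypothetical placeholders, not values from the authors' setup; a complete system would also transform the point into the robot base frame through a hand-eye calibration.

```python
from dataclasses import dataclass
from typing import Tuple

Point3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole intrinsics of the RGB-D camera (illustrative values only)."""
    fx: float  # focal length along x, in pixels
    fy: float  # focal length along y, in pixels
    cx: float  # principal point x, in pixels
    cy: float  # principal point y, in pixels


def deproject(u: float, v: float, depth_m: float, k: Intrinsics) -> Point3:
    """Back-project pixel (u, v) with metric depth into camera coordinates (metres)."""
    if depth_m <= 0.0:
        # Many depth sensors report 0 where no valid depth was measured.
        raise ValueError("no valid depth at this pixel")
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def predict_on_belt(point: Point3, belt_dir: Point3,
                    belt_speed_mps: float, dt_s: float) -> Point3:
    """Shift a point along the belt's unit direction by speed * elapsed time.

    Assumes constant belt speed and that belt_dir is a unit vector in the
    same frame as `point` (hypothetical simplifications).
    """
    return tuple(p + d * belt_speed_mps * dt_s for p, d in zip(point, belt_dir))


if __name__ == "__main__":
    cam = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)  # hypothetical
    centre = deproject(420.0, 240.0, 0.8, cam)   # bounding-box centre + depth
    target = predict_on_belt(centre, (1.0, 0.0, 0.0), belt_speed_mps=0.1, dt_s=0.5)
    print(centre, target)
```

Treating the belt motion as a pure translation keeps the prediction cheap enough to run for every detection; if the belt speed varies, the same function could be fed a speed measured by an encoder instead of a constant.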

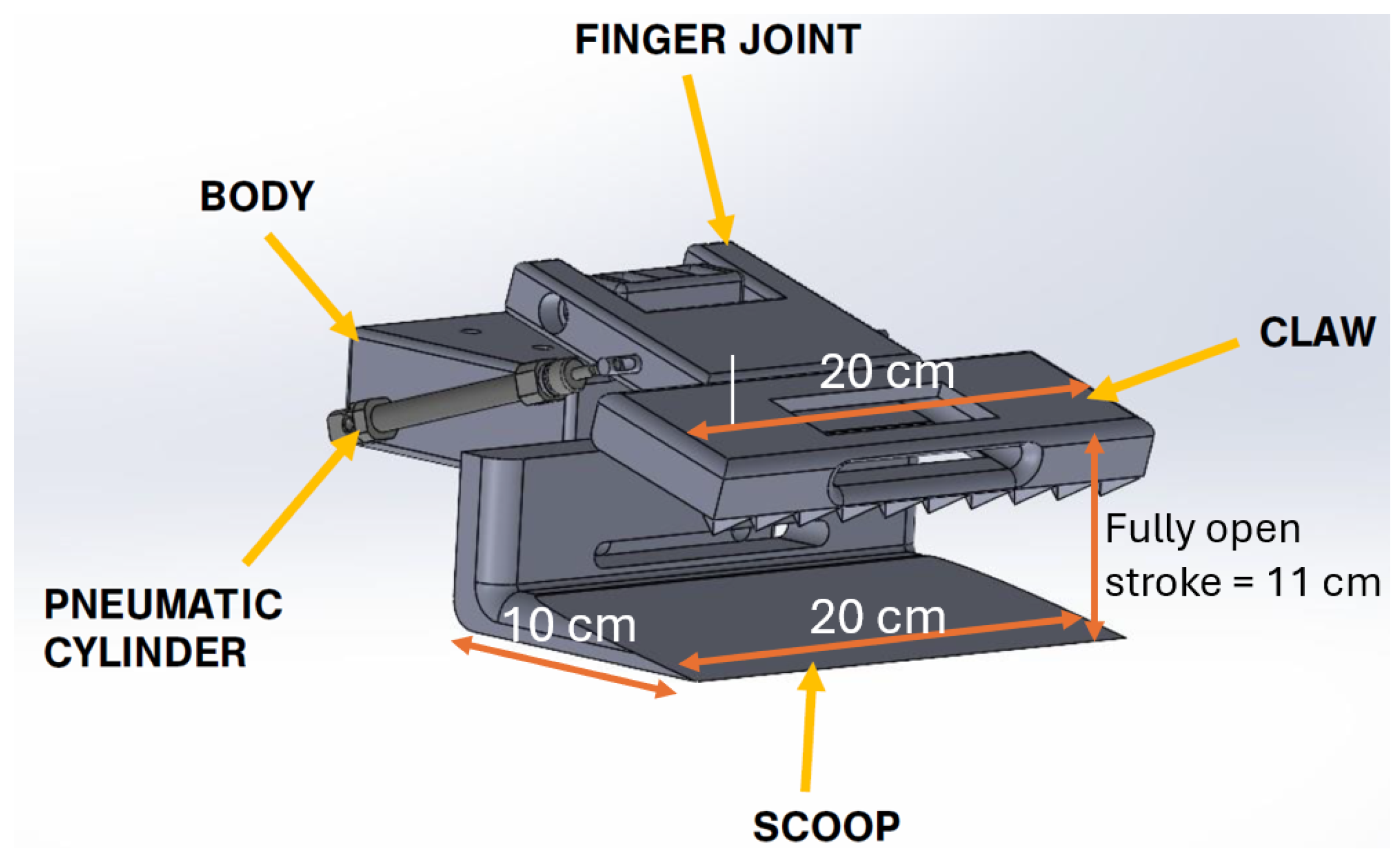

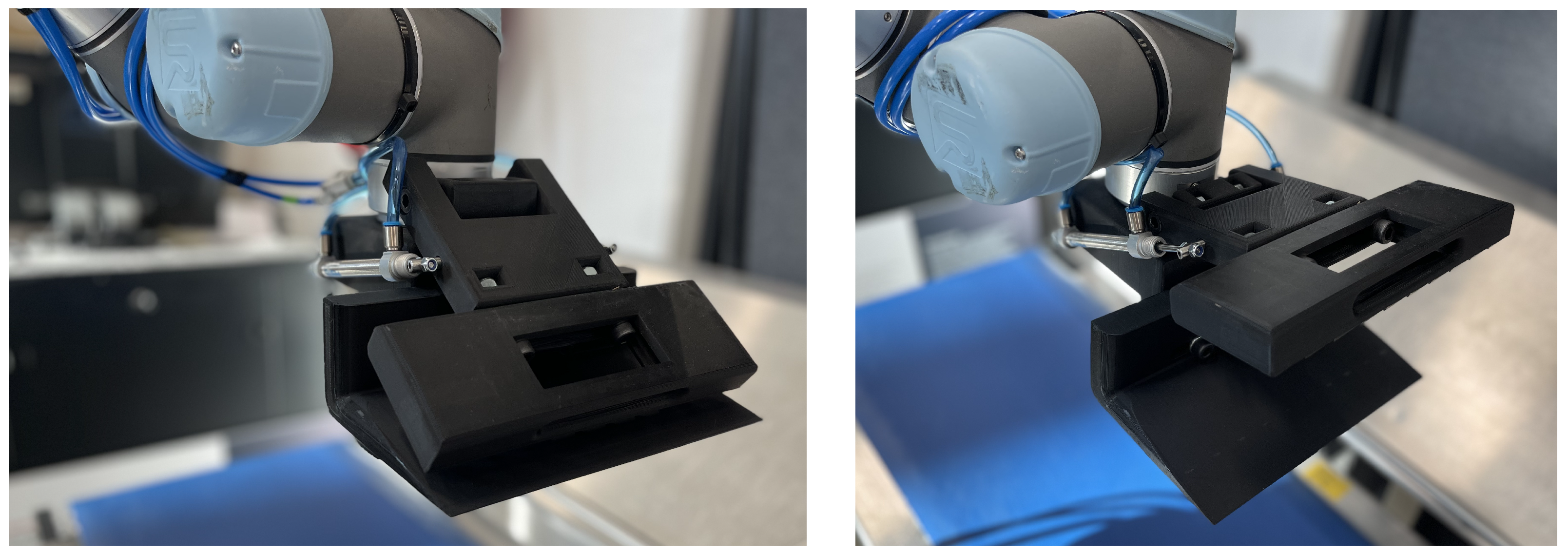

- Design and implement a custom gripper that accounts for the relevant industrial and functional constraints and can be mounted on a robot for pick-and-place operations.

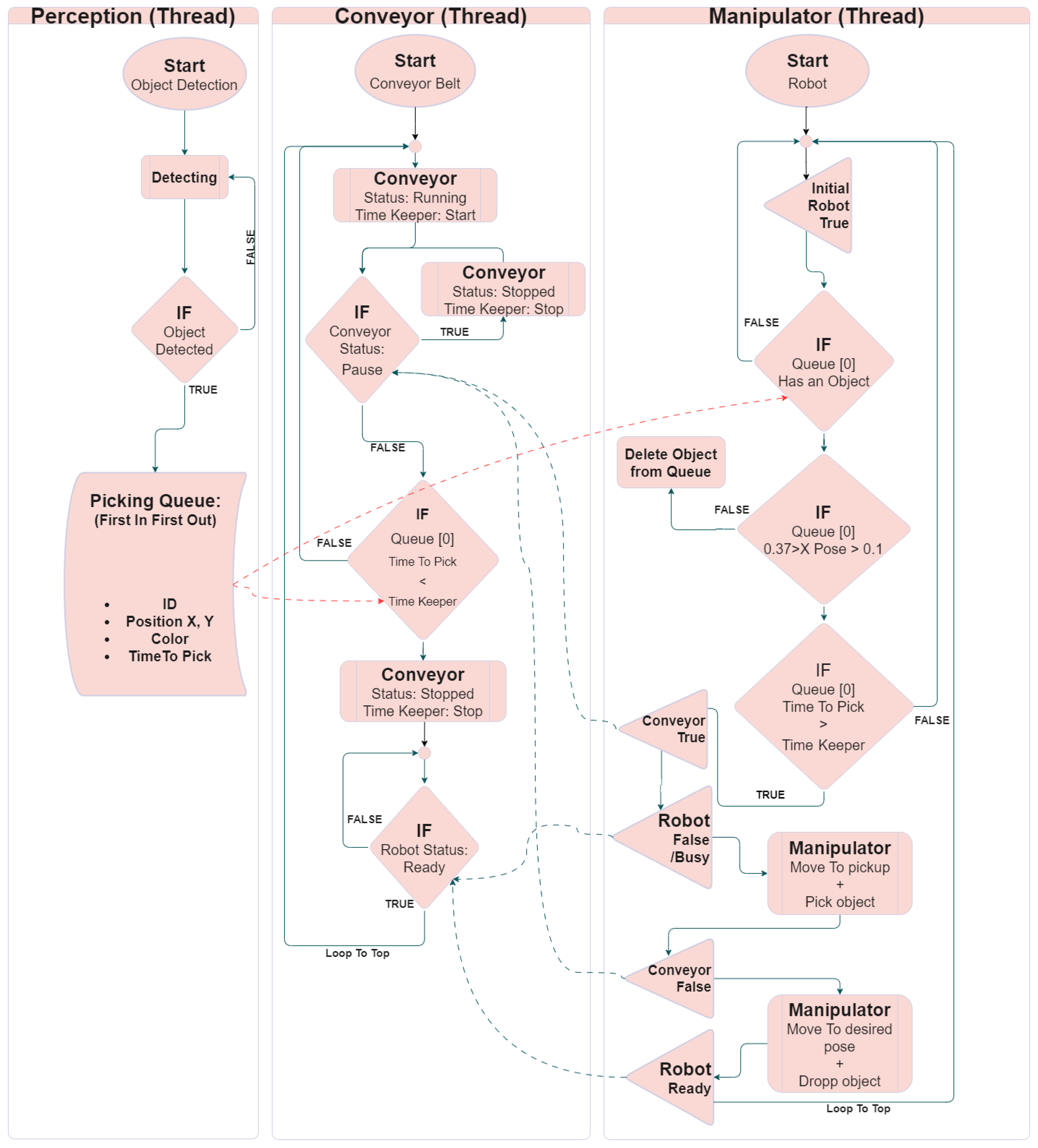

- Implement multithreaded software that integrates the hardware components (robot, conveyor belt, and camera) with the software components (object detection module and pick-and-place operations). Further, implement queue management for continuous operation and optimization, moving towards a smart, scalable, modular, and connected system.

- Optimize the system via (a) concurrent task management: streamlining the execution of concurrent tasks; (b) communication: ensuring seamless communication between the system components; and (c) coordination: achieving efficient coordination between the perception, decision-making, and actuation processes.
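
The queue-based integration and concurrent task management described in the last two contributions can be sketched as a producer-consumer pattern. This is a minimal sketch, not the authors' implementation: it uses Python's standard `threading` and `queue` modules, and the `Detection` message, the class labels, and the bin names are hypothetical. A perception thread publishes detections into a bounded FIFO queue, and a manipulator thread consumes them in order; a conveyor thread could be attached in the same way.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """Hypothetical message passed from the perception thread to the manipulator."""
    label: str       # textile class predicted by the detector
    position: tuple  # (x, y, z) grasp target in metres


_STOP = object()  # sentinel that tells the consumer to shut down


def perception_worker(frames, out_q: queue.Queue) -> None:
    """Producer: wrap each (label, position) result in a Detection and enqueue it."""
    for label, position in frames:
        out_q.put(Detection(label, position))  # blocks while the queue is full
    out_q.put(_STOP)


def manipulator_worker(in_q: queue.Queue, picked: list, bins: dict) -> None:
    """Consumer: take detections in FIFO order and assign each to its class bin."""
    while True:
        item = in_q.get()
        try:
            if item is _STOP:
                return
            # A real system would plan and execute a pick-and-place motion here.
            picked.append((item.label, bins.get(item.label, "reject")))
        finally:
            in_q.task_done()


def run_pipeline(frames, bins, maxsize=8):
    """Start both threads, wait for them to finish, and return the pick log."""
    q = queue.Queue(maxsize=maxsize)
    picked = []
    threads = [
        threading.Thread(target=perception_worker, args=(frames, q), name="perception"),
        threading.Thread(target=manipulator_worker, args=(q, picked, bins), name="manipulator"),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return picked


if __name__ == "__main__":
    frames = [("cotton", (0.1, 0.0, 0.8)), ("wool", (0.2, 0.0, 0.8)),
              ("polyester", (0.3, 0.0, 0.8))]
    print(run_pipeline(frames, {"cotton": "bin_A", "wool": "bin_B"}))
```

The bounded queue provides backpressure: if the manipulator falls behind, the perception thread blocks rather than accumulating an unbounded backlog. Whether to block or instead drop outdated detections is a design choice that depends on the belt speed, since a detection that waits too long may no longer be reachable. The sentinel object lets both threads terminate so they can be joined cleanly.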

4. Experimental Setup

4.1. Hardware Architecture

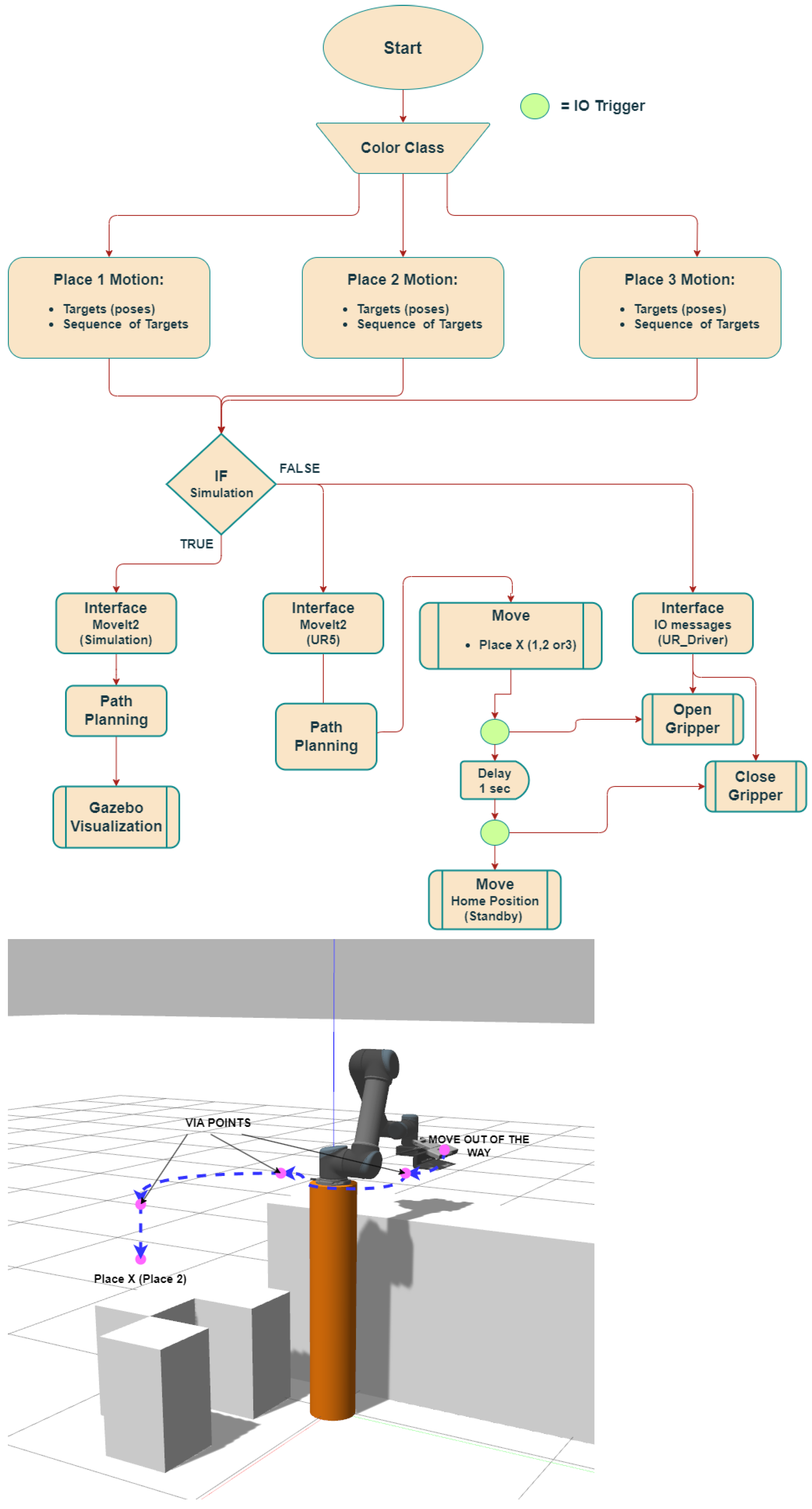

4.2. Software Architecture

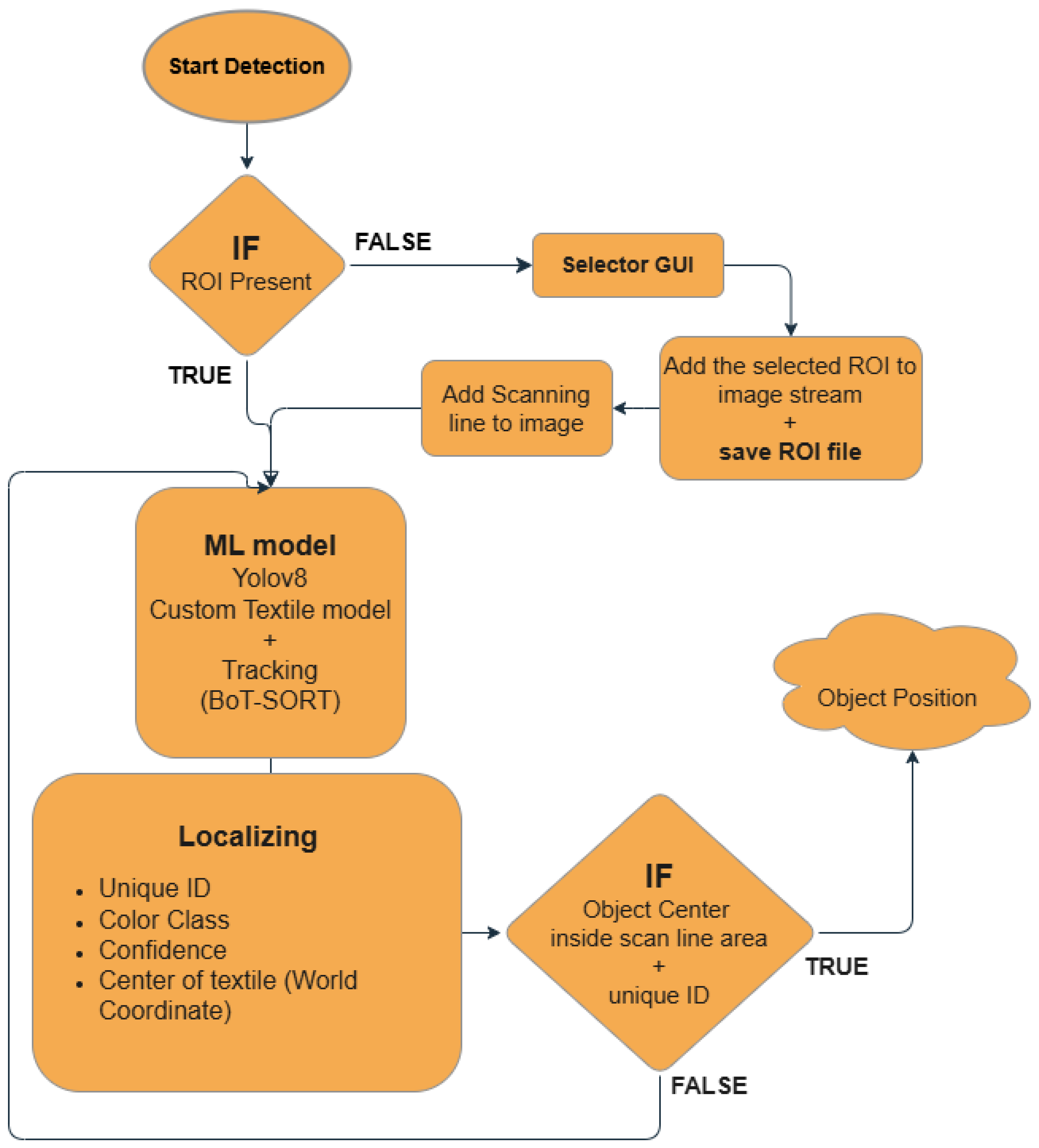

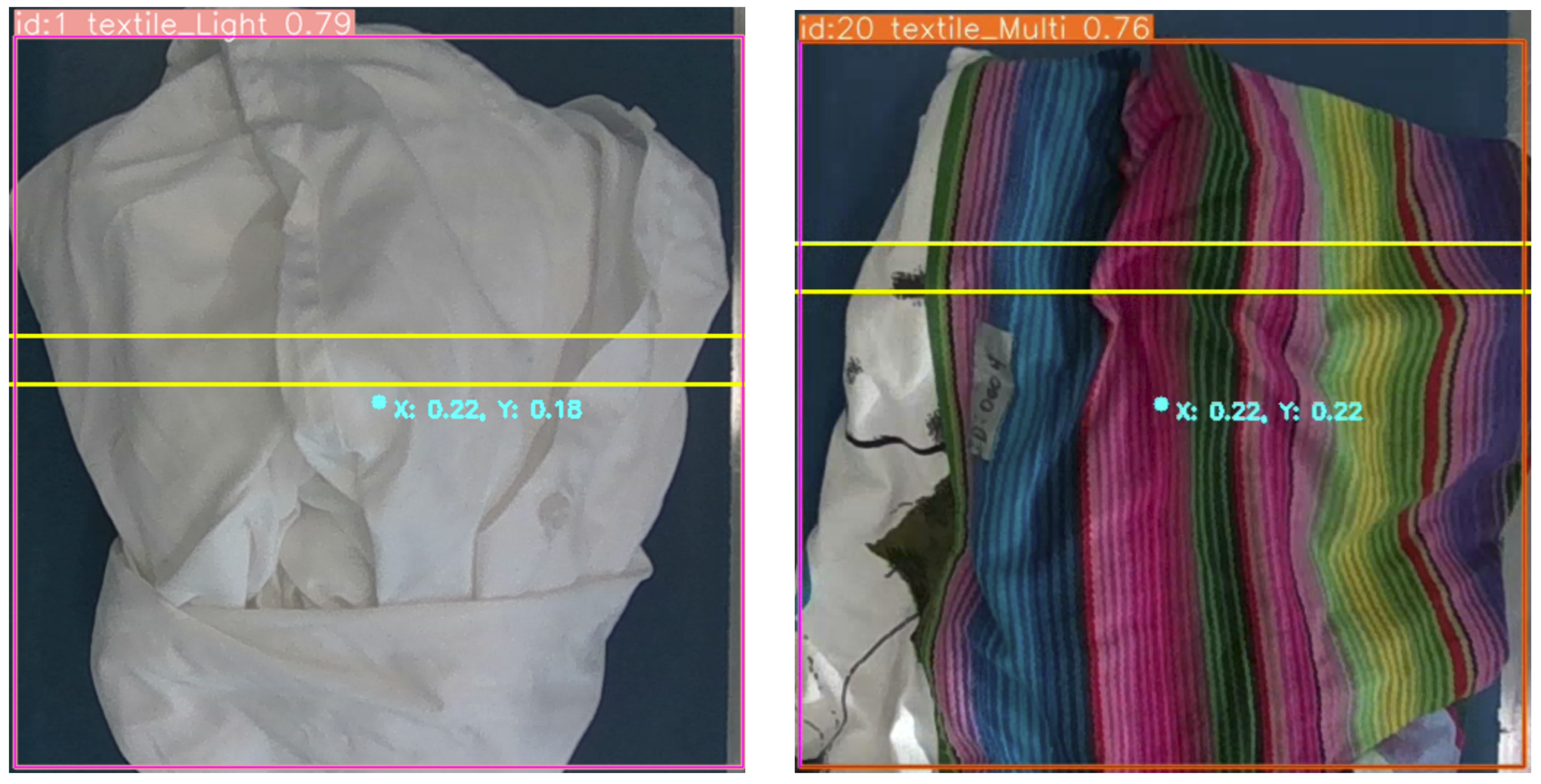

4.2.1. Textile Classification and Localization

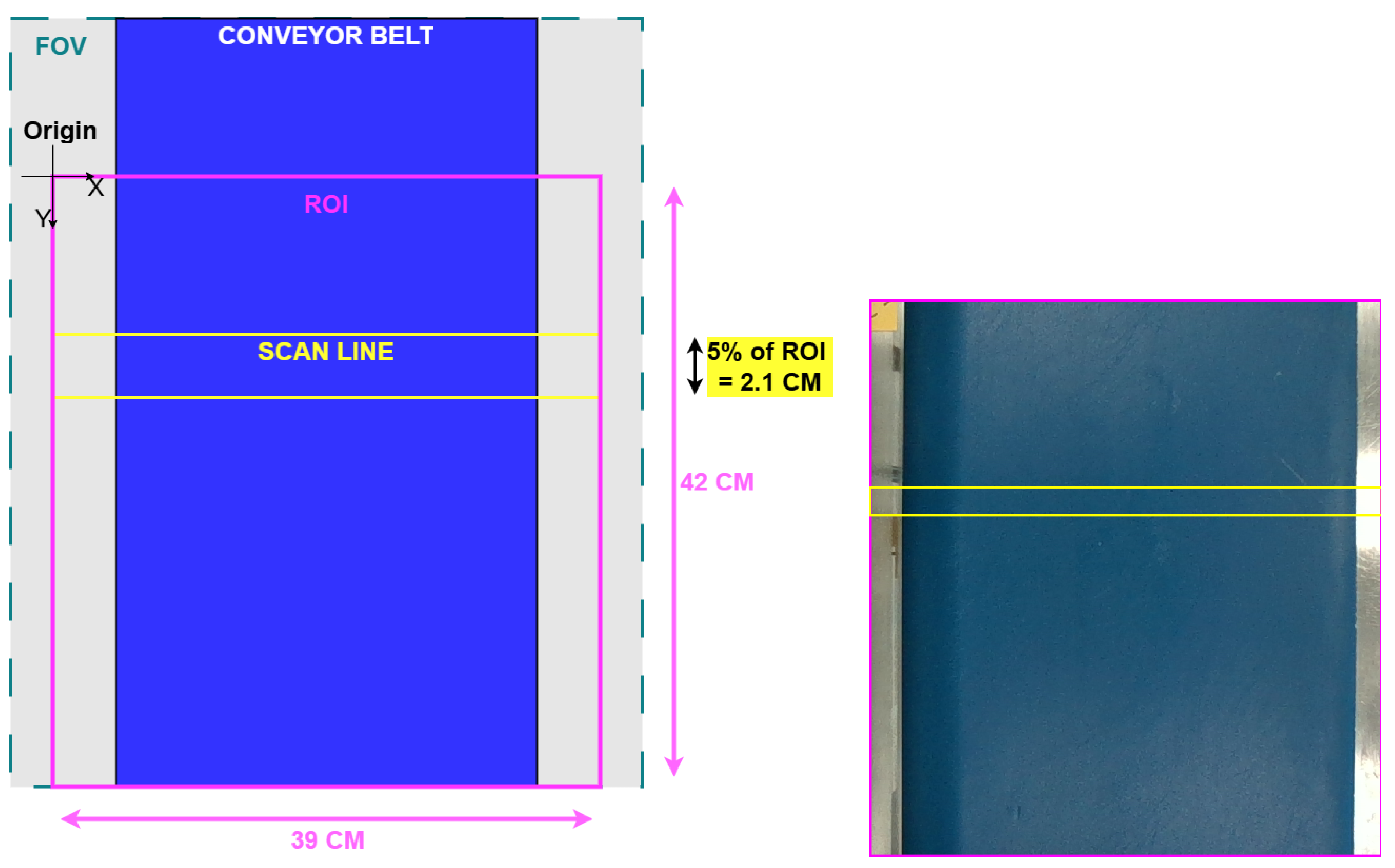

4.2.2. Region of Interest (ROI)

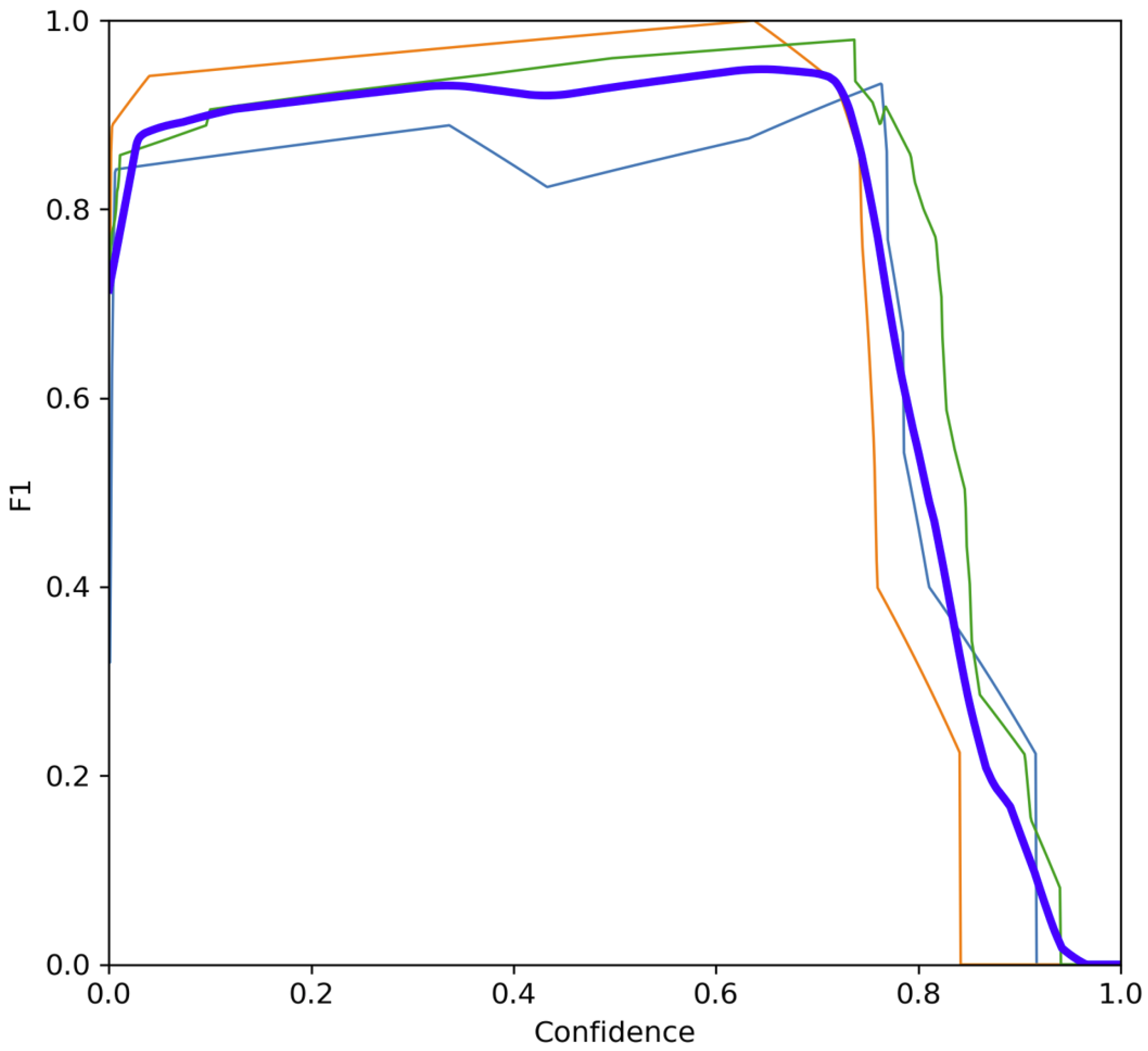

4.2.3. ML Model

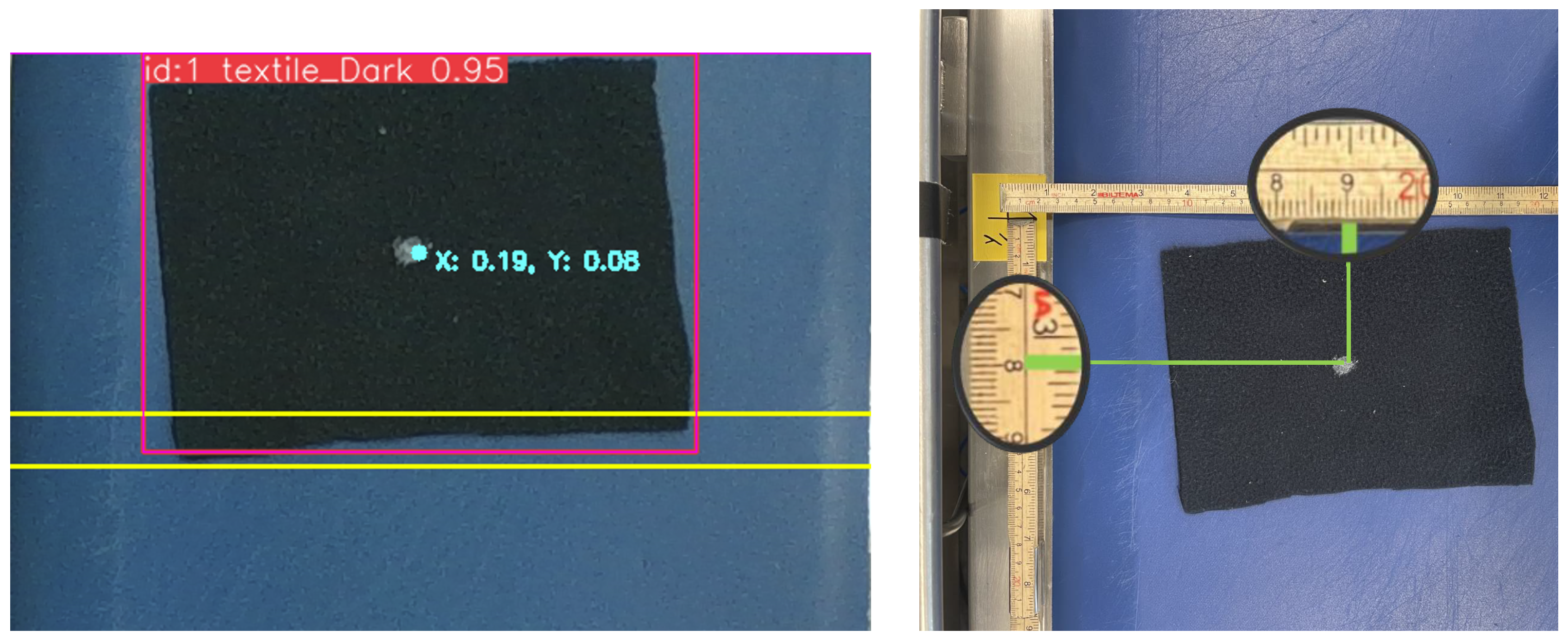

4.2.4. Textile Localization

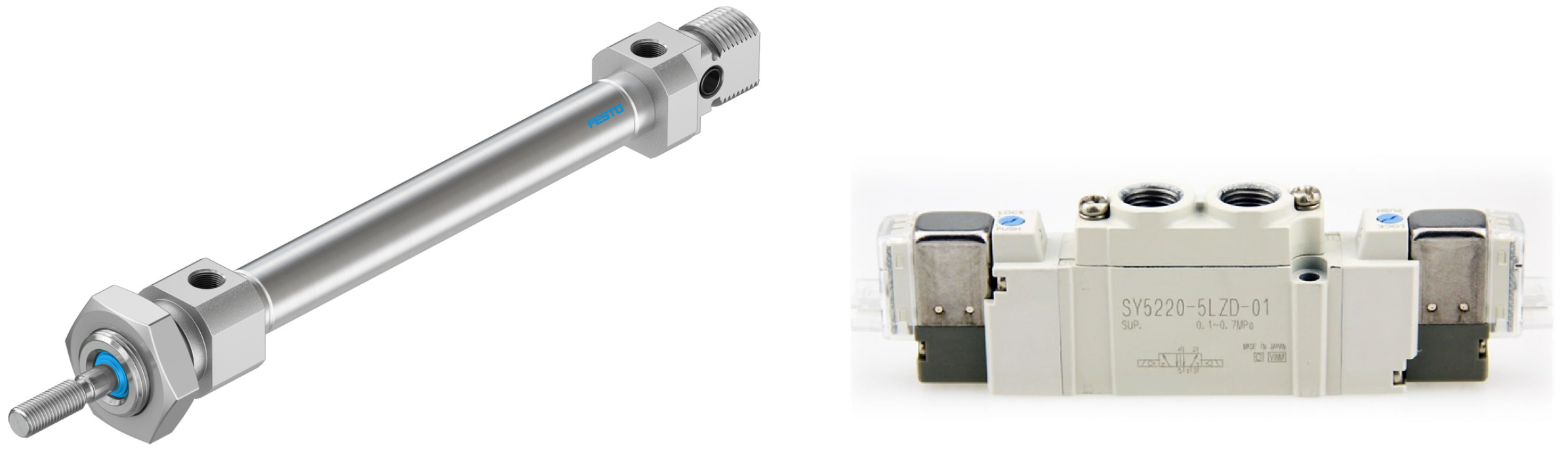

4.2.5. Gripper Design and Development

4.3. Simulation and Digital Twin

5. System Integration

5.1. Multi-Thread Component Integration

5.2. Perception Thread

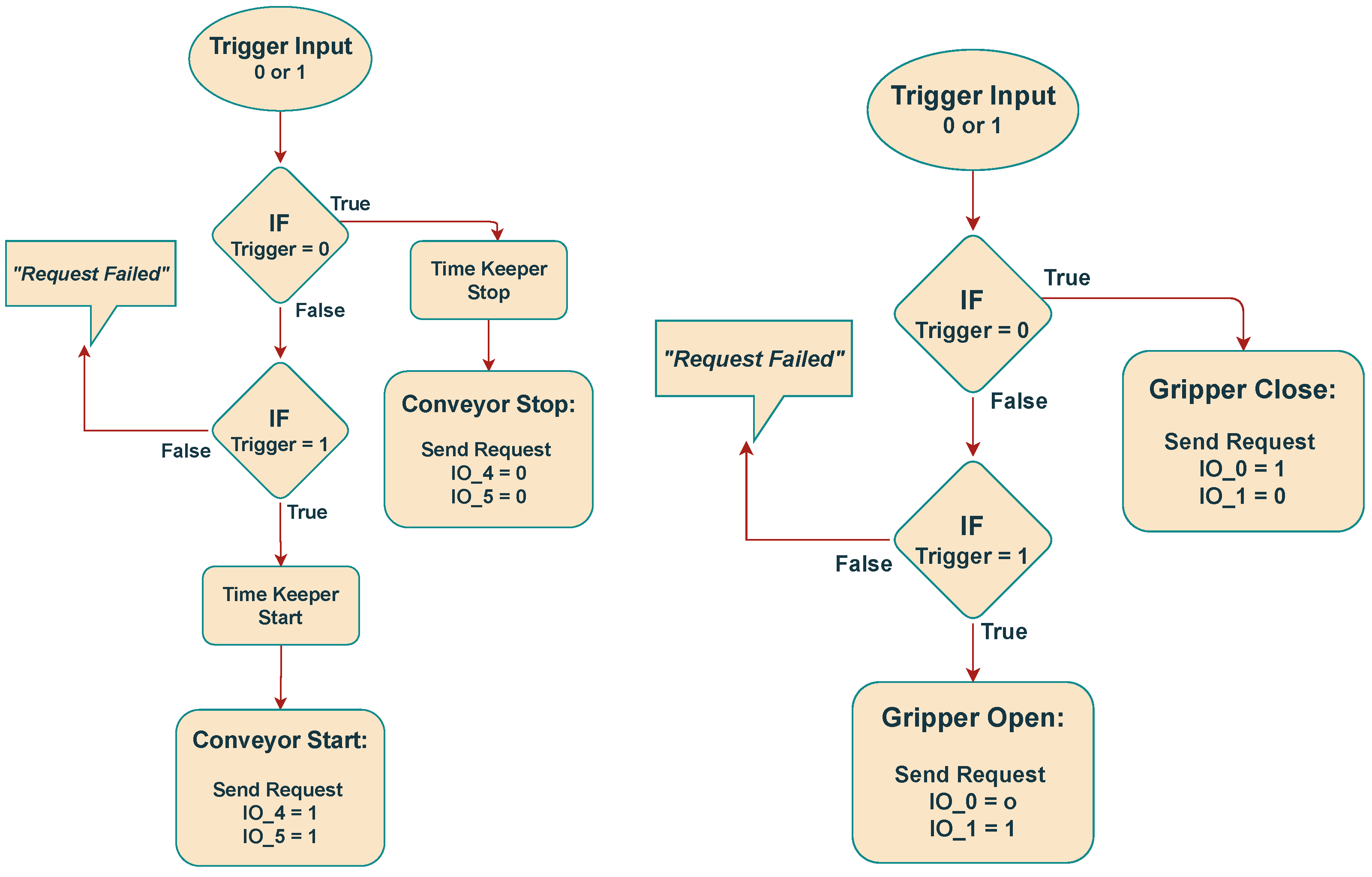

5.3. Conveyor Thread

5.4. Manipulator Thread

6. Results

6.1. Textile Detection and Localization

6.2. Gripper Design and Development

6.3. System Integration

7. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Halvorsen, T.S.; Tyapin, I.; Jha, A. Autonomous Textile Sorting Facility and Digital Twin Utilizing an AI-Reinforced Collaborative Robot. Electronics 2025, 14, 2706. https://doi.org/10.3390/electronics14132706