Interframe Forgery Video Detection: Datasets, Methods, Challenges, and Search Directions

Abstract

1. Introduction

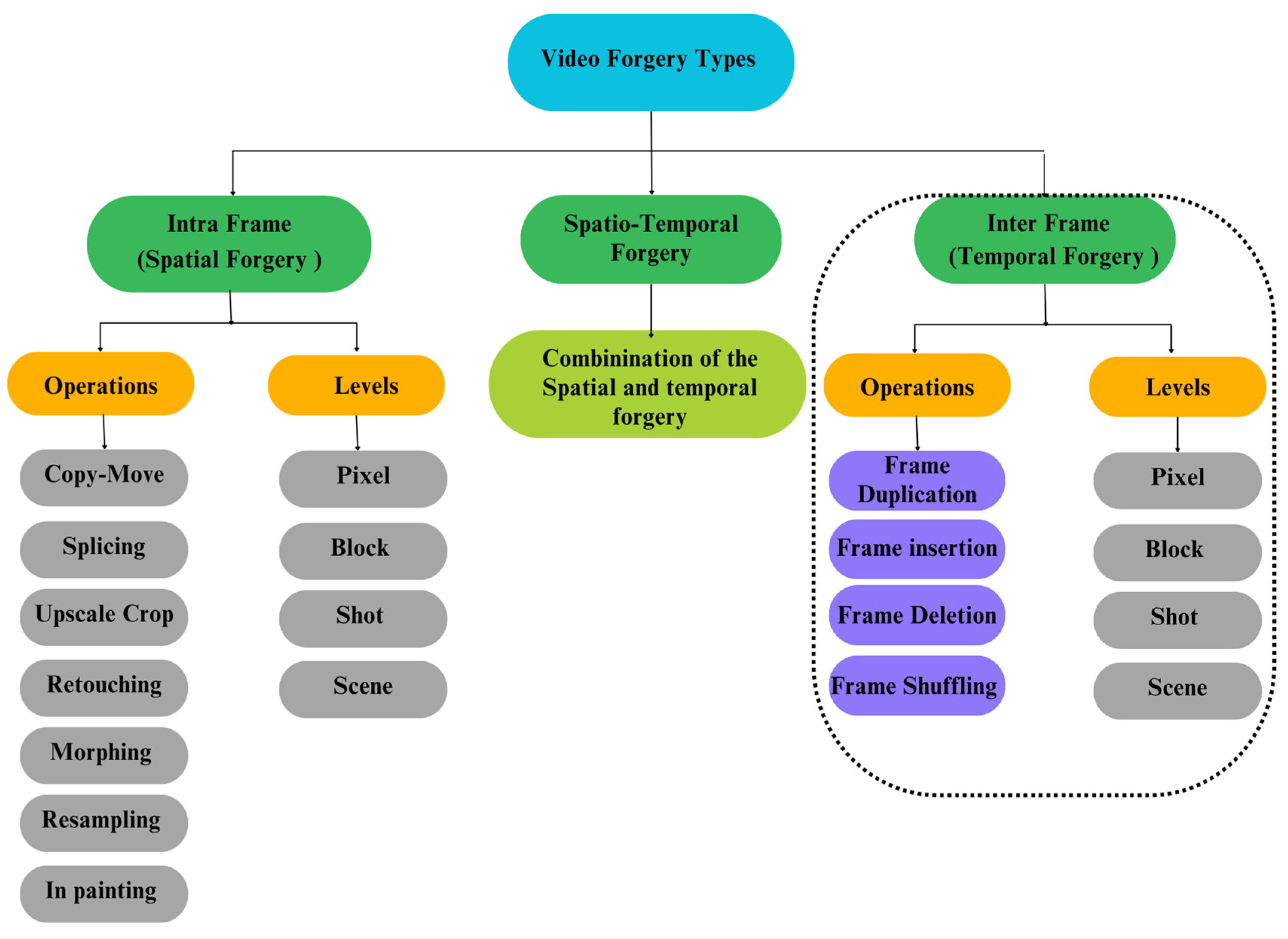

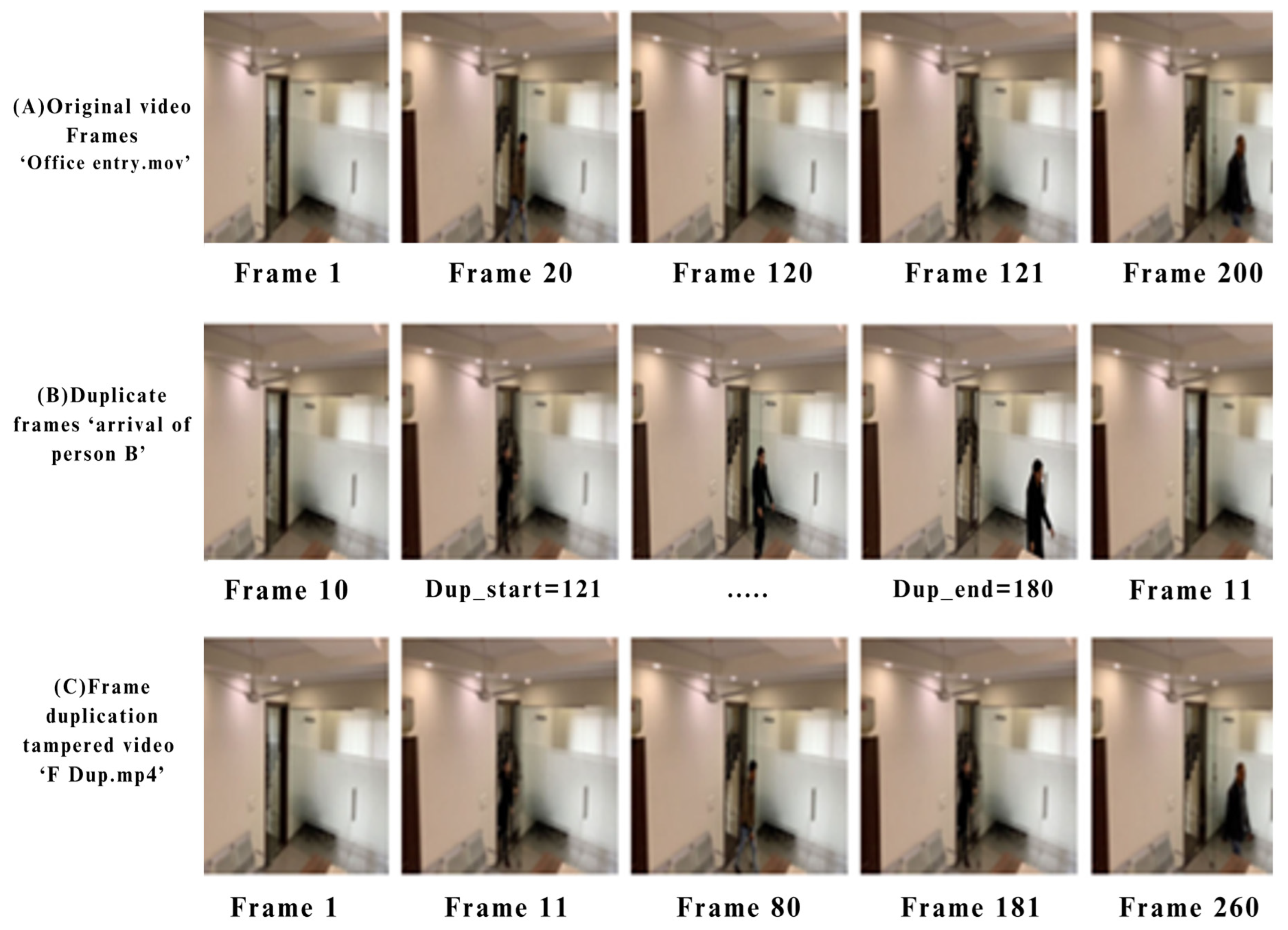

2. Video Forgery Types

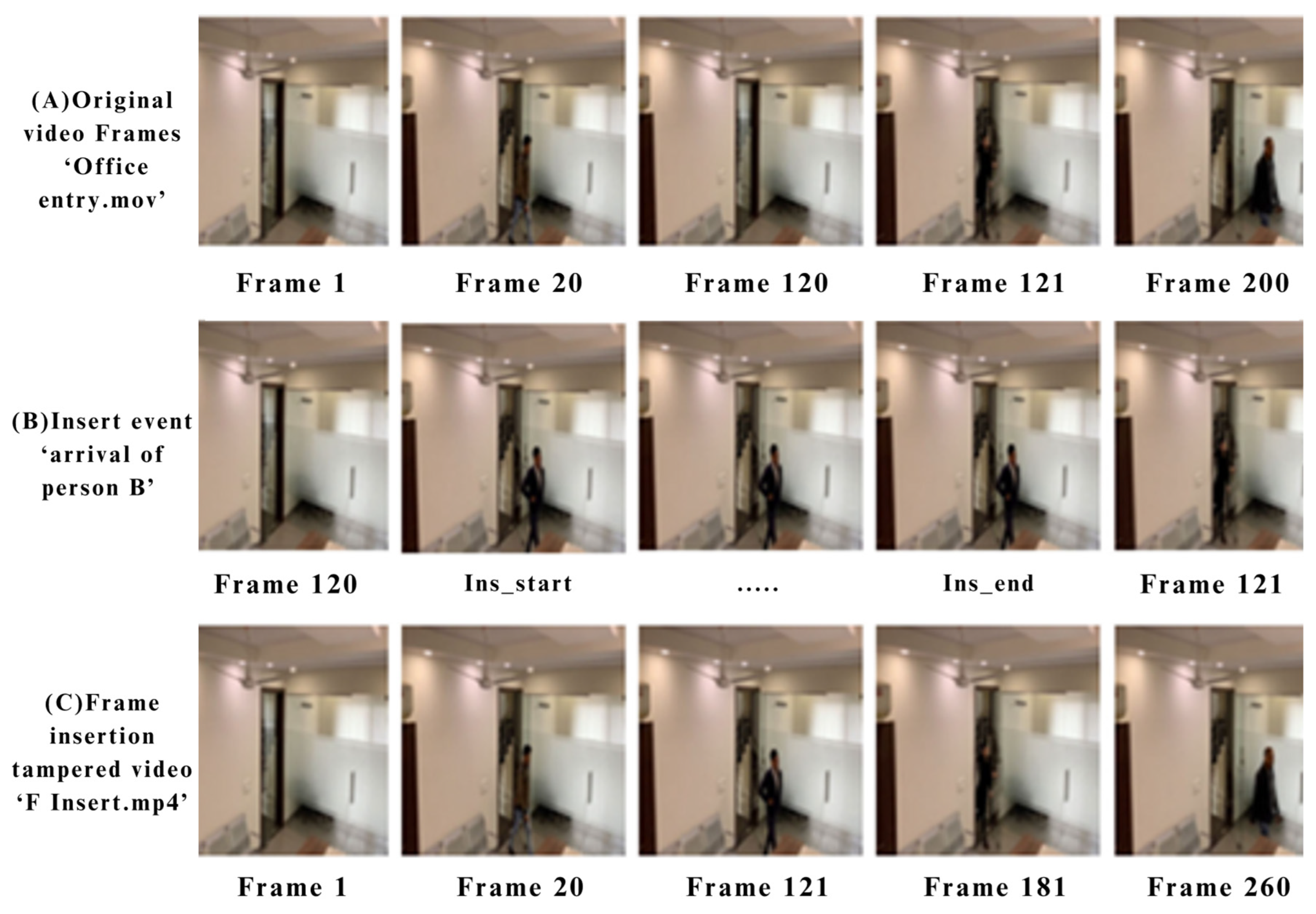

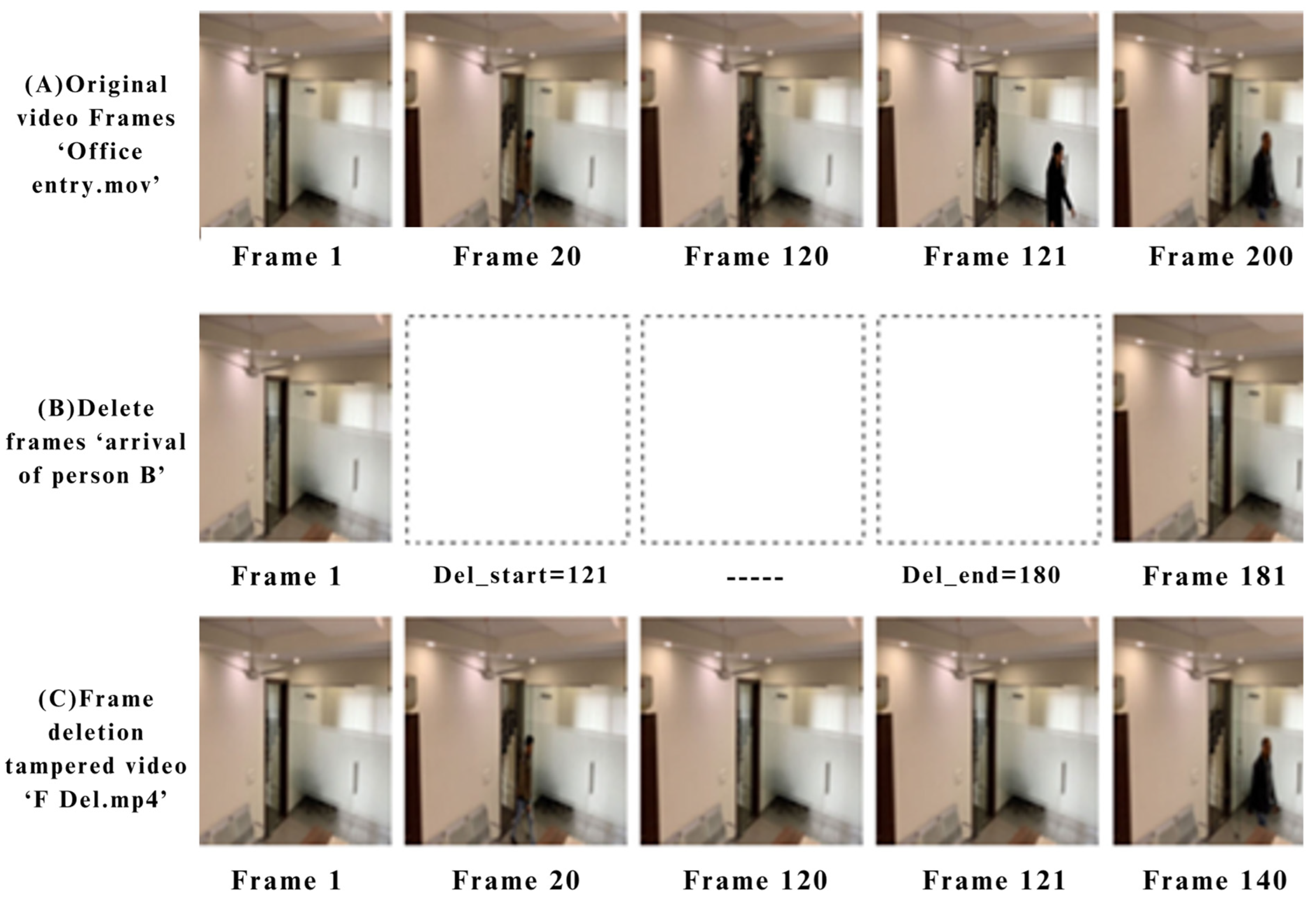

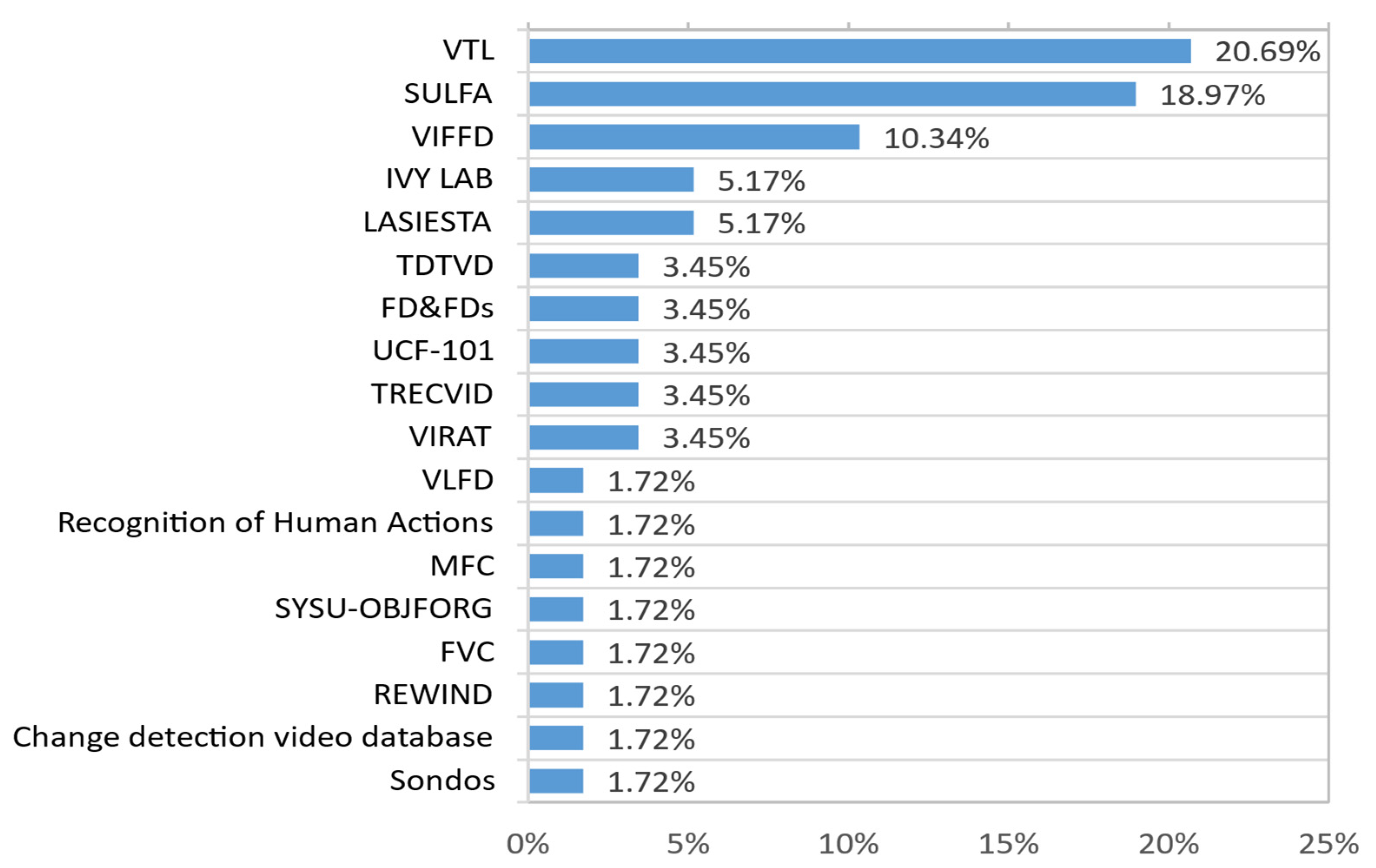

3. Video Forensics Datasets

| Ref. | Dataset | Types of Forgery | No. of Videos | Format | Resolution | Direct Download Link |
|---|---|---|---|---|---|---|
| [37] | HTVD | Frame insertion (realistic) | 108 | MP4 | 1980 × 1080 | http://rb.gy/2onorf (accessed on 14 September 2024) |
| | | Frame insertion (smart) | 2700 | | | |
| | | Frame deletion (realistic) | 108 | | | |
| | | Frame deletion (smart) | 2700 | | | |
| | | Frame duplication (realistic) | 108 | | | |
| | | Frame duplication (smart) | 2700 | | | |
| | | Object cloning | 90 | | | |
| | | Splicing | 90 | | | |
| | | Inpainting | 90 | | | |
| [38] | TDTVD | Frame deletion | 210 | AVI | 320 × 240 | https://rebrand.ly/TVTVD (accessed on 14 September 2024) |
| | | Frame duplication | | | | |
| | | Frame insertion | | | | |
| [39] | VTL | Frame deletion | 24 | 4:2:0 YUV | 352 × 288 | https://tinyurl.com/33v39d8u (accessed on 14 September 2024) |
| [40] | SULFA | Copy-move | 150 | MOV, AVI | 320 × 240 | Not directly downloadable |
| [41] | REWIND | Copy-move | 20 | MP4, AVI | 320 × 240 | https://github.com/ShobhitBansal/Video_Forgery_Detection_Using_Machine_Learning?tab=readme-ov-file (accessed on 1 January 2024) |
| [42] | GRIP | Copy-move | 154 | MP4 | 1280 × 720 | http://www.grip.unina.it/ (accessed on 1 January 2024) (not directly downloadable) |
| | | Splicing | 10 | AVI | | |
| [43] | FD&FDs | Frame duplication | 53 | AVI | 320 × 240 | Not directly downloadable |
| | | Frame shuffling | | | | |
| [46] | VTD | Copy-move | 33 | AVI | 1280 × 720 | https://tinyurl.com/4mfjy5f9 (accessed on 14 September 2024) |
| | | Splicing | | | | |
| | | Frame shuffling | | | | |
| [49] | FVD | Frame deletion | 32 | AVI | 320 × 240, 352 × 288, 704 × 576 | https://rb.gy/roitjj (accessed on 14 September 2024) |
| | | Frame insertion | | | | |
| | | Frame duplication | | | | |
| | | Frame duplication with shuffling | | | | |
| [47] | TVD | Copy-move | 160 | AVI | 360 × 640, 576 × 768, 540 × 960 | https://rb.gy/6br9m3 (accessed on 14 September 2024) (not directly downloadable) |
| | | Geometric transformations | | | | |
| [50] | VLFD | Frame duplication | 210 | AVI, MP4 | 3840 × 2160, 1920 × 1080 | https://csepup.ac.in/vlfd-dataset/ (accessed on 14 September 2024) (not directly downloadable) |
| [51] | VIFFD | Frame insertion | 390 | AVI | 1920 × 1080, 720 × 404 | https://rb.gy/pqtkpc (accessed on 14 September 2024) |
| | | Frame deletion | | | | |
| | | Frame duplication | | | | |
| | | Frame shuffling | | | | |
| [52] | Sondos | Frame insertion | 15 | AVI | 320 × 240, 352 × 288, 704 × 576 | Not directly downloadable |
| | | Frame deletion | | | | |
| | | Frame duplication | | | | |
| | | Frame shuffling | | | | |
| [53] | TRECVID | - | 40 | MPEG-1 | 720 × 576 | https://trecvid.nist.gov/ (accessed on 30 September 2022) |
| [54] | SYSU-OBJFORG | Object-based forgery | 100 | MP4, MPEG-4 | 1280 × 720 | https://tinyurl.com/yzv6veum (accessed on 14 September 2024) (not directly downloadable) |
| [55] | UCF101 | - | 13K | AVI | 320 × 240 | https://tinyurl.com/4hk32upd (accessed on 14 September 2024) |
| [56] | MFC | - | 4K | MP4, MOV | - | Not directly downloadable |
| [57] | VIRAT | - | 329 | MP4 | 640 × 480 | https://viratdata.org/ (accessed on 14 September 2024) |

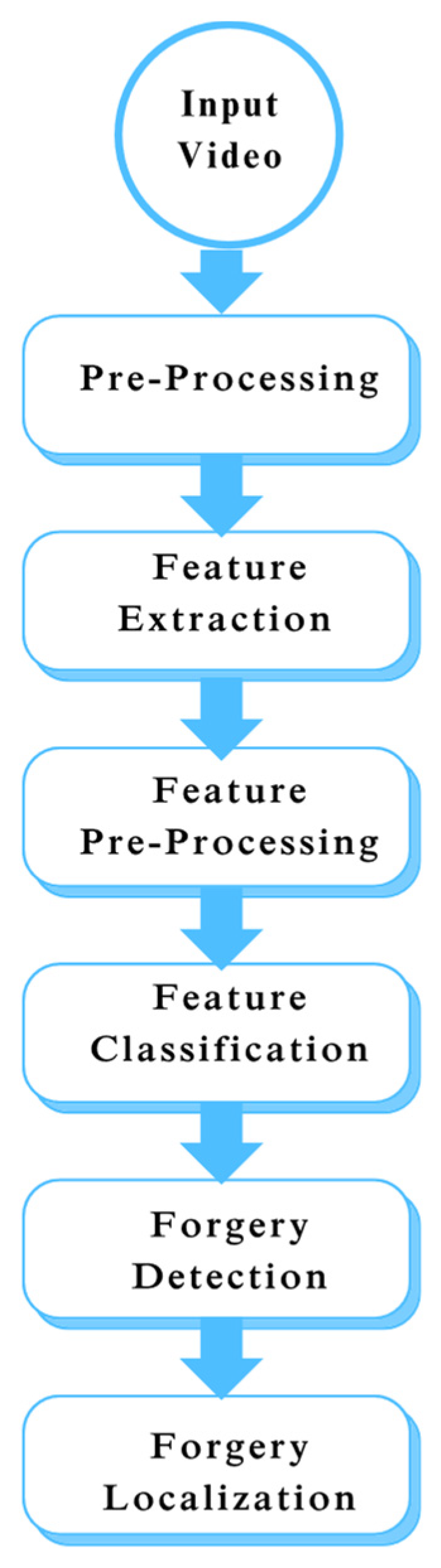

4. Classification of Interframe Forgery Video Techniques According to Methodology

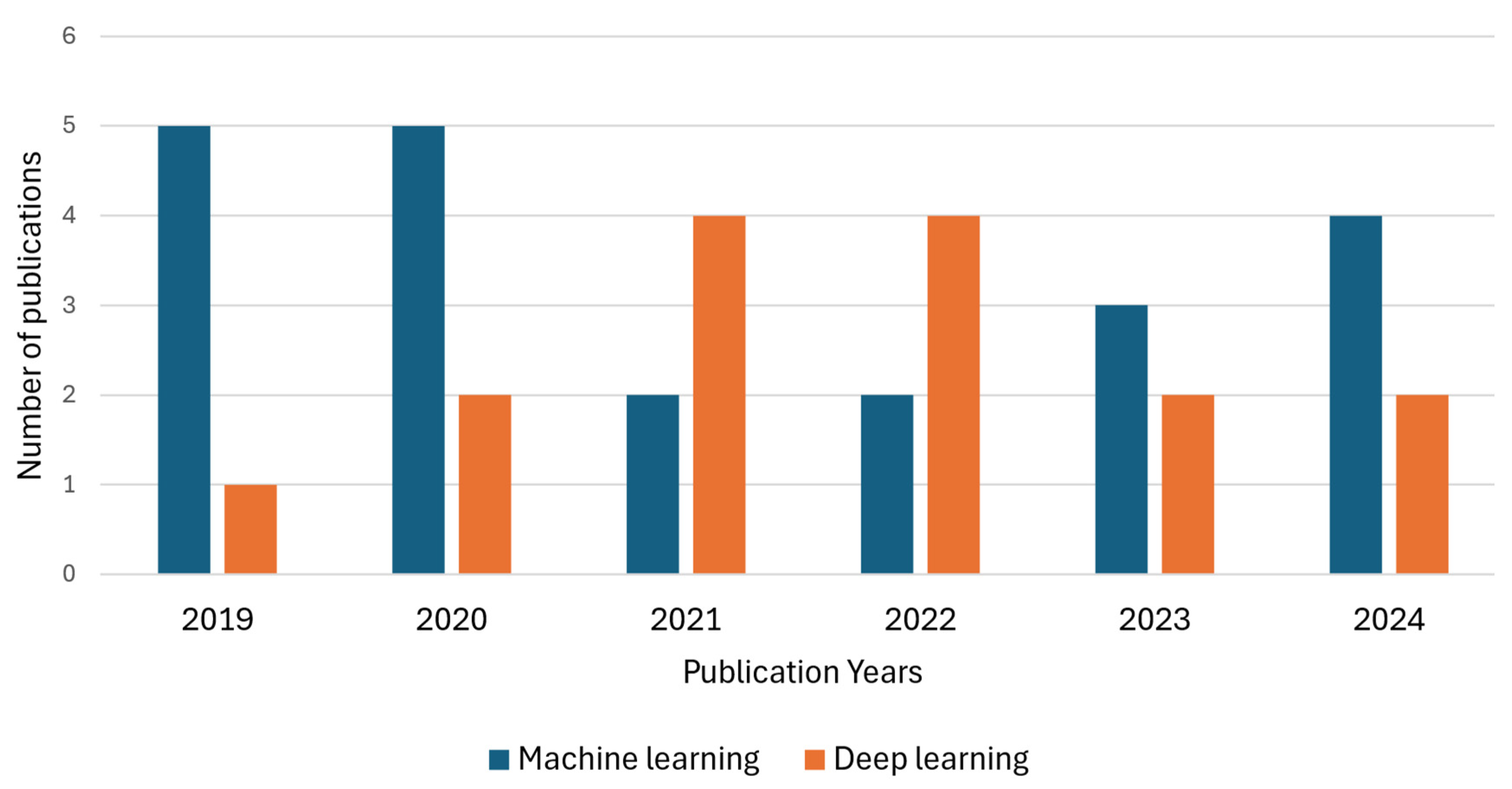

4.1. Machine Learning Methods

| Ref. | Year | Frame Deletion | Frame Insertion | Frame Shuffling | Frame Duplication | Double Compression | Short Description |

|---|---|---|---|---|---|---|---|

| [63] | 2024 | ✓ | It identifies potential forgery by analyzing motion vectors and detecting abrupt variations in the mean values of frames, which indicate possible frame shuffling. Suspicious frames are validated using SIFT features and RANSAC homography to confirm forgery. | ||||

| [62] | 2024 | ✓ | It involves three main steps—noise extraction, computation of the noise transfer matrix, and adjustment of the transfer matrix weights. Noise extraction uses a three-layer pyramid structure that captures local and structural noise features. The noise transfer matrix evaluates the distance between noise features in consecutive frames. | ||||

| [3] | 2024 | ✓ | ✓ | ✓ | ✓ | Frame difference analysis by examining the pixel intensities of adjacent frames in a video. The original sequence of frames exhibits a smooth pattern of differences, while any manipulation disrupts this pattern, resulting in spikes. Sequencing alteration detection, which analyzes the differences between adjacent frames and identifies alterations and potential forgery. Location identification by pinpointing the presence of spikes. | |

| [71] | 2024 | ✓ | ✓ | ✓ | A passive approach to detect and localize interframe forgeries using texture features like HoG, uniform, and rotation invariant LBP. | ||

| [66] | 2023 | ✓ | A two-level method for detecting video frame insertion forgeries. It combines temporal perceptual analysis using z-score anomaly detection with SSIM-based verification. | ||||

| [70] | 2023 | ✓ | It detects tampering artifacts by post-processing techniques, such as adjusting contrast, boosting brightness, blurring, and introducing noise. A voting mechanism is used to predict duplicate images. A filtering method removes false detections and generates duplicate sequences. | ||||

| [29] | 2023 | ✓ | ✓ | It detects anomalous points based on compression field features. It verifies the irregular points associated with the localization of forgery. | |||

| [7] | 2023 | ✓ | The method extracts feature vectors from video frames using DCT and converts them into binary images. Applies edge detection to the binary images and identifies the candidate regions for forgery using histogram analysis and correlation coefficients. Searches for the corresponding source frames of the duplicated frames using SSIM. | ||||

| [11] | 2022 | ✓ | ✓ | ✓ | Uses Li et al.’s robust hashing method, which is based on selective quaternion invariance, to compute hash values from the extended frames and compare them with a threshold to detect tampered frames. | ||

| [9] | 2021 | ✓ | The SSIM-based method measures the similarity between consecutive frames. The search algorithm detects and localizes the tampered frames. | ||||

| [69] | 2021 | ✓ | The tampered video sequence is segmented into small overlapping subsequences, and the similarity between them is calculated using the enhanced Levenshtein distance. Subsequence merging and localization. | ||||

| [49] | 2020 | ✓ | ✓ | ✓ | ✓ | The method utilizes HOG as a distinctive attribute extracted from each image to detect interframe forgery. Correlation coefficients are employed to identify instances of frame deletion and insertion, with abnormal points detected using Grubbs’ test. MEI is applied to edge images of each shot to identify instances of frame duplication and shuffling, contributing to the overall capability of identifying all interframe forgeries. | |

| [72] | 2020 | ✓ | ✓ | ✓ | Extracting the ENF signal from the suspicious video. The ENF signal is obtained using band-pass filtering and cubic spline interpolation techniques. Analyzing the correlation coefficient between adjacent periods in the interpolated ENF signal. | ||

| [73] | 2020 | ✓ | A passive blind forgery detection technique for identifying frame duplication attacks in MPEG-4 videos. It employs a two-step algorithm that involves SIFT key points for feature extraction and a RANSAC algorithm for identifying duplicate frames. | ||||

| [74] | 2020 | ✓ | ✓ | The Triangular Polarity Feature Classification (TPFC) framework for detecting video frame insertion and deletion forgeries. It utilizes a novel TPD and extracts discriminative features from the MLS framework. | |||

| [43] | 2020 | ✓ | ✓ | Using temporal average and GLCM features. | |||

| [30] | 2019 | ✓ | ✓ | ✓ | ✓ | Identify manipulated video segments and label the altered frames based on their texture and micro-patterns. The method employs DRLBP. | |

| [34] | 2019 | ✓ | ✓ | ✓ | The technique leverages residue data extracted during video decoding by analyzing spatial and temporal energies. | ||

| [64] | 2019 | ✓ | ✓ | ✓ | The correlation between the Haralick-coded frames proved effective for static and dynamic videos. | ||

| [52] | 2019 | ✓ | ✓ | ✓ | The method calculates the temporal mean of non-overlapping subsequences of frames to minimize the number of comparisons and processing time. Utilizing the UQI is essential for evaluating the quality of neighboring TP images and identifying discrepancies that suggest tampering. By examining the UQI values of TP images, the method recognizes the interframe forgery. | ||

| [67] | 2019 | ✓ | Frames are converted to grayscale and transformed using DCT, and mean features are extracted. A sequence matrix is constructed, and correlation coefficients are calculated to identify duplicated frames. The mean and standard deviation of grayscale values are computed to calculate the coefficient of variation, which distinguishes between original and forged frames. | ||||

| [58] | 2017 | ✓ | ✓ | ✓ | Analyzing the spatial and temporal effects on specific video frames. The algorithm classifies videos into three types—single-compressed, double-compressed without tampering, and double-compressed with tampering. | ||

| [31] | 2016 | ✓ | ✓ | ✓ | It detects quantization-error-rich areas in P-frames and uses them to calculate spatially constrained residual errors. It also employs a wavelet-based algorithm to enrich the traces of quantization error in the frequency domain. | ||

| [65] | 2016 | ✓ | It detects frame deletion in the video with the magnitude of the fingerprint using the periodicity of the P-frame prediction error sequence. | ||||

| [75] | 2016 | ✓ | It exploits variations in the prediction residual magnitude and in the number of intra-coded macroblocks. | ||||

| [59] | 2015 | ✓ | ✓ | Visual inspection of DCT patterns on a logarithmic scale provides a simple but effective method for distinguishing between single and recompressed video images. Presented a new method for exploiting the semi-peak light in an optical flow to detect and establish frame deletion in digital videos. | |||

| [76] | 2015 | ✓ | ✓ | Tchebyshev’s inequality is used twice in localization to determine the locations of insertions and deletions. It focuses on the inconsistency of QCCoLBP values at the tampered frame locations. | |||

| [60] | 2014 | ✓ | ✓ | ✓ | The algorithm extracts quantized DCT coefficients from I-frames and residual errors from P-frames. It then calculates the optical flow between consecutive frames and locates forged positions based on the consistency of the optical flow. | ||

| [61] | 2014 | ✓ | ✓ | ✓ | It checks the consistency of the velocity field in the video to detect interframe forgery. It then uses the generalized ESD test to pinpoint the specific locations where these changes occurred. |
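Several entries in the table above (for example [3], [9], and [66]) share a common pattern: compute a score for each transition between adjacent frames, then flag statistical outliers as candidate tampering points. The following is a minimal sketch of that pattern and not a reimplementation of any cited method. It assumes grayscale frames stored in a NumPy array; the function names, the median/MAD-based z-score, and the threshold of 5.0 are illustrative assumptions rather than values taken from the surveyed papers.

```python
import numpy as np


def frame_difference_scores(frames: np.ndarray) -> np.ndarray:
    """Mean absolute pixel difference for each pair of adjacent frames.

    frames has shape (num_frames, height, width); the result has
    num_frames - 1 entries, one per transition i -> i + 1.
    """
    frames = frames.astype(np.float64)
    return np.abs(frames[1:] - frames[:-1]).mean(axis=(1, 2))


def find_spikes(scores: np.ndarray, z_threshold: float = 5.0) -> list[int]:
    """Return transition indices whose score is a robust z-score outlier.

    The median and the median absolute deviation (MAD) are used instead of
    the mean and standard deviation so that the spike itself does not
    inflate the scale estimate. The threshold is an assumed value.
    """
    median = np.median(scores)
    mad = np.median(np.abs(scores - median))
    scale = 1.4826 * mad if mad > 0 else 1e-9
    z = (scores - median) / scale
    return [int(i) for i in np.flatnonzero(z > z_threshold)]


# Synthetic example: a slowly brightening scene with mild sensor noise.
rng = np.random.default_rng(0)
base = rng.uniform(0, 255, size=(32, 32))
frames = np.stack(
    [base + 0.5 * t + rng.normal(0, 0.2, base.shape) for t in range(60)]
)

# Simulate frame deletion by dropping frames 30..39.
tampered = np.concatenate([frames[:30], frames[40:]])

scores = frame_difference_scores(tampered)
spikes = find_spikes(scores)
print(spikes)  # transition 29 (old frame 29 -> old frame 40) should be flagged
```

A single global threshold of this kind is exactly what breaks down under camera motion or scene cuts, which is why the surveyed methods add a verification stage (SSIM, SIFT/RANSAC, or Grubbs' test) after the initial anomaly detection.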

4.2. Deep Learning Methods

| Ref. | Year | Frame Deletion | Frame Insertion | Frame Shuffling | Frame Duplication | Double Compression | Short Description |

|---|---|---|---|---|---|---|---|

| [13] | 2024 | ✓ | ✓ | The system involves four stages—preprocessing, feature extraction using VGG-16, feature selection with KPCA, and correlation analysis to detect forgeries. | |||

| [8] | 2024 | ✓ | ✓ | It involves 2D-CNN for feature extraction, an autoencoder for dimensionality reduction, and LSTM/GRU for analyzing long-range dependencies in video frames. | |||

| [12] | 2023 | ✓ | ✓ | ✓ | A spatiotemporal averaging method was used to extract background and moving objects for video forgery detection. Features were extracted using the GoogleNet model to obtain feature vectors. The UFS-MSRC method was employed to select the most crucial feature vectors, thereby accelerating training and enhancing detection accuracy. An LSTM network was applied to detect forgery in different video sequences. | ||

| [14] | 2023 | ✓ | ✓ | It uses a 3DCNN model to detect and locate video forgeries between frames. It uses a multi-scale algorithm to measure the similarity of video frames and find the forged segments. It uses an absolute difference algorithm to reduce the redundancy of video frames and spot the fake artifacts. | |||

| [85] | 2022 | ✓ | CNN is employed to detect duplicates, locate video frames, and create and match integrity embeddings. | ||||

| [36] | 2022 | ✓ | The method uses a VFID-Net to extract deep features from adjacent frames and computes the correlation coefficients and distances between them. It also employs a threshold control parameter and a minimum distance score to classify the frames as either original or inserted. | ||||

| [10] | 2022 | ✓ | ✓ | It calculates the correlation factors of the deep features of neighboring frames to detect forgeries. | |||

| [15] | 2022 | ✓ | ✓ | ✓ | ✓ | ✓ | The proposed model is built on a 3D-CNN. Pre-processing produces difference frames that capture the changes between consecutive frames. |

| [83] | 2021 | ✓ | ✓ | ✓ | It uses a hybrid optimization algorithm, called MO-BWO, that combines the mayfly optimization (MO) and the black widow optimization (BWO) algorithms to fine-tune the weights of the CNN and improve its performance. It uses prediction residual gradient (PRG) and optical flow gradient (OFG) to automatically locate the tampered frames and identify the type of forgery within the GOP structure. | ||

| [2] | 2021 | ✓ | It extracts features and converts videos into frames. The I3D network then detects frame-to-frame duplication by examining an original video alongside a forged one. RNN is responsible for detecting sequence-to-sequence forgery in videos. | ||||

| [16] | 2021 | ✓ | ✓ | ✓ | It exploits the high correlation of video data in both spatial and temporal domains and constructs a third-order tensor tube-fiber mode to represent video subgroups. It uses correlation analysis, Harris corner detection, and SVD feature extraction to measure the discontinuity of frame sequences and identify the forged frames. | ||

| [78] | 2021 | ✓ | ✓ | ✓ | ✓ | A 2D-CNN extracts spatiotemporal information from the video frames, and a multi-class SVM with a Gaussian radial basis function kernel (RBF-MSVM) classifies them. | |

| [82] | 2020 | ✓ | It detects and localizes object-based forgery in high-definition videos using CNNs. In the first of the approach's two steps, a temporal CNN classifies each frame as single or double compressed, primarily from motion residuals extracted with a sliding window. | ||||

| [81] | 2020 | ✓ | ✓ | ✓ | ✓ | It uses retrained CNN models (e.g., MobileNetv2, ResNet18) on the ImageNet dataset. It combines residuals of adjacent frames and optical flow for robust detection. | |

| [32] | 2019 | ✓ | ✓ | It uses two novel forensics filters, Q4 and Cobalt, designed for manual verification by human experts, and combines them with deep CNNs for visual classification. |
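Several of the deep learning methods above ([10], [13], [36]) follow the same final step: extract a deep feature vector per frame, then compare the features of neighboring frames by correlation. The sketch below illustrates only that correlation step. The `extract_features` function is a hypothetical stand-in (a fixed random projection followed by ReLU) for a pretrained CNN such as VGG-16, and the 0.9 threshold is an assumed value, not one reported in the cited work.

```python
import numpy as np


def extract_features(frame: np.ndarray, projection: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a pretrained CNN embedding.

    The frame is mean-centered, flattened, linearly projected, and passed
    through ReLU. A real pipeline would call a trained network here.
    """
    centered = frame.ravel() - frame.mean()
    return np.maximum(projection @ centered, 0.0)


def adjacent_correlations(features: np.ndarray) -> np.ndarray:
    """Pearson correlation between feature vectors of consecutive frames."""
    centered = features - features.mean(axis=1, keepdims=True)
    norms = np.linalg.norm(centered, axis=1)
    numerator = np.sum(centered[1:] * centered[:-1], axis=1)
    return numerator / (norms[1:] * norms[:-1] + 1e-12)


def flag_low_correlation(corr: np.ndarray, threshold: float = 0.9) -> list[int]:
    """Transition indices whose correlation falls below the assumed threshold."""
    return [int(i) for i in np.flatnonzero(corr < threshold)]


rng = np.random.default_rng(1)
projection = rng.normal(size=(128, 32 * 32))
scene_a = rng.uniform(0, 1, size=(32, 32))
scene_b = rng.uniform(0, 1, size=(32, 32))


def noisy_clip(scene: np.ndarray, count: int) -> list[np.ndarray]:
    """Repeat a static scene with small independent noise per frame."""
    return [scene + rng.normal(0, 0.01, scene.shape) for _ in range(count)]


clip_a = noisy_clip(scene_a, 40)
clip_b = noisy_clip(scene_b, 5)

# Simulate frame insertion: five frames of scene B placed after frame 19.
video = clip_a[:20] + clip_b + clip_a[20:]

features = np.stack([extract_features(f, projection) for f in video])
flagged = flag_low_correlation(adjacent_correlations(features))
print(flagged)  # the two boundaries of the inserted segment
```

In this toy setting the inserted segment shows up as a pair of low-correlation transitions, one at each boundary. With real footage the boundaries are far less clean, which motivates the additional distance scores and learned classifiers described in the table.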

5. Performance Analysis of Interframe Forgery Detection Techniques

5.1. Evaluation Metrics

5.2. Performance of Machine Learning-Based Video Forgery Detection Methods

5.3. Performance of Deep Learning-Based Video Forgery Detection Methods

6. Discussion

6.1. Limitations and Challenges

6.1.1. Challenges in Detecting Video Frame Shuffling and Duplication

- Shuffled frames often retain similar statistical properties to authentic frames, making detection difficult [43].

- Advanced editing tools produce forgeries that closely resemble authentic frames, complicating detection efforts and reducing the efficacy of traditional methods, such as SSIM or optical flow estimation [62].

- Manipulations in real-time videos present significant challenges, especially when no reference videos are available [1].

- Processing large volumes of video data, especially in real time, requires significant computational resources [77].

- Few methods can effectively detect duplicate frames regardless of their order or the total count of forged frames.

- Detection accuracy decreases in videos with dynamic camera movements, limiting the application of specific detection methods [1].
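To make the order-independence issue concrete, the following sketch groups frames by a coarse content signature, so duplicated frames are found even when they are re-inserted in shuffled order. It is an illustration only, under strong assumptions: the signature (quantized 8 × 8 block means), the function names, and the parameters are hypothetical choices, and exact-signature matching would not survive recompression or noise, nor would it distinguish true duplicates from genuinely static scenes.

```python
from collections import defaultdict

import numpy as np


def frame_signature(frame: np.ndarray, grid: int = 8, levels: int = 16) -> bytes:
    """Coarse signature: block means on a grid x grid layout, quantized."""
    h, w = frame.shape
    cropped = frame[: h - h % grid, : w - w % grid].astype(np.float64)
    blocks = cropped.reshape(
        grid, cropped.shape[0] // grid, grid, cropped.shape[1] // grid
    )
    means = blocks.mean(axis=(1, 3))
    # Assumes 8-bit pixel values in [0, 255].
    quantized = np.floor(means / 256.0 * levels).astype(np.uint8)
    return quantized.tobytes()


def find_duplicate_groups(frames: np.ndarray) -> list[list[int]]:
    """Group frame indices that share a signature, regardless of position."""
    groups: dict[bytes, list[int]] = defaultdict(list)
    for index, frame in enumerate(frames):
        groups[frame_signature(frame)].append(index)
    return [indices for indices in groups.values() if len(indices) > 1]


rng = np.random.default_rng(2)
video = rng.integers(0, 256, size=(30, 32, 32))

# Simulate duplication with shuffling: copies of frames 7, 5, 6 (in that
# order) are inserted after frame 20.
tampered = np.concatenate([video[:21], video[[7, 5, 6]], video[21:]])

groups = find_duplicate_groups(tampered)
print(groups)
```

Because the lookup is keyed on content rather than on temporal position, the cost is linear in the number of frames and does not depend on how many frames were duplicated or where they were placed.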

6.1.2. Challenges in Detecting Frame Insertion and Deletion

6.1.3. Challenges in Detecting Double Compression and Post-Processing Forgeries

- Differentiating between video compression artifacts and genuine forgery traces is difficult, especially when a video has undergone multiple rounds of compression [35].

- Techniques such as noise addition can mask forgery traces, reducing the accuracy of detection algorithms [60].

- Current methods are often not robust enough to detect forgeries after extensive post-processing, such as compression or noise addition [58].

6.2. Search Directions

- Real-Time Forgery Detection Limitations:

- GOP Size and Forgery Classification: Fixed GOP sizes and the absence of forgery classification constrain current studies. Future research could enhance detection by investigating how adaptive GOPs impact forgery identification, and it could also explore the classification of forgery types based on fundamental manipulation techniques [35,60].

- Specific Forgery Types: Current methods are primarily designed for specific types of forgery, such as frame insertion and deletion, and may not be effective against other types of manipulation. Hybrid frameworks combining multiple forgery detection strategies, such as Siamese-RNN for duplication [2] and CNN-LSTM for shuffling [12], could enhance adaptability.

- Applicability to Dynamic Scenes:

- Computational Complexity: High computational costs limit real-time deployment. Optimizing algorithms for parallel processing and integrating low-complexity models, such as UFS-MSRC, with LSTM networks [12] could reduce resource demands.

- Post-Processing Robustness:

- Integration with Machine Learning/Deep Learning:

- Comprehensive Datasets: There is a need for more extensive datasets that cover multiple types of forgery operations, facilitating the development of more generalized models.

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| CNN | Convolutional Neural Networks |

| 2D-CNN | Two-Dimensional Convolutional Neural Network |

| 3DCNN | Three-Dimensional Convolutional Neural Network |

| RNN | Recurrent Neural Networks |

| DCT | Discrete Cosine Transform |

| BAS | Block Artifact Strength |

| VPF | Variation In Prediction Footprint |

| SSIM | Structural Similarity Index Measure |

| LSTM | Long Short-Term Memory Network |

| LBP | Local Binary Pattern |

| DRLBP | Discriminative Robust Local Binary Pattern |

| U-LBP | Uniform Local Binary Pattern |

| R-LBP | Rotation Invariant Local Binary Pattern |

| GLCM | Gray Level Co-Occurrence Matrix |

| MEI | Motion Energy Image |

| HOG | Histogram of Oriented Gradients |

| ENF | Electrical Network Frequency |

| SIFT | Scale Invariant Feature Transform |

| RANSAC | Random Sample Consensus |

| MLS | Multi-Level Subtraction |

| TPD | Triangular Polarity Detector |

| VGG | Visual Geometry Group |

| KPCA | Kernel Principal Component Analysis |

| MVC | Multi-View Video Coding |

| PDTM | Perceptual Distortion Threshold Model |

References

- Nam, S.-H.; Park, J.; Kim, D.; Yu, I.-J.; Kim, T.-Y.; Lee, H.-K. Two-stream network for detecting double compression of H.264 videos. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 111–115.

- Munawar, M.; Noreen, I. Duplicate Frame Video Forgery Detection Using Siamese-based RNN. Intell. Autom. Soft Comput. 2021, 29, 927–937.

- Shekar, B.; Abraham, W.; Pilar, B. A Simple Difference Based Inter Frame Video Forgery Detection and Localization. In Proceedings of the International Conference on Soft Computing and its Engineering Applications, Anand, India, 7–9 December 2023; pp. 3–15.

- Verdoliva, L. Media forensics and deepfakes: An overview. IEEE J. Sel. Top. Signal Process. 2020, 14, 910–932.

- El-Shafai, W.; Fouda, M.A.; El-Rabaie, E.-S.M.; El-Salam, N.A. A comprehensive taxonomy on multimedia video forgery detection techniques: Challenges and novel trends. Multimed. Tools Appl. 2024, 83, 4241–4307.

- Sandhya; Kashyap, A. A comprehensive analysis of digital video forensics techniques and challenges. Iran J. Comput. Sci. 2024, 7, 359–380.

- Bozkurt, I.; Ulutaş, G. Detection and localization of frame duplication using binary image template. Multimed. Tools Appl. 2023, 82, 31001–31034.

- Akhtar, N.; Hussain, M.; Habib, Z. DEEP-STA: Deep Learning-Based Detection and Localization of Various Types of Inter-Frame Video Tampering Using Spatiotemporal Analysis. Mathematics 2024, 12, 1778.

- Mohiuddin, S.; Malakar, S.; Sarkar, R. Duplicate frame detection in forged videos using sequence matching. In Proceedings of the International Conference on Computational Intelligence in Communications and Business Analytics, Kalyani, India, 27–28 January 2021; pp. 29–41.

- Kumar, V.; Kansal, V.; Gaur, M. Multiple Forgery Detection in Video Using Convolution Neural Network. Comput. Mater. Contin. 2022, 73, 1347–1364.

- Niwa, S.; Tanaka, M.; Kiya, H. A Detection Method of Temporally Operated Videos Using Robust Hashing. In Proceedings of the 2022 IEEE 11th Global Conference on Consumer Electronics (GCCE), Osaka, Japan, 18–21 October 2022; pp. 144–147.

- Girish, N.; Nandini, C. Inter-frame video forgery detection using UFS-MSRC algorithm and LSTM network. Int. J. Model. Simul. Sci. Comput. 2023, 14, 2341013.

- Shelke, N.A.; Kasana, S.S. Multiple forgery detection in digital video with VGG-16-based deep neural network and KPCA. Multimed. Tools Appl. 2024, 83, 5415–5435.

- Gowda, R.; Pawar, D. Deep learning-based forgery identification and localization in videos. Signal Image Video Process. 2023, 17, 2185–2192.

- Oraibi, M.R.; Radhi, A.M. Enhancement digital forensic approach for inter-frame video forgery detection using a deep learning technique. Iraqi J. Sci. 2022, 63, 2686–2701.

- Alsakar, Y.M.; Mekky, N.E.; Hikal, N.A. Detecting and locating passive video forgery based on low computational complexity third-order tensor representation. J. Imaging 2021, 7, 47.

- Zhong, J.-L.; Gan, Y.-F.; Vong, C.-M.; Yang, J.-X.; Zhao, J.-H.; Luo, J.-H. Effective and efficient pixel-level detection for diverse video copy-move forgery types. Pattern Recognit. 2022, 122, 108286.

- Tyagi, S.; Yadav, D. A detailed analysis of image and video forgery detection techniques. Vis. Comput. 2023, 39, 813–833.

- Singh, G.; Singh, K. Copy-Move Video Forgery Detection Techniques: A Systematic Survey with Comparisons, Challenges and Future Directions. Wirel. Pers. Commun. 2024, 134, 1863–1913.

- Fayyaz, M.A.; Anjum, A.; Ziauddin, S.; Khan, A.; Sarfaraz, A. An improved surveillance video forgery detection technique using sensor pattern noise and correlation of noise residues. Multimed. Tools Appl. 2020, 79, 5767–5788.

- Verde, S.; Bondi, L.; Bestagini, P.; Milani, S.; Calvagno, G.; Tubaro, S. Video codec forensics based on convolutional neural networks. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 530–534.

- D’Avino, D.; Cozzolino, D.; Poggi, G.; Verdoliva, L. Autoencoder with recurrent neural networks for video forgery detection. arXiv 2017, arXiv:1708.08754.

- Thajeel, S.A.; Sulong, G.B. State of the art of copy-move forgery detection techniques: A review. Int. J. Comput. Sci. Issues (IJCSI) 2013, 10, 174.

- Mizher, M.A.; Ang, M.C.; Mazhar, A.A.; Mizher, M.A. A review of video falsifying techniques and video forgery detection techniques. Int. J. Electron. Secur. Digit. Forensics 2017, 9, 191–208.

- Srivalli, D.; Tech, M.; Sri, D.; Begum, M.; Prakash, C.; Kumar, S.; Pallavi, P. Video Inpainting with Local and Global Refinement. Int. J. Sci. Res. Eng. Manag. 2024, 8, 3.

- Nabi, S.T.; Kumar, M.; Singh, P.; Aggarwal, N.; Kumar, K. A comprehensive survey of image and video forgery techniques: Variants, challenges, and future directions. Multimed. Syst. 2022, 28, 939–992.

- Mondaini, N.; Caldelli, R.; Piva, A.; Barni, M.; Cappellini, V. Detection of malevolent changes in digital video for forensic applications. In Proceedings of the Security, Steganography, and Watermarking of Multimedia Contents IX, San Jose, CA, USA, 29 January–1 February 2007; pp. 300–311.

- Li, Q.; Wang, R.; Xu, D. A video splicing forgery detection and localization algorithm based on sensor pattern noise. Electronics 2023, 12, 1362.

- Singla, N.; Nagpal, S.; Singh, J. A two-stage forgery detection and localization framework based on feature classification and similarity metric. Multimed. Syst. 2023, 29, 1173–1185.

- Saddique, M.; Asghar, K.; Bajwa, U.I.; Hussain, M.; Habib, Z. Spatial Video Forgery Detection and Localization using Texture Analysis of Consecutive Frames. Adv. Electr. Comput. Eng. 2019, 19, 97–108.

- Aghamaleki, J.A.; Behrad, A. Inter-frame video forgery detection and localization using intrinsic effects of double compression on quantization errors of video coding. Signal Process. Image Commun. 2016, 47, 289–302.

- Zampoglou, M.; Markatopoulou, F.; Mercier, G.; Touska, D.; Apostolidis, E.; Papadopoulos, S.; Cozien, R.; Patras, I.; Mezaris, V.; Kompatsiaris, I. Detecting tampered videos with multimedia forensics and deep learning. In Proceedings of the MultiMedia Modeling: 25th International Conference, MMM 2019, Thessaloniki, Greece, 8–11 January 2019; Proceedings, Part I 25; Springer: Berlin/Heidelberg, Germany, 2019; pp. 374–386.

- Sitara, K.; Mehtre, B. Detection of inter-frame forgeries in digital videos. Forensic Sci. Int. 2018, 289, 186–206.

- Fadl, S.M.; Han, Q.; Li, Q. Inter-frame forgery detection based on differential energy of residue. IET Image Process. 2019, 13, 522–528.

- Kingra, S.; Aggarwal, N.; Singh, R.D. Video inter-frame forgery detection approach for surveillance and mobile recorded videos. Int. J. Electr. Comput. Eng. 2017, 7, 831.

- Kumar, V.; Gaur, M.; Kansal, V. Deep feature based forgery detection in video using parallel convolutional neural network: VFID-Net. Multimed. Tools Appl. 2022, 81, 42223–42240.

- Singla, N.; Singh, J.; Nagpal, S.; Tokas, B. HEVC based tampered video database development for forensic investigation. Multimed. Tools Appl. 2023, 82, 25493–25526.

- Video Tampering Dataset Development in Temporal Domain for Video Forgery Authentication. Available online: https://drive.google.com/drive/folders/1y_TVO6-ow2yoKGSLLvj-GAYf_-HtCLw4 (accessed on 14 September 2024).

- Vtl Video Trace Library. Available online: http://trace.eas.asu.edu/yuv/index.html (accessed on 24 July 2023).

- Qadir, G.; Yahaya, S.; Ho, A.T. Surrey university library for forensic analysis (SULFA) of video content. In Proceedings of the IET Conference on Image Processing (IPR 2012), London, UK, 1–4 July 2012; p. 121.

- Quiros, J.V.; Raman, C.; Tan, S.; Gedik, E.; Cabrera-Quiros, L.; Hung, H. REWIND Dataset: Privacy-preserving Speaking Status Segmentation from Multimodal Body Movement Signals in the Wild. arXiv 2024, arXiv:2403.01229.

- Grip Dataset. Available online: http://www.grip.unina.it/web-download.html (accessed on 3 August 2020).

- Fadl, S.; Megahed, A.; Han, Q.; Qiong, L. Frame duplication and shuffling forgery detection technique in surveillance videos based on temporal average and gray level co-occurrence matrix. Multimed. Tools Appl. 2020, 79, 17619–17643.

- Cuevas, C.; Yáñez, E.M.; García, N. Labeled dataset for integral evaluation of moving object detection algorithms: LASIESTA. Comput. Vis. Image Underst. 2016, 152, 103–117.

- Sohn, H.; De Neve, W.; Ro, Y.M. Privacy protection in video surveillance systems: Analysis of subband-adaptive scrambling in JPEG XR. IEEE Trans. Circuits Syst. Video Technol. 2011, 21, 170–177. [Google Scholar] [CrossRef]

- Al-Sanjary, O.I.; Ahmed, A.A.; Sulong, G. Development of a video tampering dataset for forensic investigation. Forensic Sci. Int. 2016, 266, 565–572. [Google Scholar] [CrossRef]

- Ardizzone, E.; Mazzola, G. A tool to support the creation of datasets of tampered videos. In Proceedings of the Image Analysis and Processing—ICIAP 2015: 18th International Conference, Genoa, Italy, 7–11 September 2015; Proceedings, Part II 18; Springer: Berlin/Heidelberg, Germany, 2015; pp. 665–675. [Google Scholar]

- CANTATA D4.3 Datasets for CANTATA Project. Available online: https://www.hitech-projects.com/euprojects/cantata/datasets_cantata/dataset.html (accessed on 16 May 2025).

- Fadl, S.; Han, Q.; Qiong, L. Exposing video inter-frame forgery via histogram of oriented gradients and motion energy image. Multidimens. Syst. Signal Process. 2020, 31, 1365–1384. [Google Scholar] [CrossRef]

- Sharma, H.; Kanwal, N. Video interframe forgery detection: Classification, technique & new dataset. J. Comput. Secur. 2021, 29, 531–550. [Google Scholar]

- Nguyen, X.H.; Hu, Y. VIFFD—A dataset for detecting video inter-frame forgeries. Mendeley Data 2020, 6, 2020. [Google Scholar]

- Fadl, S.; Han, Q.; Li, Q. Surveillance video authentication using universal image quality index of temporal average. In Proceedings of the Digital Forensics and Watermarking: 17th International Workshop, IWDW 2018, Jeju Island, Korea, October 22–24, 2018; Proceedings 17; Springer: Berlin/Heidelberg, Germany, 2019; pp. 337–350. [Google Scholar]

- NIST Trec Video Retrieval Evaluation. Available online: http://trecvid.nist.gov/ (accessed on 30 September 2022).

- Sysu-Objforg Dataset. Available online: http://media-sec.szu.edu.cn/sysu-objforg/index.html (accessed on 18 May 2024).

- Soomro, K. UCF101: A Dataset of 101 Human Actions Classes from Videos in the Wild. Available online: https://www.crcv.ucf.edu/data/UCF101.php (accessed on 29 June 2025).

- Guan, H.; Kozak, M.; Robertson, E.; Lee, Y.; Yates, A.N.; Delgado, A.; Zhou, D.; Kheyrkhah, T.; Smith, J.; Fiscus, J. MFC Datasets: Large-Scale Benchmark Datasets for Media Forensic Challenge Evaluation. Available online: https://www.nist.gov/publications/mfc-datasets-large-scale-benchmark-datasets-media-forensic-challenge-evaluation (accessed on 16 May 2025).

- Oh, S.; Hoogs, A.; Perera, A.; Cuntoor, N.; Chen, C.-C.; Lee, J.T.; Mukherjee, S.; Aggarwal, J.; Lee, H.; Davis, L. A large-scale benchmark dataset for event recognition in surveillance video. In Proceedings of the Computer Vision and Pattern Recognition (CVPR) 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 3153–3160.

- Abbasi Aghamaleki, J.; Behrad, A. Malicious inter-frame video tampering detection in MPEG videos using time and spatial domain analysis of quantization effects. Multimed. Tools Appl. 2017, 76, 20691–20717.

- Singh, R.D.; Aggarwal, N. Detection of re-compression, transcoding and frame-deletion for digital video authentication. In Proceedings of the 2015 2nd International Conference on Recent Advances in Engineering & Computational Sciences (RAECS), Chandigarh, India, 21–22 December 2015; pp. 1–6.

- Wang, W.; Jiang, X.; Wang, S.; Wan, M.; Sun, T. Identifying video forgery process using optical flow. In Proceedings of the Digital-Forensics and Watermarking: 12th International Workshop, IWDW 2013, Auckland, New Zealand, 1–4 October 2013; Revised Selected Papers 12; Springer: Berlin/Heidelberg, Germany, 2014; pp. 244–257.

- Wu, Y.; Jiang, X.; Sun, T.; Wang, W. Exposing video inter-frame forgery based on velocity field consistency. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 2674–2678.

- Bao, Q.; Wang, Y.; Hua, H.; Dong, K.; Lee, F. An anti-forensics video forgery detection method based on noise transfer matrix analysis. Sensors 2024, 24, 5341.

- Prashant, K.J.; Krishnrao, K.P. Frame Shuffling Forgery Detection Method for MPEG-Coded Video. J. Inst. Eng. Ser. B 2024, 105, 635–645.

- Bakas, J.; Naskar, R.; Dixit, R. Detection and localization of inter-frame video forgeries based on inconsistency in correlation distribution between Haralick coded frames. Multimed. Tools Appl. 2019, 78, 4905–4935.

- Kang, X.; Liu, J.; Liu, H.; Wang, Z.J. Forensics and counter anti-forensics of video inter-frame forgery. Multimed. Tools Appl. 2016, 75, 13833–13853.

- Panchal, H.D.; Shah, H.B. Multi-Level Passive Video Forgery Detection based on Temporal Information and Structural Similarity Index. In Proceedings of the 2023 Seventh International Conference on Image Information Processing (ICIIP), Solan, India, 22–24 November 2023; pp. 137–144.

- Singh, G.; Singh, K. Video frame and region duplication forgery detection based on correlation coefficient and coefficient of variation. Multimed. Tools Appl. 2019, 78, 11527–11562.

- Pandey, R.; Kushwaha, A.K.S. A Novel Histogram-Based Approach for Video Forgery Detection. In Proceedings of the 2024 Second International Conference on Intelligent Cyber Physical Systems and Internet of Things (ICoICI), Coimbatore, India, 28–30 August 2024; pp. 827–830.

- Ren, H.; Atwa, W.; Zhang, H.; Muhammad, S.; Emam, M. Frame duplication forgery detection and localization algorithm based on the improved Levenshtein distance. Sci. Program. 2021, 2021, 5595850.

- Mohiuddin, S.; Malakar, S.; Sarkar, R. An ensemble approach to detect copy-move forgery in videos. Multimed. Tools Appl. 2023, 82, 24269–24288.

- Shehnaz; Kaur, M. Detection and localization of multiple inter-frame forgeries in digital videos. Multimed. Tools Appl. 2024, 83, 71973–72005.

- Wang, Y.; Hu, Y.; Liew, A.W.-C.; Li, C.-T. ENF based video forgery detection algorithm. Int. J. Digit. Crime Forensics (IJDCF) 2020, 12, 131–156.

- Kharat, J.; Chougule, S. A passive blind forgery detection technique to identify frame duplication attack. Multimed. Tools Appl. 2020, 79, 8107–8123.

- Huang, C.C.; Lee, C.E.; Thing, V.L. A novel video forgery detection model based on triangular polarity feature classification. Int. J. Digit. Crime Forensics (IJDCF) 2020, 12, 14–34.

- Yu, L.; Wang, H.; Han, Q.; Niu, X.; Yiu, S.-M.; Fang, J.; Wang, Z. Exposing frame deletion by detecting abrupt changes in video streams. Neurocomputing 2016, 205, 84–91.

- Zhang, Z.; Hou, J.; Ma, Q.; Li, Z. Efficient video frame insertion and deletion detection based on inconsistency of correlations between local binary pattern coded frames. Secur. Commun. Netw. 2015, 8, 311–320.

- Jiang, G.; Du, B.; Fang, S.; Yu, M.; Shao, F.; Peng, Z.; Chen, F. Fast inter-frame prediction in multi-view video coding based on perceptual distortion threshold model. Signal Process. Image Commun. 2019, 70, 199–209.

- Fadl, S.; Han, Q.; Li, Q. CNN spatiotemporal features and fusion for surveillance video forgery detection. Signal Process. Image Commun. 2021, 90, 116066.

- Mohsen, H.; Ghali, N.I.; Khedr, A. Offline signature verification using deep learning method. Int. J. Theor. Appl. Res. 2023, 2, 225–233.

- Koshy, L.; Shyry, S.P. Detection of tampered real time videos using deep neural networks. Neural Comput. Appl. 2024, 37, 7691–7703.

- Nguyen, X.H.; Hu, Y.; Amin, M.A.; Khan, G.H.; Truong, D.-T. Detecting video inter-frame forgeries based on convolutional neural network model. Int. J. Image Graph. Signal Process. 2020, 10, 1.

- Kohli, A.; Gupta, A.; Singhal, D. CNN based localisation of forged region in object-based forgery for HD videos. IET Image Process. 2020, 14, 947–958.

- Patel, J.; Sheth, R. An optimized convolution neural network based inter-frame forgery detection model-a multi-feature extraction framework. ICTACT J. Image Video Process 2021, 12, 2570–2581.

- Chandru, R.; Priscilla, R. Video Integrity Detection with Deep Learning. In Proceedings of the 2024 10th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 14–15 March 2024; pp. 1288–1292.

- Singla, N.; Nagpal, S.; Singh, J. Frame Duplication Detection Using CNN-Based Features with PCA and Agglomerative Clustering. In Communication and Intelligent Systems: Proceedings of ICCIS 2021; Springer: Berlin/Heidelberg, Germany, 2022; pp. 383–391.

- Hu, Z.; Duan, Q.; Zhang, P.; Tao, H. An Attention-Erasing Stripe Pyramid Network for Face Forgery Detection. Signal Image Video Process. 2023, 17, 4123–4131.

| Metric | Description | Optimal Value | Mathematical Formula |

|---|---|---|---|

| Double-compressed frame accuracy (DFACC) | The proportion of double-compressed frames that are correctly classified: the number of correctly classified double-compressed frames divided by the total number of double-compressed frames. | 1 | $\mathrm{DFACC} = \frac{\text{correctly classified double-compressed frames}}{\text{total double-compressed frames}}$ |

| Forged frame accuracy (FFACC) | The proportion of forged frames that are correctly classified: the number of correctly classified forged frames divided by the total number of forged frames. It evaluates the system's ability to identify forged frames. | 1 | $\mathrm{FFACC} = \frac{\text{correctly classified forged frames}}{\text{total forged frames}}$ |

| Frame accuracy (FACC) | The proportion of all frames that are correctly classified: the number of correctly classified frames divided by the total number of frames. It assesses detection performance at the individual frame level. | 1 | $\mathrm{FACC} = \frac{\text{correctly classified frames}}{\text{total frames}}$ |

| Precision | Precision is the proportion of true positives among all positive predictions, indicating how many of the detected positives are correct. It is calculated by dividing the number of true positives (TP) by the total number of positive predictions, i.e., true positives plus false positives (FP). | 1 | $\mathrm{Precision} = \frac{TP}{TP + FP}$ |

| Recall | Recall is the proportion of actual positive instances that are correctly predicted, reflecting the system's ability to identify all relevant instances. It is calculated by dividing the number of true positives (TP) by the total number of actual positives, i.e., true positives plus false negatives (FN). | 1 | $\mathrm{Recall} = \frac{TP}{TP + FN}$ |

| Probability of error (Pe) | The error rate measures how often a classification system makes mistakes. It is calculated by subtracting the correct predictions (true positives and true negatives, TN) from the total number of instances and dividing the result by that total. This gives the chance of a wrong prediction, covering both false positives and false negatives. | 0 | $P_e = \frac{FP + FN}{TP + TN + FP + FN}$ |

| F1-score | The F1-score is the harmonic mean of precision and recall. It combines both into a single value that accounts for false positives and false negatives, which is particularly useful when the class distribution is uneven. | 1 | $F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$ |

| Detection accuracy (DA) | The proportion of correct predictions (whether authentic or forged) out of the total number of predictions made by the model. It measures the model's overall effectiveness in classifying authentic and forged instances. | 1 | $\mathrm{DA} = \frac{TP + TN}{TP + TN + FP + FN}$ |

| True positive rate (TPR) | The proportion of actual positive cases (forged frames) that are correctly classified. | 1 | $\mathrm{TPR} = \frac{TP}{TP + FN}$ |

| Video accuracy classification (VAC) | The ratio of correctly classified video segments (CCFVS) to all video segments (N) in a video. | 1 | $\mathrm{VAC} = \frac{\mathrm{CCFVS}}{N}$ |
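As a minimal sketch, the confusion-matrix metrics described in the table above can be computed as follows. The class and function names are hypothetical and do not come from any of the surveyed works. The sketch assumes that "forged" is the positive class and that the probability of error is normalised by the total number of instances.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Confusion-matrix counts; 'forged' is assumed to be the positive class."""
    tp: int  # forged items flagged as forged
    fp: int  # authentic items flagged as forged
    tn: int  # authentic items passed as authentic
    fn: int  # forged items passed as authentic

    @property
    def total(self) -> int:
        return self.tp + self.fp + self.tn + self.fn


def safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def precision(c: Confusion) -> float:
    return safe_div(c.tp, c.tp + c.fp)


def recall(c: Confusion) -> float:
    # Recall and TPR share the same definition.
    return safe_div(c.tp, c.tp + c.fn)


def f1_from(p: float, r: float) -> float:
    """Harmonic mean of precision p and recall r."""
    return safe_div(2 * p * r, p + r)


def detection_accuracy(c: Confusion) -> float:
    return safe_div(c.tp + c.tn, c.total)


def probability_of_error(c: Confusion) -> float:
    # Assumption: error count normalised by the total number of instances.
    return safe_div(c.fp + c.fn, c.total)


def video_accuracy(correct_segments: int, n_segments: int) -> float:
    """VAC: correctly classified video segments (CCFVS) over all segments (N)."""
    return safe_div(correct_segments, n_segments)


if __name__ == "__main__":
    c = Confusion(tp=90, fp=10, tn=80, fn=20)  # made-up counts for illustration
    p, r = precision(c), recall(c)
    print(f"precision={p:.4f} recall={r:.4f} f1={f1_from(p, r):.4f}")
    print(f"DA={detection_accuracy(c):.4f} Pe={probability_of_error(c):.4f}")
```

Because the F1-score is fully determined by precision and recall, `f1_from` can also serve as a consistency check on reported results. For instance, the precision of 0.9589 and recall of 0.9655 reported for [29] in the comparison table give an F1-score that rounds to the reported 0.9622.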

| Ref. | Method | Dataset | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|

| [59] | DCT coefficient and optical flow analysis | SULFA, change detection video database | 0.993 | - | - | - |

| [60] | Optical flow analysis and anomaly detection | TRECVID | [0.867, 0.933] | - | - | - |

| [61] | Consistency of the velocity field | TRECVID | [0.80, 0.90] | - | - | - |

| [30] | Texture analysis for spatial video forgery detection and localization | SULFA | 0.96 | - | - | - |

| [29] | A methodology for detecting and localizing forgery using similarity metrics and feature classification | VTL | 0.9492 | 0.9589 | 0.9655 | 0.9622 |

| [7] | Detection and localization of frame duplication using a binary image template | SULFA, VLFD, VTL | - | 621 | 0.9863 | 0.993 |

| [9] | Duplicate frame detection using sequence matching | REWIND | 0.989 | - | - | - |

| [66] | Passive video forgery detection based on temporal information and Structural Similarity Index | TDTVD | 0.9444 | - | - | - |

| [71] | Detection and localization of multiple interframe forgeries in digital videos | SULFA | [HOG 0.992, R-LBR 0.996] | [HOG 0.992, R-LBR 0.994] | [HOG 0.991, R-LBR 0.992] | [HOG 0.991, R-LBR 0.995] |

| [69] | An algorithm for detecting and localizing frame duplication forgeries based on the improved Levenshtein distance | VTL | - | 0.995 | 1.0 | 0.9975 |

| [70] | An ensemble approach to detect frame duplication | FD&FDs | 0.9932 | - | - | - |

| [11] | A robust hashing-based temporally operated video detection method | SELF-MADE | 0.9825 | 1 | - | - |

| [31] | Quantization errors in video coding for utilizing the inherent effects of double compression | VTL | - | 0.89 | 0.86 | 0.87 |

| [65] | Video interframe forgery counter-forensics | VTL | 0.969 | - | - | - |

| [76] | Frame insertion and deletion detection based on inconsistencies in the correlations between local binary pattern-coded frames | VTL | - | 0.95 | 0.92 | 0.93 |

| [77] | Exposing frame deletion by detecting abrupt changes in video streams | VTL | - | 0.72 | 0.66 | 0.69 |

| [49] | Using a motion energy image and an oriented gradient histogram | VTL | - | 0.98 | 0.99 | 0.98 |

| [3] | Detecting and localizing interframe video forgery using differences | VIFFD, TDTVD | [VIFFD 0.866, TDTVD 0.9192] | - | - | - |

| [73] | A method of passively detecting blind forgeries to detect frame duplication | SELF-MADE | 0.998 | 0.999 | 0.997 | - |

| [74] | A new approach to video forgery detection using triangle polarity feature classification | Recognition of Human Actions | - | 0.9576 | 0.9826 | - |

| [43] | An approach for detecting frame duplication and shuffling forgeries in surveillance recordings that uses a temporal average and a gray-level co-occurrence matrix | FD&FDs | - | [0.94, 0.99] | [0.96, 0.98] | - |

| [34] | Detection of interframe forgeries using residue differential energy | SULFA, LASIESTA, IVY LAB | - | [Dup 0.97, Ins 0.99, Del 0.97] | [Dup 0.99, Ins 0.99, Del 0.95] | [Dup 0.98, Ins 0.99, Del 0.96] |

| [52] | Using the temporal average of the universal image quality index for surveillance video authentication | SULFA, LASIESTA, IVY LAB | - | [Ins 0.99, Shuf 0.96, Del 0.98] | [Ins 0.99, Shuf 0.97, Del 0.99] | [Ins 0.99, Shuf 0.96, Del 0.98] |

| [63] | A method for detecting frame shuffling in MPEG-coded video | SELF-MADE | - | 1 | 1 | - |

| [64] | Inconsistency in the correlation distribution between Haralick-coded frames | VTL | - | 0.85 | 0.89 | 0.87 |

| [58] | Time- and spatial-domain analysis of quantization effects in MPEG videos | VTL | 0.8339 | - | - | - |

| [67] | Detection of video frame and region duplication forgeries using the correlation and coefficient of variation | SULFA | 0.995 | 1 | 0.99 | 0.994 |

| Ref. | Method | Dataset | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|

| [13] | Digital video multiple forgery detection using a deep neural network based on VGG-16 and KPCA | SULFA, VTL | 0.97 | 0.96 | - | 0.958 |

| [8] | Spatiotemporal analysis-based deep learning identification and localization of different kinds of interframe forgery videos | SELF-MADE | [Ins 0.9898, Del 0.9418] | - | - | - |

| [82] | CNN-based localization of the forged region | SYSU-OBJFORG | [FACC 0.974, DFACC 0.989] | - | - | - |

| [12] | Using the LSTM network and UFS-MSRC algorithm for forgery detection | SULFA, Sondos | [SULFA 0.9813, Sondos 0.9738] | - | - | - |

| [14] | Identification and localization of forgeries in videos using deep learning | VIFFD, UCF-101 | 0.98 | 0.96 | 0.95 | 0.97 |

| [32] | Multimedia forensics and deep learning for tampered video detection | FVC | 0.85 | - | - | - |

| [36] | Using parallel convolutional neural network—VFID-Net | VIFFD, SULFA | [VIFFD 0.865, SULFA 0.92] | - | - | 0.87 |

| [83] | CNN-based detection model with multi-feature extraction framework | - | 0.85 | 0.825 | - | 0.826 |

| [85] | Frame duplication detection with PCA and agglomerative clustering using CNN-based features | SELF-MADE | 0.94 | - | - | - |

| [10] | Multiple forgery detection in videos using convolution neural network | VIFFD, TDTVD | [VIFFD 0.82, TDTVD 0.86] | - | - | - |

| [15] | Deep learning technique for improving the digital forensic approach for interframe video forgery detection | UCF-101 | 0.9914 | - | - | - |

| [2] | Duplicate-frame video forgery detection using a Siamese-based RNN | MFC, VIRAT | [MFC 0.866, VIRAT 0.933] | [MFC 0.875, VIRAT 0.933] | [MFC 0.866, VIRAT 0.933] | [MFC 0.865, VIRAT 0.933] |

| [16] | Using third-order tensor representation with low computational complexity as the basis | VTL | - | 0.99 | 0.99 | 0.99 |

| [78] | Detecting surveillance video forgeries using CNN spatiotemporal characteristics and fusion | VIRAT, SULFA, LASIESTA, IVY LAB | 0.983 | - | - | - |

| [81] | Using a convolutional neural network model to identify video interframe forgeries | VIFFD | 0.9917 | - | - | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ali, M.M.; Ghali, N.I.; Hamza, H.M.; Hosny, K.M.; Vrochidou, E.; Papakostas, G.A. Interframe Forgery Video Detection: Datasets, Methods, Challenges, and Search Directions. Electronics 2025, 14, 2680. https://doi.org/10.3390/electronics14132680
