Real-Time DDoS Detection in High-Speed Networks: A Deep Learning Approach with Multivariate Time Series

Abstract

1. Introduction

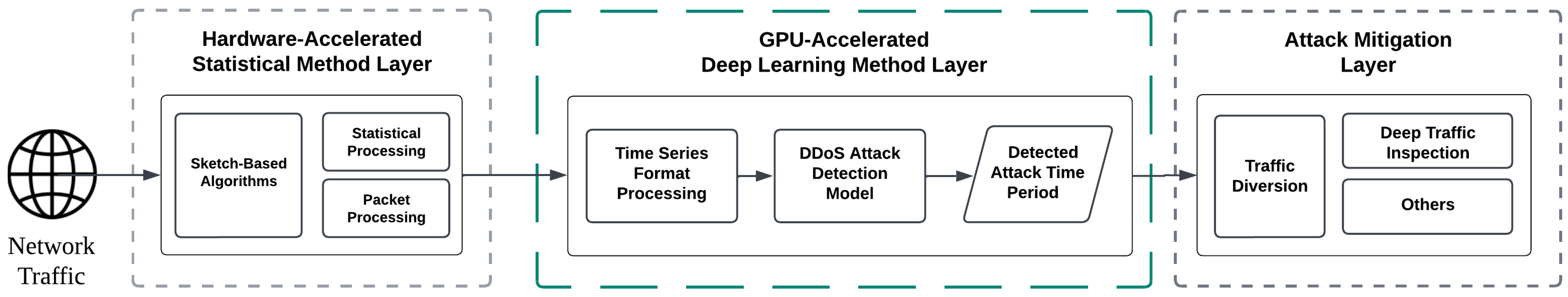

- Reference Architecture for Real-time DDoS Detection: A reference multi-layered architecture for real-time DDoS detection that utilizes hardware acceleration and multivariate time series features. The modular and extendable architecture allows adaptation for different application domains and performance requirements.

- Model Validation: Comprehensive evaluation of deep learning models (TCN, LSTM, Transformer) demonstrating the potential for effective integration into the proposed high-speed architecture.

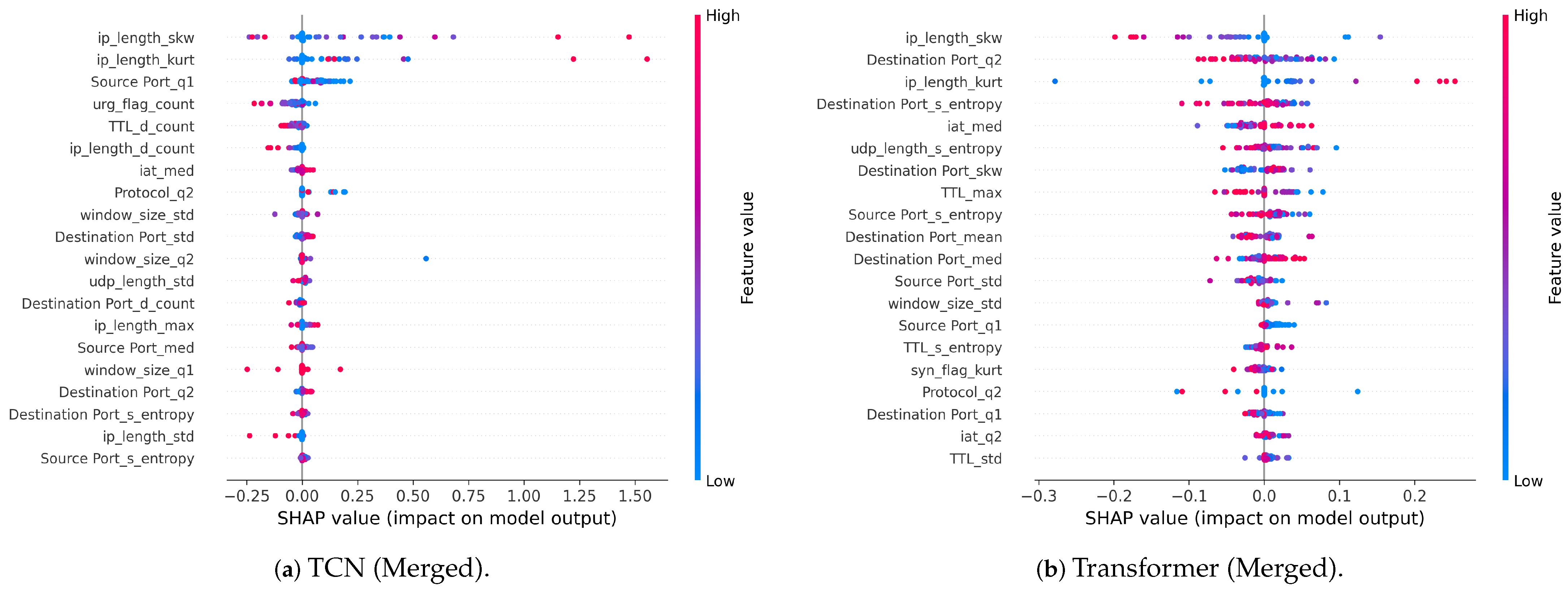

- Feature Selection for Efficiency: Critical features are identified using SHAP analysis to enable lightweight and high-speed processing essential for real-time performance and cost-effective deployment.

2. Related Work

2.1. Detection Approaches

2.2. Flow-Based Features

2.3. Packet-Based Features

3. Proposed Architecture

3.1. Hardware-Accelerated Statistical Method Layer

Sketch-Based Algorithms

- Alon-Matias-Szegedy (AMS) Sketch [25]: Based on the MassDAL [26] AMS Sketch implementation, each feature uses five 512-entry tables with 32-bit entries and approximately 70 KB of storage. The computation requires seven 32-bit multipliers and fourteen 64-bit multipliers to calculate the second to fourth statistical moments.

- Distributed Distribution (DD) Sketch [27]: Implemented as a histogram with 800 entries of 64 bits each, the DD Sketch consumes approximately 43.7 KB of storage. This approach supports quantile estimations with index-based table traversal and cumulative counts.

- Flajolet-Martin (FM) Sketch [28]: Each feature uses a 128×64-bit table for cardinality estimation and requires approximately 2.1 KB of storage. Hashing uses H3 hash functions implemented in combinational logic, which eliminates the need for multipliers.

- Entropy Estimation (Clifford and Cosma Method [29]): This method uses twenty tables of 2048 entries with 32-bit words per feature, resulting in about 1.09 MB of storage. Computational demand is high, with approximately 280 32-bit multipliers employed for weighted frequency calculations and entropy tabulation. Our hardware-accelerated implementation of time-window entropy processing for downstream systems supports packet processing at up to 40 Gbps [16].

- Mean Calculation: Implemented with two 64-bit registers per feature, one for summation and one for count. The mean requires minimal storage (64 bytes) and no multiplier resources.
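To make the cardinality-estimation step more concrete, the following is a minimal software sketch of a Flajolet-Martin estimator in its stochastic-averaging (PCSA) form, sized to match the 128×64-bit table described above. It is an illustration under stated assumptions, not the hardware design: the class name `FMSketch` is hypothetical, and `hashlib.blake2b` stands in for the H3 hash functions that the hardware implements in combinational logic.

```python
import hashlib

PHI = 0.77351  # Flajolet-Martin correction constant


class FMSketch:
    """Flajolet-Martin (PCSA) cardinality sketch with m bitmaps of 64 bits."""

    def __init__(self, num_bitmaps=128):
        self.m = num_bitmaps
        self.bitmaps = [0] * num_bitmaps

    @staticmethod
    def _hash64(item):
        # Software stand-in for the hardware H3 hash (assumption).
        digest = hashlib.blake2b(str(item).encode(), digest_size=8).digest()
        return int.from_bytes(digest, "big")

    def add(self, item):
        h = self._hash64(item)
        index = h % self.m   # selects which bitmap to update
        rest = h // self.m   # remaining bits select the bit position
        # Position of the least-significant 1 bit (capped when rest == 0).
        rho = (rest & -rest).bit_length() - 1 if rest else 63
        self.bitmaps[index] |= 1 << rho

    def estimate(self):
        total = 0
        for bitmap in self.bitmaps:
            r = 0
            while (bitmap >> r) & 1:  # index of the lowest unset bit
                r += 1
            total += r
        return self.m / PHI * 2 ** (total / self.m)


sketch = FMSketch()
for i in range(10000):
    sketch.add(f"10.0.{i // 256}.{i % 256}")  # e.g. distinct source addresses
approx_distinct = sketch.estimate()
```

Because each update only sets a bit, re-inserting an item never changes the sketch, which is why the structure counts distinct values rather than packets. The estimator is biased for very small cardinalities, so a production design would typically add a small-range correction.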

3.2. GPU-Accelerated Deep Learning Method Layer

3.3. Attack Mitigation Layer

4. Deep Learning for Time Series DDoS Detection

4.1. Multivariate Time Series Data

4.1.1. Dataset

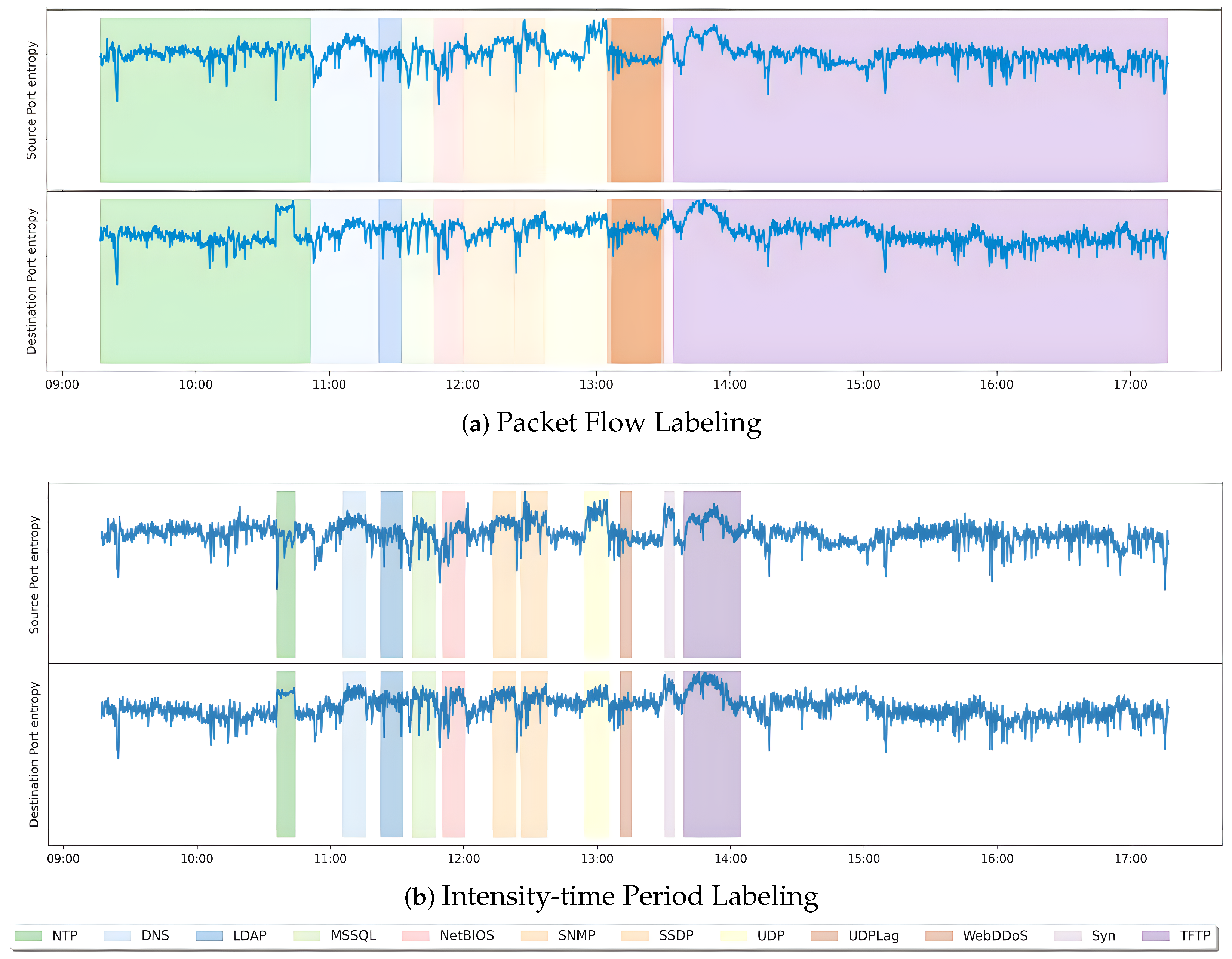

- Packet flow labeling: Labels assigned to each packet are used to examine attack vectors and specific traits in detail.

- Intensity-time period labeling: Data are labeled according to the periods in which the attack’s intensity surpasses set thresholds, emphasizing segments with peak attacks, based on Table 2.
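The intensity-time period idea can be sketched as a simple thresholding step over per-second attack packet counts. This is only an illustration: the function name, the `threshold` value, and the use of packet count as the intensity measure are assumptions, since the actual thresholds are not reproduced in this section.

```python
def label_intensity_periods(attack_counts, threshold, attack_label,
                            benign_label="Benign"):
    """Label each 1-s window by whether attack intensity exceeds a threshold.

    attack_counts: attack packets observed in each 1-s window.
    threshold: hypothetical intensity cutoff (not taken from the source).
    """
    return [attack_label if count > threshold else benign_label
            for count in attack_counts]


# Hypothetical per-second attack packet counts around one attack period.
counts = [0, 12, 950, 1200, 30]
labels = label_intensity_periods(counts, threshold=100, attack_label="Syn")
# labels == ["Benign", "Benign", "Syn", "Syn", "Benign"]
```

Under this scheme, low-intensity seconds at the edges of an attack fall back to the benign class, so only the peak segments carry the attack label.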

4.1.2. Data Preprocessing

- Dataset Cleaning: The dataset contains several records that are irrelevant to creating time series data. Features not associated with Network Layers 2 and 3, along with sparse values, are removed so that the model focuses on essential features.

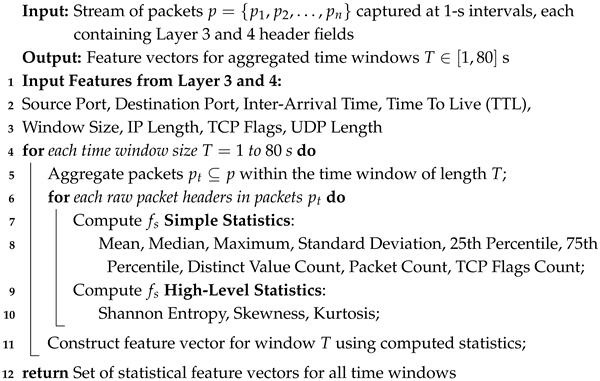

- Feature Extraction: The dataset consists of raw packet-level headers from Layers 3 and 4 of the network traffic, from which statistical methods extract significant information. In line with prior works [31,35,36], we adopt a preprocessing approach that transforms low-level raw packet features into statistical features. The raw packet headers are captured at 1-s intervals, and statistical features are extracted from each packet header field, including source/destination ports, inter-arrival time, time to live (TTL), window size, IP length, TCP flags, and UDP length. As detailed in Algorithm 1, for each time window s, the algorithm aggregates these raw packet headers into statistical features by computing both simple statistics (mean, median, max, standard deviation, 25th and 75th percentiles, distinct count, packet count, and flags count) and high-level statistics (Shannon entropy, skewness, and kurtosis). This multivariate temporal representation captures variations in traffic behavior and is essential for classification tasks such as anomaly detection or DDoS attack classification.

- Feature Scale: We normalize the data with MinMaxScaler, which rescales each feature to the range [0, 1] using its minimum and maximum values. Normalization eliminates the bias caused by features having different scales and ensures that each feature contributes equally to model training.

- Time Series Formatting: The dataset was transformed into a time series format using a sliding window approach. Each preprocessed feature is arranged into sequential windows of different time series lengths, measured in seconds. For multivariate time series classification, the input is a set of pairs $(X, y)$, where $X$ represents the input feature sequence and $y$ is the corresponding label. Specifically, $X$ has the shape $(n, T, F)$, where $n$ is the number of samples, $T$ is the time window length, and $F$ is the number of features.
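The scaling and windowing steps can be sketched in dependency-free Python as follows. The function names are hypothetical, the stride of one step and the choice of labeling each window by its last time step are assumptions, and `min_max_scale` only mirrors what a min-max scaler does rather than reproducing the authors' pipeline.

```python
def min_max_scale(rows):
    """Rescale each feature column of `rows` (N x F) to the range [0, 1]."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    scaled = []
    for row in rows:
        scaled.append([
            (value - lo) / (hi - lo) if hi > lo else 0.0  # constant column -> 0
            for value, lo, hi in zip(row, lows, highs)
        ])
    return scaled


def sliding_windows(rows, labels, window_len):
    """Build n = N - T + 1 overlapping windows, each of shape (T, F).

    Each window takes the label of its last time step (an assumption).
    """
    X, y = [], []
    for end in range(window_len, len(rows) + 1):
        X.append(rows[end - window_len:end])
        y.append(labels[end - 1])
    return X, y


# Four 1-s feature rows with two features each, and one label per second.
rows = [[0, 10], [5, 20], [10, 30], [5, 40]]
labels = [0, 0, 1, 1]
X, y = sliding_windows(min_max_scale(rows), labels, window_len=3)
# len(X) == 2 windows, each with 3 time steps of 2 features
```

In practice the minimum and maximum would be computed on the training split only and then reused for validation and test data, so that no information leaks from the held-out sets.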

Algorithm 1: Multivariate statistical feature extraction from network traffic.
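As a rough illustration of the per-window aggregation described for Algorithm 1, the sketch below groups packets into 1-s windows and computes the listed statistics for each header field. It is a hedged approximation, not the published algorithm: the function names, the packet dictionary layout, the `skew` and `kurt` suffixes, the use of population moments, and the "inclusive" quantile method are all assumptions, and the flags count is omitted for brevity.

```python
import math
import statistics
from collections import Counter, defaultdict


def summarize(values):
    """Simple and high-level statistics for one header field in one window."""
    n = len(values)
    mean = statistics.fmean(values)
    m2 = sum((v - mean) ** 2 for v in values) / n  # population variance
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    if n > 1:
        q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    else:
        q1 = q3 = values[0]
    counts = Counter(values)
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())
    return {
        "mean": mean,
        "med": statistics.median(values),
        "max": max(values),
        "std": math.sqrt(m2),
        "q1": q1,
        "q3": q3,
        "d_count": len(counts),        # distinct count
        "s_entropy": entropy,          # Shannon entropy in bits
        "skew": m3 / m2 ** 1.5 if m2 > 0 else 0.0,
        "kurt": m4 / m2 ** 2 if m2 > 0 else 0.0,  # non-excess kurtosis
    }


def extract_features(packets, fields):
    """Aggregate packets (dicts with a 'time' key in seconds) into 1-s windows."""
    windows = defaultdict(list)
    for pkt in packets:
        windows[int(pkt["time"])].append(pkt)
    rows = {}
    for second in sorted(windows):
        group = windows[second]
        row = {"packet_count": len(group)}
        for field in fields:
            stats = summarize([pkt[field] for pkt in group])
            row.update({f"{field}_{name}": value for name, value in stats.items()})
        rows[second] = row
    return rows
```

Calling `extract_features(packets, ["ttl", "window_size"])` would yield one feature row per second, with keys such as `ttl_s_entropy` or `window_size_q1`; stacking these rows in time order gives the multivariate series that the later windowing step consumes.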

4.2. Deep Learning Models

4.2.1. Temporal Convolutional Networks (TCN)

4.2.2. Long Short-Term Memory (LSTM)

4.2.3. Transformer

4.3. SHAP (SHapley Additive exPlanations) Analysis

4.4. Performance Evaluation

- Precision: The fraction of positive predictions that are correct, computed as the true positives over all samples predicted as positive.

- Recall: The fraction of actual positives that are correctly identified, computed as the true positives over all positive samples in the data.

- F1-Score: The harmonic mean of precision and recall.

- Average Inference Time: The average time the model takes to produce a prediction in a serving environment.
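The four metrics above can be sketched for a single positive class as follows. The function names are hypothetical, and the way per-class values are averaged across the attack classes is not specified in this section, so no averaging is shown. The timing helper measures wall-clock time around a caller-supplied `predict` function, which is an assumption about how inference time might be sampled.

```python
import time


def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall, and F1 for one class treated as positive."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t == positive)
    predicted = sum(1 for p in y_pred if p == positive)
    actual = sum(1 for t in y_true if t == positive)
    precision = tp / predicted if predicted else 0.0
    recall = tp / actual if actual else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def average_inference_time_ms(predict, samples):
    """Mean wall-clock time per prediction, in milliseconds."""
    start = time.perf_counter()
    for sample in samples:
        predict(sample)
    return (time.perf_counter() - start) * 1000 / len(samples)


y_true = [1, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred, positive=1)
# Two of three positive predictions are correct, and two of three
# actual positives are found, so all three metrics equal 2/3.
```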

5. Results and Discussion

5.1. Packet Flow vs. Intensity-Time Period Labeling

5.1.1. Anomaly Detection

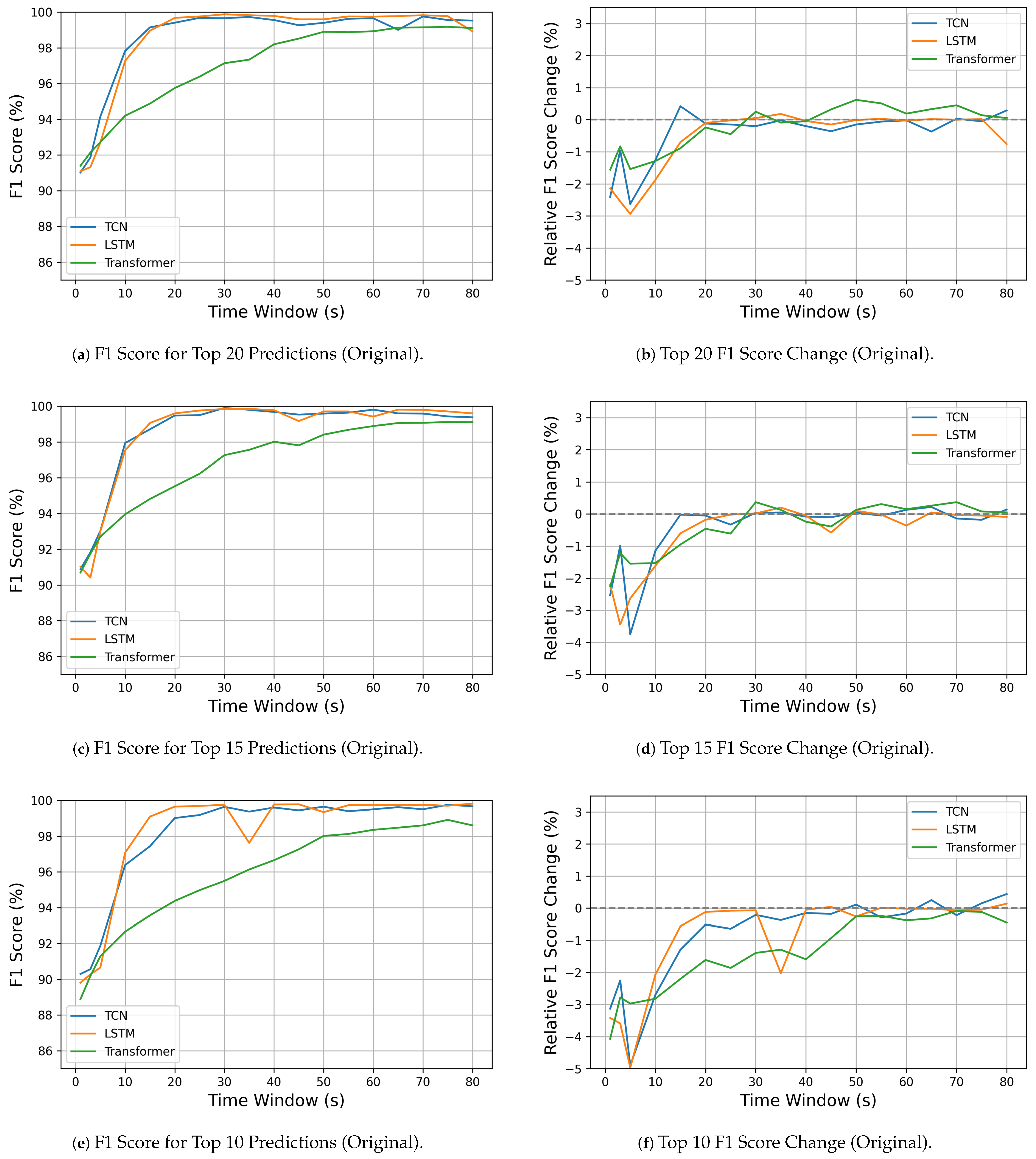

5.1.2. Distributed Denial-of-Service Attack Classification

5.2. Simulating High-Volume Background Traffic for DDoS Detection

5.2.1. Evaluation of DDoS Detection Models in High-Volume Conditions

5.2.2. Reliability and Efficiency of DDoS Model Architectures

5.3. SHAP Analysis Simulation

5.3.1. SHAP Feature Importance on Original Background Traffic

5.3.2. SHAP Feature Importance on MAWI-CICDDoS2019 Merged Background Traffic

5.3.3. Common Discriminative Features Analysis

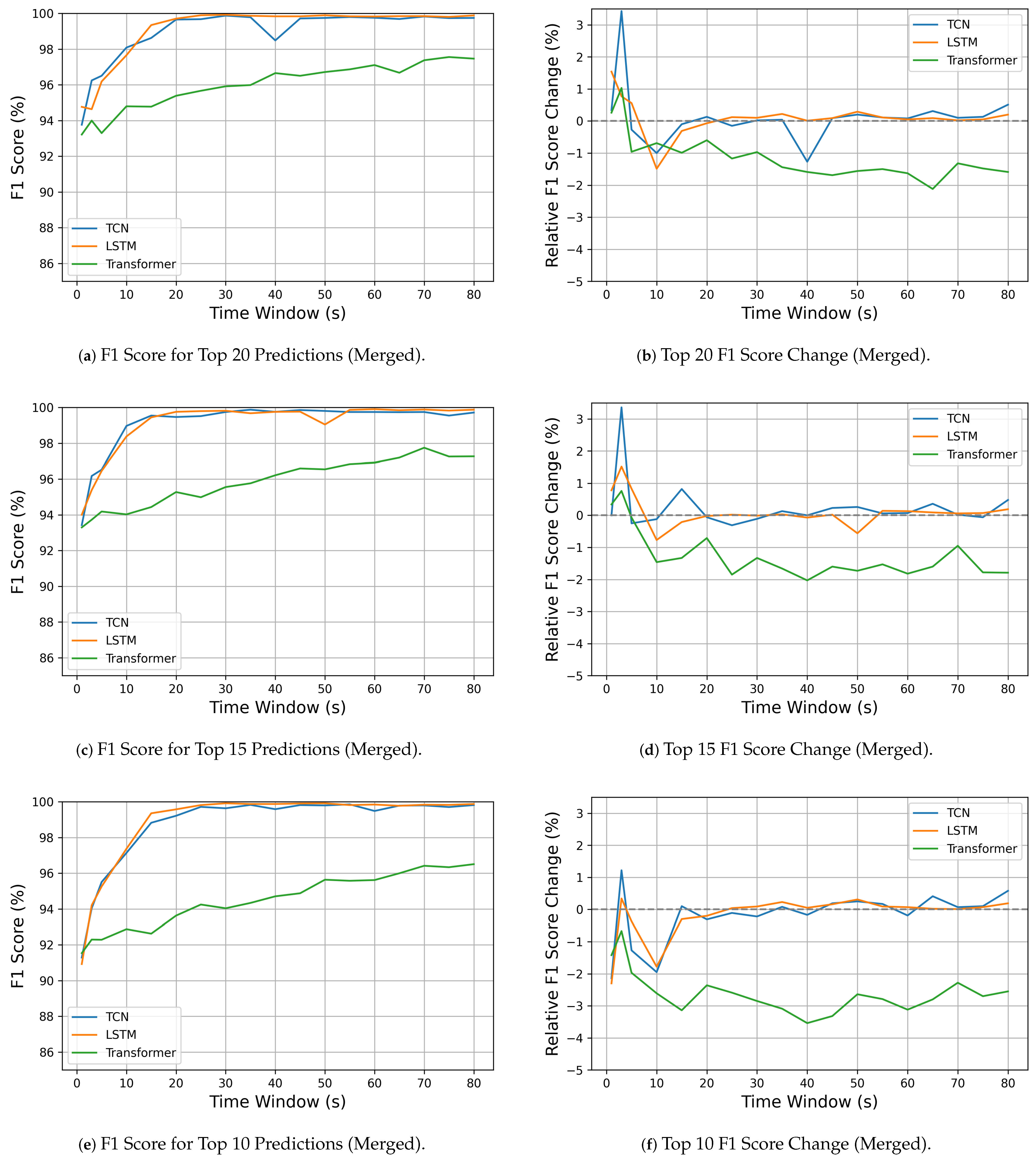

5.4. Model Performance Trends Across Detection Scenarios

5.4.1. Model Detection Performance

5.4.2. Inference Latency and Computational Efficiency

6. Threats to Validity

6.1. Threats to External Validity

6.2. Threats to Internal Validity

6.3. Threats to Construct Validity

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Model Parameters

| Parameter | Value |

|---|---|

| Number of convolutional layers | 2 |

| Conv1d layer 1: out channels | 64 |

| Conv1d layer 1: kernel size | 3 |

| Conv1d layer 1: padding | 1 |

| Conv1d layer 2: out channels | 128 |

| Conv1d layer 2: kernel size | 3 |

| Conv1d layer 2: padding | 1 |

| Global average pooling | 1 |

| Fully connected layer | 256 |

| Activation functions | ReLU |

| Optimizer | Adam |

| Parameter | Value |

|---|---|

| Number of layers | 4 |

| Number of LSTM units | 256 |

| Dropout rate | 0.3 |

| Optimizer | Adam |

| Parameter | Value |

|---|---|

| Number of layers | 6 |

| Number of attention heads | 4 |

| Feed-forward dimension | 512 |

| Embedding/model dimension | 512 |

| Positional encoding | Yes |

| Masking | No |

| Dropout rate | 0.5 |

| Optimizer | Adagrad |

Appendix B. Detailed Model Metrics

| TW (s) | TCN Rec (%) | TCN Pre (%) | TCN F1 (%) | TCN AIT (ms) | LSTM Rec (%) | LSTM Pre (%) | LSTM F1 (%) | LSTM AIT (ms) | Transformer Rec (%) | Transformer Pre (%) | Transformer F1 (%) | Transformer AIT (ms) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 98.02 | 98.01 | 98.01 | 0.47 | 97.95 | 97.95 | 97.94 | 0.41 | 97.25 | 97.25 | 97.24 | 2.09 |

| 3 | 94.96 | 94.98 | 94.96 | 0.46 | 95.82 | 95.81 | 95.81 | 0.57 | 94.94 | 95.14 | 95.00 | 2.28 |

| 5 | 95.69 | 95.67 | 95.67 | 0.49 | 93.73 | 93.77 | 93.74 | 0.73 | 93.06 | 94.05 | 93.31 | 2.26 |

| 10 | 95.69 | 95.72 | 95.70 | 0.47 | 94.09 | 94.06 | 94.07 | 0.88 | 93.81 | 94.23 | 93.94 | 2.29 |

| 15 | 97.74 | 97.73 | 97.73 | 0.49 | 96.84 | 96.84 | 96.83 | 1.09 | 94.46 | 94.94 | 94.59 | 2.30 |

| 20 | 97.42 | 97.55 | 97.45 | 0.46 | 96.95 | 96.95 | 96.94 | 1.11 | 93.92 | 94.71 | 94.14 | 2.33 |

| 25 | 98.37 | 98.36 | 98.35 | 0.51 | 98.62 | 98.62 | 98.61 | 1.39 | 95.82 | 95.91 | 95.85 | 2.20 |

| 30 | 98.10 | 98.18 | 98.12 | 0.48 | 97.85 | 97.85 | 97.84 | 1.51 | 96.32 | 96.50 | 96.37 | 2.28 |

| 35 | 98.47 | 98.47 | 98.44 | 0.45 | 98.85 | 98.86 | 98.85 | 1.70 | 96.40 | 96.66 | 96.48 | 2.24 |

| 40 | 98.78 | 98.80 | 98.78 | 0.40 | 98.20 | 98.19 | 98.19 | 1.79 | 95.27 | 96.06 | 95.46 | 2.19 |

| 45 | 99.05 | 99.05 | 99.04 | 0.51 | 99.15 | 99.15 | 99.15 | 1.98 | 96.98 | 97.13 | 97.03 | 2.27 |

| 50 | 99.00 | 99.00 | 98.99 | 0.47 | 98.60 | 98.60 | 98.60 | 2.10 | 96.88 | 96.89 | 96.88 | 2.21 |

| 55 | 99.38 | 99.39 | 99.38 | 0.52 | 99.17 | 99.17 | 99.16 | 2.38 | 97.45 | 97.61 | 97.49 | 2.47 |

| 60 | 99.05 | 99.08 | 99.05 | 0.48 | 99.22 | 99.21 | 99.21 | 3.16 | 97.63 | 97.72 | 97.66 | 2.44 |

| 65 | 99.53 | 99.53 | 99.53 | 0.49 | 99.62 | 99.62 | 99.61 | 2.59 | 97.85 | 97.90 | 97.86 | 2.39 |

| 70 | 98.85 | 98.92 | 98.86 | 0.51 | 99.00 | 99.01 | 99.00 | 2.86 | 97.90 | 97.91 | 97.90 | 2.49 |

| 75 | 99.47 | 99.47 | 99.46 | 0.59 | 99.50 | 99.51 | 99.50 | 2.98 | 97.65 | 97.89 | 97.70 | 2.54 |

| 80 | 98.93 | 98.96 | 98.94 | 0.53 | 99.07 | 99.07 | 99.06 | 3.09 | 97.76 | 97.93 | 97.80 | 2.55 |

| TW (s) | TCN Rec (%) | TCN Pre (%) | TCN F1 (%) | TCN AIT (ms) | LSTM Rec (%) | LSTM Pre (%) | LSTM F1 (%) | LSTM AIT (ms) | Transformer Rec (%) | Transformer Pre (%) | Transformer F1 (%) | Transformer AIT (ms) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 95.31 | 95.37 | 95.28 | 0.46 | 95.27 | 95.43 | 95.23 | 0.56 | 95.07 | 95.20 | 95.03 | 2.11 |

| 3 | 94.51 | 94.50 | 94.49 | 0.47 | 94.01 | 94.00 | 94.00 | 0.55 | 95.21 | 95.25 | 95.18 | 2.27 |

| 5 | 97.10 | 97.10 | 97.10 | 0.48 | 95.77 | 95.78 | 95.76 | 0.73 | 95.57 | 95.60 | 95.55 | 2.37 |

| 10 | 99.70 | 99.70 | 99.70 | 0.49 | 98.82 | 98.82 | 98.81 | 1.10 | 96.40 | 96.40 | 96.40 | 2.38 |

| 15 | 99.70 | 99.70 | 99.70 | 0.47 | 99.72 | 99.72 | 99.71 | 1.13 | 96.75 | 96.75 | 96.75 | 2.39 |

| 20 | 99.57 | 99.57 | 99.56 | 0.48 | 99.83 | 99.83 | 99.83 | 1.18 | 96.99 | 97.03 | 96.97 | 2.51 |

| 25 | 99.83 | 99.83 | 99.83 | 0.49 | 99.77 | 99.77 | 99.76 | 1.33 | 97.54 | 97.53 | 97.53 | 2.14 |

| 30 | 99.83 | 99.83 | 99.83 | 0.46 | 99.87 | 99.87 | 99.86 | 1.61 | 99.87 | 99.87 | 97.83 | 2.19 |

| 35 | 99.87 | 99.87 | 99.86 | 0.49 | 99.70 | 99.70 | 99.70 | 1.72 | 97.98 | 97.98 | 97.98 | 2.17 |

| 40 | 99.72 | 99.72 | 99.71 | 0.42 | 99.75 | 99.75 | 99.75 | 1.79 | 98.48 | 98.48 | 98.48 | 2.23 |

| 45 | 99.83 | 99.83 | 99.83 | 0.46 | 99.83 | 99.83 | 99.83 | 1.99 | 98.32 | 98.33 | 98.31 | 2.29 |

| 50 | 99.68 | 99.68 | 99.68 | 0.49 | 99.75 | 99.75 | 99.75 | 2.46 | 98.17 | 98.19 | 98.16 | 4.29 |

| 55 | 99.82 | 99.82 | 99.81 | 0.49 | 99.83 | 99.83 | 99.83 | 2.34 | 98.97 | 98.97 | 98.96 | 2.44 |

| 60 | 99.82 | 99.82 | 99.81 | 0.49 | 99.35 | 99.35 | 99.34 | 2.50 | 98.58 | 98.58 | 98.58 | 2.48 |

| 65 | 99.40 | 99.41 | 99.40 | 0.49 | 99.80 | 99.80 | 99.79 | 2.63 | 98.00 | 98.04 | 98.00 | 2.43 |

| 70 | 99.75 | 99.75 | 99.74 | 0.52 | 99.28 | 99.29 | 99.28 | 3.58 | 98.83 | 98.85 | 98.83 | 2.42 |

| 75 | 99.80 | 99.80 | 99.79 | 0.52 | 99.83 | 99.83 | 99.83 | 3.03 | 98.95 | 98.95 | 98.94 | 2.47 |

| 80 | 99.62 | 99.62 | 99.61 | 0.49 | 99.72 | 99.72 | 99.71 | 3.16 | 99.13 | 98.13 | 98.13 | 2.47 |

| TW (s) | TCN Rec (%) | TCN Pre (%) | TCN F1 (%) | TCN AIT (ms) | LSTM Rec (%) | LSTM Pre (%) | LSTM F1 (%) | LSTM AIT (ms) | Transformer Rec (%) | Transformer Pre (%) | Transformer F1 (%) | Transformer AIT (ms) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 78.42 | 78.45 | 77.91 | 0.47 | 79.27 | 79.05 | 78.97 | 0.40 | 75.97 | 75.85 | 75.47 | 2.08 |

| 3 | 80.23 | 80.09 | 80.05 | 0.53 | 79.00 | 78.95 | 78.83 | 0.55 | 77.72 | 77.67 | 77.58 | 2.37 |

| 5 | 84.09 | 84.10 | 83.99 | 0.46 | 82.81 | 82.69 | 82.62 | 0.70 | 79.83 | 79.84 | 79.76 | 2.31 |

| 10 | 91.83 | 92.01 | 91.84 | 0.48 | 90.51 | 90.48 | 90.46 | 0.87 | 81.89 | 82.43 | 82.06 | 2.28 |

| 15 | 94.41 | 94.64 | 94.38 | 0.47 | 96.34 | 96.33 | 96.33 | 1.03 | 83.94 | 83.94 | 83.90 | 2.32 |

| 20 | 96.17 | 96.30 | 96.18 | 0.45 | 96.35 | 96.40 | 96.36 | 1.19 | 85.10 | 85.42 | 85.18 | 2.34 |

| 25 | 97.20 | 97.27 | 97.19 | 0.45 | 97.84 | 97.83 | 97.83 | 1.35 | 85.96 | 86.33 | 86.02 | 2.16 |

| 30 | 97.34 | 97.39 | 97.32 | 0.39 | 96.67 | 96.77 | 96.57 | 1.60 | 87.66 | 87.87 | 87.69 | 2.79 |

| 35 | 97.55 | 97.62 | 97.56 | 0.46 | 98.68 | 98.68 | 98.68 | 1.58 | 89.06 | 89.20 | 89.09 | 2.25 |

| 40 | 98.12 | 98.12 | 98.11 | 0.43 | 98.00 | 98.00 | 97.99 | 1.75 | 89.50 | 89.90 | 89.58 | 2.20 |

| 45 | 97.93 | 98.00 | 97.92 | 0.48 | 98.85 | 98.85 | 98.84 | 1.89 | 90.80 | 91.10 | 90.87 | 2.24 |

| 50 | 98.08 | 98.12 | 98.08 | 0.46 | 98.60 | 98.61 | 98.60 | 2.04 | 90.93 | 91.07 | 90.97 | 2.17 |

| 55 | 98.87 | 98.89 | 98.87 | 0.50 | 96.20 | 96.68 | 96.25 | 2.29 | 93.13 | 93.22 | 93.15 | 2.39 |

| 60 | 98.58 | 98.59 | 98.57 | 0.52 | 98.90 | 98.91 | 98.89 | 2.38 | 93.71 | 93.84 | 93.72 | 2.45 |

| 65 | 98.58 | 98.62 | 98.58 | 0.48 | 99.18 | 99.19 | 99.18 | 2.56 | 93.81 | 93.81 | 93.79 | 2.42 |

| 70 | 98.91 | 98.92 | 98.91 | 0.50 | 98.92 | 98.92 | 98.91 | 2.69 | 93.38 | 94.16 | 93.43 | 2.51 |

| 75 | 97.63 | 97.81 | 97.60 | 0.49 | 99.22 | 99.22 | 99.21 | 2.88 | 94.25 | 94.40 | 94.23 | 2.44 |

| 80 | 98.25 | 98.32 | 98.26 | 0.50 | 99.05 | 99.05 | 99.04 | 2.93 | 94.88 | 95.03 | 94.90 | 2.65 |

| TW (s) | TCN Rec (%) | TCN Pre (%) | TCN F1 (%) | TCN AIT (ms) | LSTM Rec (%) | LSTM Pre (%) | LSTM F1 (%) | LSTM AIT (ms) | Transformer Rec (%) | Transformer Pre (%) | Transformer F1 (%) | Transformer AIT (ms) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 93.63 | 93.35 | 93.43 | 0.55 | 93.33 | 93.24 | 93.23 | 0.41 | 93.36 | 92.78 | 92.96 | 2.15 |

| 3 | 92.28 | 93.67 | 92.82 | 0.47 | 94.09 | 93.72 | 93.87 | 0.56 | 92.78 | 93.41 | 92.97 | 2.33 |

| 5 | 96.72 | 96.94 | 96.78 | 0.48 | 95.69 | 95.60 | 95.63 | 0.71 | 94.13 | 94.56 | 94.26 | 2.38 |

| 10 | 99.08 | 99.13 | 99.09 | 0.46 | 99.17 | 99.17 | 99.15 | 0.86 | 95.37 | 95.69 | 95.49 | 2.44 |

| 15 | 98.67 | 98.92 | 98.73 | 0.39 | 99.67 | 99.67 | 99.66 | 1.01 | 95.67 | 96.05 | 95.77 | 2.39 |

| 20 | 99.53 | 99.55 | 99.53 | 0.47 | 99.78 | 99.79 | 99.78 | 1.44 | 95.84 | 96.32 | 95.99 | 2.40 |

| 25 | 99.83 | 99.83 | 99.83 | 0.49 | 99.78 | 99.78 | 99.78 | 1.34 | 96.77 | 97.01 | 96.84 | 2.22 |

| 30 | 99.87 | 99.87 | 99.86 | 0.44 | 99.83 | 99.83 | 99.83 | 1.54 | 96.89 | 96.98 | 96.89 | 2.30 |

| 35 | 99.75 | 99.75 | 99.75 | 0.45 | 99.65 | 99.67 | 99.65 | 1.68 | 97.37 | 97.57 | 97.43 | 2.24 |

| 40 | 99.77 | 99.77 | 99.76 | 0.38 | 99.83 | 99.83 | 99.83 | 1.77 | 98.28 | 98.25 | 98.25 | 2.24 |

| 45 | 99.63 | 99.64 | 99.63 | 0.45 | 99.75 | 99.75 | 99.75 | 1.97 | 98.20 | 98.24 | 98.20 | 2.26 |

| 50 | 99.55 | 99.56 | 99.55 | 0.38 | 99.62 | 99.62 | 99.61 | 2.14 | 98.27 | 98.31 | 98.28 | 2.25 |

| 55 | 99.70 | 99.70 | 99.69 | 0.49 | 99.73 | 99.73 | 99.73 | 3.06 | 98.32 | 98.49 | 98.37 | 2.52 |

| 60 | 99.68 | 99.69 | 99.68 | 0.43 | 99.78 | 99.79 | 99.78 | 2.42 | 98.73 | 98.77 | 98.74 | 2.45 |

| 65 | 99.38 | 99.40 | 99.38 | 0.46 | 99.77 | 99.77 | 99.76 | 2.64 | 98.80 | 98.83 | 98.80 | 2.47 |

| 70 | 99.73 | 99.74 | 99.73 | 0.50 | 99.83 | 99.83 | 99.83 | 2.81 | 98.70 | 98.72 | 98.70 | 2.51 |

| 75 | 99.62 | 99.62 | 99.61 | 0.47 | 99.77 | 99.77 | 99.76 | 2.95 | 99.03 | 99.07 | 99.04 | 2.57 |

| 80 | 99.25 | 99.25 | 99.24 | 0.49 | 99.70 | 99.70 | 99.69 | 3.29 | 99.07 | 99.07 | 99.06 | 2.55 |

| TW (s) | CNN Rec (%) | CNN Pre (%) | CNN F1 (%) | CNN AIT (ms) | LSTM Rec (%) | LSTM Pre (%) | LSTM F1 (%) | LSTM AIT (ms) | Transformer Rec (%) | Transformer Pre (%) | Transformer F1 (%) | Transformer AIT (ms) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 94.22 | 96.61 | 95.10 | 0.42 | 95.47 | 96.79 | 95.96 | 0.37 | 94.19 | 95.96 | 94.88 | 2.09 |

| 3 | 96.70 | 97.26 | 96.91 | 0.43 | 96.36 | 96.69 | 96.50 | 0.55 | 94.58 | 96.15 | 95.18 | 2.34 |

| 5 | 97.42 | 98.04 | 97.63 | 0.44 | 97.36 | 97.65 | 97.47 | 0.65 | 94.49 | 96.48 | 95.22 | 2.30 |

| 10 | 98.91 | 99.18 | 98.99 | 0.43 | 99.00 | 99.04 | 99.01 | 0.85 | 95.16 | 96.86 | 95.77 | 2.33 |

| 15 | 99.00 | 99.12 | 99.02 | 0.42 | 99.36 | 99.39 | 99.37 | 0.97 | 94.77 | 96.81 | 95.47 | 2.37 |

| 20 | 99.74 | 99.74 | 99.73 | 0.40 | 99.78 | 99.78 | 99.77 | 1.13 | 95.70 | 97.17 | 96.22 | 2.35 |

| 25 | 99.70 | 99.73 | 99.70 | 0.43 | 99.39 | 99.42 | 99.39 | 1.31 | 96.11 | 97.61 | 96.64 | 2.22 |

| 30 | 99.76 | 99.77 | 99.76 | 0.39 | 99.95 | 99.95 | 99.94 | 1.47 | 96.57 | 97.48 | 96.90 | 2.21 |

| 35 | 99.77 | 99.77 | 99.77 | 0.39 | 99.90 | 99.90 | 99.89 | 1.66 | 96.06 | 97.55 | 96.56 | 2.23 |

| 40 | 99.80 | 99.81 | 99.80 | 0.39 | 99.86 | 99.86 | 99.86 | 1.79 | 96.06 | 97.65 | 96.59 | 2.22 |

| 45 | 99.81 | 99.82 | 99.81 | 0.44 | 98.88 | 99.27 | 99.00 | 2.00 | 95.71 | 97.61 | 96.34 | 2.24 |

| 50 | 99.86 | 99.87 | 99.86 | 0.40 | 99.92 | 99.92 | 99.92 | 2.15 | 96.02 | 97.63 | 96.54 | 2.24 |

| 55 | 99.77 | 99.78 | 99.77 | 0.41 | 99.91 | 99.92 | 99.91 | 2.33 | 95.71 | 97.56 | 96.30 | 2.49 |

| 60 | 99.70 | 99.71 | 99.70 | 0.42 | 99.82 | 99.82 | 99.82 | 2.44 | 96.74 | 97.84 | 97.10 | 2.46 |

| 65 | 99.76 | 99.76 | 99.76 | 0.41 | 99.75 | 99.76 | 99.75 | 2.74 | 96.63 | 97.78 | 97.02 | 2.41 |

| 70 | 99.70 | 99.71 | 99.70 | 0.40 | 99.89 | 99.89 | 99.88 | 2.84 | 97.03 | 98.06 | 97.36 | 2.50 |

| 75 | 99.85 | 99.85 | 99.84 | 0.39 | 99.78 | 99.78 | 99.77 | 3.03 | 96.55 | 97.83 | 96.96 | 2.48 |

| 80 | 99.68 | 99.69 | 99.68 | 0.42 | 99.86 | 99.86 | 99.85 | 3.15 | 96.38 | 97.71 | 96.80 | 2.48 |

Appendix C. SHAP Analysis Cumulative Graph

References

- Jakober, L. Akamai Mitigates Sophisticated 1.44 Tbps and 385 Mpps DDoS Attack. Security Boulevard, Boca Raton, FL, USA. 2020. Available online: https://securityboulevard.com/2020/06/akamai-mitigates-sophisticated-1-44-tbps-and-385-mpps-ddos-attack (accessed on 11 April 2025).

- StormWall. 2024 in Review: DDoS Attacks Report by StormWall. StormWall s.r.o., Bratislava, Slovakia. 2025. Available online: https://stormwall.network/resources/blog/ddos-attack-statistics-2024 (accessed on 12 June 2025).

- Wallance, R. Annual DDoS Report 2024 Trends and Insights. Vercara, Herndon, VA, USA. 2025. Available online: https://vercara.digicert.com/resources/annual-ddos-report-2024-trends-and-insights (accessed on 12 June 2025).

- Yoachimik, O.; Pacheco, J. Record-Breaking 5.6 Tbps DDoS Attack and Global DDoS Trends for 2024 Q4. Cloudflare, London, UK. 2025. Available online: https://blog.cloudflare.com/ddos-threat-report-for-2024-q4/ (accessed on 12 June 2025).

- Wagner, D.; Kopp, D.; Wichtlhuber, M.; Dietzel, C.; Hohlfeld, O.; Smaragdakis, G.; Feldmann, A. United We Stand: Collaborative Detection and Mitigation of Amplification DDoS Attacks at Scale. In Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, Virtual Event, Republic of Korea, 15–19 November 2021; pp. 970–987.

- Sayed, M.S.E.; Le-Khac, N.A.; Azer, M.A.; Jurcut, A.D. A Flow-Based Anomaly Detection Approach With Feature Selection Method Against DDoS Attacks in SDNs. IEEE Trans. Cogn. Commun. Netw. 2022, 8, 1862–1880.

- Fouladi, R.F.; Ermis, O.; Anarim, E. A DDoS attack detection and defense scheme using time-series analysis for SDN. J. Inf. Secur. Appl. 2020, 54, 102587.

- Tan, L.; Pan, Y.; Wu, J.; Zhou, J.; Jiang, H.; Deng, Y. A New Framework for DDoS Attack Detection and Defense in SDN Environment. IEEE Access 2020, 8, 161908–161919.

- Haseeb-ur rehman, R.M.A.; Aman, A.H.M.; Hasan, M.K.; Ariffin, K.A.Z.; Namoun, A.; Tufail, A.; Kim, K.H. High-Speed Network DDoS Attack Detection: A Survey. Sensors 2023, 23, 6850.

- Salopek, D.; Mikuc, M. Enhancing Mitigation of Volumetric DDoS Attacks: A Hybrid FPGA/Software Filtering Datapath. Sensors 2023, 23, 7636.

- Scherrer, S.; Vliegen, J.; Sateesan, A.; Hsiao, H.C.; Mentens, N.; Perrig, A. ALBUS: A Probabilistic Monitoring Algorithm to Counter Burst-Flood Attacks. In Proceedings of the 2023 42nd International Symposium on Reliable Distributed Systems (SRDS), Marrakesh, Morocco, 25–29 September 2023; pp. 162–172.

- Hoque, N.; Kashyap, H.; Bhattacharyya, D. Real-time DDoS attack detection using FPGA. Comput. Commun. 2017, 110, 48–58.

- Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Nice, France, 2017; Volume 30.

- Gaur, V.; Dogra, A.; Gupta, A.; Tibrewal, A. Multiclass classification for DDoS attacks using LSTM time-series model. In Proceedings of the 7th International Conference on Computing in Engineering & Technology (ICCET 2022), Online, 12–13 February 2022; pp. 135–141.

- Shi, H.; Cheng, G.; Hu, Y.; Wang, F.; Ding, H. RT-SAD: Real-Time Sketch-Based Adaptive DDoS Detection for ISP Network. Secur. Commun. Networks 2021, 2021, 1–10.

- Lai, Y.K.; Huang, P.Y.; Lee, H.P.; Tsai, C.L.; Chang, C.S.; Nguyen, M.H.; Lin, Y.J.; Liu, T.L.; Chen, J.H. Real-Time DDoS Attack Detection using Sketch-based Entropy Estimation on the NetFPGA SUME Platform. In Proceedings of the 2020 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Auckland, New Zealand, 7–10 December 2020; pp. 1566–1570.

- Ramamoorthi, A.; Subbulakshmi, T.; Shalinie, S.M. Real time detection and classification of DDoS attacks using enhanced SVM with string kernels. In Proceedings of the 2011 International Conference on Recent Trends in Information Technology (ICRTIT), Chennai, India, 3–5 June 2011; pp. 91–96.

- Lai, Y.K.; Chang, K.P.; Ku, X.W.; Hua, H.L. A Machine Learning Accelerator for DDoS Attack Detection and Classification on FPGA. In Proceedings of the 2022 19th International SoC Design Conference (ISOCC), Gangneung-si, Republic of Korea, 19–22 October 2022; pp. 181–182.

- Gebremeskel, T.G.; Gemeda, K.A.; Krishna, T.G.; Ramulu, P.J. DDoS Attack Detection and Classification Using Hybrid Model for Multicontroller SDN. Wirel. Commun. Mob. Comput. 2023, 2023, 1–18.

- Yungaicela-Naula, N.M.; Vargas-Rosales, C.; Perez-Diaz, J.A.; Jacob, E.; Martinez-Cagnazzo, C. Physical Assessment of an SDN-Based Security Framework for DDoS Attack Mitigation: Introducing the SDN-SlowRate-DDoS Dataset. IEEE Access 2023, 11, 46820–46831.

- Fuladi, R.; Baykas, T.; Anarim, E. The Use of Statistical Features for Low-Rate Denial of Service Attack Detection. In Proceedings of the 2023 2nd International Conference on 6G Networking (6GNet), Paris, France, 18–20 October 2023; pp. 1–6.

- Cynthia, C.; Ghosh, D.; Kamath, G.K. Detection of DDoS Attacks Using SHAP-Based Feature Reduction. Int. J. Mach. Learn. 2023, 13, 173–180.

- Hernandez, D.V.; Chen, M.T.; Lai, Y.K. Identification of DDoS Attacks Through SHAP-Based Feature Analysis and Transformer Models: A Multivariate Time Series Data Approach. In Proceedings of the TENCON 2024—2024 IEEE Region 10 Conference (TENCON), Singapore, 1–4 December 2024; pp. 632–635.

- Chang, K.-P. A Machine Learning Accelerator for DDoS Attack Detection and Classification on FPGA. Ph.D. Thesis, Chung Yuan Christian University, Taoyuan, Taiwan, 2022.

- Alon, N.; Matias, Y.; Szegedy, M. The Space Complexity of Approximating the Frequency Moments. J. Comput. Syst. Sci. 1999, 58, 137–147.

- Cormode, G. MassDAL Public Code Bank: Sketches and Frequent Items. 2004. Available online: https://www.dimacs.rutgers.edu/~graham/code/ (accessed on 11 June 2025).

- Masson, C.; Rim, J.E.; Lee, H.K. DDSketch: A fast and fully-mergeable quantile sketch with relative-error guarantees. Proc. VLDB Endow. 2019, 12, 2195–2205.

- Flajolet, P.; Nigel Martin, G. Probabilistic counting algorithms for data base applications. J. Comput. Syst. Sci. 1985, 31, 182–209.

- Clifford, P.; Cosma, I. A simple sketching algorithm for entropy estimation over streaming data. In Proceedings of the Sixteenth International Conference on Artificial Intelligence and Statistics, Scottsdale, AZ, USA, 29 April–1 May 2013; Volume 31, pp. 196–206.

- Lopes, I.O.; Zou, D.; Abdulqadder, I.H.; Akbar, S.; Li, Z.; Ruambo, F.; Pereira, W. Network intrusion detection based on the temporal convolutional model. Comput. Secur. 2023, 135, 103465.

- Doriguzzi-Corin, R.; Millar, S.; Scott-Hayward, S.; Martinez-del Rincon, J.; Siracusa, D. Lucid: A Practical, Lightweight Deep Learning Solution for DDoS Attack Detection. IEEE Trans. Netw. Serv. Manag. 2020, 17, 876–889.

- Ta, V.; Park, M. Man-edos: A multihead attention network for the detection of economic denial of sustainability attacks. Electronics 2021, 10, 2500.

- Karnani, S.; Shakya, H.K. Mitigation strategies for distributed denial of service (DDoS) in SDN: A survey and taxonomy. Inf. Secur. J. A Glob. Perspect. 2023, 32, 444–468.

- Sharafaldin, I.; Lashkari, A.H.; Hakak, S.; Ghorbani, A.A. Developing Realistic Distributed Denial of Service (DDoS) Attack Dataset and Taxonomy. In Proceedings of the 2019 International Carnahan Conference on Security Technology (ICCST), Chennai, India, 1–3 October 2019; pp. 1–8.

- Kind, A.; Stoecklin, M.; Dimitropoulos, X. Histogram-based traffic anomaly detection. IEEE Trans. Netw. Serv. Manag. 2009, 6, 110–121.

- Fouladi, R.; Kayatas, C.; Anarim, E. Statistical Measures: Promising Features for Time Series Based DDoS Attack Detection. In Proceedings of the International Workshop on Computational Intelligence for Multimedia Understanding (IWCIM), Izmir, Turkey, 2–5 May 2018; p. 96.

- Wei, Y.; Jang-Jaccard, J.; Singh, A.; Sabrina, F.; Camtepe, S. Classification and Explanation of Distributed Denial-of-Service (DDoS) Attack Detection using Machine Learning and Shapley Additive Explanation (SHAP) Methods. arXiv 2023, arXiv:2306.17190.

- Fontugne, R.; Borgnat, P.; Abry, P.; Fukuda, K. MAWILab: Combining diverse anomaly detectors for automated anomaly labeling and performance benchmarking. In Proceedings of the 6th International COnference, Philadelphia, PA, USA, 30 November–3 December 2010; pp. 1–12.

- GitHub Contributors. SHAP Issue #3415: Pytorch LSTM Not Recognizednn.Model:LSTM. 2023. Available online: https://github.com/shap/shap/issues/3415 (accessed on 10 June 2025).

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023, arXiv:1706.03762.

- Hansen, C.; Hansen, C.; Alstrup, S.; Simonsen, J.G.; Lioma, C. Neural Speed Reading with Structural-Jump-LSTM. arXiv 2019, arXiv:1904.00761.

| Paper | Acc | Rec | F1 | Data | Feature | Archi | Detection Method | # of Class |

|---|---|---|---|---|---|---|---|---|

| [17] | 99.32% | – | – | Attack Generation Tool | Flow | – | Enhanced SVM | 2 |

| [8] | 98.85% | 98.47% | – | NSL-KDD | Flow | SDN | K-means and KNN | 2 |

| [7] | 98.82% | – | 98.80% | MAWI Dataset | Flow | SDN | ARIMA, Chaos Theory, Exp. Filter + Dynamic Threshold | 2 |

| [19] | 99.42% | 99.46% | 99.43% | CICDDoS2019 | Flow | SDN | Entropy Threshold + LSTM | 6 |

| [20] | 90.87% | – | – | SDN-SlowRate-DDoS | Flow | SDN | LSTM Model | 2 |

| [15] | 99.51% | – | – | CERNET | Flow | FPGA | Dynamic Threshold Update | 13 |

| [12] | 99.95% | – | – | CAIDA DDoS 2007 | Packet | FPGA | Correlation Measure (NaHiD) | 2 |

| [16] | 96.63% | 98.35% | 96.63% | CAIDA DDoS 2007 + MAWI | Packet Time Series | FPGA | Entropy-Based | 2 |

| [18] | 98.40% | 97.20% | 97.80% | CICDDoS2019 | Packet Time Series | FPGA | Dense + Autoencoder Model | 9 |

| Ours | 99.87% | 99.87% | 99.86% | CICDDoS2019 | Packet Time Series (30 s) | FPGA + GPU | TCN (ITPL) | 14 |

| Ours | 99.83% | 99.83% | 99.83% | CICDDoS2019 | Packet Time Series (30 s) | FPGA + GPU | LSTM (ITPL) | 14 |

| Ours | 99.86% | 99.87% | 99.86% | CICDDoS2019 + MAWI | Packet Time Series (50 s) | FPGA + GPU | TCN (ITPL) | 14 |

| Ours | 99.94% | 99.95% | 99.95% | CICDDoS2019 + MAWI | Packet Time Series (30 s) | FPGA + GPU | LSTM (ITPL) | 14 |

| Classes | 1st Day | 2nd Day |

|---|---|---|

| DNS | - | 10:52–11:05 |

| LDAP | 10:21–10:30 | 11:22–11:32 |

| MSSQL | 10:33–10:42 | 11:36–11:45 |

| NTP | - | 10:35–10:45 |

| NetBIOS | 10:00–10:09 | 11:50–12:00 |

| Portmap | 9:43–9:51 | - |

| SNMP | - | 12:12–12:23 |

| SSDP | - | 12:27–12:37 |

| SYN | 11:28–17:35 | 13:29–13:34 |

| TFTP | - | 13:35–17:15 |

| UDP | 10:53–11:03 | 12:45–13:09 |

| UDPLag | 11:14–11:24 | 13:11–13:15 |

| WebDDoS | - | 13:18–13:29 |

| Class | Packets | Time Series (Packet Flow) | Time Series (Intensity-Time Period) |

|---|---|---|---|

| Benign | 112,233 | 6599 | 17,911 |

| Syn | 5,902,487 | 3880 | 2168 |

| TFTP | 20,079,910 | 5274 | 1522 |

| UDP | 6,915,271 | 2438 | 1263 |

| MSSQL | 10,297,520 | 1689 | 1115 |

| LDAP | 4,292,516 | 1173 | 1084 |

| NetBIOS | 7,548,310 | 1257 | 1082 |

| UDPLag | 1,090,986 | 1343 | 744 |

| SSDP | 2,609,774 | 821 | 704 |

| DNS | 5,069,361 | 1552 | 624 |

| SNMP | 5,158,820 | 994 | 614 |

| NTP | 1,202,116 | 2467 | 490 |

| WebDDoS | 437 | 512 | 470 |

| Portmap | 191,694 | 427 | 255 |

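| Time Window (s) | Packet Flow Labeling: TCN (%) | Packet Flow Labeling: LSTM (%) | Packet Flow Labeling: TRF (%) | Intensity-Time Period Labeling: TCN (%) | Intensity-Time Period Labeling: LSTM (%) | Intensity-Time Period Labeling: TRF (%) |

|---|---|---|---|---|---|---|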

| 1 | 98.01 | 97.94 | 97.24 | 95.28 | 95.23 | 95.03 |

| 3 | 94.96 | 95.81 | 95.00 | 94.49 | 94.00 | 95.18 |

| 5 | 95.67 | 93.74 | 93.31 | 97.10 | 95.76 | 95.55 |

| 10 | 95.70 | 94.07 | 93.94 | 99.70 | 98.81 | 96.40 |

| 15 | 97.73 | 96.83 | 94.59 | 99.70 | 99.71 | 96.75 |

| 20 | 97.45 | 96.94 | 94.14 | 99.56 | 99.83 | 96.97 |

| 25 | 98.35 | 98.61 | 95.85 | 99.83 | 99.76 | 97.53 |

| 30 | 98.12 | 97.84 | 96.37 | 99.83 | 99.86 | 97.83 |

| 35 | 98.44 | 98.85 | 96.48 | 99.86 | 99.70 | 97.98 |

| 40 | 98.78 | 98.19 | 95.46 | 99.71 | 99.75 | 98.48 |

| 45 | 99.04 | 99.15 | 97.03 | 99.83 | 99.83 | 98.31 |

| 50 | 98.99 | 98.60 | 96.88 | 99.68 | 99.75 | 98.16 |

| 55 | 99.38 | 99.16 | 97.49 | 99.81 | 99.83 | 98.96 |

| 60 | 99.05 | 99.21 | 97.66 | 99.81 | 99.34 | 98.58 |

| 65 | 99.53 | 99.61 | 97.86 | 99.40 | 99.79 | 98.00 |

| 70 | 98.86 | 99.00 | 97.90 | 99.74 | 99.28 | 98.83 |

| 75 | 99.46 | 99.50 | 97.70 | 99.79 | 99.83 | 98.94 |

| 80 | 98.94 | 99.06 | 97.80 | 99.61 | 99.71 | 99.13 |

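| Time Window (s) | Packet Flow Labeling: TCN (%) | Packet Flow Labeling: LSTM (%) | Packet Flow Labeling: TRF (%) | Intensity-Time Period Labeling: TCN (%) | Intensity-Time Period Labeling: LSTM (%) | Intensity-Time Period Labeling: TRF (%) |

|---|---|---|---|---|---|---|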

| 1 | 77.91 | 78.97 | 75.47 | 93.43 | 93.23 | 92.96 |

| 3 | 80.05 | 78.83 | 77.58 | 92.82 | 93.87 | 92.97 |

| 5 | 83.99 | 82.62 | 79.76 | 96.78 | 95.63 | 94.26 |

| 10 | 91.84 | 90.46 | 82.06 | 99.09 | 99.15 | 95.49 |

| 15 | 94.38 | 96.33 | 83.90 | 98.73 | 99.66 | 95.77 |

| 20 | 96.18 | 96.36 | 85.18 | 99.53 | 99.78 | 95.99 |

| 25 | 97.19 | 97.83 | 86.02 | 99.83 | 99.78 | 96.84 |

| 30 | 97.32 | 96.57 | 87.69 | 99.86 | 99.83 | 96.89 |

| 35 | 97.56 | 98.68 | 89.09 | 99.75 | 99.65 | 97.43 |

| 40 | 98.11 | 97.99 | 89.58 | 99.76 | 99.83 | 98.25 |

| 45 | 97.92 | 98.84 | 90.87 | 99.63 | 99.75 | 98.20 |

| 50 | 98.08 | 98.60 | 90.97 | 99.55 | 99.61 | 98.28 |

| 55 | 98.87 | 96.25 | 93.15 | 99.69 | 99.73 | 98.37 |

| 60 | 98.57 | 98.89 | 93.72 | 99.68 | 99.78 | 98.74 |

| 65 | 98.58 | 99.18 | 93.79 | 99.38 | 99.76 | 98.80 |

| 70 | 98.91 | 98.91 | 93.43 | 99.73 | 99.83 | 98.70 |

| 75 | 97.60 | 99.21 | 94.23 | 99.61 | 99.76 | 99.04 |

| 80 | 98.26 | 99.04 | 94.90 | 99.24 | 99.69 | 99.06 |

| Model | Recall (%) (Mean ± Std) | Precision (%) (Mean ± Std) | F1 (%) (Mean ± Std) | AIT (ms) (Mean ± Std) |

|---|---|---|---|---|

| CNN | 99.62 ± 0.16 | 99.64 ± 0.15 | 99.63 ± 0.16 | 0.34 ± 0.04 |

| LSTM | 99.35 ± 0.75 | 99.48 ± 0.49 | 99.39 ± 0.67 | 1.23 ± 0.14 |

| Transformer | 95.49 ± 0.34 | 97.04 ± 0.29 | 96.03 ± 0.16 | 2.35 ± 0.78 |

| Rank | Feature | Transformer Rank | TCN Rank | Combined Score |

|---|---|---|---|---|

| 1 | TTL_s_entropy | 1 | 2 | 199 |

| 2 | Destination Port_max | 6 | 5 | 191 |

| 3 | Source Port_std | 4 | 9 | 189 |

| 4 | TTL_std | 14 | 1 | 187 |

| 5 | window_size_q1 | 10 | 6 | 186 |

| 6 | Protocol_d_count | 13 | 4 | 185 |

| 7 | iat_s_entropy | 11 | 10 | 181 |

| 8 | Destination Port_std | 20 | 3 | 179 |

| 9 | Protocol_max | 3 | 20 | 179 |

| 10 | TTL_max | 16 | 8 | 178 |

| 11 | ip_length_s_entropy | 12 | 15 | 175 |

| 12 | window_size_max | 21 | 7 | 174 |

| 13 | window_size_s_entropy | 5 | 23 | 174 |

| 14 | Destination Port_s_entropy | 18 | 14 | 170 |

| 15 | TTL_q1 | 25 | 13 | 164 |

| 16 | Protocol_q2 | 2 | 38 | 162 |

| 17 | Source Port_med | 7 | 34 | 161 |

| 18 | window_size_med | 28 | 18 | 156 |

| 19 | Protocol_med | 8 | 39 | 155 |

| 20 | TTL_med | 26 | 21 | 150 |
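
The combined scores in the table above appear consistent with a simple rank-sum (Borda-style) aggregation of the two per-model SHAP rankings. The sketch below is an inference from the tabulated values, not a formula stated in this section. It assumes each model awards `n_features + 1 - rank` points, with a hypothetical `n_features = 100` chosen only because it reproduces the scores of the three rows used here. The names `FeatureRank` and `combined_score` are illustrative.

```python
from typing import NamedTuple


class FeatureRank(NamedTuple):
    """Per-model SHAP ranks for one feature (1 = most important)."""
    feature: str
    transformer_rank: int
    tcn_rank: int


def combined_score(row: FeatureRank, n_features: int = 100) -> int:
    # Assumption: each model contributes (n_features + 1 - rank) points,
    # so a feature ranked first by both models would score 2 * n_features.
    return (n_features + 1 - row.transformer_rank) + (n_features + 1 - row.tcn_rank)


# Three rows copied from the table above, deliberately out of order.
rows = [
    FeatureRank("Source Port_std", 4, 9),
    FeatureRank("TTL_s_entropy", 1, 2),
    FeatureRank("Destination Port_max", 6, 5),
]

# Sort by descending combined score to obtain the aggregate ranking.
ranked = sorted(rows, key=combined_score, reverse=True)
for position, row in enumerate(ranked, start=1):
    print(position, row.feature, combined_score(row))
```

Under these assumptions the three rows score 199, 191, and 189, matching the table. A rank-sum scheme favors features that both models rate reasonably well over features that only one model ranks highly.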

| Rank | Feature | Transformer Rank | TCN Rank | Combined Score |

|---|---|---|---|---|

| 1 | ip_length_skw | 1 | 1 | 200 |

| 2 | ip_length_kurt | 3 | 2 | 197 |

| 3 | iat_med | 5 | 7 | 190 |

| 4 | Source Port_q1 | 14 | 3 | 185 |

| 5 | Destination Port_q2 | 2 | 17 | 183 |

| 6 | Destination Port_s_entropy | 4 | 18 | 180 |

| 7 | window_size_std | 13 | 9 | 180 |

| 8 | Protocol_q2 | 17 | 8 | 177 |

| 9 | Source Port_s_entropy | 9 | 20 | 173 |

| 10 | udp_length_s_entropy | 6 | 24 | 172 |

| 11 | TTL_d_count | 28 | 5 | 169 |

| 12 | TTL_max | 8 | 26 | 168 |

| 13 | Destination Port_std | 25 | 10 | 167 |

| 14 | udp_length_std | 23 | 12 | 167 |

| 15 | Destination Port_d_count | 22 | 13 | 167 |

| 16 | Destination Port_med | 11 | 27 | 164 |

| 17 | ip_length_d_count | 34 | 6 | 162 |

| 18 | iat_q2 | 19 | 22 | 161 |

| 19 | Destination Port_q1 | 18 | 25 | 159 |

| 20 | Source Port_med | 29 | 15 | 150 |

| Rank | CICDDoS2019 | MAWI + CICDDoS2019 |

|---|---|---|

| 1 | TTL_s_entropy | ip_length_skw |

| 2 | Destination Port_max | ip_length_kurt |

| 3 | Source Port_std | iat_med |

| 4 | TTL_std | Source Port_q1 |

| 5 | window_size_q1 | Destination Port_q2 |

| 6 | Protocol_d_count | Destination Port_s_entropy |

| 7 | iat_s_entropy | window_size_std |

| 8 | Destination Port_std | Protocol_q2 |

| 9 | Protocol_max | Source Port_s_entropy |

| 10 | TTL_max | udp_length_s_entropy |

| 11 | ip_length_s_entropy | TTL_d_count |

| 12 | window_size_max | TTL_max |

| 13 | window_size_s_entropy | Destination Port_std |

| 14 | Destination Port_s_entropy | udp_length_std |

| 15 | TTL_q1 | Destination Port_d_count |

| 16 | Protocol_q2 | Destination Port_med |

| 17 | Source Port_med | ip_length_d_count |

| 18 | window_size_med | iat_q2 |

| 19 | Protocol_med | Destination Port_q1 |

| 20 | TTL_med | Source Port_med |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hernandez, D.V.; Lai, Y.-K.; Ignatius, H.T.N. Real-Time DDoS Detection in High-Speed Networks: A Deep Learning Approach with Multivariate Time Series. Electronics 2025, 14, 2673. https://doi.org/10.3390/electronics14132673
