An Enhanced Fractal Image Compression Algorithm Based on Adaptive Non-Uniform Rectangular Partition

Abstract

1. Introduction

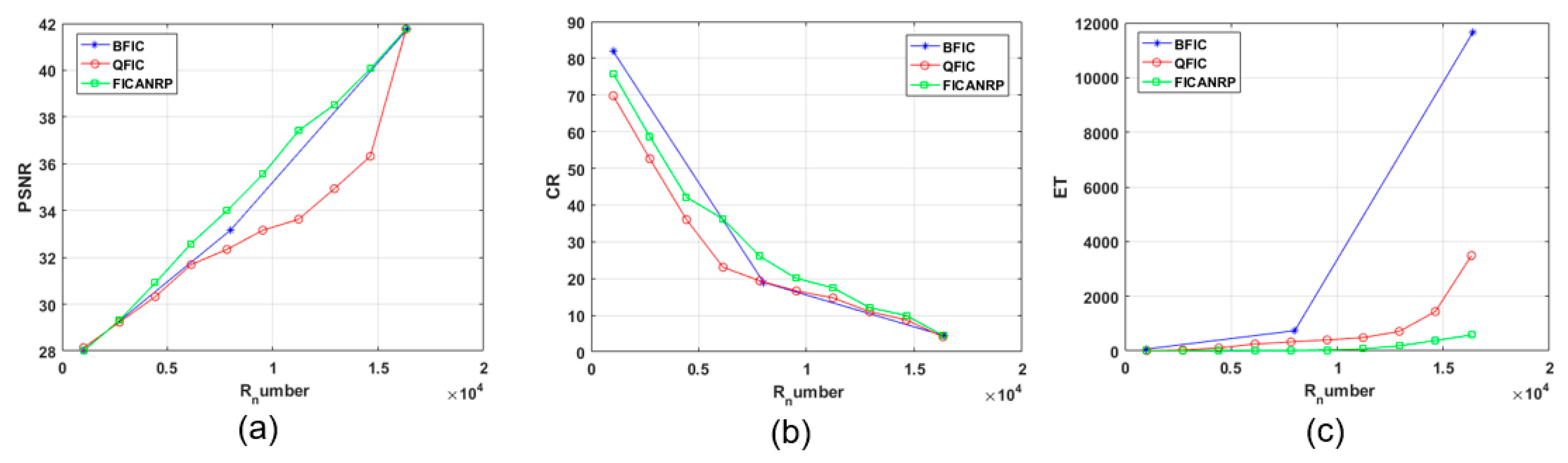

- We propose using the adaptive non-uniform rectangular partition algorithm to segment images into non-overlapping D-blocks guided by local textures and features. This yields D-blocks of varying sizes, which are then classified by block dimensions. The classification shrinks the D-block pool and the matching scope while improving the compression ratio and match precision.

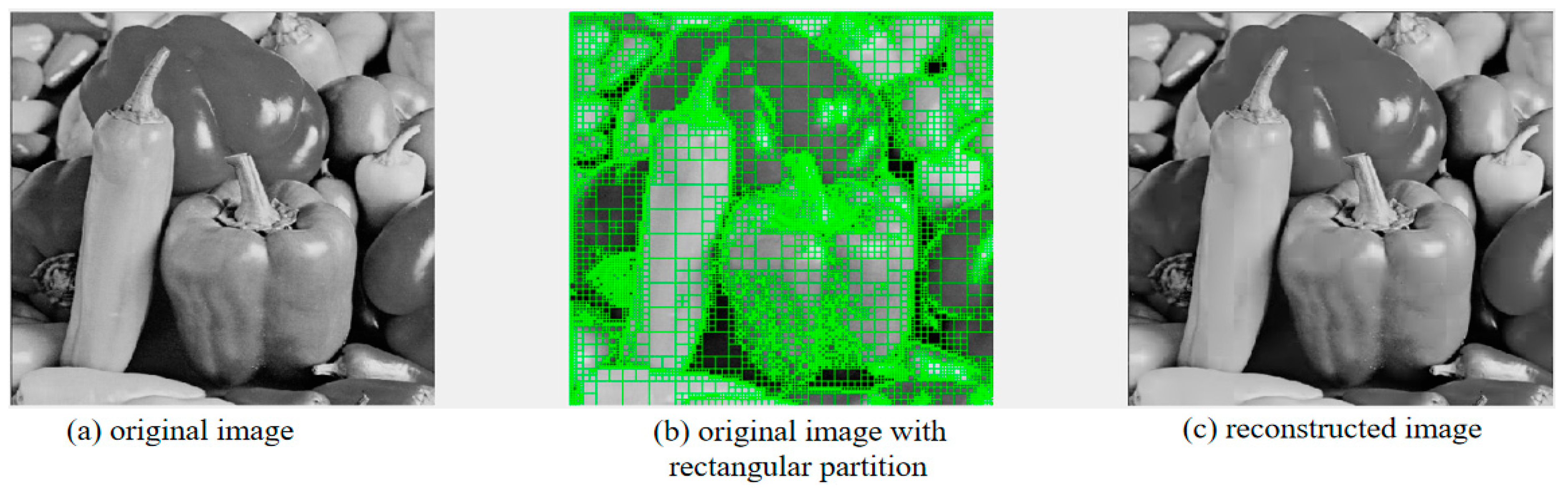

- We design and use the non-uniform partition algorithm to adaptively segment images into R-blocks of different sizes. Small R-blocks reconstruct regions with complex textures, while large R-blocks reconstruct smooth or simple regions. Variable block sizes help reduce “block effects” and improve both the reconstruction quality and the compression ratio.

- We propose a novel block similarity-matching algorithm that incorporates precomputing: the pixel-value sums of each D-block are computed once, before the R-block similarity match begins. This avoids redundant calculations inside the matching loop, reducing computational complexity and encoding time.
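The precomputing idea in the last contribution can be illustrated with a minimal sketch. It assumes the standard least-squares scaling `s` and offset `o` used in fractal coding (presumably the Fs and Fo of the fractal codes) and the corresponding squared collage error as the similarity measure; the paper's own similarity coefficient is not reproduced here. Blocks are flattened lists of equal length, and the function names are hypothetical.

```python
def precompute_domain_sums(domain_blocks):
    """Compute (sum d, sum d^2) once per D-block, before any matching."""
    return [(sum(d), sum(v * v for v in d)) for d in domain_blocks]


def match_range_block(r, domain_blocks, sums):
    """Return (error, index, s, o) of the best D-block for R-block r.

    Only the cross term sum(r*d) is evaluated inside the loop; the
    D-block sums come from the precomputed table.
    """
    n = len(r)
    sum_r = sum(r)
    sum_r2 = sum(v * v for v in r)
    best = None
    for idx, (d, (sum_d, sum_d2)) in enumerate(zip(domain_blocks, sums)):
        sum_rd = sum(a * b for a, b in zip(r, d))
        denom = n * sum_d2 - sum_d * sum_d
        # A flat D-block carries no contrast, so fall back to s = 0.
        s = 0.0 if denom == 0 else (n * sum_rd - sum_r * sum_d) / denom
        o = (sum_r - s * sum_d) / n
        # Expanded form of sum((s*d + o - r)^2).
        err = (sum_r2 + s * (s * sum_d2 - 2 * sum_rd + 2 * o * sum_d)
               + o * (n * o - 2 * sum_r))
        if best is None or err < best[0]:
            best = (err, idx, s, o)
    return best


domains = [[1, 1, 1, 1], [1, 2, 3, 4]]
sums = precompute_domain_sums(domains)
best = match_range_block([10, 20, 30, 40], domains, sums)
```

Because the table of sums is built once for the whole pool, every R-block match reuses it, which is where the saving in the matching loop comes from.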

2. The Fractal Image Compression and Non-Uniform Rectangular Partition

2.1. Fractal Image Compression

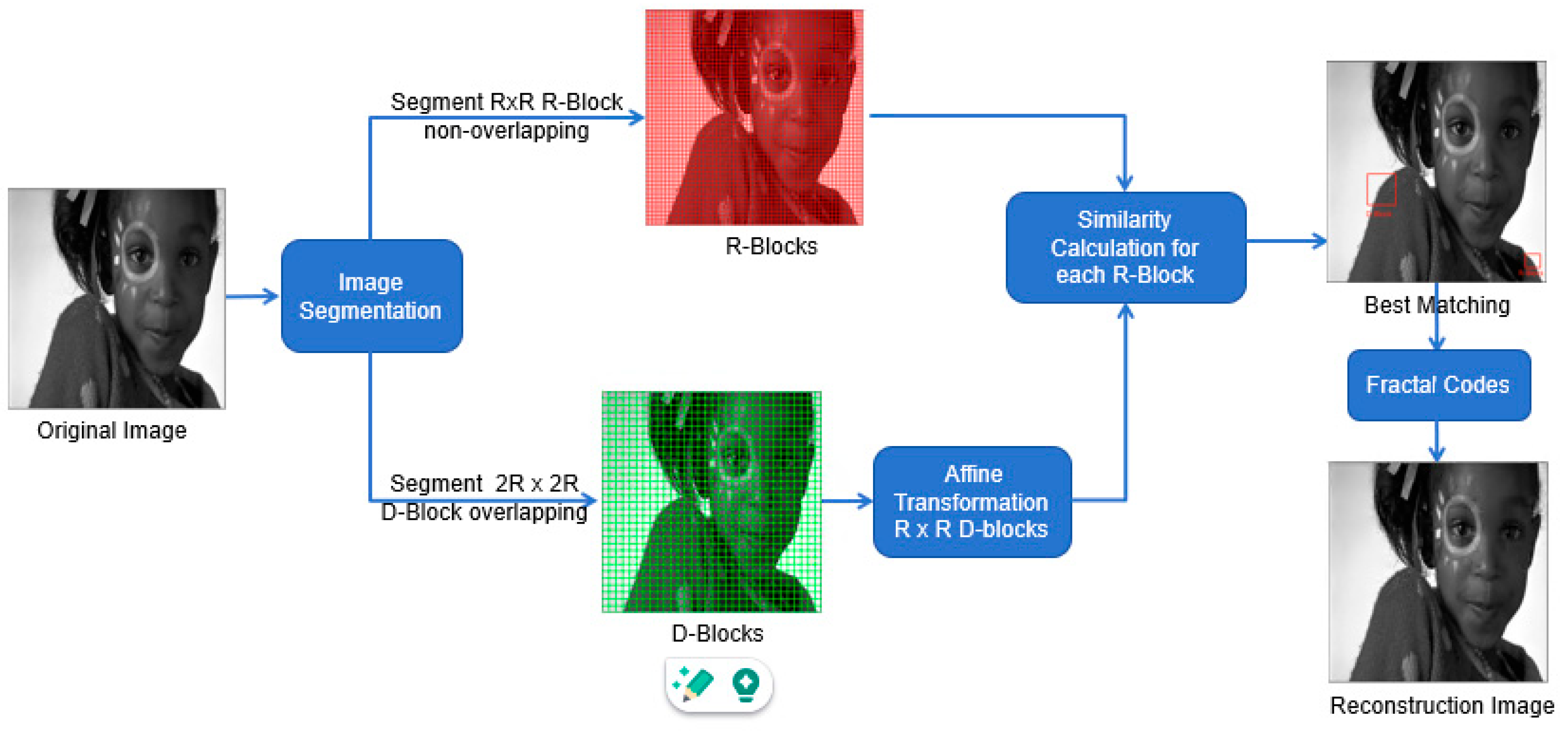

2.1.1. Image Segmentation

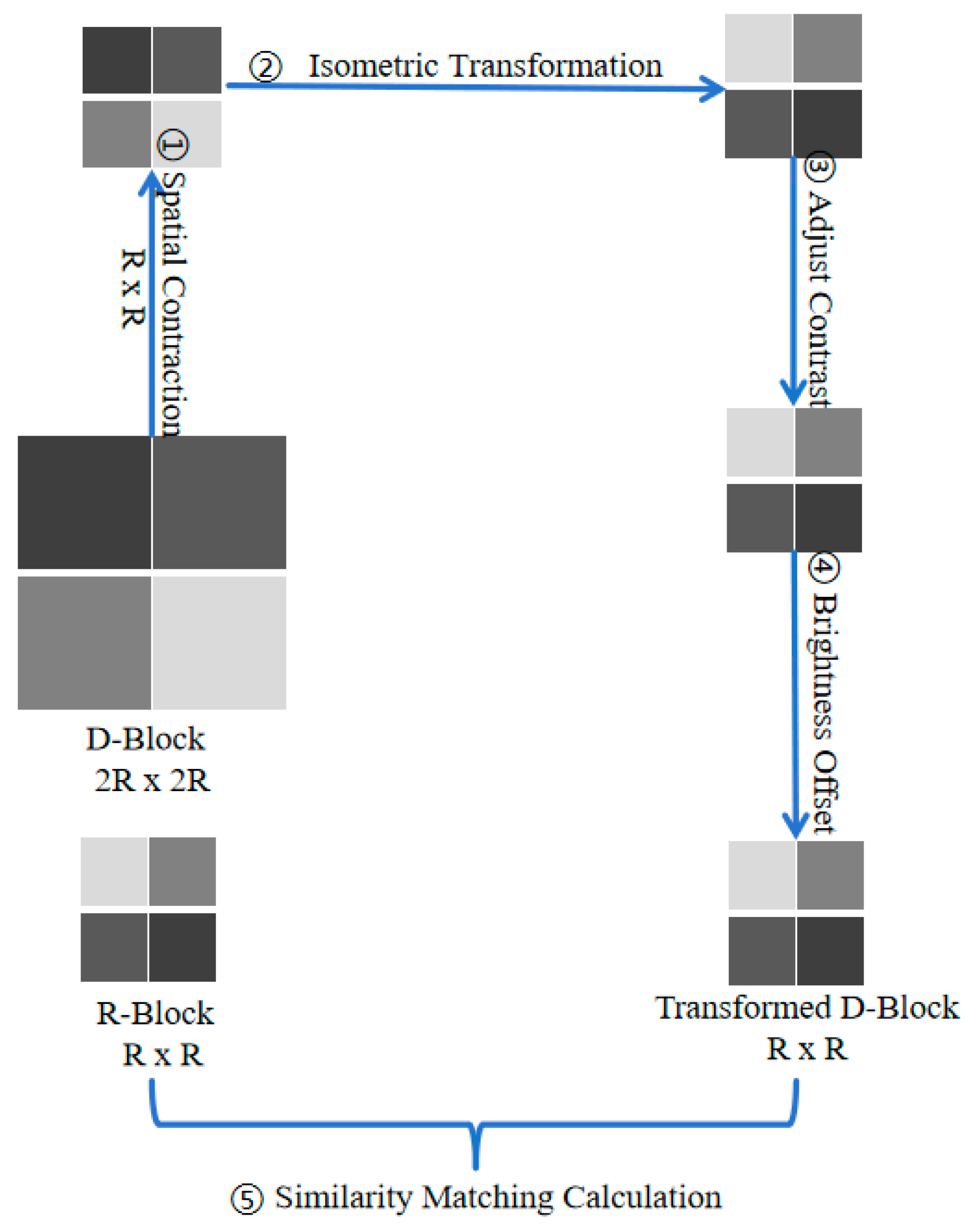

2.1.2. Affine Transformation

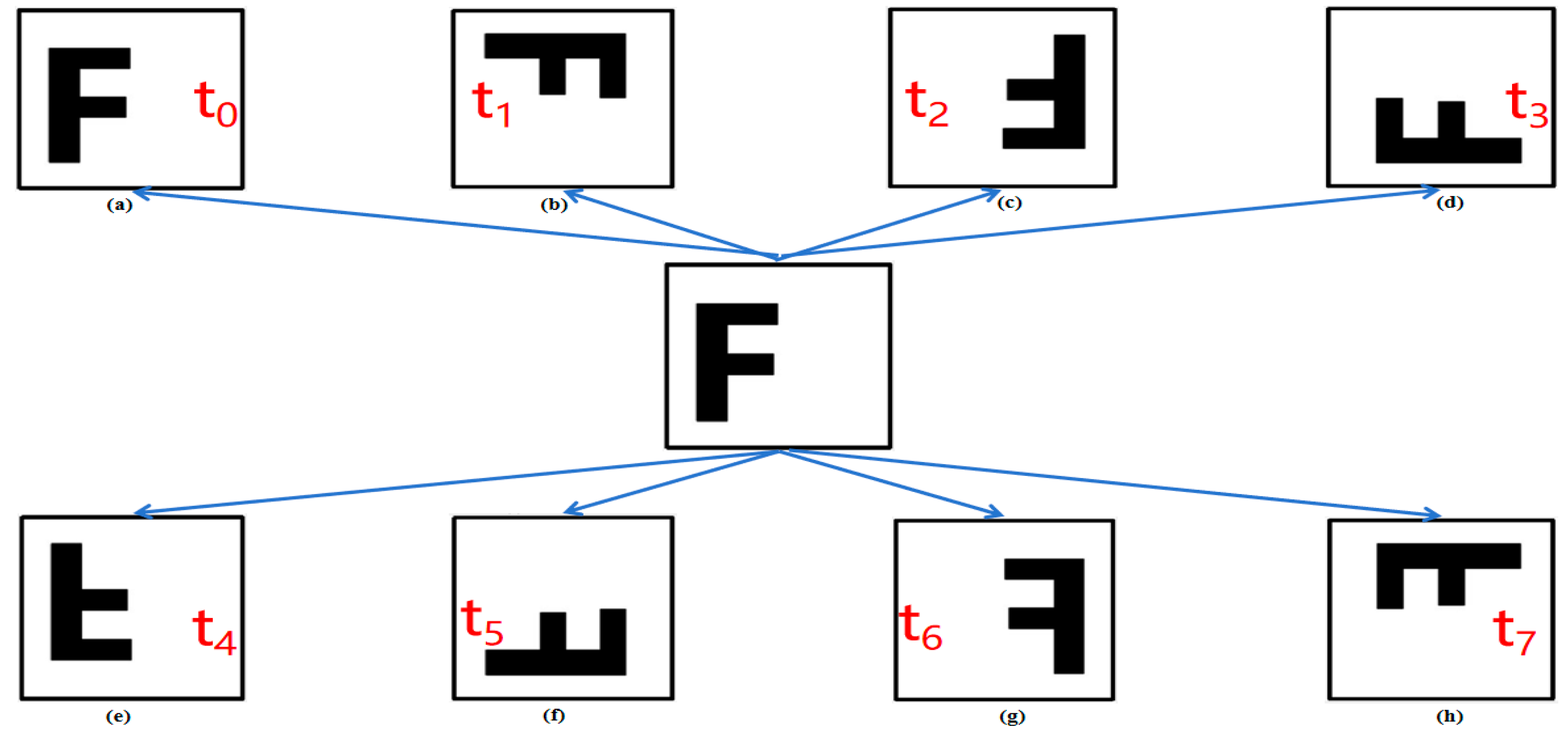

- (1) Identity transformation, as shown in Figure 3a.

- (2) Rotation by 90 degrees clockwise, as shown in Figure 3b.

- (3) Rotation by 180 degrees clockwise, as shown in Figure 3c.

- (4) Rotation by 270 degrees clockwise, as shown in Figure 3d.

- (5) Symmetric reflection about the x-axis, as shown in Figure 3e.

- (6) Symmetric reflection about the line y = x, as shown in Figure 3f.

- (7) Symmetric reflection about the y-axis, as shown in Figure 3g.

- (8) Symmetric reflection about the line y = −x, as shown in Figure 3h.
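The eight isometric transformations listed above can be sketched on a small square block stored as a list of rows. The axis convention is an assumption: the origin sits at the block centre with y pointing up, so the line y = x runs along the anti-diagonal of the stored matrix and y = −x along its main diagonal. The function names are hypothetical.

```python
def rot90_cw(b):
    """Rotate a square block 90 degrees clockwise."""
    return [list(row) for row in zip(*b[::-1])]


def reflect_x(b):
    """Reflect about the x-axis: reverse the order of the rows."""
    return [list(row) for row in b[::-1]]


def reflect_y(b):
    """Reflect about the y-axis: reverse each row."""
    return [list(row[::-1]) for row in b]


def transpose(b):
    """Reflect about the main diagonal (the line y = -x under the assumed axes)."""
    return [list(row) for row in zip(*b)]


def isometries(b):
    """Return the eight transformed blocks in the order (1) to (8)."""
    r90 = rot90_cw(b)
    r180 = rot90_cw(r90)
    r270 = rot90_cw(r180)
    return [
        [list(row) for row in b],  # (1) identity
        r90,                       # (2) 90 degrees clockwise
        r180,                      # (3) 180 degrees
        r270,                      # (4) 270 degrees clockwise
        reflect_x(b),              # (5) about the x-axis
        transpose(r180),           # (6) about y = x (anti-diagonal)
        reflect_y(b),              # (7) about the y-axis
        transpose(b),              # (8) about y = -x (main diagonal)
    ]


blocks = isometries([[1, 2], [3, 4]])
```

For a block without symmetry all eight results differ, so each D-block contributes eight candidates to the matching pool.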

2.1.3. Decoding and Reconstruction

2.2. The Non-Uniform Rectangular Partition

3. Methodology and Algorithm Analysis

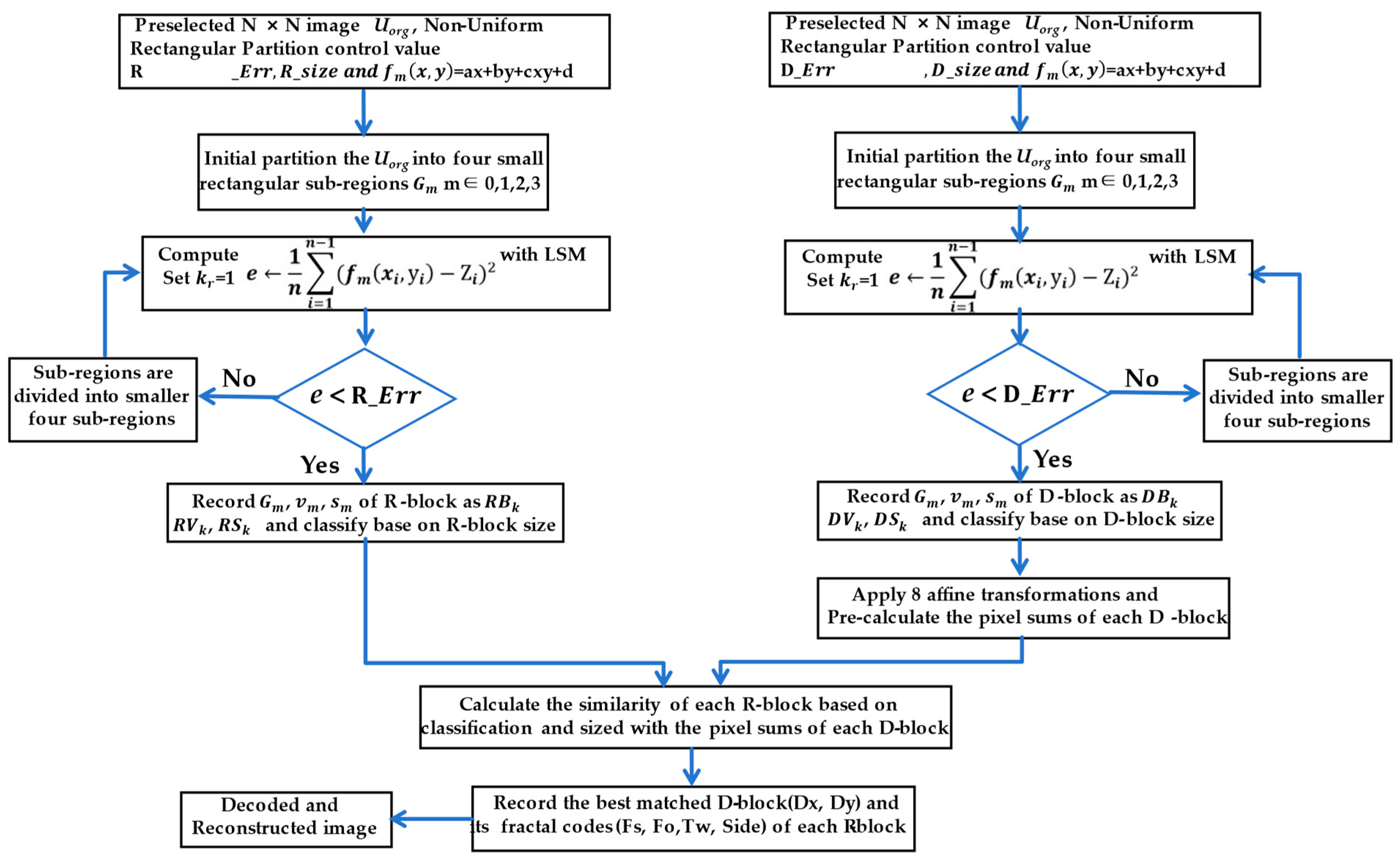

3.1. Block Segmentation Method

3.2. Process of the FICANRP Scheme

3.3. Algorithm Details

3.4. Algorithm Description

| Algorithm 1: The FICANRP encoding algorithm |

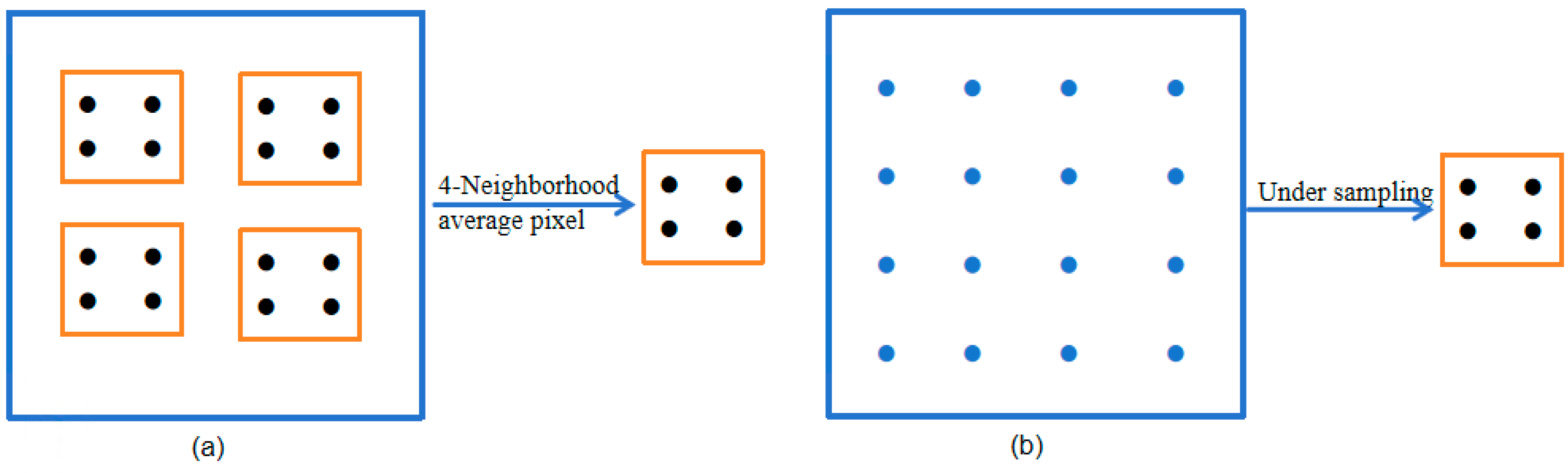

| Input: Size N × N Output: Fractal codes (Fs, Fo, Tw, R_size, Dtx, Dty) Algorithm process: 1. Preset the non-uniform partition control threshold R_Err, D_Err, and the range of R-block size and D-block size. 2. Apply the adaptive non-uniform rectangular partition algorithm on image , to obtain different sizes of R-blocks and D-blocks, respectively. 3. /* k is the sub-region code / 4. Set k = 1 5. Initial partition the into four small rectangular sub-regions , m ∈ 0,1,2,3 6. Compute Vk, Sk, Bk with ENCODING () 7. Function ENCODING () 8. Set the top left vertex of as Vm, the size of as Sk, the grey value of as Bk 9. For each pixel point (, ) in do 10. Compute , ) ← + + + with LSM 11. /* is the gray value of the pixel in the sub-region / 12. Compute e ← 13. Endfor 14. If e < R_Err or Sk ≤ min R-block size/e < D_Err or Sk ≤ min D-block size 15. Record Gm, Vm, Sm of R-block/D-block, as Bk, Vk, Sk, 16. Classify Bk based on block size. 17. Else 18. Compute ENCODING (Gmr), r ∈ 0, 1, 2, 3 19. End if 20. End Function 21. Perform the average of 4-neighborhood pixel values for each D-block to obtain the compression transformation D’-block. Then, perform eight isometric transformations and various summations with block pixels to form the pool Ω of D-blocks. 22. Preprocess calculations by summing up the pixel values of the D-block before each R-block similarity match. 23. According to the partition order of R-block, calculate the similarity coefficient of R-block and D-block, the smaller the , the more similar it is, then record the fractal codes of each R-block where the is smallest. 24. After all R-blocks are matched, the corresponding image reconstruction of fractal codes Fs, Fo, Tw, R_size, Dtx, and Dty will be obtained. |
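The recursive partition in the ENCODING function can be sketched as follows. This is a simplified stand-in rather than the paper's procedure: the fitted function and the error formula are not recoverable from the algorithm text, so the sketch assumes a degree-zero least-squares fit (the block mean) and the mean squared residual as the error e. The names `fit_error`, `quadrants`, `encode_region`, and `adaptive_partition` are hypothetical, and `min_size` should be at least 1.

```python
def fit_error(img, x, y, w, h):
    """Mean squared residual of a constant least-squares fit (assumed error)."""
    pixels = [img[j][i] for j in range(y, y + h) for i in range(x, x + w)]
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) ** 2 for p in pixels) / len(pixels)


def quadrants(x, y, w, h):
    """Split a rectangle into four sub-rectangles (x, y, width, height)."""
    hw, hh = w // 2, h // 2
    return [(x, y, hw, hh), (x + hw, y, w - hw, hh),
            (x, y + hh, hw, h - hh), (x + hw, y + hh, w - hw, h - hh)]


def encode_region(img, x, y, w, h, err_max, min_size, blocks):
    """Record the region if it is well fitted or minimal, else recurse."""
    if fit_error(img, x, y, w, h) < err_max or min(w, h) <= min_size:
        blocks.append((x, y, w, h))
        return
    for q in quadrants(x, y, w, h):
        encode_region(img, *q, err_max, min_size, blocks)


def adaptive_partition(img, err_max, min_size):
    """Initial split into four sub-regions, then adaptive refinement."""
    h, w = len(img), len(img[0])
    blocks = []
    for q in quadrants(0, 0, w, h):
        encode_region(img, *q, err_max, min_size, blocks)
    return blocks


# 8 x 8 test image: a checkerboard in the top-left quadrant, flat elsewhere.
img = [[(255 if (i + j) % 2 else 0) if (i < 4 and j < 4) else 100
        for i in range(8)] for j in range(8)]
blocks = adaptive_partition(img, err_max=10.0, min_size=2)
```

Running the same routine with a second threshold and size range would give the D-block partition, with the textured quadrant again receiving the small blocks and the flat quadrants the large ones.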

| Algorithm 2: The FICANRP decoding algorithm |

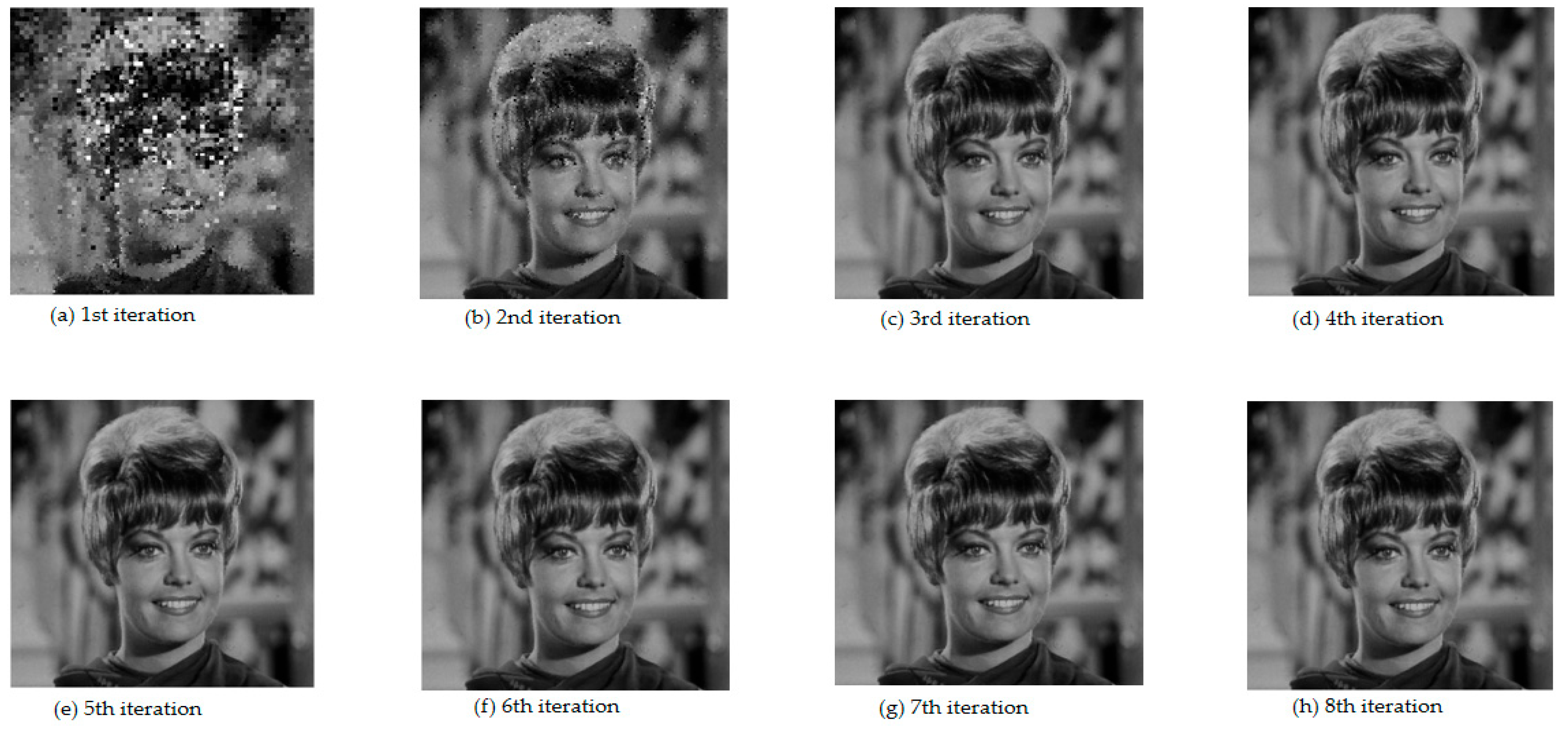

| Input: Fractal codes (Fs, Fo, Tw, R_size, Dtx, Dty) Output: Decoding reconstruction Image Algorithm process: 1. Preset the maximum iteration number N, read the fractal codes information, and extract data from fractal encoding files, including the IFS parameter set (Fs, Fo, Tw, R_size, Dtx, Dty) of each R-block. 2. Initialize decoding space and create two buffers the same size as the original image: an R-region buffer and a D-region buffer. 3. Initialize an arbitrary image matrix I_New, the same size as the original, to reconstruct the decoded image. For n = 1:N/* n represents the iteration number/ For Nr = 1: Tprn /* Tprn represents the number of R-block/ Dx = Dtx(Nr) Dy = Dty (Nr) /* For each R-block R(Nr), locate the best match D-block D(Nr)/ D(Nr) = I_New(Dx + 2R_size: Dy + 2R_size) /* Apply spatial compression Ts and isometric transformation Tw to D(Nr)/ Temp(Nr) = Tw (Ts(D(Nr))) I_New(Nr) = Fs(Nr) Temp(Nr) + Fo(Nr) Nr = Nr + 1 End for End for 4. Update the decoding area, copy and paste the content of the R-region image generated by the current iteration into the D-region, thereby updating the content of the entire decoded image. 5. Check if the number of iterations N has reached the preset maximum n. If so, end the iteration process; If not, return to step (2) to continue with the next iteration. Generally, the number of iterations is 8–10 times, and the reconstruction quality of the image will reach its optimal level. |
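The decoding iteration can be sketched in a few lines, under several assumptions. Each fractal code is a hypothetical tuple `(rx, ry, size, dx, dy, t, s, o)`, where `(rx, ry)` is the R-block position (not part of the listed code fields, added here for illustration), `(dx, dy)` stands for Dtx and Dty, `s` and `o` for Fs and Fo, and `t` selects one of eight isometries in an ordering chosen for this sketch rather than the paper's numbering. The D-block is taken to be twice the R-block size and is contracted by averaging 2 × 2 neighborhoods.

```python
def contract(img, dx, dy, size):
    """Average each 2x2 neighborhood of the (2*size)-wide D-block at (dx, dy)."""
    return [[(img[dy + 2 * j][dx + 2 * i] + img[dy + 2 * j][dx + 2 * i + 1]
              + img[dy + 2 * j + 1][dx + 2 * i]
              + img[dy + 2 * j + 1][dx + 2 * i + 1]) / 4.0
             for i in range(size)] for j in range(size)]


def isometry(block, t):
    """Transpose if t >= 4, then apply t % 4 clockwise quarter turns."""
    if t >= 4:
        block = [list(row) for row in zip(*block)]
    for _ in range(t % 4):
        block = [list(row) for row in zip(*block[::-1])]
    return block


def decode(codes, width, height, iterations=10):
    """Iterate the block mappings starting from an arbitrary flat image."""
    img = [[128.0] * width for _ in range(height)]   # D-region buffer
    for _ in range(iterations):
        new = [row[:] for row in img]                # R-region buffer
        for rx, ry, size, dx, dy, t, s, o in codes:
            d = isometry(contract(img, dx, dy, size), t)
            for j in range(size):
                for i in range(size):
                    new[ry + j][rx + i] = s * d[j][i] + o
        img = new                                    # copy R-region into D-region
    return img


# Four 2x2 R-blocks covering a 4x4 image, all mapped from the whole image.
codes = [(0, 0, 2, 0, 0, 0, 0.5, 50.0), (2, 0, 2, 0, 0, 1, 0.5, 50.0),
         (0, 2, 2, 0, 0, 4, 0.5, 50.0), (2, 2, 2, 0, 0, 7, 0.5, 50.0)]
result = decode(codes, 4, 4, iterations=30)
```

With a scaling factor of magnitude below 1, each pass shrinks the distance to the fixed point, which is why a small, fixed number of iterations is usually enough. In this toy example the fixed point is the flat image with value 100, since v = 0.5v + 50.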

4. Simulation Experiments and Results

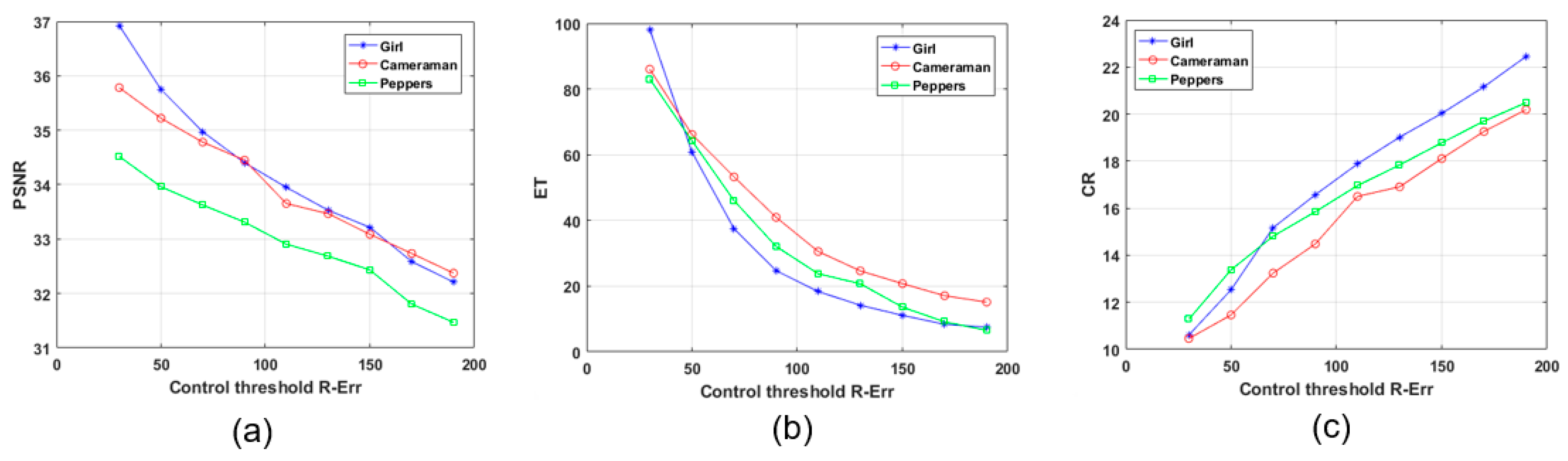

4.1. Experimental Conditions and Key Parameters

4.2. Evaluation Standard

4.3. Algorithm Complexity

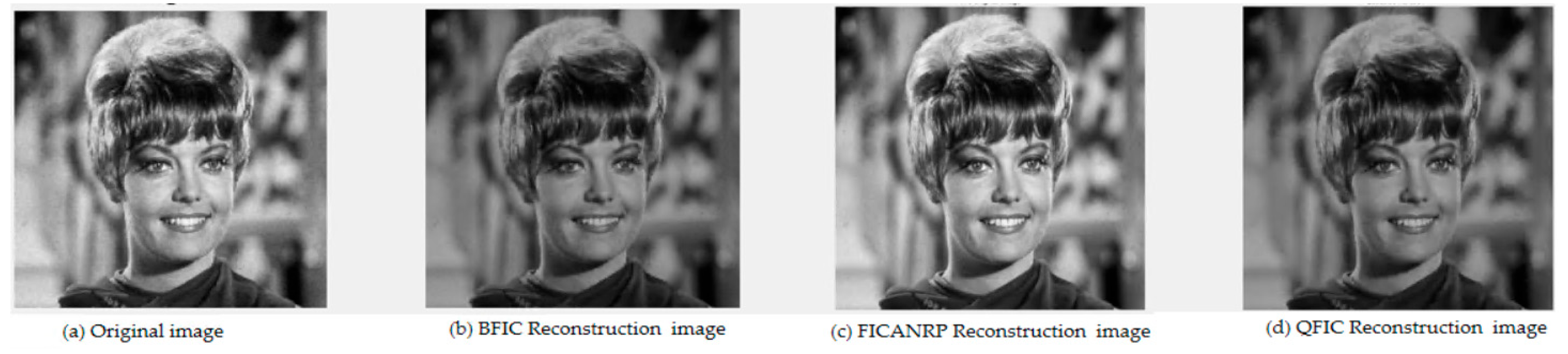

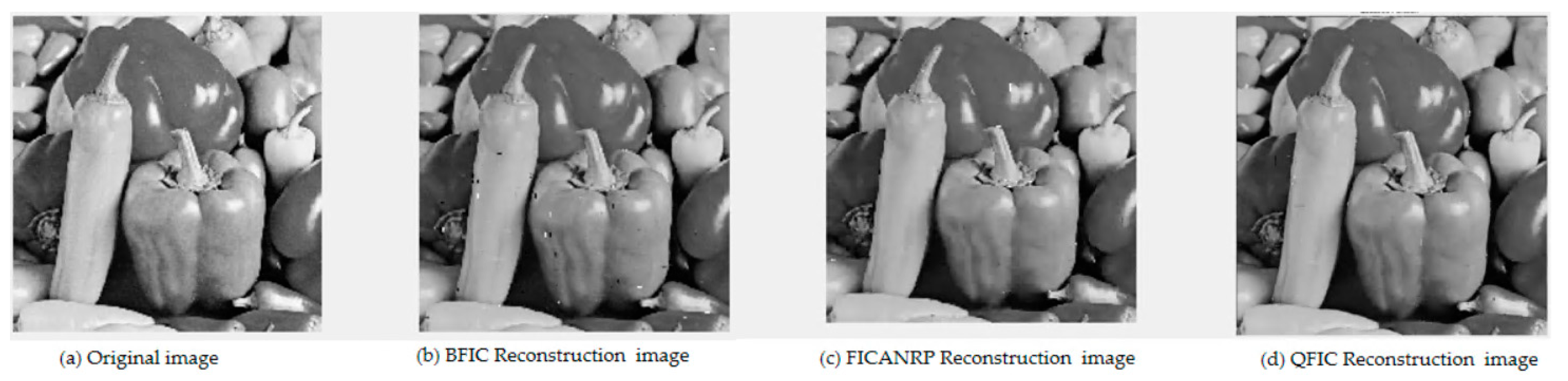

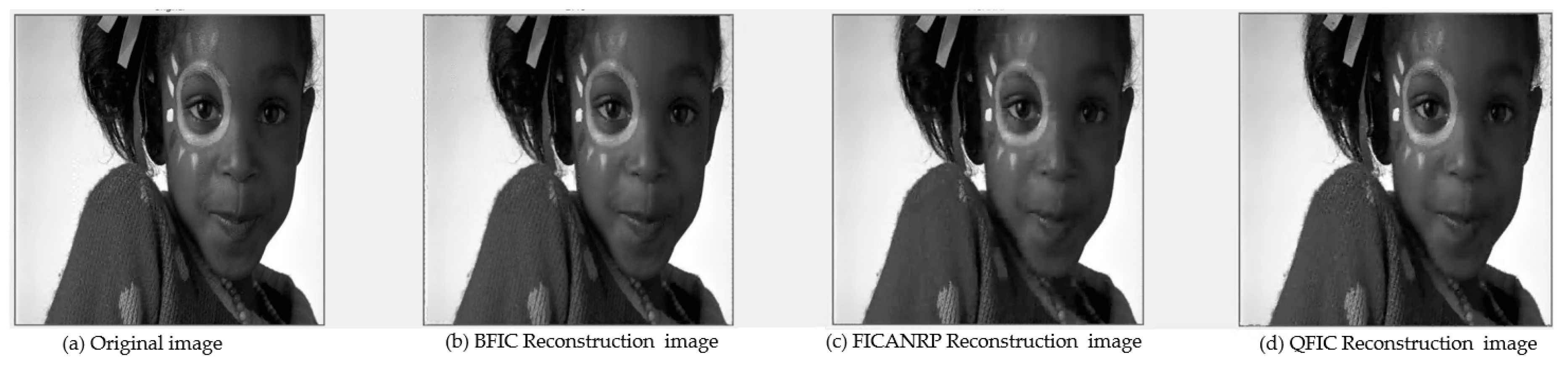

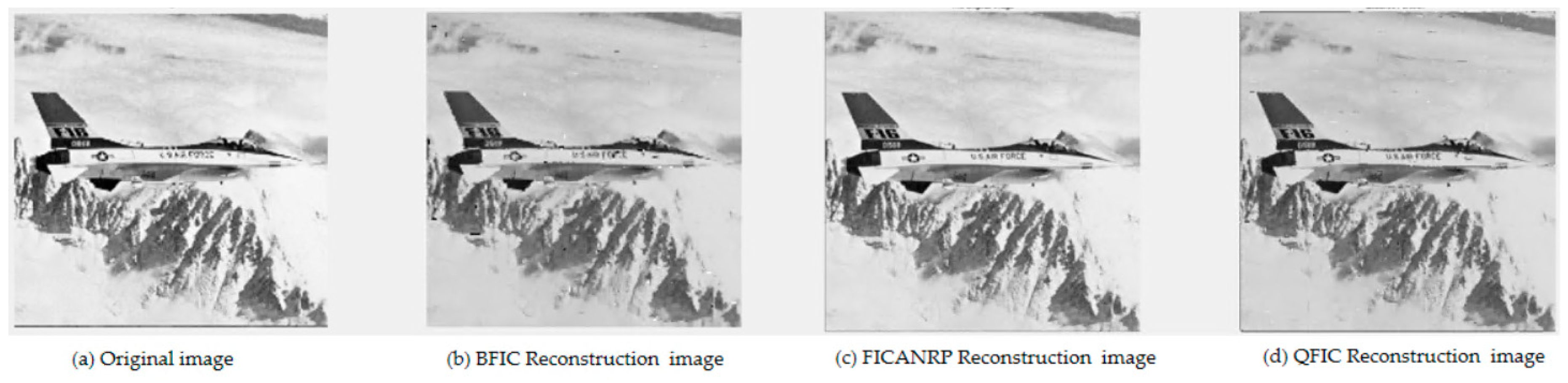

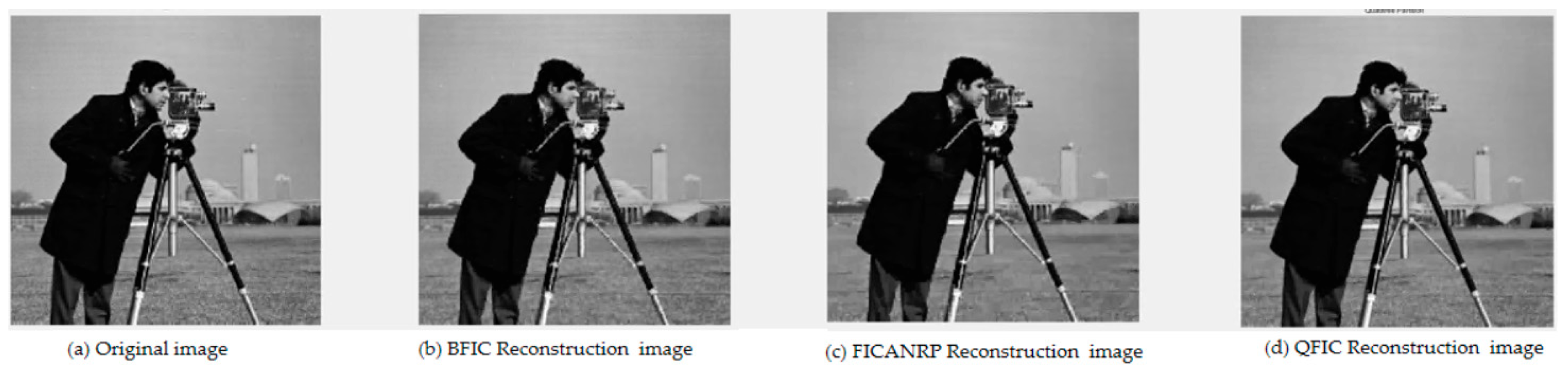

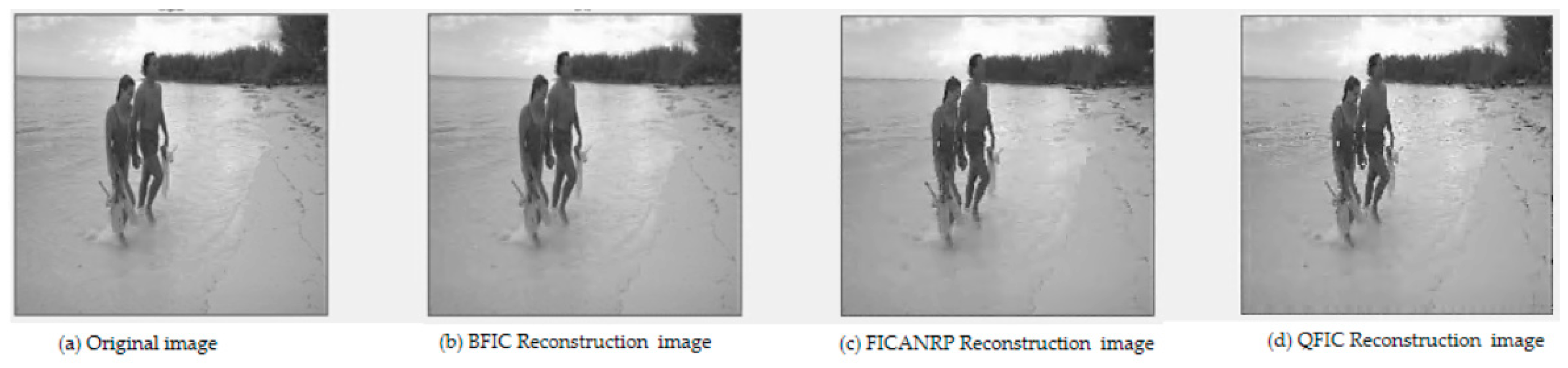

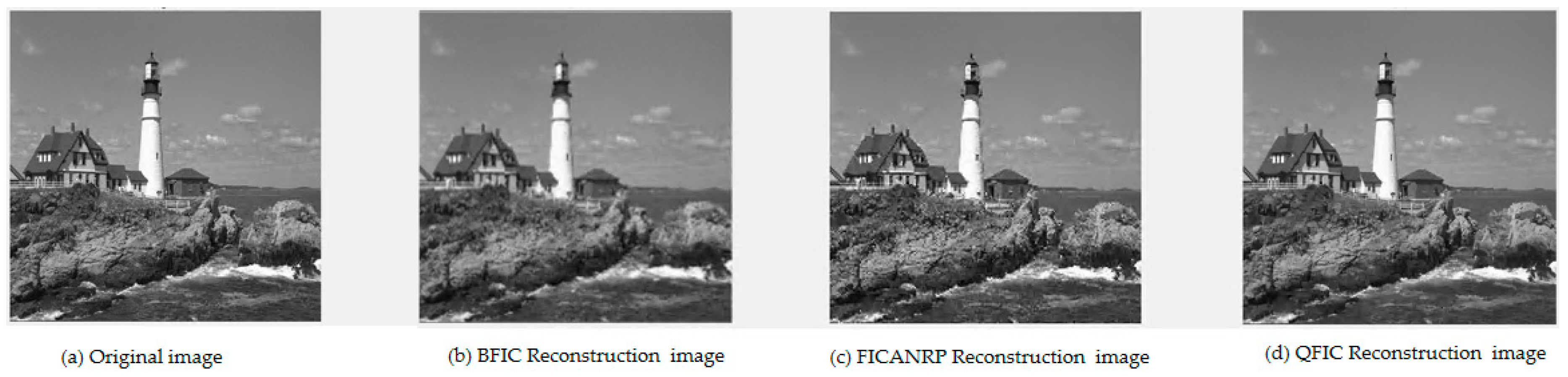

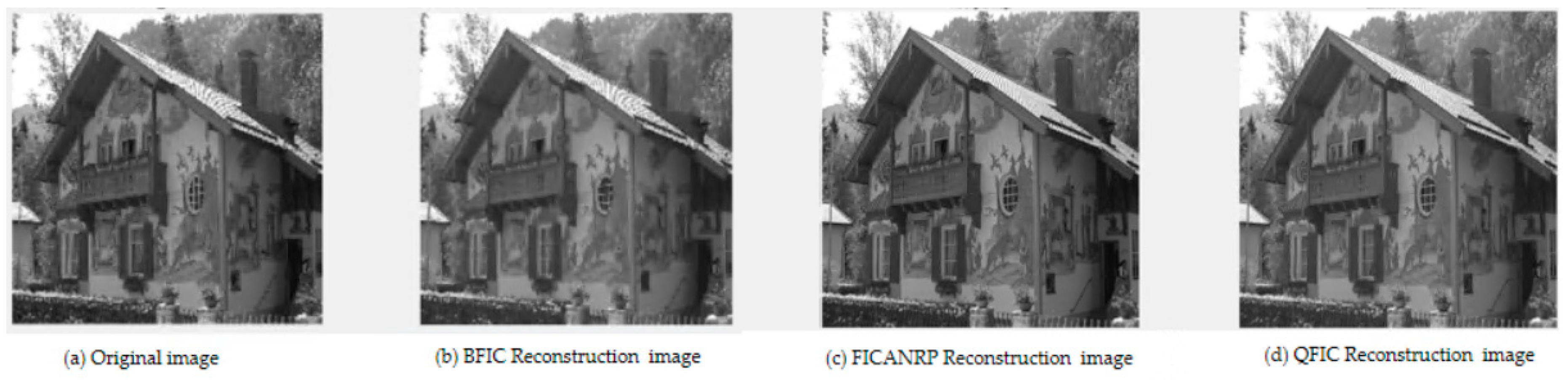

4.4. Analysis and Results of the Experiment

5. Conclusions and Future

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Image | Algorithm | PSNR | ET (s) | Num_R | Num_D | CR | R_Err | R_MSE | O(T) |
|---|---|---|---|---|---|---|---|---|---|
| Zelda | BFIC | 35.86 | 749.47 | 4096 | 3969 | 18.96 | \ | \ | 5.2 × 10⁸ |
| Zelda | QFIC | 34.82 | 269 | 3055 | 16,129 | 22.88 | \ | 50 | 2.4 × 10⁷ |
| Zelda | FICANRP | 36.10 | 13.45 | 3895 | 424 | 20.05 | 70 | \ | 3.4 × 10³ |
| Peppers | BFIC | 29.73 | 751.91 | 4096 | 3969 | 18.96 | \ | \ | 5.2 × 10⁸ |
| Peppers | QFIC | 30.06 | 397.40 | 4141 | 16,129 | 16.88 | \ | 50 | 7.7 × 10⁷ |
| Peppers | FICANRP | 31.12 | 6.15 | 3673 | 295 | 21.10 | 200 | \ | 3.4 × 10³ |
| Girl | BFIC | 31.20 | 720.52 | 4096 | 3969 | 18.96 | \ | \ | 5.2 × 10⁸ |
| Girl | QFIC | 30.93 | 530.04 | 4945 | 16,129 | 13.68 | \ | 50 | 2.8 × 10⁸ |
| Girl | FICANRP | 32.10 | 12.76 | 3838 | 751 | 20.23 | 180 | \ | 6.0 × 10³ |
| Plane | BFIC | 24.54 | 762.56 | 4096 | 3969 | 18.96 | \ | \ | 5.2 × 10⁸ |
| Plane | QFIC | 25.07 | 690.16 | 5848 | 16,129 | 11.95 | \ | 50 | 3.9 × 10⁸ |
| Plane | FICANRP | 29.35 | 10.51 | 3895 | 424 | 19.94 | 420 | \ | 3.4 × 10³ |
| Cameraman | BFIC | 30.99 | 733.08 | 4096 | 3969 | 18.96 | \ | \ | 5.2 × 10⁸ |
| Cameraman | QFIC | 32.30 | 567.72 | 5095 | 16,129 | 13.70 | \ | 50 | 2.4 × 10⁸ |
| Cameraman | FICANRP | 32.34 | 14.17 | 3847 | 538 | 20.19 | 200 | \ | 4.3 × 10³ |
| Kodim12 | BFIC | 32.13 | 767.14 | 4096 | 3969 | 18.96 | \ | \ | 5.2 × 10⁸ |
| Kodim12 | QFIC | 29.95 | 452.82 | 4267 | 16,129 | 15.85 | \ | 50 | 2.2 × 10⁹ |
| Kodim12 | FICANRP | 32.85 | 8.68 | 3991 | 349 | 19.46 | 200 | \ | 1.1 × 10⁴ |
| Kodim20 | BFIC | 27.37 | 751.26 | 4096 | 3969 | 18.96 | \ | \ | 5.2 × 10⁸ |
| Kodim20 | QFIC | 30.71 | 560.70 | 5047 | 16,129 | 13.40 | \ | 50 | 2.6 × 10⁸ |
| Kodim20 | FICANRP | 31.72 | 12.82 | 3655 | 475 | 21.25 | 200 | \ | 1.5 × 10⁴ |
| Kodim21 (1024 × 1024) | BFIC | 31.06 | 12,575.00 | 16,384 | 16,130 | 17.66 | \ | \ | 8.5 × 10⁹ |
| Kodim21 (1024 × 1024) | QFIC | 31.80 | 6948.04 | 22,639 | 65,025 | 16.70 | \ | 50 | 5.4 × 10⁹ |
| Kodim21 (1024 × 1024) | FICANRP | 32.26 | 112.20 | 15,640 | 1312 | 18.50 | 220 | \ | 1.1 × 10⁴ |
| Kodim24 (1024 × 1024) | BFIC | 29.48 | 12,466.00 | 16,384 | 16,130 | 17.66 | \ | \ | 8.5 × 10⁹ |
| Kodim24 (1024 × 1024) | QFIC | 29.58 | 7045.00 | 23,533 | 65,025 | 11.50 | \ | 50 | 5.8 × 10⁹ |
| Kodim24 (1024 × 1024) | FICANRP | 30.17 | 117.34 | 15,157 | 1558 | 19.08 | 220 | \ | 1.2 × 10⁴ |

| Image | Algorithm | PSNR | ET (s) | Num_R | Num_D | CR | R_Err | R_MSE |
|---|---|---|---|---|---|---|---|---|
| Zelda | QFIC | 34.82 | 269 | 3055 | 16,129 | 22.88 | \ | 50 |
| Zelda | FICANRP | 34.83 | 7.22 | 3271 | 343 | 23.75 | 200 | \ |
| Peppers | QFIC | 30.06 | 397.4 | 4141 | 16,129 | 16.88 | \ | 50 |
| Peppers | FICANRP | 30.14 | 6.15 | 2902 | 298 | 26.76 | 185 | \ |
| Girl | QFIC | 30.93 | 530.04 | 4945 | 16,129 | 13.68 | \ | 50 |
| Girl | FICANRP | 30.97 | 8.19 | 3155 | 712 | 24.61 | 330 | \ |
| Plane | QFIC | 25.07 | 690.16 | 5848 | 16,129 | 11.95 | \ | 50 |
| Plane | FICANRP | 25.80 | 6.01 | 2500 | 280 | 31.07 | 400 | \ |
| Cameraman | QFIC | 32.30 | 567.72 | 5095 | 16,129 | 13.7 | \ | 50 |
| Cameraman | FICANRP | 32.34 | 14.17 | 3847 | 538 | 20.19 | 200 | \ |
| Kodim12 | QFIC | 29.95 | 452.82 | 4267 | 16,129 | 15.85 | \ | 50 |
| Kodim12 | FICANRP | 29.95 | 6.5 | 2344 | 301 | 33.13 | 450 | \ |
| Kodim20 | QFIC | 30.71 | 560.7 | 5047 | 16,129 | 13.4 | \ | 50 |
| Kodim20 | FICANRP | 30.77 | 8.2 | 3085 | 376 | 25.18 | 350 | \ |
| Kodim21 (1024 × 1024) | QFIC | 31.80 | 6948.04 | 16,493 | 65,025 | 16.7 | \ | 50 |
| Kodim21 (1024 × 1024) | FICANRP | 31.92 | 107.21 | 15,040 | 1312 | 19.23 | 250 | \ |
| Kodim24 (1024 × 1024) | QFIC | 29.58 | 7045 | 23,533 | 65,025 | 11.50 | \ | 50 |
| Kodim24 (1024 × 1024) | FICANRP | 30.17 | 117.34 | 15,157 | 1558 | 19.08 | 250 | \ |

| Image | BFIC, ET (s) without precomputing | FICANRP, ET (s) without precomputing | BFIC, ET (s) with precomputing | FICANRP, ET (s) with precomputing |
|---|---|---|---|---|
| Zelda | 749.47 | 73.33 | 292.23 | 13.45 |
| Peppers | 751.91 | 33.55 | 298.56 | 6.15 |
| Girl | 720.52 | 80.13 | 281.63 | 12.76 |
| Plane | 762.56 | 56.08 | 292.69 | 10.51 |
| Cameraman | 733.08 | 69.89 | 299.38 | 14.17 |
| Kodim21 (1024 × 1024) | 12,575.00 | 762.27 | 4857.90 | 112.20 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, M.; Tak U, K. An Enhanced Fractal Image Compression Algorithm Based on Adaptive Non-Uniform Rectangular Partition. Electronics 2025, 14, 2550. https://doi.org/10.3390/electronics14132550
